Abstract

Removing or filtering outliers and mislabeled instances prior to training a learning algorithm has been shown to increase classification accuracy, especially in noisy data sets. A popular approach is to remove any instance that is misclassified by a learning algorithm. However, the use of ensemble methods has also been shown to generally increase classification accuracy. In this paper, we extensively examine filtering and ensembling. We examine 9 learning algorithms individually and ensembled together as filtering algorithms as well as the effects of filtering in the 9 chosen learning algorithms on a set of 54 data sets. We compare the filtering results with using a majority voting ensemble. We find that the majority voting ensemble significantly outperforms filtering unless there are high amounts of noise present in the data set. Additionally, for most cases, using an ensemble of learning algorithms for filtering produces a greater increase in classification accuracy than using a single learning algorithm for filtering.

1 Introduction

The goal of supervised machine learning is to induce an accurate generalizing function \(\mathcal {F}: X \mapsto Y\) from a set of input feature vectors \(X =\{x_1, x_2, \ldots ,x_n\}\) and a corresponding set of label vectors \(Y =\{y_1, y_2, \ldots , y_n\}\). The quality of the function \(\mathcal {F}\) induced by a learning algorithm depends on the quality of the data used for training. However, many real-world data sets are inherently noisy, where the noise can be label noise and/or attribute noise. Label noise has been shown to be more detrimental than attribute noise (Zhu and Wu 2004) and is the focus of this paper. Noise can arise from various sources such as subjectivity, human errors, and sensor malfunctions. Most learning algorithms are designed to tolerate a certain degree of noise by avoiding overfitting the training data. There are two general approaches for handling class noise: (1) creating learning algorithms that are robust to noise, such as the C4.5 algorithm for decision trees (Quinlan 1993), and (2) preprocessing the data prior to inducing a model of the data, such as filtering (Wilson 1972; Brodley and Friedl 1999), weighting (Rebbapragada and Brodley 2007; Smith and Martinez 2014) or correcting (Teng 2003) noisy instances.

Previous works have generally examined filtering in a limited context using a single or very few learning algorithms and/or using a limited number of data sets. This may be in part due to the extra computational requirement to first filter a data set and then induce a model of the data using the filtered data set. As such, previous works were generally limited to investigating relatively fast learning algorithms such as decision trees (John 1995) and nearest-neighbor algorithms (Tomek 1976; Wilson and Martinez 2000). In addition, filtering prior to using instance-based learning algorithms was motivated in part by the need to reduce the number of instances that have to be stored and by the fact that instance-based learning algorithms are more sensitive to noise than other learning algorithms. Most previous works also added artificial noise to the data set (such that 5–50 % of the instances become noisy) to show that filtering, weighting, or cleaning provides significant improvements on noisy data sets. In this work, we examine filtering misclassified instances and using a majority voting ensemble on a set of 54 data sets and 9 learning algorithms without adding artificial noise. Within the context of the benefits of filtering established by the previous work, we examine the extent to which filtering affects the performance of a learning algorithm without adding artificial noise to a data set. This avoids making assumptions about the generation of the noise, which may or may not be accurate. It also shows the effect of filtering on the inherent noise in real-world data sets, which is not known beforehand.

The results provide insights on the robustness of a majority voting ensemble and when to employ a misclassification filter. Using a larger number of data sets allows for more statistical confidence in the results than if only a small number of data sets are used. We find that, in general, a voting ensemble is robust to noise and achieves significantly higher classification accuracy trained on unfiltered data than a single learning algorithm trained on filtered data. For filtering, we find that using an ensemble filter achieves significantly higher classification accuracy than using a single learning algorithm filter. On data sets with higher percentages of inherent noisy instances, however, using the ensemble filter achieves higher classification accuracy than a voting ensemble for some learning algorithms. Training a voting ensemble on filtered training data significantly decreases classification accuracy compared to training a voting ensemble on unfiltered training data. This is likely due to a reduction of diversity in the induced models of the ensemble.

In the next section, we present previous works for handling noise in supervised classification problems. A mathematical motivation for filtering misclassified instances is presented in Sect. 3. We then present our experimental methodology in Sect. 4 followed by a presentation of the results in Sect. 5. In Sect. 6 we provide conclusions and directions for future work.

2 Related work

As many real-world data sets are inherently noisy, most learning algorithms are designed to tolerate a certain degree of noise. Typically, learning algorithms are designed to be somewhat robust to noise by making a trade-off between the complexity of the induced model and optimizing the induced function on the training data to prevent overfit. Some techniques to avoid overfit include early stopping using a validation set, pruning (such as in the C4.5 algorithm for decision trees; Quinlan 1993), or regularization by adding a complexity penalty to the loss function (Bishop and Nasrabadi 2006). Some previous works have examined how class noise and attribute noise affect the performance of various learning algorithms (Zhu and Wu 2004; Nettleton et al. 2010) and found that class noise is generally more harmful than attribute noise and that noise in the training set is more harmful than noise in the test set. Further, some learning algorithms have been adapted specifically to better handle label noise. For example, noisy instances are problematic for boosting algorithms (Schapire 1990; Freund 1990) where more weight is placed upon misclassified instances, which often include mislabeled and noisy instances. To address this, Servedio (2003) presented a boosting algorithm that does not place too much weight on any single training instance. For support vector machines, Collobert et al. (2006) use the ramp-loss function to place a bound on the maximum penalty for an instance that lies on the wrong side of the margin. Lawrence and Schölkopf (2001) explicitly model the possibility that an instance is mislabeled using a generative model and then use expectation maximization to update the probability that an instance is mislabeled.

Preprocessing the data set is another approach that explicitly handles label noise. This can be done by removing noisy instances, weighting the instances, or correcting incorrect labels. All three approaches first attempt to identify which instances are noisy by various criteria. Filtering noisy instances has received much attention and has generally resulted in an increase in classification accuracy (Gamberger et al. 2000; Smith and Martinez 2011). One frequently used filtering technique removes any instance that is misclassified by a learning algorithm (Wilson 1972) or set of learning algorithms (Brodley and Friedl 1999). Verbaeten and Van Assche (2003) further pursued the idea of using an ensemble for filtering using ideas from boosting and bagging. Other approaches use learning algorithm heuristics to remove noisy instances. Segata et al. (2009), for example, remove instances that are too close to, or on the wrong side of, the decision surface generated by a support vector machine. Zeng and Martinez (2003) remove, while training a neural network, instances that have a low probability of being labeled correctly, where the probability is calculated using the output from the neural network. Filtering has the potential downside of discarding useful instances. However, it is assumed that there are significantly more non-noisy instances and that throwing away a few correct instances with the noisy instances will not have a negative impact on a large data set.

Weighting the instances in a training set has the benefit of not discarding any instances. Rebbapragada and Brodley (2007) weight the instances using expectation maximization to cluster instances that belong to a pair of the classes. The probabilities between classes for each instance are compiled and used to weight the influence of each instance. Smith and Martinez (2014) examine weighting the instances based on their probability of being misclassified.

Similar to weighting the training instances, data cleaning does not discard any instances, but rather strives to correct the noise in the instances. As in filtering, the output from a learning algorithm has been used to clean the data. Automatic data enhancement (Zeng and Martinez 2001) uses the output from a neural network to correct the label for training instances that have a low probability of being correctly labeled. Polishing (Teng 2000, 2003) trains a learning algorithm (in this case a decision tree) to predict the value for each attribute (including the class). The predicted (i.e. corrected) attribute values for the instances that increase generalization accuracy on a validation set are used instead of the uncleaned attribute values.

We differ from the related work in that we do not add artificial noise to the data sets when we examine filtering. Thus, we avoid making any assumptions about the noise source and focus on the noise inherent in the data sets. We also examine the effects of filtering on a larger set of learning algorithms and data sets providing more significance to the generality of the results.

3 Modeling class noise in a discriminative model

Lawrence and Schölkopf (2001) proposed to model a data set probabilistically using a generative model that models the noise process. They assume that the joint distribution \(p(x,y,\hat{y})\) (where x is the set of input features, \(\hat{y}\) is the observed, possibly noisy, class label given in the training set, and y is the actual unknown class label) is factorized as \(p(\hat{y}|y)p(x|y)p(y)\) as shown in Fig. 1a. However, since modeling the prior distribution of the unobserved random variable y is not feasible, it is more practical to estimate the prior distribution of \(p(\hat{y})\) with some assumptions about the class noise as shown in Fig. 1b.

Fig. 1 Graphical model of the generative probabilistic model proposed by Lawrence and Schölkopf (2001)

Here, we follow the premise of Lawrence and Schölkopf by explicitly modeling the possibility that an instance is misclassified. Rather than using a generative model, though, we use a discriminative model since we are focusing on classification tasks and do not require the full joint distribution. Also, discriminative models have been shown to yield better performance on classification tasks (Ng and Jordan 2001). Using a discriminative model that accounts for class noise motivates our investigation of filtering and using a majority voting ensemble.

Let T be a training set composed of instances \(\langle x_i, \hat{y}_i\rangle \) drawn i.i.d. from the underlying data distribution \(\mathcal {D}\). Each instance is composed of an input vector \(x_i\) with a corresponding possibly noisy label vector \(\hat{y}_i\). Given the training data T, a learning algorithm generally seeks to find the most probable hypothesis h that maps each \(x_i \mapsto \hat{y}_i\). For supervised classification problems, most learning algorithms maximize \(p(\hat{y}_i|x_i,h)\) for all instances in T. This is shown graphically in Fig. 2a where the probabilities are estimated using a discriminative approach such as a neural network or a decision tree to induce a hypothesis of the data. Using Bayes’ rule and decomposing T into its individual constituent instances, the maximum a posteriori hypothesis is:
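Under the usual treatment in which \(p(T)\) and the distribution of the inputs do not depend on h, Eq. 1 can be written in the following form. This is a reconstruction from the definitions above and from standard MAP estimation, so the exact notation is an assumption:

\[
\mathop {\mathrm {argmax}}_{h \in \mathcal {H}}\; p(h|T) = \mathop {\mathrm {argmax}}_{h \in \mathcal {H}}\; \frac{p(T|h)\,p(h)}{p(T)} = \mathop {\mathrm {argmax}}_{h \in \mathcal {H}}\; \prod _{i=1}^{|T|} p(\hat{y}_i|x_i,h)\,p(h) \tag{1}
\]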

In Eq. 1, the MAP hypothesis h is found by finding a global optimum where all instances are included in the optimization problem. However, noisy instances are often detrimental for finding the global optimum since they are not representative of the true (and unknown) underlying data distribution \(\mathcal {D}\). The possibility of label noise is not explicitly modeled in this form, which completely ignores \(y_i\). Thus, label noise is generally handled by avoiding overfit such that more probable, simpler hypotheses are preferred (\(p(h)\)). The possibility of label noise can be modeled explicitly by including the latent random variable \(y_i\) with \(x_i\) and \(\hat{y}_i\). Thus, an instance is the triplet \(\langle x_i, \hat{y}_i, y_i\rangle \) and a supervised learning algorithm seeks to maximize \(p(\hat{y}_i|x_i,y_i,h)\), modeled graphically in Fig. 2b. Using the model in Fig. 2b, the MAP hypothesis becomes:
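A form of Eq. 2 that matches the description in the text, with the observed label weighted by the probability of the actual class, is sketched here. It is reconstructed from the surrounding definitions, and the intermediate joint term is an assumption:

\[
\mathop {\mathrm {argmax}}_{h \in \mathcal {H}}\; \prod _{i=1}^{|T|} p(\hat{y}_i, y_i|x_i,h)\,p(h) = \mathop {\mathrm {argmax}}_{h \in \mathcal {H}}\; \prod _{i=1}^{|T|} p(\hat{y}_i|x_i,y_i,h)\,p(y_i|x_i,h)\,p(h) \tag{2}
\]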

Equation 2 shows that for an instance \(x_i\), the probability of an observed class label (\(p(\hat{y}_i|x_i,y_i,h)\)) should be weighted by the probability of the actual class (\(p(y_i|x_i,h)\)).

What we are really interested in is the probability that \(y_i = \hat{y}_i\). Using a discriminative model h trained on T, we can calculate \(p(y_i|\hat{y}_i,x_i,h)\) as

Since the quantity \(p(y_i|\hat{y}_i, h)\) is unknown, \(p(y_i|\hat{y}_i,x_i,h)\) can be approximated as \(p(\hat{y}_i|x_i,h)\) assuming that \(p(y_i|\hat{y}_i, h)\) is represented in h. In other words, the induced discriminative model is able to model if one class label is more likely than another class label given an observed, possibly noisy, label. Otherwise, all class labels are assumed to be equally likely given an observed label. Thus, \(p(y_i|\hat{y}_i,x_i,h)\) can be approximated by finding the class distributions for a given \(x_i\) from an induced discriminative model. That is, after training a learning algorithm on T, the class distribution for an instance \(x_i\) can be calculated based on the output from the learning algorithm. As shown in Eq. 1, \(p(\hat{y}_i|x_i,h)\) is found naturally through a derivation of Bayes’ rule. The quantity \(p(\hat{y}_i|x_i,h)\) is the likelihood of an instance given a hypothesis h, which a learning algorithm tries to maximize for each instance. Further, the dependence on a specific h can be removed by summing over all possible hypotheses h in \(\mathcal {H}\) and multiplying each \(p(\hat{y}_i|x_i,h)\) by p(h):
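Written out from the sentence above, the marginalization over hypotheses (Eq. 3) takes the following form. The left-hand side is an assumption, chosen to agree with the later use of \(p(\hat{y}_i|x_i)\) as the hypothesis-independent approximation of \(p(y_i|\hat{y}_i,x_i)\):

\[
p(\hat{y}_i|x_i) = \sum _{h \in \mathcal {H}} p(\hat{y}_i|x_i,h)\,p(h) \tag{3}
\]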

This formulation is infeasible though because (1) it is not practical (or possible) to sum over the set of all hypotheses, (2) calculating p(h) is non-trivial, and (3) not all learning algorithms produce a probability distribution. These limitations make probabilistic generative models attractive, such as the kernel Fisher discriminant algorithm (Lawrence and Schölkopf 2001). However, for classification tasks, generative models generally have a higher asymptotic error than discriminative models (Ng and Jordan 2001). The following section shows how we estimate \(p(y_i|\hat{y}_i,x_i,h)\).

This framework for modeling class noise in a discriminative model motivates the use of removing instances with low \(p(y_i| \hat{y}_i, h)\) and the use of ensembles to lessen the dependence on a given hypothesis h. Following Eq. 2, removing instances with low \(p(y_i| x_i, h)\) will increase the global p(h|T) since \(p(\hat{y}_i|x_i,y_i,h)\) will be low. Further, following Eq. 3, an ensemble should theoretically be more robust to the bias of a particular hypothesis and noise by utilizing multiple overfit avoidance techniques. This motivates our examination of a majority voting ensemble composed of models induced by different learning algorithms as a filtering technique and as a classifier.

4 Methodology

In this section, we present how we calculate \(p(y_i|\hat{y}_i, x_i, h)\) and the learning algorithms and data sets that we use in our analysis. We also provide an overview of our experiments.

4.1 Calculating \(p(y_i|\hat{y}_i,x_i,h)\)

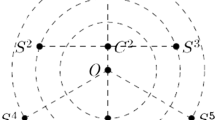

To calculate \(p(y_i|\hat{y}_i,x_i,h)\) for each instance, we use an induced model from training a learning algorithm on the training set T. To lessen the dependence of \(p(y_i|\hat{y}_i,x_i,h)\) on a particular h, we approximate marginalizing over the hypothesis space \(\mathcal {H}\) by selecting a diverse set of learning algorithms to represent \(\mathcal {H}\). The diversity of the learning algorithms refers to the learning algorithms not having the same classification for all of the instances and is determined using unsupervised meta-learning (UML) (Lee and Giraud-Carrier 2011). UML first uses Classifier Output Difference (COD) (Peterson and Martinez 2005) to measure the diversity between learning algorithms. COD measures the distance between two learning algorithms as the probability that the learning algorithms make different predictions. UML then clusters the learning algorithms based on their COD scores with hierarchical agglomerative clustering. We considered 20 learning algorithms from Weka with their default parameters (Hall et al. 2009). The resulting dendrogram is shown in Fig. 3, where the height of the line connecting two clusters corresponds to the distance (COD value) between them. A cut-point of 0.18 was chosen to create 9 clusters and a representative algorithm from each cluster was used to create a diverse set of learning algorithms. The learning algorithms that were used are listed in Table 1. UML provides a diverse set of learning algorithms intended to be representative of \(\mathcal {H}\).
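The COD distance described above can be illustrated with a short sketch. It estimates the probability of disagreement as the fraction of instances on which two prediction lists differ. The function names and the dictionary layout are hypothetical, and the subsequent hierarchical clustering step is not shown.

```python
from typing import Dict, Hashable, Sequence, Tuple


def classifier_output_difference(preds_a: Sequence[Hashable],
                                 preds_b: Sequence[Hashable]) -> float:
    """Fraction of instances on which two classifiers predict different labels."""
    if len(preds_a) != len(preds_b):
        raise ValueError("prediction lists must cover the same instances")
    if not preds_a:
        raise ValueError("at least one instance is required")
    disagreements = sum(1 for a, b in zip(preds_a, preds_b) if a != b)
    return disagreements / len(preds_a)


def cod_matrix(predictions: Dict[str, Sequence[Hashable]]) -> Dict[Tuple[str, str], float]:
    """Pairwise COD for every unordered pair of learners (keys sorted by name)."""
    names = sorted(predictions)
    return {
        (a, b): classifier_output_difference(predictions[a], predictions[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }
```

The resulting pairwise distances are the kind of input an agglomerative clustering routine would take to produce a dendrogram such as the one in Fig. 3.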

4.2 Experiments

Given a method for estimating \(p(y_i|\hat{y}_i, x_i, h)\) and for lessening the dependence on a specific h, we examine several techniques for filtering instances with low \(p(y_i|\hat{y}_i, x_i, h)\) and for constructing a voting ensemble.

4.2.1 Misclassification filters

In this paper, we examine misclassification filters which filter any instance that is misclassified by a given learning algorithm. Given that a number of different learning algorithms could be employed for filtering, we conduct an extensive evaluation of filtering misclassified instances using a diverse set of learning algorithms as described in the previous section. Each learning algorithm first filters instances from the training set that were misclassified and then induces a model of the data using the filtered training set. Misclassification filters using a single learning algorithm establish a good baseline to compare against.
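A minimal sketch of a single-algorithm misclassification filter follows, assuming a learner is any function that maps a training set to a prediction function. The `nearest_mean_learner` is a hypothetical toy stand-in and is not one of the nine algorithms used in the paper.

```python
from collections import defaultdict
from typing import Callable, Hashable, List, Sequence, Tuple

Instance = Tuple[Sequence[float], Hashable]    # (feature vector, observed label)
Model = Callable[[Sequence[float]], Hashable]  # a trained hypothesis h
Learner = Callable[[List[Instance]], Model]    # a learning algorithm: T -> h


def nearest_mean_learner(train: List[Instance]) -> Model:
    """Toy learner (hypothetical): predict the class whose feature mean is closest."""
    sums, counts = {}, defaultdict(int)
    for x, y in train:
        sums[y] = [s + v for s, v in zip(sums.get(y, [0.0] * len(x)), x)]
        counts[y] += 1
    means = {y: [s / counts[y] for s in total] for y, total in sums.items()}

    def predict(x: Sequence[float]) -> Hashable:
        return min(means, key=lambda y: sum((a - b) ** 2 for a, b in zip(x, means[y])))

    return predict


def misclassification_filter(train: List[Instance], learner: Learner) -> List[Instance]:
    """Train on the full set, then keep only the instances the model gets right."""
    model = learner(train)
    return [(x, y) for x, y in train if model(x) == y]


def train_with_biased_filter(train: List[Instance], learner: Learner):
    """Filter with a learner, then induce the final model with the same learner."""
    filtered = misclassification_filter(train, learner)
    return learner(filtered), filtered
```

Passing a different learner to `misclassification_filter` than the one used for the final model gives the unbiased single-algorithm variant. The sketch does not guard against a filter that removes every instance of a class.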

4.2.2 Ensemble filter

We also examine an ensemble filter that removes instances that are misclassified by different percentages of the 9 learning algorithms. The ensemble filter more closely approximates \(p(y|\hat{y}_i,x_i)\) from Eq. 3 since it sums over a set of learning algorithms (which in this case were chosen to be diverse and represent a larger subset of the hypothesis space \(\mathcal {H}\)), lessening the dependence on a single hypothesis h. For the ensemble filter, \(p(y|\hat{y}_i,x_i)\) is estimated using a subset of learning algorithms \(\mathcal {L}\):
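Written out from the definitions that follow (a reconstruction, so the exact notation is an assumption), Eq. 4 takes the form:

\[
p(y|\hat{y}_i,x_i) \approx \frac{1}{|\mathcal {L}|} \sum _{j=1}^{|\mathcal {L}|} p(\hat{y}_i|x_i, l_j(T)) \tag{4}
\]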

where \(l_j(T)\) is the hypothesis from the jth learning algorithm trained on training set T. From Eq. 3, p(h) is estimated as \(\frac{1}{|\mathcal {L}|}\) for the jth hypothesis generated from training the learning algorithms in \(\mathcal {L}\) on T and as zero for all of the other hypotheses in \(\mathcal {H}\). Also, \(p(\hat{y}_i|x_i, l_j(T))\) is estimated using the indicator function \(\mathbbm {1}(h(x_i)=\hat{y}_i)\) since not all learning algorithms produce a probability distribution over the output classes. Set up as such, the ensemble filter filters an instance that is misclassified by x % of the learning algorithms in the ensemble. In this paper, we examine an ensemble filter that removes instances that are misclassified by 50, 70, and 90 percent of the learning algorithms in the ensemble. One of the problems of using an ensemble filter is having to choose the percentage of learning algorithms that misclassify an instance for filtering. For the results, we report the accuracy from the percentage that produces the highest accuracy using 5 by 10-fold cross-validation to choose the best percentage for each data set. This method highlights the impact of using an ensemble filter; in practice, however, a validation set is often used to determine the percentage that would be used.
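The indicator-based ensemble filter can be sketched as follows. The sketch assumes the models have already been trained on T, and it reads "misclassified by x %" as "misclassified by at least x %", which is an interpretation rather than something the text states. Names are hypothetical.

```python
from typing import Callable, Hashable, List, Sequence, Tuple

Instance = Tuple[Sequence[float], Hashable]    # (feature vector, observed label)
Model = Callable[[Sequence[float]], Hashable]  # a hypothesis l_j(T), already trained


def misclassification_count(instance: Instance, models: Sequence[Model]) -> int:
    """Number of ensemble members whose prediction differs from the observed label."""
    x, observed = instance
    return sum(1 for h in models if h(x) != observed)


def ensemble_filter(train: List[Instance], models: Sequence[Model],
                    percent: int) -> List[Instance]:
    """Drop instances misclassified by at least `percent` % of the models.

    Integer arithmetic avoids floating-point surprises at the threshold
    (for example, 0.7 * 10 is not exactly 7 in binary floating point).
    """
    n = len(models)
    if n == 0:
        raise ValueError("the ensemble must contain at least one model")
    return [inst for inst in train
            if misclassification_count(inst, models) * 100 < percent * n]
```

With 9 models, the 50, 70, and 90 percent settings correspond to removing instances misclassified by at least 5, 7, and 9 models respectively under this reading.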

4.2.3 Adaptive filter

We also examine an adaptive filtering approach that iteratively builds a set of filtering learning algorithms. At each step, it selects from a set of candidate learning algorithms \(\mathcal {L}\) the one that produces the highest classification accuracy on a validation set when added to the set of learning algorithms used for filtering, as shown in Algorithm 1. The function \(\textit{runLA}(F)\) trains a learning algorithm on a data set using the filter set F to filter the instances and returns the accuracy of the learning algorithm on a validation set. As with the ensemble filter, instances are removed that are misclassified by a given percentage of the filtering learning algorithms. The idea is to choose an optimal subset of learning algorithms through a greedy search of the candidate filtering algorithms. For the results, we report the accuracy from the percentage that produces the highest accuracy using n-fold cross-validation to choose the best percentage for each data set.
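The greedy search described above might look like the following sketch. It is not a transcription of Algorithm 1: the stopping rule (stop when no candidate improves validation accuracy) and the function names are assumptions, and `run_la` stands in for \(\textit{runLA}(F)\).

```python
from typing import Callable, Hashable, List, Sequence, Tuple


def adaptive_filter_selection(
    candidates: Sequence[Hashable],
    run_la: Callable[[Tuple[Hashable, ...]], float],
) -> Tuple[List[Hashable], float]:
    """Greedy forward selection of the learning algorithms used for filtering.

    `run_la(F)` is assumed to filter the training data with filter set F,
    train the target learner, and return its validation accuracy.
    """
    selected: List[Hashable] = []
    remaining = list(candidates)
    best_acc = run_la(tuple(selected))  # baseline: no filtering
    while remaining:
        # Score every candidate when added to the current filter set.
        scored = [(run_la(tuple(selected + [c])), c) for c in remaining]
        acc, choice = max(scored, key=lambda pair: pair[0])
        if acc <= best_acc:  # assumed stopping rule: no further improvement
            break
        selected.append(choice)
        remaining.remove(choice)
        best_acc = acc
    return selected, best_acc
```

Because the search is greedy, it evaluates a number of filter sets that grows quadratically with the number of candidates rather than all subsets, at the cost of possibly missing the best subset.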

4.2.4 Majority voting ensemble

In addition, we examine the use of a majority voting ensemble compared to filtering misclassified instances. The majority voting ensemble is composed of the diverse set of learning algorithms as described in Sect. 4.1. The classification of an instance is the class that receives the most votes from the trained ensembled models.
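An equally weighted vote over trained models can be sketched in a few lines. The tie-breaking rule (the tied class that was voted for first, in model order) is an assumption, since the text does not say how ties are resolved.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence

Model = Callable[[Sequence[float]], Hashable]  # a trained hypothesis


def majority_vote(models: Sequence[Model], x: Sequence[float]) -> Hashable:
    """Return the class predicted by the most models for instance x."""
    if not models:
        raise ValueError("the ensemble must contain at least one model")
    votes = Counter(h(x) for h in models)
    # max() returns the first maximal key; Counter keeps first-seen order,
    # so ties go to the class that received a vote earliest.
    return max(votes, key=votes.get)
```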

4.3 Evaluation

Each method is evaluated using 5 by 10-fold cross-validation (running 10-fold cross-validation 5 times, each time with a different seed to partition the data). We examine filtering using the 9 chosen learning algorithms on a set of 47 data sets from the UCI data repository and 7 non-UCI data sets (Thomson and McQueen 1996; Salojärvi et al. 2005; Sayyad Shirabad and Menzies 2005; Stiglic and Kokol 2009). For filtering, we examine two methods for training the filtering algorithms: (1) removing the misclassified instances when trained on the entire training set and (2) using cross-validation on the training set that removes instances that are misclassified in the validation set. The number of folds for using cross-validation for the training set was set to 2, 3, 4, and 5. Table 2 shows the data sets used in this study organized according to the number of instances, number of attributes, and attribute type. The non-UCI data sets are in bold.
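The two partitioning schemes in this paragraph can be sketched as below: repeated k-fold splits with a different seed per repetition, and a cross-validated filter that only removes instances misclassified while held out. The folds here are unstratified and the seeding scheme is hypothetical; the text does not say whether stratification was used.

```python
import random
from typing import Callable, Hashable, Iterator, List, Sequence, Tuple

Instance = Tuple[Sequence[float], Hashable]
Model = Callable[[Sequence[float]], Hashable]
Learner = Callable[[List[Instance]], Model]


def shuffled_folds(n: int, k: int, seed: int) -> List[List[int]]:
    """Partition indices 0..n-1 into k folds after a seeded shuffle."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def repeated_kfold(n: int, k: int = 10, repeats: int = 5,
                   base_seed: int = 0) -> Iterator[Tuple[int, int, List[int], List[int]]]:
    """Yield (repeat, fold, train_indices, test_indices) for repeats x k-fold CV."""
    for r in range(repeats):
        folds = shuffled_folds(n, k, base_seed + r)
        for f, test in enumerate(folds):
            train = [j for g, fold in enumerate(folds) if g != f for j in fold]
            yield r, f, train, test


def cross_validated_filter(train: List[Instance], learner: Learner,
                           k: int, seed: int = 0) -> List[Instance]:
    """Remove instances that are misclassified when they are in the held-out fold."""
    keep: List[int] = []
    for held_out in shuffled_folds(len(train), k, seed):
        held = set(held_out)
        model = learner([inst for j, inst in enumerate(train) if j not in held])
        keep.extend(j for j in held_out if model(train[j][0]) == train[j][1])
    return [train[j] for j in sorted(keep)]
```

In the setup described above, `cross_validated_filter` would be applied inside each outer training split with k set to 2, 3, 4, or 5.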

Statistical significance between pairs of algorithms is determined using the Wilcoxon signed-ranks test as suggested by Demšar (2006). We emphasize the extensive nature of this evaluation:

1. Filtering is examined on 9 diverse learning algorithms.
2. 9 diverse learning algorithms are examined as misclassification filtering techniques.
3. In addition to the single algorithm misclassification filters, an ensemble filter and an adaptive filter are examined.
4. Each filtering method is examined on a set of 54 data sets using 5 by 10-fold cross-validation.
5. Each filtering method is examined on the entire training set as well as using 2-, 3-, 4-, and 5-fold cross-validation.
6. A majority voting ensemble is examined on a set of 54 data sets using 5 by 10-fold cross-validation.
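The Wilcoxon signed-ranks comparison used for the significance tests above can be sketched as follows, taking one accuracy per data set for each of two methods. This is an illustrative variant, not the exact procedure used for the reported p values: it drops zero differences (other variants split their ranks between the two sums), assigns average ranks to ties, and uses a normal approximation without tie correction, which is only reasonable for larger numbers of data sets.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float, int]:
    """Return (R+, R-, n): rank sums of positive/negative differences, n nonzero pairs."""
    if len(a) != len(b):
        raise ValueError("paired samples must have the same length")
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences dropped
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for t in range(i, j + 1):
            ranks[order[t]] = average_rank
        i = j + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus, len(diffs)


def wilcoxon_p_normal(r_plus: float, r_minus: float, n: int) -> float:
    """Two-sided p value from the normal approximation of T = min(R+, R-)."""
    t = min(r_plus, r_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))
```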

5 Results

In this section, we present the results from filtering the 54 data sets using (1) a biased misclassification filter (the same learning algorithm to filter misclassified instances is used to induce a model of the data), (2) the ensemble filter, and (3) the adaptive filter as well as a voting ensemble. Our results can be summarized as follows: (1) using a voting ensemble is generally preferable to filtering, (2) when filtering, our results suggest that using the ensemble filter in all cases produces the best results, and (3) filtering is preferable to a voting ensemble in some cases with high amounts of noise. Except for the adaptive filter, we find that using cross-validation on the training set for filtering results in a lower accuracy (and often significantly lower) than using the entire training set and, as such, the following results for the biased filter and the ensemble filter are from using the entire training set for filtering rather than using cross-validation. We first show how filtering affects each learning algorithm in Sect. 5.1. Next, we examine using a set of data set measures to determine when filtering is the most effective in Sect. 5.2. In Sect. 5.3, we compare filtering with a voting ensemble.

5.1 Filtering results

The results of the biased, ensemble, and adaptive filters are summarized in Table 3, which shows the average classification accuracy for each learning algorithm and filtering algorithm pair. The values in bold represent those that are a statistically significant improvement over not filtering. The results of the statistical significance tests for each of the learning algorithms are provided in Tables 10, 11, 12, 13, 14, 15, 16, 17 and 18 in “Appendix 1”.

We find that using a biased filter does not significantly increase the classification accuracy for any of the learning algorithms and that using a biased filter significantly decreases the classification accuracy for the LWL, naïve Bayes, Ridor and RIPPER learning algorithms (Tables 13, 14, 17, 18). These results suggest that simply removing the instances misclassified by a single learning algorithm is not sufficient. Bear in mind that these results reflect not adding any artificial noise to the training set. In the case where artificial noise is added to the training set (as was commonly done in previous works), using a biased filter may result in an improvement in accuracy. However, most real-world scenarios do not artificially add noise to their data set but are concerned with the inherent noise found within it.

For all of the learning algorithms, the ensemble filter significantly increases the classification accuracy over not filtering and over the other filtering techniques. An ensemble generally provides better predictive performance than any of the constituent learning algorithms (Polikar 2006) and generally yields better results when the underlying ensembled models are diverse (Kuncheva and Whitaker 2003). Thus, by using a more powerful model, only the noisiest instances are removed. This provides empirical evidence supporting the notion that filtering instances with low \(p(\hat{y}_i|x_i)\) that are not dependent on a single hypothesis is preferred to filtering instances where the probability of the class is dependent on a particular hypothesis \(p(\hat{y}_i|x_i,h)\) as outlined in Eq. 3.

Surprisingly, the adaptive filter does not outperform the ensemble filter and, in one case, it does not even outperform training on unfiltered data. Perhaps this is because it overfits the training data since the best accuracy is chosen on the training set. Adaptive filtering has significantly better results when cross-validation is used to filter misclassified instances as opposed to removing misclassified instances that were also used to train the filtering algorithm. Even when cross-validation is used, the results are not significantly better than using the ensemble filter.

Examining each learning algorithm individually, we find that some learning algorithms are more robust to noise than others. To determine which learning algorithms are more robust to noise, we compare the accuracy of the learning algorithms without filtering to the accuracy obtained using the ensemble filter. The p values from the Wilcoxon signed-ranks statistical significance test are shown in Table 4 ordered from greatest (least significant impact) to least reading from left to right. We see that random forests and decision trees are the most robust to noise as filtering has the least significant impact on their accuracy. This is not too surprising given that the C4.5 algorithm was designed to take noise into account and random forests are built using decision trees. Ridor and 5-nearest neighbor (IB5) are more robust to noise, but still greatly improve with filtering. IB5 is more robust to noise since it compares with the 5 nearest neighbors of an instance. If K were set to 1, then filtering would have a greater effect on the accuracy. Filtering has the most significant effect on the accuracy of the last five learning algorithms: MLP, NNge, LWL, RIPPER, and naïve Bayes.

5.2 Analysis of when to filter

Using only the inherent noise in a data set, the efficacy of filtering is limited and can be detrimental in some data sets. Thus, we examine the cases in which filtering significantly improves the classification accuracy. This investigation is similar to the recent work by Sáez et al. (2013), who investigate creating a set of rules to understand when to filter using a 1-nearest neighbor learning algorithm. They use a set of data complexity measures from Ho and Basu (2002). The complexity measures are designed for binary classification problems, yet we do not limit ourselves to binary classification problems. As such, we use a subset of the data complexity measures, shown in Table 5, that have been extended to handle multi-class problems (Orriols-Puig et al. 2009). In addition, we examine a set of hardness measures (Smith et al. 2014) shown in Table 6. The hardness measures are designed to determine and characterize instances that have a high likelihood of being misclassified and are taken with respect to a specific instance. For the hardness measures, the “disjunct” refers to the class leaf in a decision tree that classifies an investigated instance. We examine using the set of data complexity measures and the hardness measures to create rules and/or a classifier to determine when to use filtering. We set up the classification problem similarly to Sáez et al., where the features are the complexity measures and the hardness measures. The class label is set to “TRUE” if filtering significantly improves the classification accuracy for a data set according to the Wilcoxon signed-ranks test; otherwise, it is set to “FALSE”. We also examine predicting the difference in accuracy between using and not using a filter. Unlike Sáez et al., we find that the data complexity measures and the hardness measures do not create a satisfactory classifier to determine when to filter. Granted, we examine more learning algorithms and do not artificially add noise to the data sets, which leaves few data sets where filtering significantly improves the classification accuracy. In the study by Sáez et al., 75 % of the data sets had at least 5 % noise added, providing more positive examples. Future work is required to determine when to use filtering on unmodified data sets. Based on our results, we would recommend always using the ensemble filter for all of the learning algorithms as it significantly outperforms the other filtering techniques.

5.3 Voting ensemble versus filtering

In this section, we compare the results of filtering using the ensemble filter with a voting ensemble. The voting ensemble uses the same learning algorithms as the ensemble filter (Table 1) and the vote from each learning algorithm is equally weighted. Table 7 compares the voting ensemble with using the ensemble filter on each of the investigated learning algorithms giving the average accuracy, the p-value, and the number of times that the accuracy of a voting ensemble is greater than, equal to, or less than using the ensemble filter. The results for each data set are provided in Table 19 in “Appendix 2”. With no artificially generated noise, a voting ensemble achieves significantly higher classification accuracy than the ensemble filter for each of the examined learning algorithms. This is not too surprising considering that previous research has shown that ensemble methods address issues that are common to all non-ensemble learning algorithms (Dietterich 2000) and that ensemble methods generally obtain a greater accuracy than that from a single learning algorithm that makes up part of the ensemble (Opitz and Maclin 1999). Considering the computational requirements for training, using a voting ensemble for classification rather than filtering appears to be more beneficial.
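As an illustration of equally weighted voting, the following minimal sketch combines the predicted labels of several learners by majority vote. The function names and the tie-breaking rule (the first learner whose label reaches the top count wins) are assumptions; how ties were resolved in the experiments is not stated here.

```python
from collections import Counter

def majority_vote(predictions):
    """predictions: the labels predicted by each learner for one instance."""
    counts = Counter(predictions)
    top = max(counts.values())
    # Tie-breaking by learner order is an assumption of this sketch.
    for label in predictions:
        if counts[label] == top:
            return label

def ensemble_predict(per_learner_predictions):
    """per_learner_predictions[j][i]: label from learner j for instance i."""
    return [majority_vote(list(votes)) for votes in zip(*per_learner_predictions)]
```

Each learner contributes exactly one vote per instance, so no learner's prediction is weighted more heavily than another's.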

Many previous studies (Zhu and Wu 2004; Lawrence and Schölkopf 2001; Brodley and Friedl 1999; Verbaeten and Van Assche 2003) have shown that when a large amount of artificial noise is added to a data set (i.e., \({\ge }10\,\%\)), filtering outperforms a voting ensemble. We examine which of the 54 data sets have a high percentage of noise using instance hardness (Smith et al. 2014) to identify suspected noisy instances. Instance hardness approximates the likelihood that an instance will be misclassified by evaluating the classification of an instance from a set of learning algorithms \(\mathcal {L}\): \(p(\hat{y}_i|x_i,\mathcal {L})\). The set of learning algorithms \(\mathcal {L}\) is composed of the learning algorithms shown in Table 1. We consider instances that have a probability greater than 0.9 of being misclassified to be noisy. Table 8 shows the accuracies from a voting ensemble and from the considered learning algorithms using the ensemble filter for the subset of data sets with more than 10 % noisy instances. Examining the noisier data sets shows that the gains from using the ensemble filter are more noticeable. However, only 9 out of the 54 investigated data sets were identified as having more than 10 % noisy instances. We ran a Wilcoxon signed-ranks test, but with such a small sample it is difficult to establish the statistical significance of using the ensemble filter over using a voting ensemble. Based on the small sample provided here, training a learning algorithm on a filtered data set is statistically equivalent to training a voting ensemble classifier. The computation required to train an ensemble is less than that required to train an ensemble for filtering and then train another learning algorithm on the filtered data set. However, a single learning algorithm trained on the filtered data set has the benefit that only one learning algorithm is queried for a novel instance. Future work will include discovering whether a smaller subset of learning algorithms for filtering approximates the ensemble filter, in order to reduce the computational complexity.
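The noise estimate described above can be sketched as follows. This is a simplified illustration, assuming that each learner supplies the probability it assigns to an instance's given label and that hardness is one minus the average of those probabilities; the input layout and function names are hypothetical.

```python
def instance_hardness(true_label_probs):
    """true_label_probs[j][i]: probability that learner j assigns to the
    given (possibly noisy) label of instance i."""
    n_learners = len(true_label_probs)
    return [1.0 - sum(col) / n_learners for col in zip(*true_label_probs)]

def noisy_fraction(hardness, threshold=0.9):
    """Fraction of instances whose hardness exceeds the threshold."""
    flagged = [h > threshold for h in hardness]
    return sum(flagged) / len(flagged)

# Three learners, four instances (made-up probabilities).
probs = [
    [0.9, 0.05, 0.5, 0.0],
    [0.8, 0.05, 0.6, 0.1],
    [1.0, 0.05, 0.4, 0.05],
]
hardness = instance_hardness(probs)
is_noisy_data_set = noisy_fraction(hardness) > 0.10  # the 10 % criterion
```

If only hard 0/1 predictions were available from 9 learners, a threshold of 0.9 would flag an instance only when all 9 misclassify it, since 8/9 is below 0.9.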

Examining the noisier data sets shows that filtering has a more significant effect on classification accuracy; however, the amount of noise is not the only factor that needs to be considered. For example, 32.2 % of the instances in the primary-tumor data set are noisy, yet only one learning algorithm achieves a greater classification accuracy than the voting ensemble. On the other hand, the classification accuracy on the ar1 and ozone data sets for all of the considered learning algorithms trained on filtered data is greater than that of the voting ensemble, despite these data sets having only 3.3 and 0.5 % noisy instances, respectively. Thus, there are other unknown data set features affecting when filtering is appropriate. Future work also includes discovering and examining data set features that are indicative of when filtering should be used.

We further investigate the robustness of the majority voting ensemble to noise by applying the ensemble filter to the training data for the voting ensemble. We find that a majority voting ensemble is significantly better without filtering. The summary results are shown in Table 9, and the full results for each data set can be found in Table 20 in “Appendix 2”. Table 9 divides the data sets into subsets that have more than 10 % noisy instances (“Noisy”) and those that have an original accuracy less than 90, 80, 70, 60, and 50 % averaged across the investigated learning algorithms (\({<}N\,\%\)). Even with harder data sets and more noisy instances, using unfiltered training data produces significantly higher classification accuracy for the voting ensemble. Thus, we find that a majority voting ensemble is more robust to noise than filtering in most cases. The strength of a voting ensemble comes from the diversity of the ensembled learning algorithms. However, the models induced by the learning algorithms trained on the filtered training data are less diverse, since the diversity often comes from how a learning algorithm treats a noisy instance; this lessens the power of the voting ensemble. As evidence, we examined a voting ensemble consisting of C4.5, random forest, and Ridor, which are three of the more similar learning algorithms according to unsupervised meta-learning (see Sect. 4). When trained on the filtered training data, the less diverse voting ensemble achieves a significantly lower average classification accuracy of 82.09 % compared to 83.62 % from the voting ensemble composed of the 9 examined learning algorithms. Thus, some noise in the training set is beneficial for creating diversity in the ensemble.
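One simple way to make the notion of ensemble diversity concrete is the pairwise disagreement measure, sketched below. This is a stand-in for illustration only: the similarity of learning algorithms discussed above was assessed with unsupervised meta-learning, not with this measure, and the function names are hypothetical.

```python
from itertools import combinations

def disagreement(preds_a, preds_b):
    """Fraction of instances on which two learners predict different labels."""
    return sum(a != b for a, b in zip(preds_a, preds_b)) / len(preds_a)

def mean_pairwise_disagreement(per_learner_predictions):
    """Average disagreement over all pairs of learners in the ensemble."""
    pairs = list(combinations(per_learner_predictions, 2))
    return sum(disagreement(a, b) for a, b in pairs) / len(pairs)
```

A value of 0 means that every learner predicts identically, in which case voting cannot correct any individual learner's errors; larger values indicate a more diverse ensemble.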

6 Conclusions and Discussion

In this paper, we presented an extensive empirical evaluation of misclassification filters on a set of 54 multi-class data sets and 9 diverse learning algorithms. As opposed to other work on filtering, we used a large set of data sets and learning algorithms and we did not artificially add noise to the data set. In previous works, noise was added to a data set to verify that the noise filtering method was effective and that filtering was more effective when more noise was present. However, the artificial noise may not be representative of the actual noise and the impact of filtering on an unmodified data set is not always clear.

Using a set of multi-class data sets, we focused our analysis on accuracy. However, for many 2-class problems, other metrics such as precision or recall could be more indicative of good performance. We also did not examine the case of class imbalance, which may affect the probability of a class, or cases where one class may be more important than another, such as a false negative in diagnosing a terminal disease. These are important issues that arise in many real-world machine learning applications. In cases of extreme data imbalance, all of the instances of a majority class could be removed because they have low \(p(y_i|\hat{y}_i, x_i, h)\). Thus, other techniques to account for class imbalance should also be used. This risk also highlights a benefit of using a voting ensemble, as instances will not be discarded. However, the voting ensemble requires a higher computational budget to induce the ensembled models. For dealing with class imbalance or classes weighted differently by importance, we suggest using a voting ensemble with another technique that addresses the class imbalance or weighted classes.

Through our experiments we found that, without artificially adding label noise, using the same learning algorithm for filtering and for inducing a model of the data can be significantly detrimental and does not significantly increase the classification accuracy even when examining harder data sets. Using the ensemble filter significantly improved the accuracy over not filtering and outperformed both the adaptive filtering method and using each learning algorithm individually as a filter for all of the investigated learning algorithms. We compared filtering with a voting ensemble and found that a voting ensemble achieves significantly higher classification accuracy than any of the other considered learning algorithms trained on filtered data. A majority voting ensemble trained on unfiltered data significantly outperforms a voting ensemble trained on filtered data. Thus, a voting ensemble exhibits robustness to noise in the training set and is preferable to filtering.

Notes

As opposed to an ensemble composed of models induced by the same learning algorithm, such as bagging or boosting.

The NNge learning algorithm did not finish running two data sets: eye-movements and Magic telescope. RIPPER did not finish on the lung cancer data set. In these cases, the data sets are omitted from the presented results. As such, NNge was evaluated on a set of 52 data sets and RIPPER was evaluated on a set of 53 data sets.

References

Bishop CM, Nasrabadi NM (2006) Pattern recognition and machine learning, vol 1. Springer, New York

Brodley CE, Friedl MA (1999) Identifying mislabeled training data. J Artif Intell Res 11:131–167

Collobert R, Sinz F, Weston J, Bottou L (2006) Trading convexity for scalability. In: Proceedings of the 23rd international conference on machine learning, pp 201–208

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Dietterich TG (2000) Ensemble methods in machine learning. In: Multiple classifier systems, Lecture Notes in Computer Science, vol 1857. Springer, Berlin, pp 1–15

Freund Y (1990) Boosting a weak learning algorithm by majority. In: Proceedings of the third annual workshop on computational learning theory, pp 202–216

Gamberger D, Lavrač N, Džeroski S (2000) Noise detection and elimination in data preprocessing: experiments in medical domains. Appl Artif Intell 14(2):205–223

Hall M, Frank E, Holmes G, Pfahringer B, Reutemann P, Witten IH (2009) The WEKA data mining software: an update. SIGKDD Explor Newsl 11(1):10–18

Ho TK, Basu M (2002) Complexity measures of supervised classification problems. IEEE Trans Pattern Anal Mach Intell 24:289–300

John GH (1995) Robust decision trees: removing outliers from databases. In: Knowledge discovery and data mining, pp 174–179

Kuncheva LI, Whitaker CJ (2003) Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach Learn 51(2):181–207

Lawrence ND, Schölkopf B (2001) Estimating a kernel Fisher discriminant in the presence of label noise. In: Proceedings of the 18th international conference on machine learning, pp 306–313

Lee J, Giraud-Carrier C (2011) A metric for unsupervised metalearning. Intell Data Anal 15(6):827–841

Nettleton DF, Orriols-Puig A, Fornells A (2010) A study of the effect of different types of noise on the precision of supervised learning techniques. Artif Intell Rev 33(4):275–306

Ng AY, Jordan MI (2001) On discriminative vs. generative classifiers: a comparison of logistic regression and naive Bayes. In: Advances in neural information processing systems, vol 14, pp 841–848

Opitz DW, Maclin R (1999) Popular ensemble methods: an empirical study. J Artif Intell Res 11:169–198

Orriols-Puig A, Macià N, Bernadó-Mansilla E, Ho TK (2009) Documentation for the data complexity library in C++. Tech. Rep. 2009001, La Salle - Universitat Ramon Llull

Peterson AH, Martinez TR (2005) Estimating the potential for combining learning models. In: Proceedings of the ICML Workshop on meta-learning, pp 68–75

Polikar R (2006) Ensemble based systems in decision making. IEEE Circuits Syst Mag 6(3):21–45

Quinlan JR (1993) C4.5: Programs for machine learning. Morgan Kaufmann, San Mateo

Rebbapragada U, Brodley CE (2007) Class noise mitigation through instance weighting. In: Proceedings of the 18th European conference on machine learning, pp 708–715

Sáez JA, Luengo J, Herrera F (2013) Predicting noise filtering efficacy with data complexity measures for nearest neighbor classification. Pattern Recognit 46(1):355–364

Salojärvi J, Puolamäki K, Simola J, Kovanen L, Kojo I, Kaski S (2005) Inferring relevance from eye movements: feature extraction. Tech. Rep. A82, Helsinki University of Technology

Sayyad Shirabad J, Menzies T (2005) The PROMISE repository of software engineering databases. School of Information Technology and Engineering, University of Ottawa, Canada. http://promise.site.uottawa.ca/SERepository/

Schapire RE (1990) The strength of weak learnability. Mach Learn 5:197–227

Segata N, Blanzieri E, Cunningham P (2009) A scalable noise reduction technique for large case-based systems. In: Proceedings of the 8th international conference on case-based reasoning: case-based reasoning research and development, pp 328–342

Servedio RA (2003) Smooth boosting and learning with malicious noise. J Mach Learn Res 4:633–648

Smith MR, Martinez T (2011) Improving classification accuracy by identifying and removing instances that should be misclassified. In: Proceedings of the IEEE international joint conference on neural networks, pp 2690–2697

Smith MR, Martinez T (2014) Reducing the effects of detrimental instances. In: Proceedings of the 13th international conference on machine learning and applications, pp 183–188

Smith MR, Martinez T, Giraud-Carrier C (2014) An instance level analysis of data complexity. Mach Learn 95(2):225–256

Stiglic G, Kokol P (2009) GEMLer: gene expression machine learning repository. University of Maribor, Faculty of Health Sciences. http://gemler.fzv.uni-mb.si/

Teng C (2003) Combining noise correction with feature selection. Data warehousing and knowledge discovery, Lecture Notes in Computer Science, vol 2737, pp 340–349

Teng CM (2000) Evaluating noise correction. In: PRICAI, pp 188–198

Thomson K, McQueen RJ (1996) Machine learning applied to fourteen agricultural datasets. Tech. Rep. 96/18, The University of Waikato

Tomek I (1976) An experiment with the edited nearest-neighbor rule. IEEE Trans Syst Man Cybern 6:448–452

Verbaeten S, Van Assche A (2003) Ensemble methods for noise elimination in classification problems. In: Proceedings of the 4th international conference on multiple classifier systems, pp 317–325

Wilson DL (1972) Asymptotic properties of nearest neighbor rules using edited data. IEEE Trans Syst Man Cybern 2–3:408–421

Wilson DR, Martinez TR (2000) Reduction techniques for instance-based learning algorithms. Mach Learn 38(3):257–286

Zeng X, Martinez TR (2001) An algorithm for correcting mislabeled data. Intell Data Anal 5:491–502

Zeng X, Martinez TR (2003) A noise filtering method using neural networks. In: Proceedings of the international workshop of soft computing techniques in instrumentation, measurement and related applications

Zhu X, Wu X (2004) Class noise vs. attribute noise: a quantitative study of their impacts. Artif Intell Rev 22:177–210

Appendices

Appendix 1: Statistical Significance Tables

This section provides the results from the statistical significance tests comparing not filtering with filtering with a biased filter, the ensemble filter, and the adaptive filter for the investigated learning algorithms. The results are in Tables 10, 11, 12, 13, 14, 15, 16, 17 and 18. The p values with a value \({<}\)0.05 are in bold and “greater-equal-less” refers to the number of times that the algorithm listed in the row is greater than, equal to, or less than the algorithm listed in the column.
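The “greater-equal-less” counts can be reproduced from two lists of per-data-set accuracies, as in the minimal sketch below. The function name and the example values are hypothetical and are not taken from the tables.

```python
def greater_equal_less(row_acc, col_acc):
    """Count the data sets on which the row algorithm's accuracy is greater
    than, equal to, or less than the column algorithm's accuracy."""
    greater = sum(r > c for r, c in zip(row_acc, col_acc))
    equal = sum(r == c for r, c in zip(row_acc, col_acc))
    less = sum(r < c for r, c in zip(row_acc, col_acc))
    return greater, equal, less

# Made-up accuracies on four data sets.
counts = greater_equal_less([80, 75, 90, 60], [78, 75, 92, 59])
```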

Appendix 2: Ensemble results for each data set

This section provides the results for each data set comparing a voting ensemble with filtering using the ensemble filter for each investigated learning algorithm as well as filtering using the ensemble filter for a voting ensemble. The results comparing a voting ensemble with filtering for each investigated non-ensembled learning algorithm are shown in Table 19. The bold values represent the highest classification accuracy and the rows highlighted in gray are the data sets where filtering with the ensemble filter increased the accuracy over the voting ensemble for all learning algorithms. The results comparing a voting ensemble with a filtered voting ensemble are shown in Table 20. The bold values for the “Ens” column represent if the voting ensemble trained on unfiltered data achieves higher accuracy while the bold values for the “FEns” columns represent if the voting ensemble trained on filtered data achieves higher accuracy than the voting ensemble trained on unfiltered data.

About this article

Cite this article

Smith, M.R., Martinez, T. The robustness of majority voting compared to filtering misclassified instances in supervised classification tasks. Artif Intell Rev 49, 105–130 (2018). https://doi.org/10.1007/s10462-016-9518-2

DOI: https://doi.org/10.1007/s10462-016-9518-2