Abstract

Objective

T2 maps are more vendor independent than other MRI protocols. Multi-echo spin-echo signal decays to a non-zero offset due to imperfect refocusing pulses and Rician noise, causing T2 overestimation by the vendor’s 2-parameter algorithm. The accuracy of the T2 estimate is improved, if the non-zero offset is estimated as a third parameter. Three-parameter Levenberg–Marquardt (LM) T2 estimation takes several minutes to calculate, and it is sensitive to initial values. We aimed for a 3-parameter fitting algorithm that was comparably accurate, yet substantially faster.

Methods

Our approach gains speed by converting the 3-parameter minimisation problem into an empirically unimodal univariate problem, which is quickly minimised using the golden section line search (GS).

Results

To enable comparison, we propose a novel noise-masking algorithm. For clinical data, the agreement between the GS and the LM fit is excellent, yet the GS algorithm is two orders of magnitude faster. For synthetic data, the accuracy of the GS algorithm is on par with that of the LM fit, and the GS algorithm is significantly faster. The GS algorithm requires no parametrisation or initialisation by the user.

Discussion

The new GS T2 mapping algorithm offers a fast and much more accurate off-the-shelf replacement for the inaccurate 2-parameter fit in the vendor’s software.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The MRI transverse relaxation time T2 depends on the type of tissue, and its local digression from empirical normal values may be indicative of the onset of a disease. To use such knowledge for diagnostic purposes, maps of T2 values at individual voxels need to be established. Clinical applications of T2 mapping include [1, 2] myelin water-imaging in multiple sclerosis and brain cancer, schizophrenia, phenylketonuria, identification of myocardial oedema in inflammatory pathologies and acute ischaemia, mapping of carotid artery plaque components, knee cartilage imaging, or T2-mapping of the posterior cruciate ligament from an MRI of the knee, among others.

This study was prompted by a prostate cancer project at the Department of Urology, University Hospital Brno, Czech Republic. It has been known for two decades [3] that lower values of the transverse relaxation time in prostate T2 maps are highly correlated with lower citrate values, which in turn indicate malignancy. Confirming this finding, the authors of [4] also observed that the mean T2 values were similar for all of the tested tissue classes on two different MR scanners from different vendors, which suggests that the T2 value may be robust to changes in protocols and MR vendors and, therefore, suitable as one tool in a future multiparametric quantitative approach for diagnosing prostate cancer.

Transverse magnetisation decay is, in general, multiexponential [1, 2]. Some approaches attempt to find exact values of several decay time constants of a particular body tissue such as the prostate [5], yet tissues differ in both the number of dominant time constants and in their values, which makes multiexponential fitting difficult to generalise. Furthermore, the accuracy of multiexponential estimates is value dependent: the separation of exponential components that are closer than a factor of 2 requires very high-quality data [5]. To find the parameters of a multiexponential fit, a nonlinear least-squares (NLLS) problem needs to be solved, but this process is computationally demanding. For example, a NLLS voxelwise 3-parameter fit on a 256 × 256 voxel image takes several minutes to complete and, thus, does not qualify for interactive data post-processing.

To provide quick T2 maps for clinical use, the fast non-iterative log-linear (LL) method, which performs a linear fit of the monoexponential model Eq. (1) after a log transformation, has been widely implemented by scanner vendors [6].

Equation (1) describes the monoexponential model for a particular volume element j. Each volume element has its own transverse magnetisation decay curve with specific values \(\left( {M_{0}^{j} ,T_{2}^{j} } \right)\).

An important issue pertaining to the LL method is that of noise. Due to the logarithmic operation that is used to linearise the model (1), noise is amplified at small echo values [2, 7]. To deal with this issue, the authors of [7] presented a fast iterative nonlinear least-squares method of noise reduction for the 2-parameter fit, which in low-intensity T2 value tissues such as muscles and adipose tissues produces less noise than the LL method.

Multi-echo spin echo (MSE) data that are used for monoexponential fitting (Fig. 1a, b) violate the assumption of purely exponential decay in 3 aspects (Fig. 1c, d):

-

1.

the first echo is smaller than the second echo,

-

2.

instead of decreasing monotonically, the echo values slightly oscillate,

-

3.

for many voxels, the echoes converge to some positive offset rather than to zero.

a, b T2 echoes at echo times 10 and 310 ms, i.e. the first and the last echo of 32 echoes lying 10 ms apart that were acquired using the MRI MSE sequence. Ideally, the brightness of the echoes within the tissue should decrease exponentially from left to right. c, d T2 echoes from arbitrarily picked voxels at all 32 echo times (TE =10, 20, 30,. .., 320 ms). The decay is not purely exponential: the first echo is smaller than the second echo, the decay oscillates rather than being monotonic, and in some cases, late echoes do not converge to zero

The signal increase from the first to the second echo is caused by imperfections in the refocusing pulses in the MSE sequence, as detailed in [8, 9]. Refocusing pulses in the MSE should have a flip angle of exactly 180º to guarantee an exponential decay with T2, whereas in reality, imperfect slice excitation profiles and B1 inhomogeneity lead to lower flip angle values and give rise to stimulated echo contributions to the overall signal intensity. Stimulated echoes decay according to a combination of T1 and T2 relaxation rather than according to T2 alone. This makes the echo amplitude jump from the first to the second echo and causes signal oscillations between odd and even echoes [9,10,11]. Oscillations due to imprecise flip angles present a major contribution to the offset and a major error source in T2 quantification.

The non-zero offset may also be due to the presence of Rician or Rayleigh noise; hence, the expected zero offset will not result even for perfect refocusing [11]. The offset may also be affected by the echo spacing and the echo train length.

Authors of [11] admit that monoexponential fitting is not the most appropriate method of data fitting in T2 relaxometry, yet they argue that monoexponential fitting methods are used in the majority of clinical and preclinical studies. To improve T2 estimates from data that have been distorted by imperfect flip angles and Rician noise, the researchers augmented the model Eq. (1) by adding a non-zero offset to the monoexponential model. Different voxels j of an MRI image possess the different offset values \(c^{j} \,\):

Report [11] assessed the potential benefit of non-zero offsets for fitting accuracy by comparing four methods:

-

1.

all echoes fitted with the simple exponential without offset, Eq. (1),

-

2.

all echoes fitted with the exponential with offset, Eq. (2),

-

3.

the first echo was discarded, and the remaining echoes were fitted with Eq. (1),

-

4.

the first echo was discarded, and the remaining echoes were fitted with exponential + offset, Eq. (2)

The reference T2 values (i.e. ground truth) that were used for the comparison were known either from extended phase graph (EPG) simulations [12] or from T2 relaxometry on an agarose/water-mixture phantom. Report [11] concludes that, after discarding the first echo, most of the remaining systematic error in T2 could be eliminated by the offset as a fitting parameter (method 4).

The efficacy of skipped echo methods for T2 quantification has been examined in [13]. The authors compared three monoexponential fitting strategies—exponential fitting for T2 using all echoes, skipping the first echo, or skipping all odd echoes—against computational modelling of the exact signal decay using Bloch equation-simulated, phantom and in vivo MSE data. They optimised a 2-parameter monoexponential model to fit the simulated or measured MSE data. For this model, which lacked a constant offset, their results show that even if skipped methods represent a step forward over standard exponential fitting, they nevertheless result in a substantial T2 error, and they recommend fitting for the actual decay curve using full Bloch equation modelling and measured flip angle maps that account for all echo pathways.

To compensate for the effect of stimulated echoes in a multiexponential decay model, the authors of [14] present an approach that is based on the estimation of the inhomogeneity of the B1 field and the resulting refocusing angle. The estimated refocusing angle was then inputted as a voxel-specific parameter called A to the EPG algorithm, both for the simulated tri-exponential and the in vivo data. The value A was used to calculate many decay curves over a range of 40 logarithmically spaced T2 values ranging from 0.015 to 2 s. This yielded an objective function for the non-negative least-squares (NNLS) fit. Using the NNLS method, they obtained a T2 distribution that minimised the Euclidian norm of the solution; however, this produced narrow, sharp peaks. To increase the solution’s robustness in the presence of noise, regularisation using a minimum energy smoothing constraint was added to the NNLS fitting procedure. The paper [14] does not explain why—without regularisation—the NNLS method produced sharp peaks. By a simple linear algebra, the problem of fitting a linear combination of 40 linearly independent sampled curves (EPG curves or exponentials) to 32 echoes is underdetermined and has infinitely many exact solutions, some of which will produce sharp peaks, and others smooth ones.

From [14], it is also not clear what benefit the smoothing of the T2 distribution had for the numerical model they used in simulations. The model consisted of exactly three discrete peaks located at 20 ms, 100 ms, and 2 s. For this numerical model, the realistic solution has exactly three sharp peaks. To obtain the correct solution, the regularisation should emphasise sharp peaks instead of smoothing them.

The authors of [14] claimed that the exponential model did an extremely poor job of finding the echo magnitudes. They quoted computing times slightly less than 30 min for stimulated echo analysis of seven 256 × 256 voxel slices of 32 echo data frames, making use of parallel computing with eight physical processor cores.

To restrict the set of T2 distributions to the least complex, regularisation can be used [2]. In this approach, the objective function is a sum of two terms that are optimised simultaneously: the first term penalises the misfit between model and measurement, while the second term evaluates the energy of the T2 distribution, weighted by a constant µ. High values of µ increase the constraints, resulting in broad T2 distributions at the expense of a higher misfit, whilst very low µ values result in narrow T2 distributions.

T2 mapping errors that are caused by measurement noise can, to some extent, be algorithmically suppressed [2]. To avoid noise effects in T2 mapping using MSE, the shortest echo should have a signal–noise ratio (SNR) that is greater than 100, which may require signal averaging. Image smoothing also has the effect of increasing the SNR. A non-local mean filter can be used to increase the SNR across regions with similar characteristics. Alternatively, voxels can be averaged across a region of interest, but this discards information about the noise that is associated with each constituent component and hinders a statistical comparison of T2 values across different regions, between subjects, or over time [2].

Errors can also be incurred by using magnitude instead of complex-valued MR data. The authors of [15] observe that this simplification results in biased T1, T2* and T2 estimates at low SNRs, and they employ several estimation techniques which use real or complex-valued MR data to achieve an unbiased estimation. The estimators were then compared in terms of bias (accuracy), variance (reciprocal of precision) and computation time. For the T2 simulation studies, complex-valued MR data were generated using monoexponential decay with a complex multiplier representing proton density and initial phase. For T2 studies on experimental data, high-SNR T2 MSE datasets of in vivo rat brains under 2% isoflurane anaesthesia were acquired in a single session on a 4.7-T system. The reconstructed complex-valued datasets that resulted from these scans were incrementally degraded by adding complex additive white Gaussian noise to reveal the relative robustness of the different methods to additional noise, and parametric maps were estimated at each stage. Simulations and experiments demonstrated that the estimation techniques that used complex-valued data provided the minimum-variance unbiased estimates of parametric maps and markedly outperformed the commonly used magnitude-based estimators under most conditions, even compared to the magnitude-based techniques that account for Rician noise characteristics. However, the authors also mention some limitations of the estimators that are based on complex-valued data, such as unexpected phase deviations while deterministic phase evolution is assumed.

Several other researchers have reported that the introduction of an offset may significantly reduce the T2 estimation error both in simulations and on phantoms with known relaxation times, yet in most approaches the offset was found either manually—by choosing the best-performing value out of a preselected range—or heuristically, e.g. by taking the mean of the late echoes [11].

Manual offset selection is not feasible for full-size MRI images, which can have, e.g. 256 × 256 = 65,536 different voxels with different offsets. Taking the mean of the late echoes for an offset may well be automated, yet the estimates do not rely on optimisation of some objective function; hence, their optimality cannot be measured. References to algorithms that automatically find the optimum M0, T2, and c values of Eq. (2) are rare. The earliest mention of an automatic 3-parameter matching of Eq. (2) and the only publicly available implementation that was capable of yielding numerical results for our MRI prostate data was the ImageJ MRI Processor plug-in [16]. The plug-in offers the following two fitting algorithms:

-

1.

the Levenberg–Marquardt algorithm,

-

2.

the general purpose Simplex algorithm.

The user can specify the number of iterations and has the option to force the offset parameter to 0. In addition, echo times can be explicitly defined. The MRI Processor's LM method takes approximately 4 min to calculate the M0, T2, and c parameters for 256 × 256 voxels of a 32-image MSE sequence on an Intel i7 CPU laptop. MRI Processor's Simplex algorithm did not converge for our data.

Another implementation of the LM nonlinear least-squares algorithm was reported in [11] for comparison of the four fitting cases (with/without the first echo, with/without offset), yet no execution times were quoted.

A method for an automatic classification of tissues using T1 and T2 relaxation times from prostate MRI is presented in [17]. To create PET/MR attenuation maps, the authors classify the different attenuation regions from MRIs at the pelvis level using the T1 and T2 relaxation times and anatomical knowledge. The T2 relaxation maps are computed from MSE imaging of the prostate using the 3-parameter fit to Eq. (2). To find the M0, T2 and c parameters, the researchers use a proprietary bi-square weights nonlinear least-squares fitting method that was developed in MATLAB. The algorithm automatically extracts the background and fits only the body region to minimise the fitting time. Approximately, 5 min is needed to compute the T2 values for all voxels of the reduced foreground image.

Rather than calculating the offset numerically from MSE acquisitions, in [18], the offset is found as a function of the MRI sequence parameters (flip angle, number of pulses, repetition time, etc.). The authors of [18] designed a novel T2 mapping sequence that was different from the usual MSE. This novel method enables a robust estimation of T2 maps using a 3-parameter fit model. In conclusion, the 3-parameter model of [18] for T2 relaxation accurately models myocardial T2 mapping, which is not true when the conventional 2-parameter model is used for curve–fitting.

The introductory review confirmed that adding offset to the monoexponential fit of the measured transverse relaxation curve substantially improves the estimate, yet it is expensive in terms of its computation time: compared to the seconds needed to compute the fast yet inaccurate LL fit, it takes several minutes to find the 3-parameter NLLS fit of Eq. (2) from a 32 MSE sequence for all of the 256 × 256 image voxels.

Our goal was to develop a T2 3-parameter matching algorithm with execution times in the seconds range and with the accuracy of a 3-parameter NLLS fit. The idea for how to achieve this was to modify the NLLS optimisation in such a way that only one variable needed to be optimised iteratively, with the other two variables being uniquely defined by the current iteration of the first one. Reducing the number of problem variables would lead to shorter computation times.

Materials and methods

The algorithm

Below, the algorithm will be elaborated in 4 steps:

-

1.

we will formulate the problem of finding the exponential fit parameters \(\left( {M_{0} ,T_{2} ,c} \right)\) of Eq. (2) in terms of the least-square minimisation of the sum of errors between the measured echoes and the fitting function

-

2.

instead of solving the least-squares problem for the exponential fit, which is difficult, we will apply a weighted linear regression to its logarithm with the fixed offset c, which is easy,

-

3.

to account for noise boost caused by the logarithmic operation, we will apply a compensatory weighting for the measured echoes,

-

4.

we will show that an accurate estimate of c can be easily found using a one-dimensional golden section (GS) line search, since the modified objective function empirically turns out to be unimodal with respect to c

The idea to iteratively optimise c with \(\left( {M_{0} ,T_{2} } \right)\) uniquely defined by the value of c that resulted from the current line-search operation leads to a reduction in the number of optimisation variables from 3 to 1, since M0 and T2 are projected onto c. In this regard, our approach is reminiscent of the variable projection method [19], yet the similarity ends here. While variable projection algorithms generally apply to a class of least-squares problems with separable linear parameters that are eliminated by substituting partial derivatives with respect to the non-linear variables, we do not use partial derivatives at all. Instead, we keep only one linear parameter, the offset \(c\), and calculate new iterates of \(\left( {M_{0} ,T_{2} } \right)\) using noise-weighted linear regression on the log error.

Least-squares fit of sampled echoes by an exponential and an offset

All computations below are done voxelwise. For readability, we omit the voxel index j in Eq. (2) and make the replacement \(T_{2} = 1/R_{2}\):

The parameters M0, R2 and c of the exponential fit Eq. (3) are required to minimise the sum G of square errors between the measured echoes \(\vec{e}\) and the corresponding samples of the fit function \(\vec{y}\):

with:

Replacing the exponential fit Eq. (4) with linear regression

For a fixed c, we replace the computationally intensive nonlinear minimisation in 2 variables \(\left( {M_{0} ,R_{2} } \right)\) from Eq. (4) with simple linear regression, in which the sum of the squared errors between the logarithm of the echo minus the offset and the logarithm of the exponential function value is minimised. We introduce \(d_{i}\) and \(f_{i}\):

and instead of Eq. (4), we minimise the weighted sum Q of squared errors between the logarithms with respect to \(L_{0}\) and \(R_{2}\):

where \(\vec{f} = \left[ {f_{1} ,f_{2} ,...,f_{N} } \right]^{T}\)

In Eq. (6), \(W\) is a positive semi-definite weighting matrix that is designed to compensate for the overemphasis on small echoes, as derived in the next paragraph.

\(Q\left( {R_{2} ,L_{0} } \right)\) is a non-negative quadratic function; hence, its minimum coincides with the zeros of the partial derivatives:

which resolves to:

and the parameter \(M_{0}\) of the exponential fit reads:

In Eq. (11), \(M_{0}\) can take on only positive values, yet this is a natural constraint for the underlying physical problem of exponential magnetisation decay.

Small echoes are overweighted due to the logarithm: the method of compensation

The fitting equation at the echo time ti reads:

where \(\delta e_{i}\) is the fitting error (noise). The left-hand side of Eq. (12) has a Taylor expansion in \(\delta e_{i}\):

i.e., the fitting error \(\delta e_{i}\) at the echo time ti is amplified by:

which becomes large when there are small differences between the echo \(e_{i}\) and the current estimate of the offset \(c\). This overweighting of fitting errors can be compensated for by multiplying the logarithmic errors in Eq. (6) by the reciprocal of Eq. (14) that was calculated with the current offset estimate. Because of the logarithmic operation in Eq. (5), we only perform this weighting when\(e_{i} > c\). When \(e_{i} \le c\), a weight of 0 is selected. Considering all of the echo times, we obtain the diagonal weighting matrix \({\rm {diag}}\left( {\vec{e} - c \cdot \vec{1}} \right)^{ + }\), where

This yields:

i.e. \(W\) in (6) becomes:

Finding the offset c

Equations (5), (9), (10), (11), and (17) compute the exponential fit parameters in the case of a fixed c. It remains to be shown how to find the minimiser c in Eq. (4) with fixed \(\left( {M_{0} ,R_{2} } \right)\) values that resulted from Eqs. (9) and (11).

The parameter c uniquely defines the R2, L0 and M0 values that are computed by Eqs. (9) (10) and (11), respectively, in which c is part of the equations \(f_{i} = \log \left( {e_{i} - c} \right)\) and \(D = {\text{diag}}\left( {\vec{e} - c \cdot \vec{1}} \right)\). Indeed, in Eq. (5), \(L_{0} = L_{0} \left( c \right)\), \(M_{0} = M_{0} \left( {L_{0} \left( c \right)} \right) = M_{0} \left( c \right)\) and \(R_{2} = R_{2} \left( c \right)\), hence:

is a function of a single variable c. It resulted empirically from extensive tests (65,536 fits for a 256 × 256 MRI slice) using MSE patient data as well as using synthetic data that for voxels with more or less regularly decreasing echoes, \(G\left( c \right)\) is unimodal. Two typical shapes of \(G\left( c \right)\) as a function of a varying offset c are shown in Fig. 2b for two voxels that were selected at random from the biological tissue of Fig. 2a. Both curves exhibit a well-pronounced single minimum. Thus, the minimiser c can be computed very quickly using a one-dimensional minimum search. We use the golden section method [20] to find the minimum of G(c).

Unimodality of the cost function G(c) of the exponential fit. a Two voxels (indicated with a triangle and a circle) were arbitrarily chosen from the T2 echo at 20 ms. b To show unimodality at these voxels, G(c) was evaluated at 10 equidistantly spaced values of c that increased linearly with the iteration number. The curve marked with the circle achieves its minimum, i.e., the best exponential fit, in the fourth iteration, the curve that is marked with the triangle achieves the minimum in the second iteration

The golden section search needs two initial values between which the optimum is searched. Since the offset due to imperfect flip angles or Rician noise is always non-negative, we selected the offset lower bound as 0 for all voxels. The upper bound was chosen equal to the 10th echo value at a given voxel with the following reasoning: for an exponential with T2 = 100 ms and 10 ms echo spacing, the 10th echo is still ~33% above the minimum of the exponential; thus, it is safely above the offset, even if noise is present.

Each algorithm step is simultaneously performed for all voxels using matrix operations. A detailed flowchart of the algorithm is shown in Fig. 3.

Noise identification and masking

In the T2 maps that are generated using either the vendor's software, or the GS method, or the LM method, noise is visible in the background and in gas-filled body cavities. On a closer examination, the MSE signal in noisy voxels does not exhibit a steady decrease. Instead, the echoes have low levels and oscillate heavily. This condition is easily recognised by comparing the total variation (TV) of the echo signal at a given voxel to the difference between the maximum and the minimum echo value. Total variation of the echo sequence \(\vec{e}\)is defined as:

For a purely monotonic decrease, the total variation is equal to the difference between the maximum and the minimum echoes. The more oscillatory the signal is, the more its total variation exceeds the span of the echo values. The T2 values at voxels exceeding a properly chosen ratio of total variation and signal span can be labelled as noise.

We use the total variation-generated signal mask to exclude voxels with noisy MSE data from a comparison of the results of the different T2 mapping methods. Such a decision is done by multiplying the respective T2 values or the derived data by the TV-generated mask. The mask must properly distinguish voxels where the total variation is high as a result of noise and which are rejected, from the accepted high-TV voxels where the echo train oscillates non-monotonically between odd and even echoes as a result of low refocusing pulse flip angles. The total variation value for given flip angles can be numerically calculated using the extended phase graph theory [8, 10]. In this study, we excluded from comparison all voxels with MSE total variation that exceeded the span of the echo values by a factor greater than 2. Since the highest TV factor that was found in the EPG simulations with a 120° flip angle was 1.4, a TV threshold of 2 does not filter out oscillations that are due to imperfect flip angles. Figure 4 compares a raw T2 map of the GS algorithm, the TV-generated mask, and the masked T2 map with high-TV, low-confidence voxels marked in red.

Total variation-based noise-masking. a Prostate T2 map calculated by the GS algorithm. Noise appears in low proton density regions, such as the background and the colon. b TV-generated mask. Black marks low-confidence voxels, i.e., such voxels where the total variation of the echoes exceeds the echo range by a factor of 2 or more, and thus probably represents noise. Accepted voxels are those with TV factors less than 2, in which TV might have been increased by imperfect refocusing pulses, and they are tagged white. c Denoised T2 map. Low-confidence T2 regions with a high TV of the echo train are highlighted with red to distinguish them from the trusted low T2 values (dark)

Performance evaluation of the new GS algorithm for monoexponential T2 fitting with offset

To assess the effectiveness of the MATLAB implementation of the new GS algorithm, the accuracy, speed and agreement of its results were compared to the 3-parameter monoexponential fitting that resulted from the Levenberg–Marquardt NLLS optimisation of the squared-error sum (4) in MATLAB. In addition, the widely used LL 2-parameter monoexponential algorithm was compared to the two 3-parameter methods.

Four types of test data—three synthetic datasets with known ground truth and one dataset with real medical MRI data—were used for this evaluation.

Dataset A Sampled exponential functions with randomly generated initial values and T2 that were distorted by Rician noise.

As stated in [11], one possible reason for a non-zero offset in T2 relaxation may be Rician noise. Typical signal–noise ratios for Rician noise are between 20 and 30 [21]. Rician noise is not additive [22]. To simulate its behaviour, we used the Rician noise algorithm that was provided in [22] with the aim to determine whether for finite SNRs, the 3-parameter model improves the T2 estimate compared to a that of 2-parameter LL fit and to compare the fitting accuracy, agreement and speed of the two 3-parameter methods, GS and LM. We first generated synthetic decaying 256 × 256-voxel 32-image sequences consisting of exponentials with random uniformly distributed initial values \(M_{0} \in \left\langle {0,4096} \right\rangle\) and \(T_{2} \in \left\langle {20\,ms,200\,ms} \right\rangle\):

The values of \(M_{0}\) represent proton density values in 12-bit image encoding. From these exponentials, four datasets with the SNRs 20, 30, 50 and ∞ were subsequently created by contaminating them with Rician noise, which introduced a non-negative offset to the simulated data. This procedure yielded 65,536 sampled exponentials with different initial values and decay rates, and the offsets were produced by the Rician noise. The 32 images with exponentially decaying grey-scale values were saved in the DICOM format to allow unified input to the tested GS, LM and LL algorithms. Figure 5 shows one noiseless and one Rician noise-distorted decay curve for an arbitrarily selected voxel of the image frame.

The randomly generated T2 matrices provided the ground truth for an assessment of accuracy of the new 3-parameter GS method, the LM method, and the 2-parameter LL method.

Dataset B EPG-simulated MSE sequences with randomly generated proton densities and T2 values and a fixed T1 value.

We used the method of extended phase graphs [8, 10] to simulate MSE decay curves that had non-zero offsets due to imperfect refocusing. To keep the effects of imperfect refocusing apart from those of Rician noise, the dataset B assumes SNR → ∞ (no noise). Using the EPG MATLAB implementation that was provided by the author of [12], we generated synthetic MSE image sequences containing 32 frames with 256 × 256 voxels. Four simulated MSE sequences were created for refocusing flip angles of 120°, 140°, 160° and 180°. As in Dataset A, random uniformly distributed proton density values and transverse relaxation times and a constant longitudinal relaxation time \(T_{1} = 3000\,\,{\text{ms}}\) were input voxelwise to the EPG simulation routine, which calculated the simulated echoes at echo times \(TE \in \left\{ {10,20,...,320\,\,\,ms} \right\}\). The example in Fig. S1 shows the dependence of the EPG-simulated echoes on the flip angles. The generated EPG image sequences were saved in the DICOM format.

Dataset C EPG-simulated MSE sequences with randomly generated proton densities and T2 values and a fixed T1 value that were distorted by Rician noise.

This dataset combines the effects of imperfect flip angles with those of Rician noise. For each of the refocusing flip angles 120°, 140°, 160° and 180°, the EPG method [12] was used to generate a simulated echo sequence at times \(TE \in \left\{ {10,20,...,320\,\,\,ms} \right\}\). Subsequently, the echo sequences were distorted by Rician noise with SNR=30 and were finally saved in the DICOM format.

Dataset D Prostate patient MRI data acquired on a 3-T scanner.

MRI slice data consisting of 32 MSE prostate images that were acquired 10 ms apart with a 256 × 256-voxel resolution were used to validate the GS algorithm and to compare it with the 3-parameter LM fit. In total, we had 21 prostate image slices (3 subjects, 7 slices each) of 2 prostate patients and one healthy volunteer at our disposal, of which only one is presented here to save space.

The ground truth of Datasets A–C was used to assess the accuracy of the respective T2 mapping method. To improve the T2 fit in all three methods, we discarded the first "non-monotone" echo, as proposed in [9].

Accuracy and agreement evaluation of the GS, LM and LL methods

Our approach to the assessment of the accuracy and agreement is inspired by the Bland–Altman (BA) method that was used in [6]; however, it could not be applied here, since the pairwise differences between our GS, LM, LL results or the ground truth are not normally distributed, as required. Instead, we assessed the accuracy and agreement using 95% limits on the empirical cumulative distribution function (ECDF):

where n = 256 × 256 (total number of voxels), t is the independent variable of the ECDF, \(x_{i}\) is the absolute value of the difference between sample pairs that were provided by GS, LM, LL or ground truth, and \(1_{{x_{i} \le t}}\) is an indicator of all \(x_{i}\)that are less than t. The value \(F_{n} \left( t \right)\) is, thus, an estimate of the probability that the absolute difference between the two quantities (e.g. between the GS solution and the ground truth) is less than t. If we find in the calculation of the absolute differences between the GS T2 map and the ground truth the value of t at which\(F_{n} \left( t \right) = 0.95\), then the absolute error between the GS solution and the ground truth will be less than or equal to t with a 95% probability.

To classify the agreement of two methods, agreement limits need to be defined. Like in the BA method, the agreement limits are application specific. We related them to the normal T2 relaxation times, which, according to [23], for human body tissues lie between T2min = 28 and T2max = 767 ms. Considering these values, we chose the agreement limits as follows:

-

excellent agreement: 95% of the values of absolute differences lie below (T2min/5) ~ 5 ms

-

good agreement: 95% of the values of absolute differences lie below T2min = 28 ms

-

poor agreement: the 95% limit is greater than T2min = 28 ms

An assessment of accuracy differs from that of agreement in that the result of a method is compared with the ground truth, rather than with the result of another method. The aforementioned limits are used for classification of accuracy, as well. The symbols T2_GS, T2_LM, T2_LL and T2_true refer to the T2 maps that were calculated using the GS, LM or LL methods, or to the ground truth.

Three-parameter exponential fit with offset using the Levenberg–Marquardt algorithm in MATLAB

Authors who have disclosed the details of their monoexponential 3-parameter T2 fit used the LM algorithm to find the unknown fit parameters [11, 16]. The LM implementation in MATLAB R2016b (lsqcurvefit) does not allow for constraints to be imposed on the solution, and it often yielded implausible results for our test data, such as negative values for proton density, whereas the true \(M_{0} ,\,\,T_{2} ,\,\,{\text{and}}\,\,c\) values are always non-negative by their physical nature or by the test data design. To constrain the search space, we chose a widely used MATLAB implementation of the Levenberg–Marquardt method levmar [24], which provides for box constraints. A compiled version of levmar was downloaded from [25].

For all test data, the parameter search was constrained to the ranges \(M_{0} \in \left\langle {0,20000} \right\rangle\), \(R_{2} \in \left\langle {0 + ,1} \right\rangle\) and \(c \in \left\langle {0,20000} \right\rangle\) These bounds cover our synthetic MRI test series by design as well as the true MRI data where the maximum echo values of 4095 (i.e. 212−1) were observed, obviously owing to the A/D converter resolution.

Our extensive calculations show that the convergence of LM optimisation depends heavily on the algorithm initialisation. This finding is explained by the fact that the 3-parameter minimisation of the objective function (4) is a non-convex problem, which can easily be verified by a numerical example. For example, the initialisation with mid-values of the search ranges led to very poor optimisation results. After experimenting with different combinations of initial values, we decided to use the following data-dependent initialisation: \(M_{{00}} = e_{1} ,\;R_{{20}} = \frac{1}{{10T_{E} }}\log \frac{{e_{2} }}{{e_{{12}} }},\;c_{0} = 0\). For a pure exponential without offset and without noise, these \(M_{00} ,\; R_{20} ,\; c_{0}\) values are true parameter values.

Results

Table 1 summarises the accuracy, agreement and execution time results for the four test datasets. To visualise the results, a number of empirical cumulative distribution functions are plotted in the Supplementary material.

Accuracy and agreement of the GS, LM and LL methods

Dataset A For the Rician-distorted data, the new GS method achieves the best accuracy of all methods in the SNR = 20 case, and it is almost on a par with the LM method for the other SNRs. The LL accuracy is poor for SNR = 20 and 30 cases and is only barely good for the SNR = 50 case. At SNR → ∞ all three methods, GS, LM and LL, are excellent. Since SNR → ∞ is unrealistic, the use of the LL method for monoexponential fitting is discouraged.

The agreement between the GS and the LM methods is good to excellent, and these methods are interchangeable with respect to their accuracy (cf. Fig. S2).

Dataset B For the EPG-simulated MSE sequences, the accuracy of both the GS and the LM methods is good for the 120° flip angle and excellent for the 140º, 160º, and 180º flip angles. Their agreement is excellent in every case. The errors from the LL method are approximately 4 times higher than those of the GS and LM methods, and this method only becomes excellent with perfect refocusing. In conclusion, for MSE sequences with imperfect refocusing, an offset introduction in the monoexponential transverse relaxation fit significantly improves the T2 estimate (cf. Fig. S3).

Dataset C The presence of Rician noise with SNR = 30 in the simulated EPG sequences, which is a realistic condition, decreases the GS and LM accuracies to good for all flip angles. The agreement between the GS and LM methods is good. The errors of the LL method are roughly two times higher than those of the other methods, indicating once again that the monoexponential LL fit is not suitable for MRI data that are subject to both imperfect refocusing and Rician noise (cf. Fig. S4).

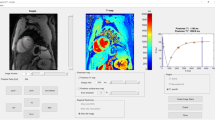

Dataset D For the prostate patient MRI data, the agreement between the new GS and the LM method is excellent, and their respective 95% agreement with the LL method is achieved at a difference of as much as 70 (Fig. S5 b). The LL method cannot be recommended as a T2 quantitation method due to its severe T2 overestimation (cf. Fig. S5). Figure 6 visually compares the T2 maps that were calculated using the GS method (Fig. 6a), the LM method (Fig. 6b) and the LL fit method (Fig. 6c). The T2 overestimation by the LL method appears as lighter colours throughout the body tissue.

Comparison of the T2 maps for MRI prostate data obtained from a patient with a 3-T scanner. Visually, the agreement between a the new GS and b the LM method is excellent, but the agreement between the a, c GS and LL, and b, c LM and LL methods is poor, with the LL method strongly overestimating the T2 values. The colour scale corresponds to the calculated T2 values. Note: only the voxels that were not excluded by the total variation-dependent mask are depicted in a, b and c

Speed comparison of monoexponential fitting with an offset between the new GS-based algorithm and the LM method

Computation times were recorded on an i7 4-core 2.6 GHz laptop for the four test data types and are listed in the last two columns of Table 1.

For the Levenberg–Marquardt MATLAB levmar implementation [24], the default configuration of the OPTI toolbox [25] was left unchanged, i.e. for each voxel, there were at most 1500 iterations and a maximum of 10,000 objective function evaluations. Both the absolute and the relative convergence tolerance of the solver were 1e−7 by default since the tests with tolerance values of 1e−4 and even 1e0 showed that these options did not change the number of iterations for any of the 256 × 256 voxels. Although dataset sizes were identical, the computation times varied considerably, as the last column of Table 1 shows. While the shortest execution times of approximately 114 s were observed for EPG-simulated data, the execution time was as high as 312 s for the real MRI MSE data.

The computation time of the GS algorithm needed to reconstruct a 256 × 256 voxel MRI slice is approximately 2.9 s for 32 echoes. For the true MRI data, the GS algorithm is, thus, approximately two orders of magnitude faster than the levmar MATLAB LM implementation.

Discussion

Vendor software for T2 relaxometry mostly uses a simple 2-parameter monoexponential model [2, 6] that is matched using the log-linear algorithm. The LL algorithm is fast and non-iterative and as such always provides a unique solution, but it considerably overestimates the T2 relaxation times in the cases of when the MRI MSE echoes decay to some non-zero final offset. The offset may have two major causes: flip angles of refocusing pulses which are less than 180°, as assumed by the CPMG sequence, and Rician noise due to using the magnitude rather than the real and imaginary part of the MRI data from the frequency space for reconstruction [11, 26].

Motivated by the observation from our prostate patient data, where the MSE brightness often decays to a non-zero final value, our goal was to obtain a better match of the decay curve by introducing a non-zero additive offset to the monoexponential decay model. The most widely used solution that has been adopted by other authors—a 3-parameter least-squares fit using the Levenberg–Marquardt algorithm—worked for our data, as well, yet the run times were in the range of several minutes, and we aimed at achieving a comparable accuracy much faster. We extended the LL algorithm by introducing an offset, with the proton density and T2 estimate uniquely determined by this offset, which made the objective function of the fit to depend on the offset alone. We observed that the modified objective function was unimodal with respect to the offset value. This unimodality enabled us to design a new algorithm, which was labelled GS because the optimum parameters were found using a one-dimensional golden section search on the offset. The resulting GS line search is approximately two orders of magnitude faster than the 3-parameter LM optimisation of the non-convex objective function. To our knowledge, our approach to reducing the number of variables has not previously been presented elsewhere.

To counteract the noise amplification at small echoes that was caused by the logarithmic operation, we proposed a Taylor expansion-based compensation which eliminates distortions caused by noise.

In addition to the decisive speed edge of the GS algorithm over the LM fit, its convergence properties are equally important. Due to the non-convexity of the objective function, LM minimisation yields results that heavily depend on initial values. For example, we observed that the LM algorithm fails to converge if non-specific initial values such as the middle of the allowable parameter range are selected, and we were only able to obtain usable results with data-dependent initialisation. Furthermore, users of the LM algorithm have to parameterise a host of termination criteria, which is impossible to do without a profound knowledge of the algorithm internals. These reasons might be why the clinical use of the LM algorithm has so far never been reported. In contrast, the initial search range of the GS algorithm is automatically selected depending on the measured echo train data, and it always converges by virtue of the unimodality. The GS iterations can simply be stopped after a low, fixed number of golden section steps.

To assess the accuracy and agreement of the GS, LM and LL methods, we defined a cumulative distribution-based procedure to establish the 95% accuracy and agreement ranges. To enable a quick classification, we chose limits of excellent, good and poor accuracy and agreement. The limits are related to the range of T2 values that are found in human body tissues. On synthetic data with known ground truth, the accuracy of the new GS method and the LM method matched. On the true MRI data, the agreement between GS and LM was excellent.

To exclude noisy T2 map regions from comparison, we proposed a novel approach to identify noise in the echo train. Our method uses a total variation-based criterion that can be used to pinpoint regions with low signal and strong variation, such as gas-filled body cavities. The distinction between voxels corresponding to body tissues and voxels that map gas-filled space is based on the fact that the spin echo decay in tissue voxels is more or less steadily decreasing, with the total variation of the echo train close to the range of echo values, whereas in void voxels, the echo train has low values and oscillates substantially. We use the TV–range ratio as a threshold to distinguish between tissue and empty space voxels. To admit a possible TV increase due to imperfect refocusing flip angles, voxels with a TV–range ratio of less than 2 are accepted as valid. The resulting noise mask can help medics to identify regions where T2 estimates are untrustworthy. To our knowledge, our approach to identifying noisy voxels in T2 maps has so far not been proposed elsewhere.

Consistent matrix implementation of the GS method without any scalar operations enabled us to accommodate the whole algorithm in a mere 90 MATLAB code lines. We only used entry-wise matrix operations: addition, subtraction, Hadamard multiplication and Hadamard division. These simple matrix operations are easy to port to the C programming language, where they may occupy some 100 lines of code. In this way, the GS algorithm can be efficiently implemented in C by replacing the MATLAB statements with calls to the above pre-programmed matrix operation functions without the need for either MATLAB or third-party numerical libraries such as LAPACK.

The GS algorithm thus possesses 4 decisive advantages over the LM 3-parameter monoexponential fit: it is much simpler, it is approximately two orders of magnitude faster, it does not require the user to provide initialisation or termination criteria, and it can be efficiently implemented without third-party numerical math packages.

Since it is known that the transverse magnetisation decay is, in general, multiexponential [1, 2, 5], the limitations of the monoexponential model with offset should be briefly addressed. If the tissue under study contains two compartments, one with a fast T2 decay of, e.g., 100 ms and another one with, e.g., T2 = 700 ms, then during a relatively short echo train length of 320 ms, the second decay curve decreases only by ~17%. If the proton density of the slow component is low, the slow component might be assigned to the offset and remain unnoticed.

This example shows that the multiexponential model captures the T2 distribution of a tissue better than the monoexponential model with offset. On the other hand, it needs to be clarified if the mathematical problem of estimating multiple T2 values from measured MRI data can be solved with reasonable accuracy. An indicator of the solution accuracy is the condition number, which–in some norm—measures how errors in the input data (in the T2 relaxometry the CPMG echo train) are amplified in the output data (i.e. the estimated proton densities and T2 values). Well-conditioned problems possess low condition numbers, ill-conditioned problems have large condition numbers. The condition number is given by the mathematical model alone, i.e. by the function that maps the input data to the outputs. The condition number does not depend on the algorithm used to solve the mathematical problem. An ill-conditioned system of equations will strongly amplify the errors in the input data regardless of the algorithm used to solve it. A system of linear equations is ill-conditioned when the rows or columns of the system matrix are almost linearly dependent. This is the case for the widely adopted logarithmic gridding approach to multiexponential T2 fitting as in [14], where higher T2 values in the spectrum are multiples of the lower neighbour by a small factor, e.g. 1.1337. Starting with T2 = 15 ms, a spectrum of 40 T2 values covers the range up to 2000 ms. If only the first 32 exponentials are used for the multiexponential gridding fit, the condition number calculated by MATLAB is ~ 2.8 × 1018, which is extremely high. It means that errors like the Rician noise in input data can cause changes 2.8 × 1018 times as large in the estimated weights of the exponential functions in the spectrum. As a consequence, more or less correct weights can be calculated only when the noise level is very low.

The topic of conditioning of the multiexponential T2 fitting has also been investigated in [27], whose authors state that in the multiexponential estimation problem, the resulting nonlinear minimization is typically difficult due to local minima and ill-conditioning. In particular, when a slowly decaying component is present in a dataset with few samples, it is often possible to accurately model the signal using a combination of faster components.

We tested the sensitivity of the multiexponential gridding approach to noise using the versatile relaxometry suite written in MATLAB (https://github.com/qMRLab/qMRLab) which was made publicly available by the authors of [28]. The multiexponential fitting code was implemented according to the method described in [29]. To work with a known ground truth, we generated 32 samples of a biexponential decay curve with two sharp peaks at T2 = 25 and T2 = 120, and distorted it with Rician noise for six different signal–noise ratios SNR = ∞, 500, 200, 100, 50 and 30. The results in the Supplementary material confirm that—due to its ill-conditioning—the gridding-based multiexponential fit gets extremely distorted by noise, and for our sampled biexponential function failed to locate the T2 peaks for SNRs less than 100. Our results permit the following conclusions:

-

the multiexponential model with logarithmically distributed T2 spectrum describes the T2 distribution in MRI relaxometry better than the monoexponential model with offset,

-

contrary to the the monoexponential model with offset, the multiexponential model with logarithmic T2 distribution does not account for the non-zero mean of the Rician noise, which may lead to a bias in the T2 estimates,

-

due to its ill-conditioning the T2 spectrum returned by the multiexponential fitting will be strongly affected in the presence of noise and the estimated T2 values may lie far from the true values,

-

due to possible spurious peaks in the multiexponential fit, a T2 peak appearing in the computed T2 spectrum does not necessarily imply that there is a compartment with that T2 in the biological tissue under study

The above results should be taken into account when comparing the monoexponential model with offset to the multiexponential gridding approach.

In conclusion, we developed a new, fast, simple and accurate algorithm for the estimation of T2 values under the influence of both Rician noise and imperfect refocusing using a monoexponential model with offset. Contrary to the LM algorithm, the use of which has so far been reported only under laboratory conditions, the GS T2-mapping algorithm presents a ready-made solution that is perfectly suited for MRI software vendors. Since it is applicable to models involving a single exponential function and an offset, the approach might also be useful for other types of monoexponential fit, such as T1 relaxometry.

References

Bjarnas TA, Editor (2011) Introduction to Quantitative T2 with emphasis on Medical Imaging. https://sourceforge.net/projects/intro2qt2/. Accessed 7 June 2018

Dowell NG, Wood TC (2018) T2: Transverse relaxation time. In: Cercignani M, Dowell NG, Tofts PS (eds) Quantitative MRI of the brain: principles of physical measurement, 2nd edn. CRC Press, Boca Raton, pp 83–95

Liney GP, Turnbull LW, Lowry M, Turnbull LS, Knowles AJ, Horsman A (1997) In vivo quantification of citrate concentration and water T2 relaxation time of the pathologic prostate gland using 1H MRS and MRI. Magn Reson Imaging 15:1177–1186

Dinh H, Souchon R, Melodelima C, Bratan F, Mège-Lechevallier F, Colombel M, Rouvière O (2015) Characterization of prostate cancer using T2 mapping at 3T: a multi-scanner study. Diagn Interv Imaging 96:365–372

Storås TH, Gjesdal KI, Gadmar ØB, Geitung JT, Kløw NE (2008) prostate magnetic resonance imaging: multiexponential T2 decay in prostate tissue. J Magn Reson Imaging 28:1166–1172

Pei M, Nguyen TD, Thimmappa ND, Salustri C, Dong F, Cooper MA, Li J, Prince MR, Wang Y (2015) Algorithm for fast monoexponential fitting based on auto-regression on linear operations data. Magn Reson Med 73:843–850

Li X, Hornak JP (1994) T2 Calculations in MRI: linear versus nonlinear methods. J Img Sci Tech 38:154–157

Hennig J (1991) Echoes-how to generate, recognize, use or avoid them in mr-imaging sequences. Concepts Magn Reson 3:125–143

Neumann D, Blaimer M, Jakob PM, Breuer FA (2014) Simple recipe for accurate T2 quantification with multi spin-echo acquisitions. Magn Reson Mater Phy 27:567–577

Hennig J (1988) Multiecho imaging sequences with low refocusing flip angles. J Magn Reson Imaging 78:397–407

Milford D, Rosbach N, Bendszus M, Heiland S (2015) Mono-exponential fitting in T2-relaxometry: relevance of offset and first echo. PLoS One 10(12):e0145255

Weigel M (2015) Extended phase graphs: dephasing, RF pulses, and echoes—pure and simple. J Magn Reson Imaging 41:266–295

McPhee KC, Wilman AH (2018) Limitations of skipping echoes for exponential T2 fitting. J Magn Reson Imaging 48:1432–1440

Prasloski T, Mädler B, Xiang Q-S, MacKay A (2012) Applications of stimulated echo correction to multicomponent T2 analysis. Magn Reson Med 67:1803–1814

Umesh Rudrapatna S, Bakker CJG, Viergever MA, van der Toorn A, Dijkhuizen RM (2017) Improved estimation of MR relaxation parameters using complex-valued data. Magn Reson Med 77:385–397

MRI Processor: ImageJ plug-in that calculates parametric maps in MR images. https://imagejdocu.tudor.lu/doku.php?id=plugin:filter:mri_processor:start. Accessed 7 June 2018

Bojorquez JZ, Bricq S, Brunotte F, Walker PM, Lalande A (2016) A novel alternative to classify tissues from T1 and T2 relaxation times for prostate MRI. Magn Reson Mater Phy 29:777–788

Akçakaya M, Basha TA, Weingärtner S, Roujol S, Berg S, Nezafat R (2015) Improved quantitative myocardial T2 mapping: impact of the fitting model. Magn Reson Med 74:93–105

Golub GH, Pereyra V (1973) The differentiation of pseudoinverses and nonlinear least squares problems whose variables separate. SIAM J Numer Anal 10:413–432

Luenberger DG, Ye Y (2008) Fibonacci and golden section search. Linear and nonlinear programming. Springer, New York, pp 217–219

Sijbers J, den Dekker AJ, Raman E, Van Dyck D (1999) Parameter estimation from magnitude MR images. Int J Imaging Syst Technol 10:109–114

Ridgway G (2008) Rice/Rician distribution. The MathWorks, MATLAB Central, File Exchange. https://www.mathworks.com/matlabcentral/fileexchange/14237-rice-rician-distribution?focused=5109004&tab=example. Accessed 15 Jan 2019

Bojorquez JZ, Bricq S, Acquitter C, Brunotte F, Walker PM, Lalande A (2017) What are normal relaxation times of tissues at 3 T? Magn Reson Imaging 35:69–80

Lourakis MIA. Levenberg-Marquardt nonlinear least squares algorithms in C/C++. https://users.ics.forth.gr/~lourakis/levmar/. Accessed 6 April 2018

OPTI Toolbox. A free MATLAB toolbox for optimization. https://inverseproblem.co.nz/OPTI/. Accessed 6 April 2018

Gudbjartsson H, Patz S (1995) The Rician distribution of noisy MRI data. Magn Reson Med 34:910–914

Björk M, Zachariah D, Kullberg J, Stoica P (2016) A multicomponent T2 relaxometry algorithm for myelin water imaging of the brain. Magn Reson Med 75:390–402

Cabana JF, Gu Y, Boudreau M, Levesque IR, Atchia Y, Sled JG, Narayanan S, Arnold DL, Pike GB, Cohen-Adad J, Duval T, Vuong MT, Stikov N (2016) Quantitative magnetization transfer imaging made easy with qMTLab: Software for data simulation, analysis, and visualization. Concepts Magn Reson. https://doi.org/10.1002/cmr.a.21357

MacKay A, Whittall K, Adler J, Li D, Paty D, Graeb D (1994) In vivo visualization of myelin water in brain by magnetic resonance. Magn Reson Med 31:673–677

Acknowledgements

We acknowledge the core facility MAFIL of CEITEC, which was supported by the Czech BioImaging large RI project (LM2015062 funded by MEYS CR), for their support with obtaining the scientific data that were presented in this paper. The corresponding author also appreciates the valuable discussions on the theoretical aspects of the algorithm with M. Kozubek from the Centre for Biomedical Image Analysis, Faculty of Informatics, Masaryk University, as well as his comments that helped streamline the manuscript.

Funding

This work was supported by the Czech BioImaging large infrastructure project (LM2015062 funded by MEYS CR).

Author information

Authors and Affiliations

Contributions

JM: Study conception and design, analysis and interpretation of data, drafting the manuscript, PH: Analysis and interpretation of data, DP: Acquisition of data, TT: Acquisition of data.

Corresponding author

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest to disclose.

Ethical approval

All procedures that were performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants that were included in the study.

Code availability

The MATLAB code for the algorithm described in this paper is available at https://github.com/tandzin/golden-section.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Michálek, J., Hanzlíková, P., Trinh, T. et al. Fast and accurate compensation of signal offset for T2 mapping. Magn Reson Mater Phy 32, 423–436 (2019). https://doi.org/10.1007/s10334-019-00737-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10334-019-00737-3