Abstract

A pragmatic method is proposed for assessing the accuracy and precision of a given processing pipeline that converts computed tomography (CT) image data of bones into representative three-dimensional (3D) models of bone shapes. The method is based on coprocessing a control object with known geometry, which allows the quality of the resulting 3D models to be assessed. Distance measurements were obtained at three stages of the conversion process and evaluated statistically. For this study, 31 CT datasets were processed. The final 3D model of the control object showed an average deviation from the reference values of −1.07 ± 0.52 mm (mean ± standard deviation (SD)) for edge distances and −0.647 ± 0.43 mm for the distance between parallel sides of the control object. Coprocessing a reference object enables the assessment of the accuracy and precision of a given processing pipeline for creating CT-based 3D bone models and is suitable for detecting most systematic or human errors when processing a CT-scan. Typical errors are about the same size as the scan resolution.

Background

Three-dimensional (3D) human bone models are required for many applications, such as anatomic visualization, animation, numerical computation of forces, implant design and optimization in trauma and orthopedics, template generation in navigation, shape analysis for medical and anthropological purposes, surgical planning, medical rapid prototyping, and computer-aided surgery (CAS). Messmer et al.1 describe a database of 3D bone models created and maintained specifically for such applications. A standard processing pipeline for generating 3D bone models (see Fig. 1) is described, covering the acquisition of computed tomography (CT) image data of post mortem specimens, segmentation, triangulation, and reverse engineering.

Some applications that rely on 3D bone models, such as implant design and optimization or surgical planning, need precise and validated 3D models in which the geometry of the virtual bones corresponds as precisely as possible to that of the real bones. The accuracy of a final 3D model depends on many factors and parameters of the data acquisition process and of the subsequent data conversion steps. These include human processing errors and processing limitations.

For example, the image slice spacing and the degree of overlap between consecutive slices are scan parameters known to influence the quality of CT-based 3D reconstructions. Schmutz et al.2 report on the effect of CT slice spacing on the geometry of 3D models. Their results demonstrate that the models' geometric accuracy decreases as the slice spacing increases, and they recommend a slice spacing of no more than 1 mm for the accurate reconstruction of a bone’s articulating surfaces. Shin et al.3 investigated the effects of different degrees of overlap between image slices on the quality of reconstructed 3D models; based on their results, an overlap of 50% is recommended. Souza et al.4 illustrate the influence of partial volume effects on the volume rendering of CT scans and present methods for correcting them.

Another parameter that influences the accuracy of reconstructed 3D models is the segmentation threshold. Ward et al.5 investigated the suitability of various thresholds for cortical bone geometry and density measurements by peripheral quantitative computed tomography. They show that the thresholds significantly influence the accuracy of the final results, especially if the geometry is close to the scan resolution. In clinical practice, manual or semiautomatic segmentation tools are usually applied.6 These tools are highly parameterized and used interactively, and they are therefore an additional source of variation in the final shape.

Drapikowski7 analyzed the geometric structure of surface-based models derived from CT and MRI data and identified features such as the local inclination angle of the surface normal relative to the scanning direction, the local radius of curvature, and the distance between slices. He found that these features significantly influence the accuracy of the reconstructed surface-based models and presented a model for estimating the uncertainty and visualizing it directly on the surfaces. However, he also states that some further influencing factors are difficult to model.

Reconstructed surface models very often consist of a huge number of triangles and have to be simplified for practical use. Simplification inevitably reduces the resolution of the final model. Cignoni et al.8 presented a tool for coloring the input surface according to the approximation error.

Looking at the multilevel reconstruction process, consisting of the original object, image acquisition, segmentation, and mesh extraction, Bade et al.9 identified 11 relevant artifacts that can be partially reduced or eliminated by appropriate smoothing at the mesh level. They classified surface models into compact, flat, and elongated objects and proposed appropriate smoothing methods for improving their visual quality.

In each of the stages of the processing pipeline, there exist many more parameters and sources of possible errors that could influence the precision of the final geometry. Therefore, it is necessary to control and specify the data conversion process. However, because of the large number of influencing factors, it is not realistic to identify each of them and to specify its influence quantitatively.

We found that a pragmatic method is sufficient for controlling the quality of the 3D bone models and for specifying their accuracy and precision within a reasonable confidence interval. In this paper, some aggregate sources of error are investigated, and a pragmatic method for validating and specifying a given conversion pipeline is presented together with its results. Many publications focus in a scientific manner on individual steps with isolated parameters. This work, in contrast, presents results from many image datasets acquired over the last 4 years and treats certain process steps as black boxes.

Methods

The AO Research Institute Davos maintains a database of CT-scans and 3D bone models. These 3D bone models are used in many clinically relevant applications and projects in fields such as teaching, implant design and optimization, and surgical planning. The post mortem bone CT-scans in the database come from specimens bequeathed to research projects, in compliance with local ethics committee guidelines. Most of the scans include the same European Forearm Phantom (EFP, QRM GmbH, Germany). The database contains hundreds of scans of this EFP, so it is natural to use them for the geometrical validation and specification of our processing pipeline. To do so, the phantom data are processed in the same way as the bone data. At several relevant stages of the conversion pipeline illustrated in Figure 1, certain distances on the phantom are measured and compared to reference values obtained by an initial mechanical measurement of the EFP. Significant deviations can thus be detected immediately as part of regular quality control.

Moreover, the statistical evaluation of the measured data allows the processing steps to be specified. For this work, phantom data from 31 CT-scans of the last 4 years were evaluated, and paired t tests were used to quantify the influence of the processing steps. Our hypothesis is that the results of the phantom measurements are close to those on “clinical data” within the limits of the measured specifications. We consider this plausible because the phantom is scanned together with the bones and has density properties similar to those of real bones. We are aware that the phantom measurements rely on air–water gray value steps of about 1,000 Hounsfield units, whereas the water–bone gray value step of real bones surrounded by soft tissue is about 1,500 Hounsfield units in clinical scans, or about 2,500 Hounsfield units in some cadaveric bone scans with air–bone gray value steps. We assume that the potential effects of these different material transitions on edge-to-edge measurements are negligible. Moreover, the shape variability of real bones is much richer than that of the phantom, and it is beyond the scope of this work to investigate exhaustively the influence of the processing pipeline on all aspects of shape variability. Here, we consider only the distances between edges and between parallel planes of the phantom to estimate the effect of the processing pipeline on two different aspects of shape. Note that the purpose of this work is not a sophisticated shape analysis of the phantom but a closer look at distance measures that are often made on virtual bones and at the influence of the processing pipeline on such measures.

In order to establish initial reference values for the EFP, 12 landmarks on the phantom were defined as illustrated in Figure 2.

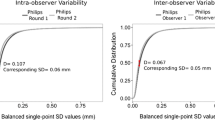

The first eight landmarks are homologous points at the edges, and they serve to determine the four diagonal distances between opposite edge points. Edge points represent shape detail and are sensitive to, for example, smoothing and the partial volume effect. These first eight landmarks were measured repeatedly (nine times) by the same operator with a MicroScribe Mx (Immersion Corporation, USA), a common 3D device for measuring landmark positions on real objects, with a specified accuracy of 0.1016 mm. The position of the EFP in space was not changed during the measuring process. The mean values and standard deviations of the landmarks were then computed. The four diagonal distances of the distance matrix of these mean landmark positions according to Eq. 1 serve as reference values for all subsequent measurements during the conversion process. For reasons of symmetry, they are independent of each other and should have the same distribution.
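The reference computation described above (Eq. 1) can be sketched as follows. Each landmark is averaged over its repeated readings, and then the Euclidean distances of the four diagonal pairs (P1, P7), (P2, P8), (P3, P5), and (P4, P6) are taken. This is a minimal sketch that assumes the readings are stored as a dict from landmark index to a list of (x, y, z) tuples in mm. The function names are hypothetical, not the authors' tooling.

```python
import math
from statistics import mean

# Diagonal landmark pairs (1-based indices) defining the four EFP edge distances.
DIAGONAL_PAIRS = [(1, 7), (2, 8), (3, 5), (4, 6)]


def mean_landmarks(readings):
    """Average repeated digitizer readings per landmark.

    readings: dict landmark index -> list of (x, y, z) tuples in mm
    returns:  dict landmark index -> mean (x, y, z)
    """
    return {
        idx: tuple(mean(coord) for coord in zip(*points))
        for idx, points in readings.items()
    }


def reference_edge_distances(readings):
    """Diagonal distances between the mean landmark positions (reference values)."""
    m = mean_landmarks(readings)
    return {(i, j): math.dist(m[i], m[j]) for i, j in DIAGONAL_PAIRS}
```

Averaging the positions first and then taking distances follows the order given in the text: landmark means first, then the distance matrix.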

The remaining four landmarks P9 to P12 lie pairwise on two flat opposite planes. They need not be homologous, because they are only used to define lines on the phantom surfaces. They represent compact shape; in contrast to the first eight points, they are not corner points and are therefore less influenced by smoothing operations. They are placed manually on the corresponding phantom surfaces and are used to compute the distance between the corresponding pair of opposite planes. It is straightforward to determine line equations from two points and the distance between two lines. If the lines are not parallel, their distance is independent of the position of the points on the parallel planes. Initially, the plane distance of the EFP was measured repeatedly (20×) with a calibrated sliding caliper with a resolution of 0.01 mm. The resulting average value \( r_{\rm{plane}} \) serves as an additional reference value. Thus, five reference values can be used in total for all subsequent measurements.
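The line-to-line distance mentioned above can be computed with the standard skew-line formula. The vector joining a point on each line is projected onto the common normal u × v. For two non-parallel lines lying in two parallel planes, the result equals the plane separation, which is why the placement of P9 to P12 does not matter. The sketch below is pure Python. The function name is hypothetical, and it refuses (nearly) parallel lines, in line with the assumption stated in the text.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def line_distance(p9, p10, p11, p12, eps=1e-12):
    """Shortest distance between line(p9, p10) and line(p11, p12) in mm."""
    u = _sub(p10, p9)        # direction of the first line
    v = _sub(p12, p11)       # direction of the second line
    n = _cross(u, v)         # common normal of both lines
    n_len = math.sqrt(_dot(n, n))
    if n_len < eps:
        raise ValueError("lines are (nearly) parallel; distance depends on point placement")
    # Project the connecting vector onto the unit common normal.
    return abs(_dot(_sub(p11, p9), n)) / n_len
```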

The different steps of the processing pipeline are illustrated in Figure 1. In the first step, the specimens are scanned together with the EFP. The helical scans were acquired on different CT scanners (Aquilion, Toshiba; Somatom 64, Siemens; XtremeCT, Scanco Medical). They used different scanning parameters, image resolutions, slice distances, slice thicknesses, and filter kernels, all of which influence the resulting 3D DICOM (DCM) image stack. We assume that clinical scanners are regularly maintained and calibrated and are therefore exact within the resolution of the scan; otherwise, local geometric distortions would be detectable with the proposed method. For post mortem scans, the following is recommended to improve scan quality with respect to the subsequent segmentation. Noise should be reduced by higher voltages (120–140 kV) and higher tube currents. Resolution should be increased by small slice distances (0.4 mm) and small in-plane pixel sizes (0.4 × 0.4 mm). The slice distance and in-plane resolution of all phantom scans were smaller than 1.2 mm, and most resolution values were 0.4 mm. As reconstruction kernels, we used typical kernels that are also used in clinical applications. Hard bone kernels do produce sharp edges, but they significantly increase noise and produce edge artifacts such as pronounced over- and undershoots at the edges.

After scanning, the DICOM images are anonymized and archived together with the available metadata, such as scan parameters and subject age, height, gender, and weight.

In the next step, the regions of interest are cropped from the image stacks. This reduces the data size and thereby facilitates the subsequent segmentation phase. Segmentation is performed with the segmentation editor of the Amira software (Visage Imaging, Inc.), which offers several tools for automatic and manual segmentation. However, fully automatic segmentation of a bone is currently not possible, and all scans need manual finishing by experts. In particular, the metaphyseal regions of the bones and all regions with thin cortical walls are subject to the partial volume effect and must be checked or segmented manually. This manual intervention is a critical step that lowers the precision and repeatability of the segmentation process, because it depends on window settings and local segmentation thresholds. Normally, however, the variations lie within one to three voxels, whose size is given by the 3D image resolution.

After segmentation (the labeling of the voxels), the labels are filtered to smooth their surfaces and make them suitable for automatic triangulation. This smoothing flattens sharp edges, peaks, and valleys, adding further imprecision to the bone model. This imprecision depends on the filter methods and their parameters. It is on the order of one to two times the image resolution, which is what is needed to reduce aliasing and noise effects of the scan. Moreover, filter parameters also have to be adapted to the bone type and to the purpose of the resulting 3D models. For example, a large femur used for visualization or statistical shape analysis can be smoothed more generously than a thin orbital wall model of the cranium intended for individual analysis.

From the final label field, an Amira module reconstructs polygonal surfaces. Many parameters, such as the size of a smoothing kernel, the minimal edge length, or optional simplification of the final surface, influence the precision of the final result. The final triangulated surface can be saved in Standard Triangulation Language or Surface Tessellation Language (STL) format, which is supported by many other software packages and widely used for rapid prototyping and computer-aided manufacturing. STL files are the input for the next processing step, which produces nonuniform rational B-spline (NURBS) surfaces. This step is performed with the reverse engineering software Raindrop Geomagic Studio 10 (Geomagic Inc., USA). After cleaning, further smoothing, and repairing holes and triangle intersections, many patches (about 150 for a femur) are placed semiautomatically on the surfaces. The final precision of the resulting parametric surfaces is also influenced by many parameters, such as smoothing kernels, patch size and placement, and the grid resolution of the patches. The NURBS surfaces are saved in Initial Graphics Exchange Specification (IGES) format, a neutral data format for the digital exchange of information among computer-aided design (CAD) systems. By choosing appropriate patches and grid resolution, the user can control the maximal deviation from the triangulated surface. More on NURBS can be found in Piegl and Tiller,10 which covers all aspects of NURBS needed to design geometry in a computer-aided environment.

In the last step of the processing pipeline, solid models are generated in Unigraphics (UGS Corp., USA). IGES files are imported, and solids for use in CAD systems are produced by Boolean operations. For example, subtracting the inner cortex shell of a bone from its outer shell yields a solid cortex model of that bone. Solid models can be saved in STEP (.stp) format for further use.

All these processing steps can affect the accuracy and precision of the final model. The goal of this work is not to isolate and quantify all these influences. Instead, it quantifies only the changes in geometric dimensions at different phases of the processing pipeline with the aid of a phantom. The distances are determined at three stages of the conversion process, and the landmarks are measured once by the same operator. First, the landmarks are determined in the segmentation editor of Amira after loading the initial DICOM images. The editor offers a simultaneous view of three orthogonal slices, so the edges can be located easily (see Figs. 1 and 3 (upper)).

The second measurement is made after the polygon phase in Geomagic, just before the shape phase in which the NURBS patches are defined. Geomagic allows feature points to be placed interactively on surfaces and saved in IGES format (see Figs. 1 and 3 (lower left)).

Finally, the landmarks are acquired on the NURBS surface (see Figs. 1 and 3 (lower right)). All landmarks are then entered into a database, and a script computes the deviations from the reference values.

Figure 3 illustrates the phantom in the different stages at which the landmarks are determined. In this example, the relatively low resolution of the scan generates a rippling of the surface.

After each process step \( k \in \{\mathrm{DCM}, \mathrm{POLYGON}, \mathrm{NURBS}\} \) of a series \( n \in \{1, \ldots, 31\} \), the landmarks \( P_{n,k,i} \), \( i = 1, \ldots, 12 \), of the phantom are measured. Then the four diagonal distances \( d_{n,k,1,7} = \left\| P_{n,k,7} - P_{n,k,1} \right\| \), \( d_{n,k,2,8} = \left\| P_{n,k,8} - P_{n,k,2} \right\| \), \( d_{n,k,3,5} = \left\| P_{n,k,5} - P_{n,k,3} \right\| \), and \( d_{n,k,4,6} = \left\| P_{n,k,6} - P_{n,k,4} \right\| \) are computed. As we are only interested in the deviations from the reference values (see Eq. 2), the corresponding reference values are subtracted from these diagonal distances; a negative deviation thus indicates a shortening.

Finally, to minimize the influence of manual landmark placement at the edges, these four deviations are averaged. The resulting value represents the mean deviation from the reference after process step k of series n (see Eq. 3).
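The deviations referred to in Eqs. 2 and 3 can be written out from the definitions above. The formulation below is consistent with the text and with the sign convention of the Results, where negative values indicate shortening. It uses \( r_{i,j} \) for the reference value of diagonal (i, j); this symbol is our notation.

\[ v_{n,k,i,j} = d_{n,k,i,j} - r_{i,j}, \qquad (i,j) \in \{(1,7), (2,8), (3,5), (4,6)\} \]

\[ v_{n,k,\mathrm{edges}} = \frac{1}{4} \left( v_{n,k,1,7} + v_{n,k,2,8} + v_{n,k,3,5} + v_{n,k,4,6} \right) \]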

After each process step k of series n, we also compute the distance \( {d_{n,k,{\rm{plane}}}} = {\hbox{dist}}\left( {{\hbox{line}}\left( {{P_{n,k,9}},{P_{n,k,10}}} \right),{\hbox{line}}\left( {{P_{n,k,11}},{P_{n,k,12}}} \right)} \right) \) of the corresponding planes and determine the deviation from the reference value according to Eq. 4.
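The per-series evaluation (edge deviations averaged as in Eqs. 2 and 3, plus the plane deviation \( v_{n,k,\mathrm{planes}} = d_{n,k,\mathrm{plane}} - r_{\rm{plane}} \) of Eq. 4) could be implemented along the lines of the script mentioned above. This is a minimal sketch, not the authors' script. It assumes landmarks are given as a dict from index 1 to 12 to (x, y, z) in mm, and that deviations are computed as measured minus reference. All names are hypothetical.

```python
import math
from statistics import mean

DIAGONAL_PAIRS = [(1, 7), (2, 8), (3, 5), (4, 6)]


def line_distance(a, b, c, d):
    """Distance between line(a, b) and line(c, d); assumes non-parallel lines."""
    u = [b[i] - a[i] for i in range(3)]
    v = [d[i] - c[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    w = [c[i] - a[i] for i in range(3)]
    return abs(sum(w[i] * n[i] for i in range(3))) / math.sqrt(sum(x * x for x in n))


def series_deviations(landmarks, ref_edges, ref_plane):
    """Mean edge deviation and plane deviation (mm) for one series and step.

    landmarks: dict landmark index (1..12) -> (x, y, z) in mm
    ref_edges: dict (i, j) -> reference diagonal distance in mm
    ref_plane: reference plane distance in mm
    """
    # Signed deviations of the four diagonals, averaged (negative = shorter).
    v_edges = mean(
        math.dist(landmarks[i], landmarks[j]) - ref_edges[(i, j)]
        for i, j in DIAGONAL_PAIRS
    )
    d_plane = line_distance(landmarks[9], landmarks[10], landmarks[11], landmarks[12])
    return v_edges, d_plane - ref_plane
```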

Finally, after all N = 31 series have been processed and measured, the deviations for each process step can be averaged over the series.
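The averaging over the N series (Eq. 5) can be written as shown below. Here \( v_{n,k,s} \) denotes the deviation of series n after step k for the edge measure (s = edges) or the plane measure (s = planes). The sample standard deviation is assumed as the precision measure; the notation and this choice are our assumptions.

\[ \bar{v}_{k,s} = \frac{1}{N} \sum_{n=1}^{N} v_{n,k,s}, \qquad \mathrm{SD}_{k,s} = \sqrt{\frac{1}{N-1} \sum_{n=1}^{N} \left( v_{n,k,s} - \bar{v}_{k,s} \right)^{2}} \]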

Note that the mean values in Eq. 5 are deviations from the reference values. They can be considered a measure of the accuracy of the models after the corresponding process step k, accumulating all errors up to that step. The standard deviation across the series can be considered a measure of the precision of the modeling. Thus, the average deviations of the individual processing steps k can be compared with each other, which allows reasonable tolerances to be formulated for quality control of the processed data.

However, to estimate the influence of the individual processing steps, the mean deviations of two consecutive process steps are compared. The measurements also showed that the deviations within a given series are correlated; i.e., if the deviation from the reference is large before a given processing step, it is also large after that step.

Therefore, paired t tests, which operate on the per-series differences of the deviations according to Eq. 6, were used to quantify the influence of the process steps.
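A paired t test on such differences can be sketched as follows. Under the natural reading of Eq. 6, the paired differences are \( \Delta_n = v_{n,k_2,s} - v_{n,k_1,s} \) for consecutive steps \( k_1 \to k_2 \), where \( v_{n,k,s} \) is the deviation of series n after step k. The t statistic is the mean difference divided by its standard error, with N − 1 degrees of freedom. The stdlib-only sketch below computes just that statistic. A p value additionally needs the t distribution, which SciPy's `scipy.stats.ttest_rel` provides for the same test. The function name is hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(before, after):
    """Paired t statistic for per-series deviations before/after one process step.

    before, after: equal-length sequences of deviations (mm), one value per series.
    Returns (mean difference, t statistic, degrees of freedom).
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least two series")
    diffs = [a - b for a, b in zip(after, before)]   # Delta_n = after - before
    n = len(diffs)
    mean_diff = mean(diffs)
    std_err = stdev(diffs) / math.sqrt(n)            # uses the sample SD of the differences
    if std_err == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean_diff, mean_diff / std_err, n - 1
```

Pairing removes the between-series offset that the text reports as correlated, so only the change caused by the step enters the test.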

Results

At the beginning of the study, the reference values of the EFP were determined. Table 1 shows the diagonal distances of the EFP, which was measured nine times with the MicroScribe device. The repeated distance measurements for each landmark pair are averaged and subsequently used as reference values. Table 1 also gives the reference value of the plane distance, which was measured 20 times with a calibrated sliding caliper.

In this work, the evaluation of N = 31 CT scans containing the same EFP is presented. Table 2 shows the mean values and standard deviations (SD) of the deviations of the plane distance and of the averaged edge distances after the three process steps for the 31 series. According to the Shapiro–Wilk test and the Kolmogorov–Smirnov test with Lilliefors significance correction (p = 0.2), none of the six variables \( v_{k,s} \), \( k \in \{\mathrm{DCM}, \mathrm{POLYGON}, \mathrm{NURBS}\} \), \( s \in \{\mathrm{edges}, \mathrm{planes}\} \), of the signed distance deviations departs significantly from a normal distribution.

To estimate the influence of the process steps POLYGON and NURBS, paired t tests were computed. The results are shown in Table 3.

According to Table 3, the pairs are correlated. This justifies the use of paired t tests and indicates that errors are propagated through the processing pipeline. The null hypothesis of the t test is that there is no difference between the means. As the tests reject this hypothesis in the three cases (p < 0.05), we conclude that the POLYGON process step significantly reduces the edge distances. The shortening of the edge distances (−0.929 mm) is larger than that of the plane distances (−0.57 mm), which is not surprising because this process step performs smoothing operations. The NURBS phase influences edge and plane distances only marginally, and the amounts are of no clinical relevance.

Discussion

According to the results of this work, the accuracy of 3D models processed as described above lies within the scan resolution. According to the sampling theorem, a sampling interval of d/2 is needed to show details of a given size d, so it is not possible to analyze effects below the scan resolution. The measured effects are close to or even below the scan resolution. We therefore conclude that our processing pipeline, which generates 3D models from CT-scans according to state-of-the-art methods, produces high-quality 3D models. These models can be used in further clinically relevant applications that require models of the geometry of real bones that are precise within the scan resolution. It is also important to choose the triangulation and mesh resolution in the later steps of the processing pipeline adequately, to avoid additional loss of resolution. This is especially important when modeling fine skeletal structures such as orbital walls, whose thickness is close to the scan resolution.
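Applying the sampling criterion stated above to the scan resolutions reported in the Methods (mostly 0.4 mm, always below 1.2 mm) gives the following smallest detail sizes \( d_{\min} \) for a sampling interval \( \Delta x \) (our notation):

\[ d_{\min} = 2\,\Delta x: \qquad \Delta x = 0.4\ \mathrm{mm} \;\Rightarrow\; d_{\min} = 0.8\ \mathrm{mm}, \qquad \Delta x = 1.2\ \mathrm{mm} \;\Rightarrow\; d_{\min} = 2.4\ \mathrm{mm} \]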

In single conversions, deviations greater than the scan resolution can occur. These are most probably due to errors in the processing pipeline, such as wrong scaling, excessive smoothing, inappropriate segmentation thresholds, or incorrect scanner calibration. Therefore, the processing should be monitored by pragmatic quality controls, such as coprocessing a control object with known geometry. If deviations are clearly larger than the scan resolution, the reasons must be examined. The maximal voxel edge length, for example, could serve as a practical threshold for acceptable deviations of the distance measures from the reference.
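The threshold suggested above can be turned into a simple automatic check. This is a minimal sketch with the following assumptions:

- The voxel edge lengths of each scan are known, for example from the pixel spacing and slice distance stored with the DICOM data.
- The per-series deviations have already been computed.

`flag_series` and its arguments are hypothetical names.

```python
def flag_series(deviations, voxel_spacing):
    """Return the series whose deviation exceeds the maximal voxel edge length.

    deviations:    dict series id -> signed deviation from the reference (mm)
    voxel_spacing: dict series id -> (dx, dy, dz) voxel edge lengths (mm)
    """
    flagged = []
    for series_id, dev in deviations.items():
        threshold = max(voxel_spacing[series_id])   # maximal voxel edge length
        if abs(dev) > threshold:                    # sign ignored: shortening or lengthening
            flagged.append(series_id)
    return flagged
```

Flagged series would then be the ones whose processing is examined for scaling, smoothing, threshold, or calibration problems.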

It is known that smoothing operations eliminate small details and can reduce or enlarge distances between surface points of objects. For example, lines lying entirely within the object and connecting sharp convex edges can be shortened, whereas lines within the object connecting two sharp concave edges can be lengthened. Rigid transformations such as translations and rotations in 3D space, however, do not significantly influence geometric specifications. Modern 3D software packages such as Geomagic Studio 10 are even certified, with specified accuracies of less than 0.1 µm for distances and less than 0.1 arc sec for angles. These values are far below practical scan resolutions and clinical relevance.
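The first statement can be illustrated with a toy 2D example: simple uniform Laplacian smoothing applied to a densely sampled square outline. In each iteration, every vertex moves part-way toward the midpoint of its two neighbours. The corner-to-corner diagonal shortens, while the distance between the midpoints of opposite sides, which lie on flat segments far from the corners, stays essentially unchanged for these parameters. This mirrors, qualitatively, the difference between edge and plane distances discussed in the Results. It is a sketch under our own assumptions (2D polygon, uniform Laplacian, hypothetical parameter values), not the smoothing performed in Amira or Geomagic.

```python
import math


def square_outline(side=10.0, per_side=20):
    """Closed square outline (counter-clockwise) with per_side vertices per edge."""
    corners = [(0.0, 0.0), (side, 0.0), (side, side), (0.0, side)]
    pts = []
    for k in range(4):
        (x0, y0), (x1, y1) = corners[k], corners[(k + 1) % 4]
        for s in range(per_side):
            t = s / per_side
            pts.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return pts


def laplacian_smooth(pts, lam=0.5, iterations=10):
    """Uniform Laplacian smoothing: move each vertex a fraction lam toward
    the midpoint of its two neighbours, repeated for the given iterations."""
    n = len(pts)
    for _ in range(iterations):
        pts = [
            (x + lam * ((pts[i - 1][0] + pts[(i + 1) % n][0]) / 2 - x),
             y + lam * ((pts[i - 1][1] + pts[(i + 1) % n][1]) / 2 - y))
            for i, (x, y) in enumerate(pts)
        ]
    return pts


def distances(pts, per_side=20):
    """Corner-to-corner diagonal and distance between midpoints of opposite sides."""
    diagonal = math.dist(pts[0], pts[2 * per_side])
    sides = math.dist(pts[per_side // 2], pts[2 * per_side + per_side // 2])
    return diagonal, sides


before = distances(square_outline())
after = distances(laplacian_smooth(square_outline()))
print(f"diagonal: {before[0]:.3f} -> {after[0]:.3f} mm")
print(f"sides:    {before[1]:.3f} -> {after[1]:.3f} mm")
```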

Conclusion

Coprocessing a reference object such as the EFP enables a pragmatic assessment of the dimensional accuracy and precision of a given processing pipeline for creating 3D shape models of human bones from CT-scans. It is suitable for detecting most systematic or human errors when processing a CT-scan. Typical errors are about the same size as the scan resolution. If larger deviations appear, their causes should be examined in order to maintain the good quality of the models.

References

1. Messmer P, Matthews F, Jacob AL, Kikinis R, Regazzoni P, Noser H: A CT-database for research, development and education: concept and potential. J Digit Imaging 20(1):17–22, 2007

2. Schmutz B, Wullschleger ME, Schuetz MA: The effect of CT slice spacing on the geometry of 3D models. In: Proceedings of 6th Australian Biomechanics Conference. New Zealand: The University of Auckland, 2007, pp 93–94

3. Shin J, Lee HK, Choi CG, et al: The quality of reconstructed 3D images in multidetector-row helical CT: experimental study involving scan parameters. Korean J Radiol 3(1):49–56, 2002

4. Souza A, Udupa JK, Saha PK: Volume rendering in the presence of partial volume effects. IEEE Trans Med Imaging 24(2):223–235, 2005

5. Ward KA, Adams JE, Hangartner TN: Recommendations for thresholds for cortical bone geometry and density measurement by peripheral quantitative computed tomography. Calcif Tissue Int 77:275–280, 2005

6. Stalling D, Zöckler M, Hege HC: Interactive segmentation of 3D medical images with subvoxel accuracy. CAR 98:137–142, 1998

7. Drapikowski P: Surface modeling—uncertainty estimation and visualization. Comput Med Imaging Graph 32:134–139, 2008

8. Cignoni P, Rocchini C, Scopigno R: Metro: measuring error on simplified surfaces. Comput Graph Forum 17(2):167–174, 1998

9. Bade R, Haase J, Preim B: Comparison of fundamental mesh smoothing algorithms for medical surface models. In: Schulze T, Schlechtweg S, Hinz V, Eds. Simulation und Visualisierung. SCS European Publishing House, Erlangen, 2006, pp 289–304

10. Piegl L, Tiller W: The NURBS Book. Springer, Berlin, 1997

Cite this article

Noser, H., Heldstab, T., Schmutz, B. et al. Typical Accuracy and Quality Control of a Process for Creating CT-Based Virtual Bone Models. J Digit Imaging 24, 437–445 (2011). https://doi.org/10.1007/s10278-010-9287-4
