Abstract

Using a wavelet basis, we establish in this paper upper bounds of wavelet estimation on \( L^{p}({\mathbb {R}}^{d}) \) risk of regression functions with strong mixing data for \( 1\le p<\infty \). In contrast to the independent case, these upper bounds have different analytic formulae for \(p\in [1, 2]\) and \(p\in (2, +\infty )\). For \(p=2\), it turns out that our result reduces to a theorem of Chaubey et al. (J Nonparametr Stat 25:53–71, 2013); and for \(d=1\) and \(p=2\), it becomes the corresponding theorem of Chaubey and Shirazi (Commun Stat Theory Methods 44:885–899, 2015).


1 Introduction and preliminary

Nonparametric regression estimation plays an important role in practical applications. The classical approach uses Nadaraya–Watson type kernel estimators (Ahmad 1995). Because wavelet bases are localized in both the time and frequency domains, wavelets provide a new method for analyzing functions (signals) with discontinuities or sharp spikes. It is therefore natural to expect better estimates than the kernel method gives in some cases. Great achievements have been made in this area; see Delyon and Juditsky (1996), Hall and Patil (1996), Masry (2000), Chaubey et al. (2013), Chaubey and Shirazi (2015), Chesneau and Shirazi (2014) and Chesneau et al. (2015).

In this paper we consider the following model: Let \((X_{i},Y_{i})_{i\in {\mathbb {Z}}}\) be a strictly stationary random process defined on a probability space \((\varOmega , {\mathscr {F}},P)\) with the common density function

$$\begin{aligned} f(x,y)=\frac{\omega (x,y)g(x,y)}{\mu }, \end{aligned}$$

where \(\omega \) stands for a known positive function, g denotes the density function of the unobserved random variables (U, V) and \(\mu =E \omega (U,V)<\infty \). Then we want to estimate the unknown regression function

$$\begin{aligned} r(x)=E(\rho (V)|U=x) \end{aligned}$$

from a sequence of strong mixing data \((X_{1},Y_{1}), (X_{2},Y_{2}), \ldots , (X_{n},Y_{n})\).

Chaubey et al. (2013) provide an upper bound of the mean integrated squared error for a linear wavelet estimator when \(\rho (V)=V\) and \(V\in [a, b]\); Chaubey and Shirazi (2015) consider the case of a nonlinear wavelet estimator with \(d=1\).

In this paper, we further extend these works to the d-dimensional setting and to \(L^{p}\) risk for \(1\le p<\infty \). When \(p=2\), our result reduces to Theorem 4.1 of Chaubey et al. (2013); in the case of \(d=1\) and \(p=2\), it becomes Theorem 5.1 of Chaubey and Shirazi (2015).

1.1 Wavelets and Besov spaces

Multiresolution analysis (MRA) is a central notion in wavelet analysis and plays an important role in constructing a wavelet basis. An MRA is a sequence of closed subspaces \(\{V_{j}\}_{j\in {\mathbb {Z}}}\) of the square integrable function space \(L^{2}({\mathbb {R}}^{d})\) satisfying the following properties:

- (i) \(V_{j}\subseteq V_{j+1}\), \(j\in {\mathbb {Z}}\). Here and in what follows, \({\mathbb {Z}}\) denotes the set of integers and \(\mathbb {N}:=\{n\in {\mathbb {Z}}, n\ge 0\};\)

- (ii) \(\overline{\bigcup \limits _{j\in {\mathbb {Z}}} V_{j}}=L^{2}({\mathbb {R}}^{d})\). This means that the space \(\bigcup \nolimits _{j\in {\mathbb {Z}}} V_{j}\) is dense in \(L^{2}({\mathbb {R}}^{d})\);

- (iii) \(f(2\cdot )\in V_{j+1}\) if and only if \(f(\cdot )\in V_{j}\) for each \(j\in {\mathbb {Z}}\);

- (iv) There exists a scaling function \(\varphi (x)\in L^{2}({\mathbb {R}}^{d})\) such that \(\{\varphi (\cdot -k),k\in {\mathbb {Z}}^{d}\}\) forms an orthonormal basis of \(V_{0}=\overline{\mathrm{span}}\{\varphi (\cdot -k)\}\).

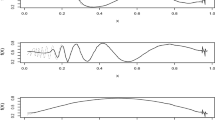

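When \(d=1\), there is a simple way to define an orthonormal wavelet basis. Examples include the Daubechies wavelets with compact supports. For \(d\ge 2\), the tensor product method gives an MRA \(\{V_{j}\}\) of \(L^{2}({\mathbb {R}}^{d})\) from a one-dimensional MRA. In fact, with a scaling function \(\varphi \) of tensor products, we find \(M=2^{d}-1\) wavelet functions \(\psi ^{\ell }~(\ell =1,2,\ldots ,M)\) such that for each \(f\in L^{2}({\mathbb {R}}^{d})\), the following decomposition

$$\begin{aligned} f=\sum \limits _{k\in {\mathbb {Z}}^{d}}\alpha _{j_{0},k}\varphi _{j_{0},k}+\sum \limits _{j=j_{0}}^{\infty }\sum \limits _{\ell =1}^{M}\sum \limits _{k\in {\mathbb {Z}}^{d}}\beta _{j,k}^{\ell }\psi _{j,k}^{\ell } \end{aligned}$$

holds in the \(L^{2}({\mathbb {R}}^{d})\) sense, where \(\alpha _{j_{0},k}=\langle f,\varphi _{j_{0},k}\rangle \), \(\beta _{j,k}^{\ell }=\langle f,\psi _{j,k}^{\ell }\rangle \) and

$$\begin{aligned} \varphi _{j_{0},k}(x)=2^{\frac{j_{0}d}{2}}\varphi (2^{j_{0}}x-k),\quad \psi _{j,k}^{\ell }(x)=2^{\frac{jd}{2}}\psi ^{\ell }(2^{j}x-k). \end{aligned}$$

Let \(P_{j}\) be the orthogonal projection operator from \(L^{2}({\mathbb {R}}^{d})\) onto the space \(V_{j}\) with the orthonormal basis \(\{\varphi _{j,k}(\cdot )=2^{jd/2}\varphi (2^{j}\cdot -k),k\in {\mathbb {Z}}^{d}\}\). Then for \(f\in L^{2}({\mathbb {R}}^{d})\),

$$\begin{aligned} P_{j}f=\sum \limits _{k\in {\mathbb {Z}}^{d}}\alpha _{j,k}\varphi _{j,k}. \end{aligned}$$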

A wavelet basis can be used to characterize Besov spaces. The next lemma provides equivalent definitions for those spaces, for which we need one more notation: a scaling function \(\varphi \) is called m-regular, if \(\varphi \in C^{m}({\mathbb {R}}^{d})\) and \(|D^{\alpha }\varphi (x)|\le c(1+|x|^{2})^{-\ell }\) for each \(\ell \in {\mathbb {Z}}\) and each multi-index \(\alpha \in \mathbb {N}^d\) with \(|\alpha |\le m\).

Lemma 1.1

(Meyer 1990) Let \(\varphi \) be m-regular, \( \psi ^{\ell } \) (\( \ell =1, 2, \ldots , M, M=2^{d}-1 \)) be the corresponding wavelets and \(f\in L^{p}({\mathbb {R}}^{d})\). If \(\alpha _{j,k}=\langle f,\varphi _{j,k} \rangle \), \(\beta _{j,k}^{\ell }=\langle f,\psi _{j,k}^{\ell } \rangle \), \(p,q\in [1,\infty ]\) and \(0<s<m\), then the following assertions are equivalent:

- (1) \(f\in B^{s}_{p,q}({\mathbb {R}}^{d})\);

- (2) \(\{2^{js}\Vert P_{j+1}f-P_{j}f\Vert _{p}\}\in l_{q};\)

- (3) \(\{2^{j(s-\frac{d}{p}+\frac{d}{2})}\Vert \beta _{j}\Vert _{p}\}\in l_{q}.\)

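The Besov norm of f can be defined by

$$\begin{aligned} \Vert f\Vert _{B^{s}_{p,q}}:=\Vert (\alpha _{j_{0}})\Vert _{p}+\Big \Vert \big (2^{j(s-\frac{d}{p}+\frac{d}{2})}\Vert \beta _{j}\Vert _{p}\big )_{j\ge j_{0}}\Big \Vert _{q}\quad \text{ with }\quad \Vert \beta _{j}\Vert _{p}^{p}:=\sum \limits _{\ell =1}^{M}\sum \limits _{k\in {\mathbb {Z}}^{d}}|\beta _{j,k}^{\ell }|^{p}. \end{aligned}$$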

We also need a lemma (Härdle et al. 1998) in our later discussions.

Lemma 1.2

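Let a scaling function \(\varphi \in L^{2}({\mathbb {R}}^{d})\) be m-regular and \(\{\alpha _{k}\}\in l_{p}, 1\le p\le \infty \). Then there exist \(c_{2}\ge c_{1}>0\) such that

$$\begin{aligned} c_{1}2^{jd(\frac{1}{2}-\frac{1}{p})}\Vert (\alpha _{k})\Vert _{p}\le \Big \Vert \sum \limits _{k\in {\mathbb {Z}}^{d}}\alpha _{k}\varphi _{j,k}\Big \Vert _{p}\le c_{2}2^{jd(\frac{1}{2}-\frac{1}{p})}\Vert (\alpha _{k})\Vert _{p}. \end{aligned}$$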

In Härdle et al. (1998), the authors assume a weaker condition than m-regularity. For \(d=1\), the proof of the lemma can be found in Härdle et al. (1998). Similar arguments work as well for \(d\ge 2\). In addition, Lemma 1.2 holds if the scaling function \(\varphi \) is replaced by the corresponding wavelet \(\psi \).
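The scaling factor \(2^{jd(\frac{1}{2}-\frac{1}{p})}\) behind this kind of norm equivalence can be checked by hand in the simplest case. The following is a minimal sketch, assuming \(d=1\) and the Haar scaling function \(\varphi =I_{[0,1)}\) (which is not m-regular for \(m>0\), so it is only an illustration and not the basis used in this paper). Because the functions \(\varphi _{j,k}\) then have disjoint supports, the two sides agree exactly with \(c_{1}=c_{2}=1\). All function names are hypothetical.

```python
import math

def haar_phi_jk(x, j, k):
    """Haar scaling function phi_{j,k}(x) = 2^{j/2} * 1_{[0,1)}(2^j x - k), d = 1."""
    u = (2 ** j) * x - k
    return 2 ** (j / 2) if 0.0 <= u < 1.0 else 0.0

def lp_norm_of_expansion(alpha, j, p, grid_per_cell=8):
    """L^p norm of sum_k alpha[k] * phi_{j,k}, computed by the midpoint rule.

    The function is piecewise constant on cells of length 2^{-j}, so the
    midpoint rule is exact up to floating-point rounding.
    """
    step = 1.0 / (2 ** j * grid_per_cell)
    total = 0.0
    for i in range(len(alpha) * grid_per_cell):
        x = (i + 0.5) * step
        value = sum(a * haar_phi_jk(x, j, k) for k, a in enumerate(alpha))
        total += abs(value) ** p * step
    return total ** (1.0 / p)

def scaled_sequence_norm(alpha, j, p, d=1):
    """2^{jd(1/2 - 1/p)} times the l_p norm of the coefficient sequence."""
    return 2 ** (j * d * (0.5 - 1.0 / p)) * sum(abs(a) ** p for a in alpha) ** (1.0 / p)

coefficients = [1.0, -2.0, 0.5, 3.0]
print(lp_norm_of_expansion(coefficients, j=3, p=3))
print(scaled_sequence_norm(coefficients, j=3, p=3))
```

For a smoother, overlapping basis such as the Daubechies family, the two printed quantities would no longer coincide, but they would stay within constant factors of each other.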

1.2 Problem and main theorem

In this paper we aim to estimate the unknown regression function

$$\begin{aligned} r(x)=E(\rho (V)|U=x)=\frac{\int _{{\mathbb {R}}}\rho (y)g(x,y)dy}{h(x)},\quad x\in [0,1]^{d}, \end{aligned}$$(2)

from a strong mixing sequence (see Definition 1.1) of bivariate random variables \((X_{1},Y_{1}), (X_{2},Y_{2}),\ldots , (X_{n},Y_{n})\) with the common density function

$$\begin{aligned} f(x,y)=\frac{\omega (x,y)g(x,y)}{\mu }, \end{aligned}$$(3)

where \(\omega \) denotes a weight function, g stands for the density function of the unobserved bivariate random variable (U, V) and \(\mu =E\omega (U,V)<\infty \). In addition, h(x) is assumed to be the known density of U with compact support on \([0,1]^{d}\), as in Chaubey et al. (2013) and Chaubey and Shirazi (2015). Throughout the paper, we always require \( \mathrm{supp}~X_{i} \subseteq [0,1]^{d}\).

Definition 1.1

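A strictly stationary sequence of random vectors \(\{X_{i}\}_{i\in {\mathbb {Z}}}\) is said to be strong mixing, if

$$\begin{aligned} \lim \limits _{k\rightarrow \infty }\alpha (k)=\lim \limits _{k\rightarrow \infty }\sup \left\{ |{\mathbb {P}}(A\cap B)-{\mathbb {P}}(A){\mathbb {P}}(B)|:~A\in \digamma ^{0}_{-\infty },~B\in \digamma ^{\infty }_{k}\right\} =0, \end{aligned}$$

where \( \digamma ^{0}_{-\infty } \) denotes the \(\sigma \)-field generated by \( \{X_{i}\}_{i \le 0}\) and \( \digamma ^{\infty }_{k} \) the one generated by \( \{X_{i}\}_{i \ge k}\).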

Obviously, independent and identically distributed (i.i.d.) data are strong mixing, since \({\mathbb {P}} (A\cap B)={\mathbb {P}}(A) {\mathbb {P}} (B)\) and \(\alpha (k)\equiv 0\) in that case. In addition, \(\{X_{i}\}\) is said to be geometrically strong mixing when \(\alpha (k)\le \gamma \delta ^{k}\) for some \(\gamma >0\) and \(0<\delta <1\). We now provide two examples of geometrically strong mixing data.

Example 1

(Kulik 2008) Let \(X_t=\sum \nolimits _{j\in {\mathbb {Z}}}a_j\varepsilon _{t-j}\) with

Then it can be proved by Theorem 2 and Corollary 1 of Doukhan (1994) on Page 58 that \(\{X_t,~t\in {\mathbb {Z}}\}\) is a geometrically strong mixing sequence.

Example 2

(Mokkadem 1988) Let \(\{\varepsilon (t),t\in {\mathbb {Z}}\}\overset{i.i.d.}{\sim } N_r(\mathbf {0},\Sigma )\) (r-dimensional normal distribution) and \(\{Y(t),~t\in {\mathbb {Z}}\}\) satisfy the autoregressive moving average equation

with \(l\times r\) and \(l\times l\) matrices A(k), B(i) respectively, as well as B(0) being the identity matrix. If the absolute values of the zeros of the determinant \(\text{ det }~P(z):=\text{ det }\sum \nolimits _{i=0}^pB(i)z^i (z\in \mathbb {C})\) are strictly greater than 1, then \(\{Y(t),~t\in {\mathbb {Z}}\}\) is geometrically strong mixing.

These two important examples show that strong mixing data do not reduce to the classical i.i.d. case, even though \(\alpha (k)\) goes to zero geometrically fast, \(\alpha (k)=O(\delta ^{k})\).

It is well known that a Lebesgue measurable function maps i.i.d. data to i.i.d. data. When dealing with strong mixing data, it seems necessary to require the functions \(\omega , \rho , h\) in (2) and (3) to be Borel measurable. A Borel measurable function f on \({\mathbb {R}}^{d}\) means \(\{x\in {\mathbb {R}}^{d}, f(x)>c\}\) being a Borel set for each \(c\in {\mathbb {R}}\). In that case, we can prove easily that \(\{f(X_{i})\}\) remains strong mixing and \(\alpha _{f(X)}(k)\le \alpha _{X}(k)~(k=1, 2, \ldots )\), if \(\{X_{i}\}\) has the same property, see Guo (2016). This note is important for the proofs of Propositions 2.2 and 2.3.

Before introducing our estimators, we formulate the following assumptions:

- H1. The density function h of the random variable U has a positive lower bound,

$$\begin{aligned} \inf \limits _{x\in [0,1]^{d}}h(x)\ge c_{1}>0. \end{aligned}$$

- H2. The weight function \(\omega \) has both positive upper and lower bounds, i.e., for \((x,y)\in [0,1]^{d}\times {\mathbb {R}},\)

$$\begin{aligned} 0<c_{2}\le \omega (x,y)\le c_{3}<+\infty . \end{aligned}$$

- H3. There exists a constant \(c_{4}>0\) such that,

$$\begin{aligned} \sup \limits _{y\in {\mathbb {R}}}|\rho (y)|\le c_{4},\quad \int _{{\mathbb {R}}}|\rho (y)|dy\le c_{4}. \end{aligned}$$

- H4. The strong mixing coefficient of \(\{(X_{i}, Y_{i}), i=1, 2, \ldots , n\}\) satisfies \(\alpha (k)\le \gamma e^{-c_{5}k}\) with \(\gamma>0, c_{5}>0\).

- H5. The density \(f_{(X_{1}, Y_{1}, X_{k+1}, Y_{k+1})}\) of \((X_{1}, Y_{1}, X_{k+1}, Y_{k+1})~(k\ge 1)\) and the density \(f_{(X_{1}, Y_{1})}\) of \((X_{1}, Y_{1})\) satisfy that for \((x, y, x^{*}, y^{*})\in [0,1]^{d}\times {\mathbb {R}}\times [0,1]^{d}\times {\mathbb {R}}\),

$$\begin{aligned} \sup \limits _{k\ge 1}\sup \limits _{(x, y, x^{*}, y^{*})\in [0,1]^{d}\times {\mathbb {R}}\times [0,1]^{d}\times {\mathbb {R}}}|h_{k}(x, y, x^{*}, y^{*})|\le c_{6}, \end{aligned}$$

where \(h_{k}(x, y, x^{*}, y^{*})=f_{(X_{1}, Y_{1}, X_{k+1}, Y_{k+1})}(x, y, x^{*}, y^{*}) -f_{(X_{1}, Y_{1})}(x,y)f_{(X_{k+1}, Y_{k+1})}(x^{*}, y^{*})\) and \(c_{6}>0\).

The assumptions H1 and H2 are standard for the nonparametric regression model with biased data (Chaubey et al. 2013; Chesneau and Shirazi 2014). In Chaubey and Shirazi (2015), the authors assume \(h\equiv 1\), while \(Y\in [a,b]\) is required by Chaubey et al. (2013). Condition H5 can be viewed as a ‘Castellana-Leadbetter’ type condition in Masry (2000).

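We choose the d-dimensional scaling function

$$\begin{aligned} \varphi (x)=\varphi (x_{1},\ldots ,x_{d}):=D_{2N}(x_{1})\cdot D_{2N}(x_{2})\cdots D_{2N}(x_{d}) \end{aligned}$$

with \(D_{2N}(\cdot )\) being the one-dimensional Daubechies scaling function. Then \(\varphi \) is m-regular \((m>0)\) when N gets large enough. Note that \(D_{2N}\) has compact support \([0,2N-1]\) and the corresponding wavelet has compact support \([-N+1,~N]\). Then for \(r\in L^{2}({\mathbb {R}}^{d})\) with \(\mathrm{supp}~r\subseteq [0,1]^{d}\) and \(M=2^{d}-1\),

$$\begin{aligned} r(x)=\sum \limits _{k\in \varLambda _{j_{0}}}\alpha _{j_{0},k}\varphi _{j_{0},k}(x)+\sum \limits _{j=j_{0}}^{\infty }\sum \limits _{\ell =1}^{M}\sum \limits _{k\in \varLambda _{j}}\beta _{j,k}^{\ell }\psi _{j,k}^{\ell }(x), \end{aligned}$$

where \(\varLambda _{j_{0}}=\{1-2N, 2-2N, \ldots , 2^{j_{0}}\}^{d}, ~\varLambda _{j}=\{-N, -N+1, \ldots , 2^{j}+N-1\}^{d}\) and

$$\begin{aligned} \alpha _{j_{0},k}=\int _{[0,1]^{d}}r(x)\varphi _{j_{0},k}(x)dx,\quad \beta _{j,k}^{\ell }=\int _{[0,1]^{d}}r(x)\psi _{j,k}^{\ell }(x)dx. \end{aligned}$$

We introduce

$$\begin{aligned} \widehat{\mu }_{n}=\left[ \frac{1}{n}\sum \limits _{i=1}^{n}\frac{1}{\omega (X_{i},Y_{i})}\right] ^{-1} \end{aligned}$$(4)

and

$$\begin{aligned} \widehat{\alpha }_{j_{0},k}=\frac{\widehat{\mu }_{n}}{n}\sum \limits _{i=1}^{n}\frac{\rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\varphi _{j_{0},k}(X_{i}), \end{aligned}$$(5)

$$\begin{aligned} \widehat{\beta }_{j,k}^{\ell }=\frac{\widehat{\mu }_{n}}{n}\sum \limits _{i=1}^{n}\frac{\rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi _{j,k}^{\ell }(X_{i}). \end{aligned}$$(6)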

By H1–H3, the quantities in (4)–(6) are all well defined. When \(\rho (Y)=Y\), \(\widehat{\mu }_{n}\) and \(\widehat{\alpha }_{j_{0},k}\) in (4) and (5) are the same as those of Chaubey et al. (2013). If \(d=1\) and \(h(x)=I_{[0, 1]}(x)\), then \(\widehat{\mu }_{n}\), \(\widehat{\alpha }_{j_{0},k}\) and \(\widehat{\beta }_{j,k}^{\ell }\) in (4)–(6) reduce completely to those in Chaubey and Shirazi (2015).
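To make the empirical quantities concrete, here is a minimal sketch of how \(\widehat{\mu }_{n}\) and one coefficient \(\widehat{\alpha }_{j_{0},k}\) could be computed from a sample. It assumes the forms \(\widehat{\mu }_{n}=\big [\frac{1}{n}\sum _{i}\omega (X_{i},Y_{i})^{-1}\big ]^{-1}\) and \(\widehat{\alpha }_{j_{0},k}=\frac{\widehat{\mu }_{n}}{n}\sum _{i}\frac{\rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\varphi _{j_{0},k}(X_{i})\), which are the forms consistent with the decomposition used in the proof of Proposition 2.2. For brevity it takes \(d=1\) and replaces the Daubechies scaling function by the Haar one; the function names and the toy data are hypothetical.

```python
import math

def haar_phi_jk(x, j, k):
    """Haar stand-in for the scaling function: 2^{j/2} * 1_{[0,1)}(2^j x - k)."""
    u = (2 ** j) * x - k
    return 2 ** (j / 2) if 0.0 <= u < 1.0 else 0.0

def mu_hat(xs, ys, omega):
    """Reciprocal of the sample mean of 1 / omega(X_i, Y_i)."""
    return len(xs) / sum(1.0 / omega(x, y) for x, y in zip(xs, ys))

def alpha_hat(xs, ys, j, k, omega, h, rho):
    """Empirical scaling coefficient at level j and location k (d = 1)."""
    weighted_sum = sum(
        rho(y) * haar_phi_jk(x, j, k) / (omega(x, y) * h(x))
        for x, y in zip(xs, ys)
    )
    return mu_hat(xs, ys, omega) * weighted_sum / len(xs)

# Toy inputs (hypothetical): constant weight, uniform design density, rho(y) = y.
xs = [0.1, 0.3, 0.6, 0.9]
ys = [1.0, 2.0, 3.0, 4.0]
omega = lambda x, y: 2.0
h = lambda x: 1.0
rho = lambda y: y

print(mu_hat(xs, ys, omega))
print(alpha_hat(xs, ys, 1, 0, omega, h, rho))
```

The wavelet coefficients \(\widehat{\beta }_{j,k}^{\ell }\) would follow the same pattern with \(\psi _{j,k}^{\ell }\) in place of \(\varphi _{j_{0},k}\).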

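We define our linear wavelet estimator

$$\begin{aligned} \widehat{r}^{lin}_{n}(x):=\sum \limits _{k\in \varLambda _{j_{0}}}\widehat{\alpha }_{j_{0},k}\varphi _{j_{0},k}(x) \end{aligned}$$(7)

and the nonlinear wavelet estimator

$$\begin{aligned} \widehat{r}^{non}_{n}(x):=\widehat{r}^{lin}_{n}(x)+\sum \limits _{j=j_{0}}^{j_{1}}\sum \limits _{\ell =1}^{M}\sum \limits _{k\in \varLambda _{j}}\widehat{\beta }_{j,k}^{\ell }I_{\{|\widehat{\beta }_{j,k}^{\ell }|\ge \kappa t_{n}\}}\psi _{j,k}^{\ell }(x), \end{aligned}$$(8)

where \(t_{n}\), \(j_{0}\) and \(j_{1}\) are specified in the Main Theorem, while the constant \(\kappa \) will be chosen in the proof of the theorem.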
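The nonlinear estimator differs from the linear one only through hard thresholding: a detail coefficient is kept when \(|\widehat{\beta }_{j,k}^{\ell }|\ge \kappa t_{n}\) and set to zero otherwise. The following sketch shows that rule together with the level-dependent threshold \(t_{n}=\big [I_{\{1\le p\le 2\}}+2^{\frac{jd}{2}}I_{\{p>2\}}\big ]\sqrt{\frac{\ln n}{n}}\) stated in the Main Theorem. The function names and the value of \(\kappa \) used below are hypothetical; the paper fixes \(\kappa \) only inside the proof.

```python
import math

def threshold_level(n, j, d, p):
    """t_n = [1{1 <= p <= 2} + 2^{jd/2} 1{p > 2}] * sqrt(ln n / n)."""
    scale = 1.0 if p <= 2 else 2 ** (j * d / 2)
    return scale * math.sqrt(math.log(n) / n)

def hard_threshold(beta_hat, kappa, t_n):
    """Return beta_hat * 1{|beta_hat| >= kappa * t_n}."""
    return beta_hat if abs(beta_hat) >= kappa * t_n else 0.0

n, d = 1000, 1
kappa = 1.0  # placeholder value, not the constant chosen in the proof
for p in (2, 3):
    t_n = threshold_level(n, j=2, d=d, p=p)
    kept = [hard_threshold(b, kappa, t_n) for b in (0.5, 0.1, -0.01)]
    print(p, t_n, kept)
```

For \(p>2\) the threshold grows with the level j, so fine-scale coefficients must be larger to survive.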

Compared with the wavelet estimators in Chaubey et al. (2013) and Chaubey and Shirazi (2015), we use a wavelet basis on the whole space instead of wavelets on an interval. In the latter case, boundary elements need to be treated appropriately.

The following notations are needed to state our main theorem: For \(H>0\),

$$\begin{aligned} B^{s}_{p,q}(H):=\left\{ f\in B^{s}_{p,q}({\mathbb {R}}^{d}),~\Vert f\Vert _{B^{s}_{p,q}}\le H\right\} \end{aligned}$$

and \(x_{+}:=\max \{x,0\}.\) In addition, \(A\lesssim B\) denotes \(A\le cB\) for some constant \(c>0\); \(A\gtrsim B\) means \(B\lesssim A\); \(A\thicksim B\) stands for both \(A\lesssim B\) and \(B\lesssim A\). The indicator function on a set G is denoted by \(I_{G}\) as usual.

Main Theorem

Consider the problem defined by (2) and (3) under the assumptions H1–H5. Let \(r\in B^{s}_{\widetilde{p},q}(H), \widetilde{p},q\in [1,\infty ),~s>0\), \(\mathrm{supp}~r\subseteq [0,1]^{d}\) and either \(\widetilde{p}\ge p\) or \(\widetilde{p}\le p<\infty \) and \(s>\frac{d}{\widetilde{p}}\). Then for \(1\le p<+\infty \), the linear wavelet estimator \(\widehat{r}^{lin}_{n}\) defined in (7) with \(2^{j_{0}}\thicksim n^{\frac{1}{2s'+d+dI_{\{p>2\}}}}\) and \(s'=s-d(\frac{1}{\widetilde{p}}-\frac{1}{p})_{+}\) satisfies

The nonlinear estimator in (8) with \(2^{j_{0}}\sim n^{\frac{1}{2m+d+dI_{\{p>2\}}}}~(m>s)\), \(2^{j_{1}}\sim (\frac{n}{(\ln n)^{3}})^{\frac{1}{d}}\) and \(t_{n}=\left[ I_{\{1\le p\le 2\}}+2^{\frac{jd}{2}}I_{\{p>2\}}\right] \sqrt{\frac{\ln n}{n}}\) satisfies

where

Remark 1

When \(p=2\), (9a) reduces to Theorem 4.1 of Chaubey et al. (2013); if \(p=2\) and \(d=1\), (9b) becomes Theorem 5.1 in Chaubey and Shirazi (2015) up to a \(\ln n\) factor.

In contrast to the linear wavelet estimator \(\widehat{r}^{lin}_{n}\), the nonlinear estimator \(\widehat{r}^{non}_{n}\) is adaptive, which means that neither \(j_{0}\) nor \(j_{1}\) depends on s, \(\widetilde{p}\) or q. On the other hand, the convergence rate of the nonlinear estimator remains the same as that of the linear estimator up to \((\ln n)^{\frac{3p}{2}}\), when \(\widetilde{p}\ge p\). However, it gets better for \(\widetilde{p}<p\). The same situation happens in the i.i.d. case.
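The comparison between the two estimators can be made numerically. The sketch below evaluates the linear exponent \(\frac{s'}{2s'+d+dI_{\{p>2\}}}\) with \(s'=s-d(\frac{1}{\widetilde{p}}-\frac{1}{p})_{+}\), and the nonlinear exponent \(\alpha \) in the two-case form used in the proof of the Main Theorem: \(\alpha =\frac{s}{2s+d+dI_{\{p>2\}}}\) when \(\widetilde{p}>\frac{p(d+dI_{\{p>2\}})}{2s+d+dI_{\{p>2\}}}\), and \(\alpha =\frac{s-\frac{d}{\widetilde{p}}+\frac{d}{p}}{2(s-\frac{d}{\widetilde{p}})+d+dI_{\{p>2\}}}\) otherwise. Logarithmic factors are ignored, the function names are hypothetical, and the parameter values are only illustrative choices satisfying \(s>\frac{d}{\widetilde{p}}\).

```python
def dimension_term(d, p):
    """d + d * 1{p > 2}, the term that distinguishes p <= 2 from p > 2."""
    return d + (d if p > 2 else 0)

def linear_exponent(s, p_tilde, p, d):
    """Exponent s' / (2 s' + d + d 1{p > 2}) of the linear estimator."""
    s_prime = s - d * max(1.0 / p_tilde - 1.0 / p, 0.0)
    return s_prime / (2 * s_prime + dimension_term(d, p))

def nonlinear_exponent(s, p_tilde, p, d):
    """Exponent alpha of the nonlinear estimator in its two-case form."""
    c = dimension_term(d, p)
    if p_tilde > p * c / (2 * s + c):
        return s / (2 * s + c)
    shifted = s - d / p_tilde
    return (shifted + d / p) / (2 * shifted + c)

# Illustrative parameters (s, p_tilde, p, d), each with s > d / p_tilde.
for params in [(2.0, 1.0, 2.0, 1), (1.5, 1.0, 4.0, 1), (2.0, 3.0, 2.0, 1)]:
    print(params, linear_exponent(*params), nonlinear_exponent(*params))
```

In the first two parameter sets \(\widetilde{p}<p\) and the nonlinear exponent is strictly larger; in the third \(\widetilde{p}\ge p\) and the two exponents coincide.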

Remark 2

Compared with the estimation for i.i.d. data in Kou and Liu (2017), the convergence rate in the Main Theorem remains the same (up to a \(\ln n\) factor) when \(p\in [1,2]\). However, it becomes worse for \(p>2\). This exhibits a major difference between those two types of data.

For the i.i.d. case, a lower bound estimation under \(L^{p}\) risk is provided by Kou and Liu (2016). It is a challenging problem for strong mixing data.

Remark 3

From (9a)–(9c) in our Main Theorem, we find that the exponents of the convergence rates tend to zero when the dimension d gets very large (curse of dimensionality). In fact, the same situation happens in the classical i.i.d. case (Delyon and Juditsky 1996; Kou and Liu 2017). To reduce the influence of the dimension d on the accuracy of estimation, a known method is to assume some independence structure of the samples (Rebelles 2015a, b; Lepski 2013). Since strong mixing data are much more complicated than i.i.d. data, it would be a challenging problem to do the same in our setting. We shall study it in the future.
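A rough way to see the effect of the dimension is to ask how large n must be for \(n^{-\alpha }\) to fall below a target accuracy. The sketch below uses the most favourable exponent \(\alpha =\frac{s}{2s+d+dI_{\{p>2\}}}\) and drops all constants and logarithmic factors, so the numbers indicate orders of magnitude only. The function name and the chosen accuracy are hypothetical.

```python
def sample_size_for_accuracy(eps, s, d, p):
    """Solve n^(-alpha) = eps for n, with alpha = s / (2s + d + d 1{p > 2})."""
    c = d + (d if p > 2 else 0)
    return eps ** (-(2 * s + c) / s)

# Required n for accuracy 0.1 with smoothness s = 2, for growing dimension d.
for d in (1, 2, 5, 10):
    print(d, sample_size_for_accuracy(0.1, 2.0, d, p=2),
          sample_size_for_accuracy(0.1, 2.0, d, p=3))
```

The required sample size grows exponentially in d, and faster for \(p>2\) than for \(p\le 2\) because of the extra term \(dI_{\{p>2\}}\).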

2 Three propositions

In this section, we provide three propositions for the proof of the Main Theorem which is given in Sect. 3. Clearly, \(\mu :=E\omega (U,V)>0\) under the condition H2. Moreover, the following simple (but important) lemma holds.

Lemma 2.1

For the problem defined in (2) and (3) and \(\widehat{\mu }_{n}\) given by (4),

where \(\alpha _{j_{0},k}=\int _{[0, 1]^{d}}r(x)\varphi _{j_{0},k}(x)dx\) and \(\beta _{j,k}^{\ell }=\int _{[0, 1]^{d}}r(x)\psi _{j,k}^{\ell }(x)dx~(\ell =1,2,\ldots , M).\)

Proof

One includes a simple proof for completeness, although it is essentially the same as that of Chaubey et al. (2013). By (4),

This with (3) leads to

which concludes (10a). Using (3) and (2), one knows that

This completes the proof of (10b). Similar arguments show (10c). \(\square \)

To establish the next two propositions, we need an important lemma.

Lemma 2.2

(Davydov 1970) Let \(\{X_{i}\}_{i\in {\mathbb {Z}}}\) be strong mixing with the mixing coefficient \(\alpha (k)\), f and g be two measurable functions. If \(E|f(X_{1})|^{p}\) and \(E|g(X_{1})|^{q}\) exist for \(p, q>0\) and \(\frac{1}{p}+\frac{1}{q}<1\), then there exists a constant \(c>0\) such that

Proposition 2.1

Let \((X_{i}, Y_{i})~(i=1, 2, \ldots , n)\) be strong mixing, H1–H5 hold and \(2^{jd}\le n\). Then

Proof

Note that Condition H2 implies \(\mathrm{var} \left( \frac{1}{\omega (X_{i},Y_{i})}\right) \le E\left( \frac{1}{\omega (X_{i},Y_{i})}\right) ^{2}\lesssim 1\) and

Then it suffices to show

for the first inequality of (11). By the strict stationarity of \((X_{i}, Y_{i})\),

On the other hand, Lemma 2.2 and H2 show that

These with H4 give the desired conclusion (12),

To prove the second inequality of (11), one observes

By (3) and H1–H3, the first term of the above inequality is bounded by

It remains to show

where the assumption \(2^{jd}\le n\) is needed.

According to H5 and H1–H3,

Hence,

On the other hand, Lemma 2.2, H1–H3 and the arguments before (13) tell that

Moreover, since \(2^{jd}\le m\) in the range of summation, \( \sum \nolimits _{m=2^{jd}}^{n}\left| \mathrm{cov} \left( \frac{\rho (Y_{1})\psi ^{\ell }_{j,k}(X_{1})}{\omega (X_{1},Y_{1})h(X_{1})},\frac{\rho (Y_{m+1})\psi ^{\ell }_{j,k}(X_{m+1})}{\omega (X_{m+1},Y_{m+1})h(X_{m+1})}\right) \right| \lesssim \sum \nolimits _{m=2^{jd}}^{n}\sqrt{\alpha (m)}~2^{\frac{jd}{2}} \lesssim \sum \nolimits _{m=1}^{n}\sqrt{m\alpha (m)}\le \sum \nolimits _{m=1}^{+\infty }m^{\frac{1}{2}}\sqrt{\gamma }e^{-\frac{cm}{2}}<+\infty .\) This with (14) shows (13). \(\square \)

To estimate \(E\Big |\widehat{\alpha }_{j_{0},k}-\alpha _{j_{0},k}\Big |^{p}\) and \(E\Big |\widehat{\beta }^{\ell }_{j,k}-\beta ^{\ell }_{j,k}\Big |^{p}\), we introduce a moment bound, which can be found in Yokoyama (1980), Kim (1993) and Shao and Yu (1996).

Lemma 2.3

Let \(\{X_{i}, i=1, 2, \ldots , n\}\) be a strong mixing sequence of random variables with the mixing coefficients \(\alpha (n)\le c n^{-\theta }~(c>0, \theta >0)\). If \(EX_{i}=0\), \(\Vert X_{i}\Vert _{\eta }:=(E|X_{i}|^{\eta })^{\frac{1}{\eta }}<\infty \), \(2<p<\eta <+\infty \) and \(\theta >\frac{p~\eta }{2(\eta -p)}\), then there exists \(K=K(p, \eta , \theta )<\infty \) such that

Proposition 2.2

Let \(r\in B^{s}_{\widetilde{p},q}(H) ~(\widetilde{p},q\in [1,\infty ),~s>0)\) and \(\widehat{\alpha }_{j_{0},k}, \widehat{\beta }^{\ell }_{j,k}\) be defined by (5) and (6). If H1–H5 hold, then

Proof

One proves (15b) only; the proof of (15a) is similar. By the definition of \(\widehat{\beta }^{\ell }_{j,k}\),

and \(E\left| \widehat{\beta }^{\ell }_{j,k}-\beta ^{\ell }_{j,k}\right| ^{p}\lesssim E\left| \frac{\widehat{\mu }_{n}}{\mu } \left[ \frac{\mu }{n}\sum \nolimits _{i=1}^{n}\frac{\rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi ^{\ell }_{j,k}(X_{i})-\beta ^{\ell }_{j,k}\right] \right| ^{p} +E\left| \beta ^{\ell }_{j,k}\widehat{\mu }_{n} \left( \frac{1}{\mu }-\frac{1}{\widehat{\mu }_{n}}\right) \right| ^{p}.\) Since Condition H3 implies the boundedness of r, \(\left| \beta ^{\ell }_{j,k}\right| :=\left| \int _{[0,1]^{d}}r(x)\psi ^{\ell }_{j,k}(x)dx\right| \lesssim 1\) thanks to Hölder inequality and orthonormality of \(\{\psi ^{\ell }_{j,k}\}\). On the other hand, \(\left| \frac{\widehat{\mu }_{n}}{\mu }\right| \lesssim 1\) and \(|\widehat{\mu }_{n}|\lesssim 1\) because of H2. Hence,

When \(p=2\), it is easy to see from Lemma 2.1 and Proposition 2.1 that \(E\left| \widehat{\beta }^{\ell }_{j,k}-\beta ^{\ell }_{j,k}\right| ^{2}\lesssim \mathrm{var} \left[ \frac{1}{n}\sum \nolimits _{i=1}^{n}\frac{\rho (Y_{i})\psi ^{\ell }_{j,k}(X_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\right] +\mathrm{var} \left[ \frac{1}{n}\sum \nolimits _{i=1}^{n}\frac{1}{\omega (X_{i},Y_{i})}\right] \lesssim \frac{1}{n}\). This with Jensen’s inequality shows \(E\left| \widehat{\beta }^{\ell }_{j,k}-\beta ^{\ell }_{j,k} \right| ^{p}\lesssim n^{-\frac{p}{2}}\) for \(1\le p\le 2\).
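The Jensen step can be spelled out as follows. For \(1\le p\le 2\) the map \(t\mapsto t^{p/2}\) is concave on \([0,\infty )\), so the \(p=2\) bound transfers to smaller p. Here Z is shorthand (introduced only for this display) for \(\widehat{\beta }^{\ell }_{j,k}-\beta ^{\ell }_{j,k}\).

```latex
\begin{aligned}
E|Z|^{p}
  = E\big[(|Z|^{2})^{\frac{p}{2}}\big]
  \le \big(E|Z|^{2}\big)^{\frac{p}{2}}
  \lesssim \Big(\frac{1}{n}\Big)^{\frac{p}{2}}
  = n^{-\frac{p}{2}},
  \qquad 1\le p\le 2 .
\end{aligned}
```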

It remains to show (15b) true for \(p>2\). By the definition of \(\widehat{\mu }_{n}\),

Let \(\eta _{i}:=\frac{1}{\omega (X_{i},Y_{i})}-\frac{1}{\mu }\). Then \(E(\eta _{i})=0\) thanks to (10a). Furthermore, \(\eta _{1}, \ldots , \eta _{n}\) are strong mixing by the same property of \(\{(X_{i}, Y_{i})\}\) and the Borel measurability of the function \(\frac{1}{\omega (x,y)}-\frac{1}{\mu }\) (Guo 2016). On the other hand, Condition H2 implies \(|\eta _{i}|\lesssim 1\) and \(\Vert \eta _{i}\Vert _{\eta }^{p}\lesssim 1\). By Condition H4, \(\theta \) in Lemma 2.3 can be taken large enough so that \(\theta >\frac{p\eta }{2(\eta -p)}\) for fixed p and \(\eta \) with \(2<p<\eta <+\infty \). Then it follows from Lemma 2.3 and (17) that

Finally, one needs only to show

Define \(\xi _{i}:=\frac{\mu \rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi ^{\ell }_{j,k}(X_{i})-\beta ^{\ell }_{j,k}\). Then similar arguments to \(\eta _{i}\) conclude that \(E(\xi _{i})=0\), \(Q_{n}=\frac{1}{n^{p}}E|\sum \nolimits _{i=1}^{n}\xi _{i}|^{p}\) and \(\xi _{1}, \ldots , \xi _{n}\) are strong mixing with the mixing coefficients \(\alpha (k)\le \gamma e^{-ck}\). According to H1–H3 and \(\left| \psi ^{\ell }(x)\right| \lesssim 1\),

This with \(\beta ^{\ell }_{j,k}=E\left[ \frac{\mu \rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi ^{\ell }_{j,k}(X_{i})\right] \) leads to \(E|\xi _{i}|^{\eta }\lesssim 2^{\frac{jd\eta }{2}}\) and \(\Vert \xi _{i}\Vert _{\eta }^{p}\lesssim 2^{\frac{jdp}{2}}\). Using Lemma 2.3 again, one obtains the desired conclusion (18). \(\square \)

To prove the last proposition in this section, we need the following Bernstein-type inequality (Liebscher 1996, 2001; Rio 1995).

Lemma 2.4

Let \((X_{i})_{i\in {\mathbb {Z}}}\) be a strong mixing process with mixing coefficient \(\alpha (k)\), \(EX_{i}=0\), \(|X_{i}|\le M<\infty \) and \(D_{m}=\max \limits _{1\le j\le 2m}\mathrm{var} \left( \sum \nolimits _{i=1}^{j}X_{i}\right) \). Then for \(\varepsilon >0\) and \( ~n,m\in \mathbb {N}\) with \(0<m\le \frac{n}{2}\),

From the next proposition, we realize the reason for choosing \(2^{j_{1}}\sim \Big (\frac{n}{(\ln n)^{3}}\Big )^{\frac{1}{d}}\) in our Main Theorem. The classical choice is \(2^{j_{1}}\sim \Big (\frac{n}{\ln n}\Big )^{\frac{1}{d}}\), see Chesneau and Shirazi (2014).
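To get a feeling for how much the extra logarithmic powers cost, one can compare the largest resolution level allowed by \(2^{jd}\le \frac{n}{(\ln n)^{3}}\) with the one allowed by the classical constraint \(2^{jd}\le \frac{n}{\ln n}\). The sketch below is only an illustration; the function name is hypothetical and the relation \(\sim \) in the Main Theorem is interpreted here as "largest integer level satisfying the bound".

```python
import math

def max_level(n, d, log_power):
    """Largest integer j >= 0 with 2^{jd} <= n / (ln n)^log_power.

    Assumes the bound n / (ln n)^log_power is at least 1; otherwise 0 is returned.
    """
    bound = n / math.log(n) ** log_power
    j = 0
    while 2 ** ((j + 1) * d) <= bound:
        j += 1
    return j

for n in (10 ** 4, 10 ** 6, 10 ** 8):
    for d in (1, 2):
        print(n, d, max_level(n, d, log_power=3), max_level(n, d, log_power=1))
```

For moderate sample sizes the cubic logarithm removes a substantial number of fine levels, which is the price paid for the Bernstein-type inequality under strong mixing.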

Proposition 2.3

Let \(r\in B^{s}_{\widetilde{p},q}(H) ~(\widetilde{p},q\in [1,\infty ),~s>0)\), \(\widehat{\beta }^{\ell }_{j,k}\) be defined in (6) and \(t_{n}=\sqrt{\frac{\ln n}{n}}\). If H1–H5 hold and \(2^{jd}\le \frac{n}{(\ln n)^{3}}\), then for \(w>0\), there exists a constant \(\kappa >1\) such that

Proof

According to the arguments of (16), \(\left| \widehat{\beta }_{j,k}^{\ell }-\beta _{j,k}^{\ell }\right| \lesssim \frac{1}{n} \left| \sum \nolimits _{i=1}^{n} \left[ \frac{1}{\omega (X_{i},Y_{i})}-\frac{1}{\mu } \right] \right| + \left| \frac{1}{n}\sum \nolimits _{i=1}^{n}\frac{\mu \rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi _{j,k}^{\ell }(X_{i})-\beta _{j,k}^{\ell }\right| .\) Hence, it suffices to prove

One shows the second inequality only, because the first one is similar and even simpler.

Define \(\xi _{i}:=\frac{\mu \rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi _{j,k}^{\ell }(X_{i})-\beta _{j,k}^{\ell }\). Then \(E(\xi _{i})=0\) thanks to (10c), and \(\xi _{1}, \ldots , \xi _{n}\) are strong mixing with the mixing coefficients \(\alpha (k)\le \gamma e^{-ck}\) because of Condition H4. By H1–H3, \(\left| \frac{\mu \rho (Y_{i})}{\omega (X_{i},Y_{i})h(X_{i})}\psi _{j,k}^{\ell }(X_{i})\right| \lesssim 2^{\frac{jd}{2}}\) and

According to Proposition 2.1, \(D_{m}=\max \limits _{1\le j\le 2m}\mathrm{var} \left( \sum \nolimits _{i=1}^{j}\xi _{i}\right) \lesssim m\). Then it follows from Lemma 2.4 with \(m=u\ln n\) (the constant u will be chosen later on) that

Clearly, \(64~\frac{2^{\frac{jd}{2}}}{\kappa ~n~t_{n}}n\gamma e^{-cm}\lesssim n e^{-cu\ln n}\) holds due to \(t_{n}=\sqrt{\frac{\ln n}{n}}\), \(2^{jd}\le \frac{n}{(\ln n)^{3}}\) and \(m=u\ln n\). Choose u such that \(1-cu<-\frac{w}{d}\), then the second term of (20) is bounded by \(2^{-wj}\). On the other hand, the first one of (20) has the following upper bound

thanks to \(D_{m}\lesssim m\), \(2^{jd}\le \frac{n}{(\ln n)^{3}}\) and \(m=u\ln n\). For this estimation, \(2^{jd}\le \frac{n}{(\ln n)^{3}}\) is essential; one cannot replace that condition by \(2^{jd}\le \frac{n}{\ln n}\). Obviously, there exists sufficiently large \(\kappa >1\) such that \(\exp \left\{ -\frac{\kappa ^{2}\ln n}{64} \left( 1+\frac{1}{6}\kappa u\right) ^{-1}\right\} \lesssim 2^{-wj}\). Finally, the desired conclusion (19) follows. \(\square \)
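The role of the choice of u can be written out in one line. With \(m=u\ln n\) and \(1-cu<-\frac{w}{d}\), and using \(2^{jd}\le n\) (which follows from \(2^{jd}\le \frac{n}{(\ln n)^{3}}\) once \(\ln n\ge 1\)), one has:

```latex
\begin{aligned}
n\,e^{-cu\ln n} = n^{1-cu} \le n^{-\frac{w}{d}}
  = \big(n^{\frac{1}{d}}\big)^{-w} \le (2^{j})^{-w} = 2^{-wj}.
\end{aligned}
```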

3 Proof of main theorem

This section proves the Main Theorem. We rewrite it as Theorem 3.1. The main idea of the proof comes from Donoho et al. (1996). When \(p=2\), the corresponding estimates seem easier (see Chaubey et al. 2013; Chaubey and Shirazi 2015), because \(L^{2}({\mathbb {R}}^{d})\) is a Hilbert space.

Theorem 3.1

Consider the problem defined by (2) and (3) with the assumptions H1–H5. Let \(r\in B^{s}_{\widetilde{p},q}(H)~(\widetilde{p},q\in [1,\infty ),~s>0)\), \(\mathrm{supp}~r\subseteq [0,1]^{d}\) and either \(\widetilde{p}\ge p\) or \(\widetilde{p}\le p<\infty \) and \(s>\frac{d}{\widetilde{p}}\). Then for \(1\le p<+\infty \), the linear wavelet estimator \(\widehat{r}^{lin}_{n}\) defined in (7) with \(2^{j_{0}}\thicksim n^{\frac{1}{2s'+d+dI_{\{p>2\}}}}\) and \(s'=s-d\left( \frac{1}{\widetilde{p}}-\frac{1}{p}\right) _{+}\) satisfies

The nonlinear one in (8) with \(2^{j_{0}}\sim n^{\frac{1}{2m+d+dI_{\{p>2\}}}}~(m>s)\), \(2^{j_{1}}\sim \Big (\frac{n}{(\ln n)^{3}}\Big )^{\frac{1}{d}}\) and \(t_{n}=\left[ I_{\{1\le p\le 2\}}+2^{\frac{jd}{2}}I_{\{p>2\}}\right] \sqrt{\frac{\ln n}{n}}\) satisfies

where

Proof

When \(\widetilde{p}\le p\) and \(s>\frac{d}{\widetilde{p}}, ~s'-\frac{d}{p}=s-\frac{d}{\widetilde{p}}>0\). By the equivalence of (1) and (3) in Lemma 1.1, \(B^{s}_{\widetilde{p},q}(H)\subseteq B^{s'}_{p,q}(H')\) for some \(H'>0\). Then \(r\in B^{s'}_{p, q}(H')\) and \(\Vert P_{j_{0}}r-r\Vert _{p}=\Vert \sum \nolimits _{j=j_{0}}^{\infty }(P_{j+1}r-P_{j}r)\Vert _{p}\lesssim \sum \nolimits _{j=j_{0}}^{\infty }\Vert P_{j+1}r-P_{j}r\Vert _{p}\lesssim \sum \nolimits _{j=j_{0}}^{\infty }2^{-js'}\lesssim 2^{-j_{0}s'}\) thanks to (2) of Lemma 1.1. Moreover,

It is easy to see from Lemma 1.2 that

According to Proposition 2.2 and \(|\varLambda _{j_{0}}|\thicksim 2^{j_{0}d}\),

This with (22) shows that \(E\int _{[0,1]^{d}}\big |\widehat{r}^{lin}_{n}(x)-r(x)\big |^{p}dx\le E\int _{{\mathbb {R}}^{d}}\big |\widehat{r}^{lin}_{n}(x)-r(x)\big |^{p}dx \lesssim E\big \Vert \widehat{r}^{lin}_{n}-P_{j_{0}}r\big \Vert _{p}^{p}+\big \Vert P_{j_{0}}r-r\big \Vert _{p}^{p} \lesssim 2^{-j_{0}s'p}+2^{\frac{j_{0}dp}{2}}\big [I_{\{1\le p\le 2\}}+2^{\frac{j_{0}dp}{2}}I_{\{p>2\}}\big ] n^{-\frac{p}{2}}.\) To get a balance, one chooses \(2^{j_{0}}\thicksim n^{\frac{1}{2s'+d+dI_{\{p>2\}}}}\). Then

which is the desired conclusion (21a) for \(\widetilde{p}\le p\) and \(s>\frac{d}{\widetilde{p}}\).
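The balancing step behind the choice of \(j_{0}\) can be made explicit by equating the bias term \(2^{-j_{0}s'p}\) with the stochastic term in each regime:

```latex
\begin{aligned}
1\le p\le 2:&\quad 2^{-j_{0}s'p}=2^{\frac{j_{0}dp}{2}}n^{-\frac{p}{2}}
  \;\Longleftrightarrow\; 2^{j_{0}(2s'+d)}=n,\\
p>2:&\quad 2^{-j_{0}s'p}=2^{j_{0}dp}\,n^{-\frac{p}{2}}
  \;\Longleftrightarrow\; 2^{j_{0}(2s'+2d)}=n .
\end{aligned}
```

Both cases are covered by \(2^{j_{0}}\thicksim n^{\frac{1}{2s'+d+dI_{\{p>2\}}}}\), and with this choice each term is of order \(n^{-\frac{s'p}{2s'+d+dI_{\{p>2\}}}}\).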

From the above arguments, one finds that when \(p=\widetilde{p}\), \(s'=s>0\) and the inequality (24) still holds without the assumption \(s>\frac{d}{\widetilde{p}}\). It remains to conclude (21a) for \(\widetilde{p}>p\ge 1\). By Hölder inequality,

Using Jensen inequality and (24) with \(p=\widetilde{p}\), one gets

This completes the proof of (21a).

Similar to the arguments of (21a), it suffices to prove (21b) for \(\widetilde{p}\le p\) and \(s>\frac{d}{\widetilde{p}}.\) In this case, (21c) can be rewritten as

By the definitions of \(\widehat{r}^{lin}_{n}\) and \(\widehat{r}^{non}_{n}\), \(\widehat{r}^{non}_{n}(x)-r(x)=\Big [\widehat{r}^{lin}_{n}(x)-P_{j_{0}}r(x)\Big ]-\Big [r(x)-P_{j_{1}+1}r(x)\Big ] +\sum \limits _{j=j_{0}}^{j_{1}} \sum \limits _{\ell =1}^{M}\sum \limits _{k\in \varLambda _{j}}\Big [\widehat{\beta }_{j,k}^{\ell }I_{\{|\widehat{\beta }_{j,k}^{\ell }|\ge \kappa t_{n}\}}-\beta _{j,k}^{\ell }\Big ]\psi _{j,k}^{\ell }(x). \) Hence,

where \(I_{1}:=E\Big \Vert \widehat{r}^{lin}_{n}-P_{j_{0}}r\Big \Vert ^{p}_{p},~~I_{2}:=\Big \Vert r-P_{j_{1}+1}r\Big \Vert ^{p}_{p}\) and

According to (23), \(2^{j_{0}}\sim n^{\frac{1}{2m+d+dI_{\{p>2\}}}}~(m>s)\) and the definition of \(\alpha \) in (21c),

The same arguments as for (22) show \(\big \Vert P_{j_{1}+1}r-r\big \Vert _{p}^{p}\lesssim 2^{-j_{1}s'p}\). On the other hand, \(\frac{s'}{d}=\frac{s}{d}-\frac{1}{\widetilde{p}}+\frac{1}{p} \ge \alpha \) thanks to \(\widetilde{p}\le p\) and \(s>\frac{d}{\widetilde{p}}\). Then it follows from \(2^{j_{1}}\sim \big (\frac{n}{(\ln n)^{3}}\big )^{\frac{1}{d}}\) and \(0<\alpha <\frac{1}{2}\) that

The main work for the proof of (21b) is to show

Note that Lemma 1.2 tells

Then the classical technique (see Donoho et al. 1996) gives

where

For \(Z_{1}\), one observes that

thanks to Hölder inequality. By Proposition 2.3,

On the other hand, Proposition 2.2 implies \(E\Big |\widehat{\beta }_{j,k}^{\ell }-\beta _{j,k}^{\ell }\Big |^{p}\lesssim 2^{\frac{jdp}{2}}n^{-\frac{p}{2}}\) for \(1\le p<+\infty \). Therefore,

Then for \(w>2pd\) in Proposition 2.3, \(Z_{1}\lesssim \sum \nolimits _{j=j_{0}}^{j_{1}}2^{pd(\frac{j}{2}-\frac{j}{p})}2^{jd}2^{\frac{jdp}{2}} n^{-\frac{p}{2}}~2^{-\frac{wj}{2}}\lesssim \big (\frac{1}{n}\big )^{\frac{p}{2}}2^{-j_{0}(\frac{w}{2}-pd)}\lesssim \big (\frac{1}{n}\big )^{\frac{p}{2}}\big (\frac{1}{n}\big )^{\frac{\frac{w}{2}-pd}{2m+d+dI_{\{p>2\}}}}\le \big (\frac{1}{n}\big )^{\alpha p} \), where one uses \(\alpha <\frac{1}{2}\) and the choice \(2^{j_{0}}\sim n^{\frac{1}{2m+d+dI_{\{p>2\}}}}~(m>s)\). Hence,

To estimate \(Z_{2}\), one rewrites

with the integer \(j_{0}^{*}\in [j_{0}, j_{1}]\) being specified later on. By Proposition 2.2,

On the other hand, it follows from Proposition 2.2, Lemma 1.1(3) and \(t_{n}=\left[ I_{\{1\le p\le 2\}}+2^{\frac{jd}{2}}I_{\{p>2\}}\right] \sqrt{\frac{\ln n}{n}}\) that

Define

Then for \(\varepsilon >0\), \(Z_{22}\lesssim (\ln n)^{-\frac{\widetilde{p}}{2}}(\frac{1}{n})^{\frac{p-\widetilde{p}}{2}}\sum \nolimits _{j=j_{0}^{*}+1}^{j_{1}}2^{-j\varepsilon }\lesssim (\ln n)^{-\frac{\widetilde{p}}{2}}(\frac{1}{n})^{\frac{p-\widetilde{p}}{2}}2^{-j_{0}^{*}\varepsilon }.\) To balance this with (29), one takes

Note that \(0<\alpha \le \frac{s}{2s+d+dI_{\{p>2\}}}<\frac{1}{2}\) and \(2^{j_{0}}\thicksim n^{\frac{1}{2m+d+dI_{\{p>2\}}}}(m>s)\). Then \(2^{j_{0}^{*}}\le 2^{j_{1}}\thicksim \big (\frac{n}{(\ln n)^{3}}\big )^{\frac{1}{d}}\) and \(2^{j_{0}^{*}}\gtrsim \big (\frac{n}{\ln n}\big )^{\frac{1}{2s+d+dI_{\{p>2\}}}}\gtrsim n^{\frac{1}{2m+d+dI_{\{p>2\}}}}\thicksim 2^{j_{0}}~(m>s)\). Since \(\varepsilon >0\), \(\widetilde{p}>\frac{p(d+dI_{\{p>2\}})}{2s+d+dI_{\{p>2\}}}\) and \(\alpha =\frac{s}{2s+d+dI_{\{p>2\}}}\) thanks to (21c). Moreover, it can be checked that

by considering \(p>2\) and \(p\in [1, 2]\) respectively. This with the choice of \(2^{j^{*}_{0}}\) leads to

On the other hand, (29) with the choice of \(2^{j_{0}^{*}}\) implies

for each \(\varepsilon \in {\mathbb {R}}\).

For the case \(\varepsilon \le 0\), \(\widetilde{p}\le \frac{p(d+dI_{\{p>2\}})}{2s+d+dI_{\{p>2\}}}\) and \(\alpha =\frac{s-\frac{d}{\widetilde{p}}+\frac{d}{p}}{2(s-\frac{d}{\widetilde{p}})+d+dI_{\{p>2\}}}\) (see (21c)). Define \(p_{1}:=(1-2\alpha )p\). Then \(\alpha \le \frac{s}{2s+d+dI_{\{p>2\}}}\) and \(\widetilde{p}\le \frac{p(d+dI_{\{p>2\}})}{2s+d+dI_{\{p>2\}}}\le (1-2\alpha )p=p_{1}.\) Similar to the case \(\varepsilon >0\), \(Z_{22}\lesssim \sum \nolimits _{j=j_{0}^{*}+1}^{j_{1}}2^{pd(\frac{j}{2}-\frac{j}{p})}\sum \nolimits _{\ell =1}^{M}\sum \nolimits _{k\in \varLambda _{j}}E\left| \widehat{\beta }_{j,k}^{\ell }-\beta _{j,k}^{\ell }\right| ^{p}\left( \frac{|\beta _{j,k}^{\ell }|}{\kappa t_{n}/2}\right) ^{p_{1}}.\) Because \(\widetilde{p}\le p_{1}\) and \(r\in B^{s}_{\widetilde{p},q}(H)\), \(\big \Vert \beta _{j}\big \Vert _{p_{1}}^{p_{1}}\le \big \Vert \beta _{j}\big \Vert _{\widetilde{p}}^{p_{1}}\lesssim 2^{-j\big (s-\frac{d}{\widetilde{p}}+\frac{d}{2}\big )p_{1}}\) and

By the definitions of \(p_{1}\) and \(\alpha \), \(sp_{1}-\frac{dp_{1}}{\widetilde{p}}+\frac{dp_{1}}{2}+\frac{dp_{1}}{2}I_{\{p>2\}}-\frac{dp}{2}-\frac{dp}{2}I_{\{p>2\}}+d=0\) and \(Z_{22}\lesssim \Big (\ln n\Big )^{-\frac{p_{1}}{2}}\Big (\frac{1}{n}\Big )^{\frac{p-p_{1}}{2}}(\ln n)\lesssim \Big (\ln n\Big )\Big (\frac{1}{n}\Big )^{\alpha p}\). This with (30) and (31) shows in both cases,

Finally, one estimates \(Z_{3}\). Clearly,

This with the choice of \(2^{j^{*}_{0}}\) shows

On the other hand,

The same arguments as for (30) show that for \(\varepsilon >0\),

To prove (34) when \(\varepsilon \le 0\), one writes

where the integer \(j_{1}^{*}\in [j_{0}^{*}+1, j_{1}]\) will be determined below. Similar to the case \(\varepsilon >0\),

holds for \(\varepsilon \le 0\). By \(s>\frac{d}{\widetilde{p}}\) and \(\big \Vert \beta _{j}\big \Vert _{\widetilde{p}}\lesssim 2^{-j(s-\frac{d}{\widetilde{p}}+\frac{d}{2})}\),

To balance this with (35), one takes \(\left( \frac{\ln n}{n}\right) ^{\frac{p-\widetilde{p}}{2}}2^{-j_{1}^{*}\varepsilon }\sim 2^{-j_{1}^{*}\big (d+sp-pd/\widetilde{p}\big )}\), which means

where \(\varepsilon \le 0\) is equivalent to \(\widetilde{p}\le \frac{p(d+dI_{\{p>2\}})}{2s+d+dI_{\{p>2\}}}\) and \(\alpha =\frac{s-\frac{d}{\widetilde{p}}+\frac{d}{p}}{2(s-\frac{d}{\widetilde{p}})+d+dI_{\{p>2\}}}\). In that case, \(\Big (\frac{n}{\ln n}\Big )^{\frac{1-2\alpha }{d+dI_{\{p>2\}}}}\sim 2^{j_{0}^{*}}\le 2^{j_{1}^{*}}\le 2^{j_{1}}\thicksim \Big (\frac{n}{(\ln n)^{3}}\Big )^{\frac{1}{d}}\). Note that

Then \(Z_{321}\lesssim \big (\ln n\big )^{\frac{p}{2}}\big (\frac{1}{n}\big )^{\alpha p}\) and \(Z_{322}\lesssim \big (\ln n\big )^{\frac{p}{2}}\big (\frac{1}{n}\big )^{\alpha p}\). Therefore, \(Z_{32}=Z_{321}+Z_{322}\lesssim \big (\ln n\big )^{\frac{p}{2}}\big (\frac{1}{n}\big )^{\alpha p}\) for \(\varepsilon \le 0\). Combining this with (33) and (34), one obtains \(Z_{3}\lesssim \big (\ln n\big )^{\frac{p}{2}}\big (\frac{1}{n}\big )^{\alpha p}\) in both cases. This with (27), (28) and (32) shows

which is the desired conclusion. Although \((\ln n)^{\frac{3p}{2}}\) can be replaced by \((\ln n)^{\max {\{p, \frac{3p}{2}-1\}}}\) in the last inequality, the same replacement cannot be made in (21b), because Jensen's inequality is used for \(\widetilde{p}\ge p\). \(\square \)
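The exponent bookkeeping in the case \(\varepsilon \le 0\) of the proof above can be checked numerically. The Python sketch below evaluates \(\alpha \) and \(p_{1}=(1-2\alpha )p\) from the formulas stated in the proof and returns the left-hand side of the identity \(sp_{1}-\frac{dp_{1}}{\widetilde{p}}+\frac{dp_{1}}{2}+\frac{dp_{1}}{2}I_{\{p>2\}}-\frac{dp}{2}-\frac{dp}{2}I_{\{p>2\}}+d=0\), which should vanish up to rounding error. The function names and the sample parameters are hypothetical, picked only so that \(s>\frac{d}{\widetilde{p}}\) and \(\widetilde{p}\le \frac{p(d+dI_{\{p>2\}})}{2s+d+dI_{\{p>2\}}}\) hold.

```python
def indicator(p):
    """I_{p > 2} as used in the exponents of the proof."""
    return 1 if p > 2 else 0


def alpha_eps_nonpositive(s, d, p, pt):
    """Rate exponent alpha in the case eps <= 0 (pt stands for p-tilde)."""
    big_d = d + d * indicator(p)              # d + d * I_{p > 2}
    return (s - d / pt + d / p) / (2 * (s - d / pt) + big_d)


def identity_residual(s, d, p, pt):
    """Left-hand side of the exponent identity; expected to be ~0."""
    ind = indicator(p)
    p1 = (1 - 2 * alpha_eps_nonpositive(s, d, p, pt)) * p
    return (s * p1 - d * p1 / pt + d * p1 / 2 + d * p1 / 2 * ind
            - d * p / 2 - d * p / 2 * ind + d)


if __name__ == "__main__":
    # Hypothetical parameter sets (s, d, p, p-tilde), for illustration only.
    for params in [(1.5, 1, 4.0, 1.2), (2.5, 2, 6.0, 2.0)]:
        print(params, alpha_eps_nonpositive(*params), identity_residual(*params))
```

Since \(p_{1}=(1-2\alpha )p\) gives \(\frac{p-p_{1}}{2}=\alpha p\), the same computation explains why the factor \(\big (\frac{1}{n}\big )^{\frac{p-p_{1}}{2}}\) in the bound of \(Z_{22}\) equals \(\big (\frac{1}{n}\big )^{\alpha p}\).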

References

Ahmad IA (1995) On multivariate kernel estimation for samples from weighted distributions. Stat Probab Lett 22:121–129

Chaubey YP, Chesneau C, Shirazi E (2013) Wavelet-based estimation of regression function for dependent biased data under a given random design. J Nonparametr Stat 25:53–71

Chaubey YP, Shirazi E (2015) On MISE of a nonlinear wavelet estimator of the regression function based on biased data under strong mixing. Commun Stat Theory Methods 44:885–899

Chesneau C, Shirazi E (2014) Nonparametric wavelet regression based on biased data. Commun Stat Theory Methods 43:2642–2658

Chesneau C, Fadili J, Maillot B (2015) Adaptive estimation of an additive regression function from weakly dependent data. J Multivar Anal 133:77–94

Davydov YA (1970) The invariance principle for stationary processes. Theory Probab Appl 3:487–498

Delyon B, Juditsky A (1996) On minimax wavelet estimators. Appl Comput Harmonic Anal 3:215–228

Donoho DL, Johnstone IM, Kerkyacharian G et al (1996) Density estimation by wavelet thresholding. Ann Stat 24:508–539

Doukhan P (1994) Mixing: properties and examples. Springer, New York

Guo HJ (2016) Wavelet estimations for a class of regression functions with errors-in-variables. Dissertation, Beijing University of Technology

Hall P, Patil P (1996) On the choice of smoothing parameter, threshold and truncation in nonparametric regression by nonlinear wavelet methods. J Roy Stat Soc B 58:361–377

Härdle W, Kerkyacharian G, Picard D et al (1998) Wavelets, approximation, and statistical applications. Springer, New York

Kim TY (1993) A note on moment bounds for strong mixing sequences. Stat Probab Lett 16:163–168

Kou JK, Liu YM (2017) Nonparametric regression estimations over \(L^{p}\) risk based on biased data. Commun Stat Theory Methods 46(5):2375–2395

Kou JK, Liu YM (2016) An extension of Chesneau’s theorem. Stat Probab Lett 108:23–32

Kulik R (2008) Nonparametric deconvolution problem for dependent sequences. Electron J Stat 2:722–740

Lepski O (2013) Multivariate density estimation under sup-norm loss: oracle approach, adaptation and independence structure. Ann Stat 41(2):1005–1034

Liebscher E (1996) Strong convergence of sums of \(\alpha \) mixing random variables with applications to density estimation. Stoch Process Appl 65:69–80

Liebscher E (2001) Estimation of the density and regression function under mixing conditions. Stat Decis 19:9–26

Masry E (2000) Wavelet-based estimation of multivariate regression function in Besov spaces. J Nonparametr Stat 12:283–308

Meyer Y (1990) Wavelets and operators. Hermann, Paris

Mokkadem A (1988) Mixing properties of ARMA processes. Stoch Process Appl 29:309–315

Rebelles G (2015a) \(L^{p}\) adaptive estimation of an anisotropic density under independence hypothesis. Electron J Stat 9:106–134

Rebelles G (2015b) Pointwise adaptive estimation of a multivariate density under independence hypothesis. Bernoulli 21(4):1984–2023

Rio E (1995) The functional law of the iterated logarithm for stationary strongly mixing sequences. Ann Probab 23:1188–1203

Shao QM, Yu H (1996) Weak convergence for weighted empirical processes of dependent sequences. Ann Probab 24:2098–2127

Yokoyama R (1980) Moment bounds for stationary mixing sequences. Probab Theory Relat Fields 52:45–57

Acknowledgements

This work is supported by the National Natural Science Foundation of China [11771030] and the Beijing Natural Science Foundation [1172001]. The authors would like to thank Professor Wenqing Xu for his suggestions in writing this manuscript, and the referees for their comments, which improved the manuscript.

About this article

Cite this article

Kou, J., Liu, Y. Wavelet regression estimations with strong mixing data. Stat Methods Appl 27, 667–688 (2018). https://doi.org/10.1007/s10260-018-00430-0

DOI: https://doi.org/10.1007/s10260-018-00430-0