Abstract

The local influence method has proven to be a useful and powerful tool for detecting influential observations on the estimation of model parameters. This method has been widely applied in different studies related to econometric and statistical modelling. We propose a methodology based on the Lagrange multiplier method with a linear penalty function to assess local influence in the possibly heteroskedastic linear regression model with exact restrictions. The restricted maximum likelihood estimators and information matrices are presented for the postulated model. Several perturbation schemes for the local influence method are investigated to identify potentially influential observations. Three real-world examples are included to illustrate and validate our methodology.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The linear regression model with exact linear restrictions is widely used in applied econometrics and statistics. An example in financial econometrics is the simple linear model for the excess return of a security regressed on the excess return of the market portfolio. When the intercept is set equal to zero, this becomes the capital asset pricing model.

The method of restricted least squares (RLS) provides natural estimators of the regression coefficients in a linear model with exact restrictions. Basic distributional properties of the RLS estimator, efficiency comparisons, hypothesis tests and real-world applications can be found in Chipman and Rao (1964), Trenkler (1987), Ramanathan (1993), Greene (2007) and Wooldridge (2013). It is well known that the RLS estimator can be expressed in terms of the ordinary least squares (OLS) estimator. In particular, Magnus and Neudecker (1999) studied the RLS estimator in various specific situations. Gross (2003) and Rao et al. (2008) explored its relationship to a ridge estimator. However, it seems that few publications so far have treated the RLS estimator from a viewpoint of influence diagnostic or sensitivity analyses, even though such analyses are needed and have increasingly been used; see, for example, the monographs Cook and Weisberg (1982) for early studies on residuals and influence in regression, Chatterjee and Hadi (1988) and Rao et al. (2008) for sensitivity analysis in linear regression, Atkinson and Riani (2000) and Atkinson et al. (2004) for robust diagnostic regression analysis, and Kleiber and Zeileis (2008) for regression diagnostics incorporated in a recent textbook on applied econometrics. To our knowledge, only Liu and Neudecker (2007) provided a local sensitivity result for the RLS estimator. Two alternatives to the RLS method are the model reduction and Lagrange multiplier methods. As pointed out by Hocking (2003, p. 622), in the model reduction method it is difficult to assess the effect of adjoining restrictions due to the lack of a specific expression for the reduced model. The Lagrange multiplier method is more amenable to such theoretical developments. Therefore, in the present paper, our approach is based on this last method. We deal with the estimation and diagnostic issues in a systematic manner, which links the unique solution of the restricted maximum likelihood (RML) and RLS estimators to the local influence method both proven to be very useful in practice.

The local influence method was introduced by Cook (1986). Subsequently, alternative approaches were suggested by, for example, Billor and Loynes (1993, 1999), Poon and Poon (1999) and Shi and Huang (2011). Unlike case deletion methods, which can suffer from masking effects, the local influence method is a powerful tool for detecting observations that can be influential in the estimation of model parameters. It now becomes a general method for assessing the influence of local departures from model assumptions on maximum likelihood (ML) estimates. The local influence method is employed in several areas of applied econometrics and statistics. For example, there are a number of applications and studies in regression modelling and time series analysis; see Cook (1986), Galea et al. (1997), Liu (2000, 2002, 2004), Díaz-García et al. (2003), Galea et al. (2008) and Shi and Chen (2008) for studies in linear regression and time series models, de Castro et al. (2007) and Galea and de Castro (2012) for heteroskedastic errors-in-variables models, Leiva et al. (2007, 2014) for influence diagnostics with censored and uncensored data, Barros et al. (2010) for a Tobit model and Paula et al. (2012) for robust modelling applied to insurance data. In particular, the local influence method can play an important role in regression models involving restrictions. Paula (1993) used this method to handle the linear model with inequality restrictions; Cysneiros and Paula (2005) and Paula and Cysneiros (2010) considered parameter constraints in univariate symmetrical linear regression models; and Liu et al. (2009) studied a normal linear model with stochastic linear restrictions.

The objective of this paper is to provide a methodology to assess local influence in the possibly heteroskedastic linear regression model with exact linear restrictions. While influence diagnostics were studied for the spherical linear model by Cook (1986) and the capital asset pricing model by Galea et al. (2008), no such studies have been carried out for the RLS estimator. Therefore, we fill this gap. In addition, heteroskedasticity is a classic issue that is widely encountered in practical situations; see, for example, Greene (2007) and Wooldridge (2013). It was investigated by de Castro et al. (2007) and Galea and de Castro (2012) in models with errors-in-variables, but not with linear restrictions. Furthermore, we present results which are relevant to but different from those considered by Cysneiros and Paula (2005), Liu and Neudecker (2007), Liu et al. (2009) and Paula and Cysneiros (2010). We use the ML method under normality of the model errors to estimate the corresponding parameters with exact restrictions. We deal with not only the general linear model for spherical disturbances, corresponding to the univariate models studied by Cysneiros and Paula (2005) and Paula and Cysneiros (2010), but also the general linear model for non-spherical or heteroskedastic disturbances as an extension. Our approach differs from the quadratic penalty function approach considered in Cysneiros and Paula (2005) and Paula and Cysneiros (2010) by the fact that we employ the Lagrange multiplier method with a linear penalty function, and that the RML estimators are closely related to the RLS or generalized least-squares (GLS) estimators. In addition, we introduce three global influence statistics and compare our local influence statistics with them.

Note that exact restrictions in the model often arise from past experience, economic or financial theory, or the area under study, which must be treated as prior information. We treat such prior information as equivalent to the sample data, although we also allow the possibility that the linear restrictions on the coefficients are not really prior information, but just a null hypothesis, proposed to simplify the relationship between the response variable (called response hereafter) and explanatory variables (called covariables hereafter).

The rest of the paper is organized as follows. In Sects. 2 and 3, we propose a methodology to assess local influence in the normal linear regression model with restrictions. Specifically, in Sect. 2, we formulate and discuss the model, RML estimation, the information matrix and inference. In Sect. 3, we describe the local influence method with different perturbation matrices, and three global influence statistics for comparison purposes. In Sect. 4, we illustrate and validate the proposed methodology with real-world data. In Sect. 5, we make some concluding remarks about this work. The technical proofs of our results are included in the Appendix.

2 Modelling and estimation

In this section, we formulate the general linear regression model with restrictions in two cases: the first with spherical disturbances and the second with non-spherical disturbances. We then provide several estimators for the corresponding parameters, together with the corresponding information matrix.

2.1 Formulation of the model

Spherical disturbance Consider the general linear regression model given by

where \({\varvec{y}} = (Y_1, \ldots , Y_n)^\top \) is an \(n \times 1\) response vector, \({\varvec{X}}\) is an \(n \times p\) known design matrix of rank p containing the values of the covariables, \({\varvec{\beta }}\) is a \(p \times 1\) vector of unknown parameters to be estimated, and \({\varvec{u}}\) is an \(n \times 1\) error vector with expectation \(\text {E}[{\varvec{u}}] = {\varvec{0}}_{n\times 1}\) and variance-covariance matrix \(\text {D}[{\varvec{u}}] = \sigma ^2 {\varvec{I}}_n\). Here, \({\varvec{0}}_{n\times 1}\) is an \(n \times 1\) zero vector, \(\sigma ^2 > 0\) is an unknown parameter to be estimated and \({\varvec{I}}_n\) is the \(n \times n\) identity matrix.

For the model given in (1), suppose we have prior information about \({\varvec{\beta }}\) in the form of a set of q independent exact linear restrictions expressed as

where \({\varvec{R}}\) is a \(q \times p\) known matrix of rank \(q \le p\) and \({\varvec{r}}\) is a \(q \times 1\) vector of known elements. The \(k \times 1\) parameter vector in the formulation given in (1) and (2) is \({\varvec{\theta }} = ({\varvec{\beta }}^\top , \sigma ^2)^\top \), where \(k=p+1\).

Non-spherical disturbance To extend the spherical disturbance assumption for the model given in (1), we consider a groupwise heteroskedastic case. Without loss of generality, we split the data into two groups to correspond to the non-spherical disturbance by writing \({\varvec{y}} = ({\varvec{y}}_1^\top , {\varvec{y}}_2^\top )^\top \), \({\varvec{X}} = ({\varvec{X}}_1^\top , {\varvec{X}}_2^\top )^\top \) and \({\varvec{u}} = ({\varvec{u}}_1^\top , {\varvec{u}}_2^\top )^\top \), where \(n_1+n_2=n\). We extend the model given in (1) by assuming the variance-covariance matrix \(\text {D}[{\varvec{u}}] = \text {diag}(\sigma _1^2 {\varvec{I}}_{n_1}, \sigma _2^2 {\varvec{I}}_{n_2}) ={\varvec{V}}\) (say), with \(\sigma _g^2 > 0\), for \(g=1, 2\), and \(\sigma _1^2 \ne \sigma _2^2\) (non-spherical or heteroskedastic case). We assume the same prior information about \({\varvec{\beta }}\) as in (2). The \(k \times 1\) parameter vector is now \({\varvec{\theta }}_{\text {G}} = ({\varvec{\beta }}^\top , \sigma _1^2, \sigma _2^2)^\top \), where \(k=p+2\).

2.2 Estimation

Spherical disturbance The RLS estimators of the elements of the parameter vector \({\varvec{\theta }}\) in the formulation (1) and (2) are well-known to be

where \({\varvec{b}}=({\varvec{X}}^\top {\varvec{X}})^{-1} {\varvec{X}}^\top {\varvec{y}}\) is the (unrestricted) OLS estimator of \({\varvec{\beta }}\).

If we add the normality assumption \({\varvec{u}} \sim \text {N}_n({\varvec{0}}_{n\times 1}, \sigma ^2 {\varvec{I}}_n)\) to the formulation given in (1) and (2), we can use the ML method to estimate \({\varvec{\theta }}\). In this case, the log-likelihood function for \({\varvec{\theta }}\) to be optimised subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) (called the restricted log-likelihood function) is given by

where \(\varvec{\uplambda }\) is the \(q \times 1\) vector of Lagrange multipliers. In order to obtain the RML estimator, we use matrix calculus to take the differential of \(\ell \) given in (5) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\) and equate it to \({\varvec{0}}_{k \times 1}\). We get

and then the corresponding RML estimators are

where \({\varvec{b}}\) is defined in (3).

Rao et al. (2008) noted that the RML and RLS estimators of \({\varvec{\beta }}\) given in (3) and (6) are the same, but those for \({\sigma }^2\) given in (4) and (7) are not the same. They considered a special case with exact knowledge of a subvector and stepwise inclusion of exact linear restrictions. From (6) we obtain

Note from (8) and (9) that \(\hat{{\varvec{\beta }}}\) is unbiased and more efficient than \({\varvec{b}}\), which has variance \({\varvec{\sigma }}^2 ({\varvec{X}}^\top {\varvec{X}})^{-1}\).

Non-spherical disturbance In this case, the restricted log-likelihood function for \({\varvec{\theta }}_{\text {G}}\) is given by

where \(\varvec{\uplambda }\) is again the \(q \times 1\) vector of Lagrange multipliers. We again use matrix calculus to find the RML estimators under groupwise heteroskedasticity, obtaining

where \({\varvec{b}}_{\text {G}} = ({\varvec{X}}^\top \hat{{\varvec{V}}}^{-1} {\varvec{X}})^{-1} {\varvec{X}}^\top \hat{{\varvec{V}}}^{-1} {\varvec{y}}\) is the GLS estimator of \({\varvec{\beta }}\) with \(\hat{{\varvec{V}}} = \text {diag}(\hat{\sigma }_1^2 {\varvec{I}}_{n_1}, \hat{\sigma }_2^2 {\varvec{I}}_{n_2})\).

From the formulas given in (11) and (12), we need to calculate the RML estimates iteratively. We can employ a procedure starting with the OLS estimate \({\varvec{b}}\) and then iterating between \(\hat{{\varvec{V}}}\) and \({\varvec{b}}_{\text {G}}\) and \(\hat{{\varvec{\beta }}}_{\text {G}}\). Under the usual assumptions, when the restrictions \({\varvec{R}} {\varvec{b}}_{\text {G}} = {\varvec{r}}\) are true, in large samples the distribution of \(\hat{{\varvec{\beta }}}_{\text {G}}\) can be approximated by a normal distribution with mean \({\varvec{\beta }}\) and a variance matrix which is consistently estimated by

For further ideas with examples and relevant asymptotic results, see, for example, Efron and Hinkley (1978), Judge et al. (1988) and Greene (2007). By assuming \(\sigma ^2_1 = \sigma ^2_2\), we note that the results given in (11) and (12) reduce to the RML estimators for \({\varvec{\beta }}\) given in (6) and for \({\sigma }^2\) given in (7). When \({\varvec{V}}\) in (11) is known, we obtain

2.3 Information matrices

Spherical disturbance Using the Hessian matrix derived in Appendix 1 and given by

we obtain the expected Fisher information matrix given by

The observed Fisher information matrix is \({\varvec{J}}(\hat{{\varvec{\theta }}}) = - {\varvec{H}}(\hat{{\varvec{\theta }}}) = {\varvec{I}}(\hat{{\varvec{\theta }}})\).

Non-spherical disturbance In this case, the Hessian matrix is obtained as

where

Then, the corresponding expected Fisher information matrix is given by

and we have \({\varvec{J}}(\hat{{\varvec{\theta }}}_{\text {G}}) = - {\varvec{H}}(\hat{{\varvec{\theta }}}_{\text {G}}) = {\varvec{I}}(\hat{{\varvec{\theta }}}_{\text {G}})\).

3 Influence diagnostics

In this section, we present the local influence method, the perturbation matrices for some different schemes, and then three global influence measures.

3.1 Local influence

Let \(\ell ({\varvec{\theta }})\) be the log-likelihood function for the model given in (1) and (2), which we call the postulated or non-perturbed model. Here \({\varvec{\theta }}\) is the \(k \times 1\) unknown parameter vector to be estimated and we denote its ML estimator by \(\hat{{\varvec{\theta }}}\). Let \({\varvec{w}} =(w_1,\ldots ,w_m)^\top \) denote an \(m \times 1\) perturbed vector and \({\varvec{\varOmega }}\) an open set of relevant perturbations such that \({\varvec{w}} \in {\varvec{\varOmega }}\). Then, let \(\ell ({\varvec{\theta }}|{\varvec{w}}) = \ell _{{\varvec{w}}}\) be the log-likelihood function for the perturbed model and \(\hat{{\varvec{\theta }}}_w\) be the corresponding ML estimator of \({\varvec{\theta }}\). Let \({\varvec{w}}_0 \in {\varvec{\varOmega }}\) denote an \(m \times 1\) non-perturbed vector with \({\varvec{w}}_0 =(0,\ldots ,0) ^\top \), or \({\varvec{w}}_0 =(1,\ldots ,1) ^\top \), or even a third choice, depending on the context, such that \(\ell ({\varvec{\theta }}) = \ell ({\varvec{\theta }}|{\varvec{w}}_0)\). Suppose that \(\ell ({\varvec{\theta }}|{\varvec{w}})\) is twice continuously differentiable in a neighborhood of \((\hat{{\varvec{\theta }}}, {\varvec{w}}_0)\). We are interested in comparing the parameter estimates \(\hat{{\varvec{\theta }}}\) and \(\hat{{\varvec{\theta }}}_w\) using the idea of local influence to detect how the inference is affected by the perturbation. Specifically, in the Cook local influence method, the likelihood displacement (LD) is given by

which can be used to assess the influence of the perturbation \({\varvec{w}}\). Here, large values of LD\(({\varvec{w}})\) indicate that \(\hat{{\varvec{\theta }}}\) and \(\hat{{\varvec{\theta }}}_w\) differ considerably in relation to the contours of the non-perturbed log-likelihood function \(\ell ({\varvec{\theta }}\)). The idea is based on studying the local behaviour of \(\text {LD}({\varvec{w}})\) and the normal curvature \(C_{{\varvec{l}}}\) in a unit-length direction vector \({\varvec{l}}\), where \(||{\varvec{l}}||=1\). According to Cook (1986), the normal curvature is given by \(C_{{\varvec{l}}} = 2|{\varvec{l}} ^\top {\varvec{F}}({\varvec{\theta }}) {\varvec{l}}|\). The maximum curvature \(C_{\max }\) and the corresponding direction vector \({\varvec{l}}_{\max }\) may reveal those observations that exercise more influence on LD\(({\varvec{w}})\), where \(C_{\max } = \max _{||{\varvec{l}}||=1} C_{{\varvec{l}}}\). To examine the magnitude of influence, it is useful to have a benchmark value for \(C_{\max }\) and for the elements of \({\varvec{l}}_{\max }\). For \(C_{\max }\), 2 or a q-value measure suggested by Shi and Huang (2011) can be used. For example, when the q-value is greater than 2, we can say the associated direction vector is significant for detecting influential observations. For the elements of \({\varvec{l}}_{\max }\), Poon and Poon (1999) suggested \(1/\sqrt{n}\) and Shi and Huang (2011) noted \(2/\sqrt{n}\) can be more reasonable, where n is the sample size. In our case, we consider as influential those observations with absolute values of \({\varvec{l}}_{\max }\) exceeding \(2/\sqrt{n}\). To find \(C_{\max }\) and \({\varvec{l}}_{\max }\), we need to calculate the \(m \times m\) matrix \({\varvec{F}}({\varvec{\theta }})\) defined by

where \({\varvec{\varDelta }}({\varvec{\theta }})\) is a \(p \times m\) matrix for the perturbed model (perturbation matrix), which must be obtained from \(\text {d}^2_{{\varvec{\theta }} {\varvec{w}}} \ell _{{\varvec{w}}}\) and evaluated at \({\varvec{\theta }}=\hat{{\varvec{\theta }}}\) and \({\varvec{w}}={\varvec{w}}_0\). Here, \({\varvec{H}}({\varvec{\theta }})\) is obtained from (13) or (14). In order to detect local influence, one or both of the following two options can be considered:

-

(i)

The vector \({\varvec{f}}=({\varvec{F}}_{11}, \ldots , {\varvec{F}}_{nn})^\top \), where \({\varvec{F}}_{ii}\) is the ith diagonal element of \({\varvec{F}}({\varvec{\theta }})\) given in (15), for \(i=1,\ldots ,n\). Clearly, \({\varvec{F}}_{ii}\) indicates the possible impact of the perturbation of the ith observation on the RML estimates of the model parameters. We consider the ith case as outstanding if \({\varvec{F}}_{ii} \ge 2\,\overline{{\varvec{F}}}\), similarly to \(C_i \ge 2\,\overline{C}\), where \(C_i\) is the ith total local influence corresponding to \({\varvec{F}}_{ii}\), and \(\overline{C} = {1 \over n} \sum _{i=1}^{n}C_i\) is as given in, for example, Leiva et al. (2007) and Paula and Cysneiros (2010).

-

(ii)

The vector \({\varvec{l}}_{\max }\), which is the eigenvector associated with the largest eigenvalue \(C_{\max }\) of \({\varvec{F}}({\varvec{\theta }})\); see Cook (1986), Leiva et al. (2007), Liu et al. (2009) and Paula et al. (2012). Large absolute values of those elements of \({\varvec{l}}_{\max }\), that is, those greater than \(2/\sqrt{n}\), indicate the corresponding observations to be influential.

3.2 Perturbation matrices

3.2.1 Model perturbation

Spherical disturbance We replace the normal distribution in (1) and (2) by \({\varvec{u}}_{{\varvec{w}}} \sim \text {N}_n({\varvec{0}}_{n\times 1}, \sigma ^2 {\varvec{W}}^{-1})\), where \({\varvec{W}}=\text {diag}(w_1, \ldots , w_n)\) is an \(n \times n\) diagonal matrix with its elements \(w_i\) being the perturbations or weights and \({\varvec{W}}_0=\text {diag}(1, \ldots , 1)\) is the \(n \times n\) identity matrix for non-perturbed values, with \(i=1,\ldots ,n\). We also use \(\text {vec} {\varvec{W}} = {\varvec{S}} {\varvec{w}}\), where \({\varvec{S}}\) is the \(n^2 \times n\) selection matrix, \({\varvec{w}}=(w_1,\ldots ,w_n)^\top \) and \(\text {vec} {\varvec{W}}\) is the vectorization of \({\varvec{W}}\); see Neudecker et al. (1995) and Liu et al. (2014). In this perturbation scheme, the relevant part of the log-likelihood function subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) is given by

Using the differential of \(\ell _{{{\varvec{w}}}_1}({\varvec{\theta }})\) given in (16) with respect to \({\varvec{\theta }}\) detailed in Appendix 2, we obtain \({\varvec{\varDelta }}(\hat{{\varvec{\theta }}})\) defined in (15) as

where \(\otimes \) is the Kronecker product.

Non-spherical disturbance In this case, the relevant part of the log-likelihood function subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) is given by

We use again matrix calculus to get

where \(S_g\) is the \(n_g^2 \times n_g\) selection matrix, for \(g=1,2\).

3.2.2 Response perturbation

Spherical disturbance We replace the assumption of normality by \({\varvec{u}}_{{\varvec{w}}} \sim \text {N}_n({\varvec{0}}_{n\times 1}, \sigma ^2 {\varvec{I}}_n)\), where \({\varvec{u}}_w = {\varvec{y}}+ {\varvec{w}}-{\varvec{X}} {\varvec{\beta }}\) is based on \({\varvec{y}}+{\varvec{w}}\) instead of \({\varvec{y}}\) given in (1), \({\varvec{w}} = (w_1, \ldots , w_n)^\top \) is an \(n \times 1\) perturbed vector, and \({\varvec{w}}_0 = (0, \ldots , 0)^\top \) is an \(n \times 1\) non-perturbed vector. In this perturbation scheme, the relevant part of the log-likelihood function subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) is given by

Taking the differential of \(\ell _{{{\varvec{w}}}_2}({\varvec{\theta }})\) given in (17) with respect to \({\varvec{\theta }}\) as detailed in Appendix 2, we get

Non-spherical disturbance In this case, the relevant part of the log-likelihood function subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) is given by

By using matrix calculus, we get

3.2.3 Covariable perturbation

Spherical disturbance We now assume \({\varvec{u}}_w \sim \text {N}_n({\varvec{0}}_{n\times 1}, \sigma ^2 {\varvec{I}}_n)\), where \({\varvec{u}}_w ={\varvec{y}}-({\varvec{X}}+{\varvec{W}} {\varvec{A}}) {\varvec{\beta }}\) is based on \({\varvec{X}}+{\varvec{W}} {\varvec{A}}\) instead of \({\varvec{X}}\) given in (1), with \({\varvec{W}}\) being an \(n \times p\) perturbed matrix, \({\varvec{W}}_0=\varvec{0}\) an \(n \times p\) non-perturbed matrix, \({\varvec{A}} = \text {diag}(a_1, \ldots , a_p)\) a \(p \times p\) diagonal matrix, and \(a_j\) the standard deviation of \({\varvec{x}}_j\) corresponding to the jth column of \({\varvec{X}}\), for \(j=1, \ldots , p\). In this perturbation scheme, the relevant part of the log-likelihood function subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) is given by

Based on the differential of \(\ell _{{{\varvec{w}}}_3}({\varvec{\theta }})\) given in (18) with respect to \({\varvec{\theta }}\) as detailed in Appendix 2, we obtain

where \({\varvec{K}}\) is the \(np \times np\) commutation matrix such that \(\text {vec} {\varvec{W}}^\top = {\varvec{K}} \text {vec} {\varvec{W}}\) for the \(n \times p\) matrix \({\varvec{W}}\); see Magnus and Neudecker (1999) and Liu (2002) for the definition, properties and applications of \({\varvec{K}}\). In particular, if we perturb only \({\varvec{x}}_j\) to \({\varvec{x}}_j + a_j {\varvec{w}}\), where \({\varvec{w}} =(w_1,\ldots ,w_n)^\top \) is the \(n \times 1\) perturbed vector and \({\varvec{w}}_0 =(0,\ldots ,0)^\top \) is the \(n \times 1\) non-perturbed vector, we obtain

where \({\varvec{s}}_j\) is the \(p \times 1\) vector with an one in the the jth position and zeros in the other positions, for \(j=1,\ldots ,p\).

Non-spherical disturbance In this case, the relevant part of the log-likelihood function subject to the restriction \({\varvec{r}} = {\varvec{R}} {\varvec{\beta }}\) is given by

where \({\varvec{W}}_g\) is the \(n_g \times p\) perturbed matrix, \({\varvec{A}}_g = \text {diag}(a_{g1}, \ldots , a_{gp})\) is the \(p \times p\) diagonal matrix, and \(a_{gj}\) is the standard deviation of the jth column of \({\varvec{X}}_g\), for \(g=1,2\) and \(j=1,\ldots ,p\). We use matrix calculus to obtain

where \({\varvec{K}}_g\) is the \(n_g p \times n_g p\) commutation matrix such that \(\text {vec} {\varvec{W}}_g^\top = {\varvec{K}}_g \text {vec} {\varvec{W}}_g\) for the \(n_g \times p\) matrix \({\varvec{W}}\). In particular, if we perturb only \({\varvec{x}}_j\) to \({\varvec{x}}_j + a_j {\varvec{w}}\), we obtain

where \({\varvec{s}}_{j}\) is the \(p \times 1\) vector with an one in the the jth position and zeros in the other positions, for \(j=1, \ldots , p\).

3.3 Global influence

In addition to our local influence statistics \({\varvec{F}}_{ii}\) and \({\varvec{l}}_{\max }\) given in Sect. 3.1, using the case deletion method, we introduce three global influence statistics: LD, relative change (RC) and generalised Cook distance (GCD). These are defined by

-

(a)

\(\text {LD}_i = 2[\ell (\hat{{\varvec{\theta }}}) - \ell (\hat{{\varvec{\theta }}_{i}})]\),

-

(b)

\(\text {RC}_i = ||\hat{{\varvec{\theta }}}_{(i)} - \hat{{\varvec{\theta }}}||/||\hat{{\varvec{\theta }}}||\),

-

(c)

\(\text {GCD}_i = (\hat{{\varvec{\theta }}}_{(i)} -\hat{{\varvec{\theta }}})^\top \text {J}(\hat{{\varvec{\theta }}})^{-1}(\hat{{\varvec{\theta }}}_{(i)} -\hat{{\varvec{\theta }}})/k\),

for measuring differences between fits with and without the ith observation, for \(i=1, \ldots , n\). In the spherical disturbance case, to calculate these global influence measures, \(\ell \) is given in (5), \(\hat{{\varvec{\theta }}}\) is obtained using all the observations, \(\hat{{\varvec{\theta }}}_{(i)}\) is computed using the data without the ith observation, \(\varvec{J}(\hat{{\varvec{\theta }}})\) is given in (13) and \(k=p+1\) is the dimension of \({\varvec{\theta }}\). In the non-spherical disturbance case, \(\ell \) is given in (10), \(\hat{{\varvec{\theta }}}_{\text {G}}\) is obtained using all the observations, \(\hat{{\varvec{\theta }}}_{\text {G} i}\) is calculated using the data without the ith observation, \(\varvec{J}(\hat{{\varvec{\theta }}}_{\text {G}})\) is in (14) and \(k=p+2\) is the dimension of \({\varvec{\theta }}_{\text {G}}\).

4 Numerical illustration

In this section, we illustrate and validate the diagnostic methodology proposed in this work with three empirical examples of real-world data. The first two examples are for the spherical disturbance case and the third one is for the non-spherical disturbance case.

4.1 Example 1

We analyze the data for a response with six covariables observed in 40 metropolitan areas used in a study by Ramanathan (1993, Table 10.1) and Cysneiros and Paula (2005). The objective of this study is to regress the number (in thousands) of subscribers with cable TV (Y) against the number (in thousands) of homes in the area (\(X_1\)), the per capita income for each TV market with cable (\(X_2\)), the installation fee (\(X_3\)), the monthly service charge (\(X_4\)), the number of TV signals carried by each cable system (\(X_5\)) and the number of TV signals received with good quality without cable (\(X_6\)). As Y is a count, we take its square root to try to stabilize the variance. Thus, the model is

where \(\varepsilon _i \sim \text {N}(0, \sigma ^2)\) are mutually independent errors, for \(i=1,\ldots ,40\). It is reasonable to expect the effect of each coefficient to be unidirectional, such as in Cysneiros and Paula (2005), so that the opposite direction is theoretically impossible. We may focus on assessing whether the number of subscribers changes as the monthly service charge changes, that is, to assess whether \(\beta _4 = 0\) or not, which can be treated as an exact linear restriction. Of course, in the same way for the remaining covariables, we may be interested in assessing other equality restrictions. Therefore, we use \({\varvec{R}} {\varvec{\beta }} = {\varvec{0}}\), where

and \({\varvec{\beta }} = (\beta _0, \beta _1, \ldots , \beta _6)^\top \), such as in Cysneiros and Paula (2005). This corresponds to the exact linear restrictions \(\beta _2 = \beta _3 = \beta _4 = \beta _5 = 0\). For these data, Cysneiros and Paula (2005) found case #14 to be most influential using the standardised residuals, and both cases #1 and #14 to be the most influential using the total local influence based on a quadratic penalty function. Employing our formulas provided in Sect. 3, we obtain the RML estimates and the plots of the diagonal elements of \({\varvec{F}}\) and \({\varvec{l}}_{\max }\). The plot of standardised residuals in Fig. 1 may indicate two extremal observations. However, the potentially influential observations in Fig. 2 include cases #21 and #16 identified by \(|{\varvec{F}}_{ii}|\), plus those found by Cysneiros and Paula (2005) and our \({\varvec{l}}_\text {max}\). In addition, the three global influence statistics in Fig. 3 indicate that only cases #14 and #1 are influential.

4.2 Example 2

We use the data considered in Paula and Cysneiros (2010, Application 1). The response (Y) is the weight to height ratio (scaled by a factor of 100) versus age (X) for 72 children from birth to 71.5 months. A restricted normal model is proposed with response required to be cubic for \(x \le t_0\) and linear for \(x > t_0\) (\(t_0 = 16\) months), that is,

where \(\varepsilon _i \sim \text {N}(0, \sigma ^2)\) are mutually independent errors, and \((x_i - t_0)_+ = 0\) if \(x \le t_0\), or \((x_i - t_0)_+ = x_i - t_0\) if \(x \ge t_0\) with the restrictions \({\varvec{R}} {\varvec{\beta }} = {\varvec{0}}\) to guarantee a linear tendency after \(t_0\). Thus,

and \({\varvec{\beta }} = (\beta _0, \beta _1, \beta _2, \beta _3, \beta _4)^\top \).

Using a quadratic penalty function under a model perturbation scheme, Paula and Cysneiros (2010, Application 1) applied the total local influence method to \({\varvec{\beta }}\) and then to \(\sigma ^2\). They found two young children identified as cases #2 and #8 having a large total local influence on \({\varvec{\beta }}\) and cases #8, #21 and #25 having a large influence on \(\sigma ^2\). Using our formulas provided in Sect. 3, we obtain the RML estimates and the plots of the diagonal elements of \({\varvec{F}}\) and \({\varvec{l}}_\text {max}\). The plot of standardised residuals in Fig. 4 indicates four extremal observations. However, the potentially influential cases in Fig. 5 include #1, #72 and #9, and even #2 and #8, identified by \({\varvec{F}}_{ii}\) and \({\varvec{l}}_\text {max}\), in addition to those found by Paula and Cysneiros (2010, Application 1) with case #1 or #72 being the most influential ones in one or two perturbation schemes. In Fig. 6, the LD statistics may indicate that cases #8, #2, #1 and #21 are influential. The other two global influence statistics indicate that only cases #2 and #1 are influential.

4.3 Example 3

The objective of this example is to apply our results with restrictions for the non-spherical case. We use the data analysed in Examples 9.8 and 9.9 in Wooldridge (2013) to study if the R&D intensity increases with firm size, while an equality restriction on the coefficient may be involved. Following Wooldridge (2013), we suppose that R&D expenditures as a percentage of sales are related to sales and profits as a percentage of sales by

where the responses \(Y_i\) are for the R&D intensity, the covariables \(x_{i1}\) and \(x_{i2}\) are the firm sales (in millions) and the profit margins, respectively. For a simple illustration, we impose \({\varvec{R}}=(0, 1, 0)\) and \({\varvec{r}}=0.00005\), which agrees with the data analysis in Wooldridge (2013). After ordering the data by the sizes of \(x_{i1}\), that is, the sales, and splitting the data into two groups of 16 observations, we assume \(\varepsilon _i \sim \text {N}(0, \sigma _1^2)\), for \(i=1, \ldots , 16\), and \(\varepsilon _i \sim \text {N}(0, \sigma _2^2)\), for \(i=17, \ldots , 32\), are mutually independent errors. We fit the model and conduct the Goldfeld-Quandt test for heteroscedasticity with \(p\text { value}=0.012 < 0.05 =\alpha \), which supports the appropriateness of a non-spherical disturbance model with \(\sigma _1^2 \ne \sigma _2^2\).

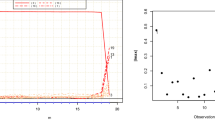

We present a plot of the standardised residuals in Fig. 7. Note that this residual for case #1 is greater than 2. In Fig. 8, the local influence statistics find case #1 to be most influential and cases #22, #30, #32 and #10 to be possibly influential. In Fig. 9, the global influence statistics suggest cases #1 and #30 to be influential, with cases #32 and #10 to be noted. Using a dummy variable approach, Wooldridge (2013) found cases #10 and #1 to be individually influential. They are the largest firm (case #10) and the firm with the highest value of R&D intensity (case #1). We find that case #1 is more influential than case #10, and identify additional observations including cases #22 and #30 as potentially influential using our local influence method with \({\varvec{F}}_{ii}\) and even \({\varvec{l}}_\text {max}\). These cases are not identified by the global influence or dummy variable approaches.

5 Concluding remarks

We have established results for influence diagnostics in the possibly heteroskedastic regression linear model with exact linear restrictions. We have used the restricted maximum likelihood estimators with Lagrange multipliers for the linear penalty function to find the diagnostic matrix. On one hand, the empirical examples have indicated that our results can be used to make findings similar to those on the same datasets provided in Cysneiros and Paula (2005) and Paula and Cysneiros (2010). On the other hand, we have seen that our results are different from those given in Cysneiros and Paula (2005) and Paula and Cysneiros (2010) in a number of ways. Our methodology may be used in the non-spherical disturbance case and further extended models. For diagnostics, we have used not just the total local influence statistics which are based on the diagonal elements of the diagnostic matrix, but also the direction eigenvector associated with its largest eigenvalue. We have compared the local influence statistics with three global influence statistics by examining the possible influential observations identified by these sets of influence statistics. Our local influence statistics have identified additional influential observations than the local influence statistics in Cysneiros and Paula (2005) and Paula and Cysneiros (2010) and our global influence statistics. These results are directly related to the restricted least squares estimators, which have been widely used in econometrics and statistics. They also complement those results for the linear model established by Liu and Neudecker (2007) using a sensitivity analysis approach. Our results can be used and implemented in a reasonably easy way by computer packages. Our Matlab codes are available on request.

References

Atkinson AC, Riani M (2000) Robust diagnostic regression analysis. Springer, Berlin

Atkinson AC, Riani M, Cerioli A (2004) Exploring multivariate data with the forward search. Springer, Berlin

Barros M, Galea M, González M, Leiva V (2010) Influence diagnostics in the tobit censored response model. Stat Methods Appl 19:379–397

Billor N, Loynes RM (1993) Local influence: a new approach. Commun Stat Theory Methods 22:1595–1611

Billor N, Loynes RM (1999) An application of the local influence approach to ridge regression. J Appl Stat 2:177–183

Chatterjee S, Hadi AS (1988) Sensitivity analysis in linear regression. Wiley, New York

Cook D (1986) Assessment of local influence. J R Stat Soc B 48:133–169

Chipman JS, Rao MM (1964) The treatment of linear restrictions in regression analysis. Econometrica 32:198–209

Cook D, Weisberg S (1982) Residuals and influence in regression. Chapman & Hall, New York

Cysneiros FJA, Paula GA (2005) Restricted methods in symmetrical linear regression models. Comput Stat Data Anal 49:689–708

de Castro M, Galea M, Bolfarine H (2007) Local influence assessment in heteroscedastic measurement error models. Comput Stat Data Anal 52:1132–1142

Díaz-García JA, Galea M, Leiva V (2003) Influence diagnostics for multivariate elliptic regression linear models. Commun Stat Theory Methods 32:625–641

Efron B, Hinkley D (1978) Assessing the accuracy of the maximum likelihood estimator: observed versus expected Fisher information. Biometrika 65:457–487

Galea M, de Castro M (2012) Influence assessment in an heteroscedastic errors-in-variables model. Commun Stat Theory Methods 41:1350–1363

Galea M, Diaz-Garcia JA, Vilca F (2008) Influence diagnostics in the capital asset pricing model under elliptical distributions. J Appl Stat 35:179–192

Galea M, Paula GA, Bolfarine H (1997) Local influence for elliptical linear models. J R Stat Soc D 46:71–79

Greene WH (2007) Econometric analysis. Prentice Hall, New York

Gross J (2003) Linear regression. Springer, Berlin

Hocking R (2003) Methods and applications of linear models: regression and the analysis of variance. Wiley, New York

Judge GG, Hill RC, Griffiths WE, Lutkepohl H, Lee TC (1988) Introduction to the theory and practice of econometrics. Wiley, New York

Kleiber C, Zeileis A (2008) Applied econometrics with R. Springer, Berlin

Leiva V, Barros M, Paula GA, Galea M (2007) Influence diagnostics in log-Birnbaum–Saunders regression models with censored data. Comput Stat Data Anal 51:5694–5707

Leiva V, Rojas E, Galea M, Sanhueza A (2014) Diagnostics in Birnbaum–Saunders accelerated life models with an application to fatigue data. Appl Stoch Model Bus Ind 30:115–131

Liu S (2000) On local influence for elliptical linear models. Stat Papers 41:211–224

Liu S (2002) Local influence in multivariate elliptical linear regression models. Linear Algebra Appl 354:211–224

Liu S (2004) On diagnostics in conditionally heteroskedastic time series models under elliptical distributions. J Appl Prob 41A:393–405

Liu S, Ahmed SE, Ma LY (2009) Influence diagnostics in the linear regression model with linear stochastic restrictions. Pak J Stat 25:647–662

Liu S, Ma T, Polasek W (2014) Spatial system estimators for panel models: a sensitivity and simulation study. Math Comput Simul 101:78–102

Liu S, Neudecker H (2007) Local sensitivity of the restricted least squares estimator in the linear model. Stat Papers 48:525–525

Magnus JR, Neudecker H (1999) Matrix differential calculus with applications in statistics and econometrics. Wiley, Chichester

Neudecker H, Liu S, Polasek W (1995) The hadamard product and some of its applications in statistics. Statistics 26:365–373

Paula GA (1993) Assessing local influence in restricted regression models. Comput Stat Data Anal 16:63–79

Paula GA, Cysneiros FJA (2010) Local influence under parameter constraints. Commun Stat Theory Methods 39:1212–1228

Paula GA, Leiva V, Barros M, Liu S (2012) Robust statistical modeling using the Birnbaum-Saunders-\(t\) distribution applied to insurance. Appl Stoch Models Bus Ind 28:16–34

Poon WY, Poon YS (1999) Conformal normal curvature and assessment of local influence. J R Stat Soc B 61:51–61

Rao CR, Toutenburg H, Shalabh, Heumann C (2008) Linear models and generalizations. Springer, Berlin

Ramanathan R (1993) Statistical methods in econometrics. Wiley, New York

Shi L, Chen G (2008) Local influence in multilevel models. Can J Stat 36:259–275

Shi L, Huang M (2011) Stepwise local influence analysis. Comput Stat Data Anal 55:973–982

Trenkler G (1987) Mean square error matrix comparisons among restricted least squares estimators. Sankyhā A 49:96–104

Wooldridge JM (2013) Introductory econometrics: a modern approach. South-Western Cengage Learning, Mason, OH

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: differentials for the Hessian matrix

We use matrix calculus as studied in Magnus and Neudecker (1999) to establish our results in both disturbance cases. In the spherical disturbance case, we present the differentials for the Hessian matrix in Appendix 1 and for the \({\varvec{\varDelta }}\) matrices in Appendix 2. In the non-spherical disturbance case, we obtain the differentials and matrices in a similar manner, so that they are and omitted here.

First, we take the differential of \(\ell \) given in (5) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\) and obtain

Then, we take the differentials of the elements of the score vector given in (19) and (20) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\) as

We establish the Hessian matrix \({\varvec{H}}({\varvec{\theta }})\) from the differentials given in (21), (22) and (23).

Appendix 2: differentials for the perturbation schemes

We present the differentials for the perturbation schemes in the spherical disturbance case defined in Sect. 3.2 considering the log-likelihood functions \(\ell _{{{\varvec{w}}}_1}\), \(\ell _{{{\varvec{w}}}_2}\) and \(\ell _{{{\varvec{w}}}_3}\) established in (16), (17) and (18), respectively. From the differentials, we get the \({\varvec{\varDelta }}\) matrices.

Model perturbation Taking the differential of \(\ell _{{{\varvec{w}}}_1}\) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\), we obtain

Taking the differential of \(\text {d} \ell _{{{\varvec{w}}}_1}\) with respect to \({\varvec{w}}\), we obtain

Response perturbation Taking the differential of \(\ell _{{{\varvec{w}}}_2}\) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\), we obtain

Taking the differential of \(\text {d} \ell _{{{\varvec{w}}}_2}\) with respect to \({\varvec{w}}\) we obtain

Covariable perturbation Taking the differential of \(\text {d} \ell _{{{\varvec{w}}}_3}\) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\), we obtain

Taking the differential of \(\text {d} \ell _{{{\varvec{w}}}_3}\) with respect to \({\varvec{w}}\), we obtain

Rights and permissions

About this article

Cite this article

Liu, S., Leiva, V., Ma, T. et al. Influence diagnostic analysis in the possibly heteroskedastic linear model with exact restrictions. Stat Methods Appl 25, 227–249 (2016). https://doi.org/10.1007/s10260-015-0329-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10260-015-0329-4

Keywords

- Information matrix

- Local influence

- Restricted least-squares estimator

- Restricted maximum likelihood estimator