Abstract

Subsequences-based time series classification algorithms provide interpretable and generally more accurate classification models compared to the nearest neighbor approach, albeit at a considerably higher computational cost. A number of discretized time series-based algorithms have been proposed to reduce the computational complexity of these algorithms; however, the asymptotic time complexity of the proposed algorithms is also cubic or higher-order polynomial. We present a remarkably fast and resource-efficient time series classification approach which employs a linear time and space string mining algorithm for extracting frequent patterns from discretized time series data. Compared to other subsequence or pattern-based classification algorithms, the proposed approach only requires a few parameters, which can be chosen arbitrarily and do not require any fine-tuning for different datasets. The time series data are discretized using symbolic aggregate approximation, and frequent patterns are extracted using a string mining algorithm. An independence test is used to select the most discriminative frequent patterns, which are subsequently used to create a transformed version of the time series data. Finally, a classification model can be trained using any off-the-shelf algorithm. Extensive empirical evaluations demonstrate the competitive classification accuracy of our approach compared to other state-of-the-art approaches. The experiments also show that our approach is at least one to two orders of magnitude faster than the existing pattern-based methods due to the extremely fast frequent pattern extraction, which is the most computationally intensive process in pattern-based time series classification approaches.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Time series data mining has evolved into a significant research avenue over the last couple of decades. The continued interest in this field stems from the growing need to extract useful bits of information from the vast data stores filling up with the ever-increasing generation and storage of time series data from diverse fields including natural processes, scientific research, business, finance, activity and/or interaction logs, etc. Time series data comprise sequences of real-valued measurements recorded over time. The inherent temporal order of these measurements characterizes the varying behavior of the recorded process and allows to find the patterns which can identify similar or anomalous behavior over time; therefore, it is vital to keep this order intact so as to infer information about time-dependent features, e.g., seasonality, trend, etc. Time series data mining algorithms are used primarily for extracting knowledge from data with a temporal order; however, the same algorithms can also be applied to data with any form of logical ordering, e.g., images converted to series using shape outlines, chemical composition data obtained from spectral analyses, etc. This effectively increases the scope and impact of time series data mining research and its applications.

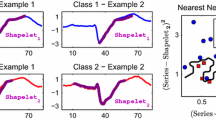

Classification has been an important research topic in the time series data mining domain. Generally, time series classification problems can be divided into two categories. The first category encompasses those problems where each class of instances has a basic underlying shape spanning the entire length of the time series. The shape can be time shifted, stretched/compressed, or distorted due to noise. For these problems, the best known approach is to use the Nearest Neighbor (1-NN) algorithm coupled with a suitable distance measure. For datasets having a small number of instances, the 1-NN algorithm coupled with an elastic distance measure, e.g., Dynamic Time Warping (DTW), performs best; however, for datasets with a large number of instances, the accuracy of 1-NN with an elastic distance measure converges to that of 1-NN with Euclidean distance (ED) [4]. The second category covers those problems for whom the entire shape of the time series instances is irrelevant and only small subsequences are indicative of the class. These subsequences are (i) much smaller than the overall length of the time series, (ii) phase independent, and (iii) can occur at any point in the time series. In such problems, identifying whether the particular subsequences are present or absent in the time series instances is a better suited classification approach. The Shapelets-based time series classification algorithm was proposed to handle problems from this category [17].

Effectively, shapelets are subsequences occurring frequently in a specific class of time series instances while being absent or infrequent in instances of the other classes. The distance between a shapelet and a time series is defined as the minimum observed distance between the shapelet and all shapelet-length subsequences of the time series instance. The presence or absence of a shapelet in a time series is determined based on whether the minimum distance between the shapelet and the time series is within a given threshold. Once the presence of a specific shapelet in a given time series is confirmed, the time series is classified as belonging to the class being represented by the particular shapelet. The original shapelets-based algorithm creates a classification model by embedding shapelets and their corresponding distance thresholds in the internal nodes of a decision tree. The classification of a given time series instance starts at the root node where the distance between the time series and the shapelet specific to the root node is calculated. The subsequent branch to be taken is determined based on whether the calculated distance is above or below the threshold for the root node. Subsequent nodes are traversed similarly until a leaf node is reached, at which point the classification decision is taken based on the leaf node’s class label. For complex and/or multi-class problems, the induction of the classification model may lead to the identification of a number of shapelets, whose presence ultimately determines a specific class of instances. This also indicates that a class of instances can have more than one representative shapelet and the order of the discovered shapelets heavily depends on the initial conditions used to initiate the shapelet discovery process.

Shapelets-based classification is much faster compared to the 1-NN approach because, for a given time series instance, shapelet-based classification only needs to calculate a few distance values for the time series instance and the shapelets encountered in the nodes of the tree, whereas the 1-NN approach needs to compare the said time series instance with all available reference time series instances before finalizing the classification decision. In contrast, shapelet-based model induction can become untenable for larger datasets because shapelet discovery is an exhaustive process of evaluating all possible candidate subsequences in the time series dataset to determine the best shapelet (and its corresponding distance threshold) at each node of the shapelet-based model. At any node, the time required for shapelet discovery is on the order of \(O(N^2 n^4)\), where N represents the number of time series instances reaching the particular node, while n is the length of the time series instances. Hence, the overall time required for shapelet-based model induction can become unreasonably high.

The Shapelet Transform (ST) algorithm dissociates the shapelet discovery and model induction processes [9]. It reduces the computational requirements of the training process, because a single call to the \(O(N^2 n^4)\) shapelet discovery process is required, unlike the original shapelets-based algorithm. For ST, a single invocation of the shapelet discovery process extracts the k best shapelets, which are then used to transform the time series classification problem into a feature-based classification problem. The transformed dataset consists of N rows and \(k+1\) columns, where each row is associated with a specific time series instance, while each column is associated with a specific shapelet from among the k best shapelets and the last column is used for the class label. The cell corresponding to the \(i{\mathrm{th}}\) row and \(j{\mathrm{th}}\) column holds the minimum distance between the \(i{\mathrm{th}}\) training instance and the \(j{\mathrm{th}}\) shapelet. The transformed dataset provides a feature-based view of the time series classification problem; therefore, any off-the-shelf classification algorithm can be used for model induction. Although ST is faster than the original shapelet algorithm, its asymptotic time complexity can still make it extremely computation intensive.

To scale up pattern-based time series classification to massive amounts of data, however, the algorithmic complexity has to be reduced to linear time at most. One way of addressing the complexity issue of pattern-based time series classification is by transforming the time series to symbolic (i.e., string) representations. Although the time series community has recognized and acknowledged the benefits of transforming time series data to strings by discretization and quantization for some time [11,12,13,14,15], the approaches are still suffering from high computational complexity: the complexity of the Fast Shapelets [12] approach is \(O(N n^2)\), the one of BoP (Bag of Patterns) [11] is \(O(N n^3)\), the one of SAX-VSM (Symbolic Aggregate approXimation–Vector Space Model) [15] is \(O(N n^3)\), the one of BOSS (Bag of SFA Symbols) [13] is \(O(N^2 n^2)\), and the complexity of BOSS VS (Bag of SFA Symbols in Vector Space) [14] is \(O(N n^\frac{3}{2})\). Overall, it appears that the opportunities arising from the positive results from the string mining literature have not yet been fully realized. Notably, string mining was the first and still remains, the only area of pattern mining, where, in terms of time complexity, optimal results can be guaranteed. In other words, modern string mining algorithms can extract almost arbitrary frequency-related string patterns in linear time. The first algorithms in this line of research were proposed by Fischer et al. [5, 6]. Further research has optimized their practical running times and theoretical properties [3].

Transforming numeric time series to symbolic sequences can, of course, lead to a loss of information, and it is evident that using one such transformation might lead to incorrect or suboptimal results due to badly chosen or just nearly missed interval boundaries. However, we argue that the advantage in terms of time complexity (linear vs. higher-order polynomials) enables exploring the space of transformations much more efficiently and effectively than with any other more costly transformation: It becomes feasible to explore a multitude of parameterizations, for instance multiple alphabet sizes and discretization schemes, given that the basic underlying algorithm has linear time complexity. Also, as classification is statistical and not a “precise science” anyway, the imperfection of any well-chosen string transformation does not harm and may even improve the results in noisy application domains.

The main contributions of this paper are as follows: first, we propose to build time series classification on the basis of a linear time string mining algorithm which enables pattern-based time series classification to scale up to massive datasets.Footnote 1 Whereas discretized time series have been used before, explicitly basing time series classification on string mining algorithms has not been considered yet. Second, the complexity advantage can be used to explore different parameterizations and still be much more time efficient than any superlinear time series classification scheme. Third and more specifically, we present a highly efficient pattern extraction method for time series which does not require any user provided limits for minimum/maximum pattern lengths. Fourth, the resulting classification scheme provides competitive accuracy compared to state-of-the-art symbolic representation-based approaches, and last, we show how the overall scheme can lead to extremely fast parameter optimization. In the following, our proposed approach will be referred to as MiSTiCl (Mining Strings for Time Series Classification).

The rest of the paper is organized as follows: Sect. 2 provides some background about SAX and the string mining algorithm. Section 3 presents the proposed algorithm. Sections 4 and 5 present the implementation details, experimental protocol, and results. Finally, Sect. 6 presents the conclusions.

2 Background

An ordered, real-valued sequence of n observations is called a time series and is denoted as \(T=(t_1,t_2,\ldots ,t_n)\). A time series belonging to a specific class is assigned a label \(y \in C\), where C is the set of all possible class labels. A set of N labeled time series instances \(\{(T_1,y_1),(T_2,y_2),\ldots ,(T_N,y_N)\}\) forms a time series dataset D. Figure 1 shows an illustration of a time series dataset, where the instances belonging to the same class are plotted together.

Time series data are inherently high-dimensional, which makes it difficult to design efficient algorithms for time series mining. It is also highly susceptible to noise, which can adversely affect the accuracy of an algorithm. Therefore, time series discretization is often used to (i) reduce the dimensionality and cardinality of the data, which enables to employ/develop efficient algorithms, and (ii) reduce the effects of noise present in the raw data. Symbolic aggregate approximation (SAX) is a widely used time series discretization approach [10]. SAX combines multiple time series observations into single averaged values to reduce the dimensionality and then maps these averaged values to characters from an alphabet to reduce the cardinality. A time series T of length n can be converted to a symbolic string \(\widehat{T}=(\widehat{t}_1, \widehat{t}_2,\ldots ,\widehat{t}_p)\) of length \(p=\lfloor \frac{n}{w}\rceil \) such that \(p \ll n\), where \(w \in \mathbb {Z}_{\ge 1}\) represents the dimensionality reduction factor (or, the averaging window size).Footnote 2 First, T is converted to a reduced dimensionality version \(\overline{T} = (\overline{t}_1,\overline{t}_2,\ldots ,\overline{t}_p)\) of length p such that each nonoverlapping sequence of w observations of T is averaged to provide one observation. \(\overline{T}\) is also referred to as a Piecewise Aggregate Approximation (PAA). Mathematically, we can get each \(\overline{t}_i\) using the equation:

Figure 2 shows the reduced dimensionality version of time series instances superimposed on their real-valued counterparts.

Illustration of the PAA version of time series instances superimposed on their real-valued counterparts. The 286 time points long time series have been reduced to 40 time points based on a dimensionality reduction factor \(w=7\). The PAA versions have been stretched (along the x-axis) to emphasize the retention of the overall shape of time series instances (color figure online)

For cardinality reduction, each observation \(\overline{t}_i \in \overline{T}\) is mapped to a character from an alphabet of size \(\alpha \in \mathbb {Z}_{\ge 2}\). A small value of \(\alpha \) leads to large quantization blocks and vice versa. The quantization blocks for each character in the alphabet are chosen based on breakpoints \(B=(\beta _0,\beta _1,\ldots ,\beta _{\alpha })\), where \(\beta _0\) and \(\beta _\alpha \) are defined as \(-\infty \) and \(\infty \), respectively, while the remaining breakpoints are chosen such that area under the \(N(\mu =0,\sigma =1)\) Gaussian curve from \(\beta _i\) to \(\beta _{i+1}\) equals \(\frac{1}{\alpha }\). This ensures an equiprobable selection of every alphabet. A Gaussian curve with \(\mu =0\) and \(\sigma =1\) is used because z-normalized time series instances have the same mean and standard deviation, and they also tend to follow a Gaussian distribution.Footnote 3 Table 1 shows the breakpoints for different values of \(\alpha \). All observations \(\overline{t}_i \in \overline{T}\), which have their values in the range \([\beta _0,\beta _1)\), are mapped to the first character in the alphabet. Similarly, all observations \(\overline{t}_i \in \overline{T}\) with values in the range \([\beta _1,\beta _2)\) are mapped to the second character in the alphabet, and so on. Mathematically, we get each \(\widehat{t}_i\) as follows:

Illustrations of real-valued time series instances and their PAA versions are shown in Figs. 1 and 2, respectively. The symbolic representations of these example time series instances are shown in Table 2. For a detailed analysis of the SAX algorithm and a review of its diverse applications, we refer the reader to the corresponding article [10].

String mining is concerned with the discovery of patterns (substrings) which are characteristic of a string dataset. Given a pair of symbolic datasets \(\mathcal {D}^+\) and \(\mathcal {D}^-\), each representing a positive and a negative class, respectively, extracting patterns which can discriminate between the two datasets is referred to as the emerging substrings mining problem. Formally, the problem of emerging substrings mining is to report all patterns in \(\mathcal {D}^+\) and \(\mathcal {D}^-\) such that each reported pattern occurs in at least \(f^+\) different strings in \(\mathcal {D}^+\), but does not occur in more than \(f^-\) different strings in \(\mathcal {D}^-\), where \(f^+\) and \(f^-\) are the relative support values of the pattern in the respective datasets.

During the last couple of decades, concerted research efforts in the fields of bioinformatics and natural language processing have lead to the development of several string mining algorithms for extracting frequent patterns from symbolic datasets. The first time-optimal algorithms for string mining were proposed by Fischer, Heun, and Kramer [5, 6] and required O(m) time and \(O(m\ \mathrm{log}\ m)\) bits of space for extracting frequent patterns from symbolic datasets, where \(m=\sum _{i=1}^{|\mathcal {M}|}|s_i|\) is the total length of all strings in the dataset concatenated. The authors showed how suffix arrays and longest common prefix (lcp) tables could be used to efficiently solve string mining problems under frequency constraints. Later, an improved version of the algorithm was proposed which constructs the internal data structures in fixed size blocks instead of constructing them all at once, which allows to reuse memory and reduce the overall space requirements to only \(O(m\ \mathrm{log}\ \alpha )\) bits, while increasing the worst-case computational complexity to only \(O(m\ \mathrm{log}^2\ m)\), where \(\alpha \) is the size of the alphabet used [3].

For completeness, a brief summary of the string mining algorithm is presented below. Let \(\mathcal {D}^+ = \{aaba, abaaab\}\) and \(\mathcal {D}^-= \{bbabb,abba\}\) be two string datasets. Let x denote a string of length q consisting of all the strings in \(\mathcal {D}^+\) and \(\mathcal {D}^-\) concatenated, i.e., \(x=aaba \#_1^1 abaaab \#_{|\mathcal {D}^+|}^1 bbabb \#_1^2 abba \#_{|\mathcal {D}^-|}^2\), where each \(\#_l^m\) is a unique symbol not occurring in any dataset and is used for marking the end of strings. A substring of x starting at position i and ending at position j is denoted by x[i, j], which is a concatenation of the symbols \(x[i]x[i+1] \ldots x[j]\). A suffix array is an array of integers which is used to describe the lexicographic order of all suffixes of x, s.t. \(x[SA[k], q] < x[SA[k+1], q]\) for all \(1 \le k < q\). The lcp array contains the lengths of the longest common prefixes of x’s suffixes that are consecutive in lexicographic order, and is defined as \(LCP[i] = lcp(x[SA[i],q], x[SA[i-1],q])\) for all \(1 < i \le q\), and \(LCP[1]=0\). The algorithm starts with the construction of the suffix array (SA) and the lcp array (LCP). Both of these data structures can be constructed in time linear in the length of x. Once the suffix array and the lcp array have been created, the string mining algorithm processes the lcp array to answer any range minimum queries (RMQs) in constant time. Formally, for any two indices i and j the query \(RMC_{LCP}(i,j)\) asks for the position of the minimum element in LCP[i, j], i.e., \(RMQ_{LCP}(i,j) := arg min_{k \in \{i, \ldots , j\}}\{LCP[k]\}\). If the minimum value is not unique, then the smallest index is returned. Table 3 shows the string x, the suffix array SA, and the lcp array LCP. Finally, the algorithm calculates so-called correction terms for establishing the occurrence frequency of the different substrings based on the lcp array values. For further details of the algorithm, we refer the interested reader to the respective papers [3, 5, 6].

3 Mining Strings for Time Series Classification

MiSTiCl is a subsequences-based time series classification algorithm which employs string mining for efficient extraction of discriminative subsequences from the time series data. The subsequences are used as features to create a transformed dataset similar to the shapelet transform approach. The main steps involved are: (i) time series discretization, (ii) frequent pattern extraction, (iii) determining independent and highly discriminative frequent patterns, (iv) creating a transformed dataset using the best K frequent patterns, and finally (v) model induction. Looking at steps (ii) and (iii) in a bit more detail, we aim for patterns that are frequent enough in the positive class and not too frequent in the negative class. From the patterns that pass this filter, we choose the most discriminative ones, and from those with the same discriminative power, we choose the most general ones. Step (ii) makes sure that the patterns are statistically meaningful in the first place. Step (iii) picks from the remaining those that are predictive individually. To reduce their number, the most general ones are actually used in case of equal discriminative power, to obtain high coverage on unseen cases.

Algorithms based on discretized time series data are faced with a significant challenge regarding the information and feature loss due to discretization; therefore, we would like to address a very important design aspect before going into the details of our proposed algorithm. Although SAX can preserve the overall shape of the time series instances, using a predefined window size to reduce the dimensionality of data can still lead to undesired feature loss due to inadvertent splitting of important features. A possible approach to counter this problem is to use those values of the parameters \(\alpha \) and w which result in minimum information loss. Usually, a brute force parameter tuning approach is used to come up with such parameters. This can incur a significant upfront computational cost depending on the number of evaluated parameter combinations and the size of the dataset split used to perform the search; still, the research community has generally opted for this approach. There is, however, another approach, which can be beneficial in two ways, specifically (i) improved model accuracy and (ii) reduced wasted computation. Instead of using a single discretized version of the data created with optimized parameters, creating multiple discretized versions of the data using a number of arbitrary \(\alpha \) and w parameters allows to extract discriminative patterns from some form of multi-view perspective and helps to capture different features at each view.Footnote 4 Using this approach, a feature-based dataset can be created with multi-cardinality and multi-dimensionality properties, which allows to incorporate a diverse set of features in a single feature set that can be exploited by complex classification algorithms to provide better generalization and improved classification accuracy. Moreover, using a linear time string mining algorithm for feature extraction from multiple discretized versions of the data only increases the computational complexity by a constant factor. Therefore, we have opted for the latter approach to tackle the challenge mentioned at the beginning of this paragraph.

3.1 Main algorithm

MiSTiCl is a powerful yet extremely efficient time series classification algorithm which brings together a number of highly effective approaches from the time series and string mining domains. This subsection provides a general overview of the algorithm, while the following subsections provide details of the individual steps. Algorithm 1 lists the steps involved in the creation of feature-based dataset splits using MiSTiCl. Transforming the real-valued time series training and testing data into a feature-based representation requires a set of values for the alphabet (A) and window sizes (W) each, the minimum and maximum frequency values for frequent pattern extraction, and a parameter K limiting the number of used frequent patterns. The first step is the initialization of map data structures for single-view feature sets (Line 1). The next step is the extraction of class labels which are subsequently used for frequent pattern extraction (Line 2). Next, single-view feature sets are created corresponding to each \((\alpha ,w) \in A \times W\) parameter combination (Lines 3–7). The real-valued time series splits are discretized to create symbolic splits corresponding to the current \(\alpha \) and w parameters (Line 4). Next, frequent patterns are extracted from the symbolic training split (Line 5). Now, single-view training and testing feature sets are created using the top K frequent patterns (Lines 6). Finally, the single-view feature sets are combined to create multi-view feature sets (Line 8). The multi-view feature sets can be used for model induction using any off-the-shelf algorithm.

3.2 Extracting frequent patterns

Algorithm 2 lists the steps involved in extracting frequent patterns from a symbolic dataset. The string mining algorithm extracts frequent patterns from binary problems; therefore, a multi-class problem is transformed into multiple binary problems using a one-vs-all approach. For each class \(c \in C\), a pair of datasets is created such that all instances with class label c are assigned to the positive class dataset \(\widehat{P}\), while all remaining instances are assigned to the negative class dataset \(\widehat{N}\) (Lines 3 and 4). The string mining algorithm extracts all the patterns from \(\widehat{P}\) and \(\widehat{N}\) which satisfy the \(f^+\) and \(f^-\) frequency constraints, and provides a list of frequent patterns along with actual occurrence frequencies of each pattern in the positive and negative class datasets (Line 5). Next, the most discriminative frequent patterns for the current class c are selected from the extracted frequent patterns and placed on the map FP (Line 6). Finally, the map containing discriminative frequent patterns for all the classes is returned.

3.3 Selecting discriminative patterns

The discriminative power of a given frequent pattern regarding correctly identifying a specific class of instances can be assessed using different measures, e.g., the \(\chi ^2\) independence test, information gain, etc. The occurrence frequencies of a pattern in the positive and negative class datasets can be used to create a confusion matrix for calculating either the \(\chi ^2\) independence test or the information gain value. The \(\chi ^2\) test assesses whether the observations, expressed as a contingency table, are statistically independent of each other. A higher value of the test statistic indicates greater level of independence and vice versa. Information gain measures the difference between two probability distributions and can be used to assess the association between features. For a binary problem, the information gain value of one indicates perfect class purity, while a value of zero indicates the opposite.

Setting the frequency constraints such that \(0 \lessapprox f^- < f^+ \le 1\) allows to extract frequent patterns which are maximally representative of the positive class. The strictness of frequency constraints directly affects the number of frequent patterns extracted by the string mining algorithm. A very small value for the \(f^+\) constraint can result in the extraction of a huge number of frequent patterns, whereas a large value can result in no frequent patterns being extracted at all. The huge number of frequent patterns extracted with a lower \(f^+\) constraint is attributed to the presence of various prefix- and/or suffix-based variants of base patterns (see Table 4 for examples). The occurrence frequency of a base pattern is always greater than or equal to the occurrence frequency of its variants. In case a variant pattern has the same occurrence frequency as its base pattern, the independence tests rank the two patterns equally; however, duplicate detection can be used to discard the variant pattern. Hence, using reasonably lenient frequency constraints and ranking the extracted patterns using an independence test followed by filtering for duplicates can provide independent, highly discriminative, and diverse frequent patterns.Footnote 5 Algorithm 3 lists the pseudo-code for selecting such frequent patterns. The procedure receives a list of all the frequent patterns along with their corresponding occurrence frequencies, and the instance counts for the positive and negative datasets. The filtered frequent patterns are returned in an ordered associative array of lists, where each slot in the associative array holds all the frequent patterns with the same test statistic and the array is sorted in decreasing order of the independence test statistic. After initializing the array, the procedure iterates over all the patterns provided as input (Lines 2–8). An independence test is used to obtain a value for the test statistic for the current pattern (Line 3).Footnote 6 Next, the list of patterns corresponding to the current test statistic is retrieved from the array OL, and if no such list exists, one is initialized and placed in the location pointed to by the test statistic (Line 5). Next, the current pattern f is compared against all the patterns in fpList to see whether the list already contains its base pattern (Lines 6). If a base pattern is found, f is discarded, otherwise it is inserted in the list (Line 7). Once all the patterns have been evaluated, the ordered array of lists containing the filtered frequent patterns is returned.

3.4 Creating feature sets

Once the discriminative frequent patterns have been identified, the next step is the creation of feature-based datasets. One approach is to create real-valued datasets, such that real-valued subsequences corresponding to the symbolic frequent patterns are used as features in the transformed datasets, while the distance values between said subsequences and the real-valued time series instances are used as feature values. Alternatively, binary-valued datasets can be created using the frequent patterns as features and zeros/ones as feature values representing the absence/presence of a feature in the discretized instances. The latter approach is straightforward and highly efficient because the discretized time series data are already at hand and searching for the presence of frequent patterns in discretized time series instances also requires very little overhead. On the downside, preliminary experiments showed that classification models based on the binary-valued transformation almost always performed inferior to their real-valued counterparts. Algorithm 4 lists the pseudo-code for creating real-valued feature-based datasets. After initializing the training and testing feature-based splits (Line 1), the procedure iterates over all class labels \(c \in C\) to add the top K features for each class (Lines 2–13). The loops in Lines 5 and 6 iterate over the frequent patterns for the current class c (in order of their independence test ranks). For each frequent pattern f, a reverse lookup is performed to extract the best corresponding subsequence from the real-valued training split (Line 7). Next, the procedure iterates over all instances of the training and testing splits to populate the respective feature set columns. Once the top K features have been added for the current class, the procedure breaks out of the feature adding loop and the process repeats itself for the next class.

3.5 Creating the multi-view feature sets

MiSTiCl aims to mitigate the problem of feature loss by combining multiple single-view feature sets, each created with its own \(\alpha \) and w parameter, thus creating a multi-view feature set. A candidate multi-view feature set is created by simply joining multiple single-view feature sets and requires zero computational overhead. Finding the best multi-view feature set, however, involves (i) creating all possible multi-view feature sets, (ii) model induction, and finally (iii) performance testing for each candidate feature set, all of which can incur a significant computational cost. A naïve approach would be to combine all available single-view feature sets; however, this could potentially introduce redundant features in the multi-view feature set. This presents an optimization problem where a minimum number of single-view feature sets should be used to create a multi-view feature set which provides maximum classification accuracy. For a cardinality reduction factor set A and a dimensionality reduction factor set W, the total number of single-view feature sets created by MiSTiCl equals \(|A| \times |W|\). Using the brute force approach to find the best multi-view feature set would require creating and evaluating \(2^{|A|\times |W|}-1\) candidate feature sets and is obviously the most computation intensive option. The set cover problem is a classical question in combinatorics which aims to identify the smallest subset of a collection S whose union equals the universe \(\mathcal {U}\). Finding an exact solution to the set cover problem can also be computationally very demanding; however, using a heuristic approach can significantly reduce the computational impact. Algorithm 5 lists the steps involved in creating the best multi-view feature set splits using a heuristic set cover approach. First, the procedure initializes a descending order associative array \(Map{-}CoveredInsts{-}Params\), and a table (dual-key associative array) S. Next, the set \(\{1,\ldots ,N\}\) is randomly split into two disjoint sets, \(All{-}Train{-}Indices\) and \(All{-}Val{-}Indices\) (Line 2). Each element of the set \(\{1,\ldots ,N\}\) corresponds to an instance in the original training set split, while the two disjoint sets, \(All{-}Train{-}Indices\) and \(All{-}Val{-}Indices\), are used to create subsets of the single-view training feature sets for model training and validation, respectively. The procedure iterates over all the \((\alpha ,w) \in A \times W\) parameter combinations (Lines 3–8) and performs the following steps for each single-view training feature set \(SV_{train}^{\alpha ,w}\).

Train a classification model CM using only those instances from \(SV_{train}^{\alpha ,w}\) whose indices occur in the \(All{-}Train{-}Indices\) set

Test the classification model CM using only those instances of \(SV_{train}^{\alpha ,w}\) whose indices occur in the \(All{-}Valid{-}Indices\) set and save the indices of correctly classified instances in the table S using \(\alpha \) and w as index values.

Add the current \((\alpha , w)\) pair in the associative array using the size of correctly classified validation instances as key

Next, two empty sets are initialized, one for keeping track of all the covered validation set instances \(Covered{-}Val{-}Indices\) and the other for the best found parameters BestParams. Next, the procedure iterates over the associative array containing the \((\alpha ,w)\) pairs (Lines 11–19). If the difference between the set \(S^{\alpha ,w}\) and all covered instances \(Covered{-}Val{-}Indices\) is not empty, then (i) the current \((\alpha ,w)\) pair is added to the set of best parameters, and (ii) the set of covered indices is updated with the union of \(S^{\alpha ,w}\) and \(Covered{-}Val{-}Indices\). If the difference between the sets \(All{-}Val{-}Indices\) and \(Covered{-}Val{-}Indices\) is empty, i.e., all validation instances have been covered, or the number of parameter pairs in the BestParams set is equal to the maximum allowed parameter pairs, then the loop is terminated. Finally, the single-view training and testing feature sets corresponding to each \((\alpha ,w)\) pair in the set of best parameters BestParams are joined together to form the multi-view training and testing feature sets, respectively (Lines 21–24).

3.6 Complexity analysis

The asymptotic time complexity of the MiSTiCl algorithm can be calculated by aggregating the time complexities of the individual steps. MiSTiCl creates multiple single-view feature sets, and the creation of each feature set contributes equally toward the overall complexity; therefore, we shall first consider a single iteration for an arbitrary combination of \(\alpha \) and w. The time taken by SAX to discretize a dataset is on the order of O(Nn). The time taken by the string mining algorithm for frequent pattern extraction is on the order of O(m). Rewriting m in terms of N and n gives \(m=N\frac{n}{w}\); therefore, the time complexity of string mining is on the order of \(O(N \frac{n}{w})\). To calculate the time complexity of filtering the frequent patterns for obtaining highly independent patterns, we first need to approximate the number of extracted frequent patterns. For a given \(\alpha \) and w, the number of all possible frequent patterns of all lengths is approximately equal to \(\alpha ^2 + \alpha ^3 + \cdots + \alpha ^p\), which can be simplified using a geometric progression as \(\frac{1-\alpha ^{(p+1)}}{1-\alpha }\), where \(p=\lfloor \frac{n}{w}\rceil \). Since all the terms in this expression are constant, we can approximate the time complexity of filtering the frequent patterns to be constant. Creating a feature set requires adding K features for each class \(c \in C\). For each feature, N feature values have to be calculated, where each feature value calculation takes O(ns) time, where s is the length of a subsequence and \(s \ll n\). Since K and |C| are constant and much smaller than N and n, the time required for creating a feature set is on the order of O(Nns). Aggregating the time complexities of the individual steps, the overall time complexity of creating a feature set for a given \(\alpha \) and w parameter combination is on the order of \(O(Nn)+O(N\frac{n}{w})+O(1)+O(Nns) \approx O(Nns)\). Creating \(|A| \times |W|\) many feature sets increases the complexity by a factor of \(|A| \times |W|\); therefore, the asymptotic time complexity of the MiSTiCl algorithm is on the order of O(Nns). Comparing the time complexity of MiSTiCl with the time complexity of some other state-of-the-art algorithms indicates that MiSTiCl is the fastest symbolic representation-based time series classification algorithm. The reported time complexity for the BoP, SAX-VSM, BOSS, and BOSS VS algorithms is on the order of \(O(Nn^3)\), \(O(Nn^3)\), \(O(N^2n^2)\), and \(O(Nn^\frac{3}{2})\), respectively [14].

4 Experiments

We have carried out an extensive set of experiments to compare different symbolic representation-based time series classification algorithms namely BoP, SAX-VSM, BOSS, and MiSTiCl. The main goal of our experimental evaluation is twofold. First, we want to ascertain whether the classification accuracy of MiSTiCl is on par with that of state-of-the-art symbolic representation-based approaches for time series classification, and second, whether the theoretical computational gains can be achieved in practice as well and the algorithm can perform at speeds which allows to handle very large scale time series classification problems.

The UEA Time Series Repository provides Weka-based implementations of a number of time series algorithms including BoP, SAX-VSM, and BOSS.Footnote 7 We have also implemented the MiSTiCl algorithm using the framework provided by the UEA Time Series Repository so that any platform or implementation bias can be minimized.Footnote 8 The different algorithms were evaluated using 85 real-world and synthetic time series datasets provided by the UCR Time Series Archive.Footnote 9 For each dataset, 100 random shuffles were created such that the class distribution and total number of instances in the training/testing splits of each shuffle were kept the same as in the original splits. The random number seeds used to create the different shuffles were kept the same when creating the shuffles for different algorithms. Each classification algorithm was then used to evaluate all 100 shuffles of each dataset to obtain a comprehensive set of measurements regarding classification accuracy and runtime requirements.

Parameter settings play a critical role in getting optimal results for almost all machine learning algorithms. Therefore, each algorithm was provided a predefined set of starting parameters and the algorithms performed parameter optimization to choose the best parameters for each run, i.e., each shuffle of each dataset was evaluated with optimized parameters. This also allows to account for the time required for parameter optimization. We have also taken great care toward using a consistent set of parameters, wherever possible. In this regard, the SAX-based MiSTiCl, BoP, and SAX-VSM algorithms were provided default cardinality levels of \(\{2,3,\ldots ,8\}\) and dimensionality reduction levels of \(\{2,3,\ldots ,6\}\).Footnote 10 MiSTiCl also requires a minimum positive frequency \(f^+\), a maximum negative frequency \(f^-\), and a parameter K for limiting the per class frequent pattern count. In all the MiSTiCl experiments, both \(f^+\) and \(f^-\) were kept fixed at 0.2 and 0.1, respectively, while the parameter K was set using optimization from \(\{1,2,4\}\). The BoP and SAX-VSM implementations provided by the UEA Repository require an interval count per window in addition to the cardinality and dimensionality level parameters, which was also determined during parameter optimization. The BOSS algorithm is based on a different discretization technique called Symbolic Fourier Approximation (SFA). We used the parameters already specified in the UEA implementation assuming the best set of possible parameters is provided. The alphabet size (or cardinality level) is fixed at four characters, while the word lengths are selected using parameter optimization from the possible values of \(\{8,10,12,14,16\}\). Compared to the 35 parameter combinations evaluated for BoP, SAX-VSM, and MiSTiCl, the BOSS algorithm is only evaluated using five combinations; therefore, it already has an advantage in terms of the required runtime.

MiSTiCl transforms a time series dataset into a feature-based representation which creates a generic classification problem and allows to utilize any off-the-shelf classification algorithm. Since the transformed dataset incorporates a number of diverse features, ensemble techniques can be extremely effective for creating accurate classification models. Therefore, we employed random forests (RF) [1], extremely randomized trees (ET) [8], and AdaBoost.M1 (AB) [7] for creating classification models using a maximum of 1000 trees per ensemble, while keeping all other parameters unchanged.

5 Results

This section provides an empirical analysis of the classification accuracy and runtime performance of MiSTiCl, BoP, SAX-VSM, and BOSS based on averaged values of the statistics collected for each dataset. We have also included the classification accuracy results for ST to establish a baseline comparison with a real-valued shapelet-based classification algorithm.Footnote 11 For statistical comparison of different algorithms, we employ the Friedman test followed by Nemenyi post hoc test based on average ranks attained by the different algorithms and show the comparisons as critical difference (CD) diagrams [2].

MiSTiCl can employ either the \(\chi ^2\) independence test or the information gain for selecting independent and discriminative frequent patterns; therefore, we carried out all the MiSTiCl experiments using both these methods. Figure 3a, b shows the critical difference diagrams for the different MiSTiCl variants based on classification accuracy and total runtime, respectively. We can see that using the \(\chi ^2\) test to determine pattern independence yields better overall results in terms of both classification accuracy and runtime; therefore, the following analysis will be based on the results obtained using the \(\chi ^2\) independence test.

Average ranks for different MiSTiCl variants based on a classification accuracy and b runtime. CS and IG represent \(\chi ^2\) independence test and information gain-based pattern selection, respectively, while ET, AB, and RF represent the three ensemble classifiers. MiSTiCl variants which are not significantly different (at \(p=0.05\)) are connected. The critical difference (CD) for significantly different algorithms is 0.81

Figure 4 presents a comparison of MiSTiCl against BoP, SAX-VSM, and BOSS, regarding classification accuracy and runtime. The x- and y-axes show the total time required for training and testing a classification model using MiSTiCl and the compared algorithm, respectively. Each evaluated dataset is represented by a marker. Green markers indicate that MiSTiCl provides better accuracy, whereas red markers indicate otherwise. The marker size corresponds to the absolute difference between classification accuracies of the compared algorithms, i.e., larger markers indicate a greater difference between the accuracy of the two algorithms. For each dataset, we also performed Wilcoxon’s signed-ranks test to establish whether one algorithm performs significantly better or worse compared to the other. In this regard, an upward-facing triangle indicates that MiSTiCl is significantly better, a downward-facing triangle indicates that the other algorithm is significantly better while a circle indicates that the difference was not significant. We can see that MiSTiCl consistently provides an improvement of at least an order of magnitude with respect to runtime, while it is on par with BOSS and dominates BoP and SAX-VSM regarding classification accuracy.

Comparing MiSTiCl against BoP, SAX-VSM, and BOSS regarding runtime and classification accuracy. Each marker represents one dataset. The x- and y-axes show the runtime (training + testing) in seconds. Markers below the dotted line indicate that MiSTiCl is slower than the other algorithm. A green marker indicates that MiSTiCl provides better classification accuracy while red indicates otherwise. Marker sizes correspond to the absolute difference between the mean classification accuracy provided by the competing algorithms for a particular dataset, i.e., larger markers indicate greater difference in classification accuracy of the two algorithms. An upward-facing triangle indicates that a significant difference was found in favor of MiSTiCl, while a downward-facing triangle shows a significant difference in favor of the other algorithm. Bubbles indicate there was no significance determined (color figure online)

Figure 5a, b shows the average ranks of the different algorithms based on classification accuracy and runtime, respectively. For classification accuracy, MiSTiCl is placed on par with ST and BOSS with average ranks of 2.92, 3.25, and 3.68 for the ET-, AB-, and RF-based variants, respectively, while SAX-VSM and BoP are significantly different compared to the other algorithms. For the runtime, MiSTiCl easily achieves the top three spots with average ranks of 1.33, 2.13, and 3.09 for ET-, RF-, and AB-based variants, respectively, making it significantly faster than any of its competitors.

A pairwise win/tie/loss analysis was also performed for a head-to-head comparison between MiSTiCl and the other algorithms. A win was registered if the difference between the classification accuracy achieved by MiSTiCl and the competing algorithm was greater than \(+\) 2%, while a loss was registered if the difference was less than − 2%, while a tie was registered otherwise. The numbers of wins/ties/losses are reported in Table 5. When comparing the different MiSTiCl variants with each other, we see that MiSTiCl-ET provides better classification accuracy for most of the datasets.

A breakdown of the time required for training and testing a classification model using MiSTiCl-ET is provided in Fig. 6. This includes the time required for (i) discretizing the training and testing sets, (ii) extracting frequent patterns from the discretized training data, (iii) creating transformed datasets, (iv) parameter optimization, and (v) model training and testing. The time required for each phase of the algorithm is converted to a percentage of the total time required, and box plots are created using the data for all evaluated datasets. We can see that the median time required for data discretization and transformation, parameter optimization, and model training/testing is less than 10% of the total time required to process a given dataset.

All the above observations regarding classification accuracy and runtime confirm that MiSTiCl is a faster algorithm for providing classification accuracy which is on par with existing algorithms, while incorporating extremely fast parameter optimization. Tables 6 and 7 provide the results used for the analysis performed for the classification accuracy and runtime, respectively.

5.1 Results using a conservative parameter set

We also carried out experiments for evaluating the classification and runtime performance of MiSTiCl algorithm using a conservative parameter set. In these experiments, we provided MiSTiCl with cardinality and dimensionality reduction levels of only \(\{3, 4, 5, 6\}\) and \(\{2, 3, 4, 5\}\), respectively. This reduces the number of evaluated parameter combinations to only 16 as compared to the 35 parameter combinations evaluated in the full set of experiments. This results in a further reduction in the total runtime for MiSTiCl with a slight reduction in the classification accuracy as well; however, the overall performance is generally the same. Figure 7 shows the average ranks of the different algorithms based on classification accuracy when using a conservative set of parameters for the MiSTiCl algorithm.

6 Conclusion

In this paper, we proposed MiSTiCl, a time series classification approach using a linear time string mining algorithm for feature extraction. Using the string mining algorithm for feature extraction allowed us to drastically reduce the runtime of the whole classification task compared to many other state-of-the-art approaches. On average, MiSTiCl achieved an order of magnitude speedup over the most accurate state-of-the-art algorithm, BOSS, while still being robust enough to provide a classification accuracy that is statistically not discernible from it. We used a multi-cardinality and multi-dimensionality approach to incorporate a multi-view aspect to the discretized time series classification algorithm. One alternative to using this multi-cardinality and multi-dimensionality approach is to use a form of stacking (or meta-ensemble approach) such that an ensemble of base models is created where each single-view feature set is used to create the base models. However, stacking usually leads to a more complex classification model.

MiSTiCl can also be extended in a couple of ways: Instead of creating the feature-based dataset using only the SAX-based frequent patterns, we can incorporate features extracted from different time series discretization algorithms. One such algorithm is the Symbolic Fourier Approximation (SFA) used in BOSS and BOSS VS. Incorporating a frequency-based symbolic representation could potentially provide a dual feature-based dataset. The local shape-based features can be extracted from SAX representations, while frequency components dominating the whole shape of the time series can be obtained from a frequency decomposition-based representation. This could provide a local and global view to a single classification algorithm, and the efficiency of the string mining-based feature extraction process ensures that the approach would scale very well for a variety of problems with large and complex data. In the future, we intend to adapt our algorithm for multi-variate time series problems. Another avenue to explore would be the application to online or streaming time series problems. A multi-variate streaming time series classification algorithm based on string mining could potentially have a huge impact on time series classification for sensor data from the Internet of things (IoT).

Notes

Throughout the text, we refer to real-valued time series segments as “subsequences” and the discretized/symbolic segments as “patterns.”

The presented mathematical notation is for the simple case of integer values of p; later SAX refinements enable handling non-integer window sizes as well.

Research suggests that a large number of time series datasets follow the Gaussian distribution. For the minority of datasets which do not follow this assumption, selecting the breakpoints using the Gaussian curve can deteriorate the efficiency of SAX; however, the “correctness of the algorithm is unaffected” [10].

Usually, multi-view learning refers to learning with different sets of features of vectorial data; however, here we use the term for multiple representations of a time series data originating from different parameterizations.

1. This criterion and procedure are not to be confused with closed or open/free patterns [16]. 2. Note that there can be two patterns p and q, with one pattern p being more general than the other, \(p \prec q\), both having the same value of \(\chi ^2\) (\(\chi ^2(p) = \chi ^2(q)\)), but yet occurring in different sets of positive and negative examples. However, this should be expected to be a rather infrequent case. The overall filtering procedure of patterns just makes sure that the patterns are frequent enough in the positives, infrequent enough in the negatives, highly discriminative and, given the same discriminative power, as general as possible.

A discussion about calculation of the \(\chi ^2\) test statistic and the information gain is provided in “Appendix.”

Our implementation is available from https://github.com/atifraza/MiSTiCl.

Following the parameter settings provided by the UEA Time Series Repository.

The results for ST have been taken from the UEA Time Series Repository.

References

Breiman L (2001) Random forests. Mach Learn 45(1):5–32. https://doi.org/10.1023/A:1010933404324

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Dhaliwal J, Puglisi SJ, Turpin A (2012) Practical efficient string mining. IEEE Trans Knowl Data Eng 24(4):735–744. https://doi.org/10.1109/TKDE.2010.242

Ding H, Trajcevski G, Scheuermann P, Wang X, Keogh E (2008) Querying and mining of time series data. Proc VLDB Endow 1(2):1542–1552

Fischer J, Heun V, Kramer S (2005) Fast frequent string mining using suffix arrays. In: 5th International conference on data mining, IEEE, ICDM ’05, pp 609–612. https://doi.org/10.1109/ICDM.2005.62

Fischer J, Heun V, Kramer S (2006) Optimal string mining under frequency constraints. In: Knowledge discovery in databases, PKDD 2006, lecture notes in computer science, vol 4213. Springer, Berlin, pp 139–150. https://doi.org/10.1007/11871637_17

Freund Y (1995) Boosting a Weak Learning Algorithm by Majority. Inf Comput 121(2):256–285. https://doi.org/10.1006/inco.1995.1136

Geurts P, Ernst D, Wehenkel L (2006) Extremely randomized trees. Mach Learn 63(1):3–42. https://doi.org/10.1007/s10994-006-6226-1

Hills J, Lines J, Baranauskas E, Mapp J, Bagnall A (2014) Classification of time series by shapelet transformation. Data Min Knowl Discov 28(4):851–881. https://doi.org/10.1007/s10618-013-0322-1

Lin J, Keogh E, Wei L, Lonardi S (2007) Experiencing SAX: a novel symbolic representation of time series. Data Min Knowl Discov 15(2):107–144. https://doi.org/10.1007/s10618-007-0064-z

Lin J, Khade R, Li Y (2012) Rotation-invariant similarity in time series using bag-of-patterns representation. J Intell Inf Syst 39(2):287–315. https://doi.org/10.1007/s10844-012-0196-5

Rakthanmanon T, Keogh E (2013) Fast shapelets: a scalable algorithm for discovering time series shapelets. In: Proceedings of the 2013 SIAM international conference on data mining, SDM, Society for Industrial and Applied Mathematics, pp 668–676. https://doi.org/10.1137/1.9781611972832.74

Schäfer P (2015) The BOSS is concerned with time series classification in the presence of noise. Data Min Knowl Discov 29(6):1505–1530. https://doi.org/10.1007/s10618-014-0377-7

Schäfer P (2016) Scalable time series classification. Data Min Knowl Discov 30(5):1273–1298. https://doi.org/10.1007/s10618-015-0441-y

Senin P, Malinchik S (2013) SAX-VSM: interpretable time series classification using SAX and vector space model. In: 13th International conference on data mining, IEEE, ICDM ’13, pp 1175–1180. https://doi.org/10.1109/ICDM.2013.52

Toivonen H (2017) Frequent pattern. In: Sammut C, Webb GI (eds) Encyclopedia of machine learning and data mining. Springer, Boston, pp 524–529. https://doi.org/10.1007/978-1-4899-7687-1_318

Ye L, Keogh E (2011) Time series shapelets: a novel technique that allows accurate, interpretable and fast classification. Data Min Knowl Discov 22(1):149–182. https://doi.org/10.1007/s10618-010-0179-5

Acknowledgements

We are grateful to the reviewers for their comments and suggestions which helped in improving the quality of this paper. The first author was supported by a scholarship from the Higher Education Commission (HEC), Pakistan, and the German Academic Exchange Service (DAAD), Germany.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Calculating independence test statistics

Appendix: Calculating independence test statistics

Section 3.3 provides the algorithmic details for selecting frequent patterns based on their discriminative power. \(\chi ^2\) independence test or information gain values can be used to determine the discriminative power of a given pattern and find out how effectively it can identify the instances of a given class. This section explains the procedure of calculating these statistics based on the occurrence frequency of a pattern in the positive and negative class dataset splits. In this regard, the notation used for the following discussion is given below.

Symbol | Representation |

|---|---|

\(\widehat{P}\) | Positive class dataset |

\(\widehat{N}\) | Negative class dataset |

\(N_{\widehat{P}}\) | Number of instances in \(\widehat{P}\) |

\(N_{\widehat{N}}\) | Number of instances in \(\widehat{N}\) |

p | Frequent pattern |

\(f_{\widehat{P}}\) | Occurrence frequency of p in \(\widehat{P}\) |

\(f_{\widehat{N}}\) | Occurrence frequency of p in \(\widehat{N}\) |

1.1 Calculating the \(\chi ^2\) test statistic

The \(\chi ^2\) test statistic is calculated based on observed (\(O_{ij}\)) and expected (\(E_{ij}\)) values for the given categorical variables. The formula for calculating the \(\chi ^2\) statistic is given below.

Observed values (\(O_{ij}\)) correspond to the number of instances observed as belonging to a certain categorical variable. In our case, it is the number of instances labeled as belonging to the positive or negative class given a particular frequent pattern. This can be determined using the instance counts of the positive and negative class datasets and occurrence frequency values of the given pattern in the respective dataset splits. Based on these values, a contingency table can be created as follows.

Dataset splits | |||

|---|---|---|---|

Positive, \(\widehat{P}\) | Negative, \(\widehat{N}\) | ||

\( With (p)\) | \(O_{11}=\lfloor f_{\widehat{P}} \times N_{\widehat{P}} \rceil \) | \(O_{12}=\lfloor f_{\widehat{N}} \times N_{\widehat{N}} \rceil \) | \(RSum_{1.} = O_{11}+O_{12}\) |

\( WithOut (p)\) | \(O_{21}=N_{\widehat{P}}-O_{11}\) | \(O_{22}=N_{\widehat{N}}-O_{12}\) | \(RSum_{2.} = O_{21}+O_{22}\) |

\(CSum_{.1}=O_{11}+O_{21}\) | \(CSum_{.2}=O_{12}+O_{22}\) | \(n=\sum _{i,j} O_{ij}\) | |

The rows of this contingency table correspond to the number of instances containing or not containing the given pattern p, while the columns correspond to the positive and negative dataset splits, respectively. The combined total of row and column sums equals the total number of instances in the positive and negative dataset splits. Finally, the expected values (\(E_{ij}\)) are calculated using the following formula.

The \(\chi ^2\) test statistic determines whether any relationship between the positive and negative dataset splits exists given the frequent pattern. If the pattern occurs in both datasets, then the \(\chi ^2\) value will be close to zero which signifies a relationship exists between the two dataset splits. We can order the frequent patterns based on their \(\chi ^2\) statistic and select the ones for which the dataset splits do not exhibit any mutual relationship.

1.2 Calculating the information gain value

Entropy (H) is a measure for establishing whether a dataset has a uniform or varying distribution in terms of the different classes of instances. Given a dataset with positive and negative class instances, the entropy of the dataset can be calculated using the following formula.

If a pattern p occurs frequently in either class of instances in the dataset, we can create positive and negative class subsets based on the presence or absence of this pattern in each of the instances. The entropy of these subsets can then be calculated using the following equations.

Using the entropy values of the source dataset and the positive and negative subsets, we can calculate the information gain value using the following formula.

If the frequent pattern effectively distinguishes between the two classes, the positive and negative class subsets will have very few or no instances of the other class resulting in a smaller value of entropy for the two subsets. This in turn will cause a higher information gain value indicating that the pattern is a good candidate for distinguishing between the two classes of instances. If, however, the converse is true, then the pattern is not a good candidate. This way the candidates can be selected on the basis of their discriminative power.

Rights and permissions

About this article

Cite this article

Raza, A., Kramer, S. Accelerating pattern-based time series classification: a linear time and space string mining approach. Knowl Inf Syst 62, 1113–1141 (2020). https://doi.org/10.1007/s10115-019-01378-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10115-019-01378-7