Abstract

Optimization algorithms can see their local convergence rates deteriorate when the Hessian at the optimum is singular. These singularities are inescapable when the optima are non-isolated. Yet, under the right circumstances, several algorithms preserve their favorable rates even when optima form a continuum (e.g., due to over-parameterization). This has been explained under various structural assumptions, including the Polyak–Łojasiewicz condition, Quadratic Growth and the Error Bound. We show that, for cost functions which are twice continuously differentiable (\(\textrm{C}^2\)), those three (local) properties are equivalent. Moreover, we show they are equivalent to the Morse–Bott property, that is, local minima form differentiable submanifolds, and the Hessian of the cost function is positive definite along its normal directions. We leverage this insight to improve local convergence guarantees for safe-guarded Newton-type methods under any (hence all) of the above assumptions. First, for adaptive cubic regularization, we secure quadratic convergence even with inexact subproblem solvers. Second, for trust-region methods, we argue capture can fail with an exact subproblem solver, then proceed to show linear convergence with an inexact one (Cauchy steps).


1 Introduction

We consider local convergence of algorithms for unconstrained optimization problems of the form

\[\min _{x \in \mathcal {M}} f(x),\]

where \(\mathcal {M}\) is a Riemannian manifold (see Footnote 1) and \(f:\mathcal {M}\rightarrow {\mathbb R}\) is at least \({\textrm{C}^{1}}\) (continuously differentiable).

When \(f\) is \({\textrm{C}^{2}}\) (twice continuously differentiable), the most classical local convergence results ensure favorable rates for standard algorithms provided they converge to a non-singular local minimum \(\bar{x}\), that is, one such that the Hessian \(\nabla ^2f(\bar{x})\) is positive definite. And indeed, those rates can degrade if the Hessian is merely positive semidefinite. For example, with \(f(x) = x^4\), gradient descent (with an appropriate step-size) converges only sublinearly to the minimum, and Newton’s method converges only linearly.
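The two rates quoted for \(f(x) = x^4\) are easy to observe numerically. The following is a minimal sketch, not taken from the paper: the starting point 1.0, the step size 0.1, the iteration counts and the function names are illustrative assumptions. For this particular function, a Newton step reduces to \(x \mapsto \frac{2}{3}x\), whereas a gradient step multiplies \(x\) by \(1 - 4\alpha x^2\), a factor that tends to 1 as \(x \rightarrow 0\).

```python
def gradient_descent(x0, step, iters):
    """Iterates of x <- x - step * f'(x) for f(x) = x**4."""
    xs = [x0]
    for _ in range(iters):
        x = xs[-1]
        xs.append(x - step * 4.0 * x**3)
    return xs


def newton(x0, iters):
    """Iterates of x <- x - f'(x) / f''(x) for f(x) = x**4 (requires x != 0)."""
    xs = [x0]
    for _ in range(iters):
        x = xs[-1]
        xs.append(x - (4.0 * x**3) / (12.0 * x**2))
    return xs


if __name__ == "__main__":
    nt = newton(1.0, 20)
    gd = gradient_descent(1.0, 0.1, 1000)
    # Newton: the contraction factor x_{k+1} / x_k stays at 2/3 (linear rate).
    print("Newton contraction factor:", nt[-1] / nt[-2])
    # Gradient descent: the factor 1 - 4 * step * x_k**2 creeps up to 1 (sublinear rate).
    print("GD contraction factor:    ", gd[-1] / gd[-2])
```

The Newton run is kept short on purpose: after many more iterations the powers of \(x\) underflow in floating point and the quotient is no longer defined.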

This is problematic if the minimizers of \(f\) are not isolated, because in that case the Hessian cannot be positive definite there. This situation arises commonly in applications for structural reasons such as over-parameterization, redundant parameterizations and symmetry—see Sect. 1.2.

Notwithstanding, algorithms often exhibit good local behavior near non-isolated minimizers. As early as the 1960s, this prompted investigations into properties that such cost functions may satisfy and which lead to fast local rates despite singular Hessians. We study four such properties.

In all that follows, we are concerned with the behavior of algorithms in the vicinity of local minima of \(f\). Since we do not assume that they are isolated, rather than selecting one local minimum \(\bar{x}\), we select all local minima of the same value. Formally, given a local minimum \(\bar{x}\), let

\[\mathcal {S}= \{x \in \mathcal {M}: x \text { is a local minimum of } f \text { and } f(x) = f_{\mathcal {S}}\} \tag{1}\]

denote the set of all local minima with a given value \(f_{\mathcal {S}}= f(\bar{x})\).

For \(f\) of class \({\textrm{C}^{2}}\), it is particularly favorable if \(\mathcal {S}\) is a differentiable submanifold of \(\mathcal {M}\) around \(\bar{x}\). In that case the set \(\mathcal {S}\) has a tangent space \(\textrm{T}_{\bar{x}}\mathcal {S}\) at \(\bar{x}\). It is easy to see that each vector \(v \in \textrm{T}_{\bar{x}}\mathcal {S}\) must be in the kernel of the Hessian \(\nabla ^2f\) at \(\bar{x}\) because the gradient \(\nabla f\) is constant (zero) on \(\mathcal {S}\). Thus, \(\textrm{T}_{\bar{x}}\mathcal {S}\subseteq \ker \nabla ^2f(\bar{x})\). Since \(\bar{x}\) is a local minimum, we also know that \(\nabla ^2f(\bar{x})\) is positive semidefinite. Then, in the spirit of asking the Hessian to be “as positive definite as possible”, the best we can hope for is that the kernel of \(\nabla ^2f(\bar{x})\) is exactly \(\textrm{T}_{\bar{x}}\mathcal {S}\), in which case the restriction of \(\nabla ^2f(\bar{x})\) to the normal space \(\textrm{N}_{\bar{x}}\mathcal {S}\), that is, the orthogonal complement of \(\textrm{T}_{\bar{x}}\mathcal {S}\) in \(\textrm{T}_{\bar{x}}\mathcal {M}\), is positive definite.

We call this the Morse–Bott property (MB), and we write \(\mu \)-MB to indicate that the positive eigenvalues are at least \(\mu > 0\). The definition requires \(f\) to be twice differentiable.

Definition 1.1

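Let \(\bar{x}\) be a local minimum of \(f\) with associated set \(\mathcal {S}\) (1). We say \(f\) satisfies the Morse–Bott property at \(\bar{x}\) if

\[\mathcal {S}\text { is a differentiable submanifold of } \mathcal {M}\text { around } \bar{x}\quad \text {and} \quad \ker \nabla ^2f(\bar{x}) = \textrm{T}_{\bar{x}}\mathcal {S}. \tag{MB}\]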

If also \(\langle v, \nabla ^2f(\bar{x})[v]\rangle \ge \mu \Vert v\Vert ^2\) for some \(\mu > 0\) and all \(v \in \textrm{N}_{\bar{x}}\mathcal {S}\) then we say \(f\) satisfies \(\mu \)-MB at \(\bar{x}\).

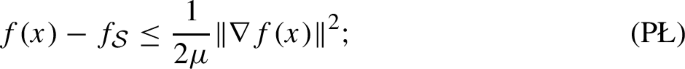

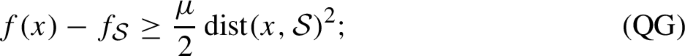

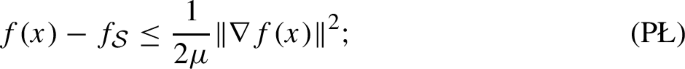

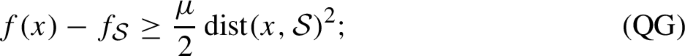

At first, a reasonable objection to the above is that one may not want to assume that \(\mathcal {S}\) is a submanifold. Perhaps for that reason, it is far more common to encounter other assumptions in the optimization literature. We focus on three: Polyak–Łojasiewicz (PŁ), error bound (EB) and quadratic growth (QG). The first goes back to the 1960s [81]. The latter two go back at least to the 1990s [22, 70].

Below, the first two definitions (as stated) require \(f\) to be differentiable. The distance to a set is defined as usual: \({{\,\textrm{dist}\,}}(x, \mathcal {S}) = \inf _{y\in \mathcal {S}} {{\,\textrm{dist}\,}}(x, y)\) where \({{\,\textrm{dist}\,}}(x, y)\) is the Riemannian distance on \(\mathcal {M}\).

Definition 1.2

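Let \(\bar{x}\) be a local minimum of \(f\) with associated set \(\mathcal {S}\) (1). We say \(f\) satisfies

- the Polyak–Łojasiewicz condition with constant \(\mu > 0\) (\(\mu \)-PŁ) around \(\bar{x}\) if

  \[f(x) - f_{\mathcal {S}}\le \frac{1}{2\mu } \Vert \nabla f(x)\Vert ^2, \tag{PŁ}\]

- the error bound with constant \(\mu > 0\) (\(\mu \)-EB) around \(\bar{x}\) if

  \[\Vert \nabla f(x)\Vert \ge \mu \, \textrm{dist}(x, \mathcal {S}), \tag{EB}\]

- quadratic growth with constant \(\mu > 0\) (\(\mu \)-QG) around \(\bar{x}\) if

  \[f(x) - f_{\mathcal {S}}\ge \frac{\mu }{2} \, \textrm{dist}(x, \mathcal {S})^2, \tag{QG}\]

all understood to hold for all x in some neighborhood of \(\bar{x}\).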

Note that all the definitions are local around a point \(\bar{x}\). Two observations are immediate: (i) QG implies that \(\bar{x}\) is a strict minimum relative to \(\mathcal {S}\) (1), meaning that \(f(x) > f_{\mathcal {S}}\) for all \(x \notin \mathcal {S}\) close enough to \(\bar{x}\), and (ii) both EB and PŁ imply that critical points and \(\mathcal {S}\) coincide around \(\bar{x}\). Thus, both EB and PŁ rule out existence of saddle points near \(\bar{x}\). By extension, we say that \(f\) satisfies any of these four properties around a set of local minima if it holds around each point of that set.
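As a numerical illustration of the three inequalities, consider the hypothetical test function \(f(x, y) = \frac{1}{2}(x^2 + y^2 - 1)^2\) on \({\mathbb R}^2\), whose minimizers form the unit circle with \(f_{\mathcal {S}}= 0\). This example, the sampling scheme and the name `worst_constants` are assumptions made for this sketch and do not come from the paper. The code estimates the largest constants for which \(f(x) - f_{\mathcal {S}}\le \frac{1}{2\mu }\Vert \nabla f(x)\Vert ^2\) (PŁ), \(\Vert \nabla f(x)\Vert \ge \mu \, \textrm{dist}(x, \mathcal {S})\) (EB) and \(f(x) - f_{\mathcal {S}}\ge \frac{\mu }{2}\textrm{dist}(x, \mathcal {S})^2\) (QG) hold on an annulus of a given half-width around the circle.

```python
import math
import random


def f(x, y):
    """Test function whose set of minimizers is the unit circle."""
    return 0.5 * (x * x + y * y - 1.0) ** 2


def grad_norm(x, y):
    """Norm of the gradient 2 * (x^2 + y^2 - 1) * (x, y)."""
    r2 = x * x + y * y
    return 2.0 * abs(r2 - 1.0) * math.sqrt(r2)


def dist_to_circle(x, y):
    """Euclidean distance from (x, y) to the unit circle."""
    return abs(math.hypot(x, y) - 1.0)


def worst_constants(half_width, samples=10000, seed=0):
    """Smallest observed PL, EB and QG ratios over random points in the annulus."""
    rng = random.Random(seed)
    pl = eb = qg = math.inf
    for _ in range(samples):
        r = 1.0 + rng.uniform(-half_width, half_width)
        t = rng.uniform(0.0, 2.0 * math.pi)
        x, y = r * math.cos(t), r * math.sin(t)
        d = dist_to_circle(x, y)
        if d < 1e-9:  # skip points numerically on the circle
            continue
        g, val = grad_norm(x, y), f(x, y)
        pl = min(pl, g * g / (2.0 * val))   # largest mu allowed by PL at this point
        eb = min(eb, g / d)                 # largest mu allowed by EB at this point
        qg = min(qg, 2.0 * val / (d * d))   # largest mu allowed by QG at this point
    return pl, eb, qg


if __name__ == "__main__":
    for half_width in (0.1, 0.01, 0.001):
        print(half_width, worst_constants(half_width))
```

As the annulus shrinks, the three estimates all increase toward 4, which is the eigenvalue of \(\nabla ^2f\) in the normal direction at every point of the circle. This is the kind of behavior the equivalence discussed in this paper predicts: the constants agree up to an arbitrarily small loss once the neighborhood is small enough.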

1.1 Contributions

A number of relationships between PŁ, EB and QG are well known already for \(f\) of class \({\textrm{C}^{1}}\): see Table 1 and Sect. 1.3. Our first main contribution in this paper is to show that:

If f is of class \({\textrm{C}^{2}}\), then PŁ, EB, QG and MB are essentially equivalent.

Here, “essentially” means that the constant \(\mu \) may degrade (arbitrarily little) and the neighborhoods where properties hold may shrink. Notably, we show that if f is \({\textrm{C}^{p}}\) with \(p \ge 2\), then PŁ, EB and QG all imply that the set of local minima \(\mathcal {S}\) is locally smooth (at least \({\textrm{C}^{p - 1}}\)). We also give counter-examples when \(f\) is only \({\textrm{C}^{1}}\). Explicitly, in Sect. 2 we summarize known results for \(f\) of class \({\textrm{C}^{1}}\) and we contribute the following:

- Theorem 2.16 shows PŁ around \(\bar{x}\) implies \(\mathcal {S}\) is a \({\textrm{C}^{p - 1}}\) submanifold around \(\bar{x}\) if f is \({\textrm{C}^{p}}\) with \(p \ge 2\). Remark 2.19 provides counter-examples if \(f\) is only \({\textrm{C}^{1}}\). If \(f\) is analytic, so is \(\mathcal {S}\), as already shown by Feehan [44].

- Lemma 2.14 is instrumental to prove Theorem 2.16 and to analyze algorithms. It states that under PŁ the gradient locally aligns with the dominant eigenvectors of the Hessian.

- Corollary 2.17 deduces that \(\mu \)-PŁ implies \(\mu \)-MB if \(f\) is \({\textrm{C}^{2}}\).

- Proposition 2.8 shows \(\mu \)-QG implies \(\mu '\)-EB with \(\mu '<\mu \) arbitrarily close if \(f\) is \({\textrm{C}^{2}}\). Remark 2.9 provides a counter-example if \(f\) is only \({\textrm{C}^{1}}\).

- Proposition 2.4 shows \(\mu \)-EB implies \(\mu '\)-PŁ with \(\mu '<\mu \) arbitrarily close if \(f\) is \({\textrm{C}^{2}}\). If \(f\) is only \({\textrm{C}^{1}}\) with L-Lipschitz continuous \(\nabla f\), Karimi et al. [52] showed the same with \(\mu ' = \mu ^2/L\).

In Sect. 4, we study the classical globalized versions of Newton’s method. We strengthen their local convergence guarantees when minimizers are not isolated but the \({\textrm{C}^{2}}\) cost function satisfies any (hence all) of the above. The key observation that enables those improvements is the fact that we may use all four conditions in the analysis without loss of generality. Specifically:

- Cubic regularization enjoys superlinear convergence under PŁ, as shown by Nesterov and Polyak [76]. Yue et al. [97] further showed quadratic convergence under EB. Both references assume an exact subproblem solver. Leveraging our results above, we show that quadratic convergence still holds with inexact subproblem solvers (Theorem 4.1).

- For the trust-region method with exact subproblem solver, we were surprised to find that even basic capture-type convergence properties can fail in the presence of non-isolated local minima (Sect. 4.2). Notwithstanding, common implementations of the trust-region method use a truncated conjugate gradient (tCG) subproblem solver, and those do, empirically, exhibit superlinear convergence under the favorable conditions discussed above. We discuss this further in Remark 4.14, and we show a partial result in Theorem 4.9, namely, that using the Cauchy step (i.e., the first iterate of tCG) yields linear convergence.
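For readers unfamiliar with the Cauchy step mentioned in the last item, here is a minimal Euclidean sketch based on the textbook closed form: it minimizes the quadratic model \(m(p) = \langle g, p\rangle + \frac{1}{2}\langle p, Hp\rangle \) along \(-g\) subject to \(\Vert p\Vert \le \Delta \). The function name `cauchy_step`, its arguments and the plain-list representation of vectors and matrices are assumptions made for illustration. This is not the Riemannian trust-region method analyzed in the paper.

```python
import math


def cauchy_step(g, H, radius):
    """Minimizer of m(p) = <g, p> + 0.5 * <p, H p> along -g with ||p|| <= radius.

    g is a list of floats (gradient), H a list of rows (symmetric Hessian
    model), and radius > 0 is the trust-region radius.
    """
    n = len(g)
    gnorm = math.sqrt(sum(gi * gi for gi in g))
    if gnorm == 0.0:
        return [0.0] * n  # already critical for the model: no step
    Hg = [sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]
    curvature = sum(gi * hgi for gi, hgi in zip(g, Hg))  # <g, H g>
    if curvature <= 0.0:
        tau = 1.0  # model decreases all the way to the boundary
    else:
        tau = min(1.0, gnorm**3 / (radius * curvature))
    scale = tau * radius / gnorm
    return [-scale * gi for gi in g]
```

With \(g = (2, 0)\), \(H = \textrm{diag}(4, 0)\) and a large radius, the step is the unconstrained minimizer along \(-g\), namely \((-0.5, 0)\). With a small radius or non-positive curvature along \(g\), the step lands on the trust-region boundary.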

As is classical, to prove the latter results, we rely on capture theorems and Lyapunov stability. Those hold under assumptions of vanishing step-sizes and bounded path length. In Sect. 3, we state those building blocks succinctly, adapted to accommodate non-isolated local minima.

1.2 Non-isolated minima in applications

We now illustrate how optimization problems with continuous sets of minima occur in applications.

In all three scenarios below, we can cast the cost function \(f:\mathcal {M}\rightarrow {\mathbb R}\) as the composition of some other function \(g:\mathcal {N}\rightarrow {\mathbb R}\) with a map \(\varphi :\mathcal {M}\rightarrow \mathcal {N}\), where \(\mathcal {N}\) is a smooth manifold (see Fig. 1 and [60]). If \(g\) and \(\varphi \) are twice differentiable, then the Morse–Bott property (MB) for \(f= g\circ \varphi \) can come about as follows. Consider a local minimum \({\bar{y}}\) for \(g\). The set \(\mathfrak {X}= \varphi ^{-1}({\bar{y}})\) consists of local minima for \(f\). Pick a point \(\bar{x}\in \mathfrak {X}\). Assume \(x \mapsto {{\,\textrm{rank}\,}}\textrm{D}\varphi (x)\) is constant in a neighborhood of \(\bar{x}\). Then, the set \(\mathfrak {X}\) is an embedded submanifold of \(\mathcal {M}\) around \(\bar{x}\) with tangent space \(\ker \textrm{D}\varphi (\bar{x})\). Moreover, the Hessians of \(f\) and \(g\) at \(\bar{x}\) are related by

\[\nabla ^2f(\bar{x}) = \textrm{D}\varphi (\bar{x})^* \circ \nabla ^2g(\varphi (\bar{x})) \circ \textrm{D}\varphi (\bar{x}),\]

where \(\textrm{D}\varphi (\bar{x})^*\) denotes the adjoint of \(\textrm{D}\varphi (\bar{x})\).

Therefore, if \(\nabla ^2g(\varphi (\bar{x}))\) is positive definite, then \(\ker \nabla ^2f(\bar{x}) = \textrm{T}_{\bar{x}}\mathfrak {X}\) and \(\nabla ^2f(\bar{x})\) is positive definite along the orthogonal complement. In other words: \(f\) satisfies the Morse–Bott property (MB) at \(\bar{x}\). We present below a few concrete examples of optimization problems where this can happen.

Over-parameterization and nonlinear regression. Consider minimizing \(f(x) = \frac{1}{2} \Vert F(x) - b\Vert ^2\) with \(F :{\mathbb R}^m \rightarrow {\mathbb R}^n\) a \({\textrm{C}^{2}}\) function. We cast this as above with \(g(y) = \frac{1}{2} \Vert y - b\Vert ^2\) and \(\varphi = F\). Suppose \(\mathfrak {X}= \varphi ^{-1}(b) = \{x: F(x) = b\}\) is non-empty (interpolation regime), which is typical in deep learning. This is the set of global minimizers of f. If \({{\,\textrm{rank}\,}}\textrm{D}F(x)\) is equal to a constant r in a neighborhood of \(\mathfrak {X}\), then \(\mathfrak {X}\) is a smooth submanifold of \({\mathbb R}^m\) of dimension \(m - r\). If additionally the problem is over-parameterized, that is, \(m > n \ge r\), then \(\mathfrak {X}\) has positive dimension (see Fig. 2 for an illustration). The discussion above immediately implies that \(f\) satisfies MB on \(\mathfrak {X}\). See also Nesterov and Polyak [76, §4.2] who argue that PŁ holds in this setting.
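To make the last implication concrete, here is the computation in this Euclidean setting. The notation \(F_i\) for the components of \(F\) and \(\sigma _r\) for the smallest nonzero singular value is introduced only for this sketch. It uses the chain rule and the fact that \(F(\bar{x}) = b\) on \(\mathfrak {X}\):

\[\begin{aligned} \nabla f(x) &= \textrm{D}F(x)^\top (F(x) - b), \\ \nabla ^2f(x) &= \textrm{D}F(x)^\top \textrm{D}F(x) + \sum _{i=1}^{n} (F_i(x) - b_i)\, \nabla ^2F_i(x), \\ \nabla ^2f(\bar{x}) &= \textrm{D}F(\bar{x})^\top \textrm{D}F(\bar{x}) \quad \text {for } \bar{x}\in \mathfrak {X}, \\ \ker \nabla ^2f(\bar{x}) &= \ker \textrm{D}F(\bar{x}) = \textrm{T}_{\bar{x}}\mathfrak {X}. \end{aligned}\]

The last equality relies on the constant-rank assumption, under which \(\ker \textrm{D}F(\bar{x})\) is the tangent space of \(\mathfrak {X}\) at \(\bar{x}\). On the orthogonal complement of this kernel, \(\nabla ^2f(\bar{x})\) is positive definite with smallest eigenvalue \(\sigma _r(\textrm{D}F(\bar{x}))^2\).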

Redundant parameterizations and submersions. Say we want to minimize \(g:\mathcal {N}\rightarrow {\mathbb R}\) constrained to \({\mathcal {C}} \subseteq \mathcal {N}\). If \({\mathcal {C}}\) is complicated, and if we have access to a parameterization \(\varphi \) for that set (so that \(\varphi (\mathcal {M}) = {\mathcal {C}}\)), it may be advantageous to minimize \(f= g\circ \varphi \) instead. If the parameterization is redundant, this can cause \(f\) to have non-isolated minima, even if the minima of \(g\) are isolated.

As an example, consider minimizing \(g:{\mathbb R}^{m \times n} \rightarrow {\mathbb R}\) over the bounded-rank matrices \({\mathcal {C}} = \{Y \in {\mathbb R}^{m \times n}: {{\,\textrm{rank}\,}}Y \le r\}\). A popular approach consists in lifting the search space to \(\mathcal {M}= {\mathbb R}^{m \times r} \times {\mathbb R}^{n \times r}\) and minimizing \(f= g\circ \varphi \), where \(\varphi :\mathcal {M}\rightarrow \mathcal {N}\) is defined as \(\varphi (L, R) = LR^\top \). The parameterization is redundant because \(\varphi (LJ^{-1}, RJ^{\top }) = \varphi (L, R)\) for all invertible J. In particular, given a local minimum \(Y \in {\mathcal {C}}\) of \(g\), the fiber \(\varphi ^{-1}(Y)\) is unbounded, which hinders convergence analyses (see [59]). However, if Y is of maximal rank r then \(\textrm{D}\varphi \) has constant rank in a neighborhood of \(\varphi ^{-1}(Y)\). From the discussion above, it follows that \(f\) satisfies MB on \(\varphi ^{-1}(Y)\) if the (Riemannian) Hessian of g (restricted to the manifold of rank-r matrices) is positive definite.
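The redundancy \(\varphi (LJ^{-1}, RJ^{\top }) = \varphi (L, R)\) noted above is a one-line computation, spelled out here for convenience:

\[\varphi (LJ^{-1}, RJ^{\top }) = (LJ^{-1})(RJ^{\top })^\top = L J^{-1} J R^\top = LR^\top = \varphi (L, R).\]

Choosing \(J = tI\) with \(t \rightarrow \infty \) produces the points \((L/t, tR)\) in the same fiber, which illustrates why the fiber is unbounded whenever \(R \ne 0\).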

Similarly, Burer and Monteiro [25, 26] introduced a popular approach to minimize a function \(g\) over the set of positive semidefinite matrices of bounded rank through the map \(\varphi :Y \mapsto YY^\top \). The resulting function \(f= g\circ \varphi \) can have non-isolated minima. However, the same arguments as above ensure that MB holds at minimizers of maximal rank when \(g\) is strongly convex (this setting is for example considered in [103]). This further extends to tensors [63].

Symmetries and quotients. Some optimization problems have intrinsic symmetries. For example, in estimation problems, if the measurements are invariant under particular transformations of the signal, then the signal can only be retrieved up to those transformations. The likelihood function then has symmetries, and possibly a continuous set of optima as a result. Sometimes, factoring these symmetries out (that is, passing to the quotient) yields a quotient manifold, and we can investigate optimization on that manifold [4]. In the notation of our general framework above, \(\varphi \) is then the quotient map. In particular, \(\varphi \) is a submersion, so that if \({\bar{y}} \in \mathcal {N}\) is a non-singular minimum of \(g\) then \(f\) satisfies MB on \(\varphi ^{-1}({\bar{y}})\) (which is a submanifold of dimension \(\dim \mathcal {M}- \dim \mathcal {N}\)). See also [24, §9.9] for the case where \(\mathcal {N}\) is a Riemannian quotient of \(\mathcal {M}\).

1.3 Related work

Historical note. Discussions about convergence to singular minima appear in the literature at least as early as [82, §6.1]. Luo and Tseng [70] introduced the EB condition explicitly to study gradient methods around singular minima. The QG property is arguably as old as optimization, though the earliest work we could locate is by Bonnans and Ioffe [22]. They employed QG to understand complicated landscapes with non-isolated minima. Łojasiewicz [68, 69] introduced his inequalities and used them subsequently to analyze gradient flow trajectories. Specifically, he proved that for analytic functions the trajectories either converge to a point or diverge. Concurrently, Polyak [81] introduced what became known as the Polyak–Łojasiewicz (PŁ) variant (also “gradient dominance”) to study both gradient flows and discrete gradient methods. Later, Kurdyka [55] developed generalizations now known as Kurdyka–Łojasiewicz (KŁ) inequalities. They are satisfied by most functions encountered in practice, as discussed in [9, §4]. In contrast, the Morse–Bott property has received little attention in the optimization literature. Early work by Shapiro [87] analyzes perturbations of optimization problems assuming a property similar to MB. There is also a mention of gradient flow under MB in [49, Prop. 12.3].

Relationships between properties. Several articles have explored the interplay between MB, PŁ, EB and QG in the last decades. The implication PŁ \(\Rightarrow \) QG has a rich history. It can be obtained as a corollary from [50, Basic lemma] (based on Ekeland’s variational principle; see also [41, Lem. 2.5]). It also follows from Łojasiewicz-type arguments that consist in bounding the length of gradient flow trajectories [79, Prop. 1]. Likewise, Bolte et al. [19] study growth under KŁ inequalities, with PŁ \(\Rightarrow \) QG as a special case. For lower-semicontinuous functions, Corvellec and Motreanu [35, Thm. 4.2] show that \(\mu \)-EB \(\Rightarrow \) \(\mu \)-QG. They also find that \(\mu \)-QG \(\Rightarrow \) \(\frac{\mu }{2}\)-EB for convex functions [35, Prop. 5.3]. In a somewhat different setting, Drusvyatskiy et al. [40, Cor. 3.2] prove that \(\mu \)-EB \(\Rightarrow \) \(\mu '\)-QG with arbitrary \(\mu ' < \mu \). With extra assumptions, they also show \(\mu \)-QG \(\Rightarrow \) \(\mu '\)-EB but without control of \(\mu '\). Later, Bolte et al. [21] proved an equivalence between KŁ inequalities and function growth for convex and potentially non-smooth functions. Their results seem to generalize to semi-convex functions. See also [100] and [39] for equivalences between EB and QG. Karimi et al. [52] established implications between several properties encountered in the optimization literature. In particular, they also show that PŁ and EB are equivalent, and that they both imply QG. Liao et al. [65] extended this to a non-smooth and weakly convex setting. Li and Pong [62] showed that EB implies PŁ for non-smooth functions under a level set separation assumption, though with no control on the PŁ constant. Implications between PŁ and EB are also reported in [42, Thm. 3.7, Prop. 3.8] for non-smooth functions under broad conditions. 
In the context of functional analysis, Feehan [44] proved that MB and PŁ are equivalent for analytic functions defined on Banach spaces. The work of [94, Ex. 2.9] also mentions that MB implies PŁ for \({\textrm{C}^{2}}\) functions. A more general implication is given by Arbel and Mairal [8, Prop. 1] for parameterized optimization problems. Previously, Bonnans and Ioffe [22] had exhibited sufficient conditions (similar to MB) for QG to hold. As a side note, Marteau-Ferey et al. [71] proved that MB is a sufficient condition to ensure that a non-negative function is globally decomposable as a sum of squares of smooth functions.

Convergence guarantees. The error bound approach of Luo and Tseng [70] has proven to be fruitful as multiple analyses based on this condition followed. Notably, Tseng [91] proved local superlinear convergence rates for some Newton-type methods applied to systems of nonlinear equations. They relied specifically on EB and did not assume isolated minima. Later, Yamashita and Fukushima [96] employed EB to establish capture theorems and quadratic convergence rates for the Levenberg–Marquardt method. Fan and Yuan [43], Behling et al. [12] and Boos et al. [23] generalized their results (in particular to solutions with a non-zero residual). More recently, Bellavia and Morini [14] found that two adaptive regularized methods converge quadratically for nonlinear least-squares problems (assuming that EB holds).

An early work of Anitescu [6] combines the QG property with other conditions to ensure isolated minima in constrained optimization, and then deduces convergence results. Later, QG has found applications mainly in the context of convex optimization: see [67] for coordinate descent, [75] for various gradient methods, and [39] for the proximal gradient method. It is also worth mentioning that the definition of QG does not require differentiability of the function. For this reason, QG is valuable to study algorithms in non-smooth optimization too [36, 61].

The literature about convergence results based on Łojasiewicz inequalities is vast, and we touch here on some particularly relevant references. Absil et al. [2] discretized the arguments from [69] and obtained capture results for a broad class of optimization algorithms. Lageman [56, 57] provided generalizations to broader classes of functions. Later, such arguments were used in many contexts to prove algorithmic convergence guarantees, among which [9, 20] are particularly influential works. Moreover, Attouch et al. [10] proposed a general abstract framework based on KŁ to derive capture results and convergence rates, and Frankel et al. [46] extended their statements. See also [74] for a framework that encompasses higher-order methods. Li and Pong [62] studied the preservation of Łojasiewicz inequalities under function transformations (such as sums and compositions). See also [11, 90] for the preservation of PŁ through function compositions. Interestingly, the PŁ condition is known to be a necessary condition for gradient descent to converge linearly [1, Thm. 5]. Recently, Yue et al. [98] proved that acceleration is impossible when minimizing globally PŁ functions: gradient descent is optimal absent further structure. Assuming PŁ, Stonyakin et al. [88] formulated stopping criteria for gradient methods when the gradient is corrupted with noise. Using KŁ inequalities, Noll and Rondepierre [78] and Khanh et al. [53] analyzed the convergence of line-search gradient descent and trust-region methods. Łojasiewicz inequalities have also proved relevant for the study of second-order algorithms and superlinear convergence rates. A prominent example is the regularized Newton algorithm, which converges superlinearly when PŁ holds, as shown in [76]. More recently, Zhou et al. [105] and Yue et al. [97] provided finer analyses of this algorithm, respectively assuming Łojasiewicz inequalities and EB. Qian and Pan [84] extended the abstract framework of Attouch et al. [10] to establish superlinear convergence rates.

Stochastic algorithms have also been extensively studied through Łojasiewicz inequalities, and we briefly mention a few references here. Dereich and Kassing [37, 38] analyzed stochastic gradient descent (SGD) in the presence of non-isolated minima, using Łojasiewicz inequalities among other things. Local analyses of SGD using PŁ inequalities are given by Li et al. [64] and Wojtowytsch [94]. Ko and Li [54] studied the local stability and convergence of SGD in the presence of a compact set of minima with a condition that is weaker than PŁ. As for second-order algorithms, Masiha et al. [72] proved that a stochastic version of regularized Newton has fast convergence under PŁ.

Łojasiewicz inequalities are also particularly suited to analyze the convergence of flows. Notably, Łojasiewicz [69] bounded the path length of gradient flow trajectories and Polyak [81] derived linear convergence of flows assuming PŁ. Related results for flows but under MB are claimed in [49, Prop. 12.3]. More recently, Apidopoulos et al. [7] considered the Heavy-Ball differential equation and deduced convergence guarantees from PŁ. Wojtowytsch [95] studied a continuous model for SGD and the impact of the noise on the trajectory.

Finally, we found only a few convergence results based on MB in the optimization literature. Fehrman et al. [45] derive capture theorems and asymptotic sublinear convergence rates for gradient descent assuming that MB holds on the set of minima. They also provide probabilistic bounds for stochastic variants. Usevich et al. [92] consider optimization problems over unitary matrices. They propose sufficient conditions for MB to hold at a local optimum and then exploit the induced PŁ condition to obtain convergence rates. In order to solve systems of nonlinear equations, Zeng [99] proposes a Newton-type method that is robust to non-isolated solutions. It enjoys local quadratic convergence assuming an MB-type property. The algorithm requires knowledge of the dimension of the set of solutions.

Applications. Non-isolated minima arise in all sorts of optimization problems. It is common for non-convex inverse problems to have continuous symmetries, hence non-isolated minima (see [104]). In the context of deep learning, Cooper [34] proved that the set of global minima of a sufficiently over-parameterized neural network is a smooth manifold. In the last decade, there has been a renewed interest in Łojasiewicz inequalities because they are compatible with these complicated non-convex landscapes. In particular, a whole line of research exploits them to understand deep learning problems specifically. As an example, Oymak and Soltanolkotabi [80] employed PŁ to analyze the path taken by (stochastic) gradient descent in the vicinity of minimizers. Several other works suggested that non-convex machine learning loss landscapes can be understood in over-parameterized regimes through the lens of Łojasiewicz inequalities [11, 13, 66, 90]. Specifically, they argue that PŁ holds on a significant part of the search space and analyze (stochastic) gradient methods. Chatterjee [30] also establishes local convergence results for a large class of neural networks with PŁ inequalities.

1.4 Notation and geometric preliminaries

This section anchors notation and some basic geometric facts. In the important case where \(\mathcal {M}= {\mathbb R}^n\), several objects reduce as summarized in Table 2.

We let \(\langle \cdot , \cdot \rangle \) denote the inner product on \(\textrm{T}_x\mathcal {M}\)—it may depend on \(x \in \mathcal {M}\), but the base point is always clear from context. The associated norm is \(\Vert v\Vert = \sqrt{\langle v, v\rangle }\). The map \({{\,\textrm{dist}\,}}:\mathcal {M}\times \mathcal {M}\rightarrow {\mathbb R}_+\) is the Riemannian distance on \(\mathcal {M}\). We let \(\textrm{B}(x, \delta )\) denote the open ball of radius \(\delta \) around \(x \in \mathcal {M}\). The tangent bundle is \(\textrm{T}\mathcal {M}= \{ (x, v): x \in \mathcal {M}\text { and } v \in \textrm{T}_x\mathcal {M}\}\).

Moving away from \(x \in \mathcal {M}\) along the geodesic with (sufficiently small) initial velocity \(v \in \textrm{T}_x\mathcal {M}\) for unit time produces the point \(\textrm{Exp}_x(v) \in \mathcal {M}\) (Riemannian exponential). The injectivity radius at x is \(\textrm{inj}(x) > 0\). It is defined such that, given \(y \in \textrm{B}(x, \textrm{inj}(x))\), there exists a unique smallest vector \(v \in \textrm{T}_x\mathcal {M}\) for which \(\textrm{Exp}_x(v) = y\). We denote this v by \(\textrm{Log}_x(y)\) (Riemannian logarithm). Additionally, given \(x \in \mathcal {M}\) and \(y \in \textrm{B}(x, \textrm{inj}(x))\), we let \(\Gamma _{x}^{y} :\textrm{T}_x\mathcal {M}\rightarrow \textrm{T}_y\mathcal {M}\) denote parallel transport along the unique minimizing geodesic between x and y. If \(v = \textrm{Log}_x(y)\), we also let \(\Gamma _{v} = \Gamma _{x}^{y}\).

Let \(\mathfrak {X}\) be a subset of \(\mathcal {M}\). We need the notions of tangent and normal cones to \(\mathfrak {X}\), defined below.

Definition 1.3

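The tangent cone to a set \(\mathfrak {X}\) at \(x \in \mathfrak {X}\) is the closed set

\[\textrm{T}_x\mathfrak {X}= \Big \{ \lim _{k \rightarrow \infty } \frac{\textrm{Log}_x(x_k)}{t_k} : x_k \in \mathfrak {X}, \ t_k > 0 \text { for all } k, \ x_k \rightarrow x, \ t_k \rightarrow 0 \Big \}.\]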

We also let \(\textrm{N}_x\mathfrak {X}= \big \{w \in \textrm{T}_x\mathcal {M}: \langle w, v\rangle \le 0 {\text { for all }}v \in \textrm{T}_x\mathfrak {X}\big \}\) denote the normal cone to \(\mathfrak {X}\) at x.

When \(\mathfrak {X}\) is a submanifold of \(\mathcal {M}\) around x, the cones \(\textrm{T}_x\mathfrak {X}\) and \(\textrm{N}_x\mathfrak {X}\) reduce to the tangent and normal spaces of \(\mathfrak {X}\) at x. (By “submanifold”, we always mean embedded submanifold.)

Given \(x \in \mathcal {M}\), we let \({{\,\textrm{dist}\,}}(x, \mathfrak {X}) = \inf _{y \in \mathfrak {X}} {{\,\textrm{dist}\,}}(x, y)\) denote the distance of x to \(\mathfrak {X}\). We further let \({{\,\textrm{proj}\,}}_\mathfrak {X}(x)\) denote the set of minima of the optimization problem \(\min _{y \in \mathfrak {X}} \,{{\,\textrm{dist}\,}}(x, y)\). If this set is non-empty (which is the case in particular if \(\mathfrak {X}\) is closed), then we have:

\[{{\,\textrm{dist}\,}}(y, \bar{x}) \le 2 {{\,\textrm{dist}\,}}(x, \bar{x}) \quad \text {for all } y \in {{\,\textrm{proj}\,}}_\mathfrak {X}(x) \text { and all } \bar{x}\in \mathfrak {X}. \tag{3}\]

Indeed, the triangle inequality yields \({{\,\textrm{dist}\,}}(y, \bar{x}) \le {{\,\textrm{dist}\,}}(x, y) + {{\,\textrm{dist}\,}}(x, \bar{x})\), and \({{\,\textrm{dist}\,}}(x, y) = {{\,\textrm{dist}\,}}(x, \mathfrak {X}) \le {{\,\textrm{dist}\,}}(x, \bar{x})\). Moreover, if \(y \in {{\,\textrm{proj}\,}}_\mathfrak {X}(x)\) with \({{\,\textrm{dist}\,}}(x, y) < \textrm{inj}(y)\) then \(\textrm{Log}_y(x) \in \textrm{N}_y\mathfrak {X}\).

The set of local minima \(\mathcal {S}\) defined in (1) may not be closed: consider for example the function \(f(x) = \textrm{sgn}(x)\exp (-\frac{1}{x^2})(1 + \sin (\frac{1}{x^2}))\) with \(f_{\mathcal {S}}= 0\). It follows that the projection onto \(\mathcal {S}\) may be empty. Notwithstanding, the following holds:

Lemma 1.4

Around each \(\bar{x}\in \mathcal {S}\) there exists a neighborhood in which \({{\,\textrm{proj}\,}}_\mathcal {S}\) is non-empty.

Proof

Let \({\mathcal {U}}\) be an open neighborhood of \(\bar{x}\) such that \(f(x) \ge f_{\mathcal {S}}\) for all \(x \in {\mathcal {U}}\). Let \({\mathcal {V}}_1, {\mathcal {V}}_2 \subset {\mathcal {U}}\) be two closed balls around \(\bar{x}\) of radii \(\delta > 0\) and \(\frac{1}{4}\delta \) respectively. Then \(\mathcal {S}\cap {\mathcal {V}}_1 = f^{-1}(f_{\mathcal {S}}) \cap {\mathcal {V}}_1\), showing that \(\mathcal {S}\cap {\mathcal {V}}_1\) is closed and the projection onto this set is non-empty. Let \(x \in {\mathcal {V}}_2\) and \(y \in {{\,\textrm{proj}\,}}_{\mathcal {S}\cap {\mathcal {V}}_1}(x)\). Then \({{\,\textrm{dist}\,}}(x, y) \le \frac{1}{4}\delta \). Moreover, for all \(y' \in \mathcal {S}{\setminus } {\mathcal {V}}_1\) we have \({{\,\textrm{dist}\,}}(x, y') \ge \frac{3}{4}\delta \). It follows that \({{\,\textrm{proj}\,}}_{\mathcal {S}}(x) = {{\,\textrm{proj}\,}}_{\mathcal {S}\cap {\mathcal {V}}_1}(x)\), and this is non-empty. \(\square \)

From these considerations we deduce that the projection onto \(\mathcal {S}\) is always locally well behaved.

Lemma 1.5

Let \(\bar{x}\in \mathcal {S}\). There exists a neighborhood \({\mathcal {U}}\) of \(\bar{x}\) such that for all \(x \in {\mathcal {U}}\) the set \({{\,\textrm{proj}\,}}_{\mathcal {S}}(x)\) is non-empty, and for all \(y \in {{\,\textrm{proj}\,}}_{\mathcal {S}}(x)\) we have \({{\,\textrm{dist}\,}}(x, y) < \textrm{inj}(y)\). In particular, \(v = \textrm{Log}_y(x)\) is well defined and \(v \in \textrm{N}_y\mathcal {S}\).

Proof

Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\) where \({{\,\textrm{proj}\,}}_{\mathcal {S}}\) is non-empty (given by Lemma 1.4). Given \({\bar{\delta }} < \textrm{inj}(\bar{x})\), the ball \({\bar{\textrm{B}}}(\bar{x}, \delta )\) is compact for all \(\delta < {\bar{\delta }}\). Define \(h(\delta ) = \inf _{x \in {\bar{\textrm{B}}}(\bar{x}, 2\delta )} \textrm{inj}(x)\) on the interval \([0, {\bar{\delta }}/2)\). The function h is continuous with \(h(0) = \textrm{inj}(\bar{x}) > 0\) so we can pick \(\delta > 0\) such that \(\delta \le h(\delta )\). Let \(x \in \textrm{B}(\bar{x}, \delta ) \cap {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\). By definition of the projection we have \({{\,\textrm{dist}\,}}(x, y) \le {{\,\textrm{dist}\,}}(x, \bar{x}) < \delta \). Moreover, inequality (3) yields \({{\,\textrm{dist}\,}}(y, \bar{x}) \le 2 {{\,\textrm{dist}\,}}(x, \bar{x}) \le 2\delta \) so \(h(\delta ) \le \textrm{inj}(y)\). It follows that \({{\,\textrm{dist}\,}}(x, y) < \delta \le h(\delta ) \le \textrm{inj}(y)\) and \(v = \textrm{Log}_{y}(x)\) is well defined. The fact that v is in the normal cone follows from optimality conditions of projections. \(\square \)

. The function h is continuous with \(h(0) = \textrm{inj}(\bar{x}) > 0\) so we can pick \(\delta > 0\) such that \(\delta \le h(\delta )\). Let \(x \in \textrm{B}(\bar{x}, \delta ) \cap {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\). By definition of the projection we have \({{\,\textrm{dist}\,}}(x, y) \le {{\,\textrm{dist}\,}}(x, \bar{x}) < \delta \). Moreover, inequality (3) yields \({{\,\textrm{dist}\,}}(y, \bar{x}) \le 2 {{\,\textrm{dist}\,}}(x, \bar{x}) \le 2\delta \) so \(h(\delta ) \le \textrm{inj}(y)\). It follows that \({{\,\textrm{dist}\,}}(x, y) < \delta \le h(\delta ) \le \textrm{inj}(y)\) and \(v = \textrm{Log}_{y}(x)\) is well defined. The fact that v is in the normal cone follows from optimality conditions of projections. \(\square \)

Given a self-adjoint linear map H, we let \(\lambda _i(H)\) denote the ith largest eigenvalue of H, and \(\lambda _{\min }(H)\) and \(\lambda _{\max }(H)\) denote the minimum and maximum eigenvalues respectively.

2 Four equivalent properties

In this section, we establish that MB, PŁ, EB and QG (see Definitions 1.1 and 1.2) are equivalent around a local minimum \(\bar{x}\) when \(f\) is \({\textrm{C}^{2}}\). Specifically, we show the implication graph in Fig. 3.

It is well known that PŁ implies QG around minima: see references in Sect. 1.3. Perhaps the most popular argument relies on the bounded length of gradient flow trajectories under the more general Łojasiewicz inequality [2, 19, 68, 69, 79].

Definition 2.1

Let \(\bar{x}\) be a local minimum of \(f\) with associated set \(\mathcal {S}\) (1). We say \(f\) satisfies the Łojasiewicz inequality with constants \(\theta \in (0, 1)\) and \(\mu > 0\) around \(\bar{x}\) if

\[
|f(x) - f_{\mathcal {S}}|^{2\theta } \le \frac{1}{2\mu } \Vert \nabla f(x)\Vert ^2 \qquad (\text {Ł})
\]

for all x in some neighborhood of \(\bar{x}\).

Notice that if \(f\) is Łojasiewicz with exponent \(\theta \) then it is Łojasiewicz with exponent \(\theta '\) for all \(\theta \le \theta ' < 1\) (though possibly in a different neighborhood). The case \(\theta = \frac{1}{2}\) is exactly the (PŁ) condition.
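As a worked illustration of the role of the exponent, consider \(f(x) = x^4\) on \({\mathbb R}\), with \(\mathcal {S}= \{0\}\) and \(f_{\mathcal {S}}= 0\). The computation below is a sketch that assumes (Ł) is normalized as \(|f(x) - f_{\mathcal {S}}|^{2\theta } \le \frac{1}{2\mu }\Vert \nabla f(x)\Vert ^2\), so that \(\theta = \frac{1}{2}\) recovers (PŁ):

\[
|f(x)|^{2\theta } = |x|^{8\theta }, \qquad \frac{1}{2\mu } |f'(x)|^2 = \frac{8}{\mu } x^6 .
\]

Under this normalization, the inequality holds near \(x = 0\) if and only if \(8\theta \ge 6\), that is, \(\theta \ge \frac{3}{4}\) (with any \(\mu \le 8\) when \(\theta = \frac{3}{4}\)). In particular, \(x^4\) is Łojasiewicz with exponent \(\frac{3}{4}\) but it is not PŁ around its minimum.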

Proposition 2.2

(\({\text {P}{\L } }\Rightarrow {\text {QG}} \)) Suppose that \(f\) satisfies (Ł) around \(\bar{x}\in \mathcal {S}\). Then \(f\) satisfies

\[
f(x) - f_{\mathcal {S}} \ge \Big ( (1 - \theta ) \sqrt{2\mu } \, {{\,\textrm{dist}\,}}(x, \mathcal {S}) \Big )^{\frac{1}{1 - \theta }}
\]

for all x sufficiently close to \(\bar{x}\). In particular, if \(\theta = \frac{1}{2}\), this shows \(\mu \)-(PŁ) \(\Rightarrow \) \(\mu \)-(QG).

We include a classical proof in Appendix A for completeness, with care regarding neighborhoods.

2.1 Two straightforward implications

In this section we show that \({\text {MB}} \Rightarrow {\text {QG}} \) and \({\text {EB}}\Rightarrow {\text {P}{\L } } \). These implications are known and direct. We give succinct proofs for completeness. The first one follows immediately from a Taylor expansion.

Proposition 2.3

(\({\text {MB}} \Rightarrow {\text {QG}} \)) Suppose that \(f\) is \({\textrm{C}^{2}}\) and satisfies \(\mu \)-(MB) at \(\bar{x}\in \mathcal {S}\). Then \(f\) satisfies \(\mu '\)-(QG) around \(\bar{x}\) for all \(\mu ' < \mu \).

Proof

Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\) as in Lemma 1.5. Let \(d\) be the codimension of \(\mathcal {S}\) (around \(\bar{x}\)). Given \(\mu ' < \mu \), pick \(\varepsilon \in (0, \mu - \mu ')\) and shrink \({\mathcal {U}}\) so that for all \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\) we have \(\lambda _{d}(\nabla ^2f(y)) \ge \mu ' + \varepsilon \). Given \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\), a Taylor expansion around y gives

\[
f(x) - f_{\mathcal {S}} = \frac{1}{2} \langle v, \nabla ^2f(y)[v]\rangle + o(\Vert v\Vert ^2) \ge \frac{\mu ' + \varepsilon }{2} {{\,\textrm{dist}\,}}(x, \mathcal {S})^2 + o({{\,\textrm{dist}\,}}(x, \mathcal {S})^2),
\]

where \(v = \textrm{Log}_y(x)\) is normal to \(\mathcal {S}\). We get the inequality \(\mu '\)-(QG) for all x sufficiently close to \(\bar{x}\). \(\square \)
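The following is a minimal numerical sketch of this implication on a toy example that is not taken from the text: \(f(x, y) = (x^2 + y^2 - 1)^2\) on \({\mathbb R}^2\), whose minimizers form the unit circle. All function names and the value `mu_prime = 7.5` are illustrative assumptions. The script reads off the normal Hessian eigenvalue \(\mu = 8\) on the circle, checks \(\mu '\)-(QG) on a thin annulus, and shows that (QG) with the constant \(\mu \) itself fails just inside the circle, which is why the statement only claims \(\mu ' < \mu \).

```python
import math

def f(x, y):
    """Cost whose minimizers form the unit circle S (so f_S = 0)."""
    return (x * x + y * y - 1.0) ** 2

def dist_to_circle(x, y):
    """Distance from (x, y) to the unit circle (valid away from the origin)."""
    return abs(math.hypot(x, y) - 1.0)

def hessian(x, y):
    """Hessian of f as the triple (h_xx, h_xy, h_yy)."""
    g = x * x + y * y - 1.0
    return 4.0 * g + 8.0 * x * x, 8.0 * x * y, 4.0 * g + 8.0 * y * y

def eigenvalues_sym2(a, b, c):
    """Eigenvalues (largest first) of the symmetric matrix [[a, b], [b, c]]."""
    mean, radius = 0.5 * (a + c), math.hypot(0.5 * (a - c), b)
    return mean + radius, mean - radius

# On S the Hessian equals 8 p p^T: one normal eigenvalue and one tangent zero.
mu, tangent_eig = eigenvalues_sym2(*hessian(math.cos(0.3), math.sin(0.3)))

# Check mu'-(QG) for an illustrative mu' < mu on a thin annulus around S.
mu_prime = 7.5
qg_holds = True
for i in range(200):
    angle = 2.0 * math.pi * i / 200
    for radius in (0.99, 0.995, 1.005, 1.01):
        x, y = radius * math.cos(angle), radius * math.sin(angle)
        if f(x, y) < 0.5 * mu_prime * dist_to_circle(x, y) ** 2:
            qg_holds = False

# With the constant mu itself, the inequality is violated just inside S.
qg_fails_for_mu = f(0.99, 0.0) < 0.5 * mu * dist_to_circle(0.99, 0.0) ** 2

print(mu, tangent_eig, qg_holds, qg_fails_for_mu)
```

Here \(f(x, y) = (r - 1)^2 (r + 1)^2\) with \(r\) the distance to the origin, so the quadratic growth factor \((r + 1)^2\) is slightly below \(\frac{\mu }{2} = 4\) for \(r < 1\).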

Proposition 2.4

(\({\text {EB}} \Rightarrow {\text {P}{\L } } \)) Suppose that \(f\) is \({\textrm{C}^{2}}\) and satisfies \(\mu \)-(EB) around \(\bar{x}\in \mathcal {S}\). Then \(f\) satisfies \(\mu '\)-(PŁ) around \(\bar{x}\) for all \(\mu ' < \mu \).

Proof

Let \({\mathcal {U}}\) be the intersection of two neighborhoods of \(\bar{x}\): one where \(\mu \)-(EB) holds, and the other provided by Lemma 1.5. Given \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_{\mathcal {S}}(x)\), a Taylor expansion around y yields

\[
f(x) - f_{\mathcal {S}} = \frac{1}{2} \langle v, \nabla ^2f(y)[v]\rangle + o(\Vert v\Vert ^2) \quad \text {and} \quad \nabla f(x) = \Gamma _{v} \nabla ^2f(y)[v] + o(\Vert v\Vert ),
\]

where \(v = \textrm{Log}_y(x)\). Using the Cauchy–Schwarz inequality and the triangle inequality, it follows that

\[
f(x) - f_{\mathcal {S}} \le \frac{1}{2} \Vert v\Vert \Vert \nabla f(x)\Vert + o(\Vert v\Vert ^2).
\]

Finally, EB gives that \(\Vert v\Vert \le \frac{1}{\mu } \Vert \nabla f(x)\Vert \) so \(f(x) - f_{\mathcal {S}}\le \frac{1}{2\mu }\Vert \nabla f(x)\Vert ^2 + o(\Vert \nabla f(x)\Vert ^2)\). We get the inequality \(\mu '\)-(PŁ) for all x sufficiently close to \(\bar{x}\). \(\square \)

Remark 2.5

Suppose that \(f\) is only \({\textrm{C}^{1}}\) and \(\nabla f\) is locally L-Lipschitz continuous around \(\bar{x}\). If \(\mu \)-EB holds around \(\bar{x}\) then \(f\) satisfies PŁ with constant \(\frac{\mu ^2}{L}\) around \(\bar{x}\) [52, Thm. 2]. (This still holds locally on manifolds with the same proof.) The constant worsens but that is inevitable: see the example in Remark 2.19.
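The constant \(\frac{\mu ^2}{L}\) can be traced through a two-step estimate. The sketch below assumes (PŁ) is normalized as \(f(x) - f_{\mathcal {S}}\le \frac{1}{2\mu }\Vert \nabla f(x)\Vert ^2\) and takes \(y \in {{\,\textrm{proj}\,}}_{\mathcal {S}}(x)\). The first inequality uses \(\nabla f(y) = 0\) together with the L-Lipschitz continuity of \(\nabla f\); the second one uses \(\mu \)-EB:

\[
f(x) - f_{\mathcal {S}} \le \frac{L}{2} {{\,\textrm{dist}\,}}(x, \mathcal {S})^2 \le \frac{L}{2\mu ^2} \Vert \nabla f(x)\Vert ^2 = \frac{1}{2 (\mu ^2 / L)} \Vert \nabla f(x)\Vert ^2 .
\]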

2.2 Quadratic growth implies error bound

In this section, we show that QG implies EB for \({\textrm{C}^{2}}\) functions. Other works proving this implication either assume that \(f\) is convex (see [35, Prop. 5.3], [52], and [39, Cor. 3.6]) or do not provide control on the constants (see [40, Cor. 3.2]). For this, we first characterize a distance growth rate when we move from \(\mathcal {S}\) in a normal direction (see Definition 1.3). Recall that for now \(\mathcal {S}\) is not necessarily smooth, and therefore \(\textrm{N}_{\bar{x}} \mathcal {S}\) is a priori only a cone.

Lemma 2.6

Let \(\bar{x}\in \mathcal {S}\) and let \(v \in \textrm{N}_{\bar{x}}\mathcal {S}\) be a unit vector. Then \({{\,\textrm{dist}\,}}(\textrm{Exp}_{\bar{x}}(tv), \mathcal {S}) = t + o(t)\) as \(t \rightarrow 0\), \(t \ge 0\).

Proof

Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\) as in Lemma 1.5. Shrink \({\mathcal {U}}\) so that for all \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_{\mathcal {S}}(x)\) we have \({{\,\textrm{dist}\,}}(y, \bar{x}) < \textrm{inj}(\bar{x})\). Given a small parameter \(t > 0\), define \(x(t) = \textrm{Exp}_{\bar{x}}(tv)\) and let \(y(t) \in {{\,\textrm{proj}\,}}_{\mathcal {S}}(x(t))\). From (3) we have \({{\,\textrm{dist}\,}}(y(t), \bar{x}) \le 2t\), and it follows that \(y(t) \rightarrow \bar{x}\) as \(t \rightarrow 0\). Define \(u(t) = \textrm{Log}_{y(t)}(x(t))\) and \(w(t) = \textrm{Log}_{\bar{x}}(y(t))\). Then

as \(t \rightarrow 0\) because \(\Vert \Gamma _{y(t)}^{\bar{x}}u(t) - tv + w(t)\Vert = o(t)\) as \(t \rightarrow 0\) [89, Eq. (6)]. In particular, for all t sufficiently small we have

Let \(I \subseteq {\mathbb R}_{> 0}\) be the times when \(y(t) \ne \bar{x}\). If \(\inf I > 0\) then the final claim holds because \(y(t) = \bar{x}\) for small enough t. Suppose now that \(\inf I = 0\). Define \(r(t) = \frac{w(t)}{\Vert w(t)\Vert }\) on I, and let \({\mathcal {A}}\) be the set of accumulation points of r(t) as \(t \rightarrow 0\), \(t \in I\). Then \({\mathcal {A}}\) is included in the unit sphere and \({\mathcal {A}} \subseteq \textrm{T}_{\bar{x}}\mathcal {S}\) by definition. Given \(a \in {\mathcal {A}}\) and \(t \in I\), we can use \(\langle a, v\rangle \le 0\) to find that

It follows that \({{\,\textrm{dist}\,}}(x(t), \mathcal {S})^2 \ge t^2 - 4 t^2 {{\,\textrm{dist}\,}}(r(t), {\mathcal {A}}) + o(t^2) = t^2 + o(t^2)\) because \({{\,\textrm{dist}\,}}(r(t), {\mathcal {A}}) \rightarrow 0\) as \(t \rightarrow 0\). \(\square \)
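The chain of estimates behind this proof can be sketched as follows. This is a reconstruction under the notation above, using \(\Vert w(t)\Vert \le 2t\) from (3) and the \(o(t)\) estimate on \(\Gamma _{y(t)}^{\bar{x}}u(t) - tv + w(t)\); it is not necessarily the exact sequence of intermediate displays. For \(t \in I\) and \(a \in {\mathcal {A}}\),

\[
\begin{aligned}
{{\,\textrm{dist}\,}}(x(t), \mathcal {S})^2 = \Vert u(t)\Vert ^2&= \Vert tv - w(t)\Vert ^2 + o(t^2) \ge t^2 - 2t \langle v, w(t)\rangle + o(t^2), \\
\langle v, w(t)\rangle = \Vert w(t)\Vert \langle v, r(t)\rangle&\le \Vert w(t)\Vert \langle v, r(t) - a\rangle \le 2t \Vert r(t) - a\Vert .
\end{aligned}
\]

Minimizing the last bound over \(a \in {\mathcal {A}}\) produces the term \({{\,\textrm{dist}\,}}(r(t), {\mathcal {A}})\). The matching upper bound \({{\,\textrm{dist}\,}}(x(t), \mathcal {S}) \le t\) holds simply because \(\bar{x}\in \mathcal {S}\).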

Using this rate, we now find that QG implies the following bounds for \(\nabla ^2f\) in the normal cones of \(\mathcal {S}\). Note that the following proposition does not yet show QG \(\Rightarrow \) MB, because to establish MB we still need to argue that \(\mathcal {S}\) is a smooth set.

Proposition 2.7

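Suppose \(f\) is \({\textrm{C}^{2}}\) and satisfies (QG) with constant \(\mu \) around \(\bar{x}\in \mathcal {S}\). Then for all \(y \in \mathcal {S}\) sufficiently close to \(\bar{x}\) and all \(v \in \textrm{N}_{y}\mathcal {S}\) we have

\[
\langle v, \nabla ^2f(y)[v]\rangle \ge \mu \Vert v\Vert ^2 \quad \text {and} \quad \Vert \nabla ^2f(y)[v]\Vert \ge \mu \Vert v\Vert .
\]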

Proof

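Let \({\mathcal {U}}\) be an open neighborhood of \(\bar{x}\) where \(\mu \)-QG holds. Let \(y \in \mathcal {S}\cap {\mathcal {U}}\) and \(v \in \textrm{N}_{y}\mathcal {S}\). For all small enough \(t > 0\), we obtain from a Taylor expansion that

\[
\frac{t^2}{2} \langle v, \nabla ^2f(y)[v]\rangle + o(t^2) = f(\textrm{Exp}_y(tv)) - f_{\mathcal {S}} \ge \frac{\mu }{2} {{\,\textrm{dist}\,}}(\textrm{Exp}_y(tv), \mathcal {S})^2 = \frac{\mu }{2} t^2 \Vert v\Vert ^2 + o(t^2),
\]

where the inequality comes from QG and the following equality comes from Lemma 2.6. Divide by \(t^2\) and take \(t \rightarrow 0\) to get the first inequality. The other inequality follows by Cauchy–Schwarz. \(\square \)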

We deduce from this that, under QG, the gradient norm is locally bounded from below and from above by the distance to \(\mathcal {S}\), up to some constant factors. This notably secures EB.

Proposition 2.8

(\({\text {QG}} \Rightarrow {\text {EB}} \)) Suppose \(f\) is \({\textrm{C}^{2}}\) and satisfies \(\mu \)-(QG) around \(\bar{x}\in \mathcal {S}\). For all \(\mu ^\flat < \mu \) and \(\lambda ^\sharp > \lambda _{\max }(\nabla ^2f(\bar{x}))\) there exists a neighborhood \({\mathcal {U}}\) of \(\bar{x}\) such that for all \(x \in {\mathcal {U}}\) we have

\[
\mu ^\flat {{\,\textrm{dist}\,}}(x, \mathcal {S}) \le \Vert \nabla f(x)\Vert \le \lambda ^\sharp {{\,\textrm{dist}\,}}(x, \mathcal {S}).
\]

Proof

Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\) as in Lemma 1.5. Shrink \({\mathcal {U}}\) so that for all \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\) the inequalities of Proposition 2.7 hold. Now let \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\). Define \(v = \textrm{Log}_y(x)\) and \(\gamma (t) = \textrm{Exp}_y(tv)\), so that \(y = \gamma (0)\) and \(x = \gamma (1)\). Then a Taylor expansion of \(\nabla f\) around y yields

\[
\nabla f(x) = \Gamma _{v} \nabla ^2f(y)[v] + r(x).
\]

The Hessian is continuous so \(r(x) = o(\Vert v\Vert )\) as \(x \rightarrow \bar{x}\). Moreover, Proposition 2.7 provides that \(\Vert \nabla ^2f(y)[v]\Vert \ge \mu \Vert v\Vert \) so using the triangle inequality and the reverse triangle inequality we get

\[
\mu \Vert v\Vert + o(\Vert v\Vert ) \le \Vert \nabla f(x)\Vert \le \lambda _{\max }(\nabla ^2f(y)) \Vert v\Vert + o(\Vert v\Vert )
\]

as \(x \rightarrow \bar{x}\). Since \(\Vert v\Vert = {{\,\textrm{dist}\,}}(x, \mathcal {S})\) and \(\lambda _{\max }(\nabla ^2f(y)) \rightarrow \lambda _{\max }(\nabla ^2f(\bar{x}))\) by continuity, we get the result if we choose x close enough to \(\bar{x}\). \(\square \)

The first inequality in the previous proposition is exactly EB. In Proposition 2.4 we showed that EB implies PŁ when \(f\) is \({\textrm{C}^{2}}\). Thus, it also holds that QG implies PŁ.
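As a numerical sanity check of the two-sided gradient bound and of the resulting PŁ inequality, here is a small sketch on a toy function that is not from the text: \(f(x, y) = (x^2 + y^2 - 1)^2\), whose minimizers form the unit circle and for which the relevant constants both equal 8 on the circle. The constants `mu_flat = 7.5` and `lam_sharp = 8.5`, and all function names, are illustrative assumptions.

```python
import math

def f(x, y):
    """Cost with minimizers on the unit circle S, where f_S = 0."""
    return (x * x + y * y - 1.0) ** 2

def grad_norm(x, y):
    """Norm of the gradient 4 (x^2 + y^2 - 1) (x, y)."""
    return 4.0 * abs(x * x + y * y - 1.0) * math.hypot(x, y)

def dist_to_circle(x, y):
    """Distance to S (valid away from the origin)."""
    return abs(math.hypot(x, y) - 1.0)

mu_flat, lam_sharp = 7.5, 8.5  # illustrative choices on either side of 8

# Sample points on four circles close to S.
points = [
    (radius * math.cos(math.radians(deg)), radius * math.sin(math.radians(deg)))
    for deg in range(0, 360, 5)
    for radius in (0.99, 0.999, 1.001, 1.01)
]

# Two-sided bound: mu_flat * dist <= ||grad f|| <= lam_sharp * dist.
ratios = [grad_norm(x, y) / dist_to_circle(x, y) for x, y in points]
two_sided_ok = all(mu_flat <= r <= lam_sharp for r in ratios)

# PL inequality with constant mu_flat: f - f_S <= ||grad f||^2 / (2 mu_flat).
pl_ok = all(f(x, y) <= grad_norm(x, y) ** 2 / (2.0 * mu_flat) for x, y in points)

print(min(ratios), max(ratios), two_sided_ok, pl_ok)
```

For this function the ratio \(\Vert \nabla f(x)\Vert / {{\,\textrm{dist}\,}}(x, \mathcal {S})\) equals \(4r(r + 1)\) with \(r\) the distance to the origin, which tends to 8 as \(r \rightarrow 1\).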

Remark 2.9

When \(f\) is only \({\textrm{C}^{1}}\) it is not true that QG implies EB or PŁ. To see this, consider the function \(f(x) = 2x^2 + x^2\sin (1/\sqrt{|x|})\) (see Fig. 2). It is \({\textrm{C}^{1}}\) and satisfies QG around the minimum \(\bar{x}= 0\) because \(f(x) \ge x^2\). However, there are other local minima arbitrarily close to \({{\bar{x}}}\). Those are critical points with function value strictly larger than \(f(\bar{x})\), which disqualifies EB and PŁ around \(\bar{x}\).
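The behavior described in this remark can be observed numerically. The sketch below (function names and the sampling grid are illustrative assumptions) evaluates \(f\) and its derivative for \(x > 0\), checks \(f(x) \ge x^2\) on the samples, and counts sign changes of \(f'\) on \([10^{-4}, 10^{-2}]\). Each sign change brackets a critical point whose value is strictly larger than \(f(0) = 0\).

```python
import math

def f(x):
    """f(x) = 2x^2 + x^2 sin(1/sqrt|x|), extended by f(0) = 0."""
    if x == 0.0:
        return 0.0
    return 2.0 * x * x + x * x * math.sin(1.0 / math.sqrt(abs(x)))

def df(x):
    """Derivative of f for x > 0, with s = 1/sqrt(x)."""
    s = 1.0 / math.sqrt(x)
    return 4.0 * x + 2.0 * x * math.sin(s) - 0.5 * math.sqrt(x) * math.cos(s)

# Sample x in [1e-4, 1e-2], uniformly in s = 1/sqrt(x) so that the
# oscillation of sin(s) and cos(s) is resolved evenly.
samples = [1.0 / (10.0 + 90.0 * i / 20000) ** 2 for i in range(20001)]

# Quadratic growth around 0: f(x) >= x^2 on all samples.
qg_ok = all(f(x) >= x * x for x in samples)

# Consecutive samples where f' changes sign bracket a critical point.
sign_changes = [
    (a, b) for a, b in zip(samples, samples[1:]) if df(a) * df(b) < 0.0
]

# Near those critical points the value of f is strictly above f(0) = 0.
values_positive = all(f(a) > 0.0 for a, _ in sign_changes)

print(len(sign_changes), qg_ok, values_positive)
```

The sign changes come from the term \(-\frac{1}{2}\sqrt{x}\cos (1/\sqrt{x})\) in \(f'\), which dominates the terms of order \(x\) when \(x\) is small.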

Remark 2.10

Combining Propositions 2.2 and 2.8 (\(\mu \)-PŁ \(\Rightarrow \) \(\mu \)-QG and \(\mu \)-QG \(\Rightarrow \) \(\mu '\)-EB), one finds that \(\mu \)-PŁ implies \(\mu '\)-EB for \({\textrm{C}^{2}}\) functions. In fact, Karimi et al. [52] show that \(\mu \)-PŁ implies \(\mu \)-EB for \({\textrm{C}^{1}}\) functions, globally so if PŁ holds globally. Indeed, for x sufficiently close to \(\bar{x}\), we have

\[
\frac{\mu }{2} {{\,\textrm{dist}\,}}(x, \mathcal {S})^2 \le f(x) - f_{\mathcal {S}} \le \frac{1}{2\mu } \Vert \nabla f(x)\Vert ^2,
\]

where the second inequality comes from PŁ, and the first inequality is QG (implied by PŁ).

Combining all previous implications, we obtain that MB implies PŁ. This is also straightforward from Taylor expansion arguments.

Corollary 2.11

(\({\text {MB}} \Rightarrow {\text {P}{\L } } \)) Suppose that \(f\) is \({\textrm{C}^{2}}\) and satisfies \(\mu \)-(MB) at \(\bar{x}\in \mathcal {S}\). Let \(0< \mu ' < \mu \). Then \(f\) satisfies \(\mu '\)-(PŁ) around \(\bar{x}\).

As a consequence, if \(f\) is \(\mu \)-MB on any subset of \(\mathcal {S}\) then for all \(\mu ' < \mu \) there exists a neighborhood of that subset where \(f\) is \(\mu '\)-PŁ. When \(f\) is \({\textrm{C}^{3}}\) the size of the neighborhood where PŁ holds can be controlled (to some extent) with the third derivative. A version of this corollary appears in [94, Ex. 2.9] with a different trade-off between control of the neighborhood and the constant \(\mu '\). Feehan [44, Thm. 6] shows a similar result in Banach spaces assuming that the function is \({\textrm{C}^{3}}\).

2.3 PŁ implies a smooth set of minima and MB

The MB property is explicitly strong because it presupposes a smooth set of minima, and it clearly implies PŁ, EB, and QG. It raises a natural question: do the latter also enforce some structure on the set of minima? In this section we show that the answer is yes for \({\textrm{C}^{2}}\) functions: if PŁ (or EB or QG) holds around \(\bar{x}\in \mathcal {S}\) then \(\mathcal {S}\) must be a submanifold around \(\bar{x}\).

To get a sense of why \(\mathcal {S}\) cannot have singularities, suppose that \(\mathcal {M}= {\mathbb R}^2\) and that around \(\bar{x}\) the set of minima is the union of two orthogonal lines (a cross) that intersect at \(\bar{x}\). Then it must be that \(\nabla ^2f(\bar{x}) = 0\) because the gradient is zero along both lines. However, if we assume PŁ then the spectrum of \(\nabla ^2f(x)\) must contain at least one positive eigenvalue bigger than \(\mu \) for all points \(x \in \mathcal {S}{\setminus } \{\bar{x}\}\) close to \(\bar{x}\), owing to the QG property. We obtain a contradiction because the eigenvalues of \(\nabla ^2f\) are continuous.

To generalize this intuition, we first show that PŁ induces a lower-bound on the positive eigenvalues of \(\nabla ^2f\).

Proposition 2.12

Suppose \(f\) is \({\textrm{C}^{2}}\) and \(\mu \)-(PŁ) around \(\bar{x}\in \mathcal {S}\). If \(\lambda \) is a non-zero eigenvalue of \(\nabla ^2f(\bar{x})\) then \(\lambda \ge \mu \).

Proof

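Let \(\lambda > 0\) be an eigenvalue of \(\nabla ^2f(\bar{x})\) with associated unit eigenvector v. Then

\[
f(\textrm{Exp}_{\bar{x}}(tv)) - f_{\mathcal {S}} = \frac{\lambda }{2} t^2 + o(t^2) \quad \text {and} \quad \Vert \nabla f(\textrm{Exp}_{\bar{x}}(tv))\Vert ^2 = \lambda ^2 t^2 + o(t^2)
\]

as \(t \rightarrow 0\).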

The PŁ condition implies \(\frac{\lambda }{2}t^2 + o(t^2) \le \frac{1}{2\mu }{\lambda ^2 t^2 + o(t^2)}\), which gives the result as \(t \rightarrow 0\). \(\square \)

The latter argument is inconclusive when \(\lambda = 0\). Still, we do get control of the Hessian’s rank.

Corollary 2.13

Suppose \(f\) is \({\textrm{C}^{2}}\) and \(\mu \)-(PŁ) around \(\bar{x}\in \mathcal {S}\). Then \({{\,\textrm{rank}\,}}(\nabla ^2f(x)) = {{\,\textrm{rank}\,}}(\nabla ^2f(\bar{x}))\) for all \(x \in \mathcal {S}\) close enough to \(\bar{x}\).

Proof

Since \(f\) is \({\textrm{C}^{2}}\) the eigenvalues of \(\nabla ^2f\) are continuous and the map \(x \mapsto {{\,\textrm{rank}\,}}(\nabla ^2f(x))\) is lower semi-continuous, that is, if \(x \in \mathcal {M}\) is close enough to \(\bar{x}\) then \({{\,\textrm{rank}\,}}\nabla ^2f(x) \ge d\), where \(d= {{\,\textrm{rank}\,}}\nabla ^2f(\bar{x})\). Furthermore, if \(y \in \mathcal {S}\) is sufficiently close to \(\bar{x}\) then \(\lambda _{d+ 1}(\nabla ^2f(y)) < \mu \) by continuity of eigenvalues, and Proposition 2.12 then implies \(\lambda _{d+ 1}(\nabla ^2f(y)) = 0\). \(\square \)
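To make the constant-rank statement concrete, here is a small sketch on a toy function that is not from the text: \(f(x, y) = (y - x^2)^2\) on \({\mathbb R}^2\), whose minimizers form the parabola \(y = x^2\). On that set the Hessian equals \(2 n n^\top \) with \(n = (-2x, 1)\), so its rank should be 1 everywhere on the parabola and its non-zero eigenvalue \(2(1 + 4x^2)\) stays bounded away from zero. The helper names and the tolerance are illustrative assumptions.

```python
import math

def hessian(x, y):
    """Hessian entries (h_xx, h_xy, h_yy) of f(x, y) = (y - x^2)^2."""
    return 12.0 * x * x - 4.0 * y, -4.0 * x, 2.0

def eigenvalues_sym2(a, b, c):
    """Eigenvalues (largest first) of the symmetric matrix [[a, b], [b, c]]."""
    mean, radius = 0.5 * (a + c), math.hypot(0.5 * (a - c), b)
    return mean + radius, mean - radius

def numerical_rank(eigs, tol=1e-9):
    """Number of eigenvalues larger than tol in absolute value."""
    return sum(1 for lam in eigs if abs(lam) > tol)

# Points on S = {(x, x^2)}: an illustrative sample around the minimum (0, 0).
on_S = [(0.1 * k, (0.1 * k) ** 2) for k in range(-5, 6)]

spectra = [eigenvalues_sym2(*hessian(x, y)) for x, y in on_S]
ranks = [numerical_rank(eigs) for eigs in spectra]
smallest_positive = min(eigs[0] for eigs in spectra)

print(ranks, smallest_positive)
```

The smallest non-zero eigenvalue over the sample is attained at the origin, where it equals 2.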

This allows us to show that \(\nabla f\) aligns locally in a special way with the eigenspaces of \(\nabla ^2f\). This alignment will be particularly valuable to analyze second-order algorithms in Sect. 4.

Lemma 2.14

Suppose \(f\) is \({\textrm{C}^{2}}\) and satisfies (PŁ) around \(\bar{x}\in \mathcal {S}\). Let \(d= {{\,\textrm{rank}\,}}(\nabla ^2f(\bar{x}))\). Then the orthogonal projector P(x) onto the top \(d\) eigenspace of \(\nabla ^2f(x)\) is well defined when x is sufficiently close to \(\bar{x}\), and (with I denoting identity)

\[
\Vert (I - P(x)) \nabla f(x)\Vert = o({{\,\textrm{dist}\,}}(x, \mathcal {S})) = o(\Vert \nabla f(x)\Vert )
\]

as \(x \rightarrow \bar{x}\). Additionally, if \(\nabla ^2f\) is locally Lipschitz continuous around \(\bar{x}\) then \(\Vert (I - P(x)) \nabla f(x)\Vert = O({{\,\textrm{dist}\,}}(x, \mathcal {S})^2) = O(\Vert \nabla f(x)\Vert ^2)\) as \(x \rightarrow \bar{x}\).

Proof

Given a point \(x \in \mathcal {M}\), let \(P(x) :\textrm{T}_x\mathcal {M}\rightarrow \textrm{T}_x\mathcal {M}\) denote the orthogonal projector onto the top \(d\) eigenspace of \(\nabla ^2f(x)\). This is well defined provided \(\lambda _{d}(\nabla ^2f(x)) > \lambda _{d+1}(\nabla ^2f(x))\). Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\) as in Lemma 1.5. By continuity of the eigenvalues of \(\nabla ^2f\), we can shrink \({\mathcal {U}}\) so that for all \(x \in {\mathcal {U}}\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\) the projectors P(x) and P(y) are well defined. Given \(x \in {\mathcal {U}}\), we let \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\) and \(v = \textrm{Log}_{y}(x)\). Now define \(\gamma (t) = \textrm{Exp}_y(tv)\). A Taylor expansion of the gradient around y gives that

\[
\nabla f(x) = \Gamma _{v} \nabla ^2f(y)[v] + r(x),
\]

where \(r(x) = o(\Vert v\Vert )\) as \(x \rightarrow \bar{x}\) by continuity of the Hessian.

By Corollary 2.13, the rank of \(\nabla ^2f\) is locally constant on \(\mathcal {S}\) (equal to \(d\)) so \(\nabla ^2f(y) = P(y) \nabla ^2f(y)\) whenever x is sufficiently close to \(\bar{x}\) (using the bound \({{\,\textrm{dist}\,}}(y, \bar{x}) \le 2 {{\,\textrm{dist}\,}}(x, \bar{x})\) from (3)). It follows that

\[
(I - P(x)) \nabla f(x) = (I - P(x)) \Gamma _{v} P(y) \nabla ^2f(y)[v] + (I - P(x)) r(x).
\]

Notice that \(\Gamma _{v} \rightarrow I\) as \(x \rightarrow \bar{x}\) and \(P(y) \rightarrow P(\bar{x})\) as \(x \rightarrow \bar{x}\) so \((I - P(x))\Gamma _{v} P(y) \rightarrow 0\) as \(x \rightarrow \bar{x}\). It follows that \((I - P(x)) \nabla f(x) = o(\Vert v\Vert )\) as \(x \rightarrow \bar{x}\). The claim follows by noting that \(\Vert v\Vert = {{\,\textrm{dist}\,}}(x, \mathcal {S})\) and that \({{\,\textrm{dist}\,}}(x, \mathcal {S})\) is commensurate with \(\Vert \nabla f(x)\Vert \) (as shown in Proposition 2.8).

Suppose now that \(\nabla ^2f\) is locally Lipschitz continuous around \(\bar{x}\). Then P is also locally Lipschitz continuous around \(\bar{x}\) [93, Thm. 1] and \((I - P(x)) \Gamma _{v} P(y) = (I - P(x)) \Gamma _{v} (P(y) - \Gamma _{v}^{-1} P(x) \Gamma _{v}) = O(\Vert v\Vert )\). Moreover, we have \(r(x) = O(\Vert v\Vert ^2)\) so it follows that \((I - P(x)) \nabla f(x) = O(\Vert v\Vert ^2)\) as \(x \rightarrow \bar{x}\). We conclude again with Proposition 2.8. \(\square \)
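The alignment of the gradient with the top eigenspace can be observed on a small example. The sketch below uses a toy function that is not from the text, \(f(x, y) = (y - x^2)^2\) (smooth, with minimizers on the parabola \(y = x^2\) and \(d= 1\)), and evaluates \(\Vert (I - P(x)) \nabla f(x)\Vert \) at points approaching the parabola. The base point `x0 = 0.7`, the gaps and the helper names are illustrative assumptions.

```python
import math

def gradient(x, y):
    """Gradient of f(x, y) = (y - x^2)^2."""
    g = y - x * x
    return -4.0 * x * g, 2.0 * g

def hessian(x, y):
    """Hessian entries (h_xx, h_xy, h_yy) of f."""
    return 12.0 * x * x - 4.0 * y, -4.0 * x, 2.0

def top_eigenvector(a, b, c):
    """Unit eigenvector for the largest eigenvalue of [[a, b], [b, c]]."""
    lam = 0.5 * (a + c) + math.hypot(0.5 * (a - c), b)
    # Two algebraically valid candidates; keep the better conditioned one.
    u1, u2 = (lam - c, b), (b, lam - a)
    ux, uy = u1 if math.hypot(*u1) >= math.hypot(*u2) else u2
    n = math.hypot(ux, uy)
    return ux / n, uy / n

def residual_and_gradient_norms(x, y):
    """Return (||(I - P) grad f||, ||grad f||) with P the rank-one projector."""
    gx, gy = gradient(x, y)
    ux, uy = top_eigenvector(*hessian(x, y))
    along = ux * gx + uy * gy
    return math.hypot(gx - along * ux, gy - along * uy), math.hypot(gx, gy)

x0 = 0.7
linear_ratios, quadratic_ratios = [], []
for gap in (1e-1, 1e-2, 1e-3, 1e-4):
    res, gnorm = residual_and_gradient_norms(x0, x0 * x0 + gap)
    linear_ratios.append(res / gnorm)          # expected to tend to zero
    quadratic_ratios.append(res / gnorm ** 2)  # expected to stay bounded

print(linear_ratios, quadratic_ratios)
```

On this example the first list decreases roughly in proportion to the gap while the second one stays of order \(10^{-1}\), in line with the \(O(\Vert \nabla f(x)\Vert ^2)\) estimate for a Lipschitz continuous Hessian.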

The lemma below exhibits a submanifold \(\mathcal {Z}\) that contains \(\bar{x}\). It need not coincide with the set of minima \(\mathcal {S}\). However, they do coincide if we assume that PŁ holds. This is the main argument to show that \(\mathcal {S}\) is locally a submanifold whenever \(f\) is PŁ and sufficiently regular.

Lemma 2.15

Suppose \(f\) is \({\textrm{C}^{p}}\) with \(p \ge 2\). Let \(\bar{x}\in \mathcal {S}\) and define \({\mathcal {U}} = \{x \in \mathcal {M}: {{\,\textrm{dist}\,}}(x, \bar{x}) < \textrm{inj}(\bar{x})\}\). Let \(P(\bar{x})\) denote the orthogonal projector onto the image of \(\nabla ^2f(\bar{x})\). Then the set

\[
\mathcal {Z}= \{x \in {\mathcal {U}} : P(\bar{x}) \Gamma _{x}^{\bar{x}} \nabla f(x) = 0\}
\]

is a \({\textrm{C}^{p - 1}}\) embedded submanifold locally around \(\bar{x}\). If \(f\) is analytic then \(\mathcal {Z}\) is also analytic.

Proof

We build a local defining function for \(\mathcal {Z}\). Let \(d\) be the rank of \(\nabla ^2f(\bar{x})\) and \(u_1, \ldots , u_d\) be a set of orthonormal eigenvectors of \(\nabla ^2f(\bar{x})\) with associated eigenvalues \(\lambda _1, \ldots , \lambda _d> 0\). We define \(h :{\mathcal {U}} \rightarrow {\mathbb R}^d\) as \(h_i(x) = \langle u_i, \Gamma _{x}^{\bar{x}} \nabla f(x)\rangle \). Clearly, \(h(x) = 0\) if and only if \(x \in \mathcal {Z}\). The function h is \({\textrm{C}^{p - 1}}\) if \(f\) is \({\textrm{C}^{p}}\), and analytic if \(f\) is analytic. For all \(\dot{x} \in \textrm{T}_{\bar{x}}\mathcal {M}\) we have

\[
\textrm{D}h_i(\bar{x})[\dot{x}] = \langle u_i, \nabla ^2f(\bar{x})[\dot{x}]\rangle = \lambda _i \langle u_i, \dot{x}\rangle .
\]

It follows that \(\textrm{D}h(\bar{x})\) has full rank. Thus, \(\mathcal {Z}\) is a submanifold around \(\bar{x}\) with the stated regularity. \(\square \)

A result similar to Lemma 2.15 is presented in [31, Lem. 1] for Banach spaces. We are now ready for one of our main theorems, regarding the regularity of the set of local minimizers \(\mathcal {S}\) (1).

Theorem 2.16

Suppose \(f\) is \({\textrm{C}^{p}}\) with \(p \ge 2\) and satisfies (PŁ) around \(\bar{x}\in \mathcal {S}\). Then \(\mathcal {S}\) is a \({\textrm{C}^{p - 1}}\) submanifold of \(\mathcal {M}\) locally around \(\bar{x}\). If \(f\) is analytic then \(\mathcal {S}\) is also analytic.

Proof

We let \(d\) denote the rank of \(\nabla ^2f(\bar{x})\). By Corollary 2.13, \({{\,\textrm{rank}\,}}\nabla ^2f(y) = d\) for all \(y \in \mathcal {S}\) sufficiently close to \(\bar{x}\). We let \({\mathcal {U}} \subseteq \textrm{B}(\bar{x}, \textrm{inj}(\bar{x}))\) be a neighborhood of \(\bar{x}\) such that for all \(x \in {\mathcal {U}}\) the orthogonal projector \(P(x) :\textrm{T}_x\mathcal {M}\rightarrow \textrm{T}_x\mathcal {M}\) onto the top \(d\) eigenspace of \(\nabla ^2f(x)\) is well defined (it exists because eigenvalues of \(\nabla ^2f\) are continuous). Lemma 2.15 ensures that

\[
\mathcal {Z}= \{x \in {\mathcal {U}} : P(\bar{x}) \Gamma _{x}^{\bar{x}} \nabla f(x) = 0\}
\]

is a submanifold around \(\bar{x}\). Clearly, \(\mathcal {S}\cap {\mathcal {U}} \subseteq \mathcal {Z}\) holds. We now show the other inclusion to obtain that \(\mathcal {S}\) and \(\mathcal {Z}\) coincide around \(\bar{x}\). From Lemma 2.14 we have \(\Vert \nabla f(x)\Vert \le \Vert P(x) \nabla f(x)\Vert + o(\Vert \nabla f(x)\Vert )\) as \(x \rightarrow \bar{x}\). Moreover, the triangle inequality gives \(\Vert P(x) \nabla f(x)\Vert \le \Vert (P(x) - \Gamma _{\bar{x}}^{x} P(\bar{x}) \Gamma _{x}^{\bar{x}}) \nabla f(x)\Vert + \Vert P(\bar{x}) \Gamma _{x}^{\bar{x}} \nabla f(x)\Vert \). By continuity of P we have \(P(x) - \Gamma _{\bar{x}}^{x} P(\bar{x}) \Gamma _{x}^{\bar{x}} = o(1)\) as \(x \rightarrow \bar{x}\) so it follows that

\[
\Vert \nabla f(x)\Vert \le \Vert P(\bar{x}) \Gamma _{x}^{\bar{x}} \nabla f(x)\Vert + o(\Vert \nabla f(x)\Vert )
\]

as \(x \rightarrow \bar{x}\). We conclude that \(P(\bar{x}) \Gamma _{x}^{\bar{x}} \nabla f(x) = 0\) implies \(\nabla f(x) = 0\) for all x sufficiently close to \(\bar{x}\). This confirms that \(\mathcal {Z}\subseteq \mathcal {S}\cap {\mathcal {U}}\) around \(\bar{x}\) because all critical points near \(\bar{x}\) are in \(\mathcal {S}\) by PŁ. \(\square \)

The codimension of \(\mathcal {S}\) is equal to the rank of \(\nabla ^2f\) on \(\mathcal {S}\), as expected. A similar result holds for Banach spaces when the function is assumed analytic [44, Thm. 1]. Around \(\bar{x}\), the set of all minima of \(f\) and \(\mathcal {S}\) coincide when PŁ holds. Hence, Theorem 2.16 implies that the set of minima of \(f\) is a submanifold around \(\bar{x}\). Using the QG property we now deduce that PŁ implies MB.

Corollary 2.17

If \(f\) is \({\textrm{C}^{2}}\) and \(\mu \)-(PŁ) around \(\bar{x}\in \mathcal {S}\) then it satisfies \(\mu \)-(MB) at \(\bar{x}\). The same holds if \(f\) is \(\mu \)-(EB) or \(\mu \)-(QG) rather than \(\mu \)-(PŁ).

Proof

Apply Theorem 2.16 to get that \(\mathcal {S}\) is locally a \({\textrm{C}^{1}}\) submanifold around \(\bar{x}\). Proposition 2.2 gives (QG) around \(\bar{x}\). Finally apply Proposition 2.7 to normal eigenvectors of \(\nabla ^2f(\bar{x})\). This yields that the normal eigenvalues are at least \(\mu \). We obtain the same result if we suppose \(\mu \)-EB or \(\mu \)-QG instead of \(\mu \)-PŁ. This is because they both imply \(\mu '\)-PŁ for \(\mu ' < \mu \) arbitrarily close to \(\mu \). Taking the limit \(\mu ' \rightarrow \mu \) gives \(\mu \)-MB. \(\square \)

Remark 2.18

(Connections to the distance function) Given a closed set \(\mathfrak {X}\subseteq \mathcal {M}\), the function \(f(x) = \frac{1}{2}{{\,\textrm{dist}\,}}_{\mathfrak {X}}(x)^2\) clearly satisfies QG. If \(f\) is \({\textrm{C}^{p}}\) with \(p \ge 2\) in a neighborhood of \(\mathfrak {X}\) then Theorem 2.16 applies, revealing that \(\mathfrak {X}\) is a \({\textrm{C}^{p-1}}\) submanifold. This question is of independent interest, see for example [15] for a proof when assuming \(p \ge 3\).

Remark 2.19

(Structure when \(f\) is only \({\textrm{C}^{1}}\)) Theorem 2.16 requires \(f\) to be \({\textrm{C}^{2}}\). And indeed, if \(f\) is only \({\textrm{C}^{1}}\) the set of minima may not be a submanifold. We provide two examples.

The function \(f(x, y) = \frac{x^2y^2}{x^2 + y^2}\) is \({\textrm{C}^{1}}\) and PŁ around the origin, yet its minimizers form a cross (see Fig. 2).Footnote 2 Incidentally, f is \(\frac{1}{\sqrt{2}}\)-EB but only \(\frac{1}{2}\)-PŁ around the origin, confirming that the constant worsens for the implication \({\text {EB}} \Rightarrow {\text {P}{\L } } \) when \(f\) is only \({\textrm{C}^{1}}\).

Additionally, let \(\mathfrak {X}\subseteq \mathcal {M}\) be a closed set and suppose that the distance function \({{\,\textrm{dist}\,}}_{\mathfrak {X}}\) is \({\textrm{C}^{1}}\) around \(\mathfrak {X}\) (such a set is called proximally smooth [32]). For \(f(x) = \frac{1}{2}{{\,\textrm{dist}\,}}_\mathfrak {X}(x)^2\), we find that \(\nabla f(x) = x - {{\,\textrm{proj}\,}}_\mathfrak {X}(x)\), meaning that \(f\) is PŁ around \(\mathfrak {X}\) with constant \(\mu = 1\). This holds in particular for all closed convex sets, yet many such sets fail to be \({\textrm{C}^{0}}\) submanifolds (e.g., consider a closed square in \(\mathcal {M}= {\mathbb R}^2\)). This provides further examples of \({\textrm{C}^{1}}\) functions satisfying PŁ yet for which the set of minima is not \({\textrm{C}^{0}}\).
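The constants quoted for the first example can be checked numerically. The sketch below (function names and the sampling grid are illustrative assumptions) evaluates \(f(x, y) = \frac{x^2y^2}{x^2 + y^2}\) on rays in the open first quadrant, where the distance to the cross of minimizers is \(\min (|x|, |y|)\), and records the smallest observed ratios for EB and for PŁ (normalized as \(f(x) - f_{\mathcal {S}}\le \frac{1}{2\mu }\Vert \nabla f(x)\Vert ^2\)).

```python
import math

def f(x, y):
    """C^1 function whose minimizers are the two coordinate axes."""
    return x * x * y * y / (x * x + y * y)

def grad_norm(x, y):
    """Norm of the gradient (2 x y^4, 2 x^4 y) / (x^2 + y^2)^2."""
    q = (x * x + y * y) ** 2
    return math.hypot(2.0 * x * y ** 4 / q, 2.0 * x ** 4 * y / q)

def dist_to_cross(x, y):
    """Distance to the union of the two axes."""
    return min(abs(x), abs(y))

eb_ratios, pl_ratios = [], []
for k in range(1, 180):  # angles strictly between 0 and pi/2; k = 90 is the diagonal
    phi = math.pi * k / 360.0
    for radius in (1e-1, 1e-2, 1e-3):
        x, y = radius * math.cos(phi), radius * math.sin(phi)
        g = grad_norm(x, y)
        eb_ratios.append(g / dist_to_cross(x, y))  # largest admissible EB constant
        pl_ratios.append(g * g / (2.0 * f(x, y)))  # largest admissible PL constant

print(min(eb_ratios), min(pl_ratios))
```

Both minima are attained on the diagonal \(x = y\), where the ratios equal \(\frac{1}{\sqrt{2}}\) and \(\frac{1}{2}\) respectively; the other quadrants follow by symmetry.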

Remark 2.20

(Restricted secant inequality) It is possible to show equivalences with even more properties. For example, as in [101], we say \(f\) satisfies the restricted secant inequality (RSI) with constant \(\mu \) around \(\bar{x}\in \mathcal {S}\) if \(\langle \nabla f(x), v\rangle \ge \mu {{\,\textrm{dist}\,}}(x, \mathcal {S})^2\) for all x in a neighborhood of \(\bar{x}\), where \(v = -\textrm{Log}_x(y)\) and \(y \in {{\,\textrm{proj}\,}}_\mathcal {S}(x)\). From simple Taylor expansion arguments, we find that \(\mu \)-MB implies \(\mu '\)-RSI for all \(\mu ' < \mu \). By Cauchy–Schwarz, we also find that \(\mu \)-RSI implies \(\mu \)-EB for \({\textrm{C}^{1}}\) functions (see [52]). It follows that for \({\textrm{C}^{2}}\) functions RSI is also equivalent to the four properties that we consider.

Remark 2.21

(Other Łojasiewicz exponents) The PŁ condition is exactly (Ł) with exponent \(\theta = \frac{1}{2}\). We comment here about other values of \(\theta \). First, suppose \(f\) is \({\textrm{C}^{1}}\) and \(\nabla f\) locally Lipschitz continuous. If \(f\) is non-constant around \(\bar{x}\in \mathcal {S}\) then it cannot satisfy (Ł) with an exponent \(\theta < \frac{1}{2}\) around \(\bar{x}\). This is because these assumptions are incompatible with the growth property from Proposition 2.2. See also [1, Thm. 4] for an algorithmic perspective on this. Now suppose \(f\) is \({\textrm{C}^{2}}\) and satisfies (Ł) with exponent \(\theta \) around \(\bar{x}\in \mathcal {S}\). If \(\theta < \frac{2}{3}\) and \(\nabla ^2f\) is Lipschitz continuous around \(\bar{x}\) then PŁ holds around \(\bar{x}\). Furthermore, if \(\theta = \frac{2}{3}\) and \(f\) is additionally \({\textrm{C}^{3}}\) then PŁ also holds around \(\bar{x}\). We include a proof of these observations in Appendix B.

3 Stability of minima and linear rates

In this section, we consider two types of algorithmic questions: the stability of minima and standard local convergence rates. We review some necessary classical arguments, taking this opportunity to generalize some of them to accommodate non-isolated minima. This will serve us well to analyze algorithms in Sect. 4.

3.1 Capture for sets of non-isolated minima

Typically, global convergence analyses of optimization algorithms merely guarantee that iterates accumulate only at critical points. The set of accumulation points may be empty (when the iterates diverge). Worse, it may even be infinite when minima are not isolated. See [2, §3.2.1] and [18, §5.3] for examples of pathological functions for which reasonable algorithms (such as gradient descent) produce iterates with continuous sets of accumulation points. The latter issue cannot occur when minima are isolated.

What kind of stability results still hold when minima are not isolated? Consider an algorithm generating iterates as \(x_{k + 1} = F_k(x_k, \ldots , x_0)\), where \(F_k\) is a descent mapping: it satisfies \(f(F_k(x_k, \ldots , x_0)) \le f(x_k)\) for all \(x_k, \ldots , x_0 \in \mathcal {M}\). Many deterministic algorithms fall in this category, including gradient descent and trust-region methods under suitable hypotheses. The standard capture theorem asserts that if the iterates generated by such descent mappings get sufficiently close to an isolated local minimum then the sequence eventually converges to it (under a few weak assumptions) [16, Prop. 1.2.5], [4, Thm. 4.4.2]. The result can be easily extended to a compact set of non-isolated local minima that satisfies several properties that we define now.

Definition 3.1

We say \(\mathfrak {X}\subseteq \mathcal {M}\) is isolated from critical points if there exists a neighborhood \({\mathcal {U}}\) of \(\mathfrak {X}\) such that \(x \in {\mathcal {U}}\) and \(\nabla f(x) = 0\) imply \(x \in \mathfrak {X}\).

Note that the points in \(\mathfrak {X}\) do not need to be isolated: the set \(\mathfrak {X}\) may be a continuum of critical points. It is clear that if \(\mathfrak {X}\subseteq \mathcal {S}\) (1) is isolated from critical points then there exists a neighborhood \({\mathcal {U}}\) of \(\mathfrak {X}\) such that \(f(x) > f_{\mathcal {S}}\) for all \(x \in {\mathcal {U}} {\setminus } \mathfrak {X}\), where \(f_{\mathcal {S}}\) is the value of \(f\) on \(\mathfrak {X}\). The capture result below, based on [4, Thm. 4.4.2], states that if the set of minima \(\mathfrak {X}\subseteq \mathcal {S}\) is both compact and isolated from critical points then it traps the iterates generated by all reasonable descent algorithms. A key hypothesis is that the steps have to be small around local minima: that is typically the case.

Definition 3.2

An algorithm which generates sequences on \(\mathcal {M}\) has the vanishing steps property on a set \(\mathfrak {X}\subseteq \mathcal {M}\) if there exists a neighborhood \({\mathcal {U}}\) of \(\mathfrak {X}\) and a continuous function \(\eta :\mathcal {M}\rightarrow {\mathbb R}_+\) with \(\eta (\mathfrak {X}) = 0\) such that, if \(x_k\) is an iterate in \({\mathcal {U}}\), then the next iterate \(x_{k + 1}\) satisfies

\[
{{\,\textrm{dist}\,}}(x_k, x_{k + 1}) \le \eta (x_k). \qquad (\text {VS})
\]

We say that the algorithm has the (VS) property at a point \(\bar{x}\in \mathcal {S}\) if it holds on the set \(\{\bar{x}\}\).

Proposition 3.3

(Capture of iterates) Let \(\mathfrak {X}\) be a compact subset of \(\mathcal {S}\) isolated from critical points. Consider an algorithm that produces iterates as \(x_{k + 1} = F_k(x_k, \ldots , x_0)\), where \(F_k\) is a descent mapping. Assume that it satisfies the (VS) property on \(\mathfrak {X}\). Also suppose that the sequences generated by this algorithm accumulate only at critical points of \(f\). Then there exists a neighborhood \({\mathcal {U}}\) of \(\mathfrak {X}\) such that if a sequence enters \({\mathcal {U}}\) then all subsequent iterates are in \({\mathcal {U}}\) and \({{\,\textrm{dist}\,}}(x_k, \mathfrak {X}) \rightarrow 0\).

Proof

There exists a compact neighborhood \({\mathcal {V}}\) of \(\mathfrak {X}\) such that \({\mathcal {V}} \setminus \mathfrak {X}\) does not contain any critical point and \(f(x) > f_{\mathcal {S}}\) for all \(x \in {\mathcal {V}} {\setminus } \mathfrak {X}\). The (VS) property implies that there exists an open neighborhood \({\mathcal {W}}\) of \(\mathfrak {X}\) included in \({\mathcal {V}}\) such that for all \(k \in {\mathbb N}\), \(x_k \in {\mathcal {W}}\) and \(x_{k - 1}, \ldots , x_0 \in \mathcal {M}\) we have \(F_k(x_k, \ldots , x_0) \in {\mathcal {V}}\). The set \({\mathcal {V}} {\setminus } {\mathcal {W}}\) is compact, and we let \(\alpha > f_{\mathcal {S}}\) denote the minimum of \(f\) on this set. We define \({\mathcal {U}} = \{x \in {\mathcal {V}}: f(x) < \alpha \}\), which is included in \({\mathcal {W}}\) by minimality of \(\alpha \). Now let \(K \in {\mathbb N}\), \(x_K \in {\mathcal {U}}\) and \(x_{K - 1}, \ldots , x_0 \in \mathcal {M}\). Then we have \(F_K(x_K, \ldots , x_0) \in {\mathcal {V}}\) by definition of \({\mathcal {W}}\). Moreover, \(F_K\) is a descent mapping so \(f(F_K(x_K, \ldots , x_0)) < \alpha \), and it implies that \(F_K(x_K, \ldots , x_0) \in {\mathcal {U}}\). It follows that \(x_{K + 1}\) is in \({\mathcal {U}}\) and all subsequent iterates are also in \({\mathcal {U}}\). Now we show that \(\{x_k\}\) converges to \(\mathfrak {X}\). The sequence \(\{x_k\}\) eventually stays in a compact set (because \(x_k \in {\mathcal {U}} \subseteq {\mathcal {V}}\) for all \(k \ge K\)) so it has a non-empty and compact set of accumulation points that we denote by \({\mathcal {A}}\). Then \({\mathcal {A}} \subseteq \mathfrak {X}\) because \({\mathcal {A}} \subseteq {\mathcal {V}}\) and the only critical points in \({\mathcal {V}}\) are in \(\mathfrak {X}\). The set of accumulation points of \(\{{{\,\textrm{dist}\,}}(x_k, {\mathcal {A}})\}\) is \(\{0\}\) (because \(\{x_k\}\) is bounded). 
So we deduce that \(\lim _{k \rightarrow +\infty } {{\,\textrm{dist}\,}}(x_k, \mathfrak {X}) \le \lim _{k \rightarrow +\infty } {{\,\textrm{dist}\,}}(x_k, {\mathcal {A}}) = 0\). \(\square \)

This statement does not guarantee that the iterates converge to a specific point, but merely that \({{\,\textrm{dist}\,}}(x_k, \mathfrak {X}) \rightarrow 0\). Notice that we do not require any particular structure for \(\mathfrak {X}\) nor any form of function growth. The assumptions on the mappings \(F_k\) are also mild.

However, we need \(\mathfrak {X}\) to be compact and this cannot be relaxed. Indeed, consider the function \(f:{\mathbb R}^2 \rightarrow {\mathbb R}\) defined as \(f(x, y) = \exp (x)y^2\). The set of global minima is \(\mathfrak {X}= \{(x, y) \in {\mathbb R}^2: y = 0\}\), and it contains all the critical points of \(f\). Consider the update rule \((x_{k + 1}, y_{k + 1}) = (x_k - y_k^2, y_k)\). It satisfies the descent condition because \(f(x_{k + 1}, y_{k + 1}) = \exp (-y_k^2)f(x_k, y_k)\) for all k. The distance assumption (VS) also holds because \({{\,\textrm{dist}\,}}((x_{k + 1}, y_{k + 1}), (x_k, y_k)) = {{\,\textrm{dist}\,}}((x_k - y_k^2, y_k), (x_k, y_k)) = y_k^2\). However, the sequence \(\{{{\,\textrm{dist}\,}}((x_k, y_k), \mathfrak {X})\}\) is constant (and not converging to zero) even if we initialize the algorithm arbitrarily close to \(\mathfrak {X}\) (but not exactly on \(\mathfrak {X}\)).
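The counterexample above is easy to check numerically. The following is a minimal sketch in plain Python; the function names (`f`, `step`, `run`) and the starting point are illustrative choices, not part of the original text.

```python
import math


def f(x, y):
    """Cost function f(x, y) = exp(x) * y^2 from the counterexample."""
    return math.exp(x) * y * y


def step(x, y):
    """Update rule (x, y) -> (x - y^2, y): moves along x, never changes y."""
    return x - y * y, y


def run(x0, y0, iters):
    """Iterate the update rule; record cost values and distances to {y = 0}."""
    x, y = x0, y0
    values = [f(x, y)]
    dists = [abs(y)]  # distance from (x, y) to the line y = 0
    for _ in range(iters):
        x, y = step(x, y)
        values.append(f(x, y))
        dists.append(abs(y))
    return values, dists


values, dists = run(0.0, 0.1, 1000)
print("first and last cost value:", values[0], values[-1])
print("first and last distance to the solution set:", dists[0], dists[-1])
```

The cost decreases at every iteration while the distance to \(\mathfrak {X}\) never changes, because the update leaves \(y_k\) untouched and the iterates escape along the non-compact direction \(x \rightarrow -\infty \).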

The vanishing steps property is a reasonable assumption. When \(\mathcal {M}= {\mathbb R}^n\), many optimization algorithms have an update rule of the form \(x_{k + 1} = x_k + s_k\) and the vector \(s_k\) is small if \(x_k\) is close to a local minimum (e.g., \(s_k = -\alpha _k \nabla f(x_k)\) with bounded \(\alpha _k\)). For a general manifold \(\mathcal {M}\), algorithms produce iterates as \(x_{k + 1} = \textrm{R}_{x_k}(s_k)\), where \(\textrm{R}:\textrm{T}\mathcal {M}\rightarrow \mathcal {M}:(x, s) \mapsto \textrm{R}_x(s)\) is a retraction [24, Def. 3.47]. Specifically, for all \((x, s) \in \textrm{T}\mathcal {M}\) the curve \(c(t) = \textrm{R}_x(ts)\) satisfies \(c(0) = x\) and \(c'(0) = s\).

To ensure vanishing steps, we must control the distance traveled by retractions. We let \(c_{\textrm{r}}\ge 1\) be such that

\[ {{\,\textrm{dist}\,}}(\textrm{R}_x(s), x) \le c_{\textrm{r}}\Vert s\Vert \]

for all \((x, s) \in \textrm{T}\mathcal {M}\) where \(\Vert s\Vert \) is smaller than some fixed positive radius. For \(\textrm{R}= \textrm{Exp}\) (including the Euclidean case) the choice \(c_{\textrm{r}}= 1\) is valid for all \(s\). More generally, \(c_{\textrm{r}}\) can be set arbitrarily close to 1 for all retractions as long as the radius is sufficiently small [86, Lem. 6].
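As an illustration, the sketch below checks a bound of the form \({{\,\textrm{dist}\,}}(\textrm{R}_x(s), x) \le c_{\textrm{r}}\Vert s\Vert \) on the unit sphere in \({\mathbb R}^3\) with the projection retraction \(\textrm{R}_x(s) = (x + s) / \Vert x + s\Vert \). The choice of manifold and retraction, and all function names, are assumptions made for this example only. For this retraction the geodesic distance equals \(\arctan (\Vert s\Vert )\), so \(c_{\textrm{r}}= 1\) should be valid for all tangent vectors.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def retract(x, s):
    """Projection retraction on the unit sphere: normalize x + s."""
    v = [a + b for a, b in zip(x, s)]
    n = norm(v)
    return [a / n for a in v]


def sphere_dist(x, y):
    """Geodesic (great-circle) distance between unit vectors x and y."""
    return math.acos(max(-1.0, min(1.0, dot(x, y))))


def random_point_and_tangent(rng, step_norm):
    """Random unit vector x and a tangent vector s at x with norm step_norm."""
    x = [rng.gauss(0.0, 1.0) for _ in range(3)]
    nx = norm(x)
    x = [a / nx for a in x]
    g = [rng.gauss(0.0, 1.0) for _ in range(3)]
    c = dot(g, x)
    s = [a - c * b for a, b in zip(g, x)]  # project g onto the tangent space
    ns = norm(s)
    return x, [step_norm * a / ns for a in s]


def worst_ratio(num_samples=1000, seed=0):
    """Largest observed dist(R_x(s), x) / ||s|| over random samples."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(num_samples):
        t = rng.uniform(0.1, 3.0)
        x, s = random_point_and_tangent(rng, t)
        worst = max(worst, sphere_dist(x, retract(x, s)) / t)
    return worst


print("largest observed ratio dist / ||s||:", worst_ratio())
```

The observed ratio stays below 1 and approaches 1 only for short steps, which matches the remark that \(c_{\textrm{r}}\) can be taken close to 1 when the radius is small.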

3.2 Lyapunov stability and convergence to a single point

Pathological behavior such as a continuous set of accumulation points can be ruled out assuming Łojasiewicz inequalities. In this case, local minima are stable for a variety of algorithms, even without compactness hypothesis (in contrast to Proposition 3.3). This in turn ensures that the iterates converge to a single point. In this section, we review arguments from [2], [10, Lem. 2.6], [21, Thm. 14] and [81, Thm. 4].

A central property to obtain convergence to a single point is a bound on the path length of the iterates. We make this precise in the following definition.

Definition 3.4

An algorithm which generates sequences on \(\mathcal {M}\) has the bounded path length property on a set \(\mathfrak {X}\subseteq \mathcal {M}\) if the following is true. There exist a neighborhood \({\mathcal {U}}\) of \(\mathfrak {X}\) and a continuous function \(\gamma :\mathcal {M}\rightarrow {\mathbb R}_+\) with \(\gamma (\mathfrak {X}) = 0\) such that, if \(x_L, \ldots , x_K \in \mathcal {M}\) are consecutive points generated by the algorithm and which are all in \({\mathcal {U}}\), then

\[ \sum _{k = L}^{K - 1} {{\,\textrm{dist}\,}}(x_k, x_{k + 1}) \le \gamma (x_L). \tag{BPL} \]

We say that the algorithm has the (BPL) property at a point \(\bar{x}\in \mathcal {M}\) if it does so on the set \(\{\bar{x}\}\).

Here we think of the algorithm as an optimization method with some fixed hyper-parameters. The definition is given for a generic set \(\mathfrak {X}\) but the bounded path length property is usually only satisfied around local minima. Combined with the vanishing steps property, bounded path length ensures stability of local minima. For comparison, Absil et al. [2] and Attouch et al. [10] deduce (BPL) from a function decrease condition. Here, we factor out (BPL) to enable analysis of algorithms that do not satisfy that decrease condition.

Proposition 3.5

(Lyapunov stability) Suppose that an algorithm satisfies the (VS) and (BPL) properties at \(\bar{x}\in \mathcal {M}\). Given a neighborhood \({\mathcal {U}}\) of \(\bar{x}\), there exists a neighborhood \({\mathcal {V}}\) of \(\bar{x}\) such that if a sequence generated by this algorithm enters \({\mathcal {V}}\) then all subsequent iterates stay in \({\mathcal {U}}\).

Proof

The set \({\mathcal {U}}\) contains an open ball of radius \(\delta _u > 0\) around \(\bar{x}\) in which the (BPL) and (VS) properties are satisfied with some functions \(\gamma \) and \(\eta \). By continuity of \(\eta \) there exists an open ball \({\mathcal {W}}\) centered on \(\bar{x}\) of radius \(\delta _w\) that satisfies \(\delta _w + \eta (x) < \delta _u\) for all \(x \in {\mathcal {W}}\). Likewise, by continuity of \(\gamma \), there exists an open ball \({\mathcal {V}} \subset {\mathcal {W}}\) of radius \(\delta _v > 0\) around \(\bar{x}\) such that for all \(x \in {\mathcal {V}}\) we have \(\delta _v + \gamma (x) < \delta _w\). Suppose that an iterate \(x_L\) is in \({\mathcal {V}}\). For contradiction, suppose that some later iterate leaves \(\textrm{B}(\bar{x}, \delta _u)\) and let \(K \ge L\) be the first index such that \(x_{K + 1} \notin \textrm{B}(\bar{x}, \delta _u)\). Then \(x_L, \ldots , x_K\) are all in \(\textrm{B}(\bar{x}, \delta _u)\), and we deduce from the triangle inequality and the (BPL) property that

\[ {{\,\textrm{dist}\,}}(x_K, \bar{x}) \le {{\,\textrm{dist}\,}}(x_L, \bar{x}) + \sum _{k = L}^{K - 1} {{\,\textrm{dist}\,}}(x_k, x_{k + 1}) \le \delta _v + \gamma (x_L) < \delta _w. \]

It follows that \(x_K \in {\mathcal {W}}\). Using again the triangle inequality we find \({{\,\textrm{dist}\,}}(x_{K + 1}, \bar{x}) \le {{\,\textrm{dist}\,}}(x_K, \bar{x}) + {{\,\textrm{dist}\,}}(x_K, x_{K + 1}) \le \delta _w + \eta (x_K) < \delta _u\). This implies that \(x_{K + 1}\) is in \(\textrm{B}(\bar{x}, \delta _u)\): a contradiction. \(\square \)

In particular, this excludes that the iterates diverge. We can also guarantee that accumulation points are actually limit points.

Corollary 3.6

Suppose that an algorithm satisfies the (VS) and (BPL) properties at \(\bar{x}\in \mathcal {M}\). If it generates a sequence that accumulates at \(\bar{x}\) then the sequence converges to \(\bar{x}\).

Proof

Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\). From Proposition 3.5 there is a neighborhood \({\mathcal {V}}\) such that if an iterate is in \({\mathcal {V}}\) then all subsequent iterates are in \({\mathcal {U}}\). Since \(\bar{x}\) is an accumulation point we know that such an iterate exists. Repeat with a sequence of smaller and smaller neighborhoods of \(\bar{x}\). \(\square \)

Many optimization algorithms generate sequences that accumulate only at critical points. In that scenario, we can deduce that the sequence converges to a point, provided that it gets close enough to a set where (VS) and (BPL) hold.

Corollary 3.7

Consider an algorithm that satisfies the (VS) and (BPL) properties on a set \(\mathfrak {X}\subseteq \mathcal {M}\) and let \(\bar{x}\in \mathfrak {X}\). Let \({\mathcal {U}}\) be a neighborhood of \(\bar{x}\) such that if a sequence generated by this algorithm accumulates at a point \(x_\infty \in {\mathcal {U}}\) then \(x_\infty \) is in \(\mathfrak {X}\). There exists a neighborhood \({\mathcal {V}}\) of \(\bar{x}\) such that if a sequence enters \({\mathcal {V}}\) then all subsequent iterates stay in \({\mathcal {U}}\) and converge to some \(x_\infty \in {\mathcal {U}} \cap \mathfrak {X}\).

Proof

The set \({\mathcal {U}}\) contains a compact neighborhood \({\mathcal {B}}\) of \(\bar{x}\) such that the (VS) and (BPL) properties hold on \({\mathcal {B}} \cap \mathfrak {X}\). From Proposition 3.5 there exists a neighborhood \({\mathcal {V}}\) of \(\bar{x}\) such that if \(\{x_k\}\) enters \({\mathcal {V}}\) then it stays in \({\mathcal {B}}\). The set \({\mathcal {B}}\) is compact so \(\{x_k\}\) has at least one accumulation point \(x_\infty \in {\mathcal {B}}\). Our hypotheses ensure that \(x_\infty \) must be in \(\mathfrak {X}\) since \({\mathcal {B}} \subseteq {\mathcal {U}}\). We conclude with Corollary 3.6. \(\square \)

The conclusions of Corollary 3.7 are similar to the ones in [2, Prop. 3.3] and [10, Thm. 2.10] (see Footnote 3).

We describe below the argument that Absil et al. [2] used to show that many gradient descent algorithms satisfy (BPL) around points where \(f\) is Łojasiewicz. We say that the sequence \(\{x_k\}\) satisfies the strong decrease property around \(\bar{x}\in \mathcal {M}\) if there exists \(\sigma > 0\) such that

\[ f(x_k) - f(x_{k + 1}) \ge \sigma \Vert \nabla f(x_k)\Vert \, {{\,\textrm{dist}\,}}(x_k, x_{k + 1}) \tag{4} \]

whenever \(x_k\) is sufficiently close to \(\bar{x}\), as introduced by Absil et al. [2].

Lemma 3.8

Suppose that \(f\) satisfies (Ł) around \(\bar{x}\in \mathcal {S}\) with constants \(\theta \) and \(\mu \). If an algorithm generates sequences \(\{x_k\}\) that satisfy (4) around \(\bar{x}\) then it satisfies the (BPL) property at \(\bar{x}\) with

We include a proof of this statement in Appendix C for completeness. In fact, the algorithm would still satisfy the (BPL) property under the more general Kurdyka–Łojasiewicz assumption (see [2, §3.2.3]). In practice, many first-order algorithms (including gradient descent with constant step-sizes or with line-search) generate sequences with the strong decrease condition (4), as shown in [2, §4].

3.3 Asymptotic convergence rate

To conclude this section, we briefly review classical linear convergence results for gradient methods under the PŁ assumption, as needed for Sect. 4. Proofs are in Appendix D for completeness. It is well known that gradient descent with appropriate step-sizes converges linearly to a minimum when \(f\) satisfies PŁ globally and has a Lipschitz continuous gradient [81]. The same arguments lead to an asymptotic linear convergence rate when PŁ holds only locally. We say that the sequence \(\{x_k\}\) satisfies the sufficient decrease property with constant \(\omega > 0\) if

\[ f(x_k) - f(x_{k + 1}) \ge \omega \Vert \nabla f(x_k)\Vert ^2 \tag{5} \]

whenever \(x_k\) is sufficiently close to a point \(\bar{x}\). The classical result below follows from that inequality [81].

Proposition 3.9

Let \(\{x_k\}\) be a sequence of iterates converging to some \(\bar{x}\in \mathcal {S}\) and satisfying (5). Suppose \(f\) satisfies (PŁ) around \(\bar{x}\) with constant \(\mu > 0\). Then the sequence \(\{f(x_k)\}\) converges linearly to \(f_{\mathcal {S}}\) with rate \(1 - 2 \omega \mu \). Moreover, \(\{\Vert \nabla f(x_k)\Vert \}\) and \(\{{{\,\textrm{dist}\,}}(x_k, \mathcal {S})\}\) converge linearly to zero with rate \(\sqrt{1 - 2 \omega \mu } \le 1 - \omega \mu \).
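The one-step argument behind the first claim can be sketched as follows. We assume here that (PŁ) takes the form \(f(x) - f_{\mathcal {S}} \le \frac{1}{2\mu }\Vert \nabla f(x)\Vert ^2\) and that the sufficient decrease property reads \(f(x_k) - f(x_{k + 1}) \ge \omega \Vert \nabla f(x_k)\Vert ^2\); both forms are assumptions chosen to be consistent with the stated rate, and the complete proof is in Appendix D. For \(k\) large enough that both inequalities hold at \(x_k\),

\[ f(x_{k + 1}) - f_{\mathcal {S}} \le f(x_k) - f_{\mathcal {S}} - \omega \Vert \nabla f(x_k)\Vert ^2 \le (1 - 2\omega \mu )\big (f(x_k) - f_{\mathcal {S}}\big ). \]

The comparison between the two rates follows from \(\sqrt{1 - t} \le 1 - \frac{t}{2}\) for \(t \in [0, 1]\), applied with \(t = 2\omega \mu \).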

In the case where \(\mathcal {M}\) is a Euclidean space and \(\textrm{R}_x(s) = x + s\), it is well known that the sufficient decrease condition (5) holds for many first-order algorithms when \(\nabla f\) is Lipschitz continuous. This is also true for a general manifold \(\mathcal {M}\) and retraction \(\textrm{R}\) as we briefly describe now. We say that \(f\) and the retraction \(\textrm{R}\) locally satisfy a Lipschitz-type property around \(\bar{x}\in \mathcal {S}\) if there exists \(L> 0\) such that

\[ f(\textrm{R}_x(s)) \le f(x) + \langle \nabla f(x), s\rangle + \frac{L}{2}\Vert s\Vert ^2 \tag{6} \]

for all \(x\) close enough to \(\bar{x}\) and \(s\) small enough. Note that if \(f\circ \textrm{R}\) is \({\textrm{C}^{2}}\) then the inequality (6) is always (locally) satisfied. It is a classical result that (6) implies sufficient decrease for gradient descent with constant step-sizes. This yields the following statement.
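For completeness, here is a sketch of that classical computation, assuming (6) is the upper bound \(f(\textrm{R}_x(s)) \le f(x) + \langle \nabla f(x), s\rangle + \frac{L}{2}\Vert s\Vert ^2\) (the form consistent with the rate stated in Proposition 3.10). Taking \(s = -\gamma \nabla f(x)\) with a constant step-size \(\gamma > 0\) gives

\[ f\big (\textrm{R}_x(-\gamma \nabla f(x))\big ) \le f(x) - \gamma \Vert \nabla f(x)\Vert ^2 + \frac{L}{2}\gamma ^2 \Vert \nabla f(x)\Vert ^2 = f(x) - \Big (\gamma - \frac{L}{2}\gamma ^2\Big )\Vert \nabla f(x)\Vert ^2. \]

Under this assumption, sufficient decrease holds with \(\omega = \gamma - \frac{L}{2}\gamma ^2\), which is positive exactly when \(0< \gamma < \frac{2}{L}\).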

Proposition 3.10

Suppose \(f\) satisfies \(\mu \)-(PŁ) around \(\bar{x}\in \mathcal {S}\). Also assume that (6) holds around \(\bar{x}\). Let \(\{x_k\}\) be a sequence of iterates generated by gradient descent with constant step-size \(\gamma \in (0, \frac{2}{L})\), that is, \(x_{k + 1} = \textrm{R}_{x_k}(-\gamma \nabla f(x_k))\). Given a neighborhood \({\mathcal {U}}\) of \(\bar{x}\), there exists a neighborhood \({\mathcal {V}}\) of \(\bar{x}\) such that if an iterate enters \({\mathcal {V}}\) then the sequence converges linearly to some \(x_\infty \in {\mathcal {U}} \cap \mathcal {S}\) with rate \(\sqrt{1 - 2\mu (\gamma - \frac{L}{2}\gamma ^2)}\).

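To see this behavior on a function with non-isolated minima, the sketch below runs gradient descent with a constant step-size on \(f(x, y) = \frac{1}{2}(x^2 + y^2 - 1)^2\) in \({\mathbb R}^2\), whose minimizers form the unit circle. This test function, the starting point and the step-size \(\gamma = 0.1\) are illustrative assumptions, not taken from the text. On the circle one can check that \(\Vert \nabla f\Vert ^2 = 8(x^2 + y^2)f\) and that the largest Hessian eigenvalue is 4, so \(\mu \approx 4\) and \(L\approx 4\) locally; with these values \(1 - 2\mu (\gamma - \frac{L}{2}\gamma ^2) \approx 0.36\) for the cost values.

```python
import math


def f(p):
    """Cost f(x, y) = 0.5 * (x^2 + y^2 - 1)^2, minimized on the unit circle."""
    u = p[0] ** 2 + p[1] ** 2 - 1.0
    return 0.5 * u * u


def grad(p):
    """Euclidean gradient of f."""
    u = p[0] ** 2 + p[1] ** 2 - 1.0
    return (2.0 * u * p[0], 2.0 * u * p[1])


def gradient_descent(p0, step, iters):
    """Gradient descent x_{k+1} = x_k - step * grad f(x_k) (retraction x + s)."""
    points = [p0]
    values = [f(p0)]
    p = p0
    for _ in range(iters):
        g = grad(p)
        p = (p[0] - step * g[0], p[1] - step * g[1])
        points.append(p)
        values.append(f(p))
    return points, values


points, values = gradient_descent((1.2, 0.3), 0.1, 60)
# Ratios of successive cost values; only the early ones are reported because
# later values are dominated by floating-point rounding.
ratios = [values[k + 1] / values[k] for k in range(20)]
print("cost ratios f(x_{k+1}) / f(x_k):", ratios[:3], "...", ratios[-1])
print("limit point (approx.):", points[-1])
```

In this run the iterates settle on a single point of the circle (the radial projection of the starting point) even though the minimizers are not isolated, and the cost ratios stay below the predicted value.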

4 Aiming for superlinear convergence