Abstract

A dual logarithmic barrier method for solving large, sparse semidefinite programs is proposed in this paper. The method avoids any explicit use of the primal variable X and therefore is well-suited to problems with a sparse dual matrix S. It relies on inexact Newton steps in dual space which are computed by the conjugate gradient method applied to the Schur complement of the reduced KKT system. The method may take advantage of low-rank representations of matrices \(A_i\) to perform implicit matrix-vector products with the Schur complement matrix and to compute only specific parts of this matrix. This allows the construction of the partial Cholesky factorization of the Schur complement matrix which serves as a good preconditioner for it and permits the method to be run in a matrix-free scheme. Convergence properties of the method are studied and a polynomial complexity result is extended to the case when inexact Newton steps are employed. A Matlab-based implementation is developed and preliminary computational results of applying the method to maximum cut and matrix completion problems are reported.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \(S{\mathbb {R}}^{n\times n}\) denote the set of real symmetric matrices of order n and let \(U \bullet V\) denote the inner product between two matrices, defined by \(trace (U^T V)\). Consider the standard semidefinite programming (SDP) problem in its primal form

where \(A_i, C \in S{\mathbb {R}}^{n\times n}\) and \(b\in {\mathbb {R}}^m\) are given and \(X \in S{\mathbb {R}}^{n\times n}\) is unknown and assume that matrices \(A_i, i=1,2,\dots ,m\) are linearly independent, that is \(\sum _{i=1}^m A_i d_i = 0\) implies \(d_i=0\), \(i=1,\ldots ,m\). The dual form of the SDP problem associated with (1) is:

where \(y \in {\mathbb {R}}^m\) and \(S \in S{\mathbb {R}}^{n\times n}\).

In this paper we are concerned with the solution of problems where the dual variable S is very sparse. Such situations arise when matrices \(A_i\), \(i=1,\ldots ,m\) and C share the sparsity patterns [25], and are common in relaxations of optimization problems such as, e.g. maximum cut and matrix completion problems [3, 5].

Semidefinite programming is a well established area of convex optimization [8, 21, 26]. Over the last two decades many powerful techniques have been developed for the solution of SDP problems. Although the majority of developments in this area relied on interior point methods, there have been also successful attempts to employ different techniques such as a specialized variant of bundle method [14], augmented Lagrangian approach [27] or modified barrier method [15].

Interior point methods for SDP have an advantage: they have provable low worst-case iteration complexity [8, 21]. On the other hand, the solution of real-life SDPs still remains a computational challenge because the linear systems involved in interior point methods for SDP have dimensions \(n^2 + m\) or m for augmented system or Schur complement, respectively. Such systems may be prohibitive for any larger values of n and m. Most of standard IPM implementations work with the \(m \times m\) Schur complement linear system. For larger values of m building, storing and inverting this matrix is still a major challenge. There have been of course several attempts to overcome these difficulties. They usually rely on an application of Krylov subspace methods for solving the linear equations resulting from the reduced KKT systems [22, 23].

The challenge originates from the complexity of the reduced KKT systems which are large, involve products of matrices and often produce dense matrices of very large dimension. In the large-scale setting, direct methods of linear algebra are not an option. Iterative methods have to be employed. They are efficient in the early stage of Interior Point procedures, but they struggle in the late stage due to ill conditioning of matrices involved [23].

In quest for a perfect interior point method for SDP one has to compromise between several conflicting objectives. An ideal algorithm would:

-

share the best known worst-case iteration complexity,

-

have low memory requirements (avoid storing dense matrices of size n or m),

-

efficiently compute the Newton direction.

The method presented in this paper is an attempt to satisfy these objectives at least for a wide class of SDP problems which enjoy the property of having sparse dual matrix S.

We propose a dual logarithmic barrier method which maintains only the dual solution of the problem (y, S) and avoids any operations which could involve the primal matrix X (which is likely to be dense). Benson et al. [4] have analysed the dual potential reduction Interior Point method and have demonstrated certain advantages resulting from the ability to avoid using explicit primal matrix X. In a later technical report Choi and Ye [6] mentioned a possibility of employing an iterative linear algebra approach in the context of algorithm [4]. Without providing convergence analysis, authors observed that in this latter approach the algorithm terminates at a primal-dual sub-optimal solution depending on the accuracy imposed on the iterative linear solver. Moreover, they proposed to use a simple diagonal preconditioner for the linear system which naturally had only a very limited ability to improve the spectral properties of the system. An alternative approach has been introduced in [23] in the context of primal-dual method. In this approach in the late steps of the method a decomposition of the Schur complement is performed giving rise to a projected Schur complement to which the iterative method is applied.

The algorithm we propose here makes steps in inexact Newton directions which are computed by an approximate solution of the reduced KKT systems. The system is reduced to the Schur complement form and solved with the preconditioned conjugate gradient method. The Schur complement does not have to be constructed or stored because the CG algorithm needs only to perform matrix-vector multiplications with it and these operations can be executed as a sequence of simple matrix-vector products which involve only very sparse matrices. The procedure is particularly attractive when matrices \(A_i\) are low rank. Krylov-subspace methods are known to benefit from clustering of the spectrum of linear system. Unfortunately, there is no chance for this to happen in the case of systems arising from interior point methods. To improve the spectral properties of the linear system we employ a partial Cholesky preconditioner [1, 12].

Much of the effort in the analysis of the proposed approach has gone into designing implementable conditions for the acceptable inexactness in the Newton direction and choosing an appropriate preconditioned iterative method which can meet such conditions. The used preconditioner is compatible with the matrix-free regime of the whole method and still delivers the necessary improvement of the spectral properties of the linear system. We are not aware of any method prior to that one which would meet such conditions, except for the strategies proposed in [22, 23] that might provide an inspiration to create a viable alternative.

We also design a short-step variant of the method and show that it enjoys the \(\mathcal{O}(\sqrt{n} \ln \frac{n}{\varepsilon })\) worst-case iteration complexity.

The paper is organised as follows. After a brief summary of notation used in SDP, in Sect. 2 a framework of the dual barrier algorithm is presented. Next, in Sect. 3 an inexact variant of the method is introduced and some basic facts concerning the proximity of the dual iterates to the central path are discussed. Several technical results needed to establish the convergence of the Inexact Newton method are presented in Sect. 4. Then a complete analysis of the short-step inexact dual logarithmic barrier method is delivered in Sect. 5. The methods proposed in this paper have been implemented. The computation of inexact Newton directions employs the preconditioned conjugate gradient algorithm to find an approximate solution of the Schur complement form of the reduced KKT systems. The computation of a preconditioner and the matrix-free implementation of the method are discussed in Sect. 6. The preliminary computational results obtained with the methods applied to solve the SDP relaxations of maximum cut and matrix completion problems are presented in Sect. 7 and finally the conclusions are given in Sect. 8.

Notation The norm of the matrix associated with the inner product between two matrices \(U \bullet V = trace (U^T V)\) is the Frobenius norm, written \(\Vert U\Vert _F := (U\bullet U )^{1/2}\), while \(\Vert \cdot \Vert _2\) denotes the operator norm of a matrix. Norms on vectors will always be Euclidean.

Let \(\mathcal{A}\) be the linear operator \(\mathcal{A}: S{\mathbb {R}}^{n\times n}\rightarrow {\mathbb {R}}^m\) defined by

then its transposition \(\mathcal{A}^T\) is a mapping from \({\mathbb {R}}^m\) to \(S{\mathbb {R}}^{n\times n}\) given by

Moreover, let \(A^T\) denote the matrix representation of \(\mathcal{A}^T\) with respect to the standard bases of \({\mathbb {R}}^n\), that is

and

where mat is the “inverse” operator to vec (i.e., \(mat(vec(A_i)) = A_i \in S{\mathbb {R}}^{n\times n}\)).

Finally, given a symmetric matrix G, let \(G \odot G\) denote the operator from \(S{\mathbb {R}}^{n\times n}\) to itself given by

The notation \(U \otimes V\) indicates the standard Kronecker product of U and V.

2 The dual barrier algorithm

Let us consider the dual barrier problem parametrized by \(\mu >0\) (see [8, 21])

Let \(X=\mu S^{-1}\succ 0\), then the first-order optimality conditions for this problem are given by:

We adopt the dual-path following method described in [8, 21] that we will briefly describe below. Chosen a strictly dual feasible pair (y, S) and a scalar \(\mu >0\), damped Newton steps for the problem \(F_\mu (X,y,S)=0\) are made, maintaining S positive definite. Let \((X_{\ell },y_{\ell },S_{\ell })\) be the current primal-dual iterate, then the Newton step \((\varDelta X, \varDelta y, \varDelta S)\) is the solution of the following linear system

Computing \(\varDelta X\) from the third equation in (4) (and applying earlier introduced notation \((S_{\ell }^{-1} \odot S_{\ell }^{-1}) \varDelta S = S_{\ell }^{-1} \varDelta S\, S_{\ell }^{-1}\)) gives

and letting \(\varDelta \tilde{S}= (S_{\ell }^{-1} \odot S_{\ell }^{-1})\varDelta S\), we get the linear system in the augmented form

The Schur complement form of the system can be obtained computing \(\varDelta S\) from the first equation in (4)

substituting it in (5) and getting

Finally from the second equation in (4) we obtain:

where \(M_{\ell }\) is the Schur complement matrix given by

We note that the matrix \(M_{\ell }\) is symmetric and positive definite, its entries are given by

and it is generally dense.

Assuming that the initial guess is primal-dual feasible, primal-dual feasibility is maintained at each Newton iteration. Therefore one can substitute \(\mathcal{{A}} (X_{\ell })\) with b in the right hand side of (9) and solve the linear system

This substitution allows to avoid using the primal variable \(X_{\ell }\) explicitly.

The subsequent damped iterates are given by \(y_{\ell +1}=y_{\ell }+\alpha \varDelta y\) and \(S_{\ell +1}=S_{\ell }+\alpha \varDelta S\) with \(\alpha \) such that \(S_{\ell +1}\succ 0\). Given \(S_{\ell }\succ 0\), we define

and notice that \(X_{\ell +1}\) has the following form:

see [8, Section 5.8]. From (8) we have \(X_{\ell +1}=X_{\ell } + \varDelta X\). The adopted centrality measure is

where in the last equality we have used (7) and (13). This measure is used in the proximity stopping criterion since the damped Newton process is carried out until

with \(\tau \in (0,1)\). Then the scalar \(\mu \) is reduced and a new nonlinear system is solved.

3 The inexact dual-logarithmic barrier algorithm

We focus on sparse large dimension problems of the form (1)–(2) and propose an inexact version of the dual-logarithmic barrier algorithm for its solution. We therefore assume that the dual variable S is sparse, while the variable X may be dense and that the memory storage of the Schur complement matrix \(M_\ell \) is prohibitive.

We fix the value of \(\mu \) and use an Inexact Newton method. The step is made in a direction which is an approximate solution of the Schur complement formulation (11) computed in a matrix-free regime by using a Krylov method. The method is iterated until the centrality measure \(\delta (S_{\ell },\mu )\) drops below a prescribed tolerance. The barrier term \(\mu \) is then reduced to force convergence.

Note that one might consider solving the augmented system (6) since both the application of the coefficient matrix and the computation of \(\varDelta S\) manage to avoid the inverse of \(S_{\ell }\) hence are expected to be inexpensive. However, this formulation has two drawbacks. Firstly, \(\varDelta \tilde{S} = (S_{\ell }^{-1} \odot S_{\ell }^{-1}) \varDelta S\) is very likely to be dense despite \(\varDelta S\) being sparse. Secondly, solving linear system (11) inexactly causes \(\varDelta S\) to lose symmetry.

The overall Long-Step Inexact Interior-Point procedure is described in Algorithm 1. Clearly, the main step is the inexact solution of (3) at Line 4 whose steps are described in detail in Algorithm 2.

Some comments on the algorithms are in order. First, note that in Algorithm 1 an initial primal variable X is not required. Also, if the dual vector solution is not needed, one can avoid to update the vector \(y_{\ell }\) in Algorithm 2 explicitly and deal with \(\varDelta y\) only. Second, the main task in Algorithm 2 is the computation of the step \(\varDelta y\) at Lines 5–6. The computed step \(\varDelta y\) satisfies

where the residual vector \(r_{\ell }\) satisfies inequality (16). Hence it is an approximate solution of (11) and the relative residual norm is bounded by the forcing term \(\eta _{\ell }>0\). Third, the backtracking in the while loop at Lines 8–10 of Algorithm 2 preserves positive definiteness of \(S_{\ell }\), for each \(\ell \). Then, at each iteration k of Algorithm 1, matrix \(S_{k+1}\) computed at Line 4 is positive definite.

Moreover, in the inexact framework, we approximate the centrality measure in (14) with the following “inexact” measure

where \(\varDelta S\) is computed at Line 7 of Algorithm 2 and \(X_{\ell +1}\) is defined by

Although the algebraic formulae in (14) and (18) are identical, their meaning is different because \(X_{\ell +1}\) in the latter is allowed to violate the primal feasibility. The following result demonstrates that equality in (18) holds and the inexact computation of \(\varDelta y\) in (15) yields a loss of primal feasibility.

Proposition 1

Let \(X_{\ell +1}\) be defined in (19). Then for each \(\ell >0\)

and

Moreover, \(X_{\ell +1}\) satisfies

Proof

Equation (20) can be derived following [8, Section 5.8] and writing the first-order optimality conditions for the minimization problem in (19). Equality (21) easily follows from (20). Finally, we have

\(\square \)

Then, letting \(\varDelta X\) as in (5) we obtain

Interestingly, dual feasibility is preserved even if inexact steps are used. Assuming the pair \((y_{\ell },S_{\ell })\) satisfies \( {{\mathcal {A}}}^T y_{\ell } + S_{\ell }=C\), then dual feasibility of \((S_{\ell }+\alpha \varDelta S,y_{\ell }+\alpha \varDelta y)\) follows from the feasibility of \((S_{\ell },y_{\ell })\) and the definition of \(\varDelta S\) in Line 7 of Algorithm 2:

In order to distinguish the “inexact” measure (18) from the “exact” one in (14), we use the subscript ex for (14). Analogously, we denote with \((\varDelta X_{ex}, \varDelta y_{ex},\varDelta S_{ex})\) the “exact” solution of (4) which corresponds to having \(r_{\ell }=0\) in (15). Then,

and

The relationship between the two centrality measures follows.

Lemma 1

Let \(S_{\ell }\succ 0\). Then

Proof

Combining (22) and (23) we get \(X_{\ell +1, ex} = X_{\ell +1} + \varDelta X_{ex} - \varDelta X\) and then

Since \(M_{\ell } \varDelta y = M_{\ell } \varDelta y_{ex} + r_{\ell }\) we get

Let \(G := A (S_{\ell }^{-1/2}\otimes S_{\ell }^{-1/2})\), then

We observe that the vector \(\hat{q}:= G^{\dagger }r_{\ell } \in {\mathbb {R}}^{n}\) is the (unique) minimum length solution of the least-squares problem \(\min _{q\in {\mathbb {R}}^n} \Vert G q - r_{\ell }\Vert \). Let \(A = U \varSigma V^T\) be the SVD of A. Then letting \(z=(S_{\ell }^{-1/2}\otimes S_{\ell }^{-1/2}) \hat{q}\),

where \(g = V^T z\). Therefore \(g = \varSigma ^{\dagger } U^T r_{\ell } \), \( z = V \varSigma ^{\dagger } U^T r_{\ell } \) and finally

We therefore obtain by (24)

On the other hand, writing \(X_{\ell +1} = X_{\ell +1,ex} + \varDelta X - \varDelta X_{ex}\) and following the steps above, we obtain

which completes the proof. \(\square \)

Now, we bound the duality gap in terms of the centrality measure and the residual vector.

Lemma 2

At each \(\ell \)th iteration of Algorithm 2 the following inequality holds

Proof

Note that using Proposition 1 we have \(b = {{\mathcal {A}}}(X_{\ell +1}) - \mu r_{\ell }\), hence, following the proof of Lemma 5.8 in [8] we get

Using the dual feasibility of \((y_{\ell },S_{\ell })\) we get

Using the Cauchy-Schwartz inequality we have

that implies

We get the thesis combing the above equation with (25). \(\square \)

4 Convergence analysis of the Inexact Newton inner procedure

In this section we will look into convergence properties of Algorithm 2. We start from observing that once the stopping criterion at Line 13 of Algorithm 2 has been satisfied, say at iteration \(\bar{\ell }\), the corresponding matrix \(X_{\bar{\ell }+1}\) defined in (20) is positive definite.

Lemma 3

Let \(S_{\ell } \succ 0\) and \( \delta (S_{\ell },\mu ) < 1\). Then \(X_{\ell +1} \succ 0\).

Proof

The proof follows by a straightforward modification of the proof of Lemma 5.3 in [8]. \(\square \)

Moreover, the backtracking process in the while loop at Lines 8–10 is well-defined and if \(S_{\ell }\) is sufficiently well-centered, then \(\alpha = 1\) is taken. This is proved in the next two Lemmas.

Lemma 4

Assume \(S_{\ell }\succ 0\). Then, there exists \(\bar{\alpha }>0\) such that \(S_{\ell }+\alpha \varDelta S \succ 0\) for any \(\alpha \) in \((0,\bar{\alpha })\).

Proof

Assume \(\varDelta S\) is not positive definite, otherwise \(S_{\ell }+ \alpha \varDelta S \succ 0\) for any \(\alpha \ge 0\). We have

Then, as \( S_{\ell }^{1/2}\succ 0\) by hypothesis, it follows that \(S_{\ell }+\alpha \varDelta S \succ 0\) whenever \(\alpha \) is sufficiently small. In particular, the thesis holds with \(\bar{\alpha } =1/ \Vert S_{\ell }^{-1/2} \varDelta S S_{\ell }^{-1/2}\Vert _F\). \(\square \)

Lemma 5

Let \(S_{\ell } \succ 0\) and \( \delta (S_{\ell },\mu _k) < 1\). Then \(S_{\ell } + \varDelta S \succ 0\).

Proof

The proof follows by a straightforward modification of the proof of Lemma 5.4 in [8]. \(\square \)

We observe that the sequence \(\{(y_{\ell },S_{\ell })\}\), computed by Algorithm 2 corresponds to the sequence generated by an Inexact Newton method applied to the reduced nonlinear system:

In fact, the Newton system is given by:

Then, the vector \((\varDelta y, \varDelta S)\) computed at Lines 5 and 7 is an Inexact Newton step for (27) as it satisfies

Moreover, by (16) the residual vector satisfies the following inequality

Then, if we equip Algorithm 2 with a line-search along the direction \((\varDelta y, \varDelta S)\) we obtain an Inexact Newton method in the framework of Algorithm INB in [9]. Therefore, given \(t\in (0,1)\), let us substitute the loop at Lines 8–10 of Algorithm 2 with the steps described in Algorithm 3.

It is worth pointing out that when the inexact Newton method is applied to find an approximate solution of (27) the value of barrier term \(\mu \) remains constant. This is independent of which mechanism is used to choose the stepsize (either Lines 8–10 of Algorithm 2 or the backtracking strategy suggested in Algorithm 3). Moreover, letting \(\varDelta \tilde{S}=(S_{\ell }^{-1} \odot S_{\ell }^{-1} )\varDelta S\) the linear system (28) may be rewritten as

Its coefficient matrix is nonsingular provided that \(S_{\ell }\succ 0\) and this is indeed the case since \(\mu \) is a fixed positive constant in this context. Therefore \(\tilde{F}'_{\mu }(y_{\ell },S_{\ell })\) is nonsingular for any \(\ell \ge 0\).

Assume that the sequences \(\{\Vert S_{\ell }\Vert \}_{\ell =1}^{\infty }\), \(\{\Vert y_{\ell }\Vert \}_{\ell =1}^{\infty }\) are bounded. Then, the sequence \(\{(y_{\ell }, S_{\ell })\}\) has at least one accumulation point and from Theorem 6.1 in [9] it follows that if there exists a limit point \((y_{*},S_{*})\) of \(\{y_{\ell },S_{\ell }\}\) such that \(\tilde{F}'_{\mu }(y_{*},S_{*})\) is nonsingular, then \(\tilde{F}_{\mu }(y_{*},S_{*})=0\) and \(\{y_{\ell }, S_{\ell }\}\rightarrow (y_{*},S_{*})\), whenever \(\ell \) goes to infinity. As a consequence we have

as the sequence \(\{ \Vert \tilde{F}_{\mu }(y_{\ell },S_{\ell })\Vert \}\) is monotonically decreasing and bounded from below. This together with (29) implies that

We now show that the stopping criterion at Line 13 of Algorithm 2 is satisfied after a finite number of inner iterations. First, from (20), and (15) it follows

with \(G_{\ell } = A(S_{\ell }^{-1/2} \otimes S_{\ell }^{-1/2})\). Then, proceeding as in the proof of Lemma 1, i.e. letting \(A = U \varSigma V^T\) be the SVD of A, \(\hat{q}=G^{\dagger }_\ell ( r_{\ell }+\frac{b}{\mu }- \mathcal{{A}}( S_{\ell }^{-1}))\) and \(z=(S_{\ell }^{-1/2}\otimes S_{\ell }^{-1/2}) \hat{q} \), we get

and

This yields \(\Vert X_{\ell +1}-\mu S_{\ell }^{-1}\Vert _F\rightarrow 0\) using (30) and (31). Then, as

and \( \Vert S_{\ell }\Vert _F\) is bounded by assumption we can conclude that \(\delta (S_{\ell },\mu ) \rightarrow 0\).

Finally, we have that primal feasibility is recovered since

and therefore \(\Vert {{\mathcal {A}}}(X_{\ell +1})-b\Vert \rightarrow 0\) whenever \(\ell \) goes to \(\infty \).

Then, in order to compute accurate primal solution, in the last iteration of Algorithm 1 a small tolerance \(\tau _k\) is set to force the Inexact Newton method to iterate till convergence.

5 A short-step version of the inexact dual-logarithmic barrier method

In this section we introduce a short-step version of Algorithms 1 and 2. This corresponds to use a more conservative update \(\mu _{k+1} = \sigma \mu _k\) with \(\sigma = (1-\theta )\) for some small \(\theta \), to perform only two Inexact Newton iterations for each \(\mu \)-value and to consider an initial pair (y, S) sufficiently well centered with respect to the initial \(\mu \). In particular, a sequence is generated such that if the initial S is sufficiently close to the central path, i.e. such that \(\delta (S,\mu )\le 1/2\), then after two inexact Newton steps the new approximation serves as well-centered starting iterate for the sub-sequent outer iteration. In this section we will use two indices to specify the outer kth iteration and the three inner iterations \(\ell = 0,1,2\).

In order to establish such convergence properties we need to modify the accuracy requirement in the solution of the linear systems. Indeed, the norm of the residual is controlled by the value of the centrality measure \(\delta (S_{k,\ell }, \mu _k)\) instead of \(\Vert b - \mu _k {{\mathcal {A}}}( S_{k,\ell }^{-1})\Vert \) as in the long-step version. We state the short-step version of the inexact dual-logarithmic barrier method in Algorithm 4.

Note that since \(r_{0,0}=0\), then \(X_{0,1}\) is primal feasible. Moreover, Lemmas 3–5 hold also for the short-step version and for its “exact” counterpart, as the accuracy requirement in the solution of linear systems is never involved in their proofs. The following observations about the exact counterpart of our short-step algorithm are in order. First, the following result on the decrease of centrality measure holds.

Lemma 6

[8, Lemma 5.5] Fixed \(k\ge 0\), if \(S_{k,\ell }\succ 0\) is dual feasible and \(\delta _{ex}(S_{k, \ell },\mu _k) < 1\), then

with \(\ell = 0,1\).

Second, it is shown in [8] that starting from \(S_{k,0}\) such that \( \delta _{ex}(S_{k, 0},\mu _k) < \frac{1}{2}\), after one Newton step the obtained approximation \(S_{k, 1}\) can be used as the subsequent well-centered starting point \(S_{k+1,0}\), i.e. such that \(\delta _{ex}(S_{k+1,0},\mu _{k+1}) \le \frac{1}{2}\) and the process is quadratically convergent. In the inexact case, we are going to prove that we need two inexact Newton iterations to get an analogous result. In other words we are going to show that the Inexact Newton process is two-step quadratically convergent in terms of the centrality measure. Obviously, as in the long step case, in the last outer iteration, we need to iterate the Inexact Newton method till convergence in order to recover primal feasibility. Therefore after termination of Algorithm 4, an extra outer iteration is performed: several steps of Inexact Newton method are made until the nonlinear residual is small.

Remark 1

In the following proofs we will make use of the centrality measure evaluated at \(S_{k,2}\), i.e.

where \(\varDelta S=-{{\mathcal {A}}}^T \varDelta y\) and \(\varDelta y\) is an approximate solution of the linear system:

with residual

This quantity will be used to provide a bound for \(\delta (S_{k+1,0},\mu _{k+1})\) that is computed at Line 12 of the subsequent \((k+1)\)th iteration in Algorithm 4. The computation of \(\delta (S_{k,2},\mu _k)\) would require a third Newton step but in fact it is not needed in the algorithm.

Lemma 7

Let \(k\ge 0\), \(S_{k,0} \succ 0\) be dual feasible and

Moreover, let \(\gamma >0\), \(\beta \in (0,2)\) and \(\delta (S_{k,2},\mu _k)\) be given in (35).

Then, starting from \(S_{k,0}\), the following inequality holds

with

Proof

First, note that by Lemma 1 and (34) it follows

Then, using again Lemmas 1, 6 and (36) we get

Therefore we have

with \(C(\gamma ,\beta )\) given in (39). \(\square \)

The next step consists in bounding the centrality measure at the beginning of the outer iteration \(k+1\) in terms of the centrality measure at the end of the previous iteration k.

Lemma 8

Same assumptions as in Lemma 7. Then if \(\mu _{k+1}= (1-\theta )\mu _k\) for some \(\theta \in (0,1)\), then

Proof

Proceeding as in Lemma 1 and recalling that \(S_{k+1,0}= S_{k,2}\) (Line 20 of Algorithm 4) we have

where

Using the minimization property of \(\bar{X}^{ex}_{k+1,1}\) and the form of \(\mu _{k+1}\) we obtain

where

Combining (41), (42) and (32) we obtain

where we used that by assumption (37) \(\delta (S_{k,1},\mu _k) < 1\). Using Lemma 1 and (36) we have

and we finally get the thesis. \(\square \)

The next result establishes a bound on the proximity of \(S_{k+1,0}\) to the central path.

Lemma 9

Same assumptions as in Lemma 7. If

with \(C(\gamma ,\beta )\) defined as in (39) and \(\gamma , \beta \) sufficiently small so that \(\theta \in (0,1)\), then

Proof

and (43) yields

\(\square \)

Now we show that at each iteration k, \(S_{k,0}\) can be used as a well-centered starting point provided that the initial guess \(S_{0,0}\) is well centered.

Lemma 10

Let \(\theta \) be given in (43) and \(\mu _0>0\). Assume

and \(\gamma , \beta \in (0,1)\) in (39) are sufficiently small such that \(\theta \in (0,1)\) and \(\, 4\gamma +\gamma ^2+4\beta \le 4\). Then

Proof

Let us consider the first outer iteration (\(k=0\)). Then, as \(r_{0,0}=0\), it follows that \(\delta (S_{0,0},\mu _0)=\delta _{ex}(S_{0,0},\mu _0)\), and by Lemma 1, we get

Then, using Lemma 6, (34), and \(\delta (S_{0,0},\mu _0)\le 1/2\) we get

This implies \(\delta (S_{0,1},\mu _0)\le \delta (S_{0,0},\mu _0) \le 1/2\) as \(\beta <1\) by hypothesis. Therefore, Lemma 9 ensures

Let us consider \(\delta (S_{1,1},\mu _1)\). We are going to show that \(\delta (S_{1,1},\mu _1)\le 1/2\). We have, using Lemmas 6 and 1,

Then the stopping rules (32) and (34) and \(\max \{\delta (S_{1,0},\mu _1),\delta (S_{0,1},\mu _0)\}\le 1/2\) yield

Therefore, \(\delta (S_{1,1},\mu _1)\le 1/2\) provided that \(\, 4\gamma +\gamma ^2+4\beta \le 4\). Then, Lemma 9 yields \(\delta (S_{2,0},\mu _2)\le 1/2\). Proceeding in this way we can prove that \(\delta (S_{k,1},\mu _k) \le 1/2\) for \(k\ge 0\). \(\square \)

Summing up, we have proved that, starting from a dual feasible point \(S_{0,0}\) such that \(\delta _{ex}(S_{0,0},\mu _0) \le 1/2\), at a generic iteration k performing two inexact Newton steps and reducing \(\mu _k\) by a factor \((1-\theta )\), we get \(\delta (S_{k+1,0},\mu _{k+1}) \le \frac{1}{2}\) at the subsequent iteration.

Theorem 1

Let \(\epsilon \) be an accuracy parameter, \(\theta \) given in (43) and \(\mu >0\). Assume S is strictly dual feasible such that \(\delta _{ex}(S,\mu ) \le 1/2\) and \(\gamma \) and \(\beta \) in (39) are sufficiently small such that \(\theta \in (0,1)\) and \(\, 2\beta +\beta ^2+9\gamma < 1/3\) and \(4\gamma +\gamma ^2+4\beta \le 4\).

Then,

-

(i)

Algorithm 4 terminates after at most \(\left\lceil 18\sqrt{n}\log {\frac{n \mu }{\epsilon }}\right\rceil \) inexact Newton steps.

-

(ii)

Let \(\bar{k}\) be the last iteration of Algorithm 4, then the following inequality holds:

$$\begin{aligned} S_{\bar{k},1} \bullet X_{\bar{k},2} \le 3/2 \epsilon \end{aligned}$$and

$$\begin{aligned} C \bullet X_{\bar{k},2} - b^T y_{\bar{k},1} \le 3/2 \epsilon +r_{\bar{k},1}^T y_{\bar{k},1}. \end{aligned}$$

Proof

We follow the lines of proof of Theorem 5.1 in [8]. At the end of each kth iteration of Algorithm 4 \(S_{k,2}\) is strictly feasible, \(\mu _k = (1-\theta )^k \mu _0\) (\(\mu _0 = \mu \) at Line 1) and the algorithm stops at iteration \(\bar{k}\) where

Using \(-log(1-\theta )>\theta \) for \(\theta \in (0,1)\) we can derive that (44) is guaranteed to hold whenever

By (43) it follows

and it can be easily shown that \( 9\sqrt{n}>\frac{1}{\theta } \) whenever \(2\beta +\beta ^2+9\gamma <1/3\). Then, \(n\mu _{k} \le \epsilon \) for any

Then, the stopping criterion is met after at most \(18 \sqrt{n} \log {\frac{n\mu _0}{\epsilon }}\) inexact Newton steps and (i) follows.

Moreover, by (26) we have:

as \(\delta (S_{\bar{k},1}, \mu _{\bar{k}}) \le 1/2\).

Then, Lemma 2 yields (ii). \(\square \)

We underline that, as already noted, at termination of Algorithm 4, a further outer iteration has to be carried out in order to recover primal feasibility. In this case, a sequence \(\{S_{\bar{k}+1,\ell }\}_{\ell \ge 0}\) is generated and by Lemma 7 it follows that \(\delta ( S_{\bar{k}+1,\ell },\mu _{\bar{k}+1})\) goes to zero whenever \(\ell \rightarrow \infty \). Therefore, \(\Vert r_{\bar{k}+1,\ell }\Vert \) goes to zero and \( C \bullet X_{\bar{k}+1,\ell +1} - b^T y_{\bar{k}+1,\ell }\) is bounded by \( 3/2 \epsilon \).

6 Matrix-free implementation

In this section we describe our matrix-free implementation of Algorithms 1 and 2.

Since we use a Krylov solver to compute an approximate solution of (9), the matrix \(M_\ell \) given in (10) is required only to perform matrix-vector products. Due to the structure of \(M_\ell \), its action on a vector involves the inverse of the sparse matrix \(S_\ell \). Then, at each iteration of Algorithm 2, the Cholesky factorization \(R_\ell ^TR_\ell \) of the sparse matrix \(S_\ell \) has to be computed. Taking into account that the dual matrix is assumed to be sparse, a sparse Cholesky factor is expected. Note that the structure of dual matrix does not change during the iterations of Algorithm 1, hence reordering of \(S_0\) can be carried out once at the very start of Algorithm 1 and then may be reused to compute the Cholesky factorization of \(S_{\ell }\) at each iteration of Algorithm 2.

As pointed out in [2], the cost of evaluation of each column of \(M_\ell \) depends strongly on the structure of constraint matrices \(A_i\). More precisely, it is shown that the computation of the ith column of \(M_\ell \) involves \(p_i\) back-solves with \(S_\ell \), where \(p_i\) is the rank of the constraint matrix \(A_i\). Then, if we assume that the constraint matrices have the same rank p, the cost of forming \(M_\ell \) amounts to 2mp backsolves with \(R_\ell \). If \(M_\ell \) is too large to be stored, we need to work in a matrix-free regime and therefore, in order to compute matrix vector products with \(M_\ell \) we compute each column of \(M_\ell \) once at a time and then discard it. In this latter case, letting \(dens(R_\ell )\) be the density of the Cholesky factor \(R_\ell \), the cost of one matrix-vector product is given by \(2mp \times O(n^2 \,dens(R_\ell ))\) operations. Clearly, this procedure allows to save memory as the whole matrix \(M_\ell \) does not need to be stored, but it is more expensive than computing matrix vector-product with explicitly available matrix \(M_\ell \). Finally, we note that backsolves with \(R_\ell \) can be performed in parallel.

More specifically, let us consider the constraint matrices represented as a sum of vector outer products, that is

such that \(\alpha _{i,r}\in {\mathbb {R}}\) and \(v_{i,r}\in {\mathbb {R}}^n\), for \(r = 1,\dots , p_i\), e.g. represented by an eigendecomposition. Then a matrix-vector product can be performed using Algorithm 5 based on [2, Technique M2]. The algorithm is efficient when the ranks of the constraint matrices are low.

It is well known (see [23]) that a CG-like method applied to (9) may be slow, in particular in the late stage of an Interior Point method. On the other hand in our context traditional preconditioners cannot be incorporated as the matrix \(M_\ell \) is dense and we assume that it is not available. For this latter reason matrix-free preconditioners are needed. Incomplete Cholesky (IC) factorizations are matrix-free in the sense that the columns of \(M_\ell \) can be computed one at a time, and then discarded, but they rely on drop tolerances to reduce fill-in and have unpredictable memory requirements. Alternative approaches with predictable memory requirements depend on the entries of \(M_\ell \), [13, 17, 19, 20] and have high storage requirements if \(M_\ell \) is dense. On the other hand, the limited memory preconditioner given in [1, 12] has a predictable memory requirements and for this reason we employed it in our runs. It consists in a partial Cholesky factorization limited to q columns of \(M_\ell \) combined with a diagonal approximation of the Schur complement. In this approach, first an integer \(q< m\) is chosen and the following formal partition of \(M_\ell \) is considered

where \(M_{11}\in \mathcal{R}^{q\times q}, \, M_{21}\in \mathcal{R}^{(m-q)\times q}, \, M_{22} \in \mathcal{R}^{(m-q)\times (m-q)}\). Then, the first q columns of \(M_\ell \) have to be computed and the Cholesky factorization of \(M_\ell \) limited to

is formed giving

where \(L_{11}\in {\mathbb {R}}^{q \times q}\) is lower triangular with ones on the diagonal, \( L_{21}\in {\mathbb {R}}^{(m-q)\times q}\), \(Q_{11}\in {\mathbb {R}}^{q\times q}\) is diagonal and

is the Schur complement of \(M_{11}\) in \(M_\ell \). Then, letting \(diag(\cdot )\) the operator that extracts the diagonal of a matrix and returns the diagonal matrix based on it, Z is approximated by

and the following preconditioner is formed:

Storage of the preconditioner requires remembering the partial Cholesky factor

and the diagonal Q, which overall needs at most \((q+1) m\) nonzero entries. The a priori known bound on the storage requirements is an advantage. To compute L one needs to compute the first q columns and the diagonal of \(M_\ell \) first. The cost of doing it is negligible compared to the cost of each matrix-vector product in the iterative linear solver. Therefore, the choice of q in this context depends only on the memory available.

Regarding spectral properties of the above outlined preconditioner, it has been proved in [1] that q eigenvalues of \(P_\ell ^{-1}M_\ell \) are equal to 1 and the remaining ones are the eigenvalues of \(Q_{22}^{-1}Z\). Then,

Therefore, the maximum eigenvalue of the preconditioned matrix stays bounded and does not grow to \(\infty \) as \(\mu _k\) goes to zero and the solution is approached. Moreover, in [12] a “greedy” heuristic technique acting on the largest eigenvalues of \(M_\ell \) has been proposed. It consists in permuting rows and columns of \(M_\ell \) so that \(M_{11}\) contains the q largest elements of the diagonal of \(M_\ell \). This choice is motivated by the well known result about the eigenvalues of the Schur complement (which was also used in [1])

Hence, by reducing the trace of \(M_{22}\), a reduction in the value of \(\lambda _{max}(P_\ell ^{-1}M_\ell )\) is expected. Therefore we adopted this heuristic in our implementation.

As a final comment, the computation of \(\delta ( S_\ell ,\mu )\) at Line 12 of Algorithm 2 is not needed. Indeed, as observed in [4],

Moreover, if Conjugate Gradient method is initialized with the zero vector and \(\varDelta y\) is computed at a certain iteration of it then the quadratic form \(\varDelta y^T M_\ell \varDelta y\) satisfies

A similar property holds also when the preconditioned CG is used [10].

7 Numerical experiments

In this section we report on our numerical experience with the proposed inexact dual-logarithmic barrier algorithms described in Algorithms 1–2 and 4. We did not use the backtracking strategy described in Algorithm 3 because we verified that in practice it was not needed to obtain convergence of Algorithm 2. We first describe the problem sets, then discuss the numerical results.

All the results have been obtained using a Matlab (R2015a) code on an Intel Core i5-6600K CPU 3.50 GHz \(\times \) 4 16 GB RAM.

7.1 Test problem sets

We evaluated the performance of the proposed methods on two classes of problems where the sparsity of the dual variable S is inherited from the sparsity of C and the structure of the \(A_i\)’s. The first class of test examples arises in the SDP relaxation of maximum cut problems; the constraint matrices in this reformulation have rank 1 and \(n=m\). The second class of test examples is obtained by a reformulation of matrix completion problems. The obtained SDP problems are characterized by constraint matrices of rank 2. In this case \(n\ll m\) and therefore these problems could potentially be solved also by a primal-dual method. However, we considered this class for the sake of gaining more computational experience with problems which involve low-rank \(A_i\)’s.

The maximum cut problem consists in partitioning the vertices of a graph into two sets that maximize the sum of the weighted edges connecting vertices in one set with vertices in the other. Its SDP relaxation [21] is

where e is the vector of all ones and C depends on the weighted adjacency matrix of the graph. Therefore, sparsity of C depends on the sparsity of the adjacency matrix of the graph. The dual problem is given by:

The constraint matrices \(A_i\) are given by \({A_i = e_i e_i^T}, \ i = 1, \dots , m\), where \(e_i\in {\mathbb {R}}^m\) is the ith vector of the canonical basis. Therefore, the \(A_i\)s are trivially in the form (45), and each matrix has rank one. The form of matrix \(M_\ell \) and vector \({{\mathcal {A}}}(S_{\ell }^{-1})\) in the right-hand side of (9) simplifies and is given by

where we have borrowed the Matlab notation; i.e. \(M_\ell \) is the componentwise product of \((S^{-1}_{\ell })\) times itself. We specialized Algorithm 5 for the maximum cut problem in Algorithm 6, taking into account the special structure of each \(A_i\).

As a second set of problems, we considered large and sparse SDPs which originate from a reformulation of the matrix completion problem, that is the problem of recovering a low rank data matrix \(B\in {\mathbb {R}}^{\hat{n}\times \hat{n}}\) from a sampling of its entries [5].

Let \(B_{s,t}, (s,t) \in \varOmega \) be the given entries of matrix B. The SDP relaxation of this problem is given by

where \(\bar{X}, W_1, W_2 \in {\mathbb {R}}^{\hat{n}\times \hat{n}}\) are the unknowns, and \(B_{s,t}, (s,t) \in \varOmega \) are given, see [18].

We can reformulate (46) as (1) setting \(n=2 \hat{n}\) and m equal to the cardinality of \(\varOmega \), i.e. the number of known entries of B. The primal variable X takes the form \(X = \begin{bmatrix} W_1&\bar{X} \\ \bar{X}^T&W_2 \end{bmatrix} \in {\mathbb {R}}^{n \times n}\), while the matrix C in the objective is \(C = \frac{1}{2}I_{ n}\). In order to define the operators \(A_i\)’s, we enumerate the m couples \((s,t) \in \varOmega \) so that \(b_i= B_{s,t}\) for \(i\in \{1, \dots m\}\) and \((s,t) \in \varOmega \). Moreover, for each \((s,t) \in \varOmega \) let us define the matrix \(\varTheta ^{st} \in {\mathbb {R}}^{\hat{n} \times \hat{n}}\) such that

Then, the constraint matrices \(A_i \in {\mathbb {R}}^{n\times n}\) with \(i\in \{1, \dots m\}\) (and corresponding \((s,t) \in \varOmega \)) are given by

Having defined all the ingredients \(C,b,A_i, X\) as above, we obtain the SDP relaxation of matrix completion problem in the form (1).

We note that since C is diagonal and \(A_i\)’s have at most 2 nonzero elements, the slack variable S has at most \(2m+n\) nonzero elements. We also underline that \(m \ll \hat{n} ^2\).

Constraint matrices are rank 2 and they can easily be expressed in the form (45) using the eigendecomposition of the \(2\times 2\) matrix \(\begin{bmatrix} 0&0.5 \\ 0.5&0 \end{bmatrix}\). This is described in Algorithm 7, where we borrowed again the Matlab notation.

7.2 Implementation issues for the long-step algorithm

We set the initial parameters \(\mu =1\) in Algorithm 1. In all the iterations except for the last one, the Newton procedure was stopped whenever a full Newton step is taken (i.e. \(\alpha =1\) in Line 11 of Algorithm 2) and \(\delta (S_\ell ,\mu )\le 0.5\). Then, only a rough accuracy is required in the solution of the nonlinear systems (3) except in the last iteration where, in order to recover primal feasibility, the Newton process is carried out until \(\delta (S_\ell ,\mu )\le 10^{-5}\).

In Algorithm 2 we employed the Matlab function pcg to compute the approximate solution of (9), i.e. we used the CG method [11]. We work in a nearly matrix-free regime, so we do not store the whole matrix \(M_\ell \) but we only store the q columns needed for building the preconditioner. At Line 4 of Algorithm 2, we set \(\eta _\ell =10^{-3}\) for each \(\ell \), that is CG is stopped when the relative residual is less than \(10^{-3}\). However, if (16) is not satisfied within 100 iterations, CG is halted and the algorithm progresses with the best iterate computed by CG, that is the approximation returned by pcg at which the smallest residual has been obtained. Denoting with CGit the CG iteration at which this occurred, we set CGit as a limit on the number of CG iterations at the subsequent Newton’s iteration.

Finally, at Line 8 of Algorithm 2 we perform the Cholesky decomposition of \(S_\ell +\alpha \varDelta S\) in order to detect whether the matrix is positive definite.

7.3 Numerical results for the long-step algorithm: maximum cut test problems

We considered random graphs available in the GSet group of University of Florida Sparse Matrix Collection [7]. More precisely, we selected five matrices (G48, G57, G62, G65, G66, G67) corresponding to toroidal graphs of dimension that ranges from \(m=3000\) to \(m=20{,}000\) and two matrices (G60, G63) of dimension \(m=7000\) corresponding to less structured graphs. We also considered the smaller problem G11 to show in detail the preconditioner behaviour.

In Table 1 we report statistics of our runs. These results have been obtained setting \(\epsilon = 10^{-8}\) in Algorithm 1 and computing \(q= 0.3m\) columns in the partial Cholesky preconditioner, thus saving \(70\%\) of memory in comparison with a direct approach which applies the complete Cholesky factorization of the Schur complement. Problems corresponding to toroidal graphs have been solved using \(\sigma = 0.1\), while results for the graphs G60 and G63 were obtained using \(\sigma = 0.5\).

A feasible starting couple (S, y) is easily obtained as in [24].

The heading of the columns has the following meaning: dens(S): density of the dual matrix \(S_0\); dens(R) density of the Cholesky factor of \(S_0\); IT_NEW: overall number of inner Newton iterations; CG_AV: average number of CG iterations for each Newton iteration; TIME_AV: average time in seconds to perform one inner Newton iteration; \(\Vert {{\mathcal {A}}}(X) - b\Vert \): primal feasibility; \(X \bullet S\): complementarity gap. We can observe that both \(S_0\) and its Cholesky factor are quite sparse in all the tests. The number of overall Newton iterations and the average number of CG iterations are more or less the same in problems G57, G62, G65, G66 and G67. So they do not seem to increase with m. The higher number of Newton iterations in G60 and G63 is due to the less aggressive choice of \(\sigma \). Problem G48 is easier than the other ones as it requires noticeably fewer Newton and CG iterations. As expected the execution time increases with the dimension of the problem. It should be underlined that the high execution time is the price we pay for avoiding to store matrix \(M_\ell \). For example, in the solution of problem G67 the average time to perform one inner Newton iteration drops to 111 seconds if matrix \(M_\ell \) is computed once and then stored. Moreover, we observe that a major part of the execution time originates from the last iteration of Algorithm 1 as the linear systems become more difficult and their solution requires a higher number of CG iterations and therefore a higher number of matrix-vector products with \(M_\ell \).

To give some insight into the behaviour of the preconditioner, we considered the smaller problem G11 (m=800) and we focused on the most challenging last interior point iteration where the arising linear systems are ill-conditioned and several inexact Newton steps are required because the primal feasible solution X is sought. For this example, 8 Newton iterations were needed; we built the sequence of linear systems generated by Algorithm 2 (with the partial Cholesky preconditioner using the \(30\%\) of the columns of M) and we solved it also with unpreconditioned CG and with CG using the Diagonal preconditioner.

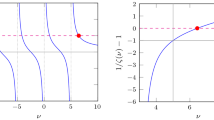

Firstly, we compare in Table 2 the smallest and largest eigenvalues of matrix \(M_\ell \) with those of \(M_\ell \) preconditioned by partial Cholesky (\(P^{-1}M\)) and \(M_\ell \) preconditioned by the Diagonal preconditioner (\(D^{-1}M\)). We note that the eigenvalues of unpreconditioned \(M_{\ell }\) vary between \(10^4\) and \(10^{10}\) at iteration 1 and their spread increases to \(10^4 - 10^{12}\) at iteration 8. The spread of eigenvalues of the preconditioned Schur complement \(P_{\ell }^{-1} M_{\ell }\) is significantly smaller. Indeed, the eigenvalues vary between \(10^{-3}\) and \(10^{1}\), that is the partial Cholesky preconditioner drastically reduces the largest eigenvalues of the unpreconditioned matrix \(M_\ell \); the smallest eigenvalue is pushed closer to zero but overall the condition number of the preconditioned matrix is smaller than that of \(M_\ell \). On the other hand, the Diagonal preconditioner is not as effective. It simply shifted the whole spectrum towards zero. We also plot in Figs. 1 and 2 the histograms of eigenvalues of \(M_{\ell }\) and \(P_{\ell }^{-1} M_{\ell }\) of the first (\(\ell =1\)) and the last (\(\ell =8\)) Newton system. The histograms use the logarithmic scale to demonstrate the magnitude of eigenvalues. They reveal that in both linear systems more than 50% of the eigenvalues of \(P_{\ell }^{-1} M_{\ell }\) are clustered around one. Although a good clustering of the spectrum of \(P_{\ell }^{-1} M_{\ell }\) does not necessarily imply fast convergence of the conjugate gradient method (see Section 5.6.5 in [16]) it usually benefits its behaviour.

Secondly, from the statistics reported in Table 3, we can observe that CG preconditioned by the partial Cholesky (CG+P) outperforms the unpreconditioned CG. Failures are denoted by the symbol ‘*’ and, in this case, the minimum value of the relative residual obtained during the iterations performed by CG is also reported. CG+P fails in the last two inner iterations but the obtained residuals are quite close to the desired values, while unpreconditioned CG stops with large residuals. Unsurprisingly, CG with Diagonal preconditioner (CG+D) behaves as poorly as the unpreconditioned CG. We also stress that the cost of application of the partial Cholesky preconditioner is negligible in the tested matrix-free implementation compared to the cost of performing matrix-vector product with \(M_\ell \) and therefore the preconditioner choice has to be guided only by its behavior in terms of reducing CG iterations.

Finally, in Fig. 3 we plot the convergence history of the whole procedure applied to problem G48. In particular the value of the duality gap and of primal feasibility versus the Newton iterations are displayed. We can observe that the duality gap reduces with \(\mu \) and primal feasibility is recovered at the last outer iteration.

7.4 Numerical results for the long-step algorithm: matrix completion test problems

In the solution of the SDP reformulation of matrix completion problems, the extra-diagonal submatrix \(\bar{X}\) of the primal variable X returned by Algorithm 1 is the computed approximation of matrix B that we would like to recover. Following [5] we considered a matrix recovered when \(\epsilon _B = \Vert \bar{X} -B\Vert _F/\Vert B\Vert _F \le 10^{-3}\) and we observed that high accuracy in the solution of the SDP problem is not needed to get such accuracy in the recovered matrix. Then, we set the stopping tolerance \(\epsilon \) equal to \(10^{-6}\) and \(\sigma = 0.5\) in Algorithm 1.

Following [5], we generated matrices \(B\in {\mathbb {R}}^{\hat{n}\times \hat{n}}\) of rank r, by sampling two \(\hat{n}\times r\) factors \(B_L\) and \(B_R\), each having independently and identically distributed Gaussian entries, and setting \(B=B_LB_R^T\). The set of observed entries \(\varOmega \) is generated sampling m entries of B uniformly at random. We set \(m=4 r(2\hat{n}-r)\), that is m is four times the degrees of freedom of rank r matrices and we choose \(r=\hat{n}/100\). This way, we obtained dual matrices S with density \(dens(S) \approx 4\cdot 10^{-2}\) and Cholesky factor’s density of the order of \(1.4\cdot 10^{-1}\). The dimensions of the generated SDP problems are shown in Table 4.

Since in these problems m is large and reaches tens of thousand, we set \(q=0.1 m\) in the partial Cholesky preconditioner with a saving of the \(90\%\) of memory. A feasible starting couple (S, y) is trivially computed by setting \(y=0\) and \(S=C\).

Results are reported setting the Matlab random generator rng(‘default’) and rng(1), similar results have been found with different random seeds. In Table 4 we report statistics of our runs. The headings are the same as in Table 1 with extra information on the rank r of matrix B to be recovered and the relative error of the recovered matrix \(\epsilon _B\).

We observe that the average number of nonlinear Newton iterations is larger than in maximum cut problems indicating that the arising nonlinear systems are quite difficult. On the other hand the average number of CG iterations remains small. Overall, the average CPU time is comparable to that obtained for maximum cut problems of similar size. The matrices B are recovered with a satisfactory accuracy.

7.5 Numerical validation of the short-step algorithm

Following the suggestion of the Anonymous Referee we carried experiments in order to validate the theoretical analysis conducted in Sect. 5 for the Short-Step Inexact Dual-Logarithmic barrier algorithm. We implemented Algorithm 4 and chose parameters \(\beta ,\gamma , \theta \) according to (43) and satisfying the assumptions in Theorem 1. We set the tolerance \(\epsilon = 10^{-5}\). After iteration \(\bar{k}\), at which the algorithm stops, a further outer iteration is performed until \(\delta (S_{\bar{k}+1,\ell }, \mu _{\bar{k}+1}) \le 10^{-5}\). Finally, we set a maximum number of 50 CG iterations. Algorithm 4 requires a starting couple \((S,\mu )\) such that S is feasible, \(\mu >0\) and \(\delta _{ex}(S,\mu )\le 1/2\). Setting \(\mu =1\), we built S by applying one iteration of Algorithm 1. Linear systems at Lines 5–6 of Algorithm 2 have been solved until \(\delta _{ex}(S_\ell , \mu )\le 1/2\) (\(\tau = 1/2)\).

Here we report the results obtained in the solution of the maximum cut problem using graph G11. Using the above strategy, we obtained for G11 a well-centered dual feasible S with \(\delta _{ex}(S,1)=0.1\). For this problem \(n = 800\) and consequently we used \(\theta = 0.008\) with \(\beta = \gamma = 0.01\). At the last interior point iteration, three Newton steps have been performed. Note that for this problem \(\Vert A^{\dagger }\Vert =1\).

In Figs. 4 and 5 we report statistics concerning the first and last 5 outer iterations, each involving 2 Newton inner iterations, except for the last one where 3 Newton iterations have been performed. In Fig. 4, we plot the values of \(\log _{10}\delta (S_{k,\ell }, \mu _k)\); the horizontal line corresponds to the value \(\log _{10}(1/2)\). We can observe that, as Lemma 10 states, starting from \((S_{k,0}, \mu _k)\) such that \(\delta _{ex}(S_{k,0}, \mu _k) \le 1/2\) after merely 2 Newton steps we obtain again a starting value \(S_{k+1,0}\) for the next iteration that is well-centered with respect to \(\mu _{k+1}\).

Let \(\rho _{k,\ell }\) for \(\ell =0,1\) be the upper bound on the relative residual norms in Algorithm 4 [see (32) and (34)]. Figure 5 shows the relative accuracy requirement \(\rho _{k,\ell }\) dictated by the theory and the actual value of the relative residual \(\Vert r_{k,\ell }\Vert /\Vert b/\mu _k - {\mathcal {A}}(S^{-1}_{k,\ell })\Vert \) returned by CG, still in the first and in the last 5 outer iterations. We observe that in the first iterations, the two quantities have the same order of magnitude that is around \(10^{-1}\) and \(10^{-7}\) in the first and the second Newton iteration, respectively. On the other hand, in the last iterations the prescribed accuracy is too tight and it is not needed to preserve the two-step quadratic convergence of the method. In fact, we can observe from the figures that in 50 iterations CG provides a higher value of the relative residual than that prescribed by the theory, but after 2 Newton steps, we still obtain a starting value \(S_{k+1,0}\) for the next iteration that is well-centered with respect to \(\mu _{k+1}\), as already noted.

8 Conclusions

A variant of dual interior point method for semidefinite programming has been proposed in this paper. The method uses inexact Newton steps and it is well suited to problems with sparse dual matrix S. The computations avoid any explicit use of primal variable X (which might be noticeably more dense than S) and therefore the method offers advantages in memory required to solve very large SDPs. Krylov subspace method preconditioned with partial Cholesky factorization of the Schur complement matrix is employed to solve the reduced KKT systems. Convergence properties and the \(\mathcal{O}(\sqrt{n} \ln \frac{n}{\varepsilon })\) complexity result have been established. A prototype Matlab-based implementation of the method has been developed and it has been demonstrated to perform well on medium scale SDP problems arising in maximum cut and matrix completion problems.

References

Bellavia, S., Gondzio, J., Morini, B.: A matrix-free preconditioner for sparse symmetric positive definite systems and least-squares problems. SIAM J. Sci. Comput. 35, A192–A211 (2013)

Benson, S.J., Ye, Y.: Algorithm 875: DSDP5-software for semidefinite programming. ACM Trans. Math. Softw. 34(3). Art. 16, 20 (2008)

Benson, S.J., Ye, Y., Zhang, X.: Mixed linear and semidefinite programming for combinatorial and quadratic optimization. Optim. Methods Softw. 11(1–4), 515–544 (1999)

Benson, S.J., Ye, Y., Zhang, X.: Solving large-scale sparse semidefinite programs for combinatorial optimization. SIAM J. Optim. 10(2), 443–461 (2000)

Candès, E.J., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9(6), 717–772 (2009)

Choi, C., Ye, Y.: Solving large-scale sparse semidefinite programs using the dual scaling algorithm with an iterative solver. Tech. rep. (2000)

Davis, T.A., Hu, Y.: The University of Florida sparse matrix collection. ACM Trans. Math. Softw. 38(1), 1–25 (2011)

De Klerk, E.: Aspects of Semidefinite Programming: Interior Point Algorithms and Selected Applications, vol. 65. Springer, Berlin (2006)

Eisenstat, S.C., Walker, H.F.: Globally convergent inexact Newton methods. SIAM J. Optim. 4(2), 393–422 (1994)

Fountoulakis, K., Gondzio, J.: A second-order method for strongly convex l1-regularization problems. Math. Program. A 156(1), 189–219 (2016)

Golub, G.H., Van Loan, C.: Matrix Computations, 2nd edn. The Johns Hopkins University Press, Baltimore (1989)

Gondzio, J.: Matrix-free interior point method. Comput. Optim. Appl. 51, 457–480 (2012)

Gould, N., Scott, J.A.: The state-of-the-art of preconditioners for sparse linear least-squares problems. Tech. Rep. RAL-P-2015-010, Rutherford Appleton Laboratory, Chilton, England (2015)

Helmberg, C., Rendl, F.: A spectral bundle method for semidefinite programming. SIAM J. Optim. 10, 673–696 (2000)

Kocvara, M., Stingl, M.: On the solution of large-scale SDP problems by the modified barrier method using iterative solvers. Math. Program. 109, 413–444 (2007)

Liesen, J., Strakos, Z.: Krylov Subspace Methods: Principles and Analysis. Oxford University Press, Oxford (2012)

Lin, C.J., Morè, J.J.: Incomplete Cholesky factorizations with limited memory. SIAM J. Sci. Comput. 21, 24–25 (1999)

Recht, B., Fazel, M., Parrilo, P.A.: Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 52(3), 471–501 (2010)

Scott, J.A., Tuma, M.: HSL_MI28: An efficient and robust limited-memory incomplete Cholesky factorization code. ACM Trans. Math. Softw. 40. Art. 24, 19 (2014)

Scott, J.A., Tuma, M.: On positive semidefinite modification schemes for incomplete Cholesky factorization. SIAM J. Sci. Comput. 36, A609–A633 (2014)

Todd, M.J.: Semidefinite optimization. Acta Numer. 2001(10), 515–560 (2001)

Toh, K.C.: Solving large scale semidefinite programs via an iterative solver on the augmented systems. SIAM J. Optim. 14, 670–698 (2004)

Toh, K.C., Kojima, M.: Solving some large scale semidefinite programs via the conjugate residual method. SIAM J. Optim. 12(3), 669–691 (2002)

Tütüncü, R.H., Toh, K.C., Todd, M.J.: Solving semidefinite-quadratic-linear programs using SDPT3. Math. Program. 95(2), 189–217 (2003)

Vandenberghe, L., Andersen, M.S.: Chordal graphs and semidefinite optimization. Found. Trends Optim. 1(4), 241–433 (2015)

Vandenberghe, L., Boyd, S.: Semidefinite programming. SIAM Rev. 38(1), 49–95 (1996)

Zhao, X.Y., Sun, D., Toh, K.C.: A Newton-CG augmented Lagrangian method for semidefinite programming. SIAM J. Optim. 20(4), 1737–1765 (2010)

Acknowledgements

The authors would like to thank the anonymous referees for their suggestions, which lead to significant improvement of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Additional information

The work of the first and the third author was supported by Gruppo Nazionale per il Calcolo Scientifico (GNCS-INdAM) of Italy. The work of the second author was supported by EPSRC Research Grant EP/N019652/1. Part of the research was conducted during a visit of the second author at Dipartimento di Ingegneria Industriale, UNIFI, the visit was supported by the University of Florence Internationalisation Plan.

Rights and permissions

About this article

Cite this article

Bellavia, S., Gondzio, J. & Porcelli, M. An inexact dual logarithmic barrier method for solving sparse semidefinite programs. Math. Program. 178, 109–143 (2019). https://doi.org/10.1007/s10107-018-1281-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-018-1281-5