Abstract

We revisit the proofs of convergence for a first order primal–dual algorithm for convex optimization which we have studied a few years ago. In particular, we prove rates of convergence for a more general version, with simpler proofs and more complete results. The new results can deal with explicit terms and nonlinear proximity operators in spaces with quite general norms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this work we revisit a first-order primal–dual algorithm which was introduced in [15, 26] and its accelerated variants which were studied in [5]. We derive new estimates for the rate of convergence. In particular, exploiting a proximal-point interpretation due to [16], we are able to give a very elementary proof of an ergodic O(1 / N) rate of convergence (where N is the number of iterations), which also generalizes to non-linear norms [18], to overrelaxed [9, 16] and inertial [19] variants. In the second part, we give new, more precise estimates of the convergence rate for the accelerated variants of the algorithm. We conclude the paper by showing the practical performance of the algorithm on a number of randomly generated standard optimization problems.

The new proofs we propose easily incorporate additional smooth terms such as considered in [9, 31] (where convergence is already been proved, without rates), and [4] (where the proofs of [5] are extended to the framework of [31] which considers general monotone operators—in this setting one must also mention the recent work [10] for a Douglas–Rachford approach to the same problem, with a slightly different algorithm also presenting very good convergence properties). Also, a very recent work of Drori, Sabach and Teboulle, establishes similar results on a closely related primal–dual (“PAPC”) algorithm [11], which also handles explicit terms, but cannot jointly handle (without further splitting) nonsmooth functions in both the primal and dual variables.

We must observe that in addition, our proofs carry on to the nonlinear (or Banach space) setting. They can indeed take into account without effort non-linear proximity operators, based on Bregman distance functions (except in the accelerated variables of the accelerated schemes), in the spirit of the “Mirror-descent” methods introduced by Nemirovski and Yudin [21]. These were extensively studied by many authors, see in particular [2, 6, 29], and [20] in a primal–dual framework. See also [8, 25] for recent advances on such primal–dual algorithms, including stochastic versions. On the other hand, in the standard Euclidean setting, the algorithm we study can be shown to be a particular linearized variant of the ADMM algorithm for which a convergence theory, with more precise results, is found in [28]. We should add that the relationship between the type of algorithms which we study here and the ADMM was already stressed in [5] and that, in particular, one can derive from the analysis in [5] and in this paper convergence rates for the ADMM which are different from the ones currently found in the literature, see for instance [17].

We are addressing the following problem

which is the convex–concave saddle-point form of the “primal” minimization problem

Here, \({\mathcal {X}}\) and \({\mathcal {Y}}\) are, in the most general setting, real reflexive Banach spaces endowed with corresponding norms \(\Vert {\cdot }\Vert _{{x}}\) and \(\Vert {\cdot }\Vert _{{y}}\). Note however that in this setting it is quite restrictive to assume that K is bounded, so that the reader could assume that they are finite-dimensional. The only point where it matters is the fact that the estimates we compute never involve the dimension of the current spaces, except possibly through quantities such as \(\Vert K\Vert \). For notational simplicity, we will drop the subscript for the norms whenever there is no ambiguity. The dual spaces (spaces of all continuous linear functionals) are denoted by \({\mathcal {X}}^*\), and \({\mathcal {Y}}^*\). For \(x^*\in {\mathcal {X}}^*\) and \(x \in {\mathcal {X}}\), the bilinear form \(\left\langle x^*,x \right\rangle \) gives the value of the function \(x^*\) at x. Similar, for \(y^*\in {\mathcal {Y}}^*\) and \(y \in {\mathcal {Y}}, \left\langle y^*,y \right\rangle \) gives the value of the function \(y^*\) at y. The norms of the dual spaces are defined as

By definition, we also have that

We further assume that the following assumptions are fulfilled:

-

(i)

\(K:{\mathcal {X}}\rightarrow {\mathcal {Y}}^*\) is a bounded linear operator, with corresponding adjoint operator \(K^*:{\mathcal {Y}}\rightarrow {\mathcal {X}}^*\) defined by

$$\begin{aligned} \left\langle Kx,y \right\rangle = \left\langle K^*y,x \right\rangle \quad \forall (x,y)\in {\mathcal {X}}\times {\mathcal {Y}}. \end{aligned}$$Throughout the whole paper we will keep the notation “L” for the norm of this operator, defined by

$$\begin{aligned} L:= \Vert {K}\Vert _{{}} \; = \sup _{\Vert {x}\Vert _{{}}\le 1,\, \Vert {y}\Vert _{{}} \le 1 } \left\langle Kx,y \right\rangle \; = \sup _{\Vert {x}\Vert _{{}}\le 1} \Vert {Kx}\Vert _{{*}} = \Vert {K^*}\Vert _{{}} \; = \sup _{\Vert {y}\Vert _{{}} \le 1} \Vert {K^*y}\Vert _{{*}}. \end{aligned}$$Hence, we also have that

$$\begin{aligned} \left\langle Kx,y \right\rangle \le \Vert {Kx}\Vert _{{*}} \Vert {y}\Vert _{{}} \le L\, \Vert {x}\Vert _{{}} \Vert {y}\Vert _{{}}, \qquad \left\langle K^*y,x \right\rangle \le \Vert {K^*y}\Vert _{{*}} \Vert {x}\Vert _{{}} \le L\, \Vert {x}\Vert _{{}} \Vert {y}\Vert _{{}}. \end{aligned}$$For example, let \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{p}}\) and \(\Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{q}}\), with \(p,q \ge 1\), i.e. the usual \(\ell _p\) norms, then

$$\begin{aligned} \Vert {K}\Vert _{{}} = \sup _{\Vert {x}\Vert _{{p}}\le 1} \Vert {Kx}\Vert _{{q'}} = \sup _{\Vert {y}\Vert _{{q}}\le 1} \Vert {K^*y}\Vert _{{p'}} =\sup _{\begin{array}{c} \Vert {x}\Vert _{{p}}\le 1 \\ \Vert {y}\Vert _{{q}}\le 1 \end{array}} \left\langle Kx,y \right\rangle , \end{aligned}$$with \(p',q'\) such that \(1/p+1/p'=1\), and \(1/q+1/q'=1\).

-

(ii)

f is a proper, lower semicontinuous (l.s.c.), convex function, with \(\nabla f\) Lipschitz continuous on \({\mathcal {X}}\), i.e.

$$\begin{aligned} \Vert {\nabla f(x) - \nabla f(x')}\Vert _{{*}} \le L_f \Vert {x-x'}\Vert _{{}}, \quad \forall x,x' \in {\mathcal {X}}; \end{aligned}$$ -

(iii)

g, h are proper, l.s.c., convex functions with simple structure, in the sense that their proximal maps

$$\begin{aligned} \min _x g(x) + \frac{1}{\tau }D_x(x,\bar{x}), \quad \min _y h^*(y) + \frac{1}{\sigma }D_y(y,\bar{y}), \end{aligned}$$can be computed for any \(\tau ,\sigma > 0\).

Here \(D_x\) and \(D_y\) are Bregman proximity/distance functions based on 1-strongly convex (w.r.t. the respective norms) functions \(\psi _x\) and \(\psi _y\), defined by

Following [13], we assume that \(\psi _x, \psi _y\) are continuously differentiable on open sets \(S_x, S_y\), continuous on \(\overline{S}_x, \overline{S}_y\), and that given any converging sequences \((x^n)\) and \((y^n)\),

We may of course assume that \(\overline{S}_x\) and \(\overline{S}_y\) are the respective domains of \(\psi _x, \psi _y\). We need, in addition to [13], to assume the strong convexity of our functions to ensure the convergence of the algorithms studied in this paper. This restricts the possible class of Bregman functions, notice however that classical examples such as the entropy \(\psi _x(x)=\sum _{i=1}^d x_i\log x_i\) is well-known to be 1-strongly convex with respect to the 1-norm [2, 29] when restricted to the unit simplex, it is also strongly convex with respect to the 2-norm on bounded sets of \((\mathbb {R}_+)^d\). Eventually, we must assume here that \(\hbox {dom }g\subseteq \hbox {dom }\psi _x=\overline{S}_x\) and \(\hbox {dom }h^* \subseteq \hbox {dom }\psi _y=\overline{S}_y\).

Clearly, the Lipschitz continuity of f implies that

Furthermore, the 1-strongly convexity of \(\psi _x\) and \(\psi _y\) easily implies that for any \(x,\bar{x}\) and \(y, \bar{y}\), it holds

The most common choice for \(\psi _x\) and \(\psi _y\) is the usual squared Euclidean norm \(\frac{1}{2}\Vert {\cdot }\Vert _{{2}}^2\) (or Hilbertian in infinite dimension), which yields

We will refer to this classical case as the “Euclidean case”. In this case, it is standard that given a convex, l.s.c. function \(\phi \), if \(\hat{u}\) is the minimizer of

(which we call the “Euclidean proximity map” of \(\phi \) at \(\bar{u}\)), then by strong convexity one has for all u

It turns out that this property is true also for non-Euclidean proximity operators, that is

This is easily deduced from the optimality conditions for \(\hat{u}\), see [6, 30].

Before closing this section, we point out that most of our results still hold, if the function h is a convex l.s.c. function of the form [4, 19, 31]

so that

\(h^*_1\) having simple structure while \(\nabla h^*_2\) can be evaluated and is Lipschitz continuous with parameter \(L_{h^*_2}\). For the ease of presentation we will not consider this situation but we will mention when our results can be extended to this case.

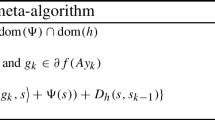

2 The general iteration

The main iterate of the class of primal–dual algorithms we consider in this paper is defined in (7). It takes the points \((\bar{x}, \bar{y})\) as well as the intermediate points \((\tilde{x}, \tilde{y})\) as input and outputs the new points \((\hat{x}, \hat{y})\). It satisfies the following descent rule:

Lemma 1

If (7) holds, then for any \(x\in {\mathcal {X}}\) and \(y\in {\mathcal {Y}}\) one has

Proof

From the first line in the above iteration (7) and property (5), it follows:

Moreover, from the convexity of f and (4) it follows

Combining this with the previous inequality, we arrive at

In the same way:

Summing (9), (10) and rearranging the terms appropriately, we obtain (8) \(\square \).

3 Non-linear primal–dual algorithm

In this section we address the convergence rate of the non-linear primal–dual algorithm shown in Algorithm 1:

The elegant interpretation in [16] shows that by writing the algorithm in this form (which “shifts” the updates with respect to [5]), in the Euclidean case, that is \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{2}}\), and \(D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{2}}^2, D_y(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{2}}^2\), then it is an instance of the proximal point algorithm [27], up to the explicit term \(\nabla f(x^n)\), since

where the variable \(z\in {\mathcal {X}}\times {\mathcal {Y}}\) represents the pair (x, y), and the matrix \(M_{\tau ,\sigma }\) is given by

which is positive-definite as soon as \(\tau \sigma L^2< 1\). A proof of convergence is easily deduced. Moreover, since in our particular setting we never really use the machinery of monotone operators, and rely only on the fact that we are studying a specific saddle-point problem, the results are a bit improved: in particular we deal easily with the explicit term f and non-linear proximity operators.

Theorem 1

Let \((x^n,y^n), n=0,\ldots ,N-1\) be a sequence generated by the non-linear primal–dual algorithm (11). Let the step size parameters \(\tau ,\sigma >0\) be chosen such that for all \(x,x' \in \hbox {dom }g\) and \(y,y' \in \hbox {dom }h^*\) it holds that

Then, for any \((x,y) \in {\mathcal {X}}\times {\mathcal {Y}}\) it holds that

where \(X^N = \frac{1}{N}\sum _{n=1}^N x^n\), and \(Y^N = \frac{1}{N}\sum _{n=1}^N y^n\).

Proof

According to the iterative scheme (11), the estimate (8) becomes

Thanks to (13), the terms in the brackets are non-negative. Now we sum the last estimate from \(n=0,\ldots ,N-1\) and find

where we have removed negative terms on the right hand side. Equation (14) follows from the convexity of \((\xi ,\eta ) \mapsto \mathcal {L}(\xi ,y)-\mathcal {L}(x,\eta )\). \(\square \)

Remark 1

Observe that since \(D_x(\cdot ,x')\) and \(D_y(\cdot ,y')\) are 1-convex, (13) is ensured as soon as

Remark 2

The rate (14) can also be written solely in terms of the distance functions \(D_x\) and \(D_y\). In fact, for any \(\alpha > 0\),

In case \(L_f = 0, \tau \sigma L^2 = 1\) and choosing \(\alpha =1/(\tau L)\), the rate (14) becomes

In the Euclidean setting, that is \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{2}} = \left\langle \cdot ,\cdot \right\rangle ^{\frac{1}{2}}\), and \(D(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{2}}^2, D(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{2}}^2\), the estimate (15) reduces to

with \(M_{\tau ,\sigma }\) defined in (12). This can also be rewritten as

while the final estimate (14) becomes

Observe that this rate is different from the rate obtained in [5], which does only depend on the diagonal part of \(M_{\tau ,\sigma }\) (each rate can be bounded by twice the other).

Remark 3

If we assume in addition that the inequality \(\tau \sigma L^2<1\) is strict (which follows from (16) if \(L_f>0\), and has to be assumed else), then we can deduce as in [5] convergence results for the algorithm, whenever a saddle-point \(z^*=(x^*,y^*)\) exists. The first thing to observe is that this inequality yields that

for some \(\alpha >0\). As a consequence, it follows from (15) that the sequence \(z^n=(x^n,y^n)\) is globally bounded [indeed, \(\mathcal {L}(X^{N},y^*)-\mathcal {L}(x^*,Y^{N}) \ge 0\)]. Obviously, this also yields a bound for \(Z^N=(X^N,Y^N)\). We may thus assume that a subsequence \((Z^{N_k})_k\) weakly converges in \({\mathcal {X}}\times {\mathcal {Y}}\) to some \(Z=(X,Y)\), and from (14) and the lower-semicontinuity of \(f,g,h^*\) it follows that the limit Z is a saddle-point.

In finite dimension, we can also show the convergence of the whole sequences \(z^n\) and \(Z^N\) to the same saddle-point. The proof follows the proof in [5, 26], in the linear case. Let us assume that z is a limit point for a subsequence \((z^{n_k})_k\), then since (15) guaranties the summability of \(\Vert z^{n+1}-z^n\Vert ^2\), we have that also \(z^{n_k\pm 1}\rightarrow z\). It follows that z is a fixed point of the algorithm and thus a saddle-point [which we now denote \(z^*=(x^*,y^*)\)].

Let \(m \ge 0\) be the limit of the nonincreasing sequence

we wish to show that \(m=0\). Since \(z^{n_k}\rightarrow z^*\) we deduce

Using assumption (3), we deduce \(m=0\). The convergence of the global sequence follows from (20). In Hilbert spaces of infinite dimension, the same proof shows weak convergence of the sequence for Euclidean proximity operators, invoking Opial’s theorem [24].

Remark 4

In the Euclidean setting and when \(g=0\), a better algorithm (in fact, optimal, see [21, 23]) is proposed in [7], which yields a rate of order \(O(L_f/N^2+ L/N)\).

Remark 5

In case h has the composite form (6), then the theorem still holds with the condition (16) replaced with

4 Overrelaxed and inertial variants

In this section, we consider overrelaxed and inertial versions of the primal–dual algorithm. We will only consider the Euclidean setting, that is \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{2}} = \left\langle \cdot ,\cdot \right\rangle ^{\frac{1}{2}}\), and \(D(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{2}}^2, D(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{2}}^2\), since our proofs heavily rely on the fact that \(\Vert {\cdot }\Vert _{{2}}^2 = \left\langle \cdot ,\cdot \right\rangle \).

4.1 Relaxed primal–dual algorithm

First we consider the overrelaxed primal–dual Algorithm 2, whose convergence has been considered already in [14, 16]. It is known that an overrelaxation parameter close to 2 can speed up the convergence but a theoretical justification was still missing.

Theorem 2

Assume \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{2}} = \left\langle \cdot ,\cdot \right\rangle ^{\frac{1}{2}}, D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{2}}^2, D_y(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{2}}^2\). Let \((\xi ^n,\eta ^n), n=0,\ldots ,N-1\) be a sequence generated by the overrelaxed Euclidean primal–dual algorithm (22). Let the step size parameters \(\tau ,\sigma >0\) and the overrelaxation parameter \(\rho _n\) be a non-decreasing sequence in \((0,\rho )\) with \(\rho < 2\) such that for all \(x,x' \in \hbox {dom }g\) and \(y,y' \in \hbox {dom }h^*\) it holds that

Then, for any \(z=(x,y) \in {\mathcal {X}}\times {\mathcal {Y}}\) it holds that

where \(X^N = \frac{1}{N}\sum _{n=1}^N \xi ^n\), and \(Y^N = \frac{1}{N}\sum _{n=1}^N \eta ^n\).

Proof

We start with the basic inequality (8). According to (22), using \(\bar{z} = z^n\) and \(\tilde{z} = (2\xi ^{n+1}-x^n,y^n)\) and \(\hat{z} = \zeta ^{n+1}\), we obtain

where \(M_{\tau ,\sigma }\) is defined in (12) and we have used the fact that \(2\left\langle a,b \right\rangle _M = \Vert {a}\Vert _{{M}}^2 + \Vert {b}\Vert _{{M}}^2 - \Vert {a-b}\Vert _{{M}}^2\). Now, observe that from the second line in (22), the auxiliary point \(\zeta ^{n+1}\) can be written as

Substituting back into the previous inequality, we have

where we have defined the metric

which is positive definite for all n as soon as (23) is fulfilled. In addition, since \(\rho _n\) is a non-decreasing sequence in \((0,\rho )\) with \(\rho < 2\), summing the above inequality for \(n=0,\ldots ,N-1\) and omitting all nonpositive terms on the right hand side, it follows

The final estimate (24) follows from defining appropriate averages and the convexity of the gap function. \(\square \)

Remark 6

The last result indeed shows that the convergence rate is improved by choosing \(\rho _0\) as large as possible, i.e. close to 2. However, observe that in case the smooth explicit term \(\nabla f\) is not zero, it might be less beneficial to use a overrelaxation parameter larger than one since it requires a smaller primal step size \(\tau \).

4.2 Inertial primal–dual algorithm

Next, we consider an inertial version of the primal–dual algorithm, who has recently been considered in [19] as an extension of the inertial proximal point algorithm of Alvarez and Attouch [1]. It has already been observed in numerical experiments that inertial terms leads to a faster convergence of the algorithm. Here we give a theoretical evidence that indeed the presence of an inertial term leads to a smaller worst-case complexity.

Theorem 3

Assume \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{2}} = \left\langle \cdot ,\cdot \right\rangle ^{\frac{1}{2}}, D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{2}}^2, D_y(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{2}}^2\). Let \((x^n,y^n), n=0,\ldots ,N-1\) be a sequence generated by the inertial Euclidean primal–dual algorithm (25). Let the step size parameters \(\tau ,\sigma >0\) and the inertial parameter \(\alpha _n\) be a non-decreasing sequence in \([0,\alpha ]\) with \(\alpha < 1/3\) such that for all \(x,x' \in \hbox {dom }g\) and \(y,y' \in \hbox {dom }h^*\) it holds that

Then, for any \(z=(x,y) \in {\mathcal {X}}\times {\mathcal {Y}}\) it holds that

where \(X^N = \frac{1}{N}\sum _{n=1}^N x^n\), and \(Y^N = \frac{1}{N}\sum _{n=1}^N y^n\).

Proof

We again start with the basic inequality (8). According to (25), using \(\bar{z} = \zeta ^n\) and \(\hat{z} = z^{n+1}\), we have

Plugging in the first line of (25) we arrive at

Using the inequality \(|\left\langle a,b \right\rangle _M| \le \frac{1}{2} \left( \Vert {a}\Vert _{{M}}^2 + \Vert {b}\Vert _{{M}}^2\right) \) we obtain the estimate

Now, since \(\alpha _n\ge 0\) is non-decreasing and \(z^{-1}=z^0\), summing the above inequality for \(n=0,\ldots ,N-1\), we find:

where

which is positive definite for all n as soon as (26) is fulfilled for all \(\alpha _n\le \alpha < 1/3\) since the function \(\frac{(1+\alpha _n)^2}{1-3\alpha _n}\) is monotonically increasing in \(\alpha _n\). Our last estimate can be further simplified as

It remains to show that the term in the last two lines of the above estimate is nonpositive. In fact:

as \(\alpha _n \le \alpha < 1/3\) and as the matrix

is clearly positive definite if (26) is fulfilled. It remains to derive the ergodic rate by defining appropriate averages and exploiting the convexity of the gap function. \(\square \)

Remark 7

This result again shows that it is beneficial to choose \(\alpha _0\) as large as possible, i.e. \(\alpha _0\) close to 1 / 3 in order to reduce the constant on the right hand side. Similar to the case of overrelaxation, larger values of \(\alpha _n\) leads to smaller primal step sizes \(\tau \) and hence an inertial term might be less beneficial in presence of an explicit term \(\nabla f\).

Remark 8

Letting \(\gamma = \tau L_f\) we find that the parameter \(\alpha \) should satisfy

in order for the left-hand side term in (26) to be positive (and then \(\sigma \) needs to be chosen accordingly). We point out that this condition is a bit more restrictive than the condition in [19]. This is due to the fact that our convergence proof is based on the Lipschitz continuity of \(\nabla f\) rather than its co-coercivity, which leads to the loss of a factor 2 in the size of the primal step size \(\tau \) relatively to the Lipschitz parameter \(L_f\).

5 Acceleration for strongly convex problems

Here in this section, we slightly improve the results in [5] on accelerated algorithms. We address more precisely the natural generalization proposed in [9] (also [31]) and studied in [4] (where rates of convergence are proven). The main novelty with respect to [4] is a proof that in an ergodic sense, also the primal–dual gap is controlled and decreases at rate \(O(1/N^2)\) where N is the number of iterations. In addition to our assumptions (i)–(iii) we assume that

-

(iv)

f or g (or both) are strongly convex with respective parameters \({\gamma _f},{\gamma _g}\) and hence the primal objective is strongly convex with parameter \(\gamma ={\gamma _f}+{\gamma _g}>0\).

In fact, we observe that since

we can “transfer” the strong convexity of f to g: letting \(\tilde{f}= f-{\gamma _f}\Vert {\cdot }\Vert _{{}}^2/2, \tilde{g}= g+{\gamma _f}\Vert {\cdot }\Vert _{{}}^2/2\), and \(\gamma ={\gamma _f}+{\gamma _g}\), we have now that \(\tilde{g}\) is \(\gamma \)-convex. In addition, \(\nabla \tilde{f}=\nabla f-{\gamma _f}I\), so that

with

(observe that \(\tau \) needs, as expected, to be less than \(1/{\gamma _f}>1/L_f\)). In addition, we find that \(\nabla \tilde{f}\) is \((L_f-{\gamma _f})\)—Lipschitz. Hence in the following, to simplify we will just assume that g is strongly convex (that is, \({\gamma _f}=0, \gamma ={\gamma _g}\)), replacing assumption (iv) with the simpler assumption:

-

(iv’)

g is strongly convex with parameter \(\gamma >0\).

We must eventually mention here that in case \(f=0\), the dual problem, which has the form \(\min _y g^*(-K^*y)+h^*(y)\), is the sum of a smooth plus a nonsmooth objective which could be tackled directly by more standard optimal methods [3, 22, 23] yielding similar convergence rates (provided one knows how to compute the Lipschitz gradient \(\nabla g^*\), which is slightly different from the assumptions we use in this paper).

5.1 Convergence analysis

With this additional assumption, the descent rule (9) can be slightly improved: indeed, thanks to the strong convexity of g, we can control an additional quadratic term on the right-hand side. It follows that for any \(x\in {\mathcal {X}}\),

It follows that (8) is also improved, with the additional term \(\tfrac{\gamma }{2}\Vert {x-\hat{x} }\Vert _{{}}^2\) on the left-hand side. One sees that one will be able to obtain a good convergence rate whenever the last two terms in (29) can be combined into one, which requires that \(D_x(x,\hat{x})=\frac{1}{2}\Vert {x-\hat{x}}\Vert _{{2}}^2\), that is, we must consider linear proximity operators in the x variable.Footnote 1 To simplify the notation we will drop the subscript “2” in the norm for x, in the rest of this section.

Now, we can specialize “à la” [5]. That is, we choose in (8) \(\tilde{y}=\hat{y}=y^{n+1}, \hat{x}=x^{n+1}, \tilde{x} = x^n+\theta _n (x^n-x^{n-1}), \bar{x}=x^n, \bar{y}=y^n\), and make \({\tau },\sigma \) depend also on the iteration counter n. In particular, now, for any \((x,y)\in {\mathcal {X}}\times {\mathcal {Y}}\),

The last term is simply controlled by observing that

for any \(\mu >0\), and choosing \(\mu =\theta _n L \sigma _n\) we obtain

To sum up, it follows from (8), with the additional strong convexity term from (29), that for any (x, y), using also that \(D_y(y^{n+1},y^n)\ge \frac{1}{2}\Vert {y^{n+1}-y^n}\Vert _{{}}^2\),

Assume the sequences \(\theta _n,\tau _n,\sigma _n\) satisfy for all \(n\ge 0\)

Then (31) becomes (using \(\theta _n^2L^2\sigma _n=\theta _n L^2\sigma _{n-1}\), thanks to (33))

Observe that from (33), \(\prod _{n=1}^N \theta _n = \sigma _0/\sigma _N\). We now let

Then, summing (35) (first multiplied on both sides by \(\sigma _n/\sigma _0\)) from \(n=0\) to \(n=N-1\) and assuming \(x^{-1}=x^0\), using also the convexity of \((\xi ,\eta )\mapsto \mathcal {L}(\xi ,y)-\mathcal {L}(x,\eta )\) (for fixed x, y), we deduce

Considering eventually that [using again (33)]

we deduce the following result.

Theorem 4

Assume \(D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{x}}^2\). Let \((x^n,y^n)\) be a sequence generated by Algorithm 4, and let \((X^N,Y^N)\) and \((T_N)\) be the ergodic averages given by (36). Then, for all x and y, for all \(N\ge 1\), one has the estimate

Remark 9

Notice that, taking \((x,y )=(x^*,y^*)\) a saddle-point in (37), we find that \(X^N\) and \(x^N\) are bounded (and converge to \(x^*\)). If we assume that h has full domain, so that \(h^*(y)/|y|\rightarrow \infty \) as \(|y|\rightarrow \infty \), we deduce that also \(Y^N\) is bounded (since otherwise \(-\mathcal {L}(x^*,Y^N)\) would go to infinity), and it follows that the (x, y) which realize the supremum in the expression \(\mathcal {L}(X^N,y)-\mathcal {L}(x,Y^N)\) are also globally bounded. It follows the global estimate on the gap

5.2 Parameter choices

It turns out that it is possible to choose sequences \((\tau _n,\sigma _n,\theta _n)\) satisfying (32), (33), (34) in order to have \(1/T_N = O(1/N^2)\). A possible choice, similar to [5], to ensure (32), (33), (34) is to keep the product \(\sigma _n{\tau }_n\) constant and let

Then, letting

(or \(\tau _0=\sigma _0=1/L\) if \(L_f=0\)), we find that by induction, since \({\tau }_{n+1}/{\tau }_n=\sigma _n/\sigma _{n+1}<1\) for each n, (34) will be satisfied. We refer to [5] for a proof that this choice ensures that \(\sigma _n\sim \gamma n/(4 L^2)\), so that \(T_N\sim \gamma N^2/(4 L_f)\).

A simpler (still slightly suboptimal) choice is to take \(\sigma _0>0\) arbitrary, and

Then, (32), (33), (34) hold, and

Observe that in this case,

and

that is, the equality holds in (32).

The optimal rule should consist in choosing equalities in (32), (33) and (34). We find that \(\sigma _0>0\) can be chosen arbitrarily,

and then:

so that, assuming \(L_f\tau _n<1\) (which is true for \(n=0\)),

and

Let us denote \(\tau _n^{\textit{opt}}, \sigma _n^{\textit{opt}}\) and \(T_N^{\textit{opt}}\) the \(\tau _n, \sigma _n\) and \(T_N\) obtained by this “optimal” rule (and the corresponding \(T_N\)) and let us keep the notation \(\tau _n, \sigma _n, T_N\) for the expressions in (40) and (41). These choices, in particular, satisfy the equality in (32), (33), but a strict inequality (for \(n\ge 1\)) in (34). We assume that the starting point \(\sigma _0=\sigma _0^{\textit{opt}}\) is the same, then of course also \(\tau _0=\tau _0^{\textit{opt}}\). Then one has:

Lemma 2

For each \(n\ge 0, \sigma _n^{\textit{opt}}\ge \sigma _n\), and \(T_n^{\textit{opt}}\ge T_n\sim c n^2\).

Proof

We proceed by induction. We assume \(\sigma _n^{\textit{opt}}\ge \sigma _n\), which is true for \(n=0\). For practical reasons, let us set \(X^{\textit{opt}}_n=L^2\sigma ^{\textit{opt}}_n, Y^{\textit{opt}}_n = -1/{ \tau ^{\textit{opt}}_n}, X_n=L^2\sigma _n\), and \(Y_n = -1/{ \tau _n}\). Then from the equality in (32), we have for all n

We also assume \(\Pi _n :=X_nY_n \ge \Pi ^{\textit{opt}}_n := X^{\textit{opt}}_n Y^{\textit{opt}}_n\), which is true at \(n=0\). It follows then that from (42) and \(X^{\textit{opt}}_n\ge X_n\) that \(\Pi _{n+1}\ge \Pi ^{\textit{opt}}_{n+1}\). Observe that being this product negative, it means in fact that \(|\Pi _{n+1}|\le |\Pi ^{\textit{opt}}_{n+1}|\).

On the other hand, from (34), one has that

(and, again, \(|\Sigma _{n+1}|\ge |\Sigma ^{\textit{opt}}_{n+1}|\)).

One has then

which, by concavity of \(\sqrt{\cdot }\) and since \(\Sigma _{n+1}^2\ge (\Sigma ^{\textit{opt}}_{n+1})^2, |\Pi _{n+1}|\le |\Pi ^{\textit{opt}}_{n+1}|\), is less than the similar expression for \(X^{\textit{opt}}_{n+1}\). This shows the Lemma. \(\square \)

6 Acceleration for smooth and strongly convex problems

In this section, we finally make the additional assumption that

-

(v)

\(h^*\) is strongly convex with parameter \(\delta >0\).

Equivalently, h has \((1/\delta )\)-Lipschitz gradient, so that the primal objective is both smooth and strongly convex. Then, as expected, the rate can be improved, to linear convergence. In this section, we must assume that the Bregman distance functions satisfy \(D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{2}}^2\) and \(D_y(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{2}}^2\), that is, we are for both variables in the Euclidean/Hilbertian setting. For simplicity we will drop the subscript “2” in the rest of this section.

We show here how to modify the proof of the previous case to obtain a linear convergence rate on the gap. This slightly improves the results in [4, 5] which only give a rate on the iterates. In contrast to [5], we do not show here convergence for a large range of relaxation parameters \(\theta \), but the proof presented can handle the explicit term \(\nabla f\) and yields a similar convergence rate.

6.1 Convergence analysis

A first remark is that the inequality (31), in case \(h^*\) is \(\delta \)-convex, can be written

It follows that if one can choose \(\tau ,\sigma ,\theta \) so that

then, multiplying the inequality by \(\theta ^{-n}\) and summing from \(n=0\) to \(N-1\), we get (assuming \(x^{-1}=x^0\))

Using (45) again, we deduce

Hence, letting now

we obtain the following result

Theorem 5

Assume \(D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{x}}^2\) and \(D_y(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{y}}^2\). Let \((x^n,y^n)\) be a sequence generated by Algorithm 5, and let \((X^N,Y^N)\) and \((T_N)\) be the ergodic averages defined in (46). Then, for all x and y, for all \(N\ge 1\), one has the estimate

which yields a linear convergence rate.

6.2 Parameter choices

Solving the Eqs. (44) for \(\tau ,\sigma ,\theta \), we obtain, lettingFootnote 2

In case \(L_f=0\), one has

and the above formulas simplify to

In this case, if \(\delta \gamma \ll L^2\), then the linear rate of convergence is governed by the factor

we remark that this is of the same order as the rate found in [5] (which was not a bound on the gap though). Note however that a more refined analysis in [5] allowed to obtain better rates, for a larger choice of parameters \(\theta \). A similar analysis with \(L_f\ne 0\) would probably lead to close results through very tedious calculations. On the other hand, the new proof allows for slightly larger steps than in [5].

7 Computational examples

In this section we demonstrate the practical performance of the proposed algorithms on a number of randomly generated instances of classical optimization problems.

7.1 Matrix games

Here, we consider the following min–max matrix game:

where \(\Delta _k\) and \(\Delta _l\) denote the standard unit simplices in \(\mathbb {R}^k\) and \(\mathbb {R}^l\) and \(K\in \mathbb {R}^{k\times l}\). This class of min–max matrix games can be used for approximately finding the Nash equilibrium of two-person zero-sum matrix games such as two-person Texas Hold’em Poker. Following the computational experiments in [23], the entries of K are independently and uniformly distributed in the interval \([-1,1]\). We denote by \(L=\Vert {K}\Vert _{{}}\) the operator norm of K. We can also easily compute the primal–dual gap of a feasible pair (x, y). For this we observe that \(\arg \min _{x\in \Delta _l} \mathcal {L}(x,y) = e_j\), where \(e_j\in \Delta _l\) is the j-th standard basis vector corresponding to the smallest entry of the vector \(K^Ty\). Likewise, \(\arg \max _{y\in \Delta _k} \mathcal {L}(x,y) = e_i\), where i corresponds to the coordinate of the largest entry of Kx. Hence, the primal–dual gap is computed as

7.1.1 Linear and nonlinear primal–dual algorithms

We first consider different Bregman distance settings of the nonlinear primal–dual algorithm presented in Algorithm 1. The initial points \((x^0,y^0)\) are chosen to be the centers of the simplices, that is \(x^0_j=1/l\) and \(y^0_i = 1/k\) for all i, j. There are two obvious choices for the Bregman distance functions:

-

1.

Euclidean setting In the Euclidean setting, \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{2}}, D_x(x,x')=\tfrac{1}{2}\Vert {x-x'}\Vert _{{}}^2\), and \(D_y(y,y')=\tfrac{1}{2}\Vert {y-y'}\Vert _{{}}^2\). It follows that \(\max _{x\in \Delta _l} D_x(x,x^0) = (1-\frac{1}{l})/2\) and likewise \(\max _{y\in \Delta _k} D_y(y,y^0) = (1-\frac{1}{k})/2\). The operator norm is computed as the largest singular value \(L_2 = s_{\max }(K)\). In the iterates of the algorithm, we need to solve subproblems of the following form:

$$\begin{aligned} \hat{x} = \arg \min _{x \in \Delta _l} \left\langle x,\xi \right\rangle + \frac{\Vert {x - \bar{x}}\Vert _{{}}}{2\tau }^2 \Leftrightarrow \hat{x} = \hbox {proj}_{\Delta _l}\left( \bar{x} - \tau \xi \right) , \end{aligned}$$where we are using the \(n\log n\) algorithm described in [12] to compute the orthogonal projections on the unit simplices. Taking the supremum on the right hand side of (17), the ergodic O(1 / N) rate for the primal–dual gap bounded by

$$\begin{aligned} {\mathcal {G}}\left( X^N,Y^N\right) \le \frac{2}{N}\left( \frac{1-\frac{1}{l}}{2\tau } + \frac{1-\frac{1}{k}}{2\sigma }\right) . \end{aligned}$$Since \(\tau \sigma L_2^2 = 1\), the right hand side is minimized by setting

$$\begin{aligned} \tau = \frac{1}{L_2}\sqrt{\frac{1-\frac{1}{l}}{1-\frac{1}{k}}}, \quad \sigma = \frac{1}{L_2}\sqrt{\frac{1-\frac{1}{k}}{1-\frac{1}{l}}}. \end{aligned}$$Hence we get the following final estimate for the gap

$$\begin{aligned} {\mathcal {G}}\left( X^N,Y^N\right) \le \frac{2\sqrt{\left( 1-\frac{1}{l}\right) \left( 1-\frac{1}{k}\right) }}{N}L_2. \end{aligned}$$ -

2.

Entropy setting In the entropy setting we choose \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{y}} = \Vert {\cdot }\Vert _{{1}}\) and the Bregman distance functions are given by \(D_x(x,x') = \sum _j x_j(\log x_j - \log x'_j) - x_j + x'_j\) and \(D_y(y,y') = \sum _i y_i(\log y_i - \log y'_i) - y_i + y'_i\), which are 1-convex with respect to the 1-norm. Now, \(\max _{x\in \Delta _l} D_x(x,x^0) = \log l\) and \(\max _{y\in \Delta _k} D_y(y,y^0) = \log k\). The operator norm is given by \(L_1 = \sup _{\Vert {x}\Vert _{{1}} = 1} \Vert {Kx}\Vert _{{\infty }} = \max _{i,j} |K_{i,j}|\).

It is well known that in the entropy setting, the iterates of the algorithm are explicit:

$$\begin{aligned} \hat{x} = \arg \min _{x \in \Delta _l} \left\langle x,\xi \right\rangle + \frac{1}{\tau }D_x(x, \bar{x}) \Leftrightarrow \hat{x}_j = \frac{\bar{x}_j \exp (-\tau \xi _j)}{\sum _{j=1}^l \bar{x}_j \exp (-\tau \xi _j)} \end{aligned}$$In turn, the ergodic O(1 / N) rate in (17) specializes to

$$\begin{aligned} {\mathcal {G}}\left( X^N,Y^N\right) \le \frac{2}{N}\left( \frac{\log l}{\tau } + \frac{\log k}{\sigma }\right) . \end{aligned}$$Again, the right hand side is minimized by choosing

$$\begin{aligned} \tau = \frac{1}{L_1}\sqrt{\frac{\log l}{\log k}}, \quad \sigma = \frac{1}{L_1}\sqrt{\frac{\log k}{\log l}} \end{aligned}$$We obtain the final estimate as

$$\begin{aligned} {\mathcal {G}}\left( X^N,Y^N\right) \le \frac{4\sqrt{\log l \log k}}{N}L_1. \end{aligned}$$

Remark 10

Since the entries of K are uniformly distributed in \([-1,1]\), the solutions of the min–max matrix games always cluster around the simplex centers 1 / k and 1 / l. In this region, the entropy functions are strongly k- and l-convex, respectively, and hence for small variations around the center,

With this information we can get a better (local) estimate of the operator norm:

In practice, we observed that slightly larger values, e.g. \(1.7 \cdot L_{\mathrm {cent}}\) worked very well in our experiments. It would be of great interest to find a method which is able to exploit this variability, unfortunately we were not able to find a rigorous and efficient method.

First let us observe that our theoretical worst case bounds for the matrix games are exactly the same as the corresponding worst case bounds in [23]. In Table 1 we report the number of iterations of the O(1 / N) primal–dual algorithms using the Euclidean setting and the entropy setting to reach a primal–dual gap that is less than \(\varepsilon \). One can see that the entropy-based algorithm is consistently faster compared to the Euclidean-based algorithm. Furthermore, one can see that the complexity for finding an \(\varepsilon \) accurate solution grows, as predicted in Theorem 1, with a factor of order \(1/\varepsilon \). Indeed, one can see that reducing \(\varepsilon \) by a factor of 10 roughly leads to ten times more iterations. Comparing the results with the results reported in [23] the proposed algorithms are significantly faster. Also observe that counterintuitively, less iterations are needed for larger problems. This might be due to the fact that the value of the gap of theses problems at the centers of the simplices goes to zero as the size goes to infinity, making this initialization more beneficial for larger problems.

7.1.2 Ergodic versus nonergodic sequence

We also tested the performance of the nonergodic sequences, i.e. the rate of convergence of the primal–dual gap of the iterates \((x^n,y^n)\). Figure 1 depicts logarithmic convergence plots in the setting \(k=l=1000\), for both the Euclidean and the entropy setting. It shows that in the Euclidean setting, the nonergodic sequence converges even faster than the ergodic sequence. In the entropy setting however, we observed the contrary. The nonergodic sequence converges much slower than the ergodic sequence. We do not know the reason for this behavior. For both ergodic sequences, the gap decreases exactly at rate O(1 / N) as predicted by the analysis.

Comparison between the performance of the ergodic and the nonergodic sequences of Algorithm 1 applied to the matrix game problem (50)

7.1.3 Overrelaxed and inertial primal–dual algorithms

In this section, we evaluate the performance of the overrelaxed and inertial version of the Euclidean primal–dual algorithm detailed in Algorithm 2 and Algorithm 3. We vary the relaxation parameter \(\rho \) and the inertial parameter \(\alpha \) (which are kept constant during the iterations) and record the number of iterations that are necessary to reach a primal–dual gap which is less a tolerance of \(\varepsilon =10^{-4}\). For both, the inertial and overrelaxed versions, we observe that the algorithms are still converging for the theoretical limits \(\rho =2\) and \(\alpha =1/3\).

In Table 2, we report the number of iterations using different values of the relaxation parameter \(\rho \). As predicted in Theorem 2, the number of iterations are approximately proportional to the factor \(1/\rho \). In Table 3, we report the number of iterations using different inertial parameters \(\alpha \). Again, as predicted in Theorem 3, the number of iterations roughly correspond to the factor \(1-1/\alpha \).

7.2 Simplex constrained least squares problem

In this section, we consider the following class of simplex-constrained least squares problems

where \(\Delta _l\) again denotes the standard unit simplex in \(\mathbb {R}^l\) and \(K \in \mathbb {R}^{k\times l}\), and \(b \in \mathbb {R}^k\). Several standard optimization problems used in machine learning such as the support vector machine can be obtained as special cases from (51). Here, K and b are randomly generated with their entries uniformly and independently distributed in the interval \([-1,1]\). We again denote by \(L=\Vert {K}\Vert _{{}}\) the operator norm of K. The saddle-point formulation of (51) is given by

Furthermore, the dual problem is given by

In turn, the primal–dual gap for a pair (x, y) can be easily computed by observing that \(\arg \min _{x \in \Delta _l} \mathcal {L}(x,y) = e_j\) and also \(\arg \max _y \mathcal {L}(x,y) = Kx-b\):

7.2.1 Accelerated primal–dual algorithms

Note that since the saddle-point problem is strongly convex in y, we can use the accelerated primal–dual algorithm presented in Algorithm 4 (by interchanging the role of the primal and the dual variables). Since \(L_f=0\), we apply the simple parameter choice (39). We initialize the algorithms with the obvious choice \((x^0)_j = 1/l\) for all j and \(y^0=Kx^0-b\). Recall that in the accelerated algorithm, we have fixed \(\Vert {\cdot }\Vert _{{y}}=\Vert {\cdot }\Vert _{{2}}\) and hence \(D_y(y,y') = \frac{1}{2}\Vert {y-y'}\Vert _{{}}^2\). Let us now consider two different setups of the algorithm:

-

1.

Euclidean setting In the Euclidean setting, we set \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{2}}\) and hence \(D_x(x,x') = \frac{1}{2}\Vert {x-x'}\Vert _{{}}^2\). The operator norm \(L_2=\Vert {K}\Vert _{{}}\) is again given by \(s_{\max }(K)\). According to (37), we guaranteed that after N iterations for all (x, y) it holds that

$$\begin{aligned} T_N \left( \mathcal {L}(X^N,y) - \mathcal {L}(x,Y^N)\right) \le \frac{\Vert {x-x^0}\Vert _{{}}^2}{2\tau _0} + \frac{\Vert {y-y^0}\Vert _{{}}^2}{2\sigma _0} \end{aligned}$$Substituting \(y=\arg \max _y \mathcal {L}(X^N,y) = KX^N-b\), we obtain for all x

$$\begin{aligned} T_N \left( {\mathcal {P}}(X^N) - \mathcal {L}(x,Y^N)\right) \le \frac{\Vert {x-x^0}\Vert _{{}}^2}{2\tau _0} + \frac{\Vert {K(X^N-x^0)}\Vert _{{}}^2}{2\sigma _0} \end{aligned}$$Taking the supremum with respect to x on both sides, it follows

$$\begin{aligned} T_N {\mathcal {G}}(X^N,Y^N)&\le \sup _{x\in \Delta _l} \frac{\Vert {x-x^0}\Vert _{{}}^2}{2\tau _0} + \frac{\Vert {K(X^N-x^0)}\Vert _{{}}^2}{2\sigma _0}\\&\le \frac{1-\frac{1}{l}}{2\tau _0} + \frac{\Vert {K(X^N-x^0)}\Vert _{{}}^2}{2\sigma _0}\\&\le \frac{1-\frac{1}{l}}{2\tau _0} + \frac{(1-\frac{1}{l})}{2\sigma _0}L_2^2. \end{aligned}$$The right hand side is minimized by choosing \(\tau _0 = 1/L_2^2\) and \(\sigma _0=1\) which gives the final estimate

$$\begin{aligned} {\mathcal {G}}(X^N,Y^N) \le \frac{1-\frac{1}{l}}{T_N}L_2^2, \end{aligned}$$where \(T_N \sim O(N^2)\) is defined in (36).

-

2.

Entropy setting In the entropy setting, we choose \(\Vert {\cdot }\Vert _{{x}} = \Vert {\cdot }\Vert _{{1}}\) and \(D_x(x,x') = \sum _j x_j(\log x_j - \log x'_j) - x_j + x'_j\). The operator norm is now given by \(L_{12} =\Vert {K}\Vert _{{}} = \sup _{\Vert {x}\Vert _{{1}} \le 1} \Vert {Kx}\Vert _{{2}} = \max _j \Vert {K_j}\Vert _{{2}}\) where \(K_j\) denotes the j-th column of K, which is typically smaller than \(L_2\). In analogy to the above calculations, we have

$$\begin{aligned} T_N {\mathcal {G}}(X^N,Y^N) \le&\sup _{x} \frac{D_x(x,x^0)}{\tau _0} + \frac{\Vert {K(X^N-x^0)}\Vert _{{}}^2}{2\sigma _0}\\ \le&\frac{\log l}{\tau _0} + \frac{L_2^2(1-\frac{1}{l})}{2\sigma _0}. \end{aligned}$$The optimal choice for \(\tau _0\) and \(\sigma _0\) is now

$$\begin{aligned} \tau _0 = \sqrt{\frac{2 \log l}{L_{12}^2L_2^2(1-\frac{1}{l})}}, \quad \sigma _0 = \sqrt{\frac{L_2^2 (1-\frac{1}{l})}{2 L_{12}^2 \log l}}, \end{aligned}$$which yields the final estimate

$$\begin{aligned} {\mathcal {G}}(X^N,Y^N) \le \frac{L_{12}L_2\sqrt{\frac{(1-\frac{1}{l})\log l}{2}}}{T_N}. \end{aligned}$$

We also observed that in the entropy setting, we can choose larger step sizes: choosing \(L_{12} = 0.35\cdot \max _j \Vert {K_j}\Vert _{{2}}\) gave experimentally good results. In Table 4, we report the number of iterations for Algorithm 4 in the Euclidean and the entropy setting. One can see that in the entropy setting, the algorithm converges significantly faster. Furthermore, one can see that the number of iterations which are necessary to reach a primal–dual gap less than \(\varepsilon \) nicely reflect the \(O(1/N^2)\) rate of Algorithm 4. Indeed, reducing \(\varepsilon \) by a factor of 10 roughly leads to \(\sqrt{10} \approx 3.16\) more iterations.

7.2.2 Ergodic versus nonergodic sequence

We also investigated the performance difference between the ergodic and the nonergodic sequences. Figure 2 shows a comparison between the ergodic and the nonergodic sequences for both the Euclidean and the entropy setup for the simplex constrained least squares problem (51) using \(k=100, l=1000\). While the ergodic sequences both show a \(O(1/N^2)\) rate, the nonergodic sequences show a completely different behavior. In the entropy setting, the nonergodic sequence converges a little bit faster but is seems to be quite unstable. In the Euclidean setting, the nonergodic sequence converges extremely fast. We do not know the reason for this, but it will be interesting to find an alternative proof for the convergence rate that does not rely on the ergodic sequence.

Comparison between the performance of the ergodic and the nonergodic sequences of Algorithm 4 applied to the simplex constrained least squares problem (51)

7.3 Elastic net problem

Finally, we consider the elastic net problem which has been extensively used for feature selection and sparse coding. It is written as the following optimization problem:

where \(K \in \mathbb {R}^{k\times l}\) is a matrix where its columns are features and \(b\in \mathbb {R}^k\) is the measurement vector. For \(\lambda _2=0\), the elastic net is equivalent to the well-known LASSO problem. It can be rewritten as the following saddle-point problem:

Observe that the above problem is \(\lambda _2\)-strongly convex in x and 1-strongly convex in y. Hence, we can make use of the linearly converging Algorithm 5. The dual problem is computed as

where the expressions \(|K^Ty|\) and \((t)^+ = \max (0, t)\) are understood element-wise. In turn the primal–dual gap can be computed as

In our experiments, we again choose the entries of K and b uniformly and independently in the interval \([-1,1]\) and we again denote by \(L_2=\Vert {K}\Vert _{{}} = s_{\max }(K)\) the largest singular value of K. We compute the values for \(\tau , \sigma \) and \(\theta \) using the formulas provided in (49) and we choose \(x^0=0, y^0=Kx^0-b\). According to (47), after N iterations, we have for all (x, y):

Taking the supremum on the left hand with respect to (x, y) we find \(x = (|K^TY^N|-\lambda _1)^+\cdot \mathrm {sgn}(-K^TY^N)/\lambda _2\) and \(y=KX^N-b\). Substituting back we obtain the final estimate

where \(T_N \sim O(\theta ^{-N})\) is defined in (46) and \(\tau ,\sigma \) are chosen according to (49).

For the implementation of the algorithm we need to solve the proximal map with respect to the mixed \(\ell _1\)-\(\ell _2\) norm appearing in the primal problem. The solution is given by:

where the operations are understood element-wise.

In Table 5 we evaluate Algorithm 5 for different problem instances of (53). We set \(\lambda _1=1\) and used different values of \(\lambda _2\) in order to study the behavior of the algorithm for different degrees of convexity. The table reports the number of iterations that were needed to achieve a primal–dual gap less than the error tolerance \(\varepsilon \). One can see that in general, a smaller value of \(\lambda _2\) leads to a smaller strong convexity parameter of the primal problem and hence the problem appears more difficult to the algorithm. Thanks to the \(O(\theta ^N)\) linear convergence rate of the algorithm, reducing the required tolerance by a factor of 10 only leads to a small increase of the required iterations.

7.3.1 Ergodic versus nonergodic sequence

Finally Fig. 3 shows the performance difference between the ergodic sequence and the nonergodic sequence for the elastic net problem using \(k=100, l=1000, \lambda _1 = 1\), and \(\lambda _2=10^{-3}\). One can see that while the performance of the ergodic sequence is again well predicted by the worst case rate \(O(\theta ^N)\), the performance of the nonergodic sequence is again superior.

Comparison between the performance of the ergodic and the nonergodic sequences of Algorithm 5 applied to the elastic net problem (53)

8 Conclusion

In this work, we have presented refined ergodic convergence rates for a first-order primal–dual algorithm for composite convex–concave saddle-point problems. Some of the presented proofs are quite elementary and easily extend to non-linear Bregman distance functions and inertial or overrelaxed variants of the algorithm. Furthermore, we have given refined ergodic convergence rates in terms of the primal–dual gap function for accelerated variants of the algorithm. We have illustrated the theoretical results by applying the algorithms to a number of standard convex optimization problems including matrix games, simplex constrained least squares problems and the elastic net selector. The numerical results show a behaviour which corresponds nicely to the theoretical predictions. We have also observed that in the Euclidean setting, the nonergodic sequences very often converge much faster than the ergodic sequences. We will investigate this issue in more detail in our future research. Furthermore, it will be interesting to investigate strategies to dynamically adjust the step sizes (\(\tau _n, \sigma _n\) and \(\theta _n\)) to the local smoothness of the problem, which can vary a lot during the optimization (see Remark 10).

Notes

It must be observed here that the right assumption on g to obtain an accelerated scheme with an arbitrary Bregman distance \(D_x\) should be that g is “strongly convex with respect to \(\psi _x\)”, in the sense that \(g-\gamma \psi _x\) is convex. The proof would then be similar. However, it is not clear whether this covers very interesting situations beyond the standard case.

Using WolframAlpha to check our calculations.

References

Alvarez, F., Attouch, H.: An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 9(1–2), 3–11 (2001)

Beck, A., Teboulle, M.: Mirror descent and nonlinear projected subgradient methods for convex optimization. Oper. Res. Lett. 31(3), 167–175 (2003)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Boţ, R.I., Csetnek, E.R., Heinrich, A., Hendrich, C.: On the convergence rate improvement of a primal–dual splitting algorithm for solving monotone inclusion problems. Math. Program. 150(2, Ser. A), 251–279 (2015)

Chambolle, A., Pock, T.: A first-order primal–dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chen, G., Teboulle, M.: Convergence analysis of a proximal-like minimization algorithm using Bregman functions. SIAM J. Optim. 3(3), 538–543 (1993)

Chen, Yunmei, Lan, Guanghui, Ouyang, Yuyuan: Optimal primal–dual methods for a class of saddle point problems. SIAM J. Optim. 24(4), 1779–1814 (2014)

Combettes, P.L., Condat, L., Pesquet, J.-C., Vũ, B.C.: A forward–backward view of some primal–dual optimization methods in image recovery. In: Proceedings ICIP 2014 Conference, Paris, Oct. 2014 (2014) (to appear)

Condat, L.: A primal–dual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl. 158(2), 460–479 (2013)

Davis, D., Yin, W.: A three-operator splitting scheme and its optimization applications. Technical report, CAM Report 15-13/preprint arXiv:1504.01032 (2015)

Drori, Y., Sabach, S., Teboulle, M.: A simple algorithm for a class of nonsmooth convex–concave saddle-point problems. Oper. Res. Lett. 43(2), 209–214 (2015)

Duchi, J., Shalev-Shwartz, S., Singer, Y., Chandra, T.: Efficient projections onto the \(\ell _1\)-ball for learning in high dimensions. In: Proceedings of the 25th international conference on machine learning, ICML ’08, pages 272–279, New York (2008). ACM

Eckstein, J.: Nonlinear proximal point algorithms using bregman functions, with applications to convex programming. Math. Oper. Res. 18(1), 202–226 (1993)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55(3, Ser. A), 293–318 (1992)

Esser, E., Zhang, X., Chan, T.F.: A general framework for a class of first order primal–dual algorithms for convex optimization in imaging science. SIAM J. Imaging Sci. 3(4), 1015–1046 (2010)

He, B., Yuan, X.: Convergence analysis of primal–dual algorithms for a saddle-point problem: from contraction perspective. SIAM J. Imaging Sci. 5(1), 119–149 (2012)

He, B., Yuan, X.: On the \(O(1/n)\) convergence rate of the Douglas–Rachford alternating direction method. SIAM J. Numer. Anal. 50(2), 700–709 (2012)

Hohage, T., Homann, C.: A generalization of the Chambolle–Pock algorithm to Banach spaces with applications to inverse problems. Technical report, arXiv:1412.0126 (2014)

Lorenz, D.A., Pock, T.: An inertial forward–backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51(2), 311–325 (2015)

Nemirovski, A.S.: Prox-method with rate of convergence \(O(1/t)\) for variational inequalities with Lipschitz continuous monotone operators and smooth convex–concave saddle point problems. SIAM J. Optim. 15(1), 229–251 (2004). (electronic)

Nemirovski, A.S., Yudin, D.: Problem Complexity and Method Efficiency in Optimization. Wiley, New York (1983). Translated from the Russian and with a preface by E.R. Dawson, Wiley-Interscience Series in Discrete Mathematics

Nesterov, Y.: Introductory Lectures on Convex Optimization. Applied Optimization, vol. 87. Kluwer Academic Publishers, Boston (2004). A basic course

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Opial, Z.: Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 73, 591–597 (1967)

Pesquet, J.-C., Repetti, A.: A class of randomized primal–dual algorithms for distributed optimization. arXiv:1406.6404 (2014)

Pock, T., Cremers, D., Bischof, H., Chambolle, A.: An algorithm for minimizing the Mumford-Shah functional. In: ICCV Proceedings, LNCS. Springer (2009)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877–898 (1976)

Shefi, R., Teboulle, M.: Rate of convergence analysis of decomposition methods based on the proximal method of multipliers for convex minimization. SIAM J. Optim. 24(1), 269–297 (2014)

Teboulle, M.: Entropic proximal mappings with applications to nonlinear programming. Math. Oper. Res. 17(3), 670–690 (1992)

Tseng, P.: On accelerated proximal gradient methods for convex–concave optimization (2008). SIAM J. Optim. http://www.csie.ntu.edu.tw/~b97058/tseng/papers/apgm. (submitted)

Vũ, B.C.: A splitting algorithm for dual monotone inclusions involving cocoercive operators. Adv. Comput. Math. 38(3), 667–681 (2013)

Acknowledgments

This research is partially supported by the joint ANR/FWF Project Efficient Algorithms for Nonsmooth Optimization in Imaging (EANOI) FWF n. I1148/ANR-12-IS01-0003. A.C. would like to thank his colleague S. Gaiffas for stimulating discussions, as well as J. Fadili for very helpful discussions on nonlinear proximity operators. This work also benefited from the support of the “Gaspard Monge Program in Optimization and Operations Research” (PGMO), supported by EDF and the Fondation Mathématique Jacques Hadamard (FMJH). The authors also need to thank the referees for their careful reading of the manuscript and their numerous helpful comments.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chambolle, A., Pock, T. On the ergodic convergence rates of a first-order primal–dual algorithm. Math. Program. 159, 253–287 (2016). https://doi.org/10.1007/s10107-015-0957-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-015-0957-3

Keywords

- Saddle-point problems

- First order algorithms

- Primal–dual algorithms

- Convergence rates

- Ergodic convergence