Abstract

We consider a risk-averse multi-stage stochastic program using conditional value at risk as the risk measure. The underlying random process is assumed to be stage-wise independent, and a stochastic dual dynamic programming (SDDP) algorithm is applied. We discuss the poor performance of the standard upper bound estimator in the risk-averse setting and propose a new approach based on importance sampling, which yields improved upper bound estimators. Modest additional computational effort is required to use our new estimators. Our procedures allow for significant improvement in terms of controlling solution quality in SDDP-style algorithms in the risk-averse setting. We give computational results for multi-stage asset allocation using a log-normal distribution for the asset returns.

1 Introduction

We formulate and solve a multi-stage stochastic program, which uses conditional value at risk (\(\mathrm{CVaR }\)) as the measure of risk. Our solution procedure is based on stochastic dual dynamic programming (SDDP), which has been employed successfully in a range of applications, exhibiting good computational tractability on large-scale problem instances; see, e.g., [10, 12, 13, 17, 31, 32].

There has been very limited application of SDDP to models with the type of risk measure that we use, which involves a form of \(\mathrm{CVaR }\) that is nested to ensure a notion of time consistency. See Ruszczynski [36] and Shapiro [40] for discussions of time-consistent risk measures in multi-stage stochastic optimization. A standard multi-stage recourse formulation has an additive form of expected utility. In this case, the usual upper bound estimator in SDDP algorithms is computed by solving subproblems along linear sample paths through the scenario tree, and the resulting computational effort is linear in the product of the number of stages and the number of samples. As we describe below, this type of estimator performs poorly for a model with a nested \(\mathrm{CVaR }\) risk measure, and this has hampered application of SDDP to such time-consistent risk-averse formulations.

We are aware of three solutions that have been proposed in the literature to circumvent the difficulty we have just described. First, we can solve a risk-neutral version of the problem instance under some suitable termination criterion, and then fix the number of iterations of SDDP to solve the risk-averse model under nested \(\mathrm{CVaR }\). Philpott and de Matos [29] report good computational experience with this approach. However, this leaves open the question of whether the same number of iterations is always appropriate for both risk-neutral and risk-averse model instances. Second, we can compute an upper bound estimator via the conditional sampling method of Shapiro [41]. However, the associated computational effort grows exponentially in the number of stages, and as Shapiro [41] discusses, the bound can be loose. Third, a non-statistical deterministic upper bound is proposed in Philpott et al. [30] based on using an inner approximation scheme. This approach is attractive in that it does not have sampling-based error, but as discussed in [30] the upper bound does not scale well as the number of state variables grows.

The purpose of this article is to propose, analyze, and computationally demonstrate a new upper bound estimator for SDDP algorithms under a nested \(\mathrm{CVaR }\) risk measure. The computational effort required to form our bound grows linearly in the number of time stages, the bound is not limited to models with a modest number of state variables, and the estimation procedure fits naturally within the standard SDDP framework. Moreover, our bound is significantly tighter than the estimator based on conditional sampling, which further facilitates the use of natural termination criteria; these are usually based on the difference between an upper bound estimator and the lower bound. That said, our estimation procedure is not turnkey. Rather, it requires specification of functions that can appropriately characterize the tail of the recourse function, as we formalize in our main results and as we illustrate with an asset allocation model.

SDDP originated in the work of Pereira and Pinto [26] and inspired a number of related algorithms [7, 10, 23, 28] that aim to improve its efficiency. The nested Benders’ decomposition algorithm [5], applied to a multi-stage stochastic program, requires computational effort that grows exponentially in the number of stages. SDDP-style algorithms have computational effort per iteration that instead grows linearly in the number of stages. To achieve this, SDDP algorithms rely on the assumption of stage-wise independence. That said, SDDP algorithms can also be applied in some special cases of additive interstage dependence, such as when an autoregressive process, or a dynamic linear model, governs the right-hand side vectors [9, 19]. SDDP-style algorithms extend to handle other types of interstage dependency, such as combining the usual finer grain SDDP dependency with a Markov chain governing a coarser grain state of the system [25, 29] or with a coarser grain scenario tree [32]. SDDP algorithms can also be coupled with a Markov decision process (MDP) and approximate dynamic programming employed on a scenario lattice for the MDP [24]. The ideas we present should be useful in these extensions of SDDP.

Other important algorithms designed to solve multi-stage stochastic programs include extensions of stochastic decomposition to the multi-stage case [16, 37] and progressive hedging [33]. While these algorithms have been developed in the risk-neutral setting, they extend in natural ways to handle the risk measure we consider here, with the caveat that the nested \(\mathrm{CVaR }\) expression can be exactly computed only when the scenario tree is of modest size. When sampling is required, these algorithms may also benefit from the type of estimator we propose.

Risk-averse stochastic optimization has received significant attention in recent years because of its attractive properties for decision makers. The properties required of coherent risk measures, introduced in Artzner et al. [2], are now widely accepted for time-static risk-averse optimization. Many risk measures are known to satisfy these properties; for an overview see, for instance, Krokhmal et al. [21]. A number of proposals have been put forward to extend coherent risk measures to handle multi-stage stochastic optimization. In the multi-stage case we seek a policy, which specifies a decision rule at every stage \(t\) for any realization of the stochastic process up to time \(t\). While there are multiple approaches, in addition to coherency, time consistency may be desired. This latter property states that optimal decisions at time \(t\) should not depend on future states of the system, which we already know cannot be realized, conditional on the state of the system at time \(t\). Despite the natural statement of this requirement, there are a variety of risk measures that fail to meet this condition. While it is beyond the scope of this article, the ideas behind our proposed estimators may also apply to models with \(\mathrm{CVaR }\)-style risk measures that do not satisfy time consistency; see Definition 3.29 of Pflug and Römisch [27], Example 6.43 of Shapiro et al. [38], and Example 3.11 of Eichhorn and Römisch [11].

The main approach to construct time-consistent risk measures involves so-called conditional risk measures, introduced in Ruszczynski and Shapiro [35]. The construction is based on nesting of the risk measures, conditional on the state of the system. While this ensures time consistency, it leads to the computational difficulties that we describe above. (See Philpott and de Matos [29] and Shapiro [41] for further discussion.) With time-consistent \(\mathrm{CVaR }\) chosen as the risk measure, we propose a new approach to upper bound estimation to overcome these computational difficulties.

We organize the remainder of this article as follows. We present our risk-averse multi-stage model in Sect. 2 and briefly review the SDDP algorithm in Sect. 3. Section 4 extends this description to the risk-averse case. We develop and analyze the proposed upper bound estimators in Sect. 5, and we provide computational results for two asset allocation models, both with and without transaction costs, in Sect. 6. We conclude and discuss ideas for future work in Sect. 7.

2 Multi-stage risk-averse model

We formulate a multi-stage stochastic program with a nested \(\mathrm{CVaR }\) risk measure in the same manner as Shapiro [41], largely following his notation. Hence, we provide a brief problem statement. The model has random parameters in stages \(t=2, \ldots ,T\), denoted \(\mathbf{\xi }_{t} =\left( \mathbf{c}_{t},\mathbf{A}_{t},\mathbf{B}_{t},\mathbf{b}_{t} \right) \), which are stage-wise independent and governed by a known, or well-estimated, distribution. The parameters of the first stage, \(\mathbf{\xi }_{1} =\left( \mathbf{c}_{1},\mathbf{A}_{1},\mathbf{b}_{1} \right) \), are assumed to be known when we make decision \(\mathbf{x}_{1}\), but only a probability distribution governing future realizations, \(\mathbf{\xi }_{2} , \ldots ,\mathbf{\xi }_{T}\), is assumed known. The realization of \(\mathbf{\xi }_{2}\) is known when decisions \(\mathbf{x}_{2}\) must be made and so on to stage \(T\). We denote the data process up to time \(t\) by \(\mathbf{\xi }_{\left[ t\right] }\), meaning \(\mathbf{\xi }_{\left[ t\right] } = \left( \mathbf{\xi }_{1} , \ldots ,\mathbf{\xi }_{t} \right) \).

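Our model allows specification of a different risk aversion coefficient and confidence level, denoted \(\lambda _{t}, \alpha _{t} \in \left[ 0, 1 \right] \), respectively, at each time stage, \(t=2, \ldots ,T\). In order to provide the nested formulation of the model, we introduce the following operator, which forms a weighted sum of expectation and risk associated with random loss \(Z\):

$$\begin{aligned} \rho _{t,\mathbf{\xi }_{[t-1]}}\left[ Z\right] = (1-\lambda _{t})\,\mathbb{E}\left[ Z \,\big |\, \mathbf{\xi }_{[t-1]}\right] + \lambda _{t}\,\mathrm{CVaR }_{\alpha _{t}}\left[ Z \,\big |\, \mathbf{\xi }_{[t-1]}\right] . \end{aligned}$$(1)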

In Eq. (1), \(\mathrm{CVaR }\) penalizes losses in the upper \(\alpha \) tail of \(Z\) with a typical value of \(\alpha \) being \(0.05\).
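For intuition, the operator in Eq. (1) can be evaluated on an equally weighted sample of losses. The sketch below is illustrative only: the function names `cvar` and `rho` are hypothetical, and CVaR is computed from the minimization form \(\mathrm{CVaR }_{\alpha }[Z] = \min _{u}\{u + \alpha ^{-1}\mathbb{E}[Z-u]_{+}\}\) by scanning the sample values, which suffices because that objective is convex and piecewise linear with breakpoints at the sample values.

```python
def cvar(losses, alpha):
    """CVaR_alpha of an equally weighted loss sample (upper alpha tail).

    Evaluates min_u { u + E[Z - u]_+ / alpha }. The objective is convex and
    piecewise linear with breakpoints at the sample values, so scanning those
    values finds the minimum (O(n^2), fine for illustration).
    """
    n = len(losses)

    def objective(u):
        return u + sum(max(z - u, 0.0) for z in losses) / (alpha * n)

    return min(objective(u) for u in losses)


def rho(losses, lam, alpha):
    """Mean-risk operator of Eq. (1): (1 - lam) E[Z] + lam CVaR_alpha[Z]."""
    mean = sum(losses) / len(losses)
    return (1.0 - lam) * mean + lam * cvar(losses, alpha)


losses = [float(k) for k in range(1, 21)]   # hypothetical losses 1, ..., 20
print(cvar(losses, alpha=0.1))              # mean of the two largest: 19.5
print(rho(losses, lam=0.5, alpha=0.1))      # 0.5 * 10.5 + 0.5 * 19.5 = 15.0
```

With \(\alpha =0.1\) and 20 equally likely losses, \(\mathrm{CVaR }\) is the average of the two largest losses, and \(\lambda =0.5\) weights it equally with the mean.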

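We can write the risk-averse multi-stage model with \(T\) stages in the following form:

$$\begin{aligned} \min _{\mathbf{x}_{1}} \mathbf{c}_{1}^{\top }\mathbf{x}_{1} + \rho _{2,\mathbf{\xi }_{[1]}}\left[ \min _{\mathbf{x}_{2}} \mathbf{c}_{2}^{\top }\mathbf{x}_{2} + \cdots + \rho _{T,\mathbf{\xi }_{[T-1]}}\left[ \min _{\mathbf{x}_{T}} \mathbf{c}_{T}^{\top }\mathbf{x}_{T}\right] \right] , \end{aligned}$$(2)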

where the first-, second-, and final stage minimizations are constrained by \(\mathbf{A}_{1}\mathbf{x}_{1}= \mathbf{b}_{1}, \mathbf{x}_{1} \ge 0\); \(\mathbf{A}_{2}\mathbf{x}_{2}=\mathbf{b}_{2}- \mathbf{B}_{2}\mathbf{x}_{1}, \mathbf{x}_{2} \ge 0\); and, \(\mathbf{A}_{T}\mathbf{x}_{T}=\mathbf{b}_{T} - \mathbf{B}_{T}\mathbf{x}_{T-1}, \mathbf{x}_{T} \ge 0\), respectively. We assume model (2) is feasible, has relatively complete recourse, and has a finite optimal value. The special case with \(\lambda _{t} = 0, t=2, \ldots ,T\), is risk-neutral because we then minimize expected cost.

Model (2) is distinguished from other possible approaches to characterizing risk by taking the risk measure as a function of the recourse value at each stage. This ensures time consistency of the risk measure. See Rudloff et al. [34] and Ruszczynski [36] for discussions of a conditional certainty-equivalent interpretation from utility theory for such nested formulations. A solution to model (2) is a policy, and time consistency means that the resulting policy has a natural interpretation that lends itself to implementation along a sample path with realizations that unfold sequentially.

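Our model, with the nested risk measure, admits a dynamic programming formulation, as described in [29, 41]. Using the definition of conditional value at risk

$$\begin{aligned} \mathrm{CVaR }_{\alpha }\left[ Z\right] = \min _{u} \left\{ u + \alpha ^{-1}\mathbb{E}\left[ Z - u\right] _{+}\right\} , \end{aligned}$$

where \([\, \cdot \,]_+ \equiv \max \{ \, \cdot \, , 0 \}\), we can write

$$\begin{aligned} \min _{\mathbf{x}_{1}, u_{1}} \quad & \mathbf{c}_{1}^{\top }\mathbf{x}_{1} + \lambda _{2}u_{1} + Q_{2}(\mathbf{x}_{1}, u_{1})\\ \text{s.t.} \quad & \mathbf{A}_{1}\mathbf{x}_{1} = \mathbf{b}_{1}, \; \mathbf{x}_{1} \ge 0. \end{aligned}$$(3)

The recourse value \(\mathsf {Q}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t})\) at stage \(t = 2, \ldots , T\) is given by:

$$\begin{aligned} \mathsf {Q}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t}) = \min _{\mathbf{x}_{t}, u_{t}} \quad & \mathbf{c}_{t}^{\top }\mathbf{x}_{t} + \lambda _{t+1}u_{t} + Q_{t+1}(\mathbf{x}_{t}, u_{t})\\ \text{s.t.} \quad & \mathbf{A}_{t}\mathbf{x}_{t} = \mathbf{b}_{t} - \mathbf{B}_{t}\mathbf{x}_{t-1}, \; \mathbf{x}_{t} \ge 0, \end{aligned}$$(4)

where

$$\begin{aligned} Q_{t+1}(\mathbf{x}_{t}, u_{t}) = \mathbb{E}\left[ (1-\lambda _{t+1})\,\mathsf {Q}_{t+1}(\mathbf{x}_{t}, \mathbf{\xi }_{t+1}) + \lambda _{t+1}\alpha _{t+1}^{-1}\left[ \mathsf {Q}_{t+1}(\mathbf{x}_{t}, \mathbf{\xi }_{t+1}) - u_{t}\right] _{+}\right] . \end{aligned}$$(5)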

We take \(Q_{T+1}(\cdot ) \equiv 0\) and \(\lambda _{T+1} \equiv 0\) so that the objective function of model (4) reduces to \(\mathbf{c}_{T} ^{\top }\mathbf{x}_{T}\) when \(t=T\).
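The auxiliary variable \(u_{t}\) in (3–5) reproduces the operator of Eq. (1): minimizing \(\lambda _{t+1}u_{t} + Q_{t+1}(\mathbf{x}_{t}, u_{t})\) over \(u_{t}\) gives \((1-\lambda _{t+1})\) times the mean plus \(\lambda _{t+1}\) times the \(\mathrm{CVaR }_{\alpha _{t+1}}\) of the stage \(t+1\) recourse value. A minimal numerical check for a fixed \(\mathbf{x}_{t}\), assuming equally likely descendants with hypothetical recourse values in the list `values` (function names are also hypothetical):

```python
def q_next(values, u, lam, alpha):
    """Sample-average version of Eq. (5) for a fixed x_t: `values` holds the
    recourse values Q_{t+1}(x_t, xi) at equally likely descendants."""
    n = len(values)
    return sum((1.0 - lam) * v + lam * max(v - u, 0.0) / alpha
               for v in values) / n


def best_var_level(values, lam, alpha):
    """Minimize lam * u + q_next(u) over u. The function is convex and piecewise
    linear in u with breakpoints at the values, so a scan over them suffices.
    Returns (optimal objective, a minimizing u)."""
    return min((lam * u + q_next(values, u, lam, alpha), u) for u in values)


values = [float(k) for k in range(1, 21)]
obj, u_star = best_var_level(values, lam=0.5, alpha=0.1)
print(obj)   # 15.0, i.e. 0.5 * mean + 0.5 * CVaR_0.1 of the values
```

The minimizing \(u\) lands at an empirical \((1-\alpha )\)-quantile of the values, which is why \(u_{t}\) can be read as a value-at-risk level.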

In contrast to a multi-stage formulation rooted in expected utility, our multi-stage model with \(\mathrm{CVaR }\) has an additional decision variable, \(u_{t}\), which estimates the value-at-risk level. The recourse value at stage \(t\) depends on \(\mathbf{\xi }_{t}\) rather than \(\mathbf{\xi }_{\left[ t\right] }\), because we assume the process to be stage-wise independent. After introducing the auxiliary variables, \(u_{t}\), the problem seems to be converted to the simpler case, involving only expectations of an additive utility. This impression may lead to the false conclusion that a traditional SDDP-style algorithm can be applied. The nested nonlinearity arising from the positive-part function precludes this, as we illustrate in the next example.

Example 1

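Suppose we incur random costs \(Z_2\) in the second stage and \(Z_3\) in the third stage. Then under an additive utility with contribution \(u_t(\cdot )\) in stage \(t\), we have:

$$\begin{aligned} \mathbb{E}\left[ u_{2}(Z_{2}) + \mathbb{E}\left[ u_{3}(Z_{3}) \,|\, Z_{2}\right] \right] = \mathbb{E}\left[ u_{2}(Z_{2})\right] + \mathbb{E}\left[ u_{3}(Z_{3})\right] . \end{aligned}$$

However, this additive form does not hold under \(\mathrm{CVaR }\). While we can write the composite risk measure as

$$\begin{aligned} \mathrm{CVaR }_{\alpha }\left[ Z_{2} + \mathrm{CVaR }_{\alpha }\left[ Z_{3} \,|\, Z_{2}\right] \right] , \end{aligned}$$

the composite risk measure does not lend itself to further simplification. Subadditivity of \(\mathrm{CVaR }\) yields

$$\begin{aligned} \mathrm{CVaR }_{\alpha }\left[ Z_{2} + \mathrm{CVaR }_{\alpha }\left[ Z_{3} \,|\, Z_{2}\right] \right] \le \mathrm{CVaR }_{\alpha }\left[ Z_{2}\right] + \mathrm{CVaR }_{\alpha }\left[ \mathrm{CVaR }_{\alpha }\left[ Z_{3} \,|\, Z_{2}\right] \right] , \end{aligned}$$

which only bounds the risk measure and, even then, the composite measure still requires evaluation.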
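A small numerical instance makes Example 1 concrete. The sketch below assumes a hypothetical two-branch tree in which the stage 3 cost depends on the stage 2 outcome, and uses \(\alpha =0.5\) so every number can be checked by hand. The expectations telescope across stages, whereas the nested \(\mathrm{CVaR }\) differs from \(\mathrm{CVaR }_{\alpha }[Z_2] + \mathrm{CVaR }_{\alpha }[Z_3]\) and only satisfies the subadditivity bound.

```python
def cvar(values, probs, alpha):
    """CVaR_alpha of a discrete distribution: min_u { u + E[Z - u]_+ / alpha }.
    The minimum is attained at one of the support points."""
    return min(u + sum(p * max(v - u, 0.0) for v, p in zip(values, probs)) / alpha
               for u in values)


def mean(values, probs):
    return sum(v * p for v, p in zip(values, probs))


alpha = 0.5
half = [0.5, 0.5]
z2 = [0.0, 10.0]                        # stage 2 cost, each w.p. 1/2
z3_given_z2 = {0.0: [0.0, 10.0],        # stage 3 cost given Z2 = 0
               10.0: [0.0, 30.0]}       # stage 3 cost given Z2 = 10

# Nested (time-consistent) risk: CVaR[Z2 + CVaR[Z3 | Z2]].
inner = [z + cvar(z3_given_z2[z], half, alpha) for z in z2]
nested = cvar(inner, half, alpha)

# Stage-wise sum CVaR[Z2] + CVaR[Z3], with Z3 unconditional (4 outcomes w.p. 1/4).
z3_all = [v for z in z2 for v in z3_given_z2[z]]
separate = cvar(z2, half, alpha) + cvar(z3_all, [0.25] * 4, alpha)

# Subadditivity bound: CVaR[Z2] + CVaR[CVaR[Z3 | Z2]].
bound = cvar(z2, half, alpha) + cvar([cvar(z3_given_z2[z], half, alpha) for z in z2],
                                     half, alpha)

# The analogous comparison for expectations coincides (tower property).
nested_mean = mean([z + mean(z3_given_z2[z], half) for z in z2], half)
separate_mean = mean(z2, half) + mean(z3_all, [0.25] * 4)

print(nested, separate, bound)     # 40.0 30.0 40.0
print(nested_mean, separate_mean)  # 15.0 15.0
```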

It is for the reasons illustrated in Example 1 that Philpott and de Matos [29] and Shapiro [41] point to the lack of a good upper bound estimator for model (2) when the problem has more than a very small number of stages. The natural conditional sampling estimator, discussed in [29, 41], has computational effort that grows exponentially in the number of stages. The following example points to a second issue associated with estimating \(\mathrm{CVaR }\).

Example 2

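Consider the following estimator of \(\mathrm{CVaR }_{\alpha }\left[ Z\right] \), where \(Z^1,Z^2,\ldots ,Z^{M}\) are independent and identically distributed (i.i.d.) from the distribution of \(Z\):

$$\begin{aligned} \widehat{\mathrm{CVaR }}_{\alpha }\left[ Z\right] = \min _{u} \left\{ u + \frac{1}{\alpha M}\sum _{i=1}^{M}\left[ Z^{i} - u\right] _{+}\right\} . \end{aligned}$$

If \(\alpha =0.05\), only about 5 % of the samples contribute nonzero values to this estimator of \(\mathrm{CVaR }\): at the minimizing \(u\), an empirical \((1-\alpha )\)-quantile of the sample, roughly 95 % of the terms \([Z^{i}-u]_{+}\) vanish.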
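The imbalance described in Example 2 is easy to observe by simulation. The sketch below assumes standard normal losses (a hypothetical choice; any continuous distribution behaves the same way), evaluates the estimator at its minimizing \(u\), the empirical \((1-\alpha )\)-quantile, and reports the fraction of samples whose positive-part term is nonzero.

```python
import math
import random


def cvar_estimate(samples, alpha):
    """Sample CVaR estimator min_u { u + sum_i [Z^i - u]_+ / (alpha M) },
    evaluated at its minimizer u = empirical (1 - alpha)-quantile.
    Returns the estimate and the fraction of samples with Z^i > u."""
    z = sorted(samples)
    M = len(z)
    k = max(math.ceil((1.0 - alpha) * M) - 1, 0)   # index of the empirical VaR
    u = z[k]
    tail = [zi - u for zi in z if zi > u]          # the only nonzero terms
    return u + sum(tail) / (alpha * M), len(tail) / M


rng = random.Random(42)
samples = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
estimate, active = cvar_estimate(samples, alpha=0.05)
# CVaR_0.05 of N(0, 1) is about 2.063; only about 5% of the samples are active.
print(round(estimate, 3), active)
```

The remaining 95 % of the samples affect the estimate only through the location of \(u\).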

The inefficiency pointed to in Example 2 compounds the computational challenges of forming a conditional sampling estimator of \(\mathrm{CVaR }\) in the multi-stage setting. When forming an estimator of our risk measure from Eq. (1), this inefficiency means that, say, 95 % of the samples are devoted to only estimating expected cost and the remaining 5 % contribute to estimating both \(\mathrm{CVaR }\) and expected cost. In what follows we propose an approach to upper bound estimation in the context of SDDP that rectifies this imbalance and has computational requirements that grow gracefully with the number of stages. Before turning to our estimator, we discuss SDDP and its application to our risk-averse formulation in the next two sections.

3 Stochastic dual dynamic programming

We use stochastic dual dynamic programming to approximately solve model (2). SDDP does not operate directly on model (2). Instead, we first form a sample average approximation (SAA) of model (2), and SDDP approximately solves that SAA. Thus in our context SDDP forms estimators by sampling within an empirical scenario tree. We describe below how we form the empirical scenario tree. Then in the remainder of this article we restrict attention to solving that SAA via SDDP. See Shapiro [39] for a discussion of asymptotics of SAA for multi-stage problems, Philpott and Guan [28] for convergence properties of SDDP, and Chiralaksanakul and Morton [8] for procedures to assess the quality of SDDP-based policies.

Again, we assume \(\mathbf{\xi }_{t}, t= 2 , \ldots ,T\), to be stage-wise independent. We further assume that for each stage \(t= 2 , \ldots ,T\) there is a known (possibly continuous) distribution \(P_{t}\) of \(\mathbf{\xi }_{t}\) and that we have a procedure to sample i.i.d. observations from this distribution. Using this procedure we obtain a single empirical distribution for each stage, denoted \(\hat{P}_{t}, t= 2 , \ldots ,T\), and the associated empirical scenario tree is interstage independent. The scenarios generated by this procedure are equally probable, but this is not required by SDDP.

Distribution \(\hat{P}_{t}\) has \(D_{t}\) realizations and stage \(t\) has \(N_{t}\) scenarios, where \(N_{t} = \prod _{i=2}^{t} D_{i}\). Fully general forms of interstage dependency lead to inherent computational intractability as even the memory requirements to store a general sampled scenario tree grow exponentially in the number of stages, but several tractable dependency structures are discussed in Sect. 1.

We let \(\hat{\varOmega }_{t}\) denote the stage \(t\) sample space, where \(|\hat{\varOmega }_{t}|=N_{t}\). We use \(j_{t} \in \hat{\varOmega }_{t}\) to denote a stage \(t\) sample point, which we call a stage \(t\) scenario. We define the mapping \(a(j_{t}) : \hat{\varOmega }_{t} \rightarrow \hat{\varOmega }_{t-1}\), which specifies the unique stage \(t-1\) ancestor for the stage \(t\) scenario \(j_{t}\). Similarly, we use \(\varDelta (j_{t}) : \hat{\varOmega }_{t} \rightarrow 2^{\hat{\varOmega }_{t+1}}\) to denote the set of descendant nodes for \(j_{t}\), where \(|\varDelta (j_{t})|=D_{t+1}\). The empirical scenario tree therefore has stage \(t\) realizations denoted \(\mathbf{\xi }_{t}^{j_{t}}, j_{t} \in \hat{\varOmega }_{t}\). At the last stage, we have \(\mathbf{\xi }_{T}^{j_{T}}, j_{T} \in \hat{\varOmega }_{T}\), and each stage \(T\) scenario corresponds to a full path of observations through each stage of the scenario tree. That is, given \(j_{T}\), we recursively have \(j_{t-1} = a(j_{t})\) for \(t=T,T-1,\ldots ,2\). For this reason and for notational simplicity, when possible, we suppress the stage \(T\) subscript and denote \(j_{T} \in \hat{\varOmega }_{T}\) by \(j_{} \in \hat{\varOmega }_{}\).
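Because the empirical tree is interstage independent, it never needs to be stored node by node. A stage \(t\) scenario \(j_{t}\) can be encoded by the tuple of realization indices it selects at stages \(2,\ldots ,t\), so that \(a(\cdot )\) and \(\varDelta (\cdot )\) become tuple operations. The sketch below assumes this encoding, draws each \(\hat{P}_{t}\) from a log-normal distribution with hypothetical parameters (echoing the asset-return setting of Sect. 6), and uses hypothetical function names throughout.

```python
import random
from math import prod


def sample_empirical_stages(D, mu=0.005, sigma=0.04, seed=0):
    """Draw D[t] i.i.d. gross returns for each stage t (hypothetical log-normal
    parameters); xi[t][k] is the k-th realization of the stage t empirical
    distribution, each with probability 1 / D[t]."""
    rng = random.Random(seed)
    return {t: [rng.lognormvariate(mu, sigma) for _ in range(D[t])] for t in D}


def num_scenarios(D, t):
    """N_t = prod_{i=2}^t D_i."""
    return prod(D[i] for i in range(2, t + 1))


def ancestor(j_t):
    """a(j_t): a stage t scenario is a tuple of indices for stages 2..t."""
    return j_t[:-1]


def descendants(j_t, D):
    """Delta(j_t): the D_{t+1} stage t+1 scenarios that extend j_t."""
    t = len(j_t) + 1                     # len(j_t) == t - 1
    return [j_t + (k,) for k in range(D[t + 1])]


def realization(j_t, xi):
    """xi_t^{j_t}: with interstage independence only the last index matters."""
    t = len(j_t) + 1
    return xi[t][j_t[-1]]


D = {2: 3, 3: 4, 4: 2}                   # D_t for stages 2..4 (T = 4)
xi = sample_empirical_stages(D)
print(num_scenarios(D, 4))               # 24
print(descendants((1,), D))              # [(1, 0), (1, 1), (1, 2), (1, 3)]
print(ancestor((1, 2, 0)))               # (1, 2)
```

Only \(\sum _t D_{t}\) realizations are stored, even though the tree has \(N_{T}\) stage \(T\) scenarios.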

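We briefly describe SDDP to give sufficient context for our results. For further details on SDDP, see [26, 41]. The simplest SDDP algorithm applies to the risk-neutral version of our model, which means setting \(\lambda _{t} = 0\) for \(t=2 , \ldots ,T\) in Eq. (1) and model (2) or equivalently in (3–5). We denote the recourse value for the risk-neutral version of our model by \(\mathsf {Q}^{N}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t})\), which, for \(t=2,\ldots ,T\), is given by:

$$\begin{aligned} \mathsf {Q}^{N}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t}) = \min _{\mathbf{x}_{t}} \quad & \mathbf{c}_{t}^{\top }\mathbf{x}_{t} + Q^{N}_{t+1}(\mathbf{x}_{t})\\ \text{s.t.} \quad & \mathbf{A}_{t}\mathbf{x}_{t} = \mathbf{b}_{t} - \mathbf{B}_{t}\mathbf{x}_{t-1}, \; \mathbf{x}_{t} \ge 0, \end{aligned}$$(6)

where

$$\begin{aligned} Q^{N}_{t+1}(\mathbf{x}_{t}) = \mathbb{E}\left[ \mathsf {Q}^{N}_{t+1}(\mathbf{x}_{t}, \mathbf{\xi }_{t+1})\right] , \end{aligned}$$(7)

and where \(Q^{N}_{T+1}(\cdot ) \equiv 0\). The risk-neutral formulation is completed via model (3) with \(\lambda _2=0\) and \(Q_{2}(\mathbf{x}_{1}, u_{1})\) replaced by \(Q^{N}_{2}(\mathbf{x}_{1})\).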

During a typical iteration of SDDP, cuts have been accumulated at each stage. These represent a piecewise linear outer approximation of the expected future cost function, \(Q^{N}_{t+1}(\mathbf{x}_{t})\). On a forward pass we sample a number of linear paths through the tree. As we solve a sequence of master programs (which we specify below) along these forward paths, the cuts are used to form decisions at each stage. Solutions found along a forward path in this way form a policy, which does not anticipate the future. The sample mean of the costs incurred along all the forward sampled paths through the tree forms an estimator of the expected cost of the current policy.

In the backward pass of the algorithm, we add cuts to the collection defining the current approximation of the expected future cost function at each stage. We do this by solving subproblems at the descendant nodes of each node in the linear paths from the forward pass, except in the final stage, \(T\). The cuts collected at any node in stage \(t\) apply to all the nodes in that stage, and hence we maintain a single set of cuts for each stage. We let \(C_{t}\) denote the number of cuts accumulated so far in stage \(t\).

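Model (8) acts as a master program for its stage \(t+1\) descendant scenarios and as a subproblem for its stage \(t-1\) ancestor:

$$\begin{aligned} \hat{\mathsf {Q}}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t}) = \min _{\mathbf{x}_{t}, \theta _{t}} \quad & \mathbf{c}_{t}^{\top }\mathbf{x}_{t} + \theta _{t} && \text{(8a)}\\ \text{s.t.} \quad & \mathbf{A}_{t}\mathbf{x}_{t} = \mathbf{b}_{t} - \mathbf{B}_{t}\mathbf{x}_{t-1} \;:\; \mathbf{\pi }_{t} && \text{(8b)}\\ & \theta _{t} \ge \hat{Q}_{t+1}^{j} + (\mathbf{\mathsf {g}}_{t+1}^{j})^{\top }(\mathbf{x}_{t} - \mathbf{x}_{t}^{j}), \quad j=1,\ldots ,C_{t} && \text{(8c)}\\ & \mathbf{x}_{t} \ge 0, && \text{(8d)} \end{aligned}$$

where \(\mathbf{x}_{t}^{j}\) denotes the stage \(t\) solution at which cut \(j\) was generated.

Decision variable \(\theta _{t}\) in the objective function (8a), coupled with cut constraints in (8c), forms the outer linearization of the recourse function \(Q^{N}_{t+1}(\mathbf{x}_{t})\) from model (6) and Eq. (7). The structural and nonnegativity constraints in (8b) and (8d) simply repeat the same constraints from model (6). In the final stage \(T\), we omit the cut constraints and the \(\theta _{T}\) variable. While we could append an “\(N\)” superscript on terms like \(\hat{\mathsf {Q}}_{t}, \hat{Q}_{t+1}^{j}, \mathbf{\mathsf {g}}_{t+1}^{j}\), etc., we suppress this index for notational simplicity.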

As we indicate in constraint (8b), we use \(\mathbf{\pi }_{t}\) to denote the dual vector associated with the structural constraints. Let \(j_{t}\) denote a stage \(t\) scenario from a sampled forward path. With \(\mathbf{x}_{t-1}= \mathbf{x}_{t-1}^{a(j_{t})}\) and with \(\mathbf{\xi }_{t}=\mathbf{\xi }_{t}^{j_{t}}\) in model (8), we refer to that model as \(\mathrm{sub }({j_{t}})\). Given model \(\mathrm{sub }({j_{t}})\) and its solution \(\mathbf{x}_{t}\), we form one new cut constraint at stage \(t\) for each backward pass of the SDDP algorithm as follows. We form and solve \(\mathrm{sub }({j_{t+1}})\), where \(j_{t+1} \in \varDelta (j_{t})\) indexes all descendant nodes of \(j_{t}\). This yields optimal values \(\hat{\mathsf {Q}}_{t+1}^{j_{t+1}}(\mathbf{x}_{t})\) and dual solutions \(\mathbf{\pi }_{t+1}^{j_{t+1}}\) for \(j_{t+1} \in \varDelta (j_{t})\). We then form

$$\begin{aligned} \mathbf{g}_{t+1}^{j_{t+1}} = -\left( \mathbf{B}_{t+1}^{j_{t+1}}\right) ^{\top }\mathbf{\pi }_{t+1}^{j_{t+1}}, \end{aligned}$$(9)

where \(\mathbf{g}_{t+1}^{j_{t+1}} =\mathbf{g}_{t+1}^{j_{t+1}} (\mathbf{x}_{t})\) is a subgradient of \(\hat{\mathsf {Q}}_{t+1}^{j_{t+1}} =\hat{\mathsf {Q}}_{t+1}^{j_{t+1}}(\mathbf{x}_{t})\). The cut is then obtained by averaging over the descendants:

$$\begin{aligned} \hat{Q}_{t+1} = \frac{1}{D_{t+1}}\sum _{j_{t+1} \in \varDelta (j_{t})} \hat{\mathsf {Q}}_{t+1}^{j_{t+1}}, \end{aligned}$$(10)

$$\begin{aligned} \mathbf{\mathsf {g}}_{t+1} = \frac{1}{D_{t+1}}\sum _{j_{t+1} \in \varDelta (j_{t})} \mathbf{g}_{t+1}^{j_{t+1}}. \end{aligned}$$(11)

As we indicate above, \(\hat{\mathsf {Q}}_{t+1}=\hat{\mathsf {Q}}_{t+1}(\mathbf{x}_{t})\) and \(\mathbf{g}_{t+1}= \mathbf{g}_{t+1}(\mathbf{x}_{t})\), and hence \(\hat{Q}_{t+1}= \hat{Q}_{t+1}(\mathbf{x}_{t})\) and \(\mathbf{\mathsf {g}}_{t+1} = \mathbf{\mathsf {g}}_{t+1}(\mathbf{x}_{t})\), but we suppress this dependency for notational simplicity. We also suppress the \(j_{t}\) index on \(\hat{Q}_{t+1}\) and \(\mathbf{\mathsf {g}}_{t+1}\) because we append a new cut to the stage \(t\) collection of cuts, and do not associate it with a particular stage \(t\) subproblem. For simplicity in stating the SDDP algorithm below, we assume we have known lower bounds \(L_t\) on the recourse functions.
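A minimal sketch of the cut computation in Eqs. (9)–(11), assuming equally likely descendants whose subproblems have already been solved, so that each supplies its optimal value and the dual vector of its structural constraints \(\mathbf{A}\mathbf{x} = \mathbf{b} - \mathbf{B}\mathbf{x}_{t}\). By LP duality, \(-\mathbf{B}^{\top }\mathbf{\pi }\) is then a subgradient of the optimal value with respect to \(\mathbf{x}_{t}\). Matrices are plain lists of rows, the function names are hypothetical, and in practice an LP solver would supply `values` and `duals`.

```python
def subgradient(pi, B):
    """-B^T pi: subgradient w.r.t. x_t of the optimal value of a descendant
    subproblem whose structural constraints read A x = b - B x_t (dual pi)."""
    return [-sum(B[i][k] * pi[i] for i in range(len(B))) for k in range(len(B[0]))]


def average_cut(values, duals, Bs, x_hat):
    """Average values and subgradients over D_{t+1} equally likely descendants.

    Returns (intercept, slope) for the cut theta_t >= intercept + slope . x_t,
    which is tight at the trial point x_hat."""
    D = len(values)
    q_bar = sum(values) / D
    grads = [subgradient(pi, B) for pi, B in zip(duals, Bs)]
    slope = [sum(g[k] for g in grads) / D for k in range(len(x_hat))]
    intercept = q_bar - sum(s * x for s, x in zip(slope, x_hat))
    return intercept, slope


identity = [[1.0, 0.0], [0.0, 1.0]]
intercept, slope = average_cut(values=[10.0, 6.0],
                               duals=[[1.0, 2.0], [3.0, 0.0]],
                               Bs=[identity, identity],
                               x_hat=[1.0, 1.0])
print(intercept, slope)   # 11.0 [-2.0, -1.0]
```

Storing the cut in intercept form is equivalent to (8c) and avoids keeping the trial point \(\mathbf{x}_{t}^{j}\) for each cut.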

Algorithm 1

Stochastic dual dynamic programming algorithm

1. Let iteration \(k=1\) and append lower bounding cuts \( \theta _{t} \ge L_t, t=1 , \ldots ,T-1\).

2. Solve the stage \(1\) master program (\(t=1\)) and obtain \(\mathbf{x}_{1}^{k}, \theta _{1}^{k}\). Let \(\underline{z}_{k} = \mathbf{c}_{1} ^{\top }\mathbf{x}_{1}^{k} + \theta _{1}^{k}\).

3. Forward pass: sample i.i.d. paths from \(\hat{\varOmega }_{}\) and index them by \(S^{k}\). Along each path \(j \in S^{k}\), solve \(\mathrm{sub }({j_{t}})\) for \(t=2,\ldots ,T\) to obtain \(\left( \mathbf{x}_{t}^{j_{t}}\right) ^{k}\). Form the upper bound estimator:

$$\begin{aligned} \overline{z}_{k} = \mathbf{c}_{1} ^{\top }\mathbf{x}_{1}^{k} + \frac{1}{|S^{k}|}\sum _{j_{} \in S^{k}} \sum _{t=2}^{T} (\mathbf{c}_{t}^{j_{t}}) ^{\top }\left( \mathbf{x}_{t}^{j_{t}}\right) ^{k}. \end{aligned}$$(12)

4. If a stopping criterion, given \(\overline{z}_{k}\) and \(\underline{z}_{k}\), is satisfied then stop and output first stage solution \(\mathbf{x}_{1}= \mathbf{x}_{1}^{k}\) and lower bound \(\underline{z}_{}=\underline{z}_{k}\).

5. Backward pass: for \(t=T-1,\ldots ,1\) and each path \(j \in S^{k}\), solve \(\mathrm{sub }({j_{t+1}})\) for every \(j_{t+1} \in \varDelta (j_{t})\), form a cut via Eqs. (9)–(11), and append it to the stage \(t\) collection of cuts.

6. Let \(k= k+ 1\) and go to step 2.

See Bayraksan and Morton [4] and Homem-de-Mello et al. [17] for stopping rules that can be employed in step 4.
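Estimator (12) is a sample mean of total path costs. The sketch below assumes each forward path's stage costs \((\mathbf{c}_{t}^{j_{t}})^{\top }\mathbf{x}_{t}^{j_{t}}\) have been recorded, and pairs the estimator with an approximate 95 % normal confidence interval and a simple gap test against \(\underline{z}_{k}\). The gap test is a hypothetical illustration only, not one of the stopping rules of [4, 17]; the function names are also hypothetical.

```python
import math
import statistics


def upper_bound_estimate(first_stage_cost, path_costs):
    """Estimator (12): c_1' x_1 plus the sample mean, over forward paths, of the
    summed stage 2..T costs. path_costs[s] lists (c_t^{j_t})' x_t^{j_t}, t = 2..T.
    Also returns the half-width of an approximate 95% normal confidence interval."""
    totals = [first_stage_cost + sum(costs) for costs in path_costs]
    mean = statistics.fmean(totals)
    half_width = 1.96 * statistics.stdev(totals) / math.sqrt(len(totals))
    return mean, half_width


def gap_is_small(z_lower, mean, half_width, tol):
    """Hypothetical stopping test: the upper end of the interval for the policy
    cost lies within tol of the lower bound z_lower."""
    return (mean + half_width) - z_lower <= tol


mean, hw = upper_bound_estimate(1.0, [[2.0, 3.0], [4.0, 1.0], [3.0, 3.0]])
print(round(mean, 3), round(hw, 3))     # 6.333 0.653
```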

4 Risk-averse approach

We must modify the SDDP algorithm of Sect. 3 to handle the risk-averse model of Sect. 2. The auxiliary variables \(u_{t}\) now play a role both in computing the cuts and in determining the policy from the master programs. In the modified SDDP algorithm we select the \(\mathrm{VaR }\) level, \(u_{t}\), along with our stage \(t\) decisions, \(\mathbf{x}_{t}\), and then solve the subproblems at the descendant nodes. The \(\mathrm{VaR }\) level influences the value of the recourse function estimate and therefore is included in the cuts, in the same way as any other decision variable. Extending the development from the previous section, the stage \(t\) subproblem in the risk-averse case is given by:

$$\begin{aligned} \hat{\mathsf {Q}}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t}) = \min _{\mathbf{x}_{t}, u_{t}, \theta _{t}} \quad & \mathbf{c}_{t}^{\top }\mathbf{x}_{t} + \lambda _{t+1}u_{t} + \theta _{t}\\ \text{s.t.} \quad & \mathbf{A}_{t}\mathbf{x}_{t} = \mathbf{b}_{t} - \mathbf{B}_{t}\mathbf{x}_{t-1} \;:\; \mathbf{\pi }_{t}\\ & \theta _{t} \ge \hat{Q}_{t+1}^{j} + (\mathbf{\mathsf {g}}_{t+1}^{j})^{\top }\begin{pmatrix} \mathbf{x}_{t} - \mathbf{x}_{t}^{j}\\ u_{t} - u_{t}^{j}\end{pmatrix}, \quad j=1,\ldots ,C_{t}\\ & \mathbf{x}_{t} \ge 0, \end{aligned}$$(13)

where \((\mathbf{x}_{t}^{j}, u_{t}^{j})\) is the point at which cut \(j\) was generated; as in model (8), the cut constraints and the \(\theta _{T}\) variable are omitted in the final stage \(T\).

While \(\hat{\mathsf {Q}}_{t+1}{}=\hat{\mathsf {Q}}_{t+1}{}(\mathbf{x}_{t})\), we now have \(\hat{Q}_{t+1}^{} = \hat{Q}_{t+1}^{}(\mathbf{x}_{t}, u_{t})\) and \(\mathbf{\mathsf {g}}_{t+1}^{} = \mathbf{\mathsf {g}}_{t+1}^{}(\mathbf{x}_{t}, u_{t})\) as a function of both the stage \(t\) decision and the \(\mathrm{VaR }\) level. The subgradient of \(\hat{\mathsf {Q}}_{t+1}{}(\mathbf{x}_{t})\) is still computed by Eq. (9). However, the function value \(\hat{Q}_{t+1}^{}(\mathbf{x}_{t}, u_{t})\) and its subgradient have to be adjusted to respect the differences between the risk-neutral and risk-averse cases. See Shapiro [41] for the formulas that replace Eqs. (10) and (11) to obtain \(\hat{Q}_{t+1}\) and \(\mathbf{\mathsf {g}}_{t+1}\). In modifying the SDDP algorithm for the risk-averse formulation, these new cut computations are used in the backward pass of step 5 of Algorithm 1 to provide the piecewise linear outer approximation of \(Q_{t+1}(\mathbf{x}_{t}, u_{t})\). Shapiro et al. [42] describe an approach in which the VaR level does not appear explicitly in model (13). However, as we describe below, our upper bound estimators require explicit VaR levels.

One issue that remains concerns evaluation of an upper bound; i.e., an analog of estimator (12) for the risk-averse setting. As we illustrate in Example 1, we cannot expect an analogous additive estimator to be appropriate for the risk-averse setting. Example 1 suggests that to compute the conditional risk measure, we should start at the last stage and recurse back to the first stage to obtain an estimator of the risk measure evaluated at a policy. This differs significantly from the risk-neutral case, where the costs incurred at any stage can be estimated just by averaging costs at sampled nodes. Starting at the final stage, \(T\), our cost under scenario \(j_{T}\) is \((\mathbf{c}_{T}^{j_{T}}) ^{\top }\mathbf{x}_{T}^{j_{T}}\). For the stage \(T-1\) ancestor scenario \(j_{T-1}=a(j_{T})\) we must calculate

$$\begin{aligned} (\mathbf{c}_{T-1}^{j_{T-1}})^{\top }\mathbf{x}_{T-1}^{j_{T-1}} + \lambda _{T}u_{T-1}^{j_{T-1}} + Q_{T}(\mathbf{x}_{T-1}^{j_{T-1}}, u_{T-1}^{j_{T-1}}). \end{aligned}$$

The question that remains is how to estimate \(Q_T(\mathbf{x}_{T-1}^{j_{T-1}}, u_{T-1}^{j_{T-1}})\). We maintain a parallel with the estimator in the risk-neutral version of the SDDP algorithm in the sense that we estimate this term using the value of one descendant scenario along the corresponding forward path in step 3 of Algorithm 1. This means that based on Eq. (5) we estimate \(Q_T(\mathbf{x}_{T-1}^{j_{T-1}}, u_{T-1}^{j_{T-1}})\) by

$$\begin{aligned} \hat{\mathsf {v}}_{T}(\mathbf{\xi }_{T-1}^{j_{T-1}}) = (1-\lambda _{T})(\mathbf{c}_{T}^{j_{T}})^{\top }\mathbf{x}_{T}^{j_{T}} + \lambda _{T}\alpha _{T}^{-1}\left[ (\mathbf{c}_{T}^{j_{T}})^{\top }\mathbf{x}_{T}^{j_{T}} - u_{T-1}^{j_{T-1}}\right] _{+}. \end{aligned}$$

Removing the expectation operator in Eq. (5) and following the recursion of the objective function in model (4) and Eq. (5) yields the following recursive estimator of \(Q_t(\mathbf{x}_{t-1}^{j_{t-1}}, u_{t-1}^{j_{t-1}})\) for \(t=2 , \ldots ,T\):

$$\begin{aligned} \hat{\mathsf {v}}_{t}(\mathbf{\xi }_{t-1}^{j_{t-1}}) =\; & (1-\lambda _{t})\left[ (\mathbf{c}_{t}^{j_{t}})^{\top }\mathbf{x}_{t}^{j_{t}} + \lambda _{t+1}u_{t}^{j_{t}} + \hat{\mathsf {v}}_{t+1}(\mathbf{\xi }_{t}^{j_{t}})\right] \\ & + \lambda _{t}\alpha _{t}^{-1}\left[ (\mathbf{c}_{t}^{j_{t}})^{\top }\mathbf{x}_{t}^{j_{t}} + \lambda _{t+1}u_{t}^{j_{t}} + \hat{\mathsf {v}}_{t+1}(\mathbf{\xi }_{t}^{j_{t}}) - u_{t-1}^{j_{t-1}}\right] _{+}, \end{aligned}$$(14)

where \(\hat{\mathsf {v}}_{T+1}(\mathbf{\xi }_{T}^{j_{T}}) \equiv 0\). We denote the estimator for sample path \(j\) by

$$\begin{aligned} \hat{\mathsf {v}}(\mathbf{\xi }^{j}) = \mathbf{c}_{1}^{\top }\mathbf{x}_{1} + \lambda _{2}u_{1} + \hat{\mathsf {v}}_{2}(\mathbf{\xi }_{1}^{j_{1}}). \end{aligned}$$(15)

Because the first stage parameters, \(\mathbf{\xi }_{1}^{j_{1}}\), are deterministic, we simply write \(\hat{\mathsf {v}}_{2}=\hat{\mathsf {v}}_{2}(\mathbf{\xi }_{1}^{j_{1}})\), dropping its argument.

Having selected scenario \(j\) and solved all node subproblems (13) associated with realizations \(\mathbf{\xi }_{1}^{j_{1}} , \ldots ,\mathbf{\xi }_{T}^{j_{T}}\) along the sample path, we form the estimator recursively as follows. We start at the stage \(T-1\) node, compute \(\hat{\mathsf {v}}_{T}(\mathbf{\xi }_{T-1}^{j_{T-1}})\), substitute it into formula (14) for \(t=T-1\) to obtain \(\hat{\mathsf {v}}_{T-1}(\mathbf{\xi }_{T-2}^{j_{T-2}})\), and so on until we obtain \(\hat{\mathsf {v}}_{2}\) and hence can compute the value of \(\hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{j})\) via Eq. (15). Then if \(\mathbf{\xi }^{j}, j=1, \ldots ,M\), are i.i.d. sample paths, sampled from the scenario tree’s empirical distribution as in step 3 in Algorithm 1, the corresponding upper bound estimator is given by:

$$\begin{aligned} \overline{z}^{\mathbf {n}} = \frac{1}{M}\sum _{j=1}^{M} \hat{\mathsf {v}}(\mathbf{\xi }^{j}). \end{aligned}$$(16)

We use the “\(\mathbf {n}\)” superscript to indicate that we use naive Monte Carlo sampling here, and to distinguish it from estimators we develop below.
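The backward recursion (14)–(15) along a single forward path, and the naive estimator (16) that averages it over paths, can be sketched as follows. The sketch assumes the forward pass has recorded the stage costs \((\mathbf{c}_{t}^{j_{t}})^{\top }\mathbf{x}_{t}^{j_{t}}\) and VaR levels \(u_{t}^{j_{t}}\) for \(t=1,\ldots ,T\); lists are indexed by stage with index 0 unused, and the function names are hypothetical.

```python
def path_estimate(costs, u, lam, alpha):
    """Recursive estimator (14)-(15) along one forward path.

    costs[t] = c_t' x_t and u[t] = VaR level chosen at stage t, t = 1..T;
    lam[t] and alpha[t] are the stage t parameters, t = 2..T. Index 0 unused.
    """
    T = len(costs) - 1
    v_next, lam_next = 0.0, 0.0          # v_{T+1} = 0 and lambda_{T+1} = 0
    for t in range(T, 1, -1):            # t = T, T-1, ..., 2
        stage_value = costs[t] + lam_next * u[t] + v_next
        v_next = ((1.0 - lam[t]) * stage_value
                  + lam[t] / alpha[t] * max(stage_value - u[t - 1], 0.0))
        lam_next = lam[t]
    return costs[1] + lam_next * u[1] + v_next   # Eq. (15); lam_next is lam[2]


def naive_upper_bound(paths):
    """Estimator (16): average of path_estimate over i.i.d. forward paths."""
    return sum(path_estimate(*p) for p in paths) / len(paths)


path = ([None, 1.0, 2.0, 3.0],         # stage costs, T = 3
        [None, 4.0, 1.0, 0.0],         # VaR levels u_t (u_T is never used)
        [None, None, 0.5, 0.5],        # lambda_t
        [None, None, 0.5, 0.5])        # alpha_t
print(path_estimate(*path))            # 8.0
```

Setting every \(\lambda _{t}=0\) collapses the recursion to the plain sum of stage costs, i.e., to the path term of estimator (12).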

We can attempt to use estimator (16), which is the natural analog of (12), to solve the risk-averse problem. Unfortunately, this estimator has large variance. The main shortcoming of this estimator lies in the imbalance in sampled scenarios we point to in Example 2 coupled with the policy now specifying an approximation of the value-at-risk level via \(u_{t-1}\). If the descendant node has value less than \(u_{t-1}\) then the positive-part term in Eq. (14) is zero. When the opposite occurs, the difference between the node value and \(u_{t-1}\) is multiplied by \(\alpha _{t}^{-1}\), which can lead to a large value of the estimator because a typical value of \(\alpha _{t}^{-1}\) is 20. When \(\hat{\mathsf {v}}_{t}(\mathbf{\xi }_{t-1}^{j_{t-1}})\) is large, this increases the likelihood that preceding values are also large and hence multiplied by \(\alpha _{t-1}^{-1}, \alpha _{t-2}^{-1}, \ldots \) many more times in the backward recursion. This leads to a highly variable estimator which is of little practical use, particularly when \(T\) is not small.
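To see the compounding effect numerically, consider a deliberately simple hypothetical model with no decisions: every stage cost is an independent standard normal variable, \(\lambda _{t}=\lambda \), \(\alpha _{t}=\alpha \), and each \(u_{t-1}\) is set to the exact VaR of the stage \(t\) value, so the policy's VaR levels are as good as possible. The toy's exact objective value is \((T-1)\lambda \,\mathrm{CVaR }_{\alpha }\) of a standard normal, but the quantity of interest here is the spread of the naive path estimates for a short and a longer horizon. All names and parameter values are illustrative assumptions.

```python
import random
import statistics
from statistics import NormalDist


def naive_path_values(T, lam=0.5, alpha=0.05, M=5000, seed=1):
    """Naive estimator (14)-(15) on a hypothetical decision-free toy model:
    stage costs c_t ~ N(0, 1) i.i.d., lambda_t = lam, alpha_t = alpha."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1.0 - alpha)           # VaR_alpha of N(0, 1)
    cvar_c = NormalDist().pdf(z) / alpha            # CVaR_alpha of N(0, 1)
    # K_t: constant part of the exact stage t value (c_t + K_t) in this toy.
    K = {t: (T - t) * lam * cvar_c for t in range(2, T + 1)}
    u = {t: z + K[t + 1] for t in range(1, T)}      # exact VaR of stage t+1 value
    u[T] = 0.0                                      # unused since lambda_{T+1} = 0
    out = []
    for _ in range(M):
        v_next, lam_next = 0.0, 0.0
        for t in range(T, 1, -1):                   # one sampled descendant per stage
            s = rng.gauss(0.0, 1.0) + lam_next * u[t] + v_next
            v_next = (1.0 - lam) * s + lam / alpha * max(s - u[t - 1], 0.0)
            lam_next = lam
        out.append(lam * u[1] + v_next)             # first-stage cost is 0
    return out


for T in (3, 6):
    vals = naive_path_values(T)
    print(T, round(statistics.fmean(vals), 2), round(statistics.stdev(vals), 2))
```

Even in this benign setting the sample standard deviation should grow sharply with \(T\), because every exceedance of \(u_{t-1}\) is amplified by \(\lambda /\alpha \) and then fed into the next stage of the recursion.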

To overcome the issues we have just discussed, Shapiro [41] describes an estimator which uses more nodes to estimate the recourse value. This estimator for a 3-stage problem is obtained by sampling, and solving subproblems associated with i.i.d. realizations \(\mathbf{\xi }_{2}^{1}, \ldots ,\mathbf{\xi }_{2}^{M_{2}}\) from the second stage and for each of these solving subproblems to estimate the future risk measure using i.i.d. realizations \(\mathbf{\xi }_{3}^{1}, \ldots ,\mathbf{\xi }_{3}^{M_{3}}\) from the third stage. This requires solving subproblems at a total of \(M_{2}M_{3}\) nodes. More generally under this approach, given a stage \(T-1\) scenario \(\mathbf{\xi }_{T-1}^{j_{T-1}}\) we estimate the recourse function value by:

For stages \( t = 2 , \ldots ,T - 1 \) we have:

and finally for the upper bound estimator we compute:

Shapiro [41] discusses two significant problems with the upper bound estimator (18). First, the estimator requires solving a number of subproblems, \(\prod _{t=2}^T M_{t}\), that grows exponentially in the number of stages (hence the “\(\mathbf {e}\)” superscript), so it is impractical unless \(T\) is small. Second, as we examine further in Sect. 6, even when we can afford to compute the bound provided by (18), the bound is not very tight. For these reasons, estimator (18) is not typically used in practice.
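The difference in effort is easy to quantify. The sketch below counts subproblems, assuming hypothetically \(M_{t} = 50\) conditional samples per stage for estimator (18), where every node of the sampled subtree is solved, and 1000 forward paths for a path-based estimator such as (16); the function names are hypothetical.

```python
from math import prod


def conditional_sampling_count(M):
    """Subproblems solved by the conditional sampling estimator (18), where
    M = [M_2, ..., M_T]: every node of the sampled subtree, i.e.
    sum_t prod_{i=2}^t M_i. The last term, prod_t M_t, dominates."""
    return sum(prod(M[:k]) for k in range(1, len(M) + 1))


def path_based_count(num_paths, T):
    """Subproblems solved along num_paths linear paths: one per stage 2..T."""
    return num_paths * (T - 1)


for T in (3, 5, 7):
    print(T, conditional_sampling_count([50] * (T - 1)), path_based_count(1000, T))
```

The path-based count grows linearly in \(T\), while the conditional sampling count is multiplied by roughly \(M_{t}\) with each added stage.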

Philpott and de Matos [29] mention another approach. They avoid computing an upper bound for the risk-averse model by first solving the risk-neutral version of the problem, in which we can compute reliable upper bound estimators and hence employ a reasonable termination criterion. When the SDDP algorithm stops we fix the number of iterations needed to satisfy the termination criterion. We then form the risk-averse model and run the SDDP algorithm, without evaluating an upper bound estimator. The solution and corresponding lower bound obtained after that fixed number of iterations are considered the algorithm’s output. However, this approach has some pitfalls. It is unclear that the number of iterations for the risk-averse model should be the same as in the risk-neutral case, because the shape of the cost-to-go functions differs. This approach gives us no guarantees on the quality of the solution and requires that we run the SDDP algorithm twice.

To our knowledge, the most effective procedure currently available to compute an upper bound is proposed by Philpott et al. [30]. They develop an inner approximation scheme that provides a candidate policy and a deterministic upper bound on the policy’s value, using a convex combination of feasible policies. This bound provides significantly better results than estimator (18), and it does not have sampling error. However, as Philpott et al. [30] discuss, its main drawback is that the computational effort increases rapidly in the dimension of the decision variables. The Monte Carlo estimators we propose tend to scale more gracefully with dimension. We further discuss the approach of [30] in Sect. 6.

When we restrict attention to statistical upper bound estimators, three approaches are available at this point: the two upper bound estimators (16) and (18) for the risk-averse case, and the heuristic of solving the risk-neutral model to determine the stopping iteration. In our view, all three are unsatisfactory. We are forced either to use loose upper bounds that scale poorly and give very weak guarantees on solution quality, or to use an approach that provides no guarantee on solution quality, even though reasonable empirical performance has been reported in the literature. In the next section we propose a new upper bound estimator to overcome these difficulties. Our estimator scales better with the number of stages and can yield greater precision than previous approaches.

5 Improved upper bound estimation

We overcome the shortcomings of the upper bound estimators (16) and (18) by first focusing on the main issue causing the estimators to be poor: A relatively small fraction of the sampled scenario-tree nodes contribute to estimating \(\mathrm{CVaR }\), for reasons we illustrate in Example 2. To sample in a better manner we assume that for every stage, \(t = 2 , \ldots ,T\), we can cheaply evaluate a real-valued approximation function, \(h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\), which estimates the recourse value of our decisions \(\mathbf{x}_{t-1}\) after the random parameters \(\mathbf{\xi }_{t}\) have been observed.

The functions \(h_{t}\) play a central role in our proposal for sampling descendant nodes. Rather than solving linear programs at a large number of descendant nodes, as is done in estimator (18), we instead evaluate \(h_{t}\) at these nodes and then sort the nodes based on their values. This guides sampling of the nodes to estimate \(\mathrm{CVaR }\). Having such a function \(h_{t}\) means that once we observe the random outcome for stage \(t\), we have some means of distinguishing “good” and “bad” decisions at stage \(t-1\) without knowledge of subsequent random events in stages \(t+1 , \ldots ,T\). Sometimes this is possible via an approximation of the recourse value associated with the system’s state. For example, in some asset allocation models we may use current wealth to define \(h_{t}\).

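Our sampling procedure forms an empirical scenario tree with equally-weighted scenarios and discrete empirical distributions \(\hat{P}_{t}, t= 2 , \ldots ,T\). The probability mass function (pmf) governing the conditional probability of the descendant nodes from any stage \(t-1\) node is given by:

\[ f_{t}(\mathbf{\xi }_{t}) = \frac{1}{D_{t}} \sum _{i=1}^{D_{t}} \mathbf {I}{\left[ \mathbf{\xi }_{t} = \mathbf{\xi }_{t}^{i}\right] }, \quad t = 2 , \ldots ,T, \tag{19} \]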

where the indicator function \(\mathbf {I}{\left[ \cdot \right] }\) takes value 1 if its argument is true and 0 otherwise.

We propose a sampling scheme based on importance sampling. The scheme depends on the current state of the system, giving rise to a new pmf, which we denote \(g_{t}(\mathbf{\xi }_{t}|\mathbf{x}_{t-1})\). This pmf is tailored specifically for use with \(\mathrm{CVaR }\). Alternative pmfs would be needed to apply the proposed ideas to other risk measures. Given the current state of the system we can compute the value at risk for our approximation function, \(u_{h}= \mathrm{VaR }_{\alpha _t}\left[ h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\right] \), and partition the nodes corresponding to \({\mathbf{\xi }}_{t}^{1}, \ldots ,{\mathbf{\xi }}_{t}^{D_{t}}\) into two groups by comparing their approximate value to \(u_{h}\). In particular, the importance sampling pmf is:

where the \(\lfloor \cdot \rfloor \) operator rounds down to the nearest integer. The factor of \(\frac{1}{2}\) in the pmf \(g_{t}(\mathbf{\xi }_{t}|\mathbf{x}_{t-1})\) modifies the probability masses so that we are equally likely to draw sample observations above and below \(u_{h}= \mathrm{VaR }_{\alpha _t}\left[ h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\right] \). We choose \(\frac{1}{2}\) for simplicity, but in general this factor could be tailored to the values of the confidence levels, \(\alpha _t\), and risk aversion coefficients, \(\lambda _{t}\).
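The following Python sketch illustrates one natural reading of this scheme, under stated assumptions: \(h_{t}\) has already been evaluated at the \(D_{t}\) equally likely descendants, \(u_{h}\) is taken as the smallest value with \(\mathbf {P}[h_{t} \le u_{h}] \ge 1-\alpha _{t}\), and mass \(\frac{1}{2}\) is spread uniformly over each of the two groups. The function names are hypothetical, and the exact pmf (20), including its use of \(\lfloor \cdot \rfloor \), may differ in detail.

```python
import math

def var_equal_weights(h_values, alpha):
    """Smallest u with P[h <= u] >= 1 - alpha when all nodes are equally likely."""
    ordered = sorted(h_values)
    d = len(ordered)
    # Smallest k (1-based) with k/d >= 1 - alpha; the 1e-9 guards against
    # floating-point round-off in (1 - alpha) * d.
    k = max(1, math.ceil((1.0 - alpha) * d - 1e-9))
    return ordered[k - 1]

def importance_pmf(h_values, alpha):
    """Hypothetical two-group pmf: mass 1/2 spread uniformly over nodes with
    h >= u_h and mass 1/2 spread uniformly over nodes with h < u_h."""
    u_h = var_equal_weights(h_values, alpha)
    upper = [h >= u_h for h in h_values]
    n_up = sum(upper)                  # at least 1, since u_h is one of the values
    n_low = len(h_values) - n_up
    if n_low == 0:                     # every node is in the upper group (ties)
        return [1.0 / n_up] * n_up
    return [0.5 / n_up if up else 0.5 / n_low for up in upper]

h = [float(i) for i in range(20)]
g = importance_pmf(h, 0.05)
print(var_equal_weights(h, 0.05), g[19], g[0])
```

For example, with 20 nodes whose approximate values are 0, 1, …, 19 and \(\alpha _{t} = 5\,\%\), we get \(u_{h} = 18\), so the two largest nodes each receive probability 1/4 and the other eighteen each receive 1/36, whereas under the empirical pmf each node has probability 1/20.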

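As in any importance sampling scheme, we can compute the required expectation under our new measure via

\[ \mathbf {E}_{f_{t}}\left[ Z\right] = \mathbf {E}_{g_{t}}\left[ Z \, \frac{f_{t}(\mathbf{\xi }_{t})}{g_{t}(\mathbf{\xi }_{t}|\mathbf{x}_{t-1})}\right] \]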

for any random variable \(Z\) for which the expectations exist. If the expectation is taken across the distributions for all \(T\) stages we denote the analogous operators by \(\mathbf {E}_{f_{}}\left[ \cdot \right] \) and \(\mathbf {E}_{g_{}}\left[ \cdot \right] \).

We can form an estimator similar to (16), except that we employ our importance sampling distributions, \(g_{t}\), in place of the empirical distributions, \(f_{t}\), in the forward pass of SDDP when selecting the sample paths. In particular, given a single sample path from stage 1 to stage \(T\), \(\mathbf{\xi }^{j}\), we form estimator (15), which uses recursion (14) and preserves the good scalability of the estimator with the number of stages. We do this for a set of sample paths drawn under the new measure \(g_{t}\).

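Thus we have weights for each stage of

\[ w_{t}(\mathbf{\xi }_{t}|\mathbf{x}_{t-1}) = \frac{f_{t}(\mathbf{\xi }_{t})}{g_{t}(\mathbf{\xi }_{t}|\mathbf{x}_{t-1})}, \quad t=2 , \ldots ,T, \]

which yields weights along a sample path of

\[ w(\mathbf{\xi }) = \prod _{t=2}^{T} w_{t}(\mathbf{\xi }_{t}|\mathbf{x}_{t-1}), \]

and an estimator of the form

\[ \frac{1}{M} \sum _{j=1}^{M} w(\mathbf{\xi }^{j})\, \hat{\mathsf {v}}_{}(\mathbf{\xi }^{j}). \]

This estimator is a weighted sum of the upper bounds (15) for the sampled scenarios. Normalizing the weights reduces the variability of the estimator (see Hesterberg [15]) and yields:

\[ U^\mathbf{{i}} = \frac{\sum _{j=1}^{M} w(\mathbf{\xi }^{j})\, \hat{\mathsf {v}}_{}(\mathbf{\xi }^{j})}{\sum _{j=1}^{M} w(\mathbf{\xi }^{j})}, \tag{21} \]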

where “\(\mathbf{i}\)” indicates that the estimator uses importance sampling. We summarize the development so far in the following proposition.
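As a sanity check on the weighting, the Python sketch below applies path weights (products of stage ratios \(f_{t}/g_{t}\)) and the normalized-weight estimator to a hypothetical toy with two independent stages whose nodes carry fixed costs. Unlike the general scheme, its \(g_{t}\) does not depend on \(\mathbf{x}_{t-1}\), and the names `path_weight` and `self_normalized_estimate` are illustrative only.

```python
import math
import random

def path_weight(f_probs, g_probs):
    """Likelihood ratio of one sampled path: product over stages of f_t / g_t."""
    return math.prod(f / g for f, g in zip(f_probs, g_probs))

def self_normalized_estimate(values, weights):
    """Normalized-weight estimator: sum_j w_j v_j / sum_j w_j."""
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)

# Hypothetical toy: stages t = 2, 3, each with D equally likely nodes carrying a fixed
# cost c[i]; g puts mass 1/2 on the two costliest nodes and 1/2 on the other 18.
D = 20
rng = random.Random(7)
c = sorted(rng.uniform(0.0, 1.0) for _ in range(D))
f = [1.0 / D] * D
g = [0.5 / 18] * 18 + [0.5 / 2] * 2
exact = 2 * sum(fi * ci for fi, ci in zip(f, c))   # E_f[c(xi_2) + c(xi_3)]

M = 100_000
values, weights = [], []
for _ in range(M):
    i2, i3 = rng.choices(range(D), weights=g, k=2)   # one path drawn under g
    values.append(c[i2] + c[i3])
    weights.append(path_weight([f[i2], f[i3]], [g[i2], g[i3]]))

estimate = self_normalized_estimate(values, weights)
print(f"exact {exact:.4f}  normalized IS estimate {estimate:.4f}")
```

Because \(\mathbf {E}_{g}\) of the path weight equals one, the denominator of the normalized estimator averages close to \(M\); dividing by the realized weight sum rather than by \(M\) is what distinguishes the normalized form from the plain weighted average.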

Proposition 1

Assume model (2) has relatively complete recourse and interstage independence. Let \(z^{*}\) denote the optimal value of model (2) under the empirical distributions, \(\hat{P}_{t}, t= 2 , \ldots ,T\), generated by i.i.d. sampling. Let \(\mathbf{\xi }\) denote a sample path selected under the empirical distribution, and let \(\hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\) be defined by (15) for that sample path. Then \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \ge z^{*}\). Furthermore if \(\mathbf{\xi }^{j}, j=1, \ldots ,M\), are i.i.d. and generated by the pmfs (20) and \(U^\mathbf{{i}}\) is defined by (21) then \(U^\mathbf{{i}}\rightarrow \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \), w.p.1, as \(M \rightarrow \infty \).

Proof

The optimal value of model (2), as reformulated in model (3), is \(z^{*}\). Along sample path \(\mathbf{\xi }\), under the assumption of relatively complete recourse, the cuts in subproblems (13) generate a feasible policy in the space of the \((\mathbf{x}_{t}, u_{t})\) variables. When the expectation operator in equation (5) is removed, the recursion associated with the objective function of model (4) and equation (5) coincides with the recursion in equation (14). Because this policy is feasible but not necessarily optimal, taking expectations yields \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \ge z^{*}\).

By the law of large numbers we have that

For \(\mathbf{\xi }\) generated by the empirical pmfs (19) and for each \(\mathbf{\xi }^{j}\), generated by the pmfs (20), we have

Thus by the law of large numbers we have

From the definition of \(U^\mathbf{{i}}\) from (21) we have

Using a converging-together result with Eqs. (22) and (23) we then have

as \(M \rightarrow \infty \). \(\square \)

In the sense made precise in Proposition 1, estimator (21) provides an asymptotic upper bound on the optimal value of model (2). The naive estimator \(U^\mathbf{{n}}\) of (16) is an unbiased and consistent estimator of \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \). However, if the functions \(h_{t}\) provide a good approximation, in the sense that they order the state of the system in the same way as the recourse function, we anticipate that \(U^\mathbf{{i}}\) will have smaller variance than \(U^\mathbf{{n}}\). That said, we view estimator (21) as an intermediate step to an improved estimator. Under an additional assumption, the estimator can be improved significantly. We now consider a stricter assumption, with the simplified notation, \(\mathsf {Q}_{t} = \mathsf {Q}_{t}(\mathbf{x}_{t-1}, \mathbf{\xi }_{t})\) and \( h_{t} = h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\).

Assumption 1

For every stage \(t = 2 , \ldots ,T\) and decision \(\mathbf{x}_{t-1}\) the approximation function \( h_{t}\) satisfies:

Under Assumption 1, we can strengthen the estimator through a reformulation. Given a sample path \(\mathbf{\xi }\) we modify the recursive estimator (14) for \(t=2, \ldots ,T\) as:

where \(\hat{\mathsf {v}}_{T+1}^\mathbf{h}(\mathbf{\xi }_{T}^{j_{T}}) \equiv 0\), and we let

We note that, like the estimators \(U^\mathbf{{n}}\) and \(U^\mathbf{{i}}\), which are based on (14) and (15), the estimator we propose next, based on Eqs. (24) and (25), requires explicit estimation of the VaR level through the \(u_{t-1}\) decision variables. With \(\mathbf{\xi }^{j}, j=1, \ldots ,M\), i.i.d. from the pmfs (20), we form the upper bound estimator:

Proposition 2

Assume the hypotheses of Proposition 1, let \(\mathbf{\xi }\) denote a sample path selected under the empirical distribution, let \(\hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\) be defined by (25) for that sample path, and let Assumption 1 hold. Then \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\right] \ge z^{*}\). If \(\mathbf{\xi }^{j}, j=1, \ldots ,M\), are i.i.d. and generated by the pmfs (20) and \(U^\mathbf{h}\) is defined by (26) then \(U^\mathbf{h}\rightarrow \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\right] \), w.p.1, as \(M \rightarrow \infty \). Furthermore if subproblems (13) induce the same policy for both \(\hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\) and \(\hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\) then \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \ge \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\right] \).

Proof

Let \((\mathbf{x}_{1}, u_{1}) , \ldots ,(\mathbf{x}_{T-1}, u_{T-1}), \mathbf{x}_{T}\) be the feasible sequence for models (3) and (4), \(t=2 , \ldots ,T\), specified by (13) along sample path \(\mathbf{\xi }\). The result \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \ge \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\right] \) holds because \(\mathbf {I}{\left[ h_{t} \ge \mathrm{VaR }_{\alpha _{t}}\left[ h_{t}\right] \right] }\) can preclude some positive terms in the recursion (24) that are included in (14).

The terms in (24b) are used to estimate \(\mathrm{CVaR }\). Thus to establish the rest of the proposition it suffices to show:

because the rest of the proof then follows in the same fashion as that of Proposition 1.

First consider the case in which \(u_{t-1} \ge \mathrm{VaR }_{\alpha _{t}}\left[ \mathsf {Q}_{t}\right] \). We have:

where the inequality follows from \(\mathrm{CVaR }\)’s definition as the optimal value of a minimization problem, the first equality holds because the indicator has no effect when \(u_{t-1} \ge \mathrm{VaR }_{\alpha _{t}}\left[ \mathsf {Q}_{t}\right] \), and the last equality follows from Assumption 1.

For the case when \(u_{t-1} < \mathrm{VaR }_{\alpha _{t}}\left[ \mathsf {Q}_{t}\right] \) we first drop the positive part operator, because that is handled by the indicator, and write:

where the inequality holds because \(\mathbf {P}_{}\left[ \mathsf {Q}_{t} \ge \mathrm{VaR }_{\alpha _{t}}\left[ \mathsf {Q}_{t}\right] \right] \ge \alpha _{t}\) and \(u_{t-1} < \mathrm{VaR }_{\alpha _{t}}\left[ \mathsf {Q}_{t}\right] \). (Note that we would instead have \(\mathbf {P}_{}\left[ \mathsf {Q}_{t} \ge \mathrm{VaR }_{\alpha _{t}}\left[ \mathsf {Q}_{t}\right] \right] = \alpha _{t}\) if we were in the continuous case.) This completes the proof as the desired result holds in both cases. \(\square \)

As Proposition 2 indicates, \(U^\mathbf{h}\) provides an asymptotic upper bound estimator for the optimal value of model (2). Provided the “induce the same policy” hypothesis holds, it also provides a tighter upper bound in expectation than estimators \(U^\mathbf{{n}}\) and \(U^\mathbf{{i}}\), and we anticipate that it will have smaller variance than \(U^\mathbf{{i}}\). The same-policy hypothesis is not needed for the proposition’s consistency result. As we discuss in Sect. 4, when a sample path is such that the positive-part term in (14) is positive, that term is multiplied by \(\alpha _{t}^{-1}\) (e.g., \(\alpha _{t}^{-1}=20\) for \(\alpha _{t}=5\,\%\)), and this increases the likelihood that as we decrement \(t\) we obtain large values that are repeatedly multiplied by \(\alpha _{t-1}^{-1}, \alpha _{t-2}^{-1}\), etc. This repeated multiplication should occur for some samples, but it can also occur when it should not. The indicator function in \(U^\mathbf{h}\) helps avoid this issue and hence tends to reduce variance.

Under Assumption 1, the approximation function, \(h_{t}\), characterizes the recourse value in that it fully classifies whether a realization is in the upper \(\alpha \) tail of the recourse values. We now weaken Assumption 1 to incorporate the notion of what we call a margin function, \( m_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\), in order for our type of upper bound estimator to address a broader class of stochastic programs. Under Assumption 2, given below, the margin function is sufficient to classify a realization as not being in the upper \(\alpha \) tail of the recourse values. It accomplishes this by effectively lowering the threshold that approximates the upper tail and has the effect of increasing the number of descendant scenarios that contribute to the positive-part CVaR term.

Assumption 2

For every stage \(t = 2 , \ldots ,T\) and decision \(\mathbf{x}_{t-1}\) we have real-valued functions \(h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\) and \( m_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\) which satisfy:

Given a sample path \(\mathbf{\xi }\) we modify the recursive estimators (14) and (24) for \(t=2, \ldots ,T\) as:

where \(\hat{\mathsf {v}}_{T+1}^\mathbf{m}(\mathbf{\xi }_{T}^{j_{T}}) \equiv 0\), and we let

With \(\mathbf{\xi }^{j}, j=1, \ldots ,M\), i.i.d. from the pmfs (20), which use functions \(h_{t}\), we form the upper bound estimator:

Again, note that we do not modify the importance sampling procedure here to use the margin value. The sampling scheme still relies on the \(\mathrm{VaR }_{\alpha _{t}}\left[ h_{t}\right] \) level of the approximation function via the pmfs (20). However, the estimator based on (28) is more generally applicable than the estimator based on (25) because we can drop Assumption 1 and instead require only the weaker implication of Assumption 2.

Proposition 3

Assume the hypotheses of Proposition 1, let \(\mathbf{\xi }\) denote a sample path selected under the empirical distribution, let \(\hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\) be defined by (28) for that sample path, and let Assumption 2 hold. Then \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \ge z^{*}\). If \(\mathbf{\xi }^{j}, j=1, \ldots ,M\), are i.i.d. and generated by the pmfs (20) and \(U^\mathbf{m}\) is defined by (29) then \(U^\mathbf{m}\rightarrow \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \), w.p.1, as \(M \rightarrow \infty \). Furthermore if subproblems (13) induce the same policy for both \(\hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\) and \(\hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\) then \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \ge \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \). Finally, if Assumption 1 also holds and subproblems (13) induce the same policy for both \(\hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\) and \(\hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\) then \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \ge \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\right] \).

Proof

We have:

where the first inequality comes from following the steps of the proof of Proposition 2 (in both of the cases considered) and the second inequality follows from Assumption 2. From this we have \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \ge z^{*}\), and the consistency result for \(U^\mathbf{m}\) follows in the same manner as in the proof of Proposition 1. Inequality \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}(\mathbf{\xi }_{}^{})\right] \ge \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \) holds because \(\mathbf {I}{\left[ h_{t} \ge m_{t}\right] }\) can preclude some positive terms in the recursion (27) that are included in (14). Finally, \(\mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{m}(\mathbf{\xi }^{})\right] \ge \mathbf {E}_{f_{}}\left[ \hat{\mathsf {v}}_{}^\mathbf{h}(\mathbf{\xi }_{}^{})\right] \) holds because under Assumptions 1 and 2 the indicator \(\mathbf {I}{\left[ h_{t} \ge m_{t}\right] }\) allows inclusion of some positive terms that the indicator \(\mathbf {I}{\left[ h_{t} \ge \mathrm{VaR }_{\alpha _{t}}\left[ h_{t}\right] \right] }\) does not. \(\square \)

To ensure that \(U^\mathbf{h}\) is a valid upper bound estimator, we require an approximation function that fully orders states of the system in the sense of Assumption 1, and this limits the applicability of the estimator in some cases. Assumption 2 considerably weakens this requirement and widens the applicability of estimator \(U^\mathbf{m}\). While \(U^\mathbf{m}\) again provides an asymptotic upper bound estimator for the optimal value of model (2), the price we pay is that, as Proposition 3 indicates under the same-policy hypothesis, its bound is weaker (larger in expectation) than that of \(U^\mathbf{h}\).
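The ordering in Propositions 2 and 3 can be illustrated at a single stage. The Python sketch below uses made-up recourse values and sets \(\lambda _{t}=1\), so only the \(\mathrm{CVaR }\) term remains; it evaluates \(u + \alpha ^{-1}\) times the mean of \((\mathsf {Q}_{t}-u)^{+}\) with no indicator, with the indicator \(\mathbf {I}[h_{t} \ge \mathrm{VaR }_{\alpha }[h_{t}]]\), and with a margin indicator \(\mathbf {I}[h_{t} \ge m]\). Here \(h_{t}\) is an increasing transform of \(\mathsf {Q}_{t}\), so it ranks nodes as Assumption 1 requires, and the constant threshold \(m \le \mathrm{VaR }_{\alpha }[h_{t}]\) is a hypothetical stand-in for a margin function.

```python
import math
import random

def var_equal_weights(values, alpha):
    """Smallest u with P[value <= u] >= 1 - alpha, atoms equally likely."""
    ordered = sorted(values)
    k = max(1, math.ceil((1.0 - alpha) * len(ordered) - 1e-9))
    return ordered[k - 1]

def cvar_objective(q, u, alpha, keep=None):
    """u + (1/alpha) * mean over atoms of (q_i - u)^+ * keep_i.

    keep=None gives the usual Rockafellar-Uryasev function, whose minimum is CVaR.
    """
    if keep is None:
        keep = [True] * len(q)
    tail = sum(max(qi - u, 0.0) for qi, kept in zip(q, keep) if kept)
    return u + tail / (alpha * len(q))

# Hypothetical single-stage data: 20 equally likely recourse values Q_t.
rng = random.Random(1)
alpha = 0.05
q = [rng.gauss(0.0, 1.0) for _ in range(20)]
h = [2.0 * qi + 1.0 for qi in q]   # increasing in Q, so it ranks nodes as Q does
u_h = var_equal_weights(h, alpha)
m = sorted(h)[10]                  # hypothetical margin threshold, below u_h
keep_h = [hi >= u_h for hi in h]   # indicator I[h >= VaR[h]]
keep_m = [hi >= m for hi in h]     # indicator I[h >= m], keeps more nodes

# The lower (1 - alpha)-quantile minimizes the Rockafellar-Uryasev function.
cvar = cvar_objective(q, var_equal_weights(q, alpha), alpha)

def bounds_at(u):
    """(indicator version, margin version, plain version) at trial VaR level u."""
    return (cvar_objective(q, u, alpha, keep_h),
            cvar_objective(q, u, alpha, keep_m),
            cvar_objective(q, u, alpha))

grid = sorted(q) + [min(q) - 1.0, max(q) + 1.0]
for u in grid:
    phi_h, phi_m, plain = bounds_at(u)
    print(f"u={u:+.3f}  CVaR={cvar:.4f}  h={phi_h:.4f}  m={phi_m:.4f}  plain={plain:.4f}")
```

For every trial level \(u\) the three quantities stay at or above \(\mathrm{CVaR }\) and satisfy indicator version \(\le \) margin version \(\le \) plain version, mirroring the two cases in the proof of Proposition 2; at \(u = \mathrm{VaR }_{\alpha }[\mathsf {Q}_{t}]\) the indicator version equals \(\mathrm{CVaR }\).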

For the types of approximation and margin functions (\(h_{t}\) and \(m_{t}\)) that we envision, our importance-sampling estimators (\(U^\mathbf{{i}}, U^\mathbf{h}\), and \( U^\mathbf{m}\)) require modest additional computation relative to estimator \(U^\mathbf{{n}}\), which uses samples from the empirical pmfs (19). In particular, with \(D_{t}\) denoting the number of stage \(t\) descendant nodes formed in the sampling procedure, the bulk of the additional computation consists of evaluating \(h_{t}\) and \(m_{t}\) at each of these \(D_{t}\) nodes and determining \(u_{h}= \mathrm{VaR }_{\alpha _{t}}\left[ h_{t}\right] \), which can be done by sorting with effort \(O(D_{t} \log D_{t})\) or in time linear in \(D_{t}\) (see [6]). This effort is small compared with solving linear programs for modest values of \(D_{t}\), particularly recalling that in SDDP’s backward pass we must solve linear programs at all \(D_{t}\) nodes to compute a cut. Furthermore, in Sects. 3–5 we have simplified the presentation by using an SDDP tree with equally-likely realizations; however, our ideas generalize in a straightforward manner to general discrete distributions. For example, in computing the quantile \(u_{h}\) we should form the cumulative distribution function rather than simply sorting.
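For a general discrete distribution the quantile can be read off the cumulative distribution function. A minimal Python sketch, assuming \(\mathrm{VaR }_{\alpha }\) is the smallest atom \(u\) with \(\mathbf {P}[h_{t} \le u] \ge 1-\alpha \) (the convention under which \(\mathbf {P}[h_{t} \ge \mathrm{VaR }_{\alpha }[h_{t}]] \ge \alpha \)); the function name is hypothetical, and it sorts for clarity, whereas in the equally-likely case a selection routine such as `numpy.partition` would give average linear time.

```python
def var_discrete(values, probs, alpha, tol=1e-12):
    """Smallest atom u with P[h <= u] >= 1 - alpha, for atoms with arbitrary probabilities."""
    cumulative = 0.0
    # Sorting the atoms by value and accumulating their masses traces out the cdf.
    for value, prob in sorted(zip(values, probs)):
        cumulative += prob
        if cumulative >= 1.0 - alpha - tol:  # tol absorbs floating-point round-off
            return value
    raise ValueError("probabilities sum to less than 1 - alpha")

print(var_discrete([4.0, 1.0, 3.0, 2.0], [0.4, 0.1, 0.3, 0.2], 0.05))
```

With equal probabilities the function returns the same atom as sorting and indexing, so the equally-likely case is a special case of the weighted one.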

6 Computational results

We present computational results for applying SDDP with the upper bound estimators we describe in Sects. 4 and 5 to two asset allocation models under our \(\mathrm{CVaR }\) risk measure. We present results for four upper bound estimators: (i) the naive estimator \(U^\mathbf{{n}}\) from Eq. (16), and our new estimators (ii) \(U^\mathbf{{i}}\) from Eq. (21), (iii) \(U^\mathbf{h}\) from Eq. (26), and (iv) \(U^\mathbf{m}\) from Eq. (29). We compare their performance with that of the existing upper bound estimator from the literature, \(U^\mathbf{{e}}\) from Eq. (18). The two asset allocation models we consider differ only in whether we include transaction costs. Without transaction costs we can use estimator \(U^\mathbf{h}\); when we include transaction costs, Assumption 1 no longer holds and we must instead use \(U^\mathbf{m}\).

We begin with a simple asset allocation model without transaction costs and emphasize that our primary purpose is to compare the upper bound estimators as opposed to building a high-fidelity model for practical use. At stage \(t\) the decisions \(\mathbf{x}_{t}\) denote the allocations (in units of a multiple of a base currency, say USD), and \(\mathbf{p}_{t}\) denotes gross return per stage; i.e., the ratio of the price at stage \(t\) to that in stage \(t-1\). These represent the only random parameters in the model. Without transaction costs, model (4) specializes to:

except that in the first stage: (i) the right-hand side of (30b) is instead 1 and (ii) because \(- \mathbf{1}^{\top }\mathbf{x}_{1}\) is then identically \(-1\), we drop this constant from the objective function.

The assets in our allocation model consist of the stock market indices DJA, NDX, NYA, and OEX. We used monthly data for these indices from September 1985 until September 2011 to fit the multivariate log-normal distribution to the price ratios observed month-to-month. An empirical scenario tree was then constructed by sampling from the log-normal distribution, using the polar method [20] for sampling the underlying normal distributions. The L’Ecuyer random generator [22] was used to generate the required uniform random variables. We implemented the SDDP algorithm in C++ software, using CPLEX [18] to solve the required linear programs and the Armadillo [1] library for matrix computations. The confidence level was set to \(\alpha _{t}=5\,\%\) and the risk coefficients were set to \(\lambda _{t} = \frac{t-1}{T}, t=2 , \ldots ,T\) so that risk aversion increases in later stages. Table 1 shows the sizes of the empirical scenario trees for our test problem instances.
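The sampling pipeline described above (fit a multivariate log-normal, draw standard normals with the polar method, correlate them, exponentiate) can be sketched in Python as follows. We assume the fitted parameters are the mean vector and covariance matrix of the monthly log price ratios; the numbers in the example are made up, and Python's `random` module (a Mersenne Twister) stands in for the L’Ecuyer generator used in the study.

```python
import math
import random

def polar_normal_pair(rng):
    """Marsaglia's polar method: two independent standard normals from uniforms."""
    while True:
        v1 = 2.0 * rng.random() - 1.0
        v2 = 2.0 * rng.random() - 1.0
        s = v1 * v1 + v2 * v2
        if 0.0 < s < 1.0:                      # accept points inside the unit disc
            factor = math.sqrt(-2.0 * math.log(s) / s)
            return v1 * factor, v2 * factor

def cholesky(cov):
    """Lower-triangular L with L L^T = cov (cov symmetric positive definite)."""
    n = len(cov)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(cov[i][i] - s)
            else:
                L[i][j] = (cov[i][j] - s) / L[j][j]
    return L

def sample_price_ratios(mu, cov, n_samples, seed=0):
    """Gross returns p = exp(mu + L z) with z ~ N(0, I): a multivariate log-normal."""
    rng = random.Random(seed)
    L = cholesky(cov)
    n = len(mu)
    samples, buffer = [], []
    for _ in range(n_samples):
        z = []
        while len(z) < n:
            if not buffer:                     # the polar method yields normals in pairs
                buffer.extend(polar_normal_pair(rng))
            z.append(buffer.pop())
        log_p = [mu[i] + sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(n)]
        samples.append([math.exp(x) for x in log_p])
    return samples

# Hypothetical two-asset parameters for monthly log price ratios.
ratios = sample_price_ratios([0.005, 0.007], [[0.0016, 0.0012], [0.0012, 0.0025]], 5000, seed=3)
print(ratios[0])
```

Drawing each descendant node of the empirical scenario tree then amounts to one call per node, with every node's price-ratio vector strictly positive.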

The approximation function, \(h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t})\), that we use for the importance sampling estimators \(U^\mathbf{{i}}\) and \(U^\mathbf{h}\) is simply our current wealth with its sign reversed, which is determined by the previous stage decisions and the current price:

Note that this function meets the requirements of Assumption 1, because when we have no transaction costs the specific allocations in the vector \(\mathbf{x}_{t-1}\) can be rebalanced with no loss. This is captured mathematically in model (30) in that \(h_{t}=-\mathbf{p}_{t}^{\top }\mathbf{x}_{t-1}\) acts as the sole state variable; i.e., the equality constraint of (30b) could be used to replace the first term in the objective function of (30a). Hence, we see \(\mathsf {Q}_{t}\) also depends solely on \(h_{t}\) and that dependence is monotonic in \(h_{t}\) due to monotonicity of \(\mathrm{CVaR }\).

Our primary purpose is to compare the upper bound estimators that we have developed. For this reason we ran the SDDP algorithm with each of the upper bound estimators until the algorithm reached nearly the same optimal value as estimated by the first stage master program’s objective function; i.e., the lower bound \(\underline{z}_{}\) from step 2 of Algorithm 1 for the risk-averse model. Specifically, SDDP was terminated when \(\underline{z}_{}\) agreed across the four runs and did not improve by more than \(10^{-6}\) over 10 iterations. A total of 100 iterations of SDDP sufficed to accomplish this for problem instances with \(T=2 , \ldots ,5\), and a total of 200 iterations sufficed for the larger instances with \(T=10\) and \(15\). For estimators \(U^\mathbf{{n}}, U^\mathbf{{i}}\), and \(U^\mathbf{h}\) on problem instances with \(T=2, 3, 4\), and \(5\) we used respective sample sizes of \(M=1{,}001, 501, 334\), and \(251\), so that forming each estimator required solving around 1,000 linear programming subproblems. For \(T=10\) and \(15\) we used \(M=1{,}112\) and \(3{,}572\), so that forming the estimator required solving about 10,000 and 50,000 linear programs, respectively. For the estimator \(U^\mathbf{{e}}\) we must specify a sample size \(M_t\) for each stage. For \(T=2\) we used \(M_2=1{,}000\). For \(T=3\) we used \(M_2=M_3=32\), because forming the estimator then requires solving \(32^2 \approx 1{,}000\) linear programs, which allows a fair comparison with the single-path estimators \(U^\mathbf{{n}}, U^\mathbf{{i}}\), and \(U^\mathbf{h}\). With similar reasoning, for \(T=4\) we used \(M_t=11 \, \forall t\), and for \(T=5\) we used \(M_t=6 \, \forall t\). For the largest value of \(T\) for which we compute \(U^\mathbf{{e}}\), namely \(T=10\), we used \(M_t=3 \, \forall t\).
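The sample-size arithmetic above can be checked with a few lines of Python. The sketch counts one subproblem per stage \(t=2 , \ldots ,T\) for each single-path estimator, which reproduces the totals quoted in the text, and uses the count \(\prod _{t=2}^T M_{t}\) stated earlier for estimator (18); the function names are hypothetical.

```python
import math

def single_path_lp_count(M, T):
    """U^n, U^i, U^h: one subproblem at each stage t = 2..T for each of M sample paths."""
    return M * (T - 1)

def nested_lp_count(M_per_stage):
    """U^e: prod_{t=2}^T M_t subproblems, with M_per_stage = [M_2, ..., M_T]."""
    return math.prod(M_per_stage)

for M, T in [(1001, 2), (501, 3), (334, 4), (251, 5), (1112, 10), (3572, 15)]:
    print(f"T={T:2d}  M={M:5d}  single-path LPs={single_path_lp_count(M, T)}")
for Ms in [[1000], [32] * 2, [11] * 3, [6] * 4, [3] * 9]:
    print(f"T={len(Ms) + 1:2d}  M_t={Ms[0]:4d}  nested LPs={nested_lp_count(Ms)}")
```

Under this count, \(M_t = 3\) for \(T = 10\) already gives \(3^9 = 19{,}683\) subproblems, roughly double the budget of the single-path estimators at that horizon, which illustrates the exponential growth noted in Sect. 4.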

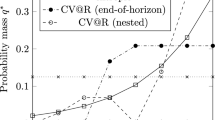

Table 2 shows results for four estimators for the asset allocation model without transaction costs. These results were computed using the sample sizes that we indicate above, and we formed 100 i.i.d. replicates of each estimator. For a particular problem instance, all 100 replicates used the same single run of 100 or 200 iterations of SDDP. Each cell in Table 2 reports the mean and standard deviation of the 100 replicates of the estimator. The table also shows the lower bound \(\underline{z}_{}\) for the models obtained as we describe above. The estimators perform similarly for the two-stage problem instance, but the advantages of the proposed estimator, \(U^\mathbf{h}\), are revealed as the number of stages grows. Note that estimators \(U^\mathbf{{n}}, U^\mathbf{{i}}\) and \(U^\mathbf{{e}}\) degrade at \(T=10\) for reasons we discuss above involving recursive multiplication by \(\alpha _{t}^{-1}=20\) along some sample paths. Due to this degradation we do not report results for these estimators for \(T=15\). We suspect it is for this same reason that the benefit of the importance sampling scheme is only fully realized when we include the indicator functions shown in Eq. (24); compare the performance of \(U^\mathbf{{i}}\) and \(U^\mathbf{h}\) in the table. For \(T=2 , \ldots ,5\) the variance reduction of \(U^\mathbf{h}\) relative to \(U^\mathbf{{e}}\) grows from roughly 3 to 25 to 50 to 300. The smaller standard deviations of \(U^\mathbf{h}\) could facilitate its use in a sensible stopping rule.

For our second set of problem instances the model incorporates transaction costs. This allows us to show how to implement our upper bound estimation procedure in a more complex model. We consider the case in which transaction costs are proportional to the value of the assets sold or bought, and in particular that the fee is \(f_{t}= 0.3\,\%\) of the transaction value. We must modify the rebalancing equation between stage \(t-1\) and stage \(t\) to include the transaction costs of \(f_{t}\mathbf{1}^{\top }|\mathbf{x}_{t} - \mathbf{x}_{t-1}|\), where the \(| \cdot |\) function applies component-wise. Linearizing, we obtain the following special case of model (4):

We again use the approximation function \( h_{t}(\mathbf{x}_{t-1},\,\mathbf{\xi }_{t}) = - \mathbf{p}_{t} ^{\top }\mathbf{x}_{t-1} \). With nonzero transaction costs the conditions of Assumption 1 are no longer satisfied: Suppose in the third stage it is optimal to invest all money in stock A. Arriving at this point with the second stage portfolio consisting only of stock A is convenient because we need not rebalance and incur a transaction cost. A portfolio of less worth, in the sense of \(\mathbf{p}_{t} ^{\top }\mathbf{x}_{t-1}\), consisting only of stock A may be preferred to another portfolio with larger value of \(\mathbf{p}_{t} ^{\top }\mathbf{x}_{t-1}\) but consisting of other stocks. Fortunately, we can still compare some portfolios. Consider the worst case scenario in which we must rebalance the entire portfolio; i.e., sell all our assets and buy some other assets at stage \(t\). This would increase the loss by a factor of \(\frac{1+f_{t}}{1-f_{t}}\). However, this portfolio is still better than any other portfolio whose total loss exceeds this portfolio’s loss adjusted by the same factor. This leads us to the margin function given by:
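In terms of wealth \(W = -h_{t}\), the worst-case argument can be sketched in Python as follows. The helper names are hypothetical, and the sketch illustrates the reasoning rather than reproducing the margin function \(m_{t}\). Selling every asset returns \(1-f_{t}\) per unit of value and buying a unit of value costs \(1+f_{t}\), so a full rebalance leaves portfolio A with at least \(W_A(1-f_{t})/(1+f_{t})\), while portfolio B can never be worth more than \(W_B\) after trading; A is therefore at least as good as B whenever \(W_A \ge W_B (1+f_{t})/(1-f_{t})\).

```python
def rebalanced_wealth_lower_bound(wealth, fee):
    """Worst case: sell everything (receive 1 - fee per unit of value), then buy a
    new portfolio (pay 1 + fee per unit of value)."""
    return wealth * (1.0 - fee) / (1.0 + fee)

def dominates(wealth_a, wealth_b, fee):
    """True if portfolio A, even after a full rebalance, is worth at least as much as
    portfolio B can be worth in any composition; equivalently
    wealth_a >= wealth_b * (1 + fee) / (1 - fee)."""
    return rebalanced_wealth_lower_bound(wealth_a, fee) >= wealth_b

fee = 0.003
print((1 + fee) / (1 - fee), dominates(100.0, 99.0, fee), dominates(100.0, 99.5, fee))
```

With \(f_{t} = 0.3\,\%\) the factor is \(1.003/0.997 \approx 1.00602\), so the comparison is conclusive only when one portfolio is worth roughly 0.6 % more than the other.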

Functions \( h_{t}\) and \(m_{t} \) satisfy Assumption 2, and we can apply the upper bound estimator \(U^\mathbf{m}\). This construction, of course, increases the bias of the estimator as we indicate in Proposition 3. However, if the transaction costs are modest compared to market volatility, we may expect our estimator to provide reasonable results.

Table 3 reports results in the same manner as Table 2, now comparing \(U^\mathbf{m}\) and \(U^\mathbf{{e}}\). The value \(\underline{z}_{}\) is computed in the same way we describe above. From Table 2 we see that the point estimate \(U^\mathbf{h}\) as a percentage of \(\underline{z}_{}\) drops from 99.8 to 98.8 to 95.6 % for \(T=5, 10,\) and 15, respectively. The analogous values for \(U^\mathbf{m}\) from Table 3 are weaker as expected, dropping from 99.6 to 98.7 to 90.5 %. Note that these same values for \(U^\mathbf{{e}}\) for \(T=5\) are \(78.9\,\%\) without transaction costs and \(75.3\,\%\) with transaction costs. For \(T=2,3,4,5\) the variance reduction of \(U^\mathbf{m}\) over \(U^\mathbf{{e}}\) grows from roughly 3 to 20 to 40 to 400, again indicating that our proposed upper bound estimator is superior to the previously available estimator.

To assess the required computational effort, for the model instances without transaction costs for \(T=5, 10\), and \(15\) stages we ran 100 replications of estimators \(U^\mathbf{{n}}\) and \(U^\mathbf{h}\). For each estimator we used a sample size of \(M\) = 1,000 and the subproblems in each stage had 1,000 cuts. The computations were performed using a single thread on an Intel 2.53 GHz Core2 Duo with \(4\) GB of RAM and CPLEX version 12.2. The average computation time for estimator \(U^\mathbf{{n}}\) grew from 8.7 s to 31.6 s to 67.4 s for the respective model instances with \(T=5, 10\), and \(15\) stages. The computation times, again averaged over 100 replications of the estimators, for \(U^\mathbf{h}\) grew from 6.8 s to 30.0 s to 66.5 s for the same instances. (The standard deviations associated with the run times are at most 1 % of the average.) Like estimator \(U^\mathbf{{n}}\), the computational effort we require to compute \(U^\mathbf{h}\) scales well with the number of stages. We note that the estimator \(U^\mathbf{h}\) does have additional computational overhead, relative to \(U^\mathbf{{n}}\), that grew from an average 0.3 s to 1.2 s to 2.9 s for \(T=5, 10\), and \(15\) stages. However, for these particular problem instances the linear programming master programs happen to solve slightly more quickly for estimator \(U^\mathbf{h}\) compared to \(U^\mathbf{{n}}\). We obtained very similar scaling results with \(T\) for the estimator \(U^\mathbf{m}\) applied to problem instances with transaction costs.

Although we stop short of a computational comparison, we discuss similarities and differences between our estimators (\(U^\mathbf{h}\) and \(U^\mathbf{m}\)) and the inner approximation bound of Philpott et al. [30]. The computational work in [30] is on a hydro-thermal scheduling model that maintains an inventory of water (or energy) in four aggregate reservoirs. The analogous dimension for our first set of computational examples is one (current wealth), and the dimension is five for our second set of problem instances. Our largest models have 15 stages while those of Philpott et al. have 24 stages. The problems to which the respective bounds are applied are quite different, but our point estimate with the largest gap is about 10 % (see \(T=15\) row of Table 3), and the gap reported in Table 3 of [30] with 10,000 cuts is of similar magnitude. With 10,000 cuts the inner approximation of [30] would require solving \(150{,}000\) linear programs for our \(15\)-stage models, while we report results for our estimators solving \(50{,}000\) linear programs. We suspect that our approach will scale well with dimension, although we have yet to investigate this computationally. We note that our estimators require specification of functions that appropriately characterize the tail of the recourse function while the inner approximation of Philpott et al. does not have this requirement. A final distinction is that, like the SDDP algorithm for the risk-neutral model, our upper bound estimators are for the policy dictated by the cuts, i.e., the policy associated with the outer approximation. Philpott et al. do not provide an upper bound for this cut-based policy. Rather their bound is for a policy associated with a set of points employed in developing the inner approximation.

7 Conclusion

We have presented a new approach to computing upper bound estimators for multi-stage stochastic programs that incorporate risk via \(\mathrm{CVaR }\). Under relatively mild conditions, our most widely applicable estimator performs much better than an existing estimator from the literature in terms of reduced bias, smaller variance, and viability in problems with more than a few stages. We believe this type of estimator could be used to form better stopping rules for SDDP-style algorithms, and possibly for other algorithms as well. Such stopping rules and upper bounds allow the quality of a proposed policy to be quantified, which we see as important in practice.
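As a rough illustration of how importance sampling enters a \(\mathrm{CVaR }\) estimate, the sketch below treats a single period only; it is not the multi-stage estimator \(U^\mathbf{h}\) of this paper. It assumes losses are sampled from a proposal density \(g\) instead of the nominal density \(f\), weights each draw by the likelihood ratio \(f/g\), self-normalizes the weights, and evaluates the Rockafellar-Uryasev representation \(\mathrm{CVaR}_\alpha(L) = \min_u \{u + \mathbb{E}[(L-u)_+]/(1-\alpha)\}\) at the weighted \(\alpha\)-quantile. Here \(\alpha\) is taken to be a confidence level, so the tail has probability \(1-\alpha\); this convention, the function name `weighted_cvar`, and the normal test case are our assumptions for illustration.

```python
import math
import random


def weighted_cvar(losses, weights, alpha):
    """Estimate CVaR_alpha of a loss from an importance-weighted sample.

    `weights` are likelihood ratios f(x)/g(x); they are self-normalized here.
    `alpha` is a confidence level, so the tail carries probability 1 - alpha.
    """
    total = sum(weights)
    pairs = sorted(zip(losses, (w / total for w in weights)))
    # Weighted alpha-quantile (VaR): smallest loss whose cumulative weight reaches alpha.
    var = pairs[-1][0]
    cum = 0.0
    for loss, p in pairs:
        cum += p
        if cum >= alpha:
            var = loss
            break
    # Rockafellar-Uryasev: CVaR = VaR + E[(L - VaR)_+] / (1 - alpha) under the weighted sample.
    excess = sum(p * max(loss - var, 0.0) for loss, p in pairs)
    return var + excess / (1.0 - alpha)


if __name__ == "__main__":
    rng = random.Random(1)
    mu = 1.6  # proposal g = N(mu, 1) pushes samples toward the upper tail of f = N(0, 1)
    xs = [rng.gauss(mu, 1.0) for _ in range(50_000)]
    # Likelihood ratio f(x)/g(x) = exp(-mu*x + mu^2/2) for these two normals.
    ws = [math.exp(-mu * x + 0.5 * mu * mu) for x in xs]
    print(round(weighted_cvar(xs, ws, 0.95), 3))
```

With the shifted proposal, the estimate should land near the exact value \(\varphi(\Phi^{-1}(0.95))/0.05 \approx 2.063\) for a standard normal loss; with equal weights the function reduces to the ordinary empirical \(\mathrm{CVaR }\).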

Future research could include further characterization of the statistical properties of the proposed estimators, parametric tuning of the importance sampling distribution, or the choice of approximation functions suited to the importance sampling schemes. Further work could also apply the estimators in other settings in which multi-stage stochastic programs see pervasive use, such as hydroelectric scheduling under inflow uncertainty. Finally, we believe that other risk measures will lend themselves to our ideas, and developing and analyzing analogous estimators for them is another topic for further research.
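Any parametric tuning of the importance sampling distribution has to keep the likelihood-ratio weights under control. One standard safeguard is a defensive mixture in the sense of Hesterberg [15]: sample from \(g = \lambda f + (1-\lambda) h\) with \(\lambda \in (0,1]\), so that \(g \ge \lambda f\) and every weight \(f/g\) is at most \(1/\lambda\). The sketch below illustrates this for a hypothetical one-dimensional case with nominal density \(f = N(0,1)\) and tilted density \(h = N(\mu,1)\); the function names and the values of \(\lambda\) and \(\mu\) are illustrative assumptions, not choices made in this paper.

```python
import math
import random


def normal_pdf(x, mean=0.0, sd=1.0):
    """Density of N(mean, sd^2) evaluated at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def sample_defensive(n, lam, mu, rng):
    """Draw n points from g = lam*N(0,1) + (1-lam)*N(mu,1).

    Returns (x, w) pairs with likelihood ratio w = f(x)/g(x) for f = N(0,1).
    """
    draws = []
    for _ in range(n):
        # Choose the nominal component with probability lam, the tilted one otherwise.
        mean = 0.0 if rng.random() < lam else mu
        x = rng.gauss(mean, 1.0)
        f = normal_pdf(x)
        g = lam * f + (1.0 - lam) * normal_pdf(x, mu)
        draws.append((x, f / g))
    return draws


rng = random.Random(0)
draws = sample_defensive(10_000, lam=0.2, mu=2.0, rng=rng)
# Mathematically every weight is at most 1/lam = 5.0.
print(max(w for _, w in draws))
```

Because the weights are bounded, weighted estimators remain stable even when the tilted component \(h\) is poorly matched to the tail; the price is that a fraction \(\lambda\) of the samples is spent on the nominal distribution.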

References

[1] Armadillo C++ linear algebra library. http://arma.sourceforge.net/

[2] Artzner, P., Delbaen, F., Eber, J.-M., Heath, D.: Coherent measures of risk. Math. Financ. 9, 203–228 (1999)

[3] Bayraksan, G., Morton, D.P.: Assessing solution quality in stochastic programs via sampling. In: Oskoorouchi, M., Gray, P., Greenberg, H. (eds.) Tutorials in Operations Research, pp. 102–122. INFORMS, Hanover (2009). ISBN: 978-1-877640-24-7

[4] Bayraksan, G., Morton, D.P.: A sequential sampling procedure for stochastic programming. Oper. Res. 59, 898–913 (2011)

[5] Birge, J.R.: Decomposition and partitioning methods for multistage stochastic linear programs. Oper. Res. 33, 989–1007 (1985)

[6] Blum, M., Floyd, R.W., Pratt, V., Rivest, R.L., Tarjan, R.E.: Linear time bounds for median computations. In: Proceedings of the Fourth Annual ACM Symposium on Theory of Computing, pp. 119–124 (1972)

[7] Chen, Z.L., Powell, W.B.: Convergent cutting-plane and partial-sampling algorithm for multistage stochastic linear programs with recourse. J. Optim. Theory Appl. 102, 497–524 (1999)

[8] Chiralaksanakul, A., Morton, D.P.: Assessing policy quality in multi-stage stochastic programming. Stochastic Programming E-Print Series, vol. 12 (2004). Available at: http://www.speps.org/

[9] de Queiroz, A.R., Morton, D.P.: Sharing cuts under aggregated forecasts when decomposing multi-stage stochastic programs. Oper. Res. Lett. 41, 311–316 (2013)

[10] Donohue, C.J., Birge, J.R.: The abridged nested decomposition method for multistage stochastic linear programs with relatively complete recourse. Algorithm. Oper. Res. 1, 20–30 (2006)

[11] Eichhorn, A., Römisch, W.: Polyhedral risk measures in stochastic programming. SIAM J. Optim. 16, 69–95 (2005)

[12] Goor, Q., Kelman, R., Tilmant, A.: Optimal multipurpose-multireservoir operation model with variable productivity of hydropower plants. J. Water Resour. Plan. Manag. 137, 258–267 (2011)

[13] Guigues, V.: SDDP for some interstage dependent risk averse problems and application to hydro-thermal planning. Comput. Optim. Appl. 57, 167–203 (2014)

[14] Guigues, V., Römisch, W.: Sampling-based decomposition methods for multistage stochastic programs based on extended polyhedral risk measures. SIAM J. Optim. 22, 286–312 (2012)

[15] Hesterberg, T.C.: Weighted average importance sampling and defensive mixture distributions. Technometrics 37, 185–194 (1995)

[16] Higle, J.L., Rayco, B., Sen, S.: Stochastic scenario decomposition for multistage stochastic programs. IMA J. Manag. Math. 21, 39–66 (2010)

[17] Homem-de-Mello, T., de Matos, V.L., Finardi, E.C.: Sampling strategies and stopping criteria for stochastic dual dynamic programming: a case study in long-term hydrothermal scheduling. Energy Syst. 2, 1–31 (2011)

[18] IBM ILOG CPLEX. http://www.ibm.com/software/integration/optimization/cplex-optimization-studio/

[19] Infanger, G., Morton, D.P.: Cut sharing for multistage stochastic linear programs with interstage dependency. Math. Prog. 75, 241–256 (1996)

[20] Knopp, R.: Remark on algorithm 334 [G5]: normal random deviates. Commun. ACM 12, 281 (1969)

[21] Krokhmal, P., Zabarankin, M., Uryasev, S.: Modeling and optimization of risk. Surv. Oper. Res. Manag. Sci. 16, 49–66 (2011)

[22] L’Ecuyer random streams generator. http://www.iro.umontreal.ca/~lecuyer/myftp/streams00/

[23] Linowsky, K., Philpott, A.B.: On the convergence of sampling-based decomposition algorithms for multi-stage stochastic programs. J. Optim. Theory Appl. 125, 349–366 (2005)

[24] Löhndorf, N., Wozabal, D., Minner, S.: Optimizing trading decisions for hydro storage systems using approximate dual dynamic programming. Oper. Res. 61, 810–823 (2013)

[25] Mo, B., Gjelsvik, A., Grundt, A.: Integrated risk management of hydro power scheduling and contract management. IEEE Trans. Power Syst. 16, 216–221 (2001)

[26] Pereira, M.V.F., Pinto, L.M.V.G.: Multi-stage stochastic optimization applied to energy planning. Math. Prog. 52, 359–375 (1991)

[27] Pflug, G.Ch., Römisch, W.: Modeling, Measuring and Managing Risk. World Scientific Publishing, Singapore (2007)

[28] Philpott, A.B., Guan, Z.: On the convergence of sampling-based methods for multi-stage stochastic linear programs. Oper. Res. Lett. 36, 450–455 (2008)

[29] Philpott, A.B., de Matos, V.L.: Dynamic sampling algorithms for multi-stage stochastic programs with risk aversion. Eur. J. Oper. Res. 218, 470–483 (2012)

[30] Philpott, A.B., de Matos, V.L., Finardi, E.C.: On solving multistage stochastic programs with coherent risk measures. Oper. Res. 61, 957–970 (2013)

[31] Philpott, A.B., Dallagi, A., Gallet, E.: On cutting plane algorithms and dynamic programming for hydroelectricity generation. In: Pflug, G.Ch., Kovacevic, R.M., Vespucci, M.T. (eds.) Handbook of Risk Management in Energy Production and Trading. Springer, UK (2013)

[32] Rebennack, S., Flach, B., Pereira, M.V.F., Pardalos, P.M.: Stochastic hydro-thermal scheduling under \(\text{CO}_{2}\) emissions constraints. IEEE Trans. Power Syst. 27, 58–68 (2012)

[33] Rockafellar, R.T., Wets, R.J.-B.: Scenarios and policy aggregation in optimization under uncertainty. Math. Oper. Res. 16, 119–147 (1991)

[34] Rudloff, B., Street, A., Valladao, D.: Time consistency and risk averse dynamic decision models: definition, interpretation and practical consequences. Available at: http://www.optimization-online.org/

[35] Ruszczynski, A., Shapiro, A.: Conditional risk mappings. Math. Oper. Res. 31, 544–561 (2006)

[36] Ruszczynski, A.: Risk-averse dynamic programming for Markov decision processes. Math. Prog. 125, 235–261 (2010)

[37] Sen, S., Zhou, Z.: Multistage stochastic decomposition: a bridge between stochastic programming and approximate dynamic programming. SIAM J. Optim. 24, 127–153 (2014)

[38] Shapiro, A., Dentcheva, D., Ruszczynski, A.: Lectures on Stochastic Programming: Modeling and Theory. SIAM, Philadelphia (2009)

[39] Shapiro, A.: Inference of statistical bounds for multistage stochastic programming problems. Math. Methods Oper. Res. 58, 57–68 (2003)

[40] Shapiro, A.: On a time consistency concept in risk averse multistage stochastic programming. Oper. Res. Lett. 37, 143–147 (2009)

[41] Shapiro, A.: Analysis of stochastic dual dynamic programming method. Eur. J. Oper. Res. 209, 63–72 (2011)

[42] Shapiro, A., Tekaya, W., da Costa, J.P., Soares, M.P.: Risk neutral and risk averse stochastic dual dynamic programming method. Eur. J. Oper. Res. 224, 375–391 (2013)

[43] West, M., Harrison, J.: Bayesian Forecasting and Dynamic Models, 2nd edn. Springer, New York (1997)

Acknowledgments

We thank two anonymous referees and an Associate Editor for comments that improved the paper. The research was partly supported by the Czech Science Foundation through grant 402/12/G097, by the Defense Threat Reduction Agency through Grant HDTRA1-08-1-0029, and by the National Science Foundation through Grant ECCS-1162328.

About this article

Cite this article

Kozmík, V., Morton, D.P. Evaluating policies in risk-averse multi-stage stochastic programming. Math. Program. 152, 275–300 (2015). https://doi.org/10.1007/s10107-014-0787-8

DOI: https://doi.org/10.1007/s10107-014-0787-8

Keywords

- Multi-stage stochastic programming

- Stochastic dual dynamic programming

- Importance sampling

- Risk-averse optimization