Abstract

Faces play an important role in communication and identity recognition in social animals. Domestic dogs often respond to human facial cues, but their face processing is poorly understood. In this study, the facial inversion effect (deficits in face processing when the image is turned upside down) and responses to personal familiarity were tested using eye movement tracking. A total of 23 pet dogs and eight kennel dogs were compared to establish the effects of life experiences on their scanning behavior. All dogs preferred conspecific faces and showed great interest in the eye area, suggesting that they perceived images representing faces. Dogs fixated at the upright faces as long as the inverted faces, but the eye area of upright faces gathered longer total duration and greater relative fixation duration than the eye area of inverted stimuli, regardless of the species (dog or human) shown in the image. Personally familiar faces and eyes attracted more fixations than the strange ones, suggesting that dogs are likely to recognize conspecific and human faces in photographs. The results imply that face scanning in dogs is guided not only by the physical properties of images, but also by semantic factors. In conclusion, in a free-viewing task, dogs seem to target their fixations at naturally salient and familiar items. Facial images were generally more attractive for pet dogs than kennel dogs, but living environment did not affect conspecific preference or inversion and familiarity responses, suggesting that the basic mechanisms of face processing in dogs could be hardwired or might develop under limited exposure.

Introduction

Recognition of faces and interpretation of facial cues in daily interactions are valuable for social animals: Faces convey information about the identity, gender, age, emotions and communicative intentions of other individuals (Tate et al. 2006; Leopold and Rhodes 2010; Parr 2011a). In domestic dogs, face perception abilities may extend beyond their own species because responding to human facial cues could have been a selective advantage during domestication (Guo et al. 2009; see Lakatos 2011 for a review). However, the face perception mechanisms of domestic dogs are poorly understood.

Humans detect and recognize human faces remarkably quickly and accurately despite similarity in the basic structure of all faces. Human expertise in face perception is suggested to rely on global processing, where faces are processed not as separate structures but as a unique configuration of relative size and placement of facial structures (Tanaka and Farah 1993; Maurer et al. 2002; Rossion 2008). When a facial image is inverted, global processing is disturbed, making faces harder to recognize, and the stimulus has to be processed element by element, like non-face objects (Yin 1969; Tanaka and Farah 1993). The face inversion effect (FIE) is one of the most studied phenomena in human face perception research because it has been considered as evidence of specific brain mechanisms involved in face processing (e.g., Yin 1969; Diamond and Carey 1986; Phelps and Roberts 1994; Rossion 2008).

There is debate as to whether such a global face processing mechanism exists in non-human animals. Behavioral studies have documented facial inversion effect in chimpanzees (e.g., Parr et al. 1998; Parr 2011b), monkeys (e.g., Phelps and Roberts 1994; Neiworth et al. 2007; Pokorny et al. 2011) and sheep (Kendrick et al. 1995; 1996), but there is also contradictory evidence from monkeys (Bruce 1982; Parr 2011b; see Parr 2011a for a review) and pigeons (Phelps and Roberts 1994). Recently, Racca et al. (2010) found that inversion impaired visual discrimination in dogs, but the response was not face-specific. Eye movement studies in non-human primates have demonstrated that inversion impacts on scanning patterns, especially fixations targeted at the eyes of conspecific faces (chimpanzees: Hirata et al. 2010; rhesus monkeys: Guo et al. 2003; Dahl et al. 2009; Gothard et al. 2009). To date, the influence of facial inversion on the scanning behavior of non-primate animals has not been documented.

Previously, we have found that dogs prefer viewing facial images over images of inanimate objects and conspecific faces over human faces, which might reflect their natural interests (Somppi et al. 2012). However, the level at which they perceived images remained unclear: Do dogs actually see faces in the pictures? Low-level eye movement control and picture perception occur on the basis of physical features (e.g., color, contrasts, shape) without extracting the representational content of the picture (Henderson and Hollingworth 1999; Fagot et al. 1999; Bovet and Vauclair 2000). At a higher level, eye movements are driven by semantic salience, e.g., interest, informativeness or emotional valence (Henderson and Hollingworth 1999; Kano and Tomonaga 2011; Niu et al. 2012). Inverted faces consist of similar physical properties as their normal upright versions, but their semantic information differs from normal faces, and in humans, they do not engage the face-specific recognition mechanisms (Yin 1969; Diamond and Carey 1986; Tanaka and Farah 1993). Thus, we can assume that if a subject perceives upright stimuli as faces, the upright and inverted stimuli will be scanned differently.

The most fine-tuned aspect of face processing is face identification, matching the face currently seen to facial memories (Bruce and Young 1986; Barton et al. 2006). Humans process and scan familiar and unfamiliar faces in different ways (Althoff and Cohen 1999; Johnston and Edmonds 2009). It is unclear what mechanism animals use in the recognition of individual faces, but it is known that non-human primates (e.g., Parr et al. 2000, 2011; Pokorny and de Waal 2009; Marechal and Roeder 2010) and many other mammals, including sheep (Kendrick et al. 1996), cows (Coulon et al. 2009) and horses (Stone 2010), can discriminate facial photographs of different individuals, at least after training. Untrained reactions toward facial pictures of personally familiar individuals have been studied less. However, the behavioral responses of macaques (Schell et al. 2011), sheep (Kendrick et al. 1995) and cows (Coulon et al. 2011) depend on whether they see a photograph of a member of their group or a stranger, suggesting that they distinguish faces by familiarity. Recent studies revealed similarities in scanning behaviors between rhesus monkeys and humans toward personally familiar faces (van Belle et al. 2010; Leonard et al. 2012). However, it is not known how familiarity affects eye movements of dogs or other non-primates.

In primates, fixations tend to accumulate on the details which are perceived as interesting and informative, like faces in scenes and eyes in faces (Yarbus 1967; Henderson and Hollingworth 1999; Kano and Tomonaga 2011). Typically, the inversion effect and familiarity recognition are apparent in the fixations targeted at the eye region, emphasizing the importance of eyes for face recognition (e.g., Guo et al. 2003; Barton et al. 2006; Dahl et al. 2009; Hirata et al. 2010; Leonard et al. 2012). Despite numerous findings showing that domestic dogs are highly sensitive to facial signals and even utilize gaze cues in a human-like manner (Topál et al. 2009; Téglás et al. 2012), the role of eyes in the face perception of canines has not been investigated.

Functional similarities in social cognitive skills of domestic dogs and humans (reviewed in Topál et al. 2009) can be explained by their evolutionary history, life experiences and familiarization in a human environment (Udell et al. 2010b; Lakatos 2011). Recent comparative cognition studies have primarily focused on the behavior of pet dogs, which often share living habitats with humans. In contrast, biomedical research has used purpose-bred beagles for decades to model human aging-related cognitive dysfunction (Cotman and Head 2008). Studies comparing these two populations of dogs are lacking. Comparing different subpopulations of domestic dogs contributes to better understanding of genetic, environmental and developmental factors affecting canine cognition (Udell et al. 2010a).

Since the pioneering work of Yarbus (1967), eye tracking has been intensively used in human cognitive research. Currently, non-invasive eye tracking also represents a promising tool for studying social information processing in animals (Hattori et al. 2010; Kano and Tomonaga 2011; Téglás et al. 2012). The purpose of this study is to clarify the level at which domestic dogs perceive facial images. In a free-viewing task, we test how the scanning behavior of dogs is affected by facial inversion and explore whether spontaneous familiarity recognition could be detected using eye movement tracking. Considering the facts that dogs are prone to respond to human eye gaze and that they view human and dog faces in different ways (Guo et al. 2009; Racca et al. 2010; Somppi et al. 2012), we test whether the responses are species-dependent and particularly noticeable in fixations targeted at the eye area. Through these comparisons, our aim is to demonstrate that eye movements of dogs are driven not only by low-level visual salience but also by high-level semantic information, as in primates. In addition, we compare family-living pet dogs with kennel-housed purpose-bred dogs to test whether living environment influences dogs’ responses to socially relevant stimuli.

Materials and methods

All experiments were conducted at the Veterinary Faculty of the University of Helsinki. Procedures were approved by the Ethical Committee for the Use of Animals in experiments at the University of Helsinki (minutes 2/2010).

Animals and pretraining

A total of 33 dogs were included in the study, representing two populations living in different environments: eight kennel dogs and 25 pet dogs. Kennel dogs were four-year-old beagles housed in a group kennel (2 sterilized females and 6 castrated males, purpose-bred in the Netherlands). Pet dogs were privately owned 1–8-year-old (average 4.6 years, SD 2.2) dogs living in their owners’ homes (14 intact females, 4 sterilized females, 5 intact males and 2 castrated males). Pet dogs represented 11 different breeds and mongrels (6 Border Collies, 3 Hovawarts, 3 Beauce Shepherds, 2 Rough Collies, 2 Smooth Collies, 1 Great Pyrenees, 1 Welsh Corgi, 1 Australian Kelpie, 1 Lagotto Romagnolo, 1 Manchester Terrier, 1 Swedish Vallhund, 1 Finnish Lapphund, 2 Mongrels). Five of the pet dogs had previously participated in an eye-tracking study (Somppi et al. 2012) 1 year earlier.

The daily routines of the dogs were kept similar to those of their regular life. Pet dogs were fed once or twice a day and taken outdoors three to five times for 0.5–2 h at a time. Most (21) of the pet dogs were trained regularly in obedience, agility and/or search activities. Kennel dogs lived in the kennel facilities of the University of Helsinki. They were fed twice a day and released into an exercise enclosure once a day for 2 h. They regularly saw other beagles living in the kennel, but had not seen or met any unfamiliar dogs. Kennel dogs had lived in the same facilities from 9 months of age. They were primarily looked after by five caretakers and had been trained by two researchers from 2 years of age (authors S.S. and H.T.). In addition, five other experimenters handled dogs during pharmacological studies (2–4 test periods per year, each consisting of 2–4 test days per dog). For those experiments, dogs were carried to another building. Otherwise, kennel dogs were kept in the same building and occasionally saw unfamiliar humans.

Prior to conducting the experiments, all subjects were clicker-trained to lie still and lean their jaw on a specially designed U-shaped chin rest in a procedure similar to that described in Somppi et al. (2012). The pet dogs were trained by their owners and the kennel dogs by the experimenters. The criterion for passing the training period was that the dog took the pretrained position without being commanded and remained in that position for at least 30 s, while the owner and experimenters were positioned behind an opaque barrier. During the training, the dogs were not encouraged to fix their eyes on a monitor or images and they were not restrained or forced to perform the task.

Eye-tracking system

The monitor, eye tracker and chin rest were placed in a cardboard cabin (h = 1.5 m, w = 0.9 m, d = 0.9 m) with three walls and a roof. The eye movements of the dogs were recorded with an infrared-based contact-free eye-tracking system (iView X™ RED250, SensoMotoric Instruments GmbH, Germany), which was integrated below a 22″ (56 cm) LCD monitor (1,680 × 1,050 px) placed 0.6–0.7 m from the dogs’ eyes. The distance was adjusted individually for each dog for optimal detection of the eyes with the eye tracker. Two fluorescent lamps were placed in front of and above the monitor. The mean illumination intensity measured at the top of the chin rest was 5,190 lx (SD 1,200 lx, ranging between 4,000 and 8,000 lx) and measured at the front of the chin rest 9,990 lx (SD 940 lx, ranging between 8,000 and 11,200 lx).

The eye tracker was calibrated for each dog’s eyes using a five-point procedure introduced by Somppi et al. (2012). The calibrated area was a visual field of 40.5° × 24.4° (equal to the size of the monitor). For calibration, the monitor was replaced with a plywood wall with five 22-mm-diameter holes in the positions of the calibration points in which the experimenter showed a tidbit (piece of sausage) to catch the dog’s attention (for more details, see Somppi et al. 2012).

After the five-point calibration, the accuracy of calibration was checked twice by recording the eye movements during the same procedure as in the original calibration session. The criterion for an accurate calibration was achieved if the dogs’ eye fixations hit within a 2° radius of the central calibration point and at least at three of four distal points. If the calibration reached this criterion, it was saved for later use. To get an optimal calibration, 1–13 calibrations were required for each dog (average 4.2 times, SD 2.6). The average calibration accuracy was 97 % (SD 7 %, ranging between 80 and 100 %), calculated as a proportion of fixated points out of five calibration points over two calibration checks including all dogs. Two of the 25 pet dogs were excluded from the experiment because of inadequate calibration accuracy.
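The acceptance criterion and the accuracy figure described above can be illustrated with a minimal Python sketch. It is not the authors' software: the function names, the coordinate convention (degrees of visual angle relative to screen center) and the use of the same 2° radius for the distal points are assumptions made for illustration.

```python
import math

def point_hit(fixations, target, radius_deg=2.0):
    """True if any fixation lies within radius_deg of the target point.
    Fixations and target are (x, y) pairs in degrees of visual angle."""
    return any(
        math.hypot(fx - target[0], fy - target[1]) <= radius_deg
        for fx, fy in fixations
    )

def check_passes(fixations, center, distal, radius_deg=2.0):
    """Criterion from the text: central point hit and at least three of
    the four distal points hit (same radius for distal points is assumed)."""
    center_hit = point_hit(fixations, center, radius_deg)
    distal_hits = sum(point_hit(fixations, p, radius_deg) for p in distal)
    return center_hit and distal_hits >= 3

def calibration_accuracy(checks, center, distal, radius_deg=2.0):
    """Proportion of fixated points out of all calibration points,
    pooled over the calibration checks (two checks in the study)."""
    points = [center] + list(distal)
    hits = sum(
        point_hit(fixations, p, radius_deg)
        for fixations in checks
        for p in points
    )
    return hits / (len(points) * len(checks))
```

With five points and two checks, the possible passing accuracies under this sketch are 80, 90 and 100 %, which matches the calibration levels used later as a random effect.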

To maintain vigilance in the dogs, the calibration and experimental task were run on separate days. Illumination and the position of the chin rest, monitor and eye tracker were kept the same during the calibration, calibration checks and the experimental task.

Stimuli

Digital color facial photographs of humans (46 images) and dogs (52 images) with neutral expression and direct gaze were used. Faces represented personally familiar humans (family members or caretakers), personally familiar dogs (dogs living in the same family or regular playmates), strange humans (humans that the subject has not previously seen in real life or in photographs) and strange dogs (dogs that the subject has not previously seen in real life or in photographs). Photographs were taken by experimenters and dog owners. The faces were cropped from their surroundings and placed on gray backgrounds (650 × 650 px, corresponding visual field of 15.6° × 15.6°).

Stimuli sets were designed individually for each subject so that a certain image represented a familiar face for one subject and a strange face for another subject. The strange faces were presented also in their inverted forms (upside-down rotation). Each stimulus set consisted of 18 different stimuli images (strange humans, N = 3; strange dogs, N = 3; familiar humans, N = 3; familiar dogs, N = 3; inverted humans, N = 3; inverted dogs, N = 3). The familiar and strange dog faces were paired for contrast, ear shape and hair length. Human face pictures were paired by contrast, gender, age and hair color to make the images quite similar but easily distinguishable to humans. Stimulus categories were defined in respect of species, familiarity and inversion as human or dog, strange or familiar and upright or inverted. Stimuli samples are shown in Fig. 1.

Fig. 1 Examples of the six stimulus categories presented in the study: a familiar human face, b strange human face, c inverted human face, d familiar dog face, e strange dog face and f inverted dog face. The eye movement data were analyzed for two areas of interest (AOIs: face and eye area), which are drawn in the images a and d. Original photographs by S. Somppi, H. Törnqvist and M. Hytönen

Experimental procedure

The experimental task was repeated on 2 days with a randomized stimulus order. The time delays between the test days were 3–12 days (average 4.5 days, SD 1.9).

At the beginning of the test, the dog was released from the leash to the test room to settle down at the pretrained position and the owner went behind the opaque barrier with the experimenters (for more details, see Somppi et al. 2012). The behavior of the dog was monitored via video camera. Prior to the experimental task, one to five warm-up trials were conducted. During warm-up trials, pictures that were not included in actual stimulus sets (toys, cars and wild animals) were shown and dogs were rewarded randomly after one to fifteen images. The experimental task started when the dog was calm and attentive, and the eye tracker was detecting the eyes properly.

During the experimental task, each stimulus image was repeated three to four times, giving a total of 60 presentations divided among six trials. In each trial, a series of 8–12 images was presented at the center of a monitor with a gray background using Presentation® software (Neurobehavioral Systems, San Francisco, USA). Each stimulus lasted 1,500 ms and was followed by a 500-ms blank gray screen. The length of the trial and the order of the stimuli were randomized in order to prevent anticipatory behavior. At the end of the trial, the image disappeared and the subject was rewarded, regardless of gazing behavior. If the dog left the position during the trial, it was not commanded to return. After the third trial, a 5-min break was taken. During the break, the dog waited in a separate room with the owner or caretaker.
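One way to generate a session with this structure is sketched below in Python. This is an illustration only, not the Presentation® script used in the study: the function name is hypothetical, and the rules for choosing which stimuli receive a fourth repetition and for drawing trial lengths are assumptions.

```python
import random

def build_session(stimuli, total=60, n_trials=6, min_len=8, max_len=12, rng=None):
    """Return a list of trials, each a list of stimulus labels.
    With 18 stimuli and 60 presentations, every stimulus is shown three
    times and six randomly chosen stimuli are shown a fourth time."""
    rng = rng or random.Random()
    stimuli = list(stimuli)
    base, extra = divmod(total, len(stimuli))  # 60 // 18 -> 3, remainder 6
    presentations = stimuli * base + rng.sample(stimuli, extra)
    rng.shuffle(presentations)  # randomized stimulus order

    # Draw random trial lengths until they add up to the session total
    # (assumed scheme; the source only states the 8-12 range).
    while True:
        lengths = [rng.randint(min_len, max_len) for _ in range(n_trials)]
        if sum(lengths) == total:
            break

    trials, start = [], 0
    for n in lengths:
        trials.append(presentations[start:start + n])
        start += n
    return trials
```

Because the trial lengths vary unpredictably between 8 and 12 images, the position of the reward at the end of a trial cannot be anticipated from the number of images seen.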

Data preparation

Eye movement data were obtained from 23 pet dogs and 8 kennel dogs for a total of 3,284 images, on average 106 images per dog (SD 11 images, ranging between 69 and 120). A total of 436 images were excluded from analysis because of missing data lasting over 750 ms during one stimulus presentation (interrupted eye tracking due to technical problems or a dog’s behavior).
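The exclusion rule can be expressed as a short Python sketch. The 4-ms sample interval (a 250 Hz sampling rate) and the interpretation of "missing data lasting over 750 ms" as the summed duration of missing samples within one presentation are assumptions; the source does not state how the missing time was counted.

```python
SAMPLE_MS = 4.0          # assumption: 250 Hz sampling, one sample every 4 ms
MAX_MISSING_MS = 750.0   # threshold stated in the text

def missing_ms(samples, sample_ms=SAMPLE_MS):
    """Total duration of missing gaze samples (None) in one presentation."""
    return sum(1 for s in samples if s is None) * sample_ms

def keep_presentation(samples, sample_ms=SAMPLE_MS, max_missing_ms=MAX_MISSING_MS):
    """True if the presentation is retained, False if it is excluded
    because missing data exceed the threshold."""
    return missing_ms(samples, sample_ms) <= max_missing_ms
```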

The raw binocular eye movement data were analyzed using BeGaze 2.4™ software (SensoMotoric Instruments GmbH, Berlin, Germany). The fixation of the gaze was scored with a low-speed event detection algorithm that calculates potential fixations with a moving window spanning consecutive data points. The fixation was coded if the minimum fixation duration was 75 ms and the maximum dispersion value D = 250 px (D = [max(x) − min(x)] + [max(y) − min(y)]). Otherwise, the recorded sample was defined as part of a saccade.
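The dispersion criterion can be illustrated with a classic dispersion-threshold (I-DT) procedure, sketched below in Python. This is a generic textbook variant and not the BeGaze implementation, whose internal details are not given in the source; the 4-ms sample interval and the function names are assumptions.

```python
import math

def dispersion(points):
    """D = [max(x) - min(x)] + [max(y) - min(y)], in pixels."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))

def detect_fixations(samples, sample_ms=4.0,
                     min_duration_ms=75.0, max_dispersion_px=250.0):
    """samples: list of (x, y) gaze positions in pixels at a fixed interval.
    Returns fixations as (start_index, end_index_exclusive, mean_x, mean_y).
    Samples not covered by any fixation are treated as saccade samples."""
    min_len = math.ceil(min_duration_ms / sample_ms)  # samples per 75 ms
    fixations = []
    i, n = 0, len(samples)
    while i + min_len <= n:
        j = i + min_len
        if dispersion(samples[i:j]) <= max_dispersion_px:
            # Grow the window while the dispersion stays under the limit.
            while j < n and dispersion(samples[i:j + 1]) <= max_dispersion_px:
                j += 1
            window = samples[i:j]
            mean_x = sum(p[0] for p in window) / len(window)
            mean_y = sum(p[1] for p in window) / len(window)
            fixations.append((i, j, mean_x, mean_y))
            i = j
        else:
            i += 1  # slide the window start forward by one sample
    return fixations
```

A relatively large dispersion limit such as 250 px makes the detection tolerant of noisy gaze samples, at the cost of merging fixations that lie close together.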

Statistical analyses

Each stimulus image was divided into two areas of interest (AOI): face and eye area. The face area was drawn in line with the facial contours (hair and ears excluded), and the eye area was defined as a rectangle whose contours were approximately at 1° distance from the pupils (Fig. 1). From the binocular raw data, number of fixations, total fixation duration and latency to first fixation were averaged per image for these two AOIs. In addition, relative fixation duration targeted to the eye area was calculated by dividing the fixation duration of the eye area by the fixation duration of the face area.
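The per-image measures can be sketched in Python as follows. For simplicity both AOIs are modeled as rectangles, whereas the face AOI in the study followed the facial contours; the class and function names, and the definition of latency as the onset of the first fixation inside the AOI relative to stimulus onset, are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Fixation:
    onset_ms: float      # time from stimulus onset
    duration_ms: float
    x: float             # pixel coordinates
    y: float

@dataclass
class Rect:
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        return self.left <= x <= self.right and self.top <= y <= self.bottom

def aoi_metrics(fixations, aoi):
    """Number of fixations, total fixation duration and latency to the
    first fixation for one AOI and one image presentation."""
    inside = [f for f in fixations if aoi.contains(f.x, f.y)]
    return {
        "count": len(inside),
        "total_duration_ms": sum(f.duration_ms for f in inside),
        "latency_ms": min((f.onset_ms for f in inside), default=None),
    }

def relative_eye_duration(fixations, face, eye):
    """Fixation duration in the eye area divided by that in the face area.
    Returns None when the face area received no fixations."""
    face_total = aoi_metrics(fixations, face)["total_duration_ms"]
    if face_total == 0:
        return None
    return aoi_metrics(fixations, eye)["total_duration_ms"] / face_total
```

Since the eye area lies inside the face area, the relative measure falls between 0 and 1 and expresses how much of the time spent on the face was spent on the eyes.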

The effects of inversion and familiarity were analyzed separately with linear mixed-effects models (MIXED), taking repeated sampling into account, with PASW Statistics 18.0 (IBM, New York, USA). Only the strange images were used for models analyzing the effect of inversion and only the upright orientation for analyzing the effect of familiarity. In the final model for studying the inversion, the fixed effects were group (pet or kennel dog), inversion (upright vs. inverted), species (dog vs. human) and an interaction between inversion and species. Other interactions were tested and excluded as being non-significant. In the final model for studying the familiarity, the fixed effects were group (pet or kennel dog), familiarity (familiar vs. strange) and species (dog vs. human). No interactions showed statistically significant effects in the preliminary analyses, so all were omitted from the final model. In both models, the test day, the order of the trial and the order of the image were used as repeated factors with the first-order autoregressive covariance structure. The age of the dog and the size of the AOI were included as covariates and the dog, the gender of the dog, and the calibration level (calibration accuracy 80, 90 or 100 %) as random effects.

The normality and homogeneity assumptions of the models were checked with a normal probability plot of residuals and a scatter plot of residuals against fitted values. Results are reported as estimated means with a standard error of the mean (SEM). The significance level was set at alpha = 0.05.

Results

Inversion analysis

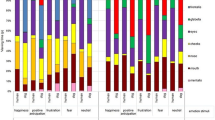

Figure 2 shows that the total fixation duration was longer and the relative fixation duration targeted at the eye area was greater for the upright (N = 865) than for the inverted (N = 886) condition (47.1 ± 4.8 % vs. 40.9 ± 4.8 %, P = 0.001). The eyes of the inverted stimuli were fixated faster than the eyes of upright stimuli (Fig. 2: Latency to fixate).

Fig. 2 The differences between upright and inverted conditions in the total fixation duration, number of fixations and latency to fixate (mean ± SEM, dog and human images pooled) averaged over 31 dogs and analyzed separately for face and eye area. Statistically significant differences between the conditions are represented by an asterisk (MIXED, *P < 0.05)

Overall, the number of fixations targeted at the eye area did not differ between upright and inverted conditions (Fig. 2). However, an interaction between species and inversion reached statistical significance in the face area (P = 0.02): Inverted human (N = 558) faces gathered more fixations than upright human (N = 553) faces (1.3 ± 0.2 vs. 1.1 ± 0.2, P = 0.03), but no differences were found between number of fixations on inverted dog (N = 550) and upright dog (N = 547) faces (1.2 ± 0.2 vs. 1.2 ± 0.2, P = 0.34). Examples of eye gaze patterns of the dogs are shown in Fig. 3.

Fig. 3 An example of gaze patterns during presentation of upright human and dog faces and their inverted versions. The focus map represents the averaged fixations of one dog over six repetitions of the image. The color coding represents the average fixation duration: minimum 5 ms indicated by light blue and maximum of 100 ms or over by bright red. Original photographs by S. Somppi and K. Helander

The relative fixation duration targeted at the eye area was greater for dog faces than for human faces. In addition, latency to fixate at the dog face and eyes was shorter than for the human face and eyes (Table 1). Pet dogs fixated longer at the face area and had shorter latencies to fixate at the face than kennel dogs (Table 2).

Familiarity analysis

Familiar eyes and faces gathered more fixations than strange eyes and faces (Fig. 4). No statistically significant differences between familiar (N = 836) and strange (N = 865) stimuli were found in relative duration targeted at the eye area (48.3 ± 2.8 % vs. 46.8 ± 2.8 %, P = 0.49) or in other variables (Fig. 4).

Fig. 4 The differences between familiar and strange conditions in the total fixation duration, number of fixations and latency to fixate (mean ± SEM, dog and human images pooled) averaged over 31 dogs and analyzed separately for face and eye area. Statistically significant differences between the conditions are represented by an asterisk (MIXED, *P < 0.05)

Dog eyes and faces gathered more fixations and longer total fixation durations compared with upright human eyes and faces (Table 3). Pet dogs fixated longer at the face area and had shorter latencies to fixate at the face and eye area than kennel dogs (Table 4).

Discussion

This study demonstrates for the first time how facial inversion and familiarity, two phenomena widely studied in primate face perception research, affect the scanning behavior of domestic dogs. Results revealed similarities with the gazing behavior of primates. In summary, dogs preferred conspecific faces, showed a great interest in the eye area of upright faces and fixated more at personally familiar faces than at strange ones.

Effects of inversion and familiarity

Dogs targeted nearly half of the relative fixation duration at the eye area of both upright dog and human faces, supporting the previous evidence showing that dogs are highly sensitive to eye gaze (Topál et al. 2009; Téglás et al. 2012). Dogs fixated for a longer duration at the eye region in the upright condition than in the inverted condition, despite the fact that the physical properties of these two orientations were identical. Such a salience for the eye region of upright faces has been interpreted as an indicator of a global face processing mechanism (Gothard et al. 2009; Dahl et al. 2009; Hirata et al. 2010). Results suggest that dogs actually perceived the images as representing faces.

Interestingly, dogs fixated at eyes sooner in the inverted condition than in the upright condition. For monkeys also, eyes remain salient facial features despite image manipulations (Keating and Keating 1993; Guo et al. 2003; Gothard et al. 2009). In live social situations, eye gaze has multiple functions for dogs depending on context: A fixed stare is interpreted as an expression of threat, while gaze alternation is used in cooperative and affiliative interactions (Vas et al. 2005; Topál et al. 2009). In the current experiment, dogs likely interpreted the upright stimuli at first as staring faces and hence delayed fixating at their eyes. This resembles dogs’ behavior in normal life when encountering unfamiliar people and dogs. Inverted stimuli may have been processed on a lower level prior to object classification, where visual salience predominates over emotional salience, so that the eyes gathered fixations because of their physical properties (e.g., contrast, shape) (Guo et al. 2003; Kano and Tomonaga 2011; Niu et al. 2012).

Dogs preferred conspecific to human faces, corresponding to our previous findings (Somppi et al. 2012) and recent behavioral evidence showing that dogs can discriminate their conspecifics using facial images (Autier-Dérian et al. 2013). Chimpanzees and macaques also attend more to the faces and eyes of conspecifics, suggesting expertise in the faces of their own species (Dahl et al. 2009; Hattori et al. 2010). Due to expertise, the inversion effect tends to particularly impair the perception of conspecific faces (Neiworth et al. 2007; Dahl et al. 2009; Parr 2011b; Pokorny et al. 2011). Despite the conspecific preference of dogs, inversion affected the gazing duration targeted at dog and human images similarly, which corresponds to the results of Racca et al. (2010).

However, we found a species-dependent effect in number of fixations: Dogs fixated more often at inverted human faces than at upright human faces, while inverted and upright dog faces gathered the same number of fixations. Dogs may have difficulties in extracting information from inverted human faces (Barton et al. 2006) because human faces are quite similarly shaped (oval) in both the normal and upside-down positions. Therefore, the encoding of inverted human faces may have required more visual processing to determine whether the stimulus was a face or not. In contrast, inverted dog faces, whose shape is less face-like (e.g., ears downward), may have been classified as non-faces and thus received less attention, like inanimate objects (Somppi et al. 2012). Alternatively, it is possible that as dogs share their lives with humans, they exhibit different strategies for processing faces of humans and conspecifics (Guo et al. 2009; Racca et al. 2010).

Regardless of the species presented in the image, dogs fixated more on the faces and eyes of personally familiar individuals than on the faces and eyes of strangers. Correspondingly, humans and macaques show greater interest in personally familiar faces and fixate repeatedly close to their eyes (van Belle et al. 2010; Leonard et al. 2012). The eye region seems to be an important feature in identity recognition for dogs, as it is for humans (Barton et al. 2006; Heisz and Shore 2008), chimpanzees, monkeys (Parr et al. 2000) and sheep (Kendrick et al. 1995). The scanning behavior of dogs supports previous behavioral findings, showing that dogs were able to distinguish dog and human individuals using solely facial cues (Racca et al. 2010) and responded to an owner’s picture without training (Adachi et al. 2007).

Spontaneous reactions toward images of personally familiar faces reflect the strategies animals use in live social situations (Kendrick et al. 1995; Coulon et al. 2011; Schell et al. 2011). Thus, familiar faces and eyes may attract more fixations due to their emotional salience: They are interpreted as being more trusted and interesting because of positive associations related to a personal relationship. On the other hand, exposure to certain objects may lead to expertise (Diamond and Carey 1986), which may provoke fixating. Thus, the response to familiarity may not be social, but a perceptual effect. It is also possible that dogs recognize familiarity at a general level without identifying individuals presented in pictures. Further studies, including the comparison between upright and inverted familiar faces and objects, are needed to clarify the level at which dogs individuate faces and determine whether the familiarity preference is caused by social or perceptual effects.

Several studies have reported that humans fixate familiar faces less than unfamiliar ones, indicating easier recognition of familiar faces (e.g., Althoff and Cohen 1999; Barton et al. 2006; Heisz and Shore 2008). However, in these studies, the tasks have usually required active memorizing and categorization of the faces. In addition, famous or familiarized faces have regularly been used instead of personally familiar faces, which may influence scanning strategies (Johnston and Edmonds 2009; van Belle et al. 2010). In contrast, the current experiment was based on passive viewing and both personally familiar and unfamiliar stimuli were repeated, so the response to the familiarity did not only arise from learning over the course of the experiment. However, dogs’ interest in two-dimensional pictures decreases during repetitions (Somppi et al. 2012) and they tend to attend more to the person with whom they have the strongest bond (Horn et al. 2013). Thus, responses to familiarity might have been stronger if fewer stimuli had been presented to each subject and only the pictures of principal caretakers and the closest dog associates had been used.

Overall, the effects of inversion and familiarity were only marginally dependent on the species presented in the image. It is possible that the face perception expertise of dogs is not restricted to their own species because of their shared life with humans. Alternatively, face scanning in dogs may be species-independent, or the experimental design may not have brought out the species dependence. A number of different experimental paradigms should be tested further (e.g., Maurer et al. 2002; Parr 2011a) to determine how dogs identify faces and whether they exhibit facial expertise and global face processing. Contact-free eye tracking combined with neurophysiological recording, for example non-invasive EEG (Törnqvist et al. 2013; Kujala et al. 2013), represents a promising tool for more detailed exploration of face perception of dogs.

Differences between pet and kennel dogs

The two dog populations tested in this study had very different living environments and life experiences. The pet dogs lived in an urban environment and were well familiarized with strange people and dogs. Environmental enrichment and opportunities for intra- and interspecific social interactions were much more limited for the kennel dogs. Nevertheless, differences between their gazing behaviors were quite marginal: Kennel dogs fixated for a shorter duration at the face area than the pet dogs, and their latencies to fixate at the face and eye areas were longer.

The different scanning behavior of kennel dogs may have several explanations. Firstly, breed could modulate the viewing behavior. Behavioral studies have shown that herding and working dogs, which have been bred for cooperation, may be more prone to look at faces (Wobber et al. 2009; Passalacqua et al. 2011). The kennel dogs were beagles, which are hunting dogs, whereas most pet dogs were of breeds from the herding or working groups.

Personality, life experiences and current emotional state could affect the tendency to gaze at faces (Jakovcevic et al. 2012; Barrera et al. 2011; Bethell et al. 2012) and the way of scanning facial images (Gothard et al. 2009; Gibboni et al. 2009; Kaspar and König 2012). The beagles in this study were shy toward unfamiliar people and fearful in new situations. Furthermore, unlike pet dogs living in families, they were not used to seeing dogs and humans on television. Pictorially naïve animals often confuse pictures and the objects they represent (confusion mode: Fagot et al. 1999; Bovet and Vauclair 2000; Coulon et al. 2011). Even though kennel dogs had seen warm-up images, they may have had a pronounced tendency to avoid directly gazing at faces because they regarded the face images as being real dogs and humans. Interestingly, although kennel dogs fixated at the eye region later, they looked at eyes as long as pet dogs, suggesting that eyes are also meaningful facial features for kennel dogs, even though they did not have as close a relationship with humans as pet dogs. Shy people show vigilance for social threat in the early stages of attention (Gamble and Rapee 2010) and scrutinize the eye region especially effectively (Brunet et al. 2009), and this may be the case in dogs too.

In spite of the differences in their overall looking behavior, kennel and pet dogs responded to inversion, familiarity and species conditions similarly. It should be noted that the number of kennel dogs was smaller than that of pet dogs, and all dogs were trained using the same method, which may have compensated for differences. In both dog groups, variation among subjects was large. Based on a limited sample size and uncontrolled developmental factors (e.g., familiarization with strange people and dogs in puppyhood), we are not able to determine whether differences between kennel dogs and pet dogs are genetic, breed-specific or a result of habitat.

Nonetheless, faces were generally more attractive for pet dogs than for kennel dogs, which may be due to different emotional salience. Overall, in a free-viewing task, dogs seem to target their fixations at naturally salient and familiar items (conspecifics, upright eyes, familiar faces), which supports our previous proposal that dogs’ gazing preferences reflect their natural interests (Somppi et al. 2012). As an exception to these results, the accumulation of fixations at inverted human faces was probably not a sign of attraction but stemmed from the requirements for more detailed visual processing because they were more demanding to encode compared with other stimuli.

In conclusion, the results underline the importance of the eyes for face perception in dogs and support the view that face scanning in dogs is guided not only by the physical properties of images, but also by semantic factors. Dogs are likely to recognize conspecific and human faces in photographs, and their face perception expertise may extend beyond their own species. Living environment affected gazing behavior at the general level, but not species preference or responses to inversion and familiarity, suggesting that the basic mechanisms of face processing in dogs could be hardwired or might develop under limited exposure.

References

Adachi I, Kuwahata H, Fujita K (2007) Dogs recall their owner’s face upon hearing the owner’s voice. Anim Cogn 10:17–21

Althoff RR, Cohen NJ (1999) Eye-movement-based memory effect: a reprocessing effect in face perception. J Exp Psychol Learn Mem Cogn 25:997–1010

Autier-Dérian D, Deputte BL, Chalvet-Monfray K, Coulon M, Mounier L (2013) Visual discrimination of species in dogs (Canis familiaris). Anim Cogn 16:637–651

Barrera G, Mustaca A, Bentosela M (2011) Communication between domestic dogs and humans: effects of shelter housing upon the gaze to the human. Anim Cogn 14:727–734

Barton J, Radcliffe N, Cherkasova MV, Edelman J, Intriligator JM (2006) Information processing during face recognition: the effects of familiarity, inversion, and morphing on scanning fixations. Perception 35:1089–1105

Bethell EJ, Holmes A, Maclarnon A, Semple S (2012) Evidence that emotion mediates social attention in rhesus macaques. PLoS ONE 7(8):e44387. doi:10.1371/journal.pone.0044387

Bovet D, Vauclair J (2000) Picture recognition in animals and humans. Behav Brain Res 109:143–165

Bruce C (1982) Face recognition by monkeys: absence of an inversion effect. Neuropsychologia 20:515–521

Bruce V, Young A (1986) Understanding face recognition. Br J Psychol 77:305–327

Brunet PM, Heisz JJ, Mondloch CJ, Shore DI, Schmidt LA (2009) Shyness and face scanning in children. J Anxiety Disord 23:909–914

Cotman CW, Head E (2008) The canine (dog) model of human aging and disease: dietary, environmental and immunotherapy approaches. J Alzheimers Dis 15:685–707

Coulon M, Deputte BL, Heyman Y, Baudoin C (2009) Individual recognition in domestic cattle (Bos taurus): evidence from 2D-images of heads from different breeds. PLoS ONE 4(2):e4441. doi:10.1371/journal.pone.0004441

Coulon M, Baudoin C, Heyman Y, Deputte BL (2011) Cattle discriminate between familiar and unfamiliar conspecifics by using only head visual cues. Anim Cogn 14:279–290

Dahl CD, Wallraven C, Bülthoff HH, Logothetis NK (2009) Humans and macaques employ similar face-processing strategies. Curr Biol 19:509–513

Diamond R, Carey S (1986) Why faces are and are not special: an effect of expertise. J Exp Psychol 115:107–117

Fagot J, Martin-Malivel J, Dépy D (1999) What is the evidence for an equivalence between objects and pictures in birds and nonhuman primates? Curr Psychol Cogn 18:923–949

Gamble AL, Rapee RM (2010) The time-course of attention to emotional faces in social phobia. J Behav Ther Exp Psychiatry 41(1):39–44

Gibboni RR, Zimmerman PE, Gothard KM (2009) Individual differences in scan paths correspond with serotonin transporter genotype and behavioral phenotype in rhesus monkeys (Macaca mulatta). Front Behav Neurosci 3(50):1–11. doi:10.3389/neuro.08.050.2009

Gothard KM, Brooks KN, Peterson MA (2009) Multiple perceptual strategies used by macaque monkeys for face recognition. Anim Cogn 12:155–167

Guo K, Robertson RG, Mahmoodi S, Tadmor Y, Young MP (2003) How do monkeys view faces?: a study of eye movements. Exp Brain Res 150:363–374

Guo K, Meints K, Hall C, Hall S, Mills D (2009) Left gaze bias in humans, rhesus monkeys and domestic dogs. Anim Cogn 12:409–418

Hattori Y, Kano F, Tomonaga M (2010) Differential sensitivity to conspecific and allospecific cues in chimpanzees and humans: a comparative eye-tracking study. Biol Lett 6:610–613

Heisz JJ, Shore DI (2008) More efficient scanning for familiar faces. J Vis 8(1):1–10. doi:10.1167/8.1.9

Henderson JM, Hollingworth A (1999) High-level scene perception. Annu Rev Psychol 50:243–271

Hirata S, Fuwa K, Sugama K, Kusunoki K, Fujita S (2010) Facial perception of conspecifics: chimpanzees (Pan troglodytes) preferentially attend to proper orientation and open eyes. Anim Cogn 13:679–688

Horn L, Range F, Huber L (2013) Dogs’ attention towards humans depends on their relationship, not only on social familiarity. Anim Cogn 16:435–443

Jakovcevic A, Elgier AM, Mustaca A, Bentosela M (2012) Do more sociable dogs gaze longer to the human face than less sociable ones? Behav Process 90:217–222

Johnston RA, Edmonds AJ (2009) Familiar and unfamiliar face recognition: a review. Memory 17:577–596

Kano F, Tomonaga M (2011) Perceptual mechanism underlying gaze guidance in chimpanzees and humans. Anim Cogn 14:377–386

Kaspar K, König P (2012) Emotions and personality traits as high-level factors in visual attention: a review. Front Hum Neurosci 6:321. doi:10.3389/fnhum.2012.00321

Keating CF, Keating EG (1993) Monkeys and mug shots: cues used by rhesus monkeys (Macaca mulatta) to recognize a human face. J Comp Psychol 107:131–139

Kendrick KM, Atkins K, Hinton MR, Broad KD, Fabre-Nys C, Keverne EB (1995) Facial and vocal discrimination in sheep. Anim Behav 49:1665–1676

Kendrick KM, Atkins K, Hinton MR, Heavens P, Keverne B (1996) Are faces special for sheep? Evidence from facial and object discrimination learning tests showing effects of inversion and social familiarity. Behav Process 38:19–35

Kujala MV, Törnqvist H, Somppi S, Hänninen L, Krause CM, Kujala J, Vainio O (2013) Reactivity of dogs’ brain oscillations to visual stimuli measured with non-invasive electroencephalography. PLoS ONE 8(5):e61818. doi:10.1371/journal.pone.0061818

Lakatos G (2011) Evolutionary approach to communication between humans and dogs. Ann Ist Super Sanità 47:373–377

Leonard TK, Blumenthal G, Gothard KM, Hoffman KL (2012) How macaques view familiarity and gaze in conspecific faces. Behav Neurosci. doi:10.1037/a0030348

Leopold DA, Rhodes G (2010) A comparative view of face perception. J Comp Psychol 124:233–251

Marechal L, Roeder JJ (2010) Recognition of faces of known individuals in two lemur species (Eulemur fulvus and E. macaco). Anim Behav 79:1157–1163

Maurer D, Le Grand R, Mondloch CJ (2002) The many faces of configural processing. Trends Cogn Sci 6:255–260

Neiworth JJ, Hassett JM, Sylvester CJ (2007) Face processing in humans and new world monkeys: the influence of experiential and ecological factors. Anim Cogn 10:125–134

Niu Y, Todd RM, Anderson AK (2012) Affective salience can reverse the effects of stimulus-driven salience on eye movements in complex scenes. Front Psychol 3:336. doi:10.3389/fpsyg.2012.00336

Parr LA (2011a) The evolution of face processing in primates. Philos Trans R Soc B 366:1764–1777

Parr LA (2011b) The inversion effect reveals species differences in face processing. Acta Psychol (Amst) 138:204–210

Parr LA, Dove T, Hopkins WD (1998) Why faces may be special: evidence of the inversion effect in chimpanzees. J Cogn Neurosci 10:615–622

Parr LA, Winslow JT, Hopkins WD, de Waal FBM (2000) Recognizing facial cues: individual discrimination by chimpanzees (Pan troglodytes) and rhesus monkeys (Macaca mulatta). J Comp Psychol 114:47–60

Parr LA, Siebert E, Taubert J (2011) Effect of familiarity and viewpoint on face recognition in chimpanzees. Perception 40:863–872

Passalacqua C, Marshall-Pescini S, Barnard S, Lakatos G, Valsecchi P, Previde EP (2011) Human-directed gazing behaviour in puppies and adult dogs, Canis lupus familiaris. Anim Behav 82:1043–1050

Phelps MT, Roberts WA (1994) Memory for pictures of upright and inverted primate faces in humans (Homo sapiens), squirrel monkeys (Saimiri sciureus), and pigeons (Columba livia). J Comp Psychol 108:114–125

Pokorny JJ, de Waal FBM (2009) Monkeys recognize the faces of group mates in photographs. Proc Natl Acad Sci USA 106:21539–21543

Pokorny JJ, Webb CE, de Waal FB (2011) An inversion effect modified by expertise in capuchin monkeys. Anim Cogn 14:839–846

Racca A, Amadei E, Ligout S, Guo K, Meints K, Mills D (2010) Discrimination of human and dog faces and inversion responses in domestic dogs (Canis familiaris). Anim Cogn 13:525–533

Rossion B (2008) Picture-plane inversion leads to qualitative changes of face perception. Acta Psychol (Amst) 128:274–289

Schell A, Rieck K, Schell K, Hammerschmidt K, Fischer J (2011) Adult but not juvenile Barbary macaques spontaneously recognize group members from pictures. Anim Cogn 14:503–509

Somppi S, Törnqvist H, Hänninen L, Krause C, Vainio O (2012) Dogs do look at images: eye tracking in canine cognition research. Anim Cogn 15:163–174

Stone SM (2010) Human facial discrimination in horses: can they tell us apart? Anim Cogn 13:51–61

Tanaka JW, Farah MJ (1993) Parts and wholes in face recognition. Q J Exp Psychol A 46:225–245

Tate AJ, Fischer H, Leigh AE, Kendrick KM (2006) Behavioural and neurophysiological evidence for face identity and face emotion processing in animals. Philos Trans R Soc Lond B Biol Sci 361:2155–2172

Téglás E, Gergely A, Kupán K, Miklósi Á, Topál J (2012) Dogs’ gaze following is tuned to human communicative signals. Curr Biol 22:209–212

Topál J, Miklósi Á, Gácsi M, Dóka A, Pongrácz P, Kubinyi E, Virányi Z, Csányi V (2009) The dog as a model for understanding human social behavior. In: Brockmann HJ, Roper TJ, Naguib M, Wynne-Edwards KE, Mitani JC, Simmons LW (eds) Advances in the study of behavior, vol 39. Academic Press, Burlington, pp 71–116

Törnqvist H, Kujala MV, Somppi S, Hänninen L, Pastell M, Krause CM, Kujala J, Vainio O (2013) Visual event-related potentials of dogs: a non-invasive electroencephalography study. Anim Cogn. doi:10.1007/s10071-013-0630-2

Udell MAR, Dorey NR, Wynne CDL (2010a) The performance of stray dogs (Canis familiaris) living in a shelter on human-guided object-choice tasks. Anim Behav 79:717–725

Udell MA, Dorey NR, Wynne CD (2010b) What did domestication do to dogs? A new account of dogs’ sensitivity to human actions. Biol Rev 85:327–345

van Belle G, Ramon M, Lefèvre P, Rossion B (2010) Fixation patterns during recognition of personally familiar and unfamiliar faces. Front Psychol 1:20. doi:10.3389/fpsyg.2010.00020

Vas J, Topál J, Gácsi M, Miklósi Á, Csányi V (2005) A friend or an enemy? Dogs’ reaction to an unfamiliar person showing behavioural cues of threat and friendliness at different times. Appl Anim Behav Sci 94:99–115

Wobber V, Hare B, Koler-Matznick J, Wrangham R, Tomasello M (2009) Breed differences in domestic dogs’ (Canis familiaris) comprehension of human communicative signals. Interact Stud 10:206–224

Yarbus AL (1967) Eye movements and vision. Plenum Press, New York

Yin RK (1969) Looking at upside-down faces. J Exp Psychol 81:141–145

Acknowledgments

This study was financially supported by the Academy of Finland (project #137931 to O. Vainio and projects #129346 and #137511 to C.M. Krause) and the Foundation of Emil Aaltonen. Special thanks to all pet dog owners for their dedication to the training of the dogs and for providing photographs. We also thank Reeta Törne for assisting in the experiments and data preparation; Timo Murtonen for the custom-built calibration system and chin rests; Katja Irvankoski, Matti Pastell, Antti Flyck and Kristian Törnqvist for technical support; and Mari Palviainen for help in kennel dog training. The authors declare that they have no conflict of interest.

Cite this article

Somppi, S., Törnqvist, H., Hänninen, L. et al. How dogs scan familiar and inverted faces: an eye movement study. Anim Cogn 17, 793–803 (2014). https://doi.org/10.1007/s10071-013-0713-0

DOI: https://doi.org/10.1007/s10071-013-0713-0