Abstract

Although domestic dogs can respond to many facial cues displayed by other dogs and humans, it remains unclear whether they can differentiate individual dogs or humans based on facial cues alone and, if so, whether they would demonstrate the face inversion effect, a behavioural hallmark commonly used in primates to differentiate face processing from object processing. In this study, we first established the applicability of the visual paired comparison (VPC or preferential looking) procedure for dogs using a simple object discrimination task with 2D pictures. The animals demonstrated a clear looking preference for novel objects when simultaneously presented with prior-exposed familiar objects. We then adopted this VPC procedure to assess their face discrimination and inversion responses. Dogs showed a deviation from random behaviour, indicating discrimination capability when inspecting upright dog faces, human faces and object images; but the pattern of viewing preference was dependent upon image category. They directed longer viewing time at novel (vs. familiar) human faces and objects, but not at dog faces, instead, a longer viewing time at familiar (vs. novel) dog faces was observed. No significant looking preference was detected for inverted images regardless of image category. Our results indicate that domestic dogs can use facial cues alone to differentiate individual dogs and humans and that they exhibit a non-specific inversion response. In addition, the discrimination response by dogs of human and dog faces appears to differ with the type of face involved.

Introduction

Faces convey visual information about an individual’s gender, age, familiarity, intention and mental state, and so it is not surprising that the ability to recognise these cues and to respond accordingly plays an important role in social communication, at least in humans (Bruce and Young 1998). Numerous studies have demonstrated our superior efficiency in differentiating and recognising faces compared with non-face objects and have suggested a face-specific cognitive and neural mechanism involved in face processing (e.g. Farah et al. 1998; McKone et al. 2006; see also Tarr and Cheng 2003). For instance, neuropsychological studies have reported selective impairments of face and object recognition in neurological patients (prosopagnosia and visual agnosia) (Farah 1996; Moscovitch 1997), and brain imaging studies have revealed distinct neuroanatomical regions in the cerebral cortex, such as the fusiform gyrus, associated with face processing (McCarthy et al. 1997; Tsao et al. 2006). Likewise, behavioural/perceptual studies show that inversion (presentation of a stimulus upside-down) results in a larger decrease in recognition performance for faces than for other mono-oriented objects (e.g. Yin 1969; Valentine 1988; Rossion and Gauthier 2002). Although the precise cause of this so-called face inversion effect is still a source of debate (qualitative vs. quantitative difference between the processing of upright and inverted faces; e.g. Sekuler et al. 2004; Rossion 2008, 2009; Riesenhuber and Wolff 2009; Yovel 2009), it is generally associated with a more holistic processing for faces [both the shape of the local features (i.e. eyes, nose, mouth) and their spatial arrangement are integrated into a single representation of the face] than for other objects. The face inversion effect is therefore considered a hallmark for differentiating face from object processing.

The capacity for differentiating individuals based on facial cues is not restricted to humans. Using match-to-sample or visual paired comparison tasks, previous studies have found that non-human primates (e.g. chimpanzees [Pan troglodytes]: Parr et al. 1998, 2000, 2006; and monkeys [Macaca mulatta, Macaca tonkeana, Cebus apella]: Pascalis and Bachevalier 1998; Parr et al. 2000, 2008; Gothard et al. 2004, 2009; Dufour et al. 2006; Parr and Heintz 2008), other mammals (e.g. sheep [Ovis aries]: Kendrick et al. 1996; heifers [Bos taurus]: Coulon et al. 2009), birds (e.g. budgerigars [Melopsittacus undulatus]: Brown and Dooling 1992) and even insects (e.g. paper wasps [Polistes fuscatus]: Tibbetts 2002) could discriminate the faces of their own species (conspecifics), based on visual cues. Although it is not clear whether face processing in non-human animals shares a neural mechanism similar to that in humans, some behavioural studies have noticed a face inversion effect, at least towards conspecific faces, in chimpanzees (e.g. Parr et al. 1998), monkeys (e.g. Parr et al. 2008; Parr and Heintz 2008; Neiworth et al. 2007; see also Parr et al. 1999) and sheep (Kendrick et al. 1996), suggesting that a similar holistic process may be used for face perception by these species.

Many studies have suggested that the development of a face-specific cognitive process relies heavily on the animal’s extensive experience with certain types of faces. For instance, human adults have difficulty recognising faces from a different ethnic group and demonstrate weaker holistic processing towards these faces (O’Toole et al. 1994; Tanaka et al. 2004). This so-called other-race effect can decrease and even reverse with experience of another ethnic face type (e.g. Elliott et al. 1973; Brigham et al. 1982; Sangrigoli et al. 2005). Furthermore, humans and some non-human primates show discrimination abilities and/or an inversion effect towards faces of other species, provided that they have been frequently exposed to them (generally tested with other-primate species) (Parr et al. 1998, 1999; Martin-Malivel and Fagot 2001; Pascalis et al. 2005; Martin-Malivel and Okada 2007; Neiworth et al. 2007; Parr and Heintz 2008; Sugita 2008). Finally, human performance in a simple human-face identification task is known to depend primarily on the amount of preceding practice (Hussain et al. 2009). Taken together, these findings suggest that exposure is an important determinant of holistic face processing.

Given their long history of domestication (estimated at 12,000–100,000 years ago, Davis and Valla 1978; Vila et al. 1997) and intensive daily interaction with humans, pet domestic dogs could be a unique animal model for the comparative study of face processing. Despite their extraordinary capacity for discriminating olfactory cues (e.g. Schoon 1997; Furton and Myers 2001), domestic dogs also process visual inputs efficiently. Although they could have less binocular overlap, less range of accommodation and colour sensitivity, and lower visual acuity (20/50 to 20/100 with the Snellen chart) compared with humans, they in general have a larger visual field and higher sensitivity to motion signals (for a review, see Miller and Murphy 1995). Growing evidence has revealed that they can rely on facial cues for social communication. They can display a range of facial expressions, and these are believed to be important in intraspecific communication (e.g. Feddersen-Petersen 2005). They also attend to and use human facial cues. For instance, they attend to human faces to assess their attentional state (Call et al. 2003; Gácsi et al. 2004; Virányi et al. 2004) or in problem-solving situations (Topál et al. 1997; Miklósi et al. 2003). They are particularly efficient at reading and understanding some human directional communicative cues, such as following human eye/head direction to find hidden food (e.g. Miklósi et al. 1998; Soproni et al. 2001), and even exceed the ability of some non-human primates in such tasks (e.g. Povinelli et al. 1999; Soproni et al. 2001; Hare et al. 2002). In a recent study, Marinelli and colleagues (2009) observed the apparent attention of dogs while looking at their owner and a stranger entering and leaving a room. They showed that the dogs’ attention towards their owner decreased if both the owner and the stranger were wearing hoods covering their heads. This could suggest that dogs use the face as a cue to recognise their owners. 
Moreover, another study suggests dogs may even have an internal representation of their owner’s face and can correlate visual inputs (i.e. owner’s face) with auditory inputs (i.e. owner’s voice) (Adachi et al. 2007). Finally, our recent behavioural study (Guo et al. 2009) revealed that when exploring faces of different species, domestic dogs demonstrated a human-like left gaze bias (i.e. the right side of the viewer’s face is inspected first and for longer periods) towards human faces but not towards monkey or dog faces, suggesting that they may use a human-like gaze strategy for the processing of human facial information but not conspecifics.

In this study, we examined whether domestic dogs (Canis familiaris) could discriminate faces based on visual cues alone, whether they demonstrate a face inversion effect and to what extent these behavioural responses were influenced by the species viewed (i.e. human faces vs. dog faces), given their high level of natural exposure to both species.

Experiment 1: Object discrimination in domestic dogs measured by a visual paired comparison task

Compared with other methodologies such as the match-to-sample task, the visual paired comparison (VPC or preferential looking) task does not involve intensive training, is rapid to perform and is naturalistic. Consequently, it is commonly used in the study of visual discrimination performance in human infants (e.g. Fantz 1964; Fagan 1973; Pascalis et al. 2002) and non-human primates (e.g. Pascalis and Bachevalier 1998; Gothard et al. 2004, 2009; Dufour et al. 2006). It is based on behavioural changes stemming from biases in attention towards novelty. In this task, a single stimulus is presented to the participant in a first presentation phase (familiarisation phase), followed by the simultaneous presentation of the same stimulus and a novel stimulus in the second presentation phase (test phase). It is assumed that if the individual can discriminate between the familiar and the novel stimulus, there will be increased attention towards the novel stimulus, evident as a longer viewing time.

To our knowledge, the VPC task has not been applied in the controlled testing of the perceptual ability of domestic dogs. Therefore, in the first experiment, we employed an object discrimination task to establish whether the domestic dog could fulfil the necessary criteria for using the VPC task in such studies.

Methods

Animals

Seven adult domestic pet dogs (Canis familiaris, 5.6 ± 2.8 (mean ± SD) years old; 1 miniature Dachshund, 2 Lurchers and 4 cross-breeds; 2 males and 5 females) were recruited from university staff and students for this experiment. The study was carried out at the University of Lincoln (UK) from May to June 2008.

Visual stimuli

Eighteen grey scale digitized common object pictures (subtending a visual angle of 34° × 43°) were used in this experiment. The pictures were taken using a Nikon D70 digital camera and further processed in Adobe Photoshop. Specifically, a single object was cropped from the original picture, resized (to ensure a similar height between objects) and overlaid on a homogeneous white background to create the object image used in the study. The object pictures were then paired according to the similarity of their general shape, and each trial contained two different images of the same object (first picture and familiar picture) and one image of a different object (novel picture) (see Fig. 1 for an example). All visual stimuli were back-projected on the centre of a ‘dark’ projection screen using customised presentation software (Meints and Woodford 2008).

To reduce the chance of discriminating objects using a low-level cognitive process, such as detecting differences in contrast or brightness, two precautions were taken: (1) for each trial, the first and familiar images were two different images of the same object with a slight difference in the perspective to avoid repetition of the contrast and brightness distribution in the pictures; (2) the contrast and brightness of the three pictures forming each trial were visually adjusted to appear as similar as possible. Therefore, the dogs could not rely on the immediate change of contrast or brightness to differentiate the familiar and novel stimulus presented simultaneously in the test phase.

Experimental protocol

During the experiment, the dog was familiarised with a quiet, dimly lit test room and then sat about 60 cm in front of the projection screen. A researcher stood behind the dog and placed her hands on the shoulders or under the head of the dog, but did not interfere with it during the image presentation or force it to watch the screen. Small dogs sat on the researcher’s lap. A CCTV camera (SONY SSC-M388CE, resolution: 380 horizontal lines) placed in front of the dog was used to monitor and record the dog’s eye and head movements. Once the dog’s attention had been attracted towards the screen using a sound stimulus behind it (e.g. a call to the dog, tap on the screen), the trial was started with a small yellow fixation point (FP) presented in the centre of the screen at the dog’s eye level (also the centre of the projected stimulus). The diameter of the FP was changed dynamically by expanding and contracting (ranging between 2.8° and 6.6°) to attract and maintain the dog’s attention. The dog’s head and eye positions were monitored on-line by a second researcher, in an annexe room, through CCTV. Once the dog’s gaze was oriented towards the FP, a visual stimulus was presented. During the presentation, the dog passively viewed the images. No reinforcement was given during this procedure, nor were the dogs trained on any other task with these stimuli.

In total, 6 trials were tested in a random order for each dog, and 3 pretest trials were used to familiarise the dog with the general procedure. A typical trial consisted of two presentations (or phases). In the familiarisation phase, a single first picture was presented at the centre of the screen for 5 s; in the test phase, the familiar and novel pictures were presented side-by-side, also for 5 s, with a 35° spatial gap between them (distance between the inner edges of the two simultaneously presented pictures). The side location (left or right) of the novel picture was randomised and counterbalanced. The time between the familiarisation phase and the test phase (inter-phase interval) varied between 1 and 4 s, depending on the time needed to re-attract the attention of the dog towards the FP. A trial was aborted if the dog spent less than 1 s exploring the first picture during the familiarisation phase or if the researcher failed to re-attract the dog’s attention towards the FP within a maximum of 4 s during the inter-phase interval. The dogs were allowed short breaks when needed and were given treats during the breaks. All of the dogs tested successfully completed at least 67% of the trials (81% ± 11). Two dogs needed an extra session to retest missed trials to reach this criterion.

The dog’s eye and head movements were recorded and then digitised with a sampling frequency of 60 Hz. The recording was replayed off-line frame by frame for accurate analysis by one researcher, and the direction of the dog’s gaze towards the screen was manually classified as ‘left’, ‘right’, ‘central’ or ‘out’ (see Fig. 2 for an example). The coding of each trial started with a ‘central’ gaze (direct gaze towards the central FP), which was used as a reference position for the entire trial. The gaze direction was then coded as ‘left’ or ‘right’ once the dog’s eye deviated from this reference position, assessed by a change of pupil position. Movements of the head and/or eyebrows were also used to facilitate the coding. Observers were trained to establish whether a subject was looking ‘out’. This involved repeatedly presenting them with video sequences in which a human subject oscillated her gaze between the outer edge of the image and beyond. ‘Out’ was always chosen when in doubt.

The researcher was blind to the side location of the pictures on the screen during the test phase for each trial when performing off-line data analysis.
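The frame-by-frame coding described above can be turned into viewing times by counting frames per gaze category and dividing by the sampling frequency. The following is a minimal sketch of that conversion, not the authors' analysis code; the function name `viewing_times` and the example frame sequence are hypothetical, and only the 60 Hz rate and the four gaze labels come from the text.

```python
from collections import Counter

FRAME_RATE_HZ = 60  # sampling frequency reported in the text
GAZE_LABELS = ("left", "right", "central", "out")  # coding categories from the text


def viewing_times(frame_labels, frame_rate_hz=FRAME_RATE_HZ):
    """Return the seconds spent in each gaze category for one coded trial."""
    unknown = set(frame_labels) - set(GAZE_LABELS)
    if unknown:
        raise ValueError(f"unexpected gaze labels: {sorted(unknown)}")
    counts = Counter(frame_labels)
    # One coded frame corresponds to 1 / frame_rate_hz seconds.
    return {label: counts[label] / frame_rate_hz for label in GAZE_LABELS}


# Hypothetical 5 s test phase (300 frames); these are not data from the study.
frames = ["central"] * 30 + ["left"] * 120 + ["right"] * 90 + ["out"] * 60
print(viewing_times(frames))
```

Because the coder only records ‘left’ and ‘right’ at this stage, the mapping to ‘novel’ and ‘familiar’ can be deferred until the data are unblinded.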

Data analysis and statistics

For each trial, the viewing time of gaze direction classified as ‘left’, ‘right’, ‘central’ and ‘out’ was calculated separately. As the amount of time spent looking at the pictures varied widely between subjects, we normalised the data by expressing ‘left’ and ‘right’ viewing time as a proportion of the cumulative viewing time allocated within the screen (i.e. right + left + central). The data were then unblinded so that the proportion of ‘left’ and ‘right’ viewing time could be assigned to the familiar and novel pictures according to their position, and was averaged across trials for each dog. A two-tailed paired t-test was used to compare viewing time between the two pictures for all the tested dogs.
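The normalisation step can be sketched as follows, under the assumption that each trial is summarised as seconds per gaze category plus the (unblinded) side of the novel picture. The function names and the two example trials are hypothetical; the denominator (left + right + central, excluding ‘out’) follows the description above.

```python
def looking_proportions(times, novel_side):
    """Proportion of on-screen viewing time on the novel and familiar pictures.

    times: seconds per gaze category ('left', 'right', 'central', 'out').
    novel_side: 'left' or 'right', known only after unblinding.
    """
    if novel_side not in ("left", "right"):
        raise ValueError("novel_side must be 'left' or 'right'")
    # 'out' time is excluded from the denominator, as described in the text.
    on_screen = times["left"] + times["right"] + times["central"]
    if on_screen == 0:
        return None  # no on-screen looking, so no proportion is defined
    familiar_side = "right" if novel_side == "left" else "left"
    return {
        "novel": times[novel_side] / on_screen,
        "familiar": times[familiar_side] / on_screen,
    }


def mean_proportions(trials):
    """Average per-trial proportions for one dog, skipping undefined trials."""
    valid = [p for p in (looking_proportions(t, s) for t, s in trials) if p is not None]
    if not valid:
        raise ValueError("no trial with on-screen viewing time")
    return {k: sum(p[k] for p in valid) / len(valid) for k in ("novel", "familiar")}


# Two hypothetical trials for one dog; these are not data from the study.
example_trials = [
    ({"left": 2.0, "right": 1.5, "central": 0.5, "out": 1.0}, "left"),
    ({"left": 1.0, "right": 2.0, "central": 1.0, "out": 1.0}, "right"),
]
print(mean_proportions(example_trials))
```

Note that the ‘novel’ and ‘familiar’ proportions need not sum to 1, because ‘central’ viewing time remains in the denominator.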

Results and discussion

Within a 5 s presentation time, the dogs spent on average 4.0 s ± 0.6 looking at the first picture in the familiarisation phase, and 4.4 s ± 0.48 looking at the familiar and novel pictures in the test phase. The two-tailed paired t-test showed that the novel picture attracted a significantly longer viewing time than the familiar picture (41.1% ± 11.2 vs. 26.8% ± 7.2, t(6) = 4.83, P = 0.003), suggesting that the dogs demonstrated a clear preference for novelty and could differentiate two objects presented simultaneously in the test phase. The VPC task, therefore, can be used for investigating face discrimination and inversion performance in domestic dogs. We should, however, acknowledge that the researcher standing behind the dog during the study was not blind to the stimuli presented. As subtle unconscious cues may have been transmitted to the dogs by the experimenter, this potential factor was eliminated in our second experiment.
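For readers less familiar with the test, the paired t statistic is the mean of the within-dog differences divided by its standard error, with n − 1 degrees of freedom (6 for the seven dogs tested here). The sketch below computes only the statistic and the degrees of freedom using the Python standard library; the per-dog proportions are not reported in the text, so the example values are hypothetical, and the P value would still have to be read from a t distribution.

```python
import math
import statistics


def paired_t(first, second):
    """Return (t, df) for a paired t-test on two equal-length samples."""
    if len(first) != len(second):
        raise ValueError("samples must be paired (equal length)")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two pairs are required")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical novel vs. familiar scores for three subjects, not study data.
t_value, df = paired_t([3, 5, 7], [1, 2, 3])
print(round(t_value, 3), df)
```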

Experiment 2: face discrimination and inversion performance in the viewing of human and dog faces

In the second experiment, we employed VPC tasks to examine (1) whether domestic dogs could discriminate individual faces based on visual cues alone; (2) whether they show a face inversion effect as seen in human and non-human primates; and (3) to what extent their face discrimination and inversion performance were influenced by the species of viewed faces (i.e. human faces vs. dog faces).

Methods

Twenty-six adult domestic pet dogs were recruited from university staff and students for this experiment, with 15 of them successfully completing the experiment. Failure to complete was mainly due to a lack of attention, restlessness or distress. One of the fifteen dogs produced scores more than 2.5 standard deviations from the mean and was therefore excluded from the data analysis as an outlier. The final sample contained fourteen dogs (4.3 ± 3.2 (mean ± SD) years old; 1 Alaskan Malamute, 1 miniature Dachshund, 2 Jack Russells, 2 Labradors, 3 Lurchers and 5 cross-breeds; 6 males and 8 females). Four of them had also participated in the first experiment. All dogs were well socialised to humans and other dogs. The study took place at the University of Lincoln (UK) from October to December 2008.
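An exclusion rule of this kind can be sketched as below. This is an illustration only: the text does not say which score was screened, whether the mean and SD included the candidate dog, or whether the criterion was one-sided, so the sketch assumes a two-sided rule with the sample mean and sample SD computed over all dogs. The function name and example values are hypothetical.

```python
import statistics


def flag_outliers(scores, threshold_sd=2.5):
    """Return indices of scores lying more than threshold_sd SDs from the mean.

    Assumption: mean and sample SD are computed over all scores, including
    any potential outlier, and deviations in both directions count.
    """
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    if sd == 0:
        return []  # identical scores, nothing to flag
    return [i for i, s in enumerate(scores) if abs(s - mean) > threshold_sd * sd]


# Hypothetical scores for eleven subjects; these are not data from the study.
print(flag_outliers([10] * 10 + [100]))
```

With small samples, a single extreme value inflates the SD it is judged against, so such a rule is conservative.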

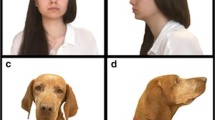

A total of 72 grey scale digitized unfamiliar human face, unfamiliar dog face and common object images (24 images per category; 36 cm × 45 cm) were used in this experiment (see Fig. 3 for examples). The human faces were photographs of Caucasian students at the University of Lincoln (aged between 19 and 26; 8 women and 8 men) who did not have any distinctive facial marks, facial jewellery or make-up. The faces of adult dogs (aged between 2 and 7; 8 males and 8 females) were obtained from pedigree dog breeders (Poodle, miniature Dachshund, Spaniel and Border Terrier). All face images were judged to have neutral facial expressions with a straight gaze. The common object images were pictures of items generally seen upright: table, lamp, chair and car.

Eight trials were used for each image category to test discrimination performance (24 trials in total for each dog). Four of them were upright trials, in which all the pictures were presented in an upright orientation. The other four were inverted trials, in which the first picture was presented upright during the familiarisation phase but the familiar and novel pictures were presented upside-down (180° rotation) during the test phase. For a given trial, the stimuli used as familiar or novel items were randomly determined. The human faces were paired by gender and age, the dog faces were paired by gender, age and breed, and the object pictures were paired by category type. The gender of human faces, the breed of dog faces and the type of objects were balanced between upright and inverted trials. Each pair of human and dog faces was also assessed as more similar or more different based on hair/fur colour and facial marking, and this was then balanced between upright and inverted trials. Furthermore, all the pictures presented within a given trial were digitally processed in the same way as described in Experiment 1 to control for some low-level image properties (i.e. background colour, size, contrast and brightness of the stimuli); the overall brightness (stimulus + background) of the first picture presented in the familiarisation phase was also set to the mean brightness of the novel and familiar pictures presented in the test phase. The dogs, therefore, had to rely on differences in the face/object contained in the picture, rather than differences in overall picture brightness, to differentiate familiar and novel pictures.
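The resulting design (3 image categories × 2 test orientations × 4 trials = 24 trials per dog) can be laid out as in the sketch below. It is not the presentation software used in the study. Two details are assumptions: that the side of the novel picture was counterbalanced within each category-by-orientation cell, and that trial order was shuffled across the whole session; the text states only that side location was randomised and counterbalanced and that the procedure was identical to Experiment 1. All names are hypothetical.

```python
import random
from collections import Counter

CATEGORIES = ("dog face", "human face", "object")
ORIENTATIONS = ("upright", "inverted")  # orientation of the test-phase pictures
TRIALS_PER_CELL = 4  # four upright and four inverted trials per category


def build_schedule(seed=None):
    """Return a shuffled list of 24 trial descriptions for one dog."""
    rng = random.Random(seed)
    trials = []
    for category in CATEGORIES:
        for orientation in ORIENTATIONS:
            # Assumed counterbalancing: two 'left' and two 'right' per cell.
            sides = ["left", "right"] * (TRIALS_PER_CELL // 2)
            rng.shuffle(sides)
            for side in sides:
                trials.append({
                    "category": category,
                    "test_orientation": orientation,
                    "novel_side": side,
                })
    rng.shuffle(trials)  # assumed: random order across the session
    return trials


schedule = build_schedule(seed=1)
print(len(schedule))
print(Counter(t["novel_side"] for t in schedule))
```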

The experimental procedure and data analysis were identical to those described in Experiment 1. An additional precaution was, however, used here: the researcher behind the dog was instructed not to look at the pictures, keeping her head down during the trial to avoid any potential influence on the dog’s viewing behaviour. The 15 dogs tested successfully completed at least 75% of the trials (92% ± 5), and needed extra sessions to retest missed trials to reach this criterion (the dogs did not miss more trials with regard to one stimulus category than another, ANOVA, P > 0.05). Two researchers coded the direction of the dog’s gaze in the same way as in Experiment 1, without prior knowledge of the side location of the familiar and novel pictures presented. Inter-rater reliability, assessed after the two researchers had coded the data independently, yielded a correlation of 0.94.
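An agreement measure of this kind can be computed as a correlation between the two coders' scores for the same trials. The text does not specify which coefficient or which per-trial measure was used, so the sketch below assumes a Pearson correlation over paired values; the function name and the example numbers are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-trial viewing times (s) from two coders, not study data.
coder_a = [1.0, 2.0, 3.0]
coder_b = [2.0, 4.0, 6.0]
print(pearson_r(coder_a, coder_b))
```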

Data analysis and statistics

As in Experiment 1, the cumulative viewing time directed at the ‘left’, ‘right’, ‘central’ and ‘out’ of the screen was calculated separately for each trial. We then normalised the data by expressing ‘left’ and ‘right’ viewing time as a proportion of the cumulative viewing time allocated within the screen. The proportion of ‘left’ and ‘right’ viewing time was then referenced to the viewing time directed at the familiar and novel pictures and averaged between trials and across image categories for each dog. Data were checked for normality using a Kolmogorov–Smirnov test (P > 0.05). Repeated-measures analyses of variance were therefore conducted on the proportion of viewing time at the stimuli, with the following factors: Stimulus Type (dog face vs. human face vs. object), Orientation (upright vs. inverted) and Image novelty (novel vs. familiar assessed by gaze direction). We then used planned comparisons, run within the ANOVA, to determine whether there was a significant attraction towards the novel stimulus for each type of stimulus and each orientation.

Results and discussion

During the familiarisation phase, the dogs spent on average 4.1 s ± 0.7, 4.1 s ± 0.8 and 4.2 s ± 0.7 viewing dog faces, human faces and object pictures. During the test phase, they spent 4.3 s ± 0.78, 4.2 s ± 0.8 and 4.3 s ± 0.6 looking at the familiar and novel images of dog faces, human faces and objects. We did not observe a significant difference in viewing time across image categories or presented orientations (ANOVA, P > 0.05). The averaged cumulative viewing time, in seconds, directed at the novel picture (looking ‘left’ or ‘right’ depending on the side location of the stimuli), ‘familiar’ picture (looking ‘right’ or ‘left’), ‘central’ and ‘out’ of the screen are presented in Table 1.

Our ANOVA conducted on the proportion of viewing time allocated to the stimuli revealed no significant effect for Image novelty (F(1,13) = 3.84; P = 0.0717) but a significant interaction between Stimulus Type and Image novelty (F(2,26) = 5.98; P = 0.0073). Planned comparisons show that during the test phase with the upright images, the novel object and novel human face picture attracted a significantly longer viewing time than the familiar object and familiar human face (object: F(1) = 8.15, P = 0.0135; human face: F(1) = 7.09, P = 0.0195) and that the familiar dog face attracted a significantly longer viewing time than the novel dog face (F(1) = 5.43, P = 0.037) (Fig. 4a). For inverted stimuli, the novel and familiar pictures in the test phase resulted in no significant difference in the viewing time for each image category (object: F(1) = 1.08, P = 0.32; human face: F(1) = 1.13, P = 0.31; dog face: F(1) = 0.005, P = 0.94), suggesting that the dogs did not reliably differentiate between the two inverted pictures presented simultaneously (Fig. 4b).

Fig. 4 Mean percentage and standard deviation of time spent looking at the novel and the familiar picture in Experiment 2 for each image category (object, human faces and dog faces) in (a) upright trials and (b) inverted trials. *Significant difference between the novel and the familiar picture (planned comparisons, P < 0.05)

The absence of an interaction between Stimulus Type and Orientation suggests that the observed inversion effect was neither face specific nor species specific.

General discussion

In this study, we first demonstrated that the visual paired comparison (VPC) procedure can be successfully applied to domestic dogs for the study of visual discrimination. To the authors’ knowledge, this is the first report of the use of VPC in non-primate animals.

Using a VPC task, we observed a clear difference between the proportion of viewing time directed at a simultaneously presented novel image and prior-exposed familiar image, suggesting the dogs could make a within-category discrimination between upright dog faces, human faces and object images. Therefore, the capacity for differentiating individual faces based on visual cues alone, which is evident in humans and non-human primates (e.g. Bruce and Young 1998; Pascalis and Bachevalier 1998; Parr et al. 2000; Dufour et al. 2006), extends to domestic dogs. Interestingly, their viewing preferences seemed to differ for the processing of faces of different species. The dogs demonstrated a preference for the novel face when presented with human faces, but a preference for the familiar face when presented with dog faces. This discrepancy may reflect different cognitive processes in the initial perception of dog and human faces.

When applying a VPC task in infant studies, a preference for novelty has been reported frequently and used as the criterion for determining discrimination abilities (e.g. Fantz 1964; Fagan 1973; Pascalis et al. 2002). However, cases of preference for familiarity have also been observed (for a review, see Pascalis and de Haan 2003). The completeness of the encoding has been identified as a major factor influencing children’s viewing preferences. In general, a well-encoded stimulus will tend to result in a preference for novelty, whereas an incompletely encoded stimulus will tend to result in a preference for familiarity in order to complete the encoding of the stimulus (e.g. Wagner and Sakovits 1986; Hunter and Ames 1988). Incomplete encoding is generally due to insufficient familiarisation time relative to the complexity of the stimulus (the more complex the stimulus is, the more familiarisation time is needed). In our study, the dogs were given 5 s as a familiarisation time and, on average, paid attention to the stimuli for 4.1 s, whatever the stimulus type. A possible explanation of our results could therefore be that dog faces are more complex for dog observers to encode than human faces. Alternatively, our results could also be due to our methodology. Indeed, some cases of preference for familiarity in children have been observed when the familiar stimulus was similar, but not identical, to the stimulus previously seen (Gibson and Walker 1984). In our study, the first stimulus presented in the familiarisation phase and the familiar stimulus presented in the test phase were not identical (same face/object but different picture) in order to avoid a discrimination based simply on contrast/brightness similarities. Thus, it is possible that dogs detected the difference between the first and the familiar stimulus for dog faces but not for human faces.
Finally, the discrepancy of dog preferences between dog and human faces could also correspond to a different social response towards conspecifics versus humans in dogs or to differential exposure to conspecifics and humans. These possibilities warrant future research in the area.

In this study, we also observed that the dogs did not make reliable within-category discriminations once the images were inverted. The inversion of dog faces, human faces and object images had a similar deteriorative effect on their discriminative responses. If we apply the same arguments as have been used in human studies, then we might be tempted to conclude that there is a similar cognitive strategy in processing of dog faces, human faces and common objects in domestic dogs. However, our previous study suggests this is not the case as dogs seem to present a different gaze strategy while viewing human faces (left gaze bias) compared to dog faces and objects (no bias) (Guo et al. 2009). Using both face and non-face stimuli, a face-specific inversion effect has been observed in some non-human primates, such as chimpanzees (e.g. Parr et al. 1998), rhesus monkeys (Parr et al. 2008; Parr and Heintz 2008) and cotton-top tamarins (Neiworth et al. 2007), but other studies have failed to observe this effect in rhesus monkeys (Parr et al. 1999). In this latter experiment, Parr and her colleagues found a non-face-specific inversion effect: i.e. monkeys demonstrated an inversion effect towards faces of different species (rhesus monkey and capuchin) and objects (automobile). Our study produces similar results for domestic dogs, i.e. a more general inversion effect towards faces and objects. However, it should be noted that our methodology for assessing the inversion effect was very conservative. As the first picture in the familiarisation phase was presented upright to show normal configuration, a mental rotation was needed to compare the inverted familiar picture with the encoded upright first picture during the test phase. If dogs have a poor capacity for mental rotation, then they would treat both the inverted familiar picture and inverted novel picture as new pictures, and not present any gaze preference. 
It would be worthwhile to revisit this face inversion response with different methodologies (e.g. present inverted stimuli in both the familiarisation and test phases) in future research.

In conclusion, a visual paired comparison (VPC) procedure can be used successfully to study discrimination abilities of dogs and thus can provide an effective tool to study canine cognition. Furthermore, we found no evidence that domestic dogs show a face-specific inversion response, but they do have the ability to discriminate both individual human and dog faces using 2-dimensional visual information only. These images do not appear to be processed equivalently, with the looking response differing according to the type of face involved.

References

Adachi I, Kuwahata H, Fujita K (2007) Dogs recall their owner’s face upon hearing the owner’s voice. Anim Cogn 10:17–21

Brigham JC, Maass A, Snyder LD, Spaulding K (1982) Accuracy of eyewitness identifications in a field setting. J Personal Soc Psychol 42:673–681

Brown SD, Dooling RJ (1992) Perception of conspecific faces by budgerigars (Melopsittacus undulatus): I. Natural faces. J Comp Psychol 106:203–216

Bruce V, Young AW (1998) In the eye of the beholder: the science of face perception. Oxford University Press, Oxford

Call J, Bräuer J, Kaminski J, Tomasello M (2003) Domestic dogs (Canis familiaris) are sensitive to the attentional state of humans. J Comp Psychol 117:257–263

Coulon M, Deputte BL, Baudoin C (2009) Individual recognition in domestic cattle (Bos taurus): evidence from 2D-images of heads from different breeds. PLoS One 4:e4441

Davis SJM, Valla FR (1978) Evidence for domestication of the dog 12,000 years ago in the Natufian of Israel. Nature 276:608–610

Dufour V, Pascalis O, Petit O (2006) Face processing limitation to own species in primates: a comparative study in brown capuchins, Tonkean macaques and humans. Behav Process 73:107–113

Elliott ES, Wills EJ, Goldstein AG (1973) The effects of discrimination training on the recognition of white and oriental faces. Bull Psychon Soc 2:71–73

Fagan JF (1973) Infants’ delayed recognition memory and forgetting. J Exp Child Psychol 16:424–450

Fantz RL (1964) Visual experience in infants: decreased attention to familiar patterns relative to novel ones. Science 146:668–670

Farah MJ (1996) Is face recognition ‘special’? Evidence from neuropsychology. Behav Brain Res 76:181–189

Farah MJ, Wilson KD, Drain M, Tanaka JN (1998) What is “special” about face perception? Psychol Rev 105:482–498

Feddersen-Petersen DU (2005) Communication in wolves and dogs. In: Bekoff M (ed) Encyclopedia of animal behavior, vol I. Greenwood Publishing Group, Inc., Westport, pp 385–394

Furton KG, Myers LJ (2001) The scientific foundation and efficacy of the use of canines as chemical detectors for explosives. Talanta 54:487–500

Gácsi M, Miklósi Á, Varga O, Topál J, Csányi V (2004) Are readers of our face readers of our minds? Dogs (Canis familiaris) show situation-dependent recognition of human’s attention. Anim Cogn 7:144–153

Gibson EJ, Walker AS (1984) Development of knowledge of visual-tactual affordances of substance. Child Dev 55:453–460

Gothard KM, Erickson CA, Amaral DG (2004) How do rhesus monkeys (Macaca mulatta) scan faces in a visual paired comparison task? Anim Cogn 7:25–36

Gothard KM, Brooks KN, Peterson MA (2009) Multiple perceptual strategies used by macaque monkeys for face recognition. Anim Cogn 12:155–167

Guo K, Meints K, Hall C, Hall S, Mills D (2009) Left gaze bias in humans, rhesus monkeys and domestic dogs. Anim Cogn 12:409–418

Hare B, Brown M, Williamson C, Tomasello M (2002) The domestication of social cognition in dogs. Science 298:1634–1636

Hunter MA, Ames EW (1988) A multifactor model of infant preferences for novel and familiar stimuli. Adv Infancy Res 5:69–95

Hussain Z, Sekuler AB, Bennett PJ (2009) How much practice is needed to produce perceptual learning? Vis Res 49:2624–2634

Kendrick KM, Atkins K, Hinton MR, Heavens P, Keverne B (1996) Are faces special for sheep? Evidence from facial and object discrimination learning tests showing effects of inversion and social familiarity. Behav Process 38:19–35

Marinelli L, Mongillo P, Zebele A, Bono G (2009) Measuring social attention skills in pet dogs. J Veterinary Behavior: Clin Appl Res 4:46–47

Martin-Malivel J, Fagot J (2001) Perception of pictorial human faces by baboons: effects of stimulus orientation on discrimination performance. Anim Learn Behav 29:10–20

Martin-Malivel J, Okada K (2007) Human and chimpanzee face recognition in chimpanzees (Pan troglodytes): role of exposure and impact on categorical perception. Behav Neurosci 121:1145–1155

McCarthy G, Puce A, Gore JC, Allison T (1997) Face-specific processing in the human fusiform gyrus. J Cogn Neurosci 9:605–610

McKone E, Kanwisher N, Duchaine BC (2006) Can generic expertise explain special processing for faces? Trends Cogn Sci 11:8–15

Meints K, Woodford A (2008) Lincoln Infant Lab Package 2008: A new programme package for IPL, Preferential Listening, Habituation and Eyetracking [WWW document: Computer software & manual]. URL: http://www.lincoln.ac.uk/psychology/babylab.htm

Miklósi Á, Polgárdi R, Topál J, Csányi V (1998) Use of experimenter-given cues in dogs. Anim Cogn 1:113–121

Miklósi Á, Kubinyi E, Topál J, Gácsi M, Virányi Z, Csányi V (2003) A simple reason for a big difference: wolves do not look back at humans, but dogs do. Curr Biol 13:763–766

Miller PE, Murphy CJ (1995) Vision in dogs. J Am Vet Med Assoc 207:1623–1634

Moscovitch M (1997) What is special about face recognition? Nineteen experiments on a person with visual object agnosia and dyslexia but normal face recognition. J Cogn Neurosci 9:555–604

Neiworth JJ, Hassett JM, Sylvester CJ (2007) Face processing in humans and new world monkeys: the influence of experiential and ecological factors. Anim Cogn 10:125–134

O’Toole AJ, Deffenbacher KA, Valentin D, Abdi H (1994) Structural aspects of face recognition and the other-race effect. Mem Cogn 22:208–224

Parr LA, Heintz M (2008) Discrimination of faces and houses by Rhesus monkeys: the role of stimulus expertise and rotation angle. Anim Cogn 11:467–474

Parr LA, Dove T, Hopkins WD (1998) Why faces may be special: evidence of the inversion effect in chimpanzees. J Cogn Neurosci 10:615–622

Parr LA, Winslow JT, Hopkins WD (1999) Is the inversion effect in rhesus monkeys face-specific? Anim Cogn 2:123–129

Parr LA, Winslow JT, Hopkins WD, de Waal FBM (2000) Recognizing facial cues: individual discrimination by chimpanzees (Pan troglodytes) and Rhesus monkeys (Macaca mulatta). J Comp Psychol 114:47–60

Parr LA, Heintz M, Akamagwuna U (2006) Three studies on configural face processing by chimpanzees. Brain Cogn 62:30–42

Parr LA, Heintz M, Pradhan G (2008) Rhesus monkeys (Macaca mulatta) lack expertise in face processing. J Comp Psychol 122:390–402

Pascalis O, Bachevalier J (1998) Face recognition in primates: a cross-species study. Behav Process 43:87–96

Pascalis O, de Haan M (2003) Recognition memory and novelty preference: what model? In: Hayne H, Fagen J (eds) Progress in infancy research, vol 3. Lawrence Erlbaum Associates, New Jersey, pp 95–120

Pascalis O, de Haan M, Nelson CA (2002) Is face processing species-specific during the first year of life? Science 296:1321–1323

Pascalis O, Scott LS, Kelly DJ, Shannon RW, Nicholson E, Coleman N, Nelson CA (2005) Plasticity of face processing in infancy. Proc Natl Acad Sci 102:5297–5300

Povinelli DJ, Bierschwale DT, Cech CG (1999) Comprehension of seeing as a referential act in young children, but not juvenile chimpanzees. Br J Dev Psychol 17:37–60

Riesenhuber M, Wolff BF (2009) Task effects, performance levels, features, configurations, and holistic face processing: a reply to Rossion. Acta Psychol 132:286–292

Rossion B (2008) Picture-plane inversion leads to qualitative changes of face perception. Acta Psychol 128:274–289

Rossion B (2009) Distinguishing the cause and consequence of face inversion: the perceptual field hypothesis. Acta Psychol 132:300–312

Rossion B, Gauthier I (2002) How does the brain process upright and inverted faces? Behav Cogn Neurosci Rev 1:62–74

Sangrigoli S, Pallier C, Argenti AM, Ventureyra VAG, de Schonen S (2005) Reversibility of the other-race effect in face recognition during childhood. Psychol Sci 16:440–444

Schoon A (1997) The performance of dogs in identifying humans by scent. Ph.D. Dissertation, Rijksuniversiteit, Leiden

Sekuler AB, Gaspar CM, Gold JM, Bennett PJ (2004) Inversion leads to quantitative, not qualitative, changes in face processing. Curr Biol 14:391–396

Soproni K, Miklósi Á, Topál J, Csányi V (2001) Comprehension of human communicative signs in pet dogs (Canis familiaris). J Comp Psychol 115:122–126

Sugita Y (2008) Face perception in monkeys reared with no exposure to faces. Proc Natl Acad Sci 105:394–398

Tanaka JW, Kiefer M, Bukach CM (2004) A holistic account of the own-race effect in face recognition: evidence from a cross-cultural study. Cognition 93:1–9

Tarr MJ, Cheng YD (2003) Learning to see faces and objects. Trends Cogn Sci 7:23–30

Tibbetts EA (2002) Visual signals of individual identity in the wasp Polistes fuscatus. Proc R Soc Lond, Ser B: Biol Sci 269:1423–1428

Topál J, Miklósi Á, Csányi V (1997) Dog-human relationship affects problem-solving behavior in the dog. Anthrozoos 10:214–224

Tsao DY, Freiwald WA, Tootell RBH, Livingstone MS (2006) A cortical region consisting entirely of face-selective cells. Science 311:670–674

Valentine T (1988) Upside-down faces: a review of the effect of inversion upon face recognition. Br J Psychol 79:471–491

Vila C, Savolainen P, Maldonado JE, Amorim IR, Rice JE, Honeycutt RL, Crandall KA, Lundeberg J, Wayne RK (1997) Multiple and ancient origins of the domestic dog. Science 276:1687–1689

Virányi Z, Topál J, Gácsi M, Miklósi Á, Csányi V (2004) Dogs respond appropriately to cues of humans’ attentional focus. Behav Process 66:161–172

Wagner SH, Sakovits LJ (1986) A process analysis of infant visual and cross-modal recognition memory: implications for an amodal code. Adv Infancy Res 4:195–217

Yin RK (1969) Looking at upside-down faces. J Exp Psychol 81:141–145

Yovel G (2009) The shape of facial features and the spacing among them generate similar inversion effects: a reply to Rossion (2008). Acta Psychol 132:293–299

Acknowledgments

We thank Dr. Olivier Pascalis for advice on experimental design and comments on the manuscript; Fiona Williams for helping with data collection; and Sylvia Sizer, Szymon Burzynski and Angela Fieldsend for providing dog pictures. We also thank three anonymous reviewers for their helpful comments and suggestions on the manuscript. Ethical approval was granted by the University of Lincoln (UK), and all procedures complied with the ethical guidance of the International Society for Applied Ethology.


Cite this article

Racca, A., Amadei, E., Ligout, S. et al. Discrimination of human and dog faces and inversion responses in domestic dogs (Canis familiaris). Anim Cogn 13, 525–533 (2010). https://doi.org/10.1007/s10071-009-0303-3
