Abstract

In this work, we introduce a new approach for solving equilibrium problems in a real Hilbert space. First, we propose a solution mapping and show that it is strongly quasi-nonexpansive. Next, we use this mapping to construct an algorithm for solving equilibrium problems. Strong convergence of the algorithm is shown under quasimonotonicity and Lipschitz-type continuity assumptions on the cost bifunction. Finally, we report numerical results for the proposed algorithm and compare it with other known methods that use the solution mapping.

1 Introduction

Let \(\mathcal H\) be a real Hilbert space with inner product \(\langle \cdot ,\cdot \rangle \) and induced norm \(\Vert \cdot \Vert \). We write \(x^k\rightharpoonup x\) to indicate that the sequence \(\{x^k\}\) converges weakly to x, and \(x^k\rightarrow x\) to indicate that \(\{x^k\}\) converges strongly to x. Let C be a nonempty closed convex subset of \(\mathcal H\), and let \(f:\mathcal H\times \mathcal H\rightarrow \mathcal R\) be a bifunction such that the equilibrium condition \(f(x,x)=0\) holds for all \(x\in \mathcal H\) and \(f(x,\cdot )\) is convex and lower semicontinuous for each \(x\in \mathcal H\). In this paper, we consider the equilibrium problem, \(\textrm{EPs}(C,f)\) for short, which was first suggested by Nikaido and Isoda [29] for noncooperative convex games and named “equilibrium problem” by Blum and Oettli [9]:

$$ \text{Find } x^*\in C \text{ such that } f(x^*,y)\ge 0,\quad \forall y\in C. $$

The solution set is denoted by \(\textrm{Sol}(C,f)\). In recent years, the problem \(\textrm{EPs}(C,f)\) has attracted considerable attention in the research literature because of its applications in many fields, including structural analysis, economics, optimization, operations research and engineering sciences (see, e.g., the books [12, 13, 21] and the references therein).

In recent years, several algorithms have been developed for solving the problem \(\textrm{EPs}(C,f)\) and its generalizations. The solution mapping \(E: C\rightarrow C\) was first introduced by Moreau [28] in the form

$$ Ex=\textrm{argmin}\left\{ \lambda f(x,y)+\frac{1}{2}\Vert y-x\Vert ^2: y\in C\right\} , \tag{1.1} $$

where \(\lambda >0\). Note that Ex is the unique solution of a strongly convex problem whenever \(f(x,\cdot )\) is proper, convex and lower semicontinuous. The mapping is motivated by the fact that a point \(x^*\in C\) is a solution of the problem \(\textrm{EPs}(C,f)\) if and only if it is a fixed point of the solution mapping E [25, Proposition 2.1]. The solution mapping has become a useful tool for solving the problem \(\textrm{EPs}(C,f)\). Under suitable conditions on \(\lambda \), the mapping E has the following property:

-

If the cost bifunction f is strongly monotone and Lipschitz-type continuous, then E is contractive [14, 25, Proposition 2.1].

Then, by Banach’s contraction mapping principle, the unique solution of the problem \(\textrm{EPs}(C,f)\) is the limit of the fixed-point iteration

$$ x^0\in C,\quad x^{k+1}=Ex^k,\quad k\ge 0. $$

The authors showed that the sequence \(\{x^k\}\) converges strongly to the unique solution of the problem \(\textrm{EPs}(C,f)\). To avoid the strong monotonicity assumption on f, Hieu [15], extending the work of Kraikaew and Saejung [22], used the following solution mapping:

where \(H_x=\{w\in \mathcal H: \langle x-\lambda w_x-Ex,w-Ex\rangle \le 0\}\), \(w_x\in \partial f(x,\cdot )(x)\) and Ex is defined in (1.1). Then, for each \(x^*\in \textrm{Sol}(C,f)\), we have

where f is pseudomonotone and Lipschitz-type continuous. However, F is not necessarily quasi-nonexpansive on \(\mathcal H\), since in general \(\textrm{Sol}(C,f)\ne \textrm{Fix}(F)\).
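To make the fixed-point iteration \(x^{k+1}=Ex^k\) concrete, consider the special bifunction \(f(x,y)=\langle F(x),y-x\rangle \). This is a variational inequality and is used here only as an illustration. For this f, the subproblem in (1.1) reduces to a projected step, \(Ex=\varPi _C(x-\lambda F(x))\). The Python sketch below makes the following assumptions: a hypothetical affine F on \(\mathbb R^2\), the box \(C=[0,1]^2\), and a helper named `solution_mapping_E`. Because this F is strongly monotone and Lipschitz continuous, E is a contraction for the chosen \(\lambda \), so the iterates approach the solution.

```python
import numpy as np

def solution_mapping_E(x, F, lam, lo=0.0, hi=1.0):
    """E x for f(x, y) = <F(x), y - x> on the box C = [lo, hi]^n.

    Here argmin_{y in C} {lam*f(x,y) + 0.5||y - x||^2} = Pi_C(x - lam*F(x)),
    and the projection onto a box is componentwise clipping.
    """
    return np.clip(x - lam * F(x), lo, hi)

# Hypothetical data: M = 2I + (skew part), so F is 2-strongly monotone and ||M||_2 = sqrt(5).
M = np.array([[2.0, 1.0], [-1.0, 2.0]])
x_star = np.array([0.5, 0.5])
q = -M @ x_star                      # F(x_star) = 0 with x_star inside C, so x_star solves EPs(C, f)
F = lambda x: M @ x + q

beta, L = 2.0, np.linalg.norm(M, 2)
lam = beta / L**2                    # 1 - 2*lam*beta + lam**2 * L**2 = 0.2 < 1, so E is contractive

x = np.zeros(2)                      # x^0 in C
for k in range(200):
    x_next = solution_mapping_E(x, F, lam)   # x^{k+1} = E x^k
    converged = np.linalg.norm(x_next - x) < 1e-12
    x = x_next
    if converged:
        break

print(k, x)   # x should be close to x_star = (0.5, 0.5)
```

A box was chosen because its projection has a closed form. For a general C, the projection step itself is a convex subproblem.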

Many algorithms for solving the monotone problem \(\textrm{EPs}(C,f)\) combine the solution mapping E with other iterative techniques. Notable examples include the extragradient algorithms proposed by Quoc et al. [31, 32], the inexact proximal point methods of Iusem et al. [17, 18], the extragradient-viscosity methods of Maingé and Moudafi [24], the auxiliary principle methods of Mastroeni and Noor [25, 26, 30], the extragradient methods of Anh et al. [2, 5, 6], and many other computational methods in [4, 7, 8, 10, 11, 16, 19, 20, 27, 33, 34] and the references cited therein.

Motivated by this ongoing research, we propose a new approach to the equilibrium problem \(\textrm{EPs}(C,f)\). First, we introduce a new solution mapping and prove that it is strongly quasi-nonexpansive. Second, we use this solution mapping, together with a Lipschitz continuous and strongly monotone mapping and another Lipschitz continuous mapping, to solve the problem \(\textrm{EPs}(C,f)\). Moreover, under quasimonotonicity and Lipschitz-type continuity assumptions on the cost bifunction f, we prove that the sequence constructed by our algorithm converges strongly to the unique solution of a variational inequality problem whose constraint set is the solution set of the problem \(\textrm{EPs}(C,f)\). We call this the solution mapping approach. It is the fundamental difference between our algorithm and current computational methods.

Our paper is organized as follows. In Section 2, we present some useful definitions, technical lemmas and a new solution mapping. A new algorithm for solving the problem \(\textrm{EPs}(C,f)\) and its convergence analysis are presented in Section 3. In Section 4, several numerical experiments illustrate the efficiency and accuracy of the proposed algorithm.

2 Solution Mappings

For each \(x\in \mathcal H\), the metric projection of x onto C, denoted by \(\varPi _C(x)\), is the unique solution of the strongly convex problem

$$ \min \left\{ \frac{1}{2}\Vert y-x\Vert ^2: y\in C\right\} . $$

Let \(f: \mathcal H\times \mathcal H\rightarrow \mathcal R\) be a bifunction and let \(\emptyset \ne K\subset \mathcal H\). The bifunction f is said to be:

-

\(\beta \)-strongly monotone if

$$ f(x,y)+f(y,x)\le -\beta \Vert x-y\Vert ^2,\quad \forall x,y\in \mathcal H. $$ -

monotone if

$$ f(x,y)+f(y,x)\le 0,\quad \forall x,y\in \mathcal H. $$ -

pseudomonotone if

$$ f(x,y)\ge 0\quad \Rightarrow \quad f(y,x)\le 0,\quad \forall x,y\in \mathcal H. $$ -

\(\eta \)-strongly quasimonotone on K where \(\eta >0\) if

$$ f(x,y)+f(y,x)\le -\eta \Vert x-y\Vert ^2,\quad \forall x\in K,y\in \mathcal H. $$ -

quasimonotone on K if

$$ f(x,y)\ge 0\Rightarrow f(y,x)\le 0,\quad \forall x\in K,y\in \mathcal H. $$ -

Lipschitz-type continuous on \(\mathcal H\) with constants \(c_1>0\) and \(c_2>0\) if

$$ f(x,y)+f(y,z)\ge f(x,z)-c_1\Vert x-y\Vert ^2-c_2\Vert y-z\Vert ^2,\quad \forall x,y,z\in \mathcal H. $$
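As a concrete check of these definitions, take the hypothetical affine bifunction \(f(x,y)=\langle F(x),y-x\rangle \) with \(F(x)=Mx+q\), where the symmetric part P of M is positive definite. A short computation gives two identities:
\(f(x,y)+f(y,x)=-\langle P(x-y),x-y\rangle \), and
\(f(x,y)+f(y,z)-f(x,z)=\langle F(x)-F(y),y-z\rangle \ge -\frac{L}{2}(\Vert x-y\Vert ^2+\Vert y-z\Vert ^2)\) with \(L=\Vert M\Vert \).
So f is \(\beta \)-strongly monotone with \(\beta =\lambda _{\min }(P)\), and Lipschitz-type continuous with \(c_1=c_2=L/2\). The Python sketch below checks both inequalities on random samples. It is a sanity check under these assumptions, not a proof.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4
# Hypothetical affine operator F(x) = M x + q with M = P + K,
# P symmetric positive definite and K skew-symmetric.
A = rng.standard_normal((n, n))
P = A @ A.T + np.eye(n)
R = rng.standard_normal((n, n))
K = R - R.T
M = P + K
q = rng.standard_normal(n)

def f(x, y):
    """f(x, y) = <F(x), y - x>, so f(x, x) = 0 and f(x, .) is affine (hence convex)."""
    return (M @ x + q) @ (y - x)

L = np.linalg.norm(M, 2)              # Lipschitz constant of F
beta = np.linalg.eigvalsh(P).min()    # <M h, h> = <P h, h> >= beta ||h||^2
c1 = c2 = L / 2                       # Lipschitz-type constants of f

def lipschitz_type_gap(x, y, z):
    """Nonnegative iff the Lipschitz-type inequality holds at (x, y, z)."""
    rhs = f(x, z) - c1 * np.sum((x - y) ** 2) - c2 * np.sum((y - z) ** 2)
    return f(x, y) + f(y, z) - rhs

def strong_monotonicity_gap(x, y):
    """Nonnegative iff f(x, y) + f(y, x) <= -beta ||x - y||^2."""
    return -beta * np.sum((x - y) ** 2) - (f(x, y) + f(y, x))

samples = rng.standard_normal((1000, 3, n))
min_lt = min(lipschitz_type_gap(x, y, z) for x, y, z in samples)
min_sm = min(strong_monotonicity_gap(x, y) for x, y, _ in samples)
print(min_lt >= -1e-9, min_sm >= -1e-9)   # both inequalities hold on the samples
```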

Let \(S: \mathcal H\rightarrow \mathcal H\) be a mapping with fixed point set \(\textrm{Fix}(S):=\{x\in \mathcal H: Sx=x\}\). The operator S is said to be:

-

\(\eta \)-strongly quasi-nonexpansive, where \(\eta >0\), if

$$ \Vert Sx-z\Vert ^2\le \Vert x-z\Vert ^2-\eta \Vert Sx-x\Vert ^2,\quad \forall x\in \mathcal H,~ z\in \textrm{Fix}(S). $$ -

quasi-nonexpansive if

$$ \Vert Sx-z\Vert \le \Vert x-z\Vert ,\quad \forall x\in \mathcal H,~ z\in \textrm{Fix}(S). $$ -

quasicontractive with constant \(\eta \in [0,1)\) if

$$ \Vert Sx-z\Vert \le \eta \Vert x-z\Vert ,\quad \forall x\in \mathcal H,~z\in \textrm{Fix}(S). $$ If \(\Vert Sx-Sy\Vert \le \eta \Vert x-y\Vert \) for all \(x,y\in \mathcal H\), the mapping S is called contractive with constant \(\eta \).
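A standard example of a strongly quasi-nonexpansive operator is the metric projection \(\varPi _C\) onto a nonempty closed convex set. Its fixed point set is \(\textrm{Fix}(\varPi _C)=C\). For \(z\in C\), the inequality \(\langle x-\varPi _Cx,z-\varPi _Cx\rangle \le 0\) gives \(\Vert \varPi _Cx-z\Vert ^2\le \Vert x-z\Vert ^2-\Vert \varPi _Cx-x\Vert ^2\). Hence \(\varPi _C\) is 1-strongly quasi-nonexpansive. The Python sketch below checks this numerically for a ball; the center and radius are hypothetical.

```python
import numpy as np

def project_ball(x, center, radius):
    """Metric projection onto the closed ball B(center, radius)."""
    d = x - center
    nd = np.linalg.norm(d)
    return x.copy() if nd <= radius else center + (radius / nd) * d

def sqne_gap(T, x, z, eta):
    """||x - z||^2 - eta ||Tx - x||^2 - ||Tx - z||^2; nonnegative iff the eta-SQNE inequality holds."""
    Tx = T(x)
    return np.sum((x - z) ** 2) - eta * np.sum((Tx - x) ** 2) - np.sum((Tx - z) ** 2)

rng = np.random.default_rng(1)
center, radius = np.array([1.0, -2.0, 0.5]), 2.0
P = lambda x: project_ball(x, center, radius)

gaps = []
for _ in range(2000):
    x = 5.0 * rng.standard_normal(3)
    z = P(5.0 * rng.standard_normal(3))   # an arbitrary point of C = Fix(P)
    gaps.append(sqne_gap(P, x, z, eta=1.0))
print(min(gaps) >= -1e-9)
```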

For each \(x\in \mathcal H\) and \(\xi >0\), we consider the solution mapping \(S: \mathcal H\rightarrow C\) of the problem \(\textrm{EPs}(C,f)\) defined by

$$ Sx=\textrm{argmin}\left\{ \xi f(x,y)+\frac{1}{2}\Vert y-x\Vert ^2: y\in C\right\} . \tag{2.1} $$

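It is well known that \(x\in C\) is a solution of the problem \(\textrm{EPs}(C, f)\) if and only if it is a fixed point of the mapping S [25, Proposition 2.1]. Let \(\gamma >0\). We introduce a new half-space as follows:

$$ H_x=\left\{ w\in \mathcal H: \langle x-\xi w_x-Sx,w-Sx\rangle \le \gamma \Vert x-Sx\Vert ^2\right\} , \tag{2.2} $$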

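where \(w_x\in \partial f(x,\cdot )(Sx)\). By the well-known necessary and sufficient optimality condition for convex programming, Sx solves the strongly convex program (2.1), i.e.,

$$ \min \left\{ \xi f(x,y)+\frac{1}{2}\Vert y-x\Vert ^2: y\in C\right\} , $$

if and only if

$$ 0\in \xi \partial f(x,\cdot )(Sx)+Sx-x+N_C(Sx), $$

where \(N_C(Sx)\) is the (outward) normal cone of C at \(Sx\in C\). Since \(f(x,\cdot )\) is subdifferentiable for each \(x\in \mathcal H\), there exists \(w_x\in \partial f(x,\cdot )(Sx)\) such that

$$ x-\xi w_x-Sx\in N_C(Sx). $$

Consequently,

$$ \langle x-\xi w_x-Sx,y-Sx\rangle \le 0,\quad \forall y\in C. $$

Since \(\gamma >0\), it follows that

$$ \langle x-\xi w_x-Sx,y-Sx\rangle \le \gamma \Vert x-Sx\Vert ^2,\quad \forall y\in C. $$

By (2.2), this yields \(C\subset H_x\) for all \(x\in \mathcal H\).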
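The inclusion \(C\subset H_x\) can be checked numerically in a simple hypothetical setting. Take \(f(x,y)=\langle F(x),y-x\rangle \) with an affine F, and take \(C=[0,1]^n\). Then \(f(x,\cdot )\) is affine, so \(w_x=F(x)\) and \(Sx=\varPi _C(x-\xi F(x))\) (componentwise clipping). The Python sketch below samples points of C and several points \(x\in \mathcal H\), and tests membership in \(H_x\). The data, \(\xi \) and \(\gamma \) are assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
n, xi, gamma = 5, 0.1, 0.3
M = rng.standard_normal((n, n))
q = rng.standard_normal(n)

def F(x):
    """Hypothetical affine operator; f(x, y) = <F(x), y - x>, so w_x = F(x)."""
    return M @ x + q

def S(x):
    """Sx = argmin_{y in C} {xi f(x,y) + 0.5||y - x||^2} = Pi_C(x - xi F(x)), C = [0,1]^n."""
    return np.clip(x - xi * F(x), 0.0, 1.0)

def in_H(w, x):
    """Test w in H_x = {w : <x - xi w_x - Sx, w - Sx> <= gamma ||x - Sx||^2}."""
    Sx = S(x)
    d = x - xi * F(x) - Sx               # d lies in the normal cone N_C(Sx)
    return d @ (w - Sx) <= gamma * np.sum((x - Sx) ** 2) + 1e-12

points_of_C = rng.uniform(0.0, 1.0, size=(1000, n))
contained = all(in_H(y, x)
                for x in 3.0 * rng.standard_normal((20, n))
                for y in points_of_C)
print(contained)   # C is contained in every sampled H_x
```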

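Now we propose a new solution mapping \(T:\mathcal H\rightarrow \mathcal H\) for the problem \(\textrm{EPs}(C,f)\) as follows:

$$ Tx=\textrm{argmin}\left\{ \nu \xi f(Sx,y)+\frac{1}{2}\Vert y-x\Vert ^2: y\in H_x\right\} , \tag{2.3} $$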

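where the regularization parameter \(\nu >0\) plays a key role in the strong quasi-nonexpansiveness of T. In the case \(f(x,y)=\langle F(x),y-x\rangle \), set \(z=x-\nu \xi F(Sx)\). It is easy to see that Tx is the projection of z onto \(H_x\), which has the explicit form

$$ Tx=\varPi _{H_x}(z)=\left\{ \begin{array}{ll} z-\dfrac{\max \{0,\langle d_x,z-Sx\rangle -\gamma \Vert x-Sx\Vert ^2\}}{\Vert d_x\Vert ^2}\,d_x & \text {if } d_x\ne 0,\\ z & \text {if } d_x=0, \end{array}\right. $$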

where \(d_x=x-\xi w_x-Sx\). Note that if \(d_x=0\), then \(H_x=\mathcal H\); hence \(z\in H_x\) and \(Tx=z\).
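In the same hypothetical affine setting, T can be evaluated through the half-space projection formula, as in the Python sketch below. The sketch assumes that the subproblem defining Tx uses \(f(Sx,\cdot )\), as the subgradient \(v_x\in \partial f(Sx,\cdot )(Tx)\) in the proof of Lemma 2.1 indicates. The point to be projected is therefore \(z=x-\nu \xi F(Sx)\). The test problem is built so that \(F(x^*)=0\) at an interior point \(x^*\) of \(C=[0,1]^n\). The sketch checks two things: that \(x^*\) is a fixed point of T, and that \(Tx\in H_x\) for another point x. All names and numbers are illustrative.

```python
import numpy as np

def project_halfspace(z, a, p, b):
    """Projection of z onto {w : <a, w - p> <= b}; if a = 0 and b >= 0 the set is the whole space."""
    aa = a @ a
    if aa == 0.0:
        return z.copy()
    return z - max(0.0, a @ (z - p) - b) / aa * a

def T(x, F, xi, nu, gamma, lo=0.0, hi=1.0):
    """Sketch of Tx for f(x, y) = <F(x), y - x> and the box C = [lo, hi]^n (hypothetical setting)."""
    Sx = np.clip(x - xi * F(x), lo, hi)      # Sx = Pi_C(x - xi F(x)); here w_x = F(x)
    d = x - xi * F(x) - Sx                   # d_x
    z = x - nu * xi * F(Sx)                  # unconstrained minimizer of nu*xi*f(Sx, .) + 0.5||. - x||^2
    b = gamma * np.sum((x - Sx) ** 2)        # right-hand side in the definition of H_x
    return project_halfspace(z, d, Sx, b), Sx, d, b

n = 4
ones = np.ones((n, n))
M = 2.0 * np.eye(n) + np.triu(ones, 1) - np.tril(ones, -1)   # 2I plus a skew-symmetric part
x_star = 0.5 * np.ones(n)
q = -M @ x_star                          # F(x_star) = 0 and x_star lies inside C
F = lambda x: M @ x + q
xi, nu, gamma = 0.1, 0.5, 0.3

Tx_star = T(x_star, F, xi, nu, gamma)[0]
fixed_ok = np.allclose(Tx_star, x_star)  # the solution x_star is a fixed point of T

x = np.array([2.0, -1.0, 0.3, 5.0])
Tx, Sx, d, b = T(x, F, xi, nu, gamma)
in_H = d @ (Tx - Sx) <= b + 1e-9 * max(1.0, b)   # Tx belongs to H_x
print(fixed_ok, in_H)
```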

The following results present some properties of the operators S and T that will be needed in the sequel.

Lemma 2.1

Suppose that f is Lipschitz-type continuous with constants \(c_1>0\) and \(c_2>0\), and let \(\xi >0\), \(\nu >0\) and \(\gamma >0\). Then the following inequality holds:

Proof

By using the definition of \(w_x\in \partial f(x,\cdot )(Sx)\), we get

Combining (2.4) with \(Tx\in H_x\), we obtain

From the necessary and sufficient condition for the strongly convex problem (2.3), there exists \(v_x\in \partial f(Sx,\cdot )(Tx)\) such that

Thus,

and hence

By the definition of \(v_x\in \partial f(Sx,\cdot )(Tx)\) and \(\nu \xi >0\), we obtain

This together with (2.5) implies

Since f is Lipschitz-type continuous with \(c_1\) and \(c_2\), we deduce

Combining this with (2.6), we get, for each \(t\in H_x\),

By using the relation

we obtain

This together with (2.7) implies that

The proof is complete.\(\square \)

Lemma 2.2

Assume that f is Lipschitz-type continuous with constants \(c_1>0\) and \(c_2>0\). Let the parameters \(\nu \), \(\xi \) and \(\gamma \) satisfy the following conditions:

Then, \(x^*\in C\) is a solution of the problem \(\textrm{EPs}(C,f)\) if and only if it is a fixed point of the solution mapping T.

Proof

Assume that \(\bar{x}\in C\) is a fixed point of T, i.e., \(T\bar{x}=\bar{x}\). Substituting \(x=\bar{x}\) into Lemma 2.1, we obtain

Consequently

From (2.8), it follows

Thus, \(S\bar{x}\in C\) is a solution of the problem \(\textrm{EPs}(C,f)\). Since a point of C is a solution of the problem \(\textrm{EPs}(C,f)\) if and only if it is a fixed point of S, we conclude that \(\bar{x}=S\bar{x}\in \textrm{Sol}(C,f)\).

Now assume that \(\hat{x}\in \textrm{Sol}(C,f)\). Then \(S\hat{x}=\hat{x}\). Substituting \(t=\hat{x}\) and \(x=\hat{x}\) into Lemma 2.1 and using \(f(x,x)=0\) for all \(x\in C\), we get

By (2.8), this yields \(T\hat{x}=\hat{x}\). Thus, every solution \(\hat{x}\in \textrm{Sol}(C,f)\) is a fixed point of T. This completes the proof. \(\square \)

Lemma 2.3

Suppose that f is quasimonotone on \(\textrm{Sol}(C,f)\) and Lipschitz-type continuous with constants \(c_1>0\), \(c_2>0\), and let the parameters satisfy the following restrictions:

Then, the solution mapping T is strongly quasi-nonexpansive with constant \((1-\nu )\).

Proof

Let \(x^*\in \textrm{Sol}(C,f)\), i.e., \(f(x^*,x)\ge 0\) for all \(x\in C\). Substituting \(t=x^*\) into Lemma 2.1, we get

Since \(Sx\in C\), (2.10) and the quasimonotonicity of f yield \(f(Sx,x^*)\le 0\), and hence

Combining this with conditions (2.9), we have

By the definition of strong quasi-nonexpansiveness and Lemma 2.2, we get \(\textrm{Fix}(T)=\textrm{Sol}(C,f)\), and the solution mapping T is \((1-\nu )\)-strongly quasi-nonexpansive.\(\square \)

Remark 2.4

In [14, Theorem 3.7], the author showed that if \(f:C\times C\rightarrow \mathcal R\) is \(\zeta \)-strongly monotone and there exist functions \(\alpha _i:C\times C\rightarrow \mathcal H\), \(\beta _i: C\rightarrow \mathcal H\) \((i=1,\ldots ,p)\) such that

where \(\beta _i\) is \(K_i\)-Lipschitz continuous, \(\alpha _i(x,y)+\alpha _i(y,x)=0\) and \(|\alpha _i(x,y)|\le L_i\Vert x-y\Vert \) for all \(x,y\in C\), \(i=1,\ldots ,p\). Under the condition \(\xi \in (0, \frac{2\zeta }{M^2})\), where \(M=\sum _{i=1}^pK_iL_i\), the mapping S defined by (2.1) is contractive with constant \(\delta =\sqrt{1-\xi (2\zeta -\xi M^2)}\in (0,1)\).

Lemma 2.5

[23, Remark 2.1] Let \(T:\mathcal H\rightarrow \mathcal H\) be quasi-nonexpansive with \(\textrm{Fix}(T)\ne \emptyset \), and let \(T_{\omega }=(1-\omega )\textrm{Id}+\omega T\) with \(\omega \in (0,1]\), where \(\textrm{Id}\) is the identity operator. Then the following statements hold:

-

(i)

\(\textrm{Fix}(T)=\textrm{Fix}(T_{\omega })\).

-

(ii)

\(T_{\omega }\) is quasi-nonexpansive.

-

(iii)

\(\Vert T_{\omega }x-v\Vert ^2\le \Vert x-v\Vert ^2-\omega (1-\omega )\Vert Tx-x\Vert ^2\) for all \(x\in \mathcal H\) and \(v\in \textrm{Fix}(T)\).

-

(iv)

\(\langle x-T_{\omega }x,x-u\rangle \ge \frac{\omega }{2}\Vert x-Tx\Vert ^2\) for all \(x\in \mathcal H\) and \(u\in \textrm{Fix}(T)\).
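Lemma 2.5 applies to quasi-nonexpansive operators that need not be nonexpansive. The following Python sketch checks (iii) and (iv) numerically for a classical example of this kind: the hypothetical scalar map \(T(x)=\frac{x}{2}\sin (1/x)\) with \(T(0)=0\), whose only fixed point is 0. The check is run on a grid; it is a sanity check, not a proof.

```python
import numpy as np

def T(x):
    """Quasi-nonexpansive map on R with Fix(T) = {0}: |T(x) - 0| <= |x|/2.
    T is not nonexpansive (it is not even Lipschitz near 0)."""
    return 0.0 if x == 0 else 0.5 * x * np.sin(1.0 / x)

def T_omega(x, omega):
    """Relaxation T_omega = (1 - omega) Id + omega T."""
    return (1 - omega) * x + omega * T(x)

v = 0.0                        # the unique fixed point of T
ok_iii = ok_iv = True
for omega in (0.1, 0.5, 1.0):
    for x in np.linspace(-3.0, 3.0, 601):
        Tx, Tw = T(x), T_omega(x, omega)
        # (iii): |T_omega x - v|^2 <= |x - v|^2 - omega (1 - omega) |Tx - x|^2
        ok_iii = ok_iii and (Tw - v) ** 2 <= (x - v) ** 2 - omega * (1 - omega) * (Tx - x) ** 2 + 1e-12
        # (iv): <x - T_omega x, x - v> >= (omega / 2) |x - Tx|^2
        ok_iv = ok_iv and (x - Tw) * (x - v) >= 0.5 * omega * (x - Tx) ** 2 - 1e-12
print(ok_iii, ok_iv)
```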

3 Subgradient Auxiliary Principle Algorithm

Let \(G:\mathcal H\rightarrow \mathcal H\) be \(L_G\)-Lipschitz continuous and \(\beta _G\)-strongly monotone, and let \(g:\mathcal H\rightarrow \mathcal H\) be \(L_g\)-Lipschitz continuous. In this section, we propose a new algorithm, called the Subgradient Auxiliary Principle Algorithm, for solving the problem \(\textrm{EPs}(C,f)\) via the mappings G and g, and we establish its convergence under certain conditions. First, we state the restrictions on the cost bifunction \(f: \mathcal H\times \mathcal H\rightarrow \mathcal R\) and on the parameter sequences.

- \((R_1)\):

-

The solution set \(\textrm{Sol}(C,f)\) of the problem \(\textrm{EPs}(C,f)\) is nonempty.

- \((R_2)\):

-

The cost bifunction f is quasimonotone and Lipschitz-type continuous with constants \(c_1>0\) and \(c_2>0\). f is jointly weakly continuous on \(\mathcal H\times C\) in the sense that, if \(\{x^k\}\), \(\{y^k\}\) converge weakly to \(\hat{x}\), \(\hat{y}\), respectively, then \(f(x^k,y^k)\rightarrow f(\hat{x}, \hat{y})\) as \(k\rightarrow \infty \).

- \((R_3)\):

-

For every integer \(k\ge 0\), all the positive parameters satisfy the following restrictions:

$$ \left\{ \begin{array}{l} \xi _k\in \left( 0,\min \left\{ \frac{1}{2c_1},\frac{1}{2c_2}\right\} \right) ,~\lim _{k\rightarrow \infty }\xi _k=\xi >0, \\ \gamma _k\in \left( 0,1-\xi _k(c_1+c_2)\right) , \\ \nu \in \left( 0,\min \left\{ 1,\frac{1}{\xi _k c_2}\right\} \right) ,\\ \omega \in \left( 0,\frac{1}{2}\right) ,~\mu \in \left( 0,\frac{2\beta _G}{L_G^2}\right) ,~\gamma \in \left( 0,\frac{\mu }{L_g}\left( \beta _G-\frac{\mu L_G^2}{2}\right) \right) ,~\tau \in \left( \gamma L_g,\mu \beta _G\right) ,\\ \alpha _k\in \left( 0,\min \left\{ 1,\frac{2(\mu \beta _G-\tau )}{\mu ^2 L_G^2-\tau ^2},\frac{1}{\tau -\gamma L_g}\right\} \right) ,~\sum _{k=0}^{\infty }\alpha _k=\infty ,~\lim _{k\rightarrow \infty }\alpha _k=0. \end{array}\right. $$
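All restrictions in \((R_3)\) are open intervals determined by \(c_1\), \(c_2\), \(\beta _G\), \(L_G\) and \(L_g\), so admissible parameters can be computed mechanically. The Python sketch below uses two hypothetical helpers, `choose_parameters` and `satisfies_R3`. They are not the parameter rule used in Section 4. The sketch takes the midpoint of each interval (or half of its upper end) and then checks every inequality in \((R_3)\). The constants \(c_1=c_2=0.5\) and \(L_g=1\) are assumptions; \(\beta _G\) and \(L_G\) are the values reported for Test 1.

```python
def choose_parameters(c1, c2, beta_G, L_G, L_g):
    """Hypothetical helper: pick values strictly inside the intervals of (R3).

    xi_k is taken constant (so lim xi_k = xi > 0 trivially) and alpha_k = a / (k + 2),
    which stays below a, tends to 0 and has a divergent sum.
    """
    xi = 0.5 * min(1 / (2 * c1), 1 / (2 * c2))
    gamma_k = 0.5 * (1 - xi * (c1 + c2))
    nu = 0.5 * min(1.0, 1 / (xi * c2))
    omega = 0.25
    mu = beta_G / L_G**2                                # midpoint of (0, 2 beta_G / L_G^2)
    gamma = 0.5 * (mu / L_g) * (beta_G - mu * L_G**2 / 2)
    tau = 0.5 * (gamma * L_g + mu * beta_G)             # midpoint of (gamma L_g, mu beta_G)
    a = min(1.0, 2 * (mu * beta_G - tau) / (mu**2 * L_G**2 - tau**2), 1 / (tau - gamma * L_g))
    return {"xi": xi, "gamma_k": gamma_k, "nu": nu, "omega": omega, "mu": mu,
            "gamma": gamma, "tau": tau, "alpha": lambda k: a / (k + 2)}

def satisfies_R3(p, c1, c2, beta_G, L_G, L_g, ks=range(100)):
    """Check every restriction of (R3) for the constant xi_k and for alpha_k, k in ks."""
    xi, mu, gamma, tau = p["xi"], p["mu"], p["gamma"], p["tau"]
    conds = [
        0 < xi < min(1 / (2 * c1), 1 / (2 * c2)),
        0 < p["gamma_k"] < 1 - xi * (c1 + c2),
        0 < p["nu"] < min(1.0, 1 / (xi * c2)),
        0 < p["omega"] < 0.5,
        0 < mu < 2 * beta_G / L_G**2,
        0 < gamma < (mu / L_g) * (beta_G - mu * L_G**2 / 2),
        gamma * L_g < tau < mu * beta_G,
    ]
    bound = min(1.0, 2 * (mu * beta_G - tau) / (mu**2 * L_G**2 - tau**2), 1 / (tau - gamma * L_g))
    conds += [0 < p["alpha"](k) < bound for k in ks]
    return all(conds)

# beta_G and L_G are the values reported in Test 1; c1 = c2 = 0.5 and L_g = 1 are assumptions.
c1 = c2 = 0.5
beta_G, L_G, L_g = 3.3983, 222.3145, 1.0
params = choose_parameters(c1, c2, beta_G, L_G, L_g)
print(satisfies_R3(params, c1, c2, beta_G, L_G, L_g))
```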

Let the mappings S and T be defined by (2.1)–(2.3). Now we present the Subgradient Auxiliary Principle Algorithm for solving the problem \(\textrm{EPs}(C,f)\).

For each \(k\ge 0\) and \(x\in \mathcal H\), set

where \(H_k=\{w\in \mathcal {H}: \langle x-\xi _k w_x-S_kx,w-S_kx\rangle \le \gamma _k\Vert x-S_kx\Vert ^2\}\) and \(w_x\in \partial f(x,\cdot )(S_kx)\).

Remark 3.1

-

(i)

Since \(x^*\) is a solution of the problem \(\textrm{EPs}(C,f)\) if and only if it is a fixed point of the mapping \(S_k\) defined by (3.1), it follows that if \(y^k=x^k\) in Algorithm 1, i.e., \(x^k=S_kx^k\) with \(\xi _k>0\), then \(x^k\) is a solution of the problem \(\textrm{EPs}(C,f)\). Hence the stopping criterion in Step 1 is justified.

-

(ii)

By Lemma 2.2, under assumptions \((R_1)\)–\((R_3)\), \(x^k\) is a solution of the problem \(\textrm{EPs}(C,f)\) if and only if it is a fixed point of the solution mapping \(T_k\) defined by (3.2). Thus, if \(z^k=x^k\) in Algorithm 1, i.e., \(x^k=T_kx^k\), then \(x^k\) is a solution of the problem \(\textrm{EPs}(C,f)\). Hence the stopping criterion in Step 2 is justified.

-

(iii)

As usual, for each \(\varepsilon >0\), an iterate \(x^k\) of Algorithm 1 is called an \(\varepsilon \)-solution of the problem \(\textrm{EPs}(C,f)\) if \(\Vert y^k-x^k\Vert \le \varepsilon \) and \(\Vert z^k-x^k\Vert \le \varepsilon \), that is, \(\max \{\Vert y^k-x^k\Vert ,\Vert z^k-x^k\Vert \}\le \varepsilon \).

The next lemma is crucial for the proof of our convergence theorem.

Lemma 3.2

Let \(\{x^k\}\) and \(\{y^k\}\) be the two sequences generated by Algorithm 1 and let \(x^*\in \textrm{Sol}(C,f)\). Under assumptions \((R_2)\) and \((R_3)\), the following claim holds

Proof

From (2.1) and Step 1, it follows that \(y^k=S_kx^k\), where the mapping \(S_k\) is defined in (3.1). Combining (3.2) and Step 2 yields \(z^k=T_kx^k\). Using the \((1-\nu )\)-strong quasi-nonexpansiveness of the mapping \(T_k\) (Lemma 2.3), (2.11), and assumptions \((R_2)\) and \((R_3)\), we obtain

which completes the proof.\(\square \)

Theorem 3.3

Let the cost bifunction f and the parameters satisfy assumptions \((R_1)\)–\((R_3)\). Then, two iteration sequences \(\{x^k\}\) and \(\{y^k\}\) generated by Algorithm 1 converge strongly to the unique solution \(x^*\in \textrm{Sol}(C,f)\) of the following variational inequality:

Proof

Let \(x^*\) satisfy the inequality (3.3). Then \(x^*\in \textrm{Sol}(C,f)\). From Lemma 2.2, it follows that \(x^*\) is a fixed point of \(T_k\) defined by (3.2). By Lemma 2.3, the mapping \(T_k\) is strongly quasi-nonexpansive on \(\mathcal H\). By (3.2) and Step 2, we have \(z^k=T_kx^k\). For every \(x\in \mathcal H\), since G is \(\beta _G\)-strongly monotone and \(L_G\)-Lipschitz continuous, we have

where the last inequality follows from the condition \(\alpha _k\in \left( 0,\min \left\{ 1,\frac{2(\mu \beta _G-\tau )}{\mu ^2 L_G^2-\tau ^2}\right\} \right) \) and \(\tau \in (0,\mu \beta _G)\) in \((R_3)\). Using the \(L_g\)-Lipschitz continuity of g, (3.4) and Lemma 2.5(iii), we obtain

where \(\alpha _k(\tau -\gamma L_g)\in (0,1)\) follows from \((R_3)\). Therefore, \(\{x^k\}\) is bounded. Applying Lemma 2.5(iv) to \(T_{\omega }:=(1-\omega )\textrm{Id}+\omega T_k\), and using the Cauchy–Schwarz inequality and (3.4), we have

Then, using the relation

we get

From Step 3, it follows that

Set \(a_k:=\Vert x^k-x^*\Vert \). Combining (3.6) and (3.7), it yields

We consider the following two cases:

Case 1. There exists a positive integer \(k_0\) such that \(a_{k+1}\le a_k\) for all \(k\ge k_0\). Then the limit \(\lim _{k\rightarrow \infty }a_k=A<\infty \) exists. Passing to the limit in (3.8) as \(k\rightarrow \infty \), and using the boundedness of \(\{x^k\}\) and \(\lim _{k\rightarrow \infty }\alpha _k=0\), we obtain \(\lim _{k\rightarrow \infty }\Vert T_kx^k-x^k\Vert =0\). From (3.8), it follows that

where \(\varGamma _k:=-2\langle \mu G(x^k)-\gamma g(x^k), x^*-x^k\rangle -2\alpha _k\Vert \gamma g(x^k)-\mu G(x^k)\Vert ^2-2\omega \alpha _k(1-\alpha _k\tau )\Vert T_kx^k-x^k\Vert \Vert x^k-x^*\Vert \). By the condition \(\sum _{k=0}^{\infty }\alpha _k=\infty \) in \((R_3)\), we deduce \(\liminf _{k\rightarrow \infty }\varGamma _k\le 0\) and hence

Combining this with the relation

yields

From (3.5), it follows

Passing to the limit as \(k\rightarrow \infty \), we have

By Lemma 3.2, we also have

Thus,

Using Lemma 3.2 yields

Since \(\{x^k\}\) is bounded, there exists a subsequence \(\{x^{k_j}\}\) such that \(x^{k_j}\rightharpoonup \bar{x}\) as \(j\rightarrow \infty \) and

Then, \(y^{k_j}\rightharpoonup \bar{x}\). Since C is closed and convex, C is weakly closed. Thus, from \(\{y^k\}\subset C\), we obtain \(\bar{x}\in C\). By the proof of [1, Lemma 3.1],

Passing to the limit in the last inequality as \(k\rightarrow \infty \), and using assumption \((R_2)\) and \(\lim _{k\rightarrow \infty }\xi _k=\xi >0\), we get \(f(\bar{x},y)\ge 0\) for all \(y\in C\). Thus, \(\bar{x}\in \textrm{Sol}(C,f)=\textrm{Fix}(T_k)\). Since \(x^*\) solves (3.3), it follows from (3.9) that

Since \(\gamma \in \left( 0,\frac{\mu }{L_g}\left( \beta _G-\frac{\mu L_G^2}{2}\right) \right) \), this implies \(\lim _{k\rightarrow \infty }a_k=0\). Thus, both \(\{x^k\}\) and \(\{y^k\}\) converge strongly to \(x^*\).

Case 2. There is no integer \(k_0\) such that \(a_{k+1}\le a_k\) for all \(k\ge k_0\). In this case, consider the integer sequence defined by

By [23], \(\{\phi (k)\}\) is a nondecreasing sequence satisfying

Replacing k by \(\phi (k)\) into (3.8), it follows that

Taking the limit as \(k\rightarrow \infty \) in (3.11) and using the boundedness of \(\{x^k\}\), we obtain

From (3.11), we obtain

Considering (3.9) again, we have

Combining this with (3.13), we obtain

Then, by using \(\lim _{k\rightarrow \infty }\alpha _k=0\) and (3.12), we have

Since \(\{x^{\phi (k)}\}\) is bounded, we can choose a subsequence \(\{x^{\phi (k_j)}\}\) such that

and

As in Case 1, we also have \(\hat{x} \in \textrm{Sol}(C,f)\). Hence \(\langle \mu G(x^*)-\gamma g(x^*), \hat{x}-x^*\rangle \ge 0\). Then, using (3.14) and \(\mu \beta _G-\gamma L_g>0\) in \((R_3)\), we deduce

and hence \(\limsup _{k\rightarrow \infty } a_{\phi (k)}=0\). Moreover, from (3.8) and (3.12), it follows that

Consequently

Recalling that \(a_k\le a_{\phi (k)}\) for all \(k\ge k_0\) in (3.10), we immediately obtain \(\lim _{k\rightarrow \infty } a_k=0\). Thus, both \(\{x^k\}\) and \(\{y^k\}\) converge strongly to the unique solution \(x^*\) of the variational inequality problem (3.3). This completes the proof. \(\square \)
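The integer sequence \(\phi (k)\) in Case 2 follows a standard construction (see [23]). Assuming the usual definition \(\phi (k)=\max \{j: k_0\le j\le k,~a_j<a_{j+1}\}\), it has the following properties:
- it is nondecreasing;
- it tends to infinity when \(\{a_k\}\) is not eventually nonincreasing;
- it satisfies \(a_{\phi (k)}<a_{\phi (k)+1}\) and \(a_k\le a_{\phi (k)+1}\).

The Python sketch below computes \(\phi \) for a hypothetical sequence and checks these properties. The helper name `mainge_index` is an assumption.

```python
import math

def mainge_index(a, k0):
    """phi(k) = max{ j : k0 <= j <= k, a[j] < a[j+1] } for k0 <= k <= len(a) - 2.

    Assumes a[k0] < a[k0 + 1], so the maximum is taken over a nonempty set.
    """
    phi, last = {}, None
    for k in range(k0, len(a) - 1):
        if a[k] < a[k + 1]:
            last = k
        phi[k] = last
    return phi

# A hypothetical sequence that is not eventually nonincreasing (the situation of Case 2).
a = [(2 + math.sin(k)) / math.sqrt(k + 1) for k in range(300)]
k0 = next(k for k in range(len(a) - 1) if a[k] < a[k + 1])
phi = mainge_index(a, k0)

ks = sorted(phi)
nondecreasing = all(phi[i] <= phi[j] for i, j in zip(ks, ks[1:]))
increase_at_phi = all(a[phi[k]] < a[phi[k] + 1] for k in ks)
dominated = all(a[k] <= a[phi[k] + 1] for k in ks)
print(nondecreasing, increase_at_phi, dominated)
```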

Remark 3.4

Theorem 3.3 shows that the sequences \(\{x^k\}\) and \(\{y^k\}\) constructed by the algorithm (SAPA) converge strongly to the unique solution of the variational inequality problem (3.3). This is a fundamental difference between our algorithm and existing algorithms. However, the set \(\textrm{Sol}(C,f)\) is not given explicitly, so the problem (3.3) is not easy to solve directly.

4 Numerical Experiments

An important application of the problem \(\textrm{EPs}(C,f)\) is the noncooperative n-person game. The problem is to find \(x^*\in C\) such that

where

-

The ith player’s strategy set is a closed convex set \(C_i\) of the Euclidean space \(\mathcal R^{s_i}\) for all \(i\in I:=\{1,2,\ldots ,n\}\).

-

The \(f_i: C:=C_1\times C_2\times \cdots \times C_n\rightarrow \mathbb {R}\) is the loss function of player i.

-

The \(x[y^i]\) stands for the vector obtained from \(x = (x^1,\ldots ,x^n)\in C\) by replacing \(x^i\) with \(y^i\).

By [21], a point \(x^*\in C\) is said to be a Nash equilibrium point on C if and only if

$$ f_i(x^*)\le f_i(x^*[y^i]),\quad \forall y^i\in C_i,~i\in I. $$

Then, we set

$$ f(x,y):=\sum _{i\in I}\left( f_i(x[y^i])-f_i(x)\right) ,\quad \forall x,y\in C. $$

It is easy to see that the problem of finding a Nash equilibrium point on C is equivalent to the problem \(\textrm{EPs}(C,f)\).
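The equivalence can be illustrated on a small hypothetical game. Assume the standard (Nikaidô–Isoda) form \(f(x,y)=\sum _{i}(f_i(x[y^i])-f_i(x))\). Two players choose scalar strategies in \(C_1=C_2=[0,10]\) with quadratic losses. The best responses are \(x_1=1-x_2/4\) and \(x_2=2-x_1/4\), so the Nash equilibrium is \(x^*=(8/15,28/15)\). The Python sketch below checks that \(f(x^*,y)\ge 0\) on sampled \(y\in C\), while a non-equilibrium point admits a y with \(f(x,y)<0\). The game and the sample size are assumptions.

```python
import numpy as np

# Hypothetical two-player game with scalar strategies C_1 = C_2 = [0, 10].
def f1(x):  # loss of player 1
    return (x[0] - 1.0) ** 2 + 0.5 * x[0] * x[1]

def f2(x):  # loss of player 2
    return (x[1] - 2.0) ** 2 + 0.5 * x[0] * x[1]

losses = [f1, f2]

def replace(x, i, yi):
    """x[y^i]: copy of x with the i-th component replaced by y^i."""
    z = np.array(x, dtype=float)
    z[i] = yi
    return z

def f(x, y):
    """f(x, y) = sum_i ( f_i(x[y^i]) - f_i(x) ); note f(x, x) = 0."""
    return sum(fi(replace(x, i, y[i])) - fi(x) for i, fi in enumerate(losses))

# Best responses x1 = 1 - x2/4 and x2 = 2 - x1/4 intersect at (8/15, 28/15), inside C.
x_star = np.array([8 / 15, 28 / 15])

rng = np.random.default_rng(3)
ys = rng.uniform(0.0, 10.0, size=(1000, 2))
eq_ok = min(f(x_star, y) for y in ys) >= -1e-12                 # x_star solves EPs(C, f)
detects_non_eq = min(f(np.array([3.0, 3.0]), y) for y in ys) < 0  # (3, 3) is not an equilibrium
print(eq_ok, detects_non_eq)
```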

Now we provide some computational results for solving the problem \(\textrm{EPs}(C,f)\). They illustrate the effectiveness of the Subgradient Auxiliary Principle Algorithm (SAPA) and compare it with two well-known algorithms that use the solution mapping S defined in (2.1):
- the Extragradient Algorithm (EA) introduced by Quoc et al. [32, Algorithm 1], with the auxiliary bifunction \(L(x,y)=\frac{1}{2}\Vert y-x\Vert ^2\) for all \(x,y\in \mathcal H\);
- the Halpern Subgradient Extragradient Algorithm (HSEA) proposed by Hieu [15, Algorithm 3.2].

Recall that an iterate \(x^k\), with \(y^k\) computed by the solution mapping S, is a solution of the problem \(\textrm{EPs}(C,f)\) if and only if \(y^k=x^k\). Therefore, we use the sequence \(\{S_k= \Vert x^k -y^k\Vert : k=0,1,\ldots \}\) to measure the convergence of all the above algorithms. For a given \(\varepsilon >0\), we say that \(x^k\) is an \(\varepsilon \)-solution of the problem \(\textrm{EPs}(C,f)\) if \(S_k\le \varepsilon \).

In the tests, the parameters of the algorithms are chosen as follows.

-

Subgradient Auxiliary Principle Algorithm (SAPA):

$$\begin{aligned} \xi _k= & \frac{1}{4c_1}+\frac{1}{5k+400},\quad \gamma _k=\frac{1}{2}(1-\xi _k(c_1+c_2)),\quad \nu =\frac{1}{2}\min \left\{ 1,\frac{1}{\xi _k c_2}\right\} ,\\ \omega= & \frac{1}{4},\quad \mu =\frac{\beta _G}{L_G},\quad \gamma =\frac{\mu }{2L_g}\left( \beta _G-\frac{\mu L_G^2}{2}\right) ,\quad \tau =\frac{1}{2}\min (\gamma L_g,\mu \beta _G),\\ \alpha _k= & \frac{a}{k+1}\quad \text { where }~a=\min \left\{ 1,\frac{2(\mu \beta _G-\tau )}{\mu ^2 L_G^2-\tau ^2},\frac{1}{\tau -\gamma L_g}\right\} . \end{aligned}$$ -

Extragradient Algorithm (EA):

$$ \beta :=\frac{1}{2},\quad \rho :=\frac{1}{2\Vert z\Vert (h|e_1|+g|p_1|)}\in \left( 0,\min \left\{ \frac{\beta }{2c_1},\frac{\beta }{2c_2}\right\} \right) . $$ -

Halpern Subgradient Extragradient Algorithm (HSEA):

$$ \lambda :=\frac{1}{4c_1}\in \left( 0,\min \left\{ \frac{1}{2c_1},\frac{1}{2c_2}\right\} \right) ,\quad \alpha _n:=\frac{1}{5n+10},\quad \forall n\ge 0. $$

The auxiliary convex subproblems in the algorithms are solved with the function fmincon of the Matlab 2018a Optimization Toolbox. All programs were run on a desktop PC with an Intel(R) Core(TM) i7-12700F CPU @ 2.10 GHz 2.50 GHz and 32.00 GB RAM.

Let \(\mathcal H\) be a real Hilbert space. We introduce a new cost bifunction \(f: \mathcal H\times \mathcal H\rightarrow \mathcal R\) and a constraint set C of the forms

where \(x,y\in \mathcal H\), \(R,p_1,p_2,e_1,e_2\in \mathcal R\), \(l>0\), \(g>0\), \(h>0\), \(m\in (g+h,\infty )\), \((z,r)\in \mathcal H\times \mathcal H\). Then C is nonempty, closed and convex, and f has the following properties.

Proposition 4.1

Let the bifunction \(f:\mathcal H\times \mathcal H\rightarrow \mathcal R\) be defined by (4.2). Then,

-

(i)

f is pseudomonotone;

-

(ii)

f is Lipschitz-type continuous with constants \(c_1=c_2=\frac{\Vert z\Vert (h|e_1|+g|p_1|)}{2}\).

Proof

Let \(x,y\in \mathcal H\) satisfy \(f(x,y)\ge 0\). Then we have

Since \(m\in (g+h,+\infty )\), it follows that \(\langle z, y-x\rangle \ge 0\) and

By definition, the bifunction f is pseudomonotone on \(\mathcal H\times \mathcal H\).

By (4.2), for every \(x,y,t\in \mathcal H\), we have

where the last inequality is deduced from the relation

By using the relation

we obtain

Thus, f is Lipschitz-type continuous with \(c_1=c_2=\frac{\Vert z\Vert (h|e_1|+g|p_1|)}{2}\). The proof is complete. \(\square \)

Test 1

First, let us run the algorithm (SAPA) in \(\mathcal {R}^s\) with \(s=5\). The starting point is \(x^0=(1,0,1,100,25)^{\top }\). The parameters R, g, p, q, h, e, f, m, l and the vectors r, z are randomly chosen as follows:

Consider the mappings \(G:\mathcal R^s\rightarrow \mathcal R^s\), \(g:\mathcal R^s\rightarrow \mathcal R^s\):

where \(q\in \mathcal R^s\), \(Q=AA^{\top }+B+D\), A is an \(s\times s\) matrix, B is an \(s\times s\) skew-symmetric matrix, and D is an \(s\times s\) diagonal matrix with nonnegative diagonal entries (so \(\langle Qx,x\rangle =\langle (AA^{\top }+D)x,x\rangle \ge 0\) for all x). Clearly, G is \(\beta _G\)-strongly monotone and \(L_G\)-Lipschitz continuous, where \(\beta _G\) is the smallest eigenvalue of the symmetric part \(AA^{\top }+D\) of Q and \(L_G=\Vert Q\Vert \). The matrices A, B, D of the mapping G are chosen randomly as follows:

The eigenvalues and the norm of Q are then computed as follows:

Hence G is strongly monotone with constant \(\beta _G=3.3983\) and Lipschitz continuous with constant \(L_G=222.3145\).
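The constants \(\beta _G\) and \(L_G\) of \(G(x)=Qx+q\) can be computed as in the Python sketch below. Because B is skew-symmetric, \(\langle Qh,h\rangle =\langle (AA^{\top }+D)h,h\rangle \). The sketch therefore takes \(\beta _G\) as the smallest eigenvalue of the symmetric matrix \(AA^{\top }+D\), and \(L_G\) as the spectral norm of Q. It then checks strong monotonicity and Lipschitz continuity on random pairs. The random matrices are hypothetical and are not the matrices of Test 1. The diagonal entries of D are drawn from \([0.5,2]\) so that \(\beta _G>0\).

```python
import numpy as np

rng = np.random.default_rng(4)
s = 5
A = rng.standard_normal((s, s))
R = rng.standard_normal((s, s))
B = R - R.T                                   # skew-symmetric
D = np.diag(rng.uniform(0.5, 2.0, s))         # positive diagonal (hypothetical choice)
Q = A @ A.T + B + D
q = rng.standard_normal(s)
G = lambda x: Q @ x + q

# <Q h, h> = <(A A^T + D) h, h> because <B h, h> = 0 for skew-symmetric B.
beta_G = np.linalg.eigvalsh(A @ A.T + D).min()   # strong monotonicity constant
L_G = np.linalg.norm(Q, 2)                       # Lipschitz constant (spectral norm)

ok = True
for _ in range(1000):
    x, y = rng.standard_normal(s), rng.standard_normal(s)
    h, Gh = x - y, G(x) - G(y)
    ok = ok and Gh @ h >= beta_G * (h @ h) - 1e-9
    ok = ok and np.linalg.norm(Gh) <= L_G * np.linalg.norm(h) + 1e-9
print(beta_G > 0, ok)
```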

It is easy to compute that

and

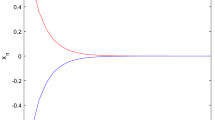

With the tolerance \(\max \{\Vert y^k-x^k\Vert ,\Vert x^{k+1}-x^k\Vert \}\le \varepsilon =10^{-3}\), the computational results of the algorithm (SAPA) are shown in Fig. 1 and Table 1.

Test 2

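Consider the infinite-dimensional Hilbert space \(\mathcal H=L^2[0,1]\) with inner product

$$ \langle x,y\rangle =\int _0^1x(t)y(t)\,dt,\quad \forall x,y\in \mathcal H, $$

and induced norm

$$ \Vert x\Vert =\left( \int _0^1x^2(t)\,dt\right) ^{1/2},\quad \forall x\in \mathcal H. $$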

The constraint C and the cost bifunction f are defined by (4.1) and (4.2). We compare the algorithm (SAPA) with the two algorithms above for different starting points \(x_0\). The numerical results are shown in Table 2.
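In practice, elements of \(L^2[0,1]\) have to be discretized, and how this was done in the experiments is not stated. The Python sketch below therefore assumes a uniform grid on \([0,1]\) and approximates the inner product and norm of \(L^2[0,1]\) by the composite trapezoidal rule. For \(x(t)=t\) and \(y(t)=\sin (\pi t)\), the exact values are \(\langle x,x\rangle =1/3\) and \(\Vert y\Vert =1/\sqrt{2}\).

```python
import numpy as np

t = np.linspace(0.0, 1.0, 10001)   # uniform grid on [0, 1] (an assumption)
h = t[1] - t[0]

def inner(x_vals, y_vals):
    """Composite trapezoidal approximation of <x, y> = int_0^1 x(t) y(t) dt."""
    p = x_vals * y_vals
    return h * (p.sum() - 0.5 * (p[0] + p[-1]))

def norm(x_vals):
    """Induced norm ||x|| = sqrt(<x, x>)."""
    return np.sqrt(inner(x_vals, x_vals))

x = t                     # x(t) = t
y = np.sin(np.pi * t)     # y(t) = sin(pi t)
print(inner(x, x), norm(y))   # close to 1/3 and 1/sqrt(2)
```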

From the comparison in Fig. 2 and Table 2 of the Subgradient Auxiliary Principle Algorithm (SAPA) with two other algorithms, the Extragradient Algorithm (EA) and the Halpern Subgradient Extragradient Algorithm (HSEA), and from the preliminary numerical results reported in Table 1 and Fig. 1, we observe the following:

-

The convergence speed of our algorithm (SAPA) is sensitive to all the parameters. In particular, the CPU time and the number of iterations depend strongly on the parameter sequence \(\{\xi _k\}\).

-

The CPU time (in seconds) and the number of iterations of our algorithm are smaller than those of the algorithms (EA) and (HSEA).

Conclusions

In this paper, we introduce a new solution mapping for equilibrium problems in a real Hilbert space. We show that this mapping is strongly quasi-nonexpansive under quasimonotonicity and Lipschitz-type continuity assumptions on the cost bifunction. Then, the Subgradient Auxiliary Principle Algorithm is constructed from the solution mapping and the classical auxiliary principle. Finally, the theoretical results are illustrated by several preliminary numerical experiments.

References

Anh, P.N.: A hybrid extragradient method for pseudomonotone equilibrium problems and fixed point problems. Bull. Malays. Math. Sci. Soc. 36, 107–116 (2013)

Anh, P.N., Anh, T.T.H., Hien, N.D.: Modified basic projection methods for a class of equilibrium problems. Numer. Algor. 79, 139–152 (2018)

Anh, P.N., Le Thi, H.A.: An Armijo-type method for pseudomonotone equilibrium problems and its applications. J. Glob. Optim. 57, 803–820 (2013)

Anh, P.N., Hai, T.N., Tuan, P.M.: On ergodic algorithms for equilibrium problems. J. Glob. Optim. 64, 179–195 (2016)

Anh, P.N., Hieu, D.V.: Multi-step algorithms for solving EPs. Math. Model. Anal. 23, 453–472 (2018)

Anh, P.N., Ansari, Q.H., Tu, H.P.: DC auxiliary principle methods for solving lexicographic equilibrium problems. J. Glob. Optim. 85, 129–153 (2023)

Ansari, Q.H., Balooee, J.: Auxiliary principle technique for solving regularized nonconvex mixed equilibrium problems. Fixed Point Theory 20, 431–450 (2019)

Bianchi, M., Schaible, S.: Generalized monotone bifunctions and equilibrium problems. J. Optim. Theory Appl. 90, 31–43 (1996)

Blum, E., Oettli, W.: From optimization and variational inequality to equilibrium problems. Math. Stud. 63, 127–149 (1994)

Combettes, P.L., Hirstoaga, S.A.: Equilibrium programming in Hilbert spaces. J. Nonlinear Convex Anal. 6, 117–136 (2005)

Eskandani, G.Z., Raeisi, M., Rassias, T.M.: A hybrid extragradient method for solving pseudomonotone equilibrium problems using Bregman distance. J. Fixed Point Theory Appl. 20, 132 (2018)

Fan, K.: A minimax inequality and applications. In: Shisha, O. (ed.) Inequalities III, pp. 103–113. Academic Press, New York (1972)

Giannessi, F., Maugeri, A., Pardalos, P.M. (eds.): Equilibrium Problems: Nonsmooth Optimization and Variational Inequality Models. Kluwer Academic Publishers, Dordrecht (2004)

Hai, T.N.: Contraction of the proximal mapping and applications to the equilibrium problem. Optimization 66, 381–396 (2017)

Hieu, D.V.: Halpern subgradient extragradient method extended to equilibrium problems. RACSAM 111, 823–840 (2017)

Iiduka, H., Yamada, I.: A subgradient-type method for the equilibrium problem over the fixed point set and its applications. Optimization 58, 251–261 (2009)

Iusem, A.N., Sosa, W.: Iterative algorithms for equilibrium problems. Optimization 52, 301–316 (2003)

Iusem, A.N., Nasri, M.: Inexact proximal point methods for equilibrium problems in Banach spaces. Numer. Funct. Anal. Optim. 28, 1279–1308 (2007)

Khatibzadeh, H., Mohebbi, V.: Proximal point algorithm for infinite pseudo-monotone bifunctions. Optimization 65, 1629–1639 (2016)

Khatibzadeh, H., Mohebbi, V., Ranjbar, S.: Convergence analysis of the proximal point algorithm for pseudo-monotone equilibrium problems. Optim. Methods Softw. 30, 1146–1163 (2015)

Konnov, I.V.: Combined Relaxation Methods for Variational Inequalities. Springer, Berlin (2000)

Kraikaew, R., Saejung, S.: Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 163, 399–412 (2014)

Maingé, P.E.: The viscosity approximation process for quasi-nonexpansive mappings in Hilbert spaces. Comput. Math. Appl. 59, 74–79 (2010)

Maingé, P.-E., Moudafi, A.: Coupling viscosity methods with the extragradient algorithm for solving equilibrium problems. J. Nonlinear Convex Anal. 9, 283–294 (2008)

Mastroeni, G.: Gap function for equilibrium problems. J. Glob. Optim. 27, 411–426 (2003)

Mastroeni, G.: On auxiliary principle for equilibrium problems. Publ. Dipart. Math. Univ. Pisa 3, 1244–1258 (2000)

Moudafi, A.: Proximal methods for a class of bilevel monotone equilibrium problems. J. Glob. Optim. 47, 287–292 (2010)

Moreau, J.J.: Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. Fr. 93, 273–299 (1965)

Nikaidô, H., Isoda, K.: Note on noncooperative convex games. Pac. J. Math. 5, 807–815 (1955)

Noor, M.A.: Auxiliary principle technique for equilibrium problems. J. Optim. Theory Appl. 122, 371–386 (2004)

Quoc, T.D., Anh, P.N., Muu, L.D.: Dual extragradient algorithms extended to equilibrium problems. J. Glob. Optim. 52, 139–159 (2012)

Quoc, T.D., Muu, L.D., Hien, N.V.: Extragradient algorithms extended to equilibrium problems. Optimization 57, 749–776 (2008)

Santos, P., Scheimberg, S.: An inexact subgradient algorithm for equilibrium problems. Comput. Appl. Math. 30, 91–107 (2011)

Yordsorn, P., Kumam, P., Ur Rehman, H.: Modified two-step extragradient method for solving the pseudomonotone equilibrium programming in a real Hilbert space. Carpathian J. Math. 36, 313–330 (2020)

Acknowledgements

The authors would like to thank the anonymous referees for their really helpful and constructive comments and suggestions that helped us very much in improving the paper. This research is funded by the Vietnam National Foundation for Science and Technology Development (NAFOSTED) under Grant Number 101.02-2023.01.

Additional information

Communicated by Christiane Tammer.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Anh, P.N. Strong Quasi-nonexpansiveness of Solution Mappings of Equilibrium Problems. Vietnam J. Math. (2024). https://doi.org/10.1007/s10013-024-00697-9

DOI: https://doi.org/10.1007/s10013-024-00697-9

Keywords

- Equilibrium problems

- Solution mapping

- Quasi-nonexpansive

- Subgradient method

- Quasimonotone

- Lipschitz-type condition