Abstract

Micro-seismic events within a mine have the potential to disturb planned daily operations as unpredictable events can lead to safety concerns. Mines are typically large-scale underground endeavours which cannot easily be modelled on the macro scale. One increasingly popular technique for simulating activity in mines is the material point method (MPM), which is a numerical algorithm designed for analysing large deformations. One attraction of the MPM over many other numerical strategies is that it does not require repeated remeshing of the underlying computational grid, but this means that the MPM is not particularly suited to analysis of small-scale events. In an effort to address this dichotomy, in this work we propose an adaptation to the regular grid used in MPM together with a novel time-stepping strategy. This assists greatly in the solution of large modelling problems typical of those arising in mining contexts. We present a new approach to extracting mining-induced micro-seismic events from a numerical model by converting simulated plastic strain to seismic potency, with the elastoplastic Coulomb criterion used to describe tensile and shear fracturing. Our results are benchmarked against a standard geotechnical problem in order to validate our formulation. The potential practical usefulness of the model is demonstrated by applying it to a real case study consisting of 4 months of recorded micro-seismic events taken from a mine in Tasmania.


1 Introduction

Geotechnical engineers use a plethora of information to help plan future excavations of rock mass and thereby ensure safe and reliable extraction of resources. Stress modelling is one of many tools that help to manage the risk associated with seismic hazard, especially in areas where rock conditions are not particularly favourable to mining. Several stress modelling methods are frequently used in this industry, each with its own advantages and limitations, and choosing an appropriate one for a specific problem must be done with careful consideration.

Two of the standard modelling techniques are based on boundary or finite elements. The standard boundary element method (BEM) (Brebbia 1973) is ideal for modelling large-scale mining configurations and is based on the assumption of an infinite linear-elastic medium. This restricts its use in situations where the rock is heterogeneous, which stimulated further work to suggest refinements that would enable non-linear materials to be accounted for. Salamon (1993) was the first to attempt to simulate seismic failure with the BEM. His work was later extended by Linkov (2005), in which a BEM domain was pre-seeded with potential weaknesses or slip planes. One significant drawback of this approach is that prior knowledge about weakness planes has to be built into the grid and that no new failure planes can be generated during a simulation. Malovichko and Basson (2014) avoided this problem by further extending Linkov’s work and proposing what is now known as the Salamon–Linkov method to model mining-induced seismic events. This method is currently used in numerous mines for interpretation of seismicity and forecast of seismic hazard, but it is known to have its own limitations, largely owing to its dependence on the underlying BEM strategy. Included in these restrictions are the problems associated with analysing non-uniform materials, together with the observation that the method struggles to model accurately seismic sources close to excavations. The BEM imposes constraints on the seeding of potential failure zones close to the elements that represent excavations. Unfortunately, it is here that seismic sources tend to be the most prominent.

Another family of models, best referred to as finite element method (FEM) techniques, tends to be very reliable for the analysis and simulation of a spectrum of mining-related problems. This approach is capable of modelling a heterogeneous medium of rock mass, but often struggles when dealing with large deformations. In this instance special remeshing of the modelling grid is necessary and this is undesirable, since seismic sources often occur in areas with numerous large deformations. This magnifies the computational complexity of the problem and often means that large mining layouts are beyond the scope of standard FEM techniques. Nevertheless, many commercial software packages have been developed that rely on finite elements, including the popular libraries ABAQUS (Simulia 2019) and FLAC (Itasca 2019). Discrete element methods (DEM) are also often used in the mining context and can cope with large grid distortions. They are particularly well suited to studying cave propagation or damage to the skin of excavations. However, due to the computational complexity, DEM are not often used to study macro-scale problems involving entire mining layouts. An example of DEM software often used in the mining context is 3DEC as described in Itasca (2021).

In this paper, we investigate the feasibility of using a third group of techniques in modelling seismic events induced within a large mining layout. This will complement our knowledge of the strengths and weaknesses of both the BEM and FEM strategies. The principal attraction of this third family, often referred to as material point methods (MPM), is that it is capable of describing large deformations whilst the need to update the underlying grid is circumvented. This sidesteps the considerable computational expense associated with remeshing and is possible as the MPM discretises the mining geometry using Lagrangian material points, subsequently referred to as particles, to carry history-dependent material properties. This contrasts with other types of simulation, which ascribe properties to elements as opposed to particles. Moreover, representing the domain as a collection of points rather than elements means that it is much simpler to construct models of possibly complex mining geometry. The original version of the MPM (Sulsky et al. 1994) utilised a regular background grid, which is not suitable for very large models because the resolution of the grid can significantly influence the results (Steffen et al. 2008). A high grid resolution is necessary adjacent to mining excavations to ensure that important information such as stress or displacement near the voids is captured accurately. This type of grid is illustrated in Fig. 1a, which shows a clear fine-grained mesh structure. Away from this region a relatively coarse collection of grid cells would suffice to cover the zones where much less deformation is expected. Standard MPM, however, requires a high-resolution grid over the entire domain to ensure that sufficient accuracy is achieved near mining excavations. This imposes such a high demand on computational resources that it makes it difficult to simulate even relatively simple and straightforward problems.

Subsequent studies have sought to generalise the original MPM formulation. In particular, we note that there have been a number of refinements (Al-Kafaji 2013; Hamad 2014; Pantev 2016) that have used either non-regular or non-structured meshes for MPM simulations. Typically these grids use triangular meshes for two-dimensional applications and tetrahedral meshes in three dimensions. This allows one to refine in zones that require high accuracy. Although this approach reduces the number of background grid cells needed in a simulation, it does suffer from a few drawbacks. In three dimensions, tetrahedral meshes are complicated to produce and it is normally necessary to appeal to an advanced grid generator to do this. (An example of an intricate tetrahedral grid is shown in Fig. 1b.) This difficulty with the grid is particularly prevalent when modelling mining excavations which include development tunnels that typically are of arbitrary orientation and dimensions. Another major disadvantage arises from the fact that, in contrast to linear hexahedron elements, the derivatives of the shape functions for a linear tetrahedron are constant over the element (Hughes 1987). Stresses and strains may then become highly inaccurate, making it unreliable for stress analysis. To solve this problem higher order interpolation techniques are required, which increases the complexity of the simulation. Therefore, as a precursor to using the MPM to simulate seismic sources for a large scale mining layout, we need to address some of these meshing problems and discuss these in Sect. 2 below.

Several attempts have been made to include local refinement in areas of high deformation for the regular MPM grid. Lian et al. (2014) introduced the tied interface grid material point method (TIGMPM), which allows for several layers of sub-grids to be present within the main background mesh of the MPM. Vertices that share a common interface between the different grid layers are identified as being tied to that interface and transmit various properties such as the velocity, acceleration and force across the interface. Subsequent work (Lian et al. 2015) described the so-called mesh-grading material point method (MGMPM); although this shares commonalities with the TIGMPM, vertices that sit on the interface of sub-grids are introduced as additional degrees of freedom in the grid. Standard tri-linear shape functions are then modified to include special treatment when these vertices are present. The work by Gao (2017) presented an adaptation of the generalized material point method (Bardenhagen and Kober 2004) which shares similarities with the TIGMPM in the sense that interface nodes are tied to the common interface, but is extended to use higher-order basis functions.

Figure 1 illustrates a section view of a typical model of a pit in an Australian gold mine with a selection of possible grid structures. The first example demonstrates a classic MPM grid which is of very high resolution in the region near excavations and is likely to be computationally expensive. The tetrahedral grid shown in the second panel requires fewer computational resources, but needs a complex mesh generator for its construction. The TIGMPM and MGMPM refinements illustrated in the third case attempt to combine the advantages enjoyed by the previous approaches; they require significantly fewer cells, which in turn means reduced computational requirements.

Top: section view of a pit of an Australian gold mine. The three panels illustrate the commonly used MPM mesh refinement techniques. In the top panel there is a relatively fine regular grid such that the region of excavation can be accurately captured. This grid configuration requires extensive computational resources. The middle panel shows a tetrahedral mesh typically used in three-dimensional simulations. The bottom diagram shows the MGMPM philosophy in which several layers of sub-grids are embedded. A rectangular region around the excavation is identified and the cells are repeatedly subdivided until the desired level of refinement is achieved. Note that due to the rectangular nature of the methods, there is inevitable over-refinement in certain parts of the grid that is relatively remote from the excavation; this is shown in more detail in Fig. 2

In this work, we investigate some of the above MPM grid adaptations to determine their applicability to large mining layout modeling, with an emphasis on large deformation and the extraction of seismic sources. We adapt these approaches to improve grid generation for mining applications and test our proposals on a conceptual model in Sect. 3. We apply our methods to a real mining example in Sect. 4 and conclude with some discussion and proposals for further work in Sect. 5.

2 A Multi-level MPM Grid

To construct a mining model using the MPM framework, material points (particles) representing the solid rock are seeded throughout the entire domain. Where excavations like tunnels, stopes or caves are present, the particles that fall inside these voids are often simply ignored and removed. As an alternative to this rather crude approach, these particles may instead be assigned different material properties so that they can continue to exert some influence within the overall model. For example, caves in mines may be filled with residual rock, thereby creating a region at a lower density than its immediate surroundings, but still able to affect the nearby environment owing to gravitational loading. An Eulerian background grid is then placed over the domain and plays the role of a communication tool between the particles: information such as stress, velocity, mass and other constitutive material properties is continuously interpolated between the particles and the background grid. As already mentioned, the cell size of the background grid can significantly influence the performance of the model. Therefore, the introduction of areas of local refinement near mining excavations is vital to capture any fine detail that may otherwise be missed. We first review briefly the work done by others in this direction (Gao 2017; Lian et al. 2014, 2015) before proposing a novel method of grid refinement developed with applications to the mining industry specifically in mind.

In Sect. 1, we described both the TIGMPM and MGMPM. In their generic forms both use layers of grid refinement in which the cell size of each lower level grid is half the cell size of its parent layer. These methods are also constrained by the fact that all sub-grid layers must be rectangular in design. The restriction to rectangular sub-grids means that it can be difficult to resolve solution behaviour adequately in a simulation, especially in three dimensions, and can lead to unnecessary work. We refer the reader to the cartoon, Fig. 2, as a simple illustration of this phenomenon. Here we have a triangular region of excavation within the computational zone. Clearly we ought to introduce a refined grid over the excavation, but the fact that the sub-layer region has to be rectangular itself means that we are forced to refine parts of the grid where this is not really necessary. This adds to the computational expense, and while this increase may be minimal and probably inconsequential in simple examples, it can be of great significance in the more complex geometries typically encountered in practical problems that include mining tunnels such as stopes, declines and other development.

Example of the grid refinement needed for TIGMPM and MGMPM. Here the need to introduce a subgrid to cover the excavated region, together with the requirement that the sub-grid itself must be rectangular, means that over the highlighted zones there is unnecessary refinement which adds to the already substantial computational overhead

A second disadvantage of classical TIGMPM and MGMPM is that refinement regions must not be allowed to intersect, since this may cause some grid vertices to acquire negative mass. A vertex that has a negative mass possesses a negative momentum, and when this happens instability in the MPM normally sets in with a consequent severe loss of accuracy (Anderson and Anderson 2007). Figure 3 shows two potentially feasible examples involving three layers of refinement. In one case (left) the refinement is valid in the sense that no problems are anticipated, but in the other case the local refinement is likely to fail because two of the sub-grids intersect at a few particle nodes. Experience suggests that local refinement regions should ideally use arbitrary shapes and the minimal number of vertices necessary to describe the area; an example of this is shown in Fig. 4.

Valid and invalid configurations of TIGMPM and MGMPM. Left: a valid configuration of main grids with associated sub-grids. Right: a potentially problematic configuration of a main grid with two intersecting sub-grids with a zoomed-in view. Filled cells with problematic vertices are highlighted to indicate those areas where vertices may acquire negative mass

Example of the steps of grid refinement for the multi-level grid. This process is described in Sect. 2.1

The study by Gao (2017) adapted the ideas behind the generalized interpolation material point (GIMP) method (Bardenhagen and Kober 2004) so that the usual constraint of a rectangular grid shape could be relaxed. The study demonstrated examples of two arbitrarily shaped hyper-elastic jello squares that collide and illustrated how, during each MPM time step, the grid is able to adapt, thereby capturing areas of high deformation. In these simulations intricate interpolation functions were used in preference to the standard tri-linear shape functions of the MPM, but incorporating these more complicated methods into the mining context is not straightforward. The difficulty arises when simulating small excavations, like tunnels, as particles may communicate with grid vertices outside their containing cells, making it awkward to represent faithfully the discontinuity of the opening. For example, when an excavation spans only one grid cell and higher-order interpolation functions such as those of GIMP are used, the presence of the opening may be smeared over the background grid, because material points are allowed to communicate with nodes of grid cells outside their containing cell. When using standard linear interpolation functions, the presence of the opening is preserved, because each material point is only allowed to communicate with the nodes of the cell in which it is contained. We therefore base our approach to grid refinement on that described in Gao (2017) but incorporate modifications better suited to mining-specific situations. We chose to use standard tri-linear shape functions for interpolation as and when required.

An example of a three-dimensional grid cell of length L is sketched in Fig. 5. This cell is connected to four lower-level (child) cells each of size L/2. In this figure, the red vertices are referred to as parent nodes and they follow the conventional MPM methodology; hence mass, momentum and forces are interpolated using tri-linear shape functions from particles to these nodes. The vertices shaded green are denoted child nodes and each inherits its properties from its parents. To be more specific, in Fig. 5 the child node ab derives its various properties simply as averages of the values at the two parent nodes a and b. In a similar way, the properties of the child node labelled abcd are the averaged values at the four parents a, b, c, and d. We point out that there is no formal restriction on how many faces of a parent cell are connected to child cells. Instead, only one constraint needs to be imposed here: child cells sharing an interface with a parent must have a dimension that is one half that of the higher level cell. One consequence of this requirement is that a parent cell cannot be connected to a child that is more than one level lower than itself.
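The inheritance rule for child nodes can be illustrated with a short Python sketch. It is only an illustration of the averaging described above: the function name `child_node_value` and the nodal velocities are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np


def child_node_value(parent_values):
    """Average a nodal quantity over the parents of a child node.

    A child on an edge has two parents (e.g. a and b); a child in the
    middle of a face has four parents (e.g. a, b, c and d).
    """
    parents = np.asarray(parent_values, dtype=float)
    if parents.shape[0] not in (2, 4):
        raise ValueError("a child node is expected to have two or four parents")
    return parents.mean(axis=0)


# Hypothetical nodal velocities (m/s) at the four parent nodes of one cell face.
v_a = np.array([1.0, 0.0, 0.0])
v_b = np.array([3.0, 0.0, 0.0])
v_c = np.array([1.0, 2.0, 0.0])
v_d = np.array([3.0, 2.0, 4.0])

v_ab = child_node_value([v_a, v_b])                # edge child node "ab"
v_abcd = child_node_value([v_a, v_b, v_c, v_d])    # face child node "abcd"
```

The same helper applies to any nodal quantity that a child inherits, whether scalar or vector valued.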

Given this outline of the computational strategy to be employed, we next delve deeper into two key components of the method; the generation of the grid and how the solution is advanced in time.

2.1 Construction of the Grid

We again make use of the pit used in Fig. 1 to explain the procedure. Here a two-dimensional slice of the pit is taken to provide a simple example for illustration of the method characterised in Fig. 4. While this case is straightforward, it is easily extendable to higher dimensions. In order to describe the process of constructing the underlying mesh, it is helpful to introduce some notation. Let \(L_{i}\) denote the dimension of a grid cell at level i, where \(0\le i\le n\); then \(L_0\) is the size of the initial (coarsest) grid and corresponds to the lowest level of cell resolution, while \(L_n\) is the highest resolution (smallest size) used in the grid.

The grid is then constructed following a sequence of steps. For definiteness, let us describe the procedure for the type of problem exemplified by Fig. 4 so that we aim to form a grid around some line lying in the computational domain. First, the entire domain is seeded with a regular background grid of size \(L_0\) which follows exactly the procedure that is used in standard MPM (Sulsky et al. 1994). Then we identify all those cells through which the pit boundary passes together with their immediate neighbouring cells. These cells are subdivided into smaller entities of dimension \(L_1=\frac{1}{2}L_0\).

This method then proceeds iteratively. Suppose we have reached the stage where the smallest cell has size \(L_I\) for some integer \(I\,(<n)\). Once again we identify the cells on the pit boundary, together with their neighbours, and consider whether these cells are viable candidates for subdivision. A cell of size \(L_i\) can only be successfully subdivided if none of its neighbours are at a level higher than \(L_j\) with \(j>i+1\). There is guaranteed to be at least one cell of size \(L_I\) that can be subdivided, thereby producing cells of dimension \(L_{I+1}\). We can now repeat the process, successively refining the grid until \(L_{I+1}=L_n\), at which point no further subdivision is permitted and the final grid is obtained.
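The iterative refinement can be sketched in two dimensions as follows. This is a minimal illustration under stated assumptions rather than the implementation used in this work: cells are stored as hypothetical `(level, ix, iy)` tuples, the boundary is a circle chosen purely for the example, neighbours include diagonal cells, and the restriction on the levels of neighbouring cells is not checked explicitly.

```python
def circle_crosses_cell(level, ix, iy, l0=4.0, centre=(100.0, 100.0), radius=10.0):
    """Return True if the circle outline passes through cell (ix, iy) at this level."""
    size = l0 / 2**level
    x0, y0 = ix * size, iy * size
    x1, y1 = x0 + size, y0 + size
    cx, cy = centre
    # Distances from the circle centre to the nearest and farthest points of the cell.
    near_x = max(x0 - cx, 0.0, cx - x1)
    near_y = max(y0 - cy, 0.0, cy - y1)
    far_x = max(abs(x0 - cx), abs(x1 - cx))
    far_y = max(abs(y0 - cy), abs(y1 - cy))
    near = (near_x**2 + near_y**2) ** 0.5
    far = (far_x**2 + far_y**2) ** 0.5
    return near <= radius <= far


def build_multilevel_grid(n0, n_refinements, crosses_boundary):
    """Return the set of leaf cells after repeated boundary-driven subdivision."""
    # Level 0: regular background grid of n0 x n0 cells.
    leaves = {(0, ix, iy) for ix in range(n0) for iy in range(n0)}
    for level in range(n_refinements):
        current = {cell for cell in leaves if cell[0] == level}
        flagged = set()
        for (_, ix, iy) in current:
            if crosses_boundary(level, ix, iy):
                # Flag the boundary cell and its immediate neighbours.
                for dx in (-1, 0, 1):
                    for dy in (-1, 0, 1):
                        neighbour = (level, ix + dx, iy + dy)
                        if neighbour in current:
                            flagged.add(neighbour)
        for (_, ix, iy) in flagged:
            # Replace each flagged cell by four children of half the size.
            leaves.remove((level, ix, iy))
            for dx in (0, 1):
                for dy in (0, 1):
                    leaves.add((level + 1, 2 * ix + dx, 2 * iy + dy))
    return leaves


# Hypothetical example: 50 x 50 coarse cells of 4 m and three refinement passes.
grid = build_multilevel_grid(n0=50, n_refinements=3, crosses_boundary=circle_crosses_cell)
```

Storing only leaf cells keeps the sketch short; a practical implementation would also need to record which nodes are children and which nodes are their parents.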

If these ideas are applied to examples taken from the mining industry, it is found that the result is a grid that is only refined close to excavations as is desired. Once the grid is fixed all the cells are sorted from highest level \(L_{n}\) to the lowest level \(L_{0}\) and this is then used to inform the size of the appropriate MPM time step. This is discussed next.

2.2 Implementation of the Time Step

A comprehensive review of the standard MPM algorithm may be found in Nairn (2003) and so our focus here will be to describe those facets of the modified problem that need to be considered when advancing the solution forward in time. An overview of the process is provided in the schematic, Fig. 6. After setting the grid using the method outlined above, we then populate it with particles and initialise each of them with appropriate stress, velocity and material properties (Fig. 6a). In order to describe the implementation of the advancement in time it will prove necessary to make reference to both the nodes of the grid and the particles sitting within the grid; to this end we indicate with a subscript l quantities relating to the grid, while the subscript p is an index that enumerates over particles. Given that, the strategy underpinning the application of the timestep is broadly as follows: suppose we have the solution at time t and we intend to move the solution forward to the time \(t+\Delta t\). We do this in three parts: first we need to map the existing particle properties onto the grid, then we update the properties of the grid and lastly map these back onto the particles. Let us describe each of these stages.

Flow diagram illustrating the six stages required to advance the MPM through one time step. a The particles (shown as blue dots) contain the current state of the system and include stress, velocity and material properties. b The particle states are mapped to the nodes of their respective containing cell within the background grid to deduce the mass, momentum and internal forces using Eqs. (1) and (2) of Sect. 2.2.1. c The mass, momentum and force contributions of the parent nodes are updated from their respective child nodes. d The background grid is then stepped forward in time. The parent node acceleration and velocity are updated using Eqs. (3) and (4) of Sect. 2.2.2. e The particle states can now be updated. Position, velocity, and strain increments are calculated using Eqs. (5) and (6) of Sect. 2.2.3. At this point the appropriate constitutive relation is applied to update stress. f Finally, the background grid is reset in preparation for the next timestep (colour figure online)

2.2.1 Stage (i): Mapping the Existing Particle Properties onto the Grid

Suppose that \(C_{L}\) denotes the list of sorted grid cells, where L ranges from \(L_{n}\) to \(L_{0}\) as described in Sect. 2.1. The standard tri-linear shape function \(S_{l}\), commonly used in FEM to describe linear elements, is used to interpolate information between each particle and its connecting node and we denote the gradient of this function by \(G_{l}\). For each node we can compute its mass \(m_l\) and associated momentum \(\left( m{\varvec{v}}\right) _{l}^{t}\) by simply summing the weighted contributions of all the particles that are sitting in the cells connected to that node (Fig. 6b). Writing \(m_{p}\), \({\varvec{x}}_{p}^{t}\) and \({\varvec{v}}_{p}^{t}\) for the mass, position and velocity of particle p, we have

\[ m_{l}=\sum _{p}m_{p}\,S_{l}\left( {\varvec{x}}_{p}^{t}\right) ,\qquad \left( m{\varvec{v}}\right) _{l}^{t}=\sum _{p}m_{p}\,{\varvec{v}}_{p}^{t}\,S_{l}\left( {\varvec{x}}_{p}^{t}\right) ; \tag{1} \]

here the superscript t simply designates the value of each particular quantity at time t. The force \({\varvec{f}}_{l}^{t}\) at the node due to particle stress can be deduced from

\[ {\varvec{f}}_{l}^{t}=-\sum _{p}V_{p}\,\sigma _{p}^{t}\cdot G_{l}\left( {\varvec{x}}_{p}^{t}\right) +\sum _{p}{\varvec{b}}\,S_{l}\left( {\varvec{x}}_{p}^{t}\right) , \tag{2} \]

where \(V_{p}\) represents the volume of a particle, \({\varvec{b}}\) denotes any external force that may be acting on the particle and \(\sigma _{p}^{t}\) is the particle stress. Having worked out \(m_{l}\), \(\left( m{\varvec{v}}\right) _{l}^{t}\) and \({\varvec{f}}_{l}^{t}\) for each node, we can transfer these quantities from each child node to its connected parents for all \(C_L\) (Fig. 6c). If a child node has two parents (so that it sits on the edge of a higher level grid cell) then half of these quantities are given to each parent, and if a child has four parents then a quarter goes to each of them.
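The particle-to-grid mapping and the sharing of child-node quantities can be sketched in one dimension. This is a simplified illustration and not the three-dimensional tri-linear implementation: it assumes a uniform grid with linear shape functions, omits external forces, and all function and variable names are hypothetical.

```python
import numpy as np


def particles_to_grid(x_p, m_p, v_p, vol_p, sigma_p, cell_size, n_nodes):
    """Accumulate nodal mass, momentum and internal force from the particles (1D)."""
    mass = np.zeros(n_nodes)
    momentum = np.zeros(n_nodes)
    force = np.zeros(n_nodes)
    for x, m, v, vol, sig in zip(x_p, m_p, v_p, vol_p, sigma_p):
        left = int(x // cell_size)        # node on the left of the containing cell
        xi = x / cell_size - left         # local coordinate in [0, 1)
        # (node index, shape function value, shape function gradient)
        for node, s, g in ((left, 1.0 - xi, -1.0 / cell_size),
                           (left + 1, xi, 1.0 / cell_size)):
            mass[node] += m * s
            momentum[node] += m * v * s
            force[node] -= vol * sig * g  # internal force from the particle stress
    return mass, momentum, force


def share_with_parents(values, child, parents):
    """Add an equal share (1/2 or 1/4) of a child node's quantity to each parent.

    The child's own entry is left unchanged in this sketch.
    """
    share = values[child] / len(parents)
    for parent in parents:
        values[parent] += share


# Two hypothetical particles inside the first cell of a three-node grid.
mass, momentum, force = particles_to_grid(
    x_p=[0.25, 0.75], m_p=[1.0, 1.0], v_p=[2.0, 2.0],
    vol_p=[0.5, 0.5], sigma_p=[10.0, 10.0], cell_size=1.0, n_nodes=3)

# A hypothetical child node (index 1) handing half of its value to each of two parents.
demo = np.array([1.0, 4.0, 1.0])
share_with_parents(demo, child=1, parents=(0, 2))
```

Because the linear shape functions sum to one within a cell, the total particle mass and momentum are preserved on the grid and the internal forces sum to zero.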

2.2.2 Stage (ii): Updating the Existing Properties of the Grid

We now update the status of the grid (Fig. 6d). We begin by considering those nodes that have no parents; call this set of points \(\{N_l \}\). The acceleration of these nodes is expressed as

\[ {\varvec{a}}_{l}^{t}=\frac{{\varvec{f}}_{l}^{t}+{\varvec{f}}_{l}^{d}}{m_{l}}; \tag{3} \]

the force term on the right hand side comprises the stress component (2) and \({\varvec{f}}_{l}^{d}=-c\left| {\varvec{f}}_{l}^{t}\right| \text {sgn}\left( {\varvec{v}}_{l}^{t}\right)\), which is a damping force that is included to ensure the system reaches quasi-static equilibrium. Empirical evidence suggests that the constant c should lie somewhere between 0.7 and 0.85 [see Cundall (2009) or Wang et al. (2015)]. The velocity of the node is then updated using

\[ {\varvec{v}}_{l}^{t+\Delta t}={\varvec{v}}_{l}^{t}+{\varvec{a}}_{l}^{t}\,\Delta t,\qquad {\varvec{v}}_{l}^{t}=\frac{\left( m{\varvec{v}}\right) _{l}^{t}}{m_{l}}, \tag{4} \]

where the superscript \(t+\Delta t\) on a quantity reminds us that this is now an updated value.

This describes how we can advance the states of those nodes without parents. Those with parents can be updated quite easily as for these the quantities \({\varvec{v}}_{l}^{t+\Delta t}\) and \({\varvec{a}}_{l}^{t}\) are simply the respective averages taken over the connected parents.
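A compact sketch of the update for nodes without parents is given below. It assumes that the nodal acceleration is the sum of the nodal force and the damping force divided by the nodal mass, and that the velocity is advanced with an explicit step; the function name, the default value of c and the example numbers are hypothetical.

```python
import numpy as np


def update_parentless_nodes(mass, momentum, force, dt, c=0.8):
    """Advance nodes that have no parents by one time step (1D, component-wise)."""
    mass = np.asarray(mass, dtype=float)
    momentum = np.asarray(momentum, dtype=float)
    force = np.asarray(force, dtype=float)

    active = mass > 0.0                       # skip nodes that received no mass
    velocity = np.zeros_like(mass)
    velocity[active] = momentum[active] / mass[active]

    # Damping force opposes the current nodal velocity.
    damping = -c * np.abs(force) * np.sign(velocity)

    acceleration = np.zeros_like(mass)
    acceleration[active] = (force[active] + damping[active]) / mass[active]

    new_velocity = velocity + acceleration * dt
    return acceleration, new_velocity


# Hypothetical nodal state: the last node carries no mass.
acc, vel = update_parentless_nodes(
    mass=[2.0, 2.0, 0.0], momentum=[0.0, 4.0, 0.0],
    force=[10.0, -10.0, 0.0], dt=0.1)
```

Nodes with parents would not pass through this routine; they would take the average of their parents' updated velocity and acceleration.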

2.2.3 Stage (iii): Mapping the Grid Properties Back onto the Particles

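The final stage in applying the timestep is to map the updated grid properties back onto the particles themselves (Fig. 6e). We use the list \(C_{L}\) of cells to update the connected particle positions and velocities using

\[ {\varvec{x}}_{p}^{t+\Delta t}={\varvec{x}}_{p}^{t}+\Delta t\sum _{l}{\varvec{v}}_{l}^{t+\Delta t}\,S_{l}\left( {\varvec{x}}_{p}^{t}\right) ,\qquad {\varvec{v}}_{p}^{t+\Delta t}={\varvec{v}}_{p}^{t}+\Delta t\sum _{l}{\varvec{a}}_{l}^{t}\,S_{l}\left( {\varvec{x}}_{p}^{t}\right) . \tag{5} \]

The increment in strain of the particle can be calculated using

\[ \Delta \epsilon _{p}=\frac{\Delta t}{2}\sum _{l}\left[ G_{l}\left( {\varvec{x}}_{p}^{t}\right) \otimes {\varvec{v}}_{l}^{t+\Delta t}+\left( G_{l}\left( {\varvec{x}}_{p}^{t}\right) \otimes {\varvec{v}}_{l}^{t+\Delta t}\right) ^{\mathrm {T}}\right] , \tag{6} \]

which can then be used in the appropriate constitutive relation in order to determine the particle stress. At this point the particles contain the updated state and the grid is reset (Fig. 6f).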
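The mapping back to the particles can be sketched in one dimension as follows. The sketch assumes the common MPM choice of moving particles with the interpolated updated nodal velocity and incrementing particle velocities with the interpolated nodal acceleration; the constitutive update is omitted and all names are hypothetical.

```python
import numpy as np


def grid_to_particles(x_p, v_p, node_velocity, node_acceleration, cell_size, dt):
    """Update particle position and velocity and return the strain increment (1D)."""
    x_p = np.asarray(x_p, dtype=float)
    v_p = np.asarray(v_p, dtype=float)
    x_new = np.empty_like(x_p)
    v_new = np.empty_like(v_p)
    d_eps = np.empty_like(x_p)
    for i, (x, v) in enumerate(zip(x_p, v_p)):
        left = int(x // cell_size)            # left node of the containing cell
        xi = x / cell_size - left             # local coordinate in [0, 1)
        nodes = (left, left + 1)
        shape = (1.0 - xi, xi)
        grad = (-1.0 / cell_size, 1.0 / cell_size)

        v_interp = sum(s * node_velocity[n] for n, s in zip(nodes, shape))
        a_interp = sum(s * node_acceleration[n] for n, s in zip(nodes, shape))

        x_new[i] = x + dt * v_interp
        v_new[i] = v + dt * a_interp
        # In 1D the symmetric velocity gradient reduces to a single derivative.
        d_eps[i] = dt * sum(g * node_velocity[n] for n, g in zip(nodes, grad))
    return x_new, v_new, d_eps


# Hypothetical updated nodal state on a three-node grid with unit cells.
x_new, v_new, d_eps = grid_to_particles(
    x_p=[0.5, 1.5], v_p=[0.4, 1.4],
    node_velocity=[0.0, 1.0, 2.0], node_acceleration=[1.0, 1.0, 1.0],
    cell_size=1.0, dt=0.1)
```

The returned strain increment is what would be passed to the constitutive relation to update the particle stress.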

2.2.4 Summary of the Time-Stepping Process

We reiterate the sequence in which the time-stepping process is implemented. Given a collection of particles and their properties at time t, we map these onto the underlying grid, update the state of the grid and then infer the properties of the particles. This increments the state of the whole system, and these modifications to the time-stepping process in the standard MPM enable us to model large-scale mining geometries in an efficient way. One attraction of the approach is that grid refinement is only required near those cells containing the mining geometry.

We emphasise that the new method is designed to address quasi-static problems related to mining-induced stresses. Some modifications may be necessary if it is used to address dynamic problems. For example, if the grid is used to propagate a stress wave through the medium, the wave may be distorted as it passes through areas of the grid where cells of different sizes exist. Further investigation is needed to address this issue.

With the gridding problem now hopefully resolved, we need to switch our attention to a novel method of extracting information from the numerical models regarding seismic events induced by the mining. Before doing this, however, we need to be assured that our methods are sufficiently robust and well behaved and so conduct a few validation tests.

2.3 Grid Validation

The well-established Kirsch solution, see Ryder and Jager (2002), was chosen as a suitable candidate against which the performance of the new MPM grid could be benchmarked. This solution gives the exact stress concentrations around a circular hole in an infinite linear elastic plate. If a uniform stress \(\sigma _{\infty }\) is applied to the plate then there are analytical expressions for the radial stress \(\sigma _\mathrm{{r}}\) and the tangential stress \(\sigma _{\theta }\) at a point distance r from the centre of the hole of radius d. In particular

\[ \sigma _\mathrm{{r}}=\sigma _{\infty }\left( 1-\frac{d^{2}}{r^{2}}\right) ,\qquad \sigma _{\theta }=\sigma _{\infty }\left( 1+\frac{d^{2}}{r^{2}}\right) . \tag{7} \]

Two two-dimensional models were constructed as shown in Fig. 7 to compare the performance of our new multilevel grid with that of a more conventional MPM regular structure. The domain was chosen to be of size \(200\times 200\) m and a hole of radius 10 m was drilled in its centre. A uniform stress of 30 MPa was applied to all boundaries. The models were composed of an elastic material of density 2700 kg/m\(^3\), bulk modulus \(3.9 \times 10^9\) Pa and shear modulus \(2.8 \times 10^9\) Pa. The conventional MPM grid consisted of cells of dimension \(0.5 \times 0.5\) m which were sufficiently small that the shape of the circular opening could be captured; this grid contained a total of 160,000 grid cells.

The second model, which incorporates our suggested refinements, contains four levels of grid cells. The coarsest level consisted of square cells of side 4 m so that the finer grids were composed of square elements of side 2, 1 and 0.5 m; the composite grid contained 11,550 cells. Both models have a distribution of eight material points per cell.
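For reference, the analytical benchmark can be evaluated with a few lines of Python. The sketch assumes the form of the Kirsch solution for a uniform far-field stress, in which the radial and tangential stresses are \(\sigma _{\infty }(1\mp d^{2}/r^{2})\); the function name and the sampling radii are hypothetical, while the hole radius (10 m) and applied stress (30 MPa) are those of the models described above.

```python
import numpy as np


def kirsch_uniform(r, hole_radius, sigma_inf):
    """Radial and tangential stress around a circular hole under uniform far-field stress."""
    r = np.asarray(r, dtype=float)
    ratio = (hole_radius / r) ** 2
    sigma_r = sigma_inf * (1.0 - ratio)       # vanishes at the edge of the hole
    sigma_theta = sigma_inf * (1.0 + ratio)   # doubles at the edge of the hole
    return sigma_r, sigma_theta


# Sample radii in metres; stresses are returned in MPa.
radii = np.array([10.0, 20.0, 100.0])
sigma_r, sigma_theta = kirsch_uniform(radii, hole_radius=10.0, sigma_inf=30.0)
```

Both components approach the applied stress away from the hole, which is consistent with confining the refinement to the neighbourhood of the opening.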

The forms of \(\sigma _\mathrm{{r}}\) and \(\sigma _\theta\) as computed by the simulations are presented in terms of heat maps, as depicted in Fig. 8. From here it is clear that the calculations give qualitatively similar results and it is not easy to spot significant differences between them. A more meaningful comparison is provided in Fig. 9. Here the analytical predictions given by expressions (7) are shown by the solid blue and red lines. The corresponding calculated profiles are shown by the markers superimposed on the figure. Both models generated results that almost coincide with the analytical forms, with the variations manifesting as relatively minor discrepancies very close to the edge of the hole. Further testing confirmed that this disagreement can be ameliorated by reducing the grid cell size. Moreover, the use of a triangular grid alleviates the remnant of this artifact even more. The insistence that the underlying grid be rectangular limits the ability of the computational scheme to capture the edge of the hole accurately. The salient conclusion we draw for the purposes of this study, which relies on the output of quasi-static solutions, is that the multilevel grid MPM can produce results that are of similar quality to those given by the conventional regular gridding of the MPM but can do so with significantly fewer points and less effort. We conclude that with given computational resources the multi-grid approach can be confidently expected to produce concomitantly better outcomes.

3 Simulation of Mining-Induced Seismic Events

Salamon (1993) developed the prototype method used to simulate the seismicity associated with mining. There were several subsequent refinements introduced; for instance, Linkov (2005) extended it to model both seismic and aseismic events, while Malovichko and Basson (2014) introduced the so-called Salamon–Linkov method based on boundary elements, which focuses only on seismic events. There is evidence that the Salamon–Linkov method is currently being widely used in mining to interpret mine seismicity and to assess seismic hazard (Mendecki 2016). We have already mentioned that a weakness in this strategy is the inability to describe a medium with non-uniform material properties. Moreover, there appear to be difficulties in simulating volumetric seismic sources as all failure is assumed to be of shear type. These aspects persuade us that the viability of MPM as an alternative is worthy of exploration. That MPM might be an attractive option is motivated primarily by the recognition that it can cater for the presence of several materials and is capable of simulating both volumetric and shear sources.

In this section, we describe a method designed to identify seismic events from inelastic (plastic) strains of failed elements within an MPM model; here the solid phase is represented as a continuum. First, we need to be able to identify a failed element; within the MPM formulation, such an element is recognised as a particle that experiences a stress that exceeds the yield surface of a suitable constitutive relation (such as the Coulomb or Hoek–Brown relation) and which has undergone a stress drop \(\Delta \sigma ^\mathrm{{p}}\) to return to the yield surface. We define the seismic potency P of a single dislocation source as the product of the average slip \({\bar{u}}\) over a source area \(A_\mathrm{{s}}\) (Mendecki 2016); this creates the link between the modelled stress drop of a failed element and the stress drop of a real seismic event. This means that

\[P = {\bar{u}}\,A_\mathrm{{s}}, \tag{8}\]

and for a complex volumetric seismic source, this expression becomes

\[P = \Delta \epsilon \,V_\mathrm{{s}}, \tag{9}\]

where \(\Delta \epsilon =\Delta \sigma /\mu\), \(\Delta \sigma\) is the averaged stress drop and \(\mu\) is the rigidity of the rock mass surrounding the source volume \(V_\mathrm{{s}}\) (Madariaga 1979). If we assume that the modelled stress drop of the element is proportional to the stress drop of the seismic source, then we can define the seismic potency of a failed element to be

\[P = \Delta \epsilon ^\mathrm{{p}}\,V, \tag{10}\]

where \(\Delta \epsilon ^\mathrm{{p}}\) denotes the element plastic strain change calculated from \(\Delta \sigma ^\mathrm{{p}}\) and V is the volume of the element. Equation (10) closely models potency as a first-order approximation of a single failed element. However, if two neighbouring elements fail simultaneously, then it is acceptable to combine them into one single larger seismic event if their mechanisms of failure are similar but not if the mechanisms are different. This points to the need for a robust grouping procedure that takes into account the spectrum of spatial and similarity information and thus guides us as to when amalgamation of failed elements is appropriate.
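The potency of a single failed element can be illustrated with a minimal sketch. It takes the potency to be the plastic strain change multiplied by the element volume, and it assumes (this is not stated explicitly in the text) that the plastic strain change is obtained from the stress drop through the same relation as for the source, \(\Delta \epsilon ^\mathrm{{p}}=\Delta \sigma ^\mathrm{{p}}/\mu\). The function names and the numerical inputs are hypothetical and chosen only for illustration.

```python
import math


def plastic_strain_change(stress_drop_pa: float, rigidity_pa: float) -> float:
    """Plastic strain change, ASSUMING d_eps_p = d_sigma_p / mu."""
    return stress_drop_pa / rigidity_pa


def element_potency(stress_drop_pa: float, rigidity_pa: float, volume_m3: float) -> float:
    """Seismic potency (m^3) of one failed element: P = d_eps_p * V."""
    return plastic_strain_change(stress_drop_pa, rigidity_pa) * volume_m3


# Hypothetical inputs: 3 MPa stress drop, 30 GPa rigidity, cubic cell of side 1.25 m.
potency = element_potency(stress_drop_pa=3.0e6, rigidity_pa=30.0e9, volume_m3=1.25 ** 3)
log_potency = math.log10(potency)
print(f"P = {potency:.4e} m^3, log10 P = {log_potency:.2f}")
```

With these illustrative values the element potency is of order \(10^{-4}\) m\(^3\); a real model would take the stress drop, rigidity and volume from the simulation output.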

We appeal to the focal mechanism (or seismic potency tensor) of a seismic event to combine failed elements into families whose members have common failure mechanisms. This tensor contains useful information that describes the deformation in a source region. In the case of a shear-type event, the tensor enables us to infer the orientation of the slipped fault plane together with the direction of slip, whereas for failure under tensile conditions it provides knowledge of the degree of dilation that occurred. Michael (1984) suggested that the principal components of a focal mechanism can be used as a proxy for the direction of stress in the rock mass. It was proposed that the P-axis (the largest eigen-component) of the mechanism is a good guide to the direction of the major principal stress \(\sigma _{1}\). Taking the analogy further, it seems that the B- and T-axes of the mechanism are oriented approximately parallel to the intermediate and minor principal stresses, \(\sigma _{2}\) and \(\sigma _{3}\), respectively. We make use of these assumptions in our derivation of a direct relationship between the seismic potency tensor and the modelled plastic strain of a failed element.

Two-dimensional illustration of spatial and closeness information of a numerical simulation that has intact and failed elements. Left: result of a numerical simulation with failed elements arising from one or other of two mechanisms. Middle: failed elements of mechanism 1 extracted from the simulation. Right: failed elements of mechanism 2 extracted from the simulation. Each group of elements falling inside a blue circle represents a single seismic event

Consider a set of rectangular elements, as shown in Fig. 10 (left panel). Each element is assumed to be in one of three states: intact, or failed via one of two possible mechanisms. A quick visual inspection suggests how the failed elements can be clustered into two groups, as shown in Fig. 10. We refer to this grouping as a closeness separation; in other words, members of the same set are likely to be outcomes of the same failure mechanism. We now cluster each group based on the relative spatial positions of its members, which we refer to as the spatial separation. Each one of these subsequent spatial groups, as indicated by the blue circles in Fig. 10, is likely to be the result of a separate seismic event. The total potency of the event, obtained by summing the potencies \(P_k\) of the n failed elements in the group, is then expressed as

\[P^\mathrm{{T}} = \sum _{k=1}^{n} P_k. \tag{11}\]

The extraction of seismic events from numerical models is conceptually intuitive but does not implicitly encompass different failure mechanisms (although this does depend on the constitutive relation).

The following sections aim to clarify this issue as it is manifest in both the closeness and spatial separation stages.

3.1 Closeness Separation

We shall assume that there is a direct relationship between the plastic strain of a failed element and the seismic potency tensor of an event. The difficult task of deciding which portion of the plastic strain is related to aseismic rather than seismic failure is discussed in Sect. 3.3 below. Rewritten in tensor notation, Eq. (10) becomes

\[P_{ij} = \Delta \epsilon ^\mathrm{{p}}_{ij}\,V, \tag{12}\]

and this enables us to obtain information regarding the failure mechanism of the element, which can be extracted using standard seismological techniques. We remind the reader that the purpose of Fig. 10 was to show a simple toy model in which all failed elements could be divided into two closeness groups; moreover, all events in each group shared similar potency tensors. In some later work, Michael (1987) proposed that a normalised scalar product of two tensors could usefully be taken as a measure of similarity between two focal mechanisms. For two tensors \(X_{ij}\) and \(Y_{ij}\) their closeness is defined, with summation over repeated indices, to be

\[\frac{X_{ij}Y_{ij}}{\sqrt{X_{kl}X_{kl}}\,\sqrt{Y_{mn}Y_{mn}}}; \tag{13}\]

this takes a value between 1 (when the tensors are exactly parallel) and \(-1\) (when they are directly opposite). (In many ways this is just a generalisation of the scalar product of vectors to tensors.) The application of Eq. (13) to all pairs of failed elements should, at least in theory, enable them to be easily separated into distinct closeness groups (recall that in Fig. 10 we have two such groups coloured red and green). Unfortunately, in practice, it is rare to find the closeness of two tensors to be exactly equal to 1, which means some kind of threshold needs to be introduced: if the closeness of two failed elements is not unity, but not too far from it, how do we decide whether it is likely that their failure is due to the same mechanism? This is a slightly delicate matter. If the threshold is too stringent then we risk identifying numerous closeness groups, each corresponding to a small seismic event, and in the extreme it might seem that all the failed elements arise from separate seismic events. On the other hand, if the threshold is too lax then it is likely that many elements would all be ascribed to one of a few larger events. Once the threshold is fixed, this generates a list of closeness groups, each containing elements with similar potency tensors, with the total potency \(P_{ij}^\mathrm{{T}}\) of each group given by the tensor form of Eq. (11). (We point out that the total simulated potency of all failed elements across all groups is of course fixed, as each failed element belongs to precisely one closeness group irrespective of how they are divided up.) Therefore, by modifying the closeness threshold, the potency can be distributed among more or fewer groups. Our experiments found that thresholds larger than about 0.8 provided acceptable results in most simulations.
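The closeness measure and its use with a threshold can be sketched as follows. The closeness function implements the normalised scalar product of two tensors described above. The grouping step is only one possible choice and is an assumption of this sketch: the text does not specify how groups are assembled, so a simple greedy scheme is used in which each tensor joins the first group whose seed tensor it resembles. The function names and example tensors are hypothetical.

```python
import math


def closeness(x, y):
    """Normalised scalar product of two 3x3 tensors given as nested lists."""
    dot = sum(x[i][j] * y[i][j] for i in range(3) for j in range(3))
    norm_x = math.sqrt(sum(v * v for row in x for v in row))
    norm_y = math.sqrt(sum(v * v for row in y for v in row))
    return dot / (norm_x * norm_y)


def closeness_groups(tensors, threshold=0.8):
    """Greedy grouping (an ASSUMED scheme): the first index of a group is its seed."""
    groups = []
    for idx, tensor in enumerate(tensors):
        for group in groups:
            if closeness(tensors[group[0]], tensor) >= threshold:
                group.append(idx)
                break
        else:
            groups.append([idx])  # no similar seed found: start a new group
    return groups


# Hypothetical potency tensors: two similar shear mechanisms and one pure dilation.
shear = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
shear_perturbed = [[0.1, 1.0, 0.0], [1.0, -0.1, 0.0], [0.0, 0.0, 0.0]]
dilation = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
reversed_shear = [[-v for v in row] for row in shear]

groups = closeness_groups([shear, shear_perturbed, dilation], threshold=0.8)
print(groups)
```

In this example the two shear mechanisms fall into one group and the dilational mechanism into another, while a mechanism and its exact reverse have closeness \(-1\). A greedy scheme depends on the order in which elements are visited, which is one reason a production implementation might prefer a different grouping strategy.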

3.2 Spatial Separation

The output of the closeness separation calculation is next spatially clustered using the method described below. The nature of seismic sources means that clusters would normally tend to form elliptical shapes, as illustrated in Fig. 11. This figure indicates two ways in which closeness groups may be clustered. In the left panel the data are clustered into two parts in the obvious way, but one of these has assumed a rather peculiar shape. The data may be better clustered into three parts, as depicted in the right panel. This simplistic example shows that care must be taken in the choice of the clustering algorithm; we do not want to select a technique that could generate oddly shaped clusters.

We experimented with several spatial clustering algorithms as possible candidates to be applied to our problem. Dynamic clustering, also known as k-means clustering (MacQueen 1967), is one of the most popular clustering algorithms. We found that it tended to create spherical clusters in the feature space. Its drawback, though, is that it requires prior knowledge of the number of clusters to be created. Single-link clustering (Davis and Frolich 1991) was also somewhat unsatisfactory, as it was liable to produce elongated clusters. In contrast, density-based spatial clustering of applications with noise (DBSCAN) (Ester et al. 1996) is a non-parametric method that groups regions of high point density in the feature space. Tests suggested that it retained the elliptical shape of the point distributions and performed significantly better than the other two methods. Figure 12 shows a conceptual example in which the tested spatial clustering algorithms are compared. The k-means algorithm is designed such that all points must belong to a cluster. The single-link algorithm generates only one cluster containing all points; this cluster is not desirable, as the point configuration is spatially elongated. Finally, the DBSCAN algorithm creates three clusters in which points of high density are grouped together. This is a more desirable configuration for the creation of seismic events from the model.

Therefore, DBSCAN was adopted for the spatial separation of closeness groups in the remainder of this study. It is worth emphasising that in the context of our work a cluster is defined as a single seismic event whose potency is given by Eq. (11). The location of the event is taken to be the potency-weighted average of the positions of the cluster members, which pinpoints the part of the event with the most deformation.
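The spatial separation step can be sketched with a compact, pure-Python version of DBSCAN followed by the potency-weighted event location. This is a minimal illustration and not the implementation used in the study: the neighbourhood radius `eps`, the minimum neighbour count `min_pts`, the point coordinates and the potencies are all hypothetical values, and the brute-force neighbour search would be far too slow for a model with millions of particles.

```python
import math


def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)  # None means "not yet visited"

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbours(j)
            if len(reachable) >= min_pts:  # j is a core point: expand through it
                queue.extend(reachable)
    return labels


def event_from_cluster(points, potencies, labels, cluster_id):
    """Total potency and potency-weighted location of one cluster."""
    members = [k for k, lab in enumerate(labels) if lab == cluster_id]
    total = sum(potencies[k] for k in members)
    location = tuple(
        sum(potencies[k] * points[k][d] for k in members) / total
        for d in range(len(points[0]))
    )
    return total, location


# Hypothetical failed-element positions (m) and potencies (m^3) of one closeness group.
points = [(0, 0, 0), (1, 0, 0), (0, 1, 0),
          (10, 10, 10), (11, 10, 10), (10, 11, 10),
          (50, 50, 50)]
potencies = [1.0e-4, 1.0e-4, 2.0e-4, 1.0e-4, 1.0e-4, 1.0e-4, 5.0e-5]

labels = dbscan(points, eps=1.5, min_pts=3)
total, location = event_from_cluster(points, potencies, labels, cluster_id=0)
print(labels, total, location)
```

The isolated element is labelled as noise and is therefore not assigned to any event, while the two dense groups each become one event whose location is pulled towards the member with the largest potency.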

3.3 Seismic vs Aseismic Modelled Seismicity

By definition, seismic failure occurs when a part of a material experiences a sudden inelastic deformation. The energy released by this process generates a wave that propagates through the material and is easily detectable by modern seismic sensors. On the other hand, aseismic failure occurs slowly, over time scales typically ranging from a few seconds to years, and in the majority of cases is undetectable. We assume a direct relationship between plasticity and seismicity. However, there are many materials in which plastic deformation occurs without leading to seismic failure, so that all deformation occurs aseismically. One feature of the method described above is that it does not distinguish between seismic and aseismic failure, making it difficult to compare modelled seismic events to real seismic outcomes. Therefore, a decision needs to be made about what proportion of \(\Delta \epsilon ^\mathrm{{p}}\) of a failed element may be associated with seismic events. A rather simplistic way of tackling this issue is just to prescribe the fraction of \(\Delta \epsilon ^\mathrm{{p}}\) that occurs seismically. This has the effect that a constant \(\alpha\), ranging somewhere between 0 and 1, needs to be introduced into Eq. (10) so that it is modified to

\[P = \alpha \,\Delta \epsilon ^\mathrm{{p}}\,V. \tag{14}\]

A reasonable estimate for \(\alpha\) is best derived by comparing numerical models with some real historical seismicity observed in a mine.

A rather more sophisticated approach to separating seismic from aseismic failure in the model is to presume that “strain-softening” is taking place when an element fails. Usually when a constitutive relation (such as Coulomb) is used, perfect plasticity is assumed, which implies that if an element fails, it can do so repeatedly without changing the material properties. The introduction of strain softening permits the material properties of a failed element to be modified so that it can represent the damaged material. Cundall (2009) describes in some detail many constitutive relations that allow for strain softening. Of the various possibilities, we chose to use the Coulomb relation to model failure of elements in our calculations; in particular, we assume that if an intact element fails for the first time, the stress drop \(\Delta \sigma ^\mathrm{{p}}\) occurs seismically and the friction angle and cohesion of the element are decreased to residual values. Any subsequent failure of the element at these reduced material properties is assumed to occur aseismically and does not contribute to any further increase of \(\Delta \epsilon ^\mathrm{{p}}\). Figure 13 shows a model example of the classical stress–strain curve for a material that fails when it initially reaches the yield point. At this point, a stress drop is recorded and the material failure parameters change to residual values. Only the plastic strain derived from the initial stress drop (as highlighted) contributes to the potency of the seismic event, and any subsequent stress drops are disregarded.
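The first-failure rule described above can be sketched as a small piece of bookkeeping attached to each element. This is an illustrative sketch only: the `Element` class, its fields and the numerical values are hypothetical, and a real MPM code would perform this update inside its constitutive routine.

```python
from dataclasses import dataclass


@dataclass
class Element:
    volume: float                 # element volume, m^3
    cohesion: float               # current cohesion, Pa
    friction_angle: float         # current friction angle, degrees
    has_failed: bool = False
    seismic_plastic_strain: float = 0.0


def register_failure(element, plastic_strain_change,
                     residual_cohesion, residual_friction_angle):
    """Record one failure and return the potency it adds to the seismic catalogue."""
    if element.has_failed:
        return 0.0  # repeat failure at residual strength: treated as aseismic
    element.has_failed = True
    element.seismic_plastic_strain = plastic_strain_change
    # Soften the element to its residual strength after the first failure.
    element.cohesion = residual_cohesion
    element.friction_angle = residual_friction_angle
    return plastic_strain_change * element.volume


# Hypothetical element and strength values.
element = Element(volume=2.0, cohesion=10.0e6, friction_angle=40.0)
first = register_failure(element, 1.0e-4, residual_cohesion=1.0e6, residual_friction_angle=30.0)
second = register_failure(element, 5.0e-4, residual_cohesion=1.0e6, residual_friction_angle=30.0)
print(first, second, element.cohesion)
```

Only the first call contributes potency; the second failure leaves the recorded seismic plastic strain unchanged, mirroring the rule that subsequent stress drops are disregarded.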

3.4 Method Validation

New methods are usually best validated by comparing with known theoretical solutions. Since the theoretical data directly pertaining to the forecasting of seismicity are rather sparse, the next best option is to benchmark the method against a laboratory study. For validating our method, we have chosen the Brazilian disk test first described by Fairhurst (1964). Subsequently, this experiment has been repeated many times and the outcome is now well-known and established. The test involves applying a uni-axial load to a cylindrical disk. The work of Wei et al. (2019) is of particular interest to this study, since in their tests, they placed acoustic emission sensors within a specimen and mapped the location and sizes of seismic events that occurred during the experiment. Since our method is focused on simulating seismic data we make use of their seismic observations to compare to our simulated data by repeating their experiment numerically. Mention should also be made of the results of Liao et al. (2019). They used numerical simulations of the Brazilian test using a FEM-based code, rock failure process analysis (RFPA), to simulate the failure process of the disk and to derive artificial acoustic emission events.

Our model follows the experiments described by Wei et al. (2019). They used a sandstone material that had elastic properties as listed in Table 1. For this study, we applied the Coulomb relation to the model and although Wei et al. did not explicitly specify the failure criteria of their samples, we assumed properties that are typical of sandstone.

An MPM model of the Brazilian test was constructed, as shown in Fig. 14. The cylinder was taken to be 80 mm in diameter with a thickness of 40 mm and was placed on the bottom boundary of the MPM domain. Fixed displacement boundaries were applied to all edges of the domain, although only the lower part of the disk touched a boundary. For this study, a regular grid with cubic cells of side 1 mm was used, with the total model comprising approximately 4 million material points. The sample was loaded from above by applying an external force to those top-layer material points that lie within an angle \(\phi =20^\circ\), as shown in Fig. 14. Initially, the external force magnitude was low, and it was increased linearly as the simulation progressed to simulate the plate loading of the laboratory experiment. The simulation was stopped once a crack had formed through the centre of the sample, sufficient damage was observed and no further failure occurred.

Our results are summarised in Figs. 15 and 16. The left panel of Fig. 15 reproduces the seismic event distributions that were recorded by the acoustic emission sensors used in the experiment detailed in Wei et al. (2019). Events are coloured and sized according to the recorded energy. The right-hand panel of Fig. 15 illustrates the damage distribution recorded by the RFPA experiment conducted by Liao et al. (2019). The results of our implementation are shown in Fig. 16 and represent the seismic event distribution for six different time steps of the simulation. Note that the seismic source mechanisms are represented by ‘beach balls’, each of which consists of coloured and white areas; the coloured regions of a beach ball indicate regions that have been dilated, while the white areas denote those parts that have experienced compression. The first three time steps of our MPM output replicate the observations of Wei et al. (2019), and it is unfortunate that their seismic catalog is not available to enable a more comprehensive comparison of our results with theirs. When our simulation reaches time step 4 it is apparent that our damage distribution is somewhat reminiscent of the findings of Liao et al. (2019) shown in Fig. 15b. Their results suggest a mixture of tensile and shear failure, whereas our catalog provides a more detailed overview of the failure mechanisms. We allowed our simulation to run on until quite extensive failures occurred within the disk, as exhibited in the images at steps 5 and 6. We believe the failure patterns observed in our MPM output give reassuringly good agreement with previous observations recorded in the literature.

Left: seismic event distribution obtained from the acoustic emission data of Wei et al. (2019). The events are sized and coloured according to the released energy. Right: simulated data taken from Liao et al. (2019), where blue and red circles represent tensile and shear failure, respectively (colour figure online)

Seismic event distribution taken at six different times within our MPM simulation. The source mechanisms are represented by beach balls; solid coloured areas of these balls denote regions that dilated (where tensile failure occurred), whereas white areas indicate those parts that experienced compression (colour figure online)

4 A Case Study: The Renison Bell Tin Mine

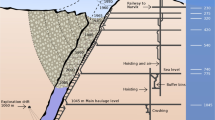

Renison Bell is an underground mine located on the west coast of the island state of Tasmania. It is the largest tin producer in Australia, and its output is arguably of the highest grade worldwide. The ore body is oriented North-South with the major principal stress directed parallel to the ore body. Various laboratory tests have been performed on the rock properties over the life of the mine and, although different materials are present, they can be modelled as two main material types. The first of these is the host rock, which consists of carbonates and sulphide mineralizations, while the rest of the rock can be taken to be talcose intrusions that are dominantly altered carbonates. Seismic activity appears to be on the rise as mining continues, and the mine currently experiences events of moment magnitude up to 1.6. The mine frequently uses advanced modeling techniques to identify potentially seismically hazardous areas when designing the layout of new mining areas (see Fig. 17). The Salamon–Linkov method (as previously described) is currently used to simulate mining-induced seismicity and is a principal vehicle for providing hazard assessment. However, the fact that the method is unable to simulate volumetric sources and is restricted to a single elastic material is somewhat unsatisfactory. Our proposed strategy alleviates these difficulties and may lead to improved results and predictions. We simulate seismicity over four consecutive months and compare the simulated output with the real seismicity recorded over the same period.

Section and plan views of the Renison mine layout as received from the mine. Historical mining areas are represented by grey solids. The main ore body is intersected by a fault (Federal fault). Estimated talcose intrusions are shown as pink volumes, while mining areas are shaded grey (colour figure online)

4.1 Model Geometry and Input Parameters

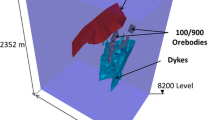

Figure 18 shows the layout of the mine. The model domain (which is highlighted in blue) spans 1100 m in a North-South direction and extends roughly 750 m both East-West and in the vertical. Owing to the extensive size of this model, a conventional MPM using a regular grid is not suited to modelling purposes, as the sheer size of the problem makes it computationally infeasible. To alleviate this problem, the gridding technique described in Sect. 2 was implemented. In this, the coarsest grid was composed of cells that were cubes of side 10 m. Four levels of refinement were applied to the grid so that the smallest cells (lowest level cells) had dimension 1.25 m. All mining excavations were captured by these cells, which thereby represent areas of potential large deformation. In total, our grid contained roughly 6.5 million cells, far fewer than the 320 million cells needed in a conventional MPM with a regular grid throughout the domain.
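As a rough check on these counts, assume the nominal domain dimensions of \(1100 \times 750 \times 750\) m and, as an assumption not stated explicitly above, that each refinement level halves the cell side (10, 5, 2.5 and 1.25 m). A uniform grid at the finest resolution would then need approximately

\[
\frac{1100 \times 750 \times 750}{1.25^{3}} = \frac{6.1875\times 10^{8}}{1.953125} \approx 3.2\times 10^{8}
\]

cells, in line with the 320 million quoted, whereas the coarsest level alone contains \(1100 \times 750 \times 750 / 10^{3} \approx 6.2\times 10^{5}\) cells. On this estimate, the roughly 6.5 million cells of the multilevel grid amount to about 2% of the uniform count.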

Layout of the Renison Bell mine. Seismicity is simulated within the region highlighted in red. Left: a section view of the mining layout looking to the East. Middle: the model domain of dimensions \(1100 \times 750 \times 750\) m. Right: the mining steps performed. The coloured boxes with numbers denote areas that were extracted in the order indicated (colour figure online)

In situ stress and material properties of the host rock that were used in this experiment were provided by the mine and are listed in Table 2. Note that talcose materials were excluded from this simulation, as none of this material is considered present in the study area. A Coulomb constitutive relation was used to simulate failure in the model, and the properties of particles in the areas that failed were reduced from intact values to residual values following the procedure described in Sect. 3.3. Excavated mining areas were filled with a weak elastic material to prevent a dynamic failure of the model. Otherwise, failed particles can be ejected into the void, which significantly hinders the model from reaching an equilibrium state.

4.2 Model Solution and Identification of Seismic Events

The MPM grid as outlined in the previous section was initialized based on the geometry of the mining configuration, as sketched in Fig. 18. Grid nodes positioned on the boundary of the domain were fixed, implying there is no displacement over the edge of the computational region. Each grid cell was seeded with a single particle. In situ stresses and material properties of particles sitting within the host rock were initialized using the parameters listed in Table 2. Particles that fell in voids were assigned zero stress and weak material properties to reduce the computational cost.

The MPM time-stepping scheme described in Sect. 2.2 above was run to an equilibrium state assuming all materials were initially elastic. This reduces the possibility of artificial failure within the model, since the introduction of the entire mine layout into the model in a single epoch is unrealistic and may cause instability. In other words, if a large excavation to the scale of an entire mining layout is made in a nonlinear material then dynamic failure may occur and the simulation may become unstable, whereas in reality these excavations were made over a long period of time. This problem is circumvented by allowing the model to initially reach elastic equilibrium. Once equilibrium was achieved, particles that possessed the host rock material properties were permitted to fail by changing the constitutive relation to the Coulomb relation. At this point, the simulation was again allowed to reach equilibrium. Finally, simulated seismic events were extracted from the model.

The simulation was repeated several times to optimise the residual values of friction angle, dilation angle and cohesion. This was done such that the total modelled seismic potency defined by Eq. (10) best matched the total observed seismic potency in the area over the same period; these optimised values are those given in Table 2. Once these values were decided, the closeness threshold applied to Eq. (13) was calibrated to find the best match between the modelled event sizes and the observed sizes. For this experiment, a closeness threshold of about 0.8 provided a good correlation between modelled and observed data.

4.3 Results

Approximately 750 real microseismic events, encompassing a range of activities with \(\log P\in [-4,0.9]\), were recorded in the study area between August and November 2019. Two of these events were so strong that \(\log P>0\). These were excluded from further consideration, since the large numerical values on the logarithmic scale overwhelmed all other events. Therefore, we restricted our focus to reproducing those events of strength with \(-4\le \log ~P\le 0\).

Three measures were taken to compare the modelled and real seismic events. First, there should be good agreement between the three-dimensional locations of modelled seismic events and those of the observed data. Therefore, the spatial correlation (see Fig. 19) between the real and simulated data was assessed. It is seen that in both cases the majority of the seismicity is concentrated in the mining areas that were extracted. In addition, the real data contained some scattered seismicity below the mining excavations, which is absent from the model data; these events may arise from heterogeneities in the material which were unknown at the time of the experiment and not accounted for in our model. The second measure of correlation focused on the size distribution of both sets. Figure 20 illustrates the potency frequency distribution (also commonly referred to as the Gutenberg–Richter plot) (Mendecki 2016). In seismology the Gutenberg–Richter law is expressed as \(N(>P)=aP^{-b}\), where the power-law exponent is commonly known as the b value, N is the number of events with potency greater than P, and a quantifies the level of seismicity that is determined by the ground deformation. For \(b=1\) the number of events increases by a factor of 10 with each unit decrease in \(\log P\). In this plot, bars indicate the number of events that fall within a specific potency range that is represented by the bar width. Circles represent the cumulative distribution of events that have potency greater than the representative size. The model (as expressed above) is then fitted to the data, and the slope of the line represents the b value. This value is very similar for the real and modelled data in Fig. 20, indicating a very good correlation over the range \(\log P>-3.5\). This suggests strongly that the parameters used in the model of seismicity are likely to be useful in gauging the potential seismic hazard for future mining activities in the area.
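The fit of the Gutenberg–Richter law can be illustrated by taking logarithms, \(\log N = \log a - b \log P\), and fitting a straight line to the cumulative counts. The sketch below uses an ordinary least-squares fit, which is an assumption of this example; the text does not state which fitting procedure was used, and other estimators are common in practice. The function names and the synthetic catalogue are hypothetical.

```python
import math


def cumulative_counts(log_potencies, thresholds):
    """N(>P): number of events whose log10 potency exceeds each threshold."""
    return [sum(1 for lp in log_potencies if lp > t) for t in thresholds]


def fit_b_value(thresholds, counts):
    """Least-squares fit of log10 N = log10 a - b * log10 P; returns (a, b)."""
    pairs = [(t, math.log10(n)) for t, n in zip(thresholds, counts) if n > 0]
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return 10 ** intercept, -slope


# Synthetic cumulative counts that follow N(>P) = 10 * P**(-1) exactly.
thresholds = [-3.0, -2.0, -1.0, 0.0]
counts = [10000, 1000, 100, 10]
a_fit, b_fit = fit_b_value(thresholds, counts)

# Counting events in a small hypothetical catalogue of log10 potencies.
catalogue = [-3.5, -2.5, -2.5, -1.5, -0.5]
catalogue_counts = cumulative_counts(catalogue, [-3.0, -2.0, -1.0])
print(a_fit, b_fit, catalogue_counts)
```

Applying the same two functions to the real and the modelled catalogues over a common potency range would give two b values that can be compared directly.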

The third indicator of correlation is provided by a comparison of the directions of the principal components of the potency tensors of the various seismic events. The P-axis of the tensor corresponds to the eigenvector with the largest eigenvalue. Similarly, the B- and T-axes correspond to the eigenvectors with the intermediate and smallest eigenvalues, respectively. Figure 21 shows stereonet projections for each of the principal axes of both the real and model datasets. In general, a favourable comparison is observed between the two sets, especially with respect to the orientations of the P- and B-axes, while there is a discernible rotation in the T-axes for the modelled mechanisms when compared to the real data. Taken together, and despite a few small discrepancies, the three measures of comparison between real seismic data and the modelled seismicity all suggest strongly that there is value in using the modified MPM in mining applications.

Plots showing the P (left column), B (middle column) and T (right column) axes of seismicity. Data in the top row pertain to the real mine, while the model simulation results are shown in the bottom row. A good correlation is observed for the P- and B-axes and a rotation in the T-axis is seen for the modelled data. Both P-axes are oriented about 160\(^\circ\) and dip around 10\(^\circ\). The B-axes in both scenarios are oriented about 70\(^\circ\) and dip 0\(^\circ\). The real seismic data show that the T-axis is oriented about 310\(^\circ\) and dips approximately 85\(^\circ\), whereas the simulated data show that it is oriented about 70\(^\circ\) with zero dip

5 Conclusions

Large-scale mining problems have traditionally been modelled using either finite element or boundary element techniques. Both of these methods can be difficult and computationally expensive to implement, owing principally to the cost of generating the underlying grid. The Material Point Method has the attraction that it permits a relatively easy way to construct quite complex grids, but the standard version of this procedure requires a uniform mesh. This makes the application to mining situations less than straightforward, principally because the size of the problem makes the practical solution of the numerous equations infeasible. In this paper we have suggested a composite technique that addresses both problems: we retain the ease of mesh generation that makes the MPM attractive, and we have proposed a new gridding technique specifically focused on quasi-static mining-related problems. Combined with a novel way of time-stepping the method, this gives a strategy that is able to simulate microseismic events in a large-scale mine and is efficient with regard to the required computational resources.

The performance of the new grid refinement technique was validated using the well-known Kirsch solution. The output of our grid was compared to that of the classical regular MPM grid and to the predicted values of the analytical expressions for this problem. In both cases there was excellent agreement. Our new method of extracting seismic events from the numerical model was also validated using the Brazilian disk test. We compared the output of our procedure with results already reported in the literature and again found good agreement. This theoretical work has been complemented by a real case study which gave a good comparison between real recorded seismic data and simulated events. Some relatively minor discrepancies were observed, which suggests that further work could usefully be directed toward improving the calibration of the failure parameters. However, the principal outcome of our considerations is the demonstration that our refinement makes it feasible to use adapted MPM ideas to tackle some large-scale mining problems that involve micro-seismicity. The algorithm for extracting seismic events showed encouraging results and could potentially be used to simulate future scenarios of seismic hazard in mines. All in all, this strengthens the case that the MPM family has a prominent role to play in analysing large-scale mining-related problems.

Availability of Data and Material

Mine seismic data is the property of the mine and will not be shared.

Code Availability

Not applicable.

Abbreviations

- L: Three-dimensional grid cell length
- \(L_i\): Level i grid cell size
- t: Time
- \(\Delta {t}\): Time step
- \(S_l\): Shape functions used to interpolate values between MPM particles and grid nodes
- \(G_l\): Derivatives of shape functions \(S_l\)
- \(C_L\): A list of sorted grid cells ranging from \(L_n\) to \(L_0\)
- \(m_l\): Mass of node l
- \(\mathbf{v} _l\): Velocity of node l
- \(\mathbf{v} _p\): Velocity of particle p
- \(\mathbf{f} _l\): Force acting on node l
- \(\sigma _p\): Stress of particle p
- \(\sigma _\mathrm{{r}}\): Radial stress
- \(\sigma _\theta\): Tangential stress
- \(\sigma _\infty\): Uniform applied stress in the Kirsch test
- d: Radius of opening in the Kirsch test
- \(V_p\): Volume of particle p
- \(\mathbf{b}\): External body forces acting on a particle
- \(m_p\): Mass of particle p
- \(\mathbf{a} _p\): Acceleration of node l
- \(\mathbf{f} _\mathrm{{d}}\): Damping force to remove energy from the MPM system
- \(\mathbf{x} _p\): Position of particle p
- \(\dot{\varepsilon }_{p}\): Strain increment of particle p
- \(\Delta \sigma {_p}^\mathrm{{p}}\): Stress drop of particle p due to constitutive relation
- P: Seismic potency
- \(\bar{u}\): Average slip calculated over seismic source area
- \(A_\mathrm{{s}}\): Seismic source area
- \(P_\mathrm{{s}}\): Seismic potency of a single dislocation seismic source
- \(\Delta \varepsilon\): Strain change
- \(\Delta \sigma\): Stress change
- \(\mu\): Rigidity of rock mass
- \(V_\mathrm{{s}}\): Seismic source volume
- \(\Delta \varepsilon ^\mathrm{{p}}\): Plastic strain change
- V: Volume
- \(\sigma _1\): Major principal stress
- \(\sigma _2\): Intermediate principal stress
- \(\sigma _3\): Minor principal stress
- \(P^\mathrm{{T}}\): Total potency of a group of failed particles
- \(P_{ij}\): Seismic potency tensor
- \(\Delta \varepsilon ^\mathrm{{p}}_{ij}\): Tensor of plastic strain change
- \(X_{ij}\): Arbitrary tensor in closeness test
- \(Y_{ij}\): Arbitrary tensor in closeness test
- D: Mine datum (depth from surface)
- \(\alpha\): A constant scaling factor ranging between 0 and 1 to account for a proportion of aseismic potency
- b: Power-law exponent in Gutenberg–Richter law
- a: A constant in the Gutenberg–Richter law
- N: Number of events in Gutenberg–Richter law

References

Al-Kafaji I (2013) Formulation of a dynamic material point method (MPM) for geomechanical problems. Ph.D. thesis, University of Stuttgart. https://doi.org/10.18419/opus-496

Anderson L, Anderson SM (2007) Material-point method analysis of bending in elastic beams. In: The eleventh international conference on civil, structural and environmental engineering computing, vol 1. Aalborg University, pp 1–30

Bardenhagen S, Kober E (2004) The generalized interpolation material point method. Comput Model Eng Sci 5(6):477–495

Brebbia C (1973) The boundary element method for engineers. Pentech Press Limited, London

Cundall P (2009) FLAC 3D user guide, 2009, 1st edn. ITASCA

Davis S, Frolich C (1991) Single-link cluster analysis, synthetic earthquake catalogues, and aftershock identification. Geophys J Int 104(2):289–306

Ester M, Kriegel HP, Sander J, Xu X (1996) A density-based algorithm for discovering clusters a density-based algorithm for discovering clusters in large spatial databases with noise. In: Proceedings of the second international conference on knowledge discovery and data mining, AAAI Press, KDD’96, pp 226–231. http://dl.acm.org/citation.cfm?id=3001460.3001507

Fairhurst C (1964) On the validity of the ‘Brazilian’ test for brittle materials. Int J Rock Mech Min Sci Geomech Abstr 1(4):535–546

Gao M (2017) An adaptive generalized interpolation material point method for simulating elastoplastic materials. ACM Trans Graph (TOG) 36(6):1–12

Hamad F (2014) Formulation of a dynamic material point method and applications to soil-water-geotextile systems. Ph.D. thesis, University of Stuttgart

Hughes T (1987) The finite element method: linear static and dynamic finite element analysis, vol 1. Prentice Hall, New Jersey

Itasca (2019) Fast Lagrangian analysis of continua. https://www.itascacg.com/software/FLAC. Accessed Feb 2021

Itasca (2021) Distinct element modelling of joint or blocky material. https://www.itascacg.com/software/3DEC. Accessed Feb 2021

Lian Y, Zhang X, Zhang F, Cui X (2014) Tied interface grid material point method for problems with localized extreme deformation. Int J Impact Eng 70(1):50–61

Lian Y, Zhang X, Zhang F, Cui X, Huang Y (2015) A mesh-grading material point method and its parallelization for problems with localized extreme deformation. Comput Methods Appl Math Eng 289(1):291–315

Liao Z, Zhu J, Tang C (2019) Numerical investigation of rock tensile strength determined by direct tension, Brazilian and three-point bending tests. Int J Rock Mech Min Sci 115(1):21–32

Linkov A (2005) Numerical modeling of seismic and aseismic events in geomechanics. J Min Sci 41(1):14–26

MacQueen J (1967) Some methods for classification and analysis of multivariate observations. In: Proceedings of the fifth Berkeley symposium on mathematical statistics and probability. Statistics, vol 1. University of California Press, Berkeley, California, pp 281–297. https://projecteuclid.org/euclid.bsmsp/1200512992. Accessed Feb 2021

Madariaga R (1979) On the relation between seismic moment and stress drop in the presence of stress and strength heterogeneity. J Geophys Res 84(B5):2243–2250

Malovichko D, Basson G (2014) Simulation of mining induced seismicity using Salamon–Linkov method. In: Hudyma M, Potvin Y (eds) Proceedings of the seventh international conference on deep and high stress mining, Australian Centre for Geomechanics, Perth

Mendecki A (2016) Mine seismology reference book: seismic hazard, 1st edn. Institute of Mine Seismology. ISBN 978-0-9942943-0-2

Michael A (1984) Determination of stress from slip data: faults and folds. J Geophys Res 90(B13):11517–11526

Michael A (1987) Use of focal mechanisms to determine stress: a control study. J Geophys Res 92(B1):357–368

Nairn J (2003) Material point method calculations with explicit cracks. Comput Model Eng Sci 4(6):649–663

Pantev I (2016) Contact modelling in the material point method. MSc Thesis. Delft University of Technology, Geoscience & Engineering

Ryder J, Jager A (2002) A textbook on rock mechanics for tabular hard rock mines. Safety in Mines Research Advisory Committee (SIMRAC), Johannesburg, p 489

Salamon M (1993) Keynote address: Some applications of geomechanical modelling in rockburst and related research. In: Young BP (ed) 3rd international symposium on rockbursts and seismicity in mines. Rotterdam, pp 297–309

Simulia (2019) Abaqus finite element analysis. http://www.3ds.com. Accessed Feb 2021

Steffen M, Wallstedt P, Guilkey J, Kirby R, Berzins M (2008) Examination and analysis of implementation choices within the material point method. Comput Model Eng Sci 31(2):107–127

Sulsky D, Chen Z, Scheyer H (1994) A particle method for history-dependent materials. Comput Methods Appl Mech Eng 118(1):179–196

Wang B, Vardon P, Hicks M, Chen Z (2015) Development of an implicit material point method for geotechnical applications. Comput Geotech 71:159–167

Wei J, Zhu W, Guan K, Zhou J, Song J (2019) An acoustic emission data-driven model to simulate rock failure process. Rock Mech Rock Eng 53:1605–1621

Acknowledgements

We are indebted to Kevin Stacey from Renison Bell mine for allowing us to use and show the mine data. We are also grateful to the referees whose numerous suggestions led to substantial improvements to this paper. We are indebted to the editors for their guidance and advice.

Funding

Not applicable.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by GB. The first draft of the manuscript was written by GB and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Basson, G., Bassom, A.P. & Salmon, B. Simulating Mining-Induced Seismicity Using the Material Point Method. Rock Mech Rock Eng 54, 4483–4503 (2021). https://doi.org/10.1007/s00603-021-02522-y

DOI: https://doi.org/10.1007/s00603-021-02522-y