Abstract

In this paper we study the total variation flow (TVF) in metric random walk spaces, which unifies into a broad framework the TVF on locally finite weighted connected graphs, the TVF determined by finite Markov chains and some nonlocal evolution problems. Once the existence and uniqueness of solutions of the TVF has been proved, we study the asymptotic behaviour of those solutions and, with that aim in view, we establish some inequalities of Poincaré type. In particular, for finite weighted connected graphs, we show that the solutions reach the average of the initial data in finite time. Furthermore, we introduce the concepts of perimeter and mean curvature for subsets of a metric random walk space and we study the relation between isoperimetric inequalities and Sobolev inequalities. Moreover, we introduce the concepts of Cheeger and calibrable sets in metric random walk spaces and characterize calibrability by using the 1-Laplacian operator. Finally, we study the eigenvalue problem whereby we give a method to solve the optimal Cheeger cut problem.


1 Introduction and preliminaries

A metric random walk space [X, d, m] is a metric space (X, d) together with a family \(m = (m_x)_{x \in X}\) of probability measures that encode the jumps of a Markov chain. Important examples of metric random walk spaces are: locally finite weighted connected graphs, finite Markov chains and \([{\mathbb {R}}^N, d, m^J]\) with d the Euclidean distance and

$$\begin{aligned} m^J_x(A) := \int _A J(x - y) dy \quad \hbox { for every Borel set } A \subset {\mathbb {R}}^N , \end{aligned}$$

where \(J:{\mathbb {R}}^N\rightarrow [0,+\infty [\) is a measurable, nonnegative and radially symmetric function with \(\int J =1\). Furthermore, given a metric measure space \((X,d, \mu )\) satisfying certain properties we can obtain a metric random walk space \([X, d, m^{\mu ,\epsilon }]\), called the \(\epsilon \)-step random walk associated to \(\mu \), where

$$\begin{aligned} m^{\mu ,\epsilon }_x:= \frac{\mu |\!\_B(x, \epsilon )}{\mu (B(x, \epsilon ))}. \end{aligned}$$

Since its introduction as a means of solving the denoising problem in the seminal work by Rudin et al. [45], the total variation flow has remained one of the most popular tools in Image Processing. Recall that, from the mathematical point of view, the study of the total variation flow in \({\mathbb {R}}^N\) was established in [5]. On the other hand, the use of neighbourhood filters by Buades et al. [12], originally proposed by Yaroslavsky [52], has led to an extensive literature on nonlocal models in image processing (see for instance [8, 28, 31, 32] and the references therein). Consequently, there is great interest in studying the total variation flow in the nonlocal context. As further motivation, note that an image can be considered as a weighted graph, where the pixels are taken as the vertices and the “similarity” between pixels as the weights. The way in which these weights are defined depends on the problem at hand, see for instance [24, 32].

The aim of this paper is to study the total variation flow in metric random walk spaces, obtaining general results that can be applied, for example, to the different points of view in Image Processing. In this regard, we introduce the 1-Laplacian operator associated with a metric random walk space, as well as the notions of perimeter and mean curvature for subsets of a metric random walk space. In doing so, we generalize results obtained in [34, 35] for the particular case of \([{\mathbb {R}}^N, d, m^J]\), and, moreover, generalize results in graph theory. We then proceed to prove existence and uniqueness of solutions of the total variation flow in metric random walk spaces and to study its asymptotic behaviour with the help of some Poincaré type inequalities. Furthermore, we introduce the concepts of Cheeger and calibrable sets in metric random walk spaces and characterize calibrability by using the 1-Laplacian operator. Let us point out that, to our knowledge, some of these results were not yet known for graphs; nonetheless, we have specified in the main text which important results were already known for graphs. Moreover, in the forthcoming paper [37], we apply the theory developed here to obtain the \((BV,L^p)\)-decomposition, \(p=1,2\), of functions in metric random walk spaces. This decomposition can be applied to Image Processing if, for example, images are regarded as graphs and, moreover, to other nonlocal models.

Partitioning data into sensible groups is a fundamental problem in machine learning, computer science, statistics and science in general. In these fields, it is usual to face large amounts of empirical data, and getting a first impression of the data by identifying groups with similar properties can prove to be very useful. One of the most popular approaches to this problem is to find the best balanced cut of a graph representing the data, such as the Cheeger ratio cut [17]. Consider a finite weighted connected graph \(G =(V, E)\), where \(V = \{x_1, \ldots , x_n \}\) is the set of vertices (or nodes) and E the set of edges, which are weighted by a function \(w_{ji}= w_{ij} \ge 0\), \((i,j) \in E\). The degree of the vertex \(x_i\) is denoted by \(d_i:= \sum _{j=1}^n w_{ij}\), \(i=1,\ldots , n\). In this context, the Cheeger cut value of a partition \(\{ S, S^c\}\) (\(S^c:= V {\setminus } S\)) of V is defined as

$$\begin{aligned} \mathcal {C}(S):= \frac{\mathrm{Cut}(S,S^c)}{\min \{\mathrm{vol}(S), \mathrm{vol}(S^c)\}}, \end{aligned}$$

where

$$\begin{aligned} \mathrm{Cut}(A,B) = \sum _{i \in A, j \in B} w_{ij}, \end{aligned}$$

and \(\mathrm{vol}(S)\) is the volume of S, defined as \(\mathrm{vol}(S):= \sum _{i \in S} d_i\). Furthermore,

$$\begin{aligned} h(G) = \min _{S \subset V} \mathcal {C}(S) \end{aligned}$$

is called the Cheeger constant, and a partition \(\{ S, S^c\}\) of V is called a Cheeger cut of G if \(h(G)=\mathcal {C}(S)\). Unfortunately, the Cheeger minimization problem of computing h(G) is NP-hard [29, 47]. However, it turns out that h(G) can be approximated by the second eigenvalue \(\lambda _2\) of the graph Laplacian thanks to the following Cheeger inequality [18]:

$$\begin{aligned} \frac{\lambda _2}{2} \le h(G) \le \sqrt{2 \lambda _2}. \end{aligned} \tag{1.1}$$

This motivates the spectral clustering method [51], which, in its simplest form, thresholds the second eigenvalue of the graph Laplacian to get an approximation to the Cheeger constant and, moreover, to a Cheeger cut. In order to achieve a better approximation than the one provided by the classical spectral clustering method, a spectral clustering based on the graph p-Laplacian was developed in [13], where it is shown that the second eigenvalue of the graph p-Laplacian tends to the Cheeger constant h(G) as \(p \rightarrow 1^+\). In [47] the idea was taken up by directly considering the variational characterization of the Cheeger constant h(G)

$$\begin{aligned} h(G) = \min _{u \in L^1} \frac{\vert u \vert _{TV}}{\Vert u - \mathrm{median}(u) \Vert _1}, \end{aligned} \tag{1.2}$$

where

$$\begin{aligned} \vert u \vert _{TV}:= \frac{1}{2} \sum _{i,j=1}^n w_{ij} \vert u(x_i) - u(x_j) \vert . \end{aligned}$$

The subdifferential of the energy functional \(\vert \cdot \vert _{TV}\) is the 1-Laplacian in graphs \(\Delta _1\). Using the nonlinear eigenvalue problem \(0 \in \Delta _1 u - \lambda \, \mathrm{sign}(u)\), the theory of 1-Spectral Clustering is developed in [14,15,16, 29], and good results on the Cheeger minimization problem have been obtained.
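To make the combinatorial nature of the Cheeger minimization problem concrete, the following minimal Python sketch computes h(G) by exhaustive search on a very small graph. It assumes the usual definition of the Cheeger cut value, \(\mathcal {C}(S) = \mathrm{Cut}(S,S^c)/\min \{\mathrm{vol}(S), \mathrm{vol}(S^c)\}\). The function name `cheeger_constant`, the weight-matrix representation and the example graph are illustrative assumptions and are not taken from the cited references. The search is exponential in the number of vertices, in line with the NP-hardness mentioned above.

```python
from itertools import combinations


def cheeger_constant(weights):
    """Return (h(G), S) for a connected graph given by a symmetric weight matrix.

    Exhaustive search over all partitions {S, S^c}; exponential in the number
    of vertices, so this is only meant for very small graphs.
    """
    n = len(weights)
    degree = [sum(row) for row in weights]          # d_i = sum_j w_ij
    total_volume = sum(degree)                      # vol(V)
    best_value, best_set = float("inf"), None
    # S and S^c give the same value, so subsets with at most n // 2 vertices suffice.
    for size in range(1, n // 2 + 1):
        for subset in combinations(range(n), size):
            inside = set(subset)
            cut = sum(weights[i][j] for i in inside
                      for j in range(n) if j not in inside)
            vol_s = sum(degree[i] for i in inside)
            value = cut / min(vol_s, total_volume - vol_s)
            if value < best_value:
                best_value, best_set = value, inside
    return best_value, best_set


# Hypothetical example: two triangles {0, 1, 2} and {3, 4, 5} joined by the edge (2, 3).
W = [
    [0, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
    [0, 0, 1, 0, 1, 1],
    [0, 0, 0, 1, 0, 1],
    [0, 0, 0, 1, 1, 0],
]
h, S = cheeger_constant(W)
print(h, S)
```

In this example the minimizing partition separates the two triangles: the cut consists of the single bridging edge and each side has volume 7.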

In [36], we obtained a generalization, in the framework of metric random walk spaces, of the Cheeger inequality (1.1) and of the variational characterization of the Cheeger constant (1.2). In this paper, in connection with the 1-Spectral Clustering, also in metric random walk spaces, we study the eigenvalue problem of the 1-Laplacian and then relate it to the optimal Cheeger cut problem. Then again, these results apply, in particular, to locally finite weighted connected graphs, complementing the results given in [14,15,16, 29].

Additionally, regarding the notion of a function of bounded variation in a metric measure space \((X,d, \mu )\) introduced by Miranda in [41], we provide, via the \(\epsilon \)-step random walk associated to \(\mu \), a characterization of these functions.

1.1 Metric random walk spaces

Let (X, d) be a Polish metric space equipped with its Borel \(\sigma \)-algebra. A random walk m on X is a family of probability measures \(m_x\) on X, \(x \in X\), satisfying the two technical conditions: (i) the measures \(m_x\) depend measurably on the point \(x \in X\), i.e., for any Borel set A of X and any Borel set B of \({\mathbb {R}}\), the set \(\{ x \in X \ : \ m_x(A) \in B \}\) is Borel; (ii) each measure \(m_x\) has finite first moment, i.e. for some (hence any, by the triangle inequality) \(z \in X\), and for any \(x \in X\) one has \(\int _X d(z,y) dm_x(y) < +\infty \) (see [44]).

A metric random walk space [X, d, m] is a Polish metric space (X, d) together with a random walk m on X.

Let [X, d, m] be a metric random walk space. A Radon measure \(\nu \) on X is invariant for the random walk \(m=(m_x)\) if

$$\begin{aligned} d\nu (x)=\int _{y\in X}d\nu (y)dm_y(x), \end{aligned}$$

that is, for any \(\nu \)-measurable set A, it holds that A is \(m_x\)-measurable for \(\nu \)-almost all \(x\in X\), \(\displaystyle x\mapsto m_x(A)\) is \(\nu \)-measurable, and

$$\begin{aligned} \nu (A)=\int _X m_x(A)d\nu (x). \end{aligned}$$

Consequently, if \(\nu \) is an invariant measure with respect to m and \(f \in L^1(X, \nu )\), it holds that \(f \in L^1(X, m_x)\) for \(\nu \)-a.e. \(x \in X\), \(\displaystyle x\mapsto \int _X f(y) d{m_x}(y)\) is \(\nu \)-measurable, and

$$\begin{aligned} \int _X f(x) d\nu (x)=\int _X \left( \int _X f(y) d{m_x}(y)\right) d\nu (x). \end{aligned}$$

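The measure \(\nu \) is said to be reversible for m if, moreover, the following detailed balance condition holds:

$$\begin{aligned} dm_x(y)d\nu (x) = dm_y(x)d\nu (y), \end{aligned} \tag{1.3}$$

that is, for any Borel set \(C \subset X \times X\),

$$\begin{aligned} \int _X \left( \int _X \upchi _{C}(x,y) dm_x(y) \right) d\nu (x) = \int _X \left( \int _X \upchi _{C}(y,x) dm_x(y) \right) d\nu (x), \end{aligned}$$

where \(\upchi _{C}\) is the characteristic function of the set C defined as

$$\begin{aligned} \upchi _{C}(x):= \left\{ \begin{array}{ll} 1 &{} \hbox {if } x \in C, \\ 0 &{} \hbox {otherwise.} \end{array} \right. \end{aligned}$$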

Note that the reversibility condition implies the invariance condition. However, we will sometimes write that \(\nu \) is invariant and reversible so as to emphasize both conditions.

We now give some examples of metric random walk spaces that illustrate the general abstract setting. In particular, Markov chains serve as paradigmatic examples that capture many of the properties of this general setting that we will encounter during our study.

Example 1.1

- (1)

Consider \(({\mathbb {R}}^N, d, \mathcal {L}^N)\), with d the Euclidean distance and \(\mathcal {L}^N\) the Lebesgue measure. For simplicity we will write dx instead of \(d\mathcal {L}^N(x)\). Let \(J:{\mathbb {R}}^N\rightarrow [0,+\infty [\) be a measurable, nonnegative and radially symmetric function verifying \(\int _{{\mathbb {R}}^N}J(x)dx=1\). In \(({\mathbb {R}}^N, d, \mathcal {L}^N)\) we have the following random walk, starting at x,

$$\begin{aligned} m^J_x(A) := \int _A J(x - y) dy \quad \hbox { for every Borel set } A \subset {\mathbb {R}}^N . \end{aligned}$$Applying Fubini’s Theorem it is easy to see that the Lebesgue measure \(\mathcal {L}^N\) is an invariant and reversible measure for this random walk.

Observe that, if we assume that in \({\mathbb {R}}^N\) we have a homogeneous population and \(J(x-y)\) is thought of as the probability distribution of jumping from location x to location y, then, for a Borel set A in \({\mathbb {R}}^N\), \(m^J_x(A)\) is measuring how many individuals are going to A from x following the law given by J. See also the interpretation of the m-interaction between sets given in Sect. 2.1. Finally, note that the same ideas are applicable to the countable spaces given in the following two examples.

- (2)

Let \(K: X \times X \rightarrow {\mathbb {R}}\) be a Markov kernel on a countable space X, i.e.,

$$\begin{aligned} K(x,y) \ge 0 \quad \forall x,y \in X, \quad \quad \sum _{y\in X} K(x,y) = 1 \quad \forall x \in X. \end{aligned}$$Then, for

$$\begin{aligned} m^K_x(A):= \sum _{y \in A} K(x,y), \end{aligned}$$\([X, d, m^K]\) is a metric random walk space for any metric d on X.

Moreover, in Markov chain theory terminology, a measure \(\pi \) on X satisfying

$$\begin{aligned} \sum _{x \in X} \pi (x) = 1 \quad \hbox {and} \quad \pi (y) = \sum _{x \in X} \pi (x) K(x,y) \quad \forall y \in X, \end{aligned}$$is called a stationary probability measure (or steady state) on X. This is equivalent to the definition of invariant probability measure for the metric random walk space \([X, d, m^K]\). In general, the existence of such a stationary probability measure on X is not guaranteed. However, for irreducible and positive recurrent Markov chains (see, for example, [30] or [43]) there exists a unique stationary probability measure.

Furthermore, a stationary probability measure \(\pi \) is said to be reversible for K if the following detailed balance equation holds:

$$\begin{aligned} K(x,y) \pi (x) = K(y,x) \pi (y) \ \hbox { for } x, y \in X. \end{aligned}$$By Tonelli’s Theorem for series, this balance condition is equivalent to the one given in (1.3) for \(\nu =\pi \):

$$\begin{aligned} dm^K_x(y)d\pi (x) = dm^K_y(x)d\pi (y). \end{aligned}$$

- (3)

Consider a locally finite weighted discrete graph \(G = (V(G), E(G))\), where each edge \((x,y) \in E(G)\) (we will write \(x\sim y\) if \((x,y) \in E(G)\)) has a positive weight \(w_{xy} = w_{yx}\) assigned. Suppose further that \(w_{xy} = 0\) if \((x,y) \not \in E(G)\).

A finite sequence \(\{ x_k \}_{k=0}^n\) of vertices on the graph is called a path if \(x_k \sim x_{k+1}\) for all \(k = 0, 1, \ldots , n-1\). The length of a path \(\{ x_k \}_{k=0}^n\) is defined as the number n of edges in the path. Then, \(G = (V(G), E(G))\) is said to be connected if, for any two vertices \(x, y \in V\), there is a path connecting x and y, that is, a path \(\{ x_k \}_{k=0}^n\) such that \(x_0 = x\) and \(x_n = y\). Finally, if \(G = (V(G), E(G))\) is connected, define the graph distance \(d_G(x,y)\) between any two distinct vertices x, y as the minimum of the lengths of the paths connecting x and y. Note that this metric is independent of the weights. We will always assume that the graphs we work with are connected.

For \(x \in V(G)\) we define the weight at the vertex x as

$$\begin{aligned} d_x:= \sum _{y\sim x} w_{xy} = \sum _{y\in V(G)} w_{xy}, \end{aligned}$$and the neighbourhood of x as \(N_G(x) := \{ y \in V(G) \, : \, x\sim y\}\). Note that, by definition of locally finite graph, the sets \(N_G(x)\) are finite. When \(w_{xy}=1\) for every \(x\sim y\), \(d_x\) coincides with the degree of the vertex x in a graph, that is, the number of edges containing vertex x.

For each \(x \in V(G)\) we define the following probability measure

$$\begin{aligned} m^G_x:= \frac{1}{d_x}\sum _{y \sim x} w_{xy}\,\delta _y. \end{aligned}$$We have that \([V(G), d_G, m^G]\) is a metric random walk space and it is not difficult to see that the measure \(\nu _G\) defined as

$$\begin{aligned} \nu _G(A):= \sum _{x \in A} d_x, \quad A \subset V(G), \end{aligned}$$is an invariant and reversible measure for this random walk.

Given a locally finite weighted discrete graph \(G = (V(G), E(G))\), there is a natural definition of a Markov chain on the vertices. We define the Markov kernel \(K_G: V(G)\times V(G) \rightarrow {\mathbb {R}}\) as

$$\begin{aligned} K_G(x,y):= \frac{1}{d_x} w_{xy}. \end{aligned}$$We have that \(m^G\) and \(m^{K_G}\) define the same random walk. If \(\nu _G(V(G))\) is finite, the unique stationary and reversible probability measure is given by

$$\begin{aligned} \pi _G(x):= \frac{1}{\nu _G(V(G))} \sum _{z \in V(G)} w_{xz}. \end{aligned}$$

- (4)

From a metric measure space \((X,d, \mu )\) we can obtain a metric random walk space, the so called \(\epsilon \)-step random walk associated to \(\mu \), as follows. Assume that balls in X have finite measure and that \(\mathrm{Supp}(\mu ) = X\). Given \(\epsilon > 0\), the \(\epsilon \)-step random walk on X starting at \(x\in X\), consists in randomly jumping in the ball of radius \(\epsilon \) centered at x with probability proportional to \(\mu \); namely

$$\begin{aligned} m^{\mu ,\epsilon }_x:= \frac{\mu |\!\_B(x, \epsilon )}{\mu (B(x, \epsilon ))}. \end{aligned}$$Note that \(\mu \) is an invariant and reversible measure for the metric random walk space \([X, d, m^{\mu ,\epsilon }]\).

- (5)

Given a metric random walk space [X, d, m] with invariant and reversible measure \(\nu \), and given a \(\nu \)-measurable set \(\Omega \subset X\) with \(\nu (\Omega ) > 0\), if we define, for \(x\in \Omega \),

$$\begin{aligned} m^{\Omega }_x(A):=\int _A d m_x(y)+\left( \int _{X{\setminus } \Omega }d m_x(y)\right) \delta _x(A) \ \hbox { for every Borel set } A \subset \Omega , \end{aligned}$$

we have that \([\Omega ,d,m^{\Omega }]\) is a metric random walk space and it is easy to see that \(\nu |\!\_\Omega \) is reversible for \(m^{\Omega }\).

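In particular, if \(\Omega \) is a closed and bounded subset of \({\mathbb {R}}^N\), we obtain the metric random walk space \([\Omega , d, m^{J,\Omega }]\), where \(m^{J,\Omega } = (m^J)^{\Omega }\), that is

$$\begin{aligned} m^{J,\Omega }_x(A):=\int _A J(x-y) dy+\left( \int _{{\mathbb {R}}^N{\setminus } \Omega }J(x-z) dz\right) \delta _x(A) \ \hbox { for every Borel set } A \subset \Omega . \end{aligned}$$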

From this point onwards, when dealing with a metric random walk space, we will assume that there exists an invariant and reversible measure for the random walk, which we will always denote by \(\nu \). In this regard, when it is clear from the context, a measure denoted by \(\nu \) will always be an invariant and reversible measure for the random walk under study. Furthermore, we assume that the metric measure space \((X,d,\nu )\) is \(\sigma \)-finite.
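For the weighted graph of Example 1.1 (3), the invariance and reversibility of \(\nu _G\) reduce to finite sums and can be checked directly. The following minimal Python sketch does so with exact rational arithmetic. The helper names (`graph_random_walk`, `is_invariant`, `is_reversible`) and the 4-vertex graph are hypothetical illustrations, and integer weights are assumed so that `Fraction` can be used.

```python
from fractions import Fraction


def graph_random_walk(weights):
    """Return the vertex weights d_x and the kernel K_G(x, y) = w_xy / d_x."""
    n = len(weights)
    degree = [sum(row) for row in weights]
    kernel = [[Fraction(weights[x][y], degree[x]) for y in range(n)]
              for x in range(n)]
    return degree, kernel


def is_invariant(degree, kernel):
    """Check nu_G({y}) = sum_x nu_G({x}) m_x({y}) for every vertex y."""
    n = len(degree)
    return all(sum(degree[x] * kernel[x][y] for x in range(n)) == degree[y]
               for y in range(n))


def is_reversible(degree, kernel):
    """Check the detailed balance condition d_x K(x, y) = d_y K(y, x)."""
    n = len(degree)
    return all(degree[x] * kernel[x][y] == degree[y] * kernel[y][x]
               for x in range(n) for y in range(n))


# Hypothetical 4-vertex connected graph with symmetric integer weights.
W = [
    [0, 2, 1, 0],
    [2, 0, 3, 0],
    [1, 3, 0, 4],
    [0, 0, 4, 0],
]
degree, kernel = graph_random_walk(W)
# pi_G(x) = d_x / nu_G(V(G)), the normalized version of nu_G.
stationary = [Fraction(d, sum(degree)) for d in degree]

print(all(sum(row) == 1 for row in kernel))   # each m_x is a probability measure
print(is_invariant(degree, kernel), is_reversible(degree, kernel))
print(stationary)
```

Detailed balance holds here simply because \(d_x K_G(x,y) = w_{xy}\) is symmetric, which is the discrete counterpart of the Fubini argument used for \(m^J\).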

1.2 Completely accretive operators and semigroup theory

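Since Semigroup Theory will be used throughout the paper, we would like to conclude this introduction with some notations and results from this theory along with results from the theory of completely accretive operators (see [9, 10, 22], or the Appendix in [6], for more details). We denote by \(J_0\) and \(P_0\) the following sets of functions:

$$\begin{aligned} J_0:= \{ j : {\mathbb {R}}\rightarrow [0,+\infty ] \ : \ j \hbox { is convex, lower semicontinuous and } j(0) = 0 \}, \end{aligned}$$

$$\begin{aligned} P_0:= \{ q\in C^\infty ({\mathbb {R}}) \ : \ 0\le q'\le 1, \ \mathrm{supp}(q') \hbox { is compact and } 0\notin \mathrm{supp}(q) \}. \end{aligned}$$

Let \(u,v\in L^1(X,\nu )\). The following relation between u and v is defined in [9]:

$$\begin{aligned} u\ll v \ \hbox { if, and only if, } \ \int _X j(u)\, d\nu \le \int _X j(v) \, d\nu \ \ \hbox {for all} \ j\in J_0. \end{aligned}$$

An operator \(\mathcal {A} \subset L^1(X,\nu )\times L^1(X,\nu )\) is called completely accretive if

$$\begin{aligned} \int _X (v_1 - v_2) q(u_1 - u_2)\, d\nu \ge 0 \quad \hbox {for every } q \in P_0 \end{aligned}$$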

and every \((u_i, v_i) \in \mathcal {A}\), \(i=1,2\). Moreover, an operator \(\mathcal {A}\) in \(L^1(X,\nu )\) is m-completely accretive in \(L^1(X,\nu )\) if \(\mathcal {A}\) is completely accretive and \({Range}(I + \lambda \mathcal {A}) = L^1(X,\nu )\) for all \(\lambda > 0\) (or, equivalently, for some \(\lambda >0\)).

Theorem 1.2

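[9, 10] If \(\mathcal {A}\) is an m-completely accretive operator in \(L^1(X,\nu )\), then, for every \(u_0 \in \overline{D(\mathcal {A})}\) (the closure of the domain of \(\mathcal {A}\)), there exists a unique mild solution (see [22]) of the problem

$$\begin{aligned} \left\{ \begin{array}{l} \displaystyle \frac{du}{dt} + \mathcal {A}u \ni 0, \\ u(0) = u_0. \end{array} \right. \end{aligned}$$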

Moreover, if \(\mathcal {A}\) is the subdifferential of a proper convex and lower semicontinuous function in \(L^2(X,\nu )\) then the mild solution of the above problem is a strong solution.

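Furthermore, we have the following contraction and maximum principle in any \(L^q(X,\nu )\) space, \(1\le q\le +\infty \): for \(u_{1,0},u_{2,0} \in \overline{D(\mathcal {A})}\) and denoting by \(u_i\) the unique mild solution of the problem

$$\begin{aligned} \left\{ \begin{array}{l} \displaystyle \frac{du_i}{dt} + \mathcal {A}u_i \ni 0, \\ u_i(0) = u_{i,0}, \end{array} \right. \end{aligned}$$

\( i=1,2,\) we have

$$\begin{aligned} \Vert (u_1(t)-u_2(t))^+\Vert _{L^q(X,\nu )}\le \Vert (u_{1,0}-u_{2,0})^+\Vert _{L^q(X,\nu )} \quad \hbox {for all } t \ge 0, \end{aligned}$$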

where \(r^+:=\max \{r,0\}\) for \(r\in {\mathbb {R}}\).

2 Perimeter, curvature and total variation in metric random walk spaces

2.1 m-perimeter

Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). We define the m-interaction between two \(\nu \)-measurable subsets A and B of X as

$$\begin{aligned} L_m(A,B):= \int _A \int _B dm_x(y) d\nu (x). \end{aligned}$$

Whenever \(L_m(A,B) < +\infty \), by the reversibility assumption on \(\nu \) with respect to m, we have

$$\begin{aligned} L_m(A,B)=L_m(B,A). \end{aligned}$$

Following the interpretation given after Example 1.1 (1), for a \(\nu \)-homogeneous population which moves according to the law provided by the random walk m, \(L_m(A,B)\) measures how many individuals are moving from A to B, and, thanks to the reversibility, this is equal to the amount of individuals moving from B to A. In this regard, the following concept measures the total flux of individuals that cross the “boundary” (in a very weak sense) of a set.

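We define the concept of m-perimeter of a \(\nu \)-measurable subset \(E \subset X\) as

$$\begin{aligned} P_m(E)=L_m(E,X{\setminus } E) = \int _E \int _{X{\setminus } E} dm_x(y) d\nu (x). \end{aligned}$$

It is easy to see that

$$\begin{aligned} P_m(E) = \frac{1}{2} \int _{X} \int _{X} \vert \upchi _{E}(y) - \upchi _{E}(x) \vert dm_x(y) d\nu (x). \end{aligned}$$

Moreover, if E is \(\nu \)-integrable, we have

$$\begin{aligned} P_m(E)=\nu (E) -\int _E\int _E dm_x(y) d\nu (x). \end{aligned} \tag{2.1}$$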

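The notion of m-perimeter can be localized to a bounded open set \(\Omega \subset X\) by defining

$$\begin{aligned} P_m(E,\Omega ):= L_m(E\cap \Omega , X{\setminus } E) + L_m(E{\setminus } \Omega , \Omega {\setminus } E). \end{aligned}$$

Observe that

$$\begin{aligned} L_m(E\cap \Omega , X{\setminus } E) + L_m(E{\setminus } \Omega , \Omega {\setminus } E) = L_m(E, X{\setminus } E) - L_m(E{\setminus } \Omega , X{\setminus } (E\cup \Omega )) \end{aligned}$$

and, consequently, we have

$$\begin{aligned} P_m(E,\Omega ) = \int _E \int _{X{\setminus } E} dm_x(y) d\nu (x) - \int _{E{\setminus } \Omega } \int _{X{\setminus } (E\cup \Omega )} dm_x(y) d\nu (x) \end{aligned}$$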

when both integrals are finite.

Example 2.1

-

(1)

Let \([{\mathbb {R}}^N, d, m^J]\) be the metric random walk space given in Example 1.1 (1) with invariant measure \(\mathcal {L}^N\). Then,

$$\begin{aligned} P_{m^J} (E) = \frac{1}{2} \int _{{\mathbb {R}}^N} \int _{{\mathbb {R}}^N} \vert \upchi _{E}(y) - \upchi _{E}(x) \vert J(x -y) dy dx, \end{aligned}$$which coincides with the concept of J-perimeter introduced in [34]. On the other hand,

$$\begin{aligned} P_{ m^{J,\Omega }} (E) = \frac{1}{2} \int _{\Omega } \int _{\Omega } \vert \upchi _{E}(y) - \upchi _{E}(x) \vert J(x -y) dy dx. \end{aligned}$$Note that, in general, \(P_{ m^{J,\Omega }} (E) \not = P_{m^J} (E).\)

Moreover,

$$\begin{aligned} P_{ m^{J,\Omega }} (E)= & {} \mathcal {L}^N(E) - \int _E \int _E dm_x^{J,\Omega }(y) dx = \mathcal {L}^N(E) - \int _E \int _E J(x-y) dy dx \\&-\, \int _E \left( \int _{{\mathbb {R}}^N {\setminus } \Omega } J(x - z) dz\right) dx \end{aligned}$$and, therefore,

$$\begin{aligned} P_{ m^{J,\Omega }} (E) = P_{ m^{J}} (E) - \int _E \left( \int _{{\mathbb {R}}^N {\setminus } \Omega } J(x - z) dz\right) dx, \quad \forall \, E \subset \Omega . \end{aligned} \tag{2.2}$$

-

(2)

In the case of the metric random walk space \([V(G), d_G, m^G ]\) associated to a finite weighted discrete graph G, given \(A, B \subset V(G)\), \(\mathrm{Cut}(A,B)\) is defined as

$$\begin{aligned} \mathrm{Cut}(A,B):= \sum _{x \in A, y \in B} w_{xy} = L_{m^G}(A,B), \end{aligned}$$and the perimeter of a set \(E \subset V(G)\) is given by

$$\begin{aligned} \vert \partial E \vert := \mathrm{Cut}(E,E^c) = \sum _{x \in E, y \in V {\setminus } E} w_{xy}. \end{aligned}$$Consequently, we have that

$$\begin{aligned} \vert \partial E \vert = P_{m^G}(E) \quad \hbox {for all} \ E \subset V(G). \end{aligned}$$
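On a finite weighted graph, \(dm^G_x(y)d\nu _G(x)\) assigns mass \(w_{xy}\) to the pair (x, y), so the different expressions for the perimeter become finite sums that can be compared directly. The following minimal Python sketch evaluates three of them for a small graph: the cut \(\mathrm{Cut}(E,E^c)\), the symmetric double sum (the discrete analogue of the formula in Example 2.1 (1)), and the volume form \(\nu (E) - \int _E\int _E dm_x(y)d\nu (x)\) (the analogue of the first equality displayed for \(P_{m^{J,\Omega }}\)). The function names and the example graph are hypothetical.

```python
def interaction(weights, A, B):
    """L_{m^G}(A, B) = Cut(A, B): sum of w_xy over x in A and y in B."""
    return sum(weights[x][y] for x in A for y in B)


def perimeter_as_cut(weights, E):
    """Cut(E, E^c)."""
    complement = set(range(len(weights))) - set(E)
    return interaction(weights, E, complement)


def perimeter_as_double_sum(weights, E):
    """(1/2) * sum_x sum_y |chi_E(y) - chi_E(x)| w_xy."""
    n = len(weights)
    chi = [1 if x in E else 0 for x in range(n)]
    total = sum(abs(chi[y] - chi[x]) * weights[x][y]
                for x in range(n) for y in range(n))
    return total / 2


def perimeter_as_volume_defect(weights, E):
    """nu_G(E) - sum_{x, y in E} w_xy."""
    volume = sum(sum(weights[x]) for x in E)
    return volume - interaction(weights, E, E)


# Hypothetical 4-vertex connected graph with symmetric weights.
W = [
    [0, 2, 1, 0],
    [2, 0, 3, 0],
    [1, 3, 0, 4],
    [0, 0, 4, 0],
]
E = {0, 1}
p_cut = perimeter_as_cut(W, E)
p_sum = perimeter_as_double_sum(W, E)
p_vol = perimeter_as_volume_defect(W, E)
print(p_cut, p_sum, p_vol)
```

The three values agree because the weights are symmetric, which is the graph version of the reversibility of \(\nu _G\).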

Let us now give some properties of the m-perimeter.

Proposition 2.2

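Let \(A,\ B \subset X\) be \(\nu \)-measurable sets with finite m-perimeter such that \(\nu (A \cap B) = 0\). Then,

$$\begin{aligned} P_m(A \cup B) = P_m(A) + P_m(B) - 2 L_m(A,B). \end{aligned}$$

Proof

We have

$$\begin{aligned} P_m(A \cup B)&= L_m(A \cup B, X {\setminus } (A \cup B)) = L_m(A, X {\setminus } (A \cup B)) + L_m(B, X {\setminus } (A \cup B)) \\&= P_m(A) - L_m(A,B) + P_m(B) - L_m(B,A), \end{aligned}$$

and then, by the reversibility assumption on \(\nu \) with respect to m,

$$\begin{aligned} P_m(A \cup B) = P_m(A) + P_m(B) - 2 L_m(A,B). \end{aligned}$$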

\(\square \)

Corollary 2.3

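Let \(A,\ B,\ C\) be \(\nu \)-measurable sets in X with pairwise \(\nu \)-null intersections. Then

$$\begin{aligned} P_m(A \cup B \cup C) = P_m(A \cup B) + P_m(A \cup C) + P_m(B \cup C) - P_m(A) - P_m(B) - P_m(C). \end{aligned}$$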

2.2 m-mean curvature

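Let \(E \subset X\) be \(\nu \)-measurable. For a point \(x \in X\) we define the m-mean curvature of \(\partial E\) at x as

$$\begin{aligned} H^m_{\partial E}(x):= \int _{X} (\upchi _{X {\setminus } E}(y) - \upchi _E(y)) dm_x(y). \end{aligned}$$

Observe that

$$\begin{aligned} H^m_{\partial E}(x) = 1 - 2 \int _E dm_x(y). \end{aligned}$$

Note that \(H^m_{\partial E}(x)\) can be computed for every \(x \in X\), not only for points in \(\partial E\). This fact will be used later in the paper. Having in mind (2.1), we have that, for a \(\nu \)-integrable set \(E\subset X\),

$$\begin{aligned} \int _E H^m_{\partial E}(x) d\nu (x) = \nu (E) - 2\int _E \int _E dm_x(y) d\nu (x) = P_m(E) - \int _E \int _E dm_x(y) d\nu (x). \end{aligned}$$

Consequently,

$$\begin{aligned} \int _E H^m_{\partial E}(x) d\nu (x) = 2P_m(E) - \nu (E). \end{aligned}$$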

2.3 m-total variation

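Associated to the random walk \(m=(m_x)\) and the invariant measure \(\nu \), we define the space

$$\begin{aligned} BV_m(X,\nu ):= \left\{ u :X \rightarrow {\mathbb {R}} \ \ \nu \hbox {-measurable} \, : \, \int _{X} \int _{X} \vert u(y) - u(x) \vert dm_x(y) d\nu (x) < \infty \right\} . \end{aligned}$$

We have that \(L^1(X,\nu )\subset BV_m(X,\nu )\). The m-total variation of a function \(u\in BV_m(X,\nu )\) is defined by

$$\begin{aligned} TV_m(u):= \frac{1}{2} \int _{X} \int _{X} \vert u(y) - u(x) \vert dm_x(y) d\nu (x). \end{aligned}$$

Note that

$$\begin{aligned} P_m(E) = TV_m(\upchi _E). \end{aligned} \tag{2.5}$$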

Observe that the space \(BV_m(X,\nu )\) is the nonlocal counterpart of classical local bounded variation spaces. Note further that, in the local context, given a Lebesgue measurable set \(E\subset {\mathbb {R}}^n\), its perimeter is equal to the total variation of its characteristic function (see (2.19)) and the above Eq. (2.5) provides the nonlocal counterpart. In (2.21) and Theorem 2.22 we illustrate further relations between these spaces.

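However, although they represent analogous concepts in different settings, the classical local BV-spaces and the nonlocal BV-spaces are of a different nature. For example, in our nonlocal framework \(L^1(X,\nu )\subset BV_m(X,\nu )\) in contrast with classical local bounded variation spaces that are, by definition, contained in \(L^1\). Indeed, since each \(m_x\) is a probability measure, \(x\in X\), and \(\nu \) is invariant with respect to m, we have that

$$\begin{aligned} \int _{X} \int _{X} \vert u(y) - u(x) \vert dm_x(y) d\nu (x) \le \int _{X} \int _{X} \vert u(y) \vert dm_x(y) d\nu (x) + \int _{X} \int _{X} \vert u(x) \vert dm_x(y) d\nu (x) = 2 \Vert u \Vert _{L^1(X,\nu )} \end{aligned}$$

for every \(u \in L^1(X,\nu )\).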

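Recall the definition of the generalized product measure \(\nu \otimes m_x\) (see, for instance, [3]), which is defined as the measure on \(X \times X\) given by

$$\begin{aligned} \nu \otimes m_x(U):= \int _X \int _X \upchi _{U}(x,y) dm_x(y) d\nu (x) \quad \hbox {for } U\in \mathcal {B}(X\times X), \end{aligned}$$

where it is required that the map \(x \mapsto m_x(E)\) is \(\nu \)-measurable for any Borel set \(E \in \mathcal {B}(X)\). Moreover, it holds that

$$\begin{aligned} \int _{X \times X} g \, d(\nu \otimes m_x) = \int _X \int _X g(x,y) dm_x(y) d\nu (x) \end{aligned}$$

for every \(g\in L^1(X\times X,\nu \otimes m_x)\). Therefore, we can write

$$\begin{aligned} TV_m(u)= \frac{1}{2} \int _{X \times X} \vert u(y) - u(x) \vert d(\nu \otimes m_x)(x,y). \end{aligned}$$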

Example 2.4

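Let \([V(G), d_G, (m^G_x)]\) be the metric random walk space given in Example 1.1 (3) with invariant and reversible measure \(\nu _G\). Then,

$$\begin{aligned} TV_{m^G} (u) = \frac{1}{2} \sum _{x \in V(G)} \sum _{y \in V(G)} \vert u(y) - u(x) \vert w_{xy}, \end{aligned}$$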

which coincides with the anisotropic total variation defined in [50].
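As a small illustration of Example 2.4, the following Python sketch evaluates the graph total variation as the weighted double sum \(\frac{1}{2}\sum _{x}\sum _{y} |u(y)-u(x)| w_{xy}\) and compares it with the \(L^1(V(G),\nu _G)\)-norm \(\sum _x |u(x)| d_x\), in line with the inclusion \(L^1(X,\nu )\subset BV_m(X,\nu )\). The function names, the graph and the test functions are hypothetical and chosen only for illustration.

```python
def total_variation(weights, u):
    """Graph total variation: (1/2) * sum_x sum_y |u(y) - u(x)| w_xy."""
    n = len(weights)
    return sum(abs(u[y] - u[x]) * weights[x][y]
               for x in range(n) for y in range(n)) / 2


def l1_norm(weights, u):
    """Norm of u in L^1(V(G), nu_G): sum_x |u(x)| d_x."""
    return sum(abs(u[x]) * sum(weights[x]) for x in range(len(weights)))


# Hypothetical 4-vertex connected graph with symmetric weights.
W = [
    [0, 2, 1, 0],
    [2, 0, 3, 0],
    [1, 3, 0, 4],
    [0, 0, 4, 0],
]
u = [1, 2, 0, 3]
tv_u = total_variation(W, u)
norm_u = l1_norm(W, u)

# For a characteristic function the total variation is the perimeter Cut(E, E^c).
indicator = [1, 1, 0, 0]          # E = {0, 1}
tv_indicator = total_variation(W, indicator)

print(tv_u, norm_u, tv_indicator)
```

In this example the total variation of u is strictly smaller than its norm, and the value for the characteristic function of E = {0, 1} is the sum of the weights of the edges leaving E.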

In the following results we give some properties of the total variation.

Proposition 2.5

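If \(\phi : {\mathbb {R}}\rightarrow {\mathbb {R}}\) is Lipschitz continuous then, for every \(u \in BV_m(X,\nu )\), \(\phi (u) \in BV_m(X,\nu )\) and

$$\begin{aligned} TV_m(\phi (u)) \le \Vert \phi \Vert _{Lip} TV_m(u). \end{aligned}$$

Proof

$$\begin{aligned} TV_m(\phi (u))&= \frac{1}{2} \int _{X} \int _{X} \vert \phi (u)(y) - \phi (u)(x) \vert dm_x(y) d\nu (x) \\&\le \Vert \phi \Vert _{Lip} \frac{1}{2} \int _{X} \int _{X} \vert u(y) - u(x) \vert dm_x(y) d\nu (x) = \Vert \phi \Vert _{Lip} TV_m(u). \end{aligned}$$

\(\square \)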

Proposition 2.6

\(TV_m\) is convex and continuous in \(L^1(X, \nu )\).

Proof

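Convexity follows easily. Let us see that it is continuous. Let \(u_n \rightarrow u\) in \(L^1(X, \nu )\). Since \(\nu \) is invariant and reversible with respect to m, we have

$$\begin{aligned} \vert TV_m(u_n) - TV_m(u) \vert \le \frac{1}{2} \int _{X} \int _{X} \left( \vert u_n(y) - u(y) \vert + \vert u_n(x) - u(x) \vert \right) dm_x(y) d\nu (x) = \Vert u_n - u \Vert _{L^1(X,\nu )} \rightarrow 0. \end{aligned}$$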

\(\square \)

As in the local case, we have the following coarea formula relating the total variation of a function with the perimeter of its superlevel sets.

Theorem 2.7

(Coarea formula) For any \(u \in L^1(X,\nu )\), let \(E_t(u):= \{ x \in X \ : \ u(x) > t \}\). Then,

$$\begin{aligned} TV_m(u) = \int _{-\infty }^{+\infty } P_m(E_t(u))\, dt. \end{aligned}$$

Proof

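Since

$$\begin{aligned} u(x) = \int _0^{+\infty } \upchi _{E_t(u)}(x) \, dt - \int _{-\infty }^0 (1 - \upchi _{E_t(u)}(x)) \, dt, \end{aligned}$$

we have

$$\begin{aligned} u(y) - u(x) = \int _{-\infty }^{+\infty } \left( \upchi _{E_t(u)}(y) - \upchi _{E_t(u)}(x)\right) dt. \end{aligned}$$

Moreover, since \(u(y) \ge u(x)\) implies \(\upchi _{E_t(u)}(y) \ge \upchi _{E_t(u)} (x)\), we obtain that

$$\begin{aligned} \vert u(y) - u(x) \vert = \int _{-\infty }^{+\infty } \vert \upchi _{E_t(u)}(y) - \upchi _{E_t(u)}(x) \vert \, dt. \end{aligned}$$

Therefore, we get

$$\begin{aligned} TV_m(u)&= \frac{1}{2} \int _{X} \int _{X} \vert u(y) - u(x) \vert dm_x(y) d\nu (x) \\&= \frac{1}{2} \int _{X} \int _{X} \left( \int _{-\infty }^{+\infty } \vert \upchi _{E_t(u)}(y) - \upchi _{E_t(u)}(x) \vert \, dt \right) dm_x(y) d\nu (x) \\&= \int _{-\infty }^{+\infty } \left( \frac{1}{2} \int _{X} \int _{X} \vert \upchi _{E_t(u)}(y) - \upchi _{E_t(u)}(x) \vert dm_x(y) d\nu (x) \right) dt = \int _{-\infty }^{+\infty } P_m(E_t(u))\, dt, \end{aligned}$$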

where Tonelli–Hobson’s Theorem is used in the third equality. \(\square \)
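On a finite graph the coarea formula can be checked by hand, since the superlevel set \(E_t(u)\) only changes when t crosses a value of u. The following minimal Python sketch, under the assumption that the formula reads \(TV_m(u) = \int _{-\infty }^{+\infty } P_m(E_t(u))\,dt\), evaluates the right-hand side as a finite sum over consecutive values of u and compares it with the total variation. All function names and the example data are hypothetical.

```python
def perimeter(weights, E):
    """P_{m^G}(E) = Cut(E, E^c): total weight of the edges leaving E."""
    n = len(weights)
    return sum(weights[x][y] for x in E for y in range(n) if y not in E)


def total_variation(weights, u):
    """(1/2) * sum_x sum_y |u(y) - u(x)| w_xy."""
    n = len(weights)
    return sum(abs(u[y] - u[x]) * weights[x][y]
               for x in range(n) for y in range(n)) / 2


def coarea_integral(weights, u):
    """Integral over t of the perimeter of E_t(u) = {x : u(x) > t}.

    E_t(u) is constant for t between two consecutive values of u, it is the
    whole vertex set below min(u) and empty from max(u) on (zero perimeter in
    both cases), so the integral is a finite sum.
    """
    levels = sorted(set(u))
    total = 0
    for lower, upper in zip(levels, levels[1:]):
        superlevel = {x for x in range(len(u)) if u[x] > lower}
        total += (upper - lower) * perimeter(weights, superlevel)
    return total


# Hypothetical 4-vertex connected graph with symmetric weights.
W = [
    [0, 2, 1, 0],
    [2, 0, 3, 0],
    [1, 3, 0, 4],
    [0, 0, 4, 0],
]
u = [1, 2, 0, 3]
v = [-1, 4, 4, 0]
print(total_variation(W, u), coarea_integral(W, u))
print(total_variation(W, v), coarea_integral(W, v))
```

The example data are integers, so both sides can be compared exactly. For general real data a tolerance would be needed in the comparison.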

Let us recall the following concept of m-connectedness introduced in [34]: A metric random walk space [X, d, m] with invariant and reversible measure \(\nu \) is m-connected if, for any pair of \(\nu \)-non-null measurable sets \( A,B\subset X\) such that \(A\cup B=X\), we have \(L_m(A,B)> 0\). Moreover, in [36, Theorem 2.19], we see that this concept is equivalent to the following concept of ergodicity (see [30]) when \(\nu \) is a probability measure.

Definition 2.8

Let [X, d, m] be a metric random walk space with invariant and reversible probability measure \(\nu \). A Borel set \(B \subset X\) is said to be invariant with respect to the random walk m if \(m_x(B) = 1\) whenever x is in B. The invariant probability measure \(\nu \) is said to be ergodic if \(\nu (B) = 0\) or \(\nu (B) = 1\) for every invariant set B with respect to the random walk m.

Furthermore, by [36, Theorem 2.21], we have that \(\nu \) is ergodic if, and only if, for \(u\in L^2(X,\nu )\), \(\Delta _m u = 0\) implies that u is \(\nu \)-a.e. equal to a constant, where

$$\begin{aligned} \Delta _m u(x):= \int _X (u(y) - u(x)) dm_x(y). \end{aligned}$$

As an example, note that the metric random walk space associated to an irreducible and positive recurrent Markov chain on a countable space together with its steady state is m-connected (see [30]). Moreover, the metric random walk space \([V(G), d_G, m^G]\) associated to a locally finite weighted connected discrete graph \(G = (V(G), E(G))\) is \(m^G\)-connected. In [36] we give further examples involving the metric random walk space given in Example 1.1 (1).

Observe that, for a metric random walk space [X, d, m] with invariant and reversible measure \(\nu \), if the space is m-connected, then the m-perimeter of any \(\nu \)-measurable set E with \(0<\nu (E)<\nu (X)\) is positive.

Lemma 2.9

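Assume that \(\nu \) is ergodic and let \(u\in BV_m(X,\nu )\). Then,

$$\begin{aligned} TV_m(u) = 0 \ \Longleftrightarrow \ u \hbox { is constant } \nu \hbox {-a.e.} \end{aligned}$$

Proof

(\(\Leftarrow \)) Suppose that u is \(\nu \)-a.e. equal to a constant k, then, since \(\nu \) is invariant with respect to m, we have

$$\begin{aligned} TV_m(u) = \frac{1}{2} \int _{X} \int _{X} \vert u(y) - k \vert dm_x(y) d\nu (x) = \frac{1}{2} \int _{X} \vert u(x) - k \vert d\nu (x) = 0. \end{aligned}$$

(\(\Rightarrow \)) Suppose that

$$\begin{aligned} 0 = TV_m(u) = \frac{1}{2} \int _{X} \int _{X} \vert u(y) - u(x) \vert dm_x(y) d\nu (x). \end{aligned}$$

Then, \(\int _X |u(y)-u(x)| dm_x(y)=0\) for \(\nu \)-a.e. \(x\in X\), thus

$$\begin{aligned} \Delta _m u(x) = \int _X (u(y) - u(x)) dm_x(y) = 0 \quad \hbox {for } \nu \hbox {-a.e. } x\in X, \end{aligned}$$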

and we are done by the comments preceding the lemma. \(\square \)

From now on we will assume that the metric random walk spaces that we work with are m-connected (this assumption is only dropped in Sect. 2.5). However, we would like to point out that if a metric random walk space [X, d, m] is not m-connected then it may be broken down as \(X=A\cup B\) where A, \(B\subset X\) have \(\nu \)-positive measure and \(L_m(A,B)=0\), allowing us to work with A and B independently. Then, for example, if \(E\subset X\) is a \(\nu \)-measurable set we get

and, if \(u\in BV_m(X,\nu )\),

2.4 Isoperimetric and Sobolev inequalities

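The n-dimensional isoperimetric inequality states that

$$\begin{aligned} \vert \Omega \vert ^{\frac{n-1}{n}} \le c_n \vert \partial \Omega \vert \end{aligned} \tag{2.7}$$

for every domain \(\Omega \subset {\mathbb {R}}^n\) with smooth boundary and compact closure, where \(c_n = \frac{1}{n \omega _n^{1/n}}\), and \(\omega _n\) is the volume of the unit ball. It is well known (see for instance [39]) that (2.7) is equivalent to the Sobolev inequality

$$\begin{aligned} \Vert u \Vert _{L^{\frac{n}{n-1}}({\mathbb {R}}^n)} \le c_n \int _{{\mathbb {R}}^n} \vert \nabla u \vert \, dx \quad \forall \, u \in C_0^\infty ({\mathbb {R}}^n). \end{aligned}$$

If we replace the Euclidean space \({\mathbb {R}}^n\) by a Riemannian manifold M with measure \(\mu _n\), then the isoperimetric inequality takes the following form:

$$\begin{aligned} \mu _n(\Omega )^{\frac{n-1}{n}} \le I_n \, \mu _{n-1}(\partial \Omega ) \end{aligned} \tag{2.8}$$

for all bounded sets \(\Omega \subset M\) with smooth boundary, where \(\mu _{n-1}\) is the surface measure. As in the Euclidean case (see [38] or [46]), (2.8) is equivalent to the Sobolev inequality

$$\begin{aligned} \Vert u \Vert _{L^{\frac{n}{n-1}}(M, \mu _n)} \le I_n \int _{M} \vert \nabla u \vert \, d\mu _n \quad \forall \, u \in C_0^\infty (M). \end{aligned} \tag{2.9}$$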

Consequently, it is natural to say that a Riemannian manifold M has isoperimetric dimension n if (2.9) holds (see [21]). The equivalence between isoperimetric inequalities and Sobolev inequalities in the context of Markov chains was obtained by Varopoulos in [49]. Let us state these results under the context treated here.

Definition 2.10

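Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). We say that \([X,d,m,\nu ]\) has isoperimetric dimension n if there exists a constant \(I_n>0\) such that

$$\begin{aligned} \nu (A)^{\frac{n-1}{n}} \le I_n P_m(A) \quad \hbox {for all } \ A \subset X \ \hbox {with} \ 0<\nu (A)<\nu (X). \end{aligned} \tag{2.10}$$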

We assume that, for \(n = 1\), \( \frac{n}{n-1} = +\infty \) by convention.

We will denote by \(BV^0_m(X,\nu )\) the set of functions \(u \in BV_m(X,\nu )\) satisfying that there exists \(A\subset X\), with \( 0< \nu (A)<\nu (X)\), such that \(u=0\) in \(X{\setminus } A\).

Theorem 2.11

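\([X,d,m,\nu ]\) has isoperimetric dimension n if, and only if,

$$\begin{aligned} \Vert u \Vert _{L^{\frac{n}{n-1}}(X, \nu ) } \le I_n TV_m(u) \quad \hbox {for all } \ u \in BV^0_m(X,\nu ). \end{aligned} \tag{2.11}$$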

The constant \(I_n\) is the same as in (2.10).

Proof

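(\(\Leftarrow \)) Given \(A \subset X\) with \(0<\nu (A)<\nu (X)\), applying (2.11) to \(\upchi _A\), we get

$$\begin{aligned} \nu (A)^{\frac{n-1}{n}} = \Vert \upchi _A \Vert _{L^{\frac{n}{n-1}}(X, \nu ) } \le I_n TV_m(\upchi _A) = I_n P_m(A). \end{aligned}$$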

(\(\Rightarrow \)) Let us see that (2.10) implies (2.11). Since \(TV_m(|u|) \le TV_m( u )\), we may assume that \(u \ge 0\) without loss of generality.

Suppose first that \(n=1\) and let \(u \in BV^0_m(X,\nu )\) such that \(u\ge 0\) and is not \(\nu \)-a.e. equal to 0 (otherwise, (2.11) is trivially satisfied). Note that, in this case, since u is null outside of a \(\nu \)-measurable set A with \(\nu (A)<\nu (X)\), we have \(\nu (E_t(u))<\nu (X)\) for \(t>0\) and, moreover, by the definition of the \(L^\infty (X,\nu )\)-norm, \(0<\nu (E_t(u))\) for \(t<\Vert u \Vert _{L^\infty (X,\nu )}\). Then, by the coarea formula and (2.10), we have

Therefore, we may suppose that \(n >1\). Let \(p:= \frac{n}{n-1}\). Again, by the coarea formula and (2.10), if \(u \in BV^0_m(X,\nu )\), \(u\ge 0\) and not identically \(\nu \)-null, we get

where \(\Vert u \Vert _{L^\infty (X,\nu )}=+\infty \) if \(u\notin L^\infty (X,\nu )\). On the other hand, since the function \(\varphi (t):= \nu (E_t(u))^{\frac{1}{p}}\) is nonnegative and non-increasing, we have

Integrating over (0, t) and letting \(t \rightarrow \Vert u \Vert _{L^\infty (X,\nu )}\), we obtain

that is,

Now,

Thus, by (2.13), we get

Finally, from (2.12) and (2.14), we obtain (2.11). \(\square \)

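Note that, if we take \(\Psi _n (r):= \frac{1}{I_n} r^{-\frac{1}{n}}\), we can rewrite (2.10) as

$$\begin{aligned} \Psi _n(\nu (A)) \le \frac{P_m(A)}{\nu (A)} \quad \hbox {for all } \ A \subset X \ \hbox {with} \ 0<\nu (A)<\nu (X). \end{aligned}$$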

The next definition was given in [21] for Riemannian manifolds.

Definition 2.12

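Given a non-increasing function \(\Psi : ]0, \infty [ \rightarrow [0,\infty [\), we say that \([X,d,m,\nu ]\) satisfies a \(\Psi \)-isoperimetric inequality if

$$\begin{aligned} \Psi (\nu (A)) \le \frac{P_m(A)}{\nu (A)} \quad \hbox {for all } \ A \subset X \ \hbox {with} \ 0<\nu (A)<\nu (X). \end{aligned}$$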

Example 2.13

-

(1)

In [48] (see also the references therein) it is shown that the lattice \({\mathbb {Z}}^n\) has isoperimetric dimension n with constant \(I_n= \frac{1}{2n}\), and that the complete graph \(K_n\) satisfies a \(\Psi \)-isoperimetric inequality with \(\Psi (r) = n - r \). In addition, it is also proved that the n-cube \(Q_n\) satisfies a \(\Psi \)-isoperimetric inequality with \(\Psi (r) = \mathrm{log}_2 (\frac{\nu (Q_n)}{r})\).

-

(2)

In [35], for \([\mathbb {R}^N,d,m^J]\), it is proved that

$$\begin{aligned} \Psi _{_{J,N}}(|A|)\le P_J(A) \quad \hbox {for all } \ A \subset X \ \hbox {with} \ |A| < +\infty , \end{aligned}$$

with

$$\begin{aligned} \Psi _{_{J,N}}(r)= \int _{B_{\left( r/\omega _N\right) ^\frac{1}{N}}}H^J_{\partial B_{\Vert x\Vert }}(x)dx =\int _0^{r} H_{\partial B_{\left( s/\omega _N\right) ^\frac{1}{N}}}^J (\left( s/\omega _N\right) ^\frac{1}{N}, 0, \ldots , 0)ds, \end{aligned}$$where \(B_r\) is the ball of radius r centered at 0 and \(H_{\partial B_r}^J\) is the \(m^J\)-mean curvature of \(\partial B_r\) (see Sect. 2.2). Therefore, \([\mathbb {R}^N,d,m^J, \mathcal {L}^N]\) satisfies a \(\Psi \)-isoperimetric inequality, where \(\Psi (r) = \frac{1}{r} \Psi _{_{J,N}}(r)\) is a decreasing function.

The next result was proved in [21] for Riemannian manifolds and in [20] for graphs (see also [48, Theorem 2]).

Proposition 2.14

Given a non-increasing function \(\Psi : ]0, \infty [ \rightarrow [0,\infty [\), we have that \([X,d,m,\nu ]\) satisfies a \(\Psi \)-isoperimetric inequality if, and only if, the following inequality holds:

for all \(\nu \)-measurable sets \(A \subset X\) with \(0<\nu (A)<\nu (X)\) and all \(u \in L^1(X, \nu )\) with \(u = 0 \ \hbox {in} \ X {\setminus } A.\)

Proof

Taking \(u = \upchi _A\) in (2.15), we obtain that \([X,d,m,\nu ]\) satisfies a \(\Psi \)-isoperimetric inequality. Conversely, since \(TV_m(|u|) \le TV_m( u )\), it is enough to prove (2.15) for \(u \ge 0\). If \(u\equiv 0\) in X the result is trivial. Therefore, let A be a \(\nu \)-measurable set with \(0<\nu (A)<\nu (X)\) and \(0 \le u \in L^1(X, \nu )\) a non-\(\nu \)-null function with \(u \equiv 0 \ \hbox {in} \ X {\setminus } A\). For \(t>0\) we have that \(E_t(u) \subset A\) and, therefore, \(\nu (E_t(u)) \le \nu (A)\). Thus, since \(\Psi \) is non-increasing, \(\Psi (\nu (E_t(u))) \ge \Psi (\nu (A))\). Therefore, by the coarea formula, we have

\(\square \)
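The coarea step of this proof can be sketched as follows. This is a reconstruction under stated assumptions, not a quotation: we assume that \(E_t(u)\) denotes the upper level set of u at height t, that the coarea formula reads \(TV_m(u) = \int _0^\infty P_m(E_t(u))\,dt\), and that the \(\Psi \)-isoperimetric inequality has the form \(\Psi (\nu (E))\,\nu (E) \le P_m(E)\) (which is the form obtained by taking \(u = \upchi _A\) in Corollary 2.15 (ii)). With these, for \(u \ge 0\) vanishing outside A,

$$\begin{aligned} TV_m(u)&= \int _0^{\infty } P_m(E_t(u))\,dt \ge \int _0^{\infty } \Psi (\nu (E_t(u)))\,\nu (E_t(u))\,dt \\&\ge \Psi (\nu (A)) \int _0^{\infty } \nu (E_t(u))\,dt = \Psi (\nu (A))\, \Vert u \Vert _{L^1(X,\nu )}, \end{aligned}$$

where the last equality is the layer-cake representation of the \(L^1\)-norm of a nonnegative function.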

As a consequence of Theorem 2.11 and Proposition 2.14, we obtain the following result.

Corollary 2.15

The following assertions are equivalent:

- (i)

\(\Vert u \Vert _{L^{\frac{n}{n-1}}(X, \nu ) } \le I_n TV_m(u) \quad \forall u \in BV^0_m(X,\nu ).\)

- (ii)

\( \Vert u \Vert _{L^1(X, \nu )} \le I_n \nu (A)^{\frac{1}{n}}TV_m(u)\) for all \(A \subset X\) with \(0<\nu (A)<\nu (X)\) and all \(u \in L^1(X, \nu )\) with \(u = 0\) in \(X {\setminus } A.\)

Consider the Dirichlet energy functional \(\mathcal {H}_m : L^2(X, \nu ) \rightarrow [0, + \infty ]\) defined as

The next result, in the context of Markov chains, was obtained by Varopoulos in [49].

Theorem 2.16

Let \(n >2\). If the Sobolev inequality

holds, then there exists \(C_n >0\) such that

Proof

We can assume that \(u \ge 0\). Let \(p:= \frac{2(n-1)}{n-2}\). By (2.16), we have

On the other hand, since, for \(a,b>0\),

by the convexity of \(|x|^p\), and having in mind the reversibility of \(\nu \), we have

Then, by (2.17), we get

Now,

thus, from (2.18),

and, therefore,

where \(C_n = \frac{8(n-1)^2}{(n-2)^2} I_n^2.\) \(\square \)

Following Theorems 2.11 and 2.16 we can also obtain a Sobolev inequality as a consequence of the isoperimetric dimensional inequality.

Corollary 2.17

Assume that \(\nu (X) < \infty \). Let \(n >2\). If \([X,d,m,\nu ]\) has isoperimetric dimension n then there exists \(C_n >0\) such that

Let us point out that an important consequence of this result is Theorem 5 in [19], which corresponds to Corollary 2.17 for the particular case of finite weighted graphs.

2.5 m-TV versus TV in metric measure spaces

Let \((X, d, \nu )\) be a metric measure space and recall that, for functions in \(L^1(X, \nu )\), Miranda introduced a local notion of total variation in [41] (see also [2]). To define this notion, first note that for a function \(u : X \rightarrow {\mathbb {R}}\), its slope (or local Lipschitz constant) is defined as

with the convention that \(\vert \nabla u \vert (x) = 0\) if x is an isolated point.

A function \(u \in L^1(X, \nu )\) is said to be a BV-function if there exists a sequence \((u_n)\) of locally Lipschitz functions converging to u in \(L^1(X, \nu )\) and such that

We shall denote the space of all BV-functions by \(BV(X,d, \nu )\). Let \(u \in BV(X,d, \nu )\), the total variation of u on an open set \(A \subset X\) is defined as:

A set \(E \subset X\) is said to be of finite perimeter if \(\upchi _E \in BV(X,d, \nu )\) and its perimeter is defined as

We want to point out that in [2] the BV-functions are characterized using different notions of total variation.

As aforementioned, the local classical BV-spaces and the nonlocal BV-spaces are of different nature although they represent analogous concepts in different settings. In this section we compare these spaces, showing that it is possible to relate the nonlocal concept to the local one after rescaling and taking limits.

Remark 2.18

Obviously,

Furthermore, there exist metric measure spaces in which the equality in this expression does not hold (see [4, Remark 4.4]).

Proposition 2.19

Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). Let \(u\in BV(X,d,\nu )\). Then \(u\in BV(X,d,m_x)\) for \(\nu \)-a.e. \(x\in X\) and

Proof

Since \(u \in BV(X,d, \nu )\), there exists a sequence \(\{ u_n \}_{n \in {\mathbb {N}}} \subset Lip_{\text {loc}}(X,\nu )\) such that

Now, using the invariance of \(\nu \),

Therefore, we may take a subsequence, which we still denote by \(u_{n}\), such that \(\lim _{n \rightarrow \infty }\Vert u_n - u \Vert _{L^1(X, m_x)}=0\) for \(\nu \)-a.e. \(x\in X\).

Moreover, by Fatou’s lemma and the invariance of \(\nu \),

Consequently, \(\liminf _{n \rightarrow \infty } \int _{X} \vert \nabla u_n \vert (y) dm_x(y)<\infty \) and \(\lim _{n\rightarrow \infty }u_n=u\) in \(L^1(X,m_x)\) for \(\nu \)-a.e. \(x\in X\), thus \(u\in BV(X,d,m_x)\) for \(\nu \)-a.e. \(x\in X\), and

\(\square \)

It is shown in [35] that, in the context of Example 1.1 (1), and assuming that J satisfies

we have that

for every \(u \in BV({\mathbb {R}}^N)\).

In the next example we see that there exist metric random walk spaces in which it is not possible to obtain an inequality like (2.20).

Example 2.20

Let \(G= (V(G), E(G))\) be a locally finite weighted discrete graph with weights \(w_{x,y}\). For a fixed \(x_0 \in V(G)\) the function \(u = \upchi _{\{x_0\}}\) is a Lipschitz function and, since every vertex is isolated for the graph distance, \(|\nabla u|\equiv 0\), thus

However, by Example 2.4, we have

Let \([\mathbb {R}^N,d,m^J]\) be the metric random walk space of Example 1.1 (1). Then, if J is compactly supported and \(u \in BV({\mathbb {R}}^N)\) has compact support we have that (see [23] and [34])

where

In particular, if we take

then

Hence,

and, consequently, by (2.21), we have

Therefore, it is natural to pose the following problem: Let \((X,d, \mu )\) be a metric measure space and let \(m^{\mu ,\epsilon }\) be the \(\epsilon \)-step random walk associated to \(\mu \), that is,

Are there metric measure spaces for which

To give a positive answer to the previous question we recall the following concepts on a metric measure space \((X,d, \nu )\): The measure \(\nu \) is said to be doubling if there exists a constant \(C_D \ge 1\) such that

A doubling measure \(\nu \) has the following property. For every \(x \in X\) and \(0< r \le R < \infty \) if \(y \in B(x,R)\) then

where C is a positive constant depending only on \(C_D\) and \(q_{\nu } = \log _2 C_D\).

On the other hand, the metric measure space \((X,d, \nu )\) is said to support a 1-Poincaré inequality if there exist constants \(c>0\) and \(\lambda \ge 1\) such that, for any \(u \in \mathrm{Lip}(X,d)\), the inequality

holds, where

The following result is proved in [33, Theorem 3.1].

Theorem 2.21

[33] Let \((X,d, \nu )\) be a metric measure space with \(\nu \) doubling and supporting a 1-Poincaré inequality. Given \(u \in L^1(X, \nu )\), we have that \(u \in BV(X,d,\nu )\) if, and only if,

where \(\Delta _\epsilon := \{ (x,y) \in X \times X \ : \ d(x,y) < \epsilon \}\). Moreover, there is a constant \(C \ge 1\), that depends only on \((X,d, \nu )\), such that

Now, by Fubini’s Theorem, we have

On the other hand, by (2.22), there exists a constant \(C_1 >0\), depending only on \(C_D\), such that

By (2.24), we have

Hence, from (2.23) and (2.25), we get

Therefore, we can rewrite Theorem 2.21 as follows.

Theorem 2.22

Let \((X,d, \nu )\) be a metric measure space with doubling measure \(\nu \) and supporting a 1-Poincaré inequality. Given \(u \in L^1(X, \nu )\), we have that \(u \in BV(X,d,\nu )\) if, and only if,

Moreover, there is a constant \(C \ge 1\), that depends only on \((X,d, \nu )\), such that

Remark 2.23

Monti, in [42], defines

and uses this to prove rearrangement theorems in the setting of metric measure spaces. Moreover, he proposes \(\Vert \nabla u\Vert _{L^1(X,\mu )}^-\) as a possible definition of the \(L_1\)-length of the gradient of functions in metric measure spaces.

3 The 1-Laplacian and the total variation flow in metric random walk spaces

Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). Assume, as aforementioned, that [X, d, m] is m-connected.

Given a function \(u : X \rightarrow {\mathbb {R}}\) we define its nonlocal gradient \(\nabla u: X \times X \rightarrow {\mathbb {R}}\) as

which should not be confused with the slope \(\vert \nabla u \vert (x)\), \(x\in X\), introduced in Sect. 2.5.

For a function \(\mathbf{z}: X \times X \rightarrow {\mathbb {R}}\), its m-divergence \(\mathrm{div}_m \mathbf{z}: X \rightarrow {\mathbb {R}}\) is defined as

and, for \(p \ge 1\), we define the space

Let \(u \in BV_m(X,\nu ) \cap L^{p'}(X,\nu )\) and \(\mathbf{z}\in X_m^p(X)\), \(1\le p\le \infty \), having in mind that \(\nu \) is reversible, we have the following Green’s formula:

In the next result we characterize \(TV_m\) and the m-perimeter using the m-divergence operator. Let us denote by \(\hbox {sign}_0(r)\) the usual sign function and by \(\hbox {sign}(r)\) the multivalued sign function:

Proposition 3.1

Let \(1\le p\le \infty \). For \(u \in BV_m(X,\nu ) \cap L^{p'}(X,\nu )\), we have

In particular, for any \(\nu \)-measurable set \(E \subset X\), we have

Proof

Let \(u \in BV_m(X,\nu ) \cap L^{p'}(X,\nu )\). Given \(\mathbf{z}\in X_m^p(X)\) with \(\Vert \mathbf{z}\Vert _{L^\infty (X\times X, \nu \otimes m_x)} \le 1\), applying Green’s formula (3.1), we have

Therefore,

On the other hand, since (X, d) is \(\sigma \)-finite, there exists a sequence of sets \(K_1 \subset K_2 \subset \cdots \subset K_n \subset \cdots \) of \(\nu \)-finite measure, such that \(X = \cup _{n=1}^\infty K_n\). Then, if we define \(\mathbf{z}_n(x,y):= \mathrm{sign}_0(u(y) - u(x))\upchi _{K_n \times K_n}(x,y)\), we have that \(\mathbf{z}_n \in X_m^p(X)\) with \(\Vert \mathbf{z}_n \Vert _{L^\infty (X\times X, \nu \otimes m_x)} \le 1\) and

\(\square \)

Corollary 3.2

\(TV_m\) is lower semi-continuous with respect to the weak convergence in \(L^2(X, \nu )\).

Proof

If \(u_n \rightharpoonup u\) weakly in \(L^2(X, \nu )\) then, given \(\mathbf{z}\in X_m^2(X)\) with \(\Vert \mathbf{z}\Vert _{L^\infty (X\times X, \nu \otimes m_x)} \le 1\), we have that

by Proposition 3.1. Now, taking the supremum over \(\mathbf{z}\) in this inequality, we get

\(\square \)

Consider the formal nonlocal evolution equation

In order to study the Cauchy problem associated to the previous equation, we will see in Theorem 3.8 that we can rewrite it as the gradient flow in \(L^2(X,\nu )\) of the functional \(\mathcal {F}_m : L^2(X, \nu ) \rightarrow ]-\infty , + \infty ]\) defined by

which is convex and lower semi-continuous. Following the method used in [5] we will characterize the subdifferential of the functional \(\mathcal {F}_m\).

Given a functional \(\Phi : L^2(X,\nu ) \rightarrow [0, \infty ]\), we define \(\widetilde{\Phi }: L^2(X,\nu ) \rightarrow [0, \infty ]\) as

with the convention that \(\frac{0}{0} = \frac{0}{\infty } = 0\). Obviously, if \(\Phi _1 \le \Phi _2\), then \(\widetilde{\Phi }_2 \le \widetilde{\Phi }_1\).

Theorem 3.3

Let \(u \in L^2(X,\nu )\) and \(v \in L^2(X,\nu )\). The following assertions are equivalent:

- (i)

\(v \in \partial \mathcal {F}_m (u)\);

- (ii)

there exists \(\mathbf{z}\in X_m^2(X)\), \(\Vert \mathbf{z}\Vert _{L^\infty (X\times X, \nu \otimes m_x)} \le 1\) such that

$$\begin{aligned} v = - \mathrm{div}_m \mathbf{z}\end{aligned}$$(3.4)

and

$$\begin{aligned} \int _{X} u(x) v(x) d\nu (x) = \mathcal {F}_m (u); \end{aligned}$$

- (iii)

there exists \(\mathbf{z}\in X_m^2(X)\), \(\Vert \mathbf{z}\Vert _{L^\infty (X\times X, \nu \otimes m_x)} \le 1\) such that (3.4) holds and

$$\begin{aligned} \mathcal {F}_m (u) = \frac{1}{2}\int _{X \times X} \nabla u(x,y) \mathbf{z}(x,y) d(\nu \otimes m_x)(x,y); \end{aligned}$$

- (iv)

there exists \(\mathbf{g}\in L^\infty (X\times X, \nu \otimes m_x)\) antisymmetric with \(\Vert \mathbf{g} \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) such that

$$\begin{aligned} -\int _{X}\mathbf{g}(x,y)\,dm_x(y)= v(x) \quad \hbox {for }\nu -\text{ a.e } x\in X, \end{aligned}$$(3.5)

and

$$\begin{aligned} -\int _{X} \int _{X}{} \mathbf{g}(x,y)dm_x(y)\,u(x)d\nu (x)=\mathcal {F}_m(u); \end{aligned}$$(3.6)

- (v)

there exists \(\mathbf{g}\in L^\infty (X\times X, \nu \otimes m_x)\) antisymmetric with \(\Vert \mathbf{g} \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) verifying (3.5) and

$$\begin{aligned} \mathbf{g}(x,y) \in \mathrm{sign}(u(y) - u(x)) \quad \hbox {for }(\nu \otimes m_x)-a.e. \ (x,y) \in X \times X. \end{aligned}$$(3.7)

Proof

Since \(\mathcal {F}_m\) is convex, lower semi-continuous and positive homogeneous of degree 1, by [5, Theorem 1.8], we have

We define, for \(v \in L^2(X,\nu )\),

Observe that \(\Psi \) is convex, lower semi-continuous and positive homogeneous of degree 1. Moreover, it is easy to see that, if \(\Psi (v) < \infty \), the infimum in (3.9) is attained, i.e., there exists some \(\mathbf{z}\in X_m^2(X)\) such that \(v = - \mathrm{div}_m\mathbf{z}\) and \(\Psi (v) = \Vert \mathbf{z}\Vert _{L^\infty (X\times X, \nu \otimes m_x)}.\)

Let us see that

We begin by proving that \(\widetilde{\mathcal {F}_m}(v) \le \Psi (v)\). If \(\Psi (v) = +\infty \) then this assertion is trivial. Therefore, suppose that \(\Psi (v) < +\infty \). Let \(\mathbf{z}\in L^\infty (X \times X,\nu \otimes m_x)\) be such that \(v = - \mathrm{div}_m \mathbf{z}\). Then, for \(w\in L^2(X,\nu )\), we have

Taking the supremum over w we obtain that \(\widetilde{\mathcal {F}_m}(v) \le \Vert \mathbf{z}\Vert _{L^\infty (X\times X, \nu \otimes m_x)}\). Now, taking the infimum over \(\mathbf{z}\), we get \(\widetilde{\mathcal {F}_m}(v) \le \Psi (v)\).

To prove the opposite inequality let us denote

Then, by (3.2), we have that, for \(v\in L^2(X, \nu )\),

Thus, \( \mathcal {F}_m \le \widetilde{ \Psi }\), which implies, by [5, Proposition 1.6], that \(\Psi = \widetilde{\widetilde{\Psi }} \le \widetilde{ \mathcal {F}_m}\). Therefore, \(\Psi = \widetilde{\mathcal {F}_m}\), and, consequently, from (3.8), we get

from where the equivalence between (i) and (ii) follows.

To prove the equivalence between (ii) and (iii) we only need to apply Green’s formula (3.1).

On the other hand, to see that (iii) implies (iv), it is enough to take \(\mathbf{g}(x,y)=\frac{1}{2}(\mathbf{z}(x,y)-\mathbf{z}(y,x))\). Moreover, to see that (iv) implies (ii), take \(\mathbf{z}(x,y)= \mathbf{g}(x,y)\) (observe that, from (3.5), \(- \mathrm{div}_m(\mathbf{g}) = v\), so \(\mathbf{g}\in X^2_m(X)\)). Finally, to see that (iv) and (v) are equivalent, we need to show that (3.6) and (3.7) are equivalent. Now, since \(\mathbf{g}\) is antisymmetric with \(\Vert \mathbf{g} \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) and \(\nu \) is reversible, we have

from where the equivalence between (3.6) and (3.7) follows. \(\square \)
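On a finite weighted graph, conditions (3.5), (3.6) and (3.7) can be checked by direct computation. The sketch below is only an illustration: the four-vertex graph, its weights and the function u are hypothetical, and we assume the graph setting of Example 1.1 (3) in the form \(m_x(\{y\}) = w_{xy}/d_x\) and \(\nu _G(\{x\}) = d_x\). It takes \(\mathbf{g}(x,y) = \mathrm{sign}_0(u(y)-u(x))\), builds v through (3.5), and verifies that \(\int u v\, d\nu _G\) equals the total variation of u, and also that \(\int v \, d\nu _G = 0\), the latter using only the antisymmetry of \(\mathbf{g}\) and the symmetry of the weights.

```python
from fractions import Fraction

# Hypothetical symmetric weights on a connected 4-vertex graph (illustration only).
W = {
    ("a", "b"): Fraction(2),
    ("b", "c"): Fraction(1),
    ("c", "d"): Fraction(3),
    ("a", "c"): Fraction(1, 2),
}
V = ["a", "b", "c", "d"]


def weight(x, y):
    """Symmetric weight w_xy; zero when x and y are not joined by an edge."""
    return W.get((x, y), W.get((y, x), Fraction(0)))


def degree(x):
    """d_x = sum_y w_xy, assumed to be nu_G({x})."""
    return sum(weight(x, y) for y in V)


def sign0(r):
    """Single-valued sign function with sign0(0) = 0."""
    return (r > 0) - (r < 0)


def total_variation(u):
    """TV(u) = 1/2 * sum_x sum_y |u(y) - u(x)| w_xy, using d_x * m_x({y}) = w_xy."""
    return Fraction(1, 2) * sum(
        abs(u[y] - u[x]) * weight(x, y) for x in V for y in V
    )


def subgradient_element(u):
    """v(x) = -sum_y g(x,y) m_x({y}) with g(x,y) = sign0(u(y) - u(x)), as in (3.5)."""
    return {
        x: -sum(sign0(u[y] - u[x]) * weight(x, y) for y in V) / degree(x)
        for x in V
    }


u = {"a": Fraction(3), "b": Fraction(-1), "c": Fraction(0), "d": Fraction(0)}
v = subgradient_element(u)
pairing = sum(u[x] * v[x] * degree(x) for x in V)  # integral of u*v against nu_G
mass = sum(v[x] * degree(x) for x in V)            # integral of v against nu_G
```

Exact rational arithmetic is used so that the identities hold as equalities rather than up to rounding.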

By Theorem 3.3 and following [6, Theorem 7.5], the next result is easy to prove.

Proposition 3.4

\(\partial \mathcal {F}_m\) is an m-completely accretive operator in \(L^2(X,\nu )\).

Definition 3.5

We define in \(L^2(X,\nu )\) the multivalued operator \(\Delta ^m_1\) by

\((u, v ) \in \Delta ^m_1\) if, and only if, \(-v \in \partial \mathcal {F}_m(u)\).

As usual, we will write \(v\in \Delta ^m_1 u\) for \((u,v)\in \Delta ^m_1\).

Chang in [14] and Hein and Bühler in [29] define a similar operator in the particular case of finite graphs:

Example 3.6

Let \([V(G), d_G, (m^G_x)]\) be the metric random walk given in Example 1.1 (3) with invariant measure \(\nu _G\). By Theorem 3.3, we have

and

The next example shows that the operator \(\Delta ^{m^G}_1\) is indeed multivalued. Let \(V(G) = \{ a, b \}\) and \(w_{aa} = w_{bb} = p\), \(w_{ab} = w_{ba} = 1- p\), with \(0< p <1\). Then,

and

Now, since \(\mathbf{g}\) is antisymmetric, we get

Proposition 3.7

[Integration by parts] For any \((u,v)\in \Delta _1^m\) it holds that

and

Proof

Since \(-v \in \partial \mathcal {F}_m(u)\), given \(w \in BV_m(X,\nu )\), we have that

so we get (3.10). On the other hand, (3.11) is given in Theorem 3.3. \(\square \)

As a consequence of Theorem 3.3, Proposition 3.4 and on account of Theorem 1.2, we can give the following existence and uniqueness result for the Cauchy problem

which is a rewrite of the formal expression (3.3).

Theorem 3.8

For every \(u_0 \in L^2( X,\nu )\) and any \(T>0\), there exists a unique solution of the Cauchy problem (3.12) in (0, T) in the following sense: \(u \in W^{1,1}(0,T; L^2(X,\nu ))\), \(u(0, \cdot ) = u_0\) in \(L^2(X,\nu )\), and, for almost all \(t \in (0,T)\),

Moreover, we have the following contraction and maximum principle in any \(L^q(X,\nu )\)–space, \(1\le q\le \infty \):

for any pair of solutions, \(u,\, v\), of problem (3.12) with initial data \(u_0,\, v_0\) respectively.

Definition 3.9

Given \(u_0 \in L^2(X, \nu )\), we denote by \(e^{t \Delta ^m_1}u_0\) the unique solution of problem (3.12). We call the semigroup \(\{e^{t\Delta ^m_1} \}_{t \ge 0}\) in \(L^2(X, \nu )\) the Total Variational Flow in the metric random walk space [X, d, m] with invariant and reversible measure \(\nu \).

In the next result we give an important property of the total variational flow in metric random walk spaces.

Proposition 3.10

The TVF satisfies the mass conservation property: for \(u_0 \in L^2(X, \nu )\),

Proof

By Proposition 3.7, we have

and

Hence,

and, consequently,

\(\square \)

4 Asymptotic behaviour of the TVF and Poincaré type inequalities

Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). Assume as always that [X, d, m] is m-connected.

Proposition 4.1

For every initial data \(u_0 \in L^2(X, \nu )\),

with

Moreover, if \(\nu (X) < \infty \) then

Proof

Since \(\mathcal {F}_m\) is a proper and lower semicontinuous function in X attaining a minimum at the constant zero function and, moreover, \(\mathcal {F}_m\) is even, by [11, Theorem 5], we have

with

Now, since \(0 \in \Delta _1^m (u_\infty ) \), we have that \(TV_m(u_\infty ) = 0\) thus, by Lemma 2.9, if \(\nu (X)<\infty \) (then \(\frac{1}{\nu (X)}\nu \) is ergodic) we get that \(u_\infty \) is constant. Therefore, by Proposition 3.10,

\(\square \)

Let us see that we can get a rate of convergence of the total variational flow \((e^{t\Delta ^m_1})_{t \ge 0}\) when a Poincaré type inequality holds.

From now on in this section we will assume that

Hence, \(\mathcal {F}_m(u) = TV_m(u)\) for all \(u\in L^2(X,\nu )\).

Definition 4.2

We say that \([X,d,m,\nu ]\) satisfies a (q, p)-Poincaré inequality (\(p, q\in [1,+ \infty [\)) if there exists a constant \(c>0\) such that, for any \(u \in L^q(X,\nu )\),

or, equivalently (by the triangle inequality for one direction and taking \({\tilde{u}}=u-\nu (u)\) for the other), there exists a \(\lambda > 0\) such that

where \(\nu (u):= \frac{1}{\nu (X)} \int _X u(x) d \nu (x)\).

When \([X,d,m,\nu ]\) satisfies a (q, p)-Poincaré inequality, we will denote

When \([X,d,m,\nu ]\) satisfies a (1, p)-Poincaré inequality, we will say that \([X,d,m,\nu ]\) satisfies a p-Poincaré inequality and write

The following result was proved in [6, Theorem 7.11] for the particular case of the metric random walk space \([\Omega , d, m^{J,\Omega }]\).

Theorem 4.3

If \([X,d,m,\nu ]\) satisfies a 1-Poincaré inequality, then, for any \(u_0 \in L^2(X, \nu )\),

Proof

Since the semigroup \(\{e^{t\Delta ^m_1 } \ : \ t \ge 0 \}\) preserves the mass (Proposition 3.10), we have

Furthermore, the complete accretivity of the operator \(-\Delta ^m_1\) (see Sect. 1.2) implies that

is a Liapunov functional for the semigroup \(\{e^{t\Delta ^m_1 } \ : \ t \ge 0 \}\), which implies that

Now, by the Poincaré inequality we get

and, by (4.2) and (4.3), we obtain that

On the other hand, by integration by parts (Proposition 3.7),

and then

which implies

Hence, by (4.4)

which concludes the proof. \(\square \)

To obtain a family of metric random walk spaces for which a 1-Poincaré inequality holds, we need the following result.

Lemma 4.4

Suppose that \(\nu \) is a probability measure (thus ergodic) and

Let \(q\ge 1\). Let \(\{u_n\}_n\subset L^q(X,\nu )\) be a bounded sequence in \(L^1(X,\nu )\) satisfying

Then, there exists \(\lambda \in \mathbb {R}\) such that

Proof

Let

and

From (4.5), it follows that

Passing to a subsequence if necessary, we can assume that

On the other hand, by (4.5), we also have that

Therefore, we can suppose that, up to a subsequence,

Let \(B_2\subset X\) be a \(\nu \)-null set satisfying that,

Finally, set \(B:=B_1\cup B_2.\)

Fix \(x_0\in X{\setminus } B\). Up to a subsequence we have that \(u_n(x_0)\rightarrow \lambda \) for some \(\lambda \in [-\infty ,+\infty ]\), but then, by (4.7), for every \(y\in X{\setminus } C_{x_0}\) we also have that \(u_n(y)\rightarrow \lambda \). However, since \(m_{x_0}\ll \nu \) and \(m_{x_0}( X {\setminus } C_{x_0})>0\), we have that \(\nu (X{\setminus } C_{x_0})>0\); thus, if \(A=\{x\in X: u_n(x)\rightarrow \lambda \}\) then \(\nu (A)>0\).

Let us see that

Indeed, let \(x\in A{\setminus } B\). Then, for \(y\in X {\setminus } C_x\), \(u_n(y)\rightarrow \lambda \), thus \(y\in A\); that is, \(X{\setminus } C_x\subset A\), and, consequently, \(m_x(A)=1\). Now, since \(m_x(B)=0\), we have

Therefore, since \(\nu \) is ergodic, (4.8) implies that \(1=\nu (A{\setminus } B)=\nu (A)\).

Consequently, we have obtained that \(u_n\) converges \(\nu \)-a.e. in X to \(\lambda \):

Since \(\Vert u_n\Vert _{L^1 (X,\nu )}\) is bounded, by Fatou’s Lemma, we must have that \(\lambda \in {\mathbb {R}}\). On the other hand, by (4.6),

for every \(x\in X{\setminus } B_1\). In other words, \(\Vert u_n(\cdot )-u_n(x)\Vert _{L^q (X, m_x)}\rightarrow 0\). Thus

\(\square \)

Theorem 4.5

Suppose that \(\nu \) is a probability measure and

Let (H1) and (H2) denote the following hypotheses.

- (H1)

Given a \(\nu \)-null set B, there exist \(x_1,x_2,\ldots , x_N\in X{\setminus } B\), \(\nu \)-measurable sets \(\Omega _1,\Omega _2,\ldots ,\Omega _N\subset X\) and \(\alpha >0\), such that \( X= \bigcup \nolimits _{i=1}^N\Omega _i\) and \(\displaystyle \frac{dm_{x_i}}{d\nu }\ge \alpha >0\) on \(\Omega _i\), \(i=1,2,\ldots ,N\).

- (H2)

Let \( 1\le p<q\). Given a \(\nu \)-null set B, there exist \(x_1,x_2,\ldots , x_N\in X{\setminus } B\) and \(\nu \)-measurable sets \(\Omega _1,\Omega _2,\ldots ,\Omega _N\subset X\), such that \( X= \bigcup \nolimits _{i=1}^N\Omega _i\) and, for \(g_i:=\displaystyle \frac{dm_{x_i}}{d\nu } \) on \(\Omega _i\), \(\displaystyle g_i^{-\frac{p}{q-p}}\in L^{1}(\Omega _i,\nu )\), \(i=1,2,\ldots ,N\).

Then, if (H1) holds, we have that \([X,d,m,\nu ]\) satisfies a (p, p)-Poincaré inequality for every \(p\ge 1\), and, if (H2) holds, then \([X,d,m,\nu ]\) satisfies a (q, p)-Poincaré inequality.

Proof

Let \(1\le p\le q\). We want to prove that there exists a constant \(c>0\) such that

for any \(p=q\ge 1\) when assuming (H1) and for the \(1\le p<q\) appearing in (H2) when this hypothesis is assumed. Suppose that this inequality is not satisfied. Then, there exists a sequence \((u_n)_{n\in {\mathbb {N}}}\subset L^q(X,\nu )\), with \(\Vert u_n\Vert _{L^p (X,\nu )}=1\), satisfying

and

Therefore, by Lemma 4.4, there exist \(\lambda \in \mathbb {R}\) and a \(\nu \)-null set \(B\subset X\) such that

We will now prove, distinguishing the cases in which we assume hypothesis (H1) or (H2), that

Suppose first that hypothesis (H1) is satisfied. Then, there exist \(x_1,x_2,\ldots , x_N\in X{\setminus } B\), \(\nu \)-measurable sets \(\Omega _1,\Omega _2,\ldots ,\Omega _N\subset X\) and \(\alpha >0\), such that \(\displaystyle X= \bigcup _{i=1}^N\Omega _i\) and \(\displaystyle g_i:=\frac{dm_{x_i}}{d\nu }\ge \alpha >0\) on \(\Omega _i\), \(i=1,2,\ldots ,N\). Note that, in this case, \(p=q\) in the previous computations. Now,

Consequently, since \(X= \bigcup _{i=1}^N\Omega _i\),

Therefore,

Suppose now that hypothesis (H2) holds. Then, there exist \( 1\le p<q\), such that, given a \(\nu \)-null set B, there exist \(x_1,x_2,\ldots , x_N\in X{\setminus } B\) and \(\nu \)-measurable sets \(\Omega _1,\Omega _2,\ldots ,\Omega _N\subset X\), such that \(\displaystyle X= \bigcup _{i=1}^N\Omega _i\) and, for \(g_i:=\displaystyle \frac{dm_{x_i}}{d\nu }\) on \(\Omega _i\), \(\displaystyle g_i^{-\frac{p}{q-p}} \in L^{1}(\Omega _i)\), \(i=1,2,\ldots ,N\). Hence,

Consequently, since \(X= \bigcup _{i=1}^N\Omega _i\),

Therefore,

which concludes the proof of (4.9) in both cases.

Now, since \(\displaystyle \lim _n\int _X u_n\,d\nu =0\), by (4.9) we get that \(\lambda =0\), but this implies

which is a contradiction with \(||u_n||_p=1\), \(n\in {\mathbb {N}}\), so we are done. \(\square \)

On account of Theorem 4.3, we obtain the following result on the asymptotic behaviour of the TVF.

Corollary 4.6

Under the hypotheses of Theorem 4.5, for any \(u_0 \in L^2(X, \nu )\),

Example 4.7

We give two examples of metric random walk spaces in which a 1-Poincaré inequality does not hold.

- (1)

A locally finite weighted discrete graph with infinitely many vertices: Let \([V(G),d_G,m^G]\) be the metric random walk space associated to the locally finite weighted discrete graph with vertex set \(V(G):=\{x_3,x_4,x_5\ldots ,x_n\ldots \}\) and weights:

$$\begin{aligned} w_{x_{3n},x_{3n+1}}=\frac{1}{n^3} , \ w_{x_{3n+1},x_{3n+2}}=\frac{1}{n^2}, \ w_{x_{3n+2},x_{3n+3}}=\frac{1}{n^3} , \end{aligned}$$

for \(n\ge 1\), and \(w_{x_i,x_j}=0\) otherwise (recall Example 1.1 (3)). Moreover, let

$$\begin{aligned} f_n(x):=\left\{ \begin{array}{ll} \displaystyle n^2 &{}\quad \hbox {if} \ \ x=x_{3n+1},x_{3n+2} \\ \\ 0 &{}\quad \hbox {else}. \end{array}\right. \end{aligned}$$

Note that \(\nu _G(V)<+\infty \) (we avoid its normalization for simplicity). Now,

$$\begin{aligned} 2TV_{m^G}(f_n)&= \int _V\int _V \vert f_n(x)-f_n(y)\vert dm_x(y)d\nu _G(x) \\&= d_{x_{3n}}\int _V \vert f_n(x_{3n})-f_n(y)\vert dm_{x_{3n}}(y)\\&\quad +\,d_{x_{3n+1}}\int _V \vert f_n(x_{3n+1})-f_n(y) \vert dm_{x_{3n+1}}(y) \\&\quad +\,d_{x_{3n+2}}\int _V \vert f_n(x_{3n+2})-f_n(y) \vert dm_{x_{3n+2}}(y)\\&\quad +\,d_{x_{3n+3}}\int _V \vert f_n(x_{3n+3})-f_n(y) \vert dm_{x_{3n+3}}(y) \\&= n^2\frac{1}{n^3}+n^2\frac{1}{n^3}+n^2\frac{1}{n^3}+n^2\frac{1}{n^3}=\frac{4}{n} . \end{aligned}$$

However, we have

$$\begin{aligned} \int _V f_n(x)d\nu _G(x)=n^2 (d_{x_{3n+1}}+d_{x_{3n+2}})=2n^2 \left( \frac{1}{n^2}+\frac{1}{n^3}\right) =2\left( 1+\frac{1}{n}\right) , \end{aligned}$$

thus

$$\begin{aligned} \nu _G(f_n)=\frac{2\left( 1+\frac{1}{n}\right) }{\nu _G(V)}=O\left( 1\right) , \end{aligned}$$

where we use the notation

$$\begin{aligned} \varphi (n) = O(\psi (n)) \iff \limsup _{n \rightarrow \infty } \left| \frac{\varphi (n)}{\psi (n)}\right| = C \ne 0. \end{aligned}$$

Therefore,

$$\begin{aligned} \vert f_n(x)-\nu _G(f_n)\vert =\left\{ \begin{array}{ll} \displaystyle O(n^2) &{}\quad \hbox {if} \ \ x=x_{3n+1},x_{3n+2}, \\ \\ O\left( 1\right) &{}\quad \hbox {otherwise}. \end{array}\right. \end{aligned}$$

Finally,

$$\begin{aligned} \int _V \vert f_n(x)-\nu _G(f_n)\vert d\nu _G(x)&= O\left( 1\right) \sum _{x\ne x_{3n+1},x_{3n+2}}d_{x}+ O(n^2)(d_{x_{3n+1}}+d_{x_{3n+2}}) \\&= O\left( 1\right) +2 O(n^2)\left( \frac{1}{n^2}+\frac{1}{n^3}\right) =O(1) . \end{aligned}$$

Consequently,

$$\begin{aligned} \inf \left\{ \frac{TV_{m^G}(u)}{\Vert u - \nu _G(u) \Vert _{L^1(V(G),\nu _G)}} \ : \ u\in L^1(V,\nu _G) , \ \Vert u \Vert _{L^1(V(G),\nu _G)} \not = 0 \right\} =0, \end{aligned}$$

and a 1-Poincaré inequality does not hold for this space.

- (2)

The metric random walk space \([{\mathbb {R}}, d, m^J]\), where d is the Euclidean distance and \(J(x)= \frac{1}{2} \upchi _{[-1,1]}\): Define, for \(n \in {\mathbb {N}}\),

$$\begin{aligned} u_n= \frac{1}{2^{n+1}} \upchi _{[2^n, 2^{n+1}]} - \frac{1}{2^{n+1}}\upchi _{[-2^{n+1}, - 2^n]}. \end{aligned}$$

Then \(\Vert u_n \Vert _1 = 1\), \(\displaystyle \int _{\mathbb {R}}u_n(x) dx = 0\) and it is easy to see that, for n large enough,

$$\begin{aligned} TV_{m^J} (u_n) = \frac{1}{2^{n+1}}. \end{aligned}$$

Therefore, \((m^J, \mathcal {L}^1)\) does not satisfy a 1-Poincaré inequality.
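The two exact identities used in part (1) of this example, \(2TV_{m^G}(f_n) = \frac{4}{n}\) and \(\int _V f_n \, d\nu _G = 2\left( 1+\frac{1}{n}\right) \), can be checked mechanically. The following sketch assumes the graph setting of Example 1.1 (3) in the form \(d_x\, m_x(\{y\}) = w_{xy}\) and \(\nu _G(\{x\}) = d_x\); the function names and the truncation parameter `max_block` are our own and serve only to keep the (infinite) graph finite for the computation. Truncating is harmless here because \(f_n\) is supported on \(x_{3n+1}, x_{3n+2}\), whose edges all belong to block n.

```python
from fractions import Fraction


def example_weights(max_block):
    """Edges of the graph in Example 4.7 (1), truncated to blocks n = 1..max_block.

    Keys are pairs of vertex indices (i, j) standing for the edge {x_i, x_j}.
    """
    w = {}
    for n in range(1, max_block + 1):
        w[(3 * n, 3 * n + 1)] = Fraction(1, n**3)
        w[(3 * n + 1, 3 * n + 2)] = Fraction(1, n**2)
        w[(3 * n + 2, 3 * n + 3)] = Fraction(1, n**3)
    return w


def degree(w, i):
    """d_{x_i}: sum of the weights of all edges containing x_i."""
    return sum(val for (a, b), val in w.items() if i in (a, b))


def f(n, i):
    """f_n(x_i) = n^2 on x_{3n+1}, x_{3n+2} and 0 elsewhere."""
    return Fraction(n**2) if i in (3 * n + 1, 3 * n + 2) else Fraction(0)


def twice_tv(w, n):
    """2 TV(f_n): the sum over ordered pairs counts each undirected edge twice."""
    return 2 * sum(val * abs(f(n, a) - f(n, b)) for (a, b), val in w.items())


def mass(w, n):
    """Integral of f_n against nu_G; only two vertices contribute."""
    return sum(f(n, i) * degree(w, i) for i in (3 * n + 1, 3 * n + 2))


weights = example_weights(10)
checks = [
    (twice_tv(weights, n), mass(weights, n)) for n in range(1, 11)
]
```

For each n in the truncated range, the pair in `checks` should equal \(\left( \frac{4}{n}, 2\left( 1+\frac{1}{n}\right) \right) \), so the total variation tends to 0 while the mass stays bounded away from 0.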

Let us see that, when \([X,d,m,\nu ]\) satisfies a 2-Poincaré inequality, the solution of the Total Variational Flow reaches the steady state in finite time.

Theorem 4.8

Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). If \([X,d,m,\nu ]\) satisfies a 2-Poincaré inequality then, for any \(u_0 \in L^2(X, \nu )\),

where \(\lambda ^{2}_{[X,d,m,\nu ]}\) is given in (4.1). Consequently,

Proof

Let \(v(t):= u(t) - \nu (u_0)\), where \(u(t):= e^{t\Delta ^m_1}u_0\). Since \(\Delta _1^m u(t)=\Delta _1^m \big (u(t) - \nu (u_0)\big )\), we have that

Note that \(v(t)\in BV_m(X,\nu )\) for every \(t>0\). Indeed, since \(-\Delta ^m_1= \partial \mathcal {F}_m\) is a maximal monotone operator in \(L^2(X,\nu )\), by [10, Theorem 3.7] in the context of the Hilbert space \(L^2(X,\nu )\), we have that \(v(t)\in D(\Delta ^m_1)\subset BV_m(X,\nu )\) for every \(t>0\).

Hence, for each \(t>0\), by Theorem 3.3, there exists \(\mathbf{g}_t\in L^\infty (X\times X, \nu \otimes m_x)\) antisymmetric with \(\Vert \mathbf{g}_t \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) such that

and

Then, multiplying (4.10) by v(t) and integrating over X with respect to \(\nu \), having in mind (4.11), we get

Now, the semigroup \(\{e^{t\Delta ^m_1 } \ : \ t \ge 0 \}\) preserves the mass (Proposition 3.10), so we have that \(\nu (u(t)) = \nu (u_0)\) for all \(t \ge 0\), and, since \([X,d,m,\nu ]\) satisfies a 2-Poincaré inequality, we have

Therefore,

Now, integrating this ordinary differential inequality we get

that is,

\(\square \)
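Finite-time extinction can be made explicit on the two-point graph of Example 3.6, where \(w_{aa}=w_{bb}=p\) and \(w_{ab}=w_{ba}=1-p\), so that \(d_a=d_b=1\). The sketch below rests on our own derivation, not on a formula stated in the text: by (3.5) and (3.7), while \(u(a)\ne u(b)\) the two values move towards each other at speed \(1-p\), and once they coincide the choice \(\mathbf{g}\equiv 0\) keeps them constant. The function names are hypothetical.

```python
def two_point_tvf(u_a, u_b, p, t):
    """Assumed explicit TVF solution on the two-point graph at time t >= 0.

    Each value moves towards the mean at speed (1 - p) until both coincide.
    """
    rate = 1.0 - p
    mean = 0.5 * (u_a + u_b)            # d_a = d_b = 1, so nu_G is counting measure
    half_gap = 0.5 * (u_a - u_b)
    remaining = max(abs(half_gap) - rate * t, 0.0)
    direction = 1.0 if half_gap >= 0 else -1.0
    return mean + direction * remaining, mean - direction * remaining


def extinction_time(u_a, u_b, p):
    """First time at which the assumed solution reaches the mean of the initial data."""
    return abs(u_a - u_b) / (2.0 * (1.0 - p))


# Usage: initial data (3, 1) with p = 1/2 reaches the mean 2 at time 2.
state_mid = two_point_tvf(3.0, 1.0, 0.5, 1.0)
state_end = two_point_tvf(3.0, 1.0, 0.5, extinction_time(3.0, 1.0, 0.5))
```

In this sketch the sum \(u(a)+u(b)\) is constant in time, in agreement with Proposition 3.10, and the distance to the mean decreases linearly until it vanishes.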

We define the extinction time as

Under the conditions of Theorem 4.8, we have

To obtain a lower bound on the extinction time, we introduce the following norm which, in the continuous setting, was introduced in [40]. Given a function \(f \in L^2(X, \nu )\), we define

Theorem 4.9

Let \(u_0 \in L^2(X, \nu )\). If \(T^*(u_0) < \infty \) then

Proof

If \(u(t):= e^{t\Delta ^m_1}u_0\), we have

Then, by integration by parts (Proposition 3.7), we get

\(\square \)

We will now see that we can get a 2-Poincaré inequality for finite graphs.

Theorem 4.10

Let \(G = (V(G), E(G))\) be a finite weighted connected discrete graph. Then, following the notation of Example 1.1 (3), \([V(G),d_G,m^G, \nu _G]\) satisfies a 2-Poincaré inequality, that is,

Proof

Let \(V := V(G) = \{x_1, \ldots , x_m\}\) and suppose that (4.12) is false. Then, there exists a sequence \((u_n)_{n\in {\mathbb {N}}} \subset L^2(V, \nu _G)\) with \(\Vert u_n \Vert _{L^2(V,\nu _G)} =1\) and \(\int _V u_n(x) d \nu _G(x) =0\), \(n\in {\mathbb {N}}\), such that

Hence,

Moreover, since \(\Vert u_n \Vert _{L^2(V,\nu _G)} =1\), we have that, up to a subsequence,

Now, since the graph is connected, we have that \(\lambda = \lambda _k\) for \(k= 1, \ldots , m\), thus

However, by the Dominated Convergence Theorem, we get that \(u_n \rightarrow \lambda \) in \(L^2(V,\nu _G )\) and, therefore, since \(\int _V u_n(x) d \nu _G(x) =0\), we have \(\lambda =0\), which is a contradiction with \(\Vert u_n \Vert _{L^2(V,\nu _G)} =1\). \(\square \)

As a consequence of this last result and Theorem 4.8, we get:

Theorem 4.11

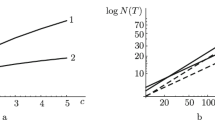

Let \(G = (V(G), E(G))\) be a finite weighted connected discrete graph. Then,

where \({\hat{t}}:=\frac{\left\| u_0-\nu (u_0)\right\| _{L^2(V(G),\nu _G)}}{\lambda ^{2}_{[V(G),d_G,m^G,\nu _G]}}\). Consequently,

5 m-Cheeger and m-calibrable sets

Let [X, d, m] be a metric random walk space with invariant and reversible measure \(\nu \). Assume, as before, that [X, d, m] is m-connected.

Given a set \(\Omega \subset X\) with \(0< \nu (\Omega ) < \nu (X)\), we define its m-Cheeger constant by

$$\begin{aligned} h_1^m(\Omega ) := \inf \left\{ \frac{P_m(E)}{\nu (E)} \, : \, E \subset \Omega , \ E \ \nu \hbox {-measurable with } \nu (E)>0 \right\} , \end{aligned}$$ (5.1)

where the notation \(h_1^m(\Omega )\) is chosen together with the one that we will use for the classical Cheeger constant (see (5.2)). In both of these, the subscript 1 is there to further distinguish them from the upcoming notation \(h_m(X)\) for the m-Cheeger constant of X (see (6.6)). Note that, by (2.1), we have that \(h_1^m(\Omega ) \le 1\).

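A \(\nu \)-measurable set \(E \subset \Omega \) achieving the infimum in (5.1) is said to be an m-Cheeger set of \(\Omega \). Furthermore, we say that \(\Omega \) is m-calibrable if it is an m-Cheeger set of itself, that is, if

$$\begin{aligned} h_1^m(\Omega ) = \frac{P_m(\Omega )}{\nu (\Omega )}. \end{aligned}$$

For ease of notation, we will denote

$$\begin{aligned} \lambda ^m_\Omega := \frac{P_m(\Omega )}{\nu (\Omega )} \end{aligned}$$

for any \(\nu \)-measurable set \(\Omega \subset X\) with \(0<\nu (\Omega )<\nu (X)\).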

Remark 5.1

- (1)

Let \([{\mathbb {R}}^N, d, m^J]\) be the metric random walk space given in Example 1.1 (1) with invariant and reversible measure \(\mathcal {L}^N\). Then, the concepts of m-Cheeger set and m-calibrable set coincide with the concepts of J-Cheeger set and J-calibrable set introduced in [34] (see also [35]).

- (2)

If \(G = (V(G), E(G))\) is a locally finite weighted discrete graph without loops (i.e., \(w_{xx} =0\) for all \(x \in V\)) and more than two vertices, then any subset consisting of two vertices is \(m^G\)-calibrable. Indeed, let \(\Omega = \{x, y \}\), then, by (2.1), we have

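$$\begin{aligned} \frac{P_{m^G}(\{x\})}{\nu _G(\{x\})}= 1 - \frac{1}{\nu _G(\{x\})}\int _{\{x\}}\int _{\{x\}} dm^G_z(y)\, d\nu _G(z) = 1 \ge \frac{P_{m^G}(\Omega )}{\nu _G(\Omega )}, \end{aligned}$$

where the double integral vanishes because the graph has no loops, and, similarly,

$$\begin{aligned} \frac{P_{m^G}(\{y\})}{\nu _G(\{y\})} = 1 \ge \frac{P_{m^G}(\Omega )}{\nu _G(\Omega )}. \end{aligned}$$

Since \(\{x\}\), \(\{y\}\) and \(\Omega \) are the only nonempty subsets of \(\Omega \), it follows that \(\Omega \) is \(m^G\)-calibrable.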
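The claim in Remark 5.1 (2) can be checked by brute force on a small graph. The following is a minimal sketch, not part of the original argument: the 4-vertex graph and its weights are hypothetical, and the helper names (`weight`, `volume`, `perimeter`, `is_calibrable`) are illustrative. It assumes the usual graph expressions \(\nu _G(E)=\sum _{x\in E}\sum _y w_{xy}\) and \(P_{m^G}(E)=\sum _{x\in E,\, y\notin E} w_{xy}\).

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical 4-vertex graph without loops; symmetric weights w_xy on edges {x, y}.
EDGES = {(0, 1): 3, (1, 2): 1, (2, 3): 2, (0, 3): 5, (0, 2): 1}
VERTICES = range(4)


def weight(x, y):
    """Symmetric weight w_xy (0 if x and y are not adjacent, in particular if x == y)."""
    return EDGES.get((x, y), EDGES.get((y, x), 0))


def volume(E):
    """Assumed form of nu_G(E): sum of the weighted degrees of the vertices of E."""
    return sum(weight(x, y) for x in E for y in VERTICES)


def perimeter(E):
    """Assumed form of P_{m^G}(E): total weight of the edges leaving E."""
    return sum(weight(x, y) for x in E for y in VERTICES if y not in E)


def ratio(E):
    return Fraction(perimeter(E), volume(E))


def is_calibrable(omega):
    """Brute force: omega minimises P/nu among all of its nonempty subsets."""
    subsets = [set(c) for r in range(1, len(omega) + 1)
               for c in combinations(sorted(omega), r)]
    return all(ratio(omega) <= ratio(E) for E in subsets)


# Every two-vertex subset should pass the test.
pairs_calibrable = all(is_calibrable({x, y}) for x, y in combinations(VERTICES, 2))
print(pairs_calibrable)
```

Exact rational arithmetic (`Fraction`) is used so that the comparison of ratios is not affected by rounding.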

In [34] it is proved that, for the metric random walk space \([{\mathbb {R}}^N, d, m^J]\), each ball is a J-calibrable set. In the next example we will see that this result is not true in general.

Example 5.2

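Let G be the finite weighted discrete graph with vertex set \(V(G)=\{ x_1,x_2,\ldots , x_7 \} \) and the following weights: \( w_{x_1,x_2}=2 , \ w_{x_2,x_3}=1 , \ w_{x_3,x_4}=2 , \ w_{x_4,x_5}=2 , \ w_{x_5,x_6}=1 , \ w_{x_6,x_7}=2 \) and \(w_{x_i,x_j}=0\) otherwise. Then, if \(E_1=B(x_4,\frac{5}{2})=\{ x_2, x_3, \ldots , x_6 \}\), by (6.7) we have

$$\begin{aligned} \frac{P_{m^G}(E_1)}{\nu _G(E_1)} = \frac{w_{x_1,x_2}+w_{x_6,x_7}}{3+3+4+3+3} = \frac{4}{16} = \frac{1}{4}. \end{aligned}$$

But, taking \(E_2=B(x_4,\frac{3}{2})=\{ x_3, x_4, x_5 \}\subset E_1\), we have

$$\begin{aligned} \frac{P_{m^G}(E_2)}{\nu _G(E_2)} = \frac{w_{x_2,x_3}+w_{x_5,x_6}}{3+4+3} = \frac{2}{10} = \frac{1}{5} < \frac{1}{4}. \end{aligned}$$

Consequently, the ball \(B(x_4,\frac{5}{2})\) is not m-calibrable.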
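The ratios in Example 5.2 can be reproduced with a short computation. This is a sketch under stated assumptions rather than the authors' procedure: it assumes \(\nu _G(E)\) is the sum of the weighted degrees of the vertices of E and \(P_{m^G}(E)\) is the total weight of the edges leaving E, and the helper names are illustrative. It also enumerates all nonempty subsets of \(E_1\) to find a minimiser of the ratio.

```python
from fractions import Fraction
from itertools import combinations

# Weights of Example 5.2 on the path x_1 - x_2 - ... - x_7 (vertices indexed 1..7).
EDGES = {(1, 2): 2, (2, 3): 1, (3, 4): 2, (4, 5): 2, (5, 6): 1, (6, 7): 2}
VERTICES = range(1, 8)


def weight(x, y):
    """Symmetric weight w_xy (0 if x and y are not adjacent)."""
    return EDGES.get((x, y), EDGES.get((y, x), 0))


def volume(E):
    """Assumed form of nu_G(E): sum of weighted degrees over E."""
    return sum(weight(x, y) for x in E for y in VERTICES)


def perimeter(E):
    """Assumed form of P_{m^G}(E): total weight of the edges leaving E."""
    return sum(weight(x, y) for x in E for y in VERTICES if y not in E)


def ratio(E):
    return Fraction(perimeter(E), volume(E))


def cheeger(omega):
    """Brute-force minimiser of P/nu over the nonempty subsets of omega."""
    subsets = [set(c) for r in range(1, len(omega) + 1)
               for c in combinations(sorted(omega), r)]
    best = min(subsets, key=ratio)
    return ratio(best), best


E1 = {2, 3, 4, 5, 6}  # ball B(x_4, 5/2)
E2 = {3, 4, 5}        # ball B(x_4, 3/2)

print(ratio(E1))  # 1/4
print(ratio(E2))  # 1/5
cheeger_constant, cheeger_set = cheeger(E1)
print(cheeger_constant, sorted(cheeger_set))
```

The exhaustive search is exponential in the size of the set, so it is only meant for toy examples of this kind.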

In the next example we will see that there exist metric random walk spaces with sets that do not contain m-Cheeger sets.

Example 5.3

Consider the graph of Example 6.21, that is, \(V(G)=\{x_0,x_1,\ldots ,x_n, \ldots \}\) with the following weights:

and \(w_{x_i,x_j}=0\) otherwise. If \(\Omega :=\{ x_1, x_2, x_3, \ldots \}\), then \(\frac{P_{m^G}(D)}{\nu _G(D)} > 0\) for every \(D\subset \Omega \) with \(\nu _G(D)>0\) but, working as in Example 6.21, we get \(h_1^m(\Omega )=0\). Therefore, \(\Omega \) has no m-Cheeger set.

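It is well known (see [25]) that the classical Cheeger constant

$$\begin{aligned} h_1(\Omega ) := \inf \left\{ \frac{\hbox {Per}(E)}{\vert E \vert } \, : \, E \subset \Omega , \ \vert E \vert >0 \right\} , \end{aligned}$$ (5.2)

for a bounded smooth domain \(\Omega \), is an optimal Poincaré constant, namely, it coincides with the first eigenvalue of the 1-Laplacian: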

In order to get a nonlocal version of this result, we introduce the following constant. For \(\Omega \subset X\) with \(0<\nu (\Omega )< \nu (X)\), we define

Theorem 5.4

Let \(\Omega \subset X\) with \(0< \nu (\Omega ) < \nu (X)\). Then,

$$\begin{aligned} h_1^m(\Omega ) = \Lambda _1^m(\Omega ). \end{aligned}$$

Proof

Given a \(\nu \)-measurable subset \(E \subset \Omega \) with \(\nu (E )> 0\), we have

Therefore, \(\Lambda _1^m(\Omega ) \le h_1^m(\Omega )\). For the opposite inequality we will follow an idea used in [25]. Given \(u \in L^1(X,\nu )\), with \(u= 0\) in \(X {\setminus } \Omega \), \(u \ge 0\) and \(u\not \equiv 0\), we have

where the first equality follows by the coarea formula (2.6) and the last one by Cavalieri’s Principle. Taking the infimum over u in the above expression we get \(\Lambda _1^m(\Omega ) \ge h_1^m(\Omega )\). \(\square \)
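The two tools used in the second half of the proof, the coarea formula and Cavalieri's principle, can be checked numerically on a finite graph. The sketch below is only an illustration under assumptions: it reuses the weights of Example 5.2, takes \(\Omega = \{x_2,\ldots ,x_6\}\), and assumes that on a graph the total variation reads \(\frac{1}{2}\sum _{x,y} w_{xy}\vert u(y)-u(x)\vert \). The function `u` and all helper names are hypothetical choices.

```python
from fractions import Fraction
from itertools import combinations

# Weights of Example 5.2 (vertices indexed 1..7); Omega = {x_2, ..., x_6}.
EDGES = {(1, 2): 2, (2, 3): 1, (3, 4): 2, (4, 5): 2, (5, 6): 1, (6, 7): 2}
VERTICES = range(1, 8)
OMEGA = {2, 3, 4, 5, 6}


def weight(x, y):
    return EDGES.get((x, y), EDGES.get((y, x), 0))


def volume(E):
    """Assumed form of nu_G(E): sum of weighted degrees over E."""
    return sum(weight(x, y) for x in E for y in VERTICES)


def perimeter(E):
    """Assumed form of P_{m^G}(E): total weight of the edges leaving E."""
    return sum(weight(x, y) for x in E for y in VERTICES if y not in E)


def total_variation(u):
    """Assumed graph form of TV: (1/2) * sum over ordered pairs of w_xy |u(y) - u(x)|."""
    total = sum(weight(x, y) * abs(u[y] - u[x]) for x in VERTICES for y in VERTICES)
    return Fraction(total, 2)


def layer_cake(u, functional):
    """Integral over t > 0 of functional({u > t}) for a finitely valued u >= 0."""
    levels = sorted({0, *u.values()})
    return sum((hi - lo) * functional({x for x in VERTICES if u[x] > lo})
               for lo, hi in zip(levels, levels[1:]))


# Hypothetical nonnegative function vanishing outside Omega.
u = {1: 0, 2: 1, 3: 3, 4: 3, 5: 2, 6: 1, 7: 0}

tv = total_variation(u)
l1_norm = sum(u[x] * volume({x}) for x in VERTICES)
h = min(Fraction(perimeter(set(c)), volume(set(c)))
        for r in range(1, len(OMEGA) + 1)
        for c in combinations(sorted(OMEGA), r))

print(tv == layer_cake(u, perimeter))   # coarea formula
print(l1_norm == layer_cake(u, volume))  # Cavalieri's principle
print(tv >= h * l1_norm)                 # TV(u) >= h_1^m(Omega) * ||u||_1
```

All three comparisons are exact because the weights and the values of `u` are integers.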

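Let us recall that, in the local case, a set \(\Omega \subset {\mathbb {R}}^N\) is called calibrable if

$$\begin{aligned} \frac{\hbox {Per}(\Omega )}{\vert \Omega \vert } = \inf \left\{ \frac{\hbox {Per}(E)}{\vert E \vert } \, : \, E \subset \Omega , \ E \hbox { with finite perimeter}, \ \vert E \vert >0 \right\} . \end{aligned}$$

The following characterization of convex calibrable sets is proved in [1].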

Theorem 5.5

[1] Given a bounded convex set \(\Omega \subset {\mathbb {R}}^N\) of class \(C^{1,1}\), the following assertions are equivalent:

- (a)

\(\Omega \) is calibrable.

- (b)

\(\upchi _\Omega \) satisfies \(- \Delta _1 \upchi _\Omega = \frac{\text{ Per }(\Omega )}{\vert \Omega \vert } \upchi _\Omega \), where \(\Delta _1 u:= \mathrm{div} \left( \frac{Du}{\vert Du \vert }\right) \).

- (c)

\(\displaystyle (N-1) \underset{x \in \partial \Omega }{\mathrm{ess\, sup}} H_{\partial \Omega } (x) \le \frac{\hbox {Per}(\Omega )}{\vert \Omega \vert }.\)

Remark 5.6

- (1)

Let \(\Omega \subset X\) be a \(\nu \)-measurable set with \(0<\nu (\Omega )<\nu (X)\) and assume that there exist a constant \(\lambda >0\) and a measurable function \(\tau \) such that \(\tau (x)=1\) for \(x\in \Omega \) and

$$\begin{aligned} - \lambda \tau \in \Delta _1^m \upchi _\Omega \ \ \hbox {on} \ X. \end{aligned}$$

Then, by Theorem 3.3, there exists \(\mathbf{g}\in L^\infty (X\times X, \nu \otimes m_x)\) antisymmetric with \(\Vert \mathbf{g} \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) satisfying

$$\begin{aligned} -\int _{X}\mathbf{g}(x,y)\,dm_x(y)= \lambda \tau (x) \quad \hbox {for }\nu \text{-a.e. } x\in X \end{aligned}$$

and

$$\begin{aligned} -\int _{X} \int _{X}\mathbf{g}(x,y)dm_x(y)\,\upchi _\Omega (x)d\nu (x)=\mathcal {F}_m(\upchi _\Omega ) = P_m(\Omega ). \end{aligned}$$

Then,

$$\begin{aligned} \displaystyle \lambda \nu (\Omega )= & {} \int _{X} \lambda \tau (x)\upchi _\Omega (x) d\nu (x) \\ \displaystyle= & {} -\int _{X} \left( \int _{X}\mathbf{g}(x,y)\,dm_x(y) \right) \upchi _\Omega (x) d\nu (x) \\ \displaystyle= & {} P_m(\Omega ) \end{aligned}$$

and, consequently,

$$\begin{aligned} \lambda = \frac{P_m(\Omega )}{\nu (\Omega )}=:\lambda _\Omega ^m. \end{aligned}$$

- (2)

Let \(\Omega \subset X\) be a \(\nu \)-measurable set with \(0<\nu (\Omega )<\nu (X)\), and \(\tau \) a \(\nu \)-measurable function with \(\tau (x)=1\) for \(x\in \Omega \). Then

$$\begin{aligned} - \lambda ^m_\Omega \tau \in \Delta _1^m \upchi _\Omega \, \quad \hbox {in} \ X \ \Longleftrightarrow \ - \lambda ^m_\Omega \tau \in \Delta _1^m 0 \, \quad \hbox {in} \ X. \end{aligned}$$ (5.3)

Indeed, the left to right implication follows from the fact that

$$\begin{aligned} \partial \mathcal {F}_m(u)\subset \partial \mathcal {F}_m(0), \end{aligned}$$

and for the converse implication, we have that there exists \(\mathbf{g}\in L^\infty (X \times X,\nu \otimes m_x)\), \(\mathbf{g}(x,y) = -\mathbf{g}(y,x)\) for almost all \((x,y) \in X \times X\), \(\Vert \mathbf{g}\Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\), satisfying

$$\begin{aligned} - \lambda ^m_\Omega \tau (x)=\int _{X} \mathbf{g}(x,y)\,dm_x(y) \quad \hbox {for }\nu \text{-a.e. } x\in X. \end{aligned}$$

Now, multiplying by \(\upchi _\Omega \), integrating over X and integrating by parts we get

$$\begin{aligned} \displaystyle \lambda ^m_\Omega \nu ( \Omega )= & {} \lambda ^m_\Omega \int _{X} \tau (x)\upchi _\Omega (x) d\nu (x) = - \int _{X} \int _{X} \mathbf{g}(x,y) \upchi _\Omega (x) dm_x(y)d\nu (x) \\ \displaystyle= & {} \frac{1}{2} \int _{X} \int _{X} \mathbf{g}(x,y) (\upchi _\Omega (y) - \upchi _\Omega (x) ) dm_x(y)d\nu (x) \\ \displaystyle\le & {} \frac{1}{2} \int _{X} \int _{X} \left| \upchi _\Omega (y) - \upchi _\Omega (x)\right| dm_x(y)d\nu (x) = P_m(\Omega ) . \end{aligned}$$

Then, since \(P_m(\Omega )=\lambda ^m_\Omega \nu ( \Omega )\), the previous inequality is, in fact, an equality and, therefore, we get

$$\begin{aligned} \mathbf{g}(x,y) \in \hbox {sign}(\upchi _\Omega (y) - \upchi _\Omega (x) ) \quad \hbox {for }(\nu \otimes m_x)\text{-a.e. } (x,y) \in X \times X, \end{aligned}$$

and, consequently,

$$\begin{aligned} - \lambda ^m_\Omega \tau \in \Delta ^m_1 \upchi _\Omega \quad \hbox {in} \ X. \end{aligned}$$

The next result is the nonlocal version of the fact that (a) is equivalent to (b) in Theorem 5.5.

Theorem 5.7

Let \(\Omega \subset X\) be a \(\nu \)-measurable set with \(0<\nu (\Omega )<\nu (X)\). Then, the following assertions are equivalent:

- (i)

\(\Omega \) is m-calibrable,

- (ii)

there exists a \(\nu \)-measurable function \(\tau \) equal to 1 in \(\Omega \) such that

$$\begin{aligned} - \lambda ^m_\Omega \tau \in \Delta _1^m \upchi _\Omega \, \quad \hbox {in} \ X, \end{aligned}$$ (5.4)

- (iii)

$$\begin{aligned} - \lambda ^m_\Omega \tau ^* \in \Delta _1^m \upchi _\Omega \, \quad \hbox {in} \ X, \end{aligned}$$

for

$$\begin{aligned} \tau ^*(x)=\left\{ \begin{array}{ll} 1 &{}\quad \hbox {if } x\in \Omega ,\\ \displaystyle - \frac{1}{\lambda _\Omega ^m} m_x(\Omega )&{}\quad \hbox {if } x\in X{\setminus }\Omega . \end{array} \right. \end{aligned}$$

Proof

Observe that, since we are assuming that the metric random walk space is m-connected, we have \(P_m(\Omega )>0\) and, therefore, \(\lambda _\Omega ^m>0\).

\((iii)\Rightarrow (ii)\) is trivial.

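\((ii)\Rightarrow (i)\): Suppose that there exists a \(\nu \)-measurable function \(\tau \) equal to 1 in \(\Omega \) satisfying (5.4). Hence, there exists \(\mathbf{g}\in L^\infty (X\times X, \nu \otimes m_x)\) antisymmetric with \(\Vert \mathbf{g} \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) satisfying

$$\begin{aligned} -\int _{X}\mathbf{g}(x,y)\,dm_x(y)= \lambda ^m_\Omega \tau (x) \quad \hbox {for }\nu \text{-a.e. } x\in X \end{aligned}$$

and

$$\begin{aligned} -\int _{X} \int _{X}\mathbf{g}(x,y)dm_x(y)\,\upchi _\Omega (x)d\nu (x)= P_m(\Omega ). \end{aligned}$$

Then, for \(F\subset \Omega \) with \(\nu (F) >0\), since \(\mathbf{g}\) is antisymmetric, by using the reversibility of \(\nu \) with respect to m, we have

$$\begin{aligned} \displaystyle \lambda ^m_\Omega \nu (F)= & {} \lambda ^m_\Omega \int _{X} \tau (x)\upchi _F (x) d\nu (x) = - \int _{X} \int _{X} \mathbf{g}(x,y) \upchi _F (x) dm_x(y)d\nu (x) \\ \displaystyle= & {} \frac{1}{2} \int _{X} \int _{X} \mathbf{g}(x,y) (\upchi _F (y) - \upchi _F (x) ) dm_x(y)d\nu (x) \le P_m(F). \end{aligned}$$

Therefore, \(\lambda ^m_\Omega \le \frac{P_m(F)}{\nu (F)}\) for every such F, so that \(h_1^m(\Omega ) = \lambda ^m_\Omega \) and, consequently, \(\Omega \) is m-calibrable.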

\((i)\Rightarrow (iii)\): Suppose that \(\Omega \) is m-calibrable. Let

We claim that \(-\lambda ^m_\Omega \tau ^* \in \Delta _1^m0\), that is,

Take \( w \in L^2(X,\nu )\) with \(\mathcal {F}_m(w)<+\infty \). Since

and

we have

Now, using that \(\tau ^*=1\) in \(\Omega \) and \(\Omega \) is m-calibrable we have that

By Proposition 2.2 and the coarea formula given in Theorem 2.7 we get

Hence, if we prove that

we get

which proves (5.5). Now, since

and \(\tau ^*(x)=- \frac{1}{\lambda _\Omega ^m} m_x(\Omega )\) for \(x\in X{\setminus }\Omega \), we have

Then, by (5.3), we have that

and this concludes the proof. \(\square \)
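Condition (iii) of Theorem 5.7 can be verified by hand on a very small graph. The following sketch is a hypothetical illustration, not taken from the paper: it uses the complete graph on three vertices with unit weights, \(\Omega = \{a,b\}\), and the candidate field \(\mathbf{g}(x,y)=\upchi _\Omega (y)-\upchi _\Omega (x)\). This particular choice of \(\mathbf{g}\) is an assumption that works here because of the symmetry of the example; in general a nonzero \(\mathbf{g}\) inside \(\Omega \times \Omega \) may be needed. The code checks the two identities that characterise \(-\lambda ^m_\Omega \tau ^* \in \Delta _1^m \upchi _\Omega \) as in Remark 5.6 (1).

```python
from fractions import Fraction

# Hypothetical example: complete graph K_3 with unit weights, Omega = {a, b}.
VERTICES = ["a", "b", "c"]
OMEGA = {"a", "b"}


def weight(x, y):
    return 0 if x == y else 1


def degree(x):
    return sum(weight(x, y) for y in VERTICES)


def m(x, y):
    """Jump probability m_x({y}) = w_xy / d_x."""
    return Fraction(weight(x, y), degree(x))


perimeter = sum(weight(x, y) for x in OMEGA for y in VERTICES if y not in OMEGA)
volume = sum(degree(x) for x in OMEGA)
lam = Fraction(perimeter, volume)  # lambda^m_Omega = P(Omega) / nu(Omega)


def tau_star(x):
    """tau^* from Theorem 5.7 (iii)."""
    if x in OMEGA:
        return Fraction(1)
    return -sum(m(x, y) for y in OMEGA) / lam


def g(x, y):
    """Candidate antisymmetric field with |g| <= 1: -1 out of Omega, +1 into Omega, 0 otherwise."""
    return (y in OMEGA) - (x in OMEGA)


# First identity: -sum_y g(x, y) m_x(y) = lambda * tau^*(x) at every vertex.
divergence_ok = all(
    -sum(g(x, y) * m(x, y) for y in VERTICES) == lam * tau_star(x)
    for x in VERTICES
)

# Second identity: -sum_{x in Omega} d_x sum_y g(x, y) m_x(y) = P(Omega).
energy = -sum(degree(x) * g(x, y) * m(x, y) for x in OMEGA for y in VERTICES)
energy_ok = energy == perimeter

print(lam, tau_star("c"), divergence_ok, energy_ok)
```

By Theorem 5.7, passing both checks is consistent with \(\Omega \) being m-calibrable, in agreement with Remark 5.1 (2) for two-vertex sets.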

Even though, in principle, the m-calibrability of a set is a nonlocal concept, the next result shows that it depends only on the set itself.

Theorem 5.8

Let \(\Omega \subset X\) be a \(\nu \)-measurable set with \(0<\nu (\Omega )<\nu (X)\). Then, \(\Omega \) is m-calibrable if, and only if, there exists an antisymmetric function \(\mathbf{g}\) in \(\Omega \times \Omega \) such that

and

Observe that, on account of (2.1), (5.7) is equivalent to

Proof

By Theorem 5.7, we have that \(\Omega \) is m-calibrable if, and only if, there exists \(\mathbf{g}\in L^\infty (X\times X, \nu \otimes m_x)\) antisymmetric, \(\Vert \mathbf{g} \Vert _{L^\infty (X \times X,\nu \otimes m_x)} \le 1\) with \(\mathbf{g}(x,y) \in \mathrm{sign}(\upchi _\Omega (y) - \upchi _\Omega (x))\) for \(\nu \otimes m_x\)-a.e. \((x,y) \in X\times X\), satisfying

and

Now, having in mind that \(\mathbf{g}(x,y) = -1\) if \(x \in \Omega \) and \(y \in X {\setminus } \Omega \), we have that, for \(x\in \Omega \),

Bringing together (5.9) and these equalities we get (5.6) and (5.7).

Let us now suppose that we have an antisymmetric function \(\mathbf{g}\) in \(\Omega \times \Omega \) satisfying (5.6) and (5.7). To check that \(\Omega \) is m-calibrable we need to find \({\tilde{\mathbf{g}}}(x,y)\in \hbox {sign}\left( \upchi _\Omega (y)-\upchi _\Omega (x)\right) \) antisymmetric such that

which is equivalent to

since, necessarily, \({\tilde{\mathbf{g}}}(x,y)=-1\) for \(x\in \Omega \) and \(y\in X{\setminus }\Omega \), and \({\tilde{\mathbf{g}}}(x,y)=1\) for \(x\in X{\setminus }\Omega \) and \(y\in \Omega \). Now, the second equality in this system is satisfied if we take \({\tilde{\mathbf{g}}}(x,y)=0\) for \(x,y\in X{\setminus }\Omega \), and the first one is equivalent to (5.8) if we take \({\tilde{\mathbf{g}}}(x,y)=\mathbf{g}(x,y)\) for \(x,y\in \Omega \).

\(\square \)

Set

where

Corollary 5.9

A \(\nu \)-measurable set \(\Omega \subset X\) is m-calibrable if, and only if, it is \(m^{\Omega _m}\)-calibrable as a subset of \([\Omega _m,d,m^{\Omega _m}]\) with reversible measure \(\nu |\!\_\Omega _m\) (see Example 1.1 (5)).

Remark 5.10

- (1)

Let \(\Omega \subset X\) be a \(\nu \)-measurable set with \(0<\nu (\Omega )<\nu (X)\). Observe that, as we have proved,