Abstract

Many engineering optimization problems are multi-objective and multidisciplinary in nature and involve a large number of decision variables. Furthermore, Pareto dominance loses its effectiveness in such situations, so developing a robust optimization algorithm becomes a real challenge. This paper proposes a multi-objective orthogonal opposition-based crow search algorithm (M2O-CSA) for solving large-scale multi-objective optimization problems (LSMOPs). In the M2O-CSA, a multi-orthogonal opposition strategy is employed to mitigate the conflict between the convergence and the distribution of solutions. First, two individuals are randomly chosen to undergo the crossover stage, and an orthogonal array is then used to obtain nine individuals. These individuals are then used in the opposition stage to improve the diversity of solutions. The effectiveness of the proposed M2O-CSA is investigated on multi-objective optimization problems (MOPs) of different dimensions, whose Pareto fronts have various characteristics such as convex, non-convex and discrete shapes. It is also applied to multi-objective design applications with distinctive features, namely four bar truss (FBT) design, welded beam (WB) design, disk brake (DB) design, and speed reducer (SR) design. In this context, a new decision-making tool based on the multi-objective optimization on the basis of ratio analysis (MOORA) technique is employed to help the designer extract the operating point, that is, the best compromise or satisfactory solution, for the candidate engineering design. Simulation results affirm that the proposed M2O-CSA works efficiently and effectively.

1 Introduction

In almost all real design applications, the designer faces the problem of achieving several design targets. These targets are often conflicting and incommensurable and need to be optimized simultaneously. The process of optimizing multiple targets (objectives) is called a multi-objective optimization problem (MOP). In this regard, there is no single optimal solution but rather a set of solutions that represent the best trade-offs among the objective functions. This set is known as the set of Pareto-optimal, non-inferior, non-dominated or efficient solutions [1]. According to Pareto optimality, a solution is Pareto-optimal if no objective can be improved without deteriorating at least one other objective.

The traditional approaches for solving MOPs are classified into three categories according to when the expert or decision maker (DM) provides preferences, namely a priori, a posteriori (or generation), and progressive approaches. In the a priori approach (decide ⇒ search), the DM sets his/her preferences before the solution process (e.g., a weight for each objective function that reflects its significance). The MOP is then converted into a single-objective problem (SOP) using the set of weights and solved with a single-objective optimizer. This approach has several disadvantages: the DM's preferences are difficult to quantify as weights; each set of weights yields only one solution, so multiple runs are required to obtain the Pareto-optimal set (POS); and such methods fail to deal with non-convex problems. The prominent methods of this category are the weighted sum method and the ε-constraint method [1,2,3,4]. In the a posteriori approach (search ⇒ decide), the DM is involved only at the end, to choose one of the obtained solutions according to his/her preferences. Although such approaches require a higher computational cost, their advantage is that the Pareto-optimal set can be obtained in a single run. In the progressive approach (decide ↔ search), the DM interactively sets his/her preferences during the optimization process until a satisfying solution is obtained or no further improvement is possible [5]. The conventional approaches suffer from several drawbacks: they can stagnate in local optima, they rely on the initial guess, and they are derivative-based. Thus, such techniques are not suitable for solving a large variety of optimization problems [6].

In 1985, Schaffer [7] proposed a revolutionary idea: handling multi-objective optimization with an evolutionary optimization technique. The advantages of this technique are that it can avoid local optima and that it is gradient-free, which makes it readily applicable to real problems. Since then, a significant number of studies have followed, such as the vector evaluated genetic algorithm (VEGA) [8], non-dominated sorting genetic algorithm (NSGA) [9], niched Pareto genetic algorithm (NPGA) [10], multi-objective genetic algorithm (MOGA) [11], and strength Pareto evolutionary algorithm (SPEA) [12]. In addition, several recent population-based search algorithms for multi-objective optimization [13,14,15,16,17] have been proposed in the literature and applied to a variety of MOPs, among them the multi-objective bee algorithm [18], multi-objective salp swarm algorithm [19], multi-objective ant lion optimizer [20], and multi-objective grey wolf optimizer (MOGWO) [21].

Apart from the previously developed optimization algorithms, the literature has become rich with recent methodologies that address different aspects of multi-objective optimization [22,23,24,25,26,27,28,29,30,31]. Tian et al. [22] developed an enhanced multi-objective evolutionary algorithm (MOEA) based on a distance indicator to address versatility. Rong et al. [23] solved dynamic MOPs through a multidirectional prediction strategy, in which multiple directions are induced by representative solutions acquired from previous environments to predict the new set of Pareto-optimal solutions. Zhang et al. [24] proposed a novel multi-objective particle swarm algorithm that uses a competitive mechanism as a learning strategy to guide the search of particles and enhance robustness while handling MOPs. MOPs that contain more than three objectives are usually denoted as many-objective optimization problems (Ma-OPs). In this sense, Liu et al. [25] proposed a many-objective EA based on a one-by-one selection mechanism to maintain the balance between the convergence and diversity of solutions while solving Ma-OPs. Additionally, Liu et al. [26] introduced a reference point-based EA to maintain the spread and uniform distribution of solutions while tackling Ma-OPs. Gong Liu et al. [27] presented a set-based GA to solve interval Ma-OPs, in which an evolutionary scheme and set-based Pareto dominance concepts are introduced to improve performance. Yue et al. [28] presented a novel multi-objective particle swarm algorithm based on a ring topology and the crowding distance concept. The crowding distance aims to improve the distribution of solutions in both decision and objective spaces, while the ring topology helps in generating stable niches. Gu et al. [29] introduced a self-organizing multi-objective PSO algorithm to deal with multimodal MOPs. This approach maps the solutions of the population to a latent space in which a neighboring relation is built to emphasize the distribution of solutions. Adel et al. [30] proposed a new multi-objective whale optimization algorithm (WOA) for MOPs. It uses an external archive to store the non-dominated solutions and to guide the population toward promising areas of the search space. In addition, a crowding distance strategy is introduced to maintain the diversity of solutions. Wenjun et al. [31] developed an effective ensemble algorithm for solving MOPs. This algorithm embeds various evolutionary operators and different selection criteria, which are applied to multiple populations to achieve a better distribution of solutions. Additionally, there is increasing interest in mathematical MOPs solved with recent MOEAs, as presented in [32, 33].

Despite the notable number of optimization algorithms proposed in this field, an important question arises: why do we need more optimization techniques? The answer lies in the No Free Lunch (NFL) theorem [34], which establishes that no optimization algorithm is best for all optimization problems; when an algorithm succeeds in solving a specific set of problems, this does not mean that it succeeds on all natures and types of optimization problems. In other words, all optimization techniques perform equally on average when all optimization problems are considered, despite superior performance on a subset of them. The NFL theorem thus motivates researchers to develop new algorithms or to enhance/modify current ones to deal with different kinds of problems, whether for single-objective optimization (SO) or multi-objective optimization (MOO). In an effort to maintain the diversity and convergence of the Pareto front solutions for multiple objectives, this work proposes a modified version of the recently proposed crow search algorithm (CSA) [35]. The proposed algorithm is named the multi-objective orthogonal opposition-based crow search algorithm (M2O-CSA) and targets large-scale multi-objective optimization problems (LSMOPs). The M2O-CSA comprises a multi-orthogonal-opposition strategy to maintain the convergence and distribution of solutions. It works by applying a crossover between two randomly chosen individuals to generate a third one. These three individuals are arranged as orthogonal arrays, and their opposition arrays are also obtained, where the former enhance convergence and the latter promote the diversity of solutions. The proposed M2O-CSA is validated on multi-objective optimization problems (MOPs) of different dimensions. Multi-objective design applications are also solved, namely four bar truss (FBT) design, welded beam (WB) design, disk brake (DB) design, and speed reducer (SR) design. Simulation results affirm that the proposed M2O-CSA works efficiently and effectively.

The contributions of this research are as follows:

1. A multi-objective orthogonal opposition-based crow search algorithm (M2O-CSA) is proposed for large-scale multi-objective optimization.

2. The crows’ memory is updated according to the domination concept by using a parallel-orthogonal-opposition (P2O) strategy.

3. An archive is integrated into the M2O-CSA to maintain Pareto (non-dominated) solutions.

4. A grid mechanism based on P2O is employed to improve the solutions stored in the archive.

5. The effectiveness of the M2O-CSA is validated by comprehensive simulations on MOPs of different dimensions and on real engineering applications.

6. A new strategy based on the MOORA technique is introduced to extract the satisfactory or compromise solution, which helps designers to make prudent design choices.

The novelty and strength of the M2O-CSA lie in the amalgamation of the orthogonal array-based crossover scheme and the opposition array-based strategy, which evolves dynamically during the optimization process and thereby maintains the balance between the convergence and the distribution of solutions. In addition, the MOORA technique is suggested for automatically determining the best compromise solution from the Pareto-optimal solutions, which in practice helps an inexperienced designer to meet diverse needs under different operating conditions and to reach a more realistic decision. Besides, to the best of our knowledge, no previous work in the literature has proposed the M2O-CSA for multi-objective optimization applications or integrated the MOORA technique for solving MOPs.

Following the introduction in Sect. 1, the rest of the paper is organized as follows. Section 2 provides the preliminaries of multi-objective optimization together with some performance indices. Section 3 briefly reviews the basics of CSA and then presents the M2O-CSA algorithm. Section 4 presents and discusses the simulation results. Lastly, Sect. 5 concludes the present work and gives some recommendations for future work.

2 Preliminaries

This section gives the fundamental concepts of multi-objective optimization and performance assessments of the optimization techniques.

2.1 Statements of the multi-objective optimization

A multi-objective optimization problem consists of optimizing more than one objective function. That is, a set of conflicting objective functions is optimized simultaneously in the presence of equality and inequality constraints. It can be formulated as a minimization problem as follows:

where \( K \), \( m \), \( p \), and \( n \) represent the numbers of objective functions, inequality constraints, equality constraints, and variables, respectively; \( f_{k} \) is the \( k{\text{th}} \) objective function in the vector \( F \), \( g_{l} \) denotes the \( l{\text{th}} \) inequality constraint, \( h_{j} \) defines the \( j{\text{th}} \) equality constraint, and \( [x_{i}^{L} ,x_{i}^{U} ] \) denotes the interval between the lower and upper bounds of the \( i{\text{th}} \) variable.

It is worth mentioning that for a single-objective function, solutions can be compared easily because there is only one criterion. For a minimization problem, the solution \( {\mathbf{u}} \) is better than \( {\mathbf{v}} \) if and only if \( f({\mathbf{u}}) < f({\mathbf{v}}) \). However, the solutions of a multi-objective problem cannot be compared directly, because multiple criteria judge each solution. In this case, the concepts of Vilfredo Pareto [1] are employed: a solution dominates (is better than) another solution if and only if it is better than or equal to it on all objectives and strictly better on at least one objective. Without loss of generality, the definitions regarding Pareto dominance for a minimization problem are as follows [1]:

Definition 1

Pareto dominance. Assume that there are two vectors \( {\mathbf{x}}_{{\mathbf{1}}} \) and \( {\mathbf{x}}_{{\mathbf{2}}} \). Vector \( {\mathbf{x}}_{{\mathbf{1}}} \) dominates vector \( {\mathbf{x}}_{{\mathbf{2}}} \) (denoted as \( {\mathbf{x}}_{{\mathbf{1}}} \succ {\mathbf{x}}_{{\mathbf{2}}} \)) iff:

Figure 1 illustrates Definition 1 with three solutions a, b, and c. Solution c has the largest values of both \( f_{1} \) and \( f_{2} \), so it is dominated by solutions a and b. In contrast, solutions a and b are non-dominated, as neither of them dominates the other.
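Definition 1 translates directly into a component-wise check. The following Python sketch is illustrative only: the function name `dominates` and the objective values chosen for a, b, and c are assumptions made for this example, not data read from Fig. 1.

```python
from typing import Sequence


def dominates(u: Sequence[float], v: Sequence[float]) -> bool:
    """Return True if objective vector u Pareto-dominates v (minimization)."""
    no_worse = all(a <= b for a, b in zip(u, v))        # u is never worse than v
    strictly_better = any(a < b for a, b in zip(u, v))  # and better at least once
    return no_worse and strictly_better


# Hypothetical (f1, f2) values arranged like the three solutions described above.
a, b, c = (1.0, 4.0), (3.0, 2.0), (4.0, 5.0)
print(dominates(a, c), dominates(b, c))  # a and b each dominate c
print(dominates(a, b), dominates(b, a))  # a and b do not dominate each other
```

Note that a vector never dominates itself, because the strict improvement in at least one objective is missing.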

Definition 2

Pareto optimality. The solution \( {\mathbf{x}}_{{\mathbf{1}}} \in X \) is defined as Pareto-optimal iff:

where \( X \) is the feasible region, which is defined as

It is worth mentioning that the set that includes all non-dominated solutions of a problem is called the Pareto-optimal set, which is defined as follows:

Definition 3

Pareto-optimal set. The set of all Pareto-optimal solutions is called the Pareto set:

Definition 4

Pareto-optimal front. The set containing the objective function values of the solutions in the Pareto set is called the Pareto-optimal front:

The Pareto-optimal set and the Pareto-optimal front are illustrated for a minimization problem in Fig. 2.
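The distinction between the Pareto-optimal set (decision space) and the Pareto-optimal front (objective space) can be sketched on a small example. The code below is an illustration under stated assumptions: it uses Schaffer's one-variable bi-objective function \( f_{1} = x^{2} \), \( f_{2} = (x - 2)^{2} \), which is not one of the problems studied in this paper, and it filters a finite sample of candidate points rather than the full feasible region.

```python
def dominates(u, v):
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))


def objectives(x):
    """Schaffer's bi-objective test function (illustrative choice)."""
    return (x ** 2, (x - 2.0) ** 2)


# Sample the decision variable on a coarse grid from -2.0 to 4.0.
xs = [0.5 * i for i in range(-4, 9)]
F = [objectives(x) for x in xs]

# Pareto set: decision values whose objective vector is dominated by no other sample.
pareto_set = [x for x, fx in zip(xs, F) if not any(dominates(fy, fx) for fy in F)]
# Pareto front: the image of the Pareto set in objective space.
pareto_front = [objectives(x) for x in pareto_set]

print(pareto_set)
print(pareto_front)
```

On this grid the non-dominated decision values are those between 0 and 2, where improving one objective necessarily worsens the other.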

2.2 Performance indices

To compare metaheuristic algorithms fairly and quantitatively, several widely used performance indices are employed. These indices are defined in detail in the following subsections.

2.2.1 Generational distance index

The generational distance (GD) index was proposed by Veldhuizen and Lamont [36] to quantify the ability of an algorithm to obtain a set of non-dominated solutions at the smallest distance from the true Pareto-optimal front (PFO). The algorithm with the minimum GD value therefore has the best convergence to the PFO. The GD index is defined mathematically as follows [37]:

where \( n_{\text{PF}} \) is the number of solutions in the generated Pareto front (PFG) and \( d_{i} \) denotes the Euclidean distance between the \( i{\text{th}} \) member of the PFG and the nearest member of the PFO. The Euclidean distance (\( d \)) is determined as follows:

where \( l = \left( {f_{1l} ,f_{2l} , \ldots ,f_{nl} } \right) \) is a point on PFG and \( r = \left( {f_{1r} ,f_{2r} , \ldots ,f_{nr} } \right) \) is the nearest member to \( l \) in PFO. Figure 3 provides a schematic diagram of this performance index in the 2D space. The best value for the GD index is equal to zero which occurs when the PFG exactly coincides with the PFO. On the other hand, the inverted generational distance (IGD) can be defined mathematically as follows [37]:

where \( nt \) denotes the number of solutions in the true Pareto-optimal front (PFO) and \( d^{\prime}_{i} \) defines the Euclidean distance between the \( i{\text{th}} \) true Pareto-optimal solution and the closest Pareto solution obtained by a certain methodology.
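A minimal sketch of both indices follows. It assumes the commonly used forms \( \text{GD} = \sqrt{\sum_{i} d_{i}^{2}} / n_{\text{PF}} \) and \( \text{IGD} = \sqrt{\sum_{i} d_{i}^{\prime 2}} / nt \); the exact normalization used in [37] may differ. The function names and the sample fronts are hypothetical.

```python
import math


def _nearest_distance(point, front):
    """Euclidean distance from `point` to the closest member of `front`."""
    return min(math.dist(point, q) for q in front)


def generational_distance(pfg, pfo):
    """GD: distances are measured from each generated point to the true front."""
    return math.sqrt(sum(_nearest_distance(p, pfo) ** 2 for p in pfg)) / len(pfg)


def inverted_generational_distance(pfg, pfo):
    """IGD: distances are measured from each true-front point to the generated front."""
    return math.sqrt(sum(_nearest_distance(q, pfg) ** 2 for q in pfo)) / len(pfo)


# Hypothetical true front and a generated front that misses the middle point.
pfo = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
pfg = [(0.0, 1.0), (1.0, 0.0)]

print(generational_distance(pfg, pfo))           # zero: every generated point lies on the PFO
print(inverted_generational_distance(pfg, pfo))  # positive: part of the PFO is not covered
```

The example shows why the two indices are reported together: GD only rewards closeness to the true front, whereas IGD also penalizes regions of the true front that the generated front fails to cover.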

2.2.2 Metric of spacing

The spacing metric (S) was developed by Schott [38] to measure how evenly the non-dominated solutions obtained by a particular algorithm are distributed. This criterion is defined mathematically as follows [6]:

where \( n_{\text{PF}} \) represents the number of solutions in the PFG, \( d_{i} \) denotes the Euclidean distance between the \( i{\text{th}} \) member and its nearest neighbor in the PFG, and \( \bar{d} \) is the mean of all these distances. A smaller value of \( S \) indicates a more uniform distribution in the PFG. If all non-dominated solutions are uniformly distributed in the PFG, then \( d_{i} \) equals \( \bar{d} \) for every \( i \) and the value of the S metric is zero. Figure 4 presents a schematic diagram of the spacing index.
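The spacing index can be sketched as follows, assuming the common form \( S = \sqrt{\frac{1}{n_{\text{PF}} - 1}\sum_{i} (\bar{d} - d_{i})^{2}} \) with Euclidean nearest-neighbor distances as described above; the normalizing factor is an assumption, and the two sample fronts are hypothetical.

```python
import math


def spacing(pfg):
    """Spacing of a generated front with at least two points."""
    # d[i]: distance from member i to its nearest neighbor in the same front.
    d = [
        min(math.dist(p, q) for j, q in enumerate(pfg) if j != i)
        for i, p in enumerate(pfg)
    ]
    d_bar = sum(d) / len(d)
    return math.sqrt(sum((d_bar - di) ** 2 for di in d) / (len(d) - 1))


even_front = [(0.0, 1.0), (0.25, 0.75), (0.5, 0.5), (0.75, 0.25), (1.0, 0.0)]
uneven_front = [(0.0, 1.0), (0.1, 0.9), (1.0, 0.0)]

print(spacing(even_front))    # essentially zero: all gaps are equal
print(spacing(uneven_front))  # larger: two points are clustered together
```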

2.2.3 Spread metric

The spread metric is the third performance index and was proposed by Deb [6]. It measures the extent of spread achieved by the non-dominated solutions found by an algorithm. More precisely, this criterion shows how well the obtained solutions extend across the PFO. It is stated as follows [6]:

where \( d_{f} \) and \( d_{l} \) are the Euclidean distances between the extreme solutions of the PFO and the corresponding boundary solutions of the PFG. \( n_{\text{PF}} \) and \( \bar{d} \) are the total number of members in the PFG and the mean of all distances \( d_{i} \), respectively. Further, \( d_{i} \) represents the Euclidean distance between each member in PFG and the closest one in PFO. Equation (11) shows that the value of the \( \Delta \) index is always non-negative, and a smaller value of \( \Delta \) means a wider spread and a better distribution of the solutions. When \( \Delta \) equals zero, the extreme solutions of the PFO have been found and \( d_{i} = \bar{d} \) for all non-dominated points. Figure 5 shows a schematic structure of the index \( \Delta \) for a given Pareto front.

3 Multi-objective crow search algorithm

This section extends the classical CSA to a multi-objective variant in two subsections. The first presents the concepts of the classical CSA. The second incorporates multi-objective strategies based on orthogonal opposition improvements into the CSA, leading to the multi-objective orthogonal opposition-based crow search algorithm (M2O-CSA).

3.1 Crow search algorithm

The crow search algorithm was introduced by Askarzadeh [35] and mimics the intelligent behavior of crows. Crows exhibit remarkable behavior when collecting and storing food. In particular, crows memorize the positions where they hide excess food, and during this process two cases may occur. In the first case, a crow pilfers the hidden food of another crow that does not realize it is being followed. In the second case, the crow realizes that it is being followed and protects its hidden food by fooling the follower.

From a search point of view, CSA is a population-based algorithm in which a population \( {\varvec{\updelta}}^{t} = \left\{ {{\varvec{\updelta}}_{1}^{t} ,{\varvec{\updelta}}_{2}^{t} , \ldots ,{\varvec{\updelta}}_{N}^{t} } \right\} \) of \( N \) crows (individuals) evolves at each iteration \( t \). The \( i{\text{th}} \) crow \( {\varvec{\updelta}}_{i} \) is represented by an n-dimensional vector \( {\varvec{\updelta}}_{i} = \left( \delta_{i,1} ,\delta_{i,2} , \ldots ,\delta_{i,n} \right) \), where each element (dimension) corresponds to a decision variable of the problem to be solved. According to the two scenarios of crow behavior, the new population \( {\varvec{\updelta}}^{t + 1} \) is obtained by considering whether the followed crow is aware or not aware of being followed by another crow. Thus each new element can be determined as follows:

where the memory of each crow (\( {\mathbf{mc}} \)) is updated as follows:

where \( r_{i} \) and \( r_{j} \) are random numbers drawn from a uniform distribution between 0 and 1, \( fl \) is the flight length parameter that controls the extent of the search, \( {\mathbf{mc}}_{j}^{t} \) denotes the memory of crow \( j \) at iteration \( t \), which stores the best location obtained so far by crow \( j \), and \( {\text{AP}} \) is the awareness probability of the crow being followed. The working steps of the CSA are listed in Algorithm 1.
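The update can be sketched for a single-objective minimization problem as below. This is a sketch under assumptions, not a transcription of Algorithm 1: it follows the usual CSA rule in which crow \( i \) moves toward the memory of a random crow \( j \) when \( r_{j} \ge {\text{AP}} \) and jumps to a random position otherwise, keeps a move only if it stays within the bounds, and updates the memory only on improvement. The function name `csa_step`, the sphere test function, and the bounds are hypothetical; \( fl = 2 \) and \( {\text{AP}} = 0.1 \) match the settings reported in Sect. 4.

```python
import random


def csa_step(positions, memory, fitness, lower, upper, fl=2.0, ap=0.1, rng=random):
    """One CSA iteration for minimization; positions and memory are updated in place."""
    n_crows = len(positions)
    for i in range(n_crows):
        j = rng.randrange(n_crows)  # crow i follows a randomly chosen crow j
        if rng.random() >= ap:
            # Crow j is unaware of being followed: move toward its memorized position.
            r_i = rng.random()
            candidate = [x + r_i * fl * (m - x)
                         for x, m in zip(positions[i], memory[j])]
        else:
            # Crow j is aware: crow i is fooled and lands on a random position.
            candidate = [rng.uniform(lower, upper) for _ in positions[i]]
        # Assumed feasibility rule: keep the move only if it stays inside the bounds.
        if all(lower <= c <= upper for c in candidate):
            positions[i] = candidate
            # Memory update: store the new position only if it is better.
            if fitness(candidate) < fitness(memory[i]):
                memory[i] = list(candidate)


def sphere(x):
    """Simple test objective (illustrative choice)."""
    return sum(v * v for v in x)


rng = random.Random(0)
positions = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(20)]
memory = [p[:] for p in positions]
start_best = min(sphere(m) for m in memory)
for _ in range(100):
    csa_step(positions, memory, sphere, -5.0, 5.0, rng=rng)
final_best = min(sphere(m) for m in memory)
print(start_best, final_best)
```

Because a memory entry is replaced only by a better position, the best memorized fitness can never get worse from one iteration to the next.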

3.2 The proposed M2O-CSA algorithm

In this section, the CSA is extended to deal with multiple objectives, yielding the multi-objective orthogonal opposition CSA (M2O-CSA), through three modifications. First, an external archive is added to store the Pareto-optimal solutions obtained during the run of the algorithm. Second, all crows use the external archive as the memory of hoard positions. Finally, a parallel-orthogonal-opposition (P2O) strategy is introduced to enhance convergence to the true Pareto front and to obtain well-spread solutions over it.

3.2.1 Updating mechanism

The algorithm starts with the initialization process, during which the archive is filled with the non-dominated solutions. Afterward, the updating strategy is applied to enhance the convergence of solutions, improve the distribution over the whole Pareto front, and fill the finite archive. Redundancy, i.e., the existence of similar solutions, is prevented according to the dominance concepts. To perform the updating strategy, one of the memorized solutions in the archive (\( {\mathbf{Ac}} \)) is selected by a roulette-wheel mechanism with the following probability for each segment:

where \( t \) and \( T \) define the current iteration and maximum number of iterations, respectively. In this regard, the solution is determined as follows:

Therefore, the crows are encouraged to update their positions using the archive's solutions. The archive is updated in each iteration and may become full during optimization. It is managed by dominance criteria: a solution is prevented from entering if it is dominated by at least one archive member; it is allowed to enter if it is non-dominated with respect to all solutions in the archive; and if it dominates some of the archive's solutions, all of these are removed from the archive. If the archive is full, some solutions are removed using the rule of Coello Coello et al. [39].
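The dominance-based archive rules above can be sketched as follows. The admission and removal logic follows the text; the pruning step for a full archive is a simplified stand-in (drop the member with the closest nearest neighbor) and is not the rule of Coello Coello et al. [39]. The function name `update_archive` and the sample points are hypothetical.

```python
import math


def dominates(u, v):
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))


def update_archive(archive, candidate, max_size):
    """Return a new archive after offering `candidate` (an objective vector)."""
    # Reject a candidate that is dominated by, or identical to, an archive member.
    if any(dominates(a, candidate) or a == candidate for a in archive):
        return list(archive)
    # Remove every archive member that the candidate dominates, then admit it.
    kept = [a for a in archive if not dominates(candidate, a)]
    kept.append(candidate)
    # Simplified stand-in for the pruning rule of [39]: while the archive is over
    # capacity, drop the member whose nearest neighbor is closest (most crowded).
    while len(kept) > max_size:
        def nearest(i):
            return min(math.dist(kept[i], q) for j, q in enumerate(kept) if j != i)
        kept.pop(min(range(len(kept)), key=nearest))
    return kept


archive = []
for point in [(1.0, 4.0), (3.0, 2.0), (4.0, 5.0), (0.5, 3.5)]:
    archive = update_archive(archive, point, max_size=10)
print(archive)
```

In this run, (4.0, 5.0) is rejected because it is dominated, and (1.0, 4.0) is removed once (0.5, 3.5) enters and dominates it.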

3.2.2 Orthogonal opposition strategy

The orthogonal opposition strategy (OOS) is a novel phase that combines the merits of orthogonal arrays and oppositional points. This strategy is incorporated into the CSA to solve large-scale MOPs, so that a wider space can be explored during evolution. The OOS is parallelized to preserve the spread and convergence of solutions. It works in three stages, namely forming the orthogonal array-based crossover, generating the opposition points, and parallelization. The OOS is stated as pseudo-code in Algorithm 2.

3.2.2.1 Orthogonal crossover

An orthogonal array of \( N \) factors (variables) with \( G \) levels and \( C \) combinations is commonly denoted as \( L_{C} (G^{N} ) \). For example, if an experiment has four factors (variables) with three levels each, a full factorial design has \( 3^{4} = 81 \) combinations, but when the orthogonal array \( L_{9} (3^{4} ) \) is employed, only nine combinations need to be tested. The term orthogonal means that each level of a factor occurs the same number of times in every column, and that every pair of levels occurs equally often in any two columns.

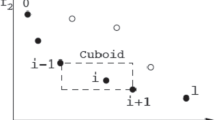
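To make the orthogonality property concrete, the sketch below builds one valid \( L_{9} (3^{4} ) \) array and checks it. The modular construction and the level coding 0, 1, 2 are assumptions chosen for illustration; the row ordering and level labels may differ from the array used in the paper.

```python
from itertools import combinations, product


def l9_array():
    """Rows of an L9(3^4) orthogonal array with levels 0, 1, 2."""
    # Columns 3 and 4 are linear combinations (mod 3) of the first two columns.
    return [(a, b, (a + b) % 3, (a + 2 * b) % 3)
            for a, b in product(range(3), repeat=2)]


def is_orthogonal(rows, levels=3):
    """Check that every pair of columns contains each level pair exactly once."""
    n_cols = len(rows[0])
    for c1, c2 in combinations(range(n_cols), 2):
        pairs = [(row[c1], row[c2]) for row in rows]
        if len(pairs) != levels ** 2 or len(set(pairs)) != levels ** 2:
            return False
    return True


rows = l9_array()
for row in rows:
    print(row)
print(len(rows), "rows instead of", 3 ** 4, "full-factorial combinations")
print(is_orthogonal(rows))
```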

The orthogonal crossover strategy starts from two randomly generated individuals (\( {\mathbf{v}}_{{\mathbf{1}}} {\mathbf{,}}\,{\mathbf{v}}_{{\mathbf{2}}} \)). These individuals are quantized to generate the dynamic third level, which involves \( (G^{2} - 2) \) combinations indexed from 2 to \( G^{2} - 1 \), such that the difference between any two successive arrays is the same. The first array (level) is the minimum of the individuals \( {\mathbf{v}}_{{\mathbf{1}}} \) and \( {\mathbf{v}}_{{\mathbf{2}}} \), while the last array (level) is their maximum. With this strategy, \( C = G \times G \) individuals or combinations are obtained, which then take part in survival selection. The general idea behind the orthogonal crossover strategy is to provide a set of solutions controlled by the dynamic \( G \) levels, in order to maintain better diversification in the early stages and to intensify convergence toward the promising regions of the Pareto front in the later stages. Besides, the proposed dynamic orthogonal crossover strategy provides a general framework that overcomes the limitation of conventional crossover, which generates only two individuals and therefore leads to insufficient population diversity and to non-efficient solutions. In this context, the different combinations are obtained as follows:

For large-scale problems, the number of dimensions \( d \) is usually much larger than \( N \). In such a situation, the variables are grouped into \( N \) sub-vectors, each treated as one factor. Finally, \( C \) arrays are obtained according to the orthogonal array \( L_{C} (G^{N} ) \). In this work, \( L_{9} (3^{4} ) \) is used to define the orthogonal-based crossover, which is denoted as ObC9.
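A minimal sketch of an \( L_{9} (3^{4} ) \)-based crossover is given below. It is not the exact ObC9 operator of this paper: it assumes the classic quantization scheme in which each variable takes \( G = 3 \) equally spaced levels between the smaller and the larger parent value, and it assumes that the \( d \) variables are split into \( N = 4 \) contiguous groups. The function names and the parent vectors are hypothetical.

```python
from itertools import product


def l9_rows():
    """One valid L9(3^4) array with levels 0, 1, 2 (modular construction)."""
    return [(a, b, (a + b) % 3, (a + 2 * b) % 3)
            for a, b in product(range(3), repeat=2)]


def orthogonal_crossover(v1, v2):
    """Generate nine offspring from two parents using an L9(3^4) array."""
    G, N = 3, 4
    d = len(v1)
    # Quantize every variable into G equally spaced levels from min to max.
    levels = []
    for a, b in zip(v1, v2):
        lo, hi = min(a, b), max(a, b)
        levels.append([lo + g * (hi - lo) / (G - 1) for g in range(G)])
    # Assumed grouping: variable k belongs to one of N contiguous sub-vectors.
    group_of = [k * N // d for k in range(d)]
    # Each array row assigns one level to each group, giving one offspring.
    return [[levels[k][row[group_of[k]]] for k in range(d)] for row in l9_rows()]


v1 = [0.0] * 8
v2 = [1.0] * 8
offspring = orthogonal_crossover(v1, v2)
for child in offspring:
    print(child)
```

With eight variables and four groups, each pair of neighboring variables shares one factor, so nine offspring cover the level combinations prescribed by the array instead of the 81 combinations of a full factorial design.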

3.2.2.2 Oppositional learning

Oppositional learning was proposed by Tizhoosh et al. [40] to accelerate the convergence of optimization algorithms. It considers the opposite of each individual alongside the individual itself. The concepts of opposition learning are as follows:

Definition 5

Assume that \( x \) is a real number with bounds \( l \) and \( u \) such that \( x \in [l,u] \), then its opposite value \( xo \) is obtained as (Fig. 6).

Definition 6

Suppose that \( x \) is a real number such that \( x \in [l,u] \), then its new opposite value \( xo \) is determined as (Fig. 6).

where \( xo \) is the opposite of \( x \).
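A minimal sketch of the opposite point follows, assuming that Definition 5 uses the basic rule of opposition-based learning, \( xo = l + u - x \), applied to each dimension; Definition 6 is not covered by this sketch. The function name `opposite` and the sample bounds are hypothetical.

```python
def opposite(x, lower, upper):
    """Opposite point of x within per-dimension bounds: xo = l + u - x."""
    return [l + u - xi for xi, l, u in zip(x, lower, upper)]


x = [1.0, -2.0]
lower = [0.0, -5.0]
upper = [10.0, 5.0]

xo = opposite(x, lower, upper)
print(xo)                          # mirror image of x about the center of the box
print(opposite(xo, lower, upper))  # applying the rule twice returns x
```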

3.3 Eliciting the satisfactory solution

Multi-objective optimization algorithms yield a set of Pareto-optimal solutions because of the conflicting nature of the objectives. In practice, however, a single design point or operating point is needed. Such a design point is called the best (satisfactory) compromise solution. The MOORA (multi-objective optimization on the basis of ratio analysis) method is able to extract one option from a set of available options. It can be stated as the following series of steps.

1. Exhibit the performance of \( N \) alternatives over \( K \) criteria as a decision matrix:

$$ F = \left[ {\begin{array}{cccc} {f_{11} } & {f_{12} } & \cdots & {f_{1K} } \\ {f_{21} } & {f_{22} } & \cdots & {f_{2K} } \\ \vdots & \vdots & \ddots & \vdots \\ {f_{N1} } & {f_{N2} } & \cdots & {f_{NK} } \\ \end{array} } \right] $$

where \( f_{ij} \) is the performance measure of the \( i{\text{th}} \) option on the \( j{\text{th}} \) attribute or criterion, i.e., the value of the \( i{\text{th}} \) solution for the \( j{\text{th}} \) criterion. The \( i{\text{th}} \) row of the matrix \( F \) contains the values of all \( K \) objective functions for the \( i{\text{th}} \) solution.

2. Obtain the normalized form of the decision matrix so that it becomes dimensionless. This normalization, or ratio system, is expressed as follows:

$$ f_{ij}^{*} = \frac{f_{ij}}{\sqrt{\sum\limits_{i = 1}^{N} f_{ij}^{2}}},\quad j = 1,2, \ldots ,K $$

where \( f_{ij}^{*} \) is a dimensionless value that belongs to the interval [0, 1] and represents the normalized performance of the \( i{\text{th}} \) option on the \( j{\text{th}} \) attribute or criterion.

3. Add the normalized performances for maximization cases (beneficial attributes) and subtract them for minimization cases (non-beneficial attributes). This step is expressed as follows:

$$ Y_{i} = \sum\limits_{j = 1}^{l} {f_{ij}^{*} } - \sum\limits_{j = l + 1}^{K} {f_{ij}^{*} } $$

where \( Y_{i} \) denotes the normalized assessment of the \( i{\text{th}} \) option (alternative) over all attributes, the first \( l \) attributes being the beneficial ones. \( Y_{i} \) may be positive or negative depending on the totals of its beneficial and non-beneficial attributes.

4. Rank the values of \( Y_{i} \) to show the final preference.

5. Recommend the alternative with the highest value of \( Y_{i} \) as the best alternative.
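The five MOORA steps can be sketched as follows. The function name `moora_best`, the `beneficial` flags (which generalize the split at index \( l \) to any ordering of criteria), and the sample front are assumptions made for illustration. For a Pareto front of a pure minimization problem, every criterion is non-beneficial.

```python
import math


def moora_best(F, beneficial):
    """Return (index of the best alternative, list of Y scores).

    F is an N x K decision matrix; beneficial[j] is True when criterion j is
    maximized and False when it is minimized. Columns must not be all zero.
    """
    n_alt, n_crit = len(F), len(F[0])
    # Step 2: column-wise vector normalization.
    norms = [math.sqrt(sum(F[i][j] ** 2 for i in range(n_alt))) for j in range(n_crit)]
    scores = []
    for i in range(n_alt):
        y = 0.0
        for j in range(n_crit):
            ratio = F[i][j] / norms[j]
            # Step 3: add beneficial ratios, subtract non-beneficial ones.
            y += ratio if beneficial[j] else -ratio
        scores.append(y)
    # Steps 4 and 5: rank by Y and recommend the highest value.
    best = max(range(n_alt), key=lambda i: scores[i])
    return best, scores


# Hypothetical Pareto front of a two-objective minimization problem.
front = [[1.0, 4.0], [2.0, 2.0], [4.0, 1.0]]
best, scores = moora_best(front, beneficial=[False, False])
print(best, scores)
```

In this example the balanced solution (2, 2) receives the highest score, while the two extreme solutions tie with a lower one.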

All the parameters of the M2O-CSA are the same as in the original CSA, with one additional parameter for the archive size. The working of the M2O-CSA is summarized by the pseudo-code in Algorithm 3, and its flowchart is provided in Fig. 7.

4 Simulation results

In this section, a set of multi-objective benchmark problems is employed to validate the performance of the proposed M2O-CSA. The experiments fall into two categories. The first category tests the algorithm on the well-known ZDT test suite, selected from credible research studies and listed in Table 1. ZDT5 is not included in Table 1 because this problem involves a discrete Pareto front and thus needs a different methodology to generate it. In this category, we investigate the performance of the proposed M2O-CSA for two problem dimensions, namely \( n = 30 \) and \( n = 100 \). In the second category, a set of real engineering multi-objective design problems is employed. Moreover, the M2O-CSA, as a modified optimizer, is compared with its classical version (MOCSA [41]) and its chaotic version (MOCCSA [41]).

To achieve unbiased results, all algorithms are run under the same conditions. All algorithms are initialized uniformly at random within the variable ranges, the maximum number of iterations is set to 200, and the population size is set to 100 for all algorithms. The number of parallel branches for the M2O-CSA is set to 4. Furthermore, to mitigate the impact of randomness, all algorithms are run 20 times independently in all categories. The parameters of the candidate algorithms are set as in their respective works: population size, archive size, flight length, awareness probability, and number of iterations are 100, 100, 2, 0.1, and 200, respectively. For a fair comparison among MOCSA, MOCCSA and M2O-CSA, the same randomly generated initial population is used by all three algorithms in each run: the first population for the first run, the second population for the second run, and so on.

4.1 Results of ZDT test functions

In this subsection, the proposed M2O-CSA is evaluated and compared with the MOCSA and MOCCSA algorithms on the ZDT test functions. Four assessment metrics are used: (1) generational distance (GD), (2) inverted generational distance (IGD), (3) spacing (SP), and (4) maximum spread (MS). Two experiments are conducted. Experiment 1 uses thirty decision variables (\( n = 30 \)), while experiment 2 uses one hundred (\( n = 100 \)).
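For readers who want to reproduce the four metrics, the following is a minimal sketch using commonly cited forms of GD, IGD, SP, and MS. The exact formulas adopted in this paper are those given in its own metric definitions; where they differ (for example in normalization), those definitions take precedence. All function names and the three-point fronts are illustrative assumptions.

```python
import math


def _nearest(point, front):
    """Euclidean distance from point to its closest member of front."""
    return min(math.dist(point, q) for q in front)


def generational_distance(obtained, true_front):
    """GD: distance from the obtained points to the true front (lower is better)."""
    d = [_nearest(p, true_front) for p in obtained]
    return math.sqrt(sum(x * x for x in d)) / len(d)


def inverted_generational_distance(obtained, true_front):
    """IGD: distance from the true-front samples to the obtained set (lower is better)."""
    d = [_nearest(q, obtained) for q in true_front]
    return math.sqrt(sum(x * x for x in d)) / len(d)


def spacing(obtained):
    """SP: spread of nearest-neighbour Manhattan distances (lower is better).

    Needs at least two obtained points.
    """
    d = [
        min(
            sum(abs(a - b) for a, b in zip(p, q))
            for j, q in enumerate(obtained)
            if j != i
        )
        for i, p in enumerate(obtained)
    ]
    mean = sum(d) / len(d)
    return math.sqrt(sum((mean - x) ** 2 for x in d) / (len(d) - 1))


def maximum_spread(obtained):
    """MS: diagonal of the box spanned by the obtained points (higher is better)."""
    n_obj = len(obtained[0])
    return math.sqrt(sum(
        (max(p[k] for p in obtained) - min(p[k] for p in obtained)) ** 2
        for k in range(n_obj)
    ))


# Illustrative data only: a three-point "true" front and a shifted copy.
true_front = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
obtained = [(0.1, 1.0), (0.6, 0.5), (1.1, 0.0)]
print(generational_distance(obtained, true_front))
print(inverted_generational_distance(obtained, true_front))
print(spacing(obtained), maximum_spread(obtained))
```

GD and IGD both need a sampled reference front, whereas SP and MS are computed from the obtained set alone.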

In experiment 1, the assessment metrics (GD, IGD, SP, and MS) are reported in Tables 2, 3, 4, and 5. On these metrics, the M2O-CSA outperforms MOCSA and MOCCSA on all ZDT test problems with \( n = 30 \). The superiority is demonstrated by the mean values, which show a higher accuracy for M2O-CSA than for the other algorithms. The Pareto-optimal fronts obtained by the proposed M2O-CSA and the compared algorithms on ZDT1, ZDT2, ZDT3, ZDT4, and ZDT6 are portrayed in Fig. 8. The figure shows that MOCCSA exhibits the poorest convergence, whereas the proposed M2O-CSA converges well toward the true Pareto fronts and maintains a diverse set of solutions on all ZDT test problems. MOCSA, on the other hand, converges well on ZDT2 and ZDT6. The superior performance of the proposed M2O-CSA is attributed to the parallel-orthogonal-opposition (P2O) strategy, which preserves the diversity of solutions and enhances convergence toward the true Pareto fronts.

In experiment 2, the proposed M2O-CSA is further compared with MOCSA and MOCCSA on the ZDT1, ZDT2, ZDT3, ZDT4, and ZDT6 test problems with \( n = 100 \) to analyze scalability. The statistical measures of the assessment metrics, namely the best, mean, and worst values and the standard deviation (SD), are listed in Tables 6, 7, 8, and 9. The reported results show that the proposed M2O-CSA achieves better mean values of GD, IGD, SP, and MS as the dimensionality increases. We therefore conclude that the proposed M2O-CSA is largely insensitive to growth in dimensionality over the tested range. Compared with MOCSA and MOCCSA, it gives better results on all ZDT test problems. The Pareto fronts obtained by the proposed M2O-CSA and the other algorithms are depicted in Fig. 9, which shows that M2O-CSA converges more closely to the Pareto-optimal fronts, while the compared algorithms struggle to reach them. This superior performance is attributed to the parallel-orthogonal-opposition (P2O) strategy, which helps explore more regions of the search space while steering the search toward the true Pareto fronts.
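The per-metric statistics over independent runs can be computed as in the sketch below. It assumes the sample standard deviation (divisor of runs minus one); the text does not state which estimator was used. The name `summarize_runs` and the sample values are hypothetical.

```python
import statistics


def summarize_runs(values, higher_is_better=False):
    """Best, mean, worst and SD of one metric over independent runs.

    For GD, IGD and SP the best value is the smallest; for MS pass
    higher_is_better=True so that the best value is the largest.
    """
    best = max(values) if higher_is_better else min(values)
    worst = min(values) if higher_is_better else max(values)
    return {
        "best": best,
        "mean": statistics.mean(values),
        "worst": worst,
        "sd": statistics.stdev(values),  # sample SD (assumption)
    }


# Placeholder values, not results from the paper.
print(summarize_runs([1.0, 2.0, 3.0]))
print(summarize_runs([0.7, 0.9, 0.8], higher_is_better=True))
```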

4.2 Comparison with state-of-the-art optimization algorithms

In this subsection, the proposed M2O-CSA is further compared against recent state-of-the-art algorithms: MOGOA [33], MOALO [33], MOPSO [33], NSGA-II [33], MOLAPO [32], MOGWO [32], HEIA [31], MOEA/D-DRA [31], FRRMAB [31], EAG [31], BCE [31], and EF-PD [31]. Performance is evaluated with four assessment criteria, GD, IGD, SP, and MS, where lower GD, IGD, and SP values indicate better performance and a higher MS value indicates better performance. For these algorithms, results are reported as mean and standard deviation (St. dev) values. Table 10 compares the proposed M2O-CSA with MOGOA, MOALO, MOPSO, NSGA-II, MOLAPO, and MOGWO. The comparison shows that the M2O-CSA outperforms these algorithms on the GD and MS metrics for most ZDT problems and provides competitive results on the SP metric. In terms of IGD, Table 10 shows that the proposed M2O-CSA is superior to the HEIA, MOEA/D-DRA, FRRMAB, EAG, BCE, and EF-PD algorithms on all ZDT problems. The rank of each algorithm on each ZDT problem is also provided, together with the average rank (AR) over all ZDT problems. In the orderings that follow, the symbol > indicates that an algorithm is better than the one after it, while ≈ indicates that both competitors have similar average ranks. Based on the average rank, the algorithms are ordered as follows. For the GD metric: M2O-CSA > MOLAPO > MOGOA > MOALO > MOGWO > MOPSO > NSGA-II. For the MS metric: M2O-CSA > NSGA-II > MOLAPO > MOGWO > MOPSO > MOALO > MOGOA. For the SP metric: MOLAPO > M2O-CSA ≈ MOGWO > MOGOA > MOALO > MOPSO > NSGA-II. For the IGD metric: M2O-CSA > EF-PD > HEIA > BCE > MOEA/D-DRA ≈ EAG > FRRMAB. According to the assessment criteria, the M2O-CSA has superior performance in terms of the GD and MS metrics and is strongly competitive on the SP metric.
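The average-rank ordering used in this comparison can be reproduced with a small helper such as the one sketched here. Two points are assumptions rather than details stated in the text: ties share the mean of the positions they occupy, and every problem reports the same set of algorithms. The names `rank_values` and `average_ranks`, the labels `A`, `B`, `C`, and the numbers are placeholders, not values from Table 10.

```python
def rank_values(values, higher_is_better=False):
    """Rank 1 is best; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i],
                   reverse=higher_is_better)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def average_ranks(table, higher_is_better=False):
    """table maps problem name -> {algorithm name: mean metric value}."""
    algorithms = list(next(iter(table.values())))
    totals = {name: 0.0 for name in algorithms}
    for scores in table.values():
        ranks = rank_values([scores[a] for a in algorithms], higher_is_better)
        for name, rank in zip(algorithms, ranks):
            totals[name] += rank
    return {name: totals[name] / len(table) for name in algorithms}


# Placeholder mean GD values for two problems (lower is better).
table = {
    "P1": {"A": 0.1, "B": 0.2, "C": 0.3},
    "P2": {"A": 0.2, "B": 0.1, "C": 0.3},
}
print(average_ranks(table))
```

For MS, where larger values are better, the same helper would be called with `higher_is_better=True`.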

4.3 Results of multi-objective constrained problems (MOCPs)

In this subsection, a set of well-known multi-objective constrained optimization problems is employed to evaluate the performance of the proposed M2O-CSA. The constrained test problems are SRN, CONSTR, BNH, KIT, and TNK. Their Pareto fronts have diverse features, such as continuous convex fronts and discrete fronts. The details of the MOCPs are given in Table 11.

To compare the proposed M2O-CSA with the other algorithms, the GD and IGD metrics are used to quantify convergence and coverage. These metrics are reported in Tables 12 and 13 for the proposed M2O-CSA and the compared algorithms. The results show that the M2O-CSA outperforms the other algorithms in terms of the mean GD and IGD values. The Pareto-optimal fronts obtained by all algorithms are shown in Fig. 10. The proposed M2O-CSA covers the true Pareto fronts well and reaches them successfully on all multi-objective constrained test problems.

4.4 Results of multi-objective constrained engineering designs (MOCEDs)

In this subsection, M2O-CSA is tested on four multi-objective constrained engineering designs (MOCEDs) that are popular in the engineering design field: four bar truss (FBT) design, welded beam (WB) design, disk brake (DB) design, and speed reduced (SR) design. These problems have different characteristics. The details of all engineering designs are provided in Table 14, and their schematics are shown in Fig. 11. The proposed M2O-CSA is compared with the MOCSA and MOCCSA algorithms on all engineering design problems. All of them are run with a population size of 60, 200 iterations, an archive size of 100, a flight length of 2, and an awareness probability of 0.1.

For each design problem, 100 Pareto-optimal points are generated. A new methodology based on the MOORA technique is then employed to help the designer extract the operating point, that is, the best compromise or satisfactory solution with which to carry out the candidate engineering design.

The Pareto fronts for all engineering designs are presented in Fig. 12. The proposed M2O-CSA provides well-spread Pareto fronts for all designs, and its solutions dominate most of the points found by the compared algorithms. MOCSA and MOCCSA, on the other hand, show the poorest convergence toward the Pareto fronts. Because designers and engineers are ultimately concerned with carrying out the design task, the MOORA technique is introduced as a decision making tool to identify the operating point of the candidate design. This operating point is the compromise solution extracted from the obtained Pareto front by the MOORA technique, and the compromise solutions for all designs are reported in Table 15. The results show that the proposed M2O-CSA gives superior solutions in terms of constraint violations compared with MOCSA and MOCCSA.
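To illustrate how a compromise point can be extracted from a Pareto front, the sketch below applies the standard MOORA ratio system: each objective column is divided by the square root of its sum of squares, normalized values are added for objectives to be maximized and subtracted for objectives to be minimized, and the point with the highest score is selected. The methodology used in this paper may differ in weighting or normalization details, so this is an assumption-laden illustration. The name `moora_select`, the optional `weights` argument, and the sample front are hypothetical.

```python
import math


def moora_select(front, maximize=None, weights=None):
    """Return (index of the best compromise point, list of MOORA scores).

    front    : list of objective vectors, one per Pareto point
    maximize : per-objective flags; defaults to all False (minimization)
    weights  : optional significance coefficients; defaults to 1.0 each
    """
    n_obj = len(front[0])
    maximize = maximize or [False] * n_obj
    weights = weights or [1.0] * n_obj
    # Vector normalization: divide each column by its Euclidean norm
    # (an all-zero column is left unscaled).
    norms = [math.sqrt(sum(p[k] ** 2 for p in front)) or 1.0
             for k in range(n_obj)]
    scores = []
    for p in front:
        score = 0.0
        for k in range(n_obj):
            ratio = weights[k] * p[k] / norms[k]
            score += ratio if maximize[k] else -ratio
        scores.append(score)
    best = max(range(len(front)), key=scores.__getitem__)
    return best, scores


# Placeholder two-objective front, both objectives minimized.
front = [(1.0, 9.0), (3.0, 3.0), (9.0, 1.0)]
best_index, scores = moora_select(front)
print(best_index, front[best_index])
```

With equal weights and pure minimization, the selected point is the one with the smallest sum of normalized objectives, which favors balanced trade-offs over the extreme ends of the front.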

4.5 Discussion

The convergence and coverage of the Pareto fronts obtained by the proposed M2O-CSA are supported by both qualitative and quantitative results. Convergence is promoted by embedding the orthogonal-based crossover strategy, while coverage is promoted by adopting the opposition strategy. The proposed method is applied to different tests: the ZDT test suite, constrained problems (SRN, CONSTR, BNH, KIT, and TNK), and real applications (the FBT, WB, DB, and SR designs). The comparisons among the different algorithms indicate that the M2O-CSA handles the test problems well and has no difficulty attaining the Pareto fronts, whereas the compared algorithms struggle to reach the Pareto solutions. Furthermore, a plotted Pareto front for an engineering design problem can leave an inexperienced designer hesitant about which solution to implement, so the MOORA technique is employed to extract the compromise solution of the candidate design. It thereby facilitates design work in engineering fields.

Finally, the feasibility of employing the proposed M2O-CSA methodology for multi-objective design problems has been demonstrated in practice on four real-world optimization problems from computational design that involve multiple objectives: four bar truss design, welded beam design, disk brake design, and speed reduced design. The recorded simulations show that the M2O-CSA provides powerful assistance for design tasks. Inexperienced designers can invoke the MOORA tool to obtain a satisfactory or preferred solution from the Pareto front with which to execute the candidate engineering design. Experts can examine the various design scenarios represented by the Pareto front and choose the one that best matches their preferences. The methodology is also useful when no a priori knowledge about the candidate application is available, and it can be a very promising tool for handling practical situations with complicated operating conditions. We hope that the methodology will encourage engineers and practitioners to apply it to real multi-objective environmental problems, such as the environmental-economic load dispatch problem, which aims to minimize simultaneously the pollution induced by fossil fuels and the operational costs. Another environmentally interesting direction is energy-based wind farm layout, which aims to maximize the output power of the farm while minimizing its operating cost.
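The opposition strategy credited in this discussion with improving coverage builds on the basic opposite point of opposition-based learning, which mirrors a solution within its variable bounds. The sketch below shows only that basic operation; the multi-orthogonal opposition strategy of M2O-CSA combines it with the orthogonal crossover stage and is not reproduced here. The function names and bounds are illustrative assumptions.

```python
def opposite_point(x, lower, upper):
    """Mirror x inside the box [lower, upper]: x_opp = lower + upper - x."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]


def with_opposites(population, lower, upper):
    """Return the population extended by the opposite of every member."""
    return population + [opposite_point(x, lower, upper) for x in population]


lower, upper = [0.0, 1.0], [1.0, 5.0]
print(opposite_point([0.2, 3.0], lower, upper))
print(len(with_opposites([[0.2, 3.0], [0.9, 1.5]], lower, upper)))
```

A point near one bound is mapped near the other, so evaluating both a candidate and its opposite samples regions that the current population may have left unexplored.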

5 Conclusion

This paper proposed an improved multi-objective orthogonal opposition-based version of the CSA, called M2O-CSA. While maintaining the search strategy of CSA, M2O-CSA incorporates a multi-orthogonal opposition strategy, an archive, and a new updating mechanism based on Pareto solutions as the stored food sources. The proposed M2O-CSA algorithm is benchmarked on ten test instances, comprising five unconstrained and five constrained test problems. The results are assessed with quantitative performance metrics, namely GD, IGD, SP, and MS, and are verified by comparisons with the MOCSA and MOCCSA algorithms. For further investigation, the proposed M2O-CSA is compared with some recent state-of-the-art algorithms. The obtained results indicate that the proposed M2O-CSA is highly competitive with or outperforms the compared algorithms and that it is insensitive to high dimensionality. Moreover, the proposed M2O-CSA algorithm was successfully applied to real engineering applications, including four bar truss design, welded beam design, disk brake design, and speed reduced design. Its results are superior to those of the compared methods, indicating that the proposed M2O-CSA algorithm has improved convergence and coverage capabilities for these designs and can be a good alternative for solving multi-objective design tasks effectively. In addition, qualitative results are demonstrated by visualizing the Pareto fronts of the engineering design problems. Furthermore, the designer's hesitation is addressed by introducing the MOORA technique to extract the compromise solution of the candidate design, which provides a user-friendly system for designers.

In addition, the proposed work offers two new directions toward practical implementation: (1) improving the crows’ search ability so that real-life multi-objective problems can be handled; (2) presenting a useful decision making model with which engineers or designers can decide on the best alternative for the operating situation when a non-dominated set is considered. Although the M2O-CSA has been shown to be superior or competitive to the compared algorithms, the no free lunch (NFL) theorem cannot be ignored: the M2O-CSA may still leave room for improvement on problems with very large dimensions and/or many constraints, which may degrade both the convergence pattern and the diversification ability toward the true Pareto front. Therefore, improvement strategies such as mutation schemes, parallel implementation, and hybridization with other algorithms are research paths that can be pursued in the future. These strategies can be applied around the best Pareto front obtained by M2O-CSA to guide the search toward better neighborhoods and thereby strengthen the quality of the solutions. In addition, we intend to develop a discrete version of the M2O-CSA for combinatorial optimization applications. The discrete version can be implemented through a truncation strategy or a mapping mechanism that converts each continuous decision variable into a discrete one. Furthermore, investigating the performance of M2O-CSA on dynamic many-objective optimization problems, incorporating uncertainties into the optimization models, and tackling more practical problems, such as energy-based optimization and labor-aware flexible job shop scheduling under dynamic electricity pricing, are also worthwhile directions.
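As one possible reading of the truncation and mapping ideas mentioned for a future discrete version, the sketch below shows two simple conversions from a continuous decision variable to a discrete one. Both are generic techniques offered as assumptions; the paper does not specify which mechanism would be used. The function names and the example values are hypothetical.

```python
import math


def truncate_to_integer(x, lower, upper):
    """Truncation strategy: floor x, then clamp to the integer range."""
    return max(lower, min(upper, math.floor(x)))


def map_to_choice(x, lower, upper, choices):
    """Mapping mechanism: split [lower, upper] into equal-width bins,
    one per allowed discrete value, and return the value of x's bin."""
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    clamped = min(max(x, lower), upper)
    fraction = (clamped - lower) / (upper - lower)
    index = min(int(fraction * len(choices)), len(choices) - 1)
    return choices[index]


print(truncate_to_integer(2.9, 0, 5))
print(map_to_choice(0.5, 0.0, 1.0, [10, 20, 30]))
```

Truncation suits integer-valued variables, while the bin mapping handles arbitrary discrete sets such as catalogue sizes. Either conversion would be applied only when a solution is evaluated, so the continuous search operators could remain unchanged.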

References

Miettinen K (2002) Non-linear multiobjective optimization. Kluwer Academic Publisher, Dordrecht

Ehrgott M, Gandibleux X (2002) Multiobjective combinatorial optimization—theory, methodology, and applications. In: Ehrgott M, Gandibleux X (eds) Multiple criteria optimization: state of the art annotated bibliographic surveys. Springer, US, pp 369–444

Berube JF, Gendreau M, Potvin JY (2009) An exact ε-constraint method for bi-objective combinatorial optimization problems: application to the traveling salesman problem with profits. Eur J Oper Res 194(1):39–50

Laumanns M, Thiele L, Zitzler E (2006) An efficient, adaptive parameter variation scheme for metaheuristics based on the epsilon-constraint method. Eur J Oper Res 169(3):932–942

Branke J, Deb K (2005) Integrating user preferences into evolutionary multiobjective optimization. Knowledge incorporation in evolutionary computation. Springer, pp 461–477

Deb K (2001) Multi-objective optimization using evolutionary algorithms, vol 16. Wiley, London

Schaffer JD (1985) Multiple objective optimization with vector evaluated genetic algorithms. In: Proceedings of the 1st international conference on genetic algorithms. L. Erlbaum Associates Inc., pp 93–100

Zitzler E, Thiele L (1999) Multiobjective evolutionary algorithms: a comparative case study and the strength Pareto approach. IEEE Trans Evol Comput 3(4):257–271

Srinivas N, Deb K (1994) Multiobjective optimization using nondominated sorting in genetic algorithms. Evolut Comput 2(3):221–248

Corne DW, Jerram NR, Knowles JD, Oates MJ (2001) PESA-II: region-based selection in evolutionary multiobjective optimization. In: Proceedings of the 3rd annual conference on genetic and evolutionary computation. Morgan Kaufmann Publishers Inc., pp 283–290

Knowles J, Corne D (1999) The Pareto archived evolution strategy: a new baseline algorithm for Pareto multiobjective optimisation. In: Proceedings of the 1999 congress on evolutionary computation (CEC 1999), vol 1, pp 98–105

Zhou A, Qu BY, Li H, Zhao SZ, Suganthan PN, Zhang Q (2011) Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evol Comput 1(1):32–49

Mousa AA, Abd El-Wahed WF, Rizk-Allah RM (2011) A hybrid ant colony optimization approach based local search scheme for multiobjective design optimizations. J Electr Power Syst Res 81:1014–1023

Rizk-Allah RM, El-Sehiemy RA (2018) A novel sine cosine approach for single and multiobjective emission/economic load dispatch problem. In: International conference on innovative trends in computer engineering (ITCE 2018) Aswan University, Egypt, pp 271–277

Rizk-Allah RM, El-Sehiemy RA, Deb S, Wang G-G (2017) A novel fruit fly framework for multi-objective shape design of tubular linear synchronous motor. J Supercomput 73(3):1235–1256

Rizk-Allah RM, Abo-Sinna MA (2017) Integrating reference point, Kuhn-Tucker conditions and neural network approach for multi-objective and multi-level programming problems. OPSEARCH 54(4):663–683

Rizk-Allah RM, El-Sehiemy RA, Wang G-G (2018) A novel parallel hurricane optimization algorithm for secure emission/economic load dispatch solution. Appl Soft Comput 63:206–222

Akbari R, Hedayatzadeh R, Ziarati K, Hassanizadeh B (2012) A multi-objective artificial bee colony algorithm. Swarm Evol Comput 2:39–52

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Mirjalili S, Jangir P, Saremi S (2017) Multi-objective ant lion optimizer: a multi-objective optimization algorithm for solving engineering problems. Appl Intell 46:79–95

Mirjalili S, Saremi S, Mirjalili SM, Coelho L (2016) Multi-objective grey wolf optimizer: a novel algorithm for multicriterion optimization. Expert Syst Appl 47:106–119

Tian Y, Cheng R, Zhang X, Cheng F, Jin Y (2018) An indicator-based multiobjective evolutionary algorithm with reference point adaptation for better versatility. IEEE Trans Evol Comput 22(4):609–622

Rong M, Gong D, Zhang Y, Jin Y, Pedrycz W (2019) Multidirectional prediction approach for dynamic multiobjective optimization problems. IEEE Trans Cybern 49(9):3362–3374

Zhang X, Zheng X, Cheng R, Qiu J, Jin Y (2018) A competitive mechanism based multi-objective particle swarm optimizer with fast convergence. Inf Sci 427:63–76

Liu Y, Gong D, Sun J, Jin Y (2017) A many-objective evolutionary algorithm using a one-by-one selection strategy. IEEE Trans Cybern 47(9):2689–2702

Liu Y, Gong D, Sun X, Zhang Y (2017) Many-objective evolutionary optimization based on reference points. Appl Soft Comput 50:344–355

Gong DW, Sun J, Miao Z (2018) A set-based genetic algorithm for interval many-objective optimization problems. IEEE Trans Evol Comput 22(1):47–60

Yue C, Qu B, Liang J (2018) A multiobjective particle swarm optimizer using ring topology for solving multimodal multiobjective problems. IEEE Trans Evol Comput 22(5):805–817

Gu F, Cheung YM (2018) Self-organizing map-based weight design for decomposition-based many-objective evolutionary algorithm. IEEE Trans Evol Comput 22(2):211–225

Adel G, Abdelouahab M, Djaafar Z (2020) A guided population archive whale optimization algorithm for solving multiobjective optimization problems. Expert Syst Appl 141:112972

Wang W, Yang S, Lin Q, Zhang Q, Wong KC, Coello CAC, Chen J (2019) An effective ensemble framework for multi-objective optimization. IEEE Trans Evol Comput 23(4):645–659

Nematollahi AF, Rahiminejad A, Vahidi B (2019) A novel multi-objective optimization algorithm based on Lightning Attachment Procedure Optimization algorithm. Appl Soft Comput 75:404–427

Tharwat A, Houssein EH, Ahmed MM, Hassanien AE, Gabel T (2018) MOGOA algorithm for constrained and unconstrained multi-objective optimization problems. Appl Intell 48(8):2268–2283

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1(1):67–82

Askarzadeh A (2016) A novel metaheuristic method for solving constrained engineering optimization problems: crow search algorithm. Comput Struct 169:1–12

Veldhuizen DAV, Lamont GB (1998) Multiobjective evolutionary algorithm research: a history and analysis, Technical Report TR-98-03, Department of Electrical and Computer Engineering, Graduate School of Engineering, Air Force Institute of Technology, Wright-Patterson AFB, OH

Coello CAC, Pulido GT (2005) Multiobjective structural optimization using a micro genetic algorithm. Struct Multidiscip Optim 30(5):388–390

Schott JR (1995) Fault tolerant design using single and multicriteria genetic algorithm optimization (Master’s thesis), Department of Aeronautics and Astronautics, Massachusetts Institute of Technology, Cambridge, MA

Coello CAC, Pulido GT, Lechuga MS (2004) Handling multiple objectives with particle swarm optimization. IEEE Trans Evol Comput 8:256–279

Tizhoosh HR (2006) Opposition-based reinforcement learning. J Adv Comput Intell Intell Inform 10(3):578–585

Hinojosa S, Oliva D, Cuevas E, Pajares G, Avalos O, Galvez J (2018) Improving multi-criterion optimization with chaos: a novel Multi-Objective Chaotic Crow Search Algorithm. Neural Comput Appl 29(8):319–335

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Additional information

Rizk M. Rizk-Allah and Aboul Ella Hassanien: Scientific Research Group in Egypt.

Cite this article

Rizk-Allah, R.M., Hassanien, A.E. & Slowik, A. Multi-objective orthogonal opposition-based crow search algorithm for large-scale multi-objective optimization. Neural Comput & Applic 32, 13715–13746 (2020). https://doi.org/10.1007/s00521-020-04779-w
