Abstract

Decomposition strategy which employs predefined subproblem framework and reference vectors has significant contribution in multi-objective optimization, and it can enhance local convergence as well as global diversity. However, the fixed exploring directions sacrifice flexibility and adaptability; therefore, extra reference adaptations should be considered under different shapes of the Pareto front. In this paper, a population-based heuristic orientation generating approach is presented to build a dynamic decomposition. The novel approach replaces the exhaustive reference distribution with reduced and partial orientations clustered within potential areas and provides flexible and scalable instructions for better exploration. Numerical experiment results demonstrate that the proposed method is compatible with both regular Pareto fronts and irregular cases and maintains outperformance or competitive performance compared to some state-of-the-art multi-objective approaches and adaptive-based algorithms. Moreover, the novel strategy presents more independence on subproblem aggregations and provides an autonomous evolving branch in decomposition-based researches.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Decomposition-based approaches have achieved great success in the field of optimizing multi-objective problems (MOPs) and attracted a great deal of attention [3, 23, 33]. Scalarization is one of the decomposition techniques, in which multiple objectives are aggregated into a set of scalar single-objective subproblems based on predefined reference vectors [46]. The reference vectors represent preference searching orientations. Usually, a uniformly distributed reference vector set is employed for optimization with no special preferences [26, 40]. With algorithm convergence, each of the references is related to a best fitting individual corresponding to the representative direction. The more reference vectors initialized, the more specific division of the objective space can be obtained, and the more feasible solutions can be found. In other words, the uniform reference vectors guarantee a well-spaced and distributed solution set. However, does the uniformity equal satisfied performance? Since the exploration orientation is strictly constrained by the uniform reference vectors, the algorithm lacks flexibility and most likely misses undefined areas; while at the same time, inefficient references occupying unnecessary computational cost make no contribution to the searching process. As a consequence, uniform decomposition is not a definition well suited to all situations [18], and a stochastic local learning behavior is encouraged for better exploration [10]. Moreover, the aggregation approach which can determine the balance between convergence and diversity control has great influence on the performance of scalarization [22, 37, 41]. Therefore, the aggregation should be designed according to the problem property and parameter definition [39]. The following information is arranged considering the above investigations.

First, the number and distribution of the reference vectors have a critical impact on the searching direction and performance of the algorithms. Except for the preference requirement, in most cases, vector sets are generated uniformly according to Das and Dennis’s [6] systematic approach. However, the uniform reference vectors waste resources on worthless directions on the irregular Pareto front and result in few feasible solutions. To tackle this issue, the regenerate procedures for invalid vectors are activated in some researches. For example, an “addition and deletion” strategy was introduced to adapt the distribution of reference vectors [17]; an elite population-based adaptive weight vector adjustment strategy was proposed and carried out periodically during the iteration [27]. Wang and Xiong [36] introduced three specifications of reference points with different \(\epsilon _{i}\) values, which determine the starting points of the reference vectors; and they proved that the dynamic reference point specification has a better balance performance. A global replacement strategy was designed to adapt the reference points between the ideal point and the nadir point. On the other hand, a k-means cluster technique was introduced to partition the solutions for local reproduction on the irregular Pareto [43]. However, the adaptive period has great influence on the searching procedure. High adjusted frequency leads to premature and reduced diversity, while low frequency results in insufficient adjusted pressure. Furthermore, considering the close relationship between the reference distribution and searching direction, the adaptive procedure needs to be accurate and reasonable for stable searching performance. Another consideration is that while changing reference distribution for pursuing irregular performance, the adaptation strategy should have the ability to maintain diversity on the regular Pareto. Tian and Cheng [31] proposed an adaptation approach, which adjusted the location of the reference points according to the orthogonal projection of elite solutions and has achieved satisfying results on both regular and irregular instances. In addition, a stable matching model which has been studied in many researches is essential. For instance, Li and Kwong have discussed the mutual-preferences and interrelationship between subproblems and solutions [21].

Second, the setting of the aggregation method is another key role in the decomposition-based algorithms which determines the collaborative approach among sub-agents. Zhang and Li [44] integrated the Tchebycheff (TCH) approach and penalty-based boundary intersection (PBI) method, respectively, with the scalar subproblem converting. The experimental result illustrated that PBI has obtained better uniformity than the TCH approach, especially when the number of vectors is not large. Ishibuchi and Sakane investigated the advantages and disadvantages of the weighted TCH and the weighted sum fitness evaluations and proposed an adaptive simultaneous scalarization approach to alleviate the difficulty in selecting the appropriate methods [16]. An inverted PBI function was proposed [30] to improve the searching performance in MaOPs. An angle-penalized distance (APD) [5] is another scalarization approach introduced to replace the Euclidean distance in PBI, which has achieved better performance in higher objective spaces. Our previous study proposed a velocity-penalized interaction (VPI) using an adaptive penalty parameter based on PSO’s moving velocities and provided a dynamic balance between convergence and diversity [25]. To sum up, the difficulty and emphasis of the aggregation lie in the balance evaluation between convergence and diversity in every single subproblem.

Third, the convergence, diversity and uniformity are three main performances in assessing MOP solutions, where diversity is the most difficult to characterize mathematically [12, 19], and easy to deteriorate in high-objective spaces [1, 13]. Technically speaking, diversity represents a dissimilarity which can provide multiple information sources. It can be seen as a synthesis of spreading, uniformity and randomness. Researches have demonstrated that a good uniformity or spreading is not equal to a good diversity [34, 42]. Assume there are two solution sets provided by two well-performed scalar MOP processes using the same reference vectors, the difference between the two sets is unnoticeable. This kind of similarity provides advantages in stable performance, while also exposing the algorithms to local convergence and similar approximate optima. Accordingly, the decision makers need to redefine the reference vectors for diverse solutions, which goes against the expectation of diversity. In conclusion, although more subproblems provide more potential directions for diversity enhancement, they still sacrifice randomness and flexibility in the evolution procedure.

Motivated by ideas from decomposition and reference-guided evolutions, and aiming for more flexible exploration ability, a heuristic orientation adjustment method is proposed in this paper. This novel strategy replaces the exhaustive references with incomplete indicated directions expressed from an evolving population and introduces a subproblem reduction approach named R-MOEA/D. Compared to the existing decomposition-based approaches, the main contributions and advantages of this work can be summarized as follows:

- (1):

Given a fixed population size, reduce the number of subproblems through generating fewer reference vectors, and employ a many-one matching mechanism instead of one-one matching mechanism in a solution-to-subproblem matching procedure. Under this alteration, the individual experiences less pressure from the reference vector’s constraint; meanwhile, the randomness and diversity of the exploration can be improved.

- (2):

A shifting strategy is proposed to adjust the reference vectors periodically following the population’s evolution. The vectors are initialized uniform at the beginning of the algorithm. While the searching progresses, individuals tend to gather around more activated areas which have a higher probability of being parts of the true Pareto front. Under this circumstance, the shifting strategy leads the references to move toward the new centers of the population. Different from the existing dynamic vector techniques, our shifting strategy has certain advantages for the following three reasons. First, the shifting strength is evaluated by the changing situation of the population distribution, which ensures a better stability and fewer mistakes in the wrong direction. Second, the shifting strategy avoids the rapid descent in spreading sustained by reference vectors. Third, together with the many-one matching mechanism, the novel dynamic approach achieves more robustness during the shifting procedure and reserves margins for searching adjustment.

- (3):

R-MOEA/D is proved to have less sensitivity on aggregation methods, because the convergence and diversity controls are technically apart during the evolution. The aggregate method for scalarization is mainly responsible for the convergence part, while the diversity is controlled by additional crowding during the elite selection in each subproblem procedure. This advantage guarantees a universal adaptability toward different optimization problems.

The rest of this paper is organized as follows: The preliminaries of multi-objective definition and decomposition-based techniques are introduced in Sect. 2. The proposed algorithm is detailed in Sect. 3. Then, the experiments and comparison results on several benchmark instances are presented in Sect. 4. Thereafter, the additional test and analysis including parametric, reference efficiency and sensitivity are arranged in Sect. 5. Finally, Sects. 6 and 7 present the discussions and conclusions, respectively.

2 Preliminaries

In this section, we first present some basic definitions of multi-objective optimization. Then, a brief survey of the decomposition-based MOP algorithm is given.

2.1 Basic definitions

A MOP is defined as:

where \(\Omega =\prod _{i=1}^{n}[a_{i},b_{i}]\subseteq {{\mathbb {R}}}^{n}\) is the decision space, \({{\mathbf {x}}}=(x_{1},x_{2},\ldots ,x_{n})^{{\mathrm{T}}}\in \Omega\) is the candidate solution, and \(F\in {{\mathbb {R}}}^{M}\) is the objective space, which constitutes M objective functions. A vector \({{\mathbf {u}}} = (u_{1},u_{2},\ldots ,u_{n})^{{\mathrm{T}}}\) is said to dominate \({{\mathbf {r}}}=(v_{1},v_{2},\ldots ,v_{n})^{{\mathrm{T}}}\) (denoted as \({{\mathbf {u}}}\prec {{\mathbf {r}}}\)) if and only if element in \({{\mathbf {u}}}\) is partially less than \({{\mathbf {r}}}\), i.e, \(\forall i\in \{1,2,\ldots ,k\},u_{i}\le v_{i}\) and \(\exists i\in \{1,2,\ldots ,k\}, u_{i}<v_{i}\). \({{\mathbf {u}}}\) and \({{\mathbf {r}}}\) are incomparable when they are non-dominated with each other, denoted as \(u\Vert v\). Pareto set (PS) is defined as \(PS=\{{{\mathbf {x}}}\in \Omega |\lnot \exists {{\mathbf {x'}}}\in \Omega , {{\mathbf {f}}} ({{\mathbf {x'}}}) \preceq {{\mathbf {f}}} ({{\mathbf {x}}})\}\), and the corresponding set in objective space is called the Pareto front (PF). MOP methods try to find a manageable number of solutions that are uniformly distributed and close to the PF, which are termed as Pareto optimal solutions. MOPs with at least four objectives are informally known as many-objective optimization problems (MaOPs).

2.2 Decomposition-based approaches through scalarization

The principle of scalar decomposition-based MOP is to transform the multiple objectives to a number of scalar single-objective subproblems through aggregations. These subproblems are defined by a set of reference vectors. The decomposition expectation is to fulfill a one-one matching relationship between population and subproblems so that the solutions can be exploited according to the reference directions.

The definitions of reference vectors are essential to determine the MOP performance. To guarantee a maximum diversity over the objective space, most researches use uniformly distributed points from a normalized hyperplane as the reference points and generate reference vectors, respectively [2, 7]. If p divisions are considered along each objective, the total number of reference vectors is \(H=\left( {\begin{array}{c}M+p-1\\ p\end{array}}\right)\). Two examples of \(M=3, \, p=13\) and \(p=4\) are displayed in Fig. 1.

On the other hand, the uniformly distributed vectors expose disadvantages to the one-one matching mechanism, especially in solving irregular PF caused by the objective relation and constraints. Under this circumstance, existing modifications can be divided into two orientations: first, introducing dynamic strategy in reference vectors’ definitions, and adjusting the vectors in high-activated and low-activated directions and second, authorizing a many-one matching mechanism in high-activated vectors to associate more solutions, and a zero-one mechanism in low-activated subproblems [4]. Both aspects involve an appropriate adjusting strength: Over adjustment may lead to the incorrect judgment of the PF shape and result in low diversity and premature convergence.

Another important part of decomposition is the selection of the scalarization approach, such as TCH and PBI [24, 29, 35], which influence the balance of convergence and diversity. In MOEA/D and its variants, the scalarization values are calculated on individuals associated with each subproblem and the corresponding neighbor subproblems. Then, subproblems are updated with the new fittest individuals. Some reference-assisted approaches adopt distance measure as the solution-to-subproblem matching method. For example, NSGA-III [7] calculates the vertical distance between the individual and vector, and RVEA [5] uses the cosine distances to associate individuals with the closest vector. After these kinds of associate approaches, subproblems with more than one individual will go through extra selection procedures to complete one-one matching.

Some researches combine the dominance-based approach with the decomposition. NSGA-III applied a non-dominated sorting procedure to prioritize good individuals in offspring selection and adopted references for diversity control. MOEA/DD [20] introduced a unified paradigm considering both the dominance relations and subregion exploration.

3 The proposed algorithm

3.1 Framework of R-MOEA/D

The main framework of R-MOEA/D is illustrated in Algorithm 1. First, the initialization procedure generates the initial reference vectors and population. Within the main loop, the offspring generating procedure consists of crossover and mutation. The crossover operator can be set as simulated binary crossover (SBX) or differential evolution (DE). The parent and offspring population are then combined and prepared for an elite selection for the next generation. The main contributions of R-MOEA/D lie in the elite selection procedure and reference vector adaptation. In the following paragraphs, the implementation details of selection and adaptation will be explained.

3.2 Many-one matching and selection mechanism

Most decomposition-based methods associate population with reference vectors following the one-one matching approach, which is illustrated in Fig. 2a. First, individuals are assigned to different subproblems. Taking the \(i\hbox {th}\) subproblem as an example, \({{\mathbf {\lambda }}}_{i}\) is the corresponding reference vector, and the distance is the assumed matching principle. Secondly, in each subproblem, only one fittest individual is selected as the associated elite solution. Although both individual B and G are the fittest solutions to subproblem i which have advantageous aggregate values, only B is selected as subproblem ith’s associated solution while individual G is abandoned. Many improvements have been proposed on the one-one matching technique to pursue an unprejudiced platform for selection between subproblems and individuals [38].

These decompositions with one-one matching procedures can achieve good performance when the objective space is divided into a sufficient number of subregions. When the reference vectors are sparse (Fig. 2b), the spaces between neighboring vectors are ignored, such as the directions of individual F and H. The population’s plumpness is strictly fixed according to the definition of references. Moreover, because of the curse of dimension, the increasing number of references will hardly express a high-dimensional objective space completely, so the diversity and flexibility are constrained. Some existing researches have also introduced a many-one matching approach to reserve more elite candidates under popular sub-directions, which use distance to assist aggregate selection [4]. However, as the searching converses, aggregate information becomes the dominating factor toward elite selection. In other words, all solutions gather around best-aggregated areas, where crowding selection shows less efficient in diversity maintenance.

In this paper, a novel many-one matching elite selection approach is proposed. This selection technique integrates domination and crowding condition, which are considered as separate convergence and diversity controls. First, the individuals are assigned into sub-populations according to the minimum angle distance toward reference vectors. Then, the selection procedure contains four steps, which are operated in each subproblem.

- (1):

Screen out poor individuals In elite selection considering crowding and dominance rank, some of the sparsest solutions far behind the whole population can be frequently selected as reserved offsprings, which retrograde the convergence process. As a preparation of crowding selection, the individuals with relatively larger aggregation values are filtered to guarantee convergence strength. The convergence strength should be primary at the beginning and gradually release authority to diversity control while searching. So, the reserve size \(N_{{\mathrm{g}}}\) is defined as an adapting form,

$$\begin{aligned} \begin{aligned} N_{{\mathrm{g}}} = \left\lfloor 2 * (1+{{\mathrm{theta}}}) *N_{{\mathrm{s}}} \right\rfloor \end{aligned} \end{aligned}$$(2)where \(N_{{\mathrm{s}}}\) is maximum subpopulation size, theta is the balance factor calculated while evolving,

$$\begin{aligned} \begin{aligned} theta = \min \left[ alpha * ({{\mathrm{Gene / Generation}}})^M, 1 \right] \end{aligned} \end{aligned}$$(3)alpha is set to be 0.9 in the experiments.

- (2):

Corner elite selection The corner elite in subproblem is defined as the solution which has the best aggregation value, which supports the subproblem searching direction. Only one corner elite is selected and reserved for the next generation.

- (3):

Non-dominated selection While decomposition is incomplete, domination is an essential complement for selection. Similar to NSGA-III, individuals inside the last front level l are directly included in population for the next generation. The individuals on the last level will go through the selection process via the following method. Different from NSGA-III which operates in whole objective space, R-MOEA/D operates a non-dominated selection in the subpopulation which is determined by the central orientation.

- (4):

Adjusting selection As indicated before, R-MOEA/D integrates domination and crowding condition as separate convergence and diversity controls. The above procedures considering aggregation and domination are regarded as convergence control parts, while diversity control is detailed as follows: We adopt a popular diversity evaluation, crowding distance r2, as a part of selecting criterion. Without convergence constraint, diversity will lose control and result in poor convergence distribution; thus, a convergence evaluation r1 is also added in diversity control. The weighted combination of the two evaluations is regarded as the selection criterion, and the proportion of the two parts is determined by the balance factor theta. It should be noted that although diversity control takes convergence performance into consideration, the two procedures are independent in control space since the operated individuals are different under these two procedures.

3.3 Reference vector adjustment

In most reference vector adapting methods, the adapting operation only works on vectors which have weak exploration ability, or generates new references in activated directions. In this paper, we present a novel reference vector adaptive approach, denoted as heuristic center-guided distribution.

In population-based optimization methods, individuals tend to gather around and distribute in local optimums and potential areas. Under a multi-objective environment, the potential areas imply the distribution of the Pareto front, which can lead to the searching direction. Different from existing addition–deletion and adaptation approaches, we regenerate all reference vectors according to current elite distribution. Since the relation between individuals and reference vector is many-one matching, the regeneration procedure can inherit the exploiting directions and expand exploration in newly defined searching areas.

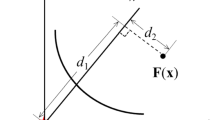

Generate new reference vectors. a Extending the target reference vectors: the sectors represent the searching areas in two reference sets, which can be indicated that the extended set has a larger scope for searching. b Matching and tracking target vectors: numbers on the individuals represent the belonging vectors; the dotted circles represent cluster result; the width of the arrow represents the tracking strength

Algorithm 3 presents the operation of the proposed reference vector regeneration method, which consists of the following four procedures. (1) Grouping the population into K groups through k-means clustering method based on cosine distances. The center location of each group is defined as a target reference point. The target reference vectors are the normalized vectors from coordinate origin to the target reference points. (2) Because the center of the subpopulation may reduce the preference scope, the target reference set \(\Lambda _{{\mathrm{target}}}\) is extended by 0.1% to maintain the preference region as illustrated in Fig. 3a. (3) Matching the target vectors with current vectors according to closest distance principle, and current vectors with no associated subpopulation have priority in choosing available target vectors. (4) The evolution of population has uncertainty in moving and converging. In order to avoid overfitting, the new reference \(\lambda _i'\) is updated adaptively by tracking the target references as follows: Accordingly, the new reference set \(\Lambda ' = \{\lambda _1', \lambda _2', \ldots , \lambda _H'\}\) is generated. The tracking procedure is illustrated in Fig. 3b.

The proportion \(c_{i}\) between target and current references depends on the number of subpopulation \(n_{i}\) and the distribution uniformity std(n). This design can be interpreted that the reference set tends toward stabilization when the uniformity among subproblems become better, and the subproblems with less components deserve a larger move for exploration.

3.4 Computational complexity of R-MOEA/D

The computational complexity of R-MOEA/D is mainly generated in the elite selection procedure and reference regeneration procedure. The complexity of assigning individuals to subproblems in line 3 of Algorithm 2 is O(MN). The calculation of aggregation function in line 8 requires a total of O(MN) computations. The non-dominated sort requires \(O(MN^{2})\) operations. The worst case in adjust selection in line 22 requires O(MN) computations. In Algorithm 3, the k-means cluster costs O(MNH) calculations, where H is the number of clusters, \(H<N\). The reference matching in line 10 has \(O(MH^{2})\) complexity. Taking all the above considerations and computations into account, the worst-case complexity of one generation of R-MOEA/D is \(O(MN^{2})\), which is efficient and comparable with most recently proposed MOP algorithms.

4 Comparative studies

In this section, comparative studies are carried out among the proposed algorithm and six popular MOP methods. The test instances include regular and irregular Pareto fronts, which are selected from three widely used test suites, DTLZ [9], WFG [15] and UF [45]. The experiments and numerical studies are conducted in two groups: three-dimension objective space and five-dimension objective space, respectively.

4.1 Experiment settings

Among the DTLZ benchmark instances, the optimal solutions lie on the linear hyperplane and concave surfaces in DTLZ1-4, which all belong to regular instances. DTLZ5-6 present degenerates fronts, while DTLZ7 has disconnected Pareto consisting of (\(2^m-1\)) segments; DTLZ35, a more convergence challenging irregular problem combined by DTLZ3 and DTLZ5, shares the same Pareto shape with DTLZ5. These four instances above are classified as irregular instances. WFGs involve nonseparable variables. For WFG1, a mixed Pareto front is considered; WFG2 has a disconnected convex Pareto front and WFG3 has a degenerate linear optimal front. All of the above three WFGs are classified as irregular problems. WFG4 has a concave Pareto which belongs to regular problems. UF8 and UF9 are three-objective benchmark functions where UF8 is a regular instance and UF9 is an irregular instance. All test instances are optimized on three- and five-objective problems, respectively, except UF8 and UF9, which can only be operated in three-dimension.

The statistical results are organized according to the objective dimensions. For three-objective experiments, MOEA/D [44], NSGA-II [8], A-NSGA-III [17] MaOPSO [11] and MaOEA-R&D [14] are selected as the comparison algorithms, where A-NSGA-III implements non-dominated sorting and introduces an adaptive reference points updating process. For five-objective, AR-MOEA [31], NSGA-III [7], A-NSGA-III and MaOPSO are chosen as comparisons. AR-MOEA is an efficient indicator-based method for many-objective problems considering the irregular situations, and the indicator calculated by references can be seen as a special aggregation function in decomposition; NSGA-III is a popular algorithm based on both decomposition and Pareto dominance; MaOPSO is a PSO-engine decomposition-based algorithm, which shows better performance in high-dimensional objective space than the traditional MOPSO [28]; MaOEA-R&D is designed for many-objective using a novel space reduction approach. MaOPSO and the proposed R-MOEA/D are programmed in Matlab R2016a, and codes of all the other comparison algorithms are found in PlatEMO [32].

4.2 Parameter settings

4.2.1 Settings of crossover and mutation

For DTLZs and WFGs test instances, simulated binary crossover (SBX) is selected as the crossover operators, while differential evolution (DE) is defined for UF problem. All algorithms use the same distribution index and crossover probability, where \(\eta _{{\mathrm{c}}}=20\), \(p_{{\mathrm{c}}}=1\). Polynomial mutation is used as the mutation operator in all experiments, where \(\eta _{{\mathrm{m}}}=20\), \(p_{{\mathrm{m}}}=1/n\).

4.2.2 Setting of experiment

The maximal number of evaluations is set to be 50,000 for each run as the termination condition. Each result is calculated by 15 independent runs, and statistical experimental results, hypervolume (HV) and inverse general distance (IGD), are outputted by PlatEMO at a significance level of 0.05. The two indicators reflect different comparison results. IGD value is the sum distance among predefined points on true Pareto front and the found solutions, so it mainly reflects the convergence performance of the selected areas; HV value, which is the volume bounded by the approximate Pareto surface and a reference point, focuses more on the diversity score.

4.2.3 Setting of specific parameters

For MOEA/D, the neighborhood size T is 20. Both MOEA/D and R-MOEA/D adopt penalty boundary interaction (PBI) as the aggregation method, and the penalty parameter \(\theta\) is set to be 5. Uniformly distributed reference vector sets for MOEA/D, NSGA-III, A-NSGA-III, AR-MOEA and R-MOEA/D are defined according to Das and Dennis’s approach. The number of references are listed in Table 1, where R-MOEA/D adopts the reduced references while the other comparison decomposition algorithms adopt the full references. In order for a fair competition, subproblem population size in R-MOEA/D is designed to make the whole population size approximately equalize the size under a full reference situation.

One parameter, alpha, in R-MOEA/D must be predefined. \(alpha = 0.9\) for all three and five objectives experiments. An additional parameter sensitivity test for alpha is in the following section.

4.3 Performance on three-objective problems

Tables 2 and 3 present the HV and IGD values obtained by the algorithms, respectively. The best result of each test instance is highlighted in a dark gray background; the second best result is shown in a gray background. In regular DTLZ optimizations, R-MOEA/D obtains best HV performance on DTLZ1-3 and is competitive on DTLZ4 compared to MaOEA-&D, which indicates a good diversity led by the heuristic orientations in full objective spaces; MaOEA-R&D, MOEA/D and A-NSGA-III obtain the best convergence performances observed from IGD assessment on DTLZ1-4, respectively, while R-MOEA/D ranks second on DTLZ2 and DTLZ3. On WFG4, MOEA/D obtains best HV and IGD performances, while R-MOEA/D presents competitive convergence performance. On UF8, MaOEA-R&D and R-MOEA/D run the best HV and IGD values, respectively. Overall in regular three-objective environments, R-MOEA/D, MOEA/D and MaOEA-R&D perform best considering both diversity and convergence. The good performances of MOEA/D are promised by the predefined references which uniformly partition the searching spaces. Moreover, it can be seen that R-MOEA/D has achieved satisfied diversity results and competitive convergence performances, which proves the subproblem reduction approach not only has no negative influence to the searching process that covers the whole objective spaces, but presents a better diversity potential in diversity maintain.

Among irregular DTLZs, R-MOEA/D has achieved simultaneous best HV and IGD scores on DTLZ6 and DTLZ35, and second-best performances on DTLZ5 and DTLZ7. On WFGs, R-MOEA/D and A-NSGA-III have comparable best IGD performances on WFG1-2, while NSGA-II and A-NSGA-III have advantages toward HV metric. R-MOEA/D has obtained significant best performances on UR9 in both convergence and diversity. NSGA-II and A-NSGA-III also show good performance on irregular instances. The reason is that adaptive reference vectors are adopted in A-NSGA-III; and the elite selection in NSGA-II is only based on domination. Oppositely, MOEA/D and MaOEA-R&D present relatively poor performance on irregular DTLZs compared to that on the regular Pareto. As a result, the uniform references will deteriorate the exploration. Toward irregular three-dimension spaces, R-MOEA/D shows relatively better scores in most IGD measurements and presents top-ranked HV performances in some instances. MOEA/D displays much worse rankings under irregular environments. Therefore, it can be proved that the directions expressed by evolving population present good leading performance than the predefined subproblems in irregular Pareto environments. Meanwhile, A-NSGA-III has obtained better HV rankings on irregular fronts than regular instances, which illustrates the effectiveness of using adaptive reference assigning strategy. Although A-NSGA-III has obtained competitive diversity performance, R-MOEA/D presents even better in overall IGD score. Therefore, the subproblem update strategy in R-MOEA/D outperforms the “addition and deletion” strategy in A-NSGA-III on convergence control.

It can be concluded that the uniform references have disadvantages on irregular instances, while the adaptive reference vectors and decomposition orientations can contribute to determining appropriate learning orientations. R-MOEA/D shows significant improvement than MOEA/D and has stable performances among regular and irregular environments than other comparison algorithms, which indicates the ability to persist a reasonable searching scope through heuristic reference regeneration, and a satisfied diversity maintenance ability through reference reduction.

4.4 Performance on five-objective problems

Tables 4 and 5 present the HV and IGD performance on five-objective experiments. On regular DTLZs, R-MOEA/D has ranked first or second place with respect to HV performance, which is comparable on DTLZ1 with AR-MOEA and NSGA-III, and has slight improvements than AR-MOEA/D on DTLZ3 and DTLZ4. AR-MOEA has the best performance on WFG4, and NSGA-III ranks second. It can be observed from the overall performances on regular instances under five-objective R-MOEA/D shows slight better or competitive performances than NSGA-III and AR-MOEA, which are well-perform algorithms among currently researches, in most cases. Therefore, the proposed method has satisfied performance in regular MaOP environment. In contrary, compared to NSGA-III, A-NSGA-III shows a significant disadvantage on regular MaOPs, which may be caused by the premature reference adjusting procedure which results in the decrease in the diversity. The results in the regular environment demonstrate the better spreading performance of the shifting strategy in the proposed method.

In irregular MaOP experiments, R-MOEA/D has achieved the first places in both HV and IGD statistic scores on DTLZ6 and DTLZ35, and achieved second places on DTLZ5 and DTLZ7, respectively. MaOPSO and AR-MOEA obtain the best results on DTLZ5 and DTLZ7, respectively. The stable performance among DTLZ instances illustrates the superiority in population-guided decomposition strategy in R-MOEA/D. Meanwhile, the overall satisfied diversity performances reflect the robustness of the many-one matching mechanism relative to one-one matching in AR-MOEA and NSGA-III. AR-MOEA, A-NSGA-III and MaOEA-R&D present best performances on WFG1-3. Compared with three-dimensional environments, the ability of R-MOEA/D remains competitive in irregular DTLZs, but deteriorates in irregular WFGs. The problem will be studied and improved in the future study. Moreover, the performances of MaOEA-R&D and A-NSGA-III decline in five-objective, which indicates the difficulty in exploring in high dimension.

As a conclusion, according to the statistical comparison results, R-MOEA/D has significantly better performances than A-NSGA-III and MaOPSO on regular five-dimensional instances, insignificantly better ability than AR-MOEA, and competitive results with NSGA-III. On irregular MaOPs, the proposed method is comparable with AR-MOEA/D and shows, respectively, better HV and IGD performances than NSGA-III and A-NSGA-III. Furthermore, it can be illustrated from A-NSGA-III’s performances the high-dimensional objective space raises the challenge of reference-adaptive strategies on satisfying both regular and irregular environments. R-MOEA/D and AR-MOEA both present leading stability toward different Pareto shapes. The shifting strategy and many-one matching technique in R-MOEA/D guarantee a stable and flexible orientation adjustment during the searching process. The performance of R-MOEA/D also demonstrates a simple but efficient approach for multi-objective study.

5 Parametric, efficiency and sensitivity analysis in R-MOEA/D

5.1 Parametric analysis

In R-MOEA/D, one parameter, alpha, must be predefined. This parameter controls the diversity and convergence proportions during the evolution. To analyze the parameter’s influence on different problems, empirical experiments are conducted on three-objective benchmarks with alpha equal to 0.7, 0.9, 1.1, 1.5 and 2, respectively. The average values of HV and IGD scores from 15 independent runs are displayed in bar Fig. 4. For better visualization for the differences, the HVs, which are transformed to [0.1, 0.9], and the inverse values of IGDs are displayed in the bar graph.

According to the printed results, R-MOEA/D shows the best HV performances when \(alpha = 0.7\) in all regular Pareto instances, and best IGD performances obtained at 0.9 in most instances. On irregular instances, the best performed alpha is 0.9 and 1.1 for HV measure and ranges from 0.9 to 1.5 for IGD measure. As a result, 0.9 is compatible with both regular and irregular instances. Furthermore, it can be observed from the result distribution the irregular environments require higher alpha value than regular cases, which means the population heading to the irregular Pareto destination needs more flexibility in exploration procedure. Therefore, it proves that the orientation adjusts strategy is necessary for solving irregular optimization problems, and the definition of aggregation function shows less importance during the elite selection.

5.2 Reference efficiency analysis

In order to testify the relationship between reference efficiency and optimization performance, a comparison is arranged considering three assessments during the evolution. Among these assessments, a reference efficiency (RE) metric is defined as the proportion of the contributing orientations, each of which is presented as the closest reference to one individual solution. It can be mathematically defined as

where

The other two assessments are HV and IGD metrics. The optimal solutions are evaluated in every generation on a regular DTLZ3 instance and irregular DTLZ5 instance, and the records of 400-generation runs are displayed in Fig. 5. It can be observed that R-MOEA/D has obvious higher reference efficiency on both regular and irregular cases, while MOEA/D only activates half references during the evolution toward irregular Pareto surface. At the same time, R-MOEA/D has achieved better HV and IGD performances than MOEA/D on both regular and irregular problems. Therefore, it can be concluded that the MOP performance is related to the reference efficiency, and inefficient reference arrangement may result in inefficient exploring scope and deterioration of optimization performance. Meanwhile, the heuristic orientation adjustment proposed in this paper is proved to have better explore ability which can enhance the population diversity and discover a more comprehensive Pareto front.

5.3 Sensitivity analysis among aggregation approaches

In order to study the influence of MOP performance toward different aggregation approaches, the regular instance, DTLZ3, and an irregular instance, IDTLZ1, are optimized using MOEA/D and R-MOEA/D methods, respectively. The comparison aggregations are with Tchebycheff approach and penalty-based boundary intersection approach. The appropriate Pareto solutions obtained in 50000 evaluations are displayed in Fig. 6.

Because of the uniform reference vectors, MOEA/D has achieved pretty good performance on regular DTLZ3, where PBI approach shows better distribution than TCH. The difference between the two aggregations using R-MOEA/D is small, and both present good randomness as well as diversity. On irregular IDTLZ1, although MOEA/D shows good convergence and spacing, the solutions distribute in biased directions close to the borderline, while R-MOEA/D presents a better uniformity. The PBI shows slightly better performance than TCH with R-MOEA/D. These observations illustrate that compared to MOEA/D, R-MOEA/D presents less sensitivity toward different aggregation functions, especially in the regular Pareto fronts.

5.4 Sensitivity analysis on different numbers of decomposition

Considering that the number of reference vectors has impacts on the performance of traditional decomposition-based algorithms, the similar sensitivity issue is tested in this section. The division number p controls the number of uniformly distributed references. Experimental results have been collected when p equals to 3, 4, 5, 6, 7 and 9, while the corresponding number of references is 10, 15, 21, 28, 36 and 55, respectively. The population sizes are designed to approximate 105, so the subpopulation sizes are defined as 11, 7, 5, 4, 3 and 2 accordingly. Regular benchmark DTLZ3 and irregular DTLZ5 are selected as the operated problems.

The HV and IGD comparisons which include the average values among ten independent runs are illustrated in Fig. 7. In bar figures, the assessment values are transformed into region [0.1, 0.9] for intuitive comparison. It can be observed that both HV and IGD have approximate unimodal distribution with the increasing number of division. The assessment values reach best when p equal to 4, 5 and 6. For the regular DTLZ3 instance, the performances rarely change when \(p\ge 4\), while for the irregular DTLZ5, too much division deteriorate convergence and diversity. It is worth mentioning that the traditional decomposition is a situation when p equals to 13, where each reference corresponds to one individual. According to the performance trend, the obvious advantage of reduced decomposition can be proved. The qualities of the normalized performance statistics toward different p are displayed by box figures. It seems that irregular problems present higher variance and higher sensitivity to the decomposition number.

As a conclusion, the reduced decomposition approach provides chances for better exploration especially on irregular instances and maintains competitive results under regular environments.

6 Discussion

The comparative studies present a comprehensive performance on both benchmarks solving ability and some property analyses of the proposed algorithm. First, during the benchmark experiments, three- and five-objective environments are provided including problems with regular and irregular Pareto fronts. It can be observed from the statistical results the heuristic-based adaptive references have achieved significant improvement relative to the uniform references on irregular Pareto problems. Meanwhile, compared to other dynamic reference adjusting strategies, the proposed R-MOEA/D shows improved or comparable performance in high-dimensional objective spaces. Furthermore, the randomness of the elite distribution and the corresponding diversity improvement are worth to be studied in the further study. Second, during property analyses, the parametric sensitivity is carried out to determine the most compatible alpha value, and the reference efficiency during evolving is studied and proved its relevance with the diversity performance. The R-MOEA/D has achieved a better reference efficiency which guarantees a better MOP performance. The proposed method also presents less sensitivity on different aggregate equations and different numbers of decomposition, which proves a convenience parameter definition and a prospective robustness to new optimization problems.

7 Conclusion

In this article, an orientation adjustment approach is proposed for better exploration in multi-objective optimization. This approach benefits from the population-based heuristic evolving tendency and invokes the decomposition mechanism via a reduced number of reference vectors. Compared to traditional scalarization approaches, individuals in this novel R-MOEA/D algorithm can explore with a sparse direction constraint and are authorized more freedom in order to adapt a manifold Pareto environment. Comparison studies demonstrate that the proposed R-MOEA/D outperforms the compared state-of-the-art algorithms in several instances including regular and irregular Pareto fronts under multi- and many-objective environments. The heuristic orientation adjustment approach with more flexibility and autonomous evolving is proved to have a satisfied diversity maintaining the ability and better intuition on defining the exploration scope. Moreover, a more efficient reference definition has been proved in R-MOEA/D compared to MOEA/D, and a relevance relationship between reference efficiency and optimize performance has also been established in the reference efficiency study. In addition, R-MOEA/D proves more independent on aggregation approaches in decomposition-based frameworks, which can simplify several tune procedures in decomposition studies. In the end, the trends of MOP performance toward a different number of decomposition are tested and the efficacy of reduced reference is proved. As a conclusion, the novel heuristic orientation adjustment technique indicates a new branch in decomposition-based approaches, which deserved to be studied for better exploration and exploitation in future works.

References

Adra SF, Fleming PJ (2011) Diversity management in evolutionary many-objective optimization. IEEE Trans Evol Comput 15(2):183–195

Asafuddoula M, Ray T, Sarker R (2015) A decomposition-based evolutionary algorithm for many objective optimization. IEEE Trans Evol Comput 19(3):445–460. https://doi.org/10.1109/tevc.2014.2339823

Cai X, Li Y, Fan Z, Zhang Q (2015) An external archive guided multiobjective evolutionary algorithm based on decomposition for combinatorial optimization. IEEE Trans Evol Comput 19(4):508–523. https://doi.org/10.1109/tevc.2014.2350995

Cai X, Yang Z, Fan Z, Zhang Q (2016) Decomposition-based-sorting and angle-based-selection for evolutionary multiobjective and many-objective optimization. IEEE Trans Cybern PP(99):1–14

Cheng R, Jin Y, Olhofer M, Sendhoff B (2016) A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans Evol Comput 20(5):773–791

Das I, Dennis JE (1998) Normal-boundary intersection: a new method for generating the pareto surface in nonlinear multicriteria optimization problems. SIAM J Optim 8(3):631–657. https://doi.org/10.1137/s1052623496307510

Deb K, Jain H (2014) An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans Evol Comput 18(4):577–601. https://doi.org/10.1109/tevc.2013.2281535

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: Nsga-ii. IEEE Trans Evol Comput 6(2):182–197. https://doi.org/10.1109/4235.996017 Pii S 1089-778x(02)04101-2

Deb K, Thiele L, Laumanns M, Zitzler E (2002) Scalable multi-objective optimization test problems. In: Cec’02: proceedings of the 2002 congress on evolutionary computation, vol 1–2, pp 825–830

Ding J, Liu J, Chowdhury KR, Zhang W, Hu Q, Lei J (2014) A particle swarm optimization using local stochastic search and enhancing diversity for continuous optimization. Neurocomputing 137:261–267

Figueiredo EMN, Ludermir TB, Bastos-Filho CJA (2016) Many objective particle swarm optimization. Inf Sci 374:115–134. https://doi.org/10.1016/j.ins.2016.09.026

Gee SB, Tan KC, Shim VA, Pal NR (2015) Online diversity assessment in evolutionary multiobjective optimization: a geometrical perspective. IEEE Trans Evol Comput 19(4):542–559. https://doi.org/10.1109/tevc.2014.2353672

Giagkiozis I, Purshouse RC, Fleming PJ (2014) Generalized decomposition and cross entropy methods for many-objective optimization. Inf Sci 282:363–387

He Z, Yen GG (2016) Many-objective evolutionary algorithm: objective space reduction and diversity improvement. IEEE Trans Evol Comput 20(1):145–160. https://doi.org/10.1109/tevc.2015.2433266

Huband S, Hingston P, Barone L, While L (2006) A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans Evol Comput 10(5):477–506

Ishibuchi H, Sakane Y, Tsukamoto N, Nojima Y (2010) Simultaneous use of different scalarizing functions in MOEA/D. In: Proceedings of the 12th annual conference on genetic and evolutionary computation, ACM, pp 519–526

Jain H, Deb K (2014) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: handling constraints and extending to an adaptive approach. IEEE Trans Evol Comput 18(4):602–622

Jiang Q, Wang L, Hei X, Yu G, Lin Y, Lu X (2016) Moea/d-ara+ sbx: a new multi-objective evolutionary algorithm based on decomposition with artificial raindrop algorithm and simulated binary crossover. Knowl Based Syst 107:197–218

Jost L (2006) Entropy and diversity. Oikos 113(2):363–375

Li K, Deb K, Zhang Q, Kwong S (2015) An evolutionary many-objective optimization algorithm based on dominance and decomposition. IEEE Trans Evol Comput 19(5):694–716. https://doi.org/10.1109/tevc.2014.2373386

Li K, Kwong S, Zhang Q, Deb K (2015) Interrelationship-based selection for decomposition multiobjective optimization. IEEE Trans Cybern 45(10):2076–2088

Liu B, Fernandez FV, Zhang Q, Pak M, Sipahi S, Gielen G (2010) An enhanced MOEA/D-DE and its application to multiobjective analog cell sizing. In: 2010 IEEE congress on evolutionary computation (CEC), IEEE, pp 1–7

Mei Y, Tang K, Yao X (2011) Decomposition-based memetic algorithm for multiobjective capacitated arc routing problem. IEEE Trans Evol Comput 15(2):151–165. https://doi.org/10.1109/tevc.2010.2051446

Ming M, Wang R, Zha Y, Zhang T (2017) Pareto adaptive penalty-based boundary intersection method for multi-objective optimization. Inf Sci 414:158–174. https://doi.org/10.1016/j.ins.2017.05.012

Pan A, Wang L, Guo W, Wu Q (2018) A diversity enhanced multiobjective particle swarm optimization. Inf Sci 436–437:441–465

Pilat M, Neruda R (2015) Incorporating user preferences in moead through the coevolution of weights. In: Proceedings of the 2015 annual conference on genetic and evolutionary computation, ACM, pp 727–734

Qi Y, Ma X, Liu F, Jiao L, Sun J, Wu J (2014) Moea/d with adaptive weight adjustment. Evol Comput 22(2):231–264

Reyes-Sierra M, Coello Coello CA (2006) Multi-objective particle swarm optimizers: a survey of the state-of-the-art. Int J Comput Intell Res 2(3):287–308

Rubio-Largo A, Zhang Q, Vega-Rodriguez MA (2014) A multiobjective evolutionary algorithm based on decomposition with normal boundary intersection for traffic grooming in optical networks. Inf Sci 289:91–116. https://doi.org/10.1016/j.ins.2014.08.004

Sato H (2014) Inverted PBI in MOEA/D and its impact on the search performance on multi and many-objective optimization. In: Proceedings of the 2014 annual conference on genetic and evolutionary computation, ACM, pp 645–652

Tian Y, Cheng R, Zhang X, Cheng F, Jin Y (2017) An indicator based multi-objective evolutionary algorithm with reference point adaptation for better versatility. IEEE Trans Evol Comput 22:609–622

Tian Y, Cheng R, Zhang X, Jin Y (2017) Platemo: a matlab platform for evolutionary multi-objective optimization. Neural Evol Comput 12(4):73–87

Trivedi A, Srinivasan D, Sanyal K, Ghosh A (2017) A survey of multiobjective evolutionary algorithms based on decomposition. IEEE Trans Evol Comput 21(3):440–462. https://doi.org/10.1109/tevc.2016.2608507

Wang H, Jin Y, Yao X (2016) Diversity assessment in many-objective optimization. IEEE Trans Cybern PP(99):1–13

Wang L, Zhang Q, Zhou A, Gong M, Jiao L (2016) Constrained subproblems in a decomposition-based multiobjective evolutionary algorithm. IEEE Trans Evol Comput 20(3):475–480. https://doi.org/10.1109/tevc.2015.2457616

Wang R, Xiong J, Ishibuchi H, Wu G, Zhang T (2017) On the effect of reference point in MOEA/D for multi-objective optimization. Appl Soft Comput 58(Supplement C):25–34. https://doi.org/10.1016/j.asoc.2017.04.002

Wang Z, Zhang Q, Li H (2015) Balancing convergence and diversity by using two different reproduction operators in MOEA/D: some preliminary work. In: 2015 IEEE international conference on systems, man, and cybernetics (SMC), IEEE, pp 2849–2854

Wu M, Li K, Kwong S, Zhou Y (2017) Adaptive two-level matching-based selection for decomposition multi-objective optimization. IEEE Trans Evol Comput PP(99):1–1. https://doi.org/10.1109/TEVC.2017.2656922

Yang S, Jiang S, Jiang Y (2017) Improving the multiobjective evolutionary algorithm based on decomposition with new penalty schemes. Soft Comput 21(16):4677–4691

Yu G, Zheng J, Shen R, Li M (2016) Decomposing the user-preference in multiobjective optimization. Soft Comput 20(10):4005–4021. https://doi.org/10.1007/s00500-015-1736-z

Yuan Y, Xu H, Wang B, Zhang B, Yao X (2016) Balancing convergence and diversity in decomposition-based many-objective optimizers. IEEE Trans Evol Comput 20(2):180–198. https://doi.org/10.1109/tevc.2015.2443001

Zhang H, Llorca J, Davis CC, Milner SD (2012) Nature-inspired self-organization, control, and optimization in heterogeneous wireless networks. IEEE Trans Mob Comput 11(7):1207–1222

Zhang H, Song S, Zhou A, Gao XZ (2014) A clustering based multiobjective evolutionary algorithm. In: 2014 IEEE congress on evolutionary computation, pp 723–730

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11(6):712–731. https://doi.org/10.1109/tevc.2007.892759

Zhang Q, Zhou A, Zhao S, Suganthan PN, Liu W, Tiwari S (2008) Multiobjective optimization test instances for the CEC 2009 special session and competition. University of Essex, Colchester, UK and Nanyang technological University, Singapore, special session on performance assessment of multi-objective optimization algorithms, technical report, pp 1–30

Zhou A, Zhang Q (2016) Are all the subproblems equally important? Resource allocation in decomposition-based multiobjective evolutionary algorithms. IEEE Trans Evol Comput 20(1):52–64. https://doi.org/10.1109/tevc.2015.2424251

Acknowledgements

This work is supported by the China Scholarship Council, 201706260064, National Natural Science Foundation of China under Grant Nos. 61503287 & 71771176, NUSRI China Jiangsu Provincial Grant BK20150386 & BE2016077, and Zhejiang Provincial Natural Science Foundation of China under Grant No. LY18F030010. The authors would like to thank Abigail Martin for the proofreading.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

About this article

Cite this article

Pan, A., Wang, L., Guo, W. et al. Heuristic orientation adjustment for better exploration in multi-objective optimization. Neural Comput & Applic 32, 4757–4771 (2020). https://doi.org/10.1007/s00521-018-3848-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-018-3848-8