Abstract

The present study investigates the numerical solution of a nonlinear singular system represented by the sixth Painlevé equation using artificial intelligence: feed-forward artificial neural networks (ANNs) optimized with genetic algorithms (GAs), the interior point technique (IPT), sequential quadratic programming (SQP), and their hybrids. The ANN provides a suitable framework for building a nature-inspired mathematical model of the sixth Painlevé equation based on an unsupervised error. The weights of these networks are adapted globally by the GA, aided by the IPT or SQP algorithms. A hybrid approach is adopted to improve the numerical results. An extensive statistical analysis based on several independent runs of the algorithms validates the accuracy, convergence, and exactness of the proposed scheme.

1 Introduction

Second-order ordinary differential equations (ODEs) were studied by Painlevé and Gambier, and later by many other researchers. Painlevé [1] and Gambier [2] discovered the Painlevé equations while studying a problem posed by Picard [3]. They found six new functions defined by nonlinear ODEs that depend on complex parameters. This result led to the problem of finding further new functions that could be defined by nonlinear ODEs such as the Painlevé equations. The problem concerned second-order ODEs of the form

\[ \frac{d^{2}u}{dx^{2}} = \Psi\left(x, u, \frac{du}{dx}\right), \tag{1} \]

where Ψ is a rational function of \(\frac {du}{dx}\), algebraic in u, and analytic in x. The equation is required to have the Painlevé property: the singularities of the solutions other than poles are independent of the integration constants, so they depend only on the equation. Differential equations possessing the Painlevé property are called Painlevé-type equations. Painlevé showed that, up to a Möbius transformation, there are fifty canonical equations of the form (1) [4]. Six of these fifty equations, now well known, define new transcendental functions. The remaining 44 are either integrable in terms of known functions or reducible to one of these six. The six equations are commonly known as the Painlevé equations and are denoted PI-PVI. Although the Painlevé equations were discovered from purely mathematical considerations, they occur in many physical settings, such as plasma physics, statistical mechanics, nonlinear waves, quantum gravity, general relativity, quantum field theory, nonlinear optics, and fiber optics. They have also attracted much interest as reductions of soliton equations that are solvable by the inverse scattering transform [5,6,7].

The general solutions of the Painlevé equations are called Painlevé transcendents. However, for certain values of the parameters, PII-PVI have rational solutions and solutions expressible in terms of special functions [8,9,10]. PII-PVI admit Bäcklund transformations, which relate one solution to another solution of the same equation with different parameter values [11, 12]. The Painlevé equations can be written as Hamiltonian systems [13, 14]. They also arise as compatibility conditions of linear systems of equations (Lax pairs) that possess irregular singular points. Using Lax pairs, the general solution of a given Painlevé equation can be expressed through the solution of a Fredholm integral equation.

At the end of the nineteenth century, it was proposed that new transcendental functions could be found as solutions of ODEs. To this end, a classification was undertaken. It was expected to proceed order by order for ODEs having what is today known as the Painlevé property. An ODE has the Painlevé property if its general solution is free of movable branched singularities; "movable" means that the location of the singularity depends on the initial conditions. This classification led to the discovery of the well-known six Painlevé equations [4, 15,16,17], which did indeed define new transcendental functions. However, the classification process then stalled. Only partial classifications were carried out at third order [18,19,20,21,22], and no new transcendents were found. At fourth order, even the classification of dominant terms for the polynomial case was left incomplete [23]. Interest in the six Painlevé equations was reignited by the work of Ablowitz et al. [24, 25]. They found that similarity reductions of completely integrable partial differential equations (PDEs) give rise to ODEs with the Painlevé property, in many cases to one of the Painlevé equations themselves.

Airault [26] took the next step, using higher-order integrable PDEs to derive higher-order ODEs with the Painlevé property. By similarity reduction of the Korteweg-de Vries and modified Korteweg-de Vries hierarchies, she derived a whole hierarchy of ODEs. This was a second Painlevé hierarchy, i.e., one whose first member is the second Painlevé equation. It opened the possibility of deriving higher-order Painlevé equations as sequences of ODEs of increasing order, as opposed to the order-by-order classification originally proposed. However, further work in this direction was not undertaken until Kudryashov [27] derived both a first and a second Painlevé hierarchy. Since then, many researchers have derived Painlevé hierarchies and investigated their properties and underlying structures [28,29,30,31,32,33,34,35]. At the same time, the present-day continuation of the original order-by-order classification process [36,37,38,39,40] is informed by knowledge of the connection with higher-order completely integrable PDEs.

Although many Painlevé-type equations exist, the six Painlevé equations are the best known. A brief introduction to the first through sixth Painlevé equations is given here. These equations are used extensively by the research community in applications in the physical sciences and engineering.

The PI equation contains a quadratic nonlinearity in one of its terms. The PII equation is of special importance because of its cubic nonlinearity and its single constant parameter α. The PIII equation is notable for its singularity at the origin and its four constant parameters α, β, γ, and δ; comparatively few numerical and analytical solvers are available for problems of this type. The PIV equation is known for its strong nonlinearity and has two constant parameters, α and β. The PV equation is a very complicated differential equation: three of its terms are singular, and it also has four constant parameters α, β, γ, and δ. The last equation, PVI, is the focus of this study. It has received special attention because it is the most complicated of the six Painlevé equations. It has singularities at x = 0, 1 and at u = 0, 1, x, depends on four constant parameters α, β, γ, and δ, and contains quadratic nonlinearities in several terms [41,42,43,44,45].
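For reference, the standard forms of PI-PVI as usually tabulated in the literature are listed below in the variables x and u used here, with primes denoting d/dx. This is a textbook summary, not a reproduction of the paper's own equations. In particular, it does not imply the parameter values or boundary conditions used in (11).

\[
\begin{aligned}
\text{PI:}\quad & u'' = 6u^{2} + x,\\
\text{PII:}\quad & u'' = 2u^{3} + xu + \alpha,\\
\text{PIII:}\quad & u'' = \frac{(u')^{2}}{u} - \frac{u'}{x} + \frac{\alpha u^{2} + \beta}{x} + \gamma u^{3} + \frac{\delta}{u},\\
\text{PIV:}\quad & u'' = \frac{(u')^{2}}{2u} + \frac{3}{2}u^{3} + 4xu^{2} + 2\left(x^{2} - \alpha\right)u + \frac{\beta}{u},\\
\text{PV:}\quad & u'' = \left(\frac{1}{2u} + \frac{1}{u-1}\right)(u')^{2} - \frac{u'}{x} + \frac{(u-1)^{2}}{x^{2}}\left(\alpha u + \frac{\beta}{u}\right) + \frac{\gamma u}{x} + \frac{\delta u(u+1)}{u-1},\\
\text{PVI:}\quad & u'' = \frac{1}{2}\left(\frac{1}{u} + \frac{1}{u-1} + \frac{1}{u-x}\right)(u')^{2} - \left(\frac{1}{x} + \frac{1}{x-1} + \frac{1}{u-x}\right)u'\\
& \qquad + \frac{u(u-1)(u-x)}{x^{2}(x-1)^{2}}\left(\alpha + \frac{\beta x}{u^{2}} + \frac{\gamma (x-1)}{(u-1)^{2}} + \frac{\delta x(x-1)}{(u-x)^{2}}\right).
\end{aligned}
\]

In the PVI form, the singular points x = 0, 1 and the singular values u = 0, 1, x discussed above appear as zeros of the denominators.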

General properties of Painlevé equations

(a) PII-PVI have Bäcklund transformations, which relate solutions of a given Painlevé equation to solutions of the same equation with different parameter values. The associated affine Weyl groups act on the parameter space.

(b) For special values of the parameters, PII-PVI have rational, algebraic, and special function solutions expressed in terms of the classical special functions.

(c) These rational, algebraic, and special function solutions of PII-PVI, called classical solutions, can usually be written in determinant form, frequently as Wronskians. Often they can be written as Hankel or Toeplitz determinants.

(d) PI-PVI can be written as (non-autonomous) Hamiltonian systems, and the Hamiltonians satisfy second-order, second-degree differential equations, one for each of PI-PVI.

(e) PI-PVI possess Lax pairs (isomonodromy problems).

2 Design methodology for the sixth Painlevé equation

This section briefly describes the design methodology for solving the nonlinear sixth Painlevé differential equation. Two feed-forward, unsupervised neural network models of the equation are developed.

2.1 Heuristic mathematical modeling

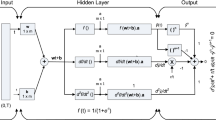

The generalized design methodology for the sixth Painlevé differential equation can be implemented as a MATLAB function. The mathematical model for the sixth Painlevé equation is built with a feed-forward ANN. It takes the form of the following continuous mapping for the solution \(\hat {u}(x)\) and its first- and second-order derivatives \({d\hat {u}}/{dx}\) and \({d^{2}\hat {u}}/{dx^{2}}\), respectively:

Equations (2–4) use the log-sigmoid function \(f(t) = \frac {1}{1 + e^{-t}}\) and its derivatives as activation functions. The system can therefore be written as
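The explicit expressions are not reproduced here. Below is a sketch of the form such a mapping commonly takes in ANN-based ODE solvers. The symbols a_i, w_i, and b_i (output weight, input weight, and bias of hidden neuron i) are our notation, and the three-weights-per-neuron layout is an assumption. Under that layout, N hidden neurons carry 3N unknown weights, so N = 10 and N = 15 give the 30 and 45 weights mentioned in the procedure.

\[
\hat u(x)=\sum_{i=1}^{N} a_i\, f(w_i x + b_i),\qquad
\frac{d\hat u}{dx}=\sum_{i=1}^{N} a_i w_i\, f'(w_i x + b_i),\qquad
\frac{d^{2}\hat u}{dx^{2}}=\sum_{i=1}^{N} a_i w_i^{2}\, f''(w_i x + b_i),
\]
\[
f(t)=\frac{1}{1+e^{-t}},\qquad
f'(t)=\frac{e^{-t}}{(1+e^{-t})^{2}}=f(t)\bigl(1-f(t)\bigr),\qquad
f''(t)=f(t)\bigl(1-f(t)\bigr)\bigl(1-2f(t)\bigr).
\]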

Suitable combinations of (5–7) can be used to model differential equations; interested readers may consult [46, 47] and [48].
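The minimal Python sketch below evaluates such a network and its first two derivatives in closed form. It uses the log-sigmoid identities f' = f(1 - f) and f'' = f'(1 - 2f). The weight layout (separate lists a, w, b) and the function names are illustrative assumptions, not the authors' MATLAB code.

```python
import math


def logsig(t):
    """Log-sigmoid activation f(t) = 1 / (1 + exp(-t))."""
    return 1.0 / (1.0 + math.exp(-t))


def ann_with_derivatives(x, a, w, b):
    """Return (u, du/dx, d2u/dx2) for u(x) = sum_i a_i f(w_i x + b_i).

    a, w, b are output weights, input weights and biases (hypothetical layout).
    """
    u = du = d2u = 0.0
    for ai, wi, bi in zip(a, w, b):
        f = logsig(wi * x + bi)
        fp = f * (1.0 - f)            # f'(t)
        fpp = fp * (1.0 - 2.0 * f)    # f''(t)
        u += ai * f
        du += ai * wi * fp            # chain rule: d/dx f(w x + b) = w f'
        d2u += ai * wi * wi * fpp     # second derivative picks up w^2
    return u, du, d2u


# Example: 3 neurons (9 weights), checked against central finite differences.
a, w, b = [0.5, -1.2, 0.8], [1.0, 0.3, -2.0], [0.1, -0.4, 0.2]
x, h = 0.37, 1e-4
u, du, d2u = ann_with_derivatives(x, a, w, b)
u_plus = ann_with_derivatives(x + h, a, w, b)[0]
u_minus = ann_with_derivatives(x - h, a, w, b)[0]
fd1 = (u_plus - u_minus) / (2 * h)
fd2 = (u_plus - 2 * u + u_minus) / h**2
```

The fitness function depends on these analytic derivatives, so a finite-difference comparison like the one above is a cheap way to catch sign or chain-rule errors.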

2.2 Fitness function

A fitness (objective) function E is constructed in an unsupervised manner as the sum of two mean squared errors, E1 and E2. Therefore, E can be written as

where E1 is the error function associated with the given differential equation:

Similarly, E2 is the error function associated with the boundary conditions of the given equation:
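The explicit expressions are not reproduced here. The following sketches the form such a fitness function commonly takes for a second-order problem \(u'' = \Psi(x, u, u')\). The grid \(x_k = x_0 + kh\), \(k = 0, \dots, K\), the boundary values A and B, and the factor 1/2 in E2 are assumptions for illustration.

\[
E = E_1 + E_2,\qquad
E_1 = \frac{1}{K+1}\sum_{k=0}^{K}\left(\frac{d^{2}\hat u_k}{dx^{2}} - \Psi\!\left(x_k, \hat u_k, \frac{d\hat u_k}{dx}\right)\right)^{2},\qquad
E_2 = \frac{1}{2}\left[\left(\hat u_0 - A\right)^{2} + \left(\hat u_K - B\right)^{2}\right],
\]

where \(\hat u_k = \hat u(x_k)\). E1 vanishes when the network satisfies the equation at every grid point, and E2 vanishes when it matches both boundary values. A set of weights with E close to zero is therefore an approximate solution.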

3 Numerical and analytical learning techniques

Many analytical and numerical solvers have been developed to solve higher-order nonlinear boundary value problems. A number of researchers have examined the Painlevé equations with both analytical and numerical techniques. Examples include the variational iteration method (VIM), the homotopy perturbation method (HPM) [49], the Adomian decomposition method (ADM), Legendre-Tau methods, the Sinc-collocation method, and wavelet methods. In many of these methods, the solution is given as an infinite series that usually converges to an accurate approximate solution. Each method has its own advantages and limitations. To the best of our knowledge, no stochastic solver has yet been applied to the sixth Painlevé equation. The first Painlevé equation, however, has been solved using neural networks optimized with evolutionary and swarm intelligence techniques. Some recent related work is reported in [50, 51].

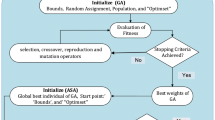

3.1 Hybrid approach

In the hybrid approach, the global search of the GA is combined with a local constrained optimization technique (AST, IPT, or SQP), which refines the best solution found by the GA. GA, AST, IPT, and SQP are used on their own, and the hybrid combinations GA-AST, GA-IPT, and GA-SQP are also used. All of them train the design parameters of the neural network models for the sixth Painlevé equation. The flow diagram of the generic hybrid approach based on GA-AST is shown in Fig. 1.

3.2 Parameter setting

The MATLAB functions GA and FMINCON, accessed through the graphical user interface (GUI) of the Optimization Toolbox, are used to learn the unknown parameters of the ANN model. The parameter settings used for GA and AST are listed in Tables 1 and 2, respectively.

3.3 Procedural steps of proposed method

The main steps of the proposed solver are as follows:

1. Initialization: Initial values of the parameters are set by random assignment and declarations. The settings of the important GA parameters are listed in Table 1.

2. Fitness evaluation: Calculate the fitness of each individual (chromosome) of the population using (9) and (10) for the first and second type of modeling, respectively.

3. Termination criteria: Terminate the algorithm when any of the following criteria is met:

   - The predefined fitness value \(|E| \le 10^{-15}\) is achieved.

   - The predefined number of generations has been executed.

   - Any of the termination settings given in Table 1 for GA is fulfilled.

   If a termination criterion is met, go to step 5.

4. Reproduction: Create the next generation using scattered crossover, adaptive feasible mutation, stochastic uniform selection, and elitism, as set in Table 1. Repeat steps 2 to 4 with the newly produced population.

5. Improvement: The active set technique (AST) refines the results further, taking the final weights of the GA as the initial weights (start point) of the AST algorithm. AST is applied with the parameter settings given in Table 2. The refined final weights are also stored.

6. Neuron analysis: Repeat steps 1 to 5 with 30 and 45 weights, corresponding to N = 10 and N = 15 neurons, respectively. These results are used later for a detailed analysis of the algorithm. The architectural diagram of the proposed model is presented in Fig. 2.
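The steps above can be sketched in plain Python as follows. This is not the authors' implementation: hypothetical stand-ins replace MATLAB's GA and FMINCON.

- Selection follows the stochastic-uniform idea (stochastic universal sampling on rank-based expectations, assumed here to be proportional to 1/sqrt(rank)).
- Crossover is scattered (a random binary mask).
- A Gaussian perturbation stands in for adaptive feasible mutation.
- A simple compass (pattern) search stands in for the AST, IPT, or SQP refinement of step 5.

Population size, generation limit, mutation scale, and initial weight range are assumed values, not those of Table 1.

```python
import math
import random


def stochastic_uniform(ranked, n, rng):
    """Stochastic universal sampling: n equally spaced pointers on a line whose
    segments follow rank-based expectations (assumed 1/sqrt(rank))."""
    weights = [1.0 / math.sqrt(r + 1) for r in range(len(ranked))]
    step = sum(weights) / n
    pointer = rng.uniform(0.0, step)
    chosen, idx, cumulative = [], 0, weights[0]
    for _ in range(n):
        while pointer > cumulative and idx < len(ranked) - 1:
            idx += 1
            cumulative += weights[idx]
        chosen.append(ranked[idx])
        pointer += step
    return chosen


def scattered_crossover(p1, p2, rng):
    """Each gene comes from p1 or p2 according to a random binary mask."""
    return [g1 if rng.random() < 0.5 else g2 for g1, g2 in zip(p1, p2)]


def gaussian_mutation(ind, scale, rng):
    """Assumed stand-in for MATLAB's adaptive feasible mutation."""
    return [g + rng.gauss(0.0, scale) for g in ind]


def pattern_search(objective, x0, step=0.1, min_step=1e-10, max_iter=100_000):
    """Compass search, a stand-in for the local AST/IPT/SQP refinement."""
    x, fx = list(x0), objective(x0)
    for _ in range(max_iter):
        if step <= min_step:
            break
        improved = False
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = list(x)
                trial[i] += sign * step
                ft = objective(trial)
                if ft < fx:
                    x, fx, improved = trial, ft, True
                    break
        if not improved:
            step *= 0.5  # no coordinate move helped: shrink the step
    return x, fx


def hybrid_ga_local(objective, dim, pop_size=40, generations=100, elite=2,
                    crossover_fraction=0.8, tol=1e-15, seed=0):
    rng = random.Random(seed)
    # Step 1: random initial weights in [-1, 1] (assumed range).
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):              # Step 3: generation limit
        ranked = sorted(pop, key=objective)   # Step 2: fitness evaluation
        if objective(ranked[0]) <= tol:       # Step 3: fitness threshold
            break
        # Step 4: keep the elite, then breed from selected parent pairs.
        children = [list(ind) for ind in ranked[:elite]]
        parents = stochastic_uniform(ranked, 2 * (pop_size - elite), rng)
        rng.shuffle(parents)
        for k in range(pop_size - elite):
            p1, p2 = parents[2 * k], parents[2 * k + 1]
            if rng.random() < crossover_fraction:
                children.append(scattered_crossover(p1, p2, rng))
            else:
                children.append(gaussian_mutation(p1, 0.1, rng))
        pop = children
    # Step 5: the best GA individual is the start point of the local search.
    return pattern_search(objective, min(pop, key=objective))
```

For the Painlevé problem, `objective` would be the fitness E of the 30 or 45 network weights. On a toy quadratic such as `sum((w_i - 0.5) ** 2)`, the GA brings the weights near the minimizer and the local search then drives the error below 1e-12. This mirrors the division of labour in the GA-AST hybrid.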

4 Numerical results for Painlevé equation VI

Consider Painlevé equation VI

with boundary conditions defined as

Our main objective is to solve this problem using the scheme based on the two neural network models. The fitness function constructed for problem (11) is

where E1, the error function associated with (11), is defined by

Similarly, E2 is the error function associated with the boundary conditions of the problem:
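The sketch below shows how E1 and E2 can be evaluated for PVI. It computes the residual of the standard PVI form, solved for u'', at grid points and adds a boundary-mismatch term; the network is redefined so the block runs on its own. The following are hypothetical choices for illustration, not the data of problem (11), which are not reproduced here:

- the parameter values (α, β, γ, δ);
- the interval [0.1, 0.9] and the boundary values;
- the mean-square and one-half weighting.

The grid avoids x = 0 and x = 1, where PVI is singular.

```python
import math


def logsig(t):
    return 1.0 / (1.0 + math.exp(-t))


def ann(x, weights):
    """(u, u', u'') of a log-sigmoid network; weights = a + w + b (3N values)."""
    n = len(weights) // 3
    a, w, b = weights[:n], weights[n:2 * n], weights[2 * n:]
    u = du = d2u = 0.0
    for ai, wi, bi in zip(a, w, b):
        f = logsig(wi * x + bi)
        fp = f * (1.0 - f)
        u += ai * f
        du += ai * wi * fp
        d2u += ai * wi * wi * fp * (1.0 - 2.0 * f)
    return u, du, d2u


def pvi_rhs(x, u, du, alpha, beta, gamma, delta):
    """Right-hand side F(x, u, u') of PVI in the standard form u'' = F."""
    t1 = 0.5 * (1.0 / u + 1.0 / (u - 1.0) + 1.0 / (u - x)) * du**2
    t2 = -(1.0 / x + 1.0 / (x - 1.0) + 1.0 / (u - x)) * du
    bracket = (alpha + beta * x / u**2 + gamma * (x - 1.0) / (u - 1.0)**2
               + delta * x * (x - 1.0) / (u - x)**2)
    t3 = u * (u - 1.0) * (u - x) / (x**2 * (x - 1.0)**2) * bracket
    return t1 + t2 + t3


def fitness(weights, params, grid, bc):
    """E = E1 (mean squared ODE residual) + E2 (boundary mismatch)."""
    (xa, ua), (xb, ub) = bc
    e1 = 0.0
    for x in grid:
        u, du, d2u = ann(x, weights)
        e1 += (d2u - pvi_rhs(x, u, du, *params)) ** 2
    e1 /= len(grid)
    e2 = 0.5 * ((ann(xa, weights)[0] - ua) ** 2 + (ann(xb, weights)[0] - ub) ** 2)
    return e1 + e2


# Hypothetical sanity check with N = 10 neurons (30 weights).
params = (1.0, -4.0, 1.0, 0.0)
grid = [0.1 + 0.08 * k for k in range(11)]
bc = ((0.1, 2.0), (0.9, 2.0))
exact_weights = [4.0] + [0.0] * 29      # a_1 = 4, all else 0, so u = 4 * f(0) = 2
perturbed_weights = [4.2] + [0.0] * 29  # u = 2.1, not a solution
```

With (α, β, γ, δ) = (1, -4, 1, 0), substitution shows that u ≡ 2 solves PVI: all derivatives vanish, and the bracketed factor reduces to 1 - x + (x - 1) = 0. The network with a_1 = 4 and all other weights zero reproduces u ≡ 2 exactly, so its fitness is zero, which makes a convenient check of the implementation.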

Case 1

For N = 10 neurons (30 weights), we define the series solution of (11) in terms of the proposed weights as

The proposed solution of (11) can be written in terms of the neuron weights obtained by each optimization technique (AST, SQP, IPT, GA, GA-AST, GA-SQP, GA-IPT). The proposed solution of the equation is therefore

Tables 3 and 4 present the weights obtained by the six techniques SQP, IPT, GA, GA-AST, GA-SQP, and GA-IPT. Based on 100 runs of these solvers, we found that retaining at least five digits in each weight is sufficient for a good approximate solution of the problem.

Table 5 compares the proposed results with the reference solution. For SQP, IPT, GA, GA-AST, GA-SQP, and GA-IPT, the agreement ranges from one to three decimal places; Fig. 3 shows these results graphically. We also calculated the absolute errors (AEs) of the proposed results with respect to the reference solution, as shown in Fig. 4. Table 6 shows that the hybrid technique GA-AST is the most accurate, and that SQP is more accurate than IPT, GA, GA-SQP, and GA-IPT. The absolute errors of GA-AST, SQP, IPT, GA, GA-SQP, and GA-IPT lie in the ranges [1.65E-09, 9.18E-04], [1.04E-08, 8.88E-02], [6.48E-02, 1.18E-01], [4.99E-08, 1.08E-01], [4.28E-08, 9.45E-02], and [4.33E-08, 1.05E-01], respectively.

Case 2

For N = 15 neurons (45 weights), the proposed solution of (11) is written as:

Tables 7 and 8 list the weights obtained by the six techniques SQP, IPT, GA, GA-AST, GA-SQP, and GA-IPT for case 2. Again, retaining five digits in each weight gives a good approximation of the proposed series solution.

Table 9 compares the results of SQP, IPT, GA, GA-AST, GA-SQP, and GA-IPT with the reference solution and shows agreement up to three decimal places; Fig. 5 plots these results. For a fuller picture, Fig. 6 presents the absolute errors. Table 10 shows that the hybrid technique GA-AST is the most accurate. GA-SQP also achieves good accuracy compared with the other optimizers SQP, IPT, GA, and GA-IPT. The absolute errors (AEs) of GA-AST, GA-SQP, SQP, IPT, GA, and GA-IPT lie in the ranges [2.45E-08, 7.39E-03], [5.27E-09, 2.55E-02], [8.46E-08, 2.50E-02], [3.86E-08, 4.99E-02], [2.85E-08, 6.98E-02], and [2.45E-08, 4.92E-02], respectively.

4.1 Statistical analysis

Probability plots with 95% confidence intervals (CIs) are used to determine whether the results of each solver follow a normal distribution and to compare the accuracy of the proposed solvers. In each case, the p value of the Anderson-Darling (AD) test was higher than the chosen significance level (0.05), so normality is not rejected for any solver's results. With 30 weights, SQP and hybrid SQP had higher p values than the other solvers, indicating the best results. With 45 weights, IPT and hybrid IPT gave the best results. Figs. 7 and 8 show the results for cases 1 and 2, respectively.
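The Anderson-Darling normality check can be sketched with the Python standard library as below. This is an illustration, not the software the authors used. The mean and standard deviation are estimated from the sample, and the statistic receives the usual small-sample correction A*² = A²(1 + 0.75/n + 2.25/n²). The p value uses the piecewise approximation of D'Agostino and Stephens.

```python
import math
from statistics import NormalDist, mean, stdev


def anderson_darling_normal(sample):
    """Return (A*^2, approximate p value) for H0: the sample is normal,
    with mean and standard deviation estimated from the data."""
    n = len(sample)
    mu, sd = mean(sample), stdev(sample)
    z = sorted((v - mu) / sd for v in sample)
    cdf = NormalDist().cdf
    s = 0.0
    for i in range(n):
        # (2i - 1)[ln F(z_i) + ln(1 - F(z_{n+1-i}))] with 1-based i;
        # 1 - F(z) is computed as F(-z) to avoid log(0) in the upper tail.
        s += (2 * i + 1) * (math.log(cdf(z[i])) + math.log(cdf(-z[n - 1 - i])))
    a2 = -n - s / n
    a2s = a2 * (1.0 + 0.75 / n + 2.25 / n**2)  # small-sample correction
    # Piecewise p-value approximation (D'Agostino and Stephens).
    if a2s >= 0.6:
        p = math.exp(1.2937 - 5.709 * a2s + 0.0186 * a2s**2)
    elif a2s >= 0.34:
        p = math.exp(0.9177 - 4.279 * a2s - 1.38 * a2s**2)
    elif a2s >= 0.2:
        p = 1.0 - math.exp(-8.318 + 42.796 * a2s - 59.938 * a2s**2)
    else:
        p = 1.0 - math.exp(-13.436 + 101.14 * a2s - 223.73 * a2s**2)
    return a2s, min(max(p, 0.0), 1.0)


# Example: evenly spaced normal quantiles vs. their log-normal transform.
nd = NormalDist()
normal_like = [nd.inv_cdf((i + 0.5) / 30) for i in range(30)]
skewed = [math.exp(v) for v in normal_like]
_, p_normal = anderson_darling_normal(normal_like)
_, p_skewed = anderson_darling_normal(skewed)
```

A p value above 0.05 means normality is not rejected at the 5% level, which is how the test is read in this section. The normal-quantile sample passes, whereas its strongly skewed log-normal counterpart is rejected.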

5 Conclusion

The sixth Painlevé equation is highly nonlinear and has multiple singularities, which makes problems of this type very hard to solve. Analytical solutions of such nonlinear, stiff, multi-singular differential equations are not easy to find in the literature. In this study, we obtained approximate solutions with an artificial neural network (ANN) that uses the log-sigmoid as the transfer function in its hidden layer. The network was trained with the genetic algorithm, the active set technique, the interior point technique, sequential quadratic programming, and their hybrids GA-AST, GA-SQP, and GA-IPT. The proposed techniques provide good numerical solutions of the sixth Painlevé equation.

The small absolute errors (AEs) obtained with the ANNs show that the fitted solutions agree closely with the reference solution. Table 5 presents the numerical results for the sixth Painlevé equation with N = 10, obtained with SQP, IPT, GA, GA-AST, GA-SQP, and GA-IPT. Table 6 presents the absolute errors, computed as the absolute difference between the proposed solutions and the reference solution. For case 1, the absolute errors of SQP, IPT, GA, GA-AST, GA-SQP, and GA-IPT lie in the ranges [1.04E-08, 8.88E-02], [6.48E-02, 1.18E-01], [4.99E-08, 1.08E-01], [1.65E-09, 9.18E-04], [4.28E-08, 9.45E-02], and [4.33E-08, 1.05E-01], respectively. For case 2, they lie in the ranges [8.45E-08, 2.50E-02], [3.86E-08, 4.99E-02], [2.85E-08, 6.98E-02], [2.45E-08, 7.39E-03], [5.27E-09, 2.55E-02], and [2.45E-08, 4.92E-02], respectively.

The absolute errors thus show that the hybrid technique GA-AST was the most accurate in both cases (Tables 6 and 10). In case 2, the hybrid technique GA-SQP was also more effective than SQP and the hybrid GA-IPT. However, IPT converges to the desired solution faster than AST and SQP. With fewer neurons, our methods were efficient and converged quickly. As the number of neurons increases, more computing power is needed, and the stiffness of the problem makes finding the solution take longer. In future work, more reliable optimization techniques based on neural networks could be developed to investigate this problem, and their results compared with other numerical results.

References

Painlevé P (1902) Sur les équations différentielles du second ordre et d’ordre supérieur dont l’intégrale générale est uniforme. Acta Math 25:1–85

Gambier B (1910) Sur les équations différentielles du second ordre et du premier degré dont l’intégrale générale est à points critiques fixes. Acta Math 33:1–55

Picard E (1887) Sur une classe d’équations différentielles. CR Acad Sci Paris 104:41–43

Ince EL (1956) Ordinary differential equations. Dover, New York

Ablowitz MJ, Clarkson PA (1997) Solitons, nonlinear evolution equations and inverse scattering, vol 149

Ablowitz MJ, Segur H (1981) Solitons and the inverse scattering transform. SIAM, Philadelphia

Its AR, Novokshenov VY (1986) The isomonodromic deformation method in the theory of Painleve equations, vol 1191

Lukashevich NA (1967) Theory of the fourth Painleve equation. Diff Equat 3:395–399

Lukashevich NA (1968) Solution of fifth equation of Painleve. Diff Urav 4:732–735

Lukashevich NA, Yablonskii AI (1967) On a class of solutions of the sixth Painleve equations. Diff Urav 3:264

Fokas AS, Ablowitz MJ (1982) On a unified approach to transformations and elementary solutions of Painlevé equations. J Math Phys 23:2033–2042

Gromak VI, Laine I, Shimomura S (2002) Painleve differential equations in the complex plane. Walter de Gruyter, Berlin, New York

Okamoto K, Conte R (1999) The Painleve property, one century later

Okamoto K (1986) Studies on Painleve equation III, second and fourth Painleve equation, PII and PIV. Math Ann 275:221–255

Painleve P (1900) Memoire sur les equations differentielles dont l’integrale generale est uniforme. Bull Soc Math Fr 28:201–261

Painleve P (1902) Memoire sur les equations differentielles du second ordre et d’ordre superieur dont l’integrale generale est uniforme. Acta Math 25:1–85

Gambier B (1910) Sur les equations differentielles du second ordre et du premier degre dont l’integrale generale est a points critiques fixes. Acta Math 33:1–55

Chazy J (1911) Sur les equations differentielles du troisieme ordre et d’ordre superieur dont l’integrale generale a ses points critiques fixes. Acta Math 34:317–385

Garnier R (1912) Sur des equations differentielles du troisieme ordre dont l’integrale generale est uniforme et sur une classe d’equations nouvelles d’ordre superieur. Ann Sci Ecole Normale Sup 48:1–126

Exton H (1973) Non-linear ordinary differential equations with fixed critical points. Rend Mat (6):419–462

Martynov IP (1985) Analytic properties of solutions of a third-order differential equation. Differents Uravn 21:764–771

Martynov IP (1985) Third-order equations without moving critical singularities. Differents Uravn 21:937–946

Bureau FJ (1964) Differential equations with fixed critical points. Ann Mat Pura Appl (IV) 66:1–116

Ablowitz MJ, Segur H (1977) Exact linearization of a Painleve transcendent. Phys Rev Lett 38:1103–1106

Ablowitz MJ, Ramani A, Segur H (1980) A connection between nonlinear evolution equations and ordinary differential equations of P-type. I. J Math Phys 21:715–721

Airault H (1979) Rational solutions of Painleve equations. Stud Appl Math 61:31–53

Kudryashov NA (1997) The first and second Painleve equations of higher order and some relations between them. Phys Lett A 224:353–360

Gordoa PR, Pickering A (1999) Non-isospectral scattering problems: a key to integrable hierarchies. J Math Phys 40:5749–5786

Gordoa PR, Pickering A (2000) On a new non-isospectral variant of the Boussinesq hierarchy. J Phys A 33:557–567

Gordoa PR, Joshi N, Pickering A (2001) On a generalized 2 + 1 dispersive water wave hierarchy. Publ Res Inst Math Sci (Kyoto) 37:327–347

Pickering A (2002) Coalescence limits for higher order Painleve equations. Phys Lett A 301:275–280

Kawai T, Koike T, Nishikawa Y, Takei Y (2004) On the Stokes geometry of higher order Painlevé equations. Analyse complexe, systèmes dynamiques, sommabilité des séries divergentes et théories galoisiennes. II. Astérisque No 297:117–166

Gordoa PR, Joshi N, Pickering A (2006) Second and fourth Painlevé hierarchies and Jimbo-Miwa linear problems. J Math Phys 47:073504

Koike T (2007) On the Hamiltonian structures of the second and the fourth Painlevé hierarchies, and the degenerate Garnier systems. Algebraic, analytic and geometric aspects of complex differential equations and their deformations. Painlevé hierarchies. RIMS Kôkyûroku Bessatsu, B2, Res Inst Math Sci (RIMS), Kyoto, pp 99–127

Sakka AH (2009) On special solutions of second and fourth Painleve hierarchies. Phys Lett A 373:611–615

Mugan U, Jrad F (1999) Painleve test and the first Painleve hierarchy. J Phys A 32:7933–7952

Mugan U, Jrad F (2002) Painleve test and higher order differential equations. J Nonlinear Math Phys 9:282–310

Mugan U, Jrad F (2004) Non-polynomial third order equations which pass the Painleve test. Z Naturforsch A 59:163–180

Cosgrove CM (2000) Higher-order Painleve equations in the polynomial class. I. Bureau symbol P2. Stud Appl Math 104:1–65

Cosgrove CM (2006) Higher-order Painleve equations in the polynomial class. II. Bureau symbol P1. Stud Appl Math 116:321–413

Dai D, Zhang L (2010) On tronquée solutions of the first Painlevé hierarchy. J Math Anal Appl 368:393–399

Lebeau G, Lochak P (1987) On the second Painlevé equation: the connection formula via a Riemann-Hilbert problem and other results. J Differential Equations 68:344–372

Kajiwara K (2003) On a q-difference Painleve III equation: II. rational solutions. J Nonlinear Math Phys 10(3):282–303

Yoshikatsu S (2007) Value distribution of the fifth Painlevé transcendents in sectorial domains. J Math Anal 330:817–828

Conte R, Musette M (2002) New contiguity relation of the sixth Painlevé equation from a truncation. Physica D 161:129–141

Ahmad I, Raja MAZ, Bilal M, Ashraf F (2016) Neural network methods to solve the Lane-Emden type equations arising in thermodynamic studies of the spherical gas cloud model. Neural Comput and Applic 1–16. doi:10.1007/s00521-016-2400-y

Ahmad I, Mukhtar A (2015) Stochastic approach for the solution of multi-pantograph differential equation arising in cell-growth model. Appl Math Comput 261:360

Ahmad I, Ahmad S, Bilal M, Anwar N (2016) Stochastic numerical treatment for solving Falkner-Skan equations using feed forward neural networks. Neural Comput and Applic 1–14. doi:10.1007/s00521-016-2427-0

He JH (1999) Homotopy perturbation technique. Comput Methods Appl Mech Eng 178:257–262

Abu Arqub O (2016) Approximate solutions of DASs with nonclassical boundary conditions using novel reproducing kernel algorithm. Fundamenta Informaticae 146:231–254

Abu Arqub O, Maayah B (2016) Solutions of Bagley-Torvik and Painlevé equations of fractional order using iterative reproducing kernel algorithm. Neural Comput and Applic. doi:10.1007/s00521-016-2484-4

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Ahmad, I., Rehman, A., Ahmad, F. et al. Heuristic computational intelligence approach to solve nonlinear multiple singularity problem of sixth Painlevé equation. Neural Comput & Applic 31, 101–115 (2019). https://doi.org/10.1007/s00521-017-2982-z
