Abstract

One heuristic evolutionary algorithm recently proposed is the gravitational search algorithm (GSA), inspired by the gravitational forces between masses in nature. This algorithm has demonstrated superior performance compared with other well-known heuristic algorithms such as particle swarm optimisation and the genetic algorithm. However, slow exploitation is a major weakness that might result in degraded performance when dealing with real engineering problems. Due to the cumulative effect of the fitness function on mass in GSA, masses get heavier and heavier over the course of the iterations. This causes masses to remain in close proximity and neutralise each other’s gravitational forces in later iterations, preventing them from rapidly exploiting the optimum. In this study, the best mass is archived and utilised to accelerate the exploitation phase, ameliorating this weakness. The proposed method is tested on 25 unconstrained benchmark functions from CEC 2005 at six different scales. In addition, two classical constrained engineering design problems, namely welded beam and tension spring design, are employed to investigate the efficiency of the proposed method on real constrained problems. The results on the benchmark and classical engineering problems demonstrate the improved performance of the proposed method compared with GSA.

1 Introduction

In recent years, various heuristic evolutionary optimisation algorithms that mimic natural phenomena have been developed. Some of the most popular are particle swarm optimisation (PSO) [1, 2], genetic algorithm (GA) [3], differential evolution (DE) [4, 5], ant colony optimisation (ACO) [6], and more recent additions such as biogeography-based optimisation (BBO) [7, 8], grey wolf optimiser (GWO) [9], and the Krill Herd (KH) algorithm [10, 11]. The gravitational search algorithm (GSA) was proposed more recently by Rashedi et al. [12], and it has been shown to provide promising results compared with other well-known algorithms in this field. What these evolutionary algorithms have in common is that they start the search process with an initial population of candidate solutions, which is evolved over a predefined number of steps according to certain rules. For instance, PSO uses the social behaviour of bird flocking and GA utilises Darwin’s theory of evolution. Regardless of structure, these algorithms basically divide the search process into two main phases, exploration and exploitation, to find the global optimum.

Exploration requires an algorithm to search the problem space broadly, whereas exploitation requires it to converge to the best solution from the promising solutions found in the exploration phase. The ultimate goal is to find an efficient trade-off between exploration and exploitation [13]. However, a good balance is challenging to find due to the stochastic behaviour of evolutionary algorithms [14]. Moreover, exploration and exploitation conflict: emphasising one generally weakens the other.

In the literature, merging optimisation algorithms is a popular way to obtain a better balance between exploration and exploitation, as in the hybrids PSO–GA [15, 16], PSO–DE [17, 18], PSO–ACO [19, 20], KH–DE [21], and KH–BBO [22]. To improve the exploitation phase, local search [23, 24] and gradient descent [25, 26] have been utilised. Both of these methods can improve performance, but they bring additional computational cost. Moreover, gradient descent methods are ill-defined for non-differentiable search spaces. When two algorithms are combined, the computational time is normally greater than for either alone, because both algorithms must be run either in parallel or sequentially, and local search is itself computationally expensive. These costs should be considered, especially for real-world engineering problems. There are also other methods for improving the performance of meta-heuristics, such as utilising chaotic maps [27–29] and employing different evolutionary operators [30, 31]. In this study, we try to enhance the exploitation of GSA with an extremely low-cost method, since slow exploitation appears to be the main drawback of this algorithm [32].

The search process in GSA slows as the number of iterations increases. Due to the cumulative effect of the fitness function on mass, masses get heavier over the course of the iterations. This prevents them from rapidly exploiting the best solutions in later iterations [33]. This problem has been addressed directly or indirectly in previous work. In 2010, Sinaie solved the travelling salesman problem (TSP) with GSA and neural networks (NN) sequentially, with the exploration and exploitation phases accomplished by GSA and NN, respectively [33]. Shaw et al. [34] used opposition-based learning to improve the convergence speed of GSA. In 2011, GSA was employed as a global search algorithm, accompanied by a combined multi-type local improvement scheme as a local search operator [35]. A novel immune gravitation optimisation algorithm (IGOA) was proposed by Zhang et al. [32] in 2012, incorporating the characteristics of antibody diversity and vaccination to address the slow convergence. Hatamlou et al. [36] developed a sequential method to solve clustering problems: they used GSA to find a near-optimal solution and another heuristic method to improve the solutions obtained by GSA. In 2011, Li and Zhou [37] integrated the social thinking and individual thinking of PSO into GSA and employed the result to identify the parameters of a hydraulic turbine governing system. However, that paper did not investigate the method in depth, especially on challenging benchmark problems.

All these studies show that GSA suffers from slow exploitation. However, none of them investigates this problem in detail, and some of them adopted problem-specific remedies. The first author also has two publications [38, 39] on improving the performance of GSA, in which the social thinking of PSO was integrated with GSA in order to improve exploitation. However, we observed that the constants proposed for balancing the effects of GSA and PSO in the search need to be tuned adaptively, because fixed constants lead to a loss of exploration. In this work, the main reason for the slow search process is discussed in detail and a general, low-cost solution is proposed, which utilises adaptive coefficients to balance exploration and exploitation. In addition, we investigate the performance of both the improved GSA and GSA in solving constrained problems.

The rest of the paper is organised as follows: Section 2 presents a brief introduction to the GSA. Section 3 discusses the problem of slow exploitation and proposes a method to overcome it. The experimental results for benchmark and classical engineering design problems are provided in Sects. 4 and 5, respectively. Finally, Section 6 concludes the work and suggests some directions for future research.

2 Gravitational search algorithm

The basic physical theory from which GSA is inspired is Newton’s law of universal gravitation. The GSA performs search by employing a collection of agents (candidate solutions) whose masses are proportional to the value of a fitness function. During the iterations, the masses attract each other through the gravitational forces between them: the heavier the mass, the larger the attractive force. Therefore, the heaviest mass, which is possibly close to the global optimum, attracts the other masses towards itself.

This algorithm is formulated as follows [12]:

Every mass has a position in the search space as follows:

\[ X_i = (x_i^1, \ldots, x_i^d, \ldots, x_i^n), \quad i = 1, 2, \ldots, N \tag{2.1} \]

where N is the number of masses, n is the dimension of the problem, and \(x_i^d\) is the position of the ith agent in the dth dimension.

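The algorithm starts by randomly placing all agents in the search space. During all epochs, the gravitational force from agent j on agent i at a specific time t is defined as follows:

\[ F_{ij}^d(t) = G(t) \, \frac{M_{pi}(t) \times M_{aj}(t)}{R_{ij}(t) + \varepsilon} \left( x_j^d(t) - x_i^d(t) \right) \tag{2.2} \]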

where \(M_{aj}\) is the active gravitational mass related to agent j, \(M_{pi}\) is the passive gravitational mass related to agent i, \(G(t)\) is the gravitational constant at time t, \(\varepsilon\) is a small constant, and \(R_{ij}(t)\) is the Euclidean distance between agents i and j. It is recognised that Eq. 2.2 is not a correct expression of Newtonian gravitational forces, as explored in the critique by Gauci et al. [40]. However, the original formulation is retained here to allow direct comparison with the original GSA.

In order to emphasise exploration in the first iterations and exploitation in the final iterations, G is given an adaptive value that decreases over the iterations. In other words, G encourages the search agents to move with big steps in the initial iterations, but constrains them to move slowly in the final iterations.

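The gravitational constant (G) and the Euclidean distance between two agents i and j are calculated as follows:

\[ G(t) = G_0 \times \exp\left( -\alpha \times \frac{\text{iter}}{\text{maxiter}} \right) \tag{2.3} \]

\[ R_{ij}(t) = \left\lVert X_i(t) - X_j(t) \right\rVert_2 \tag{2.4} \]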

where α is the coefficient of decrease, \(G_0\) is the initial gravitational constant, iter is the current iteration, and maxiter is the maximum number of iterations.
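The exponential decay of G in Eq. 2.3 can be sketched in a few lines. The values \(G_0 = 100\) and \(\alpha = 20\) are assumptions chosen for illustration only, and the function name is hypothetical.

```python
import math


def gravitational_constant(it, max_iter, g0=100.0, alpha=20.0):
    """Eq. (2.3): G(t) = G0 * exp(-alpha * iter / maxiter).

    g0 and alpha are illustrative defaults assumed for this sketch."""
    return g0 * math.exp(-alpha * it / max_iter)


# G shrinks by several orders of magnitude over a 1000-iteration run.
for it in (0, 250, 500, 1000):
    print(it, gravitational_constant(it, 1000))
```

With these assumed values G falls by a factor of \(e^{20}\) over a run, which is what turns large early steps into small late ones.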

In a problem space of dimension n, the total force that acts on agent i in the dth dimension is calculated by the following equation:

\[ F_i^d(t) = \sum_{j=1, j \ne i}^{N} \text{rand}_j \, F_{ij}^d(t) \tag{2.5} \]

where \(\text{rand}_j\) is a random number in the interval [0,1]. This random weight makes the step taken along each pairwise gravitational force, and hence along the resultant force, stochastic, which diversifies the movement of the search agents.

Newton’s law of motion is also used in this algorithm: the acceleration of a mass is proportional to the applied force and inversely proportional to its mass, so the accelerations of all agents are calculated as follows:

\[ a_i^d(t) = \frac{F_i^d(t)}{M_{ii}(t)} \tag{2.6} \]

where d indexes the dimensions of the problem, t is a specific time, and \(M_{ii}\) is the inertial mass of agent i.
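The force, total-force and acceleration steps (Eqs. 2.2, 2.5 and 2.6) can be combined into one routine. This sketch assumes, as in the original GSA, that the active, passive and inertial masses of an agent are all equal to its normalised mass \(M_i\); under that assumption \(M_i\) cancels when the total force is divided by the inertial mass. The function name and the list-of-lists representation are illustrative choices.

```python
import math
import random


def accelerations(positions, masses, g, eps=1e-12, rng=random):
    """Accelerations from Eqs. (2.2), (2.5) and (2.6).

    Assumes M_aj = M_j and M_pi = M_ii = M_i, so M_i cancels in
    a_i = F_i / M_ii; this also avoids dividing by the zero mass of the
    worst agent. Every other agent j contributes to the sum."""
    n_agents, dim = len(positions), len(positions[0])
    acc = [[0.0] * dim for _ in range(n_agents)]
    for i in range(n_agents):
        for j in range(n_agents):
            if i == j:
                continue
            r_ij = math.dist(positions[i], positions[j])  # Eq. (2.4)
            # One random weight rand_j per pair of agents, as in Eq. (2.5).
            weight = rng.random() * g * masses[j] / (r_ij + eps)
            for d in range(dim):
                acc[i][d] += weight * (positions[j][d] - positions[i][d])
    return acc
```

Because the pairwise term is divided by \(R_{ij}\) rather than \(R_{ij}^2\), each neighbour contributes a pull of roughly \(G M_j\) in its direction regardless of how far away it is.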

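The velocity and position of agents are calculated as follows:

\[ v_i^d(t+1) = \text{rand}_i \times v_i^d(t) + a_i^d(t) \tag{2.7} \]

\[ x_i^d(t+1) = x_i^d(t) + v_i^d(t+1) \tag{2.8} \]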

where d indexes the dimensions of the problem and \(\text{rand}_i\) is a random number in the interval [0,1].

As can be inferred from (2.7) and (2.8), the current velocity of an agent is defined as a fraction of its last velocity (\(0 \le \text{rand}_i \le 1\)) added to its acceleration. Only a fraction of the previous velocity is used in order to prevent the search agents from overshooting the boundaries of the search space, and the fraction is random in order to promote diversity in the behaviour of GSA.

Furthermore, the current position of an agent is set to its last position added to its current velocity. To correctly apply Newton’s laws of motion, the velocity used should be the average of the velocities at time t and t + 1. However, the original version of GSA used only the final velocity, and this same simplified method is used in this study to allow direct comparison.
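Equations 2.7 and 2.8 translate directly into a per-agent update. In this sketch one \(\text{rand}_i\) is drawn per agent, following the notation of Eq. 2.7; drawing a separate random number per dimension is an equally plausible reading. As described above, the new velocity (not the average of old and new) moves the agent. The function name is hypothetical.

```python
import random


def gsa_move(positions, velocities, acc, rng=random):
    """Eq. (2.7): v(t+1) = rand_i * v(t) + a(t);
    Eq. (2.8): x(t+1) = x(t) + v(t+1)."""
    new_pos, new_vel = [], []
    for x, v, a in zip(positions, velocities, acc):
        r = rng.random()  # one rand_i per agent (assumed reading)
        v_next = [r * vd + ad for vd, ad in zip(v, a)]
        new_vel.append(v_next)
        # The position is advanced with the new velocity only.
        new_pos.append([xd + vd for xd, vd in zip(x, v_next)])
    return new_pos, new_vel
```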

Since agents’ masses are defined by their fitness evaluation, the agent with the heaviest mass is the fittest agent. According to the above equations, the heaviest agent has the highest attractive force and the slowest movement. Since there is a direct relation between mass and the fitness function, the masses are scaled with the following normalisation:

\[ m_i(t) = \frac{\text{fit}_i(t) - \text{worst}(t)}{\text{best}(t) - \text{worst}(t)} \tag{2.9} \]

\[ M_i(t) = \frac{m_i(t)}{\sum_{j=1}^{N} m_j(t)} \tag{2.10} \]

where \(\text{fit}_i(t)\) is the fitness value of agent i at time t, and best(t) and worst(t) are the fitness values of the fittest and weakest agents at time t (for a minimisation problem, the minimum and maximum fitness values, respectively).
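A minimal sketch of the mass normalisation in Eqs. 2.9 and 2.10 for a minimisation problem. The guard for best(t) = worst(t), where Eq. 2.9 would divide by zero, is an assumption added here; it simply gives all agents equal mass.

```python
def gsa_masses(fitness):
    """Normalised masses of Eqs. (2.9)-(2.10) for minimisation.

    best(t) = min fitness and worst(t) = max fitness, so the fittest agent
    gets the largest mass and the worst agent gets mass 0."""
    best, worst = min(fitness), max(fitness)
    if best == worst:
        # Assumed guard: Eq. (2.9) is undefined here, so use equal masses.
        return [1.0 / len(fitness)] * len(fitness)
    raw = [(f - worst) / (best - worst) for f in fitness]  # Eq. (2.9)
    total = sum(raw)
    return [m / total for m in raw]  # Eq. (2.10)


print(gsa_masses([1.0, 0.01, 1.44]))
```

Note that the worst agent always receives zero mass, so it exerts no attraction on the others.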

In the GSA, at first, all agents are initialised with random values. During iteration, the velocities and positions are defined by (2.7) and (2.8). Meanwhile, other parameters such as the gravitational constant and masses are calculated by (2.3) and (2.10). Finally, the GSA is terminated by satisfying an end criterion.

3 Proposed method

In this section, the problem of slow exploitation is first clarified in detail. Following this, a method is proposed for overcoming the problem.

3.1 Slow exploitation

As mentioned earlier, there are two main characteristics common to all population-based algorithms with evolutionary behaviour: an algorithm’s exploration of the search space and its exploitation of the most promising solution. An algorithm should support both of these vital characteristics to ensure a successful optimisation process.

In GSA, the gravitational constant (G) defines the speed at which solutions change their location in the search space. According to Eq. 2.2, a high value of G results in intense gravitational forces and, consequently, rapid movement in the earlier iterations. However, G is progressively decreased according to Eq. 2.3, and this, combined with the slow movement of increasingly heavy agents, helps GSA during exploitation [12, 41]. The exploitation phase therefore coincides with weaker attractive forces and slower movement. Unfortunately, heavy masses with slow movement and weak attractive forces also significantly degrade the speed of convergence. It therefore seems that GSA suffers from a slow search speed in the exploitation phase originating from these factors.

Figure 1 shows a simple, one-dimensional problem where the fitness function is \(y = x^2\). As may be seen in this figure, the masses M1 and M3 are attracted by M2 at iterations t + 1 and t + 2. However, these masses also attract M2 and move it slightly away from the optimum. So as particles approach an optimum, they do not necessarily accelerate towards it, but instead move towards the centre of mass of all the particles in the neighbourhood. It is also worth mentioning that GSA has no memory for saving the best solution obtained so far, so the best solution might be lost when the best mass is attracted away by other, less fit masses. All these problems motivated the solution discussed in the following section.
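The mechanism can be checked numerically with a hypothetical three-agent configuration on \(y = x^2\) (not the configuration drawn in Fig. 1). The sketch fixes every \(\text{rand}_j\) to 1 and uses G = 1 purely for illustration, and takes a single step of Eqs. 2.7 and 2.8 from zero velocity.

```python
# Hypothetical 1-D configuration on y = x^2 (optimum at x = 0).
positions = [-1.0, 0.1, 1.2]
fitness = [x * x for x in positions]

# Normalised masses, Eqs. (2.9)-(2.10), for minimisation.
best, worst = min(fitness), max(fitness)
raw = [(f - worst) / (best - worst) for f in fitness]
total = sum(raw)
masses = [m / total for m in raw]

G, eps = 1.0, 1e-12        # illustrative values; every rand_j is fixed to 1
i = fitness.index(best)    # index of the heaviest (fittest) agent

# Resultant acceleration on the best agent (its own mass cancels).
acc = sum(
    G * masses[j] * (positions[j] - positions[i]) / (abs(positions[j] - positions[i]) + eps)
    for j in range(len(positions))
    if j != i
)
new_x = positions[i] + acc  # one step of Eqs. (2.7)-(2.8) from zero velocity

print(f"masses: {[round(m, 3) for m in masses]}")
print(f"best agent: x = {positions[i]} -> {new_x:.4f}, fitness {best:.4f} -> {new_x ** 2:.4f}")
```

The less fit agent at x = -1 drags the fittest agent past the optimum, so after one step it is further from x = 0 than before; without a memory, its earlier, better position is simply forgotten.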

3.2 Improving the exploitation

The basic idea of the proposed method is to save the location of the best mass and use it to speed up the exploitation phase. Figure 2 shows the effect of using the best solution to accelerate the movement of agents towards the global optimum. As shown in this figure, the gbest element applies an additional velocity component towards the last known location of the best mass. In this way, the external gbest “force” helps to prevent masses from stagnating in a suboptimal situation. The method has two benefits: it accelerates the movement of particles towards the location of the best mass, which may help them to surpass it and become the best mass in the next iteration (as illustrated in Fig. 2b and c), and it saves the best solution attained so far.

The proposed method is modelled mathematically by Eq. 3.1:

\[ V_i(t+1) = \text{rand} \times V_i(t) + c_1' \times ac_i(t) + c_2' \times (\text{gbest} - X_i(t)) \tag{3.1} \]

where \(V_i(t)\) is the velocity of agent i at iteration t, \(c_1'\) and \(c_2'\) are accelerating coefficients, rand is a random number between 0 and 1, \(ac_i(t)\) is the acceleration of agent i at iteration t, and gbest is the position of the best solution acquired so far. In Eq. 3.1, the first component, \(\text{rand} \times V_i(t) + c_1' \times ac_i(t)\), corresponds to the velocity update of GSA and emphasises exploration by the masses. The second component, \(c_2' \times (\text{gbest} - X_i(t))\), attracts the masses towards the best solution obtained so far. The term \(\text{gbest} - X_i(t)\) is the distance of each mass from the best solution, and the fraction of this distance determined by \(c_2'\) is the final external force applied to each mass.

In each iteration, the positions of agents are updated as follows:

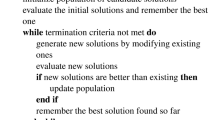
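The velocity update of Eq. 3.1 and the position update of Eq. 3.2 differ from the GSA update only by the coefficient \(c_1'\) on the acceleration and the extra gbest term. The minimal sketch below assumes one random number per agent and leaves the choice of \(c_1'\) and \(c_2'\) to the caller; the function name is hypothetical. Note that the gbest term does not depend on any mass, which is why it behaves as an external force.

```python
import random


def ggsa_move(positions, velocities, acc, gbest, c1, c2, rng=random):
    """Eq. (3.1): V(t+1) = rand*V(t) + c1'*ac(t) + c2'*(gbest - X(t));
    Eq. (3.2): X(t+1) = X(t) + V(t+1)."""
    new_pos, new_vel = [], []
    for x, v, a in zip(positions, velocities, acc):
        r = rng.random()  # one random number per agent (assumed)
        v_next = [
            r * vd + c1 * ad + c2 * (gd - xd)  # GSA part + gbest-guided part
            for vd, ad, gd, xd in zip(v, a, gbest, x)
        ]
        new_vel.append(v_next)
        new_pos.append([xd + vd for xd, vd in zip(x, v_next)])
    return new_pos, new_vel
```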

In the proposed method, all agents are randomly initialised. Then, gravitational force, gravitational constant and resultant forces between them are calculated using (2.2), (2.3) and (2.5), respectively. After that, the accelerations of particles are defined as in (2.6). At each iteration, the best solution obtained so far should be updated. After calculating the accelerations and updating the best solution, the velocities of all agents can be calculated using (3.1). Finally, the positions of agents are updated as (3.2). The process terminates by satisfying an end criterion. The general steps of the proposed method are represented in Fig. 3.

A problem may arise in that this method could also affect the exploration phase, since it adds a permanent term to the velocity update. To prevent the new velocity update from degrading the exploration ability, we use adaptive values for \(c_1'\) and \(c_2'\), as shown in Fig. 4: \(c_1'\) is adaptively decreased and \(c_2'\) increased so that the masses tend to accelerate towards the best solution as the algorithm reaches the exploitation phase. Since there is no clear border between the exploration and exploitation phases in evolutionary algorithms, an adaptive schedule is a natural way to allow a gradual transition between them. In addition, this adaptive approach emphasises exploration in the first iterations and exploitation in the final iterations.

It is worth mentioning that the second part of Eq. 3.1, \(c_2' \times (\text{gbest} - X_i(t))\), is quite similar to the social component of PSO, so the proposed method could also be considered a hybrid of GSA and PSO. A high value of \(c_1'\) biases the search towards GSA behaviour, while a high value of \(c_2'\) emphasises the PSO-like social component. The adaptive method therefore lets GSA explore the search space first and then performs a PSO-like exploitation of the best solution discovered by GSA.
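Putting the steps together, the following self-contained sketch runs GGSA on a simple sphere function. Several details are assumptions rather than settings taken from this paper: the parameter defaults (\(G_0 = 100\), \(\alpha = 20\), 30 agents, 200 iterations), clipping positions to the bounds, and the schedule for \(c_1'\) and \(c_2'\), which here falls from 2 to 0 and rises from 0 to 2 as the cube of the normalised iteration. The curves actually used are those shown in Fig. 4.

```python
import math
import random


def sphere(x):
    """Simple unimodal test function with its minimum 0 at the origin."""
    return sum(v * v for v in x)


def ggsa(f, dim, lb, ub, n_agents=30, max_iter=200, g0=100.0, alpha=20.0, seed=0):
    """Minimal GGSA sketch for minimisation; defaults and schedule are assumed."""
    rng = random.Random(seed)
    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_agents)]
    V = [[0.0] * dim for _ in range(n_agents)]
    gbest, gbest_fit = None, math.inf

    for t in range(max_iter):
        fit = [f(x) for x in X]
        # Archive the best solution found so far (the memory plain GSA lacks).
        i_best = min(range(n_agents), key=fit.__getitem__)
        if fit[i_best] < gbest_fit:
            gbest, gbest_fit = X[i_best][:], fit[i_best]

        # Normalised masses, Eqs. (2.9) and (2.10).
        best, worst = min(fit), max(fit)
        if best == worst:
            M = [1.0 / n_agents] * n_agents
        else:
            m = [(fi - worst) / (best - worst) for fi in fit]
            total = sum(m)
            M = [mi / total for mi in m]

        G = g0 * math.exp(-alpha * t / max_iter)  # Eq. (2.3)

        # Accelerations, Eqs. (2.2), (2.5) and (2.6); M_i cancels out.
        A = [[0.0] * dim for _ in range(n_agents)]
        for i in range(n_agents):
            for j in range(n_agents):
                if i != j:
                    w = rng.random() * G * M[j] / (math.dist(X[i], X[j]) + 1e-12)
                    for d in range(dim):
                        A[i][d] += w * (X[j][d] - X[i][d])

        # Assumed schedule: c1' falls from 2 to 0, c2' rises from 0 to 2.
        ratio = (t / max_iter) ** 3
        c1, c2 = 2.0 - 2.0 * ratio, 2.0 * ratio

        for i in range(n_agents):
            r = rng.random()
            for d in range(dim):
                # Eq. (3.1): GSA part plus the gbest-guided term.
                V[i][d] = r * V[i][d] + c1 * A[i][d] + c2 * (gbest[d] - X[i][d])
                # Eq. (3.2), followed by clipping to the bounds (assumed).
                X[i][d] = min(max(X[i][d] + V[i][d], lb), ub)

    # Evaluate the final positions so the last move is not wasted.
    for x in X:
        fx = f(x)
        if fx < gbest_fit:
            gbest, gbest_fit = x[:], fx
    return gbest, gbest_fit


best_x, best_f = ggsa(sphere, dim=5, lb=-10.0, ub=10.0, seed=1)
print(best_f)
```

Because gbest is archived, the returned value can never be worse than the best agent seen during the run, which is the memory property the unmodified GSA lacks.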

Some remarks on the proposed method and its advantages are the following:

- The proposed method uses a memory (gbest) to save the best solution obtained so far, in contrast to the unmodified GSA, so this solution is accessible at any time and will not be lost.
- Each agent can observe the best solution (gbest) and move towards it, so the masses are provided with a form of social intelligence.
- The effect of gbest is emphasised in the exploitation phase by adapting \(c_1'\) and \(c_2'\).
- The effect of gbest on agents is independent of their masses, so it can be considered an external force not subject to gravitational rules. This helps prevent particles from gathering together and moving extremely slowly.
- The computational cost of this method is extremely low: it adds only one extra term per agent and dimension to the velocity update.

Because of these features, the proposed method has the potential to provide superior results compared to GSA. In the following section, various benchmark functions are employed to explore the effectiveness of the proposed method in action.

4 Experimental results and discussion

To evaluate the performance of the proposed method, termed gbest-guided GSA (GGSA), the 25 standard benchmark functions of CEC 2005 are employed in this section [42]. These benchmark functions are shifted, rotated, expanded and combined variants of the classical functions and are among the most challenging forms of these test functions. They can be divided into three groups: unimodal, multimodal and composite functions. Table 1 lists the functions, where Dim indicates the dimension of the function, Range is the boundary of the function’s search space, and \(f_{\min}\) is the minimum return value of the function. We compare GGSA and GSA on problems of six different dimensions to verify the performance of both algorithms on problems of different scales. A detailed description of the benchmark functions is available in the technical report by Suganthan et al. [42].

The parameters of GGSA and GSA were set as listed in Table 2.

The experimental results are presented in Tables 3, 4, 5, 6, 7 and 8. The results are averaged over 30 independent runs, and the best results are indicated in bold type. The average (AVE) and standard deviation (STD) of the best solution obtained in the last iteration are reported in the form AVE ± STD. The source code of the GGSA algorithm is available at http://www.alimirjalili.com/Projects.html.

According to Derrac et al. [43], statistical tests should be conducted when evaluating evolutionary algorithms to establish that a proposed new algorithm offers a significant improvement over existing methods on a particular problem. To judge whether the results of GGSA and GSA differ in a statistically significant way, a nonparametric statistical test, Wilcoxon’s rank-sum test [44], is carried out at the 5 % significance level. The p values of the rank-sum test comparing the two algorithms on all the benchmark functions are given in Tables 4, 6 and 8. In these tables, N/A indicates “Not Applicable”, which means the test cannot be performed because both algorithms found the optimum in all runs. According to Derrac et al., p values less than 0.05 can be considered strong evidence against the null hypothesis. The results are given in the form Algorithm (p value), where Algorithm is the better algorithm based on the average of the results (AVE). In the following subsections, the simulation results on the benchmark functions are discussed in terms of search performance and convergence behaviour.
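For readers reproducing the statistics, a minimal pure-Python sketch of the two-sided Wilcoxon rank-sum test with the usual normal approximation and average ranks for ties is shown below. It is an illustration, not necessarily the implementation used for Tables 4, 6 and 8; in practice `scipy.stats.ranksums` computes the same normal-approximation statistic.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction).

    Returns (z, p). Tied values receive the average of the ranks they span."""
    data = list(a) + list(b)
    order = sorted(range(len(data)), key=data.__getitem__)
    ranks = [0.0] * len(data)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and data[order[j + 1]] == data[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

Called with the 30 final best values of GGSA and GSA on one function, a p value below 0.05 would be read as a significant difference, as in the text.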

4.1 Search performance analysis

As shown in Table 3, the GGSA and GSA provide similar results on 10-D unimodal benchmark functions. For the remaining dimensions, GGSA has the best results for all benchmark functions. The p values presented in Table 4 indicate that the results of GGSA are significantly better than GSA. The unimodal benchmark functions have only one global solution without any local optima so they are highly suitable for examining exploitation. Therefore, these results demonstrate that the proposed method provides greatly improved exploitation compared to the original GSA.

However, multimodal test functions have many local minima, whose number increases exponentially with dimension, so they are well suited to testing the exploration capability of an algorithm. The mean and STD values in Table 5 and the p values in Table 6 are for this class of benchmark functions. These tables show that GGSA provided better results than GSA in the majority of dimensions. GGSA completely outperformed GSA on F7 and F9 for all dimensions. For some of the remaining benchmark functions, GSA provides apparently better results, but the p values indicate that in some of these cases the difference is not statistically significant.

The third class of benchmark functions, the composite functions, have extremely complex structures with many local minima, similar to real-world problems. These functions are thus suitable to test an algorithm in terms of both exploration and exploitation. Many of the results for these functions presented in Tables 7 and 8 are inconclusive. However, GGSA still showed generally better results, particularly for higher-dimensional functions.

To give an overall picture of the performance of these algorithms, a summary of the results is provided in Figs. 5 and 6. The results of GGSA tend to be significantly better than those of GSA as the dimension and complexity of the problems rise (e.g., GGSA provides a significant improvement on almost all benchmark functions in 200 and 300 dimensions). This trend can be seen in both figures.

In summary, the results on unimodal functions strongly suggest that GGSA has superior exploitation to GSA. The results on multimodal functions also show that the proposed method is capable of exploring the search space efficiently. Moreover, the results on composite functions support the claim that the proposed adaptive values for \(c_1'\) and \(c_2'\) are a suitable way to balance exploration and exploitation. In the next subsection, the convergence behaviour of GGSA and GSA is investigated.

4.2 Convergence analysis

The convergence curves of both algorithms on the benchmark functions at all dimensions are illustrated in Fig. 11 in the Appendix. As may be seen from these curves, GGSA has a much better convergence rate than GSA on the unimodal benchmark functions for all dimensions. Generally speaking, the convergence of GGSA surpasses that of GSA from the initial steps of a run. Given this behaviour and the characteristics of unimodal functions, the proposed method successfully improves the convergence rate compared with GSA, and hence the effectiveness of exploitation.

The convergence of the algorithms on the majority of multimodal and composite benchmark functions follows a different pattern. GGSA has worse convergence than GSA in the initial iterations. However, its search process is progressively accelerated over the iterations, and this acceleration helps GGSA to surpass GSA after about a quarter of the iterations. This suggests that GGSA is able to balance exploration and exploitation to find the global optimum rapidly and effectively.

Overall, these results show that the proposed algorithm is able to significantly improve on the performance of GSA, overcoming its major shortcomings. In the next section, the performance of GGSA in solving real constrained engineering problems is examined.

5 GGSA for classical design engineering problems

In the field of meta-heuristics, it is very common for an algorithm to provide very promising results on test problems but poor results on real, challenging problems. In other words, an algorithm may exploit known characteristics of the test functions (symmetry, for instance) to provide superior results. For this reason, some classical engineering design problems with unknown search spaces are available in the literature for benchmarking meta-heuristics. Moreover, real problems usually have constraints, and an algorithm must be able to handle constraints in order to solve them. In this section, two constrained engineering design problems (tension/compression spring design and welded beam design) are employed to further investigate the efficiency of the proposed method on real problems. We have chosen these classical problems because they are constrained, so they benchmark the ability of the proposed method to solve constrained problems. Moreover, they are simplified versions of real problems with unknown search spaces, so they also test the ability of the proposed method to find the optima of unknown, challenging search spaces.

5.1 Tension/compression spring design

The objective of this problem is to minimise the weight of a tension/compression spring [45–47]. The minimisation process is subject to some constraints such as shear stress, surge frequency and minimum deflection. There are three variables in this problem: wire diameter (d), mean coil diameter (D) and the number of active coils (N). The mathematical formulation of this problem is as follows:

This problem has been tackled by both mathematical and heuristic approaches. Ha and Wang solved this problem using PSO [48]. The evolution strategy (ES) [49], GA [50], harmony search (HS) [51] and DE [52] algorithms have also been employed as heuristic optimisers for this problem. The mathematical approaches that have been adopted are the numerical optimisation technique (constraints correction at constant cost) [45] and a mathematical optimisation technique [46]. The results of these techniques and the proposed method are compared in Table 9. Note that we use a penalty function for both GGSA and GSA to perform a fair comparison [53]. As shown in Table 9, GSA outperforms only two of the algorithms, whereas GGSA has the best result. The convergence curves of GGSA and GSA on this problem are illustrated in Fig. 7. The convergence behaviour is consistent with the previous results: GGSA accelerates over the iterations, and as the exploitation phase begins, it surpasses GSA and eventually exploits a better solution.
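For reference, the sketch below encodes the tension/compression spring problem in the form commonly used in the literature, with x = (d, D, N), together with a static quadratic penalty. The formulation is assumed to match the one used here, and the penalty coefficient of \(10^6\) is a hypothetical choice, since the text only states that a penalty function [53] is used for both algorithms.

```python
def spring_weight(x):
    """Spring weight (N + 2) * D * d^2, with x = (d, D, N)."""
    d, D, N = x
    return (N + 2.0) * D * d ** 2


def spring_constraints(x):
    """Constraints g_k(x) <= 0: deflection, shear stress, surge frequency, outer diameter."""
    d, D, N = x
    return [
        1.0 - (D ** 3 * N) / (71785.0 * d ** 4),
        (4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,
        1.0 - 140.45 * d / (D ** 2 * N),
        (d + D) / 1.5 - 1.0,
    ]


def penalised_weight(x, coeff=1e6):
    """Static quadratic penalty (hypothetical coefficient); feasible points keep their weight."""
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(x))
    return spring_weight(x) + coeff * violation


# Variable bounds: 0.05 <= d <= 2, 0.25 <= D <= 1.3, 2 <= N <= 15.
BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]
```

An optimiser can then minimise `penalised_weight` within `BOUNDS`; infeasible points are charged in proportion to their squared constraint violation, which makes them very light masses in GSA terms.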

5.2 Welded beam design

The objective of this problem is to minimise the fabrication cost of a welded beam [50]. The constraints are shear stress (τ), bending stress in the beam (θ), buckling load on the bar (\(P_c\)), end deflection of the beam (δ) and side constraints. This problem has four variables: thickness of weld (h), length of attached part of bar (l), height of the bar (t) and thickness of the bar (b). The mathematical formulation is as follows:

Coello [54] and Deb [55, 56] employed GA, whereas Lee and Geem [57] utilised HS to solve this problem. Richardson’s random method, the simplex method, Davidon–Fletcher–Powell, and Griffith and Stewart’s successive linear approximation are the mathematical approaches that have been adopted by Ragsdell and Phillips [58] for this problem. The comparison results are provided in Table 10. The results show that GGSA and GSA both perform well on this problem, although the result of GGSA is better than that of GSA. Moreover, Fig. 8 shows that GGSA has a slightly better convergence rate, with the same acceleration noted in the previous benchmarks.

A possible reason for the advantage of GGSA is that GSA is not able to discover new feasible areas in the search space when masses must cross an infeasible area to reach a new feasible one. This phenomenon is visualised in Fig. 9, where the masses are unable to move to the second feasible zone because there is no mass there to attract them. Moreover, infeasible masses are extremely light when a penalty function or large fitness values are used to handle constraints, so they are attracted back as soon as they leave a feasible area. As Fig. 10 shows, however, the proposed method helps masses cross infeasible areas of the search space. Therefore, the proposed method not only accelerates masses towards the best solution but can also help them discover new feasible areas in some situations.

In summary, the results show that the proposed method outperforms GSA on the majority of benchmark functions. Furthermore, the classical engineering problems show that GGSA provides very competitive results, indicating that it performs better than GSA on constrained problems. This comparative study therefore suggests that the proposed method has merit in the field of evolutionary algorithms and optimisation.

6 Conclusion

In this work, the exploitation of GSA was improved by accelerating the masses towards the best solution obtained so far. The proposed method showed superior performance on 25 benchmark functions in terms of improved avoidance of local minima and increased convergence rate.

Furthermore, two classical engineering design problems were employed to benchmark the performance of the proposed method when applied to real problems. The results verified the superior performance of GGSA on optimising constrained problems when compared to GSA, due to its improved ability to discover new, promising feasible areas.

For future studies, it would be interesting to expand the application of GGSA further on other real-world engineering problems.

References

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of IEEE international conference on neural networks, vol 4, pp 1942–1948

Shi Y, Eberhart R (1998) A modified particle swarm optimizer. In: IEEE international conference on evolutionary computation. Anchorage, Alaska, pp 69–73

Holland JH (1992) Genetic algorithms. Sci Am 267:66–72

Price K, Storn R (1997) Differential evolution. Dr. Dobb’s J 22:18–20

Price KV, Storn RM, Lampinen JA (2005) Differential evolution: a practical approach to global optimization. Springer, New York

Dorigo M, Birattari M, Stützle T (2006) Ant colony optimization. IEEE Comput Intell Mag 1(4):28–39. doi:10.1109/MCI.2006.329691

Simon D (2008) Biogeography-based optimization. IEEE Trans Evol Comput 12:702–713

Mirjalili S, Mirjalili SM, Lewis A (2014) Let a biogeography-based optimizer train your multi-layer perceptron. Inf Sci 269:188–209. doi:10.1016/j.ins.2014.01.038

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Gandomi AH, Alavi AH (2012) Krill Herd: a new bio-inspired optimization algorithm. Commun Nonlinear Sci Numer Simul 17:4831–4845

Guo L, Wang G-G, Gandomi AH, Alavi AH, Duan H (2014) A new improved krill herd algorithm for global numerical optimization. Neurocomputing. doi:10.1016/j.neucom.2014.01.023

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179:2232–2248

Mirjalili S, Mirjalili SM, Yang X-S (2013) Binary bat algorithm. Neural Comput Appl 1–19. doi:10.1007/s00521-013-1525-5

Mirjalili S, Lewis A (2013) S-shaped versus V-shaped transfer functions for binary particle swarm optimization. Swarm Evol Comput 9:1–14

Lai X, Zhang M (2009) An efficient ensemble of GA and PSO for real function optimization. In: 2nd IEEE international conference on computer science and information technology (ICCSIT 2009), pp 651–655

Esmin AAA, Lambert-Torres G, Alvarenga GB (2006) Hybrid evolutionary algorithm based on PSO and GA mutation. In: International conference on hybrid intelligent systems. IEEE Computer Society, Los Alamitos, CA, USA, p 57. http://doi.ieeecomputersociety.org/10.1109/HIS.2006.33

Zhang WJ, Xie XF (2003) DEPSO: hybrid particle swarm with differential evolution operator, vol 4, pp 3816–3821

Niu B, Li L (2008) A novel PSO-DE-based hybrid algorithm for global optimization. In: advanced intelligent computing theories and applications. With aspects of artificial intelligence, pp 156–163

Holden NP, Freitas AA (2007) A hybrid PSO/ACO algorithm for classification, pp 2745–2750

Holden N, Freitas AA (2008) A hybrid PSO/ACO algorithm for discovering classification rules in data mining. J Artif Evol Appl 2008:2

Wang G-G, Gandomi AH, Alavi AH, Hao G-S (2013) Hybrid krill herd algorithm with differential evolution for global numerical optimization. Neural Comput Appl 1–12. doi:10.1007/s00521-013-1485-9

Wang G-G, Gandomi AH, Alavi AH (2013) An effective krill herd algorithm with migration operator in biogeography-based optimization. Appl Math Model. doi:10.1016/j.apm.2013.10.052

Noman N, Iba H (2008) Accelerating differential evolution using an adaptive local search. IEEE Trans Evol Comput 12:107–125

Chen J, Qin Z, Liu Y, Lu J (2005) Particle swarm optimization with local search, pp 481–484

Chen S, Mei T, Luo M, Yang X (2007) Identification of nonlinear system based on a new hybrid gradient-based PSO algorithm, pp 265–268

Meuleau N, Dorigo M (2002) Ant colony optimization and stochastic gradient descent. Artif Life 8:103–121

Wang G-G, Gandomi AH, Alavi AH (2013) A chaotic particle-swarm krill herd algorithm for global numerical optimization. Kybernetes 42:9

Wang G-G, Guo L, Gandomi AH, Hao G-S, Wang H (2014) Chaotic Krill Herd algorithm. Inf Sci. doi:10.1016/j.ins.2014.02.123

Saremi S, Mirjalili SM, Mirjalili S (2014) Chaotic Krill Herd optimization algorithm. Procedia Technol 12:180–185

Wang G-G, Gandomi AH, Alavi AH (2014) Stud Krill Herd algorithm. Neurocomputing 128:363–370

Wang G, Guo L, Wang H, Duan H, Liu L, Li J (2014) Incorporating mutation scheme into krill herd algorithm for global numerical optimization. Neural Comput Appl 24(3–4):853–871. doi:10.1007/s00521-012-1304-8

Zhang Y, Wu L, Zhang Y, Wang J (2012) Immune gravitation inspired optimization algorithm. In: Huang D-S, Gan Y, Bevilacqua V, Figueroa J (eds) Advanced intelligent computing, vol 6838. Springer, Berlin/Heidelberg, pp 178–185

Sinaie S (2010) Solving shortest path problem using gravitational search algorithm and neural networks. Master's thesis, Faculty of Computer Science and Information Systems, Universiti Teknologi Malaysia (UTM), Johor Bahru, Malaysia

Shaw B, Mukherjee V, Ghoshal SP (2012) A novel opposition-based gravitational search algorithm for combined economic and emission dispatch problems of power systems. Int J Electric Power Energy Syst 35:21–33

Chen H, Li S, Tang Z (2011) Hybrid gravitational search algorithm with random-key encoding scheme combined with simulated annealing. IJCSNS 11:208

Hatamlou A, Abdullah S, Othman Z (2011) Gravitational search algorithm with heuristic search for clustering problems. In: 3rd conference on data mining and optimization (DMO 2011), pp 190–193

Li C, Zhou J (2011) Parameters identification of hydraulic turbine governing system using improved gravitational search algorithm. Energy Convers Manag 52:374–381

Mirjalili S, Mohd Hashim SZ, Moradian Sardroudi H (2012) Training feed forward neural networks using hybrid particle swarm optimization and gravitational search algorithm. Appl Math Comput 218:11125–11137

Mirjalili S, Hashim SZM (2010) A new hybrid PSOGSA algorithm for function optimization. In: 2010 international conference on computer and information application (ICCIA), pp 374–377

Gauci M, Dodd TJ, Groß R (2012) Why ‘GSA: a gravitational search algorithm’ is not genuinely based on the law of gravity. Nat Comput 11(4):719–720. doi:10.1007/s11047-012-9322-0

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2010) BGSA: binary gravitational search algorithm. Nat Comput 9:727–745

Suganthan PN, Hansen N, Liang JJ, Deb K, Chen Y, Auger A, Tiwari S (2005) Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization. Technical report 2005005, Nanyang Technological University, Singapore

Derrac J, García S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol Comput 1(1):3–18. doi:10.1016/j.swevo.2011.02.002

Wilcoxon F (1945) Individual comparisons by ranking methods. Biometrics Bull 1:80–83

Arora JS (2004) Introduction to optimum design. Academic Press, London

Belegundu AD (1983) Study of mathematical programming methods for structural optimization. Dissertation Abstracts International Part B: Science and Engineering, vol 43, p 1983

Coello Coello CA, Mezura Montes E (2002) Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Adv Eng Inf 16:193–203

He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20:89–99

Mezura-Montes E, Coello CAC (2008) An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int J General Syst 37:443–473

Coello Coello CA (2000) Use of a self-adaptive penalty approach for engineering optimization problems. Comput Ind 41:113–127

Mahdavi M, Fesanghary M, Damangir E (2007) An improved harmony search algorithm for solving optimization problems. Appl Math Comput 188:1567–1579

Huang F, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186:340–356

Yang XS (2011) Nature-inspired metaheuristic algorithms. Luniver Press, UK

Coello Coello CA (2000) Constraint-handling using an evolutionary multiobjective optimization technique. Civil Eng Syst 17:319–346

Deb K (1991) Optimal design of a welded beam via genetic algorithms. AIAA J 29:2013–2015

Deb K (2000) An efficient constraint handling method for genetic algorithms. Comput Methods Appl Mech Eng 186:311–338

Lee KS, Geem ZW (2005) A new meta-heuristic algorithm for continuous engineering optimization: harmony search theory and practice. Comput Methods Appl Mech Eng 194:3902–3933

Ragsdell K, Phillips D (1976) Optimal design of a class of welded structures using geometric programming. ASME J Eng Ind 98:1021–1025

Appendix

See Fig. 11.

Cite this article

Mirjalili, S., Lewis, A. Adaptive gbest-guided gravitational search algorithm. Neural Comput & Applic 25, 1569–1584 (2014). https://doi.org/10.1007/s00521-014-1640-y

DOI: https://doi.org/10.1007/s00521-014-1640-y