Abstract

Quantifying the information contained in given data in numerical form is very useful in decision-making problems. In Atanassov intuitionistic fuzzy sets (A-IFSs), such quantification becomes more important because of uncertainties such as intuitionism and fuzziness. The distribution of these uncertainties is key to determining the knowledge associated with Atanassov intuitionistic fuzzy values (A-IFVs). In this paper, the distribution of these uncertainties and their relationship are discussed first. Then, knowledge measures with certain desired properties are defined as functions of entropy and uncertainty index, and the existence of such knowledge measures is established. Further, it is shown how the proposed knowledge measures are useful in multi-criteria group decision-making (MCGDM) problems.


1 Introduction

Fuzzy set theory is very useful for handling the uncertainty exhibited in many situations (Zadeh 1965). In fuzzy sets, information about an element is represented by a membership degree from the interval [0, 1]. In many situations in daily life, both positive and negative aspects are attached to certain objects. In such circumstances, intuitionistic fuzzy set theory as proposed by Atanassov is very useful. Atanassov intuitionistic fuzzy set (A-IFS) theory is a generalization of fuzzy set theory (Atanassov 1986, 1999; Atanassov et al. 2010). In A-IFSs, membership and non-membership degrees are assigned to every element of the set under consideration. These degrees are taken from the unit interval [0,1] and convey information for and against the membership of an element in an A-IFS. In Szmidt and Kacprzyk (2014), such information is termed positive and negative, respectively. During the past decades, A-IFSs have become popular among mathematicians, scientists, researchers and practitioners because of their various applications (De et al. 2001; Kharal 2009; Lin et al. 2007; Liu and Wang 2007; Ouyang and Pedrycz 2016).

In A-IFSs, at least two types of uncertainty are attached to every element of the universe. The first is the uncertainty index (also called the hesitation margin, or intuitionism). If \(\mu_{A} \left( x \right) \) is the membership degree and \(\gamma_{A} \left( x \right)\) is the non-membership degree of an element \(x\) in an A-IFS A, then its uncertainty index is \( 1 - \mu_{A} \left( x \right) - \gamma_{A} \left( x \right)\). The second type of uncertainty associated with A-IFSs is entropy. There are at least two different approaches to defining entropy in A-IFSs. In the first approach, given by Bustince and Burillo (1996), the entropy of an A-IFS refers to its intuitionism, whereas in the second, presented by Szmidt and Kacprzyk (2001), it refers to its fuzziness. Entropy measures for A-IFSs are discussed, for example, in Bustince and Burillo (1996), Chen (2011), De et al. (2001), Farhadinia (2013), Nguyen (2016), Nguyen (2015), Szmidt and Baldwin (2004), Szmidt et al. (2009), and Szmidt and Kacprzyk (2001).

The study of knowledge measures for different types of fuzzy sets is an area of keen interest among researchers (Guo and Xu 2018; Lalotra and Singh 2020; Szmidt and Kacprzyk 2014). As discussed above, an entropy measure tells how fuzzy an A-IFS is. Initially, it was thought that entropy and knowledge measures might behave as duals of each other; see, for example, Nguyen (2016, 2015) and Szmidt et al. (2009). Later, however, Szmidt and Kacprzyk proposed that a knowledge measure must depend on both entropy and uncertainty index, and they defined a knowledge measure accordingly (Szmidt and Kacprzyk 2014). For a constant value of the uncertainty index, entropy and this knowledge measure were expected to behave as duals of each other, but this turned out not to be the case. According to Guo (2016) and Guo and Xu (2018), entropy and knowledge measures are distinct quantities and must therefore be treated independently; hence, an axiomatic approach was adopted to define knowledge measures (Guo 2016; Guo and Xu 2018). On closer inspection, however, the axioms defining a knowledge measure in Guo (2016) and Guo and Xu (2018) are closely linked with a certain type of entropy. Various knowledge measures have been defined for A-IFSs (Guo 2016; Guo and Xu 2018; Nguyen 2016, 2015; Szmidt and Kacprzyk 2014). All of them can differentiate only between crisp sets and A-IFSs; they cannot distinguish a fuzzy set from an A-IFS. Since the uncertainty index of a fuzzy set is zero, such knowledge measures do not produce reasonable results in this case.
In the available literature on knowledge measures, almost all authors share the viewpoint that a knowledge measure must increase when entropy or uncertainty index decreases, and vice versa; that is, a knowledge measure must be a decreasing function of both entropy and uncertainty index. However, to the best of our knowledge, no definition of a knowledge measure is available in the form of a generic function of entropy and uncertainty index.

The aim of this paper is to show that knowledge measures should be defined by multivariable functions that depend on both entropy and uncertainty index and have certain desired properties. The paper also establishes the existence of such functions.

Yu (2014) suggested confidence aggregation operators for IFVs. These operators are very useful in MCGDM problems. However, Yu (2014) does not specify how the confidence associated with a given IFV is to be calculated. The proposed knowledge measures offer a way to quantify the level of confidence associated with the IFV of a particular alternative.

The rest of this paper is organized as follows. In Sect. 2, some basic notions related to A-IFSs are given. Section 3 is devoted to a detailed discussion of the intuitionism and entropy of A-IFSs, including their relationship and their distribution in the set of A-IFVs. In Sect. 4, existing knowledge measures are critically examined. A framework for new knowledge measures is given in Sect. 5. In Sect. 6, an example shows how the proposed knowledge measures are useful in MCGDM problems.

2 Preliminaries

In this section, some basic notions related to A-IFSs are given.

Definition 1

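Atanassov (1986) Let X be a fixed set. An Atanassov intuitionistic fuzzy set (A-IFS) A on X is defined as

\(A = \left\{ {\left\langle {x,\mu_{A} \left( x \right),\gamma_{A} \left( x \right)} \right\rangle :x \in X} \right\},\)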

where \(\mu_{A} :X \to \left[ {0,1} \right] \) and \(\gamma_{A} :X \to \left[ {0,1} \right]\) are two functions: \(\mu_{A} \left( x \right)\) is the membership degree and \(\gamma_{A} \left( x \right)\) is the non-membership degree of \(x \in X\), such that \(\mu_{A} \left( x \right) + \gamma_{A} \left( x \right) \le 1\). The values \(\mu_{A} \left( x \right) \) and \( \gamma_{A} \left( x \right)\) indicate the degree to which the element x satisfies and does not satisfy, respectively, the property described by A. When there is no confusion, an A-IFS A is briefly denoted by the ordered pair \( {\text{A}} = \left( {\mu_{A} ,\gamma_{A} } \right)\).

Definition 2

Atanassov (1999) Let \({\text{A}} = \left( {\mu_{A} ,\gamma_{A} } \right)\) be an A-IFS on a set \( X\). The quantity \(\pi_{A} \left( x \right) = 1 - \mu_{A} \left( x \right) - \gamma_{A} \left( x \right) \) is called the degree of hesitation or uncertainty index of the element \( x \in X\). If \(\pi_{A} \left( x \right) = 0\) for all \(x \in X,\) then the A-IFS A reduces to an ordinary fuzzy set.

The notion of Atanassov intuitionistic fuzzy values (A-IFVs) was introduced by Xu and Yager (2006).

Definition 3

Xu and Yager (2006) An A-IFV \(\alpha = \left( {\mu_{\alpha } ,\gamma_{\alpha } } \right)\) is an ordered pair such that \(\left( {\mu_{\alpha } ,\gamma_{\alpha } } \right) \in \left[ {0,1} \right]^{2}\) and \(\mu_{\alpha } + \gamma_{\alpha } \le 1.\)

The set of all A-IFVs is denoted by Θ. Geometrically, Θ can be represented by the region bounded by the right triangle OAB, as shown in Fig. 1.

The quantity \(\pi_{\alpha } = 1 - \mu_{\alpha } - \gamma_{\alpha }\) is called the uncertainty index associated with α. A higher value of \(\pi_{\alpha }\) means that less information about \( \alpha\) is available; hence, a higher risk is involved in accepting or rejecting an object that has the value α. For fuzzy values, \(\pi_{\alpha } = 0\) ⇒ \(1 - \mu_{\alpha } - \gamma_{\alpha } = 0\) ⇒ \(\gamma_{\alpha } = 1 - \mu_{\alpha }\). Therefore, \(\alpha = \left( {\mu_{\alpha } ,1 - \mu_{\alpha } } \right) \) or, equivalently, \( \alpha = \left( {1 - \gamma_{\alpha } ,\gamma_{\alpha } } \right)\).
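As a small illustration of Definitions 2 and 3, the following Python sketch represents an A-IFV as a plain `(mu, gamma)` tuple. The helper names `is_aifv`, `hesitation` and `is_fuzzy_value`, and the rounding tolerance `EPS`, are hypothetical choices made only for this example.

```python
EPS = 1e-12  # assumed tolerance for floating-point comparisons


def is_aifv(mu: float, gamma: float) -> bool:
    """Definition 3: (mu, gamma) lies in [0, 1]^2 and mu + gamma <= 1."""
    return 0.0 <= mu <= 1.0 and 0.0 <= gamma <= 1.0 and mu + gamma <= 1.0 + EPS


def hesitation(mu: float, gamma: float) -> float:
    """Uncertainty index pi = 1 - mu - gamma of an A-IFV."""
    if not is_aifv(mu, gamma):
        raise ValueError(f"({mu}, {gamma}) is not an A-IFV")
    return max(0.0, 1.0 - mu - gamma)  # clamp tiny negative rounding errors


def is_fuzzy_value(mu: float, gamma: float) -> bool:
    """pi = 0, i.e. gamma = 1 - mu: the A-IFV is an ordinary fuzzy value."""
    return hesitation(mu, gamma) <= EPS


print(hesitation(0.4, 0.1), is_fuzzy_value(0.3, 0.7), is_fuzzy_value(0.2, 0.3))
```

For instance, `hesitation(0.4, 0.1)` is 0.5 up to rounding, `(0.3, 0.7)` is recognized as a fuzzy value, and `(0.2, 0.3)` is not.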

3 Uncertainty index (intuitionism) and entropy (fuzziness)

There are at least two types of uncertainty associated with A-IFSs: one, related to intuitionism, is called the uncertainty index, whereas the other, associated with fuzziness, is called entropy. In this section, the notions of uncertainty index and entropy are studied in detail, together with how they are distributed in Θ, the set of all Atanassov intuitionistic fuzzy values.

3.1 Classification of A-IFVs by uncertainty index

Define a relation \(\sim_{\pi }\) on Θ as follows:

For \(\alpha ,\beta \in \Theta , \alpha \sim_{\pi } \beta\) if and only if \( \pi_{\alpha } = \pi_{\beta }\).

It is easy to see that \(\sim_{\pi }\) is an equivalence relation on Θ. This yields a classification of A-IFVs with respect to the uncertainty index, in which all A-IFVs with the same uncertainty index belong to the same class. Denote the set of all such classes by Θ/\(\sim_{\pi }\); that is, Θ/\(\sim_{\pi }\) = \(\left\{ {\left[ \alpha \right]_{{\sim_{\pi } }} : \alpha \in {\Theta }} \right\}\), where \(\left[ \alpha \right]_{{\sim_{\pi } }}\) is the class containing \( \alpha \in \) Θ. A geometrical representation of some of these classes is given in Fig. 2.

From Fig. 2, it can be seen that each class \(\left[ \alpha \right]_{{\sim_{\pi } }}\) is a straight line segment parallel to the line \( \mu + \gamma = 1\). These segments may be regarded as having their initial point on the \(\mu\)-axis and their terminal point on the \(\gamma\)-axis, and their lengths vary from \(0 \) to \( \sqrt{2}\). Any point on such a segment can represent the whole class. Since the segment representing a class has equal \(\mu\)- and \(\gamma\)-intercepts, either its initial point or its terminal point determines the class. For \( 0 \le a \le 1\), the point \(\left( {a,0} \right)\) is the initial point of exactly one class in Θ/\(\sim_{\pi }\), namely the class of all A-IFVs with \(\pi = 1 - a\). Therefore, \(\left[ {\left( {a,0} \right)} \right]_{{\sim_{\pi } }}\) denotes the class containing the A-IFV \( \left( {a,0} \right)\).
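The classification by uncertainty index can be sketched in a few lines of Python. Each A-IFV is keyed by the initial point \((a,0)\) of its class, where \(a = \mu + \gamma = 1 - \pi\). The function names and the rounding of keys (used to group floats that differ only by rounding error) are assumptions made for this illustration.

```python
from collections import defaultdict


def pi_class_key(mu: float, gamma: float, ndigits: int = 9) -> tuple:
    """Initial point (a, 0) of the ~pi class containing (mu, gamma).

    All members of the class lie on the segment mu + gamma = a with
    a = 1 - pi, so the point (a, 0) identifies the class. Rounding to
    `ndigits` is a practical assumption for floating-point input.
    """
    return (round(mu + gamma, ndigits), 0.0)


def classify_by_pi(values):
    """Group A-IFVs (mu, gamma) into ~pi classes keyed by their initial point."""
    classes = defaultdict(list)
    for mu, gamma in values:
        classes[pi_class_key(mu, gamma)].append((mu, gamma))
    return dict(classes)


sample = [(0.4, 0.1), (0.1, 0.4), (0.25, 0.25), (0.3, 0.7), (0.0, 0.0)]
groups = classify_by_pi(sample)
for key, members in groups.items():
    print(key, members)
```

Here \((0.4, 0.1)\), \((0.1, 0.4)\) and \((0.25, 0.25)\) fall into the class of \((0.5, 0)\), i.e. \(\pi = 0.5\).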

3.2 Classification of A-IFVs by entropy

In fuzzy set theory, fuzziness is measured by entropy. This is a non-probabilistic concept, different from the one introduced by Shannon (1948). De Luca and Termini (Luca and Termini 1972) introduced the concept of entropy for fuzzy sets, and many authors have studied this notion in the context of fuzzy sets. Liu (2007) gave a survey of entropy for fuzzy variables with reference to credibility theory. The study of entropy for A-IFSs has also attracted many authors, and there is a long list of contributions to this theory; see, for example, Bustince et al. (2013), Bustince and Burillo (1996), Hung and Yang (2006), Szmidt and Baldwin (2004, 2003, 2006), Szmidt et al. (2006, 2009), and Szmidt and Kacprzyk (2001, 2004, 2014). Many entropy measures for both A-IFSs and A-IFVs exist in the literature. The notion of entropy for A-IFSs was introduced by Bustince and Burillo (Bustince and Burillo 1996; Bustince et al. 2013); this entropy measure refers to the intuitionism in an A-IFS. The axioms for this entropy measure introduced by Burillo and Bustince can be found in Bustince and Burillo (1996).

Another approach to defining the entropy of an A-IFS is given by Szmidt and Kacprzyk (2001). This entropy measure quantifies the fuzziness in A-IFSs.

Definition 4

Pal et al. (2013) A function \(E: \) Θ \( \to \left[ {0,1} \right]\) may be called an entropy measure if the following hold:

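-

(E1)

\(E\left( \alpha \right) = 0\) if and only if \(\alpha = \left( {1,0} \right)\) or \(\alpha = \left( {0,1} \right)\), that is, α is crisp.

-

(E2)

\(E\left( \alpha \right) = 1\) if and only if \(\mu_{\alpha } = \gamma_{\alpha }\).

-

(E3)

\(E\left( \alpha \right) = E\left( {\alpha^{c} } \right)\), where \(\alpha^{c} = \left( {\gamma_{\alpha } ,\mu_{\alpha } } \right)\).

-

(E4)

For all \(\alpha ,\beta \in \) Θ, \(E\left( \alpha \right) \le E\left( \beta \right)\) if \(\mu_{\alpha } \le \mu_{\beta }\) and \(\gamma_{\alpha } \ge \gamma_{\beta }\) when \(\mu_{\beta } \le \gamma_{\beta }\), or if \(\mu_{\alpha } \ge \mu_{\beta }\) and \(\gamma_{\alpha } \le \gamma_{\beta }\) when \(\mu_{\beta } \ge \gamma_{\beta }\).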

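An entropy measure \(E_{S}\) for any \(x \in \) Θ is defined in Szmidt and Kacprzyk (2001) as follows:

\(E_{S} \left( x \right) = \frac{{\min \left( {\mu ,\gamma } \right) + \pi }}{{\max \left( {\mu ,\gamma } \right) + \pi }} \qquad (1)\)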

where \(\mu ,\gamma\) and \(\pi\) are the membership, non-membership, and the hesitation margin, respectively.
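The following Python sketch computes \(E_S\) for a single A-IFV. It assumes the Szmidt and Kacprzyk (2001) ratio form \((\min(\mu,\gamma) + \pi)/(\max(\mu,\gamma) + \pi)\). Substituting \(\pi = 1 - \mu - \gamma\) reduces this to \((1 - \max(\mu,\gamma))/(1 - \min(\mu,\gamma))\), which is what the code evaluates. The function name `entropy_s` is hypothetical.

```python
def entropy_s(mu: float, gamma: float) -> float:
    """Assumed Szmidt-Kacprzyk (2001) entropy of a single A-IFV (mu, gamma).

    (min(mu, gamma) + pi) / (max(mu, gamma) + pi) with pi = 1 - mu - gamma,
    which simplifies to (1 - max(mu, gamma)) / (1 - min(mu, gamma)).
    """
    if not (0.0 <= mu <= 1.0 and 0.0 <= gamma <= 1.0 and mu + gamma <= 1.0 + 1e-12):
        raise ValueError(f"({mu}, {gamma}) is not an A-IFV")
    # For any A-IFV, min(mu, gamma) <= 0.5, so the denominator is at least 0.5.
    return (1.0 - max(mu, gamma)) / (1.0 - min(mu, gamma))


for alpha in [(1.0, 0.0), (0.5, 0.5), (0.0, 0.0), (0.4, 0.1)]:
    print(alpha, entropy_s(*alpha))
```

Crisp values such as \((1, 0)\) get entropy 0, and every value with \(\mu = \gamma\), including \((0, 0)\), gets entropy 1.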

Another entropy measure, \(E_{X}\), due to Xia et al., is given in Wei and Liang (2013).

For any \(x \in \) Θ,

Nguyen (2015) criticized axiom (E2) of Definition 4. According to his suggestion, \(E\left( \alpha \right) = 1\) if and only if \(\pi \left( \alpha \right) = 1\); that is, fuzziness is maximal if and only if intuitionism is maximal.

Nguyen (2015) defined the entropy measure \(E_{N}\) as given below. For any \(x \in\) Θ,

Entropy based on ratios of Hamming distances from \(\left( {1,0} \right)\) and \(\left( {0,1} \right)\) is discussed in Szmidt and Kacprzyk (2014).

Definition 5

Szmidt and Kacprzyk (2014) The normalized Hamming distance between two A-IFSs A and B is given by:

Definition 6

Szmidt and Kacprzyk (2014) The entropy \(E_{SK} \left( x \right)\) of an A-IFV \(x\) is defined as follows:

\(E_{SK} \left( x \right) = \frac{a}{b},\)

where \(a\) is the shorter and \(b\) the larger of the normalized Hamming distances of \(x\) from \(\left( {1,0} \right)\) and from \( \left( {0,1} \right)\).
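Definitions 5 and 6 can be sketched for single A-IFVs as below. The sketch assumes the three-term normalized Hamming distance \(\frac{1}{2}(|\Delta\mu| + |\Delta\gamma| + |\Delta\pi|)\) commonly used by Szmidt and Kacprzyk, averaged over the elements for A-IFSs. The exact display of Definition 5 is not reproduced here, so this choice is an assumption, and all function names are hypothetical.

```python
from typing import Sequence, Tuple

AIFV = Tuple[float, float]  # (mu, gamma)


def hamming(x: AIFV, y: AIFV) -> float:
    """Assumed three-term normalized Hamming distance between two A-IFVs."""
    (mu1, g1), (mu2, g2) = x, y
    pi1, pi2 = 1.0 - mu1 - g1, 1.0 - mu2 - g2
    return 0.5 * (abs(mu1 - mu2) + abs(g1 - g2) + abs(pi1 - pi2))


def hamming_sets(A: Sequence[AIFV], B: Sequence[AIFV]) -> float:
    """Distance between two A-IFSs on the same n elements: mean element-wise distance."""
    if len(A) != len(B) or not A:
        raise ValueError("A and B must be non-empty and defined on the same elements")
    return sum(hamming(a, b) for a, b in zip(A, B)) / len(A)


def entropy_sk(x: AIFV) -> float:
    """E_SK(x) = a / b: shorter over larger distance of x from (1, 0) and (0, 1)."""
    d1, d2 = hamming(x, (1.0, 0.0)), hamming(x, (0.0, 1.0))
    a, b = min(d1, d2), max(d1, d2)
    return a / b  # under this distance, b >= 0.5 for every A-IFV


print(entropy_sk((1.0, 0.0)), entropy_sk((0.5, 0.5)))
```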

Recently, the question of how entropy is distributed has gained importance (Guo and Xu 2018). This distribution depends mainly on the definition of entropy. To study it for A-IFSs, a useful approach is to examine the classifications of Θ induced by different entropies.

In Nguyen (2015), the following entropy \(E_{N}\) is given:

Here, maximum entropy (fuzziness) coincides with maximum uncertainty index (intuitionism), which arguably limits the usefulness of both concepts. This entropy is not discussed further in this paper.

Since entropy measures reflect the fuzziness of A-IFVs, their distribution is discussed in detail below.

Define a relation \(\sim_{E }\) on Θ as follows. For \(\alpha ,\beta \in \Theta , \alpha \sim_{E } \beta\) if and only if \( E\left( \alpha \right) = E\left( \beta \right)\), where E is an entropy measure.

Clearly, \(\sim_{E }\) is an equivalence relation, and Θ/\(\sim_{E }\) = \(\left\{ {\left[ \alpha \right]_{{\sim_{E} }} : \alpha \in {\Theta }} \right\}\) is the set of all classes obtained from the entropy measure E.

First, consider geometrically the classes of Θ produced by the entropy measure \(E_{S}\). All classes in Θ/\(\sim_{{E_{S} }}\) = \(\left\{ {\left[ \alpha \right]_{{\sim_{{E_{S} }} }} : \alpha \in {\Theta }} \right\}\) are parts of straight lines passing through the point \(\left( {1,1} \right) \), with slopes ranging from \( 0\) to \(\infty\). The class \(\left[ {1,0} \right]_{{\sim_{{E_{S} }} }}\) consists of the two elements \(\left( {1,0} \right)\) and \( \left( {0,1} \right)\). The class \(\left[ {0,0} \right]_{{\sim_{{E_{S} }} }}\) consists of a single line segment with initial point \(\left( {0,0} \right)\) and terminal point \(\left( {0.5,0.5} \right)\). Every other class consists of exactly two line segments of equal length, one above the class \(\left[ {0,0} \right]_{{\sim_{{E_{S} }} }}\) and the other below it. Shapes of some classes are given in Fig. 3.
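The claim that the \(E_S\) classes lie on lines through \((1,1)\) can be checked numerically. The sketch below again assumes the ratio form \((1 - \max(\mu,\gamma))/(1 - \min(\mu,\gamma))\) of \(E_S\). It samples the segment from \((a,0)\) towards \((1,1)\), stopping on the line \(\mu + \gamma = 1\), together with its mirror image in \(\mu = \gamma\), and evaluates \(E_S\) along both. The helper `class_segment` is hypothetical.

```python
def entropy_s(mu, gamma):
    """Assumed ratio form of E_S: (1 - max(mu, gamma)) / (1 - min(mu, gamma))."""
    return (1 - max(mu, gamma)) / (1 - min(mu, gamma))


def class_segment(a, n=6):
    """n points from (a, 0) towards (1, 1), stopping on the line mu + gamma = 1.

    Points are (a + t(1 - a), t) for t in [0, (1 - a)/(2 - a)]; requires 0 <= a < 1.
    """
    t_max = (1 - a) / (2 - a)
    ts = [t_max * k / (n - 1) for k in range(n)]
    return [(a + t * (1 - a), t) for t in ts]


a = 0.4
lower = class_segment(a)
upper = [(g, m) for m, g in lower]  # mirror image in the line mu = gamma
values = [entropy_s(m, g) for m, g in lower + upper]
print(values)  # every value equals 1 - a = 0.6 up to rounding
```

Along the lower segment \(E_S = (1-a)(1-t)/(1-t) = 1 - a\), so both segments belong to the class of \((a, 0)\).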

When the entropy \(E_{X}\) given in Wei and Liang (2013) is considered, all classes in Θ/\(\sim_{{E_{X} }}\) = \(\left\{ {\left[ \alpha \right]_{{\sim_{{E_{X} }} }} : \alpha \in {\Theta }} \right\}\) are parts of straight lines passing through the point \(\left( {1,1} \right)\), with slopes ranging from \(0\) to \(\infty\). The class \(\left[ {1,0} \right]_{{\sim_{{E_{X} }} }}\) consists of the two elements \(\left( {1,0} \right)\) and \( \left( {0,1} \right)\). The class \(\left[ {0,0} \right]_{{\sim_{{E_{X} }} }}\) is represented by a single line segment with initial point \(\left( {0,0} \right)\) and terminal point \( \left( {0.5,0.5} \right)\). Every other class consists of exactly two line segments of equal length, one above the class \(\left[ {0,0} \right]_{{\sim_{{E_{X} }} }}\) and the other below it.

From the above discussion and Figs. 3 and 4, it can be concluded that the entropies defined by Eqs. (1) and (2) classify Θ in the same manner, through the same family of lines passing through the point \(\left( {1,1} \right).\)

Geometrical representations of some classes for the entropy measure \(E_{SK}\) given in Definition 6 are shown in Fig. 5.

All classes in the set Θ/\(\sim_{{E_{SK} }}\) = \(\left\{ {\left[ \alpha \right]_{{\sim_{{E_{SK} }} }} : \alpha \in {\Theta }} \right\}\) are parts of parallel straight lines with slope 1. The class \(\left[ {0,0} \right]_{{\sim_{{E_{SK} }} }}\) is a line segment with initial point \(\left( {0,0} \right)\) and terminal point \(\left( {0.5,0.5} \right),\) whereas the class \(\left[ {1,0} \right]_{{\sim_{{E_{SK} }} }}\) has only the two elements \(\left( {1,0} \right)\) and \( \left( {0,1} \right)\). Each remaining class is represented by two line segments, one above the line \(\mu = \gamma\) and the other below it. In Fig. 5, lines equidistant from the line \(\mu = \gamma \) represent a single class.

3.3 Entropy in a class \(\left[ {a,0} \right]_{{\sim_{\pi } }}\)

In any class \(\left[ {a,0} \right]_{{\sim_{ \pi } }}\), entropy is lowest at the end points \(\left( {a,0} \right)\) and \(\left( {0,a} \right).\) From \(\left( {a,0} \right)\) to the midpoint \(\left( {\frac{a}{2},\frac{a}{2}} \right)\), it increases gradually and attains its maximum value 1 at \(\left( {\frac{a}{2},\frac{a}{2}} \right)\). Then, from \(\left( {\frac{a}{2},\frac{a}{2}} \right)\) to \(\left( {0,a} \right)\), it decreases gradually from 1 back to the minimum value in this class. Thus the line \(\mu = \gamma\), on which entropy is maximal, divides the class \(\left[ {a,0} \right]_{{\sim_{ \pi } }}\) into two equal parts, and A-IFVs in these two parts that are equidistant from the line \(\mu = \gamma\) have the same entropy.

For example, in Fig. 6 the points \(A\left( {0.4,0.1} \right)\) and \(B\left( {0.1,0.4} \right)\) are equidistant from \(\mu = \gamma\) and they have the same entropy.
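The behavior of entropy along a class of equal uncertainty index can be tabulated with the sketch below. It assumes the ratio form of \(E_S\) and walks through equally spaced points from \((a, 0)\) to \((0, a)\). The helper `pi_class` is hypothetical, and \(a = 0.5\) is chosen only for illustration.

```python
def entropy_s(mu, gamma):
    """Assumed ratio form of E_S: (1 - max(mu, gamma)) / (1 - min(mu, gamma))."""
    return (1 - max(mu, gamma)) / (1 - min(mu, gamma))


def pi_class(a, n=9):
    """n equally spaced A-IFVs from (a, 0) to (0, a), i.e. the class with pi = 1 - a."""
    return [(a - a * k / (n - 1), a * k / (n - 1)) for k in range(n)]


points = pi_class(0.5)
entropies = [entropy_s(m, g) for m, g in points]
for (m, g), e in zip(points, entropies):
    print(f"({m:.4f}, {g:.4f}) -> E_S = {e:.4f}")

# Points A and B of Fig. 6 are mirror images in mu = gamma.
print(entropy_s(0.4, 0.1), entropy_s(0.1, 0.4))
```

The values rise from 0.5 at \((0.5, 0)\) to 1 at the midpoint \((0.25, 0.25)\) and fall symmetrically afterwards.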

3.4 Uncertainty index in a class \(\left[ {a,0} \right]_{{\sim_{E } }}\)

Consider \(E \in \left\{ {E_{S} ,E_{X} ,E_{SK} } \right\}.\) Before discussing the behavior of the uncertainty index in a general class, it is appropriate to study it first in the classes \(\left[ {0,0} \right]_{{\sim_{E } }}\) and \(\left[ {1,0} \right]_{{\sim_{E } }}\). In the class \(\left[ {0,0} \right]_{{\sim_{E } }}\), the uncertainty index takes every value from 1 down to 0: it equals 1 at \(\left( {0,0} \right)\) and decreases gradually to 0 along the elements of this class from (0,0) to (0.5,0.5), where it equals 0. The class \(\left[ {1,0} \right]_{{\sim_{E } }}\) has only two elements, \(\left( {1,0} \right)\) and \(\left( {0,1} \right)\), and the uncertainty index of both is 0.

When any class \(\left[ {a,0} \right]_{{\sim_{E } }}\) where \(0 < a < 1\) is considered, it is represented by two segments with initial points on \(\mu - axis\) and \(\gamma - axis\) and terminal points on the line \(\mu + \gamma = 1\). Uncertainty index is maximum in these classes at initial points \(\left( {a,0} \right)\) and \(\left( {0,a} \right)\); then, it gradually decreases to 0 as we move along the line segments from initial points to terminal points.
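For the entropies whose classes are segments of lines through \((1,1)\) (\(E_S\) and \(E_X\) above), the lower segment of the class of \((a, 0)\) can be parameterized as \((a + t(1-a), t)\) with \(0 \le t \le (1-a)/(2-a)\). Along it, \(\pi = (1 - a) - t(2 - a)\), which decreases linearly to 0. The short Python sketch below tabulates this for \(a = 0.3\). The helper `class_segment` is hypothetical.

```python
def class_segment(a, n=6):
    """n points from (a, 0) towards (1, 1), ending on mu + gamma = 1 (0 <= a < 1)."""
    t_max = (1 - a) / (2 - a)
    ts = [t_max * k / (n - 1) for k in range(n)]
    return [(a + t * (1 - a), t) for t in ts]


def hesitation(mu, gamma):
    """Uncertainty index pi = 1 - mu - gamma."""
    return 1 - mu - gamma


a = 0.3
pis = [hesitation(m, g) for m, g in class_segment(a)]
print(pis)  # decreases from 1 - a = 0.7 at (a, 0) to 0 on mu + gamma = 1 (up to rounding)
```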

4 Knowledge measure

In this section, some notions about knowledge measures are discussed. A knowledge measure for an A-IFV is a numerical value that quantifies how much information the A-IFV carries. Initially, a knowledge measure was thought to be the dual of entropy (Nguyen 2015; Szmidt and Kacprzyk 2010). Later, it was realized that the two must be treated separately (Szmidt and Kacprzyk 2014).

Definition 7

(Nguyen 2015) For an A-IFS A on a set \( X = \{ {x_{1}, x_{2}, \ldots, x_{n} }\},\) the knowledge measure \(K\left( A \right)\) is defined as \( K\left( A \right) = 1 - E\left( A \right)\), where \(E\left( A \right)\) is the entropy of A.

Example 1

Nguyen (2015) Let A be an A-IFS on a set \( X = \left\{ x_{1}, x_{2}, \ldots ,x_{n} \right\}\). Then \(K_{N} \left( A \right)\), defined as follows, is a knowledge measure for A:

For an A-IFV α, the knowledge measure is defined as:

The knowledge measure \( K_{N}\) above clearly provides information based on membership and non-membership grades, but it completely ignores the associated fuzziness; therefore, it is difficult to apply in real-life problems (Guo and Xu 2018). Later, it was recognized that both the entropy (fuzziness) and the uncertainty index (intuitionism) of an A-IFS/A-IFV must be considered when a knowledge measure is defined for it.

In Szmidt and Kacprzyk (2014), it is pointed out that the following four characteristics are associated with a knowledge measure.

Definition 8

For an A-IFV α, the quantity \(K\left( \alpha \right)\) is a knowledge measure if it satisfies the following:

-

1.

\( 0 \le K\left( \alpha \right) \le 1 \)

-

2.

\( K\left( \alpha \right) = K\left( { \alpha^{c} } \right) \)

-

3.

For a fixed \(E\left( \alpha \right)\), \(K\left( \alpha \right)\) increases as \(\pi \left( \alpha \right)\) decreases.

-

4.

For a fixed \(\pi (\alpha ), K(\alpha )\) behaves dually to an entropy measure (that is \(1-E(\alpha )\)).

where \(E\left( \alpha \right)\) is the entropy measure and \(\pi \left( \alpha \right)\) is the hesitation margin associated with \(\alpha \in\) Θ.

Example 2

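Szmidt and Kacprzyk (2014) For any \(\alpha \in\) Θ, \(K_{S} \left( \alpha \right)\) defined by

\(K_{S} \left( \alpha \right) = 1 - 0.5\left( {E\left( \alpha \right) + \pi \left( \alpha \right)} \right)\)

is a knowledge measure.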

Proposition 1

For any \(\alpha = \left( {a,a} \right) \in \) Θ, we have \( K_{S} \left( \alpha \right) = a\).

Proof

Let \(\alpha = \left( {a,a} \right) \in \) Θ. Then clearly \(E\left( \alpha \right) = 1\) for any \(E \in \left\{ {E_{S} ,E_{X} ,E_{SK} } \right\}\), and \(\pi \left( \alpha \right) = 1 - 2a\). Since \(K_{S} \left( \alpha \right) = 1 - 0.5\left( {E\left( \alpha \right) + \pi \left( \alpha \right)} \right)\), we get \(K_{S} \left( \alpha \right) = 1 - 0.5\left( {1 + 1 - 2a} \right) = a\).

The above proposition shows that, for an A-IFV on the line \(\mu = \gamma\), the knowledge measure \(K_{S} \left( \alpha \right)\) is just its membership (equivalently, non-membership) grade.

According to Guo (2014) and Guo and Xu (2018), knowledge measures and entropy measures are two distinct quantities and must therefore be treated independently of each other. Guo and Xu (2018) defined two types of knowledge measures: one based on an axiomatic approach and the other parametric. Only the axiomatic approach of Guo and Xu (2018) is considered here.
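Proposition 1 is easy to check numerically. The Python sketch below implements \(K_S\) from Example 2 with a pluggable entropy function, defaulting to the assumed ratio form of \(E_S\). The function names are hypothetical.

```python
def entropy_s(mu, gamma):
    """Assumed ratio form of E_S: (1 - max(mu, gamma)) / (1 - min(mu, gamma))."""
    return (1 - max(mu, gamma)) / (1 - min(mu, gamma))


def knowledge_s(mu, gamma, entropy=entropy_s):
    """K_S = 1 - 0.5 * (E + pi) from Example 2; the entropy function is pluggable."""
    pi = 1 - mu - gamma
    return 1 - 0.5 * (entropy(mu, gamma) + pi)


# Proposition 1: on the line mu = gamma, K_S(a, a) equals a (up to rounding).
for a in (0.0, 0.1, 0.25, 0.5):
    print(a, knowledge_s(a, a))
```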

Definition 9

Guo and Xu (2018) Let \({K}_{G}\) be a mapping from the set of A-IFSs on a set \(X\) to \([0,1]\). Then, \({K}_{G}\) is a knowledge measure if the following hold:

-

1.

\({K}_{G}(A)=1\;iff\;A\) is a crisp set

-

2.

\( K_{G} \left( A \right) = 0\;iff\;\pi_{A} \left( x \right) = 1 \;for\; all\; x \in X \)

-

3.

\({K}_{G}(A)\ge {K}_{G}(B)\) if A-IFS A is less fuzzy than A-IFS B.

In Definition 9, axiom 2 considers only the extreme case \(\pi_{A} \left( x \right) = 1\), which happens only for the single A-IFV \(x = \left( {0,0} \right)\). In reality, however, \(\pi_{A} \left( x \right)\) may take any value in the interval \(\left[ {0,1} \right] \) for \(x \in\) Θ. Therefore, with respect to the uncertainty index \(\pi_{A} \left( x \right)\), the scope of this definition is very limited. The above definition of a knowledge measure apparently does not depend on entropy; however, some of its connections with entropy in particular situations are discussed in Guo and Xu (2018).

-

(1)

None of the knowledge measures \(K_{N} , K_{G}\) and \(K_{S}\) given above differentiates between a fuzzy set and an A-IFS, although they do differentiate between a crisp set and an A-IFS.

-

(2)

For A-IFVs lying on the line \( \mu = \gamma\), the knowledge measure \( K_{S}\) is merely the membership (or non-membership) degree; that is, \(K_{S} \left( \alpha \right) = \mu = \gamma\) for any entropy measure \(E\).

-

(3)

In general, \(K_{S} \left( \alpha \right)\) does not behave dually to the entropy measure for a fixed value of \(\pi\): the equality \(K_{S} \left( \alpha \right) = 1 - E\left( \alpha \right)\) holds only when \(E\left( \alpha \right) = \pi \left( \alpha \right)\). However, for a fixed \( \pi\), \(K_{S} \left( \alpha \right) \ge K_{S} \left( \beta \right)\) iff \(E\left( \alpha \right) \le E\left( \beta \right)\) for all \(\alpha ,\beta \in\) Θ. Similarly, for a fixed value of E, \(K_{S} \left( \alpha \right) \ge K_{S} \left( \beta \right)\) iff \(\pi \left( \alpha \right) \le \pi \left( \beta \right)\) for all \(\alpha ,\beta \in\) Θ.

-

(4)

The knowledge measure \(K_{S} \left( \alpha \right)\) does not differentiate among the A-IFVs \(\left( {0.5,0.5} \right)\), \(\left( {0.5,0} \right)\) and \(\left( {0,0.5} \right)\) for \(E \in \left\{ {E_{S} ,E_{SK} } \right\}\): for all three, the knowledge measure is 0.5.
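Remark (4) can be reproduced directly. The sketch below assumes the ratio form of \(E_S\). For comparison, it also evaluates the measure \(K_I^p\) of Theorem 1 (Sect. 5) with \(p = 2\) and the same assumed entropy. That measure assigns 1 to the fuzzy value \((0.5, 0.5)\) and so separates it from the other two. The function names are hypothetical.

```python
import math


def entropy_s(mu, gamma):
    """Assumed ratio form of E_S: (1 - max(mu, gamma)) / (1 - min(mu, gamma))."""
    return (1 - max(mu, gamma)) / (1 - min(mu, gamma))


def knowledge_s(mu, gamma):
    """K_S = 1 - 0.5 * (E + pi) (Szmidt and Kacprzyk 2014)."""
    return 1 - 0.5 * (entropy_s(mu, gamma) + (1 - mu - gamma))


def knowledge_i2(mu, gamma):
    """K_I^p with p = 2 (Theorem 1): 1 - sqrt(E * pi)."""
    return 1 - math.sqrt(entropy_s(mu, gamma) * (1 - mu - gamma))


values = [(0.5, 0.5), (0.5, 0.0), (0.0, 0.5)]
print([knowledge_s(m, g) for m, g in values])   # [0.5, 0.5, 0.5]
print([knowledge_i2(m, g) for m, g in values])  # [1.0, 0.5, 0.5]
```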

5 Generalized knowledge measures for A-IFVs

In the previous section, different knowledge measures and their shortcomings were discussed. These shortcomings are perhaps due to the fact that the knowledge measures were given without any function that regulates the roles of both entropy and uncertainty index. In this section, a generic definition based on such a function is given, and the existence of such functions is established. A knowledge measure is a numerical value from the interval \(\left[ {0,1} \right]\) that shows how much information is associated with an A-IFV/A-IFS. As explained in Szmidt and Kacprzyk (2014), a knowledge measure must take into account both entropy (fuzziness) and degree of hesitation (intuitionism). Since fuzzy sets involve no hesitation, a knowledge measure for A-IFSs must be able to differentiate between fuzzy sets and A-IFSs. As explained above, the knowledge measures \(K_{N} , K_{G}\) and \(K_{S}\) cannot do so. Therefore, there is a need for new knowledge measures that not only depend on both the intuitionism and the fuzziness of A-IFVs but are also able to differentiate between fuzzy sets and A-IFSs. For this purpose, first define a function \(G:\left[ {0,1} \right] \times \left[ {0,1} \right] \to \left[ {0,1} \right]\) such that:

-

(G1)

G \(\left( {x,y} \right) = 1\) if and only if \(x = 0\) or \(y = 0.\)

-

(G2)

G is decreasing in \(x\) when \(y\) is fixed.

G is decreasing in \(y\) when \(x\) is fixed.

-

(G3)

G \(\left( {x,y} \right) =\) G \(\left( {y,x} \right)\) for all \(x,y \in \left[ {0,1} \right].\)

Now, with the help of this function, knowledge measures can be defined as follows.

Definition 10

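A function \(K: \) Θ \(\to \left[ {0,1} \right]\) may be called a knowledge measure if

\(K\left( \alpha \right) = G\left( {E\left( \alpha \right),\pi \left( \alpha \right)} \right)\)

for all \(\alpha \in \) Θ, where \(E\left( \alpha \right)\) is any entropy measure and \(\pi \left( \alpha \right)\) is the hesitation margin.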

For an A-IFS A on a finite set \( X = \left\{ x_{1}, x_{2}, \ldots ,x_{n} \right\}\), the knowledge measure K may be taken as

\( K\left( A \right) = \frac{1}{n}\mathop \sum \limits_{x \in X} G\left( {E\left( {\mu_{A} \left( x \right),\gamma_{A} \left( x \right)} \right),\pi \left( {\mu_{A} \left( x \right),\gamma_{A} \left( x \right)} \right)} \right). \)

From (G1), it is clear that

\(K\left( \alpha \right) = 1 \Leftrightarrow G\left( {E\left( \alpha \right),\pi \left( \alpha \right)} \right) = 1 \Leftrightarrow E\left( \alpha \right) = 0\) or \(\pi \left( \alpha \right) = 0\). That is, if \(\pi \left( \alpha \right) = 0\), then \(\alpha \in \left[ {1,0} \right]_{{\sim_{\pi } }}\), i.e., α is a fuzzy value; if \(E\left( \alpha \right) = 0\), then \(\alpha \in \left[ {1,0} \right]_{{\sim_{E} }}\), i.e., \(\alpha = \left( {1,0} \right)\) or \(\alpha = \left( {0,1} \right)\). This means that the knowledge measure K is able to differentiate among crisp sets, fuzzy sets and A-IFSs.
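Definition 10 and its set-level average can be sketched generically, with \(G\) and the entropy passed in as functions. The example \(G(x, y) = 1 - xy\) is a hypothetical choice that satisfies (G1)-(G3) if "decreasing" is read as non-increasing (it corresponds to \(p = 1\) in Theorem 1). The entropy is again the assumed ratio form of \(E_S\), and all names are illustrative.

```python
from typing import Callable, Sequence, Tuple

AIFV = Tuple[float, float]  # (mu, gamma)


def entropy_s(mu: float, gamma: float) -> float:
    """Assumed ratio form of E_S: (1 - max(mu, gamma)) / (1 - min(mu, gamma))."""
    return (1 - max(mu, gamma)) / (1 - min(mu, gamma))


def knowledge_from_g(G: Callable[[float, float], float],
                     entropy: Callable[[float, float], float],
                     alpha: AIFV) -> float:
    """Definition 10: K(alpha) = G(E(alpha), pi(alpha))."""
    mu, gamma = alpha
    pi = max(0.0, 1 - mu - gamma)  # clamp tiny negative rounding errors
    return G(entropy(mu, gamma), pi)


def knowledge_of_set(G, entropy, A: Sequence[AIFV]) -> float:
    """K(A) = (1/n) * sum of G(E, pi) over the n elements of the A-IFS A."""
    return sum(knowledge_from_g(G, entropy, x) for x in A) / len(A)


def G_prod(x: float, y: float) -> float:
    """Hypothetical G(x, y) = 1 - x*y: equals 1 iff x = 0 or y = 0, symmetric."""
    return 1 - x * y


A = [(1.0, 0.0), (0.3, 0.7), (0.0, 0.0)]  # crisp, fuzzy, and fully hesitant values
print([knowledge_from_g(G_prod, entropy_s, x) for x in A])
print(knowledge_of_set(G_prod, entropy_s, A))
```

The crisp value \((1,0)\) and the fuzzy value \((0.3, 0.7)\) both receive 1, while \((0,0)\) receives 0, so the set-level value is 2/3.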

Theorem 1

For any fixed finite positive integer p, the function \( K_{I}^{p}\):Θ \(\to \left[ {0,1} \right]\) defined by

\(K_{I}^{p} \left( \alpha \right) = 1 - \left[ {E\left( \alpha \right)\pi \left( \alpha \right)} \right]^{\frac{1}{p}}\)

for all \(\alpha \in \) Θ, where \(E\left( \alpha \right)\) and \(\pi \left( \alpha \right)\) are, respectively, the entropy measure and the hesitation margin associated with α ∈ Θ, is a knowledge measure.

Proof

For \(\alpha \in\) Θ, \(K_{I}^{p} \left( \alpha \right) = 1 \Leftrightarrow 1 - \left[ {E\left( \alpha \right)\pi \left( \alpha \right)} \right]^{\frac{1}{p}} = 1 \Leftrightarrow \left[ {E\left( \alpha \right)\pi \left( \alpha \right)} \right]^{\frac{1}{p}} = 0\)

\(\Leftrightarrow E\left( \alpha \right)\pi \left( \alpha \right) = 0 \Leftrightarrow E\left( \alpha \right) = 0\) or \(\pi \left( \alpha \right) = 0\).

Now let \(\alpha ,\beta \in\) Θ be such that \(E\left( \alpha \right) = E\left( \beta \right)\) and \(\pi \left( \alpha \right) \le \pi \left( \beta \right)\). Then \(E\left( \alpha \right)\pi \left( \alpha \right) \le E\left( \beta \right)\pi \left( \beta \right)\)

\(\Rightarrow 1 - \left[ {E\left( \alpha \right)\pi \left( \alpha \right)} \right]^{\frac{1}{p}} \ge 1 - \left[ {E\left( \beta \right)\pi \left( \beta \right)} \right]^{\frac{1}{p}} \Leftrightarrow K_{I}^{p} \left( \alpha \right) \ge K_{I}^{p} \left( \beta \right)\).

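Similarly, if \(\pi \left( \alpha \right) = \pi \left( \beta \right)\) and \(E\left( \alpha \right) \le E\left( \beta \right)\), then \(K_{I}^{p} \left( \alpha \right) \ge K_{I}^{p} \left( \beta \right)\).

Also, \(K_{I}^{p} \left( \alpha \right) = 1 - \left[ {E\left( \alpha \right)\pi \left( \alpha \right)} \right]^{\frac{1}{p}} = 1 - \left[ {\pi \left( \alpha \right)E\left( \alpha \right)} \right]^{\frac{1}{p}}\), so the measure is symmetric in entropy and uncertainty index. Finally, since \(E\left( \alpha \right), \pi \left( \alpha \right) \in \left[ {0,1} \right]\), we have \(K_{I}^{p} \left( \alpha \right) \in \left[ {0,1} \right]\).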
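A minimal Python sketch of \(K_I^p\) from Theorem 1 is given below. It uses the assumed ratio form of \(E_S\) as the default entropy. The function names and the sample points are hypothetical.

```python
def entropy_s(mu, gamma):
    """Assumed ratio form of E_S: (1 - max(mu, gamma)) / (1 - min(mu, gamma))."""
    return (1 - max(mu, gamma)) / (1 - min(mu, gamma))


def knowledge_ip(mu, gamma, p=2, entropy=entropy_s):
    """K_I^p(alpha) = 1 - [E(alpha) * pi(alpha)]^(1/p) from Theorem 1."""
    if p < 1 or p != int(p):
        raise ValueError("p must be a positive integer")
    e = entropy(mu, gamma)
    pi = max(0.0, 1 - mu - gamma)  # clamp tiny negative rounding errors
    return 1 - (e * pi) ** (1 / p)


for alpha in [(1.0, 0.0), (0.3, 0.7), (0.5, 0.0), (0.25, 0.25), (0.0, 0.0)]:
    print(alpha, [round(knowledge_ip(*alpha, p=p), 4) for p in (1, 2, 3)])
```

Crisp and fuzzy values get the value 1, \((0,0)\) gets 0, and within the class \(\pi = 0.5\) the lower-entropy value \((0.5, 0)\) scores higher than \((0.25, 0.25)\).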

Another knowledge measure can be defined as below:

Theorem 2

For any fixed finite positive integer p, the function \(K_{I}^{^{\prime}p} \left( \alpha \right):\Theta \to \left[ {0,1} \right]\) defined by.

where \(E\left( \alpha \right)\) and \(\pi \left( \alpha \right)\) are, respectively, the entropy measure and the hesitation margin associated with \(\alpha \in\) Θ, is a knowledge measure.

It can be seen that for \( p = 1, K_{I}^{p} \left( \alpha \right) = K_{I}^{^{\prime}p} \left( \alpha \right)\).

Further, a new knowledge measure can be defined.

Theorem 3

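For any fixed \(p = 1\) or \( 2\), the function \(K_{I}^{\circ p} :\Theta \to \left[ {0,1} \right] \) defined by

\(K_{I}^{\circ p} \left( \alpha \right) = 1 - p\left( {\frac{E\left( \alpha \right)\pi \left( \alpha \right)}{{E\left( \alpha \right) + \pi \left( \alpha \right)}}} \right),\)

where \(E\left( \alpha \right)\) and \(\pi \left( \alpha \right)\) are, respectively, the entropy measure and the hesitation margin associated with \( \alpha \in \Theta\), is a knowledge measure.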

Proof

It is easy to see that \(K_{I}^{^\circ p} \left( \alpha \right) = 1 \) if only if either \( E\left( \alpha \right) = 0\; or\; \pi \left( \alpha \right) = 0\). Further, let for any \(\alpha ,\beta \in\) Θ, such that E \(\left( \alpha \right) = E\left( \beta \right)\) and \(\pi \left( \alpha \right) \le \pi \left( \beta \right) \Leftrightarrow E\left( \alpha \right)\pi \left( \alpha \right) \le E\left( \beta \right)\pi \left( \beta \right)\).

Similarly, when \(\pi \left( \alpha \right) = \pi \left( \beta \right)\), \(E\left( \alpha \right) \le E\left( \beta \right) \Leftrightarrow K_{I}^{^\circ p} \left( \alpha \right) \ge K_{I}^{^\circ p} \left( \beta \right)\).

Further, \(K_{I}^{^\circ p} \left( \alpha \right) = 1 - p\left( {\frac{E\left( \alpha \right)\pi \left( \alpha \right)}{{E\left( \alpha \right) + \pi \left( \alpha \right)}}} \right) = 1 - p\left( {\frac{\pi \left( \alpha \right)E\left( \alpha \right)}{{\pi \left( \alpha \right) + E\left( \alpha \right)}}} \right)\) whenever \(E\left( \alpha \right)\) and \(\pi \left( \alpha \right)\) are not both zero, so \(K_{I}^{^\circ p}\) is symmetric in \(E\left( \alpha \right)\) and \(\pi \left( \alpha \right)\).
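The measure of Theorem 3 can be checked numerically in the same way. The Python sketch below is a minimal illustration, assuming \(E\left( \alpha \right)\) and \(\pi \left( \alpha \right)\) are supplied as numbers in \(\left[ {0,1} \right]\); the function name is hypothetical, and returning 1 when \(E = \pi = 0\) is the convention that matches the statement that the measure equals 1 whenever \(E\left( \alpha \right) = 0\) or \(\pi \left( \alpha \right) = 0\).

```python
def knowledge_kcirc(entropy: float, hes: float, p: int) -> float:
    """K_I^{circ p} = 1 - p * E * pi / (E + pi) for p in {1, 2}.

    When E = pi = 0 the fraction is undefined; this sketch returns 1,
    consistent with the measure being 1 whenever E = 0 or pi = 0.
    """
    if p not in (1, 2):
        raise ValueError("Theorem 3 is stated only for p = 1 or p = 2")
    if entropy < 0 or hes < 0:
        raise ValueError("entropy and hesitation margin must be non-negative")
    if entropy + hes == 0:
        return 1.0
    return 1.0 - p * entropy * hes / (entropy + hes)


# Hypothetical (E, pi) pairs on a 0.1 grid: check the range [0, 1] and
# that, for a fixed E, the measure does not increase as pi grows.
grid = [i / 10 for i in range(11)]
values = {(e, h): knowledge_kcirc(e, h, 2) for e in grid for h in grid}
in_range = all(0.0 <= v <= 1.0 for v in values.values())
monotone = all(values[(e, grid[i])] >= values[(e, grid[i + 1])] - 1e-12
               for e in grid for i in range(10))
print(in_range, monotone)
```

For \(p = 2\) the subtracted term is the harmonic mean of \(E\) and \(\pi\), which never exceeds the larger of the two, so the value stays in \(\left[ {0,1} \right]\); this is also why \(p\) is restricted to 1 or 2.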

Theorem 4

For any \(\alpha \in \Theta\), \(K_{I}^{^\circ 2} \left( \alpha \right) \ge K_{I}^{2} \left( \alpha \right) \ge K_{S} \left( \alpha \right)\).
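Theorem 4 is stated here without proof. A short derivation is sketched below under one assumption: \(K_{S}\) is taken to be the Szmidt–Kacprzyk knowledge measure \(K_{S} \left( \alpha \right) = 1 - \frac{1}{2}\left( E\left( \alpha \right) + \pi \left( \alpha \right) \right)\), whose definition is not restated in this section. The first inequality does not depend on that assumption; it is the harmonic–geometric mean inequality for \(E = E\left( \alpha \right)\) and \(\pi = \pi \left( \alpha \right)\).

```latex
% E = E(\alpha), \pi = \pi(\alpha), with E, \pi \ge 0 and E + \pi > 0.
\begin{aligned}
\frac{2E\pi}{E+\pi} \le \sqrt{E\pi}
  &\iff 2\sqrt{E\pi} \le E+\pi
   \iff \bigl(\sqrt{E}-\sqrt{\pi}\bigr)^{2} \ge 0
   \qquad (E\pi > 0;\ \text{trivial if } E\pi = 0),\\
\sqrt{E\pi} \le \frac{E+\pi}{2}
  &\iff \bigl(\sqrt{E}-\sqrt{\pi}\bigr)^{2} \ge 0,\\
1-\frac{2E\pi}{E+\pi} \;\ge\; 1-\sqrt{E\pi} \;\ge\; 1-\frac{E+\pi}{2}
  &\quad\text{i.e.}\quad
   K_{I}^{^\circ 2}(\alpha) \ge K_{I}^{2}(\alpha) \ge K_{S}(\alpha).
\end{aligned}
```

If \(E = \pi = 0\), all three measures equal 1, so the chain holds with equality.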

6 Application of the proposed knowledge measures in MCGDM problems

Yu (2014) defined several confidence aggregation operators for IFVs, which take a level of confidence into account during the aggregation process. However, Yu (2014) does not specify how the confidence level is to be determined. In this paper, we take the view that the confidence level is directly tied to the amount of knowledge associated with an IFV: the higher the knowledge measure of an IFV, the more confidently that IFV can be selected.

Ali et al. (2019) showed that there is no natural ordering among IFVs. Therefore, ordering IFVs with respect to two distinct criteria is not suitable. In this paper, IFVs are ranked with the graphical method proposed in Ali et al. (2019).

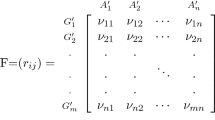

In this section, an application of the knowledge measures to MCGDM under the confidence level approach is discussed. First, we describe the framework of MCGDM under the confidence level approach. Let \( Y = \left\{ {x_{1},x_{2}, \ldots , x_{m} } \right\}\) be the set of alternatives and \( C = \left\{ {c_{1},c_{2}, \ldots , c_{n} } \right\}\) the set of attributes (criteria). An IFV is assigned to each alternative \( x_{i}\), \(1 \le i \le m\), under each criterion \( c_{j}\), \(1 \le j \le n\). Each criterion \( c_{j}\) has a predetermined weight \( \varpi_{j}\), where \(\varpi_{j} \ge 0\) and \(\mathop \sum \nolimits_{j = 1}^{n} \varpi_{j} = 1\). Let \( D = \left\{ {d_{1},d_{2}, \ldots , d_{r} } \right\}\) be the group of experts (decision makers) with weights \( \Upsilon_{l}\), \(1 \le l \le r\). Each expert gives his/her preferences for each alternative under each criterion on the basis of his/her best knowledge and experience. An IF decision matrix \(A^{l} = \left[ {a_{ij}^{l} } \right]_{m \times n}\) is obtained for each expert, with \( a_{ij}^{l} = \left( {\mu_{ij}^{l} ,\gamma_{ij}^{l} } \right)\), where \(\mu_{ij}^{l}\) and \(\gamma_{ij}^{l}\) are, respectively, the membership (favor) and non-membership (against) degrees assigned by the \(l\)th expert to the \(i\)th alternative under the \(j\)th criterion. To incorporate the notion of confidence level, the decision makers in Yu's (2014) framework also state how familiar they are with the assessed alternatives; here, the confidence levels of the IFVs are instead calculated with the proposed knowledge measures.

The complete decision-making procedure consists of the following steps.

Step I: The assessments of the individual decision makers are obtained in the form of IFVs, which gives r IF decision matrices \(A^{l} = \left[ {a_{ij}^{l} } \right]_{m \times n} = \left( {\mu_{ij}^{l} ,\gamma_{ij}^{l} } \right)_{m \times n}\), \(1 \le l \le r\). The confidence level \( \lambda_{ij}^{l}\) of each IFV is calculated with any of the knowledge measures \( K_{I}^{p}\), \(K_{I}^{^{\prime}p}\) and \(K_{I}^{^\circ p}\). Each IF decision matrix \(A^{l}\) is then rewritten as \( A^{l} = \left( {\left( {\mu_{ij}^{l} ,\gamma_{ij}^{l} } \right), \lambda_{ij}^{l} } \right)_{m \times n}\) by attaching the confidence level \( \lambda_{ij}^{l}\) to each IFV \(\left( {\mu_{ij}^{l} ,\gamma_{ij}^{l} } \right)\).
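Step I can be sketched in Python as follows. Two assumptions are made: the hesitation margin is \(\pi = 1 - \mu - \gamma\), and, because the paper's entropy measure is defined in an earlier section, the ratio entropy \(E = \frac{\min \left( \mu, \gamma \right) + \pi}{\max \left( \mu, \gamma \right) + \pi}\) of Szmidt and Kacprzyk (2001) is used purely as a stand-in. The decision matrix and helper names are hypothetical.

```python
from typing import List, Tuple

IFV = Tuple[float, float]  # (mu, gamma)


def hesitation(a: IFV) -> float:
    mu, gamma = a
    return max(0.0, 1.0 - mu - gamma)  # clamp floating-point rounding


def stand_in_entropy(a: IFV) -> float:
    """Szmidt-Kacprzyk ratio entropy, used here only as a stand-in for E."""
    mu, gamma = a
    pi = hesitation(a)
    # The denominator is at least 1/2 for any valid A-IFV, so no division by zero.
    return (min(mu, gamma) + pi) / (max(mu, gamma) + pi)


def knowledge_kip(a: IFV, p: int = 2) -> float:
    """K_I^p(a) = 1 - (E(a) * pi(a))^(1/p) with the stand-in entropy."""
    return 1.0 - (stand_in_entropy(a) * hesitation(a)) ** (1.0 / p)


def attach_confidence(matrix: List[List[IFV]], p: int = 2) -> List[List[Tuple[IFV, float]]]:
    """Step I: pair every a_ij with its confidence level lambda_ij = K_I^p(a_ij)."""
    return [[(a, knowledge_kip(a, p)) for a in row] for row in matrix]


# Hypothetical 2 x 3 decision matrix of one expert (2 alternatives, 3 criteria).
A1 = [[(0.6, 0.3), (0.5, 0.4), (0.2, 0.1)],
      [(1.0, 0.0), (0.3, 0.3), (0.0, 0.0)]]
for row in attach_confidence(A1):
    print([(a, round(lam, 3)) for a, lam in row])
```

Replacing `knowledge_kip` with an implementation of \(K_{I}^{^{\prime}p}\) or \(K_{I}^{^\circ p}\) would leave the rest of the step unchanged.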

Step II: Normalize the IF decision matrix \(A^{l}\) for each expert according to the benefit and cost criteria as follows:

Since the knowledge measure \( K_{I}^{p}\) is symmetric, the confidence level of each IFV remains unchanged after normalization.
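The normalization formula itself is not reproduced in this section. The sketch below assumes the convention commonly used with IFVs: entries under benefit criteria are kept, while entries under cost criteria are replaced by their complement \(\left( \gamma, \mu \right)\). Under that assumption the hesitation margin is unchanged, and so is any entropy that is symmetric in \(\mu\) and \(\gamma\), which is why the attached confidence levels can be carried over as they are. The function name and matrix are hypothetical.

```python
from typing import List, Tuple

Entry = Tuple[Tuple[float, float], float]  # ((mu, gamma), lambda)


def normalize(matrix: List[List[Entry]], is_cost: List[bool]) -> List[List[Entry]]:
    """Swap (mu, gamma) -> (gamma, mu) in cost columns; keep confidence levels."""
    out = []
    for row in matrix:
        new_row = []
        for j, ((mu, gamma), lam) in enumerate(row):
            new_row.append(((gamma, mu), lam) if is_cost[j] else ((mu, gamma), lam))
        out.append(new_row)
    return out


# Hypothetical matrix with one benefit criterion and one cost criterion.
A = [[((0.6, 0.3), 0.76), ((0.2, 0.7), 0.81)]]
print(normalize(A, [False, True]))
# [[((0.6, 0.3), 0.76), ((0.7, 0.2), 0.81)]]
```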

Step III: For each normalized IF decision matrix \( A^{l}\), we apply the intuitionistic fuzzy weighted average operator under confidence levels (CIFWA) defined by Yu (2014) to aggregate the information in \(A^{l}\) as follows:

\(= \left( {\mu_{{z_{i} }}^{l} ,\gamma_{{z_{i} }}^{l} } \right)\).

Here the IFV \(Z_{i}^{l} = \left( {\mu_{{z_{i} }}^{l} ,\gamma_{{z_{i} }}^{l} } \right)\) represents the overall evaluation of alternative \(x_{i}\) by the \(l\)th expert, so that \(Z^{l} = \left\{ {Z_{1}^{l} ,Z_{2}^{l} , \ldots , Z_{m}^{l} } \right\}\) is obtained for each expert \(l\).
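The CIFWA formula is not reproduced in this section. The following Python sketch assumes the form usually given for Yu's (2014) operator, in which each criterion weight \(\varpi_{j}\) is scaled by the confidence level \(\lambda_{j}\): \(\mu = 1 - \prod_{j} \left( 1 - \mu_{j} \right)^{\lambda_{j} \varpi_{j}}\) and \(\gamma = \prod_{j} \gamma_{j}^{\lambda_{j} \varpi_{j}}\). It should be checked against Yu (2014) before being relied on. The criterion weights are those of Example 3; the IFVs and confidence levels are hypothetical.

```python
from math import prod
from typing import Sequence, Tuple

IFV = Tuple[float, float]  # (mu, gamma)


def cifwa(values: Sequence[IFV], confidences: Sequence[float],
          weights: Sequence[float]) -> IFV:
    """Assumed CIFWA form: IF weighted average with each exponent w_j scaled by lambda_j."""
    if not (len(values) == len(confidences) == len(weights)):
        raise ValueError("values, confidences and weights must have equal length")
    exps = [lam * w for lam, w in zip(confidences, weights)]
    mu = 1.0 - prod((1.0 - m) ** e for (m, _), e in zip(values, exps))
    gamma = prod(g ** e for (_, g), e in zip(values, exps))
    return mu, gamma


# Criterion weights of Example 3; the IFVs and confidence levels are hypothetical.
weights_ex3 = [0.15, 0.3, 0.2, 0.2, 0.15]
row = [(0.6, 0.3), (0.7, 0.2), (0.5, 0.4), (0.8, 0.1), (0.4, 0.4)]
conf = [0.76, 0.81, 0.55, 0.85, 0.37]
z = cifwa(row, conf, weights_ex3)
print(z, z[0] + z[1] <= 1.0)
```

Setting every confidence level to 1 reduces the sketch to the ordinary IF weighted average, and the result always satisfies \(\mu + \gamma \le 1\) because \(1 - \mu_{j} \ge \gamma_{j}\) for each input.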

Step IV: To calculate the weight of each individual expert \( d_{l}\), we use the knowledge measure \( K_{I}^{p}\). The knowledge measure \( K_{I}^{p}\) of \(Z^{l}\) given by the \(l\)th expert is calculated as follows:

Then, the expert weight vector \({\Upsilon } = \left( {{\Upsilon }_{1} ,{\Upsilon }_{2} , \ldots ,{\Upsilon }_{r} } \right)^{T}\) is calculated as follows:

Step V: For each alternative \( x_{i}\), the overall evaluation values \(Z^{l}\) obtained in Step III are aggregated with the CIFWA operator as follows:

Step VI: To rank the alternatives \(x_{i}\), the graphical method for ordering IFVs based on the uncertainty index and entropy, proposed by Ali et al. (2019), is used. That work shows that most of the previously proposed ranking methods are not locally orthodox.

In the following, an example concerning the anonymous review process for doctoral dissertations in Chinese universities is discussed. The data for this example are borrowed from Yu (2014).

Example 3

In certain universities, doctoral dissertations are assessed by a panel of three experts. The review is based on five criteria: topic selection and literature review; innovation; theory basis and special knowledge; capacity of scientific research; and thesis writing. Each criterion is given a certain weight. The experts assess the dissertations against these criteria and give their preferences in the form of IFVs. The three IF decision matrices \(A^{l}\) (\(l = 1, 2, 3\)), one from each expert, are presented in Tables 1, 2, and 3.

Then, we apply the CIFWA operator to each IF decision matrix \(A^{l}\) using Eq. 8. The weight vector associated with the attributes is \( \varpi = \left( {0.15,0.3,0.2,0.2,0.15} \right)^{T}\). After the calculations, the individual overall evaluation values of the alternatives \(x_{i}\) by each expert are obtained as follows:

Next, to calculate the weight of each individual expert \(d_{l}\), the knowledge measure \(K_{I}^{p}\) with \(p = 2\) in Eq. 9 is used. The values of the knowledge measure are \( K_{I}^{p} \left( {Z^{1} } \right) = 0.839865\), \(K_{I}^{p} \left( {Z^{2} } \right) = 0.820715\) and \(K_{I}^{p} \left( {Z^{3} } \right) = 0.824165\). The weights of the experts are then calculated using Eq. 10, giving \({\Upsilon }_{1} = 0.338008\), \({\Upsilon }_{2} = 0.330302\) and \({\Upsilon }_{3} = 0.33169\).
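The reported expert weights are consistent with normalizing the three knowledge values so that they sum to one, \({\Upsilon }_{l} = K_{I}^{p} \left( {Z^{l} } \right) / \sum\nolimits_{k = 1}^{r} K_{I}^{p} \left( {Z^{k} } \right)\). Since Eq. 10 is not reproduced in this section, this formula is an inference from the numbers rather than a quotation. The sketch below applies it to the reported knowledge values; the function name is hypothetical.

```python
from typing import List


def expert_weights(knowledge: List[float]) -> List[float]:
    """Normalize expert knowledge values K_I^p(Z^l) into weights summing to one."""
    total = sum(knowledge)
    if total <= 0:
        raise ValueError("at least one knowledge value must be positive")
    return [k / total for k in knowledge]


# Knowledge values K_I^2(Z^1), K_I^2(Z^2), K_I^2(Z^3) reported in Example 3.
weights = expert_weights([0.839865, 0.820715, 0.824165])
print([round(w, 6) for w in weights])
```

The computed weights agree with the reported \({\Upsilon }_{1}\), \({\Upsilon }_{2}\) and \({\Upsilon }_{3}\) up to rounding in the sixth decimal place.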

The information obtained in Eqs. 12–14 is aggregated with the experts' weights using the CIFWA operator defined in Eq. 11. The aggregated A-IFVs are

The graphical method for ordering A-IFVs given in Ali et al. (2019) is employed. The uncertainty index of alternatives \(z_{i}\) is calculated as follows:

From Fig. 7, it is clear that the A-IFVs attached to all alternatives lie below the line \(\mu = \gamma\). Therefore, A-IFVs with a lower uncertainty index are preferred over those with a higher uncertainty index.
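For the configuration described here, the ranking rule reduces to sorting by uncertainty index. The sketch below assumes that the uncertainty index of an A-IFV \(\left( \mu, \gamma \right)\) is its hesitation margin \(1 - \mu - \gamma\), and it applies only when, as in this example, all aggregated A-IFVs lie below the line \(\mu = \gamma\); it is not the full graphical method of Ali et al. (2019). The aggregated values are hypothetical placeholders, since the actual ones are not reproduced in this section.

```python
from typing import Dict, List, Tuple


def rank_by_uncertainty(ifvs: Dict[str, Tuple[float, float]]) -> List[str]:
    """Order alternatives by increasing uncertainty index pi = 1 - mu - gamma."""
    return sorted(ifvs, key=lambda name: 1.0 - ifvs[name][0] - ifvs[name][1])


# Hypothetical aggregated A-IFVs (not the values of Example 3).
Z = {"x1": (0.45, 0.30), "x2": (0.50, 0.35), "x3": (0.40, 0.28)}
print(rank_by_uncertainty(Z))  # ['x2', 'x1', 'x3']
```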

We have

The alternative \(x_{5}\) is obtained as the best alternative by the proposed method.

The order of the alternatives obtained by the proposed method differs from the order obtained by Yu (2014), who reported \(x_{1} \succ x_{2} \succ x_{4} \succ x_{5} \succ x_{2}\). Yu used the score function to rank the alternatives and thus obtained a different ranking. However, as shown in Ali et al. (2019), the score function is not locally orthodox; therefore, we used the graphical ranking method for IFVs. Also, the familiarity levels of the experts with the evaluated alternatives were assigned arbitrarily by Yu (2014), whereas in the proposed method they are calculated with the generalized knowledge measures.

7 Conclusion

Uncertainty index and entropy are two different types of uncertainty linked with A-IFSs. Both convey information about the amount of knowledge associated with an A-IFS. Different types of knowledge measures have been defined for A-IFSs, but those that depend on both the uncertainty index and entropy are more useful. In the present paper, knowledge measures are defined with the help of a multi-variable function depending on both the uncertainty index and entropy. These knowledge measures are able to differentiate crisp sets, fuzzy sets and A-IFSs. The proposed knowledge measures are also useful in MCGDM problems, where they are used to determine the confidence levels of IFVs and the weights of the experts.

Data availability

The data used in this manuscript are hypothetical and artificial, and they may be used without prior permission, provided that this manuscript is cited.

References

Ali MI, Feng F, Mahmood T, Mehmood I, Faizan H (2019) A graphical method for ranking Atanassov’s intuitionistic fuzzy values using the uncertainty index and entropy. Int J Intell Syst 34:2692–2712

Atanassov K (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20:87–96

Atanassov K (1999) Intuitionistic fuzzy sets: theory and applications. Physica-Verlag

Atanassov K, Szmidt E, Kacprzyk J (2010) On some ways of determining membership and non-membership functions characterizing fuzzy sets. Notes IFS 16:26–30

Bustince H, Fernandez J, Kolesárová A, Mesiar R (2013) Generation of linear orders for intervals by means of aggregation functions. Fuzzy Sets Syst 220:69–77

Bustince H, Burillo P (1996) Entropy on intuitionistic fuzzy sets and on interval-valued fuzzy sets. Fuzzy Sets Syst 78:305–316

Chen T (2011) A comparative analysis of score functions for multiple criteria decision making in intuitionistic fuzzy settings. Inf Sci 181:3652–3676

De SK, Biswas R, Roy AR (2001) An application of intuitionistic fuzzy sets in medical diagnosis. Fuzzy Sets Syst 117:209–213

De Luca A, Termini S (1972) A definition of non-probabilistic entropy in the setting of fuzzy sets theory. Inf Control 20:301–312

Farhadinia B (2013) A theoretical development on the entropy of interval valued fuzzy sets based on the intuitionistic distance and its relationship with similarity measure. Knowl-Based Syst 39:79–84

Guo K (2014) Amount of information and attitudinal-based method for ranking Atanassov’s intuitionistic fuzzy values. IEEE Trans Fuzzy Syst 22(1):177–188

Guo K (2016) Knowledge measure for Atanassov’s intuitionistic fuzzy sets. IEEE Trans Fuzzy Syst 24:1072–1078

Guo K, Xu H (2018) Knowledge measure for intuitionistic fuzzy sets with attitude towards non-specificity. Int J Mach Learn Cybern (1):13

Hung WL, Yang MS (2006) Fuzzy entropy on intuitionistic fuzzy sets. Int J Intell Syst 4(22):443–551

Kharal A (2009) Homeopathic drug selection using intuitionistic fuzzy sets. Homeopathy 98:35–39

Lalotra S, Singh S (2020) Knowledge measure of hesitant fuzzy set and its application in multi-attribute decision-making. Comput Appl Math 39:86

Liu B (2007) A survey of entropy of fuzzy variables. J Uncertain Syst 1:4–13

Lin L, Yuan X, Xia Z (2007) Multi-criteria fuzzy decision-making methods based on intuitionistic fuzzy sets. J Comput Syst Sci 73:84–88

Liu H, Wang G (2007) Multi-criteria decision making methods based on Intuitionistic fuzzy sets. Eur J Oper Res 179:220–233

Nguyen H (2016) A new interval-valued knowledge measure for interval-valued intuitionistic fuzzy sets and application in decision making. Expert Syst Appl 56:143–155

Nguyen H (2015) A new knowledge-based measure for Intuitionistic Fuzzy Sets and its application in multiple attribute group decision making. Expert Syst Appl 42:8766–8774

Ouyang Y, Pedrycz W (2016) A new model for intuitionistic fuzzy multi-attributes decision making. Eur J Oper Res 249:677–682

Pal NR, Bustince H, Pagola M, Mukherjee UK, Goswami DP, Belikov G (2013) Uncertainties with Atanassov’s intuitionistic fuzzy sets: fuzziness and lack of knowledge. Inf Sci 228:61–74

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27:379–423

Szmidt E, Kacprzyk J (2010) Measuring information and knowledge in the context of Atanassov's intuitionistic fuzzy sets. In: 10th international conference on intelligent systems design and applications, 78–1–4244–8136–1/10 (2010)

Szmidt E, Baldwin J (2004) Entropy for intuitionistic fuzzy set theory and mass assignment theory. Notes IFS 10(3):15–28

Szmidt E, Baldwin J (2003) New similarity measure for intuitionistic fuzzy set theory and mass assignment theory. Notes IFS 9(3):60–76

Szmidt E, Baldwin J (2006) Intuitionistic fuzzy set functions, mass assignment theory, possibility theory and histograms. IEEE World Congress on Comput Intel 237–243

Szmidt E, Kacprzyk J (2006) An application of intuitionistic fuzzy set similarity measures to a multi-criteria decision making problem, ICAISC 2006, LNAI 4029, Springer, 314–323

Szmidt E, Kacprzyk J (2009) Amount of information and its reliability in the ranking of Atanassov’s intuitionistic fuzzy alternatives. In: Rakus-Andersson E, Yager R, Ichalkaranje N, Jain LC (eds) Recent advances in decision making, SCI 222., Springer, pp 7–19

Szmidt E, Kacprzyk J (2001) Entropy for intuitionistic fuzzy sets. Fuzzy Sets Syst 118(3):467–477

Szmidt E, Kacprzyk J (2004) Similarity of intuitionistic fuzzy sets and the Jaccard coefficient, IPMU, 1405–1412

Szmidt E, Kacprzyk J (2014) How to measure the amount of knowledge conveyed by Atanassov’s intuitionistic fuzzy sets. Inf Sci 257:276–285

Wei C, Liang X (2013) New entropy similarity measure of intuitionistic fuzzy sets and their applications in group decision making. Int J Comput Intell Syst 6(5):987–1001

Xu ZS, Yager RR (2006) Some geometric aggregation operators based on intuitionistic fuzzy environment. Int J Gen Syst 417–433

Yu D (2014) Intuitionistic fuzzy information aggregation under confidence levels. Appl Soft Comput 19:147–160

Zadeh LA (1965) Fuzzy sets. Inf Control 8:338–353

Funding

No funding was received for this article.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest regarding the publication of this manuscript.

Ethical approval

The authors state that this is their original work and that it has not been submitted to, and is not under consideration by, any other journal.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Ali, M.I., Zhan, J., Khan, M.J. et al. Another view on knowledge measures in atanassov intuitionistic fuzzy sets. Soft Comput 26, 6507–6517 (2022). https://doi.org/10.1007/s00500-022-07127-3
