Abstract

Palmprint is one of the most reliable biometrics and has been widely used for human identification due to its high recognition accuracy and convenience for practical application. But the existing palmprint-based human identification system often suffers from image misalignment, pixel corruption and much computational time on the large database. An effective palmprint recognition method is proposed by combining hierarchical multi-scale complete local binary pattern (HMS-CLBP) and weighted sparse representation-based classification (WSRC). The hierarchical multi-scale local invariant texture features are extracted firstly from each palmprint by multi-scale local binary pattern (MS-LBP) and multi-scale complete local binary pattern (MS-CLBP) and are concatenated into one hierarchical multi-scale fusion feature vector. Then, WSRC is constructed by the Gaussian kernel distance, and use the Gaussian kernel distances between the fusion feature vectors of the training and testing samples. Finally, the sparse decomposition of testing samples is implemented by solving the optimization problem based on l1 norm, and the palmprints are recognized by the minimum residuals. The proposed method inherits the advantages of CLBP and WSRC and has good rotation, illumination and occlusion invariance. The results on the PolyU and CASIA palmprint databases illustrate the good performance and rationale interpretation of the proposed method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the increasing demand of biometric solutions for security systems, palmprint-based biometric, a relatively reliable but promising biometric technology, has been widely used in various access control and security-based applications due to its convenience in use, reliability, low cost, user friendliness and stable structure features and high speed and accuracy in the area of biometric recognition (Mansoor et al. 2011; Zhang et al. 2012; Raghavendra and Busch 2015). Compared with fingerprints, iris and face, palmprint contains a lot of discriminative characteristics, such as palm creases, principal lines, wrinkles, ridges, valleys, minutiae and even pores, and can be captured using low-cost sensors with low resolution (Kylberg and Sintorn 2013). Furthermore, ridge contains a lot of information, e.g., ridge path deviation, line shape, pores, edge contour, incipient ridges, warts and scars, a low-resolution palmprint image has rich principal line and wrinkle information, and specially, principal lines and wrinkles are difficult to be recovered from a crime scene. An online palmprint recognition system with high performance can be established by combining principal line and wrinkle characteristics (Abukmeil et al. 2015; Luo et al. 2016). The palmprint-based biometric has been investigated for more than 20 years, and many methods have been presented for biometric authentication systems. Kong et al. (2009) provided an overview of current palmprint research, including capture devices, preprocessing, palmprint-related segmentation and feature extraction and verification algorithms. Zhang et al. (2012) surveyed many palmprint recognition methods, and carried out a comparative study to evaluate the performance of these methods, demonstrated the limitations of the current palmprint recognition algorithms, and pointed out the future directions for biometric recognition algorithm. Dexing et al. (2018) presented a comprehensive overview of recent research progress of palmprint recognition, including data acquisition, database, preprocessing, feature extraction, matching and fusion. They discussed the challenges and future perspectives in palmprint recognition for further works. Multispectral palmprint recognition is one of the most well-known biometric recognition systems. Yassir et al. (2019) provided an overview of recent state-of-the-art multispectral palmprint recognition approaches by describing their feature extraction and fusion, matching and decision algorithms, evaluating their performances for both verification and identification.

Local binary pattern (LBP) algorithm and its variants have been widely applied to various feature extraction owing to its high texture discrimination capability and low computational cost (Song et al. 2013). LBP has shown its superiority in palmprint feature extraction. Mu et al. (2010) proposed a palmprint texture representation based on discriminative local binary patterns statistic (DLBPS). In the approach, a palmprint is firstly divided into non-overlapping and equal-sized regions and they are labeled into LBP independently, and then discriminative common vectors algorithm is applied for dimensionality reduction of the feature space. Dai et al. (2010) presented a palmprint recognition method by combining local binary pattern (LBP) and cellular automata. Sehgal (2015) proposed a palmprint recognition using LBP and support vector machines (SVM). Tamrakar and Khanna (2015) presented a palmprint recognition approach based on the local distribution of uniform LBP (ULBP). ULBP refers to the pattern of uniform appearance with limited discontinuities. ULBP histograms can be used as features to handle occlusion up to 36%. Inspired by the concepts of LBP feature descriptors and a random sampling subspace, El-Tarhouni et al. (2017) proposed a feature extraction technique that combines the pyramid histogram of oriented gradients and LBP, where the features are concatenated for palmprint classification. The performance of single LBP operator is limited, while multi-scale or multi-resolution could represent more image features under different settings. Guo et al. (2017) proposed a collaborative representation model with hierarchical multi-scale LBP (HMS-LBP) for palmprint recognition. HMS-LBP can retrieve useful information from non-uniform patterns and reduce the influence of gray scale, rotation and illumination.

Biometric authentication via sparse representation-based classification (SRC) has received more and more attention in recent years (Xu et al. 2013; Jia et al. 2015). Weighted SRC (WSRC) relies on a distance metric to penalize the dissimilar data points and award the similar points (Yin and Wu 2013). Given a test sample, WSRC pays more attention to those training samples that are more similar to the test sample in representing the test sample. In general, the representation result of WSRC is sparser than that of SRC and can obtain the better recognition results. In SRC and WSRC, the precise choice of feature space is no longer critical, and it is robust to corruption and occlusion. Specially, WSRC can preserve the similarity between the test sample and its neighbor training data in seeking the sparse linear representation (Fan et al. 2015). The recognition performance can be improved by combining SRC and LBP. Ouyang and Sang (2013) used histograms of oriented gradient (HOG) descriptors and LBP conjunction with SRC separately to get two judgment feature vectors and managed to fuse them to achieve a better performance. Chan and Kittler (2010) proposed a preliminary tentative of combining LBP-based features with SRC for face recognition. In view of the problem that common palmprint recognition methods and systems are susceptible to noise and rotation interference, Wang et al. (2014) presented a palmprint recognition method by using ULBP and SRC. The method utilizes ULBP to extract palmprint invariance features and takes ULBP features of the training samples to construct a redundant dictionary and achieves sparse decomposition of testing samples by solving the optimization problem based on l1 norm.

In the process of actual palmprint acquisition, the quality, position, direction and stretching degree may change from time to time, and the sizes and shapes of the palmprints collected from the same palmprint at different times are different. It is difficult to extract the robust invariant features from the palmprint for further matching (Kong et al. 2009; Zhang et al. 2012; Dexing et al. 2018). Therefore, palmprint identification is still a very competitive topic in biometric research because the palmprints often change in terms of illumination, size, shape, rotation, occlusion and noisy sensors, etc. (Raghavendra and Busch 2015; Kong et al. 2009). In the paper, motivated by HMS-LBP (Guo et al. 2017), multi-scale complete LBP (MS-CLBP) (Huang et al. 2016; Chen et al. 2015), weighted SRC (WSRC) (Lu et al. 2013, 2017) and the recent improvement in biometric recognition, combining HMS-CLBP and WSRC, a novel palmprint recognition method is proposed and validated on two public palmprint databases.

The major contributions of the proposed method are listed as the following:

HMS-CLBP is used to build a more powerful feature representation of palmprint, and a lot of extensive experiments are carried out to demonstrate its superior performance in terms of classification accuracy and computational complexity.

WSRC is combined with HMS-CLBP for palmprint identification to improve the robustness against noise and variation of illumination, rotation and occlusion.

The experimental results on two public palmprint databases verify the effectiveness of our proposed method as compared to the state-of-the-art algorithms.

The rest of this paper is arranged as follows: In Sect. 2, we briefly review some related works, including LBP, complete LBP (CLBP), HMS-CLBP, SRC and WSRC. A new palmprint recognition method is proposed by combining HMS-CLBP and WSRC in Sect. 3. Comparison experimental results are presented and analyzed in Sect. 4. Finally, the conclusions and the future work are given in Sect. 5.

.

2 Introduction

2.1 LBP, CLBP and HMS-CLBP

LBP is an effective descriptor of spatial structure information of local image texture by considering the relationship between a center pixel and its gray value. LBP is calculated by,

where \( g_{c} \) is the gray value of (x, y), \( g_{i} (i = 0,1, \ldots ,P - 1) \) is the gray value of its neighborhood pixel on a circle of radius, P is the number of neighborhood pixels around the center pixel, R is the neighborhood radius from the reference pixel, \( f(x) \) is the threshold function for the basic LBP and T is the threshold value. T is set as 0 in the traditional LBP.

The histogram of an image consists of information about the distribution of the local micro-patterns, including spots, flat areas, edge ends and curves. Suppose the texture image is of size N × M. After conducting LBP of the image, the histogram of LBP is calculated to represent the whole texture image by,

where \( k = 0,1, \ldots ,K - 1,\;h\{ A\} = \left\{ {\begin{array}{*{20}l} {1,\quad } \hfill & {A\,{\text{is}}\,{\text{true}}} \hfill \\ {0,\quad } \hfill & {\text{otherwise}} \hfill \\ \end{array} } \right.\)

and K is the number of different labels produced by the LBP operator.

The LBP image is more efficient than the original image in pattern classification due to the fact that the central gray level \( g_{\text{c}} \) is replaced by the combination of its neighbor gray values. From Eq. (1), it is found that the original LBP only uses the sign information of \( g_{i} - g_{\text{c}} \), while ignoring the magnitude information. However, the sign and magnitude are complementary, and they can be used to exactly reconstruct the difference \( g_{i} - g_{\text{c}} \). Complete LBP (CLBP) considers the signs and magnitudes of the image local differences (Chen et al. 2015). Different from LBP, in CLBP, “0” is coded as “− 1.” CLBP is defined as follows:

where c is a threshold that is set to the mean value of \( g_{i} - g_{\text{c}} \) from the whole image.

Figure 1 illustrates an example of the LBP- and CLBP-coded images corresponding to an input palmprint. From Fig. 1, it is observed that both LBP and CLBP can capture the spatial pattern and the contrast of local image texture such as principal lines, wrinkles and ridges; moreover, LBP is able to provide more detailed texture information than CLBP (Lim et al. 2017).

LBP and CLBP features computed from a single scale may not be able to detect the dominant texture features from the palmprint image since they characterize the image texture only at a particular resolution. In LBP and CLBP, we can change the neighborhood radius R to obtain the spatial resolution. A possible solution is to characterize the image texture in multiple resolutions, i.e., multi-scale LBP (MS-LBP) and multi-scale CLBP (MS-CLBP) (El-Tarhouni et al. 2017; Guo et al. 2017), which can cope with the limitation of LBP and CLBP. MS-LBP and MS-CLBP can be implemented by combining the information provided by multiple operators by varying (P, R) of LBP and CLBP. For simplicity, the number of neighbors is fixed to P and different values of R are tuned to achieve the optimal combination. An example of four LBPs with different scales is often set as P = 8, R1 = 1, R2 = 2, R3 = 3 and R4 = 4, as shown in Fig. 2, and then four LBP histogram feature vectors extracted from each LBP are concatenated to form a multi-scale multiple resolution image representation. One disadvantage of MS-LBP and MS-CLBP is that the computational complexity increases due to multiple resolutions.

Another simple implementation scheme of MS-LBP and MS-CLBP is often adopted by resizing the original image to different scales (e.g., 1/2 and 1/4 of the original image). First, the original image is down-sampled using the bicubic interpolation to obtain multiple images at different scales. The value of scale is between 0 and 1, where 1 denotes the original image. Then, the LBP and CLBP operators of fixed radius and the number of neighbors are applied to the multiple-scale images. Each histogram of all LBPs and CLBPs with different scales is calculated by Eq. (2), respectively. Figure 3 illustrates MS-LBPs, MS-CLBPs and their corresponding histograms with three scales.

From Fig. 3a–c, it is observed that MS-LBP and MS-CLBP operators can capture the spatial pattern and the contrast of local image texture, such as wrinkles, ridges, edges and corners. Compared with Fig. 2, we find that the images in Fig. 3 have smaller sizes than the original image leading to much fewer pixels for the CLBP operator. To facilitate computational efficiency, we choose the scale to be between 0 and 1 in the following experiments. HMS-CLBP is formed by combining MS-LBP and MS-CLBP, and a composite feature vector is concatenated by all the histogram features of HMS-CLBP, which is a hierarchical multiple-scale image representation for a palmprint, as shown in Fig. 3e, f. Then, the dimensionality of the HMS-CLBP histogram features is much less than that of multiple operators by varying (P, R) of LBP and CLBP.

2.2 SRC and WSRC

SRC utilizes an over-complete dictionary to linearly code a probe sample, where the over-complete dictionary is composed of all the training samples, the obtained coding coefficients are sparse by imposing the l1-norm constraint, and the probe sample is assigned into the class with the minimum residual. Suppose there are n training samples \( x_{i} \in R^{m} (i = 1,2, \ldots ,n,m < n) \) and a testing sample \( y \in R^{m} \) from C different classes.

First, construct the dictionary matrix \( A \in R^{m \times n} \) by n training samples \( x_{i} (i = 1,2, \ldots ,n) \), and then normalize each column of A to unit l2-norm, where each column of A is training sample called basis vector or atom. To learn a dictionary D to sparsely represent the test sample, solve the following optimization problem,

where \( \alpha \) is called as sparse representation coefficients of y, it is a column vector and λ > 0 is a scalar regularization parameter which balances the trade-off between the sparsity of the solution and the reconstruction error.

Equation (4) can be solved by exploiting the proposed algorithms in Ref. Jia et al. (2015). According to the nonzero coefficients in x, it can quickly know the class of the test sample.

Then, compute residue \( r_{i} (y) = \left\| {y - A\delta_{i} } \right\|_{2} ,\quad i = 1,2, \ldots ,C \), where \( \delta_{i} :R^{n} \to R^{n} \) is the characteristic function that selects the coefficients associated with the ith class.

Finally, identify the class label C(y) of y with the minimum residual, \( C (y )= \arg \mathop {\hbox{min} }\limits_{i} r_{i} (y) \).

In SRC, all training samples in the same class are considered equally important, which is unpractical. Moreover, SRC may perform worse than conventional classifier when there are enough training samples. WSRC relies on a distance metric to penalize the dissimilar data points and award the similar points (Chen et al. 2015; Lu et al. 2013). It tries to push the reconstructed sample from the true class near to the testing sample and push the reconstructed sample from all other false classes away from the testing sample. Therefore, WSRC integrates both sparsity and locality structures of the data to further improve the classification performance of SRC. Different from SRC, WSRC solves a weighted l2-norm minimization problem (Lu et al. 2017),

where W is a diagonal weighted matrix, and its diagonal elements are \( w_{1} ,w_{2} , \ldots ,w_{n} \).

Equation (5) makes sure that the coding coefficients of WSRC tend to be not only sparse but also local in linear representation (Dai et al. 2010), which can represent the test sample more robustly. After solving Eq. (5) and then obtaining the coefficients x, the image classification procedure is similar to that of SRC. Comparing Eq. (1) with Eq. (5), it is clear that WSRC is an extension of SRC. SRC is a special case of WSRC when each weight in WSRC is set to 1. The sparse representation of SRC and WSRC is calculated by exploiting l1-norm minimization problem (Lu et al. 2013).

Gaussian kernel distance can capture the nonlinear information within the dataset to measure the similarity between the samples, which is calculated by,

where \( \beta \) is the Gaussian kernel width which is simply set as the mean of all Gaussian kernel distances.

Then, for a training sample \( x_{i} \) and a testing sample y, its weight is \( w_{i} = d(x_{i} ,y) \). After computing the weight of each training sample, the weighted matrix W is denoted as:

In the following experiments, \( \beta \) is set as the average Euclidean distance of the training samples (Fan et al. 2015),

where M is the number of the training samples.

Gaussian kernel distance is usually applied to the typical kernel-based image classification methods such as kernel principal component analysis (KPCA) and kernel discriminant analysis (KDA), which put different weights on individual images. It can be used to construct the weighted values for WSRC (Fan et al. 2015). From Eq. (6), it is seen the Gaussian kernel distance between any two samples is between 0 and 1, so we can directly utilize this distance as the weight of the training samples in WSRC (Yin and Wu 2013; Lu et al. 2013, 2017).

3 Palmprint identification by combining HMS-CLBP and WSRC

Given a training sample and a test sample, if they belong to the same class, they are commonly similar, and then the training sample should be more important in representing the test sample than other training samples. Therefore, we should assign large weight to this training sample. When we employ the weighted training samples to represent the test sample, the representation coefficients may be sparser than that obtained by the un-weighted training samples in the typical SRC. This representation will be beneficial for image classification. In classical WSRC, the distance between test samples and training sample is used to form the weight matrix, which can utilize data locality to seek the sparse linear representation, but the local feature is not extracted effectively. We aim to measure the significance of each training sample in representing the test samples. The significance can be evaluated by computing the distance between the training sample and the test sample. Gaussian kernel distance is used for defining the weight of the training sample.

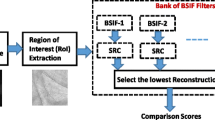

From the above analysis, we propose a palmprint identification method by combining HMS-CLBP and WSRC, namely HMS-CLBP + WSRC. It contains three main steps: The first step is to extract the HMS-CLBP feature vectors of all palmprints. The second step is to calculate Gaussian kernel distances between the HMS-CLBP feature vectors of the training samples and a given test sample. Then, the weights of the training samples are determined by the distance information. The third step is to perform WSRC by using the weighted training samples. The framework of the proposed method is shown in Fig. 4.

Suppose there are m training palmprint images and a testing palmprint image. The steps of the proposed method are listed in detail as follows:

- 1.

Crop each palmprint image and obtain a region-of-interest (ROI) image.

- 2.

Resize each ROI image, generate three ROI images corresponding to three different scales, i.e., scale = 1, 1/2 and 1/4, implement MS-LBPs and MS-CLBPs, calculate their histograms from each ROI image, respectively, and concatenate six histograms to form a HMS-CLBP feature vector for the original image. To address the inherent overfitting problem of linear discriminant analysis, reduce the dimensionality of the concatenated feature vector by principle component analysis (PCA) with retaining 50 maximum eigenvalues, and obtain final feature vector for a palmprint, called as HMS-CLBP feature vector.

- 3.

Compute the Gaussian kernel distances between the HMS-CLBP feature vectors of test image and training images, arrange all weighted HMS-CLBP feature vectors of all the training images into a matrix X, normalize the columns of X to have unit l2-norm, and establish a redundant dictionary (training sample library).

- 4.

Form the weight matrix W in weighted l1-norm minimization problem.

- 5.

Construct and solve the weighted l1-norm minimization problem as Eq. (5), and obtain the sparse representation coefficients.

- 6.

Calculate the residual of each class.

- 7.

Assign the test sample to the class with the minimum residual.

4 Experiments and analysis

In this section, we validate the identification performance of the proposed method using two widely used public palmprint datasets and compare it with four state-of-the-art palmprint recognition algorithms: local line directional patterns (LLDP) (Luo et al. 2016), LBP and SVM (LBP-SVM) (Sehgal 2015), ULBP histograms (ULBPH) (Tamrakar and Khanna 2015) and Pascal coefficients-based LBP and PHOG descriptors (LBP-PHOG) (El-Tarhouni et al. 2017). In the proposed method, the HMS-CLBP features are extracted and SRC is utilized for palmprint identification. In LLDP, ULBPH and LBP-PHOG, the different features are extracted from each cropped ROI image and then the simple nearest neighborhood classifier is employed to recognize palmprint, while in LBP-SVM, the LBP features are extracted and support vector machines (SVM) are used as classifier. All parameters in these methods can be decided via cross-validation experiments using the training data. For simplification, some parameters can be empirically according to the existing references as P = 8, R = 2, scale = 1, 1/2 and 1/4, and the parameter \( \lambda \) in Eq. (5) is set \( \lambda = 0.01 \)(Fan et al. 2015; Lu et al. 2013, 2017). All experiments are conducted on an Intel i7 Quadcore 3.4 GHz desktop computer with 8-GB RAM, Window 7 and MATLAB 7.0.

4.1 Preprocessing

Similar to other biometric identification systems, a palmprint identification system includes capturing palmprint, segmenting region of interest (ROI), extracting and selecting features and classifying and identifying individuals (Kong et al. 2009; Zhang et al. 2012), where segmenting accurately ROI from the palmprint plays a crucial role in the overall palmprint recognition, because the rich textural information of palmprint is in the center part of a palm, and it can define a coordinate system to align the different palmprints collected from the same palm; otherwise, the recognition result would be unreliable. PolyU 2D palmprints were collected in a constraint environment, and their ROIs have been extracted in the database. CASIA palmprints were captured from different distances and positions between hand and camera. Due to the different sizes of hand of each subject, the ROIs of different sizes were segmented from CASIA database. The main steps to extract ROIs include palmprint collection, binarization, palmprint alignment, finger boundary extraction, coordinate alignment, ROI region and extracted ROI. These steps are explained in detail as follows (Mansoor et al. 2011; Zhang et al. 2012; Raghavendra and Busch 2015; Kylberg and Sintorn 2013; Abukmeil et al. 2015; Luo et al. 2016) (Fig. 5):

- 1.

Convert the original palmprints into grayscale image using a threshold obtained by local minima of palmprint histogram and segment the palmprint region from the background image. Then, the irrelevant parts of this binary image are removed by morphological open operations.

- 2.

Segment palmprint by bounding box operation on the mask of the region having the maximum area in the binary images, and then perpendicularly rotate the obtained segmented image to the maximum elliptical axis, which is a line between tip of the middle finger and mid of the wrist (Raghavendra and Busch 2015).

- 3.

Extract the boundary of four fingers by tracing the boundary pixel of palmprint segment from top left to right bottom, while limiting the height of the fingers to one-third height of the palmprint image.

- 4.

Select the tips of four fingers on the basis of local maximum of y coordinate on the boundary pixels of fingers and label the gaps between two fingers as A and B through the local minimum of the boundary pixels between tips of two fingers.

- 5.

Connect A and B by a line L1 and draw another line L2 passing through the middle point of L1 perpendicularly.

- 6.

Draw a square region of size AB parallel to line AB at a distance of one-tenth of line AB and finally segment ROI image.

.

4.2 Experiments on PolyU dataset

The first experiment is carried out to test the algorithm on PolyU dataset, which was constructed for research and non-commercial purposes by the Hong Kong Polytechnic University (PolyU) (http://www4.comp.polyu.edu.hk/~biometrics/). The database has totally 7752 grayscale images (384 × 288 pixels at 75 dpi) in BMP image format, corresponding to 386 different the left and right palms of 193 individuals, and about 20 palmprints from each individual were collected in a constraint environment in two sessions, where about 10 palmprints were collected in each session. The palmprints in the PolyU database have relatively high image qualities. Figure 6 shows 40 normal images and eight noisy images of palmprint ROI of PolyUI database. In the database, the size of each ROI image is 128 × 128 pixels. From Fig. 6a, c, it is observed that there are some noises and some variations in illumination and position between the images captured at two sessions. From Fig. 4, although the resolution is low, the wrinkles and the principal lines are still clear in whole.

First, we select 200 palmprints of the top 10 subjects in the PolyUI dataset to conduct a lot of experiments by WSRC and SRC to indicate the advantage of WSRC. Figure 7 shows some visualization results in two cases. Figure 7a shows the first palmprint as a test image belonging to the first subject from the PolyUI dataset. Figure 7b shows the sparse representation coefficients for this test image by SRC and WSRC directly using the original palmprints, where the weights in WSRC are the Gaussian kernel distances between any training palmprints and the test palmprint. Figure 7c shows the sparse representation coefficients for this test image by SRC and WSRC using the HMS-CLBP feature vectors, where the weights in WSRC are the Gaussian kernel distances between the HMS-CLBP feature vectors of any training palmprints and the test palmprint. The final coefficient of each training sample equals the weight of this training sample multiplying its representation coefficient obtained by exploiting the typical SRC on the weighted training samples.

From Fig. 7b, c, it is seen that the first training sample belonging to the first class gets the largest coefficient in both SRC and WSRC, and the four largest coefficients of the training sample are 0.34223, 04125,0.3617 and 0.4615, respectively, and the final representation coefficients derived from WSRC are the sparsest. Therefore, the effectiveness of HMS-CLBP + WSRC is the best on the HMS-CLBP feature vector set, and WSRC is better than SRC as a whole in two cases, i.e., on the original dataset and the extracted feature vector set. For most of the test samples, the same result can be achieved in many experiments on the PolyUI database.

In the following experiments, fivefold cross-validation scheme is performed in which the PolyUI dataset is randomly partitioned into five equal subsets and four palmprint images from each individual in a subset. Among them, four subsets are used for training and the remaining subset is used for testing. The classification accuracy of one cross-validation is the average over the five cross-validation evaluations. The cross-validation experiment is repeated 50 times, and the average result is shown in Table 1. The comparison results of LLDP (Luo et al. 2016), LBP-SVM (Sehgal 2015), ULBPH (Tamrakar and Khanna 2015) and LBP-PHOG (El-Tarhouni et al. 2017) are also shown in Table 1.

4.3 Experiments on CASIA dataset

The second experiment is performed on CASIA palmprint dataset. The dataset was made by Institute of Automation, Chinese Academy of Sciences (http://www.cbsr.ia.ac.cn/english/Palmprint%20Databases.asp), which contains 5335 grayscale palmprint images (640 × 480 pixels at 72 dpi) collected from 312 individuals in a single session using CMOS camera, ten left palmprints and ten right palmprints per subject. The two palms of the same subject are considered as two distinct classes. The setup imposes less physical constraints on the users’ palm as compared to PolyU capturing device and hence leads to palm movements introducing distortions and blurring in the captured palmprint images. Figure 8 illustrates 20 palmprints of a subject of CASIA palmprint dataset.

Considering the palmprints of CASIA database suffer more from palm movements and distortions, we firstly resize each palmprint to 380 × 284 pixels before extracting the ROI and then extract ROI image as in Sect. 4.1. Fivefold cross-validation scheme is also applied and repeated 50 times for palmprint identification. All the other parameters are kept unchanged as in Sect. 4.2. The average results of LLDP, LBP-SVM, ULBPH, LBP-PHOG, and the proposed method are listed in Table 2.

4.4 Experiments on abnormal and occluded palmprint sets

To show the robustness of the proposed method, two sets of experiments are carried out two abnormal and occluded palmprint testing sets, where the normal palmprint recognition template used in Sects. 4.2 and 4.3 is employed.

4.4.1 Experiment 1

We select all palmprints of the top 20 subjects from PolyU database. From of them, we artificially construct a set of 50 abnormal palmprints as test set, which are Gaussian noise, salt- and pepper-noise and speckle noise, and irregular, corruption and misalignment samples. The noise samples are generated by the imnoise (I, type, M, V) function of MATLAB 7.0, where type is noise modal such as Gaussian, salt and pepper, and speckle noises, M is noise mean and V is variance to the image I. We set as zero-mean noise with 0.01 variance. Figure 9 shows ten abnormal palmprints. We carry out and repeat the recognition experiment 50 times. The average of the recognition rates of five methods is shown in Table 3.

4.4.2 Experiment 2

We also use all palmprints of the top 20 subjects from PolyU database to test the robustness of the proposed method. In real life, the size and position of occlusion in palmprints vary for different reasons. To test the robustness of the proposed method, we randomly select 50 palmprints and artificially shade each palmprint with occlusion from 1 to 20% at any portion on it. Figure 10 shows some occluded palmprints. The recognition template used in Sects. 4.2 and 4.3 is also utilized to perform the palmprint identification experiments. The identification experiments are carried out and repeated 50 times. The averages of 50 experiments by five methods are listed in Table 4.

4.5 Computational complexity

In this subsection, we compare the computational complexity of the related algorithms and the proposed method. Fivefold cross-validation scheme is performed on the PolyUI dataset to obtain the processing time for each method. The processing time is the average over all the palmprint images. Our code is written in MATLAB 7.0 software, and the experiments are performed on an Intel i7 Quadcore 3.4 GHz desktop computer with 8-GB RAM. The related methods are LLDP (Luo et al. 2016), HM-LBP (Guo et al. 2017), WSRC-MSLBP (Yin and Wu 2013) and uniform LBP and sparse representation (ULBP-SR) (Wang et al. 2014). In WSRC-MSLBP and ULBP-SR algorithms, SR and WSRC are implemented based on l1 norm, and WSRC is performed often faster than SR. Their efficiency is usually lower than that based on l2 norm. The proposed method is based on l2 norm. Table 5 lists the computational time of each algorithm. From Table 5, our method is the fastest. The main reason may be that the Gaussian kernel distance in WSRC in our method can accelerate convergence in WSRC. Our method is much faster than HM-LBP, because we down-sample the original image instead of altering the radius of a circle to change the spatial resolution. For an image with size of Ix × Iy pixels, a total of Ix × Iy × m thresholding operations are required for all the pixels in each scale. Moreover, the MS-LBPs and MS-CLBPs histograms are calculated based on Ix × Iy binary strings. Therefore, HM-LBP is time-consuming. In our method, we fix the radius and number of neighbors to the images of different scales to develop a second multi-scale analysis, which can reduce the computational complexity. Among these algorithms, since LLDP needs to extract the features from each image, which is also time-consuming, it is worth mentioning that the proposed HMS-CLBP descriptor can be implemented in parallel to achieve high computational efficiency.

4.6 Analysis

From Fig. 7, it is found that Gaussian kernel distance-based WSRC (GWSRC) algorithm is more effective than Euclidean distance-based WSRC (EWSRC) algorithm. Compared with Euclidean distance, Gaussian kernel distance can effectively capture the nonlinear information within the dataset. EWSRC may fail to perform well if the Euclidean distance between training and testing samples is very large and the training sample is normalized to have unit l2-norm which is often conducted in many SRC-based image classification methods. Because GWSRC between any training sample and the test sample is between 0 and 1, it not only can be directly used to calculate the sample weights in SRC-based methods, but also can be used as the nonlinear mapping that transforms the original input space into a high-dimensional feature space.

Tables 1, 2, 3, 4 and 5 show that the proposed method outperforms the other comparison methods, i.e., the recognition rate is the highest and the computational time is the least. Particularly, from Tables 3 and 4, it can achieve the high recognition effect when there are some bad and occluded samples. The improvement comes from making full use of the respective advantages of HMS-CLBP and WSRC. In fact, collecting palmprints is inevitably affected by position, rotation, illumination, noise and occlusion. The hierarchical multi-scale local invariant texture features are extracted by HMS-CLBP, which not only have rotation invariance and noise resistance, but also better represent the biological characteristics of palmprint image and effectively reduce the feature dimension. The palmprints are effectively recognized by WSRC. It uses the Gaussian kernel distance between the training and test samples as the prior information to construct the SRC model. It is robust against varying extents of occlusion.

5 Conclusion and the future work

Palmprint-based identification has always attracted increasing amount of attention because line features are abundant in palmprint so that palmprint recognition can be used in complex environments. To improve the recognition performance of the existing palmprint recognition system, a palmprint recognition method is proposed by combining HMS-CLBP and WSRC. Firstly, the hierarchical multi-scale local invariant texture features are extracted from each palmprint image by HMS-CLBP. The extracted features are weighted and expanded into column vectors to establish a redundant dictionary (training sample set). Then, the test sample is sparsely represented in the dictionary to obtain the sparse representation coefficients. The recognition of palmprint images is realized by comparing the residuals of different classes. The experimental results on the PolyU and CASIA databases validate the good performance of the proposed method. Although many palmprint recognition methods have achieved satisfactory recognition accuracy in the normal palmprint database, robust palmprint recognition is still challenging. Several interesting directions, such as unconstrained acquisition, efficient palmprint representation, corrupted or occluded palmprint recognition, might be promising for future research. In future work, we intend to investigate a more robust method for palmprint recognition system.

References

Abukmeil MAM, Hatem E, Alhanjouri M (2015) Palmprint recognition via bandlet, ridgelet, wavelet and neural network. J Comput Sci Appl 3(2):23–28

Chan CH, Kittler J (2010) Sparse representation of (multiscale) histograms for face recognition robust to registration and illumination problems. In: IEEE 17th international conference on image processing (ICIP 2010), pp 3009–3012

Chen C, Zhang B, Su H et al (2015) Land-use scene classification using multi-scale completed local binary patterns. Signal Image Video Process 10(4):745–752

Dai X, Wang B, Wang P (2010) Palmprint recognition combining LBP and cellular automata. Lect Notes Comput Sci 6215:460–466

Dexing Z, Xuefeng D, Kuncai Z (2018) Decade progress of palmprint recognition: a brief survey. Neurocomputing. https://doi.org/10.1016/j.neucom.2018.03.081

El-Tarhouni W, Boubchir L, Elbendak M et al (2017) Multispectral palmprint recognition using Pascal coefficients-based LBP and PHOG descriptors with random sampling. Neural Comput Appl 4:1–11. https://doi.org/10.1007/s00521-017-3092-7

Fan Z, Ming N, Qi Z et al (2015) Weighted sparse representation for face recognition. Neurocomputing 151(1):304–309

Guo X, Zhou W, Zhang Y (2017) Collaborative representation with HM-LBP features for palmprint recognition. Mach Vis Appl 28(3–4):283–291

Huang L, Chen C, Li W et al (2016) Remote sensing image scene classification using multi-scale completed local binary patterns and fisher vectors. Remote Sens 8(6):483. https://doi.org/10.3390/rs8060483

Jia Q, Gao X, Guo H et al (2015) Multi-layer sparse representation for weighted LBP-blocks based facial expression recognition. Sensors (Basel) 15(3):6719–6739

Kong A, Zhang D, Kamel M (2009) A survey of palmprint recognition. Pattern Recognit 42(7):1408–1418

Kylberg G, Sintorn IM (2013) Evaluation of noise robustness for local binary pattern descriptors in texture classification. EURASIP J Image Video Process 1:17. https://doi.org/10.1186/1687-5281-2013-17

Lim ST, Ahmed MK, Lim SL (2017) Automatic classification of diabetic macular EDEMA using a modified completed local binary pattern (CLBP). In: IEEE international conference on signal and image processing applications. https://doi.org/10.1109/icsipa.2017.8120570

Lu CY, Min H, Gui J et al (2013) Face recognition via weighted sparse representation. J Vis Commun Image Represent 24(2):111–116

Lu Z, Xu B, Liu N et al (2017) Face recognition via weighted sparse representation using metric learning. In: IEEE International conference on multimedia and expo (ICME), Hong Kong, pp 391–396

Luo YT, Zhao LY, Zhang B (2016) Local line directional pattern for palmprint recognition. Pattern Recognit 50:26–44

Mansoor AB, Masood H, Mumtaz M et al (2011) A feature level multimodal approach for palmprint identification using directional subband energies. J Netw Comput Appl 34(1):159–171

Mu M, Ruan Q, Shen Y (2010) Palmprint recognition based on discriminative local binary patterns statistic feature. In: International conference on signal acquisition and processing. https://doi.org/10.1109/icsap.20

Ouyang Y, Sang N (2013) A facial expression recognition method by fusing multiple sparse representation based classifiers. In: Proceedings of the 10th international symposium on neural networks, pp 479–488

Raghavendra R, Busch C (2015) Texture based features for robust palmprint recognition: a comparative study. EURASIP J Inf Secur 2015(1):5. https://doi.org/10.1186/s13635-015-0022-z

Sehgal P (2015) Palm recognition using LBP and SVM. Int J Inf Technol Syst 4(1):35–41

Song KC, Yan YH, Chen WH et al (2013) Research and perspective on local binary pattern. Acta Autom Sin 39(6):730–744

Tamrakar D, Khanna P (2015) Occlusion invariant palmprint recognition with ULBP histograms. Procedia Comput Sci 54:491–500

Wang W, Jin W, Xie Y et al (2014) Palmprint recognition using uniform local binary patterns and sparse representation. Opto Electron Eng 41(12):60–65

Xu Y, Fan Z, Qiu M et al (2013) A sparse representation method of bimodal biometrics and palmprint recognition experiments. Neurocomputing 103(2):164–171

Yassir A, Larbi B, Boubaker D (2019) Multispectral palmprint recognition: a survey and comparative study. J Circuits Syst Comput 28(07):1950107

Yin HF, Wu XJ (2013) A new weighted sparse representation based on MSLBP and its application to face recognition. Partial Superv Learn LNAI 8183:104–115

Zhang D, Zuo W, Yue F (2012) A comparative study of palmprint recognition algorithms. ACM Comput Surv 44(1):1–37

Acknowledgements

This work is partially supported by the China National Natural Science Foundation under Grant Nos. 61473237. The authors would like to thank all the editors and anonymous reviewers for their constructive advices. The authors would like to thank the Hong Kong Polytechnic University (PolyU) and Institute of Automation, Chinese Academy of Sciences (CASIA) for sharing their palmprint databases with us.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declared that they have no conflicts of interest to this work. We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Communicated by V. Loia.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zhang, S., Wang, H. & Huang, W. Palmprint identification combining hierarchical multi-scale complete LBP and weighted SRC. Soft Comput 24, 4041–4053 (2020). https://doi.org/10.1007/s00500-019-04172-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-019-04172-3