Abstract

Over the last decade, several metaheuristic algorithms have emerged for solving numerical function optimization problems. Because these algorithms still behave suboptimally on some problems, a large number of studies have sought new and better ones. This paper proposes a new metaheuristic algorithm, the car tracking optimization algorithm (CTA), which was designed by examining the programming methods of other metaheuristic algorithms. The proposed algorithm was tested on 55 benchmark functions, and the results were compared with those of the firefly algorithm (FA), the cuckoo search algorithm (CS), and the vortex search algorithm (VS). The results indicate that the proposed algorithm outperforms FA, CS, and VS.


1 Introduction

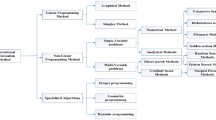

Finding a set of parameter values that satisfies a required performance metric under certain constraints is called optimization. In practical problems, it means choosing the best scheme. As engineering, manufacturing, medicine, finance, biology, chemistry, physics, and other areas are researched more deeply, many complicated optimization problems arise. Solving these problems with traditional gradient-based optimization methods alone is time consuming. Therefore, since the 1970s, researchers inspired by physical, biological, and social phenomena have proposed a series of intelligent optimization algorithms, which provide a good way to solve complex optimization problems. These algorithms are called meta-heuristic algorithms. By the 1980s, the simulated annealing algorithm (Gelatt et al. 1983; Goffe et al. 1994), the random hill-climbing algorithm (Goldfeld et al. 1966; Choi and Yeung 2006), and the evolutionary algorithm (Holland 1975; Rechenberg 1965; Golberg 1989) had come into being. Since the 1990s, some scholars have drawn inspiration from the foraging behavior of natural swarms and have put forward stochastic optimization algorithms that simulate this behavior, establishing the family of swarm intelligence algorithms. The current swarm intelligence algorithms include the ant colony algorithm (Colorni et al. 1991; Dorigo et al. 1996; Dorigo and Stützle 1999), particle swarm optimization (Eberhart and Kennedy 1995; Shi and Eberhart 1998), fish swarm algorithms (Li et al. 2002; Wang et al. 2005), the artificial bee colony algorithm (Karaboga and Basturk 2007; Gao and Liu 2012), the firefly algorithm (Yang 2010b; Yang et al. 2012), the cuckoo search algorithm (Yang and Deb 2009, 2010), the bat algorithm (Yang 2010a; Yang and Hossein Gandomi 2012), and the fruit fly algorithm (Pan 2012; Li et al. 2013). These swarm intelligence algorithms provide more choices for solving complex optimization problems.

Although meta-heuristic algorithms show a strong ability to solve modern nonlinear global optimization problems, some of them fall into a local optimum on certain problems. Yang (2010c) pointed out that all meta-heuristic algorithms strive, to some extent, to balance randomization and local search. Each optimization algorithm has its own strengths and weaknesses. It is therefore worthwhile to look for a better algorithm that can solve most optimization problems, which is why so many swarm intelligence algorithms have emerged in recent years.

Studying these optimization algorithms reveals a common pattern: the set of all possible solutions of the problem is regarded as the solution space; a new solution set is generated by applying certain operators to a subset of the possible solutions; and the population gradually evolves toward an optimal or near-optimal solution. Among these swarm intelligence optimization algorithms, the particle swarm algorithm (Eberhart and Kennedy 1995) updates the velocity and position of every individual in the population according to Eqs. (1) and (2).

As Eq. (1) shows, the flight path of a particle consists of three parts. The first is the inertial motion of the particle, which carries the information of its original speed \(v_i (t)\). The second is the “cognitive component,” which reflects the particle's own experience through the distance to the best position \(p_i \) that the particle itself has found. The third is the “social component,” which reflects the sharing of social information through the distance between the particle and the best position \(p_\mathrm{g} \) of the swarm. \(c_1 , c_2 , \omega \) are weights that control these three parts. Once the speed of the next generation has been updated, the particle positions are updated by Eq. (2). The composition of the velocity update formula shows that the particle swarm algorithm does not take the relationships among the particles into account; it considers only the relationship between each particle and its own best position \(p_i \) and the relationship between each particle and the best position \(p_\mathrm{g} \) of the population.
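Eqs. (1) and (2) are not reproduced in this text, so the following is only a minimal sketch of the widely used inertia-weight form of the particle swarm update, which matches the three-part description above. The function name `pso_step`, the default values of `omega`, `c1`, and `c2`, and the uniform random factors `r1` and `r2` are illustrative assumptions, not values taken from this paper.

```python
import random


def pso_step(x, v, p_i, p_g, omega=0.7, c1=1.5, c2=1.5, rng=random):
    """One velocity/position update for a single particle.

    x, v     : current position and velocity (one float per dimension)
    p_i, p_g : the particle's own best position and the swarm's best position
    omega, c1, c2 : weights of the three parts (default values are assumptions)
    """
    new_x, new_v = [], []
    for xj, vj, pij, pgj in zip(x, v, p_i, p_g):
        r1, r2 = rng.random(), rng.random()      # assumed uniform(0, 1) factors
        inertia = omega * vj                     # part 1: inertial motion
        cognitive = c1 * r1 * (pij - xj)         # part 2: own experience
        social = c2 * r2 * (pgj - xj)            # part 3: swarm information
        vj_next = inertia + cognitive + social
        new_v.append(vj_next)
        new_x.append(xj + vj_next)               # position update
    return new_x, new_v
```

Note that no term in this update refers to another particle's current position, which is the limitation the paragraph above points out.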

The firefly algorithm (Yang 2010b) updates the speed and location according to Eqs. (3) and (4).

Eq. (3) shows that the flight path of a firefly consists of two parts. The first reflects the attraction \(\beta _{ij} \left( {r_{ij} } \right) \) exerted on firefly i by a firefly j whose absolute brightness is greater, together with the relative distance between them; the second is a random term \(\alpha \varepsilon _i \) with a specific coefficient. The composition of the speed update formula shows that the firefly algorithm considers only the relationships among the fireflies and does not use the relationship between each firefly and the global optimal position to improve its global optimization ability.
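As an illustration of the two-part move described above, here is a minimal sketch that assumes the commonly used attractiveness \(\beta(r)=\beta_0 e^{-\gamma r^{2}}\) and a uniform random term; Eqs. (3) and (4) are not reproduced in this text, so the exact form may differ. The function name `firefly_move` is hypothetical, and the defaults follow the FA settings quoted later in Sect. 3.1 (\(\beta_0 = 1\), \(\gamma = 1\), \(\alpha = 0.2\)).

```python
import math
import random


def firefly_move(x_i, x_j, beta0=1.0, gamma=1.0, alpha=0.2, rng=random):
    """Move firefly i toward a brighter firefly j.

    Assumes attractiveness beta0 * exp(-gamma * r^2) and a random term
    alpha * (u - 0.5) with u ~ uniform(0, 1); both forms are assumptions.
    """
    r_squared = sum((a - b) ** 2 for a, b in zip(x_i, x_j))
    beta = beta0 * math.exp(-gamma * r_squared)      # attraction part
    return [
        a + beta * (b - a) + alpha * (rng.random() - 0.5)  # plus random part
        for a, b in zip(x_i, x_j)
    ]
```

The move depends only on the pair (i, j); the global best position never appears, which is the point made in the paragraph above.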

The cuckoo search algorithm (Yang and Deb 2009) performs a global random search according to Eq. (5) to update the speed, where \(\alpha \) is the step size and \(L\left( \lambda \right) \) is the Lévy distribution function. Equation (6) represents the global random search trail of a cuckoo under the Lévy flight process. \(L\left( \lambda \right) \) improves the global search ability, but the algorithm uses neither the relationships among the populations nor the relationship between each population and the global optimal population to improve its search ability.

The fruit fly algorithm (Pan 2012) updates the X-axis (\(X_i\left( {t{+}1} \right) \)) and Y-axis (\(Y_i \left( {t+1} \right) \)) positions of the fruit flies in the next generation according to Eqs. (7) and (8). \(X\_\mathrm{axisbest}(t)\) and \(Y\_\mathrm{axisbest}(t)\) give the best place found by all fruit flies searching for food in the last generation, and the reciprocal of the distance (\(\mathrm{Dist}_i \)) between the new position and the origin is taken as a solution, as in Eqs. (9) and (10). The fruit fly algorithm considers the position of the fruit fly population on the x and y axes and updates the position using a random number, but Eqs. (7)–(10) show that the algorithm uses neither the relationships among the populations nor the relationship between each population and the global optimal population.
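The update described above can be sketched as follows. Eqs. (7)–(10) are not reproduced in this text, so this is only an illustration under stated assumptions: the random offset is taken as uniform in `[-step, step]`, and the names `fruit_fly_candidates` and `step` are hypothetical.

```python
import math
import random


def fruit_fly_candidates(x_best, y_best, n_flies, step=1.0, rng=random):
    """Generate next-generation fly positions around the last best place.

    Returns a list of (x, y, s) where s = 1 / Dist is the candidate solution.
    The uniform offset in [-step, step] is an assumption.
    """
    candidates = []
    for _ in range(n_flies):
        x = x_best + step * (2.0 * rng.random() - 1.0)   # new X-axis position
        y = y_best + step * (2.0 * rng.random() - 1.0)   # new Y-axis position
        dist = math.hypot(x, y)                          # distance to the origin
        s = 1.0 / dist if dist > 0.0 else float("inf")   # reciprocal of distance
        candidates.append((x, y, s))
    return candidates
```

Every candidate starts from the previous best place rather than from the fly's own current location, which is the architectural difference from the other three algorithms.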

If we set aside the background of these algorithms and their advantages and disadvantages and look only at the position and velocity update formulas of the four algorithms above, we find that in the particle swarm optimization algorithm (PSO) and the firefly algorithm the velocity update formula is built from the individuals' own biological information together with some random numbers, while in the cuckoo search algorithm it is derived from the Lévy distribution function. The fruit fly algorithm differs in architecture from the former three: as Eqs. (7) and (8) show, the first term of the two equations is not the current location \(x_i (t)\) of each population but the best position \(X\_\mathrm{axisbest}(t)\) and \(Y\_\mathrm{axisbest}(t)\) obtained in the last generation.

The velocity update formulas of the different algorithms differ, but all of these algorithms essentially change the current position by using different operators to change the speed. In a sense, therefore, we can learn from the speed update methods of the above algorithms and design a reasonable and effective speed update formula artificially, obtaining a new algorithm without observing the natural environment.

Although the algorithms above have good search ability, each of them uses either the relationships among the various populations or the relationship between each population and the global optimal population, but not both. By studying the advantages and disadvantages of these algorithms, this paper designs a new optimization algorithm intended to find optimal solutions for more optimization problems. It introduces a new adaptive global velocity update method that uses the relationship between each population and the global optimal population, and a new local speed update method that uses the relationships among the various groups. The algorithm divides the search population into two groups. One group uses the adaptive global velocity update method to find the global optimal solution, and the other uses the adaptive local velocity update method to help the algorithm jump out of a local optimum when it falls into one. The background scenario of the algorithm is a large number of cars looking for an object on a road, so the proposed algorithm is named the car tracking optimization algorithm. To test its effectiveness, the algorithm is applied to a total of 55 test functions taken from Doğugan and Ölmez (2015) and Osuna-Enciso et al. (2016), and its results are compared with those of the firefly algorithm, the cuckoo search algorithm, and the VS algorithm. The comparison shows that the proposed algorithm finds better solutions to the test functions than these three algorithms.

The rest of this article is arranged as follows: Section 2 describes the car tracking algorithm in detail, Section 3 analyzes and discusses the experimental results, and Section 4 summarizes the paper.

2 The proposed car tracking algorithm

As shown in Fig. 1, assume that there are N cars on a road (only A and B are shown in Fig. 1), located on both sides of the origin, and that they are assigned to search for an object p on this road. Car A is to the left of the origin, so the value that represents its position is negative; car B is to the right of the origin, so the value that represents its position is positive.

Each car is characterized by the speed (\(V_N\)) at which it intends to search for object p and by its current position (\(X_N\)). The cars decide the magnitude and direction of the speed (\(V_N\)) on their own in order to find the location (\(X_p\)) where object p probably is. When a car finds a possible location of object p, all cars move to that location and then restart the search for the real location of object p.

In this article, based on the principle of cars searching for object p, the procedure is divided into the following steps, which readers can also use as a guide for programming:

(1) Randomly initialize the car population location (\(X\_\mathrm{axis}_{i,j} \)), where i indexes the randomly generated car populations and j indexes the cars within a population. If the objective function is one-dimensional, each population contains one car (\(j=1\)). If the objective function is n-dimensional, each population contains n cars (\(j = 1, \ldots , n\)).

$$\mathrm{Init}\,X\_\mathrm{axis}_{i,j}. \tag{11}$$

(2) Randomly initialize the speed (\(V\_\mathrm{RandomValue}_{{i,j}} \); the sign represents the direction) and compute the initial position (\(X_{i,j} (t)\)) of each individual (i, j) of the car population by Eq. (12). The position is limited to a certain range, as shown in Eq. (13), where t is the iteration number.

$$X_{i,j} (t)=X\_\mathrm{axis}_{i,j} +V\_\mathrm{RandomValue}_{i,j} \tag{12}$$

$$X_{i,j} (t)= \begin{cases} \mathrm{rand}\left( 0,1 \right) \cdot \mathrm{upperlimit}_j , & X_{i,j} (t)>\mathrm{upperlimit}_j \\ X_{i,j} (t), & \mathrm{lowerlimit}_j \le X_{i,j} (t)\le \mathrm{upperlimit}_j \\ \mathrm{rand}\left( 0,1 \right) \cdot \mathrm{lowerlimit}_j , & X_{i,j} (t)<\mathrm{lowerlimit}_j \end{cases} \tag{13}$$

(3) After the initial move, substitute the car population position (\(X_i (t)\)) into the objective judgment function (also called the fitness function) to obtain the possible object p (\(P.\mathrm{Might}_i (t)\)) found by every car population.

$$P.\mathrm{Might}_i (t)=\mathrm{Function}\left( X_i (t) \right) . \tag{14}$$

(4) Find the \(i_{\mathrm{best}} \)-th (first, second, third, fourth, …) car population, which is the one most likely to have found object p among all car populations. \(P.\mathrm{Might}_{\mathrm{best}} (t)\) is the optimal value of the objective function found after t iterations, which can be seen as the object p most likely to be found in iteration t. Compare it with the historical optimum \(P_{\mathrm{best}} \) (in the first iteration, \(P_{\mathrm{best}} \) can be set to any solution). If it is better than \(P_{\mathrm{best}} \), replace \(P_{\mathrm{best}} \) and the best position \(X\_\mathrm{axisBest}_j (t)\) with \(P.\mathrm{Might}_{\mathrm{best}} (t)\) and \(X_{\mathrm{bestindex},j} (t)\), and also replace the position of the least likely object p (\(X\_\mathrm{axisWorst}_{j} (t)\)) with \(X_{\mathrm{worstindex},j} (t)\). All other car populations then start from that location (\(X\_\mathrm{axisBest}\)).

$$\left[ P.\mathrm{Might}_{\mathrm{best}} (t)\quad i_{\mathrm{best}} \right] =\mathrm{min}\left( P.\mathrm{Might} \right) \tag{15}$$

$$\left[ P.\mathrm{Might}_{\mathrm{worst}} (t)\quad i_{\mathrm{worst}} \right] =\mathrm{max}\left( P.\mathrm{Might} \right) \tag{16}$$

$$P_{\mathrm{best}} =P.\mathrm{Might}_{\mathrm{best}} (t) \tag{17}$$

$$X\_\mathrm{axisBest}_{j} (t)=X_{\mathrm{bestindex},j} (t) \tag{18}$$

$$X\_\mathrm{axisWorst}_{j} (t)=X_{\mathrm{worstindex},j} (t) \tag{19}$$

$$X_{\mathrm{bestindex},j} (t)=\mathrm{lowerlimit}_j +\mathrm{rand}\left( 0,1 \right) \cdot \left( \mathrm{upperlimit}_j -\mathrm{lowerlimit}_j \right) \tag{20}$$

$$X_{\mathrm{worstindex},j} (t)=\mathrm{lowerlimit}_j +\mathrm{rand}\left( 0,1 \right) \cdot \left( \mathrm{upperlimit}_j -\mathrm{lowerlimit}_j \right) . \tag{21}$$

(5) So that the cars that have arrived at this position can search for object p more intelligently, all cars are divided into two groups. Group A conducts local searching at speed \(V_{{i,j}}\), which is obtained from Eqs. (22) to (24); group B conducts global searching at speed \(U_{{i,j}} \), which is obtained from Eqs. (25) and (26). The maximum number of search iterations is MAXGEN, and t is the number of searches the cars have performed so far. Eqs. (22) and (25) are updated in every generation of searching.

$$V_{i,j} = \begin{cases} S_i /\left( X_{i,j} \left( t-1 \right) -X_{i-1,j} \left( t-1 \right) \right) , & i=2:k/2 \\ S_i /X_{i,j} , & i=1 \end{cases} \tag{22}$$

In Eq. (22),

$$S_i = 10^{\left( 10\cdot \mathrm{rand}\left( 0,1 \right) \right) }\cdot \mathrm{rand}\left( 0,1 \right) \cdot \left( 1/\left( m( i )\cdot t \right) ^{2} \right) ,\quad i = 1:k/2 \tag{23}$$

In Eq. (23),

$$m=m_0 +P.\mathrm{Might}_{\mathrm{best}} (t) /10{,}000\cdot \mathrm{rand}n\left( k/2,1 \right) \tag{24}$$

The physical meaning of Eq. (22) is that the smaller the distance between car (i, j) and car (\(i-1, j\)), the higher the speed at which car (i, j) conducts its next search after reaching the best position, and vice versa. This definition enlarges the local search range to some extent and prevents the search from becoming too dense. S is defined as the sequence of local search speed change factors. It is the product of three parts: \(10^{\left( {10\cdot \mathrm{rand}\left( {0,1} \right) } \right) }\), \(\mathrm{rand}\left( {0,1} \right) \), and \(\left( {1/\left( {m\left( i \right) \cdot t} \right) ^{2}} \right) \). Multiplying \(10^{\left( {10\cdot \mathrm{rand}\left( {0,1} \right) } \right) }\) by \(\mathrm{rand}\left( {0,1} \right) \) gives a random number between 0 and \(10^{10}\), which improves the ability of the algorithm to jump out of a local optimum to a certain extent. \(\left( {1/\left( {m\left( i \right) \cdot t} \right) ^{2}} \right) \) is a sequence determined by m, and m is a set of numbers whose mean is \(m_0 \) and whose standard deviation is \(P.\mathrm{Might}_{\mathrm{best}} (t)/10{,}000\). m is thus positively related to \(P.\mathrm{Might}_{\mathrm{best}} (t)\), so \(\left( {1/\left( {m\left( i \right) \cdot t} \right) ^{2}} \right) \) is negatively related to \(P.\mathrm{Might}_{\mathrm{best}} (t)\). Because an algorithm tends to settle into a local optimum as the optimization proceeds, the intent for minimization is that the smaller \(P.\mathrm{Might}_{\mathrm{best}} (t)\) becomes, the larger \(\left( {1/\left( {m\left( i \right) \cdot t} \right) ^{2}} \right) \) is, which strengthens the ability of the algorithm to jump out of a local optimum. Note, however, that for a fixed \(m\left( i \right) \) this factor itself decreases as the iteration count t increases, so in later iterations the escape ability comes mainly from the large random prefactor and from the shrinking denominator of Eq. (22). For maximization, \(\left( {1/\left( {m\left( i \right) \cdot t} \right) ^{2}} \right) \) needs to be replaced with \(\left( {m\left( i \right) \cdot t} \right) ^{2}\) to obtain the corresponding effect. The product of \(10^{\left( {10\cdot \mathrm{rand}\left( {0,1} \right) } \right) }\), \(\mathrm{rand}\left( {0,1} \right) \), and \(\left( {1/\left( {m\left( i \right) \cdot t} \right) ^{2}} \right) \) (or \(\left( {m\left( i \right) \cdot t} \right) ^{2}\)) therefore ensures that the local search speed change factor sequence S is random and that the algorithm is able to jump out of a local optimum. The local search speed depends on the relative distance between \(X_{i,j} \) and \(X_{i-1,j}\). As this distance shrinks during the optimization, the result of Eq. (22) becomes larger, which again improves the ability of the algorithm to jump out of a local optimum.
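To make Eqs. (22)–(24) concrete, the following is a minimal sketch of the local speed computation for group A in the minimization case. It is an illustration under stated assumptions rather than the authors' MATLAB code: the two `rand(0,1)` factors in Eq. (23) are drawn independently, the first row uses \(X_{1,j}(t-1)\) as its denominator, and a zero denominator yields a speed of 0 (Eq. (22) does not define this case). The name `local_speeds` and the `rng` parameter are hypothetical.

```python
import random


def local_speeds(X_prev, t, p_best, m0=10_000.0, rng=random):
    """Local search speeds V[i][j] for group A, following Eqs. (22)-(24).

    X_prev : k/2 rows of positions X[i][j] at iteration t-1
    t      : current iteration number (t >= 1)
    p_best : P.Might_best(t), the best objective value found so far
    """
    V = []
    for i, row in enumerate(X_prev):
        # Eq. (24): m has mean m0 and standard deviation p_best / 10,000.
        m_i = m0 + p_best / 10_000.0 * rng.gauss(0.0, 1.0)
        # Eq. (23): two independent uniform draws are an assumption.
        s_i = 10 ** (10 * rng.random()) * rng.random() / (m_i * t) ** 2
        speeds = []
        for j, x in enumerate(row):
            # Eq. (22): first car uses its own position, the others use the
            # distance to the previous car.
            denom = x if i == 0 else x - X_prev[i - 1][j]
            # Guard for a zero denominator (an assumption, not in the paper).
            speeds.append(s_i / denom if denom != 0 else 0.0)
        V.append(speeds)
    return V
```

With \(m_0 = 10{,}000\), the factor \(1/(m(i)\cdot t)^2\) is about \(10^{-8}\) at \(t = 1\) and about \(10^{-14}\) at \(t = 1000\), so the size of \(V_{i,j}\) is set largely by the random prefactor and by how close neighboring cars are.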

In Eq. (25),

The global search speed \(U_{i,j} \) depends on the relative distances among \(X_{i,j} \left( {t-1} \right) \), \(X\_\mathrm{axisBest}_j \left( {t-1} \right) \), and \(X\_\mathrm{axisWorst}_j \left( {t-1} \right) \), and is obtained by Eq. (25). The physical meaning of Eq. (25) is that if the position \(X_{i,j} \left( {t-1} \right) \) of car (i, j) is close to the current best car position \(X\_\mathrm{axisBest}_j \left( {t-1} \right) \), and therefore relatively far from the current worst car position \(X\_\mathrm{axisWorst}_j \left( {t-1} \right) \), then car (i, j) uses a relatively greater speed in the next search after it reaches the best position, and vice versa. This definition makes the search more flexible and intelligent and also increases the global search capability. SS is a global search speed change factor, a random number defined by Eq. (26). It consists of \(10^{\left( {-10\cdot \mathrm{rand}\left( {0,1} \right) } \right) }\), \(\mathrm{rand}\left( {0,1} \right) \), and \(\left( {t/{ mm}} \right) ^{2}\). Multiplying \(10^{\left( {-10\cdot \mathrm{rand}\left( {0,1} \right) } \right) }\), which lies between \(10^{-10}\) and 1, by \(\mathrm{rand}\left( {0,1} \right) \) gives a random number between 0 and 1 that is usually several orders of magnitude below 1. Because the optimization process gradually approaches the optimal value, the step size must not be too large, or the optimal value may be missed. \(\left( {t/{ mm}} \right) ^{2} \) increases with the number of iterations, which ensures that the step size does not become so small that the optimization is slow and ineffective. The product of the three parts is intended to make the optimization process approach the optimal value gradually. During the optimization, both the relative distance between \(X_{i,j} \left( {t-1} \right) \) and \(X\_\mathrm{axisBest}_j \left( {t-1} \right) \) and the relative distance between \(X_{i,j} \left( {t-1} \right) \) and \(X\_\mathrm{axisWorst}_j \left( {t-1} \right) \) shrink, while SS grows, resulting in a larger result of Eq. (26), which helps the algorithm jump out of a local optimal solution near the global optimal solution. The relative distance between \(X_{i,j} \left( {t-1} \right) \) and \(X\_\mathrm{axisWorst}_j \left( {t-1} \right) \) shrinks because the algorithm brings the optimal solution \(P.\mathrm{Might}_{\mathrm{best}} (t) \) and the worst solution \(P.\mathrm{Might}_{\mathrm{worst}} (t) \) closer together during the optimization, so the positions that produce them also tend to move closer.

Equations (20) and (21) are introduced to prevent the situations of Eqs. (29) or (30), which would make the denominator of Eq. (25) zero when the speed (\(U_{{i,j}}\)) is computed. In the formulas above, \(m_0 \) is 10,000 and mm is 500,000. The function \(\mathrm{rand}n\left( {k/2,1} \right) \) generates k/2 normally distributed numbers with mean 0 and standard deviation 1.
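Eq. (26) is not reproduced in this text, but the description above states that SS is the product of \(10^{(-10\cdot \mathrm{rand}(0,1))}\), \(\mathrm{rand}(0,1)\), and \((t/mm)^{2}\). The sketch below implements only that product, assuming the two random factors are drawn independently; the name `global_speed_factor` is hypothetical, and Eq. (25) itself is not sketched because its form cannot be recovered from the text.

```python
import random


def global_speed_factor(t, mm=500_000.0, rng=random):
    """SS as described in the text: 10^(-10*rand) * rand * (t / mm)^2.

    The two uniform(0, 1) draws are assumed to be independent.
    """
    random_scale = 10 ** (-10 * rng.random()) * rng.random()  # between 0 and 1
    return random_scale * (t / mm) ** 2                       # grows with t
```

For example, with mm = 500,000 the deterministic part \((t/mm)^2\) equals \(4\times 10^{-6}\) at \(t = 1000\) and reaches 1 only at \(t = 500{,}000\), so SS stays small throughout a typical run and grows as the iterations proceed.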

(6) Repeat steps 2–4 to enter the iterative optimization, and then determine whether the current position is better than the position found in previous iterations; if so, enter step 5.
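Steps (1) to (4) above can be sketched as follows for a minimization problem. This is an illustrative sketch, not the authors' implementation: the uniform initialization inside the bounds and the range of the random speed \(V\_\mathrm{RandomValue}\) are assumptions, and the names `clamp_position` and `first_generation` are hypothetical.

```python
import random


def clamp_position(x, lower, upper, rng=random):
    """Eq. (13): replace an out-of-range coordinate by rand(0,1) * violated bound."""
    if x > upper:
        return rng.random() * upper
    if x < lower:
        return rng.random() * lower
    return x


def first_generation(objective, k, lower, upper, rng=random):
    """Steps (1)-(4): initialize, move once, evaluate, and find best and worst."""
    dims = range(len(lower))
    # Step 1, Eq. (11): random start locations (uniform in bounds is assumed).
    x_axis = [[rng.uniform(lower[j], upper[j]) for j in dims] for _ in range(k)]
    # Step 2, Eqs. (12)-(13): add a signed random speed, then apply the bound rule.
    X = []
    for i in range(k):
        row = []
        for j in dims:
            span = upper[j] - lower[j]
            v_random = rng.uniform(-span, span)   # assumed range of V_RandomValue
            row.append(clamp_position(x_axis[i][j] + v_random,
                                      lower[j], upper[j], rng))
        X.append(row)
    # Step 3, Eq. (14): evaluate the objective for every car population.
    might = [objective(row) for row in X]
    # Step 4, Eqs. (15)-(16): indices of the best and worst populations.
    i_best = min(range(k), key=might.__getitem__)
    i_worst = max(range(k), key=might.__getitem__)
    return X, might, i_best, i_worst
```

A caller would then store `might[i_best]`, `X[i_best]`, and `X[i_worst]` as \(P_{\mathrm{best}}\), \(X\_\mathrm{axisBest}\), and \(X\_\mathrm{axisWorst}\) (Eqs. (17)–(19)) whenever the new best value improves on the historical one.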

Figure 2 gives the pseudocode of the complete car tracking optimization algorithm. It shows that the algorithm is no more complex than the algorithms mentioned previously. The difference is that the population is divided into two groups: a new adaptive global velocity update method uses the relationship between each population and the global optimal population, and a new local speed update method uses the relationships among the various groups, and together they change the positions of the population. The idea of separating the population into groups is not new and has been mentioned in the literature (Dai et al. 2011; Askarzadeh and Rezazadeh 2011; Han et al. 2013). Apart from the number of iterations (MAXGEN), the population size (k), the upper and lower limits of the problem, and the dimension of the problem (D), the proposed CTA has only two parameters, mm and \(m_0 \), which can be set by trial and error. As a starting point, readers can take mm as 500,000 and multiply or divide it by 10 until a suitable value is found, and select \(m_0 \) in the same way. For the vast majority of test functions in this article, the optimal solution is found with mm set to 500,000 and \(m_0 \) set to 10,000.

3 Experimental results

The proposed car tracking algorithm is tested on 55 benchmark functions, 50 of which are from the research of Karaboga and Akay (2009). In their study, Karaboga and Akay compared the ABC algorithm with the GA, PSO, and DE algorithms. Later, Berat and Tamer proposed the VS algorithm (Doğugan and Ölmez 2015) and tested its performance on the same 50 functions in comparison with the SA, PS, PSO2011, and ABC algorithms. In this study, therefore, the performance of CTA is compared with that of the FA, CS, and VS algorithms on the same 50 test functions. FA and CS were introduced in Sect. 1, and the VS algorithm showed good optimization capabilities in the test results of Doğugan and Ölmez (2015). To test the optimization capability of the algorithms on high-dimensional functions, five more functions (the Salomon, rotated hyper-ellipsoid, Alpine, hyper-ellipsoid, and Levy functions) are added from the study of Valentín, Erik, et al. (Osuna-Enciso et al. 2016); all five have dimension 100.

To evaluate the performance of the algorithm, this paper tests both its optimization capability and its convergence behavior. First, to test the optimization capability, the algorithms are evaluated by the mean and best fitness values found for each benchmark function over repeated runs with a fixed number of evaluations. Then, to test the convergence behavior, the average number of evaluations each algorithm needs to find the optimal solution is compared.

Table 1 lists the 55 test functions, which cover many different kinds of problems: unimodal and separable, unimodal and non-separable, multimodal and separable, and multimodal and non-separable. The parameters used in some functions of Table 1 are given in “Appendix A.” A detailed introduction to these types of functions can be found in Doğugan and Ölmez (2015) and Karaboga and Akay (2009). F51–F55 are the five additional high-dimensional test functions from Osuna-Enciso et al. (2016), as described previously.

3.1 Parameter settings

The basic parameters are the same for all algorithms, namely the population size and the maximum number of function evaluations. The population size of each algorithm is 50, and the maximum number of function evaluations is 500,000. The other algorithm-specific parameters are as follows:

FA settings: the largest attractiveness \(\beta _0 \) is 1, the light absorption coefficient \(\gamma \) is 1, and \(\alpha \) is 0.2, as recommended in Yang (2010b).

CS settings: the probability \(p_a \) that the host bird finds the egg laid by a cuckoo is 0.25, and the step size is 1, as recommended in Yang and Deb (2009).

VS settings: the x of the gamma function is set to 0.1, as recommended in Doğugan and Ölmez (2015).

3.2 Results

3.2.1 Optimization performances comparison

For each algorithm, every benchmark function is run 30 times, and the mean, the best value, the standard deviation, and the average time needed to find the best value are recorded in Table 2. The best results are highlighted in bold, and values below \(10^{-16}\) are treated as 0, as in Doğugan and Ölmez (2015). All algorithms are coded in MATLAB and run on a PC with an Intel Core i5-2450M and 8 GB of RAM.

Table 3 summarizes, for each type of function, the number of functions in Table 2 for which each algorithm found the minimum within 500,000 evaluations. Table 3 shows that, across all types of test function, FA performs worst, CS performs better than VS, and the CTA proposed in this paper performs better than the other three algorithms. To compare the proposed algorithm with the others more rigorously, the results of the 30 runs on each function are used in a Wilcoxon signed-rank test with significance level \(\alpha = 0.05\), as in Doğugan and Ölmez (2015). The p values, T\(+\), T− (as defined in Civicioglu (2013)), and the winner of each pair-wise Wilcoxon signed-rank test are recorded in Table 4. ‘\(+\)’ indicates that CTA performs statistically better than the compared algorithm; ‘\(-\)’ indicates that CTA performs statistically worse than the compared algorithm; and ‘\(=\)’ indicates that there is no statistical difference between the two algorithms. Table 5 summarizes Table 4: each cell shows the total counts of the three cases (\(+/=/-\)) in the pair-wise comparison. Table 5 shows that the proposed CTA outperforms the other algorithms on three types of function, namely US, UN, and MS. For MN problems, the optimization performance of CTA and CS is almost the same, while VS and FA underperform CTA. In summary, the optimization performance of the CTA proposed in this paper is superior to that of the other three algorithms.
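For readers who want to reproduce the pair-wise test, the following is a minimal sketch of how T\(+\), T−, and a two-sided p value can be computed from paired run results. It uses the textbook definition of the Wilcoxon signed-rank statistics with a normal approximation (no tie or continuity correction), which is an assumption; the exact variant used for Table 4 is not stated here. The function name `wilcoxon_signed_rank` is hypothetical.

```python
import math


def wilcoxon_signed_rank(a, b):
    """Return (T_plus, T_minus, p_two_sided) for paired samples a and b.

    Zero differences are dropped and tied magnitudes receive average ranks.
    The p value uses the normal approximation without corrections.
    """
    d = [x - y for x, y in zip(a, b) if x != y]
    n = len(d)
    if n == 0:
        return 0.0, 0.0, 1.0
    order = sorted(range(n), key=lambda idx: abs(d[idx]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        # Extend the block while the next magnitude ties with this one.
        while end + 1 < n and abs(d[order[end + 1]]) == abs(d[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1      # ranks are 1-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = average_rank
        start = end + 1
    t_plus = sum(r for r, diff in zip(ranks, d) if diff > 0)
    t_minus = sum(r for r, diff in zip(ranks, d) if diff < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(t_plus, t_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))           # two-sided p value
    return t_plus, t_minus, p
```

Here `a` and `b` would hold the 30 final objective values of two algorithms on one function; a difference is declared significant when `p` falls below 0.05, and the larger of the two rank sums indicates the direction of the difference.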

Doğugan and Ölmez (2015) point out that VS is very simple and is not a population-based algorithm. They also conclude that, for the same number of iterations, the average computational time of the VS algorithm is smaller than that of the population-based algorithms (ABC, PSO2011). Because the algorithms compared with VS in this paper are all population based, the results can be expected to be similar to those in Doğugan and Ölmez (2015). This paper therefore does not compare the average computational time for the same number of iterations; instead, it compares the average time each algorithm needs to find the best value, as shown in Fig. 3. The figure shows that the proposed CTA is quite competitive with the VS algorithm. Although VS finds the best value much faster than the others on most of the functions, it is not the fastest on a few test functions. Moreover, comparing the optimization times of CS and CTA shows that the running time of CTA is shorter than that of CS.

3.2.2 Convergence behavior comparison

Table 2 shows that, for a large proportion of the functions, the optimal value is obtained within 500,000 evaluations. To compare the convergence behavior of the algorithms, the number of evaluations is therefore reduced to 250,000. The mean value and the average number of evaluations needed to find the optimal value over 30 runs are recorded in Table 6. The comparison works as follows: if the algorithms find the optimal value within 250,000 evaluations, the algorithm that needs the fewest evaluations on average has the better convergence performance; if the algorithms do not find the optimal value within 250,000 evaluations, their final results are compared, and the algorithm with the smaller result has the better convergence performance.
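The comparison rule above can be written as a small decision function. This is a sketch under stated assumptions: the case in which only one of the two algorithms reaches the optimum is not spelled out in the text and is resolved here in favor of the algorithm that reached it, and the tolerance of \(10^{-16}\) reuses the threshold below which values are treated as 0. The name `compare_convergence` and the dictionary keys are hypothetical.

```python
def compare_convergence(a, b, optimum, tol=1e-16):
    """Compare two algorithms on one function (minimization).

    a, b : dicts with 'mean' (mean final value over the runs) and
           'evals' (average number of evaluations used)
    Returns 'a', 'b', or 'tie'.
    """
    a_hit = abs(a["mean"] - optimum) <= tol
    b_hit = abs(b["mean"] - optimum) <= tol
    if a_hit and b_hit:
        key = "evals"                 # both found it: fewer evaluations wins
    elif a_hit or b_hit:
        return "a" if a_hit else "b"  # assumption: reaching the optimum wins
    else:
        key = "mean"                  # neither found it: smaller result wins
    if a[key] < b[key]:
        return "a"
    if a[key] > b[key]:
        return "b"
    return "tie"
```

Applying this function to every benchmark and counting the three outcomes gives a tally of the a/b/c form.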

The optimal solutions in Table 6 are displayed in bold. The results of the algorithms are compared using the method described above, and the comparison results are expressed in the form a/b/c in Table 7, where ‘a’ is the number of functions on which the convergence performance of CTA is better than that of the compared algorithm, ‘b’ is the number on which it is similar, and ‘c’ is the number on which it is worse. The table shows that the proposed CTA has better convergence behavior than the other three algorithms on the different types of functions. For some of the functions, however, all of the algorithms need more evaluations to find the optimal solution.

To show how the proposed algorithm dominates the others, we selected 11 of the 55 benchmark functions and plotted the error against the iteration number for each algorithm, as shown in Figs. 4, 5, 6, 7, 8, 9, 10, 11, 12, 13 and 14. Figures 4, 5, 6, 10 and 11 show that CTA dominates the others before the 1000th iteration, while Figs. 7, 8, 9, 12, 13 and 14 show that CTA dominates the others from the beginning. We can therefore conclude that CTA converges quickly compared with the other algorithms.

4 Conclusion

This paper presents a new swarm intelligence optimization algorithm named CTA, which differs from previous swarm intelligence algorithms. It is not derived from observing the foraging behavior of biological swarms, but is created artificially by observing the programming methods of those algorithms. The algorithm divides the car population into two groups, which use a global and a local iterative search strategy, respectively, to find the optimal solution. The global search strategy adaptively adjusts the pace based on the best and the worst current positions, while the local search strategy adaptively adjusts the pace based on the relative distance between the positions of the surrounding cars.

The proposed CTA is tested on a large set of 55 benchmark functions of varied types, comprising unimodal, multimodal, separable and non-separable problems and including 100-dimensional functions. The results are compared with those of FA, CS and VS and show that CTA is highly competitive with these algorithms. Because CTA is a new algorithm and its velocity updating formula is not yet perfect, it needs further discussion and improvement.

References

Askarzadeh A, Rezazadeh A (2011) A grouping-based global harmony search algorithm for modeling of proton exchange membrane fuel cell. Int J Hydrogen Energy 36:5047–5053

Choi S, Yeung D (2006) Learning-based SMT processor resource distribution via hill-climbing. ACM SIGARCH Comput Archit News 34:239–251

Civicioglu P (2013) Backtracking search optimization algorithm for numerical optimization problems. Appl Math Comput 219:8121–8144

Colorni A, Dorigo M, Maniezzo V et al (1991) Distributed optimization by ant colonies. In: Proceedings of the first European conference on artificial life, pp 134–142

Dai C, Chen W, Ran L et al (2011) Human group optimizer with local search. In: International conference in swarm intelligence, pp 310–320

Doğugan B, Ölmez T (2015) A new metaheuristic for numerical function optimization: vortex search algorithm. Inf Sci (Ny) 293:125–145

Dorigo M, Stützle T (1999) The ant colony optimization metaheuristic. In: New ideas in optimization, pp 11–32

Dorigo M, Maniezzo V, Colorni A (1996) Ant system: optimization by a colony of cooperating agents. IEEE Trans Syst Man Cybern Part B Cybern A Publ IEEE Syst Man Cybern Soc 26:29–41

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: International symposium on micro machine and human science, pp 39–43

Gao WF, Liu SY (2012) A modified artificial bee colony algorithm. Comput Oper Res 39:687–697

Gelatt CD, Vecchi MP et al (1983) Optimization by simulated annealing. Science 220:671–680

Goffe WL, Ferrier GD, Rogers J (1994) Global optimization of statistical functions with simulated annealing. J Econom 60:65–99

Golberg DE (1989) Genetic algorithms in search, optimization, and machine learning. Addison Wesley, London, p 102

Goldfeld SM, Quandt RE, Trotter HF (1966) Maximization by quadratic hill-climbing. Econometrica 34:541–551

Han M-F, Liao S-H, Chang J-Y, Lin C-T (2013) Dynamic group-based differential evolution using a self-adaptive strategy for global optimization problems. Appl Intell 39:41–56

Holland JH (1975) Adaptation in natural and artificial systems. Control Artif Intell Univ Michigan Press 6:126–137

Karaboga D, Akay B (2009) A comparative study of artificial bee colony algorithm. Appl Math Comput 214:108–132

Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Glob Optim 39:459–471

Li X, Shao Z, Qian J (2002) An optimizing method based on autonomous animats: fish-swarm algorithm. Syst Eng Theory Pract 22:32–38

Li H-Z, Guo S, Li C-J, Sun J-Q (2013) A hybrid annual power load forecasting model based on generalized regression neural network with fruit fly optimization algorithm. Knowl-Based Syst 37:378–387

Osuna-Enciso V, Cuevas E, Oliva D et al (2016) A bio-inspired evolutionary algorithm: allostatic optimisation. Int J Bio-Inspired Comput 8:154–169

Pan W-T (2012) A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl-Based Syst 26:69–74

Rechenberg I (1965) Cybernetic solution path of an experimental problem

Shi Y, Eberhart R (1998) Modified particle swarm optimizer. In: IEEE international conference on evolutionary computation proceedings, 1998. IEEE world congress on computational intelligence, pp 69–73

Wang C-R, Zhou C-L, Ma J-W (2005) An improved artificial fish-swarm algorithm and its application in feed-forward neural networks. In: 2005 International conference on machine learning and cybernetics, pp 2890–2894

Yang X-S (2010a) A new metaheuristic bat-inspired algorithm. In: Nature inspired cooperative strategies for optimization (NICSO 2010). Springer, pp 65–74

Yang X-S (2010b) Firefly algorithm, stochastic test functions and design optimisation. Int J Bio-Inspired Comput 2:78–84

Yang X-S (2010c) Nature-inspired metaheuristic algorithms. Luniver Press, Frome

Yang XS, Deb S (2009) Cuckoo Search via Lévy flights. In: World congress on nature and biologically inspired computing, 2009. NaBIC 2009, pp 210–214

Yang XS, Deb S (2010) Engineering optimisation by cuckoo search. Int J Math Model Numer Optim 1:330–343

Yang X-S, Hossein Gandomi A (2012) Bat algorithm: a novel approach for global engineering optimization. Eng Comput 29:464–483

Yang XS, Hosseini SSS, Gandomi AH (2012) Firefly algorithm for solving non-convex economic dispatch problems with valve loading effect. Appl Soft Comput 12:1180–1186

Ethics declarations

Conflict of interest

The authors declared that they have no conflicts of interest to this work.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Communicated by A. Di Nola.

Appendix A

See Tables 8, 9, 10, 11, 12, 13, 14 and 15.

Cite this article

Chen, J., Cai, H. & Wang, W. A new metaheuristic algorithm: car tracking optimization algorithm. Soft Comput 22, 3857–3878 (2018). https://doi.org/10.1007/s00500-017-2845-7

DOI: https://doi.org/10.1007/s00500-017-2845-7