Abstract

Defect prediction models help software project teams spot defect-prone source files in software systems. Teams can then prioritize these predicted defect-prone files and focus rigorous quality assurance (QA) activities on them, minimizing post-release defects so that quality software can be delivered. Cross-version defect prediction builds a prediction model from the previous version of a software project to predict defects in the current version. This is more practical than the other two ways of building models, i.e., cross-project prediction and cross-validation prediction, because the previous version of the same software project will have a similar parameter distribution among files. In this paper, we formulate the cross-version defect prediction problem as a multi-objective optimization problem with two pairs of objective functions: (a) maximizing recall while minimizing misclassification cost and (b) maximizing recall while minimizing the cost of QA activities on defect-prone files. The two multi-objective defect prediction models are compared with four traditional machine learning algorithms, namely logistic regression, naïve Bayes, decision tree and random forest. We have used 11 projects from the PROMISE repository, comprising a total of 41 different versions. Our findings show that multi-objective logistic regression is more cost-effective than single-objective algorithms.


1 Introduction

Software project teams adopt various techniques such as testing and expert reviews of software artifacts to improve quality. Due to limited resources and tight schedules, it may not be possible to take up these activities on all files. The defect prediction model predicts defect-prone files using trained models built from the historical data. Software project teams can prioritize and focus on rigorous QA activities of these predicted defect-prone files to minimize post-release defects. The defect prediction work has evolved in many different directions like finding effective predictor metrics, efficient prediction algorithms and performing defect prediction at different granularity levels like method, file and project. In the past, defect prediction models were built using process metrics, product metrics or bug history of software systems.

Prediction models are built with training data, and they are evaluated for performance on the testing data. In the literature, defect prediction models are built using three prediction techniques that differ based on the source of training data and testing data. The different prediction techniques are: (1) cross-validation prediction: In this approach, training and testing data are taken from the same version of a project. The defect prediction models are built by taking 70% of data as the training data and tested on remaining 30% of the data, or by using tenfold cross-validation technique; (2) cross-version prediction: In this approach, the data of the previous version of a software project are taken as the training data to build prediction model and it is tested on the data of current version of the same project; and (3) cross-project prediction: The prediction models are built by taking data from different projects as the training data and tested on the data of the project under study.

It is our intuitive understanding that using data of the previous version of the same project is more appropriate and can provide better results instead of using data from different projects to build defect prediction models. The main architectural or design characteristics of the project will remain more or less the same across different releases. The pattern of bug occurrences of the current version might also get influenced by the bug occurrences in the previous version. Hence, for predicting defect-prone files of the current version, the most suitable training data are the data of previous version of the same project. These factors motivated us to explore further into cross-version defect prediction model.

Harman suggested that the defect prediction problem can be viewed as a search problem, which can be solved using evolutionary algorithms (Harman 2010). The defect prediction problem can be formulated as a multi-objective optimization problem with contrasting objectives. We have attempted to solve two multi-objective defect prediction problems with competing objective functions in cross-version setup.

We formulate our first multi-objective defect prediction problem with the following contrasting objectives:

- Maximize effectiveness of the model
- Minimize misclassification cost

Effectiveness is the ratio of the number of components correctly predicted as defective to the number of actual defective components (recall). The misclassification cost is the cost incurred due to wrongly classified files, i.e., the cost of reviewing/testing the false-positive files (predicted to be defective but not actually defective) and the cost of the false-negative files (predicted to be non-defective but actually defective) during the post-release phase. Ideally, any good model attempts to minimize the misclassification cost and maximize the effectiveness of the model. Hence, we have chosen them as objective functions.

There are many evolutionary algorithms which can solve multi-objective optimization problems. In this study, we have used NSGA-II, a multi-objective genetic algorithm proposed by Deb et al. (2000). Any multi-objective genetic algorithm requires a fitness function that guides the search process to find optimal or near optimal solutions. We have considered logistic regression function as fitness function to find out cost and effectiveness. Hence, we are denoting this approach as multi-objective logistic regression (MOLR).

We build a cross-version defect prediction model using multi-objective logistic regression (MOLR) for our first problem and this model is denoted by M1. We also build prediction models using four traditional single-objective algorithms, namely logistic regression, naïve Bayes, decision tree and random forest. We try to answer the following research question.

\({RQ}_{1}\): How does the proposed M1 perform as compared to traditional single-objective defect prediction models in the cross-version defect prediction?

We formulate our second multi-objective defect prediction problem with the following contrasting objectives:

- Maximize effectiveness of the model
- Minimize LOC cost

Canfora et al. (2013, 2015) proposed these objective functions to solve the multi-objective defect prediction problem in a cross-project setup. Effectiveness is the same as defined for M1. The cost borne by the project team to perform review, testing or any other QA activity on all defect-prone files is taken as the other objective. As the effort required is directly proportional to the sum of lines of code of all defect-prone files, cost is taken as the sum of lines of code (LOC) of all the files which are predicted defective. This includes both true positives (predicted to be defective and actually defective) and false positives. Canfora et al. (2013, 2015) showed that multi-objective cross-project prediction is more cost-effective than single-objective algorithms when there is a lack of training data for a project, which is true for relatively newer projects. We consider these objective functions to implement multi-objective defect prediction models across versions of the same project.

We build cross-version defect prediction model using multi-objective logistic regression (MOLR) for second problem, and this model is denoted by M2. We try to answer the following research question.

\({RQ}_{2}\): How does the proposed M2 perform as compared to traditional single-objective defect prediction models in the cross-version defect prediction?

We have used 11 software projects from the PROMISE repository (Menzie et al. 2015), having a total of 41 different versions to answer RQ1 and RQ2. We had 30 pairs of training and testing versions. For each pair, we compared multi-objective logistic regression with the traditional classification algorithms in terms of cost-effectiveness.
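The pairing of training and testing versions can be illustrated with a small sketch. It assumes that each version is paired with its immediate successor within the same project, which is consistent with 41 versions of 11 projects yielding 41 - 11 = 30 pairs; the project names and version labels below are hypothetical placeholders, not the projects used in this study.

```python
def version_pairs(releases):
    """Yield (project, training_version, testing_version) for consecutive releases.

    Assumption: every version is paired with the release that follows it.
    """
    for project, versions in releases.items():
        for previous, current in zip(versions, versions[1:]):
            yield project, previous, current


# Hypothetical projects and version labels, for illustration only.
releases = {
    "project_a": ["1.0", "1.1", "1.2", "2.0"],
    "project_b": ["0.9", "1.0", "1.1"],
}

pairs = list(version_pairs(releases))
for project, train, test in pairs:
    print(f"{project}: train on {train}, test on {test}")
```

A project with k versions contributes k - 1 pairs, so the two hypothetical projects above give 3 + 2 = 5 pairs.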

The rest of the paper is structured as follows. Related work on defect prediction is discussed in Sect. 2. The datasets, i.e., the features and projects used in the experiments, are discussed in Sect. 3. We formulate the problems in Sect. 4. The process of building prediction models with the multi-objective algorithm is discussed in Sect. 5. The experimental setup and the analysis carried out to compare MOLR with traditional algorithms are also described in Sect. 5. Section 6 discusses the results, and threats to validity are explained in Sect. 7. Conclusions are presented at the end.

2 Related work

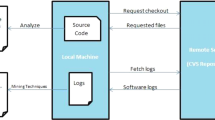

Defect prediction models have been built using various sets of static code attributes and process metrics. Metrics like lines of code (LOC), Halstead, CK and OO, change metrics and past bugs are mined from the code base, version control system and bug trackers. They are used to predict defect-prone files either within a project or across projects. D’Ambros et al. (2010) tried to consolidate the defect prediction work, using the metrics mentioned above. Though they found that WCHU (weighted churn of source code metrics) and LDHH (linearly decayed entropy of source code metrics) are better performing metrics for defect prediction, they concluded that there is no single metric that predicts defect-prone files well across software projects. They do, however, agree with many earlier works which say that past bugs and source code metrics are good alternatives in terms of overall prediction and computational requirements. Researchers often say that most source code metrics are proxies of size. Zhang et al. (2009) investigated the relationship between the size of files and defects, with the assumption that a large code base may correlate with more defects. Their study concludes that defect proneness increased with the size of the classes, but they suggested spending more resources on smaller classes, which were found to be more problematic than larger classes. Kim et al. (2007) predicted defects using cached history. They assumed that defects do not occur alone, but rather in bursts of several related faults, so they cached locations that are likely to have bugs. Basili et al. (1996) found that CK metrics are useful in finding defect proneness in the early phases of software development. Subramanyam and Krishnan (2003) showed that CK metrics are associated with defects even after controlling for the size of the software. 
A few other studies endorsed the defect prediction models built with change metrics, as they gave better prediction performance in classifying defect-prone files (Hassan 2009; Krishnan et al. 2011; Moser et al. 2008; Muthukumaran et al. 2015).

Apart from finding better prediction metrics, coming up with competitive prediction techniques for defect prediction is also an interesting research area. Classifiers such as logistic regression, naïve Bayes, decision tree, support vector machine and random forest have been applied by different researchers in the past (He et al. 2013; Kamei et al. 2010; Kim et al. 2007; Mende and Koschke 2009; Peters et al. 2013). Czibula et al. used relational association rule mining to predict defective modules in software systems. Their model, defect prediction using relational association rules (DAPR), gives better predictive performance compared to the existing defect prediction techniques (Czibula et al. 2014). Marian et al. (2015) proposed an unsupervised machine learning method based on self-organizing maps which puts defective and non-defective files in two clusters. Lessmann et al. performed a comparative study on defect prediction experiments with 22 classifiers applied to 10 public domain datasets from the NASA repository and concluded that, though metrics-based classification was useful in this domain, the importance of the classification algorithm was not significant. They did not find any significant performance difference between the top 17 of the 22 classifiers used in the study (Lessmann et al. 2008). But Ghotra et al. (2015) argue that by making use of cleaned versions of the NASA and PROMISE corpora and different classification algorithms, it is possible to produce defect prediction models with significant differences in performance.

With a perspective different from traditional approaches, Harman suggested to reformulate the classic software engineering problems as a search problem. This will help the community in finding solutions to difficult problems with competing constraints (e.g., quality, cost) (Harman 2010). Harman and Clark (2004) showed that many metrics can be used as the guiding force behind the search for optimal or near optimal solutions to many software engineering problems. Taking a clue from Harman’s work, some researchers have come up with multi-objective defect prediction techniques. Canfora et al. (2013, 2015) proposed MODEP (multi-objective defect predictor) based on multi-objective forms of machine learning techniques, logistic regression and decision tree, trained using genetic algorithm. Carvalho et al. (2010) came up with multi-objective particle swarm optimization (MOPSO), which generates a model composed of rules with specific properties, which are intuitive and comprehensible.

The research studies mentioned above focused on either within-project cross-validation or cross-project defect prediction to build defect prediction models. To our understanding, there is not much comprehensive work on defect prediction across multiple versions of a software project. Zimmermann et al. (2007) showed that defect prediction models learned from earlier releases can be used to predict defects for future releases. For instance, the model trained on release 1.0 can be used to predict defects in release 2.0. But they concluded that their results were far from perfect. Yang et al. (2015) built a regression model based on the data of the previous version to predict the defect proneness of components in the current version and ranked them based on their defect proneness. They compared many traditional regression algorithms with their newly proposed learning-to-rank (LTR) approach and concluded that LTR performed better than the other algorithms. One of the recent works in cross-version defect prediction found that network measures are more effective in cross-version defect prediction, but concluded that they do not improve the prediction performance substantially, especially when ranking fault-prone modules (Ma et al. 2016). Our work treats cross-version defect prediction within a project as a multi-objective optimization problem, with competing constraints like cost and effectiveness. We present a comprehensive comparison of the multi-objective algorithm with traditional algorithms. We have conducted experiments on a large number of projects with multiple releases, with the motivation of providing more generalizable results.

3 Datasets and metrics

We use six Chidamber and Kemerer (CK) metrics (Chidamber and Kemerer 1994) and LOC as features to build defect prediction models. The choice of metrics is based on the fact that all projects used in our study are object-oriented projects and CK metrics have been widely used as quality indicators for object-oriented software (D’Ambros et al. 2010; He et al. 2013; Herbold 2013; Jureczko and Madeyski 2010; Peters et al. 2013). Table 1 gives a brief description of the predictors used in our study.

We have considered 11 open-source projects that have data for three or more versions in the PROMISE repository (Menzie et al. 2015). The details of these versions, namely the number of classes and the percentage of defective classes, are presented in Table 2. As the table shows, different versions of the projects have 109–965 classes, with the average number of classes being 385. The projects were chosen with a view to evaluating the performance of the multi-objective algorithm across projects with wide diversity, so that the results can be generalized.

4 Formulation of multi-objective defect prediction models

We formulate defect prediction problem as multi-objective optimization problem with contrasting objectives. We propose two multi-objective prediction problems each with distinct set of objective functions.

Most machine learning algorithms are single-objective in nature. That is, their final goal is to estimate one solution that minimizes the prediction error. For example, logistic regression minimizes the prediction error, i.e., the root-mean-square error (RMSE), where RMSE is defined as follows:

\[RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(f(c_i) - dp(c_i)\right)^2}\]

where \(n\) is the number of classes, \(f(c_i)\) and \(dp(c_i)\) take either 1 or 0, \(f(c_i)\) represents whether \(c_i\) is a defective class or not and \(dp(c_i)\) represents whether \(c_i\) is predicted to be defective or not.

It is always good to have defect prediction models with high recall so as to minimize post-release defects. However, it is easy to build a model with a recall value of 1: a dummy model which predicts all files to be defect prone will have a recall value of 1. Such a model is of no use, because recall is maximized without considering the cost of misclassification. Hence, we view the defect prediction problem as a multi-objective optimization problem rather than a single-objective optimization problem. There are two types of costs associated with defect prediction models. In the next two subsections, we discuss building multi-objective defect prediction models that maximize effectiveness and minimize cost (misclassification cost or LOC cost).
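The dummy model argument can be checked with a few lines of code. The sketch below uses a small, hypothetical set of ten labels (three defective files) purely for illustration; it is not data from the study.

```python
def confusion_counts(actual, predicted):
    """Return (TP, FP, FN, TN) for binary labels, where 1 means defective."""
    tp = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
    fp = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 1)
    fn = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 0)
    tn = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 0)
    return tp, fp, fn, tn


# Hypothetical ground truth: 3 defective files out of 10.
actual = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
# Dummy model: every file is predicted to be defect prone.
dummy_prediction = [1] * len(actual)

tp, fp, fn, tn = confusion_counts(actual, dummy_prediction)
dummy_recall = tp / (tp + fn)
print(dummy_recall, fp)
```

The dummy model reaches a recall of 1 but flags all seven non-defective files as well, so every one of them would have to be reviewed or tested.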

4.1 Optimize misclassification cost and effectiveness

There are two types of errors for any prediction model. For the defect prediction problem, a type I error is predicting a non-defective file to be defect prone and a type II error is predicting a defective file to be non-defective. The numbers of type I and type II errors are therefore the numbers of false-positive and false-negative files, denoted by FP and FN, respectively. The cost incurred due to type I errors is the effort spent by the project team on quality assurance (QA) activities such as reviewing and testing false-positive files. The cost incurred due to type II errors is the effort spent by the project team to fix the defective files in the post-release phase.

It is evident that, for our problem, the cost of a type II error is much higher than the cost of a type I error, as fixing a defective file during the post-release phase takes far more effort than reviewing/testing a file before release. It is difficult, however, to say exactly how much more costly a type II error is than a type I error. The cost factor denotes the cost of misclassifying a defective class as non-defective relative to the cost of misclassifying a non-defective class as defective. The cost factor \(\alpha \) can be written as follows:

\[\alpha = \frac{\text{cost of Type II error}}{\text{cost of Type I error}}\]

If the cost of a \(Type\ I\) error is c, then the cost of a \(Type\ II\) error is \(\alpha c\).

We can normalize the misclassification cost as follows:

\[\text{normalized misclassification cost} = \frac{c \cdot FP + \alpha c \cdot FN}{nClasses}\]

where nClasses is the number of classes in the given version. It is always recommended to have a defect prediction model that maximizes effectiveness and minimizes misclassification cost. As nClasses and c are constants for a project, minimizing the misclassification cost is the same as minimizing the normalized misclassification cost, and minimizing the normalized misclassification cost is the same as minimizing \((FP + \alpha \cdot FN) / nClasses\). Hence, c can be taken to be 1 for these kinds of optimization problems. Now, we formulate our first multi-objective defect prediction problem with the following contrasting objectives.

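- Maximize recall and minimize normalized misclassification cost.

We take the effectiveness of the model to be recall and the misclassification cost to be the normalized misclassification cost, where

\[recall = \frac{TP}{TP + FN} \qquad (6)\]

\[\text{normalized misclassification cost} = \frac{FP + \alpha \cdot FN}{nClasses} \qquad (7)\]

Here TP denotes the number of true-positive files, i.e., files that are predicted to be defective and are actually defective.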
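As a minimal sketch, the two objectives of this subsection can be computed from confusion counts as shown below, with c taken to be 1 as discussed above. The function names and the confusion counts in the example are illustrative assumptions; the cost factors 5, 10, 15 and 20 are the ones used in our experiments.

```python
def recall(tp, fn):
    """Fraction of actually defective classes that are predicted defective."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def normalized_misclassification_cost(fp, fn, n_classes, alpha):
    """(FP + alpha * FN) / nClasses, with the type I cost c taken to be 1."""
    return (fp + alpha * fn) / n_classes


# Hypothetical confusion counts for a version with 200 classes.
tp, fp, fn, n_classes = 30, 20, 10, 200

effectiveness = recall(tp, fn)
costs = {
    alpha: normalized_misclassification_cost(fp, fn, n_classes, alpha)
    for alpha in (5, 10, 15, 20)
}
print(effectiveness, costs)
```

For the same predictions, the cost grows with the cost factor because each false negative is weighted more heavily, while recall is unaffected.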

4.2 Optimize cost of QA activities and effectiveness

In cross-version defect prediction, the defect-prone files of the current version will be predicted by making use of prediction models built using previous version data. The project team performs QA activities on the files which are predicted defective. The predicted defective files involve both TP and FP. Hence, the cost incurred by project team is cost of QA activities on TP and FP files. The cost of QA activities is proportional to LOC of TP and FP files. Thus, cost is measured in terms of lines of code and effectiveness in terms of recall. The main motive behind these objectives is to find set of models such that they minimize cost of reviewing/testing files while achieving best possible effectiveness. And among these models, the most effective model can be selected based on the cost borne by project team.

We formulate our second multi-objective defect prediction problem with the following contrasting objectives.

- Maximize recall and minimize LOC cost.

We take the effectiveness of the model to be recall, as defined in Eq. 6. We believe that the cost of QA activities on a defect-prone class \(c_i\) is proportional to its number of lines of code, \(LOC(c_i)\); this is also confirmed by Rahman et al. (2012). We can find the LOC cost using the following equation:

\[\text{LOC cost} = \sum_{i=1}^{n} P(c_i) \cdot LOC(c_i) \qquad (8)\]

where \(n\) is the number of classes and \(P(c_i)\) denotes whether the \(i^{th}\) class, \(c_i\), is defect prone or not. \(P(c_i)\) is set to 1 if the predicted probability \(p(c_i)>0.5\); otherwise it is set to 0.
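The LOC cost can be sketched in a few lines: it is the sum of the lines of code of every class whose predicted probability exceeds 0.5. The function name and the sample values are illustrative assumptions.

```python
def loc_cost(probabilities, loc, threshold=0.5):
    """Sum LOC over the classes predicted defective, i.e., with p(c_i) > threshold."""
    return sum(lines for p, lines in zip(probabilities, loc) if p > threshold)


# Hypothetical predicted probabilities and class sizes.
probabilities = [0.9, 0.2, 0.51, 0.5]
loc = [120, 300, 80, 40]

cost = loc_cost(probabilities, loc)
print(cost)
```

Only the first and third classes are counted; the fourth has a probability of exactly 0.5 and is therefore not predicted defective.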

5 Proposed approach

In this section, we illustrate our approach to building multi-objective prediction models. Let \(C=\{c_1,c_2,\ldots ,c_n\}\) be the classes of a given version \(V_k\) of a project P, with each class having m attributes. The training data thus take the form of an \(n \times m\) matrix, where a matrix entry \(x_{i,j}\) denotes the value of the \(j^{th}\) attribute for the \(i^{th}\) class. The mathematical formulation of logistic regression is given as

\[p(c_i) = \frac{e^{w_0 + w_1 x_{i,1} + \cdots + w_m x_{i,m}}}{1 + e^{w_0 + w_1 x_{i,1} + \cdots + w_m x_{i,m}}}\]

where \(p(c_i)\) denotes the probability of the \(i^{th}\) class being defective, while the set of scalars \(W = \{w_0, w_1,w_2, \ldots , w_m\}\) represents the coefficients for the attributes \(\{x_{i,1}, \ldots , x_{i,m}\}\). The objective of the traditional logistic regression approach is to find a set of coefficients \(W = \{w_0, w_1, w_2,\ldots , w_m\}\) that minimizes the prediction error. This is usually done with the help of the gradient descent algorithm. The model is built using the training data, and it is used to predict defective classes in the test data. A class \(c_i\) is predicted to be defective if \(p(c_i) > 0.5\), based on the coefficients found during the training process and the metrics \(\{x_{i,1}, x_{i,2},\ldots , x_{i,m}\}\).
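The prediction step of a logistic regression model can be sketched as follows. The function names are illustrative, the coefficient values are hypothetical, and the branch on the sign of the linear term is only a numerical safeguard against overflow; it computes the same logistic function.

```python
import math


def defect_probability(weights, metrics):
    """p(c_i) for coefficients [w_0, w_1, ..., w_m] and metrics [x_i1, ..., x_im]."""
    z = weights[0] + sum(w * x for w, x in zip(weights[1:], metrics))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)  # avoids overflow for very negative z
    return exp_z / (1.0 + exp_z)


def is_predicted_defective(weights, metrics):
    """A class is predicted defective when p(c_i) > 0.5."""
    return defect_probability(weights, metrics) > 0.5


# Hypothetical coefficients for a model with two metrics.
weights = [-1.0, 2.0, 0.5]
print(defect_probability(weights, [1.0, 0.0]))      # z = 1.0
print(is_predicted_defective(weights, [0.0, 0.0]))  # z = -1.0
```

In the multi-objective setting, the same prediction rule is applied to every coefficient vector in the population; only the way the coefficients are found differs.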

The goal of multi-objective problem stated above is to find out a set of coefficients W, which optimizes two objectives. As there are two contrasting objectives, we will get multiple models with different trade-offs between two objectives. This problem of multi-objective optimization can be solved by using genetic algorithm.

Now we briefly describe some basic concepts used to solve multi-objective optimization problem. The definitions are presented here for the sake of continuity. The set of all possible values that can be taken by solutions is defined as the feasible region. In our case, the set of values that can be taken by the coefficients W forms the feasible region, which is the set of real numbers, as there are no constraints on the values that coefficients can take.

In multi-objective optimization problems, concepts of Pareto dominance and Pareto optimality are used to define the optimality of solutions (Coello et al. 2007). These terms are defined for two contrasting objectives.

A solution x dominates another solution y (also written \(x <_p y\)) if and only if the values of the objective functions satisfy one of the following conditions:

\[objective_1(x) \le objective_1(y) \ \text{and}\ objective_2(x) > objective_2(y)\]

\[objective_1(x) < objective_1(y) \ \text{and}\ objective_2(x) \ge objective_2(y)\]

Let us assume that objective1 is the cost (LOC cost or misclassification cost) and objective2 is the effectiveness (recall) of a prediction model. In simple words, the above definition indicates that x is better than y if and only if, at the same level of cost, x achieves greater effectiveness than y, or at the same level of effectiveness, x incurs lower cost than y. A solution x is Pareto optimal if and only if it is not dominated by any other solution in the feasible region. The set of solutions (coefficient vectors \(W_i\)) that are not dominated by any other solution is said to form the Pareto optimal set, while the corresponding objective vectors, i.e., the cost and effectiveness values of the solution set W, are said to form the Pareto frontier.
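Pareto dominance for the two objectives can be sketched as below, where each solution is summarized by a (cost, effectiveness) pair with cost to be minimized and effectiveness to be maximized. The function names and the sample points are illustrative assumptions.

```python
def dominates(x, y):
    """True if x = (cost, effectiveness) Pareto-dominates y."""
    cost_x, eff_x = x
    cost_y, eff_y = y
    no_worse = cost_x <= cost_y and eff_x >= eff_y
    strictly_better = cost_x < cost_y or eff_x > eff_y
    return no_worse and strictly_better


def pareto_front(points):
    """Keep the points that are not dominated by any other point."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (cost, recall) values of five candidate models.
candidates = [(0.2, 0.5), (0.3, 0.5), (0.4, 0.9), (0.4, 0.7), (0.1, 0.2)]
front = pareto_front(candidates)
print(front)
```

Here (0.3, 0.5) is dropped because (0.2, 0.5) reaches the same recall at lower cost, and (0.4, 0.7) is dropped because (0.4, 0.9) reaches higher recall at the same cost. The remaining three points are mutually non-dominated trade-offs.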

The final set of solutions on the Pareto frontier gives different cost-effectiveness trade-offs. It is up to software project team to decide upon how much amount of time (cost) they can spend and then choose appropriate model. For example, with one month away from the release, owing to shortage of time, the software project manager typically needs to plan the cost of quality assurance activities on topmost \(n\%\) of defective components. The value of n can vary based on quality requirements, cost borne by project and organization. In this case, there is a need of having a model which can provide multiple solutions with different cost-effectiveness trade-offs. So multi-objective approach presented here can be of great help in similar situations.

To search for coefficient vectors in the solution space, we have used a multi-objective genetic algorithm (MOGA) presented by Goldberg (2006). In general terms, a genetic algorithm works as follows:

- It starts with a random set of solutions called a population, of size p. Each individual in the population is known as a chromosome.
- The population evolves through a set of iterations, known as generations.
- From the given generation, a new population called offspring is created using crossover and mutation operations. The crossover operator combines two individuals to generate new offspring. The mutation operator modifies the internal structure of an individual.
- A fitness function is applied to each individual of the current population to find the values of the objective functions. From the current population, the fittest set of p individuals is selected to be part of the next generation using a selection operator. The fittest individuals are selected based on their objective values. The selection process follows the concepts of Pareto dominance and crowding distance. This is done to keep the size of the population constant in each generation.
- This process is repeated until the termination criteria are met.

The intuition behind the genetic algorithm is that at the end of every generation only the fittest set of individuals make it to the next generation. So there is some improvement in the solution at the end of every generation. After many generations, the population approaches to an ideal solution or approximately ideal solution.

In our implementation, we have used NSGA-II, proposed by Deb et al. (2000). For the multi-objective logistic regression used in our study, one chromosome represents the coefficient vector \(W = \{w_0, w_1, w_2,\ldots , w_m\}\), which forms one logistic regression model. The process starts with a population of size p. For each model, the fitness function computes the values of the objectives. Based on the definition of Pareto optimality and crowding distance, the best p coefficient vectors are selected for the next generation. The process of selecting the best chromosomes depends on the implementation of the multi-objective genetic algorithm. NSGA-II uses a fast non-dominated sorting algorithm and the concept of crowding distance for the selection process. A complete explanation of the selection process used in NSGA-II is beyond the scope of this paper; one can refer to the original paper by Deb et al. (2000). The process of generating a new population and selecting the fittest set of individuals repeats in each iteration, until an optimal set of coefficients is found or the maximum number of generations is reached. At the end of the training process, we get an optimal set of coefficient vectors, i.e., logistic regression models.
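The generational loop can be sketched in a simplified form. The code below is not NSGA-II: as a stand-in for fast non-dominated sorting and crowding distance, it ranks individuals by how many others dominate them. The operators (uniform crossover, Gaussian mutation), the parameter values, the cost factor and the toy data are all assumptions chosen only to keep the sketch short and runnable.

```python
import math
import random


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


def objectives(weights, rows, labels, alpha=5):
    """Fitness of one chromosome: (normalized misclassification cost, recall)."""
    tp = fp = fn = 0
    for x, y in zip(rows, labels):
        z = weights[0] + sum(w * v for w, v in zip(weights[1:], x))
        predicted = sigmoid(z) > 0.5
        if predicted and y:
            tp += 1
        elif predicted:
            fp += 1
        elif y:
            fn += 1
    rec = tp / (tp + fn) if (tp + fn) else 0.0
    return (fp + alpha * fn) / len(rows), rec


def dominates(a, b):
    return a[0] <= b[0] and a[1] >= b[1] and (a[0] < b[0] or a[1] > b[1])


def select(population, scores, size):
    """Simplified selection: prefer individuals dominated by the fewest others."""
    dominated_by = [sum(dominates(o, s) for o in scores) for s in scores]
    order = sorted(range(len(population)), key=lambda i: dominated_by[i])
    return [population[i] for i in order[:size]]


def evolve(rows, labels, pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    n_weights = len(rows[0]) + 1  # w_0 plus one coefficient per metric
    population = [[rng.uniform(-1, 1) for _ in range(n_weights)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        offspring = []
        for _ in range(pop_size):
            a, b = rng.sample(population, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]   # uniform crossover
            child[rng.randrange(n_weights)] += rng.gauss(0, 0.3)  # mutation
            offspring.append(child)
        combined = population + offspring
        scores = [objectives(w, rows, labels) for w in combined]
        population = select(combined, scores, pop_size)  # size stays constant
    return population


# Toy, hypothetical training data: one standardized metric per class.
rows = [[-1.0], [-0.5], [0.2], [0.8], [1.2], [1.5]]
labels = [0, 0, 0, 1, 1, 1]
final_population = evolve(rows, labels)
```

Each element of `final_population` is one coefficient vector, i.e., one candidate logistic regression model. A full NSGA-II implementation would additionally use crowding distance to keep the surviving models spread along the Pareto frontier.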

5.1 Data preprocessing

We describe the steps required to build and evaluate the prediction models built using single-objective and multi-objective algorithms. We preprocess the data of the training version and the testing version using data standardization. We have used CK metrics (Chidamber and Kemerer 1994) and lines of code (LOC) as predictor metrics. Since the values of different metrics have different ranges, the metrics are standardized to reduce the heterogeneity. Mathematically, a metric m is normalized as follows:

\[m_z(i,c_j,V_k,P) = \frac{m(i,c_j,V_k,P) - \mu (i,V_k,P)}{\sigma (i,V_k,P)}\]

where \(m(i,c_j,V_k,P)\) is the value of the \(i^{th}\) metric computed on class \(c_j\) of version \(V_k\) of project P. The mean \(\mu (i,V_k,P)\) and standard deviation \(\sigma (i,V_k,P)\) are calculated over all the classes of version \(V_k\) of project P for the \(i^{th}\) metric, and \(m_z(i,c_j,V_k,P)\) is the normalized value of the \(i^{th}\) metric. We apply this normalization to the data of both the training and testing versions when building models and making predictions with any modeling technique.
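The standardization of one metric over all classes of one version can be sketched as below. The use of the population standard deviation and the handling of a constant metric are assumptions, since the text does not specify them; the sample values are hypothetical.

```python
import statistics


def standardize(values):
    """Z-score one metric over all classes of a single version."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        return [0.0 for _ in values]   # assumption: a constant metric maps to 0
    return [(v - mu) / sigma for v in values]


# Hypothetical values of one metric for the eight classes of a version.
metric_values = [2, 4, 4, 4, 5, 5, 7, 9]
standardized = standardize(metric_values)
print(standardized)
```

The function is applied separately to each metric and to each version, so the training and testing versions are each standardized with their own mean and standard deviation.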

5.2 Training process

1. We train MOLR for our first multi-objective optimization problem, as described above, on the normalized data of the training version \(V_{k-1}\). At the end of training, we get multiple MOLR models and four single-objective models. From the final set of MOLR models, we find the best model, which is the one closest to the ideal model. The ideal model has misclassification cost \(=\) 0 and recall \(=\) 1. We find the closest model with the help of the Euclidean distance measure, as shown in Algorithm 1. If more than one model is equidistant from the ideal model, we choose the model with the least misclassification cost.

2. We train MOLR for our second problem, as described above, and the single-objective models on the normalized data of the training version \(V_{k-1}\). At the end of training, we get multiple MOLR models and four single-objective models. We retain all the MOLR models and apply them to the data of the test version.
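The selection of the best model for the first problem can be sketched as follows. This is only an illustration of the idea of picking the model closest to the ideal point (cost 0, recall 1), with ties broken by lower misclassification cost; it is not a reproduction of Algorithm 1, and the function name and sample values are assumptions.

```python
import math


def closest_to_ideal(models):
    """Index of the (cost, recall) pair nearest to the ideal point (0, 1).

    Ties in distance are broken in favor of the lower cost. With floating-point
    values, exact ties are rare in practice.
    """
    def key(index):
        cost, rec = models[index]
        distance = math.hypot(cost - 0.0, rec - 1.0)
        return (distance, cost)

    return min(range(len(models)), key=key)


# Hypothetical (normalized misclassification cost, recall) pairs.
models = [(0.5, 1.0), (0.0, 0.5), (0.75, 0.25)]
best = closest_to_ideal(models)
print(best, models[best])
```

The first two models are both at distance 0.5 from the ideal point, so the tie-break selects the second one, which has the lower cost.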

5.3 Testing process

We illustrate testing process for both of our problems in this subsection.

5.3.1 Testing process for M1

The best possible MOLR model and other four single-objective models are applied on the data of test version. We compare all the single objective algorithms with MOLR in terms of misclassification cost, recall and F-measure.

The misclassification cost changes with the value of the cost factor \(\alpha \). So, for both MOLR and the single-objective algorithms, the misclassification cost is recalculated each time the cost factor changes. For any model (single-objective or MOLR), misclassification cost and recall can be calculated as per Eqs. 6 and 7. As recall is one of the objectives in MOLR, its value changes with the cost factor. F-measure is the harmonic mean of recall and precision. It denotes the balance achieved by the model between recall and precision, which in turn shows how well a model balances false negatives and false positives. It is therefore also a useful measure of the effectiveness of the prediction model. For MOLR, F-measure changes as the cost factor changes, recall being one of the objectives of MOLR. For single-objective models, it remains the same. F-measure can be calculated using the following equation:

\[F\text{-measure} = \frac{2 \times precision \times recall}{precision + recall}\]
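A minimal sketch of the F-measure computation from confusion counts is given below. The function name and the sample counts are illustrative, and returning 0 when precision or recall is undefined is an assumption.

```python
def f_measure(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    rec = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + rec == 0:
        return 0.0
    return 2 * precision * rec / (precision + rec)


# Hypothetical confusion counts: precision = 0.6, recall = 0.75.
score = f_measure(tp=30, fp=20, fn=10)
print(round(score, 4))
```

The harmonic mean stays close to the smaller of the two values, so a model cannot reach a high F-measure by maximizing recall alone.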

As explained earlier, after choosing the best MOLR model from the training process we apply it on the data of test version. The evaluation measures (recall, misclassification cost and F-measure) are calculated for the MOLR and single-objective models.

We perform two-tailed Wilcoxon signed-rank test for each pair of results between MOLR and other single-objective algorithms to determine whether the following null hypothesis can be rejected.

- \({ H }_{ 01 }\): There is no significant difference between evaluation measures of MOLR and single-objective models.

This comparison is performed separately for each of the four single-objective algorithms and for each cost factor. We conducted experiments with cost factors 5, 10, 15 and 20. One can choose an appropriate cost factor based on the project, the company and past experience. For all tests, the significance level is 0.05, i.e., the probability of rejecting the null hypothesis when it is in fact true is 5%.
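The paired comparison can be illustrated with a small, dependency-free sketch of the two-tailed Wilcoxon signed-rank test. It uses the normal approximation with a tie correction and no continuity correction, which is an assumption made here for brevity; a statistics package may use exact tables for small samples and report slightly different p values. The function name and the sample values are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-tailed Wilcoxon signed-rank test (normal approximation).

    Returns (w_plus, w_minus, p_value). Zero differences are dropped and
    tied absolute differences receive the average of their ranks.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-tailed
    return w_plus, w_minus, p_value


# Illustrative recall values only (not results from the study).
molr_recall = [0.91, 0.84, 0.77, 0.95, 0.88, 0.79, 0.93, 0.81]
solr_recall = [0.62, 0.70, 0.75, 0.60, 0.71, 0.80, 0.66, 0.58]
w_plus, w_minus, p = wilcoxon_signed_rank(molr_recall, solr_recall)
print(w_plus, w_minus, p, "reject H0" if p < 0.05 else "fail to reject H0")
```

With 30 paired experiments per comparison, the normal approximation is generally considered adequate, though that choice is an assumption of this sketch rather than something stated in the paper.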

5.3.2 Testing process for M2

From the training process, we get multiple MOLR models. In this approach, we apply all the trained models to the data of the test version. We compare all the single-objective algorithms with the multi-objective algorithm in terms of LOC cost and effectiveness. LOC cost and effectiveness can be found using Eqs. 6 and 8. After the complete training and testing process, we plot the LOC cost and effectiveness obtained on the data of the test version on the same graph for comparison.

Following the definition of Pareto dominance described in the previous section, for each single-objective model, we try to find one MOLR model that has the same or lesser LOC cost than that single-objective model. In particular, we compare single- and multi-objective models in terms of effectiveness at the LOC cost that we would have to spend with the single-objective model. Figure 1 depicts the process of selecting the multi-objective model that corresponds to a single-objective model and has the same LOC cost. For the situation shown in Fig. 1, the multi-objective model is more effective than the single-objective model.

Due to the inherent randomness of the NSGA-II algorithm, we may not get a multi-objective model with exactly the same LOC cost as the single-objective model. In that case, we look for the nearest multi-objective model with a lesser cost than the single-objective model, and examine how it performs while spending less LOC cost than the single-objective model would require. The results show that the multi-objective model is more effective even at lesser LOC cost. One example of this situation is depicted in Fig. 2, where the multi-objective model is more effective than the single-objective model even at a lesser LOC cost.

For M2, we compare single-objective and multi-objective models in terms of effectiveness. As explained above, we find the MOLR model having the same or lesser LOC cost as compared to a given single-objective model. After finding such a MOLR model for each of the single-objective algorithms, we compare the recall values of both models. We find out how much more effective the MOLR model is than the single-objective model at the same LOC cost.
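The selection step described above can be sketched as follows. This is a minimal illustration under stated assumptions: each MOLR model is represented as a hypothetical `(loc_cost, recall)` pair measured on the test version, and "nearest" is taken to mean the largest LOC cost that does not exceed the cost of the single-objective model.

```python
def select_comparable_model(pareto_models, so_cost):
    """Pick the MOLR model to compare against one single-objective model.

    pareto_models: list of (loc_cost, recall) pairs.
    so_cost: LOC cost incurred by the single-objective model.
    Returns the pair with the largest LOC cost <= so_cost (ties broken
    by higher recall), or None if every MOLR model costs more.
    """
    affordable = [m for m in pareto_models if m[0] <= so_cost]
    if not affordable:
        return None
    return max(affordable, key=lambda m: (m[0], m[1]))


# Illustrative values only (not results from the study).
molr_models = [(1000, 0.30), (2500, 0.55), (4000, 0.70), (6000, 0.85)]
so_cost, so_recall = 4500, 0.52

chosen = select_comparable_model(molr_models, so_cost)
if chosen is not None:
    cost_diff = chosen[0] - so_cost       # negative means MOLR is cheaper
    recall_gain = chosen[1] - so_recall   # positive means MOLR finds more
    print(chosen, cost_diff, recall_gain)
```

Returning `None` when no model is affordable keeps the sketch from silently comparing against a more expensive model, which would violate the "same or lesser LOC cost" condition.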

To test the statistical significance of the results, we compare the recall values of MOLR and each single-objective model using a two-tailed Wilcoxon paired test to determine whether the following null hypothesis can be rejected.

- \({ H }_{ 02 }\): There is no significant difference between recall values of MOLR and single-objective predictors for cross-version defect prediction at the same LOC cost.

This comparison is performed separately for each of the four single-objective algorithms. In other words, we find the closest multi-objective model with respect to each single-objective model, using the process described above. For all tests, the significance level is 0.05, i.e., the probability of rejecting the null hypothesis when it is in fact true is 5%.

5.4 Implementation settings

MOLR is implemented using MATLAB Global Optimization Toolbox (MATLAB 2015). Other single-objective algorithms are also implemented in MATLAB. The implementation settings are kept the same for all experiments and for both the approaches, so that we can compare results in the same conditions. Otherwise, one can choose appropriate parameters according to project requirement.

The implementation details about single-objective algorithms are as follows:

- Logistic Regression: The glmfit function has been used for the logistic regression implementation. The distribution parameter has been set to binomial and the link parameter has been set to logit.

- Naïve Bayes: The NaiveBayes.fit function has been used for the naïve Bayes implementation. This implementation requires unnormalized data as input. The remaining functions require normalized data, as described in the data preprocessing process (Sect. 5.1).

- Decision Tree: The classregtree function has been used for the decision tree implementation. The method parameter has been set to classification.

- Random Forest: The TreeBagger function has been used for the random forest implementation. The method parameter has been set to classification. The number of trees (ntrees parameter) has been set to 100. We experimented with different numbers of trees and chose the one giving the least variation in results.

The gamultiobj function has been used for the NSGA-II implementation. The parameters for NSGA-II have been selected with reference to previous studies (Canfora et al. 2013, 2015; Coello et al. 2007; Krall et al. 2015) and by experimentation. For the parameters chosen by experimentation, we have taken the spread value, as defined by Deb, as the evaluation criterion (Deb 2001). The spread value gives an indication of how well the solutions are spaced on the final Pareto front. The greater the spread value, the better the solutions on the Pareto front. Krall et al. (2015) have also used spread as one of the parameters to evaluate the multi-objective algorithm proposed by them. The spread value has been calculated on the Pareto fronts obtained after each run of NSGA-II on the data of the training version. The parameters used for the NSGA-II implementation are described in Table 3.

For the first multi-objective defect prediction problem M1, the NSGA-II algorithm has been executed 30 times during the training process. This is done to account for the inherent randomness of GAs. After each run, we choose the best model as described in Algorithm 1. At the end of the 30 runs, we take the best model among the 30 models obtained, one per run. This is also done with the help of Algorithm 1, i.e., the final logistic regression model is the one closest to the ideal model (0 misclassification cost, 1 recall) among all the 30 models. The final logistic regression model obtained from the training process is applied to the data of the testing version.
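The idea of picking the model closest to the ideal point can be sketched as below. Algorithm 1 itself is the authoritative procedure; this sketch assumes Euclidean distance and assumes that misclassification cost is rescaled by the largest cost among the candidates so that both objectives lie in [0, 1]. Both choices, and the function name, are illustrative assumptions.

```python
import math


def closest_to_ideal(models):
    """Return the index of the model nearest to the ideal point (0, 1).

    models: list of (misclassification_cost, recall) pairs.
    Cost is divided by the largest cost (an assumed normalization) so
    that it is comparable in scale with recall.
    """
    max_cost = max(cost for cost, _ in models) or 1.0

    def distance(model):
        cost, recall = model
        return math.hypot(cost / max_cost, 1.0 - recall)

    return min(range(len(models)), key=lambda i: distance(models[i]))


# Illustrative values only: one (cost, recall) pair per NSGA-II run.
best_per_run = [(40, 0.90), (10, 0.50), (20, 0.80)]
print(closest_to_ideal(best_per_run))
```

The same function can be applied twice: once to the Pareto front of a single run, and once to the per-run winners to obtain the final model.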

For our second problem, the NSGA-II algorithm has been executed 31 times during the training process. An odd number of runs (31) is chosen so that a unique median Pareto front exists. We chose the Pareto front with the median spread value as the final solution, i.e., the coefficient vectors corresponding to the median spread value among these 31 runs. These coefficient vectors represent the final logistic regression models obtained from the training process, and these models are applied to the data of the testing version.
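Selecting the run with the median spread is straightforward once the spread of each run is known. A minimal sketch, assuming the spread values have already been computed and stored in a list (the function name and values are hypothetical):

```python
def median_run_index(spreads):
    """Return the index of the run whose spread value is the median.

    An odd number of runs guarantees that the median is an actual run
    rather than the average of two runs.
    """
    if len(spreads) % 2 == 0:
        raise ValueError("an odd number of runs is needed for a unique median")
    order = sorted(range(len(spreads)), key=lambda i: spreads[i])
    return order[len(spreads) // 2]


# Illustrative spread values for five runs (the study uses 31).
spreads = [0.5, 0.2, 0.9, 0.4, 0.7]
print(median_run_index(spreads))
```

The coefficient vectors of the Pareto front produced by the returned run would then be kept as the final set of MOLR models.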

6 Results and discussion

We discuss the results obtained for both the multi-objective defect prediction models and the four single-objective approaches in this section. We have conducted 30 experiments on 11 projects spanning 41 versions. We have taken projects with at least 3 versions to strengthen the cross-version study, with the hope of achieving more generalizable results. Each experiment will be referred to as ‘project_name train_version-test_version.’ For example, ‘Ant 1.3–1.4’ denotes that data of version 1.3 of the Ant project are used as training data and data of version 1.4 are used as testing data. We use this notation to denote each experiment throughout this section.

\({RQ}_{1}\): How does the proposed M1 perform as compared to traditional single-objective defect prediction models in the cross-version defect prediction?

In this section, we discuss the results obtained from our experiments and try to answer research question \({RQ}_{1}\). As explained earlier, we compare the performance in terms of misclassification cost, recall and F-measure.

We have built the multi-objective defect prediction model MOLR with cost factors 5, 10, 15 and 20, and the results are presented in Tables 4, 5, 6 and 7, respectively. At the end of the training phase of MOLR, we have the best possible MOLR model, i.e., the one whose (misclassification cost, recall) is closest to (0,1), as explained in Sect. 5.2. For each experiment, the misclassification cost and recall values of MOLR and the four single-objective prediction models are recorded. For each experiment, we have boldfaced the misclassification cost if it is the lowest among all models. Similarly, we have boldfaced the recall value if it is the highest among all models. For example, misclassification cost is the lowest and recall is the highest for MOLR for the experiment ‘Ant 1.3–1.4’ as shown in Table 4. In such a scenario, MOLR is clearly preferable to the other four single-objective models.

From Table 4, we can observe that MOLR achieves lesser misclassification cost and higher recall than the single-objective algorithms in most of the experiments. Overall, in 20 out of 30 experiments, MOLR achieves better performance in terms of misclassification cost and recall combined. As the cost factor increases, the performance of MOLR keeps improving. For the cost factors 10, 15 and 20, the MOLR model dominates in 24, 25 and 28 out of 30 experiments, respectively, in terms of both evaluation measures (misclassification cost and recall).

As the cost factor increases, an FN file is penalized more heavily than an FP file. Minimizing misclassification cost therefore keeps the number of false-negative files in check, and hence recall improves. This is why MOLR performs better than the single-objective models as the cost factor increases.

There are only 2 experiments, namely Ivy 1.1–1.4 and Jedit 4.2–4.3, where MOLR is not able to achieve the least misclassification cost. One of the reasons can be that the test versions of both experiments have very few classes which are actually defective.

For the Camel 1.2–1.4, Ivy 1.1–1.4, Poi 1.5–2.0 and Velocity 1.5–1.6 experiments, the recall values achieved by MOLR are 1. This means MOLR is able to identify all the defective classes.

F-measure is the harmonic mean of recall and precision, and it indicates how well the model balances false positives against false negatives. For each value of the cost factor (5, 10, 15, 20), the F-measure achieved by the respective MOLR model is reported in Table 8. The F-measure of the four single-objective prediction models is shown in the same table. The F-measure of the MOLR models is generally better than that of the single-objective algorithms.

For Xalan 2.6–2.7 experiment, F-measure is greater than 0.9 for all values of cost factors. Unlike other experiments, F-measure does not always increase with increase in cost factor. We can observe that on an average, for different values of cost factor, MOLR achieves better F-measure as compared to four single-objective prediction models.

The Wilcoxon signed-rank test is applied to test whether the MOLR model is significantly better than each single-objective prediction model. If the p value is \({<}\)0.05, we can conclude that MOLR is significantly better than the single-objective prediction model. For each evaluation measure (recall, misclassification cost, F-measure) and cost factor value (5, 10, 15, 20), the MOLR model is tested to check whether it is significantly better than the four single-objective prediction models. The p values are shown in Table 9.

From the results, one can observe that the p value is \({<}\)0.05 for all evaluation measures and cost factors. In fact, for the comparison in terms of recall, the reported p value is zero for all the experiments. Even for misclassification cost, the reported p value is zero for cost factors greater than or equal to 10. This indicates that MOLR clearly outperforms the single-objective models. As the p value is \({<}\)0.05 for all evaluation measures and cost factors, we can reject the null hypothesis \({ H }_{ 01 }\). This confirms the domination of MOLR over single-objective prediction models.

\({RQ}_{2}\): How does the proposed M2 perform as compared to traditional single-objective defect prediction models in the cross-version defect prediction?

In this subsection, we discuss the results of our experiments to answer our second research question \({RQ}_{2}\). As explained earlier, we compare the performance of MOLR and the four single-objective prediction models in terms of cost and recall.

At the end of the training phase of building multi-objective defect prediction model, there are several models with varying cost and effectiveness. As described in Sect. 5.3.2, for each single objective prediction model we select the closest multi-objective model having the same or lesser LOC cost. We find out how effective (in terms of recall) the multi-objective model is compared to single objective model at the same or lesser LOC cost.

The comparative results of single-objective logistic regression model and the corresponding multi-objective prediction model are shown in Table 10. We also report LOC cost difference along with recall difference of two models since we compare effectiveness at the same or lesser LOC cost. From Table 10, it can be observed that, for the experiment Ant 1.6–1.7, MOLR gained recall of 0.1446 by incurring lesser cost (−1050) than that of single-objective logistic regression.

The comparative results of the multi-objective prediction model with the single-objective prediction models naïve Bayes, decision tree and random forest are shown in Tables 11, 12 and 13, respectively. We take a closer look at each comparison table. For each comparison table, we discuss the gain in recall achieved by MOLR and the special cases where MOLR did not achieve any gain compared to the single-objective algorithm. From the tables, it can be seen that the recall values of MOLR are better than those of the single-objective algorithms in most cases.

The comparative results of MOLR and single-objective logistic regression are shown in Table 10. For 25 out of 30 experiments, MOLR is able to achieve better recall than single objective logistic regression. MOLR is able to achieve 1–65% gain in recall. In Xalan project, all 3 experiments achieved more than 40% gain in recall, with Xalan 2.6–2.7 experiment achieving highest recall gain of 65.14%. The LOC cost difference is highest for Xerces 1.3–1.4 experiment (LOC difference of 11412), but recall gain achieved by MOLR model is 63.39%.

There are five cases where the recall value of MOLR is less than or equal to that of SOLR (Ant 1.3–1.4, Ant 1.4–1.5, Ant 1.5–1.6, Jedit 4.2–4.3 and Synapse 1.0–1.1). In the Synapse 1.0–1.1 experiment, SOLR achieves zero LOC cost and zero effectiveness. The reason can be that the training version Synapse-1.0 had very few defective classes (10% of 157 classes), which gave poor performance when tested on Synapse version 1.1. The SOLR predictor classified all classes as non-defective, resulting in zero LOC cost and zero effectiveness. The loss in recall varies from 0 to 10% for these five cases, which is small compared to the gains we achieve for the remaining 25 experiments. In three of these experiments, recall remains the same; in only two experiments was MOLR unable to achieve better recall at lesser cost.

Average recall gain across all experiments is 22.77%. This shows how effectively MOLR model is able to predict defect-prone classes compared to SOLR.

The recall and LOC cost values for MOLR and naïve Bayes classifier are reported in Table 11. MOLR is able to outperform naïve Bayes in all 30 experiments. The gain in recall varies from 1 to 76%. In particular, there are 17 cases when MOLR is able to achieve more than 30% gain in recall compared to naïve Bayes classifier. In this case also, maximum cost difference is reported for Xerces 1.3–1.4 experiment (LOC difference of 13834). The gain in recall value for this experiment is 76.89%, which is maximum among all experiments. MOLR is able to achieve average recall gain of 32.65% across all experiments.

The recall and LOC cost values for MOLR and decision tree are reported in Table 12. In most of the cases, decision tree achieves good recall values. The decision tree is able to achieve more than 60% recall for ten experiments, but MOLR still achieves better recall values in most of the cases. There are five cases where the recall values of MOLR are less than or equal to those of decision tree (Ant 1.4–1.5, Ant 1.5–1.6, Jedit 4.2–4.3, Log4j 1.0–1.1 and Synapse 1.0–1.1). The loss in recall varies from 0 to 13% for these five cases. For the remaining 25 cases, the recall values of MOLR are higher than those of decision tree. The gain in recall varies from 2 to 72%. The maximum recall gain of 72.31% is reported for the Xerces 1.3–1.4 experiment. The maximum cost difference is reported for the Xerces init-1.2 case (LOC difference of 25530). In this case, MOLR achieves a recall gain of 36.62%. MOLR is able to achieve an average recall gain of 21.08% across all experiments.

The comparative results of MOLR and the random forest classifier are shown in Table 13. There are only three cases where the recall values of MOLR are lesser than the random forest recall values (Camel 1.0–1.2, Synapse 1.0–1.1 and Xerces init-1.2). For the remaining 27 cases, MOLR achieves a recall gain of up to 77%. The maximum recall gain of 77.12% is achieved for the Xerces 1.3–1.4 experiment. MOLR is able to achieve an average recall gain of 21.83% across all experiments. Overall, the recall gains are not as large as those over other single-objective algorithms such as logistic regression and naïve Bayes. One of the reasons might be that, being an ensemble algorithm, random forest forms a model consisting of multiple decision trees. This reduces overfitting and enhances performance compared to a single decision tree model.

Overall, there are very few cases when MOLR is achieving lesser effectiveness than single-objective algorithms.

We summarize our findings in Table 14. The average recall of each single-objective prediction model and of MOLR is reported in this table, together with the percentage increase achieved by MOLR over the average recall of the single-objective algorithm. Overall, MOLR achieved more than a 48% increase in all four cases, with a maximum of 117% in the comparison with the naïve Bayes algorithm. This shows the dominance of the multi-objective approach over single-objective algorithms. Single-objective algorithms optimize only prediction error as their objective and do not consider the cost of prediction. MOLR models are trained to minimize LOC cost and maximize effectiveness as their objectives. This is one of the major reasons why MOLR outperformed the single-objective algorithms. MOLR is able to identify more defect-prone classes than single-objective algorithms at the same or lesser LOC cost.
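The percentage increase in Table 14 reads as a relative change in average recall, which differs from the absolute recall gains quoted for the individual comparisons above. As a sketch, assuming the increase is computed relative to the single-objective average (the symbols \(\bar{R}_{\mathrm{MOLR}}\) and \(\bar{R}_{\mathrm{SO}}\) are introduced here for illustration only):

\[ \text{increase}\,(\%) = \frac{\bar{R}_{\mathrm{MOLR}} - \bar{R}_{\mathrm{SO}}}{\bar{R}_{\mathrm{SO}}} \times 100 \]

Under this reading, an increase above 100% means that the average recall of MOLR is more than twice that of the corresponding single-objective model.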

To confirm these findings statistically, we performed a two-tailed Wilcoxon paired test (Rahman et al. 2012) for all four cases. The p values for the four types of experiments, i.e., SOLR versus MOLR, naïve Bayes versus MOLR, decision tree versus MOLR and random forest versus MOLR, are 0.00000131, 0.00000000, 0.00000117 and 0.00000008, respectively. As the p values are far below the threshold value of 0.05, we can reject the null hypothesis \({ H }_{ 02 }\). This confirms the domination of MOLR over single-objective algorithms in terms of LOC cost and recall.

7 Threats to validity

This section discusses various threats to validity that may impact the analysis of the proposed approach and the experimental study presented here.

7.1 Threats to construct validity

The choice of recall as a performance measure is widely adopted in previous studies. The choice of cost factor is based on the fact that it is more costly to fix defects during the post-release phase than to perform QA activities on non-defective files in the pre-release phase (Moser et al. 2008). One can choose an appropriate cost factor based on the project and organization, and different values of the cost factor may yield different results. For our second problem, the choice of LOC cost is motivated by the fact that it is related to the amount of time that will be spent reviewing or testing the code. The same measure has been used in Canfora et al. (2013, 2015) and Rahman et al. (2012), but one can use other measures as well. One more threat to construct validity can be the choice of metrics and datasets. The CK metrics are one of the standard sets of metrics used for object-oriented projects. We have considered datasets from the widely used PROMISE repository (Menzie et al. 2015), but we do not deny that the datasets are prone to imperfection and incompleteness.

7.2 Threats to internal validity

One of the biggest threats is the choice of parameter settings for the implementation. These parameters are chosen from experimentation and past research work (Canfora et al. 2013, 2015; Coello et al. 2007; Krall et al. 2015). For the parameters chosen by experimentation, we have used spread as the evaluation criterion for measuring the goodness of Pareto optimal solutions, as defined by Deb (2001). The choice of a different evaluation criterion may lead to different parameter settings. The best parameters may also vary from one dataset to another. We chose a uniform set of parameters to compare the results on the same scale. To mitigate the inherent randomness of GAs, we ran the training process multiple times. For the problem M1, we ran NSGA-II 30 times to get the best MOLR model, which is used for testing. For the problem M2, we ran NSGA-II 31 times and took the coefficients corresponding to the median Pareto front, i.e., the front with the median spread value among all 31 runs. The parameters for the other algorithms are the standard ones available in MATLAB. We have used logistic regression as the fitness function of the multi-objective genetic algorithm and four traditional machine learning algorithms for comparison. All of these algorithms have been used in many past works (He et al. 2013; Kamei et al. 2010; Kim et al. 2007; Mende and Koschke 2009; Peters et al. 2013), but the results may not hold true for other machine learning algorithms. Our aim was to compare the cost-effectiveness of the multi-objective algorithm with traditional algorithms for cross-version defect prediction, rather than to compare different machine learning algorithms with each other.

7.3 Threats to conclusion validity

We have performed two-tailed Wilcoxon paired test to statistically compare the difference in the performance measures obtained from MOLR and traditional single-objective algorithms. Wilcoxon test is a nonparametric test, which does not make any assumptions about input value distributions. We have confirmed our findings at 5% significance level.

7.4 Threats to external validity

We have experimented with 11 open-source projects from the PROMISE repository having different versions. One may find different results with the projects developed in industry, where certain standards are followed. So the findings presented by us may or may not hold good for industrial software projects. We have used CK metrics as predictors in our study. The choice of different metrics may yield different results.

8 Conclusion

In this paper, we formulated cross-version defect prediction as a multi-objective optimization problem with two distinct sets of objective functions, and solved it using a multi-objective genetic algorithm. We compared multi-objective logistic regression with four single-objective algorithms for cross-version defect prediction. We applied the proposed approach to 11 projects and a total of 30 train version-test version pairs. Our results indicate the following benefits of the multi-objective approach:

1. The multi-objective defect prediction model (M1) is able to identify more defects at the same or lesser misclassification cost incurred by all four single-objective defect prediction models. And this observation holds good for four different values of cost factor 5, 10, 15, 20.

2. The multi-objective defect prediction model (M2) incurs the same or lesser LOC cost to achieve better recall as compared to all four single-objective defect prediction models. This proves that M2 is able to identify more defect-prone classes at the same or lesser LOC cost than the cost incurred with single-objective algorithms.

In summary, a multi-objective approach can yield better results for cross-version defect prediction than traditional single-objective approaches.

References

Basili VR, Briand LC, Melo WL (1996) A validation of object-oriented design metrics as quality indicators. IEEE Trans Softw Eng 22(10):751–761

Canfora G, De Lucia A, Di Penta M, Oliveto R, Panichella A, Panichella S (2013) Multi-objective cross-project defect prediction. In: 2013 IEEE sixth international conference on software testing, verification and validation (ICST), IEEE, pp 252–261

Canfora G, Lucia AD, Penta MD, Oliveto R, Panichella A, Panichella S (2015) Defect prediction as a multiobjective optimization problem. Softw Test Verif Reliab 25(4):426–459

Chidamber S, Kemerer C (1994) A metrics suite for object oriented design. IEEE Trans Softw Eng 20(6):476–493

Coello CC, Lamont GB, Van Veldhuizen DA (2007) Evolutionary algorithms for solving multi-objective problems. Springer, Berlin

Czibula G, Marian Z, Czibula IG (2014) Software defect prediction using relational association rule mining. Inf Sci 264:260–278. doi:10.1016/j.ins.2013.12.031

D’Ambros M, Lanza M, Robbes R (2010) An extensive comparison of bug prediction approaches. In: 2010 7th IEEE working conference on mining software repositories (MSR), IEEE, pp 31–41

De Carvalho AB, Pozo A, Vergilio SR (2010) A symbolic fault-prediction model based on multiobjective particle swarm optimization. J Syst Softw 83(5):868–882

Deb K (2001) Multi-objective optimization using evolutionary algorithms. Wiley, New York

Deb K, Agrawal S, Pratap A, Meyarivan T (2000) A fast elitist non-dominated sorting genetic algorithm for multi-objective optimization: NSGA-II. Lect Notes Comput Sci 1917:849–858

Ghotra B, McIntosh S, Hassan AE (2015) Revisiting the impact of classification techniques on the performance of defect prediction models. In: Proceedings of the 37th international conference on software engineering, ICSE ’15—Volume 1. IEEE Press, Piscataway, pp 789–800

Goldberg DE (2006) Genetic algorithms. Pearson Education India, New Delhi

Harman M (2010) The relationship between search based software engineering and predictive modeling. In: Proceedings of the 6th international conference on predictive models in software engineering, PROMISE ’10. ACM, New York, pp 1:1–1:13. doi:10.1145/1868328.1868330

Harman M, Clark J (2004) Metrics are fitness functions too. In: Proceedings of 10th international symposium on software metrics. IEEE, pp 58–69

Hassan AE (2009) Predicting faults using the complexity of code changes. In: Proceedings of the 31st international conference on software engineering. IEEE Computer Society, pp 78–88

He Z, Peters F, Menzies T, Yang Y (2013) Learning from open-source projects: an empirical study on defect prediction. In: 2013 ACM/IEEE international symposium on empirical software engineering and measurement, pp 45–54. doi:10.1109/ESEM.2013.20

Herbold S (2013) Training data selection for cross-project defect prediction. In: Proceedings of the 9th international conference on predictive models in software engineering, PROMISE ’13. ACM, New York, pp 6:1–6:10. doi:10.1145/2499393.2499395

Jureczko M, Madeyski L (2010) Towards identifying software project clusters with regard to defect prediction. In: Proceedings of the 6th international conference on predictive models in software engineering, PROMISE ’10. ACM, New York, pp 9:1–9:10. doi:10.1145/1868328.1868342

Kamei Y, Matsumoto S, Monden A, Matsumoto Ki, Adams B, Hassan A (2010) Revisiting common bug prediction findings using effort-aware models. In: 2010 IEEE international conference on software maintenance (ICSM), pp 1–10. doi:10.1109/ICSM.2010.5609530

Kim S, Zimmermann T, Whitehead EJ Jr, Zeller A (2007) Predicting faults from cached history. In: Proceedings of the 29th international conference on software engineering. IEEE Computer Society, pp 489–498

Krall J, Menzies T, Davies M (2015) GALE: Geometric active learning for search-based software engineering. IEEE Trans Softw Eng 41(10):1001–1018

Krishnan S, Strasburg C, Lutz RR, Goševa-Popstojanova K (2011) Are change metrics good predictors for an evolving software product line? In: Proceedings of the 7th international conference on predictive models in software engineering, Promise ’11. ACM, New York, pp 7:1–7:10. doi:10.1145/2020390.2020397

Lessmann S, Baesens B, Mues C, Pietsch S (2008) Benchmarking classification models for software defect prediction: a proposed framework and novel findings. IEEE Trans Softw Eng 34(4):485–496

Ma W, Chen L, Yang Y, Zhou Y, Xu B (2016) Empirical analysis of network measures for effort-aware fault-proneness prediction. Inf Softw Technol 69:50–70

Marian Z, Czibula IG, Czibula G, Sotoc S (2015) Software defect detection using self-organizing maps. Stud Univ Babes-Bolyai Inform 60(2):55–69

MATLAB (2015) version 8.5.0 (R2015a). The MathWorks Inc., Natick

Mende T, Koschke R (2009) Revisiting the evaluation of defect prediction models. In: Proceedings of the 5th international conference on predictor models in software engineering, PROMISE ’09. ACM, New York, pp 7:1–7:10. doi:10.1145/1540438.1540448

Menzie T, Krishna R, Pryor D (2015) The promise repository of empirical software engineering data. http://openscience.us/repo

Moser R, Pedrycz W, Succi G (2008) A comparative analysis of the efficiency of change metrics and static code attributes for defect prediction. In: ACM/IEEE 30th international conference on software engineering, 2008. ICSE’08. IEEE, pp 181–190

Muthukumaran K, Choudhary A, Murthy NB (2015) Mining github for novel change metrics to predict buggy files in software systems. In: 2015 international conference on computational intelligence and networks (CINE). IEEE, pp 15–20

Peters F, Menzies T, Marcus A (2013) Better cross company defect prediction. In: 2013 10th IEEE working conference on mining software repositories (MSR), pp 409–418. doi:10.1109/MSR.2013.6624057

Rahman F, Posnett D, Devanbu P (2012) Recalling the “imprecision” of cross-project defect prediction. In: Proceedings of the ACM SIGSOFT 20th International Symposium on the foundations of software engineering, FSE ’12. ACM, New York, pp 61:1–61:11. doi:10.1145/2393596.2393669

Subramanyam R, Krishnan M (2003) Empirical analysis of CK metrics for object-oriented design complexity: implications for software defects. IEEE Trans Softw Eng 29(4):297–310. doi:10.1109/TSE.2003.1191795

Yang X, Tang K, Yao X (2015) A learning-to-rank approach to software defect prediction. IEEE Trans Reliab 64(1):234–246

Zhang D, El Emam K, Liu H et al (2009) An investigation into the functional form of the size-defect relationship for software modules. IEEE Trans Softw Eng 35(2):293–304

Zimmermann T, Premraj R, Zeller A (2007) Predicting defects for eclipse. In: International workshop on predictor models in software engineering, PROMISE’07: ICSE Workshops 2007. IEEE, pp 9–9

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Cite this article

Shukla, S., Radhakrishnan, T., Muthukumaran, K. et al. Multi-objective cross-version defect prediction. Soft Comput 22, 1959–1980 (2018). https://doi.org/10.1007/s00500-016-2456-8

DOI: https://doi.org/10.1007/s00500-016-2456-8