Abstract

Background

Worldwide, the annual number of robotic surgical procedures continues to increase. Robotic surgical skills are distinct from those used in either open or laparoscopic surgery. A basic robotic surgical skill set may be best acquired in the simulation laboratory. We sought to review the current literature pertaining to the use of virtual reality (VR) simulation in the acquisition of robotic surgical skills on the da Vinci Surgical System.

Materials and methods

A PubMed search was conducted between December 2014 and January 2015 utilizing the following keywords: virtual reality, robotic surgery, da Vinci, da Vinci skills simulator, SimSurgery Educational Platform, Mimic dV-Trainer, and Robotic Surgery Simulator. Articles were included if they were published between 2007 and 2015, utilized VR simulation for the da Vinci Surgical System, and utilized a commercially available VR platform.

Results

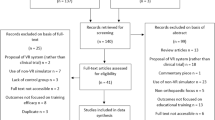

The initial search criteria returned 227 published articles. After all inclusion and exclusion criteria were applied, a total of 47 peer-reviewed manuscripts were included in the final review.

Conclusions

There are many benefits to using VR simulation for robotic skills acquisition. Four commercially available simulators have been shown to be capable of assessing robotic skill. For three of the four, completion of a VR training curriculum has been shown to improve basic robotic skills, with proficiency-based training being the most effective training style. The skills obtained through a VR training curriculum are comparable with those obtained through dry laboratory simulation. Future applications of VR simulation include assessment for re-credentialing, advanced procedure-based training, and warm-up before surgery.


Worldwide, the annual number of robotic surgical procedures continues to increase rapidly [1]. While many young surgeons have grown up with the technology during their residency and fellowship programs, many others have adopted the robotic approach well into their careers. Robotic surgical skills are distinct from, and not derived from, those used in open or laparoscopic surgery. Industry-sponsored training courses are a core component of training for new robotic surgeons, but the current approach of case observations followed by an immersive 1–2-day training experience is analogous to the early days of laparoscopic cholecystectomy [2]. As demonstrated in laparoscopy, moving the acquisition of a reliable basic skill set out of the operating room and into the simulation laboratory has significant advantages for trainees, hospitals, and patients alike. The learning curve of a novice robotic surgeon is best addressed in the simulation laboratory, not in the operating room [3].

Using the robotic surgical system itself for practice outside the operative setting is problematic for several reasons: expense (practice instruments can often be used only a finite number of times before the system will no longer accept them), heavy clinical utilization of the robotic surgical system, and a lack of validated curricula with objective performance metrics. Computer-based, or virtual reality (VR), simulators designed specifically for robotic surgery may overcome many of these obstacles. VR training for robotic skills acquisition has been described in the literature since 2007 [4, 5]. The da Vinci Robotic Surgical System (Intuitive Surgical, Sunnyvale, CA, USA) is currently the only commercially available robotic surgical platform. We sought to review the current literature pertaining to the use of VR simulation in the acquisition of robotic surgical skills on the da Vinci system.

Methods

A PubMed search was conducted between December 2014 and January 2015 using the following keywords: virtual reality, robotic surgery, da Vinci, da Vinci skills simulator, SimSurgery Educational Platform, Mimic dV-Trainer, and Robotic Surgery Simulator. Articles were included if they were published between 2007 and 2015, used VR simulation for the da Vinci Surgical System, and used a commercially available VR platform. Articles were excluded if they used laparoscopic VR simulation, described simulation-based training curricula on the actual da Vinci system rather than on a VR simulator, or used VR simulators not designed specifically for the da Vinci Surgical System.

Validity is the extent to which a measurement corresponds accurately to the real world. The validity of a VR robotic surgical simulator is the degree to which it measures what it purports to measure, in this case robotic surgical skill. For each robotic surgical VR simulator, we were interested in studies that examined several kinds of validity: face validity, the extent to which the simulator resembles reality; content validity, whether the simulator measures what it intends to measure; construct validity, the extent to which the simulator can differentiate between novice and expert robotic surgeons; concurrent validity, how the simulator compares with a ‘gold standard’; and predictive validity, the ability of the simulator to predict future performance.
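Construct validity is usually tested by comparing expert and novice scores statistically, and the reviewed studies used a variety of tests. Purely as an illustration (the choice of test, the function names, and the scores below are assumptions, not taken from any reviewed study), the following Python sketch computes the rank-based Mann-Whitney U statistic and the corresponding probability that a randomly chosen expert outscores a randomly chosen novice. A value near 0.5 would suggest that the exercise does not discriminate between the groups.

```python
from itertools import product


def mann_whitney_u(experts: list[float], novices: list[float]) -> float:
    """Mann-Whitney U for experts vs novices (higher score = better).

    Every expert/novice pair contributes 1 if the expert scored higher,
    0.5 for a tie, and 0 otherwise.
    """
    u = 0.0
    for e, n in product(experts, novices):
        if e > n:
            u += 1.0
        elif e == n:
            u += 0.5
    return u


def probability_of_superiority(experts: list[float], novices: list[float]) -> float:
    """U divided by the number of pairs: the chance that a random expert
    outscores a random novice (0.5 means no discrimination)."""
    return mann_whitney_u(experts, novices) / (len(experts) * len(novices))


# Hypothetical overall scores (0-100) on a single simulator exercise.
experts = [88, 92, 85, 90, 94]
novices = [61, 70, 85, 58, 66]
print(mann_whitney_u(experts, novices))              # 24.5
print(probability_of_superiority(experts, novices))  # 0.98
```

A real validation study would also report a p-value and handle several metrics at once; those steps are omitted from this sketch.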

Results

The initial search criteria returned 227 published articles. After all inclusion and exclusion criteria were applied, a total of 47 peer-reviewed manuscripts were deemed suitable for inclusion in the final review (Fig. 1). Four commercially available VR robotic surgical simulators currently exist: the da Vinci Skills simulator (dVSS; Intuitive Surgical, Sunnyvale, CA, USA), the Mimic dV-Trainer (dV-Trainer; Mimic Technologies, Inc, Seattle, WA, USA), the Robotic Surgical Simulator (RoSS; Simulated Surgical Systems, Buffalo, NY, USA), and SimSurgery Educational Platform (SEP, SimSurgery, Norway). A total of 26 validation studies on the individual simulators were reviewed (Table 1).

Robotic surgical simulator (RoSS™)

Overview

The RoSS (Simulated Surgical Systems LLC, San Jose, CA, USA) is a portable, stand-alone system that has been available since 2009. It costs more than $100,000 and contains 52 unique exercises organized into five categories: orientation module, motor skills, basic surgical skills, intermediate surgical skills, and hands-on surgical training. The RoSS uses its own hardware. The most notable hardware difference from the actual da Vinci Surgical System is the hand controllers, which have a smaller range of motion and therefore require more frequent clutching [6]. RoSS is depicted in Fig. 1.

Validation

Three groups have published studies concluding that the RoSS is an appropriate tool for developing robotic surgical skills [7–10]; these validation studies are presented in Table 1. Seixas-Mikelus et al. [8, 9] showed that experts considered the RoSS realistic and useful for skills acquisition, establishing face and content validity. Raza et al. [7] and Chowriappa et al. [10] showed that the performance of experts on the RoSS exceeded that of novices, demonstrating construct validity.

Skills training

Improved surgical skill acquisition was first demonstrated by Stegemann et al. [11], who found that completing a standardized training curriculum on the RoSS resulted in significant improvements in basic robotic surgery skills. The curriculum, formally known as the Fundamental Skills of Robotic Surgery (FSRS), consists of 16 RoSS tasks from four modules: basic console orientation, psychomotor skills training, basic surgical skills, and intermediate surgical skills (Table 2).

Other uses

The RoSS can measure multiple performance metrics. To separate metrics that reflect real-world performance from the others, Chowriappa et al. [10] developed the Robotic Skills Assessment (RSA) score, which provides users with a valid and standardized assessment tool for VR simulation. A panel of expert robotic surgeons developed the score by defining tasks, assigning weights, and integrating performance metrics into a hierarchical scoring system. Special consideration was given to surgical safety and critical errors, and less weight was assigned to time to task completion. The individual metrics recorded by the RoSS software are then combined by the RSA algorithm into the final RSA score. A comparison of scores between novice and expert surgeons confirmed construct validity. The RSA score algorithm could potentially be applied across all robotic VR simulators.
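The published RSA weights and metric definitions are not reproduced in this review, so the following Python sketch shows only the general idea of a weighted composite score in which safety-related metrics count more than time. The metric names, best/worst bounds, and weights are hypothetical, and the scheme is a flat weighted average, not the hierarchical RSA algorithm itself.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """One simulator metric where lower raw values are better."""
    name: str
    value: float   # raw value reported by the simulator
    best: float    # hypothetical value that earns full credit
    worst: float   # hypothetical value that earns no credit
    weight: float  # hypothetical weight; safety metrics weighted highest


def metric_score(m: Metric) -> float:
    """Linearly map a raw value to 0..1 (1 = at or better than `best`)."""
    fraction = (m.worst - m.value) / (m.worst - m.best)
    return min(1.0, max(0.0, fraction))


def composite_score(metrics: list[Metric]) -> float:
    """Weighted average of per-metric scores, scaled to 0..100."""
    total_weight = sum(m.weight for m in metrics)
    weighted = sum(m.weight * metric_score(m) for m in metrics)
    return 100.0 * weighted / total_weight


metrics = [
    Metric("critical errors", value=1, best=0, worst=4, weight=4.0),
    Metric("instrument collisions", value=2, best=0, worst=10, weight=3.0),
    Metric("economy of motion (cm)", value=150, best=100, worst=300, weight=2.0),
    Metric("time to completion (s)", value=240, best=120, worst=480, weight=1.0),
]
print(round(composite_score(metrics), 1))  # 75.7
```

Because time carries the smallest weight, a trainee who is slow but error-free scores higher than one who is fast but commits critical errors, which mirrors the priority on safety described above.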

Mimic dV-Trainer (dV-Trainer®)

Overview

The dV-Trainer (Mimic Technologies, Seattle, WA, USA) is a stand-alone, portable, tabletop device with mobile foot pedals. Its first prototype was launched in 2007; it costs approximately $100,000 and contains 65 unique exercises. The dV-Trainer hardware differs from that of the actual da Vinci Surgical System. The most apparent difference is in the hand controls, which use three cables to measure hand movements rather than the more precise arms found on the RoSS and the true da Vinci Surgical System [6].

Validation

Nine groups concluded that the dV-Trainer is an appropriate robotic surgery training tool [12–20] (Table 1). Seven groups demonstrated face validity by means of participant survey responses. Of these, six groups established content validity through the same surveys by concluding that the simulator is an effective training tool. Seven groups established construct validity by showing experts outperformed novices [12–18]. Two groups showed concurrent validity through correlating VR performance with performance on the actual da Vinci robot [14, 19].

Basic skills training

Completion of a training curriculum on the dV-Trainer leads to an increase in basic robotic skills [13, 21] (Table 2). Beyond describing acquisition of basic robotic skills, Korets et al. [13] demonstrated that training on the dV-Trainer resulted in skills acquisition similar to that achieved by training on da Vinci dry laboratory exercises. In their study, 16 urology residents were assigned to one of three groups, and all were tested for baseline robotic skills. Group one completed a training curriculum on the dV-Trainer, group two completed a training curriculum of da Vinci dry laboratory exercises, and group three served as a control. Groups one and two showed a similar improvement in post-test performance compared with baseline.

Daily training has been suggested as the most appropriate schedule for the dV-Trainer [22]. Kang et al. compared three training schedules: 1 h daily for four consecutive days, 1 h weekly for four consecutive weeks, and four consecutive hours in 1 day. The daily schedule (1 h on each of four consecutive days) was associated with higher final performance and continuous score improvement.

Other uses

Beyond basic skills acquisition through a training curriculum, the dV-Trainer can be used for advanced procedure-based practice. Kang et al. [23] established face, content, and construct validity of a specific procedural module on the dV-Trainer. The group recruited 10 novice and 10 expert robotic surgeons to perform a VR module designed to mimic a vesicourethral anastomosis. Experts outperformed novices in most metrics, including time, total score, economy of motion, and instrument collisions.

Lendvay et al. [24] used the dV-Trainer as a warm-up tool for surgeons before entering the operating room. The study group enrolled 51 surgeons and randomized 25 to receive a 3–5 min warm-up period on the dV-Trainer before completing tasks on a da Vinci dry laboratory session meant to mimic surgical procedures. Their results were compared to 26 control surgeons who were robotically trained but received no warm-up period. They found the warm-up group outperformed the controls in measures such as task time and economy of motion.

da Vinci skills simulator (dVSS)

Overview

The dVSS (Intuitive Surgical, Sunnyvale, CA, USA) is the only simulator that attaches directly to the actual da Vinci Surgical System console. The prototype was first described in 2011, costs nearly $85,000, and includes 40 unique exercises [6]. The simulator cannot function independently and requires a da Vinci Surgical System console; because trainees use the actual console, there are no hardware discrepancies. One disadvantage of this design is that the dVSS cannot be used for training while the da Vinci Surgical System is in use for clinical cases. The dVSS is depicted in Fig. 2.

Validation

The dVSS is an established training tool [16, 25–33] (Table 1). A variety of studies on this simulator have demonstrated face, content, construct, and predictive validity. Predictive validity was reported by Culligan et al. [32], who trained robotic surgeons on the dVSS; this training led to improved performance in actual human robotic hysterectomy cases. Similarly, Hung et al. [33] demonstrated that baseline skills on the dVSS were predictive of baseline and final scores for ex vivo tissue performance on the da Vinci.

Basic skills training

Many groups have established and validated specific training curricula on the dVSS and found that completing these curricula led to increased skill on the da Vinci [32–38]. A summary of training curricula is presented in Table 2.

Curriculum protocols fall into three categories: proficiency-based, time-based, and proficiency-based with a maximum number of attempts. Three groups used a purely proficiency-based training curriculum [32, 34, 38]. Bric et al. [34] used expert proficiency levels, requiring three consecutive scores at or above expert levels for a trainee to be deemed proficient. Culligan et al. [32] also used expert proficiency levels but did not state whether consecutive attempts were required. Zhang et al. [38] used a proficiency level of 91 % composite score.
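A consecutive-attempt criterion such as that of Bric et al. is stricter than a single passing score. The Python sketch below is a minimal illustration with a hypothetical benchmark and hypothetical scores; it returns the attempt on which a trainee first records the required number of back-to-back scores at or above an expert benchmark.

```python
def attempts_to_proficiency(scores, benchmark, consecutive=3):
    """Return the 1-based attempt on which the trainee first completes
    `consecutive` back-to-back scores at or above `benchmark`, or None
    if the criterion is never met."""
    streak = 0
    for attempt, score in enumerate(scores, start=1):
        # Extend the streak on a passing score, reset it otherwise.
        streak = streak + 1 if score >= benchmark else 0
        if streak == consecutive:
            return attempt
    return None


# Hypothetical scores on one task and a hypothetical expert benchmark of 85.
scores = [62, 75, 84, 80, 86, 88, 91]
print(attempts_to_proficiency(scores, benchmark=85))                 # 7
print(attempts_to_proficiency(scores, benchmark=85, consecutive=1))  # 5
```

With the same scores, a single-pass rule (`consecutive=1`) would declare proficiency on attempt 5, whereas the three-in-a-row rule requires two more attempts.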

Two groups used a proficiency-based training curriculum with a maximum number of attempts. Gomez et al. [36] described a curriculum with a proficiency goal of 80 % overall score and a cap of six attempts. The authors noted that six attempts were not sufficient for all subjects to reach proficiency, so some subjects advanced through the curriculum without gaining the desired skill. Similarly, Vaccaro et al. [37] used an 80 % overall score proficiency level and a maximum of ten attempts. The authors did not comment on whether ten attempts was an appropriate limit, nor did they report how many subjects reached the limit before attaining proficiency.
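The effect of an attempt cap can be illustrated with a short simulation. In this hypothetical Python example (the function name and scores are assumptions for illustration only), a trainee whose scores climb slowly toward an 80 % target advances without proficiency under a six-attempt cap, but reaches the target on attempt 8 when attempts are unlimited or capped at ten.

```python
def run_task(scores, target=80.0, max_attempts=None):
    """Simulate one curriculum task.

    Returns (attempts_used, reached_proficiency). With max_attempts=None
    the trainee repeats the task until the target is met (purely
    proficiency-based); with a cap, the trainee advances once the cap is
    reached even if the target was never met.
    """
    attempt = 0
    for attempt, score in enumerate(scores, start=1):
        if score >= target:
            return attempt, True
        if max_attempts is not None and attempt >= max_attempts:
            return attempt, False
    # Ran out of recorded attempts without meeting the target.
    return attempt, False


# Hypothetical overall scores (%) of a slow learner on one task.
scores = [55, 61, 66, 70, 74, 77, 79, 82]
print(run_task(scores, max_attempts=6))   # (6, False)
print(run_task(scores, max_attempts=10))  # (8, True)
print(run_task(scores))                   # (8, True)
```

The `(6, False)` result corresponds to the situation Gomez et al. describe: the trainee moves on to the next task without having reached the proficiency goal.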

One group directly compared time-based and proficiency-based training curricula. Foell et al. [35] described the Basic Skills Training Curriculum (BSTC), in which one group underwent time-based training (three 1-h sessions) and another underwent proficiency-based training (80 % total score). Although both groups improved on the da Vinci dry laboratory tasks of ring transfer and needle passing, the proficiency group showed greater improvement on post-curriculum testing. These investigators also noted excellent face validity of the dVSS.

Interestingly, Hung et al. [33] were unable to show a statistically significant improvement in da Vinci animal laboratory scores after completion of a 17-task training program on the dVSS. The authors attributed this negative result to an inadequate duration of simulator training and an imprecise method of measuring baseline and final skills.

SimSurgery Educational Platform (SEP)

Overview

The SEP (SimSurgery, Oslo, Norway) is a stand-alone robotic simulator developed in the Netherlands. It costs between $40,000 and $45,000 and provides 21 unique tasks [39]. A two-dimensional monitor serves in place of the eyepiece, the operator hand controls are mounted on a portable hand board, and, unlike the da Vinci Surgical System, it has no fourth arm.

Validation

Two groups concluded that the SEP is a valid training tool [40, 41] (Table 1). Gavazzi et al. demonstrated face and content validity using a questionnaire to determine the usefulness of the SEP. Both Gavazzi et al. and Shamin Khan et al. showed construct validity by measuring the performance of experts compared to novices. Conversely, Balasundaram et al. were unable to show construct validity of the SEP through comparison of novice and expert performances [42].

Other uses

SimSurgery AS advertises that the SEP robot is compatible with procedural software originally designed for its laparoscopic training modules [43]. These are simulations of key portions of various procedures (e.g., the cholecystectomy module includes two simulations: dissection of Calot’s triangle and dissection of the gallbladder). We found no studies validating these modules on the SEP robot.

Discussion

This study reviews the current literature on the use of VR simulation in the acquisition of robotic surgical skills. The literature demonstrates that VR simulators are appropriate tools for measuring robotic surgical skills, and that basic robotic surgical skills on the actual da Vinci system improve after training on VR simulators.

The use of VR simulators as valid assessment tools for robotic skills has been summarized previously [39, 44–46]. Construct validity in this context is the extent to which a simulated task can discriminate between operators of different levels of surgical skill. Most often, performance is compared between novice and expert robotic surgeons. In VR simulation, it is assumed that the superior performance demonstrated by an expert robotic surgeon reflects actual robotic skill obtained in the operating room, which implies that the VR simulator actually measures robotic skill [7, 10, 12–17, 25–31, 40, 41]. Predictive validity is the extent to which performance on the simulator can predict future performance on the actual da Vinci robot; mastery of the simulator is thought to translate into actual surgical proficiency [32]. It is difficult to definitively demonstrate that robotic VR training leads to improved performance or outcomes in robotic surgery on human patients, for several reasons. Easily derived metrics such as operative time are confounded by variations in patient and procedure complexity. Complications are difficult to attribute to a specific training methodology and are, one hopes, infrequent enough that a very large sample size would be required to demonstrate a true effect. Both Culligan et al. and Hung et al. demonstrated predictive validity using the dVSS, but only Culligan et al. associated VR training with performance during actual human robotic surgical cases. This association is crucial, because the ultimate goal of VR training is to improve operator performance and robotic skills in human cases beyond the training environment.

The curricula and tasks used in published studies of VR robotic trainers for skills assessment and training vary widely from study to study. A standardized tool or definition of proficiency that could be applied across platforms would be valuable. The RSA score developed by Chowriappa et al. is a standardized assessment tool that could potentially be applied to all simulators. This offers an opportunity to use VR simulation to assess the skill of certified robotic surgeons at any time point. Standardized assessment on a VR simulator could be used in the re-credentialing of robotic surgeons [10, 15, 27, 31]. In this application, a brief VR assessment using a score such as the RSA may be enough to ensure that a practicing robotic surgeon is maintaining his or her basic robotic surgical skill set, especially when the number of robotic procedures performed in a given period falls below a threshold. A major limitation of this application is the lack of a consensus definition and standard for robotic surgical competency.

Many groups have demonstrated that training on VR simulators improves performance on the actual da Vinci Surgical System. Seven independent groups have described training curricula on the dVSS that resulted in increased robotic skill. Training on both the dV-Trainer and the RoSS has also been shown to improve performance on the da Vinci system in simulated tasks [11, 21, 34–38]. A major limitation of these studies is that basic robotic skills were measured in a simulated robotic environment rather than in actual human robotic cases. To date, only one published study has measured robotic skill performance in a human robotic case [32].

Despite a lack of evidence for a direct relationship between VR simulation and performance on actual human cases, it has been well described that the skills gained from VR training are similar to those attained through traditional robotic dry laboratory simulation training [13, 19, 28, 47]. Lerner et al. and Korets et al. both demonstrated that training on the dV-Trainer yields skills similar to those gained through dry laboratory simulation, and Hung et al. and Tergas et al. showed the same for the dVSS. VR simulation may therefore be a viable alternative to dry laboratory training on the actual da Vinci system, particularly given its potential cost benefit. Rehman et al. [48] described the indirect cost benefit of using the RoSS at Roswell Park Cancer Institute, estimating that approximately $622,784 could be saved over a 1-year period if VR simulation replaced dry laboratory training. The investigators assumed that RoSS training is comparable to, and possibly more economical than, animal/dry laboratory training.

Although investigators describe their VR training protocols, there is no standardization among training curricula. Proficiency-based training is thought to be the most efficient way to acquire basic robotic surgery skills [35, 49–51], and many robotic surgery teams have developed their own proficiency-based VR training curricula [32, 34–38]. What constitutes a clinically relevant performance standard remains debated. Proficiency levels currently often use an arbitrary 80 % ‘overall score’ metric output by the simulator software [35–37]. The utility of an 80 % goal is unknown, because the software metrics were not developed by expert robotic surgeons and instead represent standard pre-programmed metrics [10]. To identify meaningful training goals, Connolly et al. [26] and Culligan et al. [32] set proficiency scores based on the performance metrics of expert robotic surgeons. Both teams demonstrated that completing their training curricula brought VR simulator trainees to the same level as expert robotic surgeons.

A maximum number of attempts has also been applied to proficiency-based VR training; however, this approach may allow trainees to advance through the curriculum without acquiring the desired skills [36, 37]. Setting a low limit on attempts has been shown to be undesirable in basic skills training [52]. Brinkman et al. described learning curves for dVSS tasks and found that 10 attempts were insufficient for novice trainees to reach expert proficiency levels. A premature cap on attempts can cut training short before the desired skill has been achieved [53]. Kang et al. described the learning curve of the ‘tube 2’ task on the dV-Trainer and found that a mean of 74 attempts was needed to reach a performance plateau. Based on these observations, proficiency-based training aimed at expert skill levels benefits from placing no limit on the number of attempts during a basic skills curriculum on VR simulators. Under this model, trainees are limited by neither time nor attempts; instead, training adapts to each trainee’s individual learning curve. Ultimately, completing the curriculum leaves every trainee at the same level of skill on the simulator, provided the proficiency targets have been met.
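The review does not state how Kang et al. defined a performance plateau. One simple, hypothetical definition is sketched below in Python: compare the mean of each block of recent attempts with the mean of the preceding block, and declare a plateau once the gain falls below a threshold. The window size, threshold, function name, and scores are assumptions chosen for illustration.

```python
def plateau_attempt(scores, window=3, min_gain=1.0):
    """Return the 1-based attempt at which performance is judged to have
    plateaued, or None if no plateau is detected.

    For each attempt `end`, the mean of the last `window` scores is
    compared with the mean of the `window` scores before them; the
    plateau is the first `end` where that mean gain is below `min_gain`.
    """
    for end in range(2 * window, len(scores) + 1):
        prev_total = sum(scores[end - 2 * window:end - window])
        curr_total = sum(scores[end - window:end])
        # Comparing totals avoids rounding: mean gain < min_gain
        # is equivalent to total gain < min_gain * window.
        if curr_total - prev_total < min_gain * window:
            return end
    return None


# Hypothetical learning curve (overall score per attempt).
scores = [40, 52, 61, 68, 73, 77, 79, 80, 80, 80, 80, 81, 80]
print(plateau_attempt(scores))  # 12
```

Under an unlimited-attempts model, a rule of this kind could flag when further repetitions of a task are unlikely to add skill, whereas a fixed cap stops training regardless of where the trainee sits on the curve.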

The use of VR simulators is evolving from basic skill development to more advanced applications. Much as athletes warm up before a game or competition, the work of Lendvay et al. suggests that a brief VR warm-up before procedures could decrease procedure time and reduce intraoperative errors. Training and warming up in a virtual environment that mimics the procedure the surgeon is about to perform could reduce errors and procedural time [23].

The validation and skills testing of virtual reality environments for robotic skills training has been extensive. As technology advances, simulated tasks, and potentially even entire simulated procedures, continue to evolve and improve. VR trainers offer several distinct advantages for acquiring robotic surgical skills, including safety, convenience, efficiency (especially when the alternative is to train on a da Vinci system that is also used clinically), and cost effectiveness (after the initial investment in the simulator, and if it is used extensively). Over the past several years, a group of 80 national and international robotic surgery experts, behavioral psychologists, medical educators, statisticians, and psychometricians has been developing a multispecialty, proficiency-based curriculum of basic technical skills and didactic content known as the Fundamentals of Robotic Surgery (FRS). The FRS technical skills are based on a physical model using the da Vinci system. The FRS is a joint educational program funded through a Department of Defense grant and an unrestricted educational grant from Intuitive Surgical [54]. It was designed to be device and specialty independent, and at the time of this writing the curriculum is set to undergo validation studies. Although outside the scope of this review of VR simulators, a validated curriculum widely accepted by robotic surgeons of multiple disciplines would be a major advance in robotic surgical skills training, and a version of this curriculum using the validated tasks could potentially be programmed into future VR simulators. The final phase of validation, as described by the FRS consensus conference working group, includes a research study at 10 American College of Surgeons Accredited Education Institutes to determine the predictive validity of the curriculum. This should help bridge the gap, identified earlier in this manuscript, between simulation-based training and robotic surgical outcomes.

At our own institution, we acquired a da Vinci Skills Simulator several years ago along with the purchase of two robotic surgical systems. We were frustrated by the extensive array of tasks and drills pre-programmed into the trainer, and by computer-derived performance metrics that were difficult to interpret and not validated. We therefore developed and validated a proficiency-based curriculum that has become the standard curriculum for training and maintaining robotic credentials at our institution [26, 34]. As we considered the literature and the state of VR training in robotic surgery, we realized that there are numerous training systems, a multitude of curricula, and various studies validating and supporting the use of these systems as training tools. This was the motivation for the current review.

The full spectrum of robotic surgical skills needed to perform surgical procedures safely has yet to be completely defined and adequately measured. VR simulation clearly can play an important role in future curricula designed to help surgeons acquire basic and/or advanced robotic skills, document the maintenance of previously acquired skills, and possibly even practice or warm up before actual procedures. The currently published literature evaluating VR robotic surgical skills simulation is confounded by differing hardware and software platforms and by the different metrics used to measure performance. Another robotic surgical system, or systems, will likely become commercially available in the near future, and their interfaces are likely to differ from that of the da Vinci system. Performing complex tasks on these new systems may require discrete skills that differ from those needed on the da Vinci system and cannot be derived from skills attained on a different platform. This training gap will need to be addressed as new robotic surgical systems become available. Further research is necessary to define discrete skills and curricula that are valid and can be universally applied.

Conclusion

There are many benefits to using VR simulation for robotic skills acquisition. Four commercially available simulators (dVSS, dV-Trainer, RoSS, SEP) have been shown to be capable of assessing robotic skill. For three of the four (dVSS, dV-Trainer, RoSS), completion of a VR training curriculum has been shown to improve basic robotic skills, with proficiency-based training being the most effective training style. The skills obtained through a VR training curriculum are comparable with those obtained through dry laboratory simulation. Future applications of VR simulation include assessment for re-credentialing, advanced procedure-based training, and warm-up before surgery.

References

Kenngott HG, Fischer L, Nickel F, Rom J, Rassweiler J, Müller-Stich BP (2012) Status of robotic assistance—a less traumatic and more accurate minimally invasive surgery? Langenbecks Arch Surg 397:333–341

Callery MP, Strasberg SM, Soper NJ (1996) Complications of laparoscopic general surgery. Gastrointest Endosc Clin N Am 6(2):423–444

Scott DJ, Dunnington GL (2008) The new ACS/APDS skills curriculum: moving the learning curve out of the operating room. J Gastrointest Surg 12:213–221

Sun LW, Van Meer F, Bailly Y, Thakre AA (2007) Advanced da Vinci surgical system simulator for surgeon training and operation planning. Int J Med Robot 3(3):245–251

Albani JM, Lee DI (2007) Virtual reality-assisted robotic surgery simulation. J Endourol 21:285–287

Smith R, Truong M, Perez M (2015) Comparative analysis of the functionality of simulators of the da Vinci surgical robot. Surg Endosc 29(4):972–983

Raza SJ, Froghi S, Chowriappa A, Ahmed K, Field E, Stegemann AP, Rehman S, Sharif M, Shi Y, Wilding GE, Kesavadas T, Kaouk J, Guru K (2014) Construct validation of the key components of fundamental skills of robotic surgery (FSRS) curriculum—a multi-institution prospective study. J Surg Educ 71:316–324

Seixas-Mikelus SA, Kesavadas T, Srimathveeravalli G, Chandrasekhar R, Wilding GE, Guru KA (2010) Face validation of a novel robotic surgical simulator. Urology 76:357–360

Seixas-Mikelus S, Stegemann AP, Kesavadas T, Srimathveeravalli G, Sathyaseelan G, Chandrasekhar R, Wilding GE, Peabody JO, Guru K (2011) Content validation of a novel robotic surgical simulator. BJU Int 107:1130–1135

Chowriappa AJ, Shi Y, Raza SJ, Ahmed K, Stegemann A, Wilding G, Kaouk J, Peabody JO, Menon M, Hassett JM, Kesavadas T, Guru K (2013) Development and validation of a composite scoring system for robot-assisted surgical training—the Robotic Skills Assessment Score. J Surg Res 185:561–569

Stegemann AP, Ahmed K, Syed JR, Rehman S, Ghani K, Autorino R, Sharif M, Rao A, Shi Y, Wilding GE, Hassett JM, Chowriappa A, Kesavadas T, Peabody JO, Menon M, Kaouk J, Guru KA (2013) Fundamental skills of robotic surgery: a multi-institutional randomized controlled trial for validation of a simulation-based curriculum. Urology 81:767–774

Kenney P, Wszolek MF, Gould JJ, Libertino J, Moinzadeh A (2009) Face, content, and construct validity of dV-trainer, a novel virtual reality simulator for robotic surgery. Urology 73:1288–1292

Korets R, Mues AC, Graversen J, Gupta M, Benson MC, Cooper KL, Landman J, Badani KK (2011) Validating the use of the Mimic dV-trainer for robotic surgery skill acquisition among urology residents. Urology 78:1326–1330

Lee JY, Mucksavage P, Kerbl DC, Huynh VB, Etafy M, McDougall EM (2012) Validation study of a virtual reality robotic simulator-role as an assessment tool? J Urol 187:998–1002

Lendvay T, Casale P, Sweet R, Peters C (2008) VR robotic surgery: randomized blinded study of the dV-trainer robotic simulator. Stud Health Technol Inform 132:242–244

Liss M, Abdelshehid C, Quach S, Lusch A, Graversen J, Landman J, McDougall EM (2012) Validation, correlation, and comparison of the da Vinci trainer and the daVinci surgical skills simulator using the Mimic software for urologic robotic surgical education. J Endourol 26:1629–1634

Perrenot C, Perez M, Tran N, Jehl J, Felblinger J, Bresler L, Hubert J (2012) The virtual reality simulator dV-Trainer® is a valid assessment tool for robotic surgical skills. Surg Endosc 26:2587–2593

Schreuder HW, Persson JE, Wolswijk RG, Ihse I, Schijven MP, Verheijen RH (2014) Validation of a novel virtual reality simulator for robotic surgery. Sci World J. doi:10.1155/2014/507076

Lerner MA, Ayalew M (2010) Does training on a virtual reality robotic simulator improve performance on the da Vinci surgical system? J Endourol 24:467–472

Sethi AS, Peine WJ, Mohammadi Y, Sundaram CP (2009) Validation of a novel virtual reality robotic simulator. J Endourol 23:503–508

Cho JS, Hahn KY, Kwak JM, Kim J, Baek SJ, Shin JW, Kim SH (2013) Virtual reality training improves da Vinci performance: a prospective trial. J Laparoendosc Adv Surg Tech A 23:992–998

Kang SG, Ryu BJ, Yang KS, Ko YH, Cho S, Kang SH, Patel VR, Cheon J (2014) An effective repetitive training schedule to achieve skill proficiency using a novel robotic virtual reality simulator. J Surg Educ 72(3):369–376

Kang SG, Cho S, Kang SH, Haidar AM, Samavedi S (2014) The tube 3 module designed for practicing vesicourethral anastomosis in a virtual reality robotic simulator: determination of face, content, and construct validity. Urology 84:345–350

Lendvay TS, Brand TC, White L, Kowalewski T, Jonnadula S, Mercer LD, Khorsand D, Andros J, Hannaford B, Satava RM (2013) Virtual reality robotic surgery warm-up improves task performance in a dry laboratory environment: a prospective randomized controlled study. J Am Coll Surg 216:1181–1192

Alzahrani T, Haddad R, Alkhayal A, Delisle J, Drudi L, Gotlieb W, Fraser S, Bergman S, Bladou F, Andonian S, Anidjar M (2013) Validation of the da Vinci surgical skill simulator across three surgical disciplines: a pilot study. Can Urol Assoc J 7(7–8):E520–E529

Connolly M, Seligman J, Kastenmeier A, Goldblatt M, Gould JC (2014) Validation of a virtual reality-based robotic surgical skills curriculum. Surg Endosc 28:1691–1694

Finnegan KT, Meraney AM, Staff I, Shichman SJ (2012) da Vinci skills simulator construct validation study: correlation of prior robotic experience with overall score and time score simulator performance. Urology 80:330–335

Hung AJ, Jayaratna IS, Teruya K, Desai MM, Gill IS, Goh AC (2013) Comparative assessment of three standardized robotic surgery training methods. BJU Int 112(6):864–871

Hung AJ, Zehnder P, Patil MB, Cai J, Ng CK, Aron M, Gill IS, Desai MM (2011) Face, content and construct validity of a novel robotic surgery simulator. J Urol 186:1019–1025

Kelly DC, Margules AC, Kundavaram CR, Narins H, Gomella LG, Trabulsi EJ, Lallas CD (2012) Face, content, and construct validation of the da Vinci skills simulator. Urology 79:1068–1072

Lyons C, Goldfarb D, Jones SL, Badhiwala N, Miles B, Link R, Dunkin BJ (2013) Which skills really matter? Proving face, content, and construct validity for a commercial robotic simulator. Surg Endosc 27:2020–2030

Culligan P, Gurshumov E, Lewis C, Priestley J, Komar J, Salamon C (2014) Predictive validity of a training protocol using a robotic surgery simulator. Female Pelvic Med Reconstr Surg 20:48–51

Hung AJ, Patil MB, Zehnder P, Cai J, Ng CK, Aron M, Gill IS, Desai MM (2012) Concurrent and predictive validation of a novel robotic surgery simulator: a prospective, randomized study. J Urol 187:630–637

Bric J, Connolly M, Kastenmeier A, Goldblatt M, Gould JC (2014) Proficiency training on a virtual reality robotic surgical skills curriculum. Surg Endosc 28:3343–3348

Foell K, Finelli A, Yasufuku K, Bernardini MQ, Waddell TK, Pace KT, Honey RJ, Lee JY (2013) Robotic surgery basic skills training: evaluation of a pilot multidisciplinary simulation-based curriculum. Can Urol Assoc J 7:430–434

Gomez PP, Willis RE, Van Sickle KR (2014) Development of a virtual reality robotic surgical curriculum using the da Vinci Si surgical system. Surg Endosc. PMID: 25361648

Vaccaro CM, Crisp CC, Fellner AN, Jackson C, Kleeman SD, Pavelka J (2013) Robotic virtual reality simulation plus standard robotic orientation versus standard robotic orientation alone: a randomized controlled trial. Female Pelvic Med Reconstr Surg 19(5):266–270

Zhang N, Sumer BD (2013) Transoral robotic surgery: simulation-based standardized training. JAMA Otolaryngol Head Neck Surg 139:1111–1117

Lallas CD (2012) Robotic surgery training with commercially available simulation systems in 2011: a current review and practice pattern survey from the Society of Urologic Robotic Surgeons. J Endourol 26:283–293

Gavazzi A, Bahsoun AN, Van Haute W, Ahmed K, Elhage O, Jaye P, Khan MS, Dasgupta P (2011) Face, content and construct validity of a virtual reality simulator for robotic surgery (SEP robot). Ann R Coll Surg Engl 93:152–156

Shamim Khan M, Ahmed K, Gavazzi A, Gohil R, Thomas L, Poulsen J, Ahmed M, Jaye P, Dasgupta P (2013) Development and implementation of centralized simulation training: evaluation of feasibility, acceptability and construct validity. BJU Int 111:518–523

Balasundaram I, Darzi A (2008) Short-phase training on a virtual reality simulator improves technical performance in tele-robotic surgery. Int J Med Robot 4(2):139–145

SimSurgery AS. SEP robot. http://www.simsurgery.com/robot.html. Accessed 17 Feb 2015

Abboudi H, Khan MS, Aboumarzouk O, Guru K, Challacombe B, Dasgupta P, Ahmed K (2013) Current status of validation for robotic surgery simulators—a systematic review. BJU Int 111:194–205

Kumar A, Smith R, Patel VR (2015) Current status of robotic simulators in acquisition of robotic surgical skills. Curr Opin Urol 25:168–174

Liu M, Curet M (2015) A review of training research and virtual reality simulators for the da Vinci surgical system. Teach Learn Med 27(1):37–41

Tergas AI, Sheth SB, Green IC, Giuntoli RL, Winder AD, Fader AN (2013) A pilot study of surgical training using a virtual robotic surgery simulator. JSLS 17:219–226

Rehman S, Raza SJ, Stegemann AP, Zeeck K, Din R, Llewellyn A, Dio L, Trznadel M, Seo YW, Chowriappa AJ, Kesavadas T, Ahmed K, Guru K (2013) Simulation-based robot-assisted surgical training: a health economic evaluation. Int J Surg 11:841–846

Dulan G, Rege RV, Hogg DC, Gilberg-Fisher KM, Arain N, Tesfay ST, Scott DJ (2012) Proficiency-based training for robotic surgery: construct validity, workload, and expert levels for nine inanimate exercises. Surg Endosc 26:1516–1521

Dulan G, Rege RV, Hogg DC, Gilberg-Fisher KM, Arain N, Tesfay ST, Scott DJ (2012) Developing a comprehensive, proficiency-based training program for robotic surgery. Surgery 152:477–488

Schreuder HW, Wolswijk R, Zweemer RP, Schijven MP, Verheijen RH (2012) Training and learning robotic surgery, time for a more structured approach: a systematic review. BJOG 119:137–149

Brinkman WM, Luursema JM, Kengen B, Schout BM, Witjes JA, Bekkers RL (2013) Da Vinci Skills Simulator for assessing learning curve and criterion-based training of robotic basic skills. Urology 81:562–566

Kang SG, Yang KS, Ko YH, Kang SH, Park HS, Lee JG, Kim JJ, Cheon J (2012) A study on the learning curve of the robotic virtual reality simulator. J Laparoendosc Adv Surg Tech A 22:438–442

Smith R, Patel V, Satava R (2014) Fundamentals of robotic surgery: a course of basic robotic surgery skills based upon a 14-society consensus template of outcomes measures and curriculum development. Int J Med Robot 10(3):379–384

Ethics declarations

Disclosures

Dr. Gould is a consultant for Torax Medical. Mr. Bric, Mr. Lumbard, and Mr. Frelich have no conflicts of interest.

About this article

Cite this article

Bric, J.D., Lumbard, D.C., Frelich, M.J. et al. Current state of virtual reality simulation in robotic surgery training: a review. Surg Endosc 30, 2169–2178 (2016). https://doi.org/10.1007/s00464-015-4517-y

DOI: https://doi.org/10.1007/s00464-015-4517-y