Abstract

Background

As surgical robots begin to occupy a larger place in operating rooms around the world, continued innovation is necessary to improve patient outcomes.

Methods

A comprehensive review of current surgical robotic user interfaces was performed to describe the modern surgical platforms, identify the benefits, and address the issues of feedback and limitations of visualization.

Results

Most robots currently used in surgery employ a master/slave relationship, with the surgeon seated at a work console, manipulating the master system and visualizing the operation on a video screen. Although enormous strides have been made to advance current technology to the point of clinical use, limitations still exist. The most notable are the lack of haptic feedback to the surgeon and the inability of the surgeon to be stationed at the operating table. The future of robotic surgery will see marked advances in the visualization technologies used in the operating room, as well as in robots’ ability to convey haptic feedback to the surgeon. This will give the surgeon unparalleled sensation and nearly eliminate inadvertent tissue contact and injury.

Conclusions

A novel user interface design will allow the surgeon to remain at the patient bedside and sterile throughout the procedure, use a head-mounted three-dimensional visualization system, and achieve the most intuitive master manipulation of the slave robot to date.


Enormous advances have been made in robotic surgery in the short time that it has been in clinical use. The operating field can be seen three-dimensionally and in high definition, and surgeons can sit comfortably at a console while performing major surgery. However, some major limitations must be overcome for the field of robotics to reach its full potential in terms of safety and efficacy. Most current surgeon/robot interfaces rely on a master/slave orientation: when a surgeon moves their wrist or arm with the da Vinci Surgical System®, the robot mimics the same action. The PHANTOM Omni also employs a master/slave relationship and uses an intuitive pencil-type interface to convey the surgeon’s motions to the robot. Other interfaces in development and production, such as the neuroArm, combine the pencil-type movement of the PHANTOM with an additional lever for grasping.

The purpose of this study was to identify the benefits of current surgical robotic platforms, specifically the surgeon’s user interface, maximum degrees of freedom, and force feedback, while assessing their limitations, such as restricted motion, limited vision, or compromised surgical quality.

As robotic surgical technology develops in an effort to improve patient outcomes, the focus will be on novel interfaces that address the shortcomings of current robotic systems, most notably the lack of haptic feedback, and that give surgeons the best opportunity to perform an operation successfully without hindrance from the technology they choose to employ. After examining the elements of a successful surgical control interface, we propose a novel user interface.

Methods

A comprehensive review of current surgical robotic user interfaces was performed to describe the modern surgical platforms, identify the benefits, and address the issues of feedback and limitations of visualization.

Current robotic platforms and interfaces

da Vinci Surgical System

The da Vinci Surgical System® (Intuitive Surgical, Inc., Sunnyvale, CA; Fig. 1) is the most widely used robotic surgical system in the world; more than 55,000 radical prostatectomies were performed with its assistance in 2007 [1]. It has achieved this by combining wristed instrumentation (EndoWrist; Intuitive Surgical, Inc.) that allows seven degrees of freedom (DOF), tremor filtration, a high-resolution three-dimensional visualization system, and a comfortable user console (Fig. 2), which together allow quick learning of even complex reconstructive tasks [2]. The da Vinci system uses a master/slave manipulator with finger-cuff telemanipulators for the surgeon’s index finger and thumb, re-creating the familiar grasping motion. This design is meant to be intuitive for first-time users, which allows rapid training of novices.
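Tremor filtration is generally understood as low-pass filtering of the surgeon’s hand motion before it reaches the instruments. The source does not describe how the da Vinci implements it, so the following is only a minimal sketch, assuming a first-order (exponential) low-pass filter applied independently to each motion axis. The class name `TremorFilter`, the 2 Hz cutoff, and the 1 kHz sample rate are hypothetical choices for illustration.

```python
import math
from typing import Optional


class TremorFilter:
    """First-order low-pass filter for one axis of master (hand) motion.

    Hypothetical sketch; not the da Vinci's actual (unpublished) filter.
    """

    def __init__(self, cutoff_hz: float, sample_hz: float) -> None:
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # filter time constant, seconds
        dt = 1.0 / sample_hz                    # sampling period, seconds
        self.alpha = dt / (rc + dt)             # smoothing factor in (0, 1)
        self.state: Optional[float] = None

    def update(self, x: float) -> float:
        """Feed one raw position sample and return the filtered sample."""
        if self.state is None:
            self.state = x                      # start from the first sample, no jump
        else:
            # Move a fraction alpha toward the new sample: slow, deliberate
            # motion passes through, fast oscillation (tremor) is attenuated.
            self.state += self.alpha * (x - self.state)
        return self.state


if __name__ == "__main__":
    f = TremorFilter(cutoff_hz=2.0, sample_hz=1000.0)   # hypothetical settings
    # Hypothetical input: a pure 10 Hz, 1 mm tremor with no intended motion.
    out = [f.update(math.sin(2 * math.pi * 10 * n / 1000)) for n in range(2000)]
    print(f"residual tremor amplitude: {max(abs(v) for v in out[1000:]):.2f} mm")
```

A first-order filter is the simplest choice; its trade-off is added lag on intended motion, which is one reason a real system might use a sharper, higher-order filter.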

The da Vinci Surgical System® has comfortable ergonomics, offering a padded head rest and arm bars, adjustable finger loops, and an interocular distance that can be adjusted to the individual’s needs, making it a valuable tool for surgeons [3].

Despite the system’s advantages, two main criticisms of the da Vinci exist. First, the surgeon is seated at a workstation away from the bedside, distanced from the other members of the operating team. Second, the system lacks haptic feedback. Shah et al. [4] showed that the lack of haptics does not seem to affect surgical margin rates in robotic-assisted laparoscopic prostatectomy (RALP). This is largely due to the system’s improved three-dimensional visualization, which allows better representation of the tissue being manipulated.

While the high-definition, three-dimensional display has greatly improved the surgeon’s visualization for tissue manipulation, the da Vinci still lacks force feedback. Force feedback in delicate procedures, especially those involving the bowel, heart, lungs, or other fragile tissues, is absolutely necessary to maximize surgical outcomes. The surgeon must know how much pressure he or she is applying to a tissue to avoid causing potentially life-threatening surgical complications. Without this feedback, the surgeon must base intraoperative decisions solely on visual cues encountered during the dissection [5].

The MASTER, a master–slave transluminal endoscopic robot

The MASTER is a novel robotic-enhanced endosurgical system designed for natural orifice transluminal endoscopic surgery (NOTES) and endoscopic submucosal dissection (ESD) [6]. Both approaches aim to allow surgery that leaves no visible scars. The MASTER consists of a master controller, a telesurgical workstation, and a slave manipulator that holds two end-effectors: a grasper and a monopolar electrocautery hook. The master controller, connected to the manipulator by electrical and wire cables, is attached to the operator’s wrist and fingers. The operator’s movements are detected and converted into control signals that drive the slave manipulator (Fig. 3) via a tendon-sheath power transmission mechanism, allowing nine DOF [7]. The platform is only slightly larger than the endoscope and can pass through an overtube with an inner diameter of 16.7 mm (Fig. 4). The MASTER system’s nine DOF allow the operator to reach, position, and orient the attached manipulators at any point within the workspace. By providing effective triangulation, the platform can facilitate bimanual coordination of surgical tasks, minimizing the need to maneuver the endoscope and allowing off-axis force to be exerted on the site of interest.

The maneuverability of the platform has two aspects: that of the endoscope and that of the robotic manipulators. Although the robotic manipulators are attached to the tip of the endoscope, the endoscope’s maneuverability is not affected. The endoscope can still maneuver in all planes, including vertical, horizontal, and lateral, and the shaft can perform 180° retroflexion. The robotic manipulators are controlled intuitively through a human/machine interface, the master console. Successful ESD and wedge hepatic resections have been performed in live porcine models. The MASTER exhibited good grasping and cutting efficiency throughout, and surgical maneuvers were achieved with ease and precision. There was no incidence of excessive bleeding or stomach wall perforation [8, 9].

MiroSurge robotic system

The MiroSurge robot (DLR Institute of Robotics and Mechatronics, Germany) provides the surgeon with seven DOF inside the patient. It consists of four arms, each weighing approximately 10 kg and designed to mimic a human arm. Two of these arms provide force feedback (Fig. 5), and a third is equipped with two cameras that allow a three-dimensional display [10, 11].

The MiroSurge interface closely mimics that of the da Vinci, with finger loops for the index finger and thumb and free movement of the end-effectors. The MiroSurge differs in that the images of the operation are displayed on a video monitor directly in front of the operating surgeon, rather than in a console display that the surgeon looks down into. To its advantage, the MiroSurge allows the surgical arm to be moved independently while the end-effector is held in place. This function allows a member of the team to move a joint or make more room at the operating table without disturbing the position of the instrument. A biopsy attachment allows a biopsy to be positioned and executed as dictated by a preoperative plan laid out by the surgeons [10, 12].
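The text does not say how the MiroSurge holds the end-effector still while the arm is repositioned. For an arm with more joints than its task requires (kinematic redundancy), the standard technique is null-space motion: moving the joints along directions that the Jacobian maps to zero tip velocity. The following is a minimal sketch under that assumption, using a planar three-link arm with hypothetical link lengths rather than the real MiroSurge kinematics. With three joints and a two-dimensional tip position, the null space is one-dimensional and is spanned by the cross product of the two Jacobian rows. All function names are illustrative.

```python
import math

LINKS = (0.30, 0.25, 0.10)  # hypothetical link lengths, metres


def tip_position(q):
    """Forward kinematics of a planar 3-link arm: joint angles -> (x, y)."""
    x = y = phi = 0.0
    for length, angle in zip(LINKS, q):
        phi += angle                     # absolute angle of this link
        x += length * math.cos(phi)
        y += length * math.sin(phi)
    return x, y


def jacobian(q):
    """2x3 Jacobian: rows are d(x)/dq and d(y)/dq."""
    phis, phi = [], 0.0
    for angle in q:
        phi += angle
        phis.append(phi)
    row_x = [-sum(LINKS[i] * math.sin(phis[i]) for i in range(j, 3)) for j in range(3)]
    row_y = [sum(LINKS[i] * math.cos(phis[i]) for i in range(j, 3)) for j in range(3)]
    return row_x, row_y


def null_direction(q):
    """Unit joint-space direction that leaves the tip still (to first order)."""
    a, b = jacobian(q)
    # The cross product is orthogonal to both Jacobian rows, so J @ n = 0.
    n = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
    norm = math.sqrt(sum(c * c for c in n))
    return tuple(c / norm for c in n)


def reposition_arm(q, step=0.001, steps=100):
    """Move the arm's joints along the null space in small steps."""
    q = list(q)
    for _ in range(steps):
        n = null_direction(q)
        q = [qi + step * ni for qi, ni in zip(q, n)]
    return q
```

Because each step follows the null space only to first order, the tip drifts slightly over many steps; a practical controller would add a small corrective term that drives the tip back to its held position.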

Although not commercially available at this time, the MiroSurge appears to be a promising design. It allows three-dimensional visualization and force feedback, giving surgeons the best opportunity to see the tissue being worked on and to feel the tension applied to that tissue [13]. Each joint contains torque and position sensors, and the system is controlled by programmable software.

RAVEN Surgical System/PHANTOM Omni

Despite all the benefits of the da Vinci Surgical System, it was not designed for harsh environments. The RAVEN robot, with reduced size and weight and a wireless connection, was designed for such settings and tested in field experiments. It has articulated aluminum arms and can use a variety of surgical tools: scalpels, graspers, scissors, and clip appliers (Fig. 6). Its joints provide six DOF. Like those of the da Vinci, the arms are driven by embedded steel cables, but the brushless DC motors that run them are mounted outside the arms, which allows the arms to be smaller and lighter.

Two surgeons tested the robot during three days of intense trials in a desert environment with high temperatures and dusty winds. The robot performed precise surgical tasks while operated remotely over a wireless connection. The robot and the surgeon were 100 m apart, with the wireless connection provided via an airborne communication link: an unmanned aerial vehicle flying in circles 200 m above the experimental area [14, 15].

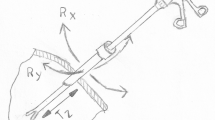

Surgeons operated the robot from a tent 100 m away via two SensAble™ PHANTOM® Omni (SensAble Technologies, Inc., Woburn, MA) haptic controllers with three-DOF force feedback (Fig. 7). Each is a pen-like controller that senses and translates its position and orientation within a mechanically constrained volume of 1,340 cm³. The controllers can apply a force of up to 3.3 N in any direction [16].

The actual position of the robot is reported by encoders mounted on the six brushless motors. The computer uses the encoder positions to determine whether the robot has reached a mechanical limit or whether robot parts have collided, and then uses the controllers’ force feedback capability to apply a corresponding force to the surgeon’s hands, alerting the surgeon to an obstruction [17].
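The limit alert described above can be sketched as a virtual spring: once the tool tip crosses a limit, the controller pushes back in proportion to how far it has gone, capped at the 3.3 N the PHANTOM Omni can deliver. This is a minimal sketch under stated assumptions: the tip position is taken as already computed from the encoders, the limits are a hypothetical axis-aligned box, the 200 N/m stiffness is a hypothetical tuning value, and collision checking between robot parts is not modeled. `AxisLimit` and `limit_force` are illustrative names, not part of the RAVEN software.

```python
import math
from dataclasses import dataclass

MAX_FORCE_N = 3.3  # peak force of the PHANTOM Omni quoted in the text


@dataclass
class AxisLimit:
    """Allowed tool-tip range along one axis, in metres (hypothetical values)."""
    lower: float
    upper: float


def limit_force(tip, limits, stiffness=200.0):
    """Return an (fx, fy, fz) force in newtons that pushes the surgeon's hand
    back when the tool tip moves past a workspace limit.

    `tip` is the tool-tip position, assumed already computed from the motor
    encoders; `stiffness` (N/m) of the virtual spring is a hypothetical value.
    """
    force = []
    for p, lim in zip(tip, limits):
        if p > lim.upper:
            force.append(-stiffness * (p - lim.upper))   # push back down
        elif p < lim.lower:
            force.append(stiffness * (lim.lower - p))    # push back up
        else:
            force.append(0.0)                            # inside the range
    # Scale the whole vector so the controller is never asked for more than
    # it can deliver, while preserving the direction of the alert.
    magnitude = math.sqrt(sum(f * f for f in force))
    if magnitude > MAX_FORCE_N:
        force = [f * MAX_FORCE_N / magnitude for f in force]
    return tuple(force)
```

Scaling the vector as a whole, rather than clipping each axis separately, keeps the force pointing straight back toward the allowed region.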

Two buttons on the pen end-effector control the open/close position of the robotic gripper: pressing the far button closes the gripper, and pressing the near button opens it. Because the gripping force is not measured, the surgeon must use visual feedback to control it; for tissue, this usually means judging both deformation and color changes [18]. The surgeons successfully accomplished the tasks despite the extreme surrounding environment, slight video pixelation, and connection delays [15].

NeuroArm

The neuroArm is a master/slave robot that combines features of the da Vinci [19] and the PHANTOM interfaces. This MRI-compatible, image-guided, computer-assisted robot is designed specifically for neurosurgery and uses a pencil-type master control (Fig. 8). The neuroArm has a lever for grasping objects, as well as an easily accessible button for instruments that are simply on/off, such as cautery. The console is what sets the neuroArm apart. The surgeon has access to numerous screens for viewing CT, MRI, and other images that he or she may want to reference during an operation (Fig. 9). A binocular viewer allows the surgeon to see the working space via the surgical microscope.

The neuroArm can easily exchange instruments, visualize the operative field, and access any necessary radiologic images. Through its custom-made titanium tool tips with three DOF, the neuroArm can provide haptic feedback [20]. Each tool incorporates two strain gauges that convey force feedback to the user interface [21].

By offering haptics, three-dimensional visualization, a fairly intuitive pencil-type interface, and the ability to view radiologic images during the operation, the neuroArm interface design incorporates some of the most instrumental aspects of surgery, maximizing the results obtained from the robotic interface.

UCSC Exoskeleton—prototype 3

The University of California Santa Cruz is developing an exoskeleton robot (Fig. 10) with an interesting design that has surgical possibilities [22, 23]. Designed as an assistive device for weakened or disabled patients, the exoskeleton has joints that mimic the wearer’s joints and help bear the wearer’s load [24]. The long-term goal is to control the robot at the neuromuscular level to assist motion.

Because the exoskeleton’s joints follow the operator’s joints, their movements could easily be transmitted to the corresponding joints of a slave robot. Such an interface would be highly intuitive: surgeons would merely have to perform the procedure as though they were doing it open. The surgeon’s full movements could be scaled down to allow more precise operations, while also giving surgeons more freedom of motion [assessment of surgical skills].
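Scaling the surgeon’s movements down can be sketched as multiplying each increment of hand motion by a factor below one before applying it to the slave. The class `MotionScaler` and the 0.25 factor used in the test are hypothetical; the source does not specify a scaling ratio.

```python
class MotionScaler:
    """Incremental master-to-slave motion scaling (hypothetical sketch).

    Each update takes the change in master (hand) position since the previous
    sample, multiplies it by `scale`, and adds it to the slave position, so
    large hand motions become small, precise instrument motions.
    """

    def __init__(self, scale, master_start, slave_start):
        self.scale = scale
        self.last_master = tuple(master_start)
        self.slave = tuple(slave_start)

    def update(self, master):
        """Feed the latest master position; return the new slave position."""
        delta = [m - prev for m, prev in zip(master, self.last_master)]
        self.slave = tuple(s + self.scale * d for s, d in zip(self.slave, delta))
        self.last_master = tuple(master)
        return self.slave
```

Working from increments rather than absolute positions keeps the slave from jumping if the scale factor is changed partway through a procedure.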

Because this robot was not intended for surgical use, critiquing its value as a surgical robot is difficult. However, aspects of this robot could be built upon and integrated into the field of robotic surgery. A future iteration would need to include some force feedback, a more ergonomic design, and an exoskeleton that fits closer to the operator’s body. Theoretically, the surgeon could then stand near the operating table, draped in the normal sterile fashion, with the robot master underneath the gown. The end goal would be a design that eliminates constraints on the surgeon, so that he or she is not bothered or hindered by the robotic interface and can focus all efforts on the operation at hand.

CAST, University of Nebraska Medical Center—Grasper Interface

The team at the University of Nebraska Medical Center’s Center for Advanced Surgical Technology (CAST) is developing a miniature in vivo robot capable of performing numerous operations within the abdominal cavity (Fig. 11). Once the robot is inside the body, the surgeon controls it using standard laparoscopic-type grasper handles attached to a pivoting arm system [25]. This system (Fig. 12) mimics the joints of the human arm and allows the motion of the surgeon’s arm and hand to be transmitted to the robot, which has six DOF [26].

The graspers are familiar to surgeons accustomed to laparoscopic surgery and take little time to get used to. However, this interface at the master end of the robot has presented numerous obstacles and largely motivated the research behind the current paper. The pivoting arm causes problems, especially when the surgeon performs complex maneuvers that involve multiple joints. The master arms are fixed to the surgeon’s chair and therefore cannot swivel out of the way when they are placed in certain positions. The surgeon’s own hand and wrist are often constrained by the placement of the robotic arms, restricting movement.

Data Gloves

The medical applications of virtual reality (VR) platforms are just starting to be realized as the field of robotic medicine has grown. Types of VR include immersive and augmented VR. In immersive VR, the user wears goggles or some other visual screen that conveys an image of the desired place; the user feels fully within the environment and able to interact with the surroundings. In augmented VR, a layer of computer images is placed over the real world to enhance or highlight certain features. Augmented VR can be seen as the integration of computer-generated images (possibly from preoperative MRI, etc.) with live video or other real-time images [27]. The da Vinci system and other surgical robots use some form of VR platform to give surgeons the feeling of operating directly on the patient, so that they feel fully immersed in the operation, as though the procedure were being done in an open manner [28, 29].

VR and three-dimensional devices allow a user to interact with a virtual world. Data gloves, such as the image-based data glove (Fig. 13) or the CyberGlove II (CyberGlove II Systems LLC, San Jose, CA; Fig. 14), can be used for VR simulation. The CyberGrasp can accompany the CyberGlove as an exoskeleton to provide force feedback in the virtual environment. This wireless system allows full range of motion of the hand without restricting movement, and the glove has flexible sensors that measure the position and movement of the operator’s fingers and wrist.

Preoperative mapping of “no-go” zones would allow a surgeon to designate, before the operation, areas that the robot must not enter. With the help of sensors on the glove, as well as augmented VR displaying the operative window, surgeons could perform the operation while minimizing unwanted consequences.
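Enforcing a no-go zone can be sketched as a forbidden-region virtual fixture: any commanded tip position that falls inside a preoperatively mapped region is pushed back to the region’s boundary before it is sent to the robot. This sketch assumes each zone is a sphere (real anatomy would more likely be represented by surfaces segmented from imaging), and `NoGoSphere` and `enforce_no_go` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class NoGoSphere:
    """A spherical region, mapped before surgery, that the tip must not enter."""
    center: tuple
    radius: float


def enforce_no_go(target, zones):
    """Return the commanded tip position, pushed out to the surface of any
    no-go sphere it would otherwise enter.

    Zones are handled one after another, so heavily overlapping zones are
    not resolved exactly; this is a simplification of the sketch.
    """
    p = list(target)
    for zone in zones:
        offset = [pi - ci for pi, ci in zip(p, zone.center)]
        dist = math.sqrt(sum(o * o for o in offset))
        if dist >= zone.radius:
            continue                              # already outside this zone
        if dist == 0.0:
            offset, dist = [1.0, 0.0, 0.0], 1.0   # arbitrary exit direction at the centre
        # Project radially onto the sphere surface.
        p = [ci + oi / dist * zone.radius for ci, oi in zip(zone.center, offset)]
    return tuple(p)
```

In a complete system the same check could also drive a warning on the glove or in the head-mounted display as the tip approaches a boundary.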

Discussion

As robotic surgery gains market share among surgical procedures in the United States and abroad, surgeon/robot interaction will become increasingly important. This is not a trivial issue, as evidenced by the number of different options available to both engineers and physicians [30]. Robots will become more sophisticated and more capable, and highly intuitive control systems will be needed for surgeons to take advantage of all their capabilities.

Innovations in robotic surgery strive to improve patient outcomes; they should address the shortcomings of current robotic systems, most notably the lack of haptic feedback, and provide the surgeon with the best opportunity to perform an operation successfully.

An interface that allows the surgeon to operate the robot from the patient bedside would use data gloves, worn directly over the surgeon’s hands, as the master for the surgical robot. The surgeon could cover the data gloves with standard sterile gowning and gloving while being able to stand at the patient bedside to communicate and interact with the rest of the surgical team.

This design would need to incorporate the seven DOF of the human arm and integrate haptics to be of maximal benefit to the patient. Haptics have been defined by Okamura as kinesthetic (involving forces and positions of the muscles and joints) or cutaneous (tactile, related to the skin), encompassing texture, vibration, touch, and temperature [31]. The gloves would have motion-detecting sensors, like those used in VR simulation and video game production, attached at all of the critical moving joints. The sensors, and in turn the robot, would then be able to identify each sensor’s position in space and relative to the other sensors, allowing the robot to move in real time in precisely the manner that the surgeon moves.
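Turning glove and arm sensor readings into robot commands requires each sensor’s position relative to its neighbors and the joint angles those positions imply. The following is a minimal sketch, assuming each sensor reports a 3-D position in a common frame; the sensor names and coordinates are hypothetical, and a real system would likely also need orientation data, since three positions alone cannot recover rotation about a limb segment’s own axis.

```python
import math


def relative_positions(sensors, reference):
    """Express every sensor position relative to one reference sensor."""
    ref = sensors[reference]
    return {name: tuple(c - r for c, r in zip(pos, ref))
            for name, pos in sensors.items()}


def joint_angle_deg(proximal, joint, distal):
    """Included angle, in degrees, at `joint` between the segments to its
    neighbouring sensors (180 means the limb is straight)."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    cos_theta = max(-1.0, min(1.0, dot / norms))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_theta))
```

The resulting angles could then be mapped onto the corresponding slave joints, with any motion scaling applied on top.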

To provide haptic feedback, strain sensors would be incorporated within the gears of the robot, providing a scaled amount of feedback to the surgeon [32]. This could be delivered as a vibratory sensation that becomes more intense as the surgeon applies greater pressure to the tissues. Advances in three-dimensional visualization have helped to reduce unintended surgical consequences; however, they are not a substitute for force feedback. This technology would give that advantage back to the operating surgeon. Despite the argument made by Shah et al., haptic feedback has been shown to reduce tissue injury and suture breakage while maintaining a reasonable operating time in the hands of an experienced surgeon [33–37].
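The proposed vibratory feedback amounts to a mapping from measured force to vibration intensity. A minimal sketch, assuming the strain sensors already report force in newtons; the deadband and saturation thresholds are hypothetical values, and `vibration_intensity` is an illustrative name.

```python
def vibration_intensity(force_n, deadband_n=0.2, saturation_n=5.0):
    """Map a measured tissue force (newtons) to a vibration command in [0, 1].

    Forces at or below `deadband_n` produce no vibration, so sensor noise is
    not felt; forces at or above `saturation_n` produce full-strength
    vibration; in between, intensity rises linearly. Both thresholds are
    hypothetical.
    """
    if force_n <= deadband_n:
        return 0.0
    if force_n >= saturation_n:
        return 1.0
    return (force_n - deadband_n) / (saturation_n - deadband_n)
```

A linear ramp is the simplest monotonic choice; a nonlinear curve could give finer resolution at the low forces typical of delicate tissue.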

The final design would include a head-mounted display (HMD) with three-dimensional capabilities. This would allow the surgeon to focus on the operation but still remove the display to look at something else in the operating room. Much like the standard loupes that surgeons wear, an HMD would allow zooming, changes in light contrast, and possibly thermal sensing to identify at-risk tissue.

As a result, a proper surgical control system must have a number of fundamental features to allow surgeons to perform complicated robotic surgeries. Haptic interfaces, virtual and augmented reality, natural control surfaces that allow surgeon movement, and the opportunity for sterility are fundamental requirements that every robotics engineering group should consider.

Finally, as more robotic systems become purpose-built, their interfaces will also have to be purpose-built. For instance, the controls for a robot performing orthopedic and milling maneuvers may require different specifications than those for a robot used for small, fine-motor movements, such as suturing or microscopic tissue manipulation.

References

Su L (2009) Role of robotics in modern urologic practice. Curr Opin Urol 19(1):63–64

Lee DI (2009) Robotic prostatectomy: what we have learned and where we are going. Yonsei Med J 50:177–181. doi:10.3349/ymj.2009.50.2.177

Palep JH (2009) Robotic assisted minimally invasive surgery. J Minim Access Surg 5(1):1–7

Shah A, Okotie OT, Zhao L, Pins MR, Bhalani V, Dalton DP (2008) Pathologic outcomes during the learning curve for robotic-assisted laparoscopic radical prostatectomy. Int Braz J Urol 34(2):159–163

Tan GY, Goel RK, Kaouk JH, Tewari AK (2009) Technological advances in robotic-assisted laparoscopic surgery. Urol Clin North Am 36(2):237–249

Phee SJ, Low SC, Huynh VA, Kencana AP, Sun ZL, Yang K (2009) Master and slave transluminal endoscopic robot (MASTER) for natural orifice transluminal endoscopic surgery (NOTES). Conf Proc IEEE Eng Med Biol Soc 2009:1192–1195

Sun Z, Ang RY, Lim EW, Wang Z, Ho KY, Phee SJ (2011) Enhancement of a master-slave robotic system for natural orifice transluminal endoscopic surgery. Ann Acad Med Singapore 40(5):223–228

Phee SJ, Ho KY, Lomanto D, Low SC, Huynh VA, Kencana AP, Yang K, Sun ZL, Chung SC (2010) Natural orifice transgastric endoscopic wedge hepatic resection in an experimental model using an intuitively controlled master and slave transluminal endoscopic robot (MASTER). Surg Endosc 24(9):2293–2298

Ho KY, Phee SJ, Shabbir A, Low SC, Huynh VA, Kencana AP, Yang K, Lomanto D, So BY, Wong YY, Chung SC (2010) Endoscopic submucosal dissection of gastric lesions by using a Master and Slave Transluminal Endoscopic Robot (MASTER). Gastrointest Endosc 72(3):593–599

Hagn U, Konietschke R, Tobergte A, Nickl M, Jörg S, Kübler B, Passig G, Gröger M, Fröhlich F, Seibold U, Le-Tien L, Albu-Schäffer A, Nothhelfer A, Hacker F, Grebenstein M, Hirzinger G (2010) DLR MiroSurge: a versatile system for research in endoscopic telesurgery. Int J Comput Assist Radiol Surg 5(2):183–193

Kuebler B, Seibold U, Hirzinger G (2005) Development of actuated and sensor integrated forceps for minimally invasive robotic surgery. Int J Med Robot 1(3):96–107. doi:10.1581/mrcas.2005.010305 and 10.1002/rcs.33

Konietschke R, Hagn U, Nickl M, Jörg S, Tobergte A, Passig G, Seibold U, Le Tien L, Kuebler B, Gröger M, Fröhlich F, Rink C, Albu-Schäffer A, Grebenstein M, Ortmaier T, Hirzinger G (2009) The DLR MiroSurge: a robotic system for surgery. Video contribution presented at ICRA

Suppa M, Kielhofer S, Langwald J, Hacker F, Strobl KH, Hirzinger G (2007) The 3D-modeller: a multi-purpose vision platform. IEEE Int Conf Robot Autom 2007:781–787. doi:10.1109/ROBOT.2007.363081

Harnett BM, Doarn CR, Rosen J, Hannaford B, Broderick TJ (2008) Evaluation of unmanned airborne vehicles and mobile robotic telesurgery in an extreme environment. Telemed J E Health 14(6):539–544

Rosen J, Hannaford B (2006) Doc at a distance. IEEE Spectrum 6:34

Bornhoft JM, Strabala KW, Wortman TD, Lehman AC, Oleynikov D, Farritor SM (2011) Stereoscopic visualization and haptic technology used to create a virtual environment for remote surgery. Biomed Sci Instrum 47:76–81

Zhang X, Nelson C, Oleynikov D (2011) Natural haptic interface for single-port surgical robot with gravity compensation. Int J Med Robot 7(S1). Epub 4 Nov 2011

Lum M, Friedman D, Rosen J et al (2009) The RAVEN–design and validation of a telesurgery system. Int J Rob Res 28(9):1183–1197

Lang MJ, Greer AD, Sutherland GR (2011) Intra-operative robotics: NeuroArm. Acta Neurochir Suppl 109:231–236

Samad MD, Hu Y, Sutherland GR (2010) Effect of force feedback from each DOF on the motion accuracy of a surgical tool in performing a robot-assisted tracing task. Conf Proc IEEE Eng Med Biol Soc 2010:2093–2096

Sutherland GR, Latour I, Greer AD (2008) Integrating an image-guided robot with intraoperative MRI. IEEE Eng Med Biol Mag 27(3):59–65

Perry JC, Rosen J, Burns S (2007) Upper-limb powered exoskeleton design. IEEE ASME Trans Mechatron 12(4):408–417

Perry JC, Powell J, Rosen J (2009) Isotropy of an upper limb exoskeleton and the kinematics and dynamics of the human arm. Appl Bionics Biomech 6(2):175–191

Cavallaro E, Rosen J, Perry JC, Burns S (2006) Myoprocessor for neural controlled powered exoskeleton arm. IEEE Trans Biomed Eng 53(11):2387–2396

Wortman TD, Strabala KW, Lehman AC, Farritor SM, Oleynikov D (2011) Laparoendoscopic single-site surgery using a multi-functional miniature in vivo robot. Int J Med Robot 7(1):17–21

Lehman A, Wood N, Farritor S, Goede M, Oleynikov D (2011) Dexterous miniature robot for advanced minimally invasive surgery. Surg Endosc 25(1):119–123

Teber D, Baumhauer M, Guven EO et al (2009) Robotic and imaging in urological surgery. Curr Opin Urol 19:108–113

Pamplona VF, Fernandes LAF, Prauchner J, Nedel LP, Oliveira MM (2008) The image-based data glove. Proceedings of X Symposium on Virtual Real (SVR 2008) 204–211

Sturman DJ, Zeltzer D (1994) A survey of glove-based input. IEEE Comput Graph Appl 14(1):30–39

Faraz A, Payandeh S (2000) Engineering approaches to mechanical and robotic design for minimally invasive surgery (MIS). Kluwer Academic Publishers, Boston, pp 1–11

Okamura AM (2009) Haptic feedback in robot-assisted minimally invasive surgery. Curr Opin Urol 19(1):102–107

Tholey G, Desai JP (2007) A general purpose 7 DOF haptic device: applications towards robot-assisted surgery. IEEE ASME Trans Mechatron 12(6):662–669

Mahvash M (2006) Novel approach for modeling separating forces between deformable bodies. IEEE Trans Inf Technol Biomed 10(3):618–626

Mahvash M, Hayward V (2004) High fidelity haptic synthesis of contact with deformable bodies. IEEE Comput Graph Appl 24(2):28–55

Mahvash M, Voo LM, Kim D et al (2008) Modeling the forces of cutting with scissors. IEEE Trans Biomed Eng 55(3):848–856

Weiss H, Ortmaier T, Maass H et al (2003) A virtual reality based haptic surgical training system. Comput Aided Surg 8(5):269–272

Wagner CR, Howe RD (2007) Force feedback benefit depends on experience in multiple degree of freedom robotic surgery. IEEE Trans Rob 23(6):1235–1240

Disclosures

Dmitry Oleynikov is a stockholder of Virtual Incision Corporation. R. Stephen Otte, Anton Simorov, and Courtni Kopietz have no conflicts of interest or financial ties to disclose.


Cite this article

Simorov, A., Otte, R.S., Kopietz, C.M. et al. Review of surgical robotics user interface: what is the best way to control robotic surgery?. Surg Endosc 26, 2117–2125 (2012). https://doi.org/10.1007/s00464-012-2182-y


DOI: https://doi.org/10.1007/s00464-012-2182-y