Abstract

We prove upper bounds on the graph diameters of polytopes in two settings. The first is a worst-case bound for polytopes defined by integer constraints in terms of the height of the integers and certain subdeterminants of the constraint matrix, which in some cases improves previously known results. The second is a smoothed analysis bound: given an appropriately normalized polytope, we add small Gaussian noise to each constraint. We consider a natural geometric measure on the vertices of the perturbed polytope (corresponding to the mean curvature measure of its polar) and show that with high probability there exists a “giant component” of vertices, with measure \(1-o(1)\) and polynomial diameter. Both bounds rely on spectral gaps—of a certain Schrödinger operator in the first case, and a certain continuous time Markov chain in the second—which arise from the log-concavity of the volume of a simple polytope in terms of its slack variables.


1 Introduction

The polynomial Hirsch conjecture asks whether the diameter of an arbitrary bounded polytope \(P=\{x\in \mathbb {R}^d:Ax\le b\}\) is at most a fixed polynomial in m and d (which, since \(m > d\), is at most a fixed polynomial in m). This conjecture is wide open, with the best known upper bounds being \((m-d)^{\log _2 d- \log _2\log d +O(1)}\) ([31], see also [18, 33]) and O(m) for fixed d ([4, 19]); the best known lower bound is \((1+\epsilon )m\) for some \(\epsilon >0\) when d is sufficiently large [25]. Given this situation, there has been interest in the following potentially easier questions:

- Q1. Assuming A, b have integer entries, bound the diameter of P in terms of their size.

- Q2. Assuming A, b are sampled randomly from some distribution, bound the diameter of P with high probability.

Progress on these questions [5, 6, 8, 9, 11, 12, 15, 29, 35] has relied mostly on techniques from polyhedral combinatorics, integral geometry, probability, and operations research (e.g., analysis of the simplex algorithm and its cousins).

On the other hand, the theory of mixed volumes of convex sets has developed largely separately over the past century, with several celebrated achievements including the Alexandrov–Fenchel inequality [1, 2] and more generally the Hodge-Riemann relations for certain algebras associated with simple polytopes [20, 21, 32]. When restricted to strongly isomorphic convex polytopes, the Alexandrov–Fenchel inequality is equivalent to a statement about the signature of a certain quadratic form. McMullen, in his two articles [20, 21], greatly generalized this statement, leading to a geometric interpretation of Stanley’s proof [30] of the g-conjecture, which concerns the face numbers of simple polytopes.

One consequence of this theory is that a certain Schrödinger operator (weighted adjacency matrix plus diagonal) associated with the graph of every bounded polytope has a spectral gap [17] (see Definition 2.1 and Theorem 2.2). We use this fact to make progress on Q1 and Q2. In the first setting, we show the following theorem, where \(\Vert \cdot \Vert _\infty \) denotes the maximum magnitude entry of a matrix.

Theorem 1.1

Suppose \(P=\{x\in \mathbb {R}^d:Ax\le b\}\) is a bounded polytope with integer coefficients \(A\in \mathbb {Z}^{m\times d},b\in \mathbb {Z}^m\) such that every \(d\times d\) minor of [A|b] has determinant bounded by \(\Delta \). Then P has diameter

Theorem 1.1 follows from a more geometric result (Theorem 3.2) stated in terms of the angles between the \(d-2\)-faces of the polar of P, which is proven in Sect. 3. It may be contrasted with the best previously known result of this kind due to [11], who achieved a bound of \(O(d^3\Delta _{d-1}^2)\), where \(\Delta _{d-1}\) is the largest \((d-1)\times (d-1)\) subdeterminant of A, in particular independent of b. The two bounds are incomparable in general, but as \(\Delta \le d\Vert b\Vert _\infty \Delta _{d-1}\) it is seen that (1) is nearly linear in \(\Delta _{d-1}\) whereas the result of [11] is quadratic, which yields an improvement for large \(\Delta _{d-1}\) (compared to \(\Vert A\Vert _\infty ,\Vert b\Vert _\infty ,\log m)\). However, our diameter bound is nonconstructive whereas [11] show how to efficiently find a path between any two vertices of P; we refer the reader to the introduction of that paper for a more thorough discussion of previous work in this vein (originally initiated by [5, 14]). At a high level, the reason we are able to save a factor of d in comparison with previous works is that they rely on combinatorial expansion arguments, whereas we use spectral expansion, which is amenable to a “square root” improvement using Chebyshev polynomials, first introduced in [28, Thm. 3.1].

Regarding Q2, the study of diameters of random polytopes began with the influential work of Borgwardt [7, 8], who considered A with i.i.d. standard Gaussian entries and \(b=1\). Borgwardt showed the following “for each” guarantee: for any fixed objective functions \(c,c'\in \mathbb {R}^d\), the combinatorial distance between the vertices \(x,x'\) of P maximizing \(\langle c,x\rangle ,\langle c',x'\rangle \) is at most \(O(d^{3/2}\sqrt{\log m})\) in expectation, provided \(m\rightarrow \infty \) sufficiently rapidly. This type of result was extended to the “smoothed unit LP” model by Spielman and Teng in the seminal work [29]; in this model one takes

$$\begin{aligned} P=\{x\in \mathbb {R}^d:\langle v_j,x\rangle \le 1,\ j=1,\ldots ,m\}, \end{aligned}$$(2)

where \(v_j\sim N(a_j,\sigma ^2)\) for some fixed vectors \(a_1,\ldots ,a_m\) normalized to have \(\Vert a_j\Vert \le 1\). The original \(\textrm{poly}(m,d,\sigma ^{-1})\) path length bound of [29] was improved and simplified in [12, 13, 35]; a key ingredient in each of these results was a “shadow vertex bound” analyzing the expected number of vertices of a two-dimensional projection of P. Note that all of these results provide “for each” guarantees: at best they bound the distance between a single pair of vertices, not between all pairs.

Our second contribution is to prove that for the smoothed unit LP model, most pairs of vertices in P are polynomially (in \(m,d,\sigma ^{-1}\)) close with high probability, where most is defined with respect to a certain locally defined measure on the vertices known as the mean curvature measure \(\chi _2\) in convex geometry (see [26, 27]; we recall the definition in Sect. 4). In the language of random graph theory, this means that the graph of P likely contains a “giant component” with respect to \(\chi _2\) which is of small diameter. Let \(\chi _2\) denote the mean curvature measure on the facets of \(P^\circ \), which corresponds naturally to a measure on the set of vertices of P, denoted \(\Omega \), when P is bounded and contains the origin.

Theorem 1.2

Assume P is a random polytope sampled from the smoothed LP model. Then with probability at least \(1-1/\textrm{poly}(m)\), for every \(\psi >0\) there is a subset \(G:=G(\psi )\subset \Omega \) with \(\chi _2(G)\ge (1-\psi )\chi _2(\Omega )\) such that the vertex diameter of G is at most

We prove Theorem 1.2 in Sect. 4.5, where we deduce it from a more refined theorem (Theorem 4.1, which includes explicit powers of m, d) for a certain class of well-rounded polytopes. The idea of the proof is to consider a certain continuous time Markov chain whose states are the vertices of P. This chain automatically has a large spectral gap by Theorem 2.2 and the main challenge is to bound its average transition rate. This is carried out in Sects. 4.2–4.4 and involves further use of the Alexandrov–Fenchel inequalities, tools from integral geometry, Gaussian anticoncentration, and an application of the shadow vertex bound of [12].

Remark 1.3

It was pointed out to us by an anonymous referee and by D. Dadush that there is a “folklore” result that the average distance between a random pair of vertices (chosen by optimizing two uniformly random objective functions) of P as above is polynomial in \(m,d,\sigma ^{-1}\); this is seen by a Fubini-type argument and the shadow vertex bounds of [12, 13, 29, 35]. Our paper considers a different measure on the vertices, and we are not aware of any relation between the two.

Remark 1.4

(Expansion of Polytopes) There has been a sustained interest in studying the expansion of graphs of combinatorial polytopes beginning with [22] which conjectured that all 0/1 polytopes have expanding graphs. The recent breakthrough [3] resolved this conjecture for the special case of matroid polytopes using techniques related to high dimensional expanders and the geometry of polynomials, which may be described as capturing “discrete log-concavity”. The present work, in contrast, uses “continuous log-concavity” (stemming from the Brunn-Minkowski inequality) to control the spectral gaps of certain matrices associated with the graphs of polytopes with favorable geometric properties.

We note that the Hirsch conjecture is already known to hold for 0/1 polytopes [23].

1.1 Preliminaries and Notation

We recall some basic terminology and facts regarding polytopes; the reader may consult [27, Chap. 4] for a more thorough introduction.

We denote the convex hull of a set of points by \(\text {conv}(\cdot )\) and its affine hull by \(\text {aff}(\cdot )\). Let \(P=\{x\in \mathbb {R}^d:Ax\le b\}\) with \(A\in \mathbb {R}^{m\times d}, b\in \mathbb {R}^m_{>0}\) be a bounded polytope containing the origin in its interior. Its polar is the polytope

$$\begin{aligned} P^\circ :=\text {conv}(a_1/b_1,\ldots ,a_m/b_m), \end{aligned}$$

where \(a_1^T,\ldots ,a_m^T\) are the rows of A.

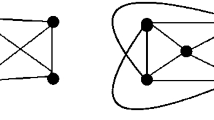

A polytope in \(\mathbb {R}^d\) is called simple if each of its vertices is contained in exactly d (codimension-1) facets, and simplicial if each codimension-1 facet contains exactly d vertices. Unless otherwise noted, “facet” refers to a codimension-1 facet. The polar of a simple polytope is simplicial and vice versa.

The 1-dimensional faces of a polytope are called edges, and are all line segments when it is bounded. The vertex diameter of a bounded polytope P is the diameter of the graph of its vertices and edges. Two facets of a polytope are adjacent if their intersection is a \((d-2)\)-face of the polytope. The facet diameter of a polytope K is the diameter of the graph with vertices given by its facets and edges given by the adjacency relation on facets. By duality, the vertex diameter of a simple polytope P is equal to the facet diameter of \(P^\circ \).

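We use \(\text {dist}(\cdot ,\cdot )\) to denote the Euclidean distance between two subsets of \(\mathbb {R}^d\), and

$$\begin{aligned} \textrm{hdist}(K,L):=\max \Big \{\sup _{x\in K}\text {dist}(x,L),\ \sup _{y\in L}\text {dist}(y,K)\Big \} \end{aligned}$$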

to denote the Hausdorff distance between two sets.

We use \(V(K[j],L[d-j])\) to denote the mixed volume of j copies of K and \(d-j\) copies of L for convex bodies \(K,L\subset \mathbb {R}^d\). The Alexandrov–Fenchel inequalities imply that these are log-concave, in the sense that for integers \(j_1,j_2,j=\beta j_1+(1-\beta )j_2\) in \(\{0,\ldots ,d\}\) with \(\beta \in [0,1]\), we have

$$\begin{aligned} V(K[j],L[d-j])\ge V(K[j_1],L[d-j_1])^{\beta }\,V(K[j_2],L[d-j_2])^{1-\beta }. \end{aligned}$$

The above inequality follows from the usual form of the Alexandrov–Fenchel inequalities in which \(j_1, j\) and \(j_2\) are consecutive positive integers and \(\beta = \frac{1}{2}.\) We use C to denote absolute constants whose value may change from line to line, unless specified otherwise.

2 Eigenvalues of the Hessian and Spectral Gaps

In this section, we recall that a certain matrix associated with every bounded polytope has exactly one positive eigenvalue.

Definition 2.1

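(Formal Hessian) For K a bounded polytope containing the origin in its interior with N facets labeled \(\{1,\ldots ,N\}\), let H(K) denote the \(N\times N\) matrix with entries

$$\begin{aligned} (H(K))_{ij}:={\begin{cases} F_{ij}\csc (\theta _{ij}) & i\ne j,\\ -\sum _{k}F_{ik}\cot (\theta _{ik}) & i=j, \end{cases}} \end{aligned}$$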

where \(F_{ij}\) is the intersection of facets i and j, (and by abuse of notation is also used to denote their \(d-2\)-dimensional volume) and \(\theta _{ij}\) is the angle between the vectors normal to those faces, facing away from the origin.

When K is simple, H(K) is the Hessian of the volume of \(K(c)=\{x\,|\, Mx\le c\}\) with respect to the slack vector \(c>0\) (see [27, Chap. 4]). Log-concavity of the volume implies that this Hessian has exactly one positive eigenvalue. Izmestiev [17] has shown via an approximation argument that this remains true for the formal Hessian of any polytope.

Theorem 2.2

(Theorem 2.4 of [17]) \(H(K)\) has exactly one positive eigenvalue for any bounded polytope \(K\).

We include a self-contained proof of Theorem 2.2 in the Appendix of the arXiv version of this paper [24] for completeness.

We will apply Theorem 2.2 to certain matrices derived from the formal Hessian and the following diagonal scaling, which plays an important role in the remainder of the paper.

Definition 2.3

Let \(K,F_{ij},\theta _{ij}\) be as in Definition 2.1. Then let D(K) denote the \(N\times N\) positive diagonal matrix with entries \((D(K))_{ii}=\sum _kF_{ik}\tan (\theta _{ik}/2)\). Note that \(\theta _{ik}\ne \pi \) whenever \(F_{ik}\ne 0\) since parallel facets of a convex polytope cannot intersect.

Lemma 2.4

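(Spectral Gaps from Log-Concavity) Let K be a polytope and take \(H:=H(K),D:=D(K)\). Let L be the graph Laplacian with entries:

$$\begin{aligned} L_{ij}:={\begin{cases} -F_{ij}\csc (\theta _{ij}) & i\ne j,\\ \sum _{k}F_{ik}\csc (\theta _{ik}) & i=j. \end{cases}} \end{aligned}$$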

Then

1. \(D^{-1/2}HD^{-1/2}\) has exactly one eigenvalue at 1 with the rest of the eigenvalues in \((-\infty ,0]\). The eigenvector corresponding to this eigenvalue is \(D^{1/2}\textbf{1}\).

2. \(-D^{-1}L\) has exactly one eigenvalue at zero, with the rest of the eigenvalues in \((-\infty ,-1]\). The left eigenvector corresponding to this eigenvalue is \(D\textbf{1}\).

Proof

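Observe that H is “nearly” a graph Laplacian in the sense that:

$$\begin{aligned} H=D-L, \end{aligned}$$

where we have used the identity \(\frac{1-\cos {\theta }}{\sin \theta }=\tan (\theta /2)\). By Sylvester’s inertia law, the signature of H matches that of

$$\begin{aligned} D^{-1/2}HD^{-1/2}=I-D^{-1/2}LD^{-1/2}, \end{aligned}$$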

which must therefore have exactly one positive eigenvalue by Theorem 2.2. However, \(L\succeq 0\) and \(L\textbf{1}=0\), so by Sylvester’s law \(-D^{-1/2}LD^{-1/2}\preceq 0\) with at least one eigenvalue equal to zero. Thus, \(D^{-1/2}HD^{-1/2}\) has exactly one eigenvalue equal to one, with eigenvector \(D^{1/2}\textbf{1}\) and the rest of the eigenvalues nonpositive, establishing the first claim. The second claim follows from (8) and the similarity of \(D^{-1}L\) and \(D^{-1/2}LD^{-1/2}\). \(\square \)
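Theorem 2.2 and Lemma 2.4(1) can be sanity-checked numerically on small examples. The sketch below is illustrative only and rests on assumptions that are not taken verbatim from the text: it takes the formal Hessian to have off-diagonal entries \(F_{ij}\csc \theta _{ij}\) and diagonal entries \(-\sum _kF_{ik}\cot \theta _{ik}\) (the standard formula for the Hessian of the volume in the slack variables), and for polygons it treats each shared vertex as a 0-dimensional face of volume 1. The helper names are hypothetical.

```python
import numpy as np

def hessian_and_scaling(F, theta):
    """Return (H, D) from symmetric arrays F (shared-face volumes, 0 if the
    facets are not adjacent) and theta (angles between outward normals).
    Assumed form: H_ij = F_ij csc(theta_ij), H_ii = -sum_k F_ik cot(theta_ik)."""
    F = np.asarray(F, dtype=float)
    theta = np.asarray(theta, dtype=float)
    adj = F > 0
    csc = np.zeros_like(F)
    cot = np.zeros_like(F)
    tan_half = np.zeros_like(F)
    csc[adj] = 1.0 / np.sin(theta[adj])
    cot[adj] = np.cos(theta[adj]) / np.sin(theta[adj])
    tan_half[adj] = np.tan(theta[adj] / 2.0)
    H = F * csc
    np.fill_diagonal(H, -(F * cot).sum(axis=1))
    D = (F * tan_half).sum(axis=1)  # diagonal of D(K) from Definition 2.3
    return H, D

def normalized_spectrum(F, theta):
    """Eigenvalues (ascending) of D^{-1/2} H D^{-1/2}."""
    H, D = hessian_and_scaling(F, theta)
    return np.linalg.eigvalsh(H / np.sqrt(np.outer(D, D)))

def cube_data():
    """Cube [-1,1]^3 with facets ordered +x, -x, +y, -y, +z, -z."""
    axis = np.repeat(np.arange(3), 2)
    adjacent = axis[:, None] != axis[None, :]
    return 2.0 * adjacent, (np.pi / 2) * adjacent  # shared edges have length 2

def regular_polygon_data(n):
    """Regular n-gon: consecutive edges meet at exterior angle 2*pi/n."""
    F = np.zeros((n, n))
    for i in range(n):
        F[i, (i + 1) % n] = F[(i + 1) % n, i] = 1.0
    return F, (2 * np.pi / n) * (F > 0)

if __name__ == "__main__":
    print(normalized_spectrum(*cube_data()))
    print(normalized_spectrum(*regular_polygon_data(7)))
```

In both examples the largest eigenvalue of \(D^{-1/2}HD^{-1/2}\) should equal 1 up to rounding and all others should be nonpositive, as Lemma 2.4(1) predicts.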

3 Diameter in Terms of Angles and Bit Length

In this section we use the spectral gap bound of Lemma 2.4(1) to give a bound on the diameter of a polytope specified by integer constraints. We begin by slightly generalizing the argument of [10, 28, 34], who used Chebyshev polynomials to control the diameter of regular (nonnegative weighted) graphs in terms of their spectra, to handle the matrix \(D^{-1/2}HD^{-1/2}\) by appropriately controlling its negative entries and top eigenvector.

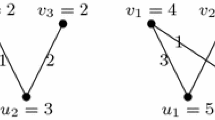

Lemma 3.1

(Diameter in terms of Spectrum) Let A be a weighted real symmetric adjacency matrix (possibly with self-loops and negative weights) for a graph G on N vertices. Suppose for some \(g>0\) there is exactly one eigenvalue of A at \(1+g\) with corresponding unit eigenvector v, the smallest absolute entry of which is \(v_{\min }\). Further suppose that the rest of the eigenvalues of A are in the interval \([-1,1]\). Then the diameter of G is at most

Proof

Note that if \(M\in \text {span}(I,A,\ldots ,A^{k})\) then \(e_i^TMe_j\ne 0\) implies that there is a path in G from i to j of length at most k. To this end, consider \(T_k(A)\) where \(T_k\) is the degree k Chebyshev Polynomial of the first kind. If we find that \(T_k(A)\ne 0\) entry-wise, then we can conclude the diameter of G is at most k. Let

be the spectral decomposition of A. Let \(|\cdot |\) denote the entry-wise absolute value. Then

since \(|T_k(x)|\le 1\) on \([-1,1]\). We would therefore have \(T_k(A)\ne 0\) entry-wise if N is smaller than the smallest absolute entry of \(vv^TT_k(1+g)\), which is lower bounded by

It suffices to pick

which is implied by taking

and using \(\log (1+\sqrt{2\,g})\ge \sqrt{2\,g}-g+2\sqrt{2}g^3/3\ge \sqrt{g}/2\) for \(g\in (0,1/2]\); if \(g\ge 1/2\) we may replace A by A/2g (which does not violate the hypotheses of the Lemma) and reach the desired conclusion.

\(\square \)
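The mechanism of the proof can be illustrated on a concrete graph. The following sketch is an example under stated assumptions, not the construction used later for polytopes: it takes the cycle graph on 12 vertices, rescales its normalized adjacency matrix by an affine map (a hypothetical choice made here so that the top eigenvalue becomes \(1+g\) and the others lie in \([-1,1]\)), finds the first k with \(T_k(1+g)v_{\min }^2>N\), and confirms that \(T_k\) of the rescaled matrix is entry-wise nonzero, so the true diameter is at most k.

```python
from collections import deque
import numpy as np

def bfs_diameter(adj):
    """Exact graph diameter by breadth-first search from every vertex."""
    n = len(adj)
    diameter = 0
    for source in range(n):
        dist = [-1] * n
        dist[source] = 0
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for w in np.flatnonzero(adj[u]):
                if dist[w] < 0:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        diameter = max(diameter, max(dist))
    return diameter

def chebyshev_of_matrix(B, k):
    """T_k(B) via the recurrence T_{j+1}(B) = 2 B T_j(B) - T_{j-1}(B)."""
    T_prev, T_cur = np.eye(len(B)), B.copy()
    if k == 0:
        return T_prev
    for _ in range(k - 1):
        T_prev, T_cur = T_cur, 2.0 * B @ T_cur - T_prev
    return T_cur

def chebyshev_certificate(adj):
    """For a connected regular non-complete graph, return (k, g, T_k(B))."""
    n = len(adj)
    W = adj / adj.sum(axis=1)[0]          # normalized adjacency, top eigenvalue 1
    lam = np.linalg.eigvalsh(W)           # ascending order
    lam_min, lam_2 = lam[0], lam[-2]
    # Affine rescaling: lam_min -> -1, lam_2 -> 1, and 1 -> 1 + g.
    B = (2.0 * W - (lam_2 + lam_min) * np.eye(n)) / (lam_2 - lam_min)
    g = 2.0 * (1.0 - lam_2) / (lam_2 - lam_min)
    v_min_sq = 1.0 / n                    # top eigenvector is constant
    k = 1
    while np.cosh(k * np.arccosh(1.0 + g)) * v_min_sq <= n:
        k += 1                            # T_k(x) = cosh(k arccosh x) for x >= 1
    return k, g, chebyshev_of_matrix(B, k)

def cycle_graph(n):
    adj = np.zeros((n, n))
    for i in range(n):
        adj[i, (i + 1) % n] = adj[(i + 1) % n, i] = 1.0
    return adj

if __name__ == "__main__":
    adj = cycle_graph(12)
    k, g, Tk = chebyshev_certificate(adj)
    print("certified bound k =", k, "true diameter =", bfs_diameter(adj))
```

Since B is a degree-one polynomial in the adjacency matrix, \(T_k(B)\) lies in the span of \(I,A,\ldots ,A^k\), so a nonzero entry certifies a path of length at most k. The certified k is generally larger than the true diameter.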

Theorem 3.2

Let \(P=\{x\in \mathbb {R}^d:Ax\le b\}\) be a bounded polytope containing the origin with \(m\ge d\), \(A\in \mathbb {Z}^{m\times d},b\in \mathbb {Z}^{m}\). Assume all angles between pairs of adjacent facets of \(P^o\) are contained in \([\theta _0, \pi -\theta _0]\) and let the magnitude of the largest \(d\times d\) subdeterminant of [A|b] be \(\Delta \). Then the vertex diameter of P is at most

Proof

Put \(D:=D(P^o)\) and \(H:=H(P^o)\). By Lemma 2.4, \(D^{-1/2}HD^{-1/2}\) is real symmetric with one eigenvalue at 1 and the rest at most 0. We can bound its smallest eigenvalue by using Lemma 3.3 and considering the similar matrix \(D^{-1}H\). We upper bound the absolute row sum of the ith row of \(D^{-1}H\) by

Taking the supremum of the above expression gives

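Therefore by Lemma 3.3, the smallest eigenvalue of \(D^{-1}H\), and consequently of \(D^{-1/2}HD^{-1/2}\), is at least \(-\csc ^2(\theta _0/2)\). Then

$$\begin{aligned} \frac{D^{-1/2}HD^{-1/2}+\csc ^2(\theta _0/2)I}{\csc ^2(\theta _0/2)} \end{aligned}$$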

has exactly one eigenvalue at \(\frac{1+\csc ^2(\theta _0/2)}{\csc ^2(\theta _0/2)}=1+\csc ^{-2}(\theta _0/2)\) with the rest contained in the interval [0, 1]. We can apply Lemma 3.1 with \(g=\csc ^{-2}(\theta _0/2)\) to obtain a diameter of at most:

The eigenvector v corresponding to eigenvalue \(1+\csc ^{-2}(\theta _0/2)\) is simply \(\textbf{1}^TD^{1/2}\) normalized, so

where we used that \(\theta _{ik}\in [\theta _0,\pi -\theta _0]\) implies \(\sin (\theta _0/2)\le \tan (\theta _{ik}/2)\le \frac{1}{\sin (\theta _0/2)}\) and \(\sin (x/2)\ge \sin (x)/2\). Finally use \(N\le \left( {\begin{array}{c}m\\ d\end{array}}\right) \le m^d/2\) as well as the estimates from Lemma 3.4 to see that

yielding the advertised bound. \(\square \)

Lemma 3.3

(Gershgorin’s circle theorem) The smallest (real) eigenvalue of a square matrix M is at least \(-\sup _i\sum _j|M_{ij}|\).
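As a quick illustration of Lemma 3.3, the sketch below compares the smallest eigenvalue of a symmetric matrix with the negated maximum absolute row sum. The random test matrix and the function name are assumptions made for the example only.

```python
import numpy as np

def gershgorin_lower_bound(M):
    """Negated largest absolute row sum: a lower bound on every real eigenvalue."""
    return -np.abs(M).sum(axis=1).max()

def smallest_eigenvalue(M):
    """Smallest eigenvalue of a real symmetric matrix."""
    return np.linalg.eigvalsh(M)[0]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    B = rng.normal(size=(6, 6))
    M = (B + B.T) / 2.0  # symmetrize so that all eigenvalues are real
    print(smallest_eigenvalue(M), ">=", gershgorin_lower_bound(M))
```

In the proof of Theorem 3.2 the lemma is applied to the non-symmetric matrix \(D^{-1}H\), which is similar to a symmetric one and so also has real eigenvalues.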

Lemma 3.4

(Worst Case Volumes and Angles) Let \(P^o=\text {conv}(a_1/b_1,\ldots ,a_m/b_m)\) be a polytope where each \(a_i/b_i\in \mathbb {R}^d\) is a vertex and \(a_i\in \mathbb {Z}^d,b_i\in \mathbb {Z}\). Then:

1. The smallest co-dimension 2 face of \(P^o\) has volume at least \(1/(d!\Vert b\Vert _\infty ^{2d})\), and the largest co-dimension 2 face has volume at most \((\sqrt{d}\Vert A\Vert _\infty )^d\).

2. If the largest \(d\times d\) minor of [A|b] is bounded in magnitude by \(\Delta \), then the angle between any two adjacent facets of \(P^o\) satisfies \(\csc (\theta )\le 2d\Delta \Vert A\Vert _\infty .\)

Proof

Every co-dimension 2 face can be written as the convex hull of some subset of size at least \(d-1\) of the vertices \(a_1/b_1,\ldots ,a_m/b_m\). Without loss of generality, say that \(F=\text {conv}(a_1/b_1,\ldots ,a_{d-1}/b_{d-1})\) is the smallest co-dimension 2 face. Then its volume is:

where M is the \(d\times (d-2)\) matrix whose ith column is \(a_i/b_i-a_{d-1}/b_{d-1}\), and we have used that the determinant of a nonsingular integer matrix is at least one. On the other hand, \(P^o\) is contained inside the \(\ell _{2}\) ball of radius \(d^{1/2}\Vert A\Vert _\infty \), and so each co-dimension 2 face of \(P^o\) is contained in a cross section of that ball, and consequently has volume at most \((\sqrt{d}\Vert A\Vert _\infty )^{d}\), establishing (1).

Regarding the angles, consider without loss of generality two adjacent facets of \(P^o\), with vertices numbered so that \(F=\text {conv}(a_1/b_1,\ldots ,a_d/b_d)\) and \(F'=\text {conv}(a_2/b_2,\ldots ,a_d/b_d,a_j/b_j)\), \(j>d\), and \(|b_j|\le |b_1|\). Observe that the angle \(\theta \) between the normals to these adjacent facets satisfies:

The numerator is at most the distance between \(a_j/b_j\) and any vertex of F, which is at most

by our choice of j. The denominator is given by

where M is the \((d+1)\times (d+1)\) matrix with columns \((\hat{a_1},\ldots ,\hat{a_d},\hat{a_j})\), which must be invertible since \(\text {conv}(a_1/b_1,\ldots ,a_d/b_d,a_j/b_j)\) is a full dimensional simplex. By the adjugate formula, the entries of \(M^{-1}\) are of magnitude at most \(\Delta \), so we have

Combining these bounds and cancelling the \(|b_j|\) yields

establishing (2). \(\square \)
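The volume computation in part (1) can be made concrete with the standard Gram determinant formula for the volume of a simplex, \(\sqrt{\det (M^TM)}/k!\) for a k-dimensional simplex with edge matrix M. The sketch below is a minimal illustration with hypothetical integer data \(a_i,b_i\) chosen for the example; it is not the proof's exact computation.

```python
import math
import numpy as np

def simplex_volume(vertices):
    """k-dimensional volume of conv(vertices) for k+1 affinely independent points."""
    V = np.asarray(vertices, dtype=float)
    k = len(V) - 1
    M = (V[:-1] - V[-1]).T               # columns are edge vectors from the last vertex
    gram = M.T @ M
    return math.sqrt(max(float(np.linalg.det(gram)), 0.0)) / math.factorial(k)

def codim2_volume_lower_bound(d, b_max):
    """Lower bound 1 / (d! * ||b||_inf^(2d)) from Lemma 3.4(1)."""
    return 1.0 / (math.factorial(d) * b_max ** (2 * d))

if __name__ == "__main__":
    # Hypothetical data in d = 3: a co-dimension 2 face of the polar is an edge.
    a = np.array([[1, 0, 2], [0, 1, 1]])
    b = np.array([3, 2])
    edge = a / b[:, None]                # vertices a_i / b_i
    print(simplex_volume(edge), codim2_volume_lower_bound(3, int(np.abs(b).max())))
```

For this data the edge length is far above the worst-case bound, which is expected since the bound only uses integrality of the scaled Gram determinant.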

Finally, we can prove the bound advertised in the introduction.

Proof of Theorem 1.1

Applying Theorem 3.2, Lemma 3.4(2), and \(\sin (x/2)\ge \sin (x)/2\), we find that the diameter of P is at most

as advertised. \(\square \)

4 Smoothed Analysis

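In this section we consider the “smoothed unit LP” model defined in (2). Suppose \(P_0\) is a fixed polytope specified as

$$\begin{aligned} P_0=\{x\in \mathbb {R}^d:\langle a_j,x\rangle \le 1,\ j=1,\ldots ,m\} \end{aligned}$$

for some vectors \(a_j\) with \(\Vert a_j\Vert \le 1\), and consider the random polytope

$$\begin{aligned} P=\{x\in \mathbb {R}^d:\langle v_j,x\rangle \le 1,\ j=1,\ldots ,m\}, \end{aligned}$$

where \(v_j=a_j+g_j\) for \(g_j\sim N(0,\sigma ^2 I_d)\) i.i.d. spherical Gaussians. Denote the polars of \(P_0\) and P by

$$\begin{aligned} K_0:=P_0^\circ =\text {conv}(a_1,\ldots ,a_m),\qquad K:=P^\circ =\text {conv}(v_1,\ldots ,v_m). \end{aligned}$$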

Note that K is simplicial with probability one, so each of its k-dimensional faces has exactly \(k+1\) vertices. We will use the notation \(F_S:=\text {conv}\{v_j:j\in S\}\) to denote faces of K and \(\mathcal {F}_k(K):=\left\{ S\in \left( {\begin{array}{c}[m]\\ k+1\end{array}}\right) : F_S \text { is a } k\text {-dimensional face of } K\right\} \) to denote the set of all k-dimensional faces of K. The k-dimensional volume of a face \(F_S, S\in \mathcal {F}_k(K)\) will be denoted by \(|F_S|\) or \(\text {Vol}_k(F_S)\). We will often abbreviate \(F_{S\cap T}\) as \(F_{ST}\) for adjacent S, T. For two \(S,T\in \left( {\begin{array}{c}[m]\\ d\end{array}}\right) \), let \(\theta _{ST}\in (0,\pi )\) denote the angle between the unit normals \(u_S, u_T\) to \(F_S, F_T\), respectively; note that almost surely \(\theta _{ST}\ne 0,\pi \) for every \(S,T\in \left( {\begin{array}{c}[m]\\ d\end{array}}\right) \).

We will pay special attention to the set of \((d-1)\)-faces of K, which we denote as

$$\begin{aligned} \Omega :=\mathcal {F}_{d-1}(K). \end{aligned}$$

Define the measures \(\chi _2, \pi , \delta :\Omega \rightarrow \mathbb {R}_{\ge 0}\) by

It will be convenient to make two further technical assumptions on \(K_0\) and \(\sigma \) for the proofs of our results; in Sect. 4.5 we will show that any instance of the smoothed unit LP model may be reduced to one satisfying both assumptions with parameter

incurring only a \(\textrm{poly}(m)\) loss in the diameter. Let \(K_0^{(j)}=\text {conv}(a_i:i\ne j)\) be the polytope obtained from \(K_0\) by deleting vertex \(a_j\).

- (R): Roundedness of Subpolytopes: There is an \(r\in (0,1)\) such that for every \(j\le m\) there is a vector \(v_j \in \mathbb {R}^d\) such that:

$$\begin{aligned} rB_2^d + v_j \subset K_0^{(j)}. \end{aligned}$$

- (S): Smallness of \(\sigma \):

$$\begin{aligned} \alpha := 6\sigma \sqrt{d\log m} < r/d^2.\end{aligned}$$(13)

The main result of this section is the following “almost-diameter” bound with respect to the measure \(\pi \).

Theorem 4.1

Assume \(\mathbf{(S)}, \mathbf{(R)}\). Then with probability at least \(1- C/m^2\), for every \(\phi >0\) there is a subset \(G:=G(\phi )\subset \Omega \) with \(\pi (G)\ge (1-\phi )\pi (\Omega )\) such that the facet diameter of G is at most \( \tilde{O}(m^3d^8/\sigma ^2r\phi ).\)

Remark 4.2

The probability in Theorem 4.1 may be upgraded to \(1-m^{-c}\) for any c at the cost of an additional \(m^c\) factor in the diameter bound, by applying Markov’s inequality in the proof of Lemma 4.5 with a different threshold.

The proof of Theorem 4.1 relies on three properties of the (random) continuous time Markov chain with state space \(\Omega \) and infinitesimal generator

$$\begin{aligned} Q:=-D^{-1}L, \end{aligned}$$

where L is as in (6). The corresponding Markov semigroup

has stationary distribution proportional to \(D\textbf{1}=\pi (\cdot )\) by Lemma 2.4(2); call the normalized stationary distribution \(\overline{\pi }(\cdot ):=\pi (\cdot )/\pi (\Omega )\).
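For intuition, the chain can be built explicitly in dimension \(d=2\), where K is a random polygon, its facets are edges, and adjacent facets meet at a vertex. The sketch below is illustrative and makes several assumptions not stated in the text: the generator is taken to be \(-D^{-1}L\) with Laplacian weights \(\csc \theta _{ST}\) and \(\pi (S)=\sum _T\tan (\theta _{ST}/2)\), each shared vertex is given volume 1, and the points \(a_j\) are placed on the unit circle. It checks that \(\pi Q=0\) and that the nonzero eigenvalues of \(-Q\) are at least 1.

```python
import numpy as np

def convex_hull_ccw(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(map(tuple, points))

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]

    return np.array(half(pts) + half(reversed(pts)))

def laplacian_and_scaling(hull):
    """Return (L, D) for the edges of a strictly convex polygon given in CCW order."""
    n = len(hull)
    edges = np.roll(hull, -1, axis=0) - hull      # edge i runs from vertex i to i+1
    ang = np.arctan2(edges[:, 1], edges[:, 0])
    # theta[i]: turning angle from edge i to edge i+1, equal to the angle
    # between their outward normals.
    theta = np.mod(np.roll(ang, -1) - ang, 2 * np.pi)
    L = np.zeros((n, n))
    D = np.zeros(n)
    for i in range(n):
        j = (i + 1) % n
        w = 1.0 / np.sin(theta[i])
        L[i, j] -= w
        L[j, i] -= w
        L[i, i] += w
        L[j, j] += w
        D[i] += np.tan(theta[i] / 2)
        D[j] += np.tan(theta[i] / 2)
    return L, D

def sample_smoothed_polygon(m, sigma, rng):
    """Polar K = conv(a_j + g_j) with a_j on the unit circle (an assumed choice)."""
    angles = rng.uniform(0, 2 * np.pi, size=m)
    a = np.column_stack([np.cos(angles), np.sin(angles)])
    return convex_hull_ccw(a + sigma * rng.normal(size=(m, 2)))

if __name__ == "__main__":
    hull = sample_smoothed_polygon(12, 0.1, np.random.default_rng(0))
    L, D = laplacian_and_scaling(hull)
    Q = -L / D[:, None]
    print("max |pi Q| =", np.abs(D @ Q).max())
    print("spectrum of -Q:", np.linalg.eigvalsh(L / np.sqrt(np.outer(D, D))))
```

The spectrum is computed from the symmetric matrix \(D^{-1/2}LD^{-1/2}\), which is similar to \(-Q\), to avoid complex rounding artifacts.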

The first property is that the stationary distribution \(\overline{\pi }\) is (in a quite mild sense) non-degenerate, with high probability. Apart from being essential in our proofs, this relates the measure \(\pi \) to well-studied measures in convex geometry such as the surface measure and mean curvature measure \(\chi _2(\cdot )\), clarifying the meaning of Theorem 4.1. The proof of Lemma 4.3 appears in Sect. 4.1.

Lemma 4.3

(Non-degeneracy of \(\overline{\pi }\)) Assume \(\mathbf{(S)},\mathbf{(R)}\). With probability at least \(1-1/m^2\):

1. \(\min _{S\in \Omega } \overline{\pi }(S)\ge \overline{\pi }_{min}:= C\frac{m^{-2d^2}r^2}{d^3}.\)

2. \(c\textrm{Vol}_{d-1}(\partial K)\le \pi (\Omega )\le O(d^3r^{-2})\textrm{Vol}_{d-1}(\partial K).\)

3. For every \(S\in \Omega \), \(\chi _2(S)/2\le \pi (S)\le O(r^{-1})\chi _2(S).\)

The second property is that Q (almost surely) has a spectral gap of at least one, by Lemma 2.4(2). This implies that the chain (14) mixes rapidly to \(\overline{\pi }\) (in the sense of continuous time) from any well-behaved starting distribution. In particular let us say that a probability measure p on \(\Omega \) is an M-warm start if

Let \(\ell _2(\overline{\pi })\) denote the inner product space defined on \(\mathbb {R}^\Omega \), where the inner product is given by \(\langle f, g\rangle _{\ell _2(\overline{\pi })}:= \sum _{S\in \Omega } \overline{\pi }(S) f(S) g(S)\), and let \(\ell _1(\overline{\pi })\) be the corresponding \(\ell _1\) space. Let \(\Pi \) be the \(\Omega \times \Omega \) diagonal matrix whose \(S^{th}\) diagonal entry is \(\overline{\pi }(S)\). We define the density of p with respect to \(\overline{\pi }\) to be the vector with entries \(\frac{p(S)}{\overline{\pi }(S)}\). We omit the proof of the following standard fact.

Lemma 4.4

(Warm Start Mixing) If p is M-warm, then for every \(\tau >0\) and time \(t=\Omega (\log (M/\tau ))\), one has

The third and final property is a bound on the rate at which the continuous chain makes discrete transitions between states. Let \(J_{\textsf{avg}}\) denote the average number of state transitions made by the continuous time chain in unit time, from stationarity, and note that

as the diagonal entries of the generator Q are equal to \(-\delta (S)/\pi (S)\). The most technical part of the proof is the following probabilistic bound.
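The average jump rate is easy to evaluate on a toy example. The sketch below is a hedged illustration: it assumes \(\pi (S)=\sum _T|F_{ST}|\tan (\theta _{ST}/2)\) and \(\delta (S)=\sum _T|F_{ST}|\csc \theta _{ST}\) (the diagonal of L), and uses the standard fact that a stationary continuous time chain leaves state S at rate \(-Q_{SS}\). The example polygon, a right triangle with unit legs, and all function names are hypothetical.

```python
import numpy as np

def chain_from_angles(pairs, theta, volumes, n):
    """Assemble L, pi = D 1 and delta from the list of adjacent facet pairs."""
    L = np.zeros((n, n))
    pi = np.zeros(n)
    delta = np.zeros(n)
    for (s, t), th, vol in zip(pairs, theta, volumes):
        w = vol / np.sin(th)
        L[s, t] -= w
        L[t, s] -= w
        L[s, s] += w
        L[t, t] += w
        delta[s] += w
        delta[t] += w
        pi[s] += vol * np.tan(th / 2)
        pi[t] += vol * np.tan(th / 2)
    return L, pi, delta

def average_jump_rate(Q, pi):
    """Expected number of transitions per unit time, started from stationarity."""
    pi_bar = pi / pi.sum()
    return float(np.sum(pi_bar * -np.diag(Q)))

if __name__ == "__main__":
    # Right triangle with unit legs: edges (leg, hypotenuse, leg).
    pairs = [(0, 1), (1, 2), (2, 0)]
    theta = [3 * np.pi / 4, 3 * np.pi / 4, np.pi / 2]
    L, pi, delta = chain_from_angles(pairs, theta, [1.0, 1.0, 1.0], 3)
    Q = -L / pi[:, None]
    print(average_jump_rate(Q, pi), delta.sum() / pi.sum())
```

Under these assumptions the two printed quantities agree, since \(\sum _S\overline{\pi }(S)\delta (S)/\pi (S)=\delta (\Omega )/\pi (\Omega )\).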

Lemma 4.5

(Polynomial Jump Rate) Assume \(\mathbf{(S)},\mathbf{(R)}\). With probability at least \(1-1/m^2\), the continuous time Markov chain defined by (14) satisfies:

The proof of this lemma involves showing that the facets of K are well-shaped and have non-degenerate angles between them in a certain average sense, and is carried out in Sects. 4.2, 4.3, and 4.4.

Combining these ingredients, we can prove Theorem 4.1.

Proof of Theorem 4.1

Let T be a fixed positive time to be chosen later. Consider the continuous time chain (14), and for \(F\in \Omega \) let the random variable \(J_F^T\) denote the number of transitions in [0, T] when the chain is started at F. With probability \(1-1/m^2\) we have

by Lemma 4.5 so there is a facet \(F_0\in \Omega \) satisfying

By Lemma 4.3(1), the distribution \(\delta _{F_0}\) concentrated on \(F_0\) is \(\overline{\pi }_{min}^{-1}\)-warm with probability \(1-1/m^2\). Invoking Lemma 4.4 with starting distribution \(\delta _{F_0}\) and parameters

we have

Combining this with (15), we obtain a distribution on discrete paths \(\gamma \) in \(\Omega \) (with respect to the adjacency relation \(\sim \)) such that each path has source \(F_0\),

and the distribution of \(\textrm{target}(\gamma )\) is within total variation distance \(\overline{\pi }_{min}/2\) of \(\overline{\pi }\). Letting

we immediately have that the diameter of G is at most

and by Markov’s inequality \(\overline{\pi }(G)\ge 1-\phi \), as desired. \(\square \)

Before proceeding with the proofs of Lemmas 4.3 and 4.5, we collect the probabilistic notation used throughout the sequel. We will often truncate on the following two high probability events. Fix

and define:

Note that whenever \(\sigma >m^{-d}\) (which we may assume without loss of generality, as otherwise the diameter is trivially at most \(1/\sigma \)):

since the density of the component of \(v_j\) orthogonal to \(\text {aff}(F_S)\) is bounded by \(1/\sigma \) and there are at most \(m^d\) facets. We also have

by standard Gaussian concentration and a union bound.

We will repeatedly use that on \(\mathcal {C}\), we have the Hausdorff distance bounds

for \(\alpha \) as in (13), since if \(x=\sum _{j\le m}c_j (a_j+g_j)\in K\) for some convex coefficients \(c_j\) then \(x_0=\sum _{j\le m} c_j a_j\in K_0\) and \(\Vert x-x_0\Vert \le \alpha \).

For an index \(j\in [m]\) let \(\hat{\textbf{g}}_j:=(g_1,\ldots ,g_{j-1},g_{j+1},\ldots g_m)\) and let \(K^{(j)}=\text {conv}(v_i:i\ne j)\). Note that \(K^{(j)}\) is a deterministic function of \(\hat{\textbf{g}}_j\). Define the indicator random variables

for subsets \(S\in \left( {\begin{array}{c}[m]\\ d\end{array}}\right) \). It will be convenient to fix in advance a total order < on \(\left( {\begin{array}{c}[m]\\ d\end{array}}\right) \).

We will occasionally refer to

as the k-perimeter of K.

4.1 Nondegeneracy of \(\overline{\pi }\)

We will repeatedly use the following fact relating Hausdorff distance and containment of convex bodies.

Lemma 4.6

(Containment of Small Perturbations) If \(\textrm{hdist}(K,K_0)\le \alpha < \frac{r}{2}\) for two convex bodies \(K,K_0\) and \(rB_2^d\subset K_0\), then

Proof

The second containment is immediate from

The condition \(\textrm{hdist}(K,K_0)\le \alpha \) also implies \(K_0\subset K+\alpha B_2^d\). To turn this into a multiplicative containment, we claim that \((r/2)B_2^d\subset K\). If not, there is a point \(z\in \partial (r/2)B_2^d{\setminus } K\). Choose a halfspace H supported at z containing K. Let y be a point in \(\partial (rB_2^d)\) at distance at least r/2 from H and note that \(y\in K_0\). But now \(\text {dist}(y,K)\ge \text {dist}(y,H)\ge r/2>\alpha \), violating that \(K_0\subset K+\alpha B_2^d\). Thus, we conclude that \(K_0\subset (1+2\alpha /r)K\), establishing the first containment. \(\square \)

Proof of Lemma 4.3

Condition on \(\mathcal {C}\). By \(\mathbf{(S)}, \mathbf{(R)}\), (19), and Lemma 4.6, we have

and also \(K\subset (1+\alpha )B_2^d\). Consequently, the angle between any two adjacent facets \(F_S,F_T\) of K must satisfy

which implies

for all \(\theta _{ST}\). Thus, for each facet \(S\in \Omega \):

establishing Lemma 4.3(3).

Equation (20) further implies:

By e.g. [27, Sect. 4.2], we have the quermassintegral formulas:

By the Alexandrov–Fenchel inequality with \(\beta =1/2\):

By Alexandrov–Fenchel with \(\beta =1/(d-1)\), we also have

The last step follows from the fact that \(K\subset (1+\alpha )B_2^d\) so \(|\partial K|^{\frac{1}{d-1}}=O(1)\). Combining these inequalities with (21),(22), we conclude that:

establishing Lemma 4.3(2).

The event \(\mathcal {B}\) implies that for every \(S\in \Omega \):

where in the last step we used \(|F_S|=O(1)\). Conditional on \(\mathcal {B}\), Lemma 4.7 implies that \(|F_S|\ge \frac{\epsilon ^{d-1}}{d}\) for every \(S\in \Omega \), so we conclude that

and consequently by (25)

yielding Lemma 4.3(1), as desired. \(\square \)

Lemma 4.7

(Inradius of a Simplex) If \(L=\text {conv}(v_1,\ldots ,v_{t+1})\) is a t-dimensional simplex such that each vertex of L is at distance s from the affine span of the remaining vertices, then L contains a ball of radius \(s/({t+1})\).

Proof

We see that the distance of the centroid \(\frac{\sum _{i=1}^{t+1} v_i}{t+1}\) of the simplex from any face is at least \(\frac{s}{t+1}\), proving the lemma. \(\square \)
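The centroid argument can be checked numerically: the distance from the centroid to the affine span of each facet is exactly \(1/(t+1)\) times the height of the opposite vertex, because signed distance to a hyperplane is an affine function. The sketch below verifies this on a random simplex; the random data and helper names are assumptions for illustration.

```python
import numpy as np

def dist_to_affine_span(p, pts):
    """Euclidean distance from point p to the affine span of the rows of pts."""
    base = pts[0]
    A = (pts[1:] - base).T               # columns span the direction space
    r = p - base
    coef, *_ = np.linalg.lstsq(A, r, rcond=None)
    return float(np.linalg.norm(r - A @ coef))

def heights_and_centroid_distances(V):
    """For a simplex with vertex rows V, return vertex heights and the
    distances from the centroid to the span of each opposite facet."""
    centroid = V.mean(axis=0)
    heights, centroid_dist = [], []
    for i in range(len(V)):
        others = np.delete(V, i, axis=0)
        heights.append(dist_to_affine_span(V[i], others))
        centroid_dist.append(dist_to_affine_span(centroid, others))
    return np.array(heights), np.array(centroid_dist)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    V = rng.normal(size=(5, 4))          # a 4-dimensional simplex (t = 4)
    heights, centroid_dist = heights_and_centroid_distances(V)
    print(centroid_dist, heights / len(V))
```

In particular the ball of radius \(\min _i s_i/(t+1)\) around the centroid stays inside the simplex, which is the content of the lemma.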

4.2 Average Jump Rate Bound

In this section we establish the following Lemma, which immediately implies Lemma 4.5 by \(\mathbb {P}[\mathcal {B}\mathcal {C}]\ge 1-2/m^3\) and Markov’s inequality applied to the expectation below (absorbing the \(\log (m)\) factor into the \(\tilde{O}\)).

Lemma 4.8

(Main Estimate) Assume \(\mathbf{(S)},\mathbf{(R)}\) in the above setting. Then

Proof

implying the desired conclusion. \(\square \)

Proof of Lemma 4.9

Lemma 4.9

(Angles Large On Average) For every \(S,T\in \left( {\begin{array}{c}[m]\\ d\end{array}}\right) \) with \(S{\setminus } T=\{j\}\):

Proof

By trigonometry,

conditional on \(\mathcal {C}\), since K has diameter at most \(2+2\alpha \le 3\). The distance in the denominator can be rewritten as

where \(h_j=\langle g_j,m_T\rangle \) and \(x_T=\text {dist}(0,\text {aff}(F_T)-a_j)\le 4\) for \(m_T\) the unit normal to \(\text {aff}(F_T)\). Moreover,

with probability one conditional on \(\hat{\textbf{g}}_j\) since \(S\cap T\in \mathcal {F}_{d-2}(K)\) implies \(S\cap T\in \mathcal {F}_{d-2}(K^{(j)})\) as \(j\notin S\cap T\). Combining these facts, the left hand side of (27) is at most

Notice that \(h_j\) has density on \(\mathbb {R}\) bounded by

and \(\epsilon \le |h_j-x_T|\le |h_j|+x_T\le 4+\alpha <5\) conditioned on \(\mathcal {B},\mathcal {C}\), so the last conditional expectation is at most

completing the proof. \(\square \)

The most technical part of the proof is the following \((d-2)\)-perimeter estimate, whose proof is deferred to Sect. 4.3. The conceptual meaning of this estimate is that on average, the \((d-2)\)-dimensional surface area of a random facet of \(K^{(j)}\) is well-bounded by its \((d-1)\)-dimensional volume.

Lemma 4.10

(Codimension 2 Perimeter versus Surface Area) Assume \(\mathbf{(R)},\mathbf{(S)}\). For every \(j\in [m]\):

4.3 Proof of Lemma 4.10

The key step in the proof is to show that for any well-rounded polytope \(L_0\), there is a distribution on two-dimensional planes W such that the \((d-2)\)-perimeter of every nearby polytope L is accurately reflected in the average number of vertices of \(W\cap L\). Since this number of vertices is small in expectation by [12], we can then conclude that the codimension 2 perimeter is small.

In this section and the next only, the variable \(\epsilon \) will refer to a quantity tending to zero (as opposed to the definition (16)).

Lemma 4.11

(Quadrature by Planes) Let \(r_1 B_2^d\subset L_0\subset r_2 B_2^d\). Then there is a probability distribution on two dimensional planes W in \(\mathbb {R}^d\) such that for sufficiently small \(\epsilon >0\) the following holds uniformly over every polytope L with at most \(m^d\) facets satisfying

every \((d-2)\)-dimensional disk \(S_\epsilon \) of radius \(\epsilon \) contained in the interior of a \((d-2)\)-dimensional face of L satisfies

Moreover, for every \((d-2)\)-dimensional affine subspace \(H\subset \mathbb {R}^d\), \(\mathbb {P}[|W\cap H| > 1] = 0\).

The proof of Lemma 4.11 is deferred to Sect. 4.4.

We rely on the following result of Dadush and Huiberts [12, Thm. 1.13] (they prove something a little stronger, but we use a simplified bound).

Theorem 4.12

(Shadow Vertex Bound) Suppose W is a fixed two dimensional plane and \(Q=\text {conv}\{v_1,\ldots ,v_m\}\) where \(v_i\sim N(a_i,\sigma ^2 I)\) with \(\Vert a_i\Vert \le 1\). Then

Combining these two ingredients, we can prove Lemma 4.10.

Proof of Lemma 4.10

Fix \(j\le m\) and recall that \(rB_2^d\subset K_0^{(j)}\subset B_2^d\) by \(\mathbf{(R)}\). Conditioning on \(\mathcal {C}\), we also have \(\textrm{hdist}(K^{(j)},K_0^{(j)})\le \alpha \). Thus we may invoke Lemma 4.11 with \(L_0=K_0^{(j)}\), \(L=K^{(j)}\), \(r_1=r\), \(r_2=1\), and \(\eta =\alpha =\Omega (\sigma \sqrt{d\log m})\) to obtain a probability measure \(\nu \) on two dimensional planes \(W\subset \mathbb {R}^d\) with the advertised properties; note that crucially W depends only on \(K_0\) and is independent of K. Let \(I_\epsilon \) be a maximal collection of disjoint \((d-2)\)-dimensional disks \(S_\epsilon \) of radius \(\epsilon \), with each \(S_\epsilon \) contained in some \((d-2)\)-face of L. Notice that

The integrand in the first expression above is at most

since each set in \(\left( {\begin{array}{c}[m]\\ d-2\end{array}}\right) \) appears as the intersection of at most \(m^2\) adjacent pairs S, T. Therefore by Theorem 4.12 the first expression above is bounded above by \(O(m^2d^{5/2}\log ^2(m)/\sigma ^2)\). Rearranging yields

implying the desired conclusion.

4.4 Proof of Lemma 4.11

We provide an explicit construction for the distribution of W. Let \(\tilde{L}=L_0+2\eta B_2^d\) and note that its boundary \(\partial \tilde{L}\) is smooth; let \(\psi \) be the \((d-1)\)-dimensional surface measure on \(\partial \tilde{L}\). This equals both the \((d-1)\)-dimensional Hausdorff measure and the Minkowski content of \(\partial \tilde{L}\). Then let \(W=V+a\) where a is a point sampled according to \(\psi \), and V is sampled by taking the span of two Gaussian vectors (or any radially symmetric random vectors). In order to compute \(\mathbb {P}((V+a)\cap S_\epsilon \ne \emptyset )\), it will help to first reduce it to the related probability \(\mathbb {P}(W'\cap S_\epsilon \ne \emptyset )\) for \(W'=V+a'\), where \(a'\) is sampled uniformly from the boundary of the unit ball which shares a center with \(S_\epsilon \). In particular, let x be the center of \(S_\epsilon \) and denote \(B_x=B_2^d+x\). Let \(\psi '\) be the \((d-1)\)-dimensional Hausdorff measure on \(\partial B_x\). Then we will reduce to the case of \(\mathbb {P}((V+a')\cap S_\epsilon \ne \emptyset )\) for \(a'\) sampled according to \(\psi '\). For any z, define the radial projection \(\Pi _z\) by

Note that \(\Pi _x\) is a bijection between \(\partial \tilde{L}\) and \(\partial B_x\) since every ray originating from x intersects \(\partial \tilde{L}\) in exactly one point because x is in the interior of \(\tilde{L}\), which is convex.
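The random ingredients of this construction can be sketched in a few lines. The code below is a minimal illustration under stated assumptions, not the construction itself: it samples the subspace V as the span of two Gaussian vectors and a point \(a'\) uniformly on \(\partial B_x\), but it does not sample a from the surface measure \(\psi \) on \(\partial \tilde{L}\), which depends on \(L_0\). The function names are hypothetical, and the formula used in `radial_projection`, namely \(\Pi _z(y)=z+(y-z)/\Vert y-z\Vert \), is an assumed form chosen to be consistent with \(\Pi _x\) mapping \(\partial \tilde{L}\) onto \(\partial B_x\).

```python
import math
import random


def normalize(v):
    """Scale v to unit Euclidean length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def random_two_plane(d, rng):
    """Orthonormal basis (u, w) of the span of two standard Gaussian vectors."""
    g1 = [rng.gauss(0.0, 1.0) for _ in range(d)]
    g2 = [rng.gauss(0.0, 1.0) for _ in range(d)]
    u = normalize(g1)
    c = sum(a * b for a, b in zip(g2, u))
    w = normalize([a - c * b for a, b in zip(g2, u)])
    return u, w


def uniform_on_sphere(center, rng):
    """Uniform point on the unit sphere around center (a normalized Gaussian)."""
    g = normalize([rng.gauss(0.0, 1.0) for _ in center])
    return [c + x for c, x in zip(center, g)]


def radial_projection(z, y):
    """Assumed form of Pi_z: move y along the ray from z onto the unit sphere around z."""
    direction = normalize([yi - zi for yi, zi in zip(y, z)])
    return [zi + di for zi, di in zip(z, direction)]


rng = random.Random(1)
d = 5                      # ambient dimension (arbitrary for the demo)
x = [0.0] * d              # center of the disk S_eps
u, w = random_two_plane(d, rng)
a_prime = uniform_on_sphere(x, rng)
# The plane W' = V + a' is the set {a' + s*u + t*w : s, t real}.
```

Normalizing a Gaussian vector gives a uniform direction because the Gaussian density is rotation invariant; the same property makes the span of two Gaussian vectors a uniformly random two-dimensional subspace.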

Claim 4.13

The push-forward of \(\psi \) by \(\Pi _x\) is absolutely continuous with respect to \(\psi '\) with Radon-Nikodym derivative

where \(\phi \) is the angle in \([0,\pi ]\) between the tangent plane to \(\partial \tilde{L}\) at a and the line segment \(\overline{xa}\).

Proof

An explicit Jacobian calculation given the definition of \(\Pi _x\) and smoothness of \(\partial \tilde{L}\) gives the result. \(\square \)

Lemma 4.14

Let \(z\not \in \text {aff}(S_\epsilon )\) be a point such that \(\Pi _z\) is injective on \(S_\epsilon \). Let V be a random two-dimensional subspace. Then

where \(\mu \) is the Hausdorff measure of \(\Pi _z(\text {aff}(S))\) and \(A_{d-2}=\mu (\Pi _z(\text {aff}(S)))\) (half the surface area of \(S^{d-2}\)).

Proof

Since \(\text {aff}(S_\epsilon )\) misses z, we have that \(\text {aff}(\{z\}\cup S_\epsilon )\) is \(d-1\) dimensional. On the other hand, \(\Pi _z\) is smooth and injective on \(\text {aff}(S_\epsilon )\) so \(\Pi _z(S_\epsilon )\) itself is \(d-2\) dimensional. Condition on \((V+z)\not \subset \text {aff}(\{z\}\cup S) \), which occurs with probability 1. Then \((V+z)\cap \text {aff}(\{z\}\cup S) \) is a line through z. By symmetry, the intersection of that line with \(B_2^d+z\) will be a uniformly random antipodal pair. Exactly one point from each pair will fall in \(\Pi _z(\text {aff}(S))\). Thus, the event we care about is the event that \(y\in \Pi _z(S)\) where y is sampled uniformly from \(\mu \). \(\square \)

The following Lemma takes a and \(a'\) to be fixed, and depends only on the randomness of V.

Lemma 4.15

Let a be a point not in \(\text {aff}(S_\epsilon )\) and \(a'=\Pi _x(a)\). Let \(\theta \) be the angle between \(S_\epsilon \) and the ray emanating from a through x. Then, for a uniformly random 2-plane V,

and

where the convergence is uniform in \(a,a'\). In particular, the ratio of the above two quantities is \(\Vert x-a\Vert ^{d-2}\).

Proof

We apply Lemma 4.14 twice, both times with \(S_\epsilon \) playing the role of S. The first time we take a to play the role of z, and the second time \(a'\). This gives

where \(\mu _a,\mu _{a'}\) are the Hausdorff measures on \(\Pi _a(\text {aff}(S_\epsilon )),\Pi _{a'}(\text {aff}(S_\epsilon ))\) respectively. Let \(\mu '\) be the surface measure on \(\text {aff}(S_\epsilon )\). Then the Radon-Nikodym derivatives of \(\mu '\) and the pull-backs of \(\mu _a\) and \(\mu _{a'}\) are

where \(\theta ^a_y,\theta ^{a'}_y\) are the angles between \(S_\epsilon \) and the rays from \(a,a'\) to y respectively. This allows us to compute

The same is true for \(a'\) in place of a. Note that \(\theta ^{a'}_x=\theta ^a_x=\theta \), and that \(\Vert x-a'\Vert =1\). That gives the desired result. \(\square \)

Lemma 4.16

(Reduction to \(\partial B_x\)) Let W be as above and let \(W'\) be a uniformly random two dimensional plane through a uniformly random point \(a'\) chosen from \(\partial B_x\). Then for sufficiently small \(\epsilon >0\) (depending only on \(L_0\)):

Proof

Note that \(a,a'\) miss \(\text {aff}(S_\epsilon )\) with probability 1, so we implicitly condition on that event in the following.

where in the final inequality we have used \(\Vert x-a\Vert \le 2(r_2+\eta )\) and \(\sin \phi \ge \frac{r_1}{4r_2}\) because \(\tilde{L}\supset L\supset (r_1-\eta )B_2^d\supset (r_1/2)B_2^d\) and \(\tilde{L}\subset (r_2+\eta )B_2^d\subset 2r_2 B_2^d\). \(\square \)

Lemma 4.17

(Intersection Probability for \(\partial B_x\))

for some constant \(C_d=\Theta (1)\) depending on d.

Proof

Using iterated expectation, we can write

where the outer expectation is over the randomness of \(a'\) and the inner probability is over V. The inner probability is given by Lemma 4.15 as

The only dependence on \(a'\) is in \(\cos (\theta _x^{a'})\). However, by symmetry of the distribution of \(a'\), \(\theta _x^{a'}\) might as well measure the angle between a uniform random vector selected from \(\partial B_x\) and any fixed line. Thus

for some constant \(C_d=\Theta (1)\) depending on d. \(\square \)

We can now complete the proof of Lemma 4.11. Combining Lemmas 4.16 and 4.17, we have for sufficiently small \(\epsilon >0\):

since \(\text {Vol}_{d-2}(\partial B_x)/A_{d-2}=2\pi /d\), as desired.

4.5 Removing Assumptions (S),(R)

In this section we explain how any instance of the smoothed unit LP model may be reduced to one for which \(\mathbf{(S)},\mathbf{(R)}\) hold with parameter (12), incurring only a polynomial loss in m.

Proof of Theorem 1.2

The idea is to add the noise vector \(g_j\) as the sum of two independent Gaussians \(g_{j, 1} \sim N(0, \sigma _1^2)\) and \(g_{j, 2} \sim N(0,\sigma _2^2)\) with \(\sigma _1\) guaranteeing roundedness and \(\sigma _2\) supplying the necessary anticoncentration and concentration for the main part of the proof. Given \(\sigma <1/d\), set

and \(\sigma _1^2+\sigma _2^2=\sigma ^2\), and let \(K_1\) be equal to \(K_0\) perturbed by the \(g_{j,1}\) only. Applying Lemma 4.18 to each \(K_0^{(j)}\) and taking a union bound, we have

with probability \(1-O(m^{-2})\). Since \(\sigma <1/d\), another union bound reveals that

with probability \(1-O(m^{-2})\); let \(K_2=K_1/2\). Now \(K_2\) is an instance of the smoothed unit LP model, \((K_2,\sigma _2)\) satisfy \(\mathbf{(R)}\) with \(r=\Omega (\sigma _2 m^3)=\Omega (\sigma /m^5)\), and

so \((K_2,\sigma _2)\) also satisfy \(\mathbf{(S)}\), establishing (12) with the role of \((K_0,\sigma )\) now played by \((K_2,\sigma _2)\).
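The two-stage perturbation at the start of this proof can be illustrated as follows. This is a sketch under assumptions: the value of `sigma2` below is a hypothetical placeholder (the proof fixes a specific choice), and the function names are not from the paper. The point is only that independent Gaussians with variances \(\sigma _1^2\) and \(\sigma _2^2\) sum to a Gaussian with variance \(\sigma _1^2+\sigma _2^2=\sigma ^2\), so applying them in sequence reproduces the original noise model.

```python
import math
import random


def split_sigma(sigma, sigma2):
    """Return sigma1 with sigma1^2 + sigma2^2 = sigma^2 (needs 0 < sigma2 < sigma)."""
    if not 0.0 < sigma2 < sigma:
        raise ValueError("need 0 < sigma2 < sigma")
    return math.sqrt(sigma * sigma - sigma2 * sigma2)


def two_stage_noise(d, sigma1, sigma2, rng):
    """Sample g_{j,1} and g_{j,2} independently; their sum is the full noise g_j."""
    g1 = [rng.gauss(0.0, sigma1) for _ in range(d)]
    g2 = [rng.gauss(0.0, sigma2) for _ in range(d)]
    return g1, g2


rng = random.Random(2)
d, sigma = 10, 0.05        # sigma < 1/d, as assumed in the proof
sigma2 = sigma / 100.0     # hypothetical value, for illustration only
sigma1 = split_sigma(sigma, sigma2)
g1, g2 = two_stage_noise(d, sigma1, sigma2, rng)
g = [a + b for a, b in zip(g1, g2)]  # distributed as N(0, sigma^2 I_d)
```

In the proof, the first stage (`g1`) is used only to obtain roundedness via Lemma 4.18, while the second stage (`g2`) supplies the randomness for the main argument.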

Invoking Theorem 4.1, we conclude that with probability \(1-1/m^2\), for every \(\phi \in (0,1)\) there is a subset \(G\subset \Omega \) with \(\pi (G)\ge (1-\phi )\pi (\Omega )\) and facet diameter

Moreover, by Lemma 4.3(3), we have

so we conclude that \(\chi _2(G)\ge (1-\psi )\chi _2(\Omega )\) for \(\psi = O(m^5\phi /\sigma )\). Rewriting the diameter bound in terms of \(\psi \) yields the desired conclusion. The probability may be upgraded to \(1-1/\textrm{poly}(m)\) by Remark 4.2.

Lemma 4.18

(Roundedness of Smoothed Polytopes) Suppose we have \(m \ge d+1\) points \(a_1, \ldots , a_m \in \mathbb {R}^d\), and these are perturbed to \(v_1, \ldots , v_m\) by adding independent \(g_j \sim N(0, \sigma _1^2 I_d)\) to each respective \(a_j\). Then, with probability at least \(1 - O(m^{-3})\), the convex hull K of \(v_1, \ldots , v_m\) contains a ball of radius \(r_{in} \ge \Omega (\sigma _1 m^{-5}).\)

Proof

Without loss of generality, taking the first \(d+1\) points \(a_i\), we may assume that \(m = d+1\). Then K is the convex hull of \(d + 1\) points \(v_1, \ldots , v_{d+1}\). The probability that the affine span of these points equals \(\mathbb {R}^d\) is 1. Let \(r_{in}\) be the inradius of K; by Lemma 4.7, we have

Let us now fix an i and obtain a probabilistic lower bound on \(\frac{\text {dist}(v_i, \text {aff}(F_i))}{d+1}.\) Reorder the points (if necessary) so that \(i = d + 1\). It now follows that given the affine span A of the points \(v_1, \ldots , v_d\) and given \(a_{d+1}\), the distribution of \(\text {dist}(v_{d+1}, A)\) is the same as the distribution of \(|\tilde{g} + \text {dist}(a_{d+1}, A)|\), where \(\tilde{g} \sim N(0, \sigma _1^2)\) has the distribution of a one dimensional Gaussian with variance \(\sigma _1^2\). However, the probability that \(|\tilde{g} + \text {dist}(a_{d+1}, A)|\) is less than \(\sigma _1 m^{-4}\) is at most \(O\left( m^{-4}\right) \). Therefore, by the union bound,

It follows that

as desired. \(\square \)
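The anticoncentration step in this proof can be made concrete. The sketch below is an illustration under assumptions, with hypothetical function names and arbitrary numerical values for m, d and \(\sigma _1\): it compares the exact probability \(\mathbb {P}[|\tilde{g}+c|<t]\) with the bound obtained by multiplying the interval length 2t by the maximal Gaussian density \(1/(\sigma _1\sqrt{2\pi })\), and then carries out the union bound over the \(d+1\le m\) vertices together with the inradius bound from Lemma 4.7.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_small(c, sigma, t):
    """Exact P[|g + c| < t] for g ~ N(0, sigma^2)."""
    return normal_cdf((t - c) / sigma) - normal_cdf((-t - c) / sigma)


def density_bound(sigma, t):
    """Interval length 2t times the maximal density 1 / (sigma * sqrt(2*pi))."""
    return 2.0 * t / (sigma * math.sqrt(2.0 * math.pi))


m, d, sigma1 = 20, 5, 0.01            # illustrative values only
t = sigma1 * m ** -4                  # threshold used in the proof
per_vertex = density_bound(sigma1, t) # equals sqrt(2/pi) * m^-4 for every shift c
union = (d + 1) * per_vertex          # union bound over the d + 1 vertices
inradius_floor = t / (d + 1)          # Lemma 4.7 with s = t

for c in (0.0, 0.001, 1.0):
    print(c, prob_small(c, sigma1, t), per_vertex)
```

Since \(d+1\le m\), the quantity `union` is \(O(m^{-3})\) and `inradius_floor` is at least \(\sigma _1 m^{-5}\), which matches the probability and radius in the statement of Lemma 4.18.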

References

[1] Alexandrov, A.D.: Zur Theorie der gemischten Volumina von konvexen Körpern II. Mat. Sbornik N.S. 2, 1205–1238 (1937)

[2] Alexandrov, A.D.: Zur Theorie der gemischten Volumina von konvexen Körpern IV. Mat. Sbornik N.S. 3, 227–251 (1938)

[3] Anari, N., Liu, K., Gharan, S.O., Vinzant, C.: Log-concave polynomials II: high-dimensional walks and an FPRAS for counting bases of a matroid. In: STOC’19—Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, pp. 1–12. ACM, New York (2019)

[4] Barnette, D.: An upper bound for the diameter of a polytope. Discret. Math. 10, 9–13 (1974)

[5] Bonifas, N., Di Summa, M., Eisenbrand, F., Hähnle, N., Niemeier, M.: On sub-determinants and the diameter of polyhedra. Discrete Comput. Geom. 52(1), 102–115 (2014)

[6] Borgwardt, K., Huhn, P.: A lower bound on the average number of pivot-steps for solving linear programs valid for all variants of the simplex-algorithm. Math. Methods OR 49, 175–210 (1999)

[7] Borgwardt, K.-H.: Untersuchungen zur Asymptotik der mittleren Schrittzahl von Simplexverfahren in der linearen Optimierung. In: 2nd Symposium on Operations Research (Rheinisch-Westfälische Tech. Hochsch. Aachen, Aachen, 1977), Teil 1, Operations Res. Verfahren, XXVIII, pp. 332–345. Hain, Königstein/Ts (1978)

[8] Borgwardt, K.-H.: The Simplex Method: A Probabilistic Analysis. Algorithms and Combinatorics: Study and Research Texts, vol. 1. Springer, Berlin (1987)

[9] Brunsch, T., Röglin, H.: Finding short paths on polytopes by the shadow vertex algorithm. In: Automata, Languages, and Programming. Part I. Lecture Notes in Computer Science, vol. 7965, pp. 279–290. Springer, Heidelberg (2013)

[10] Chung, F.R.K.: Diameters and eigenvalues. J. Am. Math. Soc. 2(2), 187–196 (1989)

[11] Dadush, D., Hähnle, N.: On the shadow simplex method for curved polyhedra. Discrete Comput. Geom. 56(4), 882–909 (2016)

[12] Dadush, D., Huiberts, S.: A friendly smoothed analysis of the simplex method. SIAM J. Comput. (2020). https://doi.org/10.1137/18M119720

[13] Deshpande, A., Spielman, D.A.: Improved smoothed analysis of the shadow vertex simplex method. In: 46th Annual IEEE Symposium on Foundations of Computer Science (FOCS’05), pp. 349–356 (2005)

[14] Dyer, M., Frieze, A.: Random walks, totally unimodular matrices, and a randomised dual simplex algorithm. Math. Program. 64(1, Ser. A), 1–16 (1994)

[15] Eisenbrand, F., Vempala, S.: Geometric random edge. Math. Program. 164(1–2, Ser. A), 325–339 (2017)

[16] Grimmett, G.R., Stirzaker, D.R.: Probability and Random Processes, 4th edn. Oxford University Press, Oxford (2020)

[17] Izmestiev, I.: The Colin de Verdière number and graphs of polytopes. Isr. J. Math. 178, 427–444 (2010)

[18] Kalai, G., Kleitman, D.J.: A quasi-polynomial bound for the diameter of graphs of polyhedra. Bull. Am. Math. Soc. (N.S.) 26(2), 315–316 (1992)

[19] Larman, D.G.: Paths of polytopes. Proc. Lond. Math. Soc. 3(20), 161–178 (1970)

[20] McMullen, P.: On simple polytopes. Invent. Math. 113, 419–444 (1993)

[21] McMullen, P.: Weights on polytopes. Discrete Comput. Geom. 15, 363–388 (1996)

[22] Mihail, M.: On the expansion of combinatorial polytopes. In: Mathematical Foundations of Computer Science 1992 (Prague, 1992). Lecture Notes in Computer Science, vol. 629, pp. 37–49. Springer, Berlin (1992)

[23] Naddef, D.: The Hirsch conjecture is true for \((0,1)\)-polytopes. Math. Program. 45(1, Ser. B), 109–110 (1989)

[24] Narayanan, H., Shah, R., Srivastava, N.: A spectral approach to polytope diameter. arXiv preprint (2021). arXiv:2101.12198

[25] Santos, F.: A counterexample to the Hirsch conjecture. Ann. Math. 176(1), 383–412 (2012)

[26] Schneider, R.: Polytopes and Brunn–Minkowski theory. In: Polytopes: Abstract, Convex and Computational (Scarborough, ON, 1993). NATO Advanced Study Institute, Nonstandard Analysis and Its Applications, vol. 440, pp. 273–299. Kluwer, Dordrecht (1994)

[27] Schneider, R.: Convex Bodies: The Brunn–Minkowski Theory. Encyclopedia of Mathematics and Its Applications, vol. 151, expanded edn. Cambridge University Press, Cambridge (2014)

[28] Sokal, A.D., Thomas, L.E.: Absence of mass gap for a class of stochastic contour models. J. Stat. Phys. 51(5), 907–947 (1988)

[29] Spielman, D.A., Teng, S.-H.: Smoothed analysis of algorithms: why the simplex algorithm usually takes polynomial time. J. ACM 51(3), 385–463 (2004)

[30] Stanley, R.P.: The numbers of faces of a simplicial convex polytope. Adv. Math. 35, 236–238 (1980)

[31] Sukegawa, N.: An asymptotically improved upper bound on the diameter of polyhedra. Discrete Comput. Geom. 62(3), 690–699 (2019)

[32] Timorin, V.A.: An analogue of the Hodge–Riemann relations for simple convex polyhedra. Uspekhi Mat. Nauk 54(2(326)), 113–162 (1999)

[33] Todd, M.J.: An improved Kalai–Kleitman bound for the diameter of a polyhedron. SIAM J. Discret. Math. 28(4), 1944–1947 (2014)

[34] Van Dam, E.R., Haemers, W.H.: Eigenvalues and the diameter of graphs. Linear Multilinear Algebra 39(1–2), 33–44 (1995)

[35] Vershynin, R.: Beyond Hirsch conjecture: walks on random polytopes and smoothed complexity of the simplex method. SIAM J. Comput. 39(2), 646–678 (2009)

Acknowledgements

We thank Daniel Dadush, Bo’az Klartag, and Ramon van Handel for helpful comments and suggestions on an earlier version of this manuscript. We thank Ramon van Handel for pointing out the important reference [17]. We thank the IUSSTF virtual center on “Polynomials as an Algorithmic Paradigm” for supporting this collaboration.

Additional information

Editor in Charge: Kenneth Clarkson

There is no data associated with this manuscript. Supported by a Swarna Jayanti fellowship

About this article

Cite this article

Narayanan, H., Shah, R. & Srivastava, N. A Spectral Approach to Polytope Diameter. Discrete Comput Geom (2024). https://doi.org/10.1007/s00454-024-00636-y

DOI: https://doi.org/10.1007/s00454-024-00636-y