Abstract

In this paper, we consider the non-negative submodular function minimization problem with covering type linear constraints. Assume that there exist m linear constraints, and we denote by \(\varDelta _i\) the number of non-zero coefficients in the ith constraints. Furthermore, we assume that \(\varDelta _1 \ge \varDelta _2 \ge \cdots \ge \varDelta _m\). For this problem, Koufogiannakis and Young proposed a polynomial-time \(\varDelta _1\)-approximation algorithm. In this paper, we propose a new polynomial-time primal-dual approximation algorithm based on the approximation algorithm of Takazawa and Mizuno for the covering integer program with \(\{0,1\}\)-variables and the approximation algorithm of Iwata and Nagano for the submodular function minimization problem with set covering constraints. The approximation ratio of our algorithm is \(\max \{\varDelta _2, \min \{\varDelta _1, 1 + \varPi \}\}\), where \(\varPi \) is the maximum size of a connected component of the input submodular function.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Assume that we are given a finite set U. Then a function \(f :2^U \rightarrow {\mathbb {R}}\) is said to be submodular, if

for every pair of subsets X, Y of U, where \({\mathbb {R}}\) is the set of real numbers. Submodular functions play an important role in many fields, e.g., combinatorial optimization, machine learning, and game theory. One of the most fundamental problems related to submodular functions is the submodular function minimization problem. In this problem, we are given a submodular function \(f :2^U \rightarrow {\mathbb {R}}\), and the goal is to find a subset X of U minimizing f(X) among all subsets of U, i.e., to find a minimizer of f. It is known [5, 6, 8, 20] that this problem can be solved in polynomial time (we assume the oracle model).

In this paper, we consider constrained variants of the submodular function minimization problem. Constrained variants of the submodular function minimization problem have been extensively studied in various fields [4, 7, 9,10,11,12,13,14,15, 21, 23]. For example, Iwata and Nagano [9] considered the submodular function minimization problem with vertex covering constraints, set covering constraints, and edge covering constraints, and gave approximability and inapproximability. Goel, Karande, Tripathi, and Wang [4] considered the vertex cover problem, the shortest path problem, the perfect matching problem, and the minimum spanning tree problem with a monotone submodular cost function. Svitkina and Fleischer [21] also considered several optimization problems with a submodular cost function. Especially, Svitkina and Fleischer [21] proved that for the submodular function minimization problem with cardinality lower bound, there does not exist a polynomial-time \(o(\sqrt{n / \ln n})\)-approximation algorithm. Iyer and Bilmes [10] and Kamiyama [14] considered the submodular function minimization problem with submodular set covering constraints. Furthermore, Jegelka and Bilmes [13] considered the submodular function minimization problem with cut constraints. Koufogiannakis and Young [15] considered the monotone submodular function minimization problem with general covering constraints. Hochbaum [7] considered the submodular minimization problem with linear constraints having at most two variables per inequality. Zhang and Vorobeychik [23] considered the submodular function minimization problem with routing constraints.

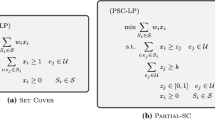

In this paper, we consider the non-negative submodular function minimization problem with covering type linear constraints. Assume that there exist m linear constraints, and we denote by \(\varDelta _i\) the number of non-zero coefficients in the ith constraints. Furthermore, we assume that \(\varDelta _1 \ge \varDelta _2 \ge \cdots \ge \varDelta _m\). For this problem, Koufogiannakis and Young [15] proposed a polynomial-time \(\varDelta _1\)-approximation algorithm. In this paper, we propose a new polynomial-time primal-dual approximation algorithm based on the approximation algorithm of Takazawa and Mizuno [22] for the covering integer program with \(\{0,1\}\)-variables and the approximation algorithm of Iwata and Nagano [9] for the submodular function minimization problem with set covering constraints. The approximation ratio of our algorithm is

where \(\varPi \) is the maximum size of a connected component of the input submodular function (see the next section for its formal definition). It is not difficult to see that the approximation ratio of our algorithm is at most \(\varDelta _1\). Furthermore, if \(\varPi \) is small (i.e., the input submodular function is close to a linear function) and \(\varDelta _2\) is also small, then our approximation can improve the algorithm of Koufogiannakis and Young [15]. For example, in the minimum knapsack problem with a forcing graph (see, e.g., [22] for its formal definition), \(\varDelta _1\) is large, but \(\varDelta _2\) is small.

2 Preliminaries

We denote by \({\mathbb {R}}\) and \({\mathbb {R}}_+\) the sets of real numbers and non-negative real numbers, respectively. For each finite set U, each vector v in \({\mathbb {R}}^U\), and each subset X of U, we define \(v(X) {:=} \sum _{u \in X}v(u)\).

Throughout this paper, we are given finite sets N and \(M = \{1,2,\ldots , m\}\) such that \(m \ge 2\), and a non-negative submodular function \(\rho :2^N \rightarrow {\mathbb {R}}_+\) such that \(\rho (\emptyset ) = 0\). We assume that for every subset X of N, we can compute \(\rho (X)\) in time bounded by a polynomial in |N|. Furthermore, we are given vectors a in \({\mathbb {R}}_+^{M \times N}\) and b in \({\mathbb {R}}_+^M\). For each subset X of N, we define the vector \(\chi _X\) in \(\{0,1\}^N\) by

Then we consider the following problem SCIP.

Without loss of generality, we assume that for every element i in M,

Otherwise, there does not exist a feasible solution of SCIP.

For each element i in M, we define \(\varDelta _i\) as the number of elements j in N such that \(a(i,j) \ne 0\). Without loss of generality, we assume that \(\varDelta _1 \ge \varDelta _2 \ge \cdots \ge \varDelta _m\).

A subset X of N is said to be separable, if there exists a non-empty proper subset Y of X such that

Furthermore, a subset X of N is said to be inseparable, if X is not separable. It is known [1, Proposition 4.4] that N can be uniquely partitioned into non-empty subsets \(I_1,I_2,\ldots ,I_{\delta }\) satisfying the following conditions in polynomial time by using the algorithm of Queyranne [19]. (For completeness, we give an algorithm for computing \(I_1,I_2,\ldots ,I_{\delta }\) in Appendix 4.)

-

1.

\(I_p\) is inseparable for every integer p in \(\{1,2,\ldots ,\delta \}\).

-

2.

For every subset X of N,

$$\begin{aligned} \rho (X) = \rho (X \cap I_1) + \rho (X \cap I_2) + \cdots + \rho (X \cap I_{\delta }). \end{aligned}$$

Define

and we call \(\varPi \) the dependency of \(\rho \). In this paper, we propose a polynomial-time approximation algorithm for SCIP whose approximation ratio is

For SCIP, Koufogiannakis and Young [15] proved that if \(\rho \) is monotone, i.e., \(\rho (X) \le \rho (Y)\) for every pair of subsets X, Y of N such that \(X \subseteq Y\), then there exists a \(\varDelta _1\)-approximation algorithm. (See [9, p.675] for the monotonicity of an objective function.) Iwata and Nagano [9] considered the case where \(a(i,j) \in \{0,1\}\) and \(b(i) = 1\) for every element i in M and every element j in N, and proposed a \(\varDelta _1\)-approximation algorithm. Notice that if there exists a vector c in \({\mathbb {R}}_+^N\) such that \(\rho (X) = c(X)\) holds for every subset X of N, then the dependency \(\varPi \) is equal to 1. Thus, if we assume that \(\varDelta _2 \ge 2\), then the approximation ratio of our algorithm is \(\varDelta _2\). This implies that our result can be regarded as a generalization of the \(\varDelta _2\)-approximation algorithm of Takazawa and Mizuno [22] for the covering integer program with \(\{0,1\}\)-variables.

3 Algorithm

For proposing an approximation algorithm for SCIP, we need to introduce a linear programming relaxation of SCIP. This approach was proposed by Iwata and Nagano [9] for the submodular function minimization problem with set covering constraints.

We first define the function \({\widehat{\rho }} :{\mathbb {R}}_+^N \rightarrow {\mathbb {R}}_+\) called the Lovász extension of \(\rho \) [16]. Assume that we are given a vector v in \({\mathbb {R}}^N_+\). Furthermore, we assume that for non-negative real numbers \({\hat{v}}_1,{\hat{v}}_2,\ldots ,{\hat{v}}_s\) such that \({\hat{v}}_1> {\hat{v}}_2> \cdots > {\hat{v}}_s\), we have \(\{{\hat{v}}_1,{\hat{v}}_2,\ldots ,{\hat{v}}_s\} = \{v(j) \mid j \in N\}\). Then for each integer p in \(\{1,2,\ldots ,s\}\), we define \(N_p\) by

Then we define \({\widehat{\rho }}(v)\) by

where we define \({\hat{v}}_{s + 1} {:=} 0\). It is known [3] that

where we define \(\mathrm{P}(\rho )\) by

By considering the dual problem of (2), we can see that for every vector v in \({\mathbb {R}}_+^N\), \({\widehat{\rho }}(v)\) is equal to the optimal objective value of the following problem (see, e.g., [9]).

It is not difficult to see that for every subset X of N, \(\rho (X) = {\widehat{\rho }}(\chi _X)\). Thus, SCIP is equivalent to the following problem.

Define the vectors \({\overline{a}}\) in \({\mathbb {R}}^{M \times N \times 2^N}_+\) and \({\overline{b}}\) in \({\mathbb {R}}^{M \times 2^N}_+\) by

Then we consider the following problem.

The constraints of (5) are based on the results of [1, 2]. It is known [1, 2] that for every vector x in \(\{0,1\}^N\), x is a feasible solution of the problem (4) if and only if x is a feasible solution of the problem (5). We give the proof of this statement for completeness.

Theorem 1

For every vector x in \(\{0,1\}^N\), x is a feasible solution of the problem (4) if and only if x is a feasible solution of the problem (5).

Proof

Let us fix a vector x in \(\{0,1\}^N\) and an element i in M. Assume that x is a feasible solution of the problem (4). Let A be a subset of N. If there exists an element \(j^{*}\) in \(N \setminus A\) such that \(x(j^{*}) = 1\) and \(a(i,j^{*}) \ge {\overline{b}}(i,A)\), then since \({\overline{a}}(i,j,A) \ge 0\) for every element j in N,

Assume that \(a(i,j) < {\overline{b}}(i,A)\) for every element j in \(N \setminus A\) such that \(x(j) = 1\). Since \({\overline{a}}(i,j,A) \ge 0\) for every element j in N,

Furthermore, since

we have

This implies that x is a feasible solution of the problem (5).

Assume that x is a feasible solution of the problem (5). Then we have

This implies that x is a feasible solution of the problem (4). \(\square \)

We consider the following relaxation problem RP of the problem (5). Notice that Theorem 1 implies that RP is a relaxation problem of the problem (4).

Since for every vector v in \({\mathbb {R}}_+^N\), \({\widehat{\rho }}(v)\) is equal to the optimal objective value of the problem (3), the optimal objective value of RP is equal to that of the following problem LP.

Notice that we neglect the redundant non-negativity constraint of x. Then the dual problem of LP can be described as follows.

We call this problem DLP.

Let z be a vector in \(\mathrm{P}(\rho )\). Define the function \(\rho - z :2^N \rightarrow {\mathbb {R}}_+\) by \((\rho - z)(X) {:=} \rho (X) - z(X)\). Then \(\rho - z\) is submodular, and \(\min _{X \subseteq N} (\rho - z)(X) = (\rho - z)(\emptyset ) = 0\). Furthermore, it is not difficult to see that for every pair of minimizers X, Y of \(\rho - z\), \(X \cup Y\) is a minimizer of \(\rho - z\). Thus, there exists the unique maximal subset X of N such that \(\rho (X) = z(X)\).

We are now ready to propose our algorithm, called Algorithm 1. This algorithm is based on the approximation algorithm of Takazawa and Mizuno [22] for the covering integer program with \(\{0,1\}\)-variables. For each element i in M and each subset S of N, we define a vector \(g_{i,S}\) in \({\mathbb {R}}^N_+\) by

Then Algorithm 1 can be described as follows. Notice that \(y_1,y_2,\ldots ,y_T\) are needed only for the analysis of Algorithm 1.

The following lemmas imply that Algorithm 1 is well-defined and halts in finite time.

Lemma 1

Assume that we are given an element i in M and a subset S of N such that \({\overline{b}}(i,S) > 0\). Then there exists an element j in \(N \setminus S\) such that \({\overline{a}}(i,j,S) > 0\). Furthermore, there exists a subset X of N such that \(g_{i,S}(X) \ne 0\).

Proof

The second statement follows from the first statement. Assume that for every element j in \(N \setminus S\), \({\overline{a}}(i,j,S) = 0\) (notice that \({\overline{a}}(i,j,S) \ge 0\)). Then for every element j in \(N \setminus S\), since \({\overline{b}}(i, S) > 0\), the definition of \({\overline{a}}(i,j,S)\) implies that \(a(i,j) = 0\). Thus, we have

where the strict inequality follows from the fact that \({\overline{b}}(i,S) > 0\). This contradicts (1). \(\square \)

Lemma 2

Assume that we are given an element i in M, a subset S of N, and a vector z in \(\mathrm{P}(\rho )\) such that \({\overline{b}}(i,S) > 0\). Furthermore, we assume that S is the unique maximal subset of N such that \(\rho (S) = z(S)\). If we define

and \(z^{\prime } {:=} z + \alpha \cdot g_{i,S}\), then we have

- (1):

-

\(z^{\prime } \in \mathrm{P}(\rho )\).

Furthermore, we define \(S^{\prime }\) as the maximal subset of N such that \(\rho (S^{\prime }) = z^{\prime }(S^{\prime })\). Then we have

- (2):

-

\(S \subsetneq S^{\prime }\).

Proof

We first prove (1). For every subset X of N such that \(g_{i,S}(X) = 0\), we have \(z^{\prime }(X) = z(X) \le \rho (X)\). Furthermore, for every subset X of N such that \(g_{i,S}(X) \ne 0\),

This completes the proof.

Next we prove (2). Since \(z^{\prime }(j) = z(j)\) for every element j in S, \(\rho (S) = z^{\prime }(S)\). The maximality of \(S^{\prime }\) implies that \(S \subseteq S^{\prime }\). Let Z be a subset of N such that \(g_{i,S}(Z) \ne 0\) and

Then \(\rho (Z) = z^{\prime }(Z)\). The maximality of \(S^{\prime }\) implies that \(Z \subseteq S^{\prime }\) holds. Furthermore, since \(g_{i,S}(Z) \ne 0\), we have \(Z \not \subseteq S\), which implies that \(S\subsetneq S^{\prime }\). This completes the proof. \(\square \)

Notice that since \(Q_{\delta } = S_T\) and \({\overline{b}}(1,S_T) = 0\), \(\beta \) is well-defined.

4 Analysis

In this section, we analyze properties of Algorithm 1.

We first prove that Algorithm 1 is a polynomial-time algorithm. It follows from Lemma 2(2) that T is at most \(|N| + 1\). It is known [18] that \(\alpha _t\) can be computed in polynomial time. Furthermore, it is known (see, e.g., [17, Note 10.11]) that we can find the unique maximal subset \(S_{t+1}\) of N such that \(\rho (S_{t+1}) = z_{t+1}(S_{t+1})\) in polynomial time. These imply that Algorithm 1 is a polynomial-time algorithm.

Next we evaluate the approximation ratio.

Lemma 3

For every integer t in \(\{1,2,\ldots ,T\}\), \((y_t,z_t)\) is a feasible solution of DLP.

Proof

We prove this lemma by induction on t. If \(t = 1\), then this lemma follows from the fact that \(\rho (X) \ge 0\) for every subset X of N. Assume that this lemma holds when \(t = k\) (\(\ge 1\)), and then we consider the case of \(t = k + 1\). Assume that \(t(r+1) < k + 1 \le t(r)\) for an integer r in \(\{1,2,\ldots ,m\}\), where we define \(t(m+1) {:=} 0\). Since \(\alpha _k \ge 0\) follows from \(z_k \in \mathrm{P}(\rho )\), we have \(y_{k+1} \in {\mathbb {R}}_+^{M \times 2^N}\). Furthermore, Lemma 2(1) implies that \(z_{k+1} \in \mathrm{P}(\rho )\). For every element j in \(S_k\), \(z_{k+1}(j) = z_k(j)\) and

For every element j in \(N \setminus S_k\), \(z_{k+1}(j) - z_{k}(j) = {\overline{a}}(r,j,S_k) \cdot \alpha _k\) and

This completes the proof. \(\square \)

Lemma 4

The vector \(\chi _Q\) is a feasible solution of the problem (4), i.e., Q is a feasible solution of SCIP.

Proof

Let i be an element in M. Define a subset X of N by

Since \({\overline{b}}(i,X) = 0\), we have

Thus, since \(a(i,j) \ge 0\) for every element j in M and \(X \subseteq Q\), we have

This implies that \(\chi _Q\) is a feasible solution of the problem (4). This completes the proof. \(\square \)

Lemma 5

We have \(\rho (Q) = z_T(Q)\).

Proof

If \(t(1) = t(2)\), then \(Q = S_T\), and thus this lemma follows from \(z_T(S_T) = \rho (S_T)\). In what follows, we assume that \(t(1) \ne t(2)\). Since \(z_T \in \mathrm{P}(\rho )\),

for every integer p in \(\{1,2,\ldots ,\delta \}\). Since \(z_{T-1}(S_{T-1}) = \rho (S_{T-1})\) and \(z_T(j) = z_{T-1}(j)\) for every element j in \(S_{T-1}\),

This implies that we have

for every integer p in \(\{1,2,\ldots ,\delta \}\). In the same way, we can prove that

for every integer p in \(\{1,2,\ldots ,\delta \}\). Thus, since

we have

This completes the proof. \(\square \)

Theorem 2

Algorithm 1 is an approximation algorithm for SCIP whose approximation ratio is \(\max \{\varDelta _2, \min \{\varDelta _1, 1 + \varPi \}\}\).

Proof

Lemma 4 implies that Algorithm 1 is an approximation algorithm for SCIP. Let OPT be the optimal objective value of SCIP. Lemma 3 implies that

Furthermore, Lemma 5 implies that

Let i be an element in M. Then we have

Assume that \(t(1) \ne t(2)\). Define \(Q_0 {:=} S_{T-1}\). For every subset A of \(S_{T-1}\),

where the strict inequality follows from the definition of \(\beta \) (i.e., \({\overline{b}}(1,Q_{\beta -1}) > 0\)). Furthermore, the definition of Algorithm 1 and Lemma 2(2) imply that for every subset A of N, if \(y_T(1,A) > 0\), then \(A \subseteq S_{T-1}\). Thus,

Notice that if \(t(1) = t(2)\), then \(y_T(1,A) = 0\) for every subset A of N. Thus, (6), (7), (8), and (10) imply that

This completes the proof. \(\square \)

5 A Algorithm for Computing \(I_1,I_2,\ldots ,I_{\delta }\)

It is known [1, Proposition 4.4] that we can compute \(I_1,I_2,\ldots ,I_{\delta }\) by greedily partitioning a separable subset in a current partition. Formally speaking, we can compute \(I_1,I_2,\ldots ,I_{\delta }\) by using Algorithm 2.

For proving that \(I_1,I_2,\ldots ,I_{\delta }\) can be computed in polynomial time, it suffices to prove that the following problem can be solved in polynomial time.

- Input: :

-

A subset X of N.

- Task: :

-

Decide whether there exists a non-empty proper subset Y of X such that \(\rho (X) = \rho (Y) + \rho (X \setminus Y)\). If there exists such a subset Y, then find Y.

Define \({\overline{\rho }} :2^X \rightarrow {\mathbb {R}}\) by

Then it is not difficult to see that for every subset Y of X, we can compute \({\overline{\rho }}(Y)\) in time bounded by a polynomial in |N|. Furthermore, \({\overline{\rho }}(\emptyset ) = {\overline{\rho }}(X) = 0\), \({\overline{\rho }}(Y) = {\overline{\rho }}(X \setminus Y)\) for every subset Y of X. For each pair of subsets Y, Z of X,

That is, \({\overline{\rho }}\) is a submodular function. For every subset Y of X,

Thus, there exists a non-empty proper subset Y of X such that \(\rho (X) = \rho (Y) + \rho (X \setminus Y)\) if and only if there exists a minimizer Y of \({\overline{\rho }}\) such that \(Y \ne \emptyset , X\). It is known [19] that we can find a non-empty proper subset Y of X minimizing \({\overline{\rho }}(Y)\) among all non-empty proper subsets of X in polynomial time. Let \(Y^{*}\) be a non-empty proper subset of X minimizing \({\overline{\rho }}(Y^{*})\) among all non-empty proper subsets of X. If \({\overline{\rho }}(Y^{*}) >0\) holds, then there does exist a non-empty proper subset Y of X such that \(\rho (X) = \rho (Y) + \rho (X \setminus Y)\). Otherwise, \(Y^{*}\) is a solution of the above problem. This complete the proof.

References

Bach, F.R.: Learning with submodular functions: a convex optimization perspective. Found. Trends Mach. Learn. 6(2–3), 145–373 (2013)

Carnes, T., Shmoys, D.B.: Primal-dual schema for capacitated covering problems. Math. Program. 153(2), 289–308 (2015)

Carr, R.D., Fleischer, L.K., Leung, V.J., Phillips, C.A.: Strengthening integrality gaps for capacitated network design and covering problems. In: Proceedings of the 11th Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 106–115 (2000)

Edmonds, J.: Submodular functions, matroids, and certain polyhedra. In: Guy, R., Hanani, H., Sauer, N., Schönheim, J. editors, Combinatorial Structures and their Applications, pp. 69–87. Gordon and Breach (1970)

Goel, G., Karande, C., Tripathi, P., Wang, L.: Approximability of combinatorial problems with multi-agent submodular cost functions. In: Proceedings of the 50th Annual Symposium on Foundations of Computer Science, pp. 755–764 (2009)

Grötschel, M., Lovász, L., Schrijver, A.: The ellipsoid method and its consequences in combinatorial optimization. Combinatorica 1(2), 169–197 (1981)

Grötschel, M., Lovász, L., Schrijver, A.: Geometric Algorithms and Combinatorial Optimization. Springer, Berlin (1988)

Hochbaum, D.S.: Submodular problems–approximations and algorithms. Technical Report arXiv:1010.1945 (2010)

Iwata, S., Fleischer, L., Fujishige, S.: A combinatorial strongly polynomial algorithm for minimizing submodular functions. J. ACM 48(4), 761–777 (2001)

Iwata, S., Nagano, K.: Submodular function minimization under covering constraints. In: Proceedings of the 50th Annual Symposium on Foundations of Computer Science, pp. 671–680 (2009)

Iyer, R.K., Bilmes, J.A.: Submodular optimization with submodular cover and submodular knapsack constraints. Adv. Neural Inf. Process. Syst. 26, 2436–2444 (2013)

Iyer, R.K., Jegelka, S., Bilmes, J.A.: Curvature and optimal algorithms for learning and minimizing submodular functions. Adv. Neural Inf. Process. Syst. 26, 2742–2750 (2013)

Iyer, R.K., Jegelka, S., Bilmes, J.A.: Monotone closure of relaxed constraints in submodular optimization: Connections between minimization and maximization. In: Proceedings of the 30th Conference on Uncertainty in Artificial Intelligence, pp. 360–369 (2014)

Jegelka, S., Bilmes, J.A.: Graph cuts with interacting edge weights: examples, approximations, and algorithms. Mathematical Programming, pp. 1–42 (2016)

Kamiyama, N.: Submodular function minimization under a submodular set covering constraint. In: Proceedings of the 8th International Conference on Theory and Applications of Models of Computation, volume 6648 of Lecture Notes in Computer Science, pp. 133–141 (2011)

Koufogiannakis, C., Young, N.E.: Greedy \({\varDelta }\)-approximation algorithm for covering with arbitrary constraints and submodular cost. Algorithmica 66(1), 113–152 (2013)

Lovász, L.: Submodular functions and convexity. In: Bachem, A., Grötschel, M., Korte, B. (eds.) Mathematical Programming–The State of the Art, pp. 235–257. Springer-Verlag, (1983)

Murota, K.: Discrete Convex Analysis, volume 10 of SIAM monographs on discrete mathematics and applications. Society for Industrial and Applied Mathematics (2003)

Nagano, K.: A faster parametric submodular function minimization algorithm and applications. Technical Report METR 2007-43, The University of Tokyo (2007)

Queyranne, M.: Minimizing symmetric submodular functions. Math. Program. 82(1), 3–12 (1998)

Schrijver, A.: A combinatorial algorithm minimizing submodular functions in strongly polynomial time. J. Comb. Theory Ser. B 80(2), 346–355 (2000)

Svitkina, Z., Fleischer, L.: Submodular approximation: sampling-based algorithms and lower bounds. SIAM J. Comput. 40(6), 1715–1737 (2011)

Takazawa, Y., Mizuno, S.: A 2-approximation algorithm for the minimum knapsack problem with a forcing graph. J. Op. Res. Soc. Japan 60(1), 15–23 (2017)

Acknowledgements

The author would like to thank Naonori Kakimura and the anonymous referees for helpful comments. This research was supported by JST PRESTO Grant Number JPMJPR14E1, Japan.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kamiyama, N. A Note on Submodular Function Minimization with Covering Type Linear Constraints. Algorithmica 80, 2957–2971 (2018). https://doi.org/10.1007/s00453-017-0363-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00453-017-0363-8