Abstract

An important question in implicit sequence learning research is how the learned information is represented. In earlier models, the representations underlying implicit learning were viewed as being either purely motor or perceptual. These different conceptions were later integrated by multidimensional models such as the Dual System Model of Keele et al. (Psychol Rev 110(2):316–339, 2003). According to this model, different types of sequential information can be learned in parallel, as long as each sequence comprised only one single dimension (e.g., shapes, colors, or response locations). The term dimension, though, is underspecified as it remains an open question whether the involved learning modules are restricted to motor or to perceptual information. This study aims to show that the modules of the implicit learning system are not specific to motor or perceptual processing. Rather, each module processes an abstract feature code which represents both response- and perception-related information. In two experiments, we showed that perceiving a stimulus-location sequence transferred to a motor response-location sequence. This result shows that the mere perception of a sequential feature automatically leads to an activation of the respective motor feature, supporting the notion of abstract feature codes being the basic modules of the implicit learning system. This result could only be obtained, though, when the task instructions emphasized the encoding of the stimulus-locations as opposed to an encoding of the color features. This limitation will be discussed taking into account the importance of the instructed task set.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Implicit learning is assumed to be one fundamental learning process enabling humans to exploit sequential structures inherent in the environment. It is assumed to take place without any intention or additional effort and even without the learner’s conscious awareness about that they learn or what they actually learn.

One of the most frequently utilized paradigms in the field of implicit learning is the Serial Reaction Time Task (SRTT; Nissen & Bullemer, 1987). In this standard SRTT, participants see locations on the screen which are mapped to spatially corresponding keys. Participants are instructed to press the appropriate response key whenever an asterisk occurs at a certain location. Unbeknownst to the participants, the locations of the asterisk follow a regular sequence. After several blocks of practice, the regular sequence is replaced by a random sequence. This leads to a performance decrement that disappears almost immediately when the original sequence is reintroduced. Importantly, participants are not able to explicate their acquired knowledge when asked to do so.

Despite approximately 30 years of research with the SRTT, there is one crucial and not yet solved debate. This debate concerns the building blocks of implicit learning. While some researchers assume that implicit learning is coded with reference to the external world (e.g., stimulus locations and/or response locations; e.g., Cohen, Ivry & Keele, 1990; Grafton, Hazeltine & Ivry, 1998, 2002; Howard, Mutter & Howard, 1992; Keele, Jennings, Jones, Caulton, & Cohen, 1995; Willingham, 1999; Willingham, Wells, Farrell, & Stemwedel, 2000), other researchers assume that it is inherently motor-based or stimulus–response based (e.g., Deroost & Soetens, 2006; Schumacher & Schwarb, 2009; Schwarb & Schumacher, 2010).

However, meanwhile several findings suggest that such a single learning mechanism in implicit learning does not exist. Since the seminal article of Keele, Ivry, Mayr, Hazeltine, and Heuer (2003), many researchers now view implicit learning as a multimodal, flexible process (e.g., Abrahamse et al., 2010; Haider, Eberhardt, Kunde, & Rose 2012; Haider, Eberhardt, Esser, & Rose 2014; Gaschler, Frensch, Cohen, & Wenke 2012; Goschke & Bolte 2012). Thus, they no longer assume that implicit learning is based on that one central learning mechanism, involving only S–S, R–R, or S–R learning. Rather, they postulate that implicit learning is based on specific modules (e.g., Cleeremans & Dienes, 2008; Frensch, 1998; Whittlesea & Dorken, 1993). These modules are specialized to process information that is restricted along one single dimension. This dimension-specific information processing within the different modules enables the cognitive system to learn two or even three sequences simultaneously (e.g., Deroost & Soetens, 2006; Goschke & Bolte, 2012; Mayr, 1996; Haider et al., 2012).

Overall, Keele et al.’s (2003) Dual System Model largely contributed to the understanding of implicit learning processes. Nevertheless, one drawback of this account is that the central term Dimension and thereby the exact content of the modules is underspecified. This under-specification opened a new discussion. Keele et al. (2003) state that they understand the term Dimension to be interchangeable with the term Modality. However, some modalities might also be made up of more than one dimension. For example, vision is a modality that might be subdivided into dimensions like location, shape, or color. In addition, the question remains whether the specialized modules can be distinguished as being perception-related (i.e., modules for different feature dimensions of the stimulus; e.g., its color, its shape, etc.) or response related (i.e., modules for different feature dimensions of the response; e.g., its location, its direction, its force, etc.; e.g., Abrahamse et al., 2010). Alternatively, it might be more helpful to refrain from this stimulus–response distinction and regard modules as being dedicated to process one specific feature dimension. For instance, the feature dimension location in the distal world might be part of the stimulus and the response as well, as is proposed by the Theory of Event Coding (TEC, Hommel, Müsseler, Aschersleben, & Prinz, 2001).

A recently published article of Eberhardt, Esser, and Haider (2017) contributed to a clarification of the term Dimension by integrating some of the ideas of the TEC into the field of implicit learning. In three experiments, the authors provided evidence that the term Dimension refers to single feature dimensions, like location or color. These feature dimensions represent external features of the stimuli and of the responses as well. In more detail, the findings of Eberhardt et al. suggest that when a stimulus-location sequence and a response-location sequence were both built into a SRTT, they were not learned simultaneously. By contrast, concurrent learning of an uncorrelated stimulus-location sequence and a stimulus-color sequence was found. Most convincing is their last experiment. This experiment contained two sequences, a stimulus-location sequence and also a dual sequence. This latter sequence consisted of the colors of the stimuli and correlated of the response-locations. The crucial manipulation was that the participants in one condition were instructed to code the dual sequence in terms of response-locations, and in a second condition, in terms of colors (see, Gaschler et al., 2012). The results show that the participants did not learn the stimulus-location sequence when they coded the dual sequence in terms of response-locations. However, learning of the stimulus-location sequence was found when the participants coded the dual sequence in terms of colors. Hence, what these findings suggest is that learning of two uncorrelated sequences is possible as long as they do not share a common feature dimension. Put it the other way around: if the features between the two to-be learned sequences overlap, they cannot be learned concurrently.

For the architecture of the implicit learning system, this means that the modules assumed to underlie implicit learning are apparently not specific to process stimulus or response characteristics. Rather, they are specific to distinct features of the environment. If this is indeed the case, it should be possible to show that participants can learn a sequence (e.g., a sequence of response-locations) even when processing the respective feature dimension (i.e., location) is not an active part of the actual task requirements, respectively the task set. Consequently, highly salient features such as response locations may find their way into systematic processing without having to rely on instructions from external sources.

The experiments of Eberhardt et al. (2017) have tested the defining characteristics of the implicit learning modules only by producing interference through competing parallel sequences. The participants were actively processing the competing response- and stimulus-location characteristics, because both streams of information were important for the correct execution of the task. Thus, the goal of showing that learning a pure stimulus-location sequence will simultaneously lead to learning of a response-location sequence, even when participants do not carry out any response during the learning phase goes one step further.

Such an assumption can directly be derived from the TEC. Throughout learning experiences hierarchical networks (with abstract feature dimensions on the highest level) develop where motor actions become associated with the multiple sensory effects they create. For instance, a movement to the left becomes associated with the visual, proprioceptive, auditive feedbacks it produces. These associations are assumed to be bidirectional so that any activation of either a sensory or a motor aspect of the complex hierarchical network will activate the whole abstract feature code and facilitate any action or perception that relies on these activated modules (see, e.g., Hommel et al., 2001; Hommel, 2004, for a detailed description of the learning process postulated by the TEC).

This implies that by experiencing the sequence of stimulus locations through observation, the feature codes should become associated within the location module. Since this sequence knowledge is represented in abstract feature codes, it should be accessible for other effectors that are used to express the respective sequence of feature codes (e.g., Hommel et al., 2001).

One further point should be noted: usually, stimuli are composed of more than one single feature (location, shape, etc.). Not all of them might be sequential or they even lead to conflicting information. Therefore, it has to be defined which feature dimensions are the relevant ones for conducting the task at hand. The current task set plays an important role as it defines the relevant feature dimension and therefore also influences implicit learning processes (Dreisbach & Haider, 2009; Hommel, 2004; Memelink & Hommel, 2013). The important constraints the task set provides for implicit learning processes has already been demonstrated in the above described findings of Eberhardt et al. (2017, Experiment 3). These show that the task instruction determined whether the key-presses were represented either by their color or by their location. This, in turn, influenced which knowledge had been acquired. Participants learned a sequence of colors or a sequence of locations depending on the instructed task set (see also Gaschler et al., 2012).

Therefore, it is important for learning a response-location sequence by merely observing a stimulus-location sequence to ensure that the stimuli are coded by the same feature. By contrast, if the stimuli are coded by a different feature than the responses no concurrent learning of the response-location sequence should be found.

Current study

The goal of the current experiments was to test if participants could learn a response-location sequence by merely observing a stimulus-location sequence on the screen; that is without ever overtly responding to this sequence. In addition, we focused on the constraints under which we would find such implicit learning effects for a response-location sequence. On the one hand, it is conceivable that the mere observation of a stimulus-location sequence already leads to associations of eye-movement responses. This learned (eye-movement) response-location sequence can then be transformed into key-presses (Howard et al., 1992; Vinter & Perruchet, 2002). On the other hand, according to the above described assumption of TEC, it might be that such implicit learning effects are only found when the stimuli are coded by the feature dimension (location) that—in this task—is relevant for the responses.

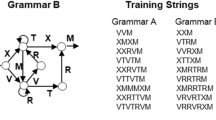

In order to test this hypothesis, we conducted two experiments. They both consisted of three parts: an Induction phase, a Training phase, and a Test phase. Only the Induction phase was varied between the two experimental conditions. Importantly, in this phase stimulus locations appeared in random order, no sequence was present. In the Location-Induction Condition, the Induction phase required participants to respond to one out of six different stimulus locations on the screen with spatially corresponding response keys on the keyboard. Participants were simply asked to respond with one of six response keys to the six stimulus locations. This should establish an activation of the feature dimension location. In the Color-Induction Condition, the participants were asked to count the appearance of either one of two different stimulus colors and to enter the amounts at the end of the block. Thus, the participants in this condition should be more likely to activate the feature dimension color upon perceiving the stimuli.

The following two phases of the experiments were identical for the experimental conditions. In the Training phase, the target stimuli appeared on the screen according to a six-element stimulus-location sequence. Concurrently, also one response square was presented at the same determined location in the upper third of the screen. The participants’ task was to compare the color of the target square with that of the response square and to press one key, if they were identical, and a second key, if they were different. This means that, during training, no participants ever overtly responded according to the stimulus-location sequence.

In a third Test phase, the former stimulus-location sequence was now presented as number symbols (one through six). In each trial, one single digit appeared centrally on the screen, and participants were asked to press the corresponding key out of six different response keys. Thus, in the test phase, the former stimulus-location sequence was now presented only as a response-location sequence.

All participants were asked about their explicit sequence knowledge at the end of the experiments. The assessment of explicit knowledge was especially important here because any explicit knowledge would contaminate the results of the pure implicit location-sequence learning (e.g., Willingham, 1999). Explicit or verbal representations should enable participants to flexibly use the learned sequence independently of the feature dimension the sequence is built in (e.g., Haider, Eichler, & Lange, 2011). Furthermore, since the stimulus-location sequence of the training phase was rather short (six elements), it might be easy to learn it even in the Training phase already. Thus, it is likely that at least some participants will have explicitly noticed the sequence.

If the target stimuli need to be coded by their location feature to learn the response-location sequence, only the participants in the Location-Induction Condition should demonstrate knowledge about the response-location sequence in the test phase. By contrast, if the participants learn the stimulus-location in terms of their eye-movements during training (Howard et al., 1992), we should find substantial response-location learning in both conditions.

Experiment 1

Participants

Forty-seven students of the University of Cologne took part in the experiment (15 men and 32 women). Mean age was 24.08 (SD = 3.54). The participants were randomly assigned to one of the two conditions. In exchange for participation, they received either course credit or 5€.

Material and procedure

Experiment 1 consisted of the above described three parts: the Induction phase, the Training phase and the Test phase. The Induction phase varied between the two conditions while the Training and Test phases were identical for both conditions.

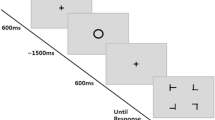

The Induction phase consisted of two blocks with 90 trials each. All participants saw six colored white frames (2 × 2 cm) horizontally arranged in the upper half of the screen. Each of these six frames had a corresponding frame in the lower half of the screen which was right underneath the upper one (see Fig. 1). On each trial, two target stimuli appeared for 100 ms: one in the upper half and one in the lower half of the screen. The two stimuli occurred one below the other, for example both could occur in the respective leftmost location. Both target stimuli contained the same color (one of six colors: red, green, yellow, blue, cyan, or magenta). Importantly, the location of the two target stimuli was randomly chosen in the Induction phase with the only constraint that no location was directly repeated. Also, colors appeared in a pseudo-random order with the same restriction. Thus, participants could not learn any sequence in this phase. The only difference between the two experimental conditions was that the participants in the Location-Induction Condition learned about the mapping between stimulus locations to response locations on the keyboard. The participants in the Color-Induction Condition conducted a color-counting task.

Depiction of the three experimental phases and their required response keys. In the Location-Induction Phase, participants respond to the location of the target pair and press one of six corresponding response keys (here: key #4). In the Color-Induction Phase, participants are instructed to count how often the targets are filled in with a certain color (e.g., red). In the Training Phase, on each trial, participants respond to whether the two filled-up targets are of the same color (here: both are red) or not identical. In the Test Phase, participants respond with six response keys to target numbers on the screen. (Color figure online)

In the Location-Induction Condition, the participants were introduced to the six response keys on the keyboard (y, x, c, b, n, m on a QWERTZ-keyboard) and were told about the mapping between each stimulus location and its corresponding key. The participant’s task was to press the key corresponding to the location of the stimuli.

In the Color-Induction Condition, the pair of target stimuli appeared in the same way. The only difference was that after one pair of two targets had appeared for 100 ms, the next one automatically appeared after a stimulus–stimulus interval of 300 ms. The fixed stimulus–stimulus interval was needed as the participants in the Color-Induction group did not respond to any target stimulus. Instead, they were shown two of the six colors before a block started. They were asked to memorize these two colors and to count separately how many times these two colors occurred within each block. After each block, participants were asked to enter their counted sums for the target colors via the keyboard. The to-be-counted colors changed from Block 1–2 and differed between participants.

Basically, one row of target stimuli would have sufficed in the Introduction phase to perform each task. However, the target stimuli were presented in two rows because in the training task two target stimuli per trial were required. In the Training phase, the presentation of stimuli was identical to that of the Induction phase. However, the participants now were asked to evaluate whether the colors in the two lit up squares were identical. They should press the “green”-key (the “f”-key marked with a green sticker), if the colors of the two squares were identical and the “red”-key (the “g”-key marked with a red sticker), if they were not. The two response keys were labeled with two colors to minimize the likelihood that response keys were coded primarily by their location (see Eberhardt et al., 2017). In 50% of the training trials, the upper and the lower targets were identical in color.

The Training phase consisted of eight blocks with 90 trials each. Here, a stimulus-location sequence was built into the task. The location of the two targets followed a 6-elements sequence (1-6-4-2-3-5). All participants only observed this location sequence and never overtly responded upon it.

In the Test phase, participants were informed that their task now no longer was to compare the colors of the two target stimuli. Instead, they were instructed to respond with six response keys on the keyboard to a single number symbol (one through six) centrally presented on the screen. Participants conducted three blocks with 90 trials, each: in the first and third test blocks, the number symbols followed a pseudo-random order. Only in the second test block, the digits followed the six elements sequence that had been presented in the Training phase as a stimulus-location sequence. To measure the transfer of the former stimulus-location sequence to the response-location sequence on the keyboard, we compared the reaction times between the sequential Block 2 and the random Block 3. Block 1 was used as a warming-up block to equalize the participants’ experience of responding with the six response keys.

After completing all three experimental phases, participants were interviewed about their possible explicit knowledge of either sequence characteristics. They were first asked if they had noticed anything about the task. Afterwards, they were told that there was a sequence and asked to name it.

Results and discussion

Our main research question concerns the results of the Test phase of the experiment. Therefore, besides reporting the results of the post-experimental interviews, we only focus on the findings of the Test phase. The results of the Induction and the Training phases mainly served as a manipulation-check and are described in the “Appendix”.

Analysis of explicit knowledge

As described above, we were interested in only those participants who possessed implicit knowledge. Therefore, we excluded all participants who showed any sign of possessing explicit knowledge (see, Willingham, 1999). Participants were excluded from further analyses if they correctly named three or more transitions of the six elements sequence.

Eight participants of the Color-Induction and five participants of the Location-Induction Conditions were assigned as having at least some explicit knowledge about the response-location sequence. In the post-experimental interviews, it was revealed that all of these participants acquired knowledge about the response-location sequence in the test phase. None of them noticed the stimulus-location sequence in the training phase. Even when asked if there had also been a sequence in the training phase, none of these explicit participants affirmed this question. This left 17 participants in both the Color-Induction and the Location-Induction Conditions. For these participants, we individually computed the mean error rates and median response times (median RTs) per block.

Test phase

Table 1 depicts the means of the median RTs and mean error rates per condition and block. As can be seen, error rates are generally low (3.73% in the Location-Induction Condition and 3.39% in the Color-Induction Condition). Also, the means of the median RTs are rather similar between conditions.

We conducted two 2 (Condition) × 2 (Block: Block 2 vs.3) mixed-design ANOVAs with percent error rates and median RTs as dependent variables. For the percent error rates, this ANOVA did not provide any significant effect (all Fs < 1) (Fig. 2).

Means of median RTs as a function of block type (sequential/random) and condition (Location-Induction/Color-Induction) in the test phase of Experiment 1. Error bars are 95% within-participant confidence intervals (Loftus & Masson, 1994)

Regarding the median RTs as dependent variable, the ANOVA yielded a main effect of Block Type, F(1, 32) = 5.11, MSe = 2214.2, p = .0307, ηp2 = 0.14. No other effect reached level of significance (Fs < 1.5, ps > 0.2). For means of comparison with Experiment 2, we conducted the planned Block contrasts within the two conditions even though the interaction between Condition and Block was not significant. In the Location-Induction Condition the contrast indicated a significant learning effect [F(1,32) = 6.04, MSe = 2214.2, p = .0196, ηp2 = 0.16], but not in the Color-Induction Condition [F(1,32) = 0.55, MSe = 2214.2, p = .4659, ηp2 = 0.02).

Thus, the results did not fully confirm our hypotheses. Only the planned contrasts suggest that the participants in the Location-Induction Condition who had experienced the mapping between stimulus locations and response locations in the Induction phase expressed some knowledge about the response-location sequence in the Test phase. By contrast, participants in the Color-Induction Condition, who coded the stimuli by their color in the Induction phase, showed no significant learning effect of the response-location sequence in the Test phase. This is in line with our assumption that a task set that emphasizes the location feature code is needed to learn a corresponding response-location sequence that had never overtly been part of the learning phase.

However, the important Condition × Block interaction in the Test phase was not significant. In addition, approximately 28% of the participants had to be excluded due to having acquired at least some explicit knowledge. This rate was higher in the Color-Induction Condition in which participants did not show any learning effect. Therefore, we modified the design in Experiment 2 in order to test whether the results could become more clear by ruling out these difficulties with the design of Experiment 1.

Experiment 2

With two exceptions, Experiment 2 was identical to that of Experiment 1. First, in the Test phase, all participants received the response-sequence trials intermixed with random trials. This should reduce the amount of participants acquiring explicit knowledge during the Test phase. Second, we included a Control Condition in which the trials in the Training phase followed a pseudo-random sequence instead of the six elements stimulus-location sequence. This condition was thought to further control for learning in the Test phase.

Method

Participants

Eighty-four participants took part in the experiment. The sample consisted of 17 male and 67 female students. Mean age was 23.76 (SD = 5.11). The participants were randomly assigned to one of the three conditions (Location-Induction Condition, Color-Induction Condition, Control Condition). In exchange for participation, they either received course credit or 5€. Due to technical problems, the data of 6 participants were lost.

Material and procedure

The Induction and the Training phases were identical to that of Experiment 1 with the only exception that in the Control Condition the stimulus locations in the Training phase did not follow any sequence. Participants in this Control Condition received the Induction phase of the Location-Induction condition.

In the Test phase, the amount of sequential trials was identical to that of Experiment 1 (90 trials). However, these sequential trials were randomly distributed across the three test blocks, leading to approximately 33% sequential trials within each test block. As in Experiment 1, we only included Blocks 2 and 3 in our analyses. Block 1 of the Test phase was regarded as a warming-up block. Explicit knowledge was again assessed after the last test block.

Results and discussion

As we did in Experiment 1, we first report the results of the post-experimental interview and then describe the findings of the Test phase. The results of the Induction and the Training phases are again reported in the “Appendix”.

Analysis of explicit knowledge

According to our criterion of three or more correctly named transitions, only two participants showed some sign of possessing explicit knowledge. These participants were excluded from further data analyses leading to 25 participants in the Control and the Color-Induction Conditions, and 26 in the Location-Induction Condition.

Test phase

Tables 2 and 3 show the percent error rates and the means of the median RTs per condition and block. As can be seen, the mean percent errors are again generally low and rather similar between conditions (4.93, 3.80, 4.37% for the Location-Induction, the Color Induction, and the Control conditions, respectively).

Again, we conducted two separate 3 (Condition) × 2 (Trial Type) mixed-design ANOVAs with percent error rates and median RTs as dependent variables. For percent error rates, the ANOVA yielded a significant main effect of Trial Type (F[1,73] = 10.27, MSe = 0.046, p < .01, ηp2 < 0.12). The Trial Type × Condition interaction just failed to reach level of significance (F[1,73] = 2.63, MSe = 0.046, p = .0788, ηp2 = 0.07). Thus, the participants in all three conditions produced more errors in the random than in the sequential trials.

For the median RTs (see Fig. 3), the ANOVA yielded a significant main effect of Trial Type (F[1,73] = 50.87, MSe = 479.75, p < .01, ηp2 = 0.41) as well as a significant interaction between condition and trial type (F[1,73] = 3.29, MSe = 479.75, p = .0427, ηp2 = 0.08). The within-participant contrasts revealed that the participants in all three conditions had acquired some knowledge about the sequence (F[1,73] = 39.21, MSe = 479.75, p < .01, ηp2 = 0.35; F[1,73] = 7.93, MSe = 479.75, p < .01, ηp2 = 0.10, F[1,73] = 11.00, MSe = 479.75, p < .01, ηp2 = 0.13; in the Location-Induction, the Color-Induction, and the Control Conditions, respectively). Importantly, the planned interaction contrast (Condition × Trial Type) between the Location-Induction Condition and the Color-Induction Condition was significant (F[1,73] = 5.63, MSe = 479.75, p = .0200, ηp2 = 0.07). In addition, the Location-Induction Condition also differed significantly from the Control Condition (F[1,73] = 4.06, MSe = 479.75, p = .0475, ηp2 = 0.05). This indicates that indeed the Location-Induction Condition expressed more knowledge about the response-location sequence than the Color-Induction Condition or the Control Condition.

Means of median RTs as a function of Trial Type (sequential/random) and condition (Control/Color-Induction/Location-Induction) in the test phase of Experiment 2. Error bars are 95% within-participant confidence intervals (Loftus & Masson, 1994)

Thus, even though the participants in all three conditions had acquired some knowledge about the response-sequence, this learning effect was significantly larger when the participants had experienced the mapping between stimulus locations and response locations in the Induction phase. In extent to Experiment 1, the current results also showed that participants, even in the Control Condition, who only could learn during the test phase, do possess some knowledge about the response-location sequence. However, the learning effect was rather small and most importantly comparable to the learning effect in the Color-Induction Condition.

General discussion

The two experiments reported here were aimed at contributing to our understanding of the architecture of the implicit learning system. More precisely, we investigated whether it is possible to express implicitly acquired knowledge of a location sequence in terms of key-presses when during training this sequence was only observed as a sequence of stimulus locations on the screen.

The current experiments provided two important main results. First, participants were able to express a location sequence in terms of response locations, albeit they only learned this sequence by merely observing stimuli appearing at different locations on the screen. At no time, the participants overtly responded to the locations on the keyboard in the sequential manner before reaching the Test phase. In the Induction phase, the participants in the Location-Induction Condition had made overt motor responses according to the stimulus-locations, but only in a random order. This means that they were most likely coding the stimuli by their locations, while they could not have learned the particular response-location sequence. In the Training phase, the participants in both the Location- and the Color-Induction Conditions observed the same stimulus-location sequence with their eyes. No motor responses towards the keyboard were made in this phase. In the Test phase, participants responded by pressing one of six keys on the keyboard. Importantly, now the stimuli no longer followed a location sequence. Instead, the stimuli signaled a response-location sequence by centrally presented number symbols.

Second, the participants in the Location-Induction Condition who were instructed to map the stimulus-location to key-presses prior to training expressed substantial more learning of the response-location sequence. This learning effect was almost entirely absent in the Color-Induction Condition of Experiment 1. In Experiment 2, these latter participants showed a small learning effect. However, this was significantly smaller than that of the Location-Induction Condition and did not differ from that of the Control Condition in which participants had no chance to learn the sequence as they received only a pseudo-random sequence during training.

It is also noteworthy, that we controlled meticulously for explicit knowledge, and included only those participants who showed no sign of explicit knowledge. This was done in order to preclude that any explicit knowledge would influence the expression of acquired stimulus-location sequence knowledge in terms of response-location knowledge (e.g., Willingham, 1999). Hence, we could be rather sure that the transfer found in our experiments was due to implicit knowledge.

One alternative way to explain why the participants were able to express their stimulus-location knowledge in terms of a response-location sequence is to assume that they had learned a sequence of eye-movements during training which they then transferred into a sequence of finger key-presses (e.g., Vinter & Perruchet, 2002). For two reasons such an explanation is rather unlikely: First, given the current findings on effector-independent transfer in the implicit learning literature, such transfer is only found if the effectors changed but concurrently the presentation of the stimuli remained the same (e.g., Cohen et al., 1990; Grafton et al., 1998, 2002; Willingham, 1999; Willingham et al., 2000). Second, such an assumption cannot explain why the participants in the Color-Induction Condition who most likely coded the stimuli by their colors and not by their locations showed no such transfer.

In line with the findings of Eberhardt et al. (2017), we assume that the learned stimulus-location sequence can be expressed in terms of a response-location sequence only if stimulus features and responses features are represented as the same abstract feature code (e.g., Hommel et al., 2001). The task set determines by which features events in the environment are coded and therefore also determines which abstract feature codes are learned. Whenever an event is coded by a certain feature this coding activates all stimulus and response related features belonging to this code.

Overall, the current experiments illustrate the consequences resulting from the way the implicit modules work. The findings suggest that since information is never restricted to either stimuli or responses in the implicit modules neither can sequence learning be restricted to one of these sides. If a stimulus-location sequence is extracted from the environment and learned inside the implicit Location module, it can be expressed in terms of a corresponding response-location sequence presupposed that a stimulus–response mapping has been established prior to training.

In a broader scope, the current findings contribute to the debate concerning the building blocks of implicit learning by supporting the assumption that implicit learning relies on modules that are thought to process single feature dimensions (e.g., Abrahamse et al., 2010; Cleeremans & Dienes, 2008; Goschke & Bolte, 2012; Eberhardt et al., 2017; Haider et al., 2012, 2014; Keele et al., 2003). According to this view, there is no single learning mechanism that exclusively refers to encoding processes or to motor processes (e.g., Schumacher and Schwarb). The modules are not specific for processing either sensory or motor information. This is entirely in line with what has been proposed by the TEC (Hommel et al., 2001) or also by the Dimension-Action Model (Magen & Cohen, 2002, 2007, 2010).

In addition, the experiments show that the assumptions of the TEC (Hommel et al., 2001) are applicable not only for trial-to-trial action coding but also on the level of implicit learning processes. A perceived event in the distal environment is always more than just a perception. In the cognitive system, multiple other related elements are co-activated and, as could be shown, are also associated with each other. Thus, separate R–R or S–S sequence learning is only possible when the responses and the stimuli are coded in terms of different feature codes. As long as R–R or S–S sequences include the same feature codes, they are probably not separable (Eberhardt et al., 2017). Note however that this kind of response learning was not investigated here. Instead, we tested for a more complex and arbitrary type of co-activated response learning.

These implications do not mean that it is no longer valid to speak of R-R sequences or S–S sequences. It merely means that they should be used to describe what is sequential in the distal environment. This “visible” contingency should then, however, be viewed as a mere representative of the underlying sequence of feature codes. Learning of these features is the reason why a person’s behavior can be adopted towards a stimulus- or response-sequence in the environment. Since these features consist of many more co-activated elements, other aspects related to the feature sequence are internally co-learned as well. If the environment changes and a different sequential element of the same underlying feature is now present in the environment, perceptions and responses towards this element will be enhanced from the beginning due to the prior made mental co-activations. In the current experiments, this was shown on the basis of a learned stimulus-location sequence. Participants responded faster to a sequence of response locations sharing the same location features even if the stimulus-location sequence were eliminated from the environment.

Further, with our findings we do not mean to state that the models such as of Keele et al. (2003) or Abrahamse et al. (2010) are wrong by implying that there is a distinction between stimulus- and response processing in the first place. After all, such a distinction is also made by the TEC (Hommel, 2004), with the addition that these stimulus- and response features belonging to the same supramodality (lacking in the other models) are intertwined and can therefore bidirectly co-activate each other. According to the model, they can however still be considered to be separate, e.g., in terms of their neurological basis (with the stimulus features being represented in the perceptual brain areas and the response features being represented in the motor brain areas).

In conclusion, the main finding of our study was to show that the mere perception of a sequential feature automatically led to an activation of the respective motor feature. This supports the notion of abstract feature codes being the basic modules of the implicit learning system. Such learning by co-activations is limited, though, to the features the distal events of the environment are coded by, emphasizing the importance of the current task set for learning processes.

References

Abrahamse, E.L., Jiménez, L., Verwey, W.B., & Clegg, B.A. (2010). Representing serial action and perception. Psychonomic Bulletin, 17, 603–623.

Cleeremans, A., & Dienes, Z. (2008). Computational models of implicit learning. In R. Sun (Ed.), The Cambridge handbook of computationalpsychology (pp. 396–421). Cambridge: Cambridge University Press.

Cohen, A., Ivry, R. I., & Keele, S. W. (1990). Attention and structure in sequence learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 16(1), 17–30.

Deroost, N., & Soetens, E. (2006). Perceptual or motor learning in SRT tasks with complex sequence structures. Psychological Research Psychologische Forschung, 70, 88–102.

Dreisbach, G., & Haider, H. (2009). How task representations guide attention: Further evidence for the shielding function of task sets. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(2), 477–486.

Eberhardt, K., Esser, S., & Haider, H. (2017). Abstract feature codes: The basic modules of the implicit learning system. Journal of Experimental Psychology. Human Perception and Performance, 43(7), 1275–1290.

Frensch, P. A. (1998). One concept, multiple meanings: On how to define the concept of implicit learning. In M. A. Stadler & P. A. Frensch (Eds.), Handbook of implicit learning (pp. 47–104). Thousand Oaks, CA: Sage Publications, Inc.

Gaschler, R., Frensch, P. A., Cohen, A., & Wenke, D. (2012). Implicit sequence learning based on instructed task set. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 1389–1407.

Goschke, T., & Bolte, A. (2012). On the modularity of implicit sequence learning: Independent acquisition of spatial, symbolic, and manual sequences. Cognitive Psychology, 65, 284–320.

Grafton, S. T., Hazeltine, E., & Ivry, R. B. (1998). Abstract and effector-specific representations of motor sequences identified with PET. Journal of Neuroscience, 18, 9420–9428.

Grafton, S. T., Hazeltine, E., & Ivry, R. B. (2002). Motor sequence learning with the non-dominant hand: A PET functional imaging study. Experimental Brain Research, 146, 369–378.

Haider, H., Eberhardt, K., Esser, S., & Rose, M. (2014). Implicit visual learning: How the task set modulates learning by determining the stimulus-response binding. Consciousness and Cognition, 26, 145–161.

Haider, H., Eberhardt, K., Kunde, A., & Rose, M. (2012). Implicit visual learning and the expression of learning. Consciousness and Cognition, 22, 82–98.

Haider, H., Eichler, A., & Lange, T. (2011). An old problem: How can we distinguish between conscious and unconscious knowledge acquired in an implicit learning task? Consciousness and Cognition, 20, 658–672.

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494–500.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–937.

Howard, J. H., Mutter, S. A., & Howard, D. V. (1992). Serial pattern learning by event observation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18(5), 1029–1039.

Keele, S. W., Ivry, R., Mayr, U., Hazeltine, E., & Heuer, H. (2003). The cognitive and neural architecture of sequence representation. Psychological Review, 110(2), 316–339.

Keele, S. W., Jennings, P., Jones, S., Caulton, S., Caulton, D., & Cohen, A. (1995). On the modularity of sequence representation. Journal of Motor Behavior, 27, 17–30.

Loftus, G.R. & Masson, M.E. (1994). Using confidence intervals in within-subject designs. Psychonomic Bulletin & Review, 1(4), 476–490.

Magen, H., & Cohen, A. (2002). Action-based and vision-based selection of input: Two sources of control. Psychological Research Psychologische Forschung, 66, 247–259.

Magen, H., & Cohen, A. (2007). Modularity beyond perception: Evidence from single task interference paradigms. Cognitive Psychology, 55, 1–36.

Magen, H., & Cohen, A. (2010). Modularity beyond perception: Evidence from the PRP paradigm. Journal of Experimental Psychology: Human Perception and Performance, 36, 395–414.

Mayr, U. (1996). Spatial attention and implicit sequence learning: Evidence for independent learning of spatial and nonspatial sequences. Journal of Experimental Psychology: Learning, Memory and Cognition, 22, 350–364.

Memelink, J., & Hommel, B. (2013). Intentional weighting: A basic principle in cognitive control. Psychological Research Psychologische Forschung, 77, 249–259.

Nissen, M. J., & Bullemer, P. (1987). Attentional requirements of learning: Evidence from performance measure. Cognitive Psychology, 19, 1–32.

Schumacher, E. H., & Schwarb, H. (2009). Parallel Response Selection Disrupts Sequence Learning Under Dual-Task Conditions. Journal of Experimental Psychology: General, 138, 270–290.

Schwarb, H., & Schumacher, E. H. (2010). Implicit sequence learning is represented by stimulus-response rules. Memory and Cognition, 38, 677–688.

Vinter, A., & Perruchet, P. (2002). Implicit motor learning through observational training in adults and children. Memory and Cognition, 30, 256–261.

Whittlesea, B. W., & Dorken, M. D. (1993). Incidentally, things in general are particularly determined: An episodic-processing account of implicit learning. Journal of Experimental Psychology: General, 122, 227–248.

Willingham, D. B., Wells, L. A., Farrell, J. M., & Stemwedel, M. (2000). Implicit motor sequence learning is represented in response locations. Memory and Cognition, 28(3), 366–375.

Willingham, D. T. (1999). Implicit motor sequence learning is not purely perceptual. Memory and Cognition, 27, 561–572.

Acknowledgements

Hilde Haider was supported by a Grant from the German Research Foundation (HA-5447/10-1).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Experiment 1

In the Induction Phase, the participants in the Location- and the Color-Induction Conditions received different tasks within the same design. Therefore, we analyzed the data of the induction phase separately for each condition.

In the Color-Induction Condition in which participants were asked to count the appearance of one certain color during the block, the mean difference between the actually presented number of critical color appearance and participants’ answer was 3.4 (SD = 2.2) in Block 1 and 2.4 (SD = 1.12) in Block 2.

For the Location-Induction Condition we computed the mean percent errors and the means of the median RTs in the two Induction Blocks. The results are depicted in Table 4. As can be seen, error rate was moderate and no participants exhibited more than 5% errors.

The Training Phase was identical for the two conditions and was composed of eight blocks. Table 5 depicts median reaction times and mean error rates per condition per block. A 2 (Condition) × 8 (Block) ANOVA with mean error rates as dependent variable yielded a significant main effect of Block [F(7,224) = 4.04, MSE = 6.271, p < .001, ηp2 < 0.12], indicating that error rates decreased with practice (no other effect was significant, Fs < 2; ps > 0.2). Regarding the median RTs across all eight blocks, a 2 (Condition) × 8 (Block) ANOVA with median RT as dependent variable also revealed only a significant main effect of Block F(7,224) = 56.29, MSe = 1973, p < .001, ηp2 = 0.64; all other Fs < 2; ps > 0.2. This Block-effect was due to both conditions showing decreasing RTs over practice blocks. Thus, the two different induction phases between conditions did not alter the performance in the training phase.

Experiment 2

Also in the Induction Phase of Experiment 2, the participants in the Color-Induction Condition received within the same design a different task than the participants in the Location-Induction and the Control Conditions. Therefore, we analyzed the data of the Induction phase separately for the Color-Induction Condition and the other two conditions.

In the Color-Induction Condition in which participants were asked to count the appearance of one certain color during the block, the mean difference between the actually presented number of critical color appearance and participants’ answer was 3.1 (SD = 1.9) in Block 1 and 2.3 (SD = 1.2) in Block 2.

For the Location-Induction Condition we computed the mean percent errors and the means of the median RTs in the two Induction Blocks. The results are depicted in Table 6. As can be seen, error rate was moderate and no participants exhibited more than 5% errors.

The Training Phase was identical for the Color-Induction and the Location-Induction Conditions. The participants in the Control Condition received the same training. However, here the stimulus locations followed a pseudo-random sequence. Tables 7 and 8 depict the mean error rates and median RTs per condition per block. A 3 (Condition) × 8 (Block) mixed-design ANOVA with mean error rates as dependent variable yielded a significant main effect of Block [F(7,511) = 16.11, MSE = 0.008, p < .001, ηp2 = 0.18], indicating that error rates decreased with practice. In addition, also the interaction was significant [F(7,511) = 1.93, MSE = 0.008, p = .0212, ηp2 = 0.05]. This interaction was due to the Control Condition showing smaller decreases in error rates since participants here received a pseudo-random sequence. When testing only the two experimental conditions, the Condition × Block interaction entirely disappeared (F < 1).

Regarding the median RTs across all eight blocks, the 3 (Condition) × 8 (Block) mixed-design ANOVA with median RTs as dependent variable showed the analogous results. The main effect of Block was significant [F(7,511) = 56.29, MSe = 1973, p < .001, ηp2 = 0.64] as was the interaction [F(7,511) = 56.29, MSe = 1973, p < .001, ηp2 = 0.64]. This interaction again disappeared when testing only the two experimental conditions (F < 1 for the interaction).

Thus, the results of the Training phase replicated the findings of Experiment 1. In addition, they showed that the Control Condition differed from the two experimental conditions in that here, the participants were more error-prone and slower.

Rights and permissions

About this article

Cite this article

Haider, H., Esser, S. & Eberhardt, K. Feature codes in implicit sequence learning: perceived stimulus locations transfer to motor response locations. Psychological Research 84, 192–203 (2020). https://doi.org/10.1007/s00426-018-0980-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00426-018-0980-0