Abstract

We investigated under which conditions sequence learning in a serial reaction time task can be based on perceptual learning. A replication of the study of Mayr (1996) confirmed perceptual and motor learning when sequences were learned concurrently. However, between-participants manipulations of the motor and perceptual sequences only supported motor learning in cases of more complex deterministic and probabilistic sequence structures. Perceptual learning using a between-participants design could only be established with a simple deterministic sequence structure. The results seem to imply that perceptual learning can be facilitated by a concurrently learned motor sequence. Possibly, concurrent learning releases necessary attentional resources or induces a structured learning condition under which perceptual learning can take place. Alternatively, the underlying mechanism may rely on binding between the perceptual and motor sequences.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Learning about and adapting to our environment entail the prediction of a future sequence of events on the basis of preceding events. Over the past decade, learning sequences of events has been studied extensively by using the serial reaction time (SRT) task (Nissen & Bullemer, 1987). In a typical SRT task, participants have to respond as fast and accurately as possible to successively presented stimuli. They are not informed about the structured nature of the stimulus sequence. With practice, reaction times (RTs) progressively decrease. To demonstrate that this performance improvement is caused by sequence-specific knowledge and not by general practice effects, an unannounced switch is made to a random stimulus sequence. The cost in RTs on introduction of the random sequence is taken as an indirect measure of sequence learning.

The SRT task has often been used to investigate implicit learning, since sequence learning is believed to be based on the incidental learning of the sequence structure without the need for explicit knowledge or awareness (for reviews, see e.g., Shanks & St. John, 1994; Stadler & Frensch, 1998). However, the supposed implicit nature of the sequential knowledge is not the main issue of the present paper. Instead, we will focus on the debate as to whether sequence learning is based on the perceptual or motor characteristics of the sequence structure. This matter is of particular interest because it deals with the question of whether sequence learning is mediated by the perceptual or the motor brain system. Despite numerous studies (for a review, see Goschke, 1998) this issue still remains unclear and can be centralized around two main theoretical views.

According to the perceptual learning view, people primarily learn the structure of the stimulus sequence. That is, based on perception, associations are formed between successive stimuli (S-S learning). In contrast, the motor learning account states that sequence learning is mainly based on the response sequence, in the form of associations between successive responses (R-R learning). In addition, variations on the standard perceptual–motor debate have been proposed. On the one hand there are some indications that both kinds of associations may develop in a parallel way and both contribute to sequence learning (S-R learning). On the other hand, there is also evidence that sequence learning can be based on response-stimulus associations (R-S learning), in the sense that people become sensitive to the outcome (stimulus) that follows their action (response; Ziessler, 1994, 1998; Ziessler & Nattkemper, 2001).

Direct comparison between studies that favor either the perceptual or motor account is, however, rather difficult, due to the use of diverse variants of the SRT task and other methodological differences, which influence the amount of sequence learning. For example, the complexity of the sequence structure or the use of different response-stimulus intervals (RSI) are shown to have a strong impact on the size of learning effects (e.g., Frensch, Buchner, & Lin, 1994; Stadler & Neely, 1997; Soetens, Melis, & Notebaert, 2004). The aim of the present study was to contribute to the perceptual/motor debate when these differences are restricted to a minimum.

An overview of the main studies that examined perceptual or motor learning shows that it is generally accepted that motor learning plays a crucial role in sequence learning. Motor learning is a well-established learning phenomenon that is supported by a vast amount of behavioral (e.g., Nattkemper & Prinz, 1997; Willingham, 1999) as well as neuropsychological studies (e.g., Bischoff-Grethe, Goedert, Willingham, & Grafton, 2004; Grafton, Hazeltine, & Ivry, 1995; Rüsseler, & Rösler, 2000). For example, Willingham, Nissen, and Bullemer (1989, Experiment 3) found evidence for the motor learning of a color sequence, but were unable to show perceptual learning of a location sequence. All participants were trained to respond to the color of the stimulus, which appeared at different locations. Hence, the responses were determined by the target’s color, while the position of the target was irrelevant for responding. For the motor condition, color followed a repetitive sequence while location varied randomly. For the perceptual condition, location followed a repetitive sequence, but color was completely random. Both conditions were compared with a control group for which both positions and colors varied randomly. The data showed that RTs of participants in the motor condition, who were trained in the color sequence, decreased much faster over time than those of participants in the other two conditions, which did not reliably differ. However, motor learning does not imply that learning is restricted to the level of the specific effectors. There is a growing consensus that learning occurs at a more abstract level of motor planning (Keele, Jennings, Jones, Caulton, & Cohen, 1995). For example, Willingham, Wells, Farrell, and Stemwedel (2000) suggest that sequence learning is represented in response locations.

In contrast to the well-documented role of motor learning, there is an ongoing debate about the underlying mechanisms of perceptual learning. In the present study, we wanted to determine which experimental conditions are necessary for perceptual learning to arise. An overview of the studies that favor perceptual learning shows that perceptual learning has been established using different SRT paradigms, but it remains an open question which precise factors are crucial in order for perceptual learning to take place. According to Howard, Mutter, and Howard (1992), merely observing a sequence suffices for perceptual learning, but replications of their study by Willingham (1999, Experiment 1) and Kelly and Burton (2001) failed to demonstrate reliable observational learning. According to Kelly and Burton, observational learning is not likely to occur with complex sequences; Howard et al.’s results can be explained by the use of simple, unbalanced sequences.

Cock, Berry, and Buchner (2002, Experiment 2) established perceptual learning by using an alternative SRT task in which participants were presented with two simultaneously appearing colored asterisks during training. Participants were instructed to react only to the location of one colored asterisk whilst ignoring the other colored asterisk. Results showed that participants, who were trained in a sequence of asterisks that were to be ignored, were more disrupted when this sequence was imposed on the asterisk that required a response than participants who saw a random sequence of asterisks that were to be ignored. The authors explained their results in terms of a negative priming effect in sequence learning.

Other support for perceptual learning is provided by the studies of Heuer, Schmidtke, and Kleinsorge (2001) and Koch (2001). Both demonstrated learning of a sequence of task sets that was uncorrelated with the responses, by using a task-switching paradigm within the SRT task.

Perhaps the most convincing evidence of purely perceptual sequence learning comes from Remillard (2003). He showed that participants were able to learn an irrelevant position sequence that was independent of the responses. However, perceptual learning proved to be restricted to sequences with first-order constraints, in which the next target can be predicted on the basis of the previous target alone. Perceptual learning was absent when sequences of a higher order were used, where predicting the target requires the knowledge of two (second order) or three (third order) preceding stimuli.

Using another approach, Mayr (1996) showed that perceptual and motor learning can both contribute to sequence learning at the same time. In his study (Experiment 1), participants were instructed to respond to an object (black or white squares and circles) that could appear in four different locations. The object as well as its location was presented following different, uncorrelated sequences in order to separate the contributions of object and location learning. The results showed an increase in RT when the position and object sequences switched to a random order separately and an even larger increase in RT when both sequences switched to a random order concurrently. Mayr’s study demonstrated that motor (object) and perceptual (location) learning can develop independently and in parallel. Moreover, simultaneous learning of the position and object sequences indicated an extra benefit, which Shin and Ivry (2002) refer to as a multiple-sequence benefit.

In a replication of the Mayr study, however, Rüsseler, Münte, and Rösler (2002) were not able to demonstrate perceptual spatial learning. They only found evidence of object and simultaneous learning, and no multiple-sequence benefit was assessed.

To summarize, perceptual learning has been found in several studies, but does not systematically arise like motor learning. The cited studies also use different variants of the SRT task and even replications of the same experimental procedure lead to differences in the amount of perceptual learning. Therefore, in the present study we wanted to determine in a more systematic way under which conditions perceptual learning can take place. Factors that could influence the amount of sequence learning were kept largely under control. For example, the RSI is set to 50 ms throughout all experiments and the perceptual and response dimensions were always the target’s position and color respectively. In Experiment 1, we tried to replicate Mayr’s findings of parallel perceptual and motor learning. Thereafter, in Experiments 2 to 4, we determined whether perceptual learning could also be found with a between-participants manipulation of the perceptual and motor sequences instead of a within-participants manipulation, as in the Mayr design. Learning in these experiments was tested indirectly by randomizing the sequence after sufficient training. Finally, in Experiment 5, we examined the sensitivity of the between-participants design for detecting perceptual sequence learning. This was done by using a very simple sequence to assure that participants would be able to learn the sequence perceptually.

Experiment 1

Mayr (1996) was able to show independent motor and perceptual learning, but due to inconsistent results between the original study and a later replication of Rüsseler et al. (2002), it remains unclear whether perceptual learning effects are general within the Mayr design. Therefore, we first tried to replicate Mayr’s Experiment 1 and added a few important modifications. First of all, the present stimulus sequences are longer than in the original study to see whether learning can be generalized to more complex sequence structures. Secondly, whereas in the original study the perceptual and motor sequences switched to a random order before the introduction of the combined random block, in the present experiment all three random sequence blocks are counterbalanced across participants. Finally, we reduced the visual angle between the different stimulus positions.

Method

Participants

A total of 18 volunteers (10 women and 8 men) participated in the study. Their mean age was 22.61 years. All participants reported a normal or corrected-to-normal vision. None of them had previous experience with SRT or sequence learning tasks.

Stimuli and apparatus

Participants were tested individually in semi-darkened cubicles in the psychological laboratory of the Vrije Universiteit Brussel (VUB). The SRT experiment was run on IBM-compatible Pentium computers using MEL Professional software (Schneider, 1996). The target stimulus was a circle of 1 cm in diameter that appeared on the screen in red, green, blue or yellow against a black background. Stimuli were positioned in one of four corners of an imaginary square with a side of 11 cm. A white fixation cross (7 mm by 7 mm) was displayed in the center of the square and remained on the screen throughout a block. With a viewing distance of 60 cm, the between-stimulus visual angle amounted to 10.39° for stimuli placed along the side length and 14.54° for oblique stimuli. A four-stimulus four-response mapping was used, with response keys situated on the bottom row of a standard ‘azerty’ keyboard. Participants pushed the ‘w’ and ‘x’ keys with the middle and index fingers of their left hand for a red and green stimulus respectively. With the index and middle finger of their right hand they were instructed to push for a blue and yellow stimulus on the ‘:’ and ‘=’ keys respectively.

Design and procedure

Before the beginning of the experiment, participants were instructed to react as fast as possible to the color of the stimulus and to restrict the error rate to a maximum of 5%. Participants first completed two practice blocks of 50 trials with random appearance of color and location to learn the color-response assignment. After practice, participants received 20 experimental blocks of 100 trials each. At the start of each block, a warning for the upcoming trials appeared, urging participants to rest their fingers lightly on the response keys. Reaction time (RT) and accuracy were recorded in each trial. Response initiation terminated stimulus exposure and started the RSI of 50 ms. On incorrect responses a 50-ms tone of 400 Hz sounded. No error corrections were possible. Blocks were separated by a 30-s rest period, during which participants were informed about their error rate in the previous block.

Except for the random Blocks 10, 14, and 18, all experimental blocks followed two fixed sequences composed of 12 and 13 elements, assigned by counterbalancing to either location (perceptual) or color (motor). The 12-element sequence is the one used by Reed and Johnson (1994; 121342314324; numbers for location refer to the four corners starting with 1 at the upper left corner and going clockwise around the screen; for the color sequence number 1 corresponds to a red stimulus, 2 to green, 3 to blue, and 4 to yellow). This sequence is characterized by second-order constraints, meaning that an upcoming stimulus is determined by the two preceding stimuli. Hence, knowing the previous stimulus alone is not sufficient to predict the current stimulus. Subsequently, we paired each element of the 12-stimuli sequence once with each element of a 13-stimuli sequence (3134214324124). This ensued in a compound fixed sequence of 156 elements, which was cycled through continuously. So when Stimulus 156 was presented before all trials of a block had passed, it was followed by Stimulus 1 and subsequent stimuli. In the random Blocks 10, 14, and 18 the color sequence, the location sequence or both sequences switched to a random order to assess motor, perceptual, and combined learning respectively. The order of the introduction of the random sequences (color, location or both) was counterbalanced over the three random blocks. This entails that for one group of participants, the color sequence switched to a random order in Block 10, the location sequence in Block 14, and both sequences in Block 18. For the other two groups the order of the random sequence blocks was altered according to a Latin Square scheme. All random stimulus sequences were generated on the basis of a random seed that differed between participants.

Results and discussion

Responses faster than 100 ms and slower than 2.000 ms were considered outliers and were discarded from statistical analysis. Also excluded from further analysis were errors and four stimuli following an error, as were the first four trials of a series. This was done for all experiments.

Errors

Mean error rate per block amounted to 2.9% (SD = .29). The correlation between error rates and RTs revealed no indications of a speed–accuracy trade-off (r = .28, not significant). Error rates were not analyzed further (see Table 1 for error rates per block).

Reaction times

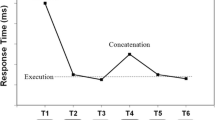

A repeated measures ANOVA was carried out on the participants’ mean correct RTs with the 17 training blocks as within-participants factor. A significant RT decrease over training blocks revealed a nonspecific learning effect, F(16,240) = 18.91, p = .000. Furthermore, as shown in Fig. 1, there was an increase of RT in each of the random Blocks 10, 14, and 18.

To determine sequence-specific learning, we analyzed the difference in RT between the random block and the mean of the respective adjacent structured sequence blocks for every sequence condition (motor color, perceptual location or both). We refer to this difference in RT as the amount of sequence learning. A repeated measures ANOVA with sequence learning and sequence condition as within-participants factors revealed no differences in RT between sequence conditions, F(2,34) = .55, p = .58. The general RT increase with the introduction of random sequences illustrated in Fig. 1 was confirmed by a significant main effect of sequence learning, F(1,17) = 50.97, p < .001. Importantly, there was a significant interaction between sequence learning and sequence condition, showing that the amount of learning differed between sequence conditions, F(2,34) = 4.54, p < .05. Figure 2 shows the learning performance for the three sequence conditions.

Mean RT as a function of the amount of sequence learning (difference between random block and mean of adjacent structured blocks) for the color, location, and both sequence conditions in Experiment 1. The blocks were color, location or both sequences switched to a random order were counterbalanced over Blocks 10, 14, and 18.

Further analysis with planned comparisons confirmed significant learning of the (motor) color sequence (44 ms), the (perceptual) location sequence (19 ms), and the combined sequences (43 ms); F(1,17) = 43.63, p < .001, F(1,17) = 5.24, p < .05, and F(1,17) = 33.15, p < .001 respectively. Moreover, color learning did not differ reliably from combined learning, F(1,17) = .04, p = .85, but both the color and the combined learning effects were more pronounced than location learning, F(1,17) = 5.88, p < .05 and F(1,17) = 4.62, p < .05 respectively.

The results of Experiment 1 mainly agree with the findings of Mayr (1996). Participants were not only able to learn the color sequence, which indicates motor learning, but also demonstrated perceptual learning in the form of location learning. Additionally, the results showed combined sequence learning of both the color and location sequences. Unlike Mayr, we were unable to find a multiple-sequence benefit; the combined learning effect was not the most pronounced. This could indicate that the multiple-sequence benefit in Mayr’s study was likely to be due to the fact that participants always received the combined random block after two random motor or perceptual blocks, that is, after more training.

In the present study motor (color) and combined learning were both more apparent than perceptual (location) learning. This seems to suggest that motor learning contributes more to sequence learning than perceptual learning. Although perceptual learning was significant, participants do not seem to have any extra benefit from it, since the RT increased equally in the motor and the combined random blocks. Motor learning, on the other hand, appears to be crucial since it did not differ reliably from combined sequence learning.

Nevertheless, both the findings of Mayr and those of the present study provide support for at least a partial role of perceptual learning. These results are inconsistent with the lack of perceptual learning demonstrated in the study of Willingham et al. (1989, Experiment 3). A possible explanation is that comparisons of the sequence structure in the latter study were made between-participants. Structured sequence groups were contrasted with a random sequence group instead of interrupting the sequence by a random block. This measure is possibly less sensitive. Not only the sequence structure was assessed between-participants, but also motor and perceptual learning was tested between-participants. On the other hand, Mayr (Experiment 2) also found perceptual learning when he used a between-participants manipulation of the motor and perceptual sequences. Unfortunately, perceptual learning was tested with a very simple spatial sequence of six items in length. So it remains uncertain whether perceptual learning will also take place when more complex sequences are manipulated between-participants.

To verify this, we conducted three experiments in which the contributions of motor and perceptual learning were tested by means of a between-participants manipulation of the sequences. Participants were assigned to either a perceptual location condition in which only the irrelevant perceptual location of the targets was structured or to a motor color condition in which only the relevant color of the targets was structured. Sequence learning was tested by means of a standard within-participants manipulation of the sequence structure. After sufficient practice, the location and color sequence switched to a random order to assess perceptual and motor learning respectively.

Experiment 2

Method

Participants

A total of 33 undergraduate students (27 women and 6 men) of the VUB participated in return for extra credit in an introductory psychology course. Their mean age was 18.77 years. Participants were randomly assigned to one of two experimental conditions: 17 to the (motor) color condition and 16 to the (perceptual) location condition.

Stimuli and procedure

The stimuli and procedure were the same as in Experiment 1, except in the following respects. In each trial, the target stimulus (red, green, blue or yellow circle) appeared in one of four positions in a row. Stimulus positions were each marked by a white horizontal line of 1 cm in length on the computer screen. Position markers remained on the screen throughout each block. The distance between two adjacent stimulus positions (or targets) measured 8 cm. With a viewing distance of 60 cm, the visual angle of the distance between the targets was 7.59°. Participants again responded to the color of the stimulus by pressing the same response keys as in Experiment 1, namely ‘w,’ ‘x,’ ‘:,’ and ‘=’ on the bottom row of an ‘azerty’ keyboard.

A fixed stimulus sequence of 32 elements was used. The sequence was completely balanced up to second order (43244213234433111331412214241223; the numbers 1 to 4 refer to the location of the four horizontal positions starting with 1 for the leftmost position, 2 for left, 3 for right, and 4 for the rightmost position. For the color sequence number 1 corresponds to a red stimulus, 2 to green, 3 to blue, and 4 to yellow). Since a complex sequence would require more practice to learn, participants had to complete two learning sessions on two consecutive days. Each session consisted of 15 blocks of 100 trials, preceded by two 50-trial random practice blocks. Again, a 30-s rest period was inserted between blocks. All structured blocks followed the sequence of 32 stimuli, which was cycled through continuously. In random Blocks 10 to 12 of Session 2, a random stimulus sequence was introduced, generated on the basis of a random seed that differed between participants. As in Experiment 1, participants were instructed to react as fast as possible to the color of the stimulus and to restrict the error rate to a maximum of 5%.

To assess motor learning, color followed the structured sequence in the color condition, while location varied randomly. Conversely, in the location condition, the perceptual position sequence was structured and color varied randomly.

Results and discussion

Statistical analyses were performed on the means of three successive blocks of 100 trials, which from this point forward will be referred to as one block. Consequently, the experiment consists of ten experimental blocks, five blocks per session. All blocks contained the structured stimulus sequence of 32 elements, except for Block 9 in which the sequence was random.

Errors

Mean error rate per block amounted to 2.4% (SD = .26) in the (motor) color condition and 2.4% (SD = .27) in the (perceptual) location condition. The error rates did not correlate with RTs (r = .41 for color and r = .37 for location, both not significant) and were not analyzed further (see Table 2 for error rates per block).

Reaction times

The RT data of the nine training blocks were subjected to a repeated measures ANOVA with sequence condition (color or location) as between-participants factor and training block as within-participants factor. The difference in RT between sequence conditions was not significant, F(1,31) = 1.08, p = .31. Nonspecific learning was indicated by a significant decrease in RTs during training, F(8,248) = 59.60, p = .000 that did not differ between sequence conditions, F(8,248) = .60, p = .78. Figure 3 illustrates the learning curves for the (motor) color and (perceptual) location groups.

The amount of sequence learning was derived from an increase in RTs in the random Block 9 compared with the mean of the adjacent structured sequence Blocks 8 and 10. Planned comparisons indicated that, although the RT increase in the random block did not differ significantly between the two conditions, F(1,31) = .79, p = .38, sequence learning emerged in the (motor) color condition (18 ms), but not in the (perceptual) location condition (10 ms); F(1,31) = 8.22, p < .01, F(1,31) = 2.39, p = .13 respectively.

The results of Experiment 2 demonstrated that participants were able to learn the color sequence, but not the location sequence. This implies that the fixed sequence of 32 stimuli was only learned when the motor sequence was structured. We found no evidence of perceptual learning when the sequence structure was imposed on the irrelevant location of the stimuli.

While Experiment 2 does not support perceptual learning, motor learning was also less pronounced than in Experiment 1 (18 vs. 44 ms). The smaller learning effect is probably caused by the complex sequence structure of 32 elements. The large sequence length does not only result in a higher amount of stimulus transitions. Also, the fact that the sequence is balanced up to second order, renders the sequence more structurally complex. Since perceptual learning in particular seems vulnerable to stimulus complexity (e.g., Kelly & Burton, 2001; Remillard, 2003), we replicated Experiment 2 with the original Reed and Johnson sequence structure of 12 elements of Experiment 1 (121342314324; the numbers 1 to 4 refer to the location of the four horizontal positions starting with 1 at the outermost left position, 2 for left, 3 for right, and 4 for the outermost right position. Again, for the color sequence number 1 corresponds to a red stimulus, 2 to green, 3 to blue, and 4 to yellow).

Experiment 3

Method

Unless otherwise mentioned, the method was the same as in Experiment 2. A total of 27 undergraduate students (22 women and 5 men) of the VUB participated in return for course credit. Their mean age was 19.09 years. They were randomly assigned to one of two experimental conditions: 14 to the (motor) color condition, 13 to the (perceptual) location condition. Again, participants responded as fast and accurately as possible to the color of the stimulus by pressing the corresponding response keys. As in Experiment 2, participants completed 10 blocks of 300 trials spread over two subsequent days. All blocks contained the structured 12-element sequence of Reed and Johnson (1994), except for Block 9 in which the stimulus sequence was random. To establish motor learning, color followed the structured sequence, while location varied randomly. Perceptual learning, on the other hand, was tested by structuring the location while color varied randomly.

Results and discussion

Errors

The mean error rate per block was low for both the (motor) color (M = 2.3; SD = .51) and the (perceptual) location conditions (M = 2.5; SD = .28). The error rates were positively correlated with RTs (r = .64 for color and r = .77 for location, both p < .05). Errors were not analyzed further (see Table 2 for error rates per block).

Reaction times

A repeated measures ANOVA carried out on the RT data of all nine training blocks with sequence condition (color or location) as between-participants factor and training block as within-participants factor, indicated that RTs did not differ between sequence conditions, F(1,25) = .41, p = .53. Furthermore, RT decreased significantly over training, F(8,200) = 54.82, p = .000. A significant interaction between sequence condition and training block revealed that RTs decreased faster over time for the (motor) color than for the (perceptual) location condition, F(8,200) = 11.92, p = .000, as can also be seen in Fig. 4.

Planned comparisons confirmed a difference in sequence learning between conditions, F(1,25)=11.25, p <.001. Sequence learning, as indicated by a RT increase in the random Block 9 compared with the structured Blocks 8 and 10, was prominent in the color condition (92 ms), but absent in the location condition (6 ms), F(1,25)=26.96, p <.001 and F(1,25)=.12, p =.73 respectively.

In Experiment 3, participants demonstrated extensive motor learning of the color sequence, but no perceptual learning of the location sequence. Since these results are consistent with Experiment 2, the lack of perceptual learning demonstrated in Experiment 2 cannot be attributed to the complexity of the stimulus sequence of 32 elements.

Experiment 4

How can we explain that Experiment 3 failed to demonstrate perceptual learning with the Reed and Johnson sequence of 12 stimuli? In Experiment 1, perceptual learning clearly occurred when the same sequence structure was used. Unlike Experiment 3, however, the assessment of perceptual learning with the Reed and Johnson sequence was not so pure in Experiment 1, where two different sequence structures of 12 and 13 in length were intermixed. So learning of second-order constraints that characterized the 12-stimuli sequence was only tested partially in this experiment. In Experiment 3 as well as in Experiment 2, however, the perceptual sequences were always entirely made up of second-order constraints. Perceptual learning did not take place, in agreement with Remillard who was only able to demonstrate perceptual learning with sequences of first-order constraints. In Experiment 4, we therefore verified if perceptual learning could take place using a sequence structure with first-order constraints. First-order constraints allow prediction of an upcoming stimulus on the basis of the stimulus immediately preceding it. Soetens et al. (2004) demonstrated that this type of material leads to strong learning effects. However, since using fixed sequences with first-order restrictions can result in easy detection of transition regularities, this kind of learning would not be comparable to the previous experiments. For that reason, we used a probabilistic sequence that was generated on the basis of an artificial grammar. If sequence complexity indeed inhibited perceptual learning in the previous Experiments 2 and 3, we could expect perceptual learning to take place with a sequence composed of first-order constraints, in agreement with the findings of Remillard.

Method

Unless otherwise mentioned, the method was the same as in Experiments 2 and 3. A total of 39 undergraduate students (34 women and 5 men) of the VUB participated in return for course credit. Their mean age was 19.19 years. They were randomly assigned to one of two experimental conditions: 20 to the (motor) color condition and 19 to the (perceptual) location condition.

A sequence of 167 stimuli with first-order restrictions was generated on the basis of the artificial grammar depicted in Fig. 5.

The artificial grammar used in Experiment 4. S1 to S4 denote the four stimulus alternatives (red, green, blue, and yellow for color; leftmost, left, right, and rightmost for location). The arrows indicate permitted first-order transitions between successive stimuli, with first-order probabilities always being .50.

The first-order constraints entail that each stimulus can be followed only by two of four stimulus alternatives (see Soetens et al., 2004 for a detailed description of the grammar). First-order probabilities were always .50. As with fixed sequences, the numbers 1 to 4 refer to the location of the four horizontal positions, starting with 1 at the outermost left position and so on up to 4 for the outermost right position. For the color sequence, number 1 corresponds to a red stimulus, 2 to green, 3 to blue, and 4 to yellow.

As in Experiments 2 and 3, participants completed two sessions of five blocks of 300 trials each on two successive days. In all structured blocks, the sequence of 167 stimuli was cycled through continuously, so that when Stimulus 167 was presented before all trials of a block had passed, it was followed by Stimulus 1 and subsequent stimuli. In Block 9 of Session 2, the sequence switched to a random order that differed between participants. Again, in the (motor) color condition, color followed the structured sequence, while location varied randomly. In the (perceptual) location condition, location was structured and color varied randomly.

Results and discussion

Errors

Mean error rate per block was low for both the (motor) color condition (M = 2.2; SD = .54) and the (perceptual) location condition (M = 1.7; SD = .25). The correlation between error rates and RTs was positive for the color condition (r = .69, p < .05), but absent for the location condition (r = .51, ns). Errors were not analyzed further (see Table 2 for error rates per block).

Reaction times

A repeated measures ANOVA with sequence condition (color or location) as between-participants factor and training block as within-participants factor, revealed that RTs were generally lower for the (motor) color condition than for the (perceptual) location condition, F(1,37) = 7.70, p < .01. Furthermore, RT decreased with training, F(8,296) = 73.45, p = .000, and the decrease was more pronounced for the color than for the location condition, F(8,296) = 3.38, p = .001.

Figure 6 shows a strong learning effect in the color condition, but no learning in the location condition. Planned comparisons confirmed that the RT increase in the random Block 9 relative to the RTs of the surrounding structured Blocks 8 and 10 is different between conditions, F(1,37) = 99.35, p = .000. Apparent learning occurred in the color condition (95 ms), F(1,37) = 199.79, p = .000. However, despite the use of a sequence with first-order restrictions, learning was completely absent in the location condition (−1 ms), F(1,37) = .02, p = .88.

Mean RTs per block for the color (motor) and location (perceptual) conditions in Experiment 4. Except for the random Block 9, all blocks were structured according to the artificial grammar depicted in Fig. 5.

We can conclude that the results of Experiment 4 are in complete agreement with the previous Experiments 2 and 3. Although a sequence with first-order constraints was used, perceptual (location) learning did not come about, while motor (color) learning again manifested clearly. The lack of perceptual learning in Experiments 2 and 3, therefore, cannot be ascribed to the use of sequences with second-order constraints.

Experiments 2 to 4 demonstrate that perceptual learning of an irrelevant position sequence is unlikely to occur using a between-participants design. All together, the results seem to indicate that perceptual learning can only take place in a within-participants design where participants concurrently learn a motor sequence, as is the case in Experiment 1. One possible explanation is that different cognitive processes are involved when participants perceive a structured sequence, while concurrently learning another uncorrelated motor sequence (Experiment 1), compared with a situation where participants can only learn through observation (Experiments 2–4). However, there are some important differences between Experiment 1 and Experiments 2–4 that can account for the discrepancy in perceptual learning as well. For example, in Experiment 1, the targets moved along two dimensions in the corners of a virtual square, whereas in Experiments 2–4 the target positions were linearly arranged. Although the large visual angle required eye movements in all experiments, it could be that a different pattern of eye movements could explain the inconsistent results between Experiment 1 and the subsequent Experiments 2–4. Moreover, since we were not able to demonstrate perceptual learning in Experiments 2–4, in which we used a between-participants design, it remains an issue if this design has enough sensitivity to detect perceptual learning effects. Put differently, does the set-up allow participants to express their sequential perceptual knowledge while responding to a random target? Can this knowledge be reflected in the RTs, so that randomizing the perceptual sequence leads to an increase in RT?

To determine whether the absence of perceptual learning in Experiments 2–4 is caused by a lack of sensitivity of the experimental design or can be ascribed to the differences in task characteristics with Experiment 1, we need to conduct an experiment in which perceptual learning can emerge within similar conditions as in Experiments 2–4. Following Mayr (1996, Experiment 2), who demonstrated perceptual learning with simple sequence structures, we examined whether perceptual learning in a between-participants design can take place with a simple sequence structure.

Experiment 5

The purpose of Experiment 5 was to determine whether perceptual learning can take place using the between-participants design of the previous Experiments 2–4. If learning emerges, we can safely state that perceptual knowledge has an influence on the responses to a random target, and that an absence of perceptual learning in Experiments 2–4 cannot be ascribed to a lack of sensitivity of the design.

Method

Participants

A total of 19 paid volunteers (9 women and 10 men) participated in the study. Their mean age was 20.84 years.

Stimuli and procedure

The experiment was run on IBM-compatible personal computers using E-prime Version 1.1 software (Schneider, Eschman, & Zuccolotto, 2002). The method was comparable to the perceptual location condition of the previous Experiments 2–4. In each trial, a colored dot of 1 cm in diameter appeared against a dark background in one of four horizontally aligned white squares with sides 1.5 cm in length that remained on screen throughout a block. Gaps between two squares measured 2.5 cm (or 2.39° with a viewing distance of 60 cm); the between-stimulus distance amounted to 4 cm (3.81°). Participants were instructed to respond to the color of the target dot by pressing the adjacent ‘c,’ ‘v,’ ‘b,’ and ‘n’ keys on the bottom row of a standard ‘azerty’ keyboard for a red, green, blue or yellow dot respectively. Responses were made with the middle and index fingers of both hands. The experiment started with two practice blocks of 50 trials each. Both the irrelevant location and the relevant color of the target varied randomly during practice. After practice, participants completed 15 experimental blocks, consisting of 100 trials. In all the experimental blocks, except for the random Block 13, the target’s position changed according to a very simple deterministic sequence of eight stimuli with first-order restrictions (12431423; numbers refer to the four positions with 1 for the leftmost position, 2 for the left, 3 for the right, and 4 for the rightmost position). The sequence was continuously repeated over blocks, except for the random Block 13, where the colored target appeared randomly in one of four positions. The color of the target was random throughout the entire experiment. Since the perceptual sequence was very simple, the experiment only consists of one session instead of two like in Experiments 2–4. The RSI was set to 50 ms, except in the case of an incorrect response when the word ‘Error’ was briefly presented for 750 ms. Instructions stressed the importance of fast responding. After each block, participants received feedback on both their error rate and their mean RT for that particular block. Blocks were separated by a break of 30 s.

Results and discussion

Errors

Mean error rate per block amounted to 4.8% (SD = 1.41). The errors did not correlate with RTs (r = .44, not significant) and were not analyzed further (see Table 3 for error rates per block).

Reaction times

A repeated measures ANOVA performed on the 14 training blocks as within-participants factors indicated that the RTs decreased over training, F(13,234) = 3.83, p < .001 (see Fig. 7).

To analyze sequence-specific perceptual learning, we compared the RTs in the random Block 13 with the mean of the surrounding structured Blocks 12 and 14. A planned comparison test revealed that RTs significantly increased (16 ms) with the introduction of the random sequence in Block 13, thus, indicating perceptual learning of the location sequence, F(1,18) = 4.58, p < .05.

The results of Experiment 5 demonstrated that knowledge of an irrelevant location sequence can be reflected in the responses to a random target. Therefore, an absence of perceptual learning in Experiments 2 to 4 cannot be ascribed to a lack of sensitivity of the between-participants design used.

General discussion

In the present study we investigated the perceptual or motor nature of sequence learning in SRT tasks. Unlike motor learning that arises as a general learning effect, it is unclear under which circumstances learning can be based on perception. Therefore, we wanted to determine in a systematic way which task conditions are necessary for perceptual learning to occur. In agreement with Mayr (1996), we found that perceptual learning can take place with concurrent motor learning. However, we were unable to demonstrate perceptual learning of similar complex sequences when these were trained independently from the motor sequence. In a last experiment, we showed that perceptual knowledge can be expressed in the responses to a random target when participants are trained with a very simple perceptual sequence, a condition known to enhance perceptual learning (Mayr, 1996; Kelly & Burton, 2001). Therefore, the results seem to imply that perceptual learning of more complex sequences is only possible under conditions of a concurrently learned motor sequence.

In Experiment 1, we replicated the study of Mayr (1996) and confirmed that participants were able to learn uncorrelated perceptual and motor sequences independently in parallel. However, perceptual learning effects were rather small while motor and combined sequence learning were comparable, indicating that participants did not benefit much from additional perceptual learning in the combined learning conditions. This effect did not occur in Mayr’s original study. Possibly, the small perceptual learning effect was caused by the complexity of the sequence structures we used. As pointed out by Kelly and Burton (2001) and supported by Remillard (2003), perceptual learning is much more vulnerable to sequence complexity than motor learning. This latter type of learning has been found to occur with different types of sequences, from simple fixed sequences to complex probabilistic sequences based on artificial grammars (e.g., Cleeremans & McClelland, 1991). Alternatively, it could be that perceptual learning was less pronounced due to the way we counterbalanced the random blocks. Where counterbalancing of the three random blocks in the present study was complete, Mayr always presented the combined random block as the last random block. This could also explain the absence of a multiple-sequence benefit in our study. Possibly, incomplete counterbalancing could account for the findings of Rüsseler et al. (2002), who were unable to demonstrate perceptual spatial learning in a replication of the Mayr study. Rüsseler et al. always tested spatial learning first, so insufficient training could be a plausible explanation for a lack of perceptual learning. These results stress the importance of a complete counterbalancing of the random sequence blocks.

The perceptual learning effect emerging in Experiment 1 is not in agreement with the results of Willingham et al. (1989), who found no evidence of perceptual learning when using a between-participants measure of learning. Therefore, in Experiments 2, 3, and 4, we examined whether perceptual learning would occur when the motor and perceptual sequences were manipulated between participants. Perceptual learning was absent in all three experiments.Footnote 1

In Experiment 5, we verified whether the absence of perceptual learning in Experiments 2 to 4 could be due to a lack of sensitivity of the between-participants design used to detect perceptual learning. In agreement with Mayr (1996, Experiment 2), we demonstrated that perceptual learning can take place with a very simple sequence structure (see also Kelly & Burton, 2001). This rules out the alternative explanation that participants are unable to express their perceptual sequential knowledge in the responses to a random target.

The present study seems to indicate that perceptual learning of more complex sequence structures, as emerging in Experiment 1, only takes place under specific task conditions. It seems that when the structural characteristics of the SRT task are solely determined by the perceptual sequence, sequence learning can only occur with very simple sequence structures (Experiment 5). In cases of more complex sequences, like deterministic sequences of second-order constraints (Experiments 2 and 3), or probabilistic sequences with first-order restrictions (Experiment 4), perceptual learning remains absent. Perceptual learning of more complex sequences, however, does seem possible when the motor sequence is also structured, as in the Mayr design (Experiment 1).

This would imply that perceptual learning only takes place when certain conditions are met, when learning is explicit (e.g., Rüsseler & Rösler, 2000), when learning involves simple sequence structures (e.g., Mayr, 1996, Experiment 2; Howard et al., 1992), or when a motor sequence is learned concurrently (Mayr, Experiment 1). The other factors determining the occurrence of perceptual learning are not yet clear, since it is difficult to establish learning that is truly completely unaffected by either the responses or response-related processes. For example, the results of Cock, Berry, and Buchner (2002), who found learning of an ignored sequence of stimuli, can also be interpreted in terms of response inhibition processes. So that what participants learned was actually a sequence of responses that were to be inhibited, rather than a response-independent stimulus sequence. On the other hand, the use of simple sequences may also explain their results.

Learning sequences of tasks that are independent of the responses, as assessed by Koch (2001) as well as Heuer et al. (2001), is again not completely independent of response-related processes. Since every trial forces the participant to reconsider the task set or the S-R mapping, this type of learning could well be seen as learning a sequence of S-R mappings. This is especially the case in the experiments of Heuer et al. where compatible and incompatible S-R mapping take turns. S-R mapping is well known to be influenced by response selection processes, so this type of learning is again not purely perceptual.

Likewise, varying the RSIs (Experiment 1) or stimulus onset asynchronies (SOAs, Experiment 2) to assess temporal sequence learning in correlation with spatial learning, as was done by Shin and Ivry (2002), also clearly affects response-related processes, like the stage of response preparation.

Coming back to the Mayr design, why would a concurrently learned motor sequence facilitate perceptual learning? A possible explanation is that when participants are engaged in motor learning, this encourages them to search for further structure in the task.Footnote 2 So when they are already embedded in a structural learning context, it is easier for the perceptual learning system to catch on. This would mean that when structure is provided by the SRT task, participants are able to learn additional structures, like perceptual sequence structures. Whether perceptual learning is only enhanced in cases of additional motor structure requires more investigation. But it does seem that when the perceptual sequence itself is sufficiently salient, as in Experiment 5, no additional structure is required for perceptual learning to take place.

Alternatively, the occurrence of perceptual learning could be related to the available attentional capacity. Because the motor sequence in the Mayr design speeds up responses, more attentional resources become available. As a result, compared with a situation where all resources are needed in order to control random response events, the spare capacity can be used to ‘discover’ other task structures, like a perceptual sequence. In this way, perceptual learning can be considered to be a secondary task and takes place to the extent that the primary task, i.e., motor sequence learning, leaves sufficient resources for the secondary task. Thus, when motor learning demands less capacity, as in the Mayr design, perceptual learning seems to be enhanced. This hypothesis can be examined, for example, by manipulating the complexity of the motor sequence within the Mayr design. If perceptual learning is related to attentional resources, it is likely to be affected by the complexity of the motor sequence. Likewise, the degree to which perceptual learning takes place depends upon the complexity of the perceptual sequence itself. In Experiments 2–5, the primary task always consisted of responding to random target features. But only with a very simple, salient perceptual sequence (Experiment 5), the remaining attentional resources were sufficient for learning to occur. Within the attentional capacity view, the amount of training can also be related to the amount of perceptual learning. The more training, the more the task becomes automatic. This would imply that with sufficient training, more complex sequence structures can be learned.

It is possible that the capacity hypothesis can account for the results of Remillard (2003). Contrary to our results, he was able to demonstrate perceptual learning of a complex position sequence that was unrelated to the random responses. In his experiments, participants had to respond to the identity of a randomly changing bigram (xo or ox) that was marked in one of six locations. So, not only was response discrimination limited to two choices instead of four, the positional sequence itself was also very salient with six alternatives. Together with an extensive training phase of 3 days, it seems that his type of SRT task allows for more attentional resources to be allocated to perceptual learning than the standard version of the SRT task used in the present study.

The attentional capacity view of sequence learning implies that sequence learning in its origin is not an automatic process, but instead is capacity-demanding. In accordance with this view, a number of studies have shown that learning is adversely affected in a double-task paradigm. Participants performing the SRT task under single task conditions show better learning that participants who additionally perform a concurrent task, mostly a tone-counting task (e.g., Cohen, Ivry, & Keele, 1990). However, the attention account is challenged by other researchers. For example, Frensch, Lin, and Buchner (1998) argue in their suppression hypothesis that dual task learners may learn as much about the sequence as single task learners, but are less able to express this knowledge when tested under dual task conditions. Another important problem with a secondary task, like tone counting, concerns the fact that external stimuli are interspersed between the trials, hereby possibly affecting chunking (Frensch & Miner, 1994). As a consequence, the double task paradigm is faced with a number of problems that render conclusions about the attentional demands in sequence learning rather difficult. If learning in the Mayr design is indeed affected by attentional resources, this paradigm could offer an important tool for studying attention in sequence learning, since it largely overcomes the problems of the standard double task. Because the primary motor task and the secondary perceptual task are both sequential in nature, the structural inherence of the task is preserved. There is no need to present external stimuli in the intertrial intervals, so the attentional capacity can be manipulated within the SRT task itself.

While the mechanisms of additional task structure and attentional resources mentioned above would imply parallel and independent learning to explain perceptual learning, another possibility is that concurrent perceptual and motor learning relies on integration or binding between the two sequence structures. Notebaert and Soetens (2003), for example, were able to demonstrate binding between an irrelevant stimulus feature and a response feature, called an event file (Hommel, 1998), when using a Simon task. There are also a number of sequence learning studies that have examined the role of binding between sequences. For example, Schmidtke and Heuer (1997) let participants respond to a spatial sequence of stimuli. Concurrently, in alternation with the visual stimuli, participants were instructed to respond to tones varying in pitch by pressing a foot pedal in the case of low-pitched tones and to withhold their responses in the case of high-pitched tones. Both the spatial stimuli and the tones followed structured sequences, either correlated or uncorrelated. The results showed that participants were able to learn the spatial and auditory sequences, both in the correlated and uncorrelated condition. More importantly, when they altered the relationship between the sequences by introducing a phase-shift probe (this was only possible in the correlated condition), a cost in RT was observed, demonstrating that the sequences had been integrated when they were correlated. If binding can also underlie sequence learning in cases of uncorrelated sequences remains an open question. In the study of Mayr (1996), as well as in Experiment 1 of the present study, spatial sequences were used, which have been shown to play a crucial role in sequence learning (Koch & Hoffmann, 2000). It is possible that binding is enhanced when spatial sequences are involved, for example, because they elicit automatic shifts of attention. This is supported by the results of Notebaert and Soetens (2003), who found less binding in a Simon task when a nonspatial irrelevant stimulus sequence (shape) was integrated with the response sequence (Experiment 2). Whether binding of uncorrelated sequences truly depends on spatial learning, remains to be investigated. The study of Shin and Ivry (2002) where temporal perceptual learning with an uncorrelated motor sequence was absent, suggests that spatial sequences are indeed a necessary condition.

Nevertheless, more research is needed to determine whether and how concurrent motor learning can facilitate perceptual learning. On the basis of our results we cannot rule out that other factors may account for the perceptual learning effect in Experiment 1. For example, it could be that the squared array of stimulus positions enhanced spatial perceptual learning, compared with the horizontal alignment of the stimulus positions in Experiments 2–5. Since participants had to use four adjacent linearly arranged response keys, Simon effects could have been induced in Experiments 2–5 due to dimensional overlap between the irrelevant stimulus positions and the responses. Possibly, these effects interfered with more complex spatial perceptual learning. For that reason, more research is needed to determine whether perceptual spatial learning within the Mayr set-up can also take place with horizontally arranged stimulus positions. Another approach to reducing Simon effects is to use nonspatial sequences and to investigate whether perceptual learning extends further than spatial sequences as well.

Lastly, perceptual learning could also be facilitated by eye movements extending over two dimensions, as in Experiment 1, instead of one dimension, as in Experiments 2–5. The present study, however, shows no indication of a crucial role for eye movements. Compared with Mayr’s original study (squared array with visual angle between stimuli amounting to approximately 20.14° for stimuli placed along the side and 27.41° for stimuli placed diagonally), we substantially reduced the visual angle in our replication (between-stimulus visual angle was 10.39° for stimuli placed along the side and 14.54° for diagonal stimuli). Nevertheless, comparable perceptual learning took place. Furthermore, a large visual angle between the two outermost positions (24 cm or 21.80° visual angle) in Experiments 2 to 4 was not sufficient for perceptual learning to take place. On the other hand, a smaller visual angle in Experiment 5 did not inhibit perceptual learning of a simple sequence. In agreement with Remillard (2003) and Rüsseler et al. (2002), our data show no indications of an effect of stimulus distance on sequence learning. Further research of the role of eye movements in sequence learning is nevertheless necessary before we can definitively rule out that perceptual spatial learning is unaffected by eye movements.

To conclude, the results of the present study can have important implications for the perceptual/motor debate in sequence learning. In addition to explicit perceptual learning or when involving simple sequences, they seem to imply that perceptual learning of more complex sequences is possible when participants concurrently learn a motor sequence. The precise mechanisms that underlie this facilitating effect of concurrent motor learning remain to be investigated. In general, however, perceptual learning effects were much smaller than those of motor learning. Additionally, the fact that purely perceptual learning could only be established with a very simple sequence structure suggests that the perceptual learning system is rather limited in nature and secondary to response-related learning.

Notes

Although Experiments 2–4 show no trend toward perceptual learning, it could be remarked that more statistical power in these experiments is required in order to accept an absence of perceptual learning. Therefore, to determine whether perceptual learning effects would come about when statistical power was increased, we conducted a repeated measures ANOVA on the combined data of the perceptual conditions of all three Experiments 2–4, with sequence structure (32, 12 or probabilistic in Experiments 2, 3, and 4 respectively) as between-participants factor and block as within-participants factor. With a total sample size of 48 participants, neither the main effect of sequence structure nor the interaction between block and sequence proved to be significant respectively F(2,45) = .95, p = .40 and F(18,405) = .81, p = .70. This allowed us to further analyze perceptual learning in the form of an increase in RT in the random Block 9 compared with the surrounding structured Blocks 8 and 10. Even across 48 participants, planned comparisons revealed that perceptual learning did not emerge, F(1,45) = 1.48, p = .23. Hence, the combined analysis of the data of Experiments 2–4 shows that perceptual learning is still absent when the statistical power is increased. Nevertheless, we were always able to assess clear motor learning effects in Experiments 2–4, although the samples used to assess motor and perceptual learning were always comparable. This indicates that a lack of statistical power probably cannot explain the absence of perceptual learning in Experiments 2–4. Even if it is assumed that perceptual learning in Experiments 2–4 was indeed present, but that the effect was so small that it required more statistical power (than motor learning) to be detected, the difference between the motor and perceptual condition remains. Hence, it can only be concluded that sequence learning primarily relies on motor learning and that this type of learning is much more dominant than perceptual learning.

We would like to thank the reviewers for these suggestions.

References

Bischoff-Grethe, A., Goedert, K. M., Willingham, D. B., & Grafton, S. T. (2004). Neural substrates of response-based sequence learning using fMRI. Journal of Cognitive Neuroscience, 16, 127–138.

Cleeremans, A., & McClelland, J. L. (1991). Learning the structure of event sequences. Journal of Experimental Psychology: General, 120, 235–253.

Cock, J. J., Berry, D. C., & Buchner, A. (2002). Negative priming and sequence learning. European Journal of Cognitive Psychology, 14, 24–48.

Cohen, A., Ivry, R. I., & Keele, S. W. (1990). Attention and structure in sequence learning. Journal of Experimental Psychology: Learning, Memory and Cognition, 16, 17–30.

Frensch, P. A., & Miner, C. (1994). Effects of presentation rate and individual differences in short-term memory capacity on an indirect measure of serial learning. Memory & Cognition, 22, 95–110.

Frensch, P. A., Buchner, A., & Lin, J. (1994). Implicit learning of unique and ambiguous serial transitions in the presence and absence of a distractor task. Journal of Experimental Psychology: Learning, Memory and Cognition, 20, 567–584.

Frensch, P. A., Lin, J., & Buchner, A. (1998). Learning versus behavioral expression of the learned: The effects of a secondary tone-counting task on implicit learning in the serial reaction time task. Psychological Research, 61, 83–98.

Goschke, T. (1998). Implicit learning of perceptual and motor sequences: Evidence for independent learning systems. In M. A. Stadler & P. A. Frensch (Eds.). Handbook of implicit learning (pp. 401–444). Thousand Oaks, CA: Sage.

Grafton, S. T., Hazeltine, E., & Ivry, R. (1995). Functional anatomy of sequence learning in normal humans. Journal of Cognitive Neuroscience, 7, 497–510.

Heuer, H., Schmidtke, V., & Kleinsorge, T. (2001). Implicit learning of sequences of tasks. Journal of Experimental Psychology: Learning, Memory and Cognition, 27, 967–983.

Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus-response episodes. Visual Cognition, 5, 183–216.

Howard, J. H., Mutter, S. A., & Howard D. V. (1992). Serial pattern learning by event observation. Journal of Experimental Psychology: Learning, Memory and Cognition, 18, 1029–1039.

Keele, S. W., Jennings, P., Jones, S., Caulton, D., & Cohen, A. (1995). On the modularity of sequence representation. Journal of Motor Behavior, 27, 17–30.

Kelly, S. W., & Burton, M. A. (2001). Learning complex sequences: No role for observation? Psychological Research, 65, 15–23.

Koch, I. (2001). Automatic and intentional activation of task sets. Journal of Experimental Psychology: Learning, Memory and Cognition, 27, 1474–1486.

Koch, I., & Hoffmann, J. (2000). The role of stimulus-based and response-based spatial information in sequence learning. Journal of Experimental Psychology: Learning, Memory & Cognition, 26, 863–882.

Mayr, U. (1996). Spatial attention and implicit sequence learning: Evidence for independent learning of spatial and nonspatial sequences. Journal of Experimental Psychology: Learning, Memory and Cognition, 22, 350–364.

Nattkemper, D. & Prinz, W. (1997). Stimulus and response anticipation in a serial reaction task. Psychological Research, 60, 98–112.

Nissen, M. J., & Bullemer, P. (1987). Attentional requirements of learning: evidence from performance measures. Cognitive Psychology, 19, 1–32.

Notebaert, W., & Soetens, E. (2003). The influence of irrelevant stimulus changes on stimulus and response repetition effects. Acta Psychologica, 112, 143–156.

Reed, J., & Johnson, P. (1994). Assessing implicit learning with indirect tests: Determining what is learned about sequence structure. Journal of Experimental Psychology: Learning, Memory and Cognition, 20, 585–594.

Remillard, G. (2003). Pure perceptual-based sequence learning. Journal of Experimental Psychology: Learning, Memory and Cognition, 29, 518–597.

Rüsseler, J., & Rösler, F. (2000). Implicit and explicit learning of event sequences: evidence for distinct coding of perceptual and motor responses. Acta Psychologica, 104, 45–67.

Rüsseler, J., Münte, T. F., & Rösler, F. (2002). Influence of stimulus distance in implicit learning of spatial and nonspatial event sequences. Perceptual and Motor Skills, 95, 973–987.

Schmidtke, V., & Heuer, H. (1997). Task integration as factor in secondary-task effects on sequence learning. Psychological Research, 60, 53–71.

Schneider, W. (1996). MEL Professional. Pittsburgh, PA: Psychology Software Tools.

Schneider, W., Eschman, A., & Zuccolotto, A. (2002). E-prime, Version 1.1. Pittsburgh, PA: Psychology Software Tools.

Shanks, D. R., & St. John, M. F. (1994). Characteristics of dissociable human learning systems. Behavioral and Brain Sciences, 17, 367–447.

Shin, J. C., & Ivry, R. B. (2002). Concurrent learning of temporal and spatial sequences. Journal of Experimental Psychology: Learning, Memory & Cognition, 28, 445–457.

Soetens, E., Melis, A., & Notebaert, W. (2004). Sequential effects and sequence learning. Psychological Research, 10.1007/s00426-003-0163-4.

Stadler, M. A., & Frensch, P. A. (1998). Handbook of implicit learning. Thousand Oaks, CA: Sage.

Stadler, M. A., & Neely, C. B. (1997). Effects of sequence length and structure on implicit serial learning. Psychological Research, 60, 14–23.

Willingham, D. B. (1999). Implicit motor sequence learning is not purely perceptual. Memory and Cognition, 27, 561–572.

Willingham, D. B., Nissen, M., & Bullemer, P. (1989). On the development of procedural knowledge. Journal of Experimental Psychology: Learning, Memory and Cognition, 15, 1047–1060.

Willingham, D. B., Wells, L. A., Farrell, J. M., & Stemwedel, M. E. (2000). Implicit motor sequence learning is represented in response locations. Memory and Cognition, 28, 366–375.

Ziessler, M. (1994). The impact of motor responses on serial pattern learning. Psychological Research, 57, 30–41.

Ziessler, M. (1998). Response-effect learning as a major component of implicit serial learning. Journal of Experimental Psychology: Learning, Memory and Cognition, 24, 962–978.

Ziessler, M., & Nattkemper, P. (2001). Learning of event sequences is based on response-effect learning: Further evidence from a serial reaction time task. Journal of Experimental Psychology: Learning, Memory and Cognition, 27, 595–613.

Acknowledgements

The first author, Natacha Deroost, is holder of the mandate of Aspirant of the National Fund for Scientific Research of Flanders, Belgium (Fonds voor Wetenschappelijk Onderzoek—Vlaanderen, FWOTM247).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Deroost, N., Soetens, E. Perceptual or motor learning in SRT tasks with complex sequence structures. Psychological Research 70, 88–102 (2006). https://doi.org/10.1007/s00426-004-0196-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00426-004-0196-3