Abstract

We used a novel stimulus set of human and robot actions to explore the role of humanlike appearance and motion in action prediction. Participants viewed videos of familiar actions performed by three agents: human, android and robot, the former two sharing human appearance, the latter two nonhuman motion. In each trial, the video was occluded for 400 ms. Participants were asked to determine whether the action continued coherently (in-time) after occlusion. The timing at which the action continued was early, late, or in-time (100, 700 or 400 ms after the start of occlusion). Task performance interacted with the observed agent. For early continuations, accuracy was highest for human, lowest for robot actions. For late continuations, the pattern was reversed. Both android and human conditions differed significantly from the robot condition. Given the robot and android conditions had the same kinematics, the visual form of the actor appears to affect action prediction. We suggest that the selection of the internal sensorimotor model used for action prediction is influenced by the observed agent’s appearance.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Action perception is often discussed within the framework of motor resonance, whereby action understanding involves an internal simulation of the seen action by the observer (Rizzolatti, Fogassi, & Gallese, 2001). But what are the boundary conditions for this resonance? What if the actor looks different from the observer? Or moves differently? It has been suggested that the closer the match between the observed action and the observers’ own body, the stronger the resonance should be (e.g., Calvo-Merino, Grezes, Glaser, Passingham, & Haggard, 2006; Cross, Hamilton, & Grafton, 2006). On the other hand, brain areas that are active during action perception also respond to simple animations (Pelphrey et al., 2003) or to point-light displays (Saygin, 2007), indicating they may be relatively insensitive to the surface properties of the stimuli depicting the actions.

With recent advances in technology, lifelike humanoid robots are becoming commonplace and assistive technologies based on such agents are starting to change the face of education and healthcare (Coradeschi et al., 2006). However, there is little systematic research on human perception of such agents (Saygin, Chaminade, & Ishiguro, 2010). Artificial agents can have various different appearances and movement kinematics. As such, they can provide us with unique opportunities to test theories of perception and cognition (Saygin, Chaminade, Urgen, & Ishiguro, 2011b).

There is an existing literature on the perception of actions of robots. Although some have explored behavioral measures, previous work has largely focused on whether or not the so-called mirror neuron system (Rizzolatti et al., 2001) responds during the perception of robots. Unfortunately, the results are inconsistent, suggesting a need for further exploration (Saygin et al., 2011a, b).

Here, we tested the effects of the observed agent’s form and motion on behavioral performance in a dynamic action prediction task. Action prediction has been linked to internal sensorimotor models and in some studies, to motor or premotor areas of the brain (Prinz, 2006; Schutz-Bosbach & Prinz, 2007; Sparenberg, Springer, & Prinz, 2011; Springer et al., 2011; Wilson & Knoblich, 2005). This notion was highlighted by studies reporting predictive gaze behavior during action observation (Flanagan & Johansson, 2003), and by predictive activation of premotor areas of the brain in humans (Kilner, Vargas, Duval, Blakemore, & Sirigu, 2004) and in nonhuman primates (Umilta et al., 2001). Moreover, explicit action prediction during action occlusion engages premotor areas more strongly than other tasks (Stadler et al., 2011). Such results suggest a link to the motor resonance concept discussed above, and may be indicative of on simulation of the observed/predicted action in the motor programs of the observer. On the other hand, prediction appears to preferentially engage premotor cortex whether or not the predicted event is produced by a human (Schubotz, 2007). More generally, prediction has been proposed as a ubiquitous processing principle in the brain (Bar, 2009; Bubic, von Cramon, & Schubotz, 2010; Friston, 2005). Studies of action prediction may also be helpful in linking research on motor resonance and simulation more generally to established computational frameworks of sensorimotor control (Kawato & Wolpert, 1998; Wolpert, Doya, & Kawato, 2003), which could help bridge findings from neuroimaging studies of action perception to neuro-computational theory (Kilner, Friston, & Frith, 2007).

The present study explored the prediction of human and artificial agents’ actions. Consistent with the increased perceptual sensitivity or neural activity for actions that are in the observer’s “motor repertoire” (Calvo-Merino et al., 2006; Casile & Giese, 2006; Catmur, Walsh, & Heyes, 2007; Cross et al., 2006; Kilner, Paulignan, & Blakemore, 2003; Press, Gillmeister, & Heyes, 2007), we may expect artificial agents’ actions to be predicted less precisely than those of human agents since their body form differs from those of the observer and their motion is not biological (Pollick, Hale, & Tzoneva-Hadjigeorgieva, 2005). Conversely, if predictive functions are more generally applicable, and/or if information from different sources than match to the observer’s own body dominate in action prediction, then we may find no difference in prediction accuracy between actions of human and artificial agents.

Here, we used an explicit action prediction task to study processing of human and artificial agent actions (Graf et al., 2007; Stadler et al., 2011). During each trial, actions were briefly occluded from view. The participants were required to mentally continue, i.e., predict the actions in order to perform the task, which was to decide whether or not the action’s timing continued naturally and coherently (i.e., in-time) after occlusion.

In addition to comparing human and artificial agents, the present study used novel stimuli that allowed us to delineate the influence of humanlike form on the one hand, of biological motion kinematics on the other. Instead of using toys or industrial robot hands as previous studies did, we collaborated with a robotics lab and worked with state-of-the-art humanoid robots. This allowed us to address the role of robot appearance, which was not explored in previous work, though it is known that appearance can affect action perception (Chaminade, Hodgins, & Kawato, 2007). Furthermore, by using actual robots, we can engage more productively with social robotics, a rapidly developing field (Chaminade & Cheng, 2009; Saygin, et al., 2011a).

Our stimuli were video clips from the Saygin-Ishiguro Action Database (SIAD, Fig. 1), which contains actions performed by natural and artificial agents (Saygin et al., 2011a). Our study had actions from three agents from SIAD: the android Repliee Q2 (Ishiguro, 2006), which has a highly humanlike appearance (android condition); the android after stripping off its humanlike form, but retaining exactly the same kinematics (robot condition); and the human that the android was designed to replicate in appearance (human condition). Although future robotics systems may be able to mimic human motion kinematics, motions of present-day robots, including those of Repliee Q2, are noticeably different from biological motion dynamics (Pollick et al., 2005; Minato & Ishiguro, 2008; Shimada, Minato, Itakura, & Ishiguro, 2006). Thus in terms of motion, the android and robot featured nonhuman kinematics, whereas biological motion was unique to the human condition. In terms of form or appearance, the human and android conditions featured humanlike, biological appearance, whereas the robot condition featured a nonbiological, mechanical appearance (Fig. 1). This design allowed us to not only ask whether action prediction differs between humans and nonhuman artificial agents, but also to explore the role of visual form, biological motion (as well as their interaction) in action prediction (Saygin et al., 2011b).

Images from Saygin-Ishiguro action database (SIAD; Saygin et al., 2010) and the features each condition represents

In a recent fMRI adaptation study using SIAD stimuli, we found that brain activity in a network of areas subserving action perception was modulated not only by the appearance and the motion of the agent, but also by the congruence of form and motion, indicating these factors may interact (Saygin et al., 2011a). The present study complements this work using a more dynamic paradigm to continue to explore the different influences of visual form and motion on action perception.

Methods

Participants

Sixteen right-handed healthy adults (mean age 25.3; SD 2.8; range 22–32; 8 males) participated. All participants had normal or corrected vision, no cognitive, attentional, or neurological abnormalities by self-report. All participants were natives of Germany and were blind to the hypotheses or design of the study. Studies were carried out under ethical approval in accordance with the standards of the 1964 Declaration of Helsinki. All participants gave written informed consent and were paid for their participation.

Stimuli

The stimuli were videos from the Saygin-Ishiguro Action Database (SIAD), which comprises body movements of human and artificial agents. We used 2-second action clips performed by three agents: robot, android, and human (Fig. 1).

Actions were performed by the android Repliee Q2 and by the human “master” after whom it was modeled. Repliee Q2 was developed at Osaka University in collaboration with Kokoro Inc., and with brief exposures, can be mistaken for a human being (Ishiguro, 2006). Repliee Q2’s actuators were programmed over several weeks at Osaka University. The android has 42 degrees of freedom and can make head and upper body movements.

SIAD contains videos of Repliee Q2 both with its full humanlike appearance (android condition), and also with a mechanical appearance (robot condition). For the robot condition, the surface elements of the android were removed to reveal the materials underneath (e.g., wiring, metal limbs and joints), but retaining exactly the same mechanical movements. The silicone on the hands and face and some of the fine hair around the face could not be removed and were covered. The removed components were all foam and their weight did not influence the motion kinematics between the conditions (the resulting movies were matched frame by frame). In the robot condition, Repliee Q2 could no longer be mistaken for a human. However, the kinematics of the android and robot conditions was identical, since these conditions in fact featured the same robot, performing the very same movements.

For the human condition, the female adult on whom Repliee Q2’s appearance was based was videotaped. She watched each of the Repliee Q2’s actions, and performed the same action as she would naturally. The human performed these movements several times and the version of the action that most closely matched the speed of the robot’s movement was selected for inclusion in SIAD. The motion kinematics was not altered.

There were thus three agent conditions: human, android, and robot. Human and android conditions featured biological (humanlike) appearance, whereas the robot condition did not. In terms of motion, biological motion was unique to the human condition, with the other two agents having nonbiological, mechanical motion. The robot and the human are different from each other in both motion and appearance dimensions, whilst sharing a feature with the android. On the other hand, the robot and the human conditions both feature congruent appearance and motion, whereas the android features incongruent appearance and motion (biological appearance, mechanical motion, Fig. 1). SIAD thus allowed us to address the role of biological appearance and biological motion, as well as the congruence of the two features, in action prediction.

All SIAD actions were videotaped in the same room and with the same background, lighting and camera settings. Only the upper body was visible (cf. Fig. 1). For each agent, four different 2-second clips including both transitive and intransitive movements were used in the present study (wiping with a towel, drinking from a cup, picking up an object, nudging someone). Video recordings were digitized, converted to grayscale and cropped to 400 by 400 pixels. A semi-transparent white fixation cross (40 pixels across) was superimposed at the center of the movies.

Procedure

We used an occlusion paradigm to assess action prediction behaviorally (Graf et al., 2007; Stadler et al., in this issue). Stimuli were presented and responses recorded using Presentation software (Version 13, http://www.neurobs.com). In each trial, after at least 500 (mean 575 ± 62 ms, range 500–633 ms) from the start of the action, the videos were occluded for 400 ms. The occlusion point was varied from trial to trial in order to avoid learning effects that may result from repetitive presentations of the same videos. The video speeds were not altered.

After the 400 ms occlusion, the action continued either in-time, with natural timing (i.e., at a frame corresponding to 400 ms after the last frame before occlusion, which was also 400 ms), or with early timing (100 ms after the last frame before occlusion), or late timing (700 ms after the last frame before occlusion).

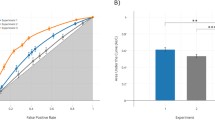

There were thus three continuation conditions (early, in-time, late), and three agent conditions (robot, android, human). There were 20 trials with each agent–timing pair, which resulted in a total trial number of 180, not including practice trials. The task was to determine whether or not the continuation of the video continued in-time after occlusion and was the same for all conditions (Fig. 2).

Schematic of an experimental trial. A trial from the android condition is shown here, but the procedure was identical for all conditions. The trial started with an action video, which was played for at least 500 ms before an occlusion period of 400 ms. After occlusion, the video continued at a point that was in-time (400 ms from offset), early (100 ms from offset), or late (700 ms from onset) compared to the point of occlusion. The participant then decided whether or not the continuation was coherent

An experimental session began with the presentation of all the video clips to be used in the study without occlusion so that subjects had uniform exposure to the stimuli at the start of testing. Subjects had not seen the videos or any other videos of these robots before the experiment. Participants were then briefly trained the experimental task with an example of each agent and with three repetitions of each continuation condition receiving feedback about their performance (9 trials total). Videos that were shown in the experiment could also appear in the practice session. Three videos of each agent were chosen randomly for each participant for the practice session. The actual experiment lasted 30 min and featured no feedback.

Data analysis

Data were analyzed with repeated-measures ANOVA (3 agents × 3 continuations; Greenhouse-Geisser corrected) with prediction accuracy as a dependent variable. Given our agent conditions related to our experimental interests of form and motion but not in a factorial design, we planned pairwise t tests for assessing form and motion effects (e.g., robot vs. android for the effect of form while keeping kinematics constant; android vs. human for the effect of motion kinematics with humanlike form). Bonferroni correction was used to correct for multiple comparisons. All p values are reported two-tailed.

Results

The results are summarized in Fig. 3. When the continuation was in-time, subjects were able to discriminate the continuation successfully only barely above chance (i.e., subjects still perceived it to be not in-time almost half of the time); this pattern of performance did not vary across agents. The early and late continuations were easier to discriminate. This overall difference was reflected in a trend to significance of the main effect of continuation [F(2,30) = 3.28, p = 0.062, η² = 0.10]. There was a significant interaction between agent and continuation [F(4,60) = 3.12, p < 0.05, η² = 0.05), driven by the early and late continuation conditions (Fig. 3). Subjects were significantly less accurate in the robot condition compared to the others when the continuation was early. Approximately 43% of these trials were perceived to be coherent in the robot condition, compared with 33 and 31% for the android and human conditions. The pattern was reversed for the late continuation condition, where ~41% of the trials were perceived to be coherent in the human condition, compared with 35 and 38% for the robot and android conditions. A paired-samples t test revealed a significant difference between the agents with human appearance and the robot (human vs. Robot: [t(15) = −3.04, p < 0.05]; android vs. Robot [t(15) = −3.58, p < 0.05] for the late continuation condition. For early continuations, the difference between the human and the robot was marginally significant [t(15) = 2.08, p = 0.056]. The overall main effect of agent was not significant.

A similar ANOVA carried out for reaction time (RT) revealed a significant main effect for continuation [F(2,30) = 4.26, p < 0.05, η² = 0.10], resulting from a general decrease of RTs in the late continuations. A similar trend was found in the study by Stadler et al. (in this issue) where a discussion of this effect is provided.

Discussion

We presented new data from a behavioural study exploring the role of humanlike form and/or motion in action prediction. We used an occlusion paradigm, along with a novel stimulus set, which allowed us to study the predictive use of sensorimotor representations during action perception (Graf et al., 2007; Stadler et al., 2011). Stimuli depicted human and artificial agents that either had a biological appearance, or biological motion, or both (Fig. 1). The results revealed an interaction between the observed actor (human, robot, android) and the continuation manipulation of our occlusion paradigm, expressing a complex interaction between the observed agent’s appearance and movement kinematics during action prediction.

The occlusion paradigm allows studying internally guided aspects of action prediction (Stadler et al., 2011). Graf et al. (2007) used point-light animations in a similar paradigm and showed that natural human movement is predicted with higher accuracy during brief occlusions. From the high sensitivity to violations in the actions’ time course, they concluded that the participants might have relied on real-time simulation in their motor repertoire during occlusion. Correspondingly, Stadler et al. (2011) have shown that premotor areas (including the pre-SMA which is particularly involved in internal guidance) are activated during the explicit prediction of occluded action sequences. Thus, the performance in the prediction task is likely to reflect the application of internal models.

Action processing is often discussed in relation to an embodied motor simulation (Rizzolatti et al., 2001). Consistent with our recent neuroimaging studies (Saygin et al., 2011b), the present data indicate that action processing mechanisms are not selectively tuned to process our conspecifics (Cross et al., 2006; Saygin et al., 2011a). Instead, action processing (and prediction) shows evidence for sensitivity for humanlike form and biological motion, but not selectivity for these features.

Overall, the present study found a significant effect of the actor’s visual form on action prediction performance. When continuations after occlusion were early (i.e., the action continued at a point that corresponded to 100 ms after the start of a 400 ms occlusion), subjects were most accurate in the human condition and least accurate in the robot condition. Performance in the android condition was more similar to the human condition (both conditions differed significantly from the robot condition). Conversely, when action continuations were late (i.e., the action continued at a point that corresponded to 700 ms after the start of a 400 ms occlusion), the relationship between the agents was reversed, with the human condition producing highest error rates and lowest error rates in the robot condition.

Our results show that predictive processing under the early and late continuation conditions is modulated differentially depending on the viewed agent. Given the asymmetry in the continuation conditions it may be useful to consider the relationship between the timing of the continuation of the stimuli, and the prediction process during the occlusion. In this way, the results can be examined in terms of the speed of the internal prediction during the occlusion period: when an early continuation is considered in-time by the subjects (lower accuracy in our task), the internal predictive models during the occlusion period may be thought to be operating in a relatively “slow” mode, since a point of continuation 100 ms after the start of a 400 ms occlusion is perceived as being in-time. This situation occurred most frequently for the robot and less frequently for the android and the human (Fig. 3). Vice versa, when a late continuation is perceived as being in-time, we may view the prediction process operating in a relatively “fast” mode, since a point of continuation 700 ms after the start of a 400 ms occlusion is judged in-time. This situation occurred least frequently for the robot (Fig. 3). Thus, accuracy patterns for both the early and the late continuation conditions are consistent with a “slower” predictive model being used for the robot compared with agents that have humanlike form. Note that actual in-time continuations were not being judged as such for any of the agents, but since subjects did not have to indicate whether the timing was perceived to be early or late, future work is needed to explore the possibility that the lack of an agent difference in the in-time continuation condition may also mask a similar differential timing of the internal simulation process for the different agents.

Given the dynamic nature of the task, and previous work with motor interference paradigms (e.g., Christensen, Ilg, & Giese, 2011; Kilner, Hamilton, & Blakemore, 2007; Press et al., 2007; Saunier, Papaxanthis, Vargas, & Pozzo, 2008), differences in prediction performance based on the motion of the agent may have been expected. Note that an asymmetry was found in a reversed form when point-light characters with artificial movement kinematics were used in the same paradigm: prediction errors for artificial movement were lowest in early continuations and highest in late continuations (submission by Stadler et al., to this issue). While Stadler et al.’s goal was to abstract away from form, the study constitutes an interesting counterpoint to ours, where the kinematics was matched between robot and android, but the appearance varied.

The observed interaction with visual appearance is especially interesting, given the robot and android conditions were actually the same machine, with the same action kinematics, matched frame-by-frame in the videos. The appearance of the agents thus appears to exert significant influence (perhaps via modulatory signals from visual areas that process form) on the process of action prediction. A role for motion cannot be ruled out however, given the accuracy in the android condition generally fell in between robot and human conditions.

Why would appearance affect action prediction? Previous work suggests an agent’s appearance might induce expectations about its movement kinematics (Saygin et al., 2011a; Ho & MacDorman, 2010). Whereas a human form would lead to an expectation of biological motion based on a lifetime of experience with how people move, a robotic or mechanical appearance is less likely to lead to an expectation of smooth, biological motion (Pollick, 2009; Saygin et al., 2011a). Although the precise mechanisms can only be speculated from the present data, the appearance of the agent appears to have biased the selection and/or use of internal models that led to an advantage for predicting movements of the android over the Robot, even though the two agents had identical kinematics. A humanlike appearance may facilitate the selection of a more precise and/or more human-like sensorimotor model with which to predict the action. It is also possible that what is more precise is not the model itself, but its timing: If we view the results from the alternate perspective of the speed of the internal prediction mechanisms, it is possible to view the mental continuation process for the robot as being “slower”, according to which, early continuations would be harder to discriminate from in-time continuations (as we observed). It is possible that, to reduce conflict between appearance and movement [cf. uncanny valley effects (Mori, 1970; Saygin et al., 2011a)], during the occlusion, the android “is not allowed to move as jerky” in the prediction process, even though in fact the kinematics are identical to the robot’s (Saygin et al., 2011a).

In a recent fMRI adaptation study using SIAD stimuli, we found a network of areas subserving action perception to be modulated by the appearance and the motion of the observed agents (Saygin et al., 2011a). A region in lateral temporal cortex (the extrastriate body area, EBA, in the left hemisphere) responded similarly in the human and android conditions, but exhibited less activation in the robot condition (an effect of form). In a larger network, most notably in parietal cortex, distinctive responses were found for the android condition, which features a mismatch between appearance and motion. We interpreted these data within a predictive coding account of neural responses (Friston, 2005; Rao & Ballard, 1999). It is possible that visual areas of the brain that specialize in processing form (especially bodily form, such as the EBA, which had a response pattern that parallels the findings here, as described above) can modulate the efficacy of the internal models used for action prediction. Given the present paradigm yielded a role for visual form in action prediction performance (whilst the effects of form-motion congruence or the uncanny valley had dominated the fMRI data), future work will be beneficial in linking fMRI studies of action perception and studies that allow access to more dynamic aspects of processing such as prediction paradigms, or neuroimaging studies with more time-resolved techniques (e.g., EEG or MEG).

Conclusion

We observed that the visual appearance of the observed actor affects performance on a dynamic action prediction task. We have a lifetime of experience viewing the actions of other humans. It is possible that that the brain can use internal sensorimotor models for action prediction more effectively for entities that have a humanlike form, even when they do not move biologically.

References

Bar, M. (2009). Predictions: a universal principle in the operation of the human brain. Introduction. Philos Trans R Soc Lond B Biol Sci, 364(1521), 1181–1182.

Bubic, A., von Cramon, D. Y., & Schubotz, R. I. (2010). Prediction, cognition and the brain. Front Hum Neurosci, 4, 25.

Calvo-Merino, B., Grezes, J., Glaser, D. E., Passingham, R. E., & Haggard, P. (2006). Seeing or doing? Influence of visual and motor familiarity in action observation. Curr Biol, 16(19), 1905–1910.

Casile, A., & Giese, M. A. (2006). Nonvisual motor training influences biological motion perception. Curr Biol, 16(1), 69–74.

Catmur, C., Walsh, V., & Heyes, C. (2007). Sensorimotor learning configures the human mirror system. Curr Biol, 17(17), 1527–1531.

Chaminade, T., & Cheng, G. (2009). Social cognitive neuroscience and humanoid robotics. J Physiol Paris, 103(3–5), 286–295.

Chaminade, T., Hodgins, J., & Kawato, M. (2007). Anthropomorphism influences perception of computer-animated characters’ actions. Soc Cogn Affect Neurosci, 2(3), 206–216.

Christensen, A., Ilg, W., & Giese, M. A. (2011). Spatiotemporal tuning of the facilitation of biological motion perception by concurrent motor execution. J Neurosci, 31(9), 3493–3499.

Coradeschi, S., Ishiguro, H., Asada, M., Shapiro, S. C., Thielscher, M., Breazeal, C., et al. (2006). Human-inspired robots. IEEE Intell Syst, 21(4), 74–85.

Cross, E. S., Hamilton, A. F., & Grafton, S. T. (2006). Building a motor simulation de novo: observation of dance by dancers. Neuroimage, 31(3), 1257–1267.

Flanagan, J. R., & Johansson, R. S. (2003). Action plans used in action observation. Nature, 424(6950), 769–771.

Friston, K. (2005). A theory of cortical responses. Phil Trans B, 360(1456), 815–836.

Graf, M., Reitzner, B., Corves, C., Casile, A., Giese, M., & Prinz, W. (2007). Predicting point-light actions in real-time. Neuroimage, 36(Suppl 2), T22–T32.

Ho, C–. C., & MacDorman, K. F. (2010). Revisiting the uncanny valley theory: developing and validating an alternative to the Godspeed indices. Comput Hum Behav, 26(6), 1508–1518.

Ishiguro, H. (2006). Android science: conscious and subconscious recognition. Connection Sci, 18(4), 319–332.

Kawato, M., & Wolpert, D. (1998). Internal models for motor control. Novartis Found Symp, 218, 291–304. Discussion 304–297.

Kilner, J. M., Friston, K. J., & Frith, C. D. (2007a). Predictive coding: an account of the mirror neuron system. Cogn Process, 8(3), 159–166.

Kilner, J. M., Hamilton, A. F., & Blakemore, S. J. (2007b). Interference effect of observed human movement on action is due to velocity profile of biological motion. Soc Neurosci, 2(3–4), 158–166.

Kilner, J. M., Paulignan, Y., & Blakemore, S. J. (2003). An interference effect of observed biological movement on action. Curr Biol, 13(6), 522–525.

Kilner, J. M., Vargas, C., Duval, S., Blakemore, S. J., & Sirigu, A. (2004). Motor activation prior to observation of a predicted movement. Nat Neurosci, 7(12), 1299–1301.

Minato, T. & Ishiguro, H. (2008) Construction and evaluation of a model of natural human motion based on motion diversity. Proc of the 3rd ACM/IEEE International Conference on Human-Robot Interaction, 65–71.

Mori, M. (1970). The uncanny valley. Energy, 7(4), 33–35.

Pelphrey, K. A., Mitchell, T. V., McKeown, M. J., Goldstein, J., Allison, T., & McCarthy, G. (2003). Brain activity evoked by the perception of human walking: controlling for meaningful coherent motion. J Neurosci, 23(17), 6819–6825.

Pollick, F. E. (2009). In search of the Uncanny Valley. In P. Daras & O. M. Ibarra (Eds.), UC Media 2009 (pp. 69–78). Venice, Italy: Springer.

Pollick, F. E., Hale, J. G., & Tzoneva-Hadjigeorgieva, M. (2005). Perception of humanoid movement. Int J Humanoid Robot, 3, 277–300.

Press, C., Gillmeister, H., & Heyes, C. (2007). Sensorimotor experience enhances automatic imitation of robotic action. Proc Biol Sci, 274(1625), 2509–2514.

Prinz, W. (2006). What re-enactment earns us. Cortex, 42(4), 515–517.

Rao, R. P., & Ballard, D. H. (1999). Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci, 2(1), 79–87.

Rizzolatti, G., Fogassi, L., & Gallese, V. (2001). Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci, 2(9), 661–670.

Saunier, G., Papaxanthis, C., Vargas, C. D., & Pozzo, T. (2008). Inference of complex human motion requires internal models of action: behavioral evidence. Exp Brain Res, 185(3), 399–409.

Saygin, A. P. (2007). Superior temporal and premotor brain areas necessary for biological motion perception. Brain, 130(Pt 9), 2452–2461.

Saygin, A. P., Chaminade, T., & Ishiguro, H. (2010). The perception of humans and robots: Uncanny hills in parietal cortex. In S. Ohlsson & R. Catrambone (Eds.), Proceedings of the 32nd Annual Conference of the Cognitive Science Society (pp. 2716–2720). Portland, OR: Cognitive Science Society.

Saygin, A. P., Chaminade, T., Ishiguro, H., Driver, J., & Frith, C. F. (2011a). The thing that should not be: predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Social Cognitive and Affective Neuroscience.

Saygin, A. P., Chaminade, T., Urgen, B. A., & Ishiguro, H. (2011b). Cognitive neuroscience and robotics: a mutually beneficial joining of forces. In L. Takayama (Ed.), Robotics: systems and science. CA: Los Angeles.

Schubotz, R. I. (2007). Prediction of external events with our motor system: towards a new framework. Trends Cogn Sci, 11(5), 211–218.

Schutz-Bosbach, S., & Prinz, W. (2007). Perceptual resonance: action-induced modulation of perception. Trends Cogn Sci, 11(8), 349–355.

Shimada, M., Minato, T., Itakura, S. & Ishiguro, H. (2006). Evaluation of android using unconscious recognition, Proc of the IEEE-RAS International Conference on Humanoid Robots, 157–162.

Sparenberg, P., Springer, A., & Prinz, W. (2011). Predicting others’ actions: evidence for a constant time delay in action simulation. Psychol Res.

Springer, A., Brandstadter, S., Liepelt, R., Birngruber, T., Giese, M., Mechsner, F., et al. (2011). Motor execution affects action prediction. Brain Cogn, 76(1), 26–36.

Stadler, W., Schubotz, R. I., von Cramon, D. Y., Springer, A., Graf, M., & Prinz, W. (2011). Predicting and memorizing observed action: differential premotor cortex involvement. Hum Brain Mapp, 32(5), 677–687.

Umilta, M. A., Kohler, E., Gallese, V., Fogassi, L., Fadiga, L., Keysers, C., et al. (2001). I know what you are doing. A neurophysiological study. Neuron, 31(1), 155–165.

Wilson, M., & Knoblich, G. (2005). The case for motor involvement in perceiving conspecifics. Psychol Bull, 131(3), 460–473.

Wolpert, D. M., Doya, K., & Kawato, M. (2003). A unifying computational framework for motor control and social interaction. Philos Trans R Soc B Biol Sci, 358(1431), 593–602.

Acknowledgments

SIAD was developed with support from the Kavli Institute of Brain and Mind (Innovative Research Award to APS). The research was additional supported by California Institute for Telecommunications and Information Technology (Calit2) Strategic Research Opportunities Program (CSRO), and the Hellman Fellowship Program. WS received funding from Deutsche Forschungsgemeinschaft (DFG; Project: STA 1076/1-1). Ulrike Riedel and Marcus Daum assisted in data collection. We thank Thierry Chaminade, Wolfgang Prinz, Hiroshi Ishiguro and students and staff at the Intelligent Robotics Laboratory at Osaka University.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Saygin, A.P., Stadler, W. The role of appearance and motion in action prediction. Psychological Research 76, 388–394 (2012). https://doi.org/10.1007/s00426-012-0426-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00426-012-0426-z