Abstract

A maximum entropy-based framework is presented for the synthesis of projections from multiple Earth climate models. This identifies the most representative (most probable) model from a set of climate models—as defined by specified constraints—eliminating the need to calculate the entire set. Two approaches are developed, based on individual climate models or ensembles of models, subject to a single cost (energy) constraint or competing cost-benefit constraints. A finite-time limit on the minimum cost of modifying a model synthesis framework, at finite rates of change, is also reported.


1 Introduction

A major challenge facing humanity is the possibility of climate change due to human and/or natural forcings, and how best to respond to it in a rational and informed manner. To this end, detailed global circulation models (GCMs) have been developed to predict the behaviour of the Earth climate system (atmosphere and oceans). These involve solution of the continuity, Navier-Stokes, angular momentum and energy equations and constitutive relations over two- or three-dimensional domains, subject to various initial and boundary conditions (Peixoto and Oort 1992; McGuffie and Henderson-Sellers 2005). The models are run to yield climate projections (predictions as a function of future time), with which various forcing and response scenarios are examined. However, a serious difficulty for policy-makers is the proliferation of multiple models from different research groups. This arises from different modelling priorities, assumptions and input parameters, and from inherent difficulties in the construction of climate models, especially in the handling of coupled phenomena [e.g. humidity (Paltridge et al. 2009)] and the need to dramatically reduce their computational complexity, which necessitates a turbulence closure scheme. Even with the same (or similar) inputs, different models can provide significantly different climate projections (Stocker et al. 2010). A rational framework for the synthesis of such projections, which operates in a transparent and fully defensible manner, is urgently required to avoid the lack of objectivity of seemingly ad hoc amalgamations of projections from different groups.

Over the past century, maximum entropy (MaxEnt) methods have been developed for the construction of probabilistic models, initially in thermodynamics (Boltzmann 1877; Planck 1901) and subsequently for all probabilistic systems (Jaynes 1957, 1963; Jaynes and Bretthorst 2003). Although imbued with several information-theoretic interpretations (Jaynes 1957, 1963; Jaynes and Bretthorst 2003; Shannon 1948), the success of such models rests ultimately on the maximum probability (MaxProb) principle (Boltzmann 1877; Planck 1901; Vincze 1974; Grendár et al. 2001; Niven 2005, 2006, 2009, 2009; Niven and Grendar 2009; Grendar and Niven 2010): “a system can be represented by its most probable state.” This provides a probabilistic definition of the (relative) entropy function:

$$ \mathfrak{H}_{rel} = K \ln \mathbb{P} $$(1)

where \(\mathbb{P}\) is the governing probability of an observable realization (macrostate) of the system and K is a constant. The maximum of \(\mathfrak{H}_{rel}\) thus coincides with that of \(\mathbb{P}.\) If the system can be represented by the allocation of distinguishable balls (objects) to distinguishable boxes (categories), then \(\mathbb{P}\) will satisfy the multinomial distribution (Brillouin 1930; Fortet 1977; Read 1983):

$$ \mathbb{P} = N! \prod_{i=1}^{s} \frac{q_i^{n_i}}{n_i!} $$(2)

where \(n_i\) is the observed occupancy (number of balls) and \(q_i\) the prior probability of the ith category, N is the total number of balls and s is the number of categories. Insertion of (2) into (1) with \(K=N^{-1},\) taking the asymptotic limit \(N \to \infty\) with \(n_i/N \to p_i,\) gives the (negative) Kullback-Leibler entropy function:

$$ \mathfrak{H}_{rel} = -\sum_{i=1}^{s} p_i \ln \frac{p_i}{q_i} $$(3)

Maximisation of (3) for a system which satisfies (2), subject to its constraints, is therefore equivalent to seeking its most probable realization in the asymptotic limit, subject to the same constraints.
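The asymptotic step from (2) to (3) can be checked numerically. The minimal Python sketch below evaluates \(N^{-1}\ln\mathbb{P}\) for the multinomial (2) using log-gamma functions, and compares it with the negative Kullback-Leibler function (3) as N grows. The frequencies `p` and the uniform prior `q` are arbitrary illustrative values, not taken from this study, and the function names are hypothetical.

```python
import math

def log_multinomial(counts, priors):
    """Return ln P for the multinomial P = N! * prod_i q_i**n_i / n_i!."""
    n_total = sum(counts)
    log_p = math.lgamma(n_total + 1)  # ln N!
    for n_i, q_i in zip(counts, priors):
        log_p += n_i * math.log(q_i) - math.lgamma(n_i + 1)
    return log_p

def neg_kl(p, q):
    """Negative Kullback-Leibler function: -sum_i p_i ln(p_i / q_i)."""
    return -sum(p_i * math.log(p_i / q_i) for p_i, q_i in zip(p, q) if p_i > 0)

# Illustrative (hypothetical) frequencies and uniform prior over s = 3 categories
p = [0.5, 0.3, 0.2]
q = [1 / 3, 1 / 3, 1 / 3]

for N in (10, 100, 1000, 100_000):
    counts = [round(N * p_i) for p_i in p]  # occupancies n_i with n_i / N = p_i
    print(N, log_multinomial(counts, q) / N, neg_kl(p, q))
```

The gap between the two columns shrinks roughly as \(\ln N / N\), which is why (3) is only an approximation to (1) for small systems.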

We therefore adopt a broader concept of ‘entropy’ than that normally used in the physical sciences. Climatologists will be familiar with the thermodynamic entropy S, which has a clearly defined meaning as the state function \(S = \int \delta Q/T\) (Clausius) or \(S = k \ln \mathbb{W}\) (Boltzmann), where δQ is the increment of heat entering a system in a reversible process, T is temperature, k is the Boltzmann constant and \(\mathbb{W}\) is the number of microstates within a given realization (macrostate) of a system. Its rate of change is dS/dt, of which the excess (exported) component is commonly termed the thermodynamic entropy production \(\dot{\sigma}\) (de Groot and Mazur 1984; Niven 2009, 2010). However, under the MaxProb or MaxEnt approach adopted here, entropy acquires a more fundamental meaning in terms of the probabilistic state space of a system, however defined. To emphasise their generic character, such entropies are here denoted \(\mathfrak{H}.\) The thermodynamic entropy is in fact a special case of the generic entropy, being derivable by the application of MaxEnt to an energetic system (Boltzmann 1877; Planck 1901; Jaynes 1957, 1963; Jaynes and Bretthorst 2003). The ensuing analyses are based entirely on generic entropy functions, not necessarily related to S, although much of the underlying mathematical structure is identical.

The aim of this study is to construct a framework for the synthesis of climate projections from multiple climate models, based on the MaxProb (hence MaxEnt) principle. By analogy with thermodynamics, two approaches are presented, involving constraints on the properties of individual climate models or of ensembles of climate models. In each case, the analysis identifies the most representative (most probable) model from a set of climate models, circumventing the need to calculate the entire set. Other implications of these frameworks, which arise from the mathematical structure described by Jaynes (1957, 1963) and Jaynes and Bretthorst (2003), are examined. In addition, we report a curious finite-time limit on the minimum cost of varying the overall framework at specified rates of change, using a theorem from finite-time thermodynamics (Ruppeiner 1979; Salamon et al. 1980; Salamon and Berry 1983; Nulton et al. 1985; Niven and Andresen 2009).

2 Derivations

Consider an individual Earth general climate model (GCM), composed of J separable computational components. Each component j = 1, …,J is executed by a single choice i(j) of algorithm, methodology or paradigm, from a total of I(j) possible choices. As shown in Fig. 1, this gives a combinatorial scheme in which an individual model is constructed from a set of unique choices i(j) ∈ {1, …, I(j)}, ∀ j. We assume that all models are calculated using the same set of input parameters and assumptions \({\varvec{\theta}};\) moreover, to accommodate variability or errors in \({\varvec{\theta}},\) each model will yield a set or domain of climate projections, which can be explored by Monte Carlo analysis or by some other means. If we move beyond the deterministic mindset that an individual climate model must be the “correct” one, how should we weight the projection sets from different climate models, to obtain a (statistical) picture of their merged sets of projections? One could simply combine an available set of model outputs using equal or assigned weighting factors, as suggested in Stocker et al. (2010), but unless every possible combination has been computed, the resulting composite model will be rather arbitrary. In addition, if the model space is infinite (or merely very large), it will be impossible to compute the composite model in the lifetime of the universe (or in any reasonable time frame). Moreover, the use of equal weights does not allow the incorporation of additional constraints on the model space. We therefore propose a MaxProb-based (hence MaxEnt-based) framework for the weighting of multiple climate models, for which two distinct approaches are available.
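The combinatorial growth of the model space is easy to quantify: with J independent components and I(j) choices for component j, there are \(\prod_{j=1}^{J} I(j)\) distinct models. The Python sketch below enumerates and counts them. The choice counts [3, 4, 3] match the worked example of Sect. 2.1, while the 20-component case is purely hypothetical; the helper names are illustrative only.

```python
from itertools import product
from math import prod

def count_models(choices_per_component):
    """Number of distinct models: product of the number of choices I(j) over components j."""
    return prod(choices_per_component)

def enumerate_models(choices_per_component):
    """Yield every model as a tuple (i(1), ..., i(J)) of 1-based choice indices."""
    return product(*(range(1, I_j + 1) for I_j in choices_per_component))

small = [3, 4, 3]                  # I(j) for J = 3 components
models = list(enumerate_models(small))
print(count_models(small))         # 36
print(models[:3])                  # [(1, 1, 1), (1, 1, 2), (1, 1, 3)]

large = [10] * 20                  # hypothetical: J = 20 components, 10 choices each
print(count_models(large))         # 10**20 models, far too many to run individually
```

Exhaustive enumeration is trivial for the small case but hopeless for the large one, which is the motivation for computing the most probable model directly.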

2.1 “Microcanonical” framework

We first construct a “microcanonical” climate model weighting framework, based on the properties of individual climate models. Extending the representation in Fig. 1, consider a single climate model shown in Fig. 2a, in which we choose to rank each choice of algorithm or method i(j) by its cost or energy \(\epsilon_{ij}\), indicating (for example) the relative programming and computational cost of execution of this particular choice. Each energy level i(j) is considered to have the degeneracy \(g_{ij} \geq 1\), equal to the number of choices which share the same cost \(\epsilon_{ij}\). The ranking scheme i(j) therefore accounts for, but does not distinguish between, choices of equal cost. Each level i(j) is taken to have the occupancy \(m_{ij} \in \{0,1\}\) (the choices are unique). From simple probabilistic considerations (Brillouin 1930; Fortet 1977; Read 1983) for equiprobable degenerate choices, the probability of a given choice i(j) is given by the reduced multinomial distribution:

where \({\bf m}_j = \{m_{1j},\ldots,m_{I(j)j}\}, {\bf g}_j= \{g_{1j},\ldots,g_{I(j)j}\}, G_j = \sum\nolimits_{i=1}^{I(j)} g_{ij}\) and superscript μ denotes the microcanonical framework. Equation (4) reduces to \(\mathbb{P}_{\tau|j}^{(\mu)} = {g_{\tau j}}/{G_j},\) where τ(j) is the selected choice, but it is preferable to keep the \(m_{ij}\) explicit using (4). The probability of selecting a single overall model, assuming that the J components are independent, is therefore given by the “multi-multinomial”:
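For a model that selects choice τ(j) in each component, the reduced form \(\mathbb{P}_{\tau|j}^{(\mu)} = g_{\tau j}/G_j\) applies component by component, and independence of the J components turns the overall probability into a product. A short Python sketch, in which the degeneracies `g` are hypothetical values and the level index τ is 0-based for convenience:

```python
from math import prod

def choice_probability(g_j, tau):
    """P(tau | j) = g_{tau j} / G_j for one component (tau is a 0-based level index)."""
    return g_j[tau] / sum(g_j)

def model_probability(g, taus):
    """Probability of a whole model, assuming the J components are independent."""
    return prod(choice_probability(g_j, tau) for g_j, tau in zip(g, taus))

# Hypothetical degeneracies g_ij for J = 3 components with 3, 4 and 3 levels
g = [[1, 2, 1], [1, 3, 2, 1], [1, 2, 1]]
print(model_probability(g, [1, 1, 1]))   # (2/4) * (3/7) * (2/4)
```

Summing `model_probability` over every combination of levels returns 1, as expected for a product of normalised per-component distributions.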

where m and g are the respective matrices of \(m_{ij}\) and \(g_{ij}\). Each model is subject to J constraints on the total occupancy within each component j:

$$ \sum_{i=1}^{I(j)} m_{ij} = 1, \quad j = 1, \ldots, J $$(6)

Assuming that the costs are additive over the J components, we can also include a constraint on the total cost E of running the overall model:

$$ \sum_{j=1}^{J} \sum_{i=1}^{I(j)} m_{ij}\, \epsilon_{ij} = E $$(7)

To determine the most probable or equilibrium model, given the above occupancy and total energy constraints, we should maximise (5) with respect to the unknowns \(\{m_{ij}\}\), subject to (6)–(7). From the Boltzmann definition (1) with K = 1, this is equivalent to maximising the entropy:

subject to the same constraints. We again emphasise that (8) is defined on the space of climate models, and has no connection to the thermodynamic entropy S. If one adopts the Stirling (1730) approximation \(\ln {m_{ij}!}\approx m_{ij} \ln m_{ij}- m_{ij}\), which holds for large \(m_{ij}\) (and so is not strictly valid here, since \(m_{ij} \in \{0,1\}\)), (8) reduces to:

Extremisation of (9) subject to (6)–(7) yields the (microcanonical) Boltzmann distribution at equilibrium:

where * denotes the asymptotic (Stirling-approximate) extremum, \(\lambda_{0j}\) and \(\lambda_E\) are Lagrangian multipliers respectively for the allocation (6) and total energy (7) constraints, and \(Z_j^{(\mu)} = e^{\lambda_{0j}+1} = \sum\nolimits_{i=1}^{I(j)} g_{ij} e^{- \lambda_E \epsilon_{ij}}\) is the jth microcanonical partition function. Equation (10) can be solved in conjunction with (7) to calculate the predicted occupancies \(m_{ij}^{*}.\) If the occupancies are restricted to discrete values {0,1}, this will yield the choices i(j) of the optimal climate model, subject to the total energy constraint E. In practice, numerical solution will typically give floating-point values of \(m_{ij}^{*},\) which can be used as weighting factors with which to combine multiple models of the same total energy E.
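As a sketch of how (10) might be solved in practice, the Python code below assumes (10) has the Boltzmann form \(m_{ij}^{*} = g_{ij} e^{-\lambda_E \epsilon_{ij}}/Z_j^{(\mu)}\) implied by the partition function above, and finds \(\lambda_E\) by bisection so that the weights reproduce a prescribed total energy E. Bisection works because the expected energy falls monotonically as \(\lambda_E\) increases (its derivative is minus the variance of the energy). The degeneracies and energy levels are hypothetical, not the values (12) of the worked example, and the function names are illustrative only.

```python
import math

def boltzmann_weights(g, eps, lam):
    """Asymptotic weights m_ij = g_ij exp(-lam * eps_ij) / Z_j for each component j."""
    weights = []
    for g_j, eps_j in zip(g, eps):
        # Work with log-terms and subtract the maximum to avoid overflow (log-sum-exp).
        logs = [math.log(g_ij) - lam * e_ij for g_ij, e_ij in zip(g_j, eps_j)]
        top = max(logs)
        terms = [math.exp(a - top) for a in logs]
        z = sum(terms)                      # Z_j, up to the common factor exp(top)
        weights.append([t / z for t in terms])
    return weights

def total_energy(g, eps, lam):
    """Total cost E = sum_j sum_i m_ij eps_ij under the weights above."""
    m = boltzmann_weights(g, eps, lam)
    return sum(m_ij * e_ij for m_j, eps_j in zip(m, eps) for m_ij, e_ij in zip(m_j, eps_j))

def solve_lambda(g, eps, E, lo=-50.0, hi=50.0, tol=1e-12):
    """Bisection for lambda_E; total_energy decreases monotonically as lam increases."""
    if not total_energy(g, eps, hi) <= E <= total_energy(g, eps, lo):
        raise ValueError("E lies outside the range attainable within the bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if total_energy(g, eps, mid) > E:
            lo = mid                        # energy too high: lambda must increase
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical degeneracies g_ij and energy levels eps_ij for J = 3 components
g = [[1, 2, 1], [1, 3, 2, 1], [1, 2, 1]]
eps = [[3, 5, 7], [3, 5, 7, 9], [3, 5, 7]]
lam_E = solve_lambda(g, eps, E=15.0)
m_star = boltzmann_weights(g, eps, lam_E)
print(lam_E, m_star)
```

Because each row of `m_star` sums to one, constraint (6) is satisfied automatically and only the energy constraint (7) needs a root search.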

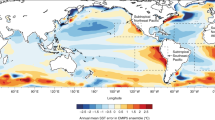

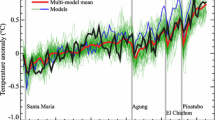

Combinatorial representations of (a) the microcanonical framework, showing a single model composed of \(g_{ij}\) degenerate choices i(j) for each model component \(j=1,\ldots,J\) (ranked by energy level \(\epsilon_{ij}\)); and (b) the canonical framework, composed of an ensemble of N amalgamated microcanonical models. Ball numbers denote the model index.

As noted, since \(m_{ij} \in \{0,1\}\), Stirling’s approximation does not strictly apply to the above analysis, and so (10) is only an approximate solution. This can be addressed by directly maximising the non-asymptotic entropy (8) with respect to \(m_{ij}\), subject to (6)–(7), giving the equilibrium distribution (Niven 2005, 2006, 2009, 2009):

where # denotes the non-asymptotic extremum and \(\Uplambda^{-1}(y)= \psi^{-1}(y)-1\) is the upper inverse of the function \(\Uplambda(x)=\psi(x+1),\) defined for convenience, in which ψ(x) is the digamma function. (Note that (11) can be written with additional terms in \(G_j\) and N (cf. Niven 2009); these are here incorporated in \(\lambda_{0j}\).) In this case, no explicit partition functions exist, and (11) must be solved in conjunction with (6)–(7). This method will give more precise values of the optimal weighting factors \(m_{ij}^{\#}\), although in practice its numerical solution can be difficult. The non-asymptotic solution (11) is itself an approximation to the true discrete MaxProb solution (with \(m_{ij} \in \{0,1\}\)), which must be identified by a (computationally expensive) combinatorial search scheme.
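The function \(\Uplambda^{-1}\) has no closed form, but it is cheap to evaluate numerically. Since \(y = \Uplambda(x) = \psi(x+1)\) implies \(x = \psi^{-1}(y) - 1\), the Python sketch below inverts the digamma function on its upper (positive) branch by Newton's method. It uses SciPy's `digamma` and `polygamma` and a standard starting guess (\(e^{y} + 1/2\) for \(y \geq -2.22\), otherwise \(-1/(y + \gamma)\), with γ the Euler-Mascheroni constant). This is one possible numerical route, not necessarily the one used to produce the results below; the function names are hypothetical.

```python
import numpy as np
from scipy.special import digamma, polygamma

def inv_digamma(y, iters=50, tol=1e-14):
    """Upper-branch inverse of the digamma function psi, by Newton's method."""
    y = float(y)
    # Starting guess: exp(y) + 1/2 for y >= -2.22, else -1/(y + euler_gamma).
    x = np.exp(y) + 0.5 if y >= -2.22 else -1.0 / (y + np.euler_gamma)
    for _ in range(iters):
        step = (digamma(x) - y) / polygamma(1, x)   # (psi(x) - y) / psi'(x)
        x -= step
        if abs(step) < tol * max(1.0, abs(x)):
            break
    return float(x)

def inv_Lambda(y):
    """Upper inverse of Lambda(x) = psi(x + 1), i.e. psi^{-1}(y) - 1."""
    return inv_digamma(y) - 1.0

print(inv_Lambda(digamma(3.0)))   # recovers x = 2, since Lambda(2) = psi(3)
```

Because ψ is increasing and concave for positive arguments, Newton iterates approach the root from below after the first step, so the iteration is well behaved from this starting guess.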

Example

The above framework can be demonstrated by a simple example, in which a climate model is constructed from J = 3 components, with I = [3, 4, 3] choices of algorithm. The degeneracies and energy levels are taken as:

In this framework, more (degenerate) algorithms, and algorithms with a fourth energy level, are available for model component 2. For a total energy per model of E = 17 units, the inferred asymptotic (10) and non-asymptotic (11) solutions are, respectively:

and

All calculations were conducted in Maple 14. The equilibrium model should thus be constructed using the weights in \(\user2{m}^{*}\) (or, arguably, \(\user2{m}^{\#}\)). In this example, algorithms of intermediate cost (the second energy level) have the highest weighting. The asymptotic and non-asymptotic solutions differ somewhat, both in the weights \(\user2{m}\) and in the Massieu functions \({{\varvec{\lambda}_{0}}}\), owing to the small model space of this simplified example. The energy multipliers \(\lambda_E\) of the two solutions are, however, quite similar.

2.2 “Canonical” framework I

The foregoing methodology is mathematically sound and provides a formal framework for the combination of different climate models. It is, however, somewhat restrictive in that it only includes models of a single total energy E. It is possible to conduct the analysis at a higher “canonical” level, in the same manner as in thermodynamics, by the analysis of “systems of systems”, in this case involving ensembles of individual climate models. This is shown in Fig. 2b, in which an ensemble is constructed by collecting a sample (without replacement) of N individual models, and amalgamating the results. This can be represented by a combinatorial scheme in which distinguishable balls, labelled by the model index z ∈ {1, …, N}, are allocated to distinguishable levels i(j), again indicating choices of energy level \(\epsilon_{ij}\) with degeneracy \(g_{ij}\). This gives the occupancies \(n_{ij} \in \{0, 1, \ldots, N\}\) for each energy level of the ensemble, which are connected to those for each model by:

The probability of a specified set of occupancies n ij for a particular j is now given by (Brillouin 1930; Fortet 1977; Read 1983):

where \({\bf n}_j = \{n_{1j},\ldots,n_{I(j)j}\}\), while χ denotes the canonical framework. The multinomial factor \(N! \left / \prod\nolimits_{i=1}^{I(j)}\right. {n_{ij}!}\) accounts for the number of permutations of models which attain the same set of occupancies \({\bf n}_j\). The probability of a specified ensemble, again assuming J independent components, is thus given by the “multi-multinomial”:
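The permutation count carried by the multinomial factor can be confirmed by brute force for a small ensemble. The Python sketch below assigns N labelled models to the levels of one component in every possible way and counts the assignments that reproduce a given occupancy vector \({\bf n}_j\). The occupancy vectors are arbitrary illustrations and the function names are hypothetical.

```python
from collections import Counter
from itertools import product
from math import factorial, prod

def multinomial_factor(occupancies):
    """N! / prod_i n_ij! for one component j."""
    return factorial(sum(occupancies)) // prod(factorial(n) for n in occupancies)

def brute_force_count(occupancies):
    """Count assignments of N labelled models to the levels that give these occupancies."""
    n_levels, N = len(occupancies), sum(occupancies)
    count = 0
    for assignment in product(range(n_levels), repeat=N):   # assignment[z] = level of model z
        tally = Counter(assignment)
        if all(tally[i] == n for i, n in enumerate(occupancies)):
            count += 1
    return count

for n_j in ([2, 1, 1], [3, 0, 2], [1, 1, 1, 1]):
    print(n_j, multinomial_factor(n_j), brute_force_count(n_j))
```

The brute-force loop visits \(I(j)^N\) assignments, so it is only practical for tiny ensembles; the closed-form factor is what enters the probability.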

where n is the matrix of \(n_{ij}\), whence (1) with \(K=N^{-1}\) gives the entropy:

This is subject to constraints on the occupancies, given by the first part of (16).

How should we analyse ensembles of models? We could, in the first instance, examine the set of all possible models, of cardinality \(\prod\nolimits_{j=1}^{J} G_j^{N}\) (Niven and Grendar 2009). This would not, however, be very informative, since all models would a priori be of equal weight and so would not be discriminated by the MaxProb (or MaxEnt) method. The total ensemble also does not allow the inclusion of additional information about the desired set of models. If, on the other hand, we impose a constraint on the mean energy of the ensemble:

we then impose a decision rule on its desired composition, namely, on the average cost of constructing its constituent models. In contrast to the microcanonical framework, this allows models of greater-than-average total energy E > 〈E〉, so long as these are balanced in the ensemble by models of lower energy E < 〈E〉. Combining (19) with (16) and (20) gives the Lagrangian:

where \(\kappa_{0j}\) and \(\kappa_E\) are Lagrangian multipliers for the allocation (16) and energy (20) constraints. Extremisation gives the non-asymptotic equilibrium solution:

(again all constant terms are brought into \(\kappa_{0j}\)). For any given N, these can be solved numerically in conjunction with the constraints (16) and (20), to give the optimum number of times (weighting factor) \(n_{ij}^{\#}\) that each choice i(j) should be included in the ensemble, subject to 〈E〉.

When the factorials in (19) satisfy Stirling’s approximation, (22) gives the (canonical) Boltzmann distribution at equilibrium:

where \(Z_j^{(\chi)} = N e^{\kappa_{0j}+1} = \sum\nolimits_{i=1}^{I(j)} g_{ij} e^{- \kappa_E \epsilon_{ij}}\) is the jth canonical partition function.
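Because each component of (23) is normalised to N, the asymptotic fractions \(n_{ij}^{*}/N\) satisfy the same equations as the microcanonical weights \(m_{ij}^{*}\) when \(\langle E \rangle = E\); only \(\kappa_{0j}\) shifts with N, through \(Z_j^{(\chi)} = N e^{\kappa_{0j}+1}\). The Python sketch below illustrates this, assuming (23) has the form \(n_{ij}^{*} = N g_{ij} e^{-\kappa_E \epsilon_{ij}}/Z_j^{(\chi)}\) implied by the partition function above. It solves for \(\kappa_E\) with SciPy's `brentq`; the degeneracies, energies and target 〈E〉 are hypothetical.

```python
import math
from scipy.optimize import brentq

def canonical_occupancies(g, eps, kappa_E, N):
    """Asymptotic occupancies n_ij = N g_ij exp(-kappa_E eps_ij) / Z_j and multipliers kappa_0j."""
    n, kappa_0 = [], []
    for g_j, eps_j in zip(g, eps):
        terms = [g_ij * math.exp(-kappa_E * e_ij) for g_ij, e_ij in zip(g_j, eps_j)]
        Z_j = sum(terms)                          # jth canonical partition function
        n.append([N * t / Z_j for t in terms])
        kappa_0.append(math.log(Z_j / N) - 1.0)   # from Z_j = N exp(kappa_0j + 1)
    return n, kappa_0

def mean_energy(g, eps, kappa_E):
    """Mean total energy per model <E>; it does not depend on N, so N = 1 is used."""
    n, _ = canonical_occupancies(g, eps, kappa_E, N=1)
    return sum(n_ij * e_ij for n_j, eps_j in zip(n, eps) for n_ij, e_ij in zip(n_j, eps_j))

# Hypothetical degeneracies and energy levels for J = 3 components
g = [[1, 2, 1], [1, 3, 2, 1], [1, 2, 1]]
eps = [[3, 5, 7], [3, 5, 7, 9], [3, 5, 7]]
kappa_E = brentq(lambda k: mean_energy(g, eps, k) - 15.0, -5.0, 5.0)

for N in (1, 27, 1000):
    n, kappa_0 = canonical_occupancies(g, eps, kappa_E, N)
    print(N, [round(x / N, 6) for x in n[1]], [round(k, 4) for k in kappa_0])
```

The printed fractions are identical for every N, while each \(\kappa_{0j}\) decreases by \(\ln N\).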

Example

The canonical framework can be demonstrated using the example described previously (12), now constrained by a mean total energy per model of 〈E〉 = 17 units (less than the mean of all possible models, 〈E〉 = 24.3904 units). The inferred asymptotic solution (23) is identical to (13), i.e.:

For N = 27 (say) this gives \({\varvec{\kappa_0}}^{*} =[- 3.1766, -2.2565, - 3.1766]^{\top}\). In comparison, the non-asymptotic solution (22) at N = 27 is:

Compared with the microcanonical non-asymptotic solution (14), the canonical non-asymptotic solution at N = 27 exhibits a more uniform distribution for each j, and is closer to the asymptotic form (24).

2.3 “Canonical” framework II

One difficulty with the above canonical framework is that, like its microcanonical precursor, it still requires separability of the model into J distinct components, for which the costs \(\epsilon_{ij}\) are additive. In more general situations, this separability may not be possible due to coupling between components. In that case we must revert to a model space based on ensembles of entire models. Severing all connection to the components j, we consider a model space from which we collect a sample (ensemble) of \(\mathcal{{N}}\) models, containing \({n}_\imath\) models each of total energy \(E_\imath\). Each energy level has degeneracy \(g_\imath\). The probability of a specified ensemble is:

where \(\mathcal{{G}} = \sum\nolimits_{\imath=1}^{I} g_{\imath}\). Boltzmann’s equation (1) with \(K=\mathcal{{N}}^{-1}\) gives the entropy:

This is subject to the occupancy and mean ensemble energy constraints:

Forming the Lagrangian and extremising gives the non-asymptotic equilibrium occupancies:

where \(\varphi_0\) and \(\varphi_E\) are Lagrangian multipliers for the occupancy and energy constraints. If (27) satisfies the Stirling approximation, the distribution reduces to:

where \(Z^{(\chi)}_{II}= \mathcal{{N}} e^{\varphi_{0}+1} = \sum\nolimits_{\imath=1}^{I} g_{\imath} e^{- \varphi_E E_{\imath}}\) is the canonical II partition function. Either (30) or (if valid) (31) can be solved in conjunction with the constraints (28)–(29), to give the weights \(n_\imath\) of the most representative model.
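When the components cannot be separated, the same machinery applies directly to whole-model energies. The Python sketch below assumes (31) has the Boltzmann form \(n_\imath = \mathcal{N} g_\imath e^{-\varphi_E E_\imath}/Z^{(\chi)}_{II}\) implied by the partition function above, and solves for \(\varphi_E\) with SciPy's `brentq` so that the mean energy constraint holds. The energies \(E_\imath\), degeneracies \(g_\imath\), target 〈E〉 and ensemble size of 50 models are all hypothetical.

```python
import numpy as np
from scipy.optimize import brentq

# Hypothetical whole-model energy levels E_i and degeneracies g_i (no component structure)
E_levels = np.array([9.0, 12.0, 15.0, 18.0, 24.0])
g_levels = np.array([1.0, 4.0, 6.0, 4.0, 1.0])

def occupancies(phi_E, N_models):
    """Asymptotic occupancies n_i = N g_i exp(-phi_E E_i) / Z_II."""
    logs = np.log(g_levels) - phi_E * E_levels
    w = np.exp(logs - logs.max())          # shift exponents to avoid overflow
    return N_models * w / w.sum()

def mean_energy(phi_E):
    """Mean total energy per model in the ensemble."""
    return float(occupancies(phi_E, 1.0) @ E_levels)

target = 14.0                              # hypothetical mean ensemble energy <E>
phi_E = brentq(lambda f: mean_energy(f) - target, -10.0, 10.0)
n = occupancies(phi_E, N_models=50)
print(phi_E, n, n.sum(), n @ E_levels / 50)
```

Since the target lies below the degeneracy-weighted mean of 243/16, the solved multiplier is positive, shifting weight towards cheaper models.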

2.4 Summary

At this point, it is worth summarising some important features of the microcanonical and two canonical frameworks proposed:

-

As evident from the predicted solutions (10)–(11), (22)–(23) and (30)–(31), if one seeks the optimal model to describe a set of climate models, it is not necessary to compute all possible combinations of models. Using the MaxProb method, one can directly calculate the single model, or a reduced set of models, which best represents the model set, subject to constraint(s) on the model or ensemble properties. The effect of two competing constraints is examined in the next section.

-

The microcanonical framework imposes constraint(s) on individual models, whereas the two canonical frameworks impose constraint(s) over ensembles of models. The latter enable the synthesis of larger sets of models.

-

Note that, due to the assumed independence of the J model components, the microcanonical and canonical I frameworks are described by the “multi-multinomial” distributions (5) and (18). The choices i(j) for a specified j = ϑ are thus independent of the choices for all other j ≠ ϑ. The MaxProb prediction can therefore be computed using individual models composed of whichever choices i(j) are convenient, so long as the overall set conforms to the MaxProb prediction. In the canonical II framework, we overcome the difficulty of coupled model components by considering ensembles of entire models, ranked by the total energy of each model and constrained by the mean ensemble energy.

-

How should we interpret the Lagrangian multipliers on the energy constraint? By analogy with thermodynamics, these can be interpreted as \(\lambda_E = 1/kT^{(\mu)}\), \(\kappa_E = 1/kT^{(\chi)}\) and \(\varphi_E = 1/kT^{(\chi)}_{II}\), where the T parameters are framework “temperatures” and k is a constant with units of energy (or cost) per temperature unit. The T’s are not thermodynamic temperatures, but express the distribution of energy over the available energy levels, in the relevant model or ensemble space. In effect, they serve as proxy variables for the total model cost E or mean ensemble cost 〈E〉.

-

Although the MaxProb framework is primarily designed to determine the most probable (maximum entropy) model, it is also possible to interrogate the Lagrangian to determine the minimum entropy model(s), i.e. those which lie farthest from the optimum. In this manner, one can explore the extremities of the model or ensemble space, to identify model outliers. Since minimum entropy solutions tend to lie on non-continuous boundaries of the solution domain, they are generally inaccessible to extremisation methods (Kapur and Kesavan 1992); nonetheless, they should be identifiable by numerical optimisation algorithms such as simulated annealing.

-

The mathematical structure of the output from the MaxEnt algorithm gives rise to many more features of the predicted solution. Some of these features are explored in later sections.

3 “Canonical” framework II with cost and benefit constraints

3.1 Derivation

We now examine a more comprehensive canonical II framework, in which we impose two constraints at the ensemble level: a constraint on the mean ensemble cost or energy 〈E〉, as before (29), and also a constraint on some measure of the average ensemble “worthiness” or “benefit” 〈B〉 (for example, a measure of its precision or accuracy). In this manner, we construct a MaxProb framework with which to conduct cost-benefit analyses of various ensembles of models, and to interrogate the trade-off between costs and benefits. In general, the energy and benefit levels will have different ranks, necessitating the use of different indices i ∈ {1, …, I} (as before) and \(\ell \in \{1, \ldots, \mathfrak{L}\}\). We therefore consider model choices ranked by total model energies \(E_{i\ell}\) and benefits \(B_{i\ell}\), of joint degeneracy \(g_{i\ell}\). The probability that an ensemble of \(\mathcal{{N}}\) models has the occupancies \(\{n_{i\ell}\}\) is governed by the multinomial:

where now \(\mathcal{{G}} = \sum\nolimits_{i=1}^{I} \sum\nolimits_{\ell=1}^{\mathfrak{L}} g_{i\ell}\) (for convenience we drop the superscript labels). From (1) with \(K=\mathcal{{N}}^{-1},\) we maximise the entropy:

subject to the constraints:

to give the non-asymptotic equilibrium solution:

where \(\omega_0\), \(\omega_E\) and \(\omega_B\) are the Lagrangian multipliers. If Stirling’s approximation applies, the entropy is:

whence extremisation gives:

where Z is the partition function.
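A sketch of how the two multipliers might be found numerically: assuming (39) takes the Boltzmann form \(n_{i\ell}/\mathcal{N} = g_{i\ell}\, e^{-\omega_E E_{i\ell} - \omega_B B_{i\ell}}/Z\) (an assumption about the sign convention, consistent with \(\omega_E = 1/kT\)), the Python code below minimises the convex dual function \(\ln Z + \omega_E \langle E \rangle + \omega_B \langle B \rangle\), whose gradient vanishes exactly when both mean constraints hold. This dual approach, via SciPy's `minimize` (BFGS) and `logsumexp`, is a standard technique swapped in for illustration, not the procedure used in this study. All levels, degeneracies and targets are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import logsumexp

g = np.array([[1.0, 2.0, 1.0],
              [2.0, 5.0, 2.0],
              [1.0, 3.0, 4.0]])                  # hypothetical joint degeneracies g_il
E = np.repeat([[2.0], [4.0], [7.0]], 3, axis=1)  # cost E_il (depends on i only here)
B = np.repeat([[1.0, 3.0, 6.0]], 3, axis=0)      # benefit B_il (depends on l only here)

def distribution(omega):
    """p_il = g_il exp(-w_E E_il - w_B B_il) / Z, together with ln Z."""
    w_E, w_B = omega
    logs = np.log(g) - w_E * E - w_B * B
    log_Z = logsumexp(logs)
    return np.exp(logs - log_Z), log_Z

def dual(omega, E_mean, B_mean):
    """Convex dual ln Z + w_E <E> + w_B <B> and its gradient (target minus current means)."""
    p, log_Z = distribution(omega)
    value = log_Z + omega[0] * E_mean + omega[1] * B_mean
    grad = np.array([E_mean - (p * E).sum(), B_mean - (p * B).sum()])
    return value, grad

E_mean, B_mean = 3.5, 4.0                        # hypothetical target mean cost and benefit
res = minimize(dual, x0=np.zeros(2), args=(E_mean, B_mean), jac=True,
               method="BFGS", options={"gtol": 1e-9})
p, log_Z = distribution(res.x)
print(res.x, (p * E).sum(), (p * B).sum())
```

The targets must lie strictly inside the range of attainable (E, B) averages; otherwise the dual is unbounded below and no finite multipliers exist.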

The Lagrangian multiplier \(\omega_E\) can again be interpreted as an inverse ensemble temperature \(\omega_E = 1/kT\), where k is a constant. The multiplier \(\omega_B\) can be considered as a measure of the overall benefit provided by the ensemble, in reciprocal benefit units. In effect, it acts as a proxy variable for the mean benefit 〈B〉. Since 〈B〉 measures the average information or value provided by the framework, it can be interpreted very crudely as a reciprocal density or volume, whereupon we can interpret \(\omega_B = P/kT\), in which P is a mean ensemble pressure (this interpretation should not be taken too seriously).

3.2 Jaynesian mathematical structure

Now that we have the main results, we can examine several important mathematical features of the solution. Most of these were reported in a generic context by Jaynes (Jaynes 1957, 1963; Jaynes and Bretthorst 2003) [see also Kapur and Kesavan (1992) and Tribus (1961)], although many were previously known in thermodynamics. The foregoing microcanonical and canonical I and II frameworks also exhibit these features, but it is more interesting to examine the effect of two competing constraints.

Firstly, for the Stirling-approximate case, substitution of (39) into (38), by sorting into expectations along the lines of Jaynes (1963), gives the asymptotic maximum entropy:

where for convenience we define the potential function (negative Massieu function) \(\phi = -\omega_0 = -\ln Z\). The maximum entropy of the ensemble, attained in its most probable state, is thus given by a constant term, plus the Massieu function, plus the sum of the products of the constraints and their conjugate Lagrangian multipliers.
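The structure of (40) can be checked numerically. Assuming the Stirling-approximate entropy is \(-\sum p_{i\ell} \ln(p_{i\ell}/g_{i\ell}) - \ln \mathcal{G}\) with \(p_{i\ell} = n_{i\ell}/\mathcal{N}\), and that (39) has the Boltzmann form \(p_{i\ell} = g_{i\ell}\, e^{-\omega_E E_{i\ell} - \omega_B B_{i\ell}}/Z\), the entropy equals \(-\ln \mathcal{G} - \phi + \omega_E \langle E \rangle + \omega_B \langle B \rangle\). The Python sketch below confirms this identity for arbitrary (hypothetical) multipliers and randomly generated levels.

```python
import numpy as np

rng = np.random.default_rng(0)
g = rng.integers(1, 5, size=(3, 4)).astype(float)   # hypothetical degeneracies g_il
E = rng.uniform(1.0, 10.0, size=(3, 4))             # hypothetical costs E_il
B = rng.uniform(0.0, 5.0, size=(3, 4))              # hypothetical benefits B_il
w_E, w_B = 0.4, -0.3                                # arbitrary multipliers

weights = g * np.exp(-w_E * E - w_B * B)
Z = weights.sum()
p = weights / Z                                     # Boltzmann form p_il = n_il / N

# Stirling-approximate entropy, including the constant -ln G
H_direct = -(p * np.log(p / g)).sum() - np.log(g.sum())

E_mean, B_mean = (p * E).sum(), (p * B).sum()
phi = -np.log(Z)                                    # potential = negative Massieu function
H_from_40 = -np.log(g.sum()) - phi + w_E * E_mean + w_B * B_mean
print(H_direct, H_from_40)
```

The constant \(-\ln \mathcal{G}\) does not depend on the multipliers, which is why it drops out of all the derivatives that follow.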

Since the entropy function and constraints are state variables on the space of ensembles of models, (40) provides a linear homogeneous equation which describes the framework. This can be used to examine the response of the framework to changes in the constraints and/or multipliers. For constant \(\mathcal{G}\) and ϕ we immediately see that (Jaynes 1957, 1963; Jaynes and Bretthorst 2003):

Second differentiation gives the Hessian matrix:

If the mixed derivatives are equivalent (i.e. \(\mathfrak{H}^{*}\) is continuous and continuously differentiable, at least up to second order), this gives the reciprocal or Maxwell-like relation (Jaynes 1963; Jaynes and Bretthorst 2003):

Equivalently, (40) can be rewritten as a function of the potential ϕ, whence it can be shown that (Jaynes 1957, 1963; Jaynes and Bretthorst 2003):

Second differentiation gives:

giving, again for equivalent mixed derivatives (Jaynes 1963; Jaynes and Bretthorst 2003):

From (42) and (45), it is evident that:

This defines a Legendre transformation between \(\mathfrak{H}^{*}\) and ϕ representations of the system (Jaynes 1963; Jaynes and Bretthorst 2003; Kapur and Kesavan 1992).
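Assuming the Stirling-approximate solution has the Boltzmann form \(p_{i\ell} \propto g_{i\ell}\, e^{-\omega_E E_{i\ell} - \omega_B B_{i\ell}}\), the potential \(\phi = -\ln Z\) satisfies \(\partial\phi/\partial\omega_E = \langle E \rangle\) and \(\partial\phi/\partial\omega_B = \langle B \rangle\), and its mixed second derivatives coincide, which is the content of the reciprocal relation for ϕ. The Python sketch below checks both statements by central finite differences; the levels and multipliers are hypothetical and the helper names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
g = rng.integers(1, 5, size=(3, 4)).astype(float)   # hypothetical degeneracies g_il
E = rng.uniform(1.0, 10.0, size=(3, 4))             # hypothetical costs E_il
B = rng.uniform(0.0, 5.0, size=(3, 4))              # hypothetical benefits B_il

def phi(w_E, w_B):
    """Potential phi = -ln Z(w_E, w_B)."""
    return -np.log((g * np.exp(-w_E * E - w_B * B)).sum())

def means(w_E, w_B):
    """Mean cost <E> and mean benefit <B> for the given multipliers."""
    p = g * np.exp(-w_E * E - w_B * B)
    p /= p.sum()
    return (p * E).sum(), (p * B).sum()

w_E, w_B, h = 0.3, 0.2, 1e-5
dphi_dwE = (phi(w_E + h, w_B) - phi(w_E - h, w_B)) / (2 * h)
dphi_dwB = (phi(w_E, w_B + h) - phi(w_E, w_B - h)) / (2 * h)
print(dphi_dwE, dphi_dwB, means(w_E, w_B))          # first derivatives equal <E>, <B>

# Mixed second derivatives of phi, taken in both orders
d2_EB = (means(w_E, w_B + h)[0] - means(w_E, w_B - h)[0]) / (2 * h)   # d<E>/dw_B
d2_BE = (means(w_E + h, w_B)[1] - means(w_E - h, w_B)[1]) / (2 * h)   # d<B>/dw_E
print(d2_EB, d2_BE)                                  # equal: reciprocal (Maxwell-like) relation
```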

Finally, we note that it may be desirable to rank climate models by more than two properties, e.g. the model cost E and several different benefits \(B_1, B_2, \ldots, B_M\). The foregoing analysis can readily be extended into as many dimensions as desired, giving the above mathematical structure as a function of the constraints \(\langle E \rangle, \langle B_1 \rangle, \ldots, \langle B_M \rangle\).

3.3 Implications

What are the implications of the above Jaynesian mathematical structure? In essence, it governs the effect of changes to the constraints and/or multipliers on the manifold of equilibrium positions of the framework. This includes:

-

Firstly, the first derivatives (41) and (44) can be interpreted as equations of state on the space of ensembles of models, describing the rate of change of the entropy or potential with respect to the constraints or their conjugate multipliers (Jackson 1968).

-

Secondly, the second derivatives (42) and (45) describe the susceptibilities of the framework, i.e. the functional connections between the constraints and multipliers. In thermodynamics, such susceptibilities include the heat capacity, isothermal compressibility, coefficient of thermal expansion and so on (e.g. Gilmore 1982; Callen 1985; Niven 2009; Niven and Andresen 2009); if desired, such parameters can also be defined for the model framework proposed here. The Maxwell-like relations (43) and (46) reflect the coupling between the constraints, such that changes in one constraint or its multiplier, at constant \(\mathfrak{H}^{*}\) or ϕ, will produce adjustments to the other pair.

-

Thirdly, the second derivative matrix (45) of the potential function ϕ contains even further information, since in the asymptotic limit (N → ∞) it is equivalent (with change of sign) to the variance-covariance matrix of the constraints (Jaynes 1957, 1963; Jaynes and Bretthorst 2003; Kapur and Kesavan 1992):

$$ {\varvec{\alpha}} = \left[\begin{array}{ll}\langle E^{2} \rangle - \langle E \rangle^{2} & \langle E B \rangle - \langle E \rangle \langle B \rangle\\ \langle E B \rangle - \langle E \rangle \langle B \rangle & \langle B^{2} \rangle - \langle B \rangle^{2}\end{array}\right]. $$(48)

Accordingly, \({\varvec{\alpha}}\) is positive definite (or semi-definite if singularities exist) (Kapur and Kesavan 1992). From the Legendre transformation (47), \({\mathbf a}\) is also positive definite (or semi-definite) (Kapur and Kesavan 1992). In consequence, from (42) and (45) (including the tensor sign reversals), \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) and \(\phi(\omega_E, \omega_B)\) are both concave functions.

Furthermore, the diagonal of (48) gives the variances of the ensemble with respect to each constraint, whose square roots are the standard deviations or “fluctuations”, usually expressed in normalised form by the coefficients of variation (Callen 1985):

$$ \begin{aligned} C_V(E) &= {\frac{\sqrt{\langle E^{2} \rangle - \langle E \rangle^{2}}} {\langle E \rangle}} = {\frac{1} {\langle E \rangle}} \sqrt{-{\frac{\partial \langle E \rangle} {\partial \omega_E}}}\\ C_V(B) &= {\frac{\sqrt{\langle B^{2} \rangle - \langle B \rangle^{2}}} {\langle B \rangle}} = {\frac{1} {\langle B \rangle}} \sqrt{-{\frac{\partial \langle B \rangle} {\partial \omega_B }}} \end{aligned}. $$(49)

The covariance, similarly normalised, provides a measure of the coupling between constraints (Callen 1985):

$$ \begin{aligned} C_V(E,B) &= \sqrt{{\frac{ \left| \langle E B \rangle - \langle E \rangle \langle B \rangle \right| } {\langle E \rangle \langle B \rangle}}}\\ &= \sqrt{{\frac{1} {\langle E \rangle \langle B \rangle}} \left | - {\frac{\partial \langle B \rangle}{\partial \omega_E}} \right | } = \sqrt{{\frac{1}{\langle E \rangle \langle B \rangle}} \left | - {\frac{\partial \langle E \rangle}{\partial \omega_B}} \right | } \end{aligned} $$(50)

-

Fourthly, the manifold of predicted equilibrium positions defined by \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) or \(\phi(\omega_E, \omega_B)\) can be interpreted as a framework geometry, analogous to the thermodynamic geometry examined by Gibbs (1873, 1873, 1875) [see also (Callen 1985; Gaggioli et al. 2002; Gaggioli and Paulus 2002)]. For example, if we consider 〈B〉 as a function of 〈E〉, as shown graphically in Fig. 3, we can represent positions of constant entropy \(\mathfrak{H}^{*}\) by a series of isentropic curves on this graph. From (40), these will be straight lines with negative gradient \(-\omega_E/\omega_B\), indicating that an increase in the energy or cost 〈E〉, at constant \(\mathfrak{H}^{*}\) (and ϕ), causes a corresponding decrease in 〈B〉. Of course, many other curves can also be plotted on the diagram, including isoenergetic, isobenefit, iso-\(\omega_E\) and iso-\(\omega_B\) curves, defined by rearrangements of (40). We can also plot \(\omega_B\) as a function of \(\omega_E\), on which we can construct isopotential curves with negative gradient −〈E〉/〈B〉. (Adopting the crude analogy of Sect. 3.1, these can be transformed to plots of P as a function of T for the model framework.) Three-dimensional graphs such as \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) or \(\phi(\omega_E, \omega_B)\) can also be constructed, containing isosurfaces of various kinds (Gibbs 1873, 1875). As pointed out by Gibbs (1873, 1873, 1875), it is advantageous to plot “fundamental equations” such as \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) or \(\phi(\omega_E, \omega_B)\), rather than forms unobtainable from these by Legendre transformation (such as \({\mathfrak{H}^{*}}{(\omega_E, \omega_B)}\)), so that all parameters not represented on the axes can be calculated for a given path simply by differentiation.

Recalling that the frameworks herein consist of all possible models consistent with the constraints, the resulting manifold \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) or \(\phi(\omega_E, \omega_B)\) should for the most part be continuous in its geometric space, reflecting infinitesimal changes in parameters and incremental changes in model algorithms. However, in some circumstances there may be discontinuities in the manifold, due to abrupt changes in model algorithm or adoption of different scientific paradigms. Such changes can be described as phase changes or tipping points within the model space, leading to assortments of stable and unstable solutions and path-dependent hysteresis effects. These may create particular difficulties, but can of course be handled in much the same manner as in thermodynamics.


Finally, it can be shown that either framework \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) or \(\phi(\omega_E, \omega_B)\) can be endowed with a Riemannian geometry (entirely distinct from the framework geometry just described), using the metric furnished by the respective (positive definite) Hessian matrix \({\mathbf a}\) or \({\varvec{\alpha}}\) (Weinhold 1975; Ruppeiner 1979; Salamon et al. 1980; Salamon and Berry 1983; Nulton et al. 1985; Niven and Andresen 2009). As noted, the two metrics, and hence the two geometries, are connected by the Legendre transformation (47). The Riemannian interpretation leads to an important physical limit: a least action bound on the cost, in units of \(\mathfrak{H}^{*}\) or \(\phi\), to move the framework from one equilibrium position to another at finite rates of change of the constraints or multipliers. This bound, which constitutes an extension of finite-time thermodynamics (Weinhold 1975; Ruppeiner 1979; Salamon et al. 1980; Salamon and Berry 1983; Nulton et al. 1985; Niven and Andresen 2009) but is in some sense allied to the informational limits identified by Szilard (1929), Landauer (1961), Bennett (1973) and similar workers (Leff and Rex 1990), is examined in more detail in the Appendix.
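
For an exponential-family distribution, assumed here to be \(p_i \propto \exp(-\omega_E E_i - \omega_B B_i)\), the Hessian of \(\ln Z\) with respect to the multipliers is the covariance matrix of \((E, B)\), the Jacobian of the mean constraints with respect to the multipliers is its negative, and the corresponding metric on the constraints is its inverse, so both metrics give the same line element. The Python sketch below checks these properties numerically. Identifying the covariance matrix and its inverse with the paper's \({\varvec{\alpha}}\) and \({\mathbf a}\) (up to sign convention) is an assumption made for illustration; the model values, rates and function names are hypothetical.

```python
import math

# Hypothetical model set (cost E_i, benefit B_i); illustrative values only.
E = [1.0, 2.0, 3.0, 4.0, 5.0]
B = [2.0, 2.5, 3.5, 3.0, 5.0]


def log_Z(w_E, w_B):
    """log of the partition function for p_i proportional to exp(-w_E*E_i - w_B*B_i)."""
    logits = [-w_E * e - w_B * b for e, b in zip(E, B)]
    m = max(logits)
    return m + math.log(sum(math.exp(x - m) for x in logits))


def covariance(w_E, w_B):
    """Covariance matrix of (E, B) under the canonical weights."""
    lz = log_Z(w_E, w_B)
    p = [math.exp(-w_E * e - w_B * b - lz) for e, b in zip(E, B)]
    mE = sum(pi * e for pi, e in zip(p, E))
    mB = sum(pi * b for pi, b in zip(p, B))
    cEE = sum(pi * (e - mE) ** 2 for pi, e in zip(p, E))
    cBB = sum(pi * (b - mB) ** 2 for pi, b in zip(p, B))
    cEB = sum(pi * (e - mE) * (b - mB) for pi, e, b in zip(p, E, B))
    return [[cEE, cEB], [cEB, cBB]]


def hessian_log_Z(w_E, w_B, h=1e-4):
    """Second derivatives of log Z with respect to the multipliers (central differences)."""
    f = log_Z
    hEE = (f(w_E + h, w_B) - 2 * f(w_E, w_B) + f(w_E - h, w_B)) / h**2
    hBB = (f(w_E, w_B + h) - 2 * f(w_E, w_B) + f(w_E, w_B - h)) / h**2
    hEB = (f(w_E + h, w_B + h) - f(w_E + h, w_B - h)
           - f(w_E - h, w_B + h) + f(w_E - h, w_B - h)) / (4 * h**2)
    return [[hEE, hEB], [hEB, hBB]]


def inverse_2x2(M):
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det], [-M[1][0] / det, M[0][0] / det]]


def quad_form(M, v):
    """v^T M v for a 2x2 matrix M."""
    return sum(v[i] * M[i][j] * v[j] for i in range(2) for j in range(2))


w_E, w_B = 0.3, 0.2
alpha = covariance(w_E, w_B)  # assumed metric in the multiplier representation
a = inverse_2x2(alpha)        # assumed metric in the constraint representation
Omega_dot = [0.05, -0.02]     # hypothetical rates of change of (w_E, w_B)
# Corresponding rates of change of (<E>, <B>): the Jacobian is -alpha
f_dot = [-(alpha[0][0] * Omega_dot[0] + alpha[0][1] * Omega_dot[1]),
         -(alpha[1][0] * Omega_dot[0] + alpha[1][1] * Omega_dot[1])]
print(hessian_log_Z(w_E, w_B), alpha)
print(quad_form(a, f_dot), quad_form(alpha, Omega_dot))  # equal line elements
```

The equality of the two quadratic forms is the discrete counterpart of the statement that the two Riemannian geometries are linked by Legendre transformation, since the rates of change in one representation map to the other through the Hessian.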

4 Conclusions

In this study, several maximum-entropy frameworks are presented for the synthesis of outputs from multiple Earth climate models, based on constraints on the properties of individual models (microcanonical framework) or ensembles of models (two canonical frameworks). The asymptotic and non-asymptotic entropy functions for each case are derived by combinatorial reasoning, and applied to simple systems constrained by the total model energy E (microcanonical) or mean ensemble energy 〈E〉 (canonical). In each case it is shown that the MaxEnt method identifies the most representative (most probable) model from a set of climate models, subject to the specified constraints, eliminating the need to calculate the entire set. The parametric and geometric implications of the underlying Jaynesian mathematical structure are examined, with reference to a canonical framework with competing cost and benefit constraints, allowing interrogation of the trade-off between costs and benefits. Finally, a finite-time limit on the minimum cost of modifying the synthesis framework, at finite rates of change, is reported.

The foregoing analysis therefore provides climate modellers—or those who must rank and combine climate models—with a rational tool to amalgamate a large set of models into a single representative model (or a small representative set). This enables the weighting of climate projections from different groups, and will also dramatically reduce the computational demand on the climate modelling community. Indeed, the benefits extend into other fields: as commented by a reviewer, for long-range weather forecasts it is common practice to combine projections from different meteorological models, to improve reliability. The MaxEnt frameworks proposed here could equally be applied to this task.
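
To make the weighting idea concrete, here is a minimal sketch of MaxEnt weighting under a single mean-cost constraint. It assumes the asymptotic (Shannon) canonical form \(p_i \propto \exp(-\omega_E E_i)\), rather than the non-asymptotic combinatorial entropies derived in the paper, and solves for \(\omega_E\) by bisection so that \(\sum_i p_i E_i\) matches a chosen target \(\langle E \rangle\). The costs, projections, target value and the helper name `maxent_weights` are hypothetical.

```python
import math


def maxent_weights(E, target, lo=-50.0, hi=50.0, iters=200):
    """Weights p_i proportional to exp(-w*E_i) whose mean cost equals `target`.

    Solves for the multiplier w by bisection; assumes the asymptotic
    (Shannon) canonical form with a single mean-cost constraint.
    """
    if not min(E) < target < max(E):
        raise ValueError("target must lie strictly between min(E) and max(E)")

    def weights(w):
        logits = [-w * e for e in E]
        m = max(logits)  # shift for numerical stability
        u = [math.exp(x - m) for x in logits]
        s = sum(u)
        return [ui / s for ui in u]

    def mean_cost(w):
        return sum(p * e for p, e in zip(weights(w), E))

    # mean_cost decreases monotonically in w (its derivative is -Var(E))
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mean_cost(mid) > target:
            lo = mid
        else:
            hi = mid
    w = 0.5 * (lo + hi)
    return w, weights(w)


# Hypothetical example: five models with costs E_i and projections y_i
E = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 2.6, 3.0, 3.4, 4.2]  # e.g. projected warming in K (illustrative)
w, p = maxent_weights(E, target=2.5)
projection = sum(pi * yi for pi, yi in zip(p, y))
print(f"w = {w:.4f}, weights = {[round(pi, 3) for pi in p]}, projection = {projection:.3f}")
```

Choosing the target equal to the unweighted mean cost recovers \(\omega_E = 0\) and uniform weights; lower targets shift weight toward cheaper models. Bisection is used because the mean cost is monotonic in \(\omega_E\), so a bracketing search is guaranteed to converge.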

A caveat to the foregoing analysis is that the inferred equilibrium climate model will not necessarily be the “most correct” model, but merely the one that is most representative of the available set of models. If the model space is incomplete, or if the models' underlying physical or modelling assumptions are incorrect, any resulting errors will also be incorporated in the equilibrium model. A more comprehensive probabilistic framework, which incorporates the errors associated with our lack of knowledge (of data, phenomena and models), would consist of a Bayesian inferential framework extending back to all raw climate data. This would be a substantial endeavour which, as its minimum condition, would require climate scientists to abandon their use of orthodox methods for statistical inference and parameter estimation (Jaynes and Bretthorst 2003).

Notes

This approach is applicable not only to climate models, but models of any type, including economic models.

References

Bennett CH (1973) Logical reversibility of computation. IBM J Res Dev 17:525–532

Boltzmann L (1877) Über die Beziehung zwischen dem zweiten Hauptsatze der mechanischen Wärmetheorie und der Wahrscheinlichkeitsrechnung, respective den Sätzen über das Wärmegleichgewicht. Wien. Ber 76:373–435; English transl.: Le Roux J (2002) 1–63 http://www.essi.fr/~leroux/

Brillouin L (1930) Les Statistiques Quantiques et Leurs Applications. Les Presses Universitaires de France, Paris

Callen HB (1985) Thermodynamics and an introduction to thermostatistics, 2nd edn. Wiley, NY

Fortet R (1977) Elements of probability theory. Gordon and Breach Science Publishers, London

Gaggioli RA, Paulus DM Jr (2002) Available energy—part II: Gibbs extended. J Energy Resour Technol ASCE 124:110–115

Gaggioli RA, Richardson DH, Bowman AJ (2002) Available energy—part I: Gibbs revisited. J Energy Resour Technol ASCE 124:105–109

Gibbs JW (1873) Graphical methods in the thermodynamics of fluids. Trans Conn Acad 2:309–342

Gibbs JW (1873) A method of graphical representation of the thermodynamic properties of substances by means of surfaces. Trans Conn Acad 2:382–404

Gibbs JW (1875–1878) On the equilibrium of heterogeneous substances. Trans Conn Acad 3:108–248 (1875–1876); 3:343–524 (1877–1878)

Gilmore R (1982) Le Châtelier reciprocal relations. J Chem Phys 76(11):5551–5553

Grendar M, Niven RK (2010) The Polya information divergence. Inf Sci 180:4189–4194

Grendár M Jr, Grendár M (2001) What is the question that MaxEnt answers? A probabilistic interpretation. In: Mohammad-Djafari A (ed) Bayesian inference and maximum entropy methods in science and engineering (MaxEnt 2000), AIP Conf Proc, 2001, pp 83–94

de Groot SR, Mazur P (1984) Non-equilibrium thermodynamics. Dover Publications, NY

Jackson EA (1968) Equilibrium statistical mechanics. Dover Publications, NY

Jaynes ET (1957) Information theory and statistical mechanics. Phys Rev 106:620–630

Jaynes ET (1963) Information theory and statistical mechanics. In: Ford KW (ed) Brandeis University Summer Institute, lectures in theoretical physics, vol 3: statistical physics. Benjamin-Cummings Publication, USA, pp 181–218

Jaynes ET, Bretthorst GL (eds) (2003) Probability theory: the logic of science. Cambridge University Press, Cambridge

Kapur JN, Kesavan HK (1992) Entropy optimization principles with applications. Academic Press, Boston

Kleidon A, Lorenz RD (eds) (2005) Non-equilibrium thermodynamics and the production of entropy: life, earth and beyond. Springer, Heidelberg

Landauer R (1961) Irreversibility and heat generation in the computing process. IBM J Res Dev 5:183–191

Leff HS, Rex AF (1990) Maxwell’s demon: entropy, information, computing. Princeton University Press, Princeton

McGuffie K, Henderson-Sellers A (2005) A climate modelling primer, 3rd edn. Wiley, NY

Niven RK (2005) Exact Maxwell-Boltzmann, Bose-Einstein and Fermi-Dirac statistics. Phys Lett A 342(4):286–293

Niven RK (2006) Cost of s-fold decisions in exact Maxwell-Boltzmann, Bose-Einstein and Fermi-Dirac statistics. Phys A 365(1):142–149

Niven RK (2009) Combinatorial entropies and statistics. Eur Phys J B 70:49–63

Niven RK (2009) Non-asymptotic thermodynamic ensembles. EPL 86:20010

Niven RK (2009) Derivation of the maximum entropy production principle for flow-controlled systems at steady state. Phys Rev E 80:021113

Niven RK (2010) Minimisation of a free-energy-like potential for non-equilibrium systems at steady state. Philos Trans B 365:1323–1331

Niven RK, Andresen B (2009). In: Dewar RL, Detering F (eds) Complex physical, biophysical and econophysical systems. World Scientific, Hackensack, p 283

Niven RK, Grendar M (2009) Generalized classical, quantum and intermediate statistics and the Polya urn model. Phys Lett A 373:621–626

Nulton J, Salamon P, Andresen B, Anmin Q (1985) Quasistatic processes as step equilibrations. J Chem Phys 83(1):334–338

Paltridge GW (1975) Global dynamics and climate—a system of minimum entropy exchange. Q J R Meteorol Soc 101:475–484

Paltridge G, Arking A, Pook M (2009) Trends in middle- and upper-level tropospheric humidity from NCEP reanalysis data. Theor Appl Climatol 98(3-4):351–359

Peixoto JP, Oort AH (1992) Physics of climate. AIP, Melville

Planck M (1901) Über das Gesetz der Energieverteilung im Normalspektrum. Annalen der Physik 4:553–563

Read CB (1983). In: Kotz S, Johnson NL (eds) Encyclopedia of statistical sciences, vol 3. Wiley, NY, pp 63–66

Ruppeiner G (1979) Thermodynamics: a Riemannian geometric model. Phys Rev A 20(4):1608–1613

Salamon P, Berry RS (1983) Thermodynamic length and dissipated availability. Phys Rev Lett 51(13):1127–1130

Salamon P, Andresen B, Gait PD, Berry RS (1980) The significance of Weinhold’s length. J Chem Phys 73(2):1001–1002. erratum 73(10):5407

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27:379–423, 623–659

Stirling J (1730) Methodus differentialis: sive tractatus de summatione et interpolatione serierum infinitarum. Gul. Bowyer, London, Propositio XXVII:135–139

Stocker TF, Qin D, Plattner G-K, Tignor M, Midgley PM (eds) (2010) Meeting report of the intergovernmental panel on climate change expert meeting on assessing and combining multi model climate projections, IPCC Working Group I Technical Support Unit, University of Bern, Bern, Switzerland

Szilard L (1929) Zeitschrift für Physik 53:840. English transl.: Rapoport A, Knoller M (1964). In: Leff HS, Rex AF (1990) Maxwell’s demon: entropy, information, computing. Princeton University Press, NJ, p 124

Tribus M (1961) Thermostatics and thermodynamics. D. Van Nostrand, Princeton

Vincze I (1974) Progress in statistics 2:869

Weinhold F (1975) Metric geometry of equilibrium thermodynamics. III. Elementary formal structure of a vector-algebraic representation of equilibrium thermodynamics. J Chem Phys 63(6):2488–2495

Acknowledgments

This work was inspired by discussions at the Mathematical and Statistical Approaches to Climate Modelling and Prediction workshop, Isaac Newton Institute for Mathematical Sciences, Cambridge, UK, 11 August to 22 December 2010. The author sincerely thanks the workshop organisers for travel support.


Appendix: The least action bound


The Riemannian geometric interpretation in Sect. 3.3 leads to a rather curious physical limit. Consider a path on the manifold of equilibrium positions defined by \({\mathfrak{H}^{*}}{(\langle E \rangle, \langle B \rangle)}\) or \(\phi(\omega_E, \omega_B)\), specified by some path parameter \(\xi\) in the model space, which may, but need not, correspond to time. The arc length \(\mathcal{L}\) of the path from position 1 to 2, represented by \(\xi = 0\) to \(\xi = \xi_{max}\), is given by (Niven and Andresen 2009):
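
$$ \mathcal{L} = \int_0^{\xi_{max}} \sqrt{\dot{\mathbf f}^{\top} {\mathbf a}\, \dot{\mathbf f}}\; d\xi = \int_0^{\xi_{max}} \sqrt{\dot{\varvec{\Upomega}}^{\top} {\varvec{\alpha}}\, \dot{\varvec{\Upomega}}}\; d\xi $$(51)
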
where \({\bf f}=[\langle E \rangle, \langle B \rangle]^{\top}, {\varvec{\Upomega}}=[\omega_E, \omega_B]^{\top}\) and the overdot indicates the rate of change with respect to ξ. Now, in the \(\mathfrak{H}^{*}\) representation, the total change in the framework entropy along the same path can be shown to be (Niven and Andresen 2009):
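
$$ \Updelta \mathfrak{H}^{*} = \bar{\epsilon}\, \mathcal{J}, \qquad \mathcal{J} = \frac{1}{2} \int_0^{\xi_{max}} \dot{\mathbf f}^{\top} {\mathbf a}\, \dot{\mathbf f}\; d\xi $$(52)
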
where \(\bar{\epsilon}\) is a mean dissipation parameter (e.g. minimum dissipation time) and \(\mathcal{{J}}\) is an action integral defined within the model space. Similarly, in the ϕ representation, the total change is:
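
$$ \Updelta \phi = -\bar{\epsilon}\, \mathcal{J}, \qquad \mathcal{J} = \frac{1}{2} \int_0^{\xi_{max}} \dot{\varvec{\Upomega}}^{\top} {\varvec{\alpha}}\, \dot{\varvec{\Upomega}}\; d\xi $$(53)
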
From the Cauchy-Schwarz inequality, (51)–(53) give, in either case:
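
$$ \Updelta \mathfrak{H}^{*} = -\Updelta \phi = \bar{\epsilon}\, \mathcal{J} \geq \frac{\bar{\epsilon}\, \mathcal{L}^{2}}{2\, \xi_{max}} $$(54)
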
Equation (54) can be considered as a generalised least action bound on processes on the manifold of optimal solutions. In essence, it specifies the minimum cost or penalty, in units of \(\mathfrak{H}^{*}\) or \(\phi\), to move the system from \(\xi = 0\) to \(\xi = \xi_{max}\) at the specified rates \({\dot{{\mathbf f}}}\) or \({\dot{\varvec{\Upomega}}}\). If the process occurs infinitely slowly, the lower bound of the action is zero (it is “reversible”); otherwise, it is necessary to pay the minimum penalty \(\Updelta {\mathfrak H}^{*}_{min} = - \Updelta \phi_{min} = \bar{\epsilon} \mathcal{{J}}_{min} = {\frac{1} {2}} \bar{\epsilon} {\mathcal{L}^{2}}/{\xi_{max}}\) to alter the framework within the finite parameter duration \(\xi_{max}\) (it is “dissipative”). In the present scenario, we assume that the costs \(\langle E \rangle\) and benefits \(\langle B \rangle\) of the model framework are realisable as external physical quantities, outside the model space itself; the entropy \(\mathfrak{H}^{*}\) and potential \(\phi\) will likewise be realisable, in units of k or the equivalent information units. Equation (54) therefore provides an informational limit on the minimum price of making alterations to a constrained modelling framework. (Of course, it applies to any modelling framework, not just to climate modelling.) In some sense, this limit is allied to the informational principles demonstrated by Szilard (1929), Landauer (1961), Bennett (1973) and many others (Leff and Rex 1990), although it is of quite different character.
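
The bound can be explored numerically. The Python sketch below assumes that the metric along the path is the covariance matrix of \((E, B)\) under \(p_i \propto \exp(-\omega_E E_i - \omega_B B_i)\), takes \(\mathcal{J} = \frac{1}{2}\int_0^{\xi_{max}} \dot{\varvec{\Upomega}}^{\top}{\varvec{\alpha}}\,\dot{\varvec{\Upomega}}\, d\xi\) (the normalisation implied by the minimum penalty \(\frac{1}{2}\bar{\epsilon}\mathcal{L}^{2}/\xi_{max}\)), sets \(\bar{\epsilon} = 1\), and uses hypothetical models and path endpoints. It shows that the arc length \(\mathcal{L}\) is unchanged by reparametrising the path, that \(\mathcal{J} \geq \mathcal{L}^{2}/(2\xi_{max})\) holds for each parametrisation, and that stretching the duration tenfold cuts the action tenfold, consistent with the reversible limit as \(\xi_{max} \to \infty\).

```python
import math

# Hypothetical model set (cost E_i, benefit B_i); illustrative values only.
E = [1.0, 2.0, 3.0, 4.0, 5.0]
B = [2.0, 2.5, 3.5, 3.0, 5.0]


def metric(w_E, w_B):
    """Assumed metric alpha: covariance of (E, B) under p_i ~ exp(-w_E*E_i - w_B*B_i)."""
    logits = [-w_E * e - w_B * b for e, b in zip(E, B)]
    m = max(logits)
    u = [math.exp(x - m) for x in logits]
    Z = sum(u)
    p = [ui / Z for ui in u]
    mE = sum(pi * e for pi, e in zip(p, E))
    mB = sum(pi * b for pi, b in zip(p, B))
    cEE = sum(pi * (e - mE) ** 2 for pi, e in zip(p, E))
    cBB = sum(pi * (b - mB) ** 2 for pi, b in zip(p, B))
    cEB = sum(pi * (e - mE) * (b - mB) for pi, e, b in zip(p, E, B))
    return cEE, cEB, cBB


def length_and_action(Om0, Om1, xi_max, r, r_dot, n=2000):
    """Midpoint-rule estimates of the arc length L and action J (epsilon-bar = 1)
    along the path Omega(xi) = Om0 + r(xi) * (Om1 - Om0), 0 <= xi <= xi_max."""
    dW = (Om1[0] - Om0[0], Om1[1] - Om0[1])
    h = xi_max / n
    L = J = 0.0
    for k in range(n):
        xi = (k + 0.5) * h
        s, s_dot = r(xi, xi_max), r_dot(xi, xi_max)
        cEE, cEB, cBB = metric(Om0[0] + s * dW[0], Om0[1] + s * dW[1])
        v = (s_dot * dW[0], s_dot * dW[1])  # Omega-dot at this point
        speed_sq = cEE * v[0] ** 2 + 2 * cEB * v[0] * v[1] + cBB * v[1] ** 2
        L += math.sqrt(speed_sq) * h
        J += 0.5 * speed_sq * h
    return L, J


# Two parametrisations of the same geometric path (r runs from 0 to 1)
linear = (lambda xi, T: xi / T, lambda xi, T: 1.0 / T)
quadratic = (lambda xi, T: (xi / T) ** 2, lambda xi, T: 2.0 * xi / T**2)

Om0, Om1 = (0.3, 0.2), (0.1, 0.5)  # hypothetical start and end multipliers
for name, (r, r_dot) in (("linear", linear), ("quadratic", quadratic)):
    for T in (1.0, 10.0):
        L, J = length_and_action(Om0, Om1, T, r, r_dot)
        print(f"{name:9s} xi_max={T:5.1f}  L={L:.6f}  J={J:.6f}  bound={L**2 / (2 * T):.6f}")
```

The discrete sums obey the same Cauchy–Schwarz inequality as the integrals, so the printed action never falls below the printed bound; equality would require a path traversed at constant metric speed.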


Cite this article

Niven, R.K. Maximum-entropy weighting of multiple earth climate models. Clim Dyn 39, 755–765 (2012). https://doi.org/10.1007/s00382-011-1163-5
