Abstract

The engineering design process often entails optimizing the underlying geometry while simultaneously selecting a suitable material. For a certain class of simple problems, the two are separable where, for example, one can first select an optimal material, and then optimize the geometry. However, in general, the two are not separable. Furthermore, the discrete nature of material selection is not compatible with gradient-based geometry optimization, making simultaneous optimization challenging. In this paper, we propose the use of variational autoencoders (VAE) for simultaneous optimization. First, a data-driven VAE is used to project the discrete material database onto a continuous and differentiable latent space. This is then coupled with a fully-connected neural network, embedded with a finite-element solver, to simultaneously optimize the material and geometry. The neural-network’s built-in gradient optimizer and back-propagation are exploited during optimization. The proposed framework is demonstrated using trusses, where an optimal material needs to be chosen from a database, while simultaneously optimizing the cross-sectional areas of the truss members. Several numerical examples illustrate the efficacy of the proposed framework. The Python code used in these experiments is available at github.com/UW-ERSL/MaTruss.

Graphical abstract

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

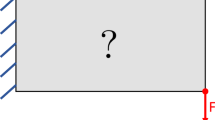

In engineering design, we are often faced with the task of optimizing the underlying geometry and selecting an optimal material [1]. As a simple example, consider the truss design problem illustrated in Fig. 1 where one must optimize the cross-sectional areas, while selecting, for simplicity, a single material for all the truss members. Formally, one can pose this as [2, 3]:

where \(\phi\) is the objective (for example, compliance), g denotes a set of constraints (such as yield stress and buckling), \({\varvec{f}}\) is the applied force, K is the truss stiffness matrix, and \({\varvec{u}}\) is the nodal displacements. The design variables are the cross-sectional areas of the members \({\varvec{A}} = \{A_1, A_2,\ldots ,A_{N}\}\), with limits \({\varvec{A}}_{{{\rm min}}}\) and \({\varvec{A}}_{{{\rm max}}}\), and the material choice \(m \in M\). We denote the collection of relevant material properties by \(\zeta _m\); these may include, for example, the Young’s modulus (\(E_m\)), cost per unit mass (\(C_m\)), mass density (\(\rho _m\)) and yield strength (\(Y_m\)).

1.1 Literature review

Observe that the two sets of design variables are tightly coupled. In other words, one cannot, for example, pick a material, and then optimize the cross-sectional areas (or, vice versa). To quote [4] “... either approach does not guarantee the optimal combination of geometry and material”. Furthermore, while the cross-sectional areas are continuously varying, the material choice is discrete, making the problem difficult to solve using classic gradient-based optimization. Note that treating material properties as continuous, independent design variables is not a viable option since the variables would simply converge to their optimal values. For example, Young’s modulus, tensile strength can be expected to converge to the maximum (upper limit), while the variables of cost and density would converge to the lower bound. However, such a material would never exist.

If the geometry is fixed, Ashby’s method [5] that relies on a performance metric, is the most popular strategy for material selection. Furthermore, for simple design problems where the loads, geometry and material functions are separable [6], a material index can be used to select the best material. This is simple, efficient and reliable. However, when there are multiple objectives or constraints, a weighted approach is recommended [7]. In practice, computing these weights is not trivial, and the material choice may be far from optimal. Several non-gradient methods have been proposed for material selection [8,9,10], but these cannot be integrated with gradient-based optimization.

An alternate concept of a design index was proposed in Ananthasuresh and Ashby [11]. It was shown that the design index can be used to construct a smooth material function, enabling simultaneous optimization of the geometry and selection of the material via gradient based optimization [4]. However, design indices were limited to simple determinate trusses. In [12], for the special case of a single stress constraint and a single load, the authors concluded that utmost two materials are sufficient and that the truss design problem can be cast as a linear programming problem. Unfortunately, this method does not apply when there are multiple constraints. Recently, the authors of [13] considered two materials (glue-laminated timber and steel) to design truss structures incorporating stress constraints as a mixed-integer quadratic problem (MIQPs). However, the authors note that “[MIQPs]... require algorithmic tuning and considerable computational power”. Another hybrid approach was proposed in [14] where a combination of the gradient-based methods such as sequential quadratic programming (SQP) and an evolution method such as genetic algorithm (GA) are used to solve MDNLPs. But these methods can be prohibitively expensive.

Mathematically, the problem of (discrete) material selection and (continuous) area optimization can be cast as mixed-discrete nonlinear programming problems (MDNLPs). Such problems are fairly common in engineering, and several solution strategies methods have been proposed; see [15,16,17]. However, regardless of the strategy, these methods entail repeated solution of a sequence of nonlinear programming problems with careful relaxations and approximations, making them sensitive to assumptions and underlying models [18]. Furthermore, the popular branch and bound algorithm used in solving MDNLPs [16] does not apply here since the optimization problem depends indirectly on the material index through the database.

1.2 Paper overview

The primary contribution of this work is the use of variational autoencoders (VAEs) to solve such problems. VAEs are a special form of neural networks that can convert discrete data, such as a material database, into a continuous and differentiable representation, making the design problem amenable to gradient-based optimization. In the proposed method (see Fig. 2), a data-driven VAE is trained using a material database, and the trained decoder is then integrated with neural networks to simultaneously optimize the geometry and material.

The proposed VAE framework is discussed in Sect. 2. In Sect. 3, the VAE is integrated with two additional and simple neural networks to solve the truss design problem. Specifically, we leverage the differentiable material representation to simultaneously optimize the geometry and material. Section 4 demonstrates the proposed framework through several numerical examples. In Sect. 5, limitations and future work are discussed.

2 A differentiable material representation

In this section, we discuss how variational autoencoders (VAEs) can be used to obtain a continuous and differentiable representation of a discrete material database. This will serve as a foundation for the next section on design optimization.

2.1 VAE architecture and training

VAEs are popular generative models that have wide applicability in data compression, semi-supervised learning and data interpolation; please see [19]. In essence, a VAE captures the data in a form that can be used to synthesize new samples similar to the input data. For example, VAEs have been used to generate new microstructures from image databases [20], in designing phononic crystals [21], and heat-conduction materials [22].

However, in this paper, we do not use VAEs to synthesize new data, instead we simply use the VAE’s ability to map uncorrelated data onto an abstract latent space. In that sense, VAEs are similar to principal component analysis (PCA) in its ability to extract useful information from the data. However, the nonlinear nature of VAEs allows for far greater generalization than PCA [23]. In particular, the proposed VAE architecture for capturing material properties is illustrated in Fig. 3, and consists of the following components:

-

1.

A four-dimensional input module corresponding to the four properties in Table 1, namely the Young’s modulus (E), cost (C), mass density (\(\rho\)) and yield strength (Y). The input set is denoted by \({\boldsymbol{\zeta }}\).

-

2.

An encoder F consisting of a fully-connected network of 250 neurons, where each neuron is associated with an ReLU activation function and weights [24].

-

3.

A two-dimensional latent space, denoted by \({z_0, z_1}\) that lies at the heart of the VAE.

-

4.

A decoder D, which is similar to the encoder, consists of a fully-connected network of 250 neurons.

-

5.

A four-dimensional output corresponding to the same four properties; the output set is denoted by \(\hat{{\boldsymbol{\zeta }}}\).

In this work, the VAE was trained on a material database consisting of 92 materials [25], where Table 1 represents a small sample.

As mentioned earlier, the VAE’s primary task is to match the output to the input as closely as possible. This is done through an optimization process (also referred to a training), using the weights associated with the encoder and decoder as optimization parameters. In other words, we minimize \(\Vert {\boldsymbol{\zeta }} - \hat{{\boldsymbol{\zeta }}}\Vert\). Additionally, a KL divergence loss is imposed to ensure that latent space resembles a standard Gaussian distribution \(z \sim {\mathcal {N}}(\mu = 0, \sigma = 1)\) [19]. Thus, the net loss can then expressed as:

where \(\beta\) is set to a recommended value of \(5\times 10^{-5}\). Further the input is scaled between (0, 1) for all attributes, and the output is re-scaled back after training. This ensures that all material properties are weighted equally. In this work, we used PyTorch [26] to model the VAE, and the gradient-based Adam optimizer [27] to minimize Eq. (2) with a learning rate of 0.002 and 50,000 epochs. The convergence is illustrated in Fig. 4; the training took approximately 51 s on a Macbook M1 pro.

Thus, the VAE captures each material uniquely and unambiguously using a non-dimensional latent space, i.e., \({z_0, z_1}\) in our case; this is visualized in Fig. 5. For example, annealed AISI 4340 is represented by the pair \((-1.2,1.0)\) while Acrylic is represented by \((0.6,-0.4)\). The VAE also clusters similar materials together in the latent space, as can be observed in Fig. 5. In an abstract sense, the latent space in Fig. 5 is similar to the popular Ashby charts [5].

2.2 Representational accuracy

One can expect the values reconstructed using the decoder to deviate from the true material data; Table 2 summarizes the errors. The following observations are worth noting:

-

1.

Despite the lack of correlation between material properties, and two orders of magnitude difference in values, the VAE captures the entire database of 92 materials reasonably well, using a simple two-dimensional latent space.

-

2.

The error can be further reduced by either increasing the dimension of the latent space, or limiting the type of materials considered (see Sect. 4).

-

3.

Finally, as will be discussed in the next section, the original material data will be used, as part of a post-processing step, at the end of optimization.

2.3 Differentiability of latent space

A crucial aspect of the latent space is its differentiability. In particular, we note that using the decoder, each of the output material properties is represented via analytic activation functions; for example, \({\hat{E}} = D^*_E(z_0, z_1)\) where \(D^*_E(\cdot )\) is a combination of activation functions and weights. It means that one can back propagate through the decoder to compute analytical sensitivities; for example, the sensitivity (\(\frac{\partial {\hat{E}}}{\partial z_0}\)). This plays an important role in gradient-driven design optimization. The proposed method thus falls under the category of differentiable programming, which has recently gained popularity motivated by deep learning methods in other fields, such as computer graphics [28] and physical simulation [29].

2.4 Material similarity

While the accuracy and differentiability of the VAE lends itself to design optimization, the latent space also provides key insights into material characteristics. For instance, one can compute the Euclidean distance between materials in the latent space. These distances are reported in Fig. 6. As one can expect, steels are closer to aluminum alloys, than they are to plastics.

Furthermore, one can overlay the latent space in Fig. 5 with specific material properties to gain further insight. This is illustrated in Fig. 7 where the contour plots of the Young’s moduli are illustrated in the latent space. This allows designers to visualize similarities between materials, specific to a material property.

3 Design optimization

Having constructed a differentiable representation of material properties, one can now pose the optimization problem discussed in Sect. 1, now using \(\{{\varvec{A}}, z_0, z_1\}\) as continuous design variables Eq. (3). Without a loss in generalization, we consider a specific instance of the truss optimization problem, where the objective is the compliance J Eq. (3a) with three sets of constraints: cost constraint \(g_c\), buckling constraint \(g_b\), and tensile yield constraint \(g_y\) as referred in Eqs. (3c)–(3e). We assume here that members will fail due to buckling, before failing due to compressive yield [4]. The resulting optimization problem for circular truss members can be posed as [30]:

where \(P_k\) is the internal force in member k, \(L_k\) is its length, \(C^*\) is the allowable cost, \(F_s\) is the safety factor, and the material properties (\({\hat{E}}, {\hat{\rho }}, {\hat{Y}}, {\hat{C}}\)) are decoded from the latent space coordinates (\(z_0,z_1\)). Further, to facilitate gradient-driven optimization, the max operator is relaxed here using a p-norm, i.e., \(\underset{i}{\text{max}}(x_i) \approx \Vert \varvec{x}\Vert _p\), with \(p=6\).

To solve the above optimization, we use two additional neural networks (NNs) (see Fig. 8a): a truss network \(\text{NN}_T\), parameterized by weights \({\varvec{w}}_T\), and a material network \(\text{NN}_M\), parameterized by weights \({\varvec{w}}_M\). The truss network \(\text{NN}_T\) is a simple feed-forward NN with two hidden layers with a width of 20 neurons, each containing an ReLU activation function. The input to the \(\text{NN}_T\) is a unique identifier for each truss member, in this case we use the vector of coordinates of the truss member centers. The output layer of \(\text{NN}_T\) consists of N neurons where N is the number of truss members, activated by a Sigmoid function, generates a vector \({\varvec{O}}_{\varvec{T}}\) of size N whose values are in [0, 1]. The output is then scaled as \({\varvec{A}} \leftarrow {\varvec{A}}_{{{\rm min}}} + {\varvec{O}}_{\varvec{T}}({\varvec{A}}_{{{\rm max}}} - {\varvec{A}}_{{{\rm min}}})\) to satisfy the area bounds Eq. (3f). The material network \(\text{NN}_M\) (Fig. 8b) is similar to \(\text{NN}_T\) in construction, for simplicity. Since we are selecting a single material for all the truss members, we do not require a vector of inputs corresponding to each member to the \(\text{NN}_M\) and instead pass a scalar identifier 1 as its input. The output layer consists of two output neurons activated by Sigmoid functions. The outputs \({\varvec{O}}_{\varvec{M}}\) are scaled as \({\varvec{z}} \leftarrow -3 + 6 {\varvec{O}}_{\varvec{M}}\), resulting in \(z_i \in [-3,3]\) corresponding to six Gaussian deviations. These outputs now interface with the trained decoder \(D^*\) from Fig. 3. Thus, by varying the weights \({\varvec{w}}_M\) of the material network one can create points in the latent space, that then feeds to the trained decoder resulting in values of material constants.

3.1 Loss function

With the introduction of the two NNs, the weights \({\varvec{w}}_T\) now control the areas \({\varvec{A}}\), while the weights \({\varvec{w}}_M\) control the material constants \(\hat{{\boldsymbol{\zeta }}}\). In other words, the weights \({\varvec{w}}_T\) and \({\varvec{w}}_M\) now form the design variables. Further, since NNs are designed to minimize an unconstrained loss function, we convert the constrained minimization problem in Eq. (3) into an unconstrained minimization by employing a log-barrier scheme as proposed in [31]:

where

with the parameter t updated during each iteration (as described in the next section). Thus, the optimization problem reduces to a simple form:

A schematic of the proposed framework is presented in Fig. 9.

3.2 Structural analysis

We rely on classical structural analysis to solve the state equation Eq. (6b) [32] and evaluate the performance of the truss structure during each iteration. The solver computes the stiffness matrix for each member based on the corresponding area, length and material. Upon assembling the global stiffness matrix, the nodal displacement vector u is computed using the standard linear solver torch.linalg.solve in PyTorch [26]. Since this is part of the PyTorch library, this allows us to exploit backward propagation for automatic differentiation [33], resulting in an end-to-end differentiable solver with automated sensitivity analysis as described next.

3.3 Sensitivity analysis

In the proposed framework, the loss function in Eq. (4) is minimized using gradient-based Adagrad optimizer [34]. Further, the sensitivities are computed automatically using back propagation. A schematic representation of the backward computation graph is shown in Fig. 10. For instance, the sensitivity of the loss function with respect to the weights \({\varvec{w}}_T\) can be expressed as:

This is illustrated in Fig. 10 where each term corresponds to an edge in the graph. Similarly, the sensitivity with respect to \({\varvec{w}}_M\) is given by:

These are once again illustrated in Fig. 10. Note that the backward computation is carried out automatically in the proposed framework.

3.4 Post processing

Once the optimization process is complete, we obtain an optimal set of latent coordinates \({\varvec{z}}^{\varvec{*}}\). However, there might not exist a material in the database corresponding precisely to \({\varvec{z}}^{\varvec{*}}\). We, therefore, define a confidence metric \(\gamma _{m}\) for each material m using the distance from \({\varvec{z}}^{\varvec{*}}\) to the material m in the latent space:

The metric serves to rank the materials based on their distance from \({\varvec{z}}^{\varvec{*}}\). After finding the closest material, we repeat the geometry optimization to compute the optimal areas \({\varvec{A}}^{\varvec{*}}\). This is analogous to the rounding method in discrete optimization [16]: “After rounding, it is usually best to repeat the optimization once more, allowing only the continuous design variables to vary. This process may not lead to the exact optimum, and sometimes may not even lead to a feasible solution, but for many problems this is an effective approach.” This is explored further through numerical experiments in Sect. 4.

3.5 Algorithm

The proposed algorithms are summarized in this section.

Algorithm 1 As mentioned earlier, we assume that a material database has been provided. The procedure begins with training the VAE as described in Algorithm 1, with the input training data being the material database. Encoder F is a neural network that takes in the set of material data \(\zeta\) and encodes the four-dimensional data to the two-dimensional latent space denoted by \(z_0,z_1\) through a probabilistic latent distribution governed by \(\mu ,\sigma\). The VAE Loss is then used to drive the training per Eq. (2) until a sufficiently high representational accuracy is attained. The encoder is then discarded and the decoder D is retained for material selection. The decoder takes the latent space coordinates as input and returns the expected material properties.

Algorithm 2 In Algorithm 2, starting with the given truss, specified loads, restraints, area bounds and other constraints, the areas are optimized and simultaneously the best material is selected as follows. The truss neural network and material neural network are constructed as described in Sect. 3; these output the cross sectional area and the latent space coordinates, respectively. The decoder D (from above) then returns the material properties, and now with the area and material information, the state equation can be solved and the objective J can be evaluated per Sect. 3.2. The constraints can also be computed post finite element analysis and the loss function Eq. (4) is next evaluated. Sensitivity of the objective and constraints is available through automatic differentiation as described in Sect. 3.3. In our experiments \(t_0 = 3\) and \(\mu = 1.01\) for the log-barrier implementation Eqs. (4) and (5), and the learning rate for the Adagrad optimizer was set to the value of \(2\times 10^{-3}\) to solve the Eq. (6). Once convergence is reached, we re-optimize for the optimal areas using the nearest material in the latent space as described in Sect. 3.4.

4 Numerical experiments

In this section, we present results from several experiments that illustrate the method and algorithm.

4.1 Simultaneous optimization of area and material

The central hypothesis of this paper is that simultaneous optimization of material and geometry leads to superior performance compared to sequential optimization. To validate this hypothesis, we consider the 6-bar truss system illustrated in Fig. 11, and perform the following three experiments:

-

1.

Material optimization: Here the areas are fixed at the initial value of \(A_k = 2\times 10^{-3}\,\text{m}^2, \forall k\), and only the material is optimized using the decoder.

-

2.

Area optimization: Using the optimal material from the first experiment, the areas are optimized by disregarding the decoder.

-

3.

Simultaneous material and area optimization: Here the material and areas are optimized simultaneously.

The results are reported in Table 3 for a cost constraint of \(C^* = \$60\) and a safety factor \(F_S = 4\). Observe that scenario-1 (material optimization) leads to the lowest performance since the areas are not optimized. For this scenario, we also performed a brute-force search wherein the compliance was evaluated for all the materials; the brute-force search also resulted in ‘AISI 4130 norm’ as the best choice. In scenario-2, when the areas are optimized using ‘AISI 4130 norm’ as the material, the performance improved significantly. Finally, the best performance is achieved in scenario-3 when both material and areas are optimized simultaneously. Observe that the optimal material in scenario-3 differs from the one found in scenario-1.

For scenario-3, Table 4 lists the three closest materials and their confidence values.

As an additional experiment, we replaced the cost constraint (Eq. (3c)) in the above problem with a mass constraint:

with \(M^* = 40\) kg; all other parameters being the same. The results are presented in Table 5. Once again we observe that simultaneous optimization results in the best performance. Furthermore, when cost constrained was imposed, a steel alloy was chosen as the best material; whereas when a mass constrained is imposed, an aluminum alloy was chosen.

For scenario-3 under mass constraint, Table 6 lists the three closest materials and the confidence values.

The location of the optimal material in the latent space for this scenario is illustrated in Figs. 12 and 13. We observe that the closest catalog material for scenario-3 is Al 2018. The latter is chosen, and the areas are subsequently re-optimized.

A close-up of Fig. 12; the closest material to the optimal one is Al 2018

4.2 Material database subsets

While we have used the entire material database in the previous experiment, we now consider subsets of the database. Subsets of materials can often be justified based on experience. The VAE reconstruction error for the full database and three sample subsets is reported in Table 7. As one expect, the VAE error reduces for the subsets.

We now carry out a few experiments using these subsets, and report the results. First, for the truss problem in Fig. 11, we carry out a cost-constrained simultaneous optimization for the full database, as in the previous section. However, we now report additional results in Table 8. When all materials are considered (row-2), the compliance prior to snapping to the closest material is reported as \(J = 2.25\). The closest material is AISI 1010, resulting in a final compliance of \(J^* = 2.58\). As one can expect, \(J^* > J\).

Next, since AISI 1010 is a steel alloy, we now consider only the Steel subset, and carry out simultaneous optimization. The results are reported in row-3. Observe that the raw compliance \(J=2.6\) is surprisingly larger than \(J = 2.25\) in the previous row. This can be attributed to the larger VAE reconstruction error when all materials are considered, i.e., \(J = 2.25\) is not as reliable as the estimate \(J = 2.6\). Further, after the closest material is selected (AISI 1020), the final compliance is \(J^* = 2.6\). This is slightly larger than the value in the previous row. This discrepancy is analogous to rounding errors in integer optimization [16].

Next, we repeated the above experiment for a mass constraint (instead of a cost constraint) and report the results in Table 9. When all materials are considered (row-2), the algorithm converges to the aluminum class, with an optimal material Al 2018, as before. Further, \(J^* > J\) as expected. The results using the aluminum subset is reported in row-3. Now, the optimal material is AA356.0-F, and it differs from Al 2018. Now the performance \(J^* = 8.38\) has significantly improved compared to \(J^* = 8.53\).

4.3 Convergence

To study the convergence characteristics of simultaneous optimization, we consider the antenna tower illustrated in Fig. 14. We impose a cost constraint of \(\$50\), factor of safety of \(F_S = 4\), and area bounds \([10^{-9}, 10^{-2}]\,\text{m}^2\). Then the areas and material are simultaneously optimized, resulting in an optimal material of ‘AISI 4130 norm’ and \(J^* = 32.5\). The convergence in compliance is illustrated in Fig. 15. We observe that the material choice converges early while the areas continue to be optimized; this was typical. The optimization took 1.89 s, with forward propagation and FEA, accounting for \(16\%\) each, while back-propagation consumed \(54\%\).

Convergence of compliance for the material and area for loading in Fig. 14

4.4 Extension to 3D and Pareto design

In this section we demonstrate two examples of simultaneous design optimization with material selection in 3D. The first example is that of a cantilever, and the second is a transmission tower, as illustrated in Fig. 16. We further demonstrate that the proposed method generates Pareto designs under mass and cost constraints.

First, we consider the mid-loaded cantilever in Fig. 16 with a 20 kN force, and seek to optimize the truss cross sectional areas and select the optimal material from the database. In this problem, along with the safety constraints on buckling and yielding (with a factor of safety of 4), we impose a mass constraint. The final compliance values (\(J^*\)) and the selected optimal material, for various mass constraints, are summarized in Table 10. The resulting truss designs are illustrated in Fig. 17 (cross sectional values not included for brevity). From Fig. 17 we observe that at lower target masses, aluminum alloys are preferred, and at higher masses, steels are selected.

Next, we consider the 3D transmission tower illustrated in Fig. 16 with a 100 N force, and seek to optimize the truss cross sectional areas and select the optimal material from the database. In this problem, along with the safety constraints of buckling and yielding (with a factor of safety of 4), we impose a cost constraint. The final compliance values (\(J^*\)) and the selected optimal material, for various cost constraints, are summarized in Table 11. The truss cross sections are illustrated in Fig. 18 (cross sectional values not included for brevity). From Fig. 18 we observe that at lower target costs, Steel alloys are preferred, and at higher costs, Aluminum alloys are selected.

5 Conclusion

Engineers today have access to over 150,000 materials [35], and this number is growing as new materials are being discovered [36, 37]. This presents a significant challenge to design engineers. In this paper, using variational auto encoders (VAEs), we proposed a generic method to simultaneously select the optimal material, while optimizing the geometry. The proposed method was demonstrated using trusses.

There are several limitations to the proposed method. A heuristic confidence-metric was used, as a final step, to snap from the continuous latent space to the nearest material. While this is a well known method in integer optimization, and was found to be effective in our study, it might lead to discrepancies in performance, as was noted in the numerical experiments. On the other hand, since the proposed method suggests an optimal set of material properties, it could drive material innovation in targeted applications. A very small material database with about 100 materials, with limited number of attributes, was considered here. Larger databases [38] with additional attributes needs to be explored and scalability of method remains to be evaluated. In passing, we note that VAEs have shown promise in other applications, with datasets larger than 50,000 entries [39, 40]. The method could further benefit from better tuning of the networks, optimal choice of the NN architecture [41], and reducing the reconstruction error of VAEs [42]. Another promising direction for discrete selection within continuous frameworks is differentiable programming [29, 43] and learnable lookup tables [44]. Comparison of the proposed method against such methods will be considered in the future.

Despite these limitations, we believe that the method holds promise. For example, it could be extended to topology optimization with material selection, specifically using the NN framework proposed in [45]. Furthermore, inclusion of a diverse set of material attributes, such as thermo-elastic properties [46], auxetic properties [47], coating properties [48], global warming indices [13] can also be considered. Inclusion of uncertainty in material properties is also of significant interest. Finally, the method can be extended to the selection of discrete components in assemblies such as springs, bolts, etc., and to the selection of discrete microstructures [49] in multiscale designs.

References

Eggert R (2005) Engineering design. Pearson/Prentice Hall, Hoboken

Rozvany GIN, Bendsoe MP, Kirsch U (1995) Layout optimization of structures. Appl Mech Rev 48(2):41–119

Achtziger W (1996) Truss topology optimization including bar properties different for tension and compression. Struct Optim 12(1):63–74

Rakshit S, Ananthasuresh GK (2008) Simultaneous material selection and geometry design of statically determinate trusses using continuous optimization. Struct Multidiscip Optim 35(1):55–68

Ashby MF, Cebon D (1993) Materials selection in mechanical design. Le Journal de Physique IV 3(C7):C7-1

Ashby MF, Johnson K (2013) Materials and design: the art and science of material selection in product design. Butterworth-Heinemann, Oxford

Ashby MF (2000) Multi-objective optimization in material design and selection. Acta Materialia 48(1):359–369

Jahan A, Ismail MY, Sapuan SM, Mustapha F (2010) Material screening and choosing methods—a review. Mater Des 31(2):696–705

Venkata Rao R (2006) A material selection model using graph theory and matrix approach. Mater Sci Eng A 431(1–2):248–255

Zhou C-C, Yin G-F, Hu X-B (2009) Multi-objective optimization of material selection for sustainable products: artificial neural networks and genetic algorithm approach. Mater Des 30(4):1209–1215

Ananthasuresh GK, Ashby MF (2003) Concurrent design and material selection for trusses. Workshop: Optimal Design at Laboratoire de Mécanique des Solides, Ecole Polytechnique, Palaiseau, France. November 26-28, 2003

Stolpe M, Svanberg K (2004) A stress-constrained truss-topology and material-selection problem that can be solved by linear programming. Struct Multidiscip Optim 27(1):126–129

Ching E, Carstensen JV (2021) Truss topology optimization of timber—steel structures for reduced embodied carbon design. Eng Struct 113540 (Vol: 252)

Roy S, Crossley WA, Jain S (2021) A hybrid approach for solving constrained multi-objective mixed-discrete nonlinear programming engineering problems. IntechOpen, 2021 [Online]

Arora JS, Huang MW, Hsieh CC (1994) Methods for optimization of nonlinear problems with discrete variables: a review. Struct Optim 8(2):69–85

Martins JRRA, Ning A (2021) Engineering design optimization. Cambridge University Press, Cambridge

Lee J, Leyffer S (2011) Mixed integer nonlinear programming. The IMA volumes in mathematics and its applications. Springer, New York

Köppe M (2012) On the complexity of nonlinear mixed-integer optimization. In: Mixed integer nonlinear programming. Springer, New York, pp 533–557

Kingma DP, Welling M (2019) An introduction to variational autoencoders. arXiv:1906.02691

Wang L, Chan Y-C, Ahmed F, Liu Z, Zhu P, Chen W (2020) Deep generative modeling for mechanistic-based learning and design of metamaterial systems. Comput Methods Appl Mech Eng 372:113377

Li X, Ning S, Liu Z, Yan Z, Luo C, Zhuang Z (2020) Designing phononic crystal with anticipated band gap through a deep learning based data-driven method. Comput Methods Appl Mech Eng 361:112737

Guo T, Lohan DJ, Cang R, Ren MY, Allison JT (2018) An indirect design representation for topology optimization using variational autoencoder and style transfer. In: 2018 AIAA/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference (p. 0804).

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press, Cambridge

Schmidhuber J (2015) Deep learning in neural networks: an overview. Neural Netw 61:85–117

Systèmes Dassault (2021) Solidworks. http://www.solidworks.com, Access date: 1 Oct 2021

Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, Desmaison A, Kopf A, Yang E, DeVito Z, Raison M, Tejani A, Chilamkurthy S, Steiner B, Fang L, Bai J, Chintala S (2019) Pytorch: an imperative style, high-performance deep learning library. In: Advances in neural information processing systems, vol 32. pp 8024–8035

Kingma DP, Ba JL (2015) Adam: a method for stochastic optimization. In: 3rd International conference on learning representations, ICLR 2015—conference track proceedings, Dec 2015. arXiv:1412.6980

Shi L, Li B, Hašan M, Sunkavalli K, Boubekeur T, Mech R, Matusik W (2020) Match: differentiable material graphs for procedural material capture. ACM Trans Graph 39(6):1–15

Hu Y, Anderson L, Li T-M, Sun Q, Carr N, Ragan-Kelley J, Durand F (2019) Difftaichi: differentiable programming for physical simulation. 2019 Oct 1. arXiv:1910.00935

Suresh K (2021) Design optimization using MATLAB and SOLIDWORKS. Cambridge University Press, Cambridge

Kervadec H, Dolz J, Yuan J, Desrosiers C, Granger E, Ayed IB (2019) Constrained deep networks: Lagrangian optimization via log-barrier extensions 2(3):4. 2019 Apr 8. arXiv:1904.04205

Segerlind LJ (1984) Applied finite element analysis. John Wiley & Sons; 1991 Jan 16.

Chandrasekhar A, Sridhara S, Suresh K (2021) Auto: a framework for automatic differentiation in topology optimization. Struct Multidiscip Optim 64(6):4355–4365

Duchi J, Hazan E, Singer Y (2011) Adaptive subgradient methods for online learning and stochastic optimization. J Mach Learn Res 1:12(7)

Ashby MF (2011) Chapter 1–introduction. In: Ashby MF (ed) Materials selection in mechanical design, 4th edn. Butterworth-Heinemann, Oxford, pp 1–13

Ward L, Agrawal A, Choudhary A, Wolverton C (2016) A general-purpose machine learning framework for predicting properties of inorganic materials. npj Comput Mater 2(1):1–7

Ge X, Goodwin RT, Gregory JR, Kirchain RE, Maria J, Varshney LR (2019) Accelerated discovery of sustainable building materials. arXiv:1905.08222

Design G (2018) CES Selector. Cambridge, UK: Material Universe. Zugriff unter. https://www.grantadesign.com

Razavi A, Van den Oord A, Vinyals O (2019) Generating diverse high-fidelity images with vq-vae-2. Advances in neural information processing systems. 2019;32

Vahdat A, Kautz J (2020) NVAE: a deep hierarchical variational autoencoder. Adv Neural Inf Process Syst 33:19667–19679

Leung FH-F, Lam H-K, Ling S-H, Tam PK-S (2003) Tuning of the structure and parameters of a neural network using an improved genetic algorithm. IEEE Trans Neural Netw 14(1):79–88

Hou X, Shen L, Sun K, Qiu G (2017) Deep feature consistent variational autoencoder. In: 2017 IEEE winter conference on applications of computer vision (WACV). IEEE, (pp 1133–1141)

Peng X, Tsang IW, Zhou JT, Zhu H (2018) k-meansnet: when k-means meets differentiable programming. arXiv:1808.07292

Wang L, Dong X, Wang Y, Liu L, An W, Guo Y (2022) Learnable lookup table for neural network quantization. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 12423–12433

Chandrasekhar A, Suresh K (2021) TOuNN: topology optimization using neural networks. Struct Multidiscip Optim 63(3):1135–1149

Giraldo-Londoño O, Mirabella L, Dalloro L, Paulino GH (2020) Multi-material thermomechanical topology optimization with applications to additive manufacturing: design of main composite part and its support structure. Comput Methods Appl Mech Eng 363:112812

Takenaka K (2012) Negative thermal expansion materials: technological key for control of thermal expansion. Sci Technol Adv Mater 13:013001

Clausen A, Aage N, Sigmund O (2015) Topology optimization of coated structures and material interface problems. Comput Methods Appl Mech Eng 290:524–541

Chan Y-C, Da D, Wang L, Chen W (2021) Remixing functionally graded structures: data-driven topology optimization with multiclass shape blending. arXiv:2112.00648

Acknowledgements

The authors would like to thank the support of National Science Foundation through Grant CMMI 1561899.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Reproduction of Results

The Python code pertinent to this paper is available at https://github.com/UW-ERSL/MaTruss. The material data were sourced from SolidWorks.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chandrasekhar, A., Sridhara, S. & Suresh, K. Integrating material selection with design optimization via neural networks. Engineering with Computers 38, 4715–4730 (2022). https://doi.org/10.1007/s00366-022-01736-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00366-022-01736-0