Abstract

The main purpose of this paper is to design a numerical method for solving the space–time fractional advection-diffusion equation (STFADE). First, a finite difference scheme is applied to obtain a semi-discrete scheme in the time variable with convergence order \(\mathcal {O}(\tau ^{2-\beta })\). Next, the Chebyshev collocation method of the fourth kind is applied to discretize the spatial fractional derivative. This discretization is based on a closed formula for the spatial fractional derivative. In addition, the time-discrete scheme is studied in the \(L_{2}\) space by the energy method, and its unconditional stability and convergence order are proved. Finally, three examples are solved by the proposed method and the results are compared with those of other numerical methods. The numerical results show that the proposed method is more accurate than the techniques from the literature used for comparison.


1 Introduction

Fractional calculus (FC) extends classical integer-order calculus by replacing integer-order derivatives with derivatives of fractional order. FC has many applications in science and engineering, including physics, optimal control, chemistry, economics, polymeric materials and social science [3, 15, 17, 21,22,23,24,25]. Because of the non-local nature of FC, the solution of fractional differential equations (FDEs), such as diffusion and reaction-diffusion models, has received considerable attention in physics, statistical mechanics and continuum mechanics [1, 7, 8]. These physical models describe the collective motion of microparticles in a material that results from the random movement of each microparticle. The topic is also relevant to Markov processes in mathematics as well as to other fields. Such phenomena can be described by diffusion equations known as Brownian equations. In the Brownian case, diffusion with an additional velocity field and diffusion under the influence of a constant external force field are both modeled by the advection-dispersion equation. This is no longer true in the case of anomalous diffusion, i.e., the fractional generalization differs between the advection case and the case of an external force field [20]. A straightforward extension of the continuous-time random walk model leads to a fractional advection-dispersion equation (FADE).

Space- and time-fractional diffusion equations are the two main types of FADEs [13]. In the current paper, we investigate the following STFADE:

where a(x, t) and b(x, t) are known functions and q(x, t) is the source term. An initial condition and the boundary conditions are also assumed:

where \({}_{0}\mathcal {D}_{t} ^{\beta }\) is the Caputo fractional derivative of order \(\beta\) with respect to time, with lower terminal 0. For \(n-1<\vartheta \le n,~ n\in \mathbb {N},\) the left and right Caputo fractional derivatives of order \(\vartheta\) are defined by
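For orientation, the left-sided Caputo derivative in its standard textbook form is written out here for \(n-1<\vartheta <n\); the right-sided derivative is defined analogously by integrating over \([x, b]\), with a sign convention that varies between authors. This is a sketch of the standard definition, not necessarily the exact notation of the original display:

```latex
\[
{}^{C}_{a}\mathcal{D}_{x}^{\vartheta}u(x,t)
  = \frac{1}{\Gamma(n-\vartheta)}
    \int_{a}^{x}(x-s)^{\,n-\vartheta-1}\,
    \frac{\partial^{n}u(s,t)}{\partial s^{n}}\,\mathrm{d}s .
\]
% Worked check with a = 0 and u = x^k, integer k >= n:
\[
{}^{C}_{0}\mathcal{D}_{x}^{\vartheta}x^{k}
  = \frac{\Gamma(k+1)}{\Gamma(k+1-\vartheta)}\,x^{k-\vartheta},
\qquad
{}^{C}_{0}\mathcal{D}_{x}^{\vartheta}x^{k}=0
  \quad\text{for integer } k<n .
\]
```

The power rule in the second display is what makes a closed-form fractional derivative of a polynomial basis possible.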

If \(n-1<\vartheta <n \in \mathbb {N}\), then we have \(\lim _{\vartheta \rightarrow n}{}^{C}_{a}{\mathcal {D}}_{x}^{\vartheta }u(x, t)=\lim _{\vartheta \rightarrow n}{}^{C}_{x}{\mathcal {D}}_{b}^{\vartheta }u(x, t)=\frac{{\partial }^{n} u(x, t) }{\partial {x}^{n}}\). For \(a=0\), we introduce the notation \(\mathcal {D}^{\vartheta }_{x}\) for the space derivative. Equation (1) is the classical advection-dispersion equation (ADE) in the case of \(\beta =\gamma =1\) and \(\alpha =2\). We presume that STFADE has a unique and smooth solution under the initial and boundary conditions of relation (2).

In recent years, several methods have been proposed for solving ADEs. Liu et al. applied the Mellin and Laplace transforms to solve the time-fractional ADE in [18]. Moreover, practical numerical schemes for the one-dimensional space fractional ADE with variable coefficients on a finite domain were presented in [16]. Huang and Nie [9] approximated the STFADE by using Green functions and applying the Fourier–Laplace transforms. Momani et al. [12] developed an accurate algorithm based on the Adomian decomposition to build a numerical solution of the STFADE. Recently, existence and uniqueness theorems for the STFADE were discussed in [10, 19, 23], and a collocation method using the shifted Chebyshev and rational Chebyshev polynomials was discussed in [4].

The main aim of this paper is to present a new numerical scheme for solving the STFADE. The proposed scheme is based on a finite difference method and a Chebyshev collocation method of the fourth kind. In Sect. 2, we analyze the convergence and unconditional stability of the time-discrete scheme. Then, in Sect. 3, we apply the Chebyshev collocation method to discretize the spatial direction and obtain a fully discrete scheme. Finally, in Sect. 4, we present three numerical examples to illustrate the efficiency of the new method.

2 The convergence analysis of the time-discrete method

In this section, we obtain the semi-discrete scheme and prove the convergence and stability of the new numerical technique for Eq. (1). To this end, we need some preliminaries. We first define the functional space

where \(\mathcal {D}^{\alpha }_{x}= \frac{\partial ^{\alpha }}{\partial x^{\alpha }}\) and \(L^{2}(\Omega )\) is the space of square-integrable functions on \(\Omega\) with the inner product

\(\langle u(x), \nu (x)\rangle =\int _{\Omega }u(x)\nu (x)\,\mathrm {d}x\)

and the standard norm \(\Vert u(x)\Vert _{2}=\langle u(x), u(x)\rangle ^{\frac{1}{2}}\). For \(\gamma >0\), the semi-norm and norm for the right fractional derivative space \(J_\mathrm{R}^{\gamma }\) are defined as follows

respectively. Similar to the above relationship for left fractional derivative space \(J_\mathrm{L}^{\gamma }\), we have

It should be noted that \(J_\mathrm{L}^{\gamma }\) and \(J_\mathrm{R}^{\gamma }\) denote the closure of \(\mathbb {C}_{0}^{\infty }(\mathbb {R})\) with respect to \(\Vert \cdot \Vert _{J_\mathrm{L}^{\gamma }}\) and \(\Vert \cdot \Vert _{J_\mathrm{R}^{\gamma }}\), respectively. We define the symmetric fractional derivative space \(J_\mathrm{S}^{\gamma }\) for \(\gamma >0,~\gamma \ne n-\frac{1}{2},~n\in \mathbb {N}\) with the semi-norm and norm

respectively. We now state some lemmas that are used to establish the stability of the numerical solution of (1).

Lemma 1

(See [24].) Assume that \(\theta\) is a non-negative constant, that \(s_{m}\) and \(r_{ k}\) are non-negative sequences, and that the sequence \(\upsilon _{m}\) satisfies

then \(\upsilon _{m}\) satisfies

Lemma 2

(See [6, 9].) For any \(u, \nu \in \mathcal {H}_{\Omega }^{\frac{\alpha }{2}}\) we have

Lemma 3

(See [5].) If \(\varphi \in J_{L}^{\gamma }, J_{R}^{\gamma }\) and \(0<\gamma <\alpha\), then we have

where C is a constant. Analogous results hold for \(u\in J_\mathrm{S}^{\gamma }\) with \(\gamma >0, \gamma \ne n-\frac{1}{2}, n\in \mathbb {N}\).

Lemma 4

(See [14].) Let \(\beta \in (0,1)\), and let [0, T] be divided into subintervals with the uniform step size \(\tau =\frac{T}{M}\) and node points \(t_{j}=j\tau ,~ j=0, 1, \ldots , M\). Then the following Caputo derivative approximation formula (CDAF) of order \(\tau ^{2-\beta }\) holds

where

Lemma 5

The coefficients \(\mathcal {S}_{M,j},~ j=0, 1, \ldots , M\), in the CDAF defined by Lemma 4 satisfy the properties:

1. \(\mathcal {S}_{M,M}=1\),

2. \(-1<\mathcal {S}_{M,j}<0, ~~j=0, 1,\ldots , M-1\),

3. \(\left| \sum _{j=1}^{M-1}\mathcal {S}_{M,j}\right| <1\),

4. \(-2<\mathcal {S}_{M,0}+\sum _{j=1}^{M-1}\mathcal {S}_{M,j}<1.\)

Proof

Let \(f(x)=x^{1-\beta }\), which is strictly increasing for all \(x>0\). The lemma follows by applying the mean value theorem to \(f(M-j)=(M-j)^{1-\beta },~ M>j\); the details are omitted here.□
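The properties in Lemma 5 can be checked numerically. The sketch below assumes that the CDAF is the classical L1 formula, \({}_{0}\mathcal {D}_{t}^{\beta }u(t_{M})\approx \frac{\tau ^{-\beta }}{\Gamma (2-\beta )}\sum _{j=0}^{M}\mathcal {S}_{M,j}u(t_{j})\), with the usual L1 weights; this explicit form is an assumption that is consistent with the four properties above, and the function names `l1_coefficients`, `lemma5_holds` and `l1_caputo` are illustrative only.

```python
from math import gamma

import numpy as np


def l1_coefficients(M, beta):
    """Weights S_{M,0..M} of the classical L1 formula (assumed form of the CDAF)."""
    p = 1.0 - beta
    S = np.empty(M + 1)
    S[M] = 1.0                               # weight of u(t_M)
    S[0] = (M - 1) ** p - M ** p             # weight of u(t_0)
    for j in range(1, M):                    # interior weights: second differences
        S[j] = (M - j + 1) ** p - 2.0 * (M - j) ** p + (M - j - 1) ** p
    return S


def lemma5_holds(S):
    """Check the four properties of Lemma 5 for a weight vector S_{M,0..M}."""
    M = len(S) - 1
    inner = S[1:M].sum()
    return bool(
        S[M] == 1.0
        and np.all((S[:M] > -1.0) & (S[:M] < 0.0))
        and abs(inner) < 1.0
        and -2.0 < S[0] + inner < 1.0
    )


def l1_caputo(u_vals, tau, beta):
    """Approximate the Caputo derivative of order beta at t_M from u(t_0..t_M)."""
    M = len(u_vals) - 1
    S = l1_coefficients(M, beta)
    return tau ** (-beta) / gamma(2.0 - beta) * np.dot(S, u_vals)
```

Under this assumption, the weights sum to zero, so the formula annihilates constants, and it reproduces the Caputo derivative of \(u(t)=t\), namely \(t^{1-\beta }/\Gamma (2-\beta )\), up to rounding, because the L1 formula is built on piecewise-linear interpolation.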

Now, we obtain the semi-discrete form of Eq. (1) at the points \(\lbrace t _{j} \rbrace _{j=0}^{M}\). By using Lemma 4, we can write

where \(U^{M}=u(x,t_{M}), Q^{M}=\Gamma (2-\beta )q(x,t_{M}), \mathfrak {a}=\Gamma (2-\beta )a(x,t_{M}), \mathfrak {b}=\Gamma (2-\beta )b(x,t_{M})\) and \(\overline{\mathcal{S}}_{M,j}=-{\mathcal{S}}_{M,j}\). The truncation term is \(\mathfrak {R}^{M}\), for which a positive constant C exists such that \(|\mathfrak {R}^{M}|\le C{ \tau } ^{2-\beta }\). By omitting the truncation term, we get the following numerical scheme, in which \(U^{M}\) denotes the approximate solution of Eq. (5) and N the total number of points in the x domain.

Throughout this section, we assume that \(\mathfrak {a}, \mathfrak {b} >0\) for \(x\in [0,1]\).

Lemma 6

Let \(U^{k}\in \mathcal {H}^{n}_{\Omega }, k=1, 2, \ldots , M\), be the solution of the time-discrete scheme (6), and let \(C_{1}, C_{2}\in \mathbb {R}\) be constants. Then

Proof

We use the mathematical induction on k to prove the above inequality. For \(k=1\), we get

Multiplying Eq. (7) by \(U^{1}\) and integrating over \(\Omega\) yields

By Lemma 2, the second term on the left-hand side of the above relation is negative, i.e.,

Applying Lemmas 2 and 3 to the third term on the left-hand side of Eq. (8), one can obtain

By using Lemma 5, the above relation can be rewritten as

Now, assume that the induction hypothesis holds for all \(k=1, 2, \ldots , M-1\),

Multiplying Eq. (6) by \(U^{M}\) and integrating over \(\Omega\), it follows that

From Lemmas 2, 3 and 5 and using the Cauchy–Schwarz inequality, we deduce the following relation

where \(C_{1}\) and \(C_{2}\) are constants. This concludes the proof of Lemma 6.□

Theorem 1

Assume \(U^{M}\in \mathcal {H}^{n}_{\Omega }\) is the solution of semi-discrete scheme (6). Then, system (6) is unconditionally stable.

Proof

We suppose that \(\widehat{U}^{j}, j=1, 2, \ldots , M\), is the solution of scheme (6) with the initial condition \(\widehat{U}^{0}=u(x, 0)\). Then, for the error \(\varepsilon ^{j}=U^{j}-\widehat{U}^{j}\), Eq. (5) becomes

From Lemma 6 and the above relation, we have

which completes the proof of the unconditional stability.

Theorem 2

If \(\varepsilon ^{k}=U^{k}-\widehat{U}^{k}, ~k=1, 2, \ldots , M\), are the errors of Eq. (6), then the time-discrete scheme is convergent with convergence order \(\mathcal {O}({ \tau })\).

Proof

From Eq. (5), we get the following error equation

There is a positive constant C such that \(|\mathfrak {R}^{M}|\le C{ \tau } ^{2-\beta }\).

Multiplying Eq. (12) by \(\varepsilon ^{k}\) and integrating over \(\Omega\), we get the following relation

Using the Cauchy–Schwarz inequality and Lemmas 2, 3 and 5, we can write the following inequality

or, equivalently,

Summing the above relation for k from 1 to M and using \(\Vert \varepsilon ^{0}\Vert =0\), we get

By changing the index, the above relation can be rewritten as

On the other hand, we can write

Now, using Lemmas 1 and 5, we obtain

where \(C_{T}\) is a constant. The proof is finished.□

3 Space-discrete method

In this section, we employ the Chebyshev collocation method to discretize the spatial direction and obtain a fully discrete scheme of (5). First, we introduce some notation and derive the closed form of the fractional derivative of the Chebyshev polynomials of the fourth kind (CPFK), which is used in the current section.

Definition 1

The Jacobi polynomials \(J_{i}^{(r , s)}(x)\) are orthogonal polynomials with respect to the Jacobi weight function \(\omega ^{(r , s)}(x)=(1-x)^{r}(1+x)^{s}\) in interval \([-1, 1]\) as follows

By the analytical form of the Jacobi polynomials, the CPFK \(\mathcal {W}_{i}(x)\) of degree i can be restated as below

where

To use these polynomials on the interval [0, 1], we define the shifted CPFK (SCPFK) \(\mathcal {W}_{i}^{*}(x)=\mathcal {W}_{i}(2x-1)\) by a change of variable. The analytic form of the SCPFK is as follows

These polynomials are orthogonal on the interval [0, 1] with respect to the following inner product

A square-integrable function g(x) on the interval [0, 1] can be expanded in a series of SCPFK as

where the coefficients \(v_{i}, i=0, 1, 2, \ldots ,\) are defined by

Now, by using the linearity of the Caputo fractional differentiation and Eq. (13), one can get the closed form of the fractional derivative of the SCPFK in the form

where \(\lceil \omega \rceil\) denotes the ceiling of \(\omega\) and \(N_{i, k, \xi }^{\omega ,\lceil \omega \rceil }\) is given by

Note that for \(i=0, 1, 2, \ldots , \lceil \omega \rceil -1,\) we have \(\mathcal {D}_{x}^{\omega }(\mathcal {W}_{i}^{*}(x))=0\). In practice, only the first N terms of the SCPFK expansion are considered in the approximation. Then we have:

In addition, by means of the properties of Caputo fractional differentiation and by combining Eqs. (15) and (16), one can obtain

As discussed in Sect. 2, the time-discrete scheme is

To get a fully discrete scheme based on the SCPFK, we apply the following approximation

From Eqs. (17) and (18), we have

where \(\mathfrak {u}_{i}^{j}\) are the coefficients at the points \((x_{i},t_{j})\). For a positive integer N, \(\lbrace x_{r}\rbrace _{r=1}^{N+1-\lceil \alpha \rceil }\) denote the collocation points, which are the roots of the SCPFK \(\mathcal {W}_{N+1-\lceil \alpha \rceil }^{*}(x)\). We collocate Eq. (20) at the collocation points \(\lbrace x_{r}\rbrace _{r=1}^{N+1-\lceil \alpha \rceil }\) as below

By substituting Eq. (19) in Eq. (2) at \(x=0\) and \(x=1\), the boundary conditions yield \(\lceil \alpha \rceil\) equations:

Equation (21), together with the boundary conditions (22), gives \(N+1\) linear algebraic equations that determine the unknowns \(\mathfrak {u}^{j}_{i}, i=0, 1, 2, \ldots , N\), at every time step j. To obtain the initial coefficients \(\mathfrak {u}_{i}^{0}\), we use the initial condition \(u(x, 0)=g(x)\) combined with Eq. (14).
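The ingredients of this section can be sketched in a few lines. The code below is a minimal illustration rather than the implementation used for the experiments. It builds the SCPFK from the standard three-term recurrence \(\mathcal {W}_{0}=1\), \(\mathcal {W}_{1}=2z+1\), \(\mathcal {W}_{n}=2z\mathcal {W}_{n-1}-\mathcal {W}_{n-2}\) with \(z=2x-1\), takes the collocation points from the trigonometric form \(\mathcal {W}_{n}(\cos \theta )=\sin ((n+\frac{1}{2})\theta )/\sin (\frac{\theta }{2})\), and differentiates term by term with the Caputo power rule. The function names are hypothetical, and the termwise sum stands in for the closed formula, whose explicit coefficients are not reproduced here.

```python
from math import ceil, gamma

import numpy as np
from numpy.polynomial import polynomial as P


def scpfk_power_coeffs(n):
    """Ascending power-basis coefficients of W*_n(x) = W_n(2x - 1)."""
    z = np.array([-1.0, 2.0])                # the polynomial z = 2x - 1
    w_prev = np.array([1.0])                 # W*_0 = 1
    if n == 0:
        return w_prev
    w = P.polyadd(2.0 * z, [1.0])            # W*_1 = 2z + 1 = 4x - 1
    for _ in range(2, n + 1):                # W_n = 2 z W_{n-1} - W_{n-2}
        w_prev, w = w, P.polysub(2.0 * P.polymul(z, w), w_prev)
    return w


def scpfk_roots(n):
    """Roots of W*_n on (0, 1): x_k = (1 + cos(2 k pi / (2 n + 1))) / 2."""
    k = np.arange(1, n + 1)
    return 0.5 * (1.0 + np.cos(2.0 * k * np.pi / (2 * n + 1)))


def caputo_scpfk(n, omega, x):
    """Caputo derivative of order omega (lower terminal 0) of W*_n at x."""
    c = scpfk_power_coeffs(n)
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    # Powers below ceil(omega) are annihilated by the Caputo derivative.
    for k in range(ceil(omega), len(c)):
        out += c[k] * gamma(k + 1) / gamma(k + 1 - omega) * x ** (k - omega)
    return out
```

For instance, with \(N=5\) and \(\alpha =1.8\) one has \(\lceil \alpha \rceil =2\), so `scpfk_roots(4)` supplies the \(N+1-\lceil \alpha \rceil =4\) collocation points, and the two boundary equations close the system for the \(N+1=6\) unknown coefficients.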

4 Numerical examples

The aim of this section is to present numerical results for the proposed method. We checked the stability of the developed method for various values of N and M. The computational order (denoted by \(\mathcal {C}_{\tau }\)) is calculated by the following formula

in which \(E_{1}\) and \(E_{2}\) are errors corresponding to grids with mesh size \({\tau }_{1}\) and \({\tau }_{2}\), respectively.
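A minimal sketch of this calculation follows, assuming the usual two-grid estimate \(\mathcal {C}_{\tau }=\log (E_{1}/E_{2})/\log (\tau _{1}/\tau _{2})\); the function name `computational_order` is illustrative.

```python
import math


def computational_order(e1, e2, tau1, tau2):
    """Observed order from errors e1, e2 measured with step sizes tau1, tau2.

    Assumes the standard two-grid estimate log(e1/e2) / log(tau1/tau2).
    """
    if min(e1, e2, tau1, tau2) <= 0 or tau1 == tau2:
        raise ValueError("errors and steps must be positive, steps distinct")
    return math.log(e1 / e2) / math.log(tau1 / tau2)
```

If the error behaves like \(C\tau ^{p}\), the estimate returns p for any pair of distinct step sizes, so halving \(\tau\) with a first-order scheme should give values close to 1.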

Example 1

Consider the following STFADE

with the known functions \(a(x,t)=\Gamma (1.2)x^{1.8}\) and \(b(x,t)=0\). The boundary and initial conditions are \(u(0, t)=u(1, t)=0\) and \(u(x,0)=x^{2}(1-x)\), respectively. The source term is \(q(x,t)=3x^{2}e^{-t}(2x-1)\). This problem has exact solution \(u(x, t)=x^{2}e^{-t}(1-x)\) in the case of \(\beta =1\).

We solve this example with the proposed method and report the results in Tables 1, 2 and 3. Table 1 compares the \(L_{\infty }\) errors of the proposed method with those of the Chebyshev approximation combined with the finite difference method in [11], the classical Crank–Nicolson method in [26], the Legendre polynomials in [2], and the shifted Chebyshev polynomials in space with rational Chebyshev functions in time in [4], at \(T=2, T=10\) and \(T=50\) for \(M=400\) with 5 collocation points in the space domain. The results show that the current method gives better estimates than the methods mentioned. In addition, the absolute errors of the presented method (PM) and the method of [4] are compared in Table 2, which indicates that our method gives much better results than the method of [4]. Table 3 shows the computational orders with \(\beta =0.99\) at \(T=1, N = 5, 7\) and different values of M. From this table, we can conclude that the computational orders are close to the theoretical order.

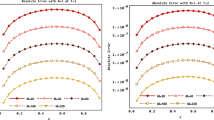

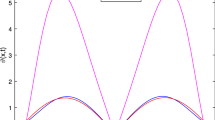

Figure 1 shows the approximate solution and the absolute error for \(N=3\) and different values of M at \(T=1\). Figure 2 shows the numerical solution at \(T=1\) (left panel) and \(T=2\) (right panel) with \(M=N=5\) and several values of \(\beta\). Moreover, Fig. 3 depicts the numerical solution for different values of M and N at \(T=1\) with various values of \(\alpha\). These figures display the convergence of the proposed method when \(\beta\) and \(\alpha\) tend to 1 and 1.8, respectively.

Fig. 1 Graphs of the absolute error (left-side) and approximate solution (right-side) of Example 1 at \(T=1\) and \(N=3\)

Fig. 2 The numerical solution of Example 1 at \(T=1\) (left-side) and \(T=2\) (right-side), for \(M=N=5\)

Fig. 3 The comparison of the numerical solution for Example 1 at \(T=1\), for different values of M and N

Example 2

Consider problem (1) with the smooth initial condition \(u(x,0)=x^{2}-x^{3}\), homogeneous boundary conditions, the space fractional orders \(\alpha =1.8 , \gamma =0.8\) and the known functions \(a(x,t)=\frac{\Gamma (2.2)}{2}x^{1.8}, b(x,t)=-\frac{\Gamma (3.2)}{2}x^{0.8}\). The source term is chosen to correspond to the exact solution \(u(x,t)=x^{2}(1-x)(1+t^{\beta })\) when \(\beta =1\).

Table 4 presents the \(L_{\infty }\) and \(L_{2}\) errors for \(N=5, 7\) and various values of M at \(T=1\) with \(\beta =0.9\). From this table, we can conclude that the developed method has the order \(\mathcal {O}(\tau )\) in the time direction. Figure 4 shows the absolute error of the present technique for \(N=5\) (left side) and \(N=9\) (right side) at \(T=1\) with \(\beta =0.2\). From this figure, it is clear that the absolute error decreases as the number of time steps increases. In Fig. 5, we plot the numerical solution for several values of \(\alpha\) and \(\gamma\) with \(\beta =0.2\) and \(M=N=5\), which displays the convergence.

Fig. 4 The absolute error of Example 2 for \(N=5\) (left-side) and \(N=9\) (right-side), at \(T=1, \beta =0.2\)

Fig. 5 The numerical solution of Example 2 at \(T=1\) for \(M=N=5\) with various values of \(\alpha\), \(\gamma\) and \(\beta =0.2\)

Example 3

Consider the following STFADE

where \(q(x,t)=\exp (-t)(-0.5x+(1+\frac{\Gamma (1.2)}{\Gamma (2.2)})x^{2})\). This example has the exact solution \(u(x, t)=x(1-x)\exp (-t)\) in the case of \(\beta =1\).

The results for this problem are listed in Table 5 for various values of M and N with \(\beta =0.9\). They show that the observed convergence order in time supports the theoretical result, that is, \(\mathcal {O}( \tau )\). Figure 6 depicts the approximate solution at \(T=1\) for \(M=N=9\) with different values of \(\alpha\), \(\gamma\) and \(\beta =0.9\).

Fig. 6 The numerical solution of Example 3 at \(T=1\) for \(M=N=9\) with different values of \(\alpha\), \(\gamma\) and \(\beta =0.9\)

5 Conclusion

This paper presented a new numerical scheme for approximating the STFADE. First, a finite difference method was used to discretize the time-fractional derivative with \(\mathcal {O}(\tau ^{2-\beta })\) accuracy. Lemma 5 states the properties of the approximation coefficients needed for the convergence analysis. In Theorem 1, the unconditional stability of the time-discrete scheme was proved by using the energy method and mathematical induction. Moreover, a linear convergence order was obtained in Theorem 2. The Chebyshev collocation method was then applied to discretize the spatial direction and obtain a fully discrete scheme. Finally, numerical results were presented to demonstrate and support the accuracy of the proposed scheme.

References

1. Aghdam YE, Mesgrani H, Javidi M, Nikan O (2020) A computational approach for the space-time fractional advection–diffusion equation arising in contaminant transport through porous media. Engineering with Computers pp. 1–16. https://doi.org/10.1007/s00366-020-01021-y

2. Alavizadeh S, Ghaini FM (2015) Numerical solution of fractional diffusion equation over a long time domain. Applied Mathematics and Computation 263:240–250

3. Atangana A, Gómez-Aguilar J (2018) Numerical approximation of Riemann-Liouville definition of fractional derivative: from Riemann-Liouville to Atangana-Baleanu. Numerical Methods for Partial Differential Equations 34(5):1502–1523

4. Baseri A, Abbasbandy S, Babolian E (2018) A collocation method for fractional diffusion equation in a long time with Chebyshev functions. Applied Mathematics and Computation 322:55–65

5. Ervin VJ, Roop JP (2006) Variational formulation for the stationary fractional advection dispersion equation. Numerical Methods for Partial Differential Equations: An International Journal 22(3):558–576

6. Ervin VJ, Roop JP (2007) Variational solution of fractional advection dispersion equations on bounded domains in \(\mathbb{R}^{d}\). Numerical Methods for Partial Differential Equations: An International Journal 23(2):256–281

7. Gómez-Aguilar J, Atangana A (2017) New insight in fractional differentiation: power, exponential decay and Mittag-Leffler laws and applications. The European Physical Journal Plus 132(1):13

8. Goufo EFD, Kumar S, Mugisha S (2020) Similarities in a fifth-order evolution equation with and with no singular kernel. Chaos, Solitons & Fractals 130:109467

9. Huang J, Nie N, Tang Y (2014) A second order finite difference-spectral method for space fractional diffusion equations. Science China Mathematics 57(6):1303–1317

10. Kemppainen J (2011) Existence and uniqueness of the solution for a time-fractional diffusion equation with Robin boundary condition. In: Abstract and Applied Analysis, vol. 2011. Hindawi

11. Khader M (2011) On the numerical solutions for the fractional diffusion equation. Communications in Nonlinear Science and Numerical Simulation 16(6):2535–2542

12. Khader M, Sweilam N, Mahdy A (2011) An efficient numerical method for solving the fractional diffusion equation. Journal of Applied Mathematics and Bioinformatics 1(2):1

13. Kumar A, Kumar S, Yan SP (2017) Residual power series method for fractional diffusion equations. Fundamenta Informaticae 151(1–4):213–230

14. Kumar K, Pandey RK, Sharma S (2017) Comparative study of three numerical schemes for fractional integro-differential equations. Journal of Computational and Applied Mathematics 315:287–302

15. Kumar S, Ghosh S, Samet B, Goufo EFD (2020) An analysis for heat equations arises in diffusion process using new Yang-Abdel-Aty-Cattani fractional operator. Mathematical Methods in the Applied Sciences 43(9):6062–6080

16. Kumar S, Kumar A, Argyros IK (2017) A new analysis for the Keller-Segel model of fractional order. Numerical Algorithms 75(1):213–228

17. Kumar S, Kumar R, Cattani C, Samet B (2020) Chaotic behaviour of fractional predator-prey dynamical system. Chaos, Solitons & Fractals 135:109811

18. Liu F, Anh VV, Turner I, Zhuang P (2003) Time fractional advection-dispersion equation. Journal of Applied Mathematics and Computing 13(1–2):233

19. Liu F, Zhuang P, Anh V, Turner I, Burrage K (2007) Stability and convergence of the difference methods for the space-time fractional advection-diffusion equation. Applied Mathematics and Computation 191(1):12–20

20. Metzler R, Klafter J (2000) The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Physics Reports 339(1):1–77

21. Nikan O, Golbabai A, Machado JT, Nikazad T (2020) Numerical approximation of the time fractional cable equation arising in neuronal dynamics. Engineering with Computers pp. 1–19. https://doi.org/10.1007/s00366-020-01033-8

22. Nikan O, Machado JT, Golbabai A, Nikazad T (2020) Numerical approach for modeling fractal mobile/immobile transport model in porous and fractured media. International Communications in Heat and Mass Transfer 111:104443

23. Podlubny I (1998) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications, vol 198. Elsevier, New York

24. Quarteroni A, Valli A (2008) Numerical approximation of partial differential equations, vol. 23. Springer Science & Business Media

25. Safdari H, Mesgarani H, Javidi M, Aghdam YE (2020) Convergence analysis of the space fractional-order diffusion equation based on the compact finite difference scheme. Comput. Appl. Math 39(2):1–15

26. Tadjeran C, Meerschaert MM, Scheffler HP (2006) A second-order accurate numerical approximation for the fractional diffusion equation. Journal of Computational Physics 213(1):205–213

Acknowledgements

José Francisco Gómez Aguilar acknowledges the support provided by CONACyT, Mexico: Cátedras CONACyT para jóvenes investigadores 2014 and SNI-CONACyT, Mexico.


Cite this article

Safdari, H., Aghdam, Y.E. & Gómez-Aguilar, J.F. Shifted Chebyshev collocation of the fourth kind with convergence analysis for the space–time fractional advection-diffusion equation. Engineering with Computers 38, 1409–1420 (2022). https://doi.org/10.1007/s00366-020-01092-x


Keywords

- Fractional derivatives and integrals

- Diffusion processes

- Partial differential equations

- Stability

- Convergence