Abstract

In this study, the Ritz–Galerkin method based on Legendre multiwavelet functions is introduced to solve multi-term time-space convection–diffusion equations of fractional order with variable coefficients and initial-boundary conditions. This method reduces the problem to a set of algebraic equations. The coefficients of the approximate solution are obtained by solving this system. A convergence analysis for function approximations is also presented, together with an upper bound for the approximation error. Numerical examples are included to demonstrate the validity and applicability of the technique.


1 Introduction

In recent years, considerable attention has been given to the fractional calculus, which is used in modeling and analysis of a wide range of problems in science and engineering, including chemistry, finance, physics, aerodynamics, electrodynamics, polymer rheology, economics and biophysics [2, 3, 7, 11, 27, 32, 35, 36].

Fractional calculus extends the concept of ordinary differentiation and integration to an arbitrary non-integer order. Recently, there has been a growing interest in fractional differential equations. Since most fractional differential equations do not have analytic solutions, several numerical methods such as the homotopy-perturbation method [1], variational iteration method [33], Adomian decomposition method [5] and finite difference approximation methods [39] have been used to obtain approximate solutions.

Here, we recall the basic definitions of fractional calculus theory [24, 27] which will be used further in this article.

Definition 1

Suppose that \(f \in L_1[a,b], t>0, \alpha ,t \in {\mathbb {R}}\), then the fractional operator

is referred to as the Caputo fractional derivative of order \(\alpha\).

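Caputo’s differential operator coincides with the usual differential operator when the order is an integer, and it is linear: for constants \(\lambda\) and \(\mu\),

\(D^{\alpha }\left( \lambda f(t)+\mu g(t)\right) =\lambda D^{\alpha }f(t)+\mu D^{\alpha }g(t).\)

Also, the Caputo fractional derivative of the power function \(f(x)=x^k,k \in {\mathbb {N}}\) is (see [24])

\(D^{\alpha }x^{k}=0\) for \(k<\lceil \alpha \rceil\), and \(D^{\alpha }x^{k}=\frac{\Gamma (k+1)}{\Gamma (k+1-\alpha )}x^{k-\alpha }\) for \(k\ge \lceil \alpha \rceil\).

For a constant c, we have \(D^{\alpha }c=0\).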
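The power rule and the constant rule for the Caputo derivative can be sketched in a few lines of Python. The closed form used below, \(\Gamma (k+1)/\Gamma (k+1-\alpha )\,x^{k-\alpha }\) for \(k\ge \lceil \alpha \rceil\) and zero otherwise, is the standard textbook form (see [24, 27]) and is taken here as an assumption; the function names are hypothetical.

```python
import math

def caputo_monomial(k: int, alpha: float, x: float) -> float:
    """Caputo derivative of order alpha > 0 of x**k, evaluated at x > 0.

    Assumed closed form: zero when k < ceil(alpha), otherwise
    Gamma(k+1) / Gamma(k+1-alpha) * x**(k-alpha).
    """
    if k < math.ceil(alpha):
        return 0.0  # covers constants (k = 0) for every alpha > 0
    return math.gamma(k + 1) / math.gamma(k + 1 - alpha) * x ** (k - alpha)

def caputo_polynomial(coeffs, alpha: float, x: float) -> float:
    """Linearity: coeffs[k] multiplies x**k, so the derivative is a weighted sum."""
    return sum(c * caputo_monomial(k, alpha, x) for k, c in enumerate(coeffs))
```

For an integer order the sketch reduces to the ordinary derivative, for example `caputo_polynomial([5, 0, 1], 1.0, 3.0)` differentiates \(5+x^2\) at \(x=3\).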

The convection–diffusion equation describes the transfer of particles, energy or other physical quantities within a system. It combines diffusion and convection. Its most familiar form is

where u is the variable of interest, D is the diffusivity, such as mass diffusivity for particle motion or thermal diffusivity for heat transport, \(\nu\) is the average velocity at which the quantity is moving, \(\nabla\) represents the gradient and \(\nabla \cdot\) represents the divergence. Applications of the convection–diffusion equation in fluid dynamics, heat transfer and mass transfer are discussed in Refs. [10, 12, 20]. Several numerical methods for solving this equation have been introduced, such as the variational iteration method [23], Adomian’s decomposition method [26], the homotopy perturbation method [9], the Bessel collocation method [40], the B-spline collocation method [16, 17], the finite element method [21], the Crank–Nicolson method [15] and the rational spectral method [8]. A history of analytical methods for solving diffusion equations can be found in Ref. [14]. However, most fractional diffusion equations do not have analytical solutions. In 2011, Khader [18] used the Chebyshev collocation method together with the finite difference method, and in Ref. [19] the authors used the Legendre pseudospectral method to obtain numerical approximations. In Ref. [22], Li et al. derived numerical solutions by the finite difference method. In Ref. [31], Saha Ray and Bera applied Adomian’s decomposition method to find the solution of a time-fractional diffusion equation of order \(\beta =\frac{1}{2}\). In Ref. [6], Das used the variational iteration method for a time-fractional diffusion equation of order \(0<\beta \le 1\). Fractional convection–diffusion equations have been widely considered in recent years, but few works are devoted to their numerical solution. Zhong et al. [41] applied Legendre polynomials and the associated operational matrix to obtain solutions.

In this study, we present the Ritz–Galerkin method based on Legendre multiwavelet functions to numerically solve the multi-term time-space convection–diffusion equation of fractional order:

with initial and boundary conditions:

Here \(0<\alpha _r< \ldots<\alpha _1<\alpha \le 1, 0<\beta _1\le 1<\beta \le 2\), the functions \(a_i(x)\) for \(i=1,2, \ldots ,r, b(x), c(x), d(x), f_1(x), g_1(t)\) and \(g_2(t)\) are known and the function u(x, t) is unknown. The terms \(b(x)D^{\beta }_xu, c(x)D^{\beta _1}_xu\) and h(x, t), respectively, are called the diffusive or viscous term, the convection term and the source term. The operator \(D^{\alpha _i}_t\) is the fractional derivative in the Caputo sense of order \(\alpha _i\) with respect to variable t.

The theory of wavelets is a relatively new and emerging area of mathematical research. It has been applied in a wide range of engineering disciplines. In particular, wavelets have been used very successfully in signal analysis for waveform representation and segmentation and for time–frequency analysis, and they can be used to construct fast algorithms that are easy to implement [4]. Moreover, wavelet analysis has many useful properties, such as orthogonality, compact support, the ability to represent polynomials exactly up to a given degree, and the ability to represent functions at different levels of resolution [25].

Recently, Yousefi [34–37], Razzaghi and Yousefi [28, 29], Yousefi and Razzaghi [38], and Jafari et al. [13] have used the Legendre multiwavelet method to obtain approximate solutions of hyperbolic telegraph equations, the differential equation of Lane–Emden, the Emden–Fowler equations, Abel’s integral equations, as well as solutions to variational problems, nonlinear problems in the calculus of variations, the nonlinear Volterra–Fredholm integral equations and fractional differential equations.

The paper is organized as follows: in Sect. 2, we give the basic definition of Legendre multiwavelet functions and state their properties. In Sect. 3, we discuss the approximation of functions in the Legendre multiwavelet basis and present a convergence analysis together with an upper bound for the approximation error. In Sect. 4, we solve Eqs. (2)–(4) using the Ritz–Galerkin method based on the Legendre multiwavelet functions. Numerical results that demonstrate the accuracy of this technique are reported in Sect. 5 and, finally, Sect. 6 contains a conclusion.

2 Legendre multiwavelet functions

The Legendre multiwavelet functions on interval [0, T) are defined by [13, 34]

where \(m=0,1, \ldots ,M-1, n=0,1, \ldots ,2^{k}-1, k\) can assume any positive integer, m is the order for Legendre polynomials and t is the normalized time. \(\left\{ \psi _{nm}(t) \right\}\) is an orthonormal set. The coefficient \(\sqrt{2m+1}\) is needed for orthonormality.

Legendre polynomials on the interval [0, 1] can be determined using the following recursive formula:

The two-dimensional Legendre multiwavelet functions on interval \([0,L)\times [0,T)\) are defined by [34]

where

for \(n=0,1, \ldots ,2^{p}-1, l=0,1, \ldots ,M-1, i=0,1, \ldots ,2^{k}-1,j=0,1, \ldots ,N-1,\) and p, k are positive integers. Here l, j are the order of Legendre polynomials. \(\left\{ \psi _{nlij}(x,t) \right\}\) is an orthonormal set. The coefficient \(\sqrt{(2l+1)(2j+1)}\) is needed for orthonormality. It is obvious that \(\psi _{nlij}(x,t)=\psi _{nl}(x)\psi _{ij}(t)\).
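The orthonormality stated above can be checked numerically. The sketch below assumes the commonly used form of the one-dimensional functions, \(\psi _{nm}(t)=\sqrt{2m+1}\,2^{k/2}T^{-1/2}P_m(2^{k}t/T-n)\) on \([nT/2^{k},(n+1)T/2^{k})\) and zero elsewhere, with \(P_m\) the Legendre polynomial shifted to [0, 1]; this explicit form and the helper names `psi` and `gram_matrix` are assumptions made for illustration.

```python
import numpy as np
from numpy.polynomial import legendre as npleg

def psi(n, m, k, T, t):
    """Assumed Legendre multiwavelet psi_{nm}(t) on [0, T) at resolution k."""
    t = np.asarray(t, dtype=float)
    s = 2**k * t / T - n                       # local coordinate, in [0, 1) on the support
    inside = (s >= 0.0) & (s < 1.0)
    unit = np.zeros(m + 1)
    unit[m] = 1.0                              # coefficient vector selecting P_m
    shifted = npleg.legval(2.0 * s - 1.0, unit)  # P_m shifted from [-1, 1] to [0, 1]
    scale = np.sqrt(2 * m + 1) * 2 ** (k / 2) / np.sqrt(T)
    return np.where(inside, scale * shifted, 0.0)

def gram_matrix(M, k, T, quad_order=16):
    """Matrix of inner products <psi_{nm}, psi_{n'm'}> over [0, T)."""
    nodes, weights = npleg.leggauss(quad_order)
    h = T / 2**k                               # length of one subinterval
    pairs = [(n, m) for n in range(2**k) for m in range(M)]
    G = np.zeros((len(pairs), len(pairs)))
    for j in range(2**k):                      # integrate piecewise, one subinterval at a time
        t = h * (j + (nodes + 1.0) / 2.0)
        w = weights * h / 2.0
        vals = np.array([psi(n, m, k, T, t) for n, m in pairs])
        G += (vals * w) @ vals.T
    return G
```

Under these assumptions, `gram_matrix(3, 2, 2.0)` should be the 12 × 12 identity up to rounding, which reflects the role of the factor \(\sqrt{2m+1}\) mentioned above.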

3 Function approximation

Consider the function f(x, t) defined over \({\mathscr {R}}:=[0,L)\times [0,T )\). We can approximate f(x, t) as

where

with

are the elements of \({\mathbf {A}}\) which is a \({\hat{m}}\times {\hat{n}}({\hat{m}}=2^{p}M,\;{\hat{n}}=2^{k}N)\) matrix. Also, the vectors of \({\Psi }(x)\) and \({\Psi }(t)\) are, respectively, \({\hat{m}}\times 1\) and \({\hat{n}}\times 1\) matrices such that

Theorem 1

Let \(M,N\rightarrow \infty\), then the truncated series (6) converges to f(x, t).

Proof

We shall use the following notations for convenience:

We will prove that the sequence of partial sums \(f_{MN}(x,t)\) is a Cauchy sequence in the Hilbert space \({\mathscr {H}}=L^2({\mathscr {R}})\). Let \(f_{RS}(x,t)\) be an arbitrary partial sum of \(a_{rs}^{qz}\psi _{rsqz}(x,t)\), with \(M > R\) and \(N> S\). Then we have

Since \(\sum _{l=0}^{\infty }\sum _{j=0}^{\infty }|a_{nl}^{ij}|^{2}\) is a series of nonnegative terms whose partial sums are bounded by \(\Vert f\Vert _2^2\), it converges and hence its partial sums form a Cauchy sequence. Thus, \(\sum _{l=R}^{M}\sum _{j=S}^{N}|a_{nl}^{ij}|^{2}\) converges to zero as \(M,N,R,S\rightarrow \infty .\) So \(\Vert f_{MN}(x,t)-f_{RS}(x,t)\Vert _2^2\) converges to zero as \(M,N,R,S\rightarrow \infty .\) Thus, \(f_{MN}(x,t)\) is a Cauchy sequence and hence converges to some \(g \in {\mathscr {H}}.\) We claim that \(g(x,t)=f(x,t)\). By (7), we have

hence, \(g(x,t)=f(x,t)\). This completes the proof of the theorem. \(\square\)
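The expansion \(f(x,t)\approx {\Psi }^T(x){\mathbf {A}}{\Psi }(t)\) can be illustrated with a short sketch for the coarsest resolution \(p=k=0\), where the basis reduces to orthonormal shifted Legendre functions. The coefficients are taken as inner products of f with the basis functions, which follows from orthonormality; the quadrature rule and the helper names `basis`, `project` and `evaluate` are assumptions made for this illustration.

```python
import numpy as np
from numpy.polynomial import legendre as npleg

def basis(M, length, s):
    """First M orthonormal shifted Legendre functions on [0, length), p = 0 case."""
    s = np.asarray(s, dtype=float)
    rows = []
    for m in range(M):
        unit = np.zeros(m + 1)
        unit[m] = 1.0                          # selects the Legendre polynomial P_m
        rows.append(np.sqrt((2 * m + 1) / length)
                    * npleg.legval(2.0 * s / length - 1.0, unit))
    return np.array(rows)                      # shape (M, len(s))

def project(f, M, N, L, T, quad_order=20):
    """Coefficient matrix A with entries <f, psi_l(x) psi_j(t)> by Gauss quadrature."""
    z, w = npleg.leggauss(quad_order)
    x, wx = L * (z + 1) / 2, w * L / 2         # nodes and weights mapped to [0, L]
    t, wt = T * (z + 1) / 2, w * T / 2         # nodes and weights mapped to [0, T]
    F = f(x[:, None], t[None, :])
    return (basis(M, L, x) * wx) @ F @ (basis(N, T, t) * wt).T

def evaluate(A, L, T, x, t):
    """Evaluate Psi(x)^T A Psi(t) on the grid x by t."""
    return basis(A.shape[0], L, x).T @ A @ basis(A.shape[1], T, t)
```

With \(M=4\) and \(N=3\) the sketch reproduces the polynomial \(x^2(1-x)t^2\) to rounding error, and for a smooth non-polynomial function the grid error shrinks as M and N grow, in line with Theorem 1.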

The following lemma gives an upper bound for the error of estimate.

Lemma 1

Let \(f:{\mathscr {R}}\rightarrow {\mathbb {R}}\) be a function with J (maximum of M and N) continuous partial derivatives, and suppose that \({\Psi }^T(x){\mathbf {A}}{\Psi }(t)\) approximates f(x, t). Then an upper bound for the error of approximation is as follows:

where

Proof

A Taylor polynomial approximation for f(x, t) is

where \(\left( {\begin{array}{c}p\\ k\end{array}}\right)\) is the binomial coefficient and computed as

We know that

where S was defined in (9). Since \({\Psi }^T(x){\mathbf {B}}{\Psi }(t)\) is the polynomial of degree \(J-1\) with respect to variables x and t that approximates f(x, t) with the minimum mean error bound, we have by (10),

Taking square roots, we have (8). \(\square\)

The upper bound of the error depends on \(\frac{(J+1)\left( {\begin{array}{c}J\\ \left[ \frac{J}{2}\right] \end{array}}\right) {(LT)}^{\frac{2J+1}{2}}}{J!{(2J+1)}(2^{p+k})^\frac{2J+1}{2}}\) which shows that as J increases, the error rapidly approaches zero. This is an advantage of Legendre multiwavelet function approximations.
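To see how quickly this factor decays, it can be evaluated directly. The sketch below codes only the expression quoted above, reading \(\left[ \frac{J}{2}\right]\) as the integer part of J/2; the function name is hypothetical and the remaining factors of the bound are not included.

```python
import math

def bound_factor(J: int, L: float, T: float, p: int, k: int) -> float:
    """(J+1) * C(J, [J/2]) * (L T)^((2J+1)/2) / (J! (2J+1) (2^(p+k))^((2J+1)/2))."""
    e = (2 * J + 1) / 2
    numerator = (J + 1) * math.comb(J, J // 2) * (L * T) ** e
    denominator = math.factorial(J) * (2 * J + 1) * (2 ** (p + k)) ** e
    return numerator / denominator

# Tabulate the factor on the unit square for two resolution levels.
for J in range(1, 7):
    print(J, bound_factor(J, 1.0, 1.0, 0, 0), bound_factor(J, 1.0, 1.0, 1, 1))
```

On the unit square the factor decreases monotonically in J for these values, and raising the resolution levels p and k reduces it further through the \((2^{p+k})^{(2J+1)/2}\) term.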

4 Method of solution

4.1 Ritz approximation function

Consider

We set

Now, Eqs. (2)–(4) are equivalent to

with the initial condition:

and homogeneous boundary conditions:

where

Now a Ritz approximation function for (11) is in the form

where w(x, t) is an interpolating function:

The function w(x, t) satisfies nonhomogeneous conditions and so \(v_{MN}(x,t)\) satisfies the initial and boundary conditions (12) and (13). This approximation provides greater flexibility when imposing initial and boundary conditions. In general, w(x, t) is not unique.

4.2 Ritz–Galerkin method

Without loss of generality, we set \(M=N\) and \(p=k\) in relation (14). Consider

for \(n,i=0,1, \ldots ,2^{p}-1\) and \(l,j=0,1, \ldots ,M-1\). We may write the series solution \(v_{MN}(x,t)\) in (14) as follows:

where

and \({\mathbf {Q}}\) is a \({\hat{m}}^2\times 1\) matrix with unknown coefficients (see “Appendix”). We know that the elements of \({\Phi }(x)\) are the polynomials of degree no greater than \(M+1\). Hence, we can write \({\Phi }(x)\) using the Taylor vector as

where

and \({\mathbf {D}}\) is the \((M+2)\times {\hat{m}}\) transformation matrix of \({\Phi }(x)\) to \({\mathbf {T}}_{M+1}(x)\). Similarly,

where \({{\mathbf {M}}}\) is the \((M+1)\times {\hat{m}}\) transformation matrix of \({\Gamma }(t)\) to \({\mathbf {T}}_M(t)\). We write the fractional derivative of \({\mathbf {T}}_{M+1}(x)\) and \({\mathbf {T}}_{M}(t)\) as follows:

Using (17), (19) and (21) gives us

By (1), (16) and (23), the \(\beta\)-order fractional derivative of \(v_M(x,t)\) with respect to x is

Similarly, by (1), (16) and (22), the \(\alpha\)-order fractional derivative of \(v_M(x,t)\) with respect to t is

Now, substituting (16), (24) and (25) into (11), we have

where

Suppose

is a \(1\times {\hat{m}}^2\) basis vector for \(L^{2}({\mathscr {R}})\).

Now, to apply the Ritz–Galerkin method to compute the unknown coefficients of \({\mathbf {Q}}\), we take the inner product of Eq. (26) with the \({\hat{m}}^2\) elements of \({\Psi }(x,t)\) as

where

and \(\left\langle .\right\rangle\) denotes the inner product defined by

Hence, we have

Equation (27) corresponds to a system of \({\hat{m}}^2\) linear algebraic equations in the unknown coefficients. If \(\mathrm{rank}({\mathbf {G}})=\mathrm{rank}([{\mathbf {G}};{\mathbf {P}}])={\hat{m}}^2\), then Eq. (11) has a unique solution and so the solution of Eq. (2) is also unique. If \(\mathrm{rank}({\mathbf {G}})=\mathrm{rank}([{\mathbf {G}};{\mathbf {P}}])<{\hat{m}}^2\), then Eq. (11), and thus Eq. (2), has a particular solution that may be found. If \(\mathrm{rank}({\mathbf {G}})\ne \mathrm{rank}([{\mathbf {G}};{\mathbf {P}}])\), then the system has no solution.
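The three rank cases can be checked mechanically before solving. The following is a minimal sketch, assuming \({\mathbf {G}}\) is available as a square NumPy array and \({\mathbf {P}}\) as a vector; the function name and the use of a least-squares routine to pick one particular solution in the rank-deficient case are choices made for this illustration.

```python
import numpy as np

def classify_system(G, P):
    """Compare rank(G) with rank([G; P]) and return (case, solution or None)."""
    G = np.asarray(G, dtype=float)
    P = np.asarray(P, dtype=float).reshape(-1, 1)
    rank_G = np.linalg.matrix_rank(G)
    rank_aug = np.linalg.matrix_rank(np.hstack([G, P]))
    n_unknowns = G.shape[1]
    if rank_G != rank_aug:
        return "inconsistent", None                 # no solution exists
    if rank_G == n_unknowns:
        return "unique", np.linalg.solve(G, P).ravel()   # G square and nonsingular
    q, *_ = np.linalg.lstsq(G, P, rcond=None)       # one particular (minimum-norm) solution
    return "underdetermined", q.ravel()
```

Note that `matrix_rank` decides the rank with a floating-point tolerance, so for ill-conditioned \({\mathbf {G}}\) the classification should be read with some care.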

5 Numerical examples

In this section, we give examples to demonstrate the accuracy of the approximate solutions of multi-term time-space convection–diffusion equations of fractional order obtained with our technique. The error function is defined as

Example 1

Consider the time-space convection–diffusion equation of fractional order

where

with the initial and boundary conditions, respectively,

The exact solution is \(u(x,t)=x^2(1-x)t^2\). The Ritz–Galerkin solution \(v_M(x,t)\) for \(M=2\) and \(p=0\) is

where \(a_1(x)=0.5, b(x)=-x, c(x)=x, d(x)=0, f_1(x)=0, g_1(t)=g_2(t)=0, w(x,t)=0, {\Phi }(x)=\left[ x(x-1), \sqrt{3}x(x-1)(2x-1) \right] , {\Gamma }(t)=\left[ t, \sqrt{3}t(2t-1) \right],\)

The fundamental matrix relation of the problem is

where

with

We put \({\Psi }(x,t)=[1,t,x,xt]\). By taking the inner product of Eq. (30) for \(\alpha _1=0.1, \beta =1.8, \beta _1=0.8\) and \(\alpha =0.7\) with the elements of \({\Psi }(x,t)\), we have the augmented matrix \([{\mathbf {G}};{\mathbf {P}}]\) as

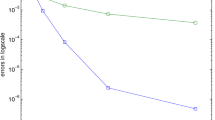

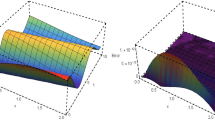

Fig. 1 The absolute error functions for \(\alpha _1=0.1\), \(\beta =1.8\), \(\beta _1=0.8\) when \(\alpha =1,\) 0.9, 0.8 and 0.7 of Example 1

By solving this system, the unknown coefficient matrix \({\mathbf {Q}}\) is obtained as

Substituting the elements of the column matrix \({\mathbf {Q}}\) into (29), we have

Similarly, for the other cases the present method gives the following approximate solutions. For the case \(\alpha _1=0.1, \beta =1.8, \beta _1=0.8, \alpha =0.8\):

for case \(\alpha _1=0.1, \beta =1.8, \beta _1=0.8, \alpha =0.9\):

for case \(\alpha _1=0.1, \beta =1.8, \beta _1=0.8, \alpha =1\):

Figure 1 displays the graphs of the absolute error functions \(e_2(x,t)\) for \(\alpha _1=0.1, \beta =1.8, \beta _1=0.8\) when \(\alpha =1, 0.9, 0.8, 0.7\). It is clear that even with \(M=2\), our approximations are very good.
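As a small sanity check on the trial space of Example 1, the listed functions \({\Phi }(x)\) and \({\Gamma }(t)\) can be evaluated directly. The sketch below confirms that they vanish where the homogeneous conditions require, and that the exact solution \(x^2(1-x)t^2\) can be written as a combination of the products \({\Phi }_a(x){\Gamma }_b(t)\). The coefficient matrix `C` is worked out by hand for this illustration and is not a value reported above; the check concerns only the trial space, not the computed coefficients for the fractional cases.

```python
import numpy as np

def phi(x):
    """Spatial trial functions of Example 1 (M = 2, p = 0)."""
    return np.array([x * (x - 1), np.sqrt(3) * x * (x - 1) * (2 * x - 1)])

def gamma_t(t):
    """Temporal trial functions of Example 1 (M = 2, p = 0)."""
    return np.array([t, np.sqrt(3) * t * (2 * t - 1)])

# Hand-derived combination: x^2 (1 - x) = -(phi_1 + phi_2 / sqrt(3)) / 2
# and t^2 = (gamma_1 + gamma_2 / sqrt(3)) / 2.
weights = np.array([1.0, 1.0 / np.sqrt(3)])
C = -0.25 * np.outer(weights, weights)

def trial(x, t):
    """Evaluate sum_{a,b} C[a, b] * phi_a(x) * gamma_b(t) at scalar x and t."""
    return phi(x) @ C @ gamma_t(t)

def exact(x, t):
    return x**2 * (1 - x) * t**2
```

Evaluating `trial` and `exact` on a grid of points in the unit square gives matching values to rounding error, and `phi(0.0)`, `phi(1.0)` and `gamma_t(0.0)` are all zero.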

Example 2

Consider the following time-fractional diffusion equation [30]

where

with the initial and boundary conditions, respectively:

The exact solution for \(\beta =2\) is \(u(x,t)=t^3\sin (\pi x)\). The Ritz–Galerkin solution \(v_M(x,t)\) for \(M=3\) and \(p=0\) is

where \(a_1(x)=-1, b(x)=1, c(x)=d(x)=0, f_1(x)=0, g_1(t)=g_2(t)=0, w(x,t)=0,\)

The fundamental matrix relation of Eq. (31) is

where

with

We put \({\Psi }(x,t)=[1,t,t^2,x,xt,xt^2,x^2,x^2t,x^2t^2]\). By taking the inner product of Eq. (33) for \(\alpha =0.9, \alpha _1=0.1\) and \(\beta =2\) with the elements of \({\Psi }(x,t)\), we have the augmented matrix \([{\mathbf {G}};{\mathbf {P}}]\) as

By solving this system, the unknown coefficients matrix \({\mathbf {Q}}\) is obtained as

Substituting the elements of the column matrix \({\mathbf {Q}}\) into (32), we have

Similarly for other cases, we have for case \(\alpha =1, \alpha _1=0.1, \beta =2\):

for case \(\alpha =1.1, \alpha _1=0.1, \beta =2\):

for case \(\alpha =1.2, \alpha _1=0.1, \beta =2\):

Figure 2 displays the graphs of the absolute error functions for \(\alpha _1=0.1, \beta =2\) when \(\alpha =1.2, 1.1, 1, 0.9\). Table 1 shows the numerical results of the present method at \(t=0.3\) and \(t=0.5\) for \(\alpha =\alpha _1=1, \beta =2\). According to Table 1, the present method gives better results than the method of [30].

Fig. 2 The absolute error functions for \(\alpha _1=0.1, \beta =2\) when \(\alpha =1.2, 1.1, 1\) and 0.9 of Example 2

6 Conclusion

In this paper, we have presented a numerical method to solve multi-term time-space convection–diffusion equations of fractional order with variable coefficients and initial-boundary conditions. We have successfully applied the Ritz–Galerkin method based on Legendre multiwavelet functions and obtained very good approximate solutions using only a few terms. The main advantage of the present method is that the approximate solutions can be calculated easily and rapidly with software such as MATLAB.

References

Abdulaziz O, Hashim I, Momani S (2008) Solving systems of fractional differential equations by homotopy-perturbation method. Phys Lett A 372:451–459

Agarwal PP, Andrade B, Cuevas C (2010) Weighted pseudo-almost periodic solutions of a class of semilinear fractional differential equations. Nonlinear Anal Real World Appl 11:3532–3554

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier Science Limited, Amsterdam

Chui CK (1997) Wavelets: a mathematical tool for signal analysis. SIAM, Philadelphia

Daftardar-Gejji V, Jafari H (2007) Solving a multi-order fractional differential equation using Adomian decomposition. Appl Math Comput 189:541–548

Das S (2009) Analytical solution of a fractional diffusion equation by variational iteration method. Comput Math Appl 57:483–487

Diethelm K (2010) The analysis of fractional differential equations. Springer, Berlin

Du L, Wu XH, Chen S (2011) A novel mathematical modeling of multiple scales for a class of two dimensional singular perturbed problems. Appl Math Model 35:4589–4602

Ghasemi M, Kajani MT (2010) Applications of He’s homotopy perturbation method to solve a diffusion convection problem. Math Sci 4:171–186

Guvanasen V, Volker RE (1983) Numerical solutions for solute transport in unconfined aquifers. Int J Numer Methods Fluids 3:103–123

Hilfer R (2000) Applications of fractional calculus in physics. World Scientific Publishing Company, Singapore

Isenberg J, Gutfinger C (1973) Heat transfer to a draining film. Int J Heat Mass Transf 16:505–512

Jafari H, Yousefi SA, Firoozjaee MA, Momani S, Khalique CM (2011) Application of Legendre wavelets for solving fractional differential equations. Comput Math Appl 62:1038–1045

Jiang H, Liu F, Turner I, Burrage K (2012) Analytical solutions for the multi-term time-space Caputo–Riesz fractional advection–diffusion equations on a finite domain. J Math Anal Appl 389:1117–1127

Kadalbajoo MK, Awasthi A (2006) A parameter uniform difference scheme for singularly perturbed parabolic problem in one space dimension. Appl Math Comput 183:42–60

Kadalbajoo MK, Gupta V, Awasthi A (2008) A uniformly convergent B-spline collocation method on a nonuniform mesh for singularly perturbed one dimensional time-dependent linear convection–diffusion problem. J Comput Appl Math 220:271–289

Kadalbajoo MK, Gupta V (2009) Numerical solution of singularly perturbed convection–diffusion problem using parameter uniform B-spline collocation method. J Math Anal Appl 355:439–452

Khader MM (2011) On the numerical solutions for the fractional diffusion equation. Commun Nonlinear Sci Numer Simul 16:2535–2542

Khader MM, Sweilam NH, Mahdy AMS (2011) An efficient numerical method for solving the fractional diffusion equation. J Appl Math Bioinform 1:1–12

Kumar N (1983) Unsteady flow against dispersion in finite porous media. J Hydrol 63:345–358

Lenferink W (2002) A second order scheme for a time-dependent, singularly perturbed convection–diffusion equation. J Comput Appl Math 143:49–68

Li G, Sun C, Jia X, Du D (2016) Numerical solution to the multi-term time fractional diffusion equation in a finite domain. Numer Math Theor Methods Appl 9:337–357

Liu Y, Zhao X (2010) He’s variational iteration method for solving convection diffusion equations. Adv Intell Comput Theor Appl 6:246–251

Miller KS, Ross B (1993) An introduction to the fractional calculus and fractional differential equations. Wiley, New York

Ming Q, Hwang C, Shih YP (1996) The computation of wavelet-Galerkin approximation on a bounded interval. Int J Numer Methods Eng 39:2921–2944

Momani S (2007) An algorithm for solving the fractional convection diffusion equation with nonlinear source term. Commun Nonlinear Sci Numer Simul 12:1283–1290

Podlubny I (1999) Fractional differential equations. Academic Press, New York

Razzaghi M, Yousefi SA (2000) Legendre wavelets direct method for variational problems. Math Comput Simul 53:185–192

Razzaghi M, Yousefi SA (2001) Legendre wavelets method for the solution of nonlinear problems in the calculus of variations. Math Comput Model 34:45–54

Saeedi H (2018) A fractional-order operational method for numerical treatment of multi-order fractional partial differential equation with variable coefficients. SeMA J 75:421–43

Saha Ray S, Bera RK (2006) Analytical solution of a fractional diffusion equation by Adomian decomposition method. Appl Math Comput 174:329–336

Samko SG, Kilbas AA, Marichev OI (1993) Fractional integrals and derivatives: theory and applications. Gordon and Breach Science Publishers, Yverdon

Wu G, Lee EWM (2010) Fractional variational iteration method and its application. Phys Lett A 374:2506–2509

Yousefi SA (2010) Legendre multiwavelet Galerkin method for solving the hyperbolic telegraph equation. Numer Methods Partial Differ Equ 26:535–543

Yousefi SA (2007) Legendre scaling function for solving generalized Emden–Fowler equations. Int J Inf Syst Sci 3:243–250

Yousefi SA (2006) Legendre wavelets method for solving differential equations of Lane–Emden type. Appl Math Comput 181:1417–1422

Yousefi SA (2006) Numerical solution of Abel’s integral equation by using Legendre wavelets. Appl Math Comput 175:574–580

Yousefi SA, Razzaghi M (2005) Legendre wavelets method for the nonlinear Volterra–Fredholm integral equations. Math Comput Simul 70:1–8

Yuste SB (2006) Weighted average finite difference methods for fractional diffusion equations. J Comput Phys 216:264–274

Yüzbaşı Ş, Şahin N (2013) Numerical solutions of singularly perturbed one-dimensional parabolic convection–diffusion problems by the Bessel collocation method. Appl Math Comput 220:305–315

Zhong G, Yi M, Huang J (2018) Numerical method for solving fractional convection diffusion equations with time-space variable coefficients. IAENG Int J Appl Math 48:62–66

Acknowledgements

The authors wish to thank the referees for carefully reading the paper and for their many constructive comments and suggestions to improve the paper.


Appendix


The \({\hat{m}}^2\times 1\) matrix \({\mathbf {Q}}\) with unknown coefficients \(c_{nl}^{ij}\) is as follows:

where


Sokhanvar, E., Askari-Hemmat, A. & Yousefi, S.A. Legendre multiwavelet functions for numerical solution of multi-term time-space convection–diffusion equations of fractional order. Engineering with Computers 37, 1473–1484 (2021). https://doi.org/10.1007/s00366-019-00896-w


DOI: https://doi.org/10.1007/s00366-019-00896-w