Abstract

This paper develops a simple and computationally efficient parametric approach to the estimation of general hidden Markov models (HMMs). For non-Gaussian HMMs, computing the maximum likelihood estimator (MLE) involves a high-dimensional integral that has no analytical solution and can be difficult to approximate accurately. We develop a new alternative method based on the theory of estimating functions and a deconvolution strategy. Our procedure requires the same assumptions as the MLE and deconvolution estimators. We provide theoretical guarantees on the performance of the resulting estimator: its consistency and asymptotic normality are established, which leads to the construction of confidence intervals. Its finite-sample performance is investigated through Monte Carlo experiments and compared with that of the MLE. Finally, we illustrate our approach on real data for ex-ante interest rate forecasts.


1 Introduction

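In this paper, we consider a non-linear hidden Markov model (HMM) with heteroskedastic noise: we observe n random variables \(Y_1,\ldots ,Y_{n}\) having the following additive structure

$$\begin{aligned} Y_i&=X_i+\varepsilon _i,\\ X_{i+1}&=b_{\theta _0}(X_i)+\sigma _{\theta _0}(X_i)\eta _{i+1}, \end{aligned} \tag{1}$$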

where \((X_i)_{i \ge 1}\) is a strictly stationary, ergodic unobserved Markov chain that depends on two known measurable functions \(b_{\theta _0}\) and \(\sigma _{\theta _0}\) up to the unknown parameter \(\theta _0\). In addition to its initial distribution, the chain \((X_i)_{i\ge 1}\) is characterized by its transition, i.e., the distribution of \(X_{i+1}\) given \(X_i\) and by its stationary density \(f_{\theta _0}\). We assume that the transition distribution admits a density \(\Pi _{\theta _0}\), defined by \(\Pi _{\theta _0}(x,x')dx'= \mathbb {P}_{\theta _0}(X_{i+1} \in dx' \vert X_i = x)\). For the identifiability of (1), we assume that \(\varepsilon _1\) admits a known density with respect to the Lebesgue measure denoted by \(f_{\varepsilon }\).

Our objective is to estimate the parameter vector \(\theta _0\) for non-linear HMMs with heteroskedastic innovations described by the function \(\sigma _{\theta _0}\) in (1), assuming that the model is correctly specified and that \(\theta _0\) belongs to the interior of a compact set \(\Theta \subset \mathbb {R}^{r}\), with \(r\in \mathbb {N}^{*}\).

Many articles have focused on parameter estimation and on the asymptotic properties of estimators when \((X_i)_{i \ge 1}\) is an autoregressive moving average (ARMA) process (see Chanda (1995), Staudenmayer and Buonaccorsi (2005) and Costa and Alpuim (2010)) or in a regression context with measurement error (see, e.g., Zhou et al. (2010), Miao et al. (2013) or Fan et al. (2013)). For more general models, however, (1) is an HMM with a continuous and potentially non-compact state space. HMMs form a widely used class of discrete-time stochastic processes, with applications in fields such as biology, speech recognition and finance. Douc et al. (2011) study the consistency of the MLE for general HMMs, but they do not provide a method for computing it in practice. This computation is well known to be extremely expensive because the Markov chain is not observed, and the proposed methodologies are essentially based on the expectation-maximization (EM) algorithm or on Monte Carlo methods (e.g., Markov chain Monte Carlo (MCMC), Sequential Monte Carlo (SMC) or particle Markov chain Monte Carlo; see Andrieu et al. (2010), Chopin et al. (2013) and Olsson and Rydén (2007)). In the linear Gaussian setting, the MLE can be computed quickly with the Kalman filter, but few real datasets satisfy these assumptions.

In this paper, we do not consider the Bayesian approach; we treat model (1) as a so-called convolution model, and our approach is therefore based on Fourier analysis. The restrictions on the error distribution and the rates of convergence obtained for our estimator are accordingly of the same type as in the deconvolution literature. For (semi-)parametric models, few results exist. To the best of our knowledge, the first study that gives a consistent estimator is Comte and Taupin (2001), where the authors propose an estimation procedure based on least squares minimization. More recently, Dedecker et al. (2014) generalize this approach to models defined as \(X_i=b_{\theta _0}(X_{i-1})+\eta _i\), where the regression function \(b_{\theta _0}\) is known up to \(\theta _0\) and the innovations \(\eta _i\) are homoscedastic. Also, El Kolei (2013) and El Kolei and Pelgrin (2017) propose a consistent estimator for parametric models whose construction requires knowledge of the stationary density \(f_{\theta _0}\) up to the unknown parameters \(\theta _0\). For many processes, this density has no analytic expression, and even in some cases where it is known, applying deconvolution techniques to this density may be more complex than applying them to the transition density. For example, autoregressive conditional heteroskedasticity (ARCH) processes form a family for which the transition density has a closed form whereas the stationary density does not. These processes are widely used to model economic and financial variables.

In this work, we develop a new, computationally efficient approach whose construction does not require knowledge of the invariant density. We provide a consistent estimator with a parametric rate of convergence for general models. Our approach is valid for non-linear HMMs with heteroskedastic innovations, and our estimation principle is based on the contrast function proposed in a nonparametric context by Lacour (2007) and Brunel et al. (2007). We adapt their approach to a parametric context, assuming that the form of the transition density \(\Pi _{\theta _0}\) is known up to the unknown parameter \(\theta _0\). The proposed methodology is purely parametric, and we go a step further by providing an analytical expression of the asymptotic variance matrix \(\Sigma (\hat{\theta }_{n})\), which allows us to construct confidence intervals.

Under general assumptions, we prove that our estimator is consistent, give conditions under which asymptotic normality holds, and provide an analytical expression of the asymptotic variance matrix. We show that this approach is much less computationally demanding than the MLE for non-Gaussian HMMs, and that its implementation is straightforward since it requires only Fourier transforms, as in Dedecker et al. (2014). In particular, a numerical illustration is given for the following three models: a Gaussian AR(1) model, which helps in understanding our approach; an AR(1) process with Laplace noise, to study the influence of the smoothness of the observation noise on the estimation of the parameters, since in deconvolution this smoothness is known to affect the convergence rate (see, e.g., Fan et al. (1991)); and a stochastic volatility (SV) model, also referred to as the unobserved components/stochastic volatility model (see, e.g., Stock and Watson (2007), Chan (2017) and Ebner et al. (2018)). There is a large literature on fitting SV models (see, e.g., the reviews in Ghysels et al. (1996), Bos and Shephard (2006) and Omori et al. (2007)). These approaches are all based on Bayesian methods, in particular Markov chain Monte Carlo (MCMC) methods. We therefore propose an alternative estimation method that is simple to implement and quick to compute for this model, which is widely used in practice. We provide a simulation study for both linear and non-linear examples, in Gaussian and non-Gaussian settings, and we compare the empirical performance of the proposed method with other methods from the literature. We also illustrate the applicability of our procedure on a real dataset to estimate the ex-ante real interest rate, since it has been shown in Laubach and Williams (2003) and, more recently, in Holston et al. (2017) that interest rates are subject to considerable real-time measurement error.
In particular, we focus on the Great Inflation period. We show that during this period the Gaussian assumption on the observation noise does not hold, and that an SV-type model gives better results for estimating the latent variable. In this context, the Kalman filter is no longer optimal and leads to biased parameter estimates, since the noise density is then approximated by a Gaussian density to construct the (quasi-)MLE. This bias in the parameters propagates to the estimation of the latent variable (see El Kolei and Patras (2018)), which cannot be overlooked in models where the predicted latent variable is used to make policy decisions. It therefore seems important to study estimators other than the MLE when the latter cannot be computed by the Kalman filter. In this application, our approach provides better results than the (quasi-)MLE.

The remainder of the paper is organized as follows. We present our assumptions about the Markov chain in Sect. 2. Sect. 3 describes our estimator and its statistical properties, and also presents our main results: the consistency and asymptotic normality of the estimator. Simulated examples are provided in Sect. 4 and the real data application is in Sect. 5. The proofs are gathered in Sect. 6.

2 Framework

Before presenting in detail the main estimation procedure of our study, we introduce some preliminary notations and assumptions.

2.1 Notations

The Fourier transform of an integrable function u is denoted by \(u^{*}(t)=\int e^{-itx}u(x)dx\), and it satisfies \((u^{*})^{*}(x)=2\pi u(-x)\). We denote by \(\nabla _{\theta }g\) the vector of the partial derivatives of g with respect to (w.r.t.) \(\theta \). The Hessian matrix of g w.r.t. \(\theta \) is denoted by \(\nabla ^{2}_{\theta }g\). For any matrix \(M=(M_{i,j})_{i,j}\), the Frobenius norm is defined by \(\left\| M\right\| =\sqrt{\sum _{i}\sum _{j}|M_{i,j}|^2}\). Finally, we set \(\mathbf {Y}_i=(Y_i,Y_{i+1})\), and \(\mathbf {y}_{i}=(y_{i},y_{i+1})\) denotes a realization of \(\mathbf {Y}_i\). We set \((t\otimes s)(x,y)=t(x)s(y)\).
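As a quick numerical sanity check of the sign convention above (a sketch, not part of the estimation method), the snippet below approximates \(u^{*}(t)=\int e^{-itx}u(x)dx\) by the trapezoidal rule on a truncated grid. The helper name `fourier_transform`, the grid and the test functions are our own assumptions. It checks that a centred Gaussian density with variance \(s^2\) has \(u^{*}(t)=\exp (-s^2t^2/2)\), and that \((u^{*})^{*}(x)=2\pi u(-x)\) for a non-symmetric u.

```python
import numpy as np
from scipy.integrate import trapezoid


def fourier_transform(u_vals, x, t):
    """Approximate u*(t) = int exp(-i t x) u(x) dx by the trapezoidal rule on the grid x."""
    t = np.atleast_1d(t)
    return trapezoid(np.exp(-1j * np.outer(t, x)) * u_vals, x, axis=1)


# Truncated grid: the test functions below are negligible outside [-15, 15].
x = np.linspace(-15.0, 15.0, 1201)

# 1) Centred Gaussian density with variance s2: u*(t) should be exp(-s2 t^2 / 2).
s2 = 0.5
gauss = np.exp(-x**2 / (2 * s2)) / np.sqrt(2 * np.pi * s2)
t = np.array([0.0, 0.5, 1.0, 2.0])
err_gauss = np.abs(fourier_transform(gauss, x, t) - np.exp(-s2 * t**2 / 2)).max()

# 2) Identity (u*)*(x) = 2 pi u(-x) for the non-symmetric u(x) = exp(-(x - 1)^2 / 2).
u = np.exp(-(x - 1.0)**2 / 2)
u_star = fourier_transform(u, x, x)          # u* tabulated on the same grid
x0 = np.array([-1.5, 0.0, 2.0])
lhs = fourier_transform(u_star, x, x0)
rhs = 2 * np.pi * np.exp(-(-x0 - 1.0)**2 / 2)
err_inv = np.abs(lhs - rhs).max()

print(f"{err_gauss:.1e} {err_inv:.1e}")     # both errors are tiny
```

Because the integrands are smooth and decay quickly, the trapezoidal rule is very accurate here; for heavier-tailed functions the grid would need to be widened.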

In the following, for the sake of conciseness, \(\mathbb {P}, \mathbb {E}, \mathbb {V}ar\) and \(\mathbb {C}ov\) denote respectively the probability \(\mathbb {P}_{\theta _0}\), the expected value \(\mathbb {E}_{\theta _0}\), the variance \(\mathbb {V}ar_{\theta _0}\) and the covariance \(\mathbb {C}ov_{\theta _0}\) when the true parameter is \(\theta _0\). Additionally, we write \(\mathbf {P}_n\) (resp. \(\mathbf {P}\)) for the empirical (resp. theoretical) expectation, that is, for any sequence of random variables \(X=(X_i)_i\), \(\mathbf {P}_{n}(X) = (1/n)\sum _{i=1}^{n} X_i\) (resp. \(\mathbf {P}(X)=\mathbb {E}[X]\)). For the purposes of this study, we work with \(\Pi _{\theta }\) on a compact subset \(A = A_1 \times A_2\). For more clarity, we write \(\Pi _{\theta }\) instead of \(\Pi _{\theta }\mathbf {1}_A\), and we denote by \(\vert \vert .\vert \vert _A\) (resp. \(\vert \vert .\vert \vert ^2_A\)) the norm in \(\mathbb {L}_1(A)\) (resp. \(\mathbb {L}_2(A)\)) defined as

$$\begin{aligned} \left\| u\right\| _{A}=\int \int _{A}\vert u(x,y)\vert f_{\theta _0}(x)dxdy, \qquad \left\| u\right\| ^{2}_{A}=\int \int _{A}u^{2}(x,y)f_{\theta _0}(x)dxdy. \end{aligned}$$

2.2 Assumptions

For the construction of our estimator, we consider three different types of assumptions.

A 1

Smoothness and mixing assumptions

(i) The function \(\Pi _{\theta }\) to be estimated belongs to \(\mathbb {L}_1(A)\cap \mathbb {L}_2(A)\), is twice continuously differentiable w.r.t. \(\theta \in \Theta \) for any \((x,x')\), and is measurable w.r.t. \((x,x')\) for all \(\theta \) in \(\Theta \). Additionally, each coordinate of \(\nabla _{\theta }\Pi _{\theta }\) and each coordinate of \(\nabla ^{2}_{\theta }\Pi _{\theta }\) belongs to \(\mathbb {L}_1(A)\cap \mathbb {L}_2(A)\).

(ii) The process \((X_i)_{i}\) is strictly stationary, ergodic and \(\alpha \)-mixing with invariant density \(f_{\theta _0}\).

Assumptions on the noise \(\varepsilon _i\) and innovations \(\eta _i\)

(iii) The errors \((\varepsilon _i)_{i}\) are independent and identically distributed (i.i.d.) centered random variables with finite variance \(\mathbb {E}\left[ \varepsilon _1^2\right] = \sigma ^2_{\varepsilon }\). The random variable \(\varepsilon _1\) admits a known density \(f_{\varepsilon }\), which belongs to \(\mathbb {L}_2(\mathbb {R})\) and satisfies \(f^{*}_{\varepsilon }(x) \ne 0\) for all \(x \in \mathbb {R}\).

The innovations \((\eta _i)_{i}\) are i.i.d. centered random variables.

Identifiability assumptions

(iv) The mapping \(\theta \mapsto \mathbf {P}m_{\theta }=\left\| \Pi _{\theta }-\Pi _{\theta _0}\right\| _{A}^{2}-\left\| \Pi _{\theta _{0}}\right\| _{A}^{2}\), with \(m_{\theta }\) defined in (2), admits a unique minimum at \(\theta _0\), and its Hessian matrix, denoted by \(\mathcal {V}_{\theta }\), is non-singular at \(\theta _0\).

Sect. 3.3 provides further analysis and comments on these assumptions.

3 Estimation procedure and main results

3.1 Least squares contrast estimation

A key ingredient in the construction of our estimator of the parameter \(\theta _0\) is the choice of a “contrast function” depending on the data. Details about contrast estimators can be found in Van der Vaart (1998). For the purpose of this study, we consider the contrast function initially introduced by Lacour (2007) in a nonparametric setting, inspired by regression-type contrasts and later used in various works (see, e.g., Brunel et al. (2007) and Lacour (2008a, 2008b, 2008c)), that is

$$\begin{aligned} \mathbf {P}_{n}m_{\theta }=\frac{1}{n}\sum _{i=1}^{n-1}m_{\theta }(\mathbf {Y}_i), \end{aligned} \tag{2}$$

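with \(m_{\theta }: \mathbf {y}\mapsto Q_{\Pi ^{2}_{\theta }}(y)-2 V_{\Pi _{\theta }}(\mathbf {y})\). The operators Q and V are defined for any function \(h \in \mathbb {L}_1(A)\cap \mathbb {L}_2(A)\) as

$$\begin{aligned} Q_{h}(x)=\frac{1}{2\pi }\int e^{ixu}\frac{h^{*}(u,0)}{f^{*}_{\varepsilon }(-u)}du, \quad \quad V^{*}_{h}=\frac{h^{*}}{\overline{f^{*}_{\varepsilon }}\otimes \overline{f^{*}_{\varepsilon }}}, \end{aligned}$$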

For these operators to be well defined, we require the following integrability condition:

A 2

The functions \(\Pi ^{*}_{\theta }/f^*_{\varepsilon }, ({\partial \Pi _{\theta }}/{\partial \theta _j})^*/f^*_{\varepsilon }\) and \(({\partial ^2 \Pi _{\theta }}/{\partial \theta _j \partial \theta _k})^*/f^*_{\varepsilon }\) for \(j,k=1,\ldots ,r\) belong to \(\mathbb {L}_1(A)\).

This assumption means that \(\Pi ^*_{\theta }\) and its first two derivatives (resp. \((\Pi ^2_{\theta })^*\)) must be smooth enough compared with \(f^*_{\varepsilon }\).

We now describe the procedure of Lacour (2007) in detail to explain the choice of this contrast function (see Lacour (2007) and Brunel et al. (2007) for the links between this contrast and regression-type contrasts). A full discussion of the assumptions is given in Sect. 3.3.

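Owing to the definition of model (1), the \(\mathbf {Y}_i\) are not i.i.d. However, by Assumption A1(ii), they are stationary and ergodic (see Footnote 1), so the convergence of \(\mathbf {P}_{n}m_{\theta }\) to \(\mathbf {P}m_{\theta }\) as n tends to infinity follows from the ergodic theorem. Moreover, the limit \(\mathbf {P}m_{\theta }\) of the contrast function can be computed analytically. To do this, we use the same technique as in the convolution problem (see Lacour (2008a, 2008b)). Let us denote by \(F_X\) the density of \(\mathbf {X}_i=(X_i,X_{i+1})\) and by \(F_Y\) the density of \(\mathbf {Y}_i\). We remark that \(F_Y= F_X \star (f_{\varepsilon } \otimes f_{\varepsilon })\) and \(F_Y^{*}=F_{X}^{*}(f^{*}_{\varepsilon }\otimes f^{*}_{\varepsilon })\), where \(\star \) stands for the convolution product, and then, by the Parseval equality, we have

$$\begin{aligned} \mathbb {E}[V_{\Pi _{\theta }}(\mathbf {Y}_i)]&=\int \int V_{\Pi _{\theta }}(\mathbf {y})F_Y(\mathbf {y})d\mathbf {y}=\frac{1}{4\pi ^2}\int \int V^{*}_{\Pi _{\theta }}(u,v)\overline{F^{*}_Y(u,v)}dudv\\ &=\frac{1}{4\pi ^2}\int \int \frac{\Pi ^{*}_{\theta }(u,v)}{\overline{f^{*}_{\varepsilon }(u)}\,\overline{f^{*}_{\varepsilon }(v)}}\overline{F^{*}_X(u,v)}\,\overline{f^{*}_{\varepsilon }(u)}\,\overline{f^{*}_{\varepsilon }(v)}dudv=\frac{1}{4\pi ^2}\int \int \Pi ^{*}_{\theta }(u,v)\overline{F^{*}_X(u,v)}dudv\\ &=\int \int \Pi _{\theta }(x,y)F_X(x,y)dxdy=\int \int \Pi _{\theta }(x,y)\Pi _{\theta _0}(x,y)f_{\theta _0}(x)dxdy. \end{aligned}$$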

Similarly, the operator Q is defined to replace the term \(\int \Pi _{\theta }^{2}(X_i,y)dy\). The operators Q and V are chosen to satisfy the following lemma (see Lacour (2008a), Sect. 6.1, proof of Lemma 2).

Lemma 3.1

For all \(i \in \{1, \ldots , n-1 \}\), we have

1. \(\mathbb {E}[V_{\Pi _{\theta }}(\mathbf {Y}_i)]=\int \int \Pi _{\theta }(x,y)\Pi _{\theta _0}(x,y)f_{\theta _0}(x) dx dy\).

2. \(\mathbb {E}[Q_{\Pi ^{2}_{\theta }}(Y_i)]=\int \int \Pi _{\theta }^2(x, y) f_{\theta _0}(x) dx dy\).

3. \(\mathbb {E}[V_{\Pi _{\theta }}(\mathbf {Y}_i) \vert X_{1},\ldots , X_n]=\Pi _{\theta }(\mathbf {X}_i)\).

4. \(\mathbb {E}[Q_{\Pi _{\theta }}(Y_i)\vert X_{1},\ldots , X_n]=\int \Pi _{\theta }(X_i,y)dy\).
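Item 3 of Lemma 3.1 can be checked by simulation in the Gaussian AR(1) setting of Sect. 4.1. The sketch below assumes Gaussian noise, takes \(\Pi _{\theta }(x,\cdot )\) to be the \(\mathcal {N}(\phi x,\sigma ^2)\) density and, as a simplification, ignores the restriction to the compact set A (i.e., it takes \(A=\mathbb {R}^2\)). Under these assumptions, a direct computation from the definition of V gives \(V_{\Pi _{\theta }}(y,y')=g_{s^2}(y'-\phi y)\), where \(g_{s^2}\) is the \(\mathcal {N}(0,s^2)\) density and \(s^2=\sigma ^2-(1+\phi ^2)\sigma ^2_{\varepsilon }>0\). This closed form is our own derivation, and all names in the code are hypothetical.

```python
import numpy as np


def gauss_pdf(z, var):
    """Density of N(0, var) at z."""
    return np.exp(-z**2 / (2 * var)) / np.sqrt(2 * np.pi * var)


def v_operator_gauss(y1, y2, phi, sig2, sig2_eps):
    """V_{Pi_theta}(y1, y2) for Pi_theta(x, .) = N(phi x, sig2) and N(0, sig2_eps) noise, A = R^2."""
    s2 = sig2 - (1 + phi**2) * sig2_eps
    if s2 <= 0:
        raise ValueError("requires sig2 > (1 + phi^2) * sig2_eps")
    return gauss_pdf(y2 - phi * y1, s2)


rng = np.random.default_rng(0)
phi, sig2, sig2_eps = 0.7, 1.0, 0.05
x1, x2 = 0.3, -0.4                      # fixed hidden states X_i and X_{i+1}
m = 200_000                             # number of noise replications
y1 = x1 + rng.normal(0.0, np.sqrt(sig2_eps), m)
y2 = x2 + rng.normal(0.0, np.sqrt(sig2_eps), m)

mc_mean = v_operator_gauss(y1, y2, phi, sig2, sig2_eps).mean()   # estimates E[V(Y_i) | X]
target = gauss_pdf(x2 - phi * x1, sig2)                         # Pi_theta(X_i, X_{i+1})
print(mc_mean, target)
```

The deconvolution shows up as the reduced variance \(s^2\): adding the noise back in expectation restores the variance \(\sigma ^2\) of the transition density.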

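It follows from Lemma 3.1 that

$$\begin{aligned} \mathbf {P}m_{\theta }=\mathbb {E}[m_{\theta }(\mathbf {Y}_1)]=\int \int \Pi _{\theta }^2(x,y)f_{\theta _0}(x)dxdy-2\int \int \Pi _{\theta }(x,y)\Pi _{\theta _0}(x,y)f_{\theta _0}(x)dxdy=\left\| \Pi _{\theta }-\Pi _{\theta _0}\right\| _{A}^{2}-\left\| \Pi _{\theta _{0}}\right\| _{A}^{2}. \end{aligned} \tag{3}$$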

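Under the identifiability Assumption A1(iv), this quantity is minimal when \(\theta =\theta _{0}\). Hence, the associated minimum-contrast estimator \(\widehat{\theta }_n\) is defined as any solution of

$$\begin{aligned} \widehat{\theta }_n\in \arg \min _{\theta \in \Theta }\mathbf {P}_{n}m_{\theta }. \end{aligned} \tag{4}$$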

3.2 Asymptotic properties of the estimator

The following result establishes the consistency of our estimator and a central limit theorem (CLT) based on results for \(\alpha \)-mixing processes. To this end, we further assume that the following assumptions hold:

A 3

(i) Local dominance: \(\mathbb {E}\left[ \sup _{\theta \in \Theta }\left| Q_{\Pi ^{2}_{\theta }}(Y_1)\right| \right] <\infty \).

(ii) Moment condition: for some \(\delta >0\) and for \(j \in \left\{ 1,\ldots ,r\right\} \),

$$\begin{aligned} \mathbb {E}\left[ \left| Q_{\frac{\partial \Pi ^{2}_{\theta }}{\partial \theta _j}}(Y_{1})\right| ^{2+\delta }\right] <\infty . \end{aligned}$$

(iii) Hessian local dominance: for some neighborhood \(\mathcal {U}\) of \(\theta _0\) and for \(j,k \in \left\{ 1,\ldots ,r\right\} \),

$$\begin{aligned} \mathbb {E}\left[ \sup _{\theta \in \mathcal {U}}\left| Q_{\frac{\partial ^2 \Pi ^{2}_{\theta }}{\partial \theta _j\partial \theta _k}}(Y_{1})\right| \right] <\infty . \end{aligned}$$

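Let us now introduce the matrix \(\Sigma (\theta )\) given by

$$\begin{aligned} \Sigma (\theta )=\mathcal {V}_{\theta }^{-1}\Omega (\theta )\mathcal {V}_{\theta }^{-1}, \quad \text {with} \quad \Omega (\theta )=\Omega _{0}(\theta )+\sum _{j\ge 2}\left( \Omega _{j-1}(\theta )+\Omega _{j-1}(\theta )^{\top }\right) , \end{aligned}$$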

where \(\Omega _{0}(\theta )=\mathbb {V}ar\left( \nabla _{\theta }m_{\theta }(\mathbf {Y_{1}})\right) \) and \(\Omega _{j-1}(\theta )=\mathbb {C}ov\left( \nabla _{\theta }m_{\theta }(\mathbf {Y_{1}}),\nabla _{\theta }m_{\theta }(\mathbf {Y_{j}})\right) \).
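Corollary 3.1 below gives analytical expressions for these matrices. As a generic numerical alternative (a swapped-in technique, not the method of this paper), \(\Omega \) can be estimated from the per-observation gradients \(\nabla _{\theta }m_{\theta }(\mathbf {Y}_i)\) evaluated at \(\hat{\theta }_n\) by truncating the sum of autocovariances with Bartlett weights (the Newey-West estimator), and then combined with an estimate of the Hessian in the usual sandwich form \(\mathcal {V}^{-1}\Omega \mathcal {V}^{-1}\). The sketch assumes the gradients are already available as an (n, r) array; the function names, the lag choice and the placeholder inputs are hypothetical.

```python
import numpy as np


def long_run_covariance(grads, max_lag):
    """Newey-West estimate of Omega_0 + sum_{j>=1} (Gamma_j + Gamma_j^T) from an (n, r) array,
    where Gamma_j estimates Cov(grad_1, grad_{1+j}); lags beyond max_lag are dropped."""
    g = grads - grads.mean(axis=0)
    n = g.shape[0]
    omega = g.T @ g / n                          # lag-0 term, Omega_0
    for j in range(1, max_lag + 1):
        gamma_j = g[:-j].T @ g[j:] / n           # lag-j cross-covariance
        weight = 1.0 - j / (max_lag + 1.0)       # Bartlett weight keeps omega positive semi-definite
        omega += weight * (gamma_j + gamma_j.T)
    return omega


def sandwich(hessian, omega):
    """Sigma = V^{-1} Omega V^{-1} for a symmetric, invertible Hessian V."""
    v_inv = np.linalg.inv(hessian)
    return v_inv @ omega @ v_inv


# Usage on placeholder gradients (in practice: grad_theta m_theta(Y_i) at theta_hat).
rng = np.random.default_rng(1)
grads = rng.normal(size=(1000, 2))
omega_hat = long_run_covariance(grads, max_lag=10)
sigma_hat = sandwich(np.array([[2.0, 0.3], [0.3, 1.0]]), omega_hat)
```

The truncation lag trades bias (too few lags) against variance (too many); for strongly dependent gradients a data-driven lag choice would be preferable.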

Theorem 3.1

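Under Assumptions A1–A3, let \(\widehat{\theta }_{n}\) be the least squares estimator defined in (4). Then

$$\begin{aligned} \widehat{\theta }_{n}\longrightarrow \theta _0 \quad \text {in probability as } n\rightarrow \infty . \end{aligned}$$

Moreover,

$$\begin{aligned} \sqrt{n}\left( \widehat{\theta }_{n}-\theta _0\right) \longrightarrow \mathcal {N}\left( 0,\Sigma (\theta _0)\right) \quad \text {in law as } n\rightarrow \infty . \end{aligned}$$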

The proof of Theorem 3.1 is provided in Sect. 6.1.

The following corollary gives explicit expressions for the matrices \(\Omega (\theta _0)\) and \(\mathcal {V}_{\theta _0}\) that appear in \(\Sigma (\theta _0)\) in Theorem 3.1.

Corollary 3.1

Under Assumptions A1–A3, the matrix \(\Omega (\theta _0)\) is given by

where

and the covariance terms are given for \(j >2\) as

where the differential \(\nabla _{\theta }\Pi _{\theta }\) is taken at point \(\theta =\theta _0\).

Furthermore, the Hessian matrix \(\mathcal {V}_{\theta _0}\) is given by

The proof of Corollary 3.1 is given in Sect. 6.2.

Sketch of proof. Let us now outline the strategy of the proof; the full proof is given in Sect. 6. The proof of Theorem 3.1 relies on the properties of M-estimators and on the deconvolution strategy. Consistency rests on the following observation: if \(\mathbf {P}_{n}m_{\theta }\) converges to \(\mathbf {P}m_{\theta }\) in probability, and if the true parameter solves the limit minimization problem, then the limit of the argmin \(\widehat{\theta }_n\) is \(\theta _0\). Using uniform convergence in probability and the compactness of the parameter space, we show that the argmin of the limit is the limit of the argmin. Combining these arguments with the dominance Assumption A3(i), we prove the consistency of our estimator, that is, the first part of Theorem 3.1.

Asymptotic normality follows essentially from the CLT for mixing processes (see Jones (2004)). Given consistency, the proof relies on a moment condition on the gradient vector of the function \(m_{\theta }(\mathbf {y})=Q_{\Pi ^2_{\theta }}(y)-2V_{\Pi _{\theta }}(\mathbf {y})\) and on a local dominance condition on its Hessian matrix. These conditions are given in A3(ii) and A3(iii). By analogy with likelihood theory, these assumptions can be seen as a moment condition on the score function and a local dominance condition on the Hessian.

3.3 Comments on the assumptions

In the following, we provide a discussion of the hypotheses.

Assumption A1(i) is not restrictive; it is satisfied by many processes. For the process \((X_i)_{i}\) defined in (1), the following conditions on the functions \(b_{\theta _0}\) and \(\sigma _{\theta _0}\) and on the innovations \((\eta _i)_{i}\) ensure that Assumption A1(ii) is satisfied (see Doukhan (1994) for more details).

(a) The random variables \((\eta _i)_{i}\) are i.i.d. with an everywhere positive and continuous density function, independent of \((X_i)_i\).

(b) The function \(b_{\theta _0}\) is bounded on every bounded set; that is, for every \(K>0\), \(\sup _{\vert x \vert \le K}\vert b_{\theta _0}(x)\vert <\infty \).

(c) For every \(K>0\), there exists a constant \(\sigma _1\) such that \(0<\sigma _1\le \inf _{\vert x \vert \le K} \sigma _{\theta _0}(x)\) and \(\sup _{\vert x \vert \le K} \sigma _{\theta _0}(x)<\infty \).

(d) There exist constants \(C_{b} >0\) and \(C_{\sigma } >0\), sufficiently large \(M_1>0\) and \(M_2>0\), and \(c_1 \ge 0\) and \(c_2 \ge 0\) such that \(\vert b_{\theta _0}(x)\vert \le C_{b}\vert x \vert +c_1\) for \(\vert x\vert \ge M_1\), \(\vert \sigma _{\theta _0}(x)\vert \le C_{\sigma }\vert x \vert +c_2\) for \(\vert x\vert \ge M_2\), and \(C_b+\mathbb {E}\vert \eta _1\vert C_{\sigma }<1\).
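Condition (d) is easy to check for a given model. As a hypothetical illustration (not one of the examples of this paper), take an ARCH(1)-type chain with \(b_{\theta _0}(x)=0\), \(\sigma _{\theta _0}(x)=\sqrt{\omega +\alpha x^2}\) and standard Gaussian \(\eta _i\), reading the expectation in (d) as \(\mathbb {E}\vert \eta _1\vert \) since \(\eta _1\) is centered. Then \(\sigma _{\theta _0}(x)\le \sqrt{\alpha }\vert x\vert +\sqrt{\omega }\), \(\vert b_{\theta _0}(x)\vert \le C_b\vert x\vert \) for any \(C_b>0\), and \(\mathbb {E}\vert \eta _1\vert =\sqrt{2/\pi }\). The sketch checks the inequality and simulates the chain, whose stationary second moment is \(\omega /(1-\alpha )\) when \(\mathbb {E}[\eta _1^2]=1\). Parameter values and names are our own choices.

```python
import math

import numpy as np


def drift_condition(c_b, c_sigma, mean_abs_eta):
    """Sufficient condition (d): C_b + E|eta_1| * C_sigma < 1."""
    return c_b + mean_abs_eta * c_sigma < 1.0


omega, alpha = 0.1, 0.3                  # hypothetical ARCH(1) parameters
c_b = 0.01                               # b(x) = 0, so any small C_b > 0 works
c_sigma = math.sqrt(alpha)               # sqrt(omega + alpha x^2) <= sqrt(alpha)|x| + sqrt(omega)
holds = drift_condition(c_b, c_sigma, math.sqrt(2 / math.pi))

# Simulate X_{i+1} = sigma(X_i) * eta_{i+1} and discard a burn-in period.
rng = np.random.default_rng(0)
n, burn = 200_000, 1_000
eta = rng.standard_normal(n + burn)
x = np.zeros(n + burn)
for i in range(n + burn - 1):
    x[i + 1] = math.sqrt(omega + alpha * x[i] ** 2) * eta[i + 1]
x = x[burn:]

second_moment = float(np.mean(x**2))     # close to omega / (1 - alpha) for a stable chain
print(holds, second_moment, omega / (1 - alpha))
```

Condition (d) is only sufficient: it controls the growth of \(b_{\theta _0}\) and \(\sigma _{\theta _0}\) far from the origin, which is what keeps the simulated chain from drifting to infinity.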

Assumption A1(iii) on \(f_{\varepsilon }\) is standard in deconvolution estimation. In particular, its first part is essential for the identifiability of model (1). This assumption cannot easily be removed: even if the density of \(\varepsilon _i\) is completely known up to a scale parameter, model (1) may be non-identifiable as soon as the invariant density of \(X_i\) is smoother than the density of the noise (see Butucea and Matias (2005)). The second part of A1(iii) is a classical assumption ensuring that the estimation criterion exists.

For some models, Assumption A2 is not satisfied. For such models, we propose inserting a weight function \(\varphi \) or a truncation kernel, as in Dedecker et al. (2014, p. 285), to circumvent the integrability issue. More precisely, we define the operators as follows:

$$\begin{aligned} Q_{h \star K_{B_n}}(x)=\frac{1}{2\pi }\int e^{ixu}\frac{(h\star K_{B_n})^{*}(u,0)}{f^{*}_{\varepsilon }(-u)}du, \quad \quad V^{*}_{h \star K_{B_n}}=\frac{(h\star K_{B_n})^{*}}{\overline{f^{*}_{\varepsilon }}\otimes \overline{f^{*}_{\varepsilon }}}, \end{aligned}$$

where \(K_{B_n}^{*}\) denotes the Fourier transform of a density deconvolution kernel with compact support satisfying \(\vert 1-K_{B_n}^{*}(t)\vert \le \mathbf {1}_{\vert t\vert > 1}\), and \(B_n\) is a sequence that tends to infinity with n. The contrast is then defined as

$$\begin{aligned} \mathbf {P}_{n}m_{\theta }=\frac{1}{n}\sum _{i=1}^{n-1}\left[ Q_{\Pi ^{2}_{\theta } \star K_{B_n}}(Y_i)-2 V_{\Pi _{\theta } \star K_{B_n}}(\mathbf {Y}_i)\right] . \end{aligned} \tag{6}$$

Taking \(K^{*}_{B_n}(t)=\mathbf {1}_{\vert t \vert \le B_n}\) with \(B_n= +\infty \) recovers the contrast (2), which is valid under Assumptions A1–A3.
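To see the truncation at work, the sketch below evaluates the V operator with the spectral cut-off \(K^{*}_{B}(t)=\mathbf {1}_{\vert t\vert \le B}\) in the AR(1) setting of Sect. 4.1 with Laplace noise (case 2), under the simplifying assumption \(A=\mathbb {R}^2\) (ours, not the paper's). For \(\Pi _{\theta }(x,\cdot )\) the \(\mathcal {N}(\phi x,\sigma ^2)\) density, \(\Pi ^{*}_{\theta }(u,v)=2\pi \delta (u+\phi v)e^{-\sigma ^2v^2/2}\) in the sense of distributions, so with \(a=y'-\phi y\) the truncated operator is \(V_B(a)=(2\pi )^{-1}\int _{\vert v\vert \le B}e^{iva}e^{-\sigma ^2v^2/2}/\{f^{*}_{\varepsilon }(\phi v)f^{*}_{\varepsilon }(-v)\}dv\); the constraint \(\vert \phi v\vert \le B\) is implied because \(\vert \phi \vert <1\). For Laplace noise, \(1/\{f^{*}_{\varepsilon }(\phi v)f^{*}_{\varepsilon }(v)\}=1+c_1v^2+c_2v^4\) with \(c_1=\sigma ^2_{\varepsilon }(1+\phi ^2)/2\) and \(c_2=\sigma ^4_{\varepsilon }\phi ^2/4\), hence for \(B=+\infty \), \(V=g-c_1g''+c_2g''''\), where g is the \(\mathcal {N}(0,\sigma ^2)\) density. These formulas are our own derivation, and the function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad


def laplace_fstar(t, sig2_eps):
    """Fourier transform of the centred Laplace density with variance sig2_eps (case 2)."""
    return 1.0 / (1.0 + sig2_eps * t**2 / 2.0)


def v_cutoff(a, phi, sig2, sig2_eps, B):
    """Truncated V operator at a = y' - phi * y with spectral cut-off |v| <= B (A = R^2 assumed)."""
    def integrand(v):
        return (np.cos(v * a) * np.exp(-sig2 * v**2 / 2)
                / (laplace_fstar(phi * v, sig2_eps) * laplace_fstar(v, sig2_eps)))
    # The integrand is even in v, so (1/2pi) * int_{-B}^{B} = (1/pi) * int_0^B.
    val, _ = quad(integrand, 0.0, B, epsabs=1e-12, epsrel=1e-12, limit=200)
    return val / np.pi


def v_laplace_closed(a, phi, sig2, sig2_eps):
    """B = +infinity: g - c1 g'' + c2 g'''' with g the N(0, sig2) density."""
    c1 = sig2_eps * (1 + phi**2) / 2
    c2 = sig2_eps**2 * phi**2 / 4
    g = np.exp(-a**2 / (2 * sig2)) / np.sqrt(2 * np.pi * sig2)
    g2 = (a**2 / sig2**2 - 1 / sig2) * g
    g4 = (a**4 / sig2**4 - 6 * a**2 / sig2**3 + 3 / sig2**2) * g
    return g - c1 * g2 + c2 * g4


phi, sig2, sig2_eps = 0.7, 1.0, 0.05
for a in (-1.2, 0.0, 0.8):
    print(a, v_cutoff(a, phi, sig2, sig2_eps, B=1.0),
          v_cutoff(a, phi, sig2, sig2_eps, B=40.0), v_laplace_closed(a, phi, sig2, sig2_eps))
```

With a large cut-off the truncated and closed-form values agree, while a small \(B\) visibly biases V; in practice \(B_n\) grows with n to balance this bias against the variance created by dividing by \(f^{*}_{\varepsilon }\).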

It should be noted that the construction of the contrasts (2) and (6) does not require knowledge of the stationary density \(f_{\theta _0}\). Among the assumptions, only the second part of A1(iv) involves it: the first part concerns the uniqueness of the minimizer of \(\mathbf {P}m_{\theta }\), which is strictly convex w.r.t. \(\theta \), whereas the second part requires computing the Hessian matrix of \(\mathbf {P}m_{\theta }\), first to ensure that it is invertible and second to calculate confidence intervals. For the latter, we propose to use in practice the following consistent estimator:

$$\begin{aligned} \mathcal {V}_{\hat{\theta }_n}=\frac{1}{n}\sum _{i=1}^{n-1}\left[ Q_{\frac{\partial ^2 \Pi ^2_{\theta }}{\partial \theta ^2}}(Y_i)-2V_{\frac{\partial ^2 \Pi _{\theta }}{\partial \theta ^2}}(\mathbf {Y}_i)\right] _{\theta =\hat{\theta }_n}. \end{aligned} \tag{7}$$

Under the integrability Assumption A2 and the Hessian local dominance Assumption A3(iii), the matrix \(\mathcal {V}_{\hat{\theta }_n}\) is a consistent estimator of \(\mathcal {V}_{\theta _0}\). This matrix and its inverse can be computed in practice for a large class of models.
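Since Q and V are linear in their argument, under A2 the estimator (7) coincides with the Hessian of \(\theta \mapsto \mathbf {P}_nm_{\theta }\) at \(\hat{\theta }_n\). When the second derivatives of \(\Pi _{\theta }\) and \(\Pi ^2_{\theta }\) are tedious to derive, a central finite-difference approximation of that Hessian is a simple numerical stand-in; this is our suggestion, not the authors' implementation. The sketch takes any scalar contrast function and a step size h (both hypothetical inputs) and is checked on a quadratic whose Hessian is known.

```python
import numpy as np


def numerical_hessian(f, theta, h=1e-4):
    """Central finite-difference Hessian of a scalar function f at theta."""
    theta = np.asarray(theta, dtype=float)
    r = theta.size
    steps = h * np.eye(r)
    hess = np.empty((r, r))
    for j in range(r):
        for k in range(r):
            hess[j, k] = (f(theta + steps[j] + steps[k]) - f(theta + steps[j] - steps[k])
                          - f(theta - steps[j] + steps[k]) + f(theta - steps[j] - steps[k])) / (4 * h**2)
    return 0.5 * (hess + hess.T)          # symmetrize away rounding noise


A = np.array([[3.0, 1.0], [1.0, 2.0]])


def quadratic(th):
    """0.5 * th' A th, whose Hessian is exactly A."""
    return 0.5 * th @ A @ th


H = numerical_hessian(quadratic, [0.2, -0.5])
print(H)
```

For a real contrast, the step h should be large enough that rounding errors in \(\mathbf {P}_nm_{\theta }\) do not dominate the division by \(h^2\).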

The local dominance Assumptions A3(i) and A3(iii) are not more restrictive than Assumption A2: they are satisfied if, for \(j,k=1,\ldots ,r\), the functions \({\sup _{\theta \in \Theta }\Pi ^{*}_{\theta }}/{f^*_{\varepsilon }}\) and \({\sup _{\theta \in \Theta }({\partial ^2 \Pi _{\theta }}/{\partial \theta _j \partial \theta _k})^*}/{ f^*_{\varepsilon }}\) are integrable.

In most applications, the bounds of the true parameter are unknown. The compactness assumption can then be replaced by the following: \(\theta _0\) is an element of the interior of a convex parameter space \(\Theta \subset \mathbb {R}^{r}\). Under our assumptions except for compactness, the estimator is then still consistent. The proof is the same, and existence is proved using convex optimization arguments. See Hayashi (2000) for this discussion.

4 Simulations

4.1 Linear autoregressive processes

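We start from the following HMM:

$$\begin{aligned} Y_i&=X_i+\varepsilon _i,\\ X_{i+1}&=\phi _0 X_i+\eta _{i+1}, \end{aligned} \tag{8}$$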

where the noises \(\varepsilon _i\) and the innovations \(\eta _i\) are assumed to be i.i.d. centered random variables with variances \(\sigma ^2_{\varepsilon }\) and \(\sigma ^2_{0,\eta }\), respectively.

Here, the unknown vector of parameters is \(\theta _0=(\phi _0,\sigma ^2_{0,\eta })\), and to ensure that the process \((X_i)_i\) is stationary and ergodic, we assume that \(|\phi _0|<1\) (see Doukhan (1994)). In this setting, the innovations \(\eta _i\) are assumed to be Gaussian with zero mean and variance \(\sigma ^2_{0,\eta }\). Hence, \(\Pi _{\theta _0}(x,\cdot )\) is the Gaussian density with mean \(\phi _{0} x\) and variance \(\sigma ^2_{0,\eta }\). To analyse the effect of the regularity of the observation noise density on the estimation of the parameters, we consider three types of noise:

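Case 1: ARMA model with Gaussian noise (super smooth). The density of \(\varepsilon _1\) is given by

$$\begin{aligned} f_{\varepsilon }(x)=\frac{1}{\sigma _{\varepsilon }\sqrt{2\pi }}\exp \left( -\frac{x^2}{2\sigma _{\varepsilon }^2}\right) . \end{aligned}$$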

We have \(f^{*}_{\varepsilon }(x)=\exp \left( -\sigma _{\varepsilon }^2x^2/2\right) \). The vector of parameters \(\theta _0\) belongs to the compact subset \(\Theta = [-1+r; 1-r]\times [\sigma ^{2}_{\mathrm {min}}; \sigma ^{2}_{\mathrm {max}}]\) with \(\sigma ^{2}_{\mathrm {min}}\ge \sigma _{\varepsilon }^2+\overline{r}\), where r, \(\overline{r}\), \(\sigma ^{2}_{\mathrm {min}}\) and \(\sigma ^{2}_{\mathrm {max}}\) are positive real constants. We impose this condition (\(\sigma ^{2}_{0,\eta }>\sigma _{\varepsilon }^2\)) to satisfy the integrability assumption, but it can be relaxed (see Sect. 6.3 for a discussion of Assumptions A1–A3).

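Case 2: ARMA model with Laplace noise (ordinary smooth). The density of \(\varepsilon _1\) is given by

$$\begin{aligned} f_{\varepsilon }(x)=\frac{1}{\sqrt{2}\,\sigma _{\varepsilon }}\exp \left( -\frac{\sqrt{2}\vert x\vert }{\sigma _{\varepsilon }}\right) . \end{aligned}$$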

It satisfies \(f^{*}_{\varepsilon }(x)=1/(1+\sigma _{\varepsilon }^2x^2/2)\).

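Case 3: SV model, \(\log \)-\(\chi ^{2}\) noise (super smooth). The density of \(\varepsilon _1\) is given by

$$\begin{aligned} f_{\varepsilon }(x)=\frac{1}{\sqrt{2\pi }}\exp \left( \frac{x+\mathcal {E}}{2}-\frac{e^{x+\mathcal {E}}}{2}\right) . \end{aligned}$$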

We have \(f^{*}_{\varepsilon }(x)=(1/\sqrt{\pi }) 2^{ix}\Gamma \left( 1/2+ix\right) e^{-i\mathcal {E}x}\), where \(\mathcal {E}=\mathbb {E}[\log (\xi ^{2}_{i+1})]\), \(\sigma ^{2}_{\varepsilon }=\mathbb {V}ar[\log (\xi ^{2}_{i+1})]={\pi ^2}/{2}\), and \(\Gamma \) denotes the gamma function defined by \(\Gamma (x)=\int _{0}^{\infty } t^{x-1}e^{-t}dt\). For cases 2 and 3, the vector of parameters \(\theta =(\phi ,\sigma ^2)\) belongs to the compact subset \(\Theta = [-1+r; 1-r]\times [ \sigma ^{2}_{\mathrm {min}} ; \sigma ^{2}_{\mathrm {max}}]\), with r, \(\sigma ^{2}_{\mathrm {min}}\) and \(\sigma ^{2}_{\mathrm {max}}\) positive real constants. Furthermore, this last case corresponds to the SV model introduced in Taylor (2005):

$$\begin{aligned} R_{i+1}&=\beta \exp \left( X_{i+1}/2\right) \xi _{i+1},\\ X_{i+1}&=\phi _0 X_i+\eta _{i+1}, \end{aligned}$$

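where \(\beta \) denotes a positive constant. The noises \(\xi _{i+1}\) and \(\eta _{i+1}\) are two centered Gaussian random variables, \(\xi _{i+1}\) with unit variance (so that \(\varepsilon _{i+1}=\log (\xi ^{2}_{i+1})-\mathcal {E}\) has variance \(\sigma ^{2}_{\varepsilon }=\pi ^2/2\)) and \(\eta _{i+1}\) with variance \(\sigma ^{2}_{0}\).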

In the original paper (Taylor 2005), the constant \(\beta \) is equal to 1 (see Footnote 2). In this case, applying the log transformation \(Y_{i+1}=\log (R^{2}_{i+1})-\mathbb {E}[\log (\xi ^{2}_{i+1})]\) and setting \(\varepsilon _{i+1}=\log (\xi ^{2}_{i+1})-\mathbb {E}[\log (\xi ^{2}_{i+1})]\), the log-transformed SV model is a special case of model (8).
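The closed-form expressions of case 3 can be checked numerically. The sketch below uses \(\mathcal {E}=\mathbb {E}[\log \xi ^2]=-\gamma -\log 2\approx -1.2704\), where \(\gamma \) is Euler's constant, and the density of \(\varepsilon =\log \xi ^2-\mathcal {E}\), namely \(f_{\varepsilon }(x)=(2\pi )^{-1/2}\exp \{(x+\mathcal {E})/2-e^{x+\mathcal {E}}/2\}\). It compares the printed expression of \(f^{*}_{\varepsilon }\) with a quadrature of \(\int e^{itx}f_{\varepsilon }(x)dx\): this is the sign under which the printed closed form holds, and it corresponds to \(f^{*}_{\varepsilon }(-t)\) under the convention \(u^{*}(t)=\int e^{-itx}u(x)dx\) of Sect. 2.1. Names and grid bounds are our own choices.

```python
import numpy as np
from scipy.integrate import trapezoid
from scipy.special import gamma

EULER_GAMMA = 0.5772156649015329
E_LOG_CHI2 = -EULER_GAMMA - np.log(2.0)          # E[log xi^2] for xi ~ N(0, 1)


def log_chi2_density(x):
    """Density of eps = log(xi^2) - E[log(xi^2)], xi ~ N(0, 1)."""
    z = x + E_LOG_CHI2
    return np.exp(z / 2 - np.exp(z) / 2) / np.sqrt(2 * np.pi)


def printed_cf(t):
    """(1/sqrt(pi)) 2^{it} Gamma(1/2 + it) exp(-i E t), as printed for case 3."""
    return (np.exp(1j * t * np.log(2.0)) * gamma(0.5 + 1j * t)
            * np.exp(-1j * E_LOG_CHI2 * t) / np.sqrt(np.pi))


x = np.linspace(-80.0, 12.0, 92_001)              # the density is negligible outside this range
dens = log_chi2_density(x)
mass = trapezoid(dens, x)                          # should be 1
mean = trapezoid(x * dens, x)                      # should be 0 (eps is centred)
var = trapezoid(x**2 * dens, x) - mean**2          # should be pi^2 / 2

t = np.array([0.3, 1.0, 2.0])
numeric_cf = np.array([trapezoid(np.exp(1j * s * x) * dens, x) for s in t])
cf_err = np.abs(numeric_cf - printed_cf(t)).max()
print(mass, mean, var, np.pi**2 / 2, cf_err)
```

The modulus \(\vert f^{*}_{\varepsilon }(t)\vert =1/\sqrt{\cosh (\pi t)}\) is the same under both sign conventions, so the super-smooth character of this noise does not depend on the convention.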

For all these models, the assumptions of Theorem 3.1 are verified in Sect. 6.3.
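For readers who want to reproduce such experiments, here is a minimal simulator for model (8) under the three noise cases. It is a sketch under our own conventions: the function name and arguments are hypothetical, the chain starts from its stationary law \(\mathcal {N}(0,\sigma ^2_{\eta }/(1-\phi ^2))\), Laplace noise is drawn with scale \(\sigma _{\varepsilon }/\sqrt{2}\) so that its variance is \(\sigma ^2_{\varepsilon }\), and in case 3 the noise is \(\log \xi ^2-\mathcal {E}\) with \(\xi \sim \mathcal {N}(0,1)\), whose variance is \(\pi ^2/2\) whatever the value of `sig2_eps`.

```python
import numpy as np

E_LOG_CHI2 = -0.5772156649015329 - np.log(2.0)      # E[log xi^2], xi ~ N(0, 1)


def simulate_model_8(n, phi, sig2_eta, noise, sig2_eps=0.05, rng=None):
    """Simulate Y_i = X_i + eps_i, X_{i+1} = phi X_i + eta_{i+1} with Gaussian eta.

    noise: 'gauss' (case 1), 'laplace' (case 2) or 'logchi2' (case 3).
    Returns the observations Y and the hidden states X."""
    rng = np.random.default_rng() if rng is None else rng
    eta = rng.normal(0.0, np.sqrt(sig2_eta), n)
    x = np.empty(n)
    x[0] = eta[0] / np.sqrt(1.0 - phi**2)            # draw from the stationary law
    for i in range(n - 1):
        x[i + 1] = phi * x[i] + eta[i + 1]
    if noise == "gauss":
        eps = rng.normal(0.0, np.sqrt(sig2_eps), n)
    elif noise == "laplace":
        eps = rng.laplace(0.0, np.sqrt(sig2_eps / 2.0), n)
    elif noise == "logchi2":
        eps = np.log(rng.standard_normal(n) ** 2) - E_LOG_CHI2
    else:
        raise ValueError(f"unknown noise type: {noise}")
    return x + eps, x


rng = np.random.default_rng(2)
samples = {k: simulate_model_8(100_000, 0.7, 1.0, k, rng=rng)[0]
           for k in ("gauss", "laplace", "logchi2")}
# Var(Y) = sig2_eta / (1 - phi^2) + Var(eps).
for k, y in samples.items():
    print(k, y.var())
```

Starting from the stationary law avoids the need for a burn-in period, which matters for the short samples (n = 500) used in the experiments below.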

4.1.1 Expression of the contrasts

For all the models described above, we can express the theoretical and empirical contrasts regardless of the type of observation noise used. These expressions are given in the following proposition.

Proposition 4.1

For the HMM model (8), the theoretical contrast defined in (3) is given by

and the empirical contrasts used in our simulations are obtained as follows:

where \(\mathcal {A}_i=(Y_{i+1}-\phi Y_i)^2\).

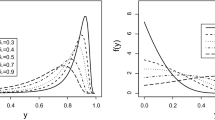

The notations \(\mathbf {P}_nm_{\theta }^{G}\) and \(\mathbf {P}_nm_{\theta }^{L}\) correspond to cases 1 and 2, respectively (see Fig. 1 for a visual representation of these contrasts as functions of the parameters \(\phi \) and \(\sigma _{\eta }^2\)).

Contrast functions. a \(\mathbf {P}m_{\theta }\) as a function of the parameters \(\phi \) and \(\sigma _{\eta }^2\) for one realization of (8), with \(n=2000\). b \(\mathbf {P}_{n}m_{\theta }^G\). c \(\mathbf {P}_{n}m_{\theta }^L\). d \(\mathbf {P}_{n}m_{\theta }^{\mathcal {X}}\). e–h Corresponding contour lines. The red circle represents the global minimizer \(\theta _0\) of \(\mathbf {P}m_{\theta }\), and the blue circles represent the minimizers of \(\mathbf {P}_{n}m_{\theta }^G\), \(\mathbf {P}_{n}m_{\theta }^L\) and \(\mathbf {P}_{n}m_{\theta }^{\mathcal {X}}\), respectively

For the SV model (case 3), the inverse Fourier transform of \(\Pi _{\theta }^{*}/f_{\varepsilon }^{*}\) has no explicit expression, in particular because of the gamma function \(\Gamma \) in \(f_{\varepsilon }^{*}\). Nevertheless, the contrast can be approximated numerically using a fast Fourier transform (FFT), and it is also represented. Figure 1 depicts the three empirical contrasts and the true contrast as functions of the parameters \(\phi \) and \(\sigma _{\eta }^2\) for one realization of (8), with \(n=2000\). These three empirical contrasts approximate the theoretical contrast well and, in turn, yield accurate estimates of the optimal parameter for the three types of noise distributions considered in this simulation study.
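The numerical evaluation can be sketched as follows for the AR(1) transition of Sect. 4.1, taking \(A=\mathbb {R}^2\) as a simplification (our assumption, not the paper's setting). With \(a=y'-\phi y\), \(V_{\Pi _{\theta }}(y,y')=(2\pi )^{-1}\int e^{iva}e^{-\sigma ^2v^2/2}/\{f^{*}_{\varepsilon }(\phi v)f^{*}_{\varepsilon }(-v)\}dv\), and the Q term of the contrast reduces to the constant \(1/(2\sqrt{\pi \sigma ^2})\). Instead of an FFT, the sketch tabulates V by direct trapezoidal quadrature on a grid of a values and interpolates at the observations, which is simpler to write but slower. The noise characteristic function is coded in the convention \(f^{*}_{\varepsilon }(t)=\mathbb {E}[e^{-it\varepsilon _1}]\) of Sect. 2.1, i.e., as the complex conjugate of the printed case 3 expression. The function names, grids and the Gaussian sanity check are our own.

```python
import numpy as np
from scipy.integrate import trapezoid
from scipy.special import gamma

E_LOG_CHI2 = -0.5772156649015329 - np.log(2.0)       # E[log xi^2], xi ~ N(0, 1)


def fstar_gauss(t, sig2_eps=0.05):
    """E[exp(-i t eps)] for eps ~ N(0, sig2_eps)."""
    return np.exp(-sig2_eps * t**2 / 2)


def fstar_logchi2(t):
    """E[exp(-i t eps)] for eps = log(xi^2) - E[log(xi^2)]."""
    return (np.exp(-1j * t * np.log(2.0)) * gamma(0.5 - 1j * t)
            * np.exp(1j * E_LOG_CHI2 * t) / np.sqrt(np.pi))


def v_numeric(a, phi, sig2, fstar, v_max=40.0, n_v=8001):
    """V at a = y' - phi * y by trapezoidal quadrature over v in [-v_max, v_max].
    Returns the real part and the largest imaginary residue (should be ~0)."""
    v = np.linspace(-v_max, v_max, n_v)
    weight = np.exp(-sig2 * v**2 / 2) / (fstar(phi * v) * fstar(-v))
    a = np.atleast_1d(np.asarray(a, dtype=float))
    vals = trapezoid(np.exp(1j * np.outer(a, v)) * weight, v, axis=1) / (2 * np.pi)
    return vals.real, np.abs(vals.imag).max()


def empirical_contrast(y, phi, sig2, fstar, a_max=15.0, n_a=301):
    """P_n m_theta = (1/n) sum_{i<n} [Q - 2 V(Y_i, Y_{i+1})], with V tabulated then interpolated."""
    a_grid = np.linspace(-a_max, a_max, n_a)
    v_tab, _ = v_numeric(a_grid, phi, sig2, fstar)
    a_obs = y[1:] - phi * y[:-1]
    v_obs = np.interp(a_obs, a_grid, v_tab)        # values outside the grid are clamped
    q = 1.0 / (2.0 * np.sqrt(np.pi * sig2))
    return ((y.size - 1) * q - 2.0 * v_obs.sum()) / y.size


# Sanity check with Gaussian noise, where V is the N(0, sig2 - (1 + phi^2) sig2_eps) density.
a_test = np.array([-1.0, 0.0, 0.5])
v_gauss, _ = v_numeric(a_test, 0.7, 1.0, fstar_gauss)
s2 = 1.0 - (1 + 0.7**2) * 0.05
v_exact = np.exp(-a_test**2 / (2 * s2)) / np.sqrt(2 * np.pi * s2)
_, imag_logchi2 = v_numeric(a_test, 0.7, 1.0, fstar_logchi2)
print(np.abs(v_gauss - v_exact).max(), imag_logchi2)
```

Because \(1/\vert f^{*}_{\varepsilon }(t)\vert =\sqrt{\cosh (\pi t)}\) grows exponentially for case 3, the Gaussian factor \(e^{-\sigma ^2v^2/2}\) is what keeps the integrand integrable; for small \(\sigma ^2\) the integrand becomes large and oscillatory, so the grid in v should be checked.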

4.1.2 Monte Carlo simulation and comparison with the MLE

Let us present the results of our simulation experiments. First, we perform a Monte Carlo (MC) study for the first two cases to study the influence of the noise regularity on the performance of the estimator (case 3 has the same regularity as case 1). For the first case, we compare our approach with the MLE. This case is favorable to the MLE, since the linearity of the model and the Gaussianity of the observation noise allow it to be computed quickly with the Kalman filter. However, this is no longer the case for non-Gaussian noise such as Laplace noise. For non-Gaussian noise, computing the MLE is generally more complicated in practice and requires algorithms such as MCMC, EM, Stochastic Approximation of EM (SAEM) or SMC, or alternative estimation strategies (see Andrieu et al. (2010), Chopin et al. (2013) and Olsson and Rydén (2007)), which require a longer computation time. In the case of Laplace noise, we use the R package tseries (Trapletti and Hornik 2019) to fit an ARMA(1,1) model to the observations \(Y_i\) by conditional least squares (Hannan and Rissanen 1982). Moreover, even in the most favorable case for computing the MLE, our approach is faster than the Kalman filter, since it only requires minimizing an explicitly known contrast function, whereas the MLE requires running the Kalman filter to construct the likelihood to be maximized.

For each simulation, we consider three different signal-to-noise ratios, denoted \(\mathrm {SNR}\) (i.e., \(\mathrm {SNR}=\sigma ^2_\eta /\sigma _\varepsilon ^2=1/\sigma _\varepsilon ^2\), with \(\sigma _\varepsilon ^2\) equal to 1/40, 1/20 and 1/10, corresponding to low, medium and high noise levels, respectively). For all experiments, we set \(\phi =0.7\) and generate samples of sizes ranging from \(n=500\) to 2000, which are realistic in financial and economic applications. The results over 100 repetitions are shown as boxplots for each parameter in Figs. 2 and 3 (corresponding to cases 1 and 2, respectively). As already noticed in the deconvolution setting, there is little difference between Laplace and Gaussian \(\varepsilon _i\)'s, with slightly better convergence for Laplace noise. Moreover, increasing the sample size noticeably improves the results. In the Gaussian case (Fig. 2), the MLE gives better overall results, which is not surprising since in case 1 it is the best linear unbiased estimator. However, for the parameter \(\phi \), our estimator is slightly better. The estimation of the variance \(\sigma ^2_{\eta }\) is more difficult, especially as the SNR decreases. For Laplace noise (Fig. 3), our contrast estimator gives better results whatever the number of observations and the SNR, and the differences with the MLE are even more pronounced for the variance \(\sigma ^2_{\eta }\). The contrast approach is therefore an interesting alternative to the MLE in the non-Gaussian case.
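The simulation design above can be reproduced with a short data generator. This sketch assumes that model (8) is \(X_{i+1}=\phi X_i+\eta _{i+1}\), \(Y_i=X_i+\varepsilon _i\) with \(\sigma ^2_{\eta }=1\) (so that \(\mathrm {SNR}=1/\sigma ^2_{\varepsilon }\)), and that the Laplace noise is scaled to have the same variance \(\sigma ^2_{\varepsilon }\) as the Gaussian one; the function name and its defaults are hypothetical.

```python
import numpy as np


def simulate_ar_hmm(n, phi=0.7, sigma2_eta=1.0, sigma2_eps=1 / 20, noise="gaussian", rng=None):
    """Simulate X_{i+1} = phi X_i + eta_{i+1} observed as Y_i = X_i + eps_i.

    With sigma2_eta = 1, SNR = sigma2_eta / sigma2_eps = 1 / sigma2_eps.
    noise: "gaussian" or "laplace"; both are scaled to have variance sigma2_eps.
    """
    rng = np.random.default_rng(rng)
    x = np.empty(n)
    # Start from the stationary law N(0, sigma2_eta / (1 - phi^2)).
    x[0] = rng.normal(0.0, np.sqrt(sigma2_eta / (1.0 - phi**2)))
    eta = rng.normal(0.0, np.sqrt(sigma2_eta), size=n)
    for i in range(1, n):
        x[i] = phi * x[i - 1] + eta[i]
    if noise == "gaussian":
        eps = rng.normal(0.0, np.sqrt(sigma2_eps), size=n)
    elif noise == "laplace":
        # A Laplace(0, b) variable has variance 2 b^2, hence b = sqrt(sigma2_eps / 2).
        eps = rng.laplace(0.0, np.sqrt(sigma2_eps / 2.0), size=n)
    else:
        raise ValueError("noise must be 'gaussian' or 'laplace'")
    return x, x + eps


# The three noise levels of the study (SNR = 40, 20, 10 when sigma2_eta = 1).
for s2 in (1 / 40, 1 / 20, 1 / 10):
    x, y = simulate_ar_hmm(2000, sigma2_eps=s2, noise="laplace", rng=1)
```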

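Our estimation procedure allows us to go further in the analysis, since Theorem 3.1 applies and, as already mentioned, Corollary 3.1 allows us to compute confidence intervals (CIs) in practice, i.e., for \(i=1,2\):

$$\begin{aligned} \mathbb {P}\left( \theta _{0,i} \in \left[ \hat{\theta }_{n,i} - z_{1-\alpha /2}\sqrt{\frac{\mathbf {e}_{i}^{\top }\Sigma (\hat{\theta }_{n})\mathbf {e}_{i}}{n}},\ \hat{\theta }_{n,i} + z_{1-\alpha /2}\sqrt{\frac{\mathbf {e}_{i}^{\top }\Sigma (\hat{\theta }_{n})\mathbf {e}_{i}}{n}} \right] \right) \longrightarrow 1-\alpha \end{aligned}$$

as \(n \rightarrow \infty \), where \(z_{1-\alpha /2}\) is the \(1-\alpha /2\) quantile of the standard Gaussian distribution, \(\hat{\theta }_{n,i}\) and \(\theta _{0,i}\) are the ith coordinates of \(\hat{\theta }_{n}\) and \(\theta _0\), and \(\mathbf {e}_{i}\) is the ith vector of the canonical basis of \(\mathbb {R}^2\). The covariance matrix \(\Sigma (\hat{\theta }_{n})\) is obtained by plugging \(\hat{\theta }_{n}\) into the variance matrix defined in (7).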
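The interval computation can be sketched as follows, assuming that \(\sqrt{n}(\hat{\theta }_{n}-\theta _0)\) converges in law to \(\mathcal {N}(0,\Sigma (\theta _0))\), so that coordinate i has approximate variance \(\mathbf {e}_{i}^{\top }\Sigma (\hat{\theta }_{n})\mathbf {e}_{i}/n\), i.e., the ith diagonal entry divided by n. The function name and the plug-in values in the example are hypothetical.

```python
import numpy as np
from statistics import NormalDist


def wald_ci(theta_hat, sigma_hat, n, alpha=0.05):
    """Componentwise asymptotic CIs from a plug-in covariance Sigma(theta_hat).

    Assumes sqrt(n) (theta_hat - theta_0) -> N(0, Sigma(theta_0)), so that the
    variance of coordinate i is e_i^T Sigma e_i / n = Sigma[i, i] / n.
    """
    theta_hat = np.asarray(theta_hat, dtype=float)
    sigma_hat = np.asarray(sigma_hat, dtype=float)
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)    # z_{1 - alpha/2}
    half_width = z * np.sqrt(np.diag(sigma_hat) / n)
    return theta_hat - half_width, theta_hat + half_width


# Hypothetical plug-in values for theta = (phi, sigma2_eta).
lower, upper = wald_ci([0.7, 1.0], [[0.49, 0.0], [0.0, 2.0]], n=100)
```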

We investigate the coverage probabilities for sample sizes ranging from 100 to 2000, with 1000 replications. For each sample, we compute the confidence interval and check whether it contains the true parameter; Fig. 4 plots, for cases 1 and 2, the proportion of samples for which this is the case. The empirical coverage probabilities of the confidence intervals are close to the nominal level for both parameters, whatever the type of noise. These proportions deviate slightly from the theoretical value as the noise level increases; indeed, the width of the CIs tends to increase with the noise level.
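The coverage proportions plotted in Fig. 4 amount to counting, over replications, how often each interval contains the true value. The sketch below shows this count (the function name is hypothetical) and checks it on a toy problem with a known answer, 95% intervals for a Gaussian mean, rather than on the contrast estimator itself.

```python
import numpy as np


def empirical_coverage(lower, upper, theta0):
    """Proportion of replications whose interval contains the true value.

    lower, upper: arrays of shape (n_rep, r); theta0: array of shape (r,).
    Returns one coverage proportion per parameter.
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    theta0 = np.asarray(theta0, dtype=float)
    inside = (lower <= theta0) & (theta0 <= upper)
    return inside.mean(axis=0)


# Toy check with a known answer: 95% intervals for a Gaussian mean.
rng = np.random.default_rng(2)
n, n_rep, mu = 200, 1000, 0.3
means = rng.normal(mu, 1.0, size=(n_rep, n)).mean(axis=1, keepdims=True)
half = 1.959964 / np.sqrt(n)
coverage = empirical_coverage(means - half, means + half, [mu])
```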

4.1.3 Sensitivity of the contrast w.r.t. \(\sigma ^2_{\varepsilon }\)

In this section, we study the sensitivity of our contrast estimator when Assumption A1(iii) is relaxed, that is, when \(f_{\varepsilon }\) is known only up to the unknown variance \(\sigma ^2_{\varepsilon }\). Many authors have addressed this issue from both a theoretical and a practical perspective; we mention the following works, although this list is not exhaustive. In Efromovich (1997) and Neumann and Hössjer (1997), the error density is unknown and is estimated from an additional sample of direct observations drawn from the error density. Meister (2004) considered a testing procedure for deciding between two candidate error densities. Butucea and Matias (2005) introduced a uniformly consistent estimation procedure when the error variance \(\sigma ^2_{\varepsilon }\) is unknown but restricted to a known compact interval. In Kappus and Mabon (2014), the authors considered two different models: one in which an additional sample of pure noise is available, and the repeated measurements model, in which the contaminated random variables of interest are observed repeatedly, with independent errors. In Delaigle and Hall (2016), the authors proposed a completely new approach to this problem, which requires neither extra data of any kind nor smoothness assumptions on the signal distribution.

To estimate \(\sigma ^2_{\varepsilon }\) here, we use the approach proposed in Meister (2004), which is very simple to implement and yields good results. Let us present the methodology. We consider the absolute empirical Fourier transform defined by

In the sequel, we denote by \((k_n)_{n \in \mathbb {N}}, (\omega _n)_{n \in \mathbb {N}}\) and \((\sigma ^{2}_n)_{n \in \mathbb {N}}\) three sequences of positive numbers, specified below. We set

where C and \(\beta >0\) are arbitrary constants that need not be known, so they may be misspecified in practice. If the regularity of the density of \(X_i\) is known, that is, if \(f_{\theta } \in \mathcal {F}\), where \(\mathcal {F}\) is the class of ordinary smooth or super smooth functions, these parameters can be chosen accordingly; for all examples considered in this section, the stationary density \(f_{\theta }\) is super smooth. Otherwise, we fix these parameters arbitrarily and set \(\omega _n={k_n}/{\log (k_n)}\) with \(k_n \rightarrow \infty \). The estimator of \(\sigma ^2_{\varepsilon }\) is then defined as

To analyse the sensitivity of our approach to the noise variance \(\sigma ^2_{\varepsilon }\), we apply our contrast estimator defined in Proposition 4.1 to the Gaussian AR model, plugging in the variance estimator (10). We then compare our results with the MLE computed with the same variance estimator. The results are shown in Fig. 5 for each parameter and for different numbers of observations. For the construction of the estimator (10), we take \(C=1/4\), \(\beta =4\), \(\sigma ^{2}_n=1\) and \(k_n=\sqrt{n}\), with 50 MC trials. We also tried other values of these parameters; since their influence was negligible, these tests are not reported.
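Only two ingredients of Meister's (2004) procedure are sketched here: the absolute empirical Fourier transform \(t\mapsto \vert \frac{1}{n}\sum _{j=1}^{n}e^{itY_j}\vert \) and the tuning choices \(k_n=\sqrt{n}\), \(\omega _n=k_n/\log (k_n)\) stated above. The estimator (10) itself, which combines them with C, \(\beta \) and \(\sigma ^2_n\), is not reproduced. The sign convention of the exponent does not matter because only the modulus is used; the function names are hypothetical.

```python
import numpy as np


def abs_empirical_cf(y, t):
    """|(1/n) sum_j exp(i t Y_j)| evaluated at each frequency in t."""
    y = np.asarray(y, dtype=float)
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.abs(np.exp(1j * np.outer(t, y)).mean(axis=1))


def tuning_sequences(n):
    """k_n = sqrt(n) and omega_n = k_n / log(k_n), as in the experiments."""
    k_n = np.sqrt(n)
    return k_n, k_n / np.log(k_n)
```

For Gaussian data with variance 2, the characteristic function has modulus \(e^{-t^2}\), which gives a quick check of the first function.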

The results are similar to those of Sect. 4.1.2. Whatever the number of observations, the MLE is more robust in this favourable case (Gaussian measurement noise). Nevertheless, our approach performs as well as when the variance is assumed to be known. The estimator (10) is therefore a good practical alternative when the assumption that the observation noise is fully known is relaxed, and it is readily implementable.

4.1.4 Sensitivity of the contrast w.r.t. the truncation of the asymptotic variance matrix

The asymptotic variance \(\Sigma (\theta )\) of our estimator, defined in (5), involves the covariance matrix \(\Omega (\theta )\), whose computation requires an infinite sum of covariance terms. In practice, we use the following empirical covariance at lag j, defined as

and the sum in (5) is truncated at a large lag. In this part, we analyse the influence of this truncation on the asymptotic variance of our estimator, using the Gaussian AR model. Table 1 reports the asymptotic variance as a function of the truncation lag, denoted J, and of the number of observations n. The asymptotic variance decreases with J and stops varying beyond a moderate lag, whatever the number of observations. This is because, beyond some rank \(j_0\), the covariance terms \(\Omega _j\), \(j\ge j_0\), are negligible compared with \(\Omega _1\), in agreement with the mixing assumption A1(ii), which the AR process satisfies. Furthermore, as expected, the asymptotic variance also decreases with the number of observations.
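The truncation can be sketched as follows. The sketch assumes that the infinite sum in (5) has the usual long-run covariance form \(\Omega =\Omega _0+\sum _{j\ge 1}(\Omega _j+\Omega _j^{\top })\) built from the gradients \(g_i=\nabla _{\theta }m_{\hat{\theta }}(\mathbf {Y}_i)\), and that the asymptotic variance is the sandwich \(\mathcal {V}^{-1}\Omega \mathcal {V}^{-1}\); the lag index here starts at 0, so the leading term written \(\Omega _1\) in the text presumably corresponds to `lag_cov(g, 0)`. All names are illustrative.

```python
import numpy as np


def lag_cov(g, j):
    """Empirical lag-j cross-covariance (1/n) sum_i (g_i - gbar)(g_{i+j} - gbar)^T."""
    g = np.asarray(g, dtype=float)
    if g.ndim == 1:
        g = g[:, None]
    n = g.shape[0]
    c = g - g.mean(axis=0)
    return c[: n - j].T @ c[j:] / n


def truncated_long_run_cov(g, J):
    """Omega_0 + sum_{j=1}^{J} (Omega_j + Omega_j^T): the infinite sum cut at lag J."""
    omega = lag_cov(g, 0)
    for j in range(1, J + 1):
        omega_j = lag_cov(g, j)
        omega = omega + omega_j + omega_j.T
    return omega


def sandwich(V, omega):
    """Asymptotic covariance V^{-1} Omega V^{-1} of an M-estimator."""
    V_inv = np.linalg.inv(V)
    return V_inv @ omega @ V_inv
```

For a scalar AR(1) sequence with coefficient \(\rho \) and unit innovations, the long-run variance is \(1/(1-\rho )^2\), and the truncated sum stabilizes once \(\rho ^J\) is negligible, mirroring the behaviour reported in Table 1.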

4.2 Nonlinear autoregressive processes

We conclude this simulation study with an example of a nonlinear process of the form

where the noises \(\varepsilon _i\) and the innovations \(\eta _i\) are assumed to be i.i.d. centered random variables with variances \(\sigma ^2_{\varepsilon }\) and \(\sigma ^2_{0,\eta }\), respectively. The transition density is given by \(\Pi _{\theta _0}(x,y)=f_\eta (y-\phi _0\sin (x))\) (see Mokkadem (1987)). Although the Fourier transforms of \(\Pi _{\theta _0}\) and \(f_\varepsilon \) are explicit, the contrast does not admit an explicit expression, but it can easily be computed by FFT or numerical integration. More precisely, in this example the operator \(V_{\Pi _{\theta }}\) in the contrast is not explicit; details of its computation are given in Sect. 6.3. For this example, we compare our contrast with the MLE computed with the Extended Kalman Filter (EKF), which is adapted to nonlinear models: after a linearization of the model, it can be used to compute the likelihood. Nevertheless, the EKF is known to perform well when the model is not strongly nonlinear, which is not the case for the sine drift function. We therefore also compare our approach with the more general SAEM algorithm (see Delyon et al. (1999) for more details). Since the variables \(X_i\) are unobserved, the SAEM uses a conditional particle filter (see Lindsten (2013)) in its expectation step. For this experiment, we take \(\mathrm {SNR}=1/40\), \(\phi _0=0.7\), \(\sigma ^2_{0,\eta }=0.5\) and \(MC=50\) replications, and we generate samples of sizes \(n=500\), 1000 and 2000. For the SAEM, we use 50 particles in the conditional particle filter and 400 iterations (denoted nbIter) for the convergence of the EM (below this threshold, the algorithm did not converge). The results are shown as boxplots for each parameter (see Fig. 6) and summarized in Table 2, which reports the mean squared error (MSE) computed as

Table 2 shows that, in terms of MSE, the EKF gives worse results than the other two approaches. This was expected, given the properties of the EKF for nonlinear models. The contrast gives better results than the SAEM method, whatever the number of observations and the number of iterations used in the EM step. Table 2 also shows that the SAEM results improve and get closer to those of the contrast as the number of iterations increases (from nbIter=100 to nbIter=400). Indeed, the SAEM results depend strongly on the initial value of \(\theta \) and on the number of iterations, which controls the convergence of the algorithm. We deliberately tested different numbers of iterations since, for nbIter=100, the algorithm did not converge for the drift parameter \(\phi \). On the other hand, the SAEM results do not depend on the number of particles, since we use a conditional particle filter with an ancestral resampling step, which keeps the number of particles, and hence the computation time, reasonable (see Lindsten (2013) for more details). Unlike the SAEM, our approach requires no parameter calibration and gives good results, even in a nonlinear setting, whatever the initial condition.
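The nonlinear experiment can be set up with the generator sketched below, together with a per-parameter MSE. The sketch assumes Gaussian \(\eta _i\) and \(\varepsilon _i\), uses a burn-in period to approximate a draw from the stationary distribution, and assumes the MSE in Table 2 is \(\frac{1}{MC}\sum _{k=1}^{MC}(\hat{\theta }^{(k)}-\theta _0)^2\) for each parameter. The noise variance is left as a required argument; the function names and the burn-in length are hypothetical.

```python
import numpy as np


def simulate_sin_ar(n, sigma2_eps, phi=0.7, sigma2_eta=0.5, burn_in=500, rng=None):
    """Simulate X_{i+1} = phi sin(X_i) + eta_{i+1}, Y_i = X_i + eps_i (Gaussian noises)."""
    rng = np.random.default_rng(rng)
    total = n + burn_in
    eta = rng.normal(0.0, np.sqrt(sigma2_eta), size=total)
    x = np.zeros(total)
    for i in range(1, total):
        x[i] = phi * np.sin(x[i - 1]) + eta[i]
    x = x[burn_in:]                       # discard the transient phase
    y = x + rng.normal(0.0, np.sqrt(sigma2_eps), size=n)
    return x, y


def mse(estimates, theta0):
    """Per-parameter mean squared error over Monte Carlo replications."""
    estimates = np.asarray(estimates, dtype=float)
    return np.mean((estimates - np.asarray(theta0, dtype=float)) ** 2, axis=0)
```

A quick check of the generator is that least squares of \(X_{i+1}\) on \(\sin (X_i)\), using the latent path, recovers \(\phi _0\) and \(\sigma ^2_{0,\eta }\).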

5 Application to the ex-ante real interest rate

The ex-ante real interest rate is important in finance and economics because it measures the real return on an asset between now and the future. It is also a useful indicator of the monetary policy direction of central banks. It is, however, important to distinguish between the ex-post real rate, which is observed, and the ex-ante real rate, which is not. While the ex-post real rate is simply the difference between the observed nominal interest rate and the observed actual inflation, the ex-ante real rate is the difference between the nominal rate and the unobserved expected inflation rate. Since monetary policy makers cannot observe inflation within the period, they must base their interest rate decisions on the expected inflation rate. Hence, the ex-ante real rate is probably a better indicator of the monetary policy orientation.

There are two different strategies for estimating the ex-ante real rate. The first uses a proxy variable for the ex-ante real rate (see, e.g., Laubach and Williams (2003) for the US and, more recently, Holston et al. (2017) for Canada, the Euro Area and the UK). The second treats the ex-ante real rate as an unobserved variable in a latent factor model (see, e.g., Burmeister et al. (1986) and Hamilton (1994a, 1994b)). Our procedure follows this second strategy, in which the factor model is specified as follows:

where \(Y_t\) is the observed ex-post real interest rate, \(X_t\) is the latent ex-ante real interest rate adjusted by a parameter \(\alpha \), \(\phi \) is a persistence parameter, and \(\varepsilon _t\) and \(\eta _t\) are centered Gaussian random variables with variances \(\sigma ^2_{\varepsilon }\) and \(\sigma ^2_{\eta }\), respectively.

This model is derived as follows. Denote by \(Y_{t}^{e}\) the ex-ante real interest rate, so that \(Y_{t}^{e}=\mathcal {R}_{t}-\mathcal {I}^{e}_t\), where \(\mathcal {R}_{t}\) is the observed nominal interest rate and \(\mathcal {I}^{e}_t\) the expected inflation rate. The unobserved part of \(Y_{t}^{e}\) thus comes from the expected inflation rate. Furthermore, the ex-post real interest rate is \(Y_t=\mathcal {R}_{t}-\mathcal {I}_t\), where \(\mathcal {I}_t\) is the observed actual inflation rate. As a result, adding and subtracting the expected inflation rate \(\mathcal {I}^{e}_t\) gives

$$\begin{aligned} Y_t=\mathcal {R}_{t}-\mathcal {I}^{e}_t+\mathcal {I}^{e}_t-\mathcal {I}_t=Y_{t}^{e}+\varepsilon _t, \end{aligned}$$

where \(\varepsilon _t=\mathcal {I}^{e}_t-\mathcal {I}_t\) is the inflation expectation error. If agents do not make systematic errors when forecasting inflation, \(\varepsilon _t\) can be assumed to be a Gaussian white noise with variance \(\sigma ^2_{\varepsilon }\). This assumption is known as the Rational Expectations Hypothesis (REH). The REH thus lends itself naturally to a state-space representation (see, e.g., Burmeister and Wall (1982)), and defining \(X_t=Y_{t}^{e}-\alpha \) as the ex-ante real interest rate adjusted by its population mean \(\alpha \) yields model (12).

The monthly dataset was split into an in-sample and an out-of-sample set. The in-sample set contains \(75\%\) of the total dataset (from 1 January 1962 to 1 March 1973) and the out-of-sample set the remaining \(25\%\) (from 1 April 1973 to 1 March 1975, i.e., a horizon of two years). This period corresponds to the Great Inflation in the US. More precisely, the ex-post real interest rate \(Y_t\) (depicted in Fig. 7a) is computed as the difference between the logarithm of the annualized nominal funds rate and the logarithm of the annualized percentage inflation rate \(\mathcal {I}_t\) (depicted in Fig. 7b), obtained from the consumer price index for all urban consumers. The data are available from Federal Reserve Economic Data (FRED). For these data, we consider two of the models previously studied: the Gaussian AR model and the SV model.

Let \(\theta =(\alpha ,\phi ,\sigma ^2_{\eta })\) denote the vector of unknown parameters. We proceed in two stages. First, we estimate \(\theta \) on the in-sample set by minimizing the contrast introduced in this paper. Second, we forecast the ex-ante real rate and the expected inflation rate on the out-of-sample set by running a Kalman filter with the estimate \(\widehat{\theta }\) obtained in the first stage. All forecasts are therefore computed with the pseudo out-of-sample method.
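The second stage can be sketched for the Gaussian AR specification only, under the assumption that (12) reads \(Y_t=\alpha +X_t+\varepsilon _t\), \(X_{t+1}=\phi X_t+\eta _{t+1}\), which is consistent with \(X_t=Y^{e}_t-\alpha \) and \(\varepsilon _t=\mathcal {I}^{e}_t-\mathcal {I}_t\) above. The one-step predictions \(\widehat{X}_{t\mid t-1}\) give the ex-ante real rate forecast \(\alpha +\widehat{X}_{t\mid t-1}\) and, since \(\mathcal {I}^{e}_t=\mathcal {R}_t-Y^{e}_t\), the expected inflation forecast \(\widehat{\mathcal {I}}^{e}_t=\mathcal {R}_t-\alpha -\widehat{X}_{t\mid t-1}\). The value of \(\sigma ^2_{\varepsilon }\) has to be supplied; the function names are hypothetical.

```python
import numpy as np


def kalman_one_step_predictions(y, alpha, phi, sigma2_eta, sigma2_eps):
    """E[X_t | Y_1, ..., Y_{t-1}] for Y_t = alpha + X_t + eps_t, X_{t+1} = phi X_t + eta_{t+1}."""
    z = np.asarray(y, dtype=float) - alpha        # z_t = X_t + eps_t
    m, p = 0.0, sigma2_eta / (1.0 - phi**2)       # stationary prior for X_1
    preds = np.empty_like(z)
    for t, obs in enumerate(z):
        preds[t] = m                              # prediction made before seeing Y_t
        k = p / (p + sigma2_eps)                  # Kalman gain
        m_filt, p_filt = m + k * (obs - m), (1.0 - k) * p
        m, p = phi * m_filt, phi**2 * p_filt + sigma2_eta
    return preds


def expected_inflation_forecast(nominal_rate, x_pred, alpha):
    """I^e_t = R_t - alpha - X_{t|t-1}, from Y^e_t = R_t - I^e_t and X_t = Y^e_t - alpha."""
    return np.asarray(nominal_rate, dtype=float) - alpha - np.asarray(x_pred, dtype=float)
```

When \(\sigma ^2_{\varepsilon }\) is close to zero, the filter trusts the observations and the prediction reduces to \(\phi (Y_{t-1}-\alpha )\), which is a convenient sanity check.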

The first-stage estimates are \(\hat{\alpha }_{\mathrm {AR}}=1.5699\), \(\hat{\alpha }_{\mathrm {SV}}=2.1895\), \(\hat{\phi }_{\mathrm {AR}}=0.5750, \hat{\phi }_{\mathrm {SV}}=0.8500, \hat{\sigma }^2_{\mathrm {AR}}=4.4048\) and \(\hat{\sigma }^2_{\mathrm {SV}}=3.1756\), and the resulting out-of-sample forecasts are shown in Fig. 8. A first examination of these results shows that the forecast expected inflation series is plausible for both models. Nevertheless, the SV model performs better: its Mean Squared Forecast Error is ten times smaller than that of the AR model. The Lilliefors test (Lilliefors 1967), a variant of the Kolmogorov-Smirnov test for the case where some parameters of the distribution must be estimated, as here, suggests that during the Great Inflation period the data are no longer Gaussian and rather follow a heavy-tailed distribution: the null hypothesis of Gaussianity is rejected at the 5% level. This may explain why the SV model performs significantly better than the AR model. For both models, the mean of the forecast error \(\widehat{e}_t=\mathcal {I}_t-\widehat{\mathcal {I}}^{e}_t\) is close to zero and the Ljung-Box test does not reject the null hypothesis of no autocorrelation, so there is no evidence that the forecast errors are correlated. The correlograms of the two models are given in Fig. 9. These results are consistent with the rational expectations hypothesis. Comparing the parameter estimates of the two models, the persistence parameter \(\phi \) is higher for the SV model than for the AR model, whereas the variance is lower. Therefore, for the SV model, the variance of \(\widehat{\mathcal {I}}^{e}_t\) is smaller than that of \(\mathcal {I}_t\). These results are consistent with the economically intuitive notion that expectations are smoother than realizations.
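The MSFE and the Ljung-Box test used above are straightforward to compute. The sketch below implements the standard Ljung-Box statistic \(Q=n(n+2)\sum _{k=1}^{h}\hat{\rho }_k^2/(n-k)\), compared with a \(\chi ^2(h)\) distribution, directly with NumPy and SciPy. The number of lags h used in the paper is not stated, so it is left to the user; the Lilliefors test is not reproduced, and the function names are illustrative.

```python
import numpy as np
from scipy.stats import chi2


def ljung_box(e, h):
    """Ljung-Box statistic Q = n(n+2) sum_{k=1}^{h} rho_k^2 / (n-k) and its chi2(h) p-value."""
    e = np.asarray(e, dtype=float)
    n = e.size
    c = e - e.mean()
    denom = np.dot(c, c)
    lags = np.arange(1, h + 1)
    # Sample autocorrelations at lags 1..h.
    rho = np.array([np.dot(c[:-k], c[k:]) / denom for k in lags])
    q = n * (n + 2) * np.sum(rho**2 / (n - lags))
    return q, chi2.sf(q, df=h)


def msfe(actual, forecast):
    """Mean squared forecast error."""
    diff = np.asarray(actual, dtype=float) - np.asarray(forecast, dtype=float)
    return np.mean(diff**2)
```

A large p-value means the test does not reject the absence of autocorrelation up to lag h; it does not prove that the errors are uncorrelated.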
Most importantly, these results corroborate those of the thorough analysis in Stock and Watson (2007) and Pivetta and Reis (2007), whose findings show that the persistence parameter is high and close to one for this period of study.

6 Conclusion

In this paper, we propose a new parametric estimation strategy for non-linear and non-Gaussian HMMs, inspired by Lacour (2008b). We also provide an analytical expression of the asymptotic variance matrix \(\Sigma (\hat{\theta }_{n})\), which allows us to construct confidence intervals. This methodology makes it possible to bypass the MLE, which is known to be difficult to compute for these models. Our approach is not based on MC methods (MCMC or particle filtering), and thus avoids the instability that MC errors cause in most of the proposed methods when the criterion is optimized (see Doucet et al. (2001)). The parameter estimation step in HMMs is very important, since El Kolei and Patras (2018) show that the bias in the parameters propagates to the estimation of the latent variable. This cannot be overlooked in models where the predicted latent variable is used to make policy decisions. In this paper, for example, we studied the prediction of ex-ante interest rates and found that during periods of high inflation, the annualized inflation rate has a heavy-tailed distribution. In this context, the SV model therefore seems more appropriate and gives better results. Nevertheless, since this model is no longer Gaussian, its MLE cannot be computed with the Kalman filter, and it seems important to study other estimators. We provide a new and simple way to estimate the parameters in both Gaussian and non-Gaussian settings, which offers an alternative to the estimation methods proposed in the literature, most of which rely on MC methods.

7 Proofs

7.1 Proof of Theorem 3.1

For the reader’s convenience, we split the proof of Theorem 3.1 into three parts: in Sect. 6.1.1, we give the proof of the existence of our contrast estimator defined in (3). In Sect. 6.1.2, we prove consistency, that is, the first part of Theorem 3.1. Then, we prove the asymptotic normality of our estimator in Sect. 6.1.3, that is, the second part of Theorem 3.1. Section 6.2 is devoted to Corollary 3.1.

7.1.1 Existence of the M-estimator

By assumption, the function \(m_{\theta }(\mathbf {y}_{i})=Q_{\Pi ^{2}_{\theta }}(y_i)-2 V_{\Pi _{\theta }}(\mathbf {y}_i)\) is continuous w.r.t. \(\theta \). Hence, the function \(\mathbf {P}_{n}m_{\theta }=\frac{1}{n}\sum _{i=1}^{n}m_{\theta }(\mathbf {Y}_i)\) is continuous w.r.t. \(\theta \) on the compact subset \(\Theta \), so it attains its infimum: there exists \(\tilde{\theta }\in \Theta \) such that \(\inf _{\theta \in \Theta }\mathbf {P}_{n}m_{\theta }=\mathbf {P}_{n}m_{\tilde{\theta }}\). \(\square \)

7.1.2 Consistency

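For the consistency of our estimator, we need the uniform convergence result given in the following lemma. To this end, consider the quantities

$$\begin{aligned} \mathbf {P}_{n}h_{\theta }=\frac{1}{n}\sum _{i=1}^{n}h_{\theta }(Y_i) \quad \text {and}\quad \mathbf {P}h_{\theta }=\mathbb {E}\left[ h_{\theta }(Y_1)\right] , \end{aligned}$$

where \(h_{\theta }(y)\) is a real-valued function defined on \(\Theta \times \mathcal {Y}\).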

Lemma 6.1

Uniform Law of Large Numbers (see Newey and McFadden (1994a) for the proof). Let \((Y_i)_{i\ge 1}\) be an ergodic stationary process and suppose that:

1. \(h_{\theta }(y)\) is continuous in \(\theta \) for all y and measurable in y for all \(\theta \) in the compact subset \(\Theta \).

2. There exists a function s(y) (called the dominating function) such that \(\left| h_{\theta }(y)\right| \le s(y)\) for all \(\theta \in \Theta \) and \(\mathbb {E}[s(Y_1)]<\infty \).

Then

$$\begin{aligned} \sup _{\theta \in \Theta }\left| \mathbf {P}_{n}h_{\theta }-\mathbf {P}h_{\theta }\right| \longrightarrow 0 \quad \text {in probability as } n\rightarrow \infty . \end{aligned}$$

Moreover, \(\mathbf {P}h_{\theta }\) is a continuous function of \(\theta \).

By assumption \(\Pi _{\theta }\) is continuous w.r.t. \(\theta \) for any x and measurable w.r.t. x for all \(\theta \) which implies the continuity and the measurability of the function \(\mathbf {P}_{n}m_{\theta }\) on the compact subset \(\Theta \). Furthermore, the local dominance assumption A3(i) implies that \(\mathbb {E}\left[ \sup _{\theta \in \Theta }\left| m_{\theta }(\mathbf {Y}_i)\right| \right] \) is finite. Indeed, by assumption A3(i), we have

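Lemma 6.1 gives the uniform convergence in probability of the contrast function: for any \(\varepsilon >0\),

$$\begin{aligned} \lim _{n\rightarrow \infty }\mathbb {P}\left( \sup _{\theta \in \Theta }\left| \mathbf {P}_{n}m_{\theta }-\mathbf {P}m_{\theta }\right| >\varepsilon \right) =0. \end{aligned}$$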

Combining this uniform convergence with Theorem 2.1 of Newey and McFadden (1994b, Chapter 36, p. 2121) yields the weak consistency (i.e., convergence in probability) of the estimator. \(\square \)

7.1.3 Asymptotic normality

For the CLT, we need to define the \(\alpha \)-mixing property of a process (we refer the reader to Doukhan (1994) for a complete review of mixing processes).

Definition 6.1

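(\(\alpha \)-mixing (strongly mixing) process) Let \(Y:=(Y_{i})_i\) denote a general sequence of random variables on a probability space \((\Omega , \mathcal {F}, \mathbb {P}_{\theta })\) and let \(\mathcal {F}_{k}^{m}=\sigma (Y_{k}, \ldots , Y_m)\). The sequence Y is said to be \(\alpha \)-mixing if \(\alpha (n)\rightarrow 0\) as \(n \rightarrow \infty \), where

$$\begin{aligned} \alpha (n)=\sup _{k\ge 1}\ \sup _{A\in \mathcal {F}_{1}^{k},\, B\in \mathcal {F}_{k+n}^{\infty }}\left| \mathbb {P}_{\theta }(A\cap B)-\mathbb {P}_{\theta }(A)\mathbb {P}_{\theta }(B)\right| . \end{aligned}$$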

The proof of the CLT is based on the following Lemma.

Lemma 6.2

Suppose that the conditions for consistency hold. Suppose further that:

(i) \((\mathbf {Y}_i)_i\) is \(\alpha \)-mixing.

(ii) (Moment condition): for some \(\delta >0\) and for each \(j\in \left\{ 1,\ldots ,r\right\} \),

$$\begin{aligned} \mathbb {E}\left[ \left| \frac{\partial m_{\theta }(\mathbf {Y}_{1})}{\partial \theta _j}\right| ^{2+\delta }\right] <\infty . \end{aligned}$$

(iii) (Hessian local condition): for some neighborhood \(\mathcal {U}\) of \(\theta _0\) and for \(j,k\in \left\{ 1,\ldots , r\right\} \),

$$\begin{aligned} \mathbb {E}\left[ \sup _{\theta \in \mathcal {U} }\left| \frac{\partial ^2m_{\theta }(\mathbf {Y}_{1})}{\partial \theta _j \partial \theta _k}\right| \right] < \infty . \end{aligned}$$

Then, \(\widehat{\theta }_{n}\) defined in (4) is asymptotically normal with asymptotic covariance matrix given by

where \(\mathcal {V}_{\theta _0}\) is the Hessian of the mapping \(\mathbf {P}m_{\theta }\) given in (3).

Proof

The proof follows from Hayashi (2000) (Proposition 7.8, p. 472) and Jones (2004), and by using the fact that, by regularity assumptions A1(i) and the Lebesgue Differentiation Theorem, we have \(\mathbb {E}[\nabla _{\theta }^{2}m_{\theta }(\mathbf {Y}_1)]=\nabla _{\theta }^{2}\mathbb {E}[m_{\theta }(\mathbf {Y}_1)]\). \(\square \)

It remains to check that conditions (ii) and (iii) of Lemma 6.2 hold under Assumptions A3(ii) and A3(iii).

(ii): Since \(\Pi _{\theta }\), and hence \(\Pi ^2_{\theta }\), is twice continuously differentiable w.r.t. \(\theta \) for all \(\mathbf {y}_{i}\in \mathbb {R}^2\), the mapping \(\theta \in \Theta \mapsto m_{\theta }(\mathbf {y}_{i})=Q_{\Pi ^{2}_{\theta }}(y_i)-2 V_{\Pi _{\theta }}(\mathbf {y}_i)\) is twice continuously differentiable on \(\Theta \), and its first derivatives are given by

By assumption, for each \(j\in \left\{ 1,\ldots ,r\right\} \), \(\frac{\partial \Pi _{\theta }}{\partial \theta _j}\) and \(\frac{\partial \Pi ^2_{\theta }}{\partial \theta _j}\) belong to \(\mathbb {L}_{1}(A)\), therefore one can apply the Lebesgue Differentiation Theorem and Fubini Theorem to obtain

Then, for some \(\delta >0\), by the moment assumption A3(ii), we have

where \(C_{1}\) and \(C_{2}\) denote two positive constants.

(iii) For \(j,k \in \left\{ 1,\ldots ,r\right\} \), since \(\frac{\partial ^2 \Pi _{\theta }}{\partial \theta _j \partial \theta _k}\) and \(\frac{\partial ^2 \Pi ^2_{\theta }}{\partial \theta _j \partial \theta _k}\) belong to \(\mathbb {L}_{1}(A)\), the Lebesgue Differentiation Theorem gives

and, for some neighborhood \(\mathcal {U}\) of \(\theta _0\), by the local dominance assumption A3(iii),

This ends the proof of Theorem 3.1. \(\square \)

7.2 Proof of Corollary 3.1

By replacing \(\nabla _{\theta }m_{\theta }(\mathbf {Y}_{1})\) by its expression (13), we have, for \(j=1\),

Owing to Lemma 3.1, we obtain

In a similar manner, using again Lemma 3.1, we have

and

Hence

For \(j=2\), we have

where the different terms are obtained from Lemma 3.1. Thus

Now, by using the stationarity assumption A1(iv) of \((X_i)_{i\ge 1}\) we obtain that

Computation of the covariance matrix of Corollary 3.1 for \(j>2\): By replacing \(\nabla _{\theta }m_{\theta }(\mathbf {Y}_{1})\) by its expression (13), we have

It follows from Lemma 3.1 and the stationarity assumption A1(iv) of \((X_i)_{i\ge 1}\) that

Moreover

Hence

On the other hand, we have

Furthermore, conditioning on \(X_{1:n}\) and using the tower property, we obtain

Similarly, we have

Note that, for \(j>2\), the stationarity of \((X_i)_{i\ge 1}\) implies that \(\mathbb {E}[Q_{\nabla _{\theta }\Pi ^{2}_{\theta }}(Y_1)V_{\nabla _{\theta }\Pi _{\theta }}(\mathbf {Y}_j)]=\mathbb {E}[Q_{\nabla _{\theta }\Pi ^{2}_{\theta }}(Y_j)V_{\nabla _{\theta }\Pi _{\theta }}(\mathbf {Y}_1)]\). Hence,

By using Lemma 3.1, the last term is equal to

Therefore, the covariance matrix is given by

Thus

Expression of the Hessian matrix \(\mathcal {V}_{\theta }\): We have

Under A1(i), \(\forall \theta \) in \(\Theta \), the mapping \(\theta \mapsto \mathbf {P}m_{\theta }\) is twice differentiable w.r.t. \(\theta \) on the compact subset \(\Theta \). For \(j\in \left\{ 1,\ldots ,r\right\} \), at the point \(\theta =\theta _{0}\), we have

and for \(j,k \in \left\{ 1,\ldots ,r\right\} \):

The proof of Corollary 3.1 is completed. \(\square \)

7.3 Contrast and checking assumptions for the simulations

Contrasts for the linear AR simulations. To compute the contrasts defined in Proposition 4.1, the following quantities are required: \((\Pi ^2_{\theta }(x,0))^*, \Pi ^*_{\theta }(x,y)\) and \(f^{*}_{\varepsilon }(x)\). For the model defined in (8), the square of the transition density is, up to the multiplicative constant \(1/(2\sqrt{\pi \sigma ^2_{\eta }})\), a Gaussian density with mean \(\phi x\) and variance \(\sigma ^2_{\eta }/2\). Hence, we need to compute the following Fourier transform:

Integrating this Gaussian density gives \(\tilde{\Pi }_{\theta }(x)=1/\left( 2\sqrt{\pi \sigma ^2_{\eta }}\right) \) for all x, which belongs to \(\mathbb {L}_{1}(A)\). Nevertheless, for cases 1 and 3 (super smooth noises), Assumptions A2 and A3(i)–(iii) are not satisfied, since \( x \mapsto (\tilde{\Pi }_{\theta }(x))^{*}/f^{*}_{\varepsilon }(x)\) is not integrable even though the numerator and the denominator can be integrated separately. In this case, we introduce a weight function \(\varphi \) belonging to \(\mathcal {S}(\mathbb {R})\), the Schwartz space of functions defined by \(\mathcal {S}(\mathbb {R})=\{ f \in \mathcal {C}^{\infty }(\mathbb {R}) : \forall \alpha , N,\ \exists \, C_{N,\alpha } \text { such that } \vert \nabla ^{\alpha }_{x} f(x)\vert \le C_{N,\alpha }(1+\vert x\vert )^{-N} \}\).
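The two facts used above, that the squared Gaussian transition density equals \(1/(2\sqrt{\pi \sigma ^2_{\eta }})\) times a Gaussian density with variance \(\sigma ^2_{\eta }/2\), and that its integral in y is therefore \(1/(2\sqrt{\pi \sigma ^2_{\eta }})\), can be checked numerically. The parameter values below are arbitrary illustrative choices.

```python
import numpy as np


def gauss_pdf(y, mean, var):
    return np.exp(-((y - mean) ** 2) / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)


phi, sigma2_eta, x = 0.7, 0.8, 1.3           # arbitrary illustrative values
y = np.linspace(-10.0, 10.0, 20001)
squared = gauss_pdf(y, phi * x, sigma2_eta) ** 2
rescaled = gauss_pdf(y, phi * x, sigma2_eta / 2.0) / (2.0 * np.sqrt(np.pi * sigma2_eta))
max_abs_diff = np.max(np.abs(squared - rescaled))    # pointwise identity
integral = np.sum(squared) * (y[1] - y[0])          # Riemann sum; tails are negligible
```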

Hence, \(\forall \varphi \in \mathcal {S}(\mathbb {R})\), we have

where \(\delta _x\) is the Dirac distribution at point x.

Hence, by taking \(\varphi : u \mapsto \tilde{\varphi }(u)e^{ixu}/f^*_{\varepsilon }(-u) \in \mathcal {S}(\mathbb {R})\) with \( \tilde{\varphi }: u \mapsto 2\pi e^{-\sigma ^2_{\varepsilon }u^2}\), we obtain the operator Q as follows

where \(\varphi (0)=1\) for all cases in Sect. 4. Here, we take \(\tilde{\varphi }\) dependent on \(\sigma ^2_{\varepsilon }\) since we assume that this variance is known, but one can take any function \(\tilde{\varphi }\) such that \(\tilde{\varphi }/f^*_{\varepsilon }\) is in \(\mathcal {S}\).

For \(\Pi ^*_{\theta }(x,y)\), we proceed analogously: for all u, let \(\Pi _{u,\theta }\) denote the function \(v \mapsto \Pi _{\theta }(u,v)\). For the Gaussian transition density \(\Pi _{\theta }\), we have, for all u,

Let \(\Pi _{y,\theta }(u)\) be the function \(u \mapsto (\Pi _{u,\theta }(y))^{*}\) \(\forall y\). Then, we have \(\forall \varphi \in \mathcal {S}\) and \(\forall y\)

Hence, for case 1, the operator \(V_{\Pi _{\theta }}\) is obtained as follows:

where \(\varphi : u \mapsto e^{ixu}\tilde{\varphi }_1(u)/f^*_{\varepsilon }(-u)\) with \( \tilde{\varphi }_1: u \mapsto 2\pi e^{-\sigma ^2_{\varepsilon }u^2}\) and \(\tilde{\varphi }_2: v \mapsto e^{-ivy-\sigma ^2_{\varepsilon }v^2}\) and such that \(\varphi , \varphi _1\) and \(\varphi _2 \in \mathcal {S}\). For the cases 2 and 3, one can make the same computations by replacing \(f^{*}_{\varepsilon }\) by its expression given in Sect. 4.

Checking assumptions A1–A3. By inspecting the function \(b_{\theta _0}:x \mapsto \phi _0 x\), one easily sees that the regularity assumptions are satisfied and that, if \(|\phi _0|<1\), the process is strictly stationary. It remains to check Assumptions A1(iv) and A2–A3. The strict convexity of the function \(\mathbf {P}m_{\theta }\) implies that \(\theta _0\) is a minimum, and checking Assumption A1(iv) also requires computing the Hessian matrix, which belongs to \(\mathcal {S}^{ym}_{2\times 2}\) (where \(\mathcal {S}^{ym}\) denotes the space of symmetric matrices). The stationary density \(f_{\theta _0}\) is here a Gaussian density with zero mean and variance \(\sigma ^{2}_{0,\eta }/(1-\phi _0^2)\), so the Hessian matrix \(\mathcal {V}_{\theta _0}\) is given by

(see Corollary 3.1). Nevertheless, we assume here that \(f_{\theta _0}\) is unknown, so the Hessian matrix is consistently estimated by

The computation of this matrix can be easily done for Gaussian AR processes whatever the noises since all derivatives of the Gaussian densities are explicit.

As pointed out above, the integrability Assumptions A2 and A3(i) and (iii) are not satisfied for Gaussian AR processes with super smooth noises (cases 1 and 3), so a weight function \(\varphi \) belonging to the Schwartz space \(\mathcal {S}\) must be introduced in practice. On the other hand, for Laplace noise the modulus of the Fourier transform of \(f_{\varepsilon }\) decays to zero only polynomially, and the functions \((\Pi _{\theta }^{*}/f^*_{\varepsilon })\), \(({\partial \Pi _{\theta }}/{\partial \theta _{j}})^{*}/f^*_{\varepsilon }\) and \(({\partial ^{2} \Pi _{\theta }}/{\partial \theta _{j}\partial \theta _{l}})^{*}/f^*_{\varepsilon }\) have the form \(C_1(\theta )P(x)\exp (-C_2(\theta )x^2)\) (so they are super smooth and hence integrable), where \(C_1(\theta )\) and \(C_2(\theta )\) are two constants well defined on the compact parameter set \(\Theta \) and P(x) is a polynomial independent of \(\theta \). Hence, the moment conditions and the local dominance condition are satisfied.
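For Laplace noise, the polynomial-times-Gaussian form stated above can be checked numerically. This sketch assumes a centered Laplace noise with variance \(\sigma ^2_{\varepsilon }\), whose characteristic function is \(1/(1+\sigma ^2_{\varepsilon }t^2/2)\), and a Gaussian transition with variance \(\sigma ^2_{\eta }\), so that \(\vert \Pi ^{*}_{\theta }(x,t)\vert =e^{-\sigma ^2_{\eta }t^2/2}\) whatever x. The modulus of the ratio is then \((1+\sigma ^2_{\varepsilon }t^2/2)e^{-\sigma ^2_{\eta }t^2/2}\), whose integral is \(\sqrt{2\pi /\sigma ^2_{\eta }}\,(1+\sigma ^2_{\varepsilon }/(2\sigma ^2_{\eta }))\). Function names are illustrative.

```python
import numpy as np


def laplace_cf(t, sigma2_eps):
    """Characteristic function of a centered Laplace law with variance sigma2_eps."""
    # Laplace(0, b) has variance 2 b^2 and characteristic function 1 / (1 + b^2 t^2).
    return 1.0 / (1.0 + 0.5 * sigma2_eps * t**2)


def ratio_modulus(t, sigma2_eta, sigma2_eps):
    """|Pi*_theta(x, t)| / f*_eps(t) for a Gaussian transition with variance sigma2_eta."""
    return np.exp(-0.5 * sigma2_eta * t**2) / laplace_cf(t, sigma2_eps)


def integral_check(sigma2_eta, sigma2_eps, t_max=60.0, n_grid=120001):
    """Numerical integral of the ratio modulus versus its closed form."""
    t = np.linspace(-t_max, t_max, n_grid)
    numeric = np.sum(ratio_modulus(t, sigma2_eta, sigma2_eps)) * (t[1] - t[0])
    closed = np.sqrt(2.0 * np.pi / sigma2_eta) * (1.0 + sigma2_eps / (2.0 * sigma2_eta))
    return numeric, closed
```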

Contrasts for the nonlinear AR simulations. Consider the nonlinear process in (11). For this model, the transition density is Gaussian with mean \(b_{\theta _0}(x)=\phi _{0}\sin (x)\) and variance \(\sigma ^2_{0,\eta }\). Similarly, the square of the transition density is, up to the normalization constant \(1/(2\sqrt{\pi }\sigma _{0,\eta })\), a Gaussian density with the same mean \(b_{\theta _0}(x)\) and variance \(\sigma ^2_{0,\eta }/2\). Hence, the computation of the operator \(Q_{\Pi ^2_{\theta }}\) remains unchanged, and the operator \(V_{\Pi _{\theta }}\) still has to be computed. Because the drift function is nonlinear, this operator does not admit an explicit form and is given by

where \(\varphi : u \mapsto 2\pi e^{ixu} e^{-\sigma ^2_{\varepsilon }u^2}/f^*_{\varepsilon }(-u)\) and \(\tilde{\varphi }_2: v \mapsto e^{-ivy-\sigma ^2_{\varepsilon }v^2}\).
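The identity used above for the squared transition density can be checked pointwise. The sketch below assumes the transition density of the nonlinear model is the Gaussian density with mean \(\phi \sin (x)\) and standard deviation \(\sigma \) (written `sigma` for \(\sigma _{\eta }\)), and compares \(\Pi _{\theta }(x,x')^2\) with \(1/(2\sqrt{\pi }\sigma )\) times the Gaussian density with the same mean and variance \(\sigma ^2/2\). Parameter values and function names are illustrative.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def squared_transition(x, x_next, phi, sigma):
    """Pi_theta(x, x')^2 for the nonlinear AR model with drift phi*sin(x)."""
    return gaussian_pdf(x_next, phi * math.sin(x), sigma ** 2) ** 2

def rescaled_gaussian(x, x_next, phi, sigma):
    """1/(2 sqrt(pi) sigma) times the N(phi*sin(x), sigma^2/2) density at x'."""
    const = 1.0 / (2.0 * math.sqrt(math.pi) * sigma)
    return const * gaussian_pdf(x_next, phi * math.sin(x), sigma ** 2 / 2.0)

for x, x_next in [(0.0, 0.1), (1.3, -0.4), (-2.0, 0.9)]:
    print(squared_transition(x, x_next, 0.8, 0.6),
          rescaled_gaussian(x, x_next, 0.8, 0.6))
```

Because the drift enters only through the mean, the identity holds for any measurable \(b_{\theta }\), which is why the treatment of \(Q_{\Pi ^2_{\theta }}\) carries over from the linear case.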

Notes

We refer the reader to Doukhan (1994) for the proof that if \((X_i)_i\) is an ergodic process, then the process \((Y_i)_i\), which is the sum of an ergodic process and an i.i.d. noise, is again stationary and ergodic. Moreover, by the definition of an ergodic process, if \((Y_i)_i\) is ergodic, then the pair \(\mathbf {Y}_i=(Y_i, Y_{i+1})\) inherits this property (see Genon-Catalot et al. (2000)).
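As an informal illustration of this note, the sketch below simulates \(Y_i = X_i + \varepsilon _i\) with a stationary Gaussian AR(1) chain \(X_{i+1}=\phi X_i+\sigma _{\eta }\eta _{i+1}\) and, as an assumption made only for this example, Gaussian measurement noise \(\varepsilon _i\). Since the pair \((Y_i, Y_{i+1})\) is ergodic, the time average of \(Y_iY_{i+1}\) should approach \(\mathbb {E}[Y_1Y_2]=\phi \sigma ^2_{\eta }/(1-\phi ^2)\), the noise terms vanishing by independence. Function names and parameter values are hypothetical; a simulation illustrates, but does not prove, ergodicity.

```python
import math
import random

def simulate_hidden_ar1(n, phi, sigma_eta, sigma_eps, seed=0):
    """Simulate Y_i = X_i + eps_i with X_{i+1} = phi X_i + sigma_eta eta_{i+1}.

    Assumptions for illustration: eta and eps are i.i.d. Gaussian, and X_1 is
    drawn from the stationary N(0, sigma_eta^2 / (1 - phi^2)) distribution.
    """
    rng = random.Random(seed)
    x = rng.gauss(0.0, sigma_eta / math.sqrt(1.0 - phi * phi))
    ys = []
    for _ in range(n):
        ys.append(x + rng.gauss(0.0, sigma_eps))  # observation = state + noise
        x = phi * x + rng.gauss(0.0, sigma_eta)   # hidden AR(1) transition
    return ys

def lag1_average(ys):
    """Time average of Y_i * Y_{i+1}, a function of the pair (Y_i, Y_{i+1})."""
    return sum(a * b for a, b in zip(ys, ys[1:])) / (len(ys) - 1)

phi, sigma_eta, sigma_eps = 0.5, 1.0, 0.5
ys = simulate_hidden_ar1(200_000, phi, sigma_eta, sigma_eps, seed=1)
# Independence of the noise gives E[Y_i Y_{i+1}] = phi * Var(X_i).
target = phi * sigma_eta ** 2 / (1.0 - phi ** 2)
print(lag1_average(ys), target)
```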

We argue that our approach can also be applied when a long-run mean parameter \(\mu \) is introduced in the volatility process.

References

Andrieu C, Doucet A, Holenstein R (2010) Particle Markov chain Monte Carlo methods. J R Stat Soc B 72(3):269–342

Bos CS, Shephard N (2006) Inference for adaptive time series models: stochastic volatility and conditionally Gaussian state space form. Econom Rev 25(2–3):219–244

Brunel E, Comte F, Lacour C (2007) Adaptive estimation of the conditional density in the presence of censoring. Sankhyā 69:734–763

Burmeister E, Wall KD (1982) Kalman filtering estimation of unobserved rational expectations with an application to the German hyperinflation. J Econom 20(2):255–284

Burmeister E, Wall KD, Hamilton JD (1986) Estimation of unobserved expected monthly inflation using Kalman filtering. J Bus Econ Stat 4(2):147–160

Butucea C, Matias C (2005) Minimax estimation of the noise level and of the deconvolution density in a semiparametric convolution model. Bernoulli 11(2):309–340

Chan JC (2017) The stochastic volatility in mean model with time-varying parameters: an application to inflation modeling. J Bus Econ Stat 35(1):17–28

Chanda KC (1995) Large sample analysis of autoregressive moving-average models with errors in variables. J Time Ser Anal 16(1):1–15

Chopin N, Jacob PE, Papaspiliopoulos O (2013) SMC\(^2\): an efficient algorithm for sequential analysis of state space models. J R Stat Soc B 75(3):397–426

Comte F, Taupin M-L (2001) Semiparametric estimation in the (auto)-regressive \(\beta \)-mixing model with errors-in-variables. Math Methods Stat 10(2):121–160

Costa M, Alpuim T (2010) Parameter estimation of state space models for univariate observations. J Stat Plann Inference 140(7):1889–1902

Dedecker J, Samson A, Taupin M-L (2014) Estimation in autoregressive model with measurement error. ESAIM Prob Stat 18:277–307

Delaigle A, Hall P (2016) Methodology for non-parametric deconvolution when the error distribution is unknown. J R Stat Soc B 231–252

Delyon B, Lavielle M, Moulines E (1999) Convergence of a stochastic approximation version of the em algorithm. Ann Stat 94–128

Douc R, Moulines E, Olsson J, van Handel R (2011) Consistency of the maximum likelihood estimator for general hidden Markov models. Ann Stat 39(1):474–513

Doucet A, De Freitas N, Gordon N (2001) An introduction to sequential Monte Carlo methods. In: Sequential Monte Carlo methods in practice, pp 3–14. Springer

Doukhan P (1994) Mixing: properties and examples, volume 85 of Lecture notes in statistics. Springer, New York

Ebner B, Klar B, Meintanis SG (2018) Fourier inference for stochastic volatility models with heavy-tailed innovations. Stat Pap 59(3):1043–1060

Efromovich S (1997) Density estimation for the case of supersmooth measurement error. J Am Stat Assoc 92(438):526–535

El Kolei S (2013) Parametric estimation of hidden stochastic model by contrast minimization and deconvolution. Metrika 76(8):1031–1081

El Kolei S, Patras F (2018) Analysis, detection and correction of misspecified discrete time state space models. J Comput Appl Math 333:200–214

El Kolei S, Pelgrin F (2017) Parametric inference of autoregressive heteroscedastic models with errors in variables. Stat Probab Lett 130:63–70

Fan J, Truong YK, Wang Y (1991) Nonparametric function estimation involving errors-in-variables. In: Nonparametric functional estimation and related topics, pp 613–627. Springer

Fan G-L, Liang H-Y, Wang J-F (2013) Empirical likelihood for heteroscedastic partially linear errors-in-variables model with \(\alpha \)-mixing errors. Stat Pap 54(1):85–112

Genon-Catalot V, Jeantheau T, Larédo C (2000) Stochastic volatility models as hidden Markov models and statistical applications. Bernoulli 6(6):1051–1079

Ghysels E, Harvey AC, Renault E (1996) Stochastic volatility. Handb Stat 14:119–191

Hamilton JD (1994a) State-space models. Handb Econ 4:3039–3080

Hamilton JD (1994b) Time series analysis, vol 2. Princeton University Press, Princeton

Hannan EJ, Rissanen J (1982) Recursive estimation of mixed autoregressive-moving average order. Biometrika 69(1):81–94

Hayashi F (2000) Econometrics. Princeton University Press, Princeton

Holston K, Laubach T, Williams JC (2017) Measuring the natural rate of interest: international trends and determinants. J Int Econ 108:S59–S75

Jones GL (2004) On the Markov chain central limit theorem. Probab Surv 1:299–320

Kappus J, Mabon G (2014) Adaptive density estimation in deconvolution problems with unknown error distribution. Electron J Stat 8(2):2879–2904

Lacour C (2007) Adaptive estimation of the transition density of a Markov chain. Ann Inst Henri Poincaré Probab Stat 43:571–597

Lacour C (2008a) Adaptive estimation of the transition density of a particular hidden Markov chain. J Multivar Anal 99(5):787–814

Lacour C (2008b) Least squares type estimation of the transition density of a particular hidden Markov chain. Electron J Stat 2:1–39

Lacour C (2008c) Nonparametric estimation of the stationary density and the transition density of a Markov chain. Stoch Process Appl 118(2):232–260

Laubach T, Williams JC (2003) Measuring the natural rate of interest. Rev Econ Stat 85(4):1063–1070

Lilliefors HW (1967) On the Kolmogorov–Smirnov test for normality with mean and variance unknown. J Am Stat Assoc 62(318):399–402

Lindsten F (2013) An efficient stochastic approximation EM algorithm using conditional particle filters. In: 2013 IEEE international conference on acoustics, speech and signal processing, pp 6274–6278. IEEE

Meister A (2004) On the effect of misspecifying the error density in a deconvolution problem. Can J Stat 32(4):439–449

Miao Y, Zhao F, Wang K, Chen Y (2013) Asymptotic normality and strong consistency of LS estimators in the EV regression model with NA errors. Stat Pap 54(1):193–206

Mokkadem A et al (1987) Sur un modèle autorégressif non linéaire, ergodicité et ergodicité géométrique. J Time Ser Anal 8(2):195–204

Neumann MH, Hössjer O (1997) On the effect of estimating the error density in nonparametric deconvolution. J Nonparametric Stat 7(4):307–330

Newey WK, McFadden D (1994a) Large sample estimation and hypothesis testing. In: Handbook of econometrics, Vol. IV, volume 2 of Handbooks in econom., pp 2111–2245. North-Holland, Amsterdam

Newey WK, McFadden D (1994b) Large sample estimation and hypothesis testing. Handb Econ 4:2111–2245

Olsson J, Rydén T (2007) Particle filter-based approximate maximum likelihood inference asymptotics in state-space models. In: Conference Oxford sur les méthodes de Monte Carlo séquentielles, volume 19 of ESAIM Proc., pp 115–120. EDP Sci., Les Ulis

Omori Y, Chib S, Shephard N, Nakajima J (2007) Stochastic volatility with leverage: fast and efficient likelihood inference. J Econom 140(2):425–449

Pivetta F, Reis R (2007) The persistence of inflation in the United States. J Econ Dyn Control 31(4):1326–1358

Staudenmayer J, Buonaccorsi JP (2005) Measurement error in linear autoregressive models. J Am Stat Assoc 100(471):841–852

Stock JH, Watson MW (2007) Why has US inflation become harder to forecast? J Money Credit Bank 39:3–33

Taylor S (2005) Financial returns modelled by the product of two stochastic processes, a study of daily sugar prices, vol 1. Oxford University Press, Oxford, pp 203–226

Trapletti A, Hornik K (2019) tseries: time series analysis and computational finance. R package version 0.10-47

Van der Vaart AW (1998) Asymptotic statistics, vol 3. Cambridge series in statistical and probabilistic mathematics. Cambridge University Press, Cambridge

Zhou H, You J, Zhou B (2010) Statistical inference for fixed-effects partially linear regression models with errors in variables. Stat Pap 51(3):629–650

Cite this article

Chesneau, C., El Kolei, S. & Navarro, F. Parametric estimation of hidden Markov models by least squares type estimation and deconvolution. Stat Papers 63, 1615–1648 (2022). https://doi.org/10.1007/s00362-022-01288-x

DOI: https://doi.org/10.1007/s00362-022-01288-x