Abstract

In this article, a dynamic reliability measure based on ranked set sampling is introduced, and its properties are investigated theoretically and by simulation. The results support the use of the suggested index over its analogue under simple random sampling. A data set from an agricultural experiment is analyzed for illustration.


1 Introduction

Ranked set sampling (RSS) is a sampling design that often leads to improved statistical inference compared with simple random sampling (SRS). It is appropriate when the sampling units are expensive and/or difficult to measure but are reasonably simple and cheap to order according to the variable of interest. Ranking can be based on concomitant variables, expert judgment or any other means that does not involve actual measurement.

A basic description of the RSS procedure is as follows: \(n^2\) units are collected as iid draws from the population. These units are randomly partitioned into n sets, each of size n. In the first set, the unit judged to be the smallest is taken for full quantification; in the second set, the unit judged to be the second smallest is taken; and so on, until in the last set, the unit judged to be the largest is taken. The full measurements, along with the associated ranks, form a ranked set sample of size n. Let \(X_{[i]}\) be the ith judgment order statistic from the ith set (for clarity, the ith true order statistic is denoted by \(X_{(i)}\)). The resulting ranked set sample is then denoted by \(X_{[1]},\ldots ,X_{[n]}\). A ranked set sample, consisting of independent order statistics, is more informative than a simple random sample of the same size, and results in the literature show that statistical procedures based on RSS tend to be superior to their SRS analogues. For a book-length treatment of RSS and its applications, see Chen et al. (2004).
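The procedure above can be sketched in Python. This is a minimal illustration under our own assumptions, not code from the paper: `draw` is a hypothetical function returning one unmeasured population unit, and judgment ranking is mimicked by sorting each set on the unit value plus optional Gaussian noise (`rank_noise`, with 0 meaning perfect ranking). Drawing n fresh sets of size n is used in place of partitioning \(n^2\) iid units, which gives the same distribution.

```python
import random

def ranked_set_sample(draw, n, rank_noise=0.0):
    """Draw one ranked set sample of size n.

    draw: zero-argument function returning one population unit (hypothetical).
    rank_noise: s.d. of an assumed Gaussian judgment error added to each unit
        before ranking; 0.0 gives perfect ranking.
    """
    sample = []
    for i in range(n):
        # Set i + 1: n fresh iid units (same law as partitioning n^2 iid units).
        units = [draw() for _ in range(n)]
        # Rank the set by the cheap judgment only (value plus noise).
        judged = sorted(units, key=lambda u: u + random.gauss(0.0, rank_noise))
        # Fully measure only the unit judged to have rank i + 1.
        sample.append(judged[i])
    return sample

random.seed(2024)
print(ranked_set_sample(random.random, 4))                  # perfect ranking
print(ranked_set_sample(random.random, 4, rank_noise=0.2))  # imperfect ranking
```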

RSS was first introduced by McIntyre (1952) in an agricultural experiment for estimating the mean pasture yield. Since then, it has been widely adopted in environmental, ecological and health studies. Kvam and Samaniego (1994) provided an example in a reliability context. The lifetime of a k-out-of-n system whose components have independent and identically distributed lifetimes is equal to the \((n-k+1)\)th order statistic from a sample of size n. When independent lifetimes of several k-out-of-n systems with varying k and n are observed, the experimental data constitute a ranked set sample. In addition, when observations consist of lifetimes of coherent systems together with the number of failed components, the data are stochastically equivalent to a collection of independently observed order statistics. Thus RSS arises naturally in life testing experiments in which one observes the system lifetime rather than the lifetimes of the individual components.
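The order-statistic representation of a k-out-of-n system can be checked with a few lines of Python (a hypothetical helper, written for illustration): the system works while at least k components work, so it fails at the \((n-k+1)\)th smallest component lifetime.

```python
def k_out_of_n_lifetime(component_lifetimes, k):
    """Lifetime of a k-out-of-n system: it works while at least k components work."""
    n = len(component_lifetimes)
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    ordered = sorted(component_lifetimes)
    # The system fails at the (n - k + 1)th component failure, i.e. at the
    # (n - k + 1)th order statistic (0-based index n - k).
    return ordered[n - k]

lifetimes = [3.0, 1.0, 4.0, 1.5, 9.0]
print(k_out_of_n_lifetime(lifetimes, 5))  # series system: 1.0 (minimum)
print(k_out_of_n_lifetime(lifetimes, 1))  # parallel system: 9.0 (maximum)
print(k_out_of_n_lifetime(lifetimes, 3))  # 3-out-of-5 system: 3.0
```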

The estimation of system reliability has drawn much attention in the statistical literature. The most widely used approach for this purpose is the well-known stress-strength model. It employs \(R=P(X>Y)\) as an index to quantify the reliability of a component with strength X subjected to stress Y. The estimation of R has been extensively investigated when X and Y are independent random variables belonging to the same family of distributions. A comprehensive account of this topic appears in Kotz et al. (2003). For example, Díaz-Francés and Montoya (2013) discuss the profile likelihood method for inference on R when X and Y are independent exponential random variables.

Given that ranked set sample data can represent lifetimes, it is natural to study reliability measures under the RSS scheme. The interpretability of such indices in other disciplines (as exemplified in Sect. 6) provides further motivation. Sengupta and Mukhuti (2008) studied unbiased estimation of R based on RSS in a nonparametric setting and showed that their estimator is more efficient than its SRS rival, even in the presence of ranking errors. Here we introduce a time-dependent reliability measure in the RSS setting and investigate its theoretical properties.

Section 2 presents the new measure along with some notions and results that will be used in the sequel. Some theoretical results are established in Sect. 3. The perfect ranking setup is discussed in Sect. 4. Results of a Monte Carlo experiment conducted to gain insight into the behavior of the proposed index appear in Sect. 5. An application is discussed in Sect. 6. A summary and directions for future research are given in Sect. 7. Figures are deferred to an appendix.

2 Preliminaries

In reliability theory, there are several approaches to comparing the lifetimes of two components. The most popular methods compare the survival functions, the failure rates or the mean residual lifetime functions. Let the random variables X and Y be the lifetimes of two systems. The density, distribution and survival function of X (Y) are denoted by f (g), F (G) and \(\bar{F}=1-F (\bar{G}=1-G)\), respectively. Assume that both systems are operating at time \(t>0\). Then their residual lifetimes are \(X_t=(X-t|X>t)\) and \(Y_t=(Y-t|Y>t)\), respectively. Taking into account the age of the systems, Zardasht and Asadi (2010) introduced a time-dependent criterion to compare the two residual lifetimes. They considered the function \(R(t)=P(X_t>Y_t)\). Note that R(t) can be written as

$$R(t)=\frac{P(X>Y>t)}{P(X>t,\,Y>t)}=\frac{R_1(t)}{R_2(t)},$$

where \(R_1(t)=P(X>Y>t)\) and \(R_2(t)=P(X>t,Y>t)\). Using simple random samples \(X_1,\ldots ,X_m\) and \(Y_1,\ldots ,Y_n\) from F and G, R(t) can be estimated by

$$\hat{R}(t)=\frac{\hat{R}_1(t)}{\hat{R}_2(t)},$$

where

$$\hat{R}_1(t)=\frac{1}{mn}\sum _{i=1}^m\sum _{j=1}^n I\left( X_i>Y_j>t\right) $$

and

$$\hat{R}_2(t)=\frac{1}{mn}\sum _{i=1}^m\sum _{j=1}^n I\left( X_i>t\right) I\left( Y_j>t\right) .$$

Although \(\hat{R}_1(t)\) and \(\hat{R}_2(t)\) are respectively unbiased estimators of \(R_1(t)\) and \(R_2(t)\), \(\hat{R}(t)\) is only asymptotically unbiased.

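Let \(X_{[1]},\ldots ,X_{[m]}\) and \(Y_{[1]},\ldots ,Y_{[n]}\) be two ranked set samples from F and G, respectively. The estimator of R(t) in RSS is given by

$$\hat{R}^*(t)=\frac{\hat{R}_1^*(t)}{\hat{R}_2^*(t)},$$

where

$$\hat{R}_1^*(t)=\frac{1}{mn}\sum _{i=1}^m\sum _{j=1}^n I\left( X_{[i]}>Y_{[j]}>t\right) $$

and

$$\hat{R}_2^*(t)=\frac{1}{mn}\sum _{i=1}^m\sum _{j=1}^n I\left( X_{[i]}>t\right) I\left( Y_{[j]}>t\right) .$$
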
When \(t=-\infty \), the above estimator reduces to the one proposed by Sengupta and Mukhuti (2008). In the next section, we study the properties of \(\hat{R}^*(t)\). Note that \(\hat{R}(t)\) and \(\hat{R}^*(t)\) are defined for \(t \in (-\infty ,t^*)\), where \(t^*=\min \{t_X,t_Y\}\), with \(t_X\) and \(t_Y\) denoting the suprema of the supports of X and Y, respectively. In practice, however, it may happen that no sample unit of X and/or Y exceeds t. In such cases, both the numerator and the denominator of the corresponding estimator are zero, and we define the estimator to be zero at t.
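A minimal Python sketch of the ratio estimator, assuming the double-sum forms \(\hat{R}_1(t)=\frac{1}{mn}\sum_i\sum_j I(X_i>Y_j>t)\) and \(\hat{R}_2(t)=\frac{1}{mn}\sum_i\sum_j I(X_i>t)I(Y_j>t)\) (and their RSS analogues with \(X_{[i]}\) and \(Y_{[j]}\)), together with the zero convention stated above. The function name `r_hat` is ours. The same function serves both designs; only the way the two samples are drawn differs.

```python
def r_hat(xs, ys, t):
    """Estimate R(t) = P(X_t > Y_t) from samples xs (of X) and ys (of Y)."""
    m, n = len(xs), len(ys)
    # R1 estimate: proportion of the m*n pairs with x > y > t
    r1 = sum(1 for x in xs for y in ys if x > y > t) / (m * n)
    # R2 estimate: product of the proportions of x > t and of y > t
    r2 = sum(x > t for x in xs) * sum(y > t for y in ys) / (m * n)
    # Convention from the text: if no X or no Y unit exceeds t, the estimate is 0
    return r1 / r2 if r2 > 0 else 0.0

xs, ys = [2.0, 3.0], [1.0, 2.5]
print(r_hat(xs, ys, float("-inf")))  # 0.75 (t = -inf: estimate of P(X > Y))
print(r_hat(xs, ys, 1.5))            # 0.5
print(r_hat(xs, ys, 5.0))            # 0.0 (zero convention)
```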

As the ranking process in RSS is carried out either by expert judgment or by using an easily available covariate, it need not match the true ranking according to the variable of interest. This situation, called imperfect ranking, is unavoidable in practice because the true order is unknown unless all units are actually measured. Several models have been proposed to account for ranking errors. We build on the imperfect ranking model introduced by Bohn and Wolfe (1994), which is briefly described below for the two-sample problem mentioned above.

The density, distribution and survival function of the ith true (judgment) order statistic of a random sample of size m from F are denoted by \(f_{(i)} (f_{[i]})\), \(F_{(i)} (F_{[i]})\), and \(\bar{F}_{(i)} (\bar{F}_{[i]})\), respectively. Similar notation is used for a random sample of size n from G. We postulate an imperfect ranking model \(M_X\) under which the \(X_{[i]}\)’s are assumed to be independently distributed as

$$F_{[i]}(x)=\sum _{r=1}^m p_{\,ir}\,F_{(r)}(x),\quad i=1,\ldots ,m,$$

where \(p_{\,ir}\) is the probability that the rth order statistic is judged to have rank i, and thus \(\sum _{r=1}^m p_{\,ir}=1\). It is further assumed that \(\sum _{i=1}^m p_{\,ir}=1\). This clearly holds in the perfect ranking scenario, i.e. when \(p_{\,ii}=1\) and \(p_{\,ir}=0 \,(r \ne i)\). Similarly, we postulate an imperfect ranking model \(M_Y\) under which the \(Y_{[j]}\)’s are assumed to be independently distributed as

$$G_{[j]}(y)=\sum _{s=1}^n q_{\,js}\,G_{(s)}(y),\quad j=1,\ldots ,n,$$

where \(q_{\,js}\) is the probability that the sth order statistic is judged to have rank j, and therefore \(\sum _{s=1}^n q_{\,js}=1\). Moreover, it is assumed that \(\sum _{j=1}^n q_{\,js}=1\). The model combining \(M_X\) and \(M_Y\) is referred to as M.

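We close this section by recalling some results that are used repeatedly in this work. According to a basic identity in RSS,

$$\frac{1}{m}\sum _{i=1}^m F_{(i)}(x)=F(x)\quad \text {and}\quad \frac{1}{n}\sum _{j=1}^n G_{(j)}(y)=G(y). \tag{1}$$

It is easy to verify that these equations also hold under model M, since \(\sum _{i=1}^m F_{[i]}=\sum _{r=1}^m F_{(r)}\sum _{i=1}^m p_{\,ir}=\sum _{r=1}^m F_{(r)}\) (and similarly for G), i.e.

$$\frac{1}{m}\sum _{i=1}^m F_{[i]}(x)=F(x)\quad \text {and}\quad \frac{1}{n}\sum _{j=1}^n G_{[j]}(y)=G(y). \tag{2}$$
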
All identities in (1) and (2) can be expressed in terms of density functions.
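The Bohn and Wolfe model and the averaging identities can be illustrated numerically. The sketch below, with a hypothetical doubly stochastic ranking-error matrix `P` for set size 3, builds \(F_{[i]}=\sum_r p_{\,ir}F_{(r)}\) from the binomial form of the order-statistic distribution functions, \(F_{(r)}(x)=\sum_{k=r}^m \binom{m}{k}F^k(x)\{1-F(x)\}^{m-k}\), and checks that the judged distribution functions average back to F.

```python
from math import comb

def order_stat_cdf(F, r, m):
    """CDF of the r-th order statistic of m iid units, where the parent CDF equals F."""
    return sum(comb(m, k) * F**k * (1 - F) ** (m - k) for k in range(r, m + 1))

def judged_cdfs(F, P):
    """Bohn-Wolfe model: F_[i] = sum_r p_ir F_(r), for a ranking-error matrix P."""
    m = len(P)
    true = [order_stat_cdf(F, r, m) for r in range(1, m + 1)]
    return [sum(P[i][r] * true[r] for r in range(m)) for i in range(m)]

# Hypothetical doubly stochastic ranking-error matrix for set size m = 3
P = [[0.8, 0.2, 0.0],
     [0.2, 0.6, 0.2],
     [0.0, 0.2, 0.8]]
for F in (0.1, 0.3, 0.7):
    cdfs = judged_cdfs(F, P)
    print(F, sum(cdfs) / len(cdfs))  # the average of the F_[i] reproduces F, up to rounding
```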

3 Main results

First we show that \(\hat{R}_1^*(t)\) and \(\hat{R}_2^*(t)\) are unbiased estimators. Next, it is proved that they are more precise than their SRS analogs.

Proposition 1

The estimators \(\hat{R}_1^*(t)\) and \(\hat{R}_2^*(t)\) are unbiased.

Proof

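Using the equations in (2), we have

$$E\left( \hat{R}_1^*(t)\right) =\frac{1}{mn}\sum _{i=1}^m\sum _{j=1}^n \int _t^{\infty } \bar{F}_{[i]}(y)\,dG_{[j]}(y)=\int _t^{\infty }\bar{F}(y)\,dG(y)=R_1(t).$$

Similarly,

$$E\left( \hat{R}_2^*(t)\right) =\left( \frac{1}{m}\sum _{i=1}^m \bar{F}_{[i]}(t)\right) \left( \frac{1}{n}\sum _{j=1}^n \bar{G}_{[j]}(t)\right) =\bar{F}(t)\,\bar{G}(t)=R_2(t).$$
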
\(\square \)
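Proposition 1 can be sanity-checked by simulation; this is an illustration, not a proof. The sketch assumes \(X\sim U(0,a)\) and \(Y\sim U(0,b)\) with \(a<b\), for which elementary integration (our own derivation) gives \(R_1(t)=(a-t)^2/(2ab)\) and \(R_2(t)=(a-t)(b-t)/(ab)\) for \(0\le t<a\). Judgment errors are mimicked, as our assumption, by ranking each set on the value plus Gaussian noise; because the same rule is applied to every set, the judged distribution functions still average to the parent distribution function, which is what the unbiasedness argument relies on. The estimator components are taken in the double-sum forms, and all function names are hypothetical.

```python
import random

def rss(draw, n, noise):
    """One ranked set sample; ranking by value + N(0, noise^2) judgment error."""
    out = []
    for i in range(n):
        units = [draw() for _ in range(n)]
        out.append(sorted(units, key=lambda u: u + random.gauss(0.0, noise))[i])
    return out

def r1_r2(xs, ys, t):
    """Double-sum estimates of R1(t) = P(X > Y > t) and R2(t) = P(X > t, Y > t)."""
    m, n = len(xs), len(ys)
    r1 = sum(1 for x in xs for y in ys if x > y > t) / (m * n)
    r2 = sum(x > t for x in xs) * sum(y > t for y in ys) / (m * n)
    return r1, r2

def mc_means(a=1.5, b=2.0, t=0.5, m=3, n=3, noise=0.3, reps=20000, seed=1):
    """Monte Carlo means of the two RSS components under imperfect ranking."""
    random.seed(seed)
    s1 = s2 = 0.0
    for _ in range(reps):
        xs = rss(lambda: random.uniform(0, a), m, noise)
        ys = rss(lambda: random.uniform(0, b), n, noise)
        r1, r2 = r1_r2(xs, ys, t)
        s1 += r1
        s2 += r2
    return s1 / reps, s2 / reps

a, b, t = 1.5, 2.0, 0.5
print(mc_means())                    # simulated means of the two components
print((a - t) ** 2 / (2 * a * b),    # R1(t) = 1/6 for these uniforms
      (a - t) * (b - t) / (a * b))   # R2(t) = 1/2
```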

Proposition 2

The variances of \(\hat{R}_1 (t)\) and \(\hat{R}_1^*(t)\) (under model M) are given by

and

Proof

It is easy to show that

where

and

From (5)–(9) and unbiasedness of \(\hat{R}_1 (t)\), the proof of the first part is complete. Similarly,

where

and

Now the second part follows from (10)–(13) and unbiasedness of \(\hat{R}_1^*(t)\). \(\square \)

The variances of \(\hat{R}_1 (t)\) and \(\hat{R}_1^*(t)\) are compared in the next proposition.

Proposition 3

Under model M, \(Var\left( \hat{R}_1^*(t)\right) \le Var\left( \hat{R}_1 (t)\right) \), and the equality holds if and only if \(F_{[i]}=F\, (i=1,\ldots ,m)\) and \(G_{[j]}=G\, (j=1,\ldots ,n)\). The latter happens when either \(m=n=1\), or \(p_{ir}=1/m\, (i,r=1,\ldots ,m)\) and \(q_{js}=1/n\, (j,s=1,\ldots ,n)\).

Proof

Using equations (3) and (4), it can be shown that

where

and

Clearly, \(\Gamma _i \ge 0\, (i=1,2,3)\), and hence \(Var\left( \hat{R}_1^*(t)\right) \le Var\left( \hat{R}_1 (t)\right) \).

If \(F_{[i]}=F\, (i=1,\ldots ,m)\) and \(G_{[j]}=G\, (j=1,\ldots ,n)\), then \(\Gamma _1=\Gamma _2=\Gamma _3=0\). Conversely, if \(\Gamma _1=\Gamma _2=\Gamma _3=0\), we have the following conclusions. Having \(\Gamma _3=0\) implies that for \(j=1,\dots ,n\),

and thus

By continuity of F and G, the above result is equivalent to

that is, \(G_{[j]}=G\). Combining this with \(\Gamma _1=0\), it follows that for \(i=1,\dots ,m\),

that is, \(F_{[i]}=F\). \(\square \)

We now present counterparts of propositions 2 and 3 in estimating \(R_2(t)\).

Proposition 4

The variances of \(\hat{R}_2 (t)\) and \(\hat{R}_2^*(t)\) (under model M) are given by

and

Proof

It is readily seen that

where

and

The first part follows from (20)–(24) and unbiasedness of \(\hat{R}_2(t)\). By a similar argument,

where

and

From (25)–(29) and unbiasedness of \(\hat{R}_2^*(t)\), the second part is concluded. \(\square \)

The variances of \(\hat{R}_2 (t)\) and \(\hat{R}_2^*(t)\) are compared in the next proposition.

Proposition 5

Under model M, \(Var\left( \hat{R}_2^*(t)\right) \le Var\left( \hat{R}_2 (t)\right) \), and the equality holds if and only if \(F_{[i]}=F\, (i=1,\ldots ,m)\) and \(G_{[j]}=G\, (j=1,\ldots ,n)\). The latter happens when either \(m=n=1\), or \(p_{ir}=1/m\, (i,r=1,\ldots ,m)\) and \(q_{js}=1/n\, (j,s=1,\ldots ,n)\).

Proof

Using equations (18) and (19), it is straightforward to see that

where

and

Let \(u_i=\bar{F}_{[i]}\,(i=1,\ldots ,m)\) and \(v_j=\bar{G}_{[j]}\,(j=1,\ldots ,n)\) with the corresponding means \(\bar{u}=\sum _{i=1}^m u_i /m\) and \(\bar{v}=\sum _{j=1}^n v_j/n\), and variances \(S^2_u=\sum _{i=1}^m (u_i-\bar{u})^2/m\) and \(S^2_v=\sum _{j=1}^n (v_j-\bar{v})^2/n\). Also, let \(\overline{u^2}=\sum _{i=1}^m u_i^2 /m\) and \(\overline{v^2}=\sum _{j=1}^n v_j^2/n\). Then we have

and

The first part of the proposition holds owing to the following inequality

where the first inequality follows from the fact that \(\bar{u}\ge \overline{u^2}\) and \(\bar{v}\ge \overline{v^2}\), which holds because \(0\le u_i\le 1\) and \(0\le v_j\le 1\).

If \(F_{[i]}=F\, (i=1,\ldots ,m)\) and \(G_{[j]}=G\, (j=1,\ldots ,n)\), then \(\Delta _1=\Delta _2=\Delta _3=0\). Conversely, if \(\Delta _1=\Delta _2=\Delta _3=0\), then from (30) we get \(S^2_u=S^2_v=0\). That is to say \(F_{[i]}=F\, (i=1,\ldots ,m)\) and \(G_{[j]}=G\, (j=1,\ldots ,n)\). \(\square \)

The following proposition provides components needed to approximate \(Var(\hat{R}(t))\) and \(Var(\hat{R}^*(t))\).

Proposition 6

The covariances between \(\hat{R}_1(t)\) and \(\hat{R}_2(t)\), and \(\hat{R}_1^*(t)\) and \(\hat{R}_2^*(t)\) (under model M) are given by

and

Proof

It can be shown that

where

and

From (33)–(37) and unbiasedness of \(\hat{R}_1(t)\) and \(\hat{R}_2(t)\), we get (31). Similarly,

where

and

Now, (32) is immediate from (38)–(42) and unbiasedness of \(\hat{R}_1^*(t)\) and \(\hat{R}_2^*(t)\). \(\square \)

The next result shows asymptotic unbiasedness of \(\hat{R}(t)\) and \(\hat{R}^*(t)\), and provides approximations of their variances.

Proposition 7

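The estimators \(\hat{R}(t)\) and \(\hat{R}^*(t)\) are asymptotically unbiased with approximate variances

$$Var\left( \hat{R}(t)\right) \approx \frac{Var\left( \hat{R}_1(t)\right) }{R_2^2(t)}+\frac{R_1^2(t)\,Var\left( \hat{R}_2(t)\right) }{R_2^4(t)}-\frac{2R_1(t)\,Cov\left( \hat{R}_1(t),\hat{R}_2(t)\right) }{R_2^3(t)} \tag{43}$$

and

$$Var\left( \hat{R}^*(t)\right) \approx \frac{Var\left( \hat{R}_1^*(t)\right) }{R_2^2(t)}+\frac{R_1^2(t)\,Var\left( \hat{R}_2^*(t)\right) }{R_2^4(t)}-\frac{2R_1(t)\,Cov\left( \hat{R}_1^*(t),\hat{R}_2^*(t)\right) }{R_2^3(t)}. \tag{44}$$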

Proof

Using the continuous mapping theorem (Shao 2003, p. 59, Theorem 1.10(i)), it can be concluded that \(\hat{R}(t)\) and \(\hat{R}^*(t)\) are strongly consistent. Since both estimators take values in [0, 1], this and the dominated convergence theorem imply the asymptotic unbiasedness of the two estimators.

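Let \(T_1,\ldots ,T_k\) be random variables with means \(\theta _1,\ldots ,\theta _k\), and define \(\mathbf {T}=(T_1,\ldots ,T_k)\) and \(\varvec{\theta }=(\theta _1,\ldots ,\theta _k)\). Suppose \(g(\mathbf {T})\) is a differentiable function (an estimator of some parameter) whose variance is to be approximated. Using the first-order Taylor series expansion of g about \(\varvec{\theta }\), we get

$$g(\mathbf {T})\approx g(\varvec{\theta })+\sum _{i=1}^k g_i'(\varvec{\theta })\,(T_i-\theta _i),\quad \text {so that}\quad Var\left( g(\mathbf {T})\right) \approx \sum _{i=1}^k\sum _{j=1}^k g_i'(\varvec{\theta })\,g_j'(\varvec{\theta })\,Cov(T_i,T_j),$$

where

$$g_i'(\varvec{\theta })=\left. \frac{\partial g(\mathbf {t})}{\partial t_i}\right| _{\mathbf {t}=\varvec{\theta }}.$$
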
Now, (43) and (44) are obtained by taking \(g(\mathbf {T})=T_1/T_2\), where \(T_1\) and \(T_2\) are the numerator and the denominator of each estimator, respectively. The components needed for the two approximations are given in propositions 2, 4 and 6. \(\square \)
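The delta-method step can be illustrated in Python. As an assumption of this sketch, the means, variances and covariance of \(T_1=\hat{R}_1(t)\) and \(T_2=\hat{R}_2(t)\) are estimated by Monte Carlo under SRS with \(X\sim U(0,1.5)\) and \(Y\sim U(0,2)\), instead of using the exact expressions of propositions 2, 4 and 6 (not reproduced here); the first-order approximation of \(Var(T_1/T_2)\) is then compared with the simulated variance of the ratio. All function names are ours.

```python
import random
from bisect import bisect_left, bisect_right
from statistics import mean, variance

def delta_ratio_var(mu1, mu2, var1, var2, cov12):
    """First-order (delta-method) approximation of Var(T1 / T2)."""
    g1, g2 = 1.0 / mu2, -mu1 / mu2 ** 2  # partial derivatives of t1 / t2 at the means
    return g1 * g1 * var1 + g2 * g2 * var2 + 2 * g1 * g2 * cov12

def components(xs, ys, t):
    """Double-sum numerator and denominator of R-hat(t), computed via sorting."""
    ys_sorted = sorted(ys)
    lo = bisect_right(ys_sorted, t)  # number of y <= t
    # For each x > t, bisect_left(...) - lo counts the y with t < y < x
    c1 = sum(bisect_left(ys_sorted, x) - lo for x in xs if x > t)
    c2 = sum(x > t for x in xs) * (len(ys) - lo)
    return c1 / (len(xs) * len(ys)), c2 / (len(xs) * len(ys))

def check(a=1.5, b=2.0, t=0.5, m=50, n=50, reps=4000, seed=7):
    """Return (delta-method variance, Monte Carlo variance) of T1 / T2 under SRS."""
    random.seed(seed)
    t1s, t2s, ratios = [], [], []
    for _ in range(reps):
        xs = [random.uniform(0, a) for _ in range(m)]
        ys = [random.uniform(0, b) for _ in range(n)]
        r1, r2 = components(xs, ys, t)
        t1s.append(r1)
        t2s.append(r2)
        ratios.append(r1 / r2)  # r2 > 0 with overwhelming probability at these sizes
    mu1, mu2 = mean(t1s), mean(t2s)
    cov12 = sum((u - mu1) * (v - mu2) for u, v in zip(t1s, t2s)) / (reps - 1)
    approx = delta_ratio_var(mu1, mu2, variance(t1s), variance(t2s), cov12)
    return approx, variance(ratios)

print(check())  # the two variances should be close for moderate m, n
```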

In view of propositions 1, 3 and 5, \(\hat{R}^*(t)\) is expected to be more efficient than \(\hat{R}(t)\). This may be difficult to prove using the above proposition, since only approximations of \(Var\left( \hat{R}(t)\right) \) and \(Var\left( \hat{R}^*(t)\right) \) are available.

4 Perfect ranking setup

The judgment ranking process is of great importance in drawing a representative sample by RSS. In extreme cases, ranking may be so poor as to yield a simple random sample. Hence, intuitively, RSS is at least as efficient as SRS. For the estimation of \(R_1(t)\) and \(R_2(t)\), this was formally shown in propositions 3 and 5. The maximum efficiency is expected in the absence of ranking errors, i.e. in the perfect ranking scenario. This section aims to establish some properties in this respect. To this end, we need a few notions and results from matrix algebra, as well as a lemma, which are set out below.

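The \(L_1\), \(L_\infty \) and \(L_2\) norms for an \(r \times c\) matrix \(\mathbf {A}=[a_{\,i j}]\) are defined as

$$\Vert \mathbf {A}\Vert _1=\max _{1\le j\le c}\sum _{i=1}^r |a_{\,ij}|,\qquad \Vert \mathbf {A}\Vert _\infty =\max _{1\le i\le r}\sum _{j=1}^c |a_{\,ij}|$$

and

$$\Vert \mathbf {A}\Vert _2=\sqrt{\lambda _{\max }(\mathbf {A' A})},$$
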
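where \(\lambda _{\max }(\mathbf {A' A})\) is the largest eigenvalue of the matrix \(\mathbf {A' A}\). If the product of matrices \(\mathbf {A}\) and \(\mathbf {B}\) is defined, then

$$\Vert \mathbf {AB}\Vert _2\le \Vert \mathbf {A}\Vert _2\,\Vert \mathbf {B}\Vert _2 \tag{45}$$

and

$$\Vert \mathbf {A}\Vert _2\le \sqrt{\Vert \mathbf {A}\Vert _1\,\Vert \mathbf {A}\Vert _\infty }. \tag{46}$$
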
See Datta (2010) for more details.
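These matrix-norm facts are what make a doubly stochastic ranking-error matrix act as a contraction: its \(L_1\) and \(L_\infty \) norms both equal one, so by the standard bound \(\Vert \mathbf {A}\Vert _2\le \sqrt{\Vert \mathbf {A}\Vert _1\Vert \mathbf {A}\Vert _\infty }\) its \(L_2\) norm is at most one, and \(\Vert \mathbf {P}\mathbf {z}\Vert _2\le \Vert \mathbf {z}\Vert _2\) for every vector \(\mathbf {z}\). A small pure-Python check follows, with a hypothetical matrix `P` and helper names of our own.

```python
import math
import random

def norm_1(A):
    """Maximum absolute column sum."""
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))

def norm_inf(A):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in A)

def matvec(A, z):
    return [sum(a * b for a, b in zip(row, z)) for row in A]

def euclid(z):
    return math.sqrt(sum(v * v for v in z))

# Hypothetical doubly stochastic matrix: every row and column sums to one.
P = [[0.7, 0.2, 0.1],
     [0.2, 0.5, 0.3],
     [0.1, 0.3, 0.6]]
bound = math.sqrt(norm_1(P) * norm_inf(P))  # upper bound on ||P||_2 (equals 1 here)

random.seed(0)
worst = 0.0
for _ in range(1000):
    z = [random.gauss(0.0, 1.0) for _ in range(3)]
    worst = max(worst, euclid(matvec(P, z)) / euclid(z))
print(bound, worst)  # the largest observed ||Pz|| / ||z|| stays below the bound
```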

Lemma 1

If \(h=\sum _{j=1}^n \bar{F}\left( Y_{(j)}\right) I\left( Y_{(j)}>t\right) \) and \(H=\sum _{j=1}^n \bar{F}\left( Y_{[j]}\right) I\left( Y_{[j]}>t\right) \), then \(Var(h) \le Var(H)\).

Proof

Using the conditional variance formula, we have

as was asserted. \(\square \)

Let \(\tilde{R}_1^*(t)\) and \(\tilde{R}_2^*(t)\) denote the estimators \(\hat{R}_1^*(t)\) and \(\hat{R}_2^*(t)\) under the perfect ranking assumption, respectively. Then we have the following two propositions. It should be mentioned that the approach adopted in the proofs differs distinctly from that used for a similar result in Sengupta and Mukhuti (2008).

Proposition 8

Under model M, \(Var\left( \tilde{R}_1^*(t)\right) \le Var\left( \hat{R}_1^*(t)\right) \).

Proof

In view of (4), it is sufficient to show that

and

We begin by proving the first inequality. Let \(Z_{(i)}=\sum _{j=1}^n \bar{F}_{(i)}\left( Y_{[j]}\right) I\left( Y_{[j]}>t\right) \) and \(Z_{[i]}=\sum _{j=1}^n \bar{F}_{[i]}\left( Y_{[j]}\right) I\left( Y_{[j]}>t\right) \). Then

Let \(\Omega _Y\) be the sample space on which Y is defined. If \(\mathbf {P}=[p_{\,i r}]\) and \(\mathbf {Z}'=(Z_{(1)}(\vartheta ),\ldots ,Z_{(m)}(\vartheta ))\) given a fixed \(\vartheta \in \Omega _Y\), then using (45), (46) and (49) it follows that

The last equality holds because \(\sum _{i=1}^m p_{\,i k}=\sum _{k=1}^m p_{\,i k}=1\). Accordingly,

Now, (47) is concluded if

For \(i=1,\ldots ,m\), suppose \(h_{(i)}=\sum _{j=1}^n \bar{F}_{(i)}\left( Y_{(j)}\right) I\left( Y_{(j)}>t\right) \) and h be as in Lemma 1. We note that

Hence, \(\bar{F}_{(i)}(t)\) is an increasing function of i, and thus \(h_{(1)}<\cdots < h_{(m)}\) are order statistics. Therefore,

where \(f_{h_{(i)}}\) and \(f_h\) denote the density function of \(h_{(i)}\) and h, respectively. Similarly, one can define \(H_{(i)}=\sum _{j=1}^n \bar{F}_{(i)}(Y_{[j]}) I(Y_{[j]}>t)\) and H as in Lemma 1, and conclude that

From (51) and (52), (50) reduces to \(E(h^2) \le E(H^2)\). It is equivalent to \(Var(h) \le Var(H)\) as \(E(h)=E(H)\). This holds by virtue of Lemma 1.

Let \(\Omega _X\) be the sample space on which X is defined. If \(\mathbf {Q}=[q_{\,j s}]\) and \(\mathbf {G}'=( G_{(1)}(X(\eta ))-G_{(1)}(t) ,\ldots , G_{(n)}(X(\eta ))-G_{(n)}(t) )\) for each fixed \(\eta \in \Omega _X\), then (48) follows by applying (45) and (46) using \(\mathbf {Q}\) and \(\mathbf {G}\). \(\square \)

Proposition 9

Under model M, \(Var( \tilde{R}_2^*(t) ) \le Var( \hat{R}_2^*(t) )\).

Proof

According to (19) it is sufficient to show that

and

Let \(\mathbf {P}\) and \(\mathbf {Q}\) be as in the previous proposition, \(\bar{\mathbf {F}}'=\left( \bar{F}_{(1)} (t),\ldots ,\bar{F}_{(m)} (t)\right) \) and \(\bar{\mathbf {G}}'=\left( \bar{G}_{(1)} (t),\ldots ,\bar{G}_{(n)} (t)\right) \). Then, using (45) and (46),

Proceeding as above with \(\mathbf {Q}\) and \({\bar{\mathbf {G}}}\), it is proved that \(\sum _{j=1}^n \bar{G}_{[j]}^2 (t) \le \sum _{j=1}^n \bar{G}_{(j)}^2 (t)\). \(\square \)
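The inequality at the heart of Proposition 9, \(\sum _i \bar{F}_{[i]}^2(t)\le \sum _i \bar{F}_{(i)}^2(t)\), can be checked numerically. The sketch assumes a hypothetical doubly stochastic matrix `P` with set size 4 and uses \(\bar{F}_{(r)}(t)=\sum _{k=0}^{r-1}\binom{m}{k}F^k(t)\bar{F}^{\,m-k}(t)\); it also confirms that \(\bar{F}_{(r)}(t)\) increases in r. Helper names are ours.

```python
from math import comb

def order_stat_sf(Fbar, r, m):
    """P(X_(r) > t) for the r-th order statistic of m iid units, with Fbar = P(X > t)."""
    F = 1.0 - Fbar
    # X_(r) > t  <=>  fewer than r of the m units fall at or below t
    return sum(comb(m, k) * F**k * Fbar ** (m - k) for k in range(r))

def sums_of_squares(Fbar, P):
    """Return (sum of judged SF^2, sum of perfect SF^2, list of perfect SFs)."""
    m = len(P)
    perfect = [order_stat_sf(Fbar, r, m) for r in range(1, m + 1)]
    judged = [sum(P[i][r] * perfect[r] for r in range(m)) for i in range(m)]
    return sum(v * v for v in judged), sum(v * v for v in perfect), perfect

# Hypothetical doubly stochastic ranking-error matrix (m = 4)
P = [[0.70, 0.20, 0.10, 0.00],
     [0.20, 0.50, 0.20, 0.10],
     [0.10, 0.20, 0.50, 0.20],
     [0.00, 0.10, 0.20, 0.70]]
for Fbar in (0.2, 0.5, 0.9):
    judged_ss, perfect_ss, perfect = sums_of_squares(Fbar, P)
    print(Fbar, round(judged_ss, 4), round(perfect_ss, 4))  # judged <= perfect
```

With \(p_{\,ir}=1/m\) every judged survival function collapses to \(\bar{F}(t)\), which is the equality case of Proposition 5.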

5 Simulation study

We used Monte Carlo simulation to assess the performance of the proposed estimator. To this end, both populations were assumed to follow Weibull, normal or uniform distributions. Let X be distributed as Weibull with distribution function

which is denoted by \(X \sim W(\alpha ,\beta )\). If \(X \sim W(\alpha _1,\beta _1)\) and \(Y \sim W(\alpha _2,\beta _2)\) are independent random variables, then it can be shown that

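Similarly, if X has a normal distribution with mean zero and variance \(\sigma ^2\), \(X \sim N(0,\sigma ^2)\), and Y is a standard normal random variable, \(Y \sim N(0,1)\), then

$$R(t)=\frac{\int _t^{\infty }\left[ 1-\Phi (y/\sigma )\right] d\Phi (y)}{\left[ 1-\Phi (t/\sigma )\right] \left[ 1-\Phi (t)\right] },$$

where \(\Phi (.)\) is the distribution function of Y. Finally, suppose X and Y are uniformly distributed on the intervals (0, a) and (0, b), respectively, where \(a<b\). Then, for \(0\le t<a\),

$$R(t)=\frac{a-t}{2(b-t)}.$$
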
We considered the following pairs of distributions:

- \(X\sim W(2,1)\) versus \(Y\sim W(2,2),\)
- \(X\sim W(2,1)\) versus \(Y\sim W(1,1),\)
- \(X\sim W(1,1)\) versus \(Y\sim W(0.5,3),\)
- \(X\sim N(0,(1.25)^2)\) versus \(Y\sim N(0,1),\)
- \(X\sim U(0,1.5)\) versus \(Y\sim U(0,2).\)

The two groups of sample sizes considered are \(m,n=2,5,10\) and \(m,n=20,50,100\).
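For the normal and uniform pairs, R(t) can be evaluated directly from \(R(t)=\int _t^\infty \bar{F}(y)\,dG(y)\big /\{\bar{F}(t)\bar{G}(t)\}\). The sketch below (our own, with hypothetical helper names) uses composite Simpson integration for the normal case and the elementary closed form \(R(t)=(a-t)/\{2(b-t)\}\), \(0\le t<a\), for the uniform case. As a check, when \(X\sim N(0,\sigma ^2)\) and \(Y\sim N(0,1)\), a polar-coordinate argument gives \(R(0)=2\arctan (\sigma )/\pi \). The Weibull case is omitted here since it depends on the parameterization of \(W(\alpha ,\beta )\).

```python
import math

def simpson(f, lo, hi, n=2000):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    h = (hi - lo) / n
    s = f(lo) + f(hi) + sum((4 if k % 2 else 2) * f(lo + k * h) for k in range(1, n))
    return s * h / 3

def r_of_t(sf_x, pdf_y, sf_y, t, upper):
    """R(t) = P(X > Y > t) / (P(X > t) P(Y > t)) for independent X and Y.

    upper: point beyond which the integrand sf_x(y) * pdf_y(y) is negligible or zero.
    """
    return simpson(lambda y: sf_x(y) * pdf_y(y), t, upper) / (sf_x(t) * sf_y(t))

def r_normal(t, sigma=1.25):
    """X ~ N(0, sigma^2) versus Y ~ N(0, 1), by numerical integration."""
    sf_x = lambda y: 0.5 * math.erfc(y / (sigma * math.sqrt(2)))
    sf_y = lambda y: 0.5 * math.erfc(y / math.sqrt(2))
    pdf_y = lambda y: math.exp(-y * y / 2) / math.sqrt(2 * math.pi)
    return r_of_t(sf_x, pdf_y, sf_y, t, t + 12 * sigma)

def r_uniform(t, a=1.5, b=2.0):
    """X ~ U(0, a) versus Y ~ U(0, b), a < b; closed form valid for 0 <= t < a."""
    return (a - t) / (2 * (b - t))

print(r_normal(0.0), 2 * math.atan(1.25) / math.pi)  # two nearly equal numbers
print(r_uniform(0.5))                                 # one third
```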

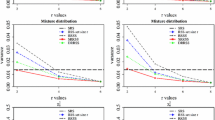

Given a fixed t, the efficiency of \(\tilde{R}^*(t)=\tilde{R}_1^*(t)/\tilde{R}_2^*(t)\) relative to \(\hat{R}(t)\) is estimated as follows. For each combination of distributions and sample sizes, 10,000 pairs of samples were generated under the SRS and (perfect ranking) RSS schemes. The two estimators were computed from each pair of samples in the corresponding designs, and their mean squared errors (MSEs) were estimated. The relative efficiency (RE) is defined as the ratio of \(\widehat{MSE}(\hat{R}(t))\) to \(\widehat{MSE}(\tilde{R}^*(t))\); RE values larger than one indicate that \(\tilde{R}^*(t)\) is more efficient than \(\hat{R}(t)\). Figures 1, 2, 3, 4, 5, 6, 7, 8, 9 and 10 in the appendix display the results.
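The relative-efficiency study can be sketched for one configuration. This is an illustration under our own assumptions, not the authors' code: only the uniform pair \(X\sim U(0,1.5)\), \(Y\sim U(0,2)\) with \(m=n=5\) and \(t=0.5\) is considered, ranking is perfect, the true value comes from the closed form \((a-t)/\{2(b-t)\}\), the estimator components are taken in the double-sum forms, and 4,000 replications are used rather than 10,000 to keep the run short.

```python
import random
from functools import partial

def r_hat(xs, ys, t):
    """Ratio estimator of R(t); the estimate is set to 0 when no X or no Y exceeds t."""
    m, n = len(xs), len(ys)
    r1 = sum(1 for x in xs for y in ys if x > y > t) / (m * n)
    r2 = sum(x > t for x in xs) * sum(y > t for y in ys) / (m * n)
    return r1 / r2 if r2 > 0 else 0.0

def perfect_rss(draw, n):
    """Ranked set sample under perfect ranking: the i-th unit of the i-th sorted set."""
    return [sorted(draw() for _ in range(n))[i] for i in range(n)]

def relative_efficiency(a=1.5, b=2.0, t=0.5, m=5, n=5, reps=4000, seed=3):
    """Monte Carlo RE = MSE(SRS estimator) / MSE(perfect-ranking RSS estimator)."""
    random.seed(seed)
    true_r = (a - t) / (2 * (b - t))  # R(t) for U(0, a) vs U(0, b), valid for 0 <= t < a
    draw_x = partial(random.uniform, 0, a)
    draw_y = partial(random.uniform, 0, b)
    sse_srs = sse_rss = 0.0
    for _ in range(reps):
        srs_x = [draw_x() for _ in range(m)]
        srs_y = [draw_y() for _ in range(n)]
        sse_srs += (r_hat(srs_x, srs_y, t) - true_r) ** 2
        sse_rss += (r_hat(perfect_rss(draw_x, m), perfect_rss(draw_y, n), t) - true_r) ** 2
    return sse_srs / sse_rss  # values above 1 favour the RSS estimator

print(relative_efficiency())
```

Varying `t`, `m` and `n` in `relative_efficiency` gives a rough analogue of one curve in the appendix figures.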

It is observed that the RSS-based estimator is more efficient than its SRS competitor, in line with the theoretical results for its components. Also, for each pair of distributions and fixed m, RE generally increases with n. This trend is sometimes violated, e.g. in Fig. 4 when \(m=50\) and t is small. It is interesting to note how RE is affected by t. Although RE (as a function of t) may fluctuate for small t, it becomes monotonically decreasing as t increases. The point at which RE is maximized depends on the choice of parent distributions. For example, when \(X\sim W(2,1)\) and \(Y \sim W(1,1)\), RE attains its maximum near \(t=0\), while this is not the case with \(X\sim W(2,1)\) and \(Y \sim W(2,2)\).

To better understand the estimators’ behavior, we provide graphs of their MSEs as functions of t. To save space, only three of the above pairs of distributions were considered, and the sample sizes were limited to \((m,n)=(5,5),(10,10),(20,20)\). The results are given in Figs. 11 and 12. Under both the SRS and RSS designs, the MSEs decrease as the sample sizes increase, for fixed t. Finally, Table 1 contains estimated biases of the estimators at selected values of t, where the entries in parentheses are based on RSS. Analogues of Figs. 11 and 12 for bias (not provided here) suggest that the biases under both schemes are bounded above as functions of t.

6 Application

Although the use of stress-strength models was originally motivated by problems in physics and engineering, it is not limited to these contexts. It is worth mentioning that R provides a general measure of the difference between two populations, and has found applications in different fields such as economics, quality control, psychology, medicine and clinical trials. For instance, if Y is the response of a control group, and X is that of a treatment group, then R is a measure of the treatment effect.

We now illustrate the proposed procedure using a data set collected by Murray et al. (2000). They conducted an experiment in which apple trees were sprayed with a chemical containing the fluorescent tracer Tinopal CBS-X at a 2% concentration in water. Two nine-tree plots were chosen for spraying. One plot was sprayed at high volume, using coarse nozzles on the sprayer to give a large average droplet size. The other plot was sprayed at low volume, using fine nozzles to give a small average droplet size. Fifty sets of five leaves were identified from the central five trees of each plot and used to draw 10 replicates of a ranked set sample of size five from each plot. The variable of interest is the percentage of area covered by the spray on the surface of the leaves. The formal measurement entails chemical analysis of the solution collected from the leaf surfaces, and is therefore a time-consuming and expensive process. The judgment ranking within each set is based on the visual appearance of the spray deposits on the leaf surfaces when viewed under ultraviolet light. This ranking method is cheap, and fairly accurate if implemented by an expert observer.

The data are given in Table 2, where measurements obtained from the plot sprayed at high (low) volume constitute the control (treatment) group. Suppose the interest is in whether the sprayer settings affect the percentage area coverage. Then \(\hat{R}^*(t)\) can serve as a measure of the treatment effect. Here, the threshold t may be interpreted as a lower bound on the response values, which can often be obtained from previous studies or experts’ opinions. Figure 13 shows \(\hat{R}^*(t)\) as a function of t for the apple tree data. The horizontal line indicates the estimated value of the usual stress-strength reliability, \(R=P(X>Y)\), which is equal to 0.6184. Note how information about t can update the basic estimate of the treatment effect.

7 Conclusion

The challenge we set ourselves in this work is the estimation of a time-dependent reliability measure using RSS. Under an imperfect ranking model, the components of the suggested estimator are shown to be more efficient than their SRS competitors. It is further established that their variances are minimized in the absence of ranking errors. These findings are supported by the results of a Monte Carlo experiment conducted to gain insight into the behavior of the new measure.

The proposed estimator is the ratio of two estimators based on empirical distributions. A deficiency shared by such estimators is that they fail to capture the smoothness of the quantities being estimated. Moreover, empirical estimators may exhibit large bias near the boundaries, because they are unable to estimate beyond the largest observation. It would thus be interesting to overcome these shortcomings by adopting a suitable approach, for example kernel density estimation in line with Zardasht et al. (2012). The method also merits investigation for estimating the usual stress-strength reliability based on RSS. These topics will be studied in subsequent work.

References

Bohn LL, Wolfe DA (1994) The effect of imperfect judgment rankings on properties of procedures based on the ranked-set samples analog of the Mann-Whitney-Wilcoxon statistic. J Am Stat Assoc 89:168–176

Chen Z, Bai Z, Sinha BK (2004) Ranked set sampling: theory and applications. Springer, New York

Datta BN (2010) Numerical linear algebra and applications, 2nd edn. SIAM, Philadelphia

Díaz-Francés E, Montoya JA (2013) The simplicity of likelihood based inferences for P(XY) and for the ratio of means in the exponential model. Stat Pap 54:499–522

Kotz S, Lumelskii Y, Pensky M (2003) The stress-strength model and its generalizations. Theory and applications. World Scientific, Singapore

Kvam PH, Samaniego FJ (1994) Nonparametric maximum likelihood estimation based on ranked set samples. J Am Stat Assoc 89:526–537

McIntyre GA (1952) A method of unbiased selective sampling using ranked sets. Aust J Agric Res 3:385–390

Murray RA, Ridout MS, Cross JV (2000) The use of ranked set sampling in spray deposit assessment. Asp Appl Biol 57:141–146

Sengupta S, Mukhuti S (2008) Unbiased estimation of P(XY) using ranked set sample data. Statistics 42:223–230

Shao J (2003) Mathematical statistics, 2nd edn. Springer, New York

Zardasht V, Asadi M (2010) Evaluation of \(P(X_t>Y_t)\) when both \(X_t\) and \(Y_t\) are residual lifetimes of two systems. Stat Neerl 64:460–481

Zardasht V, Zeephongsekul P, Asadi M (2012) On nonparametric estimation of a reliability function. Commun Stat 41:983–999

Acknowledgments

The authors are indebted to the reviewer and the Associate Editor for helpful comments on the paper.


Cite this article

Mahdizadeh, M., Zamanzade, E. A new reliability measure in ranked set sampling. Stat Papers 59, 861–891 (2018). https://doi.org/10.1007/s00362-016-0794-3


DOI: https://doi.org/10.1007/s00362-016-0794-3