Abstract

Lagrangian particle tracking experiments are a key tool to understanding particle transport in fluid flows. However, tracking particles over long distances is expensive and limited by both the intensity of light and number of cameras. In order to increase the length of measured particle trajectories in a large fluid volume with minimal cost, we developed a large-scale particle-shadow-tracking method. This technique is able to accurately track millimeter-scale particles and their orientations in meter-scale laboratory fluid flows. By tracking the particles’ shadows cast by a wide beam of collimated light from a high-power LED, 2D particle position and velocity can be obtained, as well as their 3D orientation. Compared with traditional volumetric particle tracking techniques, this method is able to measure particle kinematics over a larger area using much simpler imaging and tracking techniques. We demonstrate the method on sphere, disk, and rod particles in a wavy wind-driven flow, where we successfully track particles and reconstruct their orientations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Particle-laden flows in the environment are ubiquitous: examples include saltating sediments or aeolian transport, blowing snow, and falling ash. Laboratory experiments often offer the best way to study the transport of particles in these flows under controlled conditions, but tracking particles over long times in large facilities (i.e., with regions of interest (ROIs) on the order of 1 m\(^3\)) poses significant challenges, and existing methods have several limitations.

Currently, most experimental methods involve optical particle tracking with high-speed cameras, where illumination is provided by a laser, LED backlight, or ambient or volumetric lighting. A laser can be focused into either a 2D sheet for planar particle tracking velocimetry (PTV) or a 3D volume for Lagrangian particle tracking (LPT), which uses cameras viewing the ROI from multiple viewing angles. For example, 2D tracking over a relatively large ROI of 30 cm \(\times\) 30 cm was done by Petersen et al. (2019) using a laser sheet to track hollow glass microspheres in homogeneous isotropic turbulence in air. Gerashchenko et al. (2008) performed 2D tracking on particles in a turbulent boundary layer. They expanded a laser over a 3.5 cm\(^3\) volume and extended their particle tracking distance by mounting their camera and optics on a sled that moved with the freestream velocity for 50 cm. A similar technique was used by Zheng and Longmire (2014) to track tracer particles moving through an array of cylinders in a turbulent boundary layer. Large-scale 3D particle tracking using a single camera was performed by Hou et al. (2021) using a technique based on particle glare-point spacing.

Laser illumination is limited in spatial extent, and while a 2D laser sheet can illuminate a larger area than a 3D laser volume at a given intensity, particles only intersect the laser sheet for short amounts of time, resulting in short trajectories. An LED backlight solves this issue by lighting particles from behind throughout a volume of fluid. In this way, particle shadows can be measured with a camera placed opposite of the backlight. LED backlighting was used by Fong and Coletti (2022) and Fong et al. (2022), for example, to track glass particles in a riser over a length of 20 cm. It has also been used to track bubbles in water flows (Hessenkemper and Ziegenhein 2018; Bröder and Sommerfeld 2007; Tan et al. 2020). However, most LED backlights are also limited in size, and it can be difficult to diffuse the light enough to create a uniformly-lighted background. Imaging particles inside a 3D volume with ambient light is a third option. This is dependent on the particles having naturally strong contrast with the background, and like LPT requires multiple camera viewpoints unless the particles are naturally limited to a nearly 2D plane, as may occur in bed transport of sediment, for example. Large-scale 3D tracking has been carried out on tracer particles inside a room using ambient lighting (Fu et al. 2015) and over a large region of an atmospheric boundary layer (Rosi et al. 2014). In addition, pulsed and volumetric LED illumination has been used to perform large-scale measurements in air, including at volumes exceeding 10 m\(^3\) (e.g. Huhn et al. 2017; Godbersen et al. 2021; Schröder et al. 2022). Similar methods have also been used in water flows for sufficiently sized particles; Kim et al. (2022) used this technique to perform joint 3D tracking of bubbles and the fluid phase in a jet flow over a \(\sim\)(0.3 m)\(^3\) volume. Even larger-scale experiments have been performed to measure airflow around wind turbines using a spotlight and snowflakes as flow tracers (Toloui et al. 2014; Wei et al. 2021).

In the following, we present a large-scale shadow tracking (LSST) method for measuring the 2D transport and 3D orientation of particles in a flow. In this method, a light source shines through the transparent front wall of the experimental facility onto the back wall, projecting shadows of particles in the illuminated volume of fluid. The light source is collimated, so the shadow’s location exactly maps to the particle’s streamwise and vertical location (x and z), albeit with no spanwise (y) information. In other words, we can measure the 2D position and velocity components of particles in a large 3D volume. This method is less complex than other LPT methods because the particle shadows only need to be imaged by one camera. This techique works for any particles large enough to cast a shadow, and we demonstrate it here on particles as small as 2 mm in diameter. We use multiple cameras to increase the field of view and image resolution. In addition, if the particle geometry is known, then the particle’s orientation can be reconstructed from the geometry of the projected shadow.

We also demonstrate the LSST method in an experiment to simulate plastic particle transport near the free surface of the ocean by tracking spherical and nonspherical particles in turbulent wind-driven waves in a water tank. A large ROI is required because the flow facility must be large enough to create realistic waves, and particle motion must be tracked over several wave periods to get robust Lagrangian statistics. Microplastic particles are ubiquitous, but their transport and fate in the ocean is not well quantified (Geyer et al. 2017; van Sebille et al. 2015). Wind-mixing has been established as an important driver of vertical transport of these particles in the ocean and can submerge particles up to tens of meters (Kukulka et al. 2012; Thoman et al. 2021). While passive tracer species are often the focus of these studies, many particles in the ocean have variable size and shape, the effects of which have yet to be rigorously investigated in this context.

2 Shadow tracking method

2.1 Imaging

In order to track particles in both the vertical and streamwise directions, we constructed a facility to image particle shadows over a large region of interest in a wave tank. The method uses collimated light rays which shine spanwise horizontally through the water to produce shadows of the particles on the far sidewall of the tank. The shadows are imaged with an array of four cameras; see Fig. 1a and b for schematics depicting the setup.

The light source is a 4 W LED (Luxeon Star Saber Z5 20-mm quad LED, wavelength 470 nm) powered by direct current from an LED driver to eliminate flicker (ThorLabs T-Cube driver). A monochromatic LED is used to minimize chromatic aberration due to wavelength-dependent indices of refraction when the light shines through the collimating lenses. The blue-green color of light is chosen by optimizing two competing constraints: blue wavelengths transmit through water with less scattering, but the camera sensor is more sensitive to red wavelengths. The blue-green 470 nm wavelength is chosen because it is the shortest wavelength to which the camera sensor is at least 80% sensitive. The LED has a light-emitting area of 2.6 mm x 2.6 mm, so it is effectively a point source relative to the region of interest. A 1 m \(\times\) 0.7 m Fresnel lens (Knight Optical) is mounted against the glass sidewall of the tank, and the light source is placed at the lens’ focal length. The lens collimates the light rays so that they shine in parallel normal to the sidewalls of the tank. Because the light is collimated, particles that enter the illuminated area cast shadows on the far sidewall that are the same size as the particles themselves, regardless of their spanwise location. To maximize the intensity of the illumination, the light source is concentrated by reducing its spreading angle from 125\(^\circ\) to about 60\(^\circ\) with a plano-convex spherical lens of focal length 25 mm (Edmund Optics) mounted at a distance of 3 mm from the LED. To fine-tune the collimation and achieve highly parallel light rays, the position of the light source was adjusted slightly in the y direction until the width of the rectangular illuminated region on the back wall of the tank matched the width of the Fresnel lens to within 0.1%.

A 4\(\times\)1 array of monochrome machine vision cameras (Basler Boost boA4096-93cm, Sensory Labs) mounted with 35 mm lenses take composite images of the ROI with a total image size of 8192 px \(\times\) 4336 px at approximately 9 px/mm resolution. Each camera is focused on one quarter of the illuminated sidewall, and they are synchronized by a PC running StreamPix software (Sensory Labs). At the full frame rate, roughly 3 GB/s of image data are generated, necessitating the use of a frame grabber. A frame grabber reads images from the cameras to the PC, allowing for a frame rate of up to 90 Hz from all cameras simultaneously at full resolution. In these experiments, a frame rate of 30 Hz is used.

2.2 Image rectification

To convert between the image coordinates and world coordinates, we need to rectify the images. Ideally, the tank in the experiment has both a transparent front and back wall. This allows shadows to be projected from the front wall onto the back wall, on which translucent paper or plastic would be mounted to increase the contrast of the shadows. Finally, cameras would image the projected shadows straight-on from the back of the tank. However, in our experimental facility only the front wall is transparent, so the cameras must view the projected shadows through the front wall and thus they are mounted on either side of the light source at an angle (see Fig. 1). The oblique viewing angle of the cameras distorts the images, which can be corrected with a linear rectification transformation; however, the short focal length of the camera lenses also creates lens distortion, which requires a higher-order transformation. To correct for both types of distortion, we apply a quadratic rectification transformation of the form

where (x, y) is a point in image coordinates, (X, Y) is a point in world coordinates, and \(b_1, b_2, ..., b_{14}\) are the transformation coefficients, adapted from the linear transformation described in Fujita et al. (1998). The coefficients are determined by finding a least-squares solution to the equation

where

Here, \((x_1, y_1), ..., (x_n, y_n)\) and \((X_1, Y_1), ..., (X_n, Y_n)\) are the locations of a set of n calibration points in image coordinates and world coordinates, respectively. The least-squares solution to this equation is

Each of the four camera views is calibrated from a set of 80 to 190 calibration points. To generate the calibration points directly on the back wall of the tank without any offset, a 0.9 m \(\times\) 1.2 m transparent acrylic plate marked with a grid pattern of opaque 5 mm circles is mounted in the tank against the front wall. Shadows of the circles are cast on the back wall and imaged by the camera array, and their known locations in both image and world coordinates are used to determine B.

2.3 Particle detection

The captured images appear bright with dark shadows cast by the plastic particles and by the free surface. Due to the position of the cameras, the real particles are sometimes visible in the images alongside the shadows. In order to avoid any effects from the real particles, we have made them white to minimize their contrast with the white background. We also focus the cameras on the back wall with the aperture fully open (producing a narrow depth of focus) so that the real particles will be out of focus. Example raw images are shown in Fig. 2, as well as a demonstration of the image processing method. The following steps are carried out to detect particle shadows in each image. First, the background is subtracted from the image. The background is obtained from a set of particle-free images taken before experiments start. The image intensity is then inverted so shadows show up as bright objects on a dark background. At this point, we use MATLAB’s adaptive binarization function to segment the image into bright and dark regions. Most of the bright regions are particle shadows, but there are some false positives: bubbles, shadows from the free surface, or actual particles captured in the camera view. The false positives are rejected by applying bounds on the object area and major and minor axis lengths; checking that its position is below the free surface; and checking that it is not a perfect double of an object right next to it, which can happen when a particle is near the back wall and the camera captures both the particle and its shadow.

Once the particle shadows are detected, five key points are extracted from each silhouette: the centroid and the endpoints of the major and minor axes. These key points correspond to the shadow position in image coordinates. To convert the key points into world coordinates, we use Eq. 1. Finally, the key points, in world coordinates, are converted into physically meaningful centroids, major and minor axis lengths, and tilt angles of the major axis using trigonometry. This process is repeated for the images from each of the four cameras taken at the same point in time. The data from all four cameras are then merged to obtain instantaneous particle silhouette information throughout the entire ROI. Because the camera views overlap slightly at the edges, duplicate overlapping particles sometimes exist; in this case, the particle farther from the edge of its image is kept, to ensure that we keep whichever particle is fully captured in the frame, and the other is discarded. This procedure is carried out for the entire image series.

Image processing steps to detect particle shadows: (a) a raw image from one camera (the right-most camera in this case); (b) image inversion and background subtraction; (c) adaptive binarization and particle detection, with the major and minor axes of the shadows shown with red and blue lines, respectively; (d) four simultaneous images covering the entire field of view; and (e) all detected particles

2.4 Orientation measurement

This imaging setup is also capable of measuring the orientation of non-spherical particles. We consider rods and disks in this section, and define a three-dimensional orientation vector \({\varvec{p}}\) as the unit vector passing through the particle’s axis of symmetry (Fig. 3). Our measurements are only in 2D, and thus we cannot measure \({\varvec{p}}\) explicitly. Nevertheless, because we know the particles’ true length (for rods) or diameter (for disks) \(D_p\), we can reconstruct the orientation of the detected particles from the tilt angle of the shadow’s major axis with respect to the horizontal, \(\theta\), as well as the apparent major axis length \(d_1\) (for rods) or minor axis length \(d_2\) (for disks) of its shadow (Fig. 4). A similar method is described in Baker and Coletti (2022) and is summarized here.

One obstacle in accurately measuring \(d_1\) and \(d_2\) is that the particle shadows are blurred by optical aberration from both the Fresnel lens and the scattering of light passing through the water and suspended particulates. To obtain \({\varvec{p}}\), the blur is corrected by subtracting an offset \(\delta\) from \(d_1\) or \(d_2\) for rods or disks, respectively. The offset \(\delta\) is found by taking advantage of the fact that one lengthscale of the particles should always be a constant length in the projected shadows, regardless of particle’s orientation. For rods, \(\delta\) is calculated from the mean minor axis length of the shadows, i.e. \(d_2\) in Fig. 4a. The minor axis length should correspond to the actual rod thickness, regardless of orientation; thus, \(\delta\) is the difference between the measured \(d_2\) and known rod thickness. Similarly for disks, \(\delta\) is calculated from the difference between the mean major axis length of the shadows, \(d_1\) in Fig. 4, and the known disk diameter. PDFs of the original and corrected axis lengths are shown in Fig. 5(a) (10 mm rods) and (b) (7 mm disks). If \(d_1 - \delta\) or \(d_2 - \delta\) is negative or greater than \(D_p\), it is not included in the results. Then, the formulas in Table 1 are used to compute the orientation \({\varvec{p}}\). Note that there will always be ambiguity in the out-of-plane orientation, and thus the sign of \(p_y\) cannot be determined from the 2D shadows.

2.5 Tracking

Once the particle centroids are obtained, the centroids are tracked between frames using a nearest-neighbor method. We define a search radius corresponding to the maximum distance a particle is expected to move from one snapshot to the next, which is about 10 mm; the tracking algorithm then links particles in each snapshot with their nearest neighbor within the search radius in the next snapshot. Occasionally, a tracked particle will not be detected for one or more frames before showing up again. We repair most of these tracks if five or fewer frames are skipped: we find tracks that end in the middle of the ROI and use the particle’s last known position and velocity to predict where it should be in the next five frames. If a new track starts within the search radius of one of those predictions, it is assumed to belong to the same particle and the tracks are joined, interpolating the particle position and orientation in the missing frame(s).

To remove measurement noise from the tracks, the particle positions in each track are convolved with a Gaussian smoothing kernel, G. They are also convolved with the first and second derivatives of the Gaussian kernel, \({\dot{G}}\) and \({\ddot{G}}\), respectively, to obtain velocities and accelerations (Tropea et al. 2007). The optimal width of the kernel \(t_k\) is determined from the particle acceleration variance as a function of kernel width: the smallest value for which the variance decays exponentially with kernel width is corresponds to \(t_k\) such that most of the noise is filtered out but most of the physical accelerations are not (Voth et al. 2002; Mordant et al. 2004; Gerashchenko et al. 2008; Nemes et al. 2017; Ebrahimian et al. 2019; Baker and Coletti 2021). Here, this corresponds to a duration of 5 successive snapshots, or 167 ms.

Similarly, \({\varvec{p}}\) is smoothed and the time rates of change of the orientation vector \(\dot{{\varvec{p}}}\) and \(\ddot{{\varvec{p}}}\) are obtained by convolving the components of \({\varvec{p}}\) in each track with the same G, \({\dot{G}}\), and \({\ddot{G}}\) kernels. A particle’s solid-body rotation rate is composed of a spinning component and a tumbling component, \({\varvec{\Omega }}=\Omega _p{\varvec{p}}+ {\varvec{p}}\times \dot{{\varvec{p}}}\), where spinning is rotation about the symmetry axis (\({\varvec{\omega }}_s = \Omega _p{\varvec{p}}\)) and tumbling is rotation of the symmetry axis (\({\varvec{\omega }}_t = {\varvec{p}}\times \dot{{\varvec{p}}}\)). We are unable to measure spinning motion with our shadow tracking. However, the tumbling component of angular velocity, and similarly the tumbling component of angular acceleration, can be calculated by

However, sign ambiguities can arise when the components of \({\varvec{p}}\) wrap around the limits of their ranges given in Table 1, which must be resolved before smoothing and differentiating to get \(\dot{{\varvec{p}}}\) and \(\ddot{{\varvec{p}}}\) (see Baker and Coletti 2022). Sign ambiguities are identified by finding tracks where any of the components of \({\varvec{p}}\) change sign or approach 0, 1, or -1, and are also a local minimum or maximum, which indicates a possible discontinuity. The ambiguity resolved by applying a minimum angular acceleration condition to the track. Four sets of sign changes are applied to the track after the discontinuity: (1) flip only \(p_x\) and \(p_z\), (2) flip only \(p_y\), (3) flip \(p_x\), \(p_y\) and \(p_z\), and (4) no sign changes. We chose the case with the minimum tumbling angular acceleration magnitude \(\ddot{{\varvec{p}}}\cdot \ddot{{\varvec{p}}}\), and propagate the sign change forward in time along the remainder of the track. Even though there are four possible choices of sign changes, generally the \(\ddot{{\varvec{p}}}\cdot \ddot{{\varvec{p}}}\) value associated with the correct set will be at least an order of magnitude lower than the other three.

3 Experimental demonstration

3.1 Experimental setup

Experiments are performed in the Washington Air-Sea Interaction Facility (WASIRF) (Long 1992; Masnadi et al. 2021), a long wave tank in which wind blows over the surface of the water (Fig. 6). The test section of the tank is 12.2 m long and 0.91 m wide, and is filled with tap water to a depth of 0.6 m, leaving 0.6 m of headspace for airflow. The top of the tank is covered by removable panels except where instrumentation must pass through. Wind is generated by a suction fan (Trane) that drives air through the headspace in the test section and recirculates it via an overhead duct; the windspeed is set to 16 m/s for these experiments. The water pump is not used; mean flow of the water is only due to the wind stress. A sloped foam beach is placed at the downstream end of the tank to prevent wave reflections. The region of interest (ROI) in which measurements are taken is centered on a fetch of 7.0 m. The ROI is 1 m long in the streamwise direction and spans the full width and depth of the tank.

We add plastic rods, disks, and roughly spherical nurdles spanning a range of sizes into the wind-driven flow. Particles are all made of high-density polyethylene (HDPE), which is positively buoyant in water. Large nurdles (4 mm diameter) are obtained from McMaster; small nurdles (2 and 2.5 mm diameter) are obtained from Cospheric. The rods are cut from 1.75 mm thick 3D-printing filament using a razor blade. The disks are cut from a 0.79 mm thick sheet using circular dies mounted to an arbor press. The dimensions of the particles are defined in terms of their axis lengths: a is the length of the axis of rotational symmetry (i.e., the axis through the length of a rod and through the thickness of a disk), and b is the length of the perpendicular axes. The major axis length (the larger of a and b) is denoted by \(D_p\). Table 2 summarizes the dimensions of the particle types used in this experiment.

3.2 Uncertainty analysis

The measurement error is estimated by imaging calibration particles which are glued to a glass plate. The images are analyzed using the algorithm described in sect. 2 to measure particle positions and orientations. Five particles of each type are glued to the plate. The plate is mounted at known orientations within the tank, giving the particles glued to the plate known orientations as well. These orientations are compared to those computed by the detection algorithm to obtain the uncertainties \(\beta _{p_x}\), \(\beta _{p_y}\), and \(\beta _{p_z}\). The distances between the particles glued on the plate are also known, so we compare these with the detected interparticle distances to obtain an uncertainty on particle centroid locations \(\beta _{x_p}\). The uncertainties on the centroid location and orientation are estimated as the mean absolute difference between measured and actual values. These measurement errors are reported in Table 3. Overall, the measurement error for the particle centroids is 0.25 mm, which is much smaller than the particle diameters (\(2 - 10\) mm) and the ROI (\(\sim 1\) m). The largest errors are present in the y component of the rod orientations. This is likely due to the variation in rod lengths caused by hand-cutting them; the standard deviation of the rod lengths is about 1 mm, or 5 to 10% of the mean, which introduces error into the projected lengths captured by the cameras.

3.3 Reconstructed tracks

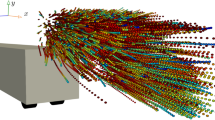

With this method, we are able to successfully reconstruct 2D projections of particle trajectories from a 3D volume and with 3D orientation information. A subset of the tracks of particle centroids is shown in Fig. 7.

This method returns long tracks, many of which span the entire 1-m field of view. Particles are temporarily undetectable near the wavy free surface, where the surface itself periodically blocks optical access; this breaks up many of the tracks that are near the surface, resulting in shorter tracks. Because the particles in the experiment are buoyant, they tend to rise to the free surface. The distribution of track lengths is shown in Fig. 8 for (a) all tracks and (b) tracks with at least one observation deeper than 0.1 m below the water surface (to avoid counting some of the tracks that are cut off by the wavy surface). Track length is computed by integrating particle speed over the duration of the track, \(\int _{t_{start}}^{t_{end}} (u^2 + w^2)^{1/2} dt\). Even in the challenging imaging conditions imposed by the free surface, 56% of the deeper set of tracks were greater than 0.2 m in total length.

Samples of these reconstructed tracks with 3D orientations for disks and rods are shown in Fig. 9. The tracks are subsampled so that every fifth snapshot is shown for clarity. The particle color corresponds to the instantaneous value of \(p_z\).

Reconstructed tracks of disks (a, c, e) and rods (b, d, f). The blue to red color scale indicates the instantenous value of \(p_z\), where blue corresponds to \(p_z=0\) (the particle axis \({\varvec{p}}\) is perpendicular to the z axis) and red corresponds to \(p_z=1\) (\({\varvec{p}}\) is parallel to the z axis)

4 Conclusion

We have presented a method for measuring 2D projections of particle position by tracking their shadows cast by a wide beam of collimated light. The novelty of this technique is its accurate tracking of particles and their orientations within a large volume with a relatively simple imaging setup. This method allows highly-resolved tracking of particles over long times and distances in a large volume (in this case a volume of 1 m \(\times\) 1 m \(\times\) 0.6 m), scales over which conventional methods of particle tracking struggle. The four-camera array also provides enough spatial resolution to reconstruct the 3D orientation of nonspherical disk and rod particles from the geometry of their shadows, even accounting for the blur due to the Fresnel lens.

The shadow tracking method is demonstrated with buoyant plastic particles of varying shape and size in a wind-wave tank. We demonstrated that we were able to track particles throughout almost the entire field of view, enabling long trajectories, and were only limited by when particles rose to the free surface where we were unable to image them. We were also able to successfully reconstruct their 3D orientations. This imaging method provides a new way to obtain robust Eulerian and Lagrangian statistics of quantities such as particle velocity, depth, and orientation, which is challenging for conventional imaging methods over large length and time scales.

We expect this technique to be useful in large tanks and channels with a working fluid of either air or water where particle behavior and transport are of interest. The demonstrated experiments have particle image densities of O(10 m\(^{-2}\)). However, the method could be reliably extended to number densities of at least O(100 m\(^{-2}\)). Additionally, we anticipate that particles smaller than those tested here could also be tracked. Tracking smaller particles would be assisted by increasing the image contrast. Higher contrast could be enabled by using a brighter light source, more sensitive cameras, or highly-filtered water or air to reduce light scattering. In applications where particle orientation is not needed, less resolution is needed and thus fewer cameras are necessary, which would further reduce the complexity and cost of the experiment.

References

Baker LJ, Coletti F (2021) Particle-fluid-wall interaction of inertial spherical particles in a turbulent boundary layer. J Fluid Mech 908:A39

Baker LJ, Coletti F (2022) Experimental investigation of inertial fibres and disks in a turbulent boundary layer. J Fluid Mech 943:A27

Bröder D, Sommerfeld M (2007) Planar shadow image velocimetry for the analysis of the hydrodynamics in bubbly flows. Meas Sci Technol 18(8):2513

Ebrahimian M, Sanders RS, Ghaemi S (2019) Dynamics and wall collision of inertial particles in a solid-liquid turbulent channel flow. J Fluid Mech 881:872–905

Fong K, Coletti F (2022) Experimental analysis of particle clustering in moderately dense gas-solid flow. J Fluid Mech. https://doi.org/10.1017/jfm.2021.1024

Fong K, Xue X, Osuna-Orozco R et al (2022) Two-fluid coaxial atomization in a high-pressure environment. J Fluid Mech 946:A4

Fu S, Biwole P, Mathis C (2015) Particle tracking velocimetry for indoor airflow field: A review. Build Environ 87:34–44

Fujita I, Muste M, Kruger A (1998) Large-scale particle image velocimetry for flow analysis in hydraulic engineering applications. J Hydraul Res 36(3):397–414

Gerashchenko S, Sharp NS, Neuscamman S et al (2008) Lagrangian measurements of inertial particle accelerations in a turbulent boundary layer. J Fluid Mech 617:255–281

Geyer R, Jambeck J, Law K (2017) Production, use, and fate of all plastics ever made. Sci Adv 3(7):e1700782

Godbersen P, Bosbach J, Schanz D et al (2021) Beauty of turbulent convection: a particle tracking endeavor. Phys Rev Fluids 6(11):110–509

Hessenkemper H, Ziegenhein T (2018) Particle shadow velocimetry (PSV) in bubbly flows. Int J Multip Flow 106:268–279

Hou J, Kaiser F, Sciacchitano A et al (2021) A novel single-camera approach to large-scale, three-dimensional particle tracking based on glare-point spacing. Exp Fluids 62(5):1–10

Huhn F, Schanz D, Gesemann S et al (2017) Large-scale volumetric flow measurement in a pure thermal plume by dense tracking of helium-filled soap bubbles. Exp Fluids 58(9):1–19

Kim D, Schanz D, Novara M et al (2022) Experimental study of turbulent bubbly jet. Part 1. Simultaneous measurement of three-dimensional velocity fields of bubbles and water. J Fluid Mech 941:A42

Kukulka T, Proskurowski G, Morét-Ferguson S et al (2012) The effect of wind mixing on the vertical distribution of buoyant plastic debris. Geophys Res Lett. https://doi.org/10.1029/2012GL051116

Long S (1992) NASA wallops flight facility air-sea interaction research facility. National Aeronautics and Space Administration, Scientific and Technical Information Program

Masnadi N, Chickadel C, Jessup A (2021) On the thermal signature of the residual foam in breaking waves. J Geophys Res Oceans 126(1):e202JC0016511

Mordant N, Crawford AM, Bodenschatz E (2004) Experimental Lagrangian acceleration probability density function measurement. Physica D 193(1–4):245–251

Nemes A, Dasari T, Hong J et al (2017) Snowflakes in the atmospheric surface layer: observation of particle-turbulence dynamics. J Fluid Mech 814:592–613

Petersen AJ, Baker L, Coletti F (2019) Experimental study of inertial particles clustering and settling in homogeneous turbulence. J Fluid Mech 864:925–970

Rosi G, Sherry M, Kinzel M et al (2014) Characterizing the lower log region of the atmospheric surface layer via large-scale particle tracking velocimetry. Exp Fluids 55(5):1–10

Schröder A, Schanz D, Bosbach J et al (2022) Large-scale volumetric flow studies on transport of aerosol particles using a breathing human model with and without face protections. Phys Fluids 34(3):035–133

Tan S, Salibindla A, Masuk A et al (2020) Introducing OpenLPT: new method of removing ghost particles and high-concentration particle shadow tracking. Exp Fluids 61(2):1–16

Thoman T, Kukulka T, Gamble K (2021) Dispersion of buoyant and sinking particles in a simulated wind-and wave-driven turbulent coastal ocean. J Geophys Res: Oceans 126(3):e2020JC016868

Toloui M, Riley S, Hong J et al (2014) Measurement of atmospheric boundary layer based on super-large-scale particle image velocimetry using natural snowfall. Exp Fluids 55(5):1–14

Tropea C, Yarin AL, Foss J. F. (2007) Springer handbook of experimental fluid mechanics. Springer, Berlin

van Sebille E, Wilcox C, Lebreton L et al (2015) A global inventory of small floating plastic debris. Environ Res Lett 10(12):124006

Voth GA, La Porta A, Crawford AM et al (2002) Measurement of particle accelerations in fully developed turbulence. J Fluid Mech 469:121–160

Wei N, Brownstein I, Cardona J et al (2021) Near-wake structure of full-scale vertical-axis wind turbines. J Fluid Mech 914:A17

Zheng S, Longmire E (2014) Perturbing vortex packets in a turbulent boundary layer. J Fluid Mech 748:368–398

Acknowledgements

We thank A. Aggarwal, I. Garrey, J.E. Chávez-Dorado, and C. Abarca for their assistance in performing the experiments.

Funding

This work is supported by the National Science Foundation Division of Ocean Sciences under Grant No. 2126193.

Author information

Authors and Affiliations

Contributions

Both authors contributed to the study conception and design. Data collection and analysis were performed by LB under the supervision of MD. The manuscript was drafted by LB and both authors commented on the manuscript. Both authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Baker, L., DiBenedetto, M. Large-scale particle shadow tracking and orientation measurement with collimated light. Exp Fluids 64, 52 (2023). https://doi.org/10.1007/s00348-023-03578-y

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00348-023-03578-y