Abstract

We present an optical system that performs polarimetric spectral imaging with a detector with no spatial resolution. This is made possible by applying the theory of compressive sampling to the data acquired by a sensor composed of an analyzer followed by a commercial fiber spectrometer. The key element in the measurement process is a digital micromirror device, which sequentially generates a set of intensity light patterns to sample the object image. For different configurations of the analyzer, we obtain polarimetric images that provide information about the spatial distribution of light polarization at several spectral channels. Experimental results for colorful objects are presented in a spectral range that covers the visible spectrum and a part of the NIR range. The performance of the proposed technique is discussed in detail, and further improvements are suggested.

1 Introduction

Multispectral imaging (MI) is a useful optical technique that provides two-dimensional images of an object for a set of specific wavelengths within a selected spectral range. Dispersive elements (such as prisms or gratings), filter wheels or tunable band-pass filters are typical components used in MI systems to acquire the spectral content of an image [1]. In certain applications, MI can be improved by adding spatially resolved information about the light polarization. Multispectral polarimetric imaging facilitates the analysis and identification of soils [2], plants [3] and surfaces contaminated with chemical agents [4]. In the field of biomedical optics, multispectral polarimetric imaging has been applied to the characterization of human colon cancer [5] and the pathological analysis of skin [6]. In many cases, polarimetric analysis can be performed by simply including a linear polarizer in an imaging system to record images for various selected orientations of its transmission axis [6, 7]. A simple configuration that includes two orthogonal polarizers integrated in a spectral system has been used for noninvasive imaging of the microcirculation through mucous membranes and on the surface of solid organs [7]. An illustrative example of a spectral camera with polarimetric capability is a system that combines an acousto-optic tunable filter with a liquid-crystal-based polarization analyzer [8].

In an apparently different context, compressive sampling (CS) has emerged in recent years as a novel sensing theory that goes beyond the Shannon–Nyquist limit [9]. In the field of imaging, CS states that an N-pixel image of an object can be reconstructed from M < N linear measurements. This sub-Nyquist condition is achieved by exploiting the “sparsity” of natural images. According to this property, when images are expressed in a proper function basis, most terms are negligible or zero-valued. CS theory ensures that it is possible to reconstruct the object images from a relatively small collection of well-chosen measurements, typically by an iterative acquisition process. The object reconstruction is obtained from experimental data by solving a convex optimization program.

One of the most outstanding applications of CS is the design of a single-pixel camera [10, 11], which offers promising benefits in spectral regions where image sensors are impractical or nonexistent [12]. In contrast to conventional image sensors, which typically perform intensity measurements, single-pixel detectors can provide information about other properties of an incoming light field, such as its spectrum or its polarization. Replacing the photodiode of a CS single-pixel camera with a spectrometer without spatial resolution makes it possible to perform hyperspectral imaging [13, 14]. In the same way, single-pixel polarimetric imaging has been demonstrated with a CS camera that includes a commercial beam polarimeter [15]. CS has also been applied to biological fluorescence microscopy [16]. In this case, the CS fluorescence microscope includes a photomultiplier tube as a point detector, since biological samples often have low fluorescence.

In this work, we present a CS imaging system able to provide spatially resolved information about the spectrum and the polarization of the light reflected by an object. As a detector, we use a polarization analyzer followed by a fiber spectrometer with no spatial resolution. The key element of our system is a digital micromirror device (DMD), which makes the CS acquisition process possible. To this end, a set of binary intensity patterns is sequentially generated by the DMD to sample the image of an object of interest. The experimental data are subsequently processed to obtain a set of multispectral data cubes, one for each selected configuration of the analyzer. For a given spectral channel, the corresponding polarimetric images can be linearly combined to derive the spatial distribution of the Stokes parameters of light [8, 15]. In this sense, the single-pixel system described in this paper is a first step toward the realization of an imaging Stokes polarimeter like that in Ref. [15], but with the ability to perform polarization analysis over a wide range of wavelengths.

2 Outline of compressive sampling

The basis of single-pixel imaging by CS can be briefly presented as follows. Let us consider a sample object whose N-pixel image is arranged in an N × 1 column vector \({\mathbf{x}}\). This image is assumed to be compressible when it is expressed in terms of a basis of functions, \(\boldsymbol{\Psi} = \{ \boldsymbol{\Psi}_{l} \}\) (l = 1, …, N). From a mathematical point of view, \({\mathbf{x}}\) can be written as \({\mathbf{x}} = \boldsymbol{\Psi}{\mathbf{s}}\), where \(\boldsymbol{\Psi}\) is an N × N matrix that has the vectors \(\{ \boldsymbol{\Psi}_{l} \}\) as columns and \({\mathbf{s}}\) is the N × 1 vector composed of the expansion coefficients. The assumed sparsity of the image implies that only a small group of these coefficients is nonzero. In order to determine \({\mathbf{x}}\), we implement an experimental system able to measure the projections of the object image on a set of M intensity patterns \(\boldsymbol{\varphi}_{m}\) (m = 1, …, M) of N-pixel resolution. This acquisition process can be written as

\[{\mathbf{y}} = \boldsymbol{\Phi}{\mathbf{x}} = \boldsymbol{\Phi}\boldsymbol{\Psi}{\mathbf{s}} = \boldsymbol{\Theta}{\mathbf{s}}, \tag{1}\]

where \({\mathbf{y}}\) is the M × 1 column formed by the measured projections, and \(\boldsymbol{\Phi}\) is the M × N sensing matrix. Each row of \(\boldsymbol{\Phi}\) is an intensity pattern \(\boldsymbol{\varphi}_{m}\), and the product of \(\boldsymbol{\Phi}\) and \(\boldsymbol{\Psi}\) gives the M × N matrix \(\boldsymbol{\Theta}\) acting on \({\mathbf{s}}\). The underlying mathematical formalism of CS states that there is a high probability of reconstructing \({\mathbf{x}}\) from a random subset of coefficients \(\left( {M < N} \right)\) in the \(\boldsymbol{\Psi}\) domain. Equation (1) constitutes an underdetermined matrix relation, so it must be solved by means of a proper reconstruction algorithm. The best strategy to perform this step is based on the minimization of the \(l_{1}\)-norm of \({\mathbf{s}}\) subject to the constraint given by Eq. (1). As the measurements \(\{ y_{m} \}\) are affected by noise, the CS recovery process is usually reformulated with inequality constraints [9, 10]. In this case, the proposed reconstruction \({\mathbf{x}}^{{\mathbf{*}}}\) is given by \({\mathbf{x}}^{{\mathbf{*}}} = \boldsymbol{\Psi}{\mathbf{s}}^{{\mathbf{*}}}\), where \({\mathbf{s}}^{{\mathbf{*}}}\) is the solution of the optimization program

\[\min_{{\mathbf{s}}} \left\| {\mathbf{s}} \right\|_{l_{1}} \quad {\text{subject to}} \quad \left\| {\mathbf{y}} - \boldsymbol{\Theta}{\mathbf{s}} \right\|_{l_{2}} \le \varepsilon, \tag{2}\]

where ε is an upper bound of the noise magnitude and the \(l_{2}\)-norm is used to express the measurement constraint.
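To make the recovery step concrete, the following is a minimal sketch of sparse reconstruction on a toy problem. It is not the primal–dual solver used later in this paper: it swaps in iterative soft thresholding (ISTA) applied to the penalized form \(\tfrac{1}{2}\| {\mathbf{y}} - \boldsymbol{\Theta}{\mathbf{s}} \|_{2}^{2} + \lambda \| {\mathbf{s}} \|_{1}\), which is a common stand-in for the constrained program. The sizes, the penalty `LAM`, the random seed, the 1-sparse test signal and the choice of the pixel basis as sparsity basis (so that Θ = Φ) are all assumptions made for illustration.

```python
import random


def hadamard(n):
    """Sylvester construction; n is assumed to be a power of two."""
    h = [[1]]
    while len(h) < n:
        h = [r + r for r in h] + [r + [-v for v in r] for r in h]
    return h


def soft_threshold(v, t):
    """Shrink v toward zero by t (proximal operator of t*|v|)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def objective(theta, y, s, lam):
    """0.5*||y - theta s||_2^2 + lam*||s||_1."""
    residual = [yi - sum(t * sj for t, sj in zip(row, s)) for row, yi in zip(theta, y)]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(v) for v in s)


def ista(theta, y, lam, n_iter=500):
    """Iterative soft thresholding with unit step size.

    The unit step is valid here only because the rows of theta are
    orthonormal, so the largest eigenvalue of theta^T theta is 1.
    """
    m, n = len(theta), len(theta[0])
    s = [0.0] * n
    for _ in range(n_iter):
        residual = [y[i] - sum(theta[i][j] * s[j] for j in range(n)) for i in range(m)]
        s = [soft_threshold(s[j] + sum(theta[i][j] * residual[i] for i in range(m)), lam)
             for j in range(n)]
    return s


# Toy problem (all values hypothetical): N = 16 unknowns, M = 10 measurements.
N, M, LAM = 16, 10, 0.01
rows = random.Random(0).sample(range(N), M)          # random subset of patterns
H = hadamard(N)
theta = [[v / N ** 0.5 for v in H[i]] for i in rows]  # orthonormal rows
s_true = [0.0] * N
s_true[5] = 3.0                                       # 1-sparse signal
y = [sum(t * s for t, s in zip(row, s_true)) for row in theta]  # noiseless data
s_hat = ista(theta, y, LAM)
```

In this noiseless toy case the estimate `s_hat` is expected to concentrate on index 5, with an amplitude slightly below 3 because the \(l_{1}\) penalty shrinks the coefficients. Real data would call for a tested solver and a value of λ (or ε) matched to the measurement noise.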

3 Description of the polarimetric imaging spectrometer

3.1 Optical system

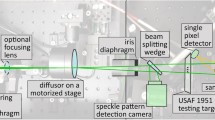

The scheme of our polarimetric spectral camera is shown in Fig. 1a. A white light source illuminates a sample object, and a CCD camera lens images the object on a DMD, which is a reflective spatial light modulator that selectively redirects parts of an input light beam [17]. A DMD consists of an array of electronically controlled micromirrors that can rotate about a hinge, as schematically depicted in Fig. 1b. Every micromirror is positioned over a CMOS memory cell. The angular position of a specific micromirror admits two possible states (+12° and −12° with respect to a common direction), depending on the binary state (logic 0 or 1) of the corresponding CMOS memory cell. In this way, the light can be reflected at two angles depending on the signal applied to the mirror. The DMD of our system is a Texas Instruments device (DLP Discovery 4100) with a resolution of 1,920 × 1,080 micromirrors and a panel size of 0.95″. The mirror pitch is 10.8 μm, and the fill factor is greater than 0.91. Optical system 1 has its optical axis forming an angle with respect to the normal to the DMD panel that approximately corresponds to twice the tilt angle of the device mirrors (24°). As shown in Fig. 1c, in such a configuration, a micromirror oriented at +12° reflects the light perpendicularly to the panel into the next part of the system, appearing as a bright pixel (ON state). In turn, micromirrors oriented at −12° appear as dark pixels (OFF state). The light emerging from the bright pixels of the DMD is collected by a second lens system similar to the first one (optical system 2 in Fig. 1a). This lens system couples the light into a silica multimode fiber with a diameter of 1,000 μm and a spectral range of 220–1,100 nm, which is connected to a commercial concave grating spectrometer (Black Comet CXR-SR from StellarNet). The wavelength resolution of this spectrometer is 8 nm (with a slit of 200 μm), and the maximum signal-to-noise ratio (SNR) is 1,000:1. Just before the fiber, the light passes through a configurable polarization analyzer. In our camera, this analyzer consists of a film polarizer mounted in a rotating holder.
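The ON/OFF geometry described above follows from the law of reflection. As a short worked check, assume that all angles are measured from the normal to the DMD panel, that the illumination arrives at \(\theta_{\text{in}} = 24^\circ\) and that a mirror is tilted by \(\alpha = \pm 12^\circ\) (the sign convention and the symbols \(\theta_{\text{in}}\), \(\theta_{\text{out}}\) and \(\alpha\) are introduced here for illustration only). The reflected direction is then

\[\theta_{\text{out}} = 2\alpha - \theta_{\text{in}}, \qquad \theta_{\text{out}}^{\text{ON}} = 2(12^\circ) - 24^\circ = 0^\circ, \qquad \theta_{\text{out}}^{\text{OFF}} = 2(-12^\circ) - 24^\circ = -48^\circ .\]

Under these assumptions, light from ON mirrors leaves along the panel normal toward optical system 2, whereas light from OFF mirrors is deflected well away from the collection optics.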

3.2 Operation principle

The CS single-pixel camera shown in Fig. 1a performs the iterative acquisition process summarized in Eq. (1). The DMD sequentially produces the set of M irradiance patterns of N-pixel resolution that compose the sensing matrix \(\boldsymbol{\Phi}\). The collected data consist of a succession of spectra, one for each pattern sent to the DMD. The M irradiances measured by the spectrometer for each spectral channel form the vector \({\mathbf{y}}\) of Eq. (1). As spectral channels have a preset bandwidth, the quantities \(\{ y_{m} \}\) that feed the CS algorithm are actually an average of the measured irradiances within the considered range.
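The channel averaging described above can be sketched as follows. This is a hedged illustration rather than the acquisition code used in this work: the function names, the inclusive band limits and the sample numbers are assumptions, and any normalization by a reference spectrum is left out.

```python
def channel_average(wavelengths_nm, irradiance, center_nm, half_width_nm):
    """Mean irradiance over the samples with |lambda - center| <= half_width."""
    band = [v for w, v in zip(wavelengths_nm, irradiance)
            if abs(w - center_nm) <= half_width_nm]
    if not band:
        raise ValueError("no spectrometer samples fall inside the channel")
    return sum(band) / len(band)


def measurement_vector(wavelengths_nm, spectra, center_nm, half_width_nm):
    """Build y for one spectral channel; spectra[m] is the spectrum for pattern m."""
    return [channel_average(wavelengths_nm, s, center_nm, half_width_nm)
            for s in spectra]


# Hypothetical data: five spectrometer samples, two DMD patterns.
wavelengths = [590, 600, 610, 620, 630]
spectra = [[1.0, 2.0, 3.0, 4.0, 5.0],
           [2.0, 2.0, 2.0, 2.0, 2.0]]
y_610 = measurement_vector(wavelengths, spectra, center_nm=610, half_width_nm=10)
```

Each spectral channel yields its own vector of M values, so a single sweep of DMD patterns supplies the data for every channel at once.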

For the practical implementation of the CS acquisition process, it is essential to determine which incoherent basis should be selected (when no prior information on the object is accessible). A suitable choice is the set of Hadamard–Walsh functions, which constitute a basis known to be incoherent with the Dirac basis [10]. Hadamard matrices are square arrays of plus and minus ones, whose rows (and columns) are mutually orthogonal. Each row of a Hadamard matrix can be interpreted as a rectangular wave taking the values ±1 (Walsh function). In this context, the Hadamard matrix performs the decomposition of a function into a set of rectangular waveforms, instead of the usual harmonic waveforms associated with the Fourier transform [18]. From an experimental point of view, a CS acquisition process using the Hadamard–Walsh basis can be carried out by generating a collection of binary intensity patterns, which are easily displayed on a DMD. To represent a Hadamard function \(H\) on the DMD, we use two binary patterns \(H^{+} = (J + H)/2\) and \(H^{-} = (J - H)/2\) that are related by \(H = H^{+} - H^{-}\). Here, J is the matrix consisting of all 1s. By generating \(H^{+}\) and \(H^{-}\) sequentially and subtracting the measured intensities, we obtain the intensity that corresponds to \(H\). In principle, when the total light intensity is known, the acquisition process can be performed by using only one of the above sequences of binary patterns, taking a single measurement for every Hadamard function. This would reduce the acquisition time by a factor of two. However, this procedure is sensitive to fluctuations of the source intensity. In such a common situation, the method based on two successive measurements increases the signal-to-noise ratio (SNR) of the acquired data.
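The complementary-pattern scheme can be illustrated with a short sketch. It assumes the Sylvester construction of the Hadamard matrix, a flattened one-dimensional scene and an idealized noiseless detector; the scene values and function names are hypothetical.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1]]
    while len(h) < n:
        # [[H, H], [H, -H]] doubles the order at each step
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def split_pattern(h_row):
    """Binary DMD patterns H+ = (J + H)/2 and H- = (J - H)/2 for one function."""
    h_plus = [(1 + v) // 2 for v in h_row]
    h_minus = [(1 - v) // 2 for v in h_row]
    return h_plus, h_minus


def measure(pattern, scene):
    """Idealized single-pixel reading: total light sent on by the ON mirrors."""
    return sum(p * x for p, x in zip(pattern, scene))


H = hadamard(8)
scene = [0.0, 0.2, 0.9, 0.4, 0.0, 0.7, 0.1, 0.3]   # hypothetical 8-pixel object
row = H[3]
h_plus, h_minus = split_pattern(row)
# Difference of the two binary readings equals the projection on the +/-1 pattern.
y = measure(h_plus, scene) - measure(h_minus, scene)
```

Because any slowly varying offset in the source intensity enters both readings, taking the difference is one plausible reason why the two-measurement scheme is more robust than a single reading per function.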

The sequential measurement process requires synchronization between the DMD and the fiber spectrometer with the aid of a computer (not shown in Fig. 1). For each intensity pattern generated by the DMD, the spectrum of the light coming from the object is measured by the spectrometer, configured with an integration time that ensures an acceptable SNR for the acquired data. The minimum integration time provided by our spectrometer (1 ms) represents the main limiting factor on the measurement rate of our device, since DMDs are modulators that can work at relatively high frequencies (above 1 kHz). For a given acquisition frequency, the total time required to take image data increases with the number of measurements, which is, in accordance with CS theory, a pre-established fraction of the image resolution. Therefore, a single-pixel camera shows a trade-off between image resolution and image acquisition time.

In our experimental setup, the measurement process is executed and controlled by means of custom software written in LabVIEW. The off-line CS reconstruction uses the l1eq-pd code, which solves the standard basis pursuit problem using a primal–dual algorithm [19]. This code belongs to a collection of MATLAB routines and is a well-tested algorithm for CS problems. However, other choices are possible and, in fact, the search for improved CS algorithms (more robust to data noise, with lower computation time, etc.) is currently an active area in the field of convex optimization (e.g., see Ref. [20]).

4 Experimental results

4.1 Numerical analysis

The aim of CS is to provide an accurate reconstruction of an object from an undersampled signal, but the exact number of measurements needed to attain it is not known a priori. In addition, this number strongly depends on the features of the object under consideration. For this reason, when CS single-pixel imaging is attempted, it is useful to begin with relatively low-resolution reconstructions to estimate the parameters of the acquisition process. In accordance with this approach, and also to evaluate the image quality achievable with our camera, we performed multispectral imaging by sending to the modulator Hadamard–Walsh patterns of 64 × 64 unit cells (N = 4,096), each one composed of 8 × 8 DMD pixels. The covered square window on the modulator panel had a width of ~5.5 mm. As a sample object, we used two square capacitors with a width of 7 mm. The illumination source was a xenon white light lamp, and the polarization analyzer was removed. The number of measurements was M = 4,096, which allowed us to fulfill the Nyquist criterion. Eight central wavelengths \(\lambda_{0}\) were selected in the visible spectrum. The bandwidth of the corresponding spectral channels was 20 nm \(\left( {\lambda_{0} \pm 10{\text{ nm}}} \right)\). In order to determine the object spectral reflectance, a spectrum was taken from a white reference (Spectralon diffuse 99 % reflectance target from Labsphere, Inc.) to normalize the spectra measured during the CS acquisition process. The integration time of the spectrometer was set at 300 ms.
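The quoted window width can be checked from the pattern geometry and the 10.8 μm mirror pitch given in Sect. 3.1. As a short worked calculation (the symbol \(w\) is introduced here for illustration):

\[w = 64 \times 8 \times 10.8\ \mu{\text{m}} = 512 \times 10.8\ \mu{\text{m}} = 5529.6\ \mu{\text{m}} \approx 5.5{\text{ mm}} .\]

The window therefore spans 512 micromirrors per side, well within the 1,080 rows of the panel.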

For each spectral channel, we ran the off-line CS algorithm with the complete set of measurements (M = N). After suitable filtering, the recovered matrix served as a reference (lossless) image \(I_{\text{ref}}(i, j)\), where (i, j) indicates the location of an arbitrary image pixel. The reconstruction process was then repeated using decreasing fractions of the total number of pixels. Specifically, the value of M was varied from 5 to 90 % of N. The fidelity of the reconstructed images was estimated by calculating the mean square error (MSE), given by

\[{\text{MSE}} = \frac{1}{N}\sum\limits_{i,j} {\left[ {I_{\text{ref}} (i,j) - I(i,j)} \right]^{2} }, \tag{3}\]

where \(I(i, j)\) is the noisy image obtained for a given value of M. We used another metric to evaluate the quality of the reconstruction, the so-called peak signal-to-noise ratio (PSNR), which is defined as the ratio between the maximum possible power of a signal and the power of the noise that affects the fidelity of its representation. In mathematical terms [21],

\[{\text{PSNR}} = 10\log_{10} \left( {\frac{{I_{\max }^{2} }}{{\text{MSE}}}} \right). \tag{4}\]

Here, \(I_{\max}\) is the maximum possible pixel value of the reference image. For each spectral channel, the reference images were represented by \(2^{8}\) gray levels, so \(I_{\max} = 255\). Figure 2a, b shows the curves for the MSE and the PSNR versus M for the different values of \(\lambda_{0}\). As expected, both figures show that the image quality improves as the number of measurements grows and approaches the Nyquist limit. However, when M ≥ 0.4 N, the slope of both curves becomes visibly smaller for all spectral channels. In the case, for instance, of \(\lambda_{0}\) = 610 nm, MSE ≈ 0.13 % of \((I_{\max})^{2}\) and PSNR = 28.72 dB for M = 0.4 N (the PSNR for 0.9 N is only somewhat greater, 29.10 dB).
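The two quality metrics can be computed with a few lines of code. The sketch below assumes images stored as nested lists of gray levels and uses the standard definitions of MSE and PSNR; the function names and the 2 × 2 test images are hypothetical.

```python
import math


def mse(reference, image):
    """Mean square error between two images given as lists of rows."""
    n_pixels = sum(len(row) for row in reference)
    total = sum((r - v) ** 2
                for ref_row, img_row in zip(reference, image)
                for r, v in zip(ref_row, img_row))
    return total / n_pixels


def psnr(reference, image, i_max=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, image)
    return math.inf if err == 0 else 10 * math.log10(i_max ** 2 / err)


# Hypothetical 2 x 2 example: one pixel differs by 10 gray levels.
ref = [[0, 255], [255, 0]]
img = [[0, 255], [255, 10]]
error = mse(ref, img)
quality_db = psnr(ref, img)
```

Since the PSNR depends only on the ratio of the squared peak value to the MSE, the two curves in Fig. 2 carry the same information on different scales.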

If images of higher resolution are considered, the priority is to minimize the value of M, since even small fractions of N can imply a huge volume of data feeding the CS algorithm, which, in addition, operates with higher dimensional matrices. The consequence is a dramatic increase in the total computation time. In our system, it is possible to change the SNR of the reconstructed image (for a given value of M) by controlling the spectrometer integration time, since the noise of the data used in the CS algorithm depends considerably on this parameter. To illustrate this point, the whole CS acquisition process was repeated for different values of the integration time. The number of measurements in all series was M = 0.2 N. The resulting curves for the PSNR versus \(\lambda_{0}\) are shown in Fig. 3. As can be observed, the PSNR increases by approximately 8 dB when the integration time is varied from 50 to 300 ms.

4.2 Multispectral imaging

As a first application of our camera, we performed multispectral imaging of a sample object composed of an unripe cherry tomato together with a red one. The Hadamard–Walsh patterns addressed to the DMD had a resolution of 256 × 256 unit cells (N = 65,536). Each unit cell was composed of 2 × 2 DMD pixels. With this resolution, in accordance with the discussion of the previous section, the number of measurements was chosen to be \(M = 81^{2}\) (6,561), which corresponds to ~10 % of N (i.e., a compression rate of 10:1). The integration time of each spectrometer measurement was 300 ms.

The object spectral reflectance was determined by means of the white reference used in Sect. 4.1. In the case of plants, this quantity has been used, for example, to investigate the chlorophyll content in leaves [22, 23]. The noisy data collected by the spectrometer for wavelengths lower than 500 nm imposed a lower bound on the usable spectral range. The results of the CS reconstruction for 15 spectral channels are shown in Fig. 4. The selected central wavelengths \(\lambda_{0}\) in the visible spectrum (VIS) range from 510 to 680 nm. The bandwidth of each spectral channel was 10 nm \(\left( {\lambda_{0} \pm 5{\text{ nm}}} \right)\). The recovered images were pseudo-colored, and the color assignment (the wavelength to RGB transform) was carried out with the aid of standard XYZ color-matching functions [24].

Fig. 4 Multispectral image cube reconstructed by means of the CS algorithm. In the VIS spectrum, the reflectance for each spectral channel is a 256 × 256 pseudo-colored image. A gray-scale representation is used for the CS reconstruction in the NIR spectrum range. A color image of the scene made up from the conventional RGB channels is also included

In the near-infrared spectrum, the CS algorithm provided an acceptable reconstruction around 860 nm, which is presented by means of a gray-level image. Figure 4 also includes a colorful image of the object obtained from the combination of the conventional three RGB channels.

4.3 Polarimetric multispectral imaging

In this case, the sample object was the same as that used in Sect. 4.1, but the light emerging from each element of the scene had a different linear polarization. This effect was achieved by placing after the object a linear polarizer with its area split in two parts, whose transmission axes were oriented in orthogonal directions (0° and 90°, respectively). The resolution of the patterns addressed to the DMD was 128 × 128 unit cells (N = 16,384), each composed of 4 × 4 DMD pixels. The number of measurements was \(M = 57^{2}\) (3,249), which corresponds to ~20 % of N (i.e., a compression rate of 5:1). As the source light was in principle unpolarized, half of the light was lost after the object polarizer, so the integration time of the spectrometer was increased to 500 ms. The white reference of Sect. 4.1 was employed again to normalize the measured spectra. Eight central wavelengths \(\lambda_{0}\) were selected in the VIS spectrum. The bandwidth of the channels was 20 nm \(\left( {\lambda_{0} \pm 10{\text{ nm}}} \right)\). Aside from the channels at the boundaries of the spectral range, the values of \(\lambda_{0}\) correspond to the peak emissions of commercial light-emitting diodes. For each channel, four orientations of the polarization analyzer were sequentially considered in separate measurement series. To simplify data display, image reconstructions are arranged in a table, as can be observed in Fig. 5. Each column corresponds to a spectral channel, and each row shows the results for a given orientation of the analyzer. As in Fig. 4, a color image of the object is also presented. This RGB image was made up from the data taken for the second configuration of the analyzer (45°). The result for 680 nm is presented by means of a gray-level image due to its proximity to the near-infrared range.

5 Discussion and conclusions

We have performed polarimetric multispectral imaging by using a detector with no spatial resolution, which is composed of a configurable polarization analyzer and a commercial spectrometer. This single-pixel camera employs a DMD that generates a collection of binary intensity patterns that sample the image of the object under study. For a given analyzer configuration, a succession of spectra is sequentially acquired (one for each DMD realization). From these data, the object spectral image cube is recovered off-line by means of a CS algorithm, which makes it possible to work below the Nyquist limit; that is, the total number of measurements is a fraction of the number of image pixels.

In contrast to cameras based on tunable band-pass filters, which carry out a wavelength sweep to measure the spectral content, our system collects the spectral information of all channels at once (albeit at the expense of sequentially acquiring the spatial information). As a result, the number of channels, their spectral resolution and the total wavelength range are those provided by the spectrometer integrated in the system. This makes it easy to exploit the high performance of commercial devices. Our spectral system can in principle cover the whole VIS spectrum and part of the NIR range (up to 1.1 μm). In the infrared region, conventional multispectral systems require pixelated sensors specifically designed for that wavelength range (like InGaAs cameras).

As in other multispectral systems, the illumination is a key issue for ensuring a minimum signal across the selected spectral range. We have used a high-power Xe arc lamp, which produces a continuous and roughly uniform spectrum across the VIS region and a complex line spectrum in the 750–1,000 nm region of the NIR range. However, the decreasing source irradiance at the “blue” side of the VIS spectrum, as well as the low reflectance of samples in that region, limited our spectral range to wavelengths higher than 470 nm.

As discussed in the previous sections, our single-pixel camera presents a trade-off between image resolution and total acquisition time. Increasing the illumination level or reducing the spectral resolution (which permits lower integration times) could reduce the acquisition time by at least one order of magnitude. A comparable drawback can be found in cameras that employ acousto-optic or liquid crystal tunable filters. In such systems, the higher the spectral resolution (number of channels), the longer the acquisition time, with a strong dependence on the exposure time of the pixelated sensor used as a detector. For image resolutions similar to those presented in this work, a hyperspectral camera (i.e., with more than 100 spectral channels) can take a few minutes to acquire a data cube [25].
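A rough feel for this trade-off can be obtained from a back-of-the-envelope estimate. The sketch below is not a figure reported in this work: it assumes that the spectrometer integration time dominates each measurement, that two complementary binary patterns are displayed per Hadamard function, and that overheads such as DMD switching and data transfer are negligible. The function name and parameters are hypothetical.

```python
def acquisition_time_s(n_functions, integration_time_s, patterns_per_function=2):
    """Lower-bound estimate: every displayed pattern costs one integration time."""
    return n_functions * patterns_per_function * integration_time_s


# Settings quoted in Sect. 4.2: N = 65,536 pixels, M of about 10 % of N, 300 ms.
n_pixels = 256 * 256
m_functions = round(0.10 * n_pixels)
total_s = acquisition_time_s(m_functions, 0.3)
total_min = total_s / 60
```

Under these assumptions the estimate comes to roughly an hour for a single analyzer setting, which illustrates why shorter integration times or fewer measurements matter so much in practice.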

The single-pixel spectral system presented here also provides spatially resolved information about light polarization. To this end, the camera detector includes a polarizing film mounted in a rotating holder. This element limits the total spectral range, since the optical behavior of polarizing films is wavelength dependent. As a consequence, when polarimetric multispectral imaging is carried out, the upper boundary of the spectral range is ~700 nm. The use of high-grade crystalline polarizers can overcome this limitation. For each configuration of the analyzer, a separate series of measurements must be taken. From the polarimetric images recovered for each spectral channel, it is possible to obtain information about the spatial distribution of the Stokes parameters of light, \(S_{i}\) (i = 0, …, 3). If a linear polarizer is used as analyzer, the spatial distribution of \(S_{1}\) and \(S_{2}\) can be derived straightforwardly. A full Stokes polarization analysis would require a rotating circular (or elliptical) polarizer. It is also possible to avoid moving polarization elements by using an analyzer that combines voltage-controlled linear retarders (such as those based on liquid crystal technology) with linear polarizers [8]. In that case, all polarimetric information could be acquired in a single series of measurements, sequentially changing the configuration of the variable retarders for each DMD realization.
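The linear combination that yields the linear Stokes parameters can be sketched as follows. The example assumes an ideal linear analyzer and that the four orientations are 0°, 45°, 90° and 135°; the exact set of angles is an assumption made here for illustration, and the function names and pixel values are hypothetical.

```python
def linear_stokes(i_0, i_45, i_90, i_135):
    """S0, S1, S2 from intensities behind an ideal linear analyzer."""
    s0 = i_0 + i_90       # total intensity
    s1 = i_0 - i_90       # horizontal versus vertical preference
    s2 = i_45 - i_135     # +45 deg versus -45 deg preference
    return s0, s1, s2


def stokes_maps(img_0, img_45, img_90, img_135):
    """Apply linear_stokes pixel by pixel to four reconstructed images."""
    return [[linear_stokes(a, b, c, d) for a, b, c, d in zip(r0, r45, r90, r135)]
            for r0, r45, r90, r135 in zip(img_0, img_45, img_90, img_135)]


# Hypothetical one-row scene: left pixel polarized at 0 deg, right pixel at 90 deg.
maps = stokes_maps([[1.0, 0.0]], [[0.5, 0.5]], [[0.0, 1.0]], [[0.5, 0.5]])
```

The fourth parameter, \(S_{3}\), cannot be obtained this way because a linear analyzer alone is insensitive to circular polarization.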

References

R.G. Sellar, G.D. Boreman, Classification of imaging spectrometers for remote sensing applications. Opt. Eng. 44(1), 013602 (2005)

K.L. Coulson, Effects of reflection properties of natural surfaces in aerial reconnaissance. Appl. Opt. 5, 905–917 (1966)

V.C. Vanderbilt, L. Grant, L.L. Biehl, B.F. Robinson, Specular, diffuse, and polarized light scattered by two wheat canopies. Appl. Opt. 24, 2408–2418 (1985)

S.M. Haugland, E. Bahar, A.H. Carrieri, Identification of contaminant coatings over rough surfaces using polarized infrared scattering. Appl. Opt. 31, 3847–3852 (1992)

A. Pierangelo, A. Benali, M-R. Antonelli, T. Novikova, P. Validire, B. Gayet, A. De Martino, Ex-vivo characterization of human colon cancer by Mueller polarimetric imaging. Opt. Express 19, 1582–1593 (2011)

Y. Zhao, L. Zhang, Q. Pan, Spectropolarimetric imaging for pathological analysis of skin. Appl. Opt. 48, D236–D246 (2009)

W. Groner, J.W. Winkelman, A.G. Harris, C. Ince, G.J. Bouma, K. Messmer, R.G. Nadeau, Orthogonal polarization spectral imaging: a new method for study of the microcirculation. Nat. Med. 5, 1209–1213 (1999)

N. Gupta, D.R. Suhre, Acousto-optic tunable filter imaging spectrometer with full Stokes polarimetric capability. Appl. Opt. 46, 2632–2637 (2007)

E.J. Candès, M.B. Wakin, An introduction to compressive sampling. IEEE Signal Process. Mag. 25, 21–30 (2008)

M.F. Duarte, M.A. Davenport, D. Takhar, J.N. Laska, T. Sun, K.F. Kelly, R.G. Baraniuk, Single-pixel imaging via compressive sampling. IEEE Signal Process. Mag. 25, 83–91 (2008)

F. Magalhaes, F.M. Araújo, M.V. Correia, M. Abolbashari, F. Farahi, Active illumination single-pixel camera based on compressive sensing. Appl. Opt. 50, 405–414 (2011)

W.L. Chan, K. Charan, D. Takhar, K.F. Kelly, R.G. Baraniuk, D.M. Mittleman, A single-pixel terahertz imaging system based on compressed sensing. Appl. Phys. Lett. 93, 121105 (2008)

T. Sun, K. Kelly, Compressive Sensing Hyperspectral Imager, Computational Optical Sensing and Imaging, OSA Technical Digest (CD) (Optical Society of America, 2009)

Y. Wu, I.O. Mirza, G.R. Arce, D.W. Prather, Development of a digital-micromirror-device-based multishot snapshot spectral imaging system. Opt. Lett. 36, 2692–2694 (2011)

V. Durán, P. Clemente, M. Fernández-Alonso, E. Tajahuerce, J. Lancis, Single-pixel polarimetric imaging. Opt. Lett. 37, 824–826 (2012)

V. Studer, J. Bobin, M. Chahid, S.H. Shams Mousavi, E. Candès, M. Dahan, Compressive fluorescence microscopy for biological and hyperspectral imaging. PNAS 109, E1679–E1687 (2012)

J. Sampsell, An overview of the digital micromirror device (DMD) and its application to projection displays, SID Int. Symp. Digest of Technical Papers 24, 1012 (1993)

W.K. Pratt, J. Kane, H.C. Andrews, Hadamard transform image coding. Proc. IEEE 57, 58–67 (1969)

M.A.T. Figueiredo, R.D. Nowak, S.J. Wright, Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1, 586–597 (2007)

W.K. Pratt, Digital Image Processing, 4th edn. (Wiley, 2007)

J. Vila-Francés, J. Calpe-Maravilla, J. Muñoz-Mari, L. Gómez-Chova, J. Amorós-López, E. Ribes-Gómez, V. Durán-Bosch, Configurable-bandwidth imaging spectrometer based on an acousto-optic tunable filter. Rev. Sci. Instr. 77, 073108 (2006)

Z. Xiaobo, S. Jiyong, H. Limin, Z. Jiewen, M. Hanpin, C. Zhenwei, L. Yanxiao, M. Holmes, In vivo non-invasive detection of chlorophyll distribution in cucumber (Cucumis sativus) leaves by indices based on hyperspectral imaging. Anal. Chim. Acta 706, 105–112 (2011)

http://www.mathworks.com/matlabcentral/fileexchange/7021-spectral-and-xyz-color-functions

K.J. Zuzak, M.D. Schaeberle, E.N. Lewis, I.W. Levin, Visible reflectance hyperspectral imaging: characterization of a noninvasive, in vivo system for determining tissue perfusion. Anal. Chem. 74, 2021–2028 (2002)

Acknowledgments

This work has been partly funded by the Spanish Ministry of Education (project FIS2010-15746) and the Excellence Net from the Generalitat Valenciana about Medical Imaging (project ISIC/2012/013). Also funding from Generalitat Valenciana through Prometeo Excellence Programme (project PROMETEO/2012/021) is acknowledged.

Cite this article

Soldevila, F., Irles, E., Durán, V. et al. Single-pixel polarimetric imaging spectrometer by compressive sensing. Appl. Phys. B 113, 551–558 (2013). https://doi.org/10.1007/s00340-013-5506-2

DOI: https://doi.org/10.1007/s00340-013-5506-2