Abstract

Stimuli occurring in multiple sensory modalities that are temporally synchronous or spatially coincident can be integrated together to enhance perception. Additionally, the semantic content or meaning of a stimulus can influence cross-modal interactions, improving task performance when these stimuli convey semantically congruent or matching information, but impairing performance when they contain non-matching or distracting information. Attention is one mechanism that is known to alter processing of sensory stimuli by enhancing perception of task-relevant information and suppressing perception of task-irrelevant stimuli. It is not known, however, to what extent attention to a single sensory modality can minimize the impact of stimuli in the unattended sensory modality and reduce the integration of stimuli across multiple sensory modalities. Our hypothesis was that modality-specific selective attention would limit processing of stimuli in the unattended sensory modality, resulting in a reduction of performance enhancements produced by semantically matching multisensory stimuli, and a reduction in performance decrements produced by semantically non-matching multisensory stimuli. The results from two experiments utilizing a cued discrimination task demonstrate that selective attention to a single sensory modality prevents the integration of matching multisensory stimuli that is normally observed when attention is divided between sensory modalities. Attention did not reliably alter the amount of distraction caused by non-matching multisensory stimuli on this task; however, these findings highlight a critical role for modality-specific selective attention in modulating multisensory integration.

Introduction

Our perception of the environment depends critically on accurately combining information from individual sensory systems. Intuitively we know that pairing matching information from multiple sensory modalities can be helpful; if someone is speaking quietly, it is easier to understand what they are saying if you can see their lips moving in addition to hearing their voice. This is borne out in the laboratory, where numerous studies in animals and humans have demonstrated the utility of temporally and spatially coincident multimodal stimuli in improving subjects’ detection, discrimination, and localization abilities, a phenomenon known as multisensory integration (Hershenson 1962; Morrell 1968; Stein et al. 1989; Jiang et al. 2002; Burnett et al. 2004). Interestingly, even when multisensory stimuli are temporally coincident, but coming from different spatial locations, this information can be integrated, as can be observed in a ventriloquist’s act, or in numerous studies showing multisensory integration of visual and auditory stimuli presented in different locations (e.g., on a monitor and through headphones) (Calvert et al. 2000; Laurienti et al. 2004; Macaluso et al. 2004). Studies in humans have also shown that the semantic content or meaning of a stimulus can impact cross-modal interactions, as multisensory stimuli that represent contextually congruent or matching information have been shown to enhance performance in a feature discrimination task (Laurienti et al. 2004).

Although integrating spatially or temporally synchronous, semantically congruent stimuli from multiple sensory modalities can potentially enhance perception, our environment normally contains many stimuli from different sensory modalities simultaneously conveying unrelated information. The integration of these temporally coincident, but semantically non-matching stimuli from various sensory modalities can potentially impair one’s ability to process relevant stimuli (Laurienti et al. 2004). For example, when you are reading a book, the information that you see typically does not match the auditory stimuli in your environment. If you paired the visual and auditory information together, you would have difficulty understanding the book. Multiple studies have demonstrated that participants experience performance decrements when cross-modal distractors or non-matching multisensory stimuli are presented (Stein et al. 1989; Fan et al. 2003; Laurienti et al. 2004).

However, given that most people are able to read despite the presence of auditory stimuli, it is apparent that our brains do not integrate all temporally coincident information. Rather, there are neural mechanisms for selecting relevant stimuli and suppressing irrelevant or distracting information. Selective attention is a top–down control mechanism that allows us to focus on a particular feature or stimulus while ignoring other possible stimuli (Posner and Driver 1992). Single cell recordings in monkeys have demonstrated that selective attention to a particular location in space can bias processing such that neuronal responses to visual stimuli occurring in the attended location are enhanced compared to responses to the same stimuli in unattended spatial locations (Spitzer et al. 1988; Motter 1993). Attention can also influence the processing of auditory and tactile stimuli, as focusing attention on a location in space can enhance perception of stimuli that occur in that location, regardless of stimulus modality (Spence et al. 1998; Macaluso et al. 2000; Johnen et al. 2001; McDonald et al. 2003). Spatial attention has been shown not only to enhance responses to stimuli presented in attended locations, but also to suppress responses to stimuli in other locations. In the visual cortex, selective attention to a particular location can suppress the influence of adjacent stimuli, thus filtering out information from unattended locations (Kastner et al. 1998; Reynolds et al. 1999).

These enhancing and suppressing mechanisms of selective attention apply not only when attention is directed to particular locations, but also when attention is directed to specific stimulus features (Corbetta et al. 1990; Moore and Egeth 1998) or sensory modalities (Woodruff et al. 1996; Macaluso et al. 2002). The behavioral effects of modality-specific selective attention have been observed when participants are instructed to attend to a single sensory modality (auditory or visual) before performing speeded detection and discrimination tasks (Spence and Driver 1997). Participants generally respond more quickly and more accurately when the target appears in the expected modality than when the target appears in the unexpected modality, but these benefits are typically very small (Spence et al. 2001). In contrast with spatial attention, the more consistent effect produced by selective attention to a particular sensory modality is a reduction in the efficiency of processing stimuli in the unattended modality (Spence et al. 2001). This decrease in processing is referred to as a cost, and is reflected in poorer accuracy and slower response times (RT) for targets presented in an unexpected modality compared to responses made in that same modality when it is the expected target modality (Spence et al. 2001). These benefits and costs are also observed in imaging experiments where selective attention to a particular sensory modality can increase activity in the corresponding primary and secondary sensory cortices, while decreasing activity for stimuli presented in the unattended modality (Kawashima et al. 1995; Macaluso et al. 2000; Laurienti et al. 2002; Loose et al. 2003; Johnson and Zatorre 2006). Additionally, activity corresponding to attended stimuli decreases when participants must divide attention between two sensory modalities (Loose et al. 2003).

Although there is substantial evidence that attention can modulate the processing of information within individual sensory systems, limited data exist on how attention may impact the integration of information across sensory systems (Bertelson and Radeau 1981; Bertelson et al. 2000; Vroomen et al. 2001; McDonald et al. 2003; Alsius et al. 2005; Shore and Simic 2005; Talsma and Woldorff 2005). Under the high attention demands produced by dual-task conditions, Alsius et al. (2005) demonstrated that audiovisual integration often fails. These data suggest that audiovisual integration is not an automatic process, and can be modulated when tasks must be performed in multiple modalities (Alsius et al. 2005). In contrast, evidence from studies on spatial attention and the ventriloquist effect indicated that participants’ ability to localize a sound is an automatic sensory process not influenced by directing visual attention to different visual locations (Bertelson et al. 2000; Vroomen et al. 2001). However, spatial attention presumably exerts its influence on perception via different mechanisms than modality-specific attention, as the behavioral manifestations of attention to a location and attention to a modality have been shown to differ. For example, attention to a particular location in space typically results in perceptual enhancements at that location regardless of the sensory modality, while attention to a particular sensory modality generally leads to perceptual decrements in the unattended sensory modality with only small or no enhancements for the attended modality (Spence et al. 1998, 2001). Indeed, the ventriloquist effect (which is not impacted by spatial attention) can be influenced by modality-specific attention, as participants’ localizations of audiovisual stimuli are different depending on whether they are asked to localize the auditory or the visual component of the stimuli (Bertelson and Radeau 1981; Bertelson et al. 2000).

Thus, the information outlined above suggests that attention to a single sensory modality should reduce multisensory integration due to the suppression of stimuli in the ignored modality. Additionally, experiments utilizing event-related potentials (ERPs) demonstrate that integration effects observed on the P50 components of multisensory ERPs are absent when participants attend to only one sensory modality (Talsma et al. 2007). Observations from these studies indicate an important role for top–down mechanisms in mediating interactions between sensory modalities; however, the behavioral impact that directing attention to a single sensory modality has on multisensory integration has not yet been fully explored. Thus, the purpose of the current study was to utilize behavioral measures to rigorously characterize the effects of attention on multisensory integration. For this study, we were interested in how attention impacts the integration of semantically matching, temporally coincident bimodal stimuli. These matching stimuli consisted of a visual component and an auditory component that conveyed information with the same semantic content; for example, a visually presented blue circle and an auditory verbalization of the word “blue”. We were also interested in how attention influences the integration of semantically non-matching stimuli, which we defined as stimuli where the visual stimulus and the auditory stimulus do not convey the same information; for example, a visually presented blue circle and an auditory verbalization of the word “green”. Our hypotheses were as follows: (1) selective attention to a single sensory modality (auditory or visual) should limit the integration of matching information presented in multiple modalities; (2) the distracting effects of non-matching multisensory information should be reduced during selective attention compared to divided attention conditions. The two experiments that follow were designed to test our hypotheses.

Experiment 1

The objective of this experiment was to compare how selective attention to a single sensory modality (auditory or visual) and divided attention (between auditory and visual modalities) modulate the integration of semantically matching multisensory stimuli. To do this, we either directed participants to selectively attend to a single sensory modality or to divide their attention between sensory modalities and respond to unisensory and multisensory targets. Response accuracy and speed were then assessed for each target type under the different attention conditions to determine the effects of attention on multisensory integration.

Methods

Participants

Fourteen healthy adults participated in this experiment (mean age = 24.0, nine females). Participants were screened for inclusion criteria which included normal or corrected-to-normal vision and hearing, accurate color vision, normal cognitive function, and no history of neurological disorder or brain injury. All participants gave written informed consent to participate in this study, which was approved by the Institutional Review Board at Wake Forest University Baptist Medical Center, and performed in accordance with the standards of the Declaration of Helsinki. All participants were compensated for their time, and were naïve to the experimental tasks.

Paradigm

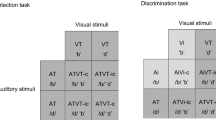

For this experiment, participants completed a cued, multisensory discrimination paradigm that required them to choose between the colors red and blue (Laurienti et al. 2004). During each trial, stimuli were presented in either the auditory modality, the visual modality, or simultaneously in both the auditory and the visual modality for multisensory conditions. Auditory stimuli were verbalizations of the words “red” and “blue”. Visual stimuli were red and blue color-filled circles that subtended 7.7° of visual angle, presented on a black background. Multisensory stimuli were simultaneous presentations of matching auditory and visual stimuli (e.g., the verbalization of the word “red” paired with a red color-filled circle). Eighty-four unisensory auditory trials, 84 unisensory visual trials, and 126 multisensory trials were presented pseudo-randomly.

Each trial began with participants fixating on a white cross in the middle of a black screen for an interval that varied randomly between 1,000 and 1,500 ms. Following fixation, a 1,000-ms visual cue was presented on the screen that alerted participants to direct their attention to the modality in which the target would appear. These cues consisted of white line drawings of an ear and an eye that each subtended 5.4° of visual angle presented on a black background. Cues were presented 3.8° to the left and right of a central fixation cross. Participants were instructed that the presentation of two ears indicated that an auditory stimulus would appear during the trial. Participants were made aware that the presentation of this cue to pay attention to the auditory modality did not preclude the appearance of an additional visual stimulus during the trial. However, there were no trials in which the cue was invalid (i.e., the target following the cue to attend to audition always contained an auditory component). The presentation of two eyes cued participants to attend to the visual modality. Again, this cue indicated that a visual stimulus would appear during the trial; although the trial could also contain an auditory stimulus, participants were to pay attention to the visual modality. The presentation of one eye and one ear indicated that the trial could include an auditory stimulus, a visual stimulus, or both. This cue alerted participants to divide their attention between the auditory and visual modalities.

Thus, the cues did not indicate which type of trial would follow (e.g., the presentation of two eyes could be followed by a unisensory visual trial or a multisensory trial and the eye and ear cue could likewise be followed by a unisensory visual trial, a multisensory trial, or an auditory trial). However, the cues did direct participants to selectively attend to either the auditory or visual modalities or to divide their attention between those modalities. The combination of three cue conditions and three potential targets produced seven possible trial types (see Table 1 for a comprehensive list). As all attention cues were presented in the visual modality, we cannot exclude the possibility that these external events could bias attention toward the visual modality. However, unpublished data from our laboratory suggest that this bias is quite small for this type of task. Additionally, experiments on attentional shifts between modalities demonstrate that the modality of one stimulus has little effect on responses to a second stimulus when the two stimuli are separated by intervals greater than 1,000 ms (Turatto et al. 2002, 2004). The latency between the cue and target in the present experiment varied between 1,000 and 1,500 ms; therefore, we do not expect that the exogenous effects of the cue will impact participants’ ability to direct their attention to the auditory or visual modality. Additionally, if the results of these experiments show that cueing attention to audition or to vision impacts multisensory integration in the same way, this would suggest that any exogenous effects of cue modality do not preclude the observation of the role played by endogenous or voluntary control of attention in this task.
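The count of seven trial types follows from excluding invalid cue and target pairings. As a minimal sketch (the condition labels below are hypothetical names chosen for illustration, not terms from the experiment software), the valid combinations can be enumerated as follows:

```python
from itertools import product

# Hypothetical labels for the three cue conditions and three target types.
CUES = ("attend_auditory", "attend_visual", "divided")
TARGETS = ("auditory", "visual", "multisensory")

# Sensory components contained in each target type.
TARGET_MODALITIES = {
    "auditory": {"auditory"},
    "visual": {"visual"},
    "multisensory": {"auditory", "visual"},
}

# Modality that each selective cue guarantees will be present.
# The divided-attention cue guarantees no particular modality.
CUED_MODALITY = {
    "attend_auditory": "auditory",
    "attend_visual": "visual",
    "divided": None,
}


def is_valid(cue, target):
    """A pairing is valid if the target contains the cued modality."""
    cued = CUED_MODALITY[cue]
    return cued is None or cued in TARGET_MODALITIES[target]


trial_types = [(c, t) for c, t in product(CUES, TARGETS) if is_valid(c, t)]
for cue, target in trial_types:
    print(f"{cue:16s} -> {target}")
print(len(trial_types))  # 7
```

Of the nine possible pairings, the two excluded ones are the invalidly cued unisensory trials (auditory cue with visual target, and visual cue with auditory target).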

Following each cue, there was a delay that varied randomly between 1,000 and 1,500 ms. After the delay, the target was presented. Visual targets were 150 ms in duration and auditory targets were presented for 300 ms. For multisensory targets, the onsets of the visual and auditory stimuli were simultaneous and stimulus timing remained the same. As soon as the participant responded, the next trial began; however, if the participant did not respond within 8 s, the experiment continued with the next trial. The sequence of events for experimental trials is depicted in Fig. 1.

Fig. 1 The sequence of trial events. Each trial began with participants fixating on a cross in the middle of the screen. After fixation, the attention cue was presented to alert participants to selectively attend to either the auditory modality, the visual modality, or to divide their attention between the auditory and visual modalities (shown). Targets followed the cue by a variable delay of 1,000–1,500 ms and could be visual (red or blue color-filled circles), auditory (verbalizations of the words red or blue), or multisensory (shown: simultaneous presentation of both the auditory and visual stimuli). Each trial ended upon participant response; if no response was made, the next trial began after 8 s.

Experimental setup

Participants were seated in a sound and light-attenuated testing booth (Whisper Room, Morristown, TN, USA) with their chins in a chin-rest to ensure that they were comfortably positioned 24 in from the computer monitor with the center of the screen at eye-level. All visual stimuli were presented on the computer screen. Auditory stimuli were presented through speakers located on the left and right sides of the monitor. The volume of the stimuli was adjusted to an easily discriminable level for each participant (typically ∼75 dB). E-prime software and a Serial Response box (Psychology Software Tools, Pittsburgh, PA, USA) were used to control stimulus presentation and to record participants’ RT and accuracy. Participants responded by pressing buttons with the index finger of each hand. A corresponding red or blue light was presented above the response buttons throughout the experiment to ensure that participants did not forget button assignments. The mapping of red and blue responses to the left and right hand was counterbalanced across participants.
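The visual angles reported for the stimuli can be related to physical size on the screen through the viewing distance. The sketch below uses the standard relation size = 2 × distance × tan(angle / 2); the resulting on-screen sizes are derived here for illustration and are not values reported in the text.

```python
import math


def stimulus_size(angle_deg, distance):
    """Physical extent subtending `angle_deg` at viewing distance `distance`.

    The result is in the same unit as `distance`.
    """
    return 2 * distance * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_IN = 24  # viewing distance stated in the setup, in inches

# 7.7 degrees: color-filled target circles; 5.4 degrees: ear and eye cues.
for label, angle in (("target circle", 7.7), ("attention cue", 5.4)):
    size_in = stimulus_size(angle, VIEWING_DISTANCE_IN)
    print(f"{label}: {size_in:.2f} in ({size_in * 2.54:.1f} cm)")
```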

Data analysis

Each participant’s RT were analyzed to remove outliers (±3 standard deviations) and separate incorrect responses. Average accuracy, RT, and response distributions for each of the seven trial types were calculated for each subject. Data were then collapsed across participants and compared using 2(cue) × 2(target) repeated measures analyses of variance (ANOVAs) to determine if mean accuracy or RT differed by cue (selective attention versus divided attention) or by target (unisensory or multisensory). Separate ANOVAs were used to compare responses to auditory and visual trial types because we were not interested in directly comparing responses in these two modalities, as previous studies have demonstrated differences between the neural mechanisms and behavioral outcomes of attention to visual versus auditory modalities (O’Leary et al. 1997; Talsma and Kok 2001).
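A minimal sketch of the RT cleaning step is given below. It assumes that the ±3 standard deviation criterion is applied around the mean of the RTs passed in (for example, one participant's trials), and that outliers are removed before incorrect responses are set aside; the text does not specify either detail, and the function name and data are hypothetical.

```python
from statistics import mean, stdev


def clean_rts(trials, n_sd=3.0):
    """Split (rt_ms, correct) pairs into kept, outlier, and incorrect RTs.

    Assumption: the cutoff is mean +/- n_sd sample standard deviations,
    computed over all RTs supplied in `trials`.
    """
    rts = [rt for rt, _ in trials]
    centre, spread = mean(rts), stdev(rts)
    low, high = centre - n_sd * spread, centre + n_sd * spread

    kept, outliers, incorrect = [], [], []
    for rt, correct in trials:
        if rt < low or rt > high:
            outliers.append(rt)      # beyond the cutoff, dropped
        elif not correct:
            incorrect.append(rt)     # within range but wrong response
        else:
            kept.append(rt)          # correct, in-range response
    return kept, outliers, incorrect


# Hypothetical data: 19 typical RTs (one incorrect) plus one very slow response.
trials = [(400 + 5 * i, i != 3) for i in range(19)] + [(3000, True)]
kept, outliers, incorrect = clean_rts(trials)
print(len(kept), outliers, incorrect)
```

Note that with very few trials no single value can lie 3 standard deviations from the mean, so a criterion of this kind only has an effect when enough trials per participant are available.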

Although the ANOVA comparison of RT can identify responses for multisensory stimuli that are faster than responses to either unisensory stimuli, this analysis does not take into account the fact that faster responses on multisensory trials could possibly be due simply to the presence of two stimuli in the multisensory trials and only one stimulus in a unisensory trial. To control for the redundant nature of multisensory trials, it must be determined if responses to multisensory stimuli are faster than what would be predicted based on responses to both auditory and visual stimuli. Thus, the preferred method for identifying multisensory integration is to compare the distribution of multisensory responses to the joint probability of visual and auditory responses. This model of statistical facilitation is known as the independent race model (Miller 1982, 1986). If the probability of responding to the multisensory stimulus is significantly greater at a given time point than the response predicted by the summed probability of responding to each of the unisensory stimuli, then the multisensory response is said to violate the race model. This outcome suggests that there has been neural integration of the two unisensory inputs comprising the multisensory stimulus, resulting in an interaction that speeds responses to multisensory stimuli. To perform this analysis, cumulative distribution functions (CDFs) for each trial type were generated for each subject using 4 ms time bins. Each participant’s unisensory CDFs were then used to calculate the race distribution, using the following formula at each time bin:

$$P(A) + P(V) - \left[P(A) \times P(V)\right]$$

In this formula, P(A) is the probability of responding by a given time on a unisensory auditory trial, and P(V) is the probability of responding by a given time on a unisensory visual trial. Two different race model predictions were generated for each participant: (1) the selective attention race model, which was based on responses made to unisensory targets when participants had been cued to selectively attend either to vision or audition; and (2) the divided attention race model, based on responses made to unisensory targets when participants had been cued to divide their attention between vision and audition.
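The race model computation can be sketched as follows, assuming the independent race prediction takes the standard form P(A) + P(V) − P(A) × P(V), that is, the probability that at least one of two independent unisensory processes has finished by a given time. The function names, the 1,000 ms upper limit, and the RT values are hypothetical and serve only to illustrate the procedure.

```python
def empirical_cdf(rts, bin_ms=4, t_max=1000):
    """Proportion of responses made by the end of each time bin."""
    edges = range(bin_ms, t_max + bin_ms, bin_ms)
    n = len(rts)
    return [sum(rt <= t for rt in rts) / n for t in edges]


def race_prediction(cdf_a, cdf_v):
    """Assumed independent race model: P(A) + P(V) - P(A) * P(V) per bin."""
    return [pa + pv - pa * pv for pa, pv in zip(cdf_a, cdf_v)]


def race_violation(cdf_av, cdf_race):
    """Multisensory CDF minus race prediction; positive values exceed the model."""
    return [pav - pr for pav, pr in zip(cdf_av, cdf_race)]


# Hypothetical RTs (ms) for one participant under one attention condition.
auditory = [420, 450, 480, 510, 560]
visual = [400, 430, 470, 500, 540]
multisensory = [360, 390, 410, 440, 470]

cdf_a = empirical_cdf(auditory)
cdf_v = empirical_cdf(visual)
cdf_av = empirical_cdf(multisensory)

race = race_prediction(cdf_a, cdf_v)
diff = race_violation(cdf_av, race)
print(max(diff))
```

Computing one race prediction from the selective attention unisensory CDFs and another from the divided attention unisensory CDFs yields the two model distributions described above.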

After these individual CDFs were completed, group mean CDFs were generated for each trial type and individual race models were averaged to obtain group predictions for responses made under selective attention and divided attention. To determine if significant differences existed between the probability of responding to the multisensory targets and the predicted probability of responding to unisensory targets based on the race model, paired t-tests were performed between the multisensory distribution for each attention condition (selective auditory, selective visual, and divided attention) and the corresponding race model distributions (selective attention race model or divided attention race model) at each time bin. To correct for the multiple comparisons made between multisensory and race distributions at each time bin, we considered the two distributions to differ significantly only if comparisons of at least ten contiguous bins each yielded t-scores with P < 0.05. The chance of ten consecutive bins all being false positives is approximately P < 0.001.
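The contiguity criterion can be sketched as a search for runs of consecutive significant bins. The example below takes a list of per-bin P values as given (the paired t-tests themselves, and the check that the multisensory probability is the larger of the two, are assumed to have been done beforehand); the function name, the zero-based bin indexing, and the example values are hypothetical.

```python
def significant_runs(p_values, alpha=0.05, min_run=10, bin_ms=4):
    """Return (start_ms, end_ms) windows of at least `min_run` contiguous
    bins with p < alpha. Bin i is assumed to span i*bin_ms to (i+1)*bin_ms.
    """
    runs = []
    start = None
    # A trailing sentinel of 1.0 closes any run that reaches the last bin.
    for i, p in enumerate(list(p_values) + [1.0]):
        if p < alpha:
            if start is None:
                start = i            # a new run of significant bins begins
        elif start is not None:
            if i - start >= min_run:
                runs.append((start * bin_ms, i * bin_ms))
            start = None             # run ended; reset
    return runs


# Hypothetical P values: 13 contiguous significant bins, then 9 further on.
p_values = [0.5] * 89 + [0.01] * 13 + [0.5] * 20 + [0.01] * 9 + [0.5] * 10
print(significant_runs(p_values))
```

In this illustration only the 13-bin run qualifies; the later run of nine significant bins falls short of the ten-bin threshold and is ignored.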

Results

Accuracy

Response accuracy was very high, with participants averaging 98.07% correct over all trial types. Mean accuracy and standard deviations can be found in Table 1. The 2 × 2 repeated measures ANOVA comparing accuracy on unisensory and multisensory targets during selective and divided attention indicated that there were no differences in accuracy by cue or target for the auditory/multisensory comparison or the visual/multisensory comparison.

Response time

Mean RT and standard deviations can be found in Table 1. In the 2 × 2 ANOVA comparing RT for auditory and multisensory trials under divided and selective attention, there was a main effect of target (F(1,13) = 116.89, P < 0.001), no significant effect of cue (F(1,13) = 0.26, P = ns), and a marginally significant interaction (F(1,13) = 3.60, P = 0.08). These results indicated that under both divided and selective auditory attention conditions, RTs for multisensory targets were significantly faster than RTs for unisensory auditory targets. The marginally significant interaction suggests that under divided attention conditions, there was a trend toward a greater speeding of RTs for multisensory targets than under selective attention.

The 2 × 2 ANOVA comparing RT for visual and multisensory targets during divided and selective attention also yielded a significant main effect of target (F(1,13) = 24.67, P < 0.001), but no effect of cue (F(1,13) = 0.20, P = ns), and no significant interaction (F(1,13) = 0.12, P = ns).

The fact that there was no effect of attentional cue is not surprising given that selective attention to a single sensory modality generally does not result in large performance enhancements in the attended modality (Spence and Driver 1997; Spence et al. 2001). For these experiments, we were primarily interested in the effects of modality-specific attention on multisensory integration and thus we did not present any invalidly cued trials (i.e., where a participant is cued to one modality and receives a unisensory target in the unattended modality) that would have enabled us to assess the performance decrements that are associated with unisensory stimuli presented in the unattended sensory modality.

Cumulative distribution function

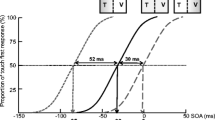

Due to the redundant nature of multisensory targets, the significant effects of target in the RT comparisons are a liberal estimate of multisensory performance enhancements. That is, it is possible that the speeding of RT to multisensory targets noted in this analysis was due to the availability of multiple pieces of information in these conditions and not to integration of the stimuli. To account for the increased probability of speeded responses to multisensory targets, the distributions for multisensory responses were compared to the race model, a summed probability of unisensory responses. Responses to multisensory targets under divided attention were compared to the summed probability of response to auditory and visual targets (race model distribution) in the divided attention condition (Fig. 2a). Two similar comparisons evaluated responses to multisensory targets under selective auditory attention and selective visual attention versus a selective attention race model distribution (Fig. 2b).

Fig. 2 Cumulative distribution functions (CDFs) for responses on auditory, visual, and multisensory trials during divided and selective attention in Experiment 1. a For trials with divided attention cues, CDFs for response times are depicted for unisensory auditory targets (blue dotted curve), unisensory visual targets (red dashed curve), and multisensory targets (green solid curve). Response probabilities predicted by summing the unisensory response probabilities (race model) are depicted with the gray solid curve. Note that fast response times are more likely for multisensory targets than predicted by the race model in several time bins. b CDFs are depicted for response times to auditory targets (blue dotted curve) and multisensory targets (yellow solid curve) during selective auditory attention and for visual targets (red dashed curve) and multisensory targets (green solid curve) during selective visual attention. The gray solid curve depicts the race model predictions based on the summed probability of unisensory responses during selective attention. Fast responses to multisensory targets during either selective attention condition are typically less likely than predicted by the race model. c The difference in response probability between multisensory trials and race model predictions during divided attention (yellow solid curve), selective auditory attention (blue dotted curve), and selective visual attention (red dashed curve) illustrates that significant multisensory enhancements are only present under divided attention conditions (shaded bar, 356–408 ms).

In contrast to the mean RT comparisons that found similar significant multisensory gains under all cue conditions, these comparisons indicated that significant multisensory enhancements were present only under divided attention conditions (Fig. 2c). Paired t-tests were performed at 4 ms time bins across the entire response distribution in order to compare response probabilities for multisensory trials to the predicted response probabilities of the race model. Under divided attention, at times between 356 and 408 ms, response probabilities were significantly higher (P < 0.05) for multisensory targets than race model probabilities. During selective attention conditions, similar paired t-test comparisons indicated that the probability of responding to a multisensory stimulus under selective auditory or visual attention was not significantly greater than predicted by the race model at any time bin.

Discussion

When attention is focused on a single sensory modality, activity is suppressed in the ignored cortex, presumably resulting in less sensory information being available for integration (Haxby et al. 1994; Kawashima et al. 1995; Laurienti et al. 2002; Johnson and Zatorre 2005, 2006). This led us to hypothesize that multisensory integration would be attenuated during modality-specific selective attention. To test this hypothesis we used a multisensory discrimination task that included modality-specific and divided attention cues to direct participants’ attentional resources. Comparisons between multisensory response distributions and the race model were used to identify multisensory gains that surpass statistical facilitation. The data demonstrated that significant multisensory integration only occurred when participants divided their attention between modalities. When selectively attending to either the auditory or the visual modality, participants obtained no significant performance enhancements from matching multisensory stimuli compared to the race model. Given that attention to either sensory modality abolished multisensory enhancements, it also appears that if any exogenous bias was produced by the visual cues it was not strong enough to counteract volitional control of attention. Because both the auditory stimulus and the visual stimulus were associated with the same response choice during matching multisensory trials (e.g., left button for blue and right button for red), it is possible that the speeding of responses observed on the multisensory trials was due to response priming rather than multisensory integration. However, a previous study conducted in our laboratory demonstrated that responses to targets with two visual components did not result in performance enhancements that surpassed those predicted by the race model, as was seen in the case of multisensory stimuli with both a visual and an auditory component (Laurienti et al. 2004). These data suggest that the multisensory enhancements observed on the very similar paradigm used in this study are the result of integration of the two sensory inputs, and are not simply due to priming interactions at the level of the response.

The behavioral findings from this experiment are in accord with results from an ERP study suggesting that the multisensory integration effects that can be detected when participants attend to both the visual and auditory modality are absent when participants attend to only one sensory modality (Talsma et al. 2007). Mean RT were faster to matching multisensory targets than to unisensory targets during all attention conditions. While this result may seem counterintuitive, it simply demonstrates that modality-specific selective attention did not prevent the statistical facilitation that occurs with the presentation of multiple pieces of information. Because mean multisensory RT were compared to individual unisensory conditions, this comparison cannot be used to assess whether multisensory responses are indicative of integration.

The results from this experiment highlight an important point concerning the role of attention in modulating the integration of stimuli from various sensory modalities. It would be counterproductive for modality-specific selective attention to function only as a method for reducing the apparently helpful integration of multisensory stimuli conveying semantically congruent information. It is beneficial to be able to integrate and respond quickly and accurately to complementary stimuli such as the sight and sound of an oncoming car. In these situations, selective attention to a single sensory modality may not be advantageous for reacting to the environment. However, our environment usually consists of many unrelated sounds and sights that, if constantly combined together, would make our sensory perceptions incomprehensible. Imagine if you could not read this paper without merging the information that you are reading with the sounds that you are currently hearing. Indeed, the presence of non-matching stimuli in two modalities can cause distraction and impair task performance (Rees et al. 2001; Laurienti et al. 2004). Thus, rather than solely reducing the performance enhancements gained from the integration of matching multisensory information, a more critical function of modality-specific selective attention may be to filter non-matching or distracting stimuli that occur in unattended modalities.

To determine whether modality-specific selective attention limits the distracting effects of non-matching multisensory stimuli, a second experiment was designed using a paradigm similar to that of the first experiment, but with the addition of non-matching auditory/visual target pairings.

Experiment 2

In Experiment 1, multisensory targets always consisted of a matched audiovisual pairing (e.g., a red circle and a verbalization of the word “red”). In Experiment 2, we wanted to assess the impact of attention on the processing of multisensory stimuli that are temporally and spatially coincident, but semantically non-matching. As described earlier, semantically non-matching stimuli were defined in this study as audiovisual stimuli where the visual component of the stimulus did not convey the same information as the auditory component (e.g., a red circle and a verbalization of the word “green”). For this experiment, the non-matching multisensory stimuli always consisted of an answer choice in one modality (i.e., red or blue) and a stimulus in the other modality that was not a possible answer choice (i.e., green). This non-matching stimulus was used to present distracting information that was semantically relevant to the task and would increase the task difficulty without leading to direct conflict between answer choices. Our expectation was that selective attention to a single sensory modality would reduce the distracting effects of non-matching stimuli presented in another sensory modality, and as a consequence, participants would not be slowed as much by non-matching stimuli during selective attention trials as during divided attention trials.

Methods

Participants

Fourteen healthy adults participated in this experiment (mean age = 25.6, eight females). Participants were screened according to the same criteria as in Experiment 1, and no subject who enrolled in Experiment 1 participated in Experiment 2. All participants gave written informed consent to participate in this study, which was approved by the Institutional Review Board at Wake Forest University Baptist Medical Center and conducted in accordance with the Declaration of Helsinki. Participants were compensated for their time, and were naïve to all experimental tasks.

Paradigm

For this experiment, participants performed a cued, multisensory discrimination paradigm very similar to the task completed in Experiment 1. This paradigm included all of the same conditions used in Experiment 1, plus 168 additional trials with non-matching multisensory pairings. Eighty-four non-matching multisensory trials were presented during divided attention and 84 were presented during selective attention. As in Experiment 1, participants were instructed to discriminate between the colors red and blue presented as auditory, visual, and multisensory targets. Green was added as a non-matching color and could be paired with either a red or blue target in any attention condition to create a non-matched multisensory pairing (e.g., participant is cued to selectively attend to vision, and then sees a red color-filled circle and hears the word “green”). Green color-filled circles and verbalizations of the word “green” were always non-matching stimuli, as a green stimulus was always paired with a red or blue target. The attentional cue that preceded the target always correctly identified the sensory modality in which the red or blue target would appear. Thus, a cue to selectively attend to the auditory modality was always followed by either a unisensory auditory target, a matching multisensory target, or a non-matching multisensory stimulus with the task-irrelevant green stimulus presented in the visual modality. The timing of stimulus delivery was also modified from the paradigm used in Experiment 1 in order to offset the increase in experimental time caused by including additional non-matching multisensory trials. Cues were presented for 750 ms rather than 1,000 ms and the interval between trials varied randomly between 750 and 1,250 ms rather than 1,000 and 1,500 ms, reducing the duration of each trial used in this paradigm compared to the trials in Experiment 1.

Experimental setup

Testing for Experiment 2 was conducted in the same manner as in Experiment 1. All testing materials, stimulus presentation, and response collection remained the same.

Data analysis

The procedures used to analyze the data in Experiment 2 were very similar to those detailed above. The combination of the three possible attention cues and four possible target types yields 11 different trial types, listed in Table 2. Outliers and incorrect responses were separated from the data set and mean accuracy, RT, and response distributions for each of the 11 trial types were compiled for each subject before averaging across participants. The main effects and interactions of attention condition and target type were analyzed using 2(cues) × 3(targets) repeated measures ANOVA. As in the first experiment, auditory and visual trials were analyzed in separate ANOVAs. Post-hoc tests utilizing a Bonferroni correction for multiple comparisons were used to determine accuracy and RT differences between specific target types.

As in Experiment 1, the race model and matching multisensory target response distributions were compared to identify performance gains resulting from the integration of matched multisensory pairings. In addition, the CDFs for responses to unisensory targets were subtracted from the CDFs for responses to non-matching multisensory targets to obtain a measure of the distracting effects of non-matching stimuli. At each time bin, paired t-tests between the distractor effects in divided and selective attention conditions were then performed to determine if cross-modal distraction was significantly reduced during selective attention compared to divided attention conditions.
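The comparison described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the analysis code used in the study: the function names, the example response times, and the cap of the summed probability at 1 are assumptions made here. It builds cumulative distributions on 4 ms bins, forms the race model prediction by summing the two unisensory probabilities, and subtracts that prediction from the multisensory distribution.

```python
from bisect import bisect_right


def empirical_cdf(rts, bin_edges):
    """Fraction of response times at or below each bin edge."""
    ordered = sorted(rts)
    n = len(ordered)
    return [bisect_right(ordered, edge) / n for edge in bin_edges]


def race_model(cdf_aud, cdf_vis):
    """Summed unisensory probabilities (cap at 1 is an assumption here)."""
    return [min(1.0, a + v) for a, v in zip(cdf_aud, cdf_vis)]


def difference(cdf_x, cdf_y):
    """Bin-by-bin difference between two distributions."""
    return [x - y for x, y in zip(cdf_x, cdf_y)]


# Hypothetical response times (ms) for a single participant.
aud = [420, 450, 480, 510]
vis = [400, 440, 470, 500]
multi = [350, 380, 410, 440]
bins = list(range(340, 521, 4))  # 4 ms time bins

cdf_a = empirical_cdf(aud, bins)
cdf_v = empirical_cdf(vis, bins)
cdf_m = empirical_cdf(multi, bins)

race = race_model(cdf_a, cdf_v)
gain = difference(cdf_m, race)  # positive values exceed the race model
```

The same `difference` helper could be applied to a non-matching multisensory distribution and a unisensory distribution to obtain a distractor effect for each bin.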

Results

Accuracy

Accuracy remained high on this task with participants averaging 97.3% correct on all trial types. Mean accuracy and standard deviations for each trial type can be found in Table 2. A 2(cues) × 3(targets) repeated measures ANOVA comparing accuracy for auditory, multisensory, and non-matching trials (auditory target, visual distractor) indicated that there was a significant effect of target (F 2,26 = 17.90, P < 0.001), no effect of cue (F 1,13 = 0.31, P = ns), and a significant interaction (F 2,26 = 3.98, P = 0.03). Post-hoc comparisons with a Bonferroni correction demonstrated that accuracy was similar for unisensory and matched multisensory targets, but significantly lower than both of these for the non-matching multisensory targets that consisted of an auditory target with a visual distractor. A similar ANOVA comparing accuracy for visual, multisensory, and non-matching trials (visual target, auditory distractor) also demonstrated a significant effect of target (F 2,26 = 4.41, P = 0.02), but no effect of cue (F 1,13 = 1.95, P = ns), and no significant interaction (F 2,26 = 2.49, P = ns). There was a trend for lower accuracy on unisensory trials; however, after implementing the Bonferroni correction, post-hoc comparisons demonstrated that accuracy was not significantly different for any target type.

Response time

Mean RT and standard deviation for all trial types can be found in Table 2. The ANOVA comparing RT for auditory, multisensory, and non-matching targets under selective and divided attention indicated that there was a significant effect of target (F 2,26 = 53.82, P < 0.001), no effect of cue (F 1,13 = 1.50, P = ns), and no interaction (F 2,26 = 1.67, P = ns). The analogous ANOVA for comparison of visual targets also revealed a significant effect of target (F 2,26 = 21.98, P < 0.001), no effect of cue (F 1,13 = 2.85, P = ns), and no interaction (F 2,26 = 0.25, P = ns). Post-hoc tests for both the auditory and visual ANOVAs showed the same pattern: responses to unisensory targets were significantly faster than responses to non-matching multisensory targets, and responses to matching multisensory targets were significantly faster than responses to both unisensory and non-matching multisensory targets.

Cumulative distribution functions

Although significant differences in mean RT were found between matching multisensory trials and unisensory trials under all attention conditions, as stated previously, these comparisons do not account for the presence of multiple stimuli in multisensory trials. In comparisons made between the response distributions for matched multisensory trials and the summed probability of unisensory response (race model) under each attention condition, significant multisensory enhancements were noted only on trials where participants were cued to divide attention between sensory modalities (Fig. 3). As in Experiment 1, paired t-tests were performed at each 4 ms time bin across the distribution to compare response probabilities for the matching multisensory targets to the race model predictions. Under divided attention conditions, response probabilities were significantly higher on matched multisensory trials than predicted by the race model, P < 0.05 at each time bin between 342 and 426 ms. Similar paired t-tests between the response distributions for matching multisensory trials and the race model predictions for selective attention conditions indicated that, during selective auditory or visual attention, the probability of responding to a matching multisensory target was not significantly greater than predicted by the race model at any time bin (Fig. 3).

Cumulative distribution functions (CDFs) for responses on auditory, visual, and matching multisensory trials during divided attention and selective attention in Experiment 2. a For trials with divided attention cues, CDFs for response times are depicted for unisensory auditory targets (blue dotted curve), unisensory visual targets (red dashed curve), and matching multisensory targets (green solid curve). Response probabilities predicted by summing the unisensory response probabilities (race model) are depicted with the gray solid curve. As in Experiment 1, fast response times are more likely for multisensory targets than predicted by the race model across several time bins. b CDFs are depicted for response times to auditory targets (blue dotted curve) and multisensory targets (yellow solid curve) during selective auditory attention and to visual targets (red dashed curve) and multisensory targets (green solid curve) during selective visual attention. The gray solid curve depicts the race model predictions based on the summed probability of unisensory responses under selective attention. As in Experiment 1, fast responses to multisensory targets under both selective attention conditions are less likely than predicted by the race model. c The difference in response probability between matching multisensory trials and race model predictions during divided attention (yellow solid curve), selective auditory attention (blue dotted curve), and selective visual attention (red dashed curve) illustrates that significant multisensory enhancements are only present under divided attention conditions (shaded bar, 342–426 ms)

To assess the effects of non-matching cross-modal distractors, the response distributions for unisensory trials were compared to the response distributions for non-matching multisensory trials, yielding a relative distractor effect (Fig. 4). A comparison between response distributions for unisensory auditory targets and non-matching multisensory targets (auditory targets with visual distractors) yielded the visual distractor effect (Fig. 4a). This distractor effect was found for both divided and selective auditory attention conditions. The comparison between unisensory visual targets and non-matching multisensory targets (visual targets with auditory distractors) produced the auditory distractor effect (Fig. 4b). This distractor effect was also calculated for both divided and selective visual attention conditions. Paired t-tests at each time bin compared distractor effects during divided and selective attention. Results indicated that selective attention to the auditory modality did not reduce the visual distractor effect at any time bin (Fig. 4c). Similarly, selective attention to the visual modality did not reduce the effect of auditory distractors at any time bin (Fig. 4d). In other words, there was no significant reduction in the degree of distraction produced by non-matching cross-modal stimuli during selective attention.
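As a rough illustration of the bin-wise comparison described above, the following Python sketch computes a paired t statistic at each time bin from per-participant distractor effects. The data values and function names are hypothetical and chosen only for this example. Converting each statistic to a P value would additionally require the t distribution with n − 1 degrees of freedom, which is not shown here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t statistic for two equal-length samples.

    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


def binwise_paired_t(effect_divided, effect_selective):
    """One t statistic per time bin; inputs are indexed [participant][bin]."""
    n_bins = len(effect_divided[0])
    return [
        paired_t(
            [participant[b] for participant in effect_divided],
            [participant[b] for participant in effect_selective],
        )
        for b in range(n_bins)
    ]


# Hypothetical distractor effects (non-matching CDF minus unisensory CDF)
# for four participants at two time bins.
effect_divided = [[-0.10, -0.20], [-0.12, -0.18], [-0.08, -0.22], [-0.11, -0.19]]
effect_selective = [[-0.09, -0.10], [-0.13, -0.08], [-0.07, -0.13], [-0.12, -0.08]]

t_values = binwise_paired_t(effect_divided, effect_selective)
```

In this made-up data set the first bin shows essentially no difference between attention conditions, while the second bin shows a consistently larger distractor effect under divided attention.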

Visual and auditory distractor effects under selective and divided attention. In the top panels, the response probabilities for unisensory trials (auditory in a and visual in b) and non-matching multisensory trials under selective and divided attention are plotted as a function of response time. a Response distributions for unisensory auditory trials are plotted as solid curves (red for selective auditory attention, yellow for divided attention). Distributions for non-matching multisensory trials, Aud + Non-Match Vis (A + NMV) are plotted as dotted curves. b Response distributions for unisensory visual trials are plotted as solid curves (blue for selective visual attention, green for divided attention). Distributions for non-matching multisensory trials, Vis + Non-Match Aud (V + NMA) are plotted as dotted curves. c, d The subtraction of the unisensory distribution from the non-matching distribution yields the distractor effect plotted in the bottom panels. Selective auditory attention did not reduce the effects of visual distractors (c), and selective attention to the visual modality did not significantly reduce the effects of non-matching auditory distractors (d)

Discussion

In a replication of the results from Experiment 1, our second experiment demonstrated that selective attention to either the visual or the auditory modality attenuates multisensory performance gains normally observed under divided attention conditions. The inclusion of non-matching multisensory targets in this paradigm also allowed us to characterize the effects of modality-specific selective attention on cross-modal distraction. The results of this experiment indicated that selective attention did not provide any benefit over divided attention in reducing the detrimental effects of cross-modal distractors. This result was unexpected given that modality-specific selective attention is known to result in reduced processing of stimuli in the unattended sensory modality (Spence et al. 2001).

Because selective attention prevents the integration of matching multisensory stimuli, but not non-matching multisensory stimuli, it seems likely that modality-independent factors are impacting performance on non-matching trials. One such factor may be the conflict effects produced by semantically non-matching stimuli. This concept is illustrated by previous data from our laboratory showing that the behavioral deficits associated with non-matching stimulation were present both in dual visual and multisensory paradigms, whereas significant enhancements due to semantic congruence were found only during multisensory paradigms (Laurienti et al. 2004). Thus, unlike performance enhancements that are dependent on the integration of stimuli in different sensory modalities, performance decrements occur regardless of whether two conflicting stimuli occur in the same modality or in different modalities. While selective attention is known to modulate the behavioral consequences of conflict, it does not eliminate them (Weissman et al. 2003; Egner and Hirsch 2005). Consequently, the behavioral decrements observed in this task that are due to modality-independent conflict effects would not be prevented by focusing attention on a single sensory modality.

The results of this study indicate that it is possible that different neural substrates underlie how modality-specific attention modulates the behavioral enhancements observed during matching multisensory stimulation and the decrements observed during non-matched pairings. For example, facilitation mechanisms that occur early in perceptual processing may be susceptible to the effects of modality-specific attention, while higher level processes that mediate distraction and conflict may be insensitive to these effects. We do not currently have brain imaging data on this task; however, Talsma and Woldorff (2005) have demonstrated in ERP experiments that spatial attention can impact the integration of temporally coincident audiovisual stimuli very early in perceptual processing.

While it is likely that selective attention plays a role in minimizing cross-modal distraction, the semantically conflicting stimuli utilized in the current study task were not susceptible to modulation by modality-specific attention. The non-matching multisensory stimuli presented in this task were very salient, task-relevant distractors that conflicted with target stimuli (e.g., green distractors and blue targets). Outside of the laboratory environment, most stimuli that are emitted from independent but simultaneous events in different sensory modalities simply convey irrelevant information that does not directly conflict with the task at hand. It remains possible that selective attention suppresses the integration of task-irrelevant stimuli but does not suppress performance decrements associated with conflicting stimuli. A paradigm that utilizes task-irrelevant information, rather than conflicting information, may be able to demonstrate that modality-specific selective attention can suppress multisensory integration when it is not behaviorally advantageous.

Another possibility to consider is that conflict effects and multisensory integration contributed to the behavioral decrements during non-matching trials, and we simply did not have enough power to detect the small changes in performance produced by selective and divided attention. We did observe a trend for reductions in the magnitude of the performance decrements under selective attention, but such changes were quite small. Given the trends in the data for the difference in distraction during divided and selective attention conditions (mean difference = 25.01 ms, SD = 87.32 ms), it would require approximately 75 participants in each group to detect significant reductions in distraction during selective attention to a single sensory modality (assuming power = 80% and P-value of 0.05).
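The text does not state how the sample size estimate was obtained. As one possible reconstruction, the sketch below applies the standard normal-approximation formula for a paired (one-sample) comparison, n = ((z_alpha + z_beta) / d)^2, where d is the mean difference divided by its standard deviation. The function name and the choice of a one-sided test are assumptions made here for illustration, not details reported by the study.

```python
from statistics import NormalDist


def paired_sample_size(mean_diff, sd_diff, alpha=0.05, power=0.80, one_sided=True):
    """Approximate n for a paired comparison (normal approximation).

    The one-sided default is an assumption of this sketch.
    """
    effect_size = mean_diff / sd_diff
    tail = alpha if one_sided else alpha / 2
    z_alpha = NormalDist().inv_cdf(1 - tail)
    z_beta = NormalDist().inv_cdf(power)
    return ((z_alpha + z_beta) / effect_size) ** 2


# Values reported in the text: mean difference 25.01 ms, SD 87.32 ms.
n_one_sided = paired_sample_size(25.01, 87.32)
n_two_sided = paired_sample_size(25.01, 87.32, one_sided=False)
```

Under these assumptions the one-sided calculation gives a value close to the figure of approximately 75 quoted above, whereas a two-sided test would call for a noticeably larger sample.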

Conclusion

Modality-specific selective attention is one potential means by which information from multiple sensory modalities could be filtered in order to maintain the salience of task-relevant information and limit distractions from task-irrelevant information. When stimuli in multiple sensory modalities provide redundant information, the integration of these stimuli can potentially enhance the processing of the information (Stein et al. 1989; Spence et al. 1998; Laurienti et al. 2004). The experiments detailed here indicated that the performance enhancements associated with matching multisensory stimuli were only present when participants divided their attention between sensory modalities. Selective attention to a single modality eliminated performance enhancements associated with matched multisensory stimuli (Figs. 2, 3).

The attenuation of multisensory integration observed during modality-specific attention was likely not due to ceiling effects in the selective attention conditions, because neither accuracy nor RT differed significantly between selective and divided attention conditions, yet multisensory integration was observed only during divided attention conditions. As this study utilized complex verbal stimuli in order to assess how modality-specific attention impacts the behavioral enhancements and decrements caused by semantically matching and non-matching cross-modal stimuli, it is difficult to say whether modality-specific attention would have the same effect on the integration of simple multisensory stimuli such as lights and tones. Using ERPs, Talsma et al. (2007) used tones and simple visual stimuli to demonstrate that integration effects were not apparent during attention to a single sensory modality; however, additional studies will be required to explore the influence of stimulus complexity on attention and integration effects.

In many situations, stimuli from different sensory modalities will likely convey non-matching information, potentially impairing the ability to process one or more of the stimuli. One common example of this situation occurs during a telephone conversation, when it is highly unlikely that the visual stimuli in your environment match the auditory stimuli of the telephone conversation. We hypothesized that modality-specific selective attention would reduce the amount of distraction caused by stimuli in the unattended sensory modality; however, the results of Experiment 2 demonstrated that selective attention did not significantly reduce either auditory or visual cross-modal distraction. Additional studies on the impact of conflict and semantic congruence will be necessary to determine how attentional mechanisms influence distraction within and between sensory modalities.

These experiments demonstrate the utility of divided attention in improving participants’ ability to make quick and accurate discriminations between multisensory stimuli. The benefits of multisensory integration under divided attention, however, may be outweighed by increased susceptibility to distractors during divided attention that can lead to impairments in encoding information into memory (Craik et al. 1996). The finding that attention can alter multisensory interactions may also have some interesting real-world applications, as it has been demonstrated that older adults integrate stimuli from multiple sensory modalities to a greater extent than do younger adults (Laurienti et al. 2006; Poliakoff et al. 2006). While this may be beneficial in the presence of congruent multisensory information, it can also potentially lead to greater distraction from cross-modal stimuli for older adults. Training programs targeting selective attention and conflict resolution may, therefore, be a possible mechanism for maintaining healthy sensory function with age.

References

Alsius A, Navarra J, Campbell R, Soto-Faraco S (2005) Audiovisual integration of speech falters under high attention demands. Curr Biol 15:839–843

Bertelson P, Radeau M (1981) Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Percept Psychophys 29:578–584

Bertelson P, Vroomen J, de Gelder B, Driver J (2000) The ventriloquist effect does not depend on the direction of deliberate visual attention. Percept Psychophys 62:321–332

Burnett LR, Stein BE, Chaponis D, Wallace MT (2004) Superior colliculus lesions preferentially disrupt multisensory orientation. Neuroscience 124:535–547

Calvert GA, Campbell R, Brammer MJ (2000) Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol 10:649–657

Corbetta M, Miezin FM, Dobmeyer S, Shulman GL, Petersen SE (1990) Attentional modulation of neural processing of shape, color, and velocity in humans. Science 248:1556–1559

Craik FI, Govoni R, Naveh-Benjamin M, Anderson ND (1996) The effects of divided attention on encoding and retrieval processes in human memory. J Exp Psychol Gen 125:159–180

Egner T, Hirsch J (2005) Cognitive control mechanisms resolve conflict through cortical amplification of task-relevant information. Nat Neurosci 8:1784–1790

Fan J, Flombaum JI, McCandliss BD, Thomas KM, Posner MI (2003) Cognitive and brain consequences of conflict. Neuroimage 18:42–57

Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL (1994) The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J Neurosci 14:6336–6353

Hershenson M (1962) Reaction time as a measure of intersensory facilitation. J Exp Psychol 63:289–293

Jiang W, Jiang H, Stein BE (2002) Two corticotectal areas facilitate multisensory orientation behavior. J Cogn Neurosci 14:1240–1255

Johnen A, Wagner H, Gaese BH (2001) Spatial attention modulates sound localization in barn owls. J Neurophysiol 85:1009–1012

Johnson JA, Zatorre RJ (2005) Attention to simultaneous unrelated auditory and visual events: behavioral and neural correlates. Cereb Cortex 15:1609–1620

Johnson JA, Zatorre RJ (2006) Neural substrates for dividing and focusing attention between simultaneous auditory and visual events. Neuroimage 31:1673–1681

Kastner S, De Weerd P, Desimone R, Ungerleider LG (1998) Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science 282:108–111

Kawashima R, O’Sullivan BT, Roland PE (1995) Positron-emission tomography studies of cross-modality inhibition in selective attentional tasks: closing the “mind’s eye”. Proc Natl Acad Sci USA 92:5969–5972

Laurienti PJ, Burdette JH, Wallace MT, Yen YF, Field AS, Stein BE (2002) Deactivation of sensory-specific cortex by cross-modal stimuli. J Cogn Neurosci 14:420–429

Laurienti PJ, Kraft RA, Maldjian JA, Burdette JH, Wallace MT (2004) Semantic congruence is a critical factor in multisensory behavioral performance. Exp Brain Res 158:405–414

Laurienti PJ, Burdette JH, Maldjian JA, Wallace MT (2006) Enhanced multisensory integration in older adults. Neurobiol Aging 27:1155–1163

Loose R, Kaufmann C, Auer DP, Lange KW (2003) Human prefrontal and sensory cortical activity during divided attention tasks. Hum Brain Mapp 18:249–259

Macaluso E, Frith CD, Driver J (2000) Modulation of human visual cortex by crossmodal spatial attention. Science 289:1206–1208

Macaluso E, Frith CD, Driver J (2002) Directing attention to locations and to sensory modalities: multiple levels of selective processing revealed with PET. Cereb Cortex 12:357–368

Macaluso E, George N, Dolan R, Spence C, Driver J (2004) Spatial and temporal factors during processing of audiovisual speech: a PET study. Neuroimage 21:725–732

McDonald JJ, Teder-Salejarvi WA, Di Russo F, Hillyard SA (2003) Neural substrates of perceptual enhancement by cross-modal spatial attention. J Cogn Neurosci 15:10–19

Miller J (1982) Divided attention: evidence for coactivation with redundant signals. Cognit Psychol 14:247–279

Miller J (1986) Timecourse of coactivation in bimodal divided attention. Percept Psychophys 40:331–343

Moore CM, Egeth H (1998) How does feature-based attention affect visual processing? J Exp Psychol Hum Percept Perform 24:1296–1310

Morrell LK (1968) Temporal characteristics of sensory interaction in choice reaction times. J Exp Psychol 77:14–18

Motter BC (1993) Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. J Neurophysiol 70:909–919

O’Leary DS, Andreasen NC, Hurtig RR, Torres IJ, Flashman LA, Kesler ML, Arndt SV, Cizadlo TJ, Ponto LLB, Watkins GL, Hichwa RD (1997) Auditory and visual attention assessed with PET. Hum Brain Mapp 5:422–436

Poliakoff E, Ashworth S, Lowe C, Spence C (2006) Vision and touch in ageing: crossmodal selective attention and visuotactile spatial interactions. Neuropsychologia 44:507–517

Posner MI, Driver J (1992) The neurobiology of selective attention. Curr Opin Neurobiol 2:165–169

Rees G, Frith C, Lavie N (2001) Processing of irrelevant visual motion during performance of an auditory attention task. Neuropsychologia 39:937–949

Reynolds JH, Chelazzi L, Desimone R (1999) Competitive mechanisms subserve attention in macaque areas V2 and V4. J Neurosci 19:1736–1753

Shore DI, Simic N (2005) Integration of visual and tactile stimuli: top–down influences require time. Exp Brain Res 166:509–517

Spence C, Driver J (1997) On measuring selective attention to an expected sensory modality. Percept Psychophys 59:389–403

Spence C, Nicholls ME, Gillespie N, Driver J (1998) Cross-modal links in exogenous covert spatial orienting between touch, audition, and vision. Percept Psychophys 60:544–557

Spence C, Nicholls ME, Driver J (2001) The cost of expecting events in the wrong sensory modality. Percept Psychophys 63:330–336

Spitzer H, Desimone R, Moran J (1988) Increased attention enhances both behavioral and neuronal performance. Science 240:338–340

Stein BE, Meredith MA, Huneycutt WS, McDade L (1989) Behavioral indices of multisensory integration: orientation to visual cues is affected by auditory stimuli. J Cogn Neurosci 1:12–24

Talsma D, Kok A (2001) Nonspatial intermodal selective attention is mediated by sensory brain areas: evidence from event-related potentials. Psychophysiology 38:736–751

Talsma D, Woldorff MG (2005) Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. J Cogn Neurosci 17:1098–1114

Talsma D, Doty TJ, Woldorff MG (2007) Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb Cortex 17:679–690

Turatto M, Benso F, Galfano G, Umilta C (2002) Nonspatial attentional shifts between audition and vision. J Exp Psychol Hum Percept Perform 28:628–639

Turatto M, Galfano G, Bridgeman B, Umilta C (2004) Space-independent modality-driven attentional capture in auditory, tactile and visual systems. Exp Brain Res 155:301–310

Vroomen J, Bertelson P, de Gelder B (2001) The ventriloquist effect does not depend on the direction of automatic visual attention. Percept Psychophys 63:651–659

Weissman DH, Giesbrecht B, Song AW, Mangun GR, Woldorff MG (2003) Conflict monitoring in the human anterior cingulate cortex during selective attention to global and local object features. Neuroimage 19:1361–1368

Woodruff PW, Benson RR, Bandettini PA, Kwong KK, Howard RJ, Talavage T, Belliveau J, Rosen BR (1996) Modulation of auditory and visual cortex by selective attention is modality-dependent. Neuroreport 7:1909–1913

Acknowledgments

We would like to thank Ms Elizabeth Bennett for her assistance with data collection and analyses. This research was supported by NIH grant #NS042568.


Mozolic, J.L., Hugenschmidt, C.E., Peiffer, A.M. et al. Modality-specific selective attention attenuates multisensory integration. Exp Brain Res 184, 39–52 (2008). https://doi.org/10.1007/s00221-007-1080-3
