Abstract

We consider two inverse Gaussian populations with a common mean but different scale-like parameters, where all parameters are unknown. We construct noninformative priors for the ratio of the scale-like parameters and derive matching priors of different orders. Reference priors are proposed for different groups of parameters. The Bayes estimators of the common mean and of the ratio of the scale-like parameters are also derived. We propose confidence intervals for the conditional error rate of classifying an observation into one of the two inverse Gaussian populations. A generalized variable-based confidence interval and highest posterior density credible intervals for the error rate are computed. We estimate the parameters of a mixture of these inverse Gaussian distributions and obtain estimates of the expected probability of correct classification. An extensive simulation study is carried out to compare the estimators and the expected probability of correct classification. Examples based on real data illustrate the practicality and effectiveness of the estimators.



1 Introduction

The inverse Gaussian (IG) distribution has applications in various fields such as engineering, actuarial science, medical science, environmental science, and management science. It is a good choice for modeling data with a long right tail and a relatively small mean. ‘Together with the normal and gamma distributions, the inverse Gaussian completes the trio of families that are both an exponential and a group family of distributions’ (Lehmann and Casella 2006, p. 68). The IG distribution is widely applied in life testing and reliability analysis. Consider a toy example of classifying an observation into one of two IG distributions. Suppose that observed lifetimes of two types of electronic components used in an automatic machine are available. The lifetimes of both types are known to follow IG distributions, and their mean lifetimes are equal. One of the components has failed, and its failure time has been recorded. The problem is to identify the component type from the observed lifetime. If the component is of type 1 and the classification rule assigns it to type 2, or vice versa, it is misclassified. It is desirable to minimize the error rate in classification (ERC). We aim to derive several confidence intervals (CIs) and a credible interval for the conditional ERC using the training samples from each population.

Ahmad et al. (1991) first considered the model of k IG distributions having an equal mean \(\mu\) and developed the MLE and a Graybill-Deal type estimator of \(\mu\). They explored the decision-theoretic properties of the estimator. Gupta and Akman (1995) considered a mixture of the IG and length-biased IG distributions and derived Bayes estimators of the model parameters. Since the Bayes estimator of the mean is not available in closed form, numerical techniques were used to compute it. Karlis (2002) considered a mixture of normal and IG distributions and derived moment estimators of the parameters. He also computed the MLEs of the parameters using the EM algorithm. Tian and Wilding (2005) used a modified directed likelihood ratio statistic to derive the CI of the ratio of two IG means, and they used the reciprocal root IG distribution to simplify the interval. Sindhu et al. (2018) studied properties of the mixture of half-normal distributions and proposed Bayes estimators of its parameters using noninformative priors under different loss functions.

Noninformative priors provide satisfactory results when little or no prior information is available. A probability matching prior is a noninformative prior chosen so that the posterior probabilities of certain regions match their frequentist coverage probabilities (CPs) up to a specified order of approximation. Bernardo (1979) derived noninformative priors by separating the parameters of interest from the nuisance parameters; this is known as the reference prior approach. Berger and Bernardo (1989) introduced the reverse reference prior, in which the roles of the parameter of interest and the nuisance parameters are interchanged. Kim et al. (2006) used noninformative priors to study Bayesian inference for a linear combination of normal means. They derived second-order probability matching priors for the linear combination of the normal means as functions of the nuisance parameters and showed that these priors also match alternative CPs up to the second order. For two IG populations having an equal mean, noninformative priors for the parameters have not been studied in the literature.

There is an extensive literature on classifying observations into normal populations, but only a few articles focus on classification into non-normal or skewed distributions. Amoh (1985) derived the estimated classification function for a mixture of IG distributions with a common scale-like parameter and analyzed its efficiency for small samples. Conde et al. (2005) proposed classification rules for two exponential distributions under restrictions on the parameters; the proposed rules have lower misclassification probabilities than the likelihood ratio-based rule. Batsidis and Zografos (2006) studied classification techniques for elliptically contoured populations with a common scale matrix. When missing data are present, they considered separate discriminant functions for the complete and incomplete samples, and their proposed discriminant function is a linear combination of these two discriminant functions. For small samples, several point estimators of the error rate are nearly unbiased but not consistent; in such situations, interval estimation of the conditional error rate provides a better alternative. The conditional ERC is the probability of misclassification given a training data set. Chung and Han (2009) derived CIs of the conditional and unconditional error rates for classifying into two or more p-dimensional normal populations with a common covariance matrix. These CIs, obtained using bootstrap and jackknife methods, improve on other methods such as the binomial approximation, k-fold cross-validation, and the parametric approach. Jana and Chakraborty (2023) investigated the classification problem for several normal populations with an equal mean and different variances, and used bootstrap and jackknife procedures to calculate CIs of the conditional error rate. Jana and Kumar (2019) studied the classification problem for two IG populations in various cases under order restrictions on the parameters. They also derived the likelihood ratio-based rule for two IG populations without restrictions and generalized it to k populations. Note that estimation of the conditional ERC for IG populations has not been studied in the Bayesian framework.

Estimating the ERC requires estimating a function of the parameters. When several parameters are involved, frequentist inference may face difficulties in constructing pivotal quantities that effectively eliminate the nuisance parameters. In such cases, probability matching priors can be used to construct approximate CIs. The current study has two objectives. First, we derive noninformative priors for the ratio of the scale-like parameters \(\lambda _i\) of two IG populations having a common mean. Second, we consider the classification problem for this model to illustrate applications of the estimators, and we propose classical CIs and credible intervals for the conditional ERC. Since finite mixtures of two IG distributions are useful for robust modeling of data sets, we also study classification into a mixture of two IG populations.

The paper is arranged as follows. Sect. 2 introduces IG distributions having an equal mean. In Sect. 3.1, we derive noninformative priors for the ratio of the scale-like parameters through orthogonal parametrization. In Sect. 3.2, Bayes estimators of parametric functions of these distributions are obtained. In Sect. 4.1, we derive credible intervals, generalized variable-based CIs, and other CIs of the conditional error rate. Section 4.2 considers classification of observations into a mixture of IG distributions. Sect. 5 presents a thorough simulation study together with real-data examples. Finally, conclusions are given in Sect. 6.

2 Preliminaries

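Consider two independent inverse Gaussian populations \(\varPi _1\) and \(\varPi _2\) having an equal mean \(\mu\) and scale-like parameters \(\lambda _1\) and \(\lambda _2\), respectively. The probability density function (pdf) corresponding to the population \(\varPi _i\) is

$$f(x;\mu ,\lambda _i)=\sqrt{\frac{\lambda _i}{2\pi x^3}}\exp \left\{ -\frac{\lambda _i(x-\mu )^2}{2\mu ^2x}\right\} ,\quad x>0, \tag{1}$$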

for \(i=1,2.\) Suppose \(X_{i1},X_{i2},\ldots ,X_{in_i}~(n_i\ge 2)\) is a random sample from the population \(\varPi _i\). Denote \(\bar{X_i}={n_i}^{-1}\sum _{j=1}^{n_i} X_{ij}\) and \({S_i^{-1}}={n_i}^{-1}{\sum _{j=1}^{n_i}(X_{ij}^{-1}-\bar{X_i}^{-1})} \text {~for~} i=1,2.\) Note that \((\bar{X_1},\bar{X_2}, S_1, S_2)\) is a minimal sufficient statistic for the parameter \((\mu , \lambda _1,\lambda _2)\) (Chhikara and Folks 1989). The statistics \(S_1\) and \(S_2\) are the MLEs of \(\lambda _1\) and \(\lambda _2\), respectively, when each population is considered separately, that is, without imposing the common mean.
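The statistics above are simple to compute. The following Python sketch (standard library only) generates IG samples by the transformation method of Michael, Schucany and Haas, which is our assumption since no generator is specified here, and evaluates \((\bar{x}_i, S_i)\) from a sample. The function names `rinvgauss` and `ig_stats` are hypothetical.

```python
import math
import random


def rinvgauss(mu, lam, rng=random):
    """Draw one value from IG(mu, lam) (Michael-Schucany-Haas transformation method)."""
    y = rng.gauss(0.0, 1.0) ** 2  # chi-square(1) draw
    # Smaller root of the quadratic linking y to x
    x = (mu + mu * mu * y / (2.0 * lam)
         - (mu / (2.0 * lam)) * math.sqrt(4.0 * mu * lam * y + (mu * y) ** 2))
    # Keep x with probability mu / (mu + x); otherwise return the larger root mu^2 / x
    return x if rng.random() <= mu / (mu + x) else mu * mu / x


def ig_stats(sample):
    """Return (xbar, S) with S^{-1} = n^{-1} * sum(1/x_j - 1/xbar)."""
    n = len(sample)
    xbar = sum(sample) / n
    s_inv = sum(1.0 / x - 1.0 / xbar for x in sample) / n
    return xbar, 1.0 / s_inv


if __name__ == "__main__":
    rng = random.Random(1)
    x = [rinvgauss(2.0, 3.0, rng) for _ in range(20000)]
    print(ig_stats(x))  # approximately (2.0, 3.0) for a large sample
```

For a large simulated sample, `ig_stats` should return values close to \((\mu ,\lambda )\), since \(\bar{X}_i\) and \(S_i\) are consistent for \(\mu\) and \(\lambda _i\).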

3 Bayes estimation

3.1 Noninformative priors for the ratio of scale-like parameters

In Bayesian inference, a noninformative prior is used when little or no prior information about the parameters is available. To estimate a parameter of interest in the presence of nuisance parameters, orthogonality with respect to the expected Fisher information matrix plays an important role. A parameter \(\theta _1\) is said to be orthogonal to the parameters \((\theta _2,\theta _{3},\ldots ,\theta _k)\) if the off-diagonal elements \(I_{1j}\), \(j=2,\ldots ,k\), of the information matrix vanish; the parameters are mutually orthogonal when the whole information matrix is diagonal. Orthogonalization simplifies the computations and ensures that the MLE of \(\theta _1\) is asymptotically independent of the MLEs of the nuisance parameters. Probability matching priors ensure that the frequentist coverage error of posterior quantiles of a function of the parameters is of a specified order. Suppose the random samples \({\mathop {x}\limits _{\sim }}=(x_1,\ldots ,x_{n_1})\) and \({\mathop {y}\limits _{\sim }}=(y_1,\ldots ,y_{n_2})\) are drawn from IG\((\mu ,\lambda _1)\) and IG\((\mu ,\lambda _2)\), respectively. The log-likelihood function is

$$l(\mu ,\lambda _1,\lambda _2)=\frac{n_1}{2}\ln \lambda _1+\frac{n_2}{2}\ln \lambda _2-\frac{\lambda _1}{2\mu ^2}\sum _{j=1}^{n_1}\frac{(x_j-\mu )^2}{x_j}-\frac{\lambda _2}{2\mu ^2}\sum _{j=1}^{n_2}\frac{(y_j-\mu )^2}{y_j}+\text {constant}. \tag{2}$$

Consider a prior distribution \(\pi\) for the parameter of interest \(\theta _1=\lambda _2/\lambda _1\). Let \(\theta _1^{\alpha }(\pi ;\textbf{x})\) denote the \((1-\alpha )\)th quantile of the posterior distribution of \(\theta _1\), that is,

\(P^{\pi }[\theta _1\le \theta _1^{\alpha }(\pi ;\textbf{x})|\textbf{x}] =1-\alpha .\) We want to find priors \(\pi\) for which the frequentist coverage probability satisfies \(P_{{\mathop {\theta }\limits _{\sim }}}\left[ \theta _1\le \theta _1^{\alpha }(\pi ;\textbf{X})\right] =1-\alpha +o(n^{-1})\); such a prior is a second-order matching prior. To find such priors, we use orthogonal parametrization techniques (Cox and Reid 1987; Tibshirani 1989) and obtain orthogonal parameters \(\theta _1,\theta _2\) and \(\theta _3\). Denote \({\mathop {\theta }\limits _{\sim }}=(\theta _1,\theta _2,\theta _3)\). Under the transformations \(\lambda _1\rightarrow \phi _1,~\lambda _2\rightarrow \phi _1\psi ,~~\mu \rightarrow \phi _2,\) the expression (2) becomes

The orthogonality equations used to find \(\phi _1,\phi _2,\psi\), and subsequently \(\theta _{2}\) and \(\theta _{3}\), are provided in the supplementary material. The resulting orthogonal parametrization is \(\theta _1=\lambda _2/\lambda _1,~~\theta _2=\lambda _1^{n_1}\lambda _2^{n_2},~~\theta _3=\mu\), with inverse \(\lambda _1=\theta _1^{-n_2/(n_1+n_2)}\theta _2^{1/(n_1+n_2)},\) \(\lambda _2=\theta _1^{n_1/(n_1+n_2)} \theta _2^{1/(n_1+n_2)},\) \(\mu =\theta _3.\) In terms of \(\theta _1,\theta _2\) and \(\theta _3\), the log-likelihood function (2) becomes

From Eq. (3), the elements of the information matrix are obtained as

Since \(\theta _1\) is orthogonal to \(\theta _2\) and \(\theta _3\), Tibshirani (1989) defines the class of first-order probability matching (FOPM) priors as

where \(d(\theta _2,\theta _3)\) is an arbitrary differentiable function.
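The orthogonality of \(\theta _1\) and \(\theta _2\) can be checked numerically. The Python sketch below, with hypothetical helper names, maps \((\lambda _1,\lambda _2,\mu )\) to \((\theta _1,\theta _2,\theta _3)\) and back, and computes the expected information for \((\theta _1,\theta _2)\) by the chain rule \(I_\theta =J^{\top }I_\lambda J\), using the per-sample IG information \(n_i/(2\lambda _i^2)\) for \(\lambda _i\) (for IG samples, \(\mu\) is orthogonal to each \(\lambda _i\)). The off-diagonal element vanishes, and \(I_{11}=n_1n_2/\{2(n_1+n_2)\theta _1^2\}\), so \(I_{11}^{1/2}\propto \theta _1^{-1}\), in line with the \(\theta _1^{-1}\) factor of the matching priors.

```python
import math


def theta_from_lambda(lam1, lam2, mu, n1, n2):
    """Orthogonal parameters: theta1 = lam2/lam1, theta2 = lam1^n1 * lam2^n2, theta3 = mu."""
    return lam2 / lam1, lam1 ** n1 * lam2 ** n2, mu


def lambda_from_theta(t1, t2, t3, n1, n2):
    """Inverse map: lam1 = t1^(-n2/N) t2^(1/N), lam2 = t1^(n1/N) t2^(1/N), mu = t3."""
    N = n1 + n2
    lam1 = t1 ** (-n2 / N) * t2 ** (1.0 / N)
    lam2 = t1 ** (n1 / N) * t2 ** (1.0 / N)
    return lam1, lam2, t3


def info_theta12(t1, t2, n1, n2):
    """Expected Fisher information for (theta1, theta2) via I_theta = J^T I_lambda J."""
    N = n1 + n2
    lam1, lam2, _ = lambda_from_theta(t1, t2, 1.0, n1, n2)
    # J[k][a] = d lam_k / d theta_a (analytic derivatives of the inverse map)
    J = [[-(n2 / N) * lam1 / t1, (1.0 / N) * lam1 / t2],
         [(n1 / N) * lam2 / t1, (1.0 / N) * lam2 / t2]]
    w = [n1 / (2.0 * lam1 ** 2), n2 / (2.0 * lam2 ** 2)]  # diagonal of I_lambda
    return [[sum(w[k] * J[k][a] * J[k][b] for k in range(2)) for b in range(2)]
            for a in range(2)]
```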

Theorem 1

The second-order probability matching (SOPM) priors are given by \(\pi ^{(2)}({\mathop {\theta }\limits _{\sim }})=\theta _1^{-1}\theta _2^{-1}d(\theta _3),\) where \(d(\theta _3)\) is any smooth function of \(\theta _3\).

Proof

The proof of the theorem is provided in the supplementary material. \(\square\)

Under orthogonal parametrization, an SOPM prior also matches alternative CPs up to the second order if the following set of differential equations is satisfied (Mukerjee and Reid 1999):

Under this setup,

After substituting the expressions for \(L_{111}, L_{1,11}, L_{2,11}, L_{3,11}\), we find that the above equations hold.

Note 1

It can be verified that \(I_{11}^{-3/2}L_{111}\) is independent of \(\theta _1\). Hence the SOPM priors proposed here are also highest posterior density (HPD) matching priors up to the second order.

Having established that the parameters \(\theta _1,\theta _2,\theta _3\) are orthogonal, we derive the reference priors for various groupings and orderings of \(\theta _1,\theta _2,\theta _3\) following Datta and Ghosh (1995).

Group ordering: \(\{(\theta _1,\theta _2,\theta _3)\}:\) The reference prior is of the form

\(\{\theta _1,\theta _2,\theta _3\}, \{(\theta _1,\theta _2),\theta _3\}:\) The form of the reference prior is given by

\(\{\theta _1,(\theta _2,\theta _3)\}:\) The reference prior is of the form

\(\{(\theta _1,\theta _3),\theta _2\}:\) The reference prior is of the form

Note 2

Note that the proposed reference priors \(\pi _2({\mathop {\theta }\limits _{\sim }})\) and \(\pi _3({\mathop {\theta }\limits _{\sim }})\) are FOPM priors, and \(\pi _2({\mathop {\theta }\limits _{\sim }})\) is also an SOPM prior.

In Sect. 4.1, we obtain credible intervals for the ERC using the reference prior \(\pi _2({\mathop {\theta }\limits _{\sim }})\).

3.2 Bayes estimators of function of parameters

Let X be IG\((\mu ,\lambda )\) distributed with the pdf of the form (1). When the mean is known, the gamma family is a conjugate prior for \(\lambda\). However, when both \(\mu\) and \(\lambda\) are unknown, a conjugate prior is not readily available for the IG distribution. We consider two IG distributions having an equal mean but different \(\lambda _i\)s, for which finding a conjugate prior distribution is a real challenge. The coefficient of variation of X is \(\sqrt{\mu /\lambda }\). Under the reparametrization \(\lambda =\mu \phi\), the coefficient of variation becomes \(\phi ^{-1/2}\), and the pdf is written as

$$f(x;\mu ,\phi )=\sqrt{\frac{\mu \phi }{2\pi x^3}}\exp \left\{ -\frac{\phi (x-\mu )^2}{2\mu x}\right\} ,\quad x>0.$$

Let \(x_1,x_2,\ldots ,x_n\) be a random sample from the IG\((\mu ,\phi )\) distribution. The likelihood function based on \(x_1,x_2,\ldots ,x_n\) is

where \(\bar{x}=n^{-1}\sum _{i=1}^{n}x_i\) and \(\bar{x}_r=n^{-1}\sum _{i=1}^{n}x_i^{-1}\). Assume the prior information about \(\mu\) and \(\phi\) is summarized in the density \(\pi (\mu ,\phi )=\pi (\mu |\phi )\pi (\phi )\), where

Hence

The joint probability density function of \(x_1,x_2,\ldots ,x_n,\mu ,\phi\) is

Now the joint posterior density function of \((\mu ,\phi )\) is given by

where c is the normalizing constant. The posterior density of \(\phi\) is

where \(K_n\) is the modified Bessel function of the third kind with index n. Next, we consider two IG distributions, IG\((\mu ,\phi _1)\) and IG\((\mu ,\phi _2)\). Since \(\lambda _i=\mu \phi _i\), the ratio of the squared coefficients of variation of the two distributions equals \(\lambda _2/\lambda _1\), and we find an estimator of \(\lambda _1/\lambda _2\). Since the two populations are independent, the joint density of \((\phi _1,\phi _2)\) given the data is \(\pi (\phi _1,\phi _2|{\mathop {x}\limits _{\sim }},{\mathop {y}\limits _{\sim }})=\pi (\phi _1|{\mathop {x}\limits _{\sim }})\pi (\phi _2|{\mathop {y}\limits _{\sim }}).\) The Bayes estimator of \(\lambda _1/\lambda _2\) is

Next, we consider estimation of \(\mu\). The joint density function of \(\mu , \phi _1\) and \(\phi _2\) is

Then the likelihood function is

The posterior density of \(\mu\) is

where \(\tilde{L}(\mu ,\phi _1,\phi _2)=L(\mu ,\phi _1,\phi _2|{\mathop {x}\limits _{\sim }}_1,{\mathop {x}\limits _{\sim }}_2)\pi (\mu ,\phi _1,\phi _2).\) The Bayes estimator of \(\mu\) is

The estimator \(\hat{\mu }\) is used to estimate the classification function and compare error rates in the next section.

4 Application to classification problem

The present section applies the IG model considered above to the classification problem. Suppose P(i|j) is the probability of misclassifying an observation from \(\varPi _j\) into \(\varPi _i\) and C(i|j) is the corresponding cost of misclassification, \(i\ne j,~i,j=1,2\). Given a new observation z, the classification regions

$$R_1=\left\{ z:\frac{f(z;\mu ,\lambda _1)}{f(z;\mu ,\lambda _2)}\ge \frac{C(1|2)q_2}{C(2|1)q_1}\right\} ,\qquad R_2=\left\{ z:\frac{f(z;\mu ,\lambda _1)}{f(z;\mu ,\lambda _2)}<\frac{C(1|2)q_2}{C(2|1)q_1}\right\}$$

are obtained by minimizing the expected cost of misclassification, where \(q_i\) is the prior probability that an observation belongs to the population \(\varPi _i, ~i=1,2\). The expected probability of correct classification (EPC) is defined as \(\sum _{i=1}^2q_iP(i|i).\) Assume that \(C(1|2)=C(2|1)\) and \(q_1=q_2\). Following Anderson (2003), the Bayes classification rule (R) assigns a new observation z to \(\varPi _1\) if \(W>0\) and to \(\varPi _2\) otherwise, where

$$W=\ln \frac{f(z;\mu ,\lambda _1)}{f(z;\mu ,\lambda _2)}=\frac{1}{2}\ln \frac{\lambda _1}{\lambda _2}-\frac{(\lambda _1-\lambda _2)(z-\mu )^2}{2\mu ^2z}.$$

4.1 Confidence intervals of ERC

We want to estimate the ERC for two such IG populations. Suppose \(\gamma =(P_1+P_2)/2=(P[W<0|z\in \varPi _1]+P[W>0|z\in \varPi _2])/2\) denotes the unconditional error rate. Since \(\mu ,\lambda _1,\lambda _2\) are unknown, we replace them by their estimates and obtain the conditional error rate \(\gamma ^{*}=(P_1^{*}+P_2^{*})/2,\) where \(P_1^*=P(W<0|\bar{x}_1,\bar{x}_2,S_1,S_2;z\in \varPi _1)\) and \(P_2^*=P(W\ge 0|\bar{x}_1,\bar{x}_2,S_1,S_2;z\in \varPi _2).\) Then

where \(\hat{\lambda }_i\) is an estimator of \(\lambda _i\), \(g(\cdot )\) is the cumulative distribution function of \(\chi ^2_1\) and \(\hat{\lambda }^*={(\ln \hat{\lambda }_1-\ln \hat{\lambda }_2)}/{(\hat{\lambda }_1-\hat{\lambda }_2)}\).
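As an illustration, the Python sketch below evaluates the rule and the error rate when the true parameters are known. It assumes that W is the log density ratio \(\ln f(z;\mu ,\lambda _1)-\ln f(z;\mu ,\lambda _2)\), which is the Bayes rule for equal costs and equal priors. Since \(\lambda _i(Z-\mu )^2/(\mu ^2Z)\sim \chi ^2_1\) when Z comes from \(\varPi _i\), the two misclassification probabilities are \(1-g(\lambda _1\lambda ^*)\) and \(g(\lambda _2\lambda ^*)\) when \(\lambda _1>\lambda _2\), with the roles reversed when \(\lambda _1<\lambda _2\); hence \(\gamma =\{1-|g(\lambda _1\lambda ^*)-g(\lambda _2\lambda ^*)|\}/2\) with \(\lambda ^*=(\ln \lambda _1-\ln \lambda _2)/(\lambda _1-\lambda _2)\). The \(\chi ^2_1\) cdf is computed as \(g(x)=\mathrm{erf}(\sqrt{x/2})\). All function names are hypothetical, and the step of plugging estimates into the rule to obtain \(\gamma ^*\) is not shown.

```python
import math


def log_ig_pdf(z, mu, lam):
    """Log of the IG(mu, lam) density."""
    return 0.5 * math.log(lam / (2.0 * math.pi * z ** 3)) - lam * (z - mu) ** 2 / (2.0 * mu ** 2 * z)


def W(z, mu, lam1, lam2):
    """Assumed discriminant: ln f(z; mu, lam1) - ln f(z; mu, lam2); the z^3 terms cancel."""
    return 0.5 * math.log(lam1 / lam2) - (lam1 - lam2) * (z - mu) ** 2 / (2.0 * mu ** 2 * z)


def classify(z, mu, lam1, lam2):
    """Assign z to population 1 if W > 0, otherwise to population 2."""
    return 1 if W(z, mu, lam1, lam2) > 0 else 2


def chi2_1_cdf(x):
    """CDF g of the chi-square distribution with 1 degree of freedom."""
    return math.erf(math.sqrt(x / 2.0)) if x > 0 else 0.0


def error_rate(lam1, lam2):
    """gamma = (P1 + P2) / 2 for the rule W with known parameters (lam1 != lam2)."""
    lam_star = (math.log(lam1) - math.log(lam2)) / (lam1 - lam2)
    g1 = chi2_1_cdf(lam1 * lam_star)
    g2 = chi2_1_cdf(lam2 * lam_star)
    return 0.5 * (1.0 - abs(g1 - g2))
```

Because \(\lambda _1\lambda ^*\) and \(\lambda _2\lambda ^*\) depend only on \(\lambda _1/\lambda _2\), `error_rate(2.0, 8.0)` and `error_rate(1.0, 4.0)` coincide, which is consistent with the ERC not depending on \(\mu\).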

An estimate \(\hat{\gamma }^*\) of \(\gamma ^*\) is obtained from the random samples drawn from the populations, and B bootstrap estimates \(\hat{\gamma }_1^*,\ldots ,\hat{\gamma }_B^*\) of \(\gamma ^*\) are calculated from B bootstrap samples. Let \(s_\gamma ^2\) denote the sample variance of the \(\hat{\gamma }_i^*\)'s, and let \(\hat{\gamma }_{(1)}^*\le \cdots \le \hat{\gamma }_{(B)}^*\) denote the ordered bootstrap estimates. Four types of \(100 (1-2\eta )\%\) CIs are presented below:

(a) The conditional CI of \(\gamma ^*\) using the symmetric method is \((\hat{\gamma }^*-z_{\eta }s_{\gamma },\) \(\hat{\gamma }^*+z_{\eta }s_{\gamma })\).

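(b) The conditional CI using the percentile method is given by \((\hat{\gamma }^*_{(r)},\hat{\gamma }^*_{(s)})\), where r and s are the indices of the lower and upper \(\eta\) empirical quantiles of the ordered bootstrap estimates (Jana and Chakraborty 2023).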

(c) Suppose q denotes the number of bootstrap estimates of \(\gamma ^*\) that are smaller than \(\hat{\gamma }^*\). Define \(z_0=\varPhi ^{-1}(q/B)\), \(\eta _{BL}=\varPhi (2z_0-z_{\eta })\) and \(\eta _{BR}=\varPhi (2z_0+z_{\eta })\), where \(\varPhi\) is the standard normal distribution function and \(\varPhi (z_{\eta })=1-\eta\). The conditional CI using the bias-corrected percentile method is given by \((\hat{\gamma }^*_{(j)},\hat{\gamma }^*_{(k)})\), where \(j=(B+1)\eta _{BL}\) and \(k=(B+1)\eta _{BR}\). The conditional CI using the accelerated bias-corrected percentile (Abcp) method is given by \((\hat{\gamma }^*_{(u)},\hat{\gamma }^*_{(v)})\), where \(u=(B+1)\eta _{AL}\) and \(v=(B+1)\eta _{AR}\). We refer to Jana and Chakraborty (2023) for details, including the definitions of \(\eta _{AL}\) and \(\eta _{AR}\).

(d) Using the jackknife resampling method, we construct the following CI for the conditional error rate (see Jana and Chakraborty 2023):

$$\begin{aligned} \left\{ \hat{\gamma }^{**}-t_{n-1,\alpha /2}\sqrt{\frac{\sum \limits _{i=1}^{n}(\hat{\gamma }^{**}_i -\hat{\gamma }^{**})^2}{n(n-1)}}, \hat{\gamma }^{**}+t_{n-1,\alpha /2}\sqrt{\frac{\sum \limits _{i=1}^{n}(\hat{\gamma }^{**}_i -\hat{\gamma }^{**})^2}{n(n-1)}}\right\} , \end{aligned}$$
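A minimal Python sketch of intervals (a) to (d) follows; it works on replicates of \(\hat{\gamma }^*\) supplied by the caller. The index conventions are our assumptions: \(r=\lfloor B\eta \rfloor\) and \(s=\lceil B(1-\eta )\rceil\) for the percentile method, and \((B+1)\eta _{BL}\), \((B+1)\eta _{BR}\) rounded to the nearest valid index for the bias-corrected method. For the jackknife interval, we assume that \(\hat{\gamma }^{**}_i\) are pseudo-values \(n\hat{\gamma }^*-(n-1)\hat{\gamma }^*_{(-i)}\) built from leave-one-out estimates \(\hat{\gamma }^*_{(-i)}\) and that \(\hat{\gamma }^{**}\) is their mean, which matches the \(n(n-1)\) denominator. The t critical value is passed in because the Python standard library has no t quantile function. The Abcp interval is omitted because its acceleration constant is not given here. Function names are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def symmetric_ci(est, boot, eta):
    """(a) est -/+ z_eta * s_gamma, with s_gamma the bootstrap standard deviation."""
    z = NormalDist().inv_cdf(1.0 - eta)
    s = stdev(boot)
    return est - z * s, est + z * s


def percentile_ci(boot, eta):
    """(b) 1-based order statistics r = floor(B*eta) and s = ceil(B*(1 - eta))."""
    b = sorted(boot)
    B = len(b)
    r = max(math.floor(B * eta), 1)
    s = min(math.ceil(B * (1.0 - eta)), B)
    return b[r - 1], b[s - 1]


def bc_percentile_ci(est, boot, eta):
    """(c) Bias-corrected percentile interval; requires 0 < q < B."""
    nd = NormalDist()
    b = sorted(boot)
    B = len(b)
    q = sum(1 for g in boot if g < est)
    z0 = nd.inv_cdf(q / B)
    z_eta = nd.inv_cdf(1.0 - eta)
    eta_bl = nd.cdf(2.0 * z0 - z_eta)
    eta_br = nd.cdf(2.0 * z0 + z_eta)

    def idx(p):
        # (B + 1) * p rounded and clipped to a valid 1-based index
        return min(max(round((B + 1) * p), 1), B)

    return b[idx(eta_bl) - 1], b[idx(eta_br) - 1]


def jackknife_ci(full_est, loo_ests, t_crit):
    """(d) Interval from jackknife pseudo-values; t_crit is t_{n-1, alpha/2}."""
    n = len(loo_ests)
    pseudo = [n * full_est - (n - 1) * g for g in loo_ests]
    center = mean(pseudo)
    se = math.sqrt(sum((p - center) ** 2 for p in pseudo) / (n * (n - 1)))
    return center - t_crit * se, center + t_crit * se
```

For example, with n = 20 leave-one-out estimates and \(\alpha =0.05\), one would pass `t_crit` of about 2.093, the 0.975 quantile of the t distribution with 19 degrees of freedom.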

Next, we use the generalized pivotal quantities for the parameters proposed by Ye et al. (2010) to obtain a CI. Suppose \(T(X,x,\theta _{1},\theta _{2})\) is a generalized pivotal quantity for the parameter of interest \(\theta _{1}\), where x denotes the observed value of the random variable X and \(\theta _{2}\) is the nuisance parameter. Then \(T(X,x,\theta _{1},\theta _{2})\) must satisfy the following conditions:

1. The distribution function of \(T(X,x,\theta _{1},\theta _{2})\) is free from the unknown parameters.

2. The observed value \(T(x,x,\theta _{1},\theta _{2})\) of the pivotal quantity \(T(X,x,\theta _{1},\theta _{2})\) is free from the nuisance parameter \(\theta _{2}\).

Suppose \(\bar{x}_i\) and \(s_i\) are the observed values of \(\bar{X}_i\) and \(S_i\), respectively. A generalized pivotal quantity for \(\lambda _i\) is defined as

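Since \(n_i\lambda _i/S_i\sim \chi ^2_{n_i-1}\) independently for \(i=1,2\) (Chhikara and Folks 1989), the quantity \(\displaystyle \frac{\lambda _1}{\lambda _2}\cdot \frac{(n_2-1)n_1S_2}{(n_1-1)n_2S_1}\) follows an F-distribution with \((n_1-1, n_2-1)\) degrees of freedom. Hence, for \(F\sim F(n_1-1,n_2-1)\), \(\displaystyle T=F\,\frac{(n_1-1)n_2s_1}{(n_2-1)n_1s_2}\) is a generalized variable for the parameter \(\lambda _1/\lambda _2\). We propose the following algorithm for deriving a CI of the error rate.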

Algorithm 1

Step 1. Generate \(x_1,x_2,\ldots ,x_{n_1}\sim\)IG\((\mu ,\lambda _1)\) and \(y_1,y_2,\ldots ,y_{n_2}\sim\)IG\((\mu ,\lambda _2)\).

Step 2. Find \(\bar{x},\bar{y},S_1\) and \(S_2\).

Step 3. Generate a random sample from \(F(n_1-1,n_2-1)\).

Step 4. Let \(T^*=\ln T/(T-1)\). Since T is a generalized variable for \(\lambda _1/\lambda _2\), \(T^*\) serves as an estimate of \(\lambda _2\lambda ^*\).

Step 5. Calculate \(\gamma ^*\).

Step 6. Repeat Steps 3 to 5, B times.

Step 7. Order the B values of \(\gamma ^*\). The \(100(1-\alpha ) \%\) CI of \(\gamma ^*\) is \((\gamma ^*_{(1)},\gamma ^*_{([(1-\alpha )B])})\).
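A minimal Python sketch of Steps 3 to 7 of Algorithm 1 is given below, under these assumptions. We use the standard result \(n_i\lambda _i/S_i\sim \chi ^2_{n_i-1}\), independently for \(i=1,2\), so that \(U_is_i/n_i\) with \(U_i\sim \chi ^2_{n_i-1}\) is a generalized pivotal quantity for \(\lambda _i\) and the ratio of the two is a generalized variable for \(\lambda _1/\lambda _2\) (equivalently, an \(F(n_1-1,n_2-1)\) draw rescaled by the observed \(s_1,s_2\)). We also assume that \(\gamma ^*\) is evaluated through the ratio-only form \(\gamma =\{1-|g(rL)-g(L)|\}/2\) with \(r=\lambda _1/\lambda _2\) and \(L=\ln r/(r-1)\), where L plays the role of \(T^*\) in Step 4. Function names are hypothetical.

```python
import math
import random


def chi2_1_cdf(x):
    """CDF g of chi-square with 1 degree of freedom."""
    return math.erf(math.sqrt(x / 2.0)) if x > 0 else 0.0


def error_rate_from_ratio(r):
    """Error rate of the Bayes rule as a function of r = lam1/lam2 only."""
    if r == 1.0:
        return 0.5
    lam2_star = math.log(r) / (r - 1.0)  # lam2 * lam_star, i.e. T* of Step 4
    lam1_star = r * lam2_star             # lam1 * lam_star
    return 0.5 * (1.0 - abs(chi2_1_cdf(lam1_star) - chi2_1_cdf(lam2_star)))


def gpq_ratio(s1, s2, n1, n2, rng):
    """One draw of the generalized variable for lam1/lam2."""
    u1 = rng.gammavariate((n1 - 1) / 2.0, 2.0)  # chi-square(n1 - 1)
    u2 = rng.gammavariate((n2 - 1) / 2.0, 2.0)  # chi-square(n2 - 1)
    return (u1 * s1 / n1) / (u2 * s2 / n2)


def gpq_interval(s1, s2, n1, n2, B=5000, alpha=0.05, seed=0):
    """Steps 3 to 7: returns (gamma_(1), gamma_([(1 - alpha) B]))."""
    rng = random.Random(seed)
    draws = sorted(error_rate_from_ratio(gpq_ratio(s1, s2, n1, n2, rng))
                   for _ in range(B))
    return draws[0], draws[int((1.0 - alpha) * B) - 1]
```

Here `s1` and `s2` are the observed values of \(S_1\) and \(S_2\) from Step 2.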

In Sect. 5.1, we perform a detailed simulation study to compare the CIs obtained by the proposed methods for two IG populations having an equal mean. CIs for three populations based on bootstrap and jackknife techniques are also compared.

In addition, we propose Bayesian credible intervals for the classification error rate. An HPD credible interval is the shortest interval with a given posterior probability content; every point inside it has a higher posterior density than any point outside it. Chen and Shao (1999) introduced a technique to compute such intervals from samples generated from the posterior density by the Markov chain Monte Carlo method. Assume that \({x_1,x_2,\ldots ,x_{n_1}}\) and \({y_1,y_2,\ldots ,y_{n_2}}\) are random samples from the IG\((\mu , \lambda _1)\) and IG\((\mu , \lambda _2)\) distributions, respectively. Using the reparametrization of Sect. 3.1, the joint density function of \({\mathop {x}\limits _{\sim }}\) and \({\mathop {y}\limits _{\sim }}\) is

where \(n=n_1+n_2\). Given the second-order matching prior of the form \(\pi _2({\mathop {\theta }\limits _{\sim }})\propto \theta _{1}^{-1}\theta _{2}^{-1}\theta _{3}^{-3/2}\), we derive the posterior density of \(\theta _{1}\) as follows:

To generate samples from the posterior density, we use the estimates of \(\theta _2\) and \(\theta _3\) as

respectively. Note that the error rate \(\gamma ^*\) is a function of \(\theta _{1}\) only and is written as

where \(g(\cdot )\) is the cumulative distribution function of \(\chi ^2_1\). Because the posterior density is complex, we generate random samples from it with the Metropolis-Hastings algorithm and then compute the credible interval for \(\gamma ^*\) following Chen and Shao (1999). Given random samples \({\mathop {x}\limits _{\sim }}\) and \({\mathop {y}\limits _{\sim }}\), the following procedure outlines the steps to compute a \(100(1-\alpha )\%\) credible interval for \(\gamma ^*\).

Algorithm 2

Step 1. Choose an initial value \(\theta _{1}^{(0)}\) in the support of the target density.

Step 2. Generate a candidate \(z_*\) from a proposal density \(h(\cdot |\theta _{1}^{(j)}),~j=0,1,2,\ldots\), and compute the acceptance probability

Step 3. Generate a random sample u from U(0, 1) distribution. If \(u<\kappa (\theta _{1}^{(j)},z_*)\), set \(\theta _{1}^{(j+1)}=z_*\); otherwise, set \(\theta _{1}^{(j+1)}=\theta _{1}^{(j)}\).

Step 4. Obtain the error rate \(\gamma ^*\) by setting \(\theta _{1}=\theta _{1}^{(j+1)}\).

Step 5. Set \(j=j+1\).

Step 6. Repeat Steps 2 to 5 M times.

Step 7. From the \(\gamma ^*\) values, obtain the order statistics \(\gamma ^*_{(1)},\gamma ^*_{(2)},\ldots , \gamma ^*_{(M)}\).

Then the \(100(1-\alpha )\%\) HPD credible interval for \(\gamma ^*\) is given by \((\gamma ^*_{([\frac{\alpha }{2}M])},\gamma ^*_{([(1-\frac{\alpha }{2})M])})\). In Sect. 5.1, we apply this algorithm to compute the credible intervals for \(\gamma ^*\).
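The following Python sketch illustrates Algorithm 2 together with the HPD estimate of Chen and Shao (1999), namely the shortest of the intervals \((\gamma ^*_{(j)},\gamma ^*_{(j+k)})\) with \(k=\lfloor (1-\alpha )M\rfloor\). Because the posterior display and the plug-in estimates of \(\theta _2\) and \(\theta _3\) are not reproduced here, it rests on assumptions. We derive the conditional posterior of \(\theta _1\) from the log-likelihood (2) in the \(\theta\) parametrization and the prior \(\pi _2\), which gives \(\pi (\theta _1|\theta _2,\theta _3,\text {data})\propto \theta _1^{-1}\exp \{-\theta _2^{1/n}(\theta _1^{-n_2/n}A+\theta _1^{n_1/n}B)\}\), with \(A=\sum _j(x_j-\theta _3)^2/(2\theta _3^2x_j)\), \(B=\sum _j(y_j-\theta _3)^2/(2\theta _3^2y_j)\) and \(n=n_1+n_2\). As hypothetical plug-ins, we use \(\theta _3\) equal to the pooled sample mean and \(\theta _2=S_1^{n_1}S_2^{n_2}\). The proposal is \(N(\theta _1^{(j)},1)\), as in Sect. 5.1, so the proposal densities cancel in the acceptance ratio. A burn-in is also discarded, which Algorithm 2 does not mention. Since \(\gamma\) is unchanged when \(\lambda _1/\lambda _2\) is replaced by its reciprocal, it can be evaluated directly at \(\theta _1=\lambda _2/\lambda _1\). Function names are hypothetical.

```python
import math
import random


def chi2_1_cdf(x):
    return math.erf(math.sqrt(x / 2.0)) if x > 0 else 0.0


def error_rate_from_ratio(r):
    """gamma as a function of lam1/lam2; symmetric under r -> 1/r."""
    if r == 1.0:
        return 0.5
    l2 = math.log(r) / (r - 1.0)
    return 0.5 * (1.0 - abs(chi2_1_cdf(r * l2) - chi2_1_cdf(l2)))


def s_stat(v):
    """S with S^{-1} = mean(1/v - 1/vbar)."""
    vbar = sum(v) / len(v)
    return len(v) / sum(1.0 / t - 1.0 / vbar for t in v)


def hpd(samples, alpha):
    """Chen-Shao estimate: shortest (s_(j), s_(j+k)) with k = floor((1 - alpha) M)."""
    s = sorted(samples)
    M = len(s)
    k = math.floor((1.0 - alpha) * M)
    j = min(range(M - k), key=lambda i: s[i + k] - s[i])
    return s[j], s[j + k]


def mh_chain(log_target, theta0, M, rng, step=1.0):
    """Random-walk Metropolis with N(current, step^2) proposals on theta1 > 0."""
    cur, lp = theta0, log_target(theta0)
    chain = []
    for _ in range(M):
        prop = rng.gauss(cur, step)
        lq = log_target(prop) if prop > 0 else -math.inf
        # Symmetric proposal: accept with probability min(1, target ratio)
        if rng.random() < math.exp(min(0.0, lq - lp)):
            cur, lp = prop, lq
        chain.append(cur)
    return chain


def credible_interval(x, y, alpha=0.05, M=5000, burn=500, seed=0):
    n1, n2 = len(x), len(y)
    n = n1 + n2
    mu = (sum(x) + sum(y)) / n  # hypothetical plug-in for theta3
    s1, s2 = s_stat(x), s_stat(y)
    c = math.exp((n1 * math.log(s1) + n2 * math.log(s2)) / n)  # theta2^(1/n)
    A = sum((v - mu) ** 2 / (2 * mu ** 2 * v) for v in x)
    Bq = sum((v - mu) ** 2 / (2 * mu ** 2 * v) for v in y)

    def log_target(t1):
        return -math.log(t1) - c * (t1 ** (-n2 / n) * A + t1 ** (n1 / n) * Bq)

    rng = random.Random(seed)
    chain = mh_chain(log_target, s2 / s1, M + burn, rng)[burn:]
    gammas = [error_rate_from_ratio(t) for t in chain]
    return hpd(gammas, alpha)
```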

4.2 Classification into mixture of IG distributions

In this section, we study estimation of the parameters of a mixture of two inverse Gaussian (MTIG) distributions and the corresponding discriminant function. The density of the MTIG distribution having an equal mean parameter is

$$f(x;\varTheta )=p_1f_1(x;\varTheta _1)+(1-p_1)f_2(x;\varTheta _2),\quad x>0,~0<p_1<1, \tag{6}$$

where \(\varTheta =(p_1,\mu ,\phi _1,\phi _2),\varTheta _j=(\mu ,\phi _j),j=1,2\) and \(f_j(x;\varTheta _j)\) is the pdf of the jth univariate IG distribution as given in (5). Suppose \(x_1,x_2,\dots ,x_n\) is a random sample drawn from the MTIG distribution with density (6). The likelihood function based on the random sample is \(L(\varTheta ) = \prod \nolimits _{i=1}^{n}\sqrt{(\mu /({2\pi x_i^3}))}Q_i,\) where

The log-likelihood function is \(l(\varTheta ) = -\sum \nolimits _{i=1}^{n}\{\ln (2\pi x_i^3)\}/2+\sum \nolimits _{i=1}^{n}\ln Q_i.\) The likelihood equations are given by

The above likelihood equations have no closed-form solution, and solving them directly is computationally difficult. Therefore, we use the EM algorithm to find the MLEs of the parameters \(\varTheta =(p_1,\mu ,\phi _1,\phi _2)\). Let \(\textbf{x}=(x_1,\dots ,x_n)\) be an observed sample from the mixture distribution \(f(x;\varTheta )\), where the component from which each \(x_i\) originates is unknown. These missing data are represented by indicators \(y_{ij}\), where \(y_{ij}=1\) if \(x_i\) arises from the jth component and \(y_{ij}=0\) otherwise, \(j=1,2\). The log-likelihood function based on the observed data is

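The complete-data log-likelihood is

$$l_c(\varTheta )=\sum _{i=1}^{n}\sum _{j=1}^{2}y_{ij}\left\{ \ln p_j+\ln f_j(x_i;\varTheta _j)\right\} ,\qquad p_2=1-p_1.$$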

The EM algorithm alternates an expectation step (E-step) and a maximization step (M-step) to generate a sequence \(\{(p_1^{(k)},\mu ^{(k)},\phi _1^{(k)},\phi _2^{(k)})\}_{k\in \mathbb {N}}\) of estimates, which is continued until convergence. Each EM iteration does not decrease the likelihood (Dempster et al. 1977), and under regularity conditions the sequence converges to a local maximum. Let \(w_{ij}\) be the conditional probability that \(x_i\) arises from the jth mixture component, with density \(f_j(\cdot |\mu ,\phi _j)\), given the sample data \(\textbf{x}\). By Bayes' rule, for each i and j,

$$w_{ij}=\frac{p_jf_j(x_i;\varTheta _j)}{p_1f_1(x_i;\varTheta _1)+p_2f_2(x_i;\varTheta _2)},\qquad p_2=1-p_1.$$

We describe the kth iteration of the E-step and M-step of the EM algorithm.

E-step: For \(i=1,2,\dots ,n\) and \(j=1,2\), evaluate

M-step: Replace \(y_{ij}\) by \(w_{ij}\) in the complete-data log-likelihood and maximize it with respect to \(\varTheta\).

To find the estimator \((\hat{p}_1,\hat{\mu },\hat{\phi }_1,\hat{\phi }_2)\) of \(\varTheta =({p}_1,{\mu },{\phi }_1,{\phi }_2)\), we choose an initial value \(\varTheta ^{(0)}=({p}_1^{(0)},{\mu }^{(0)},{\phi }_1^{(0)},{\phi }_2^{(0)})\) based on the sample and alternate the E-step and M-step until convergence. The values from the final iteration are taken as the estimates \((\hat{p}_1,\hat{\mu },\hat{\phi }_1,\hat{\phi }_2)\). Using the relation \(\lambda _j=\mu \phi _j,~j=1,2\), we obtain the estimates \((\hat{\lambda }_1,\hat{\lambda }_2)\) of \((\lambda _1,\lambda _2)\). In Sect. 5.2, we consider several parameter combinations for the MTIG distributions and compute the corresponding estimates, which are used as plug-in estimates to obtain the estimated EPC.
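A minimal Python sketch of the EM iteration follows. Because the M-step equations are not reproduced here, we assume an ECM-type M-step (conditional maximization), which is a swapped-in variant rather than necessarily the authors' exact update. With the responsibilities \(w_i=w_{i1}\) fixed, \(p_1\) is updated by the average of the \(w_i\). Next, \(\lambda _j=\sum _iw_{ij}/\sum _iw_{ij}d_i\) with \(d_i=(x_i-\mu )^2/(\mu ^2x_i)\) is computed at the current \(\mu\). Then \(\mu =\sum _ia_ix_i/\sum _ia_i\) with \(a_i=\sum _jw_{ij}\lambda _j\) is computed at the updated \(\lambda _j\); this step has a closed form because \((x-\mu )^2/(\mu ^2x)\) is quadratic in \(1/\mu\). Finally, \(\phi _j=\lambda _j/\mu\). Each ECM iteration cannot decrease the observed-data likelihood, so the recorded log-likelihood should be nondecreasing. The stopping rule follows Sect. 5.2 (successive estimates differing by at most \(10^{-3}\)). Function names are hypothetical.

```python
import math


def log_f(x, mu, phi):
    """Log of the reparametrized IG density with lambda = mu * phi."""
    return 0.5 * math.log(mu * phi / (2.0 * math.pi * x ** 3)) - phi * (x - mu) ** 2 / (2.0 * mu * x)


def _log_terms(xi, p1, mu, phi1, phi2):
    return math.log(p1) + log_f(xi, mu, phi1), math.log(1.0 - p1) + log_f(xi, mu, phi2)


def loglik(x, p1, mu, phi1, phi2):
    """Observed-data log-likelihood of the MTIG model (6), via log-sum-exp."""
    total = 0.0
    for xi in x:
        a, b = _log_terms(xi, p1, mu, phi1, phi2)
        m = max(a, b)
        total += m + math.log(math.exp(a - m) + math.exp(b - m))
    return total


def em_mtig(x, p1, mu, phi1, phi2, tol=1e-3, max_iter=1000):
    n = len(x)
    history = [loglik(x, p1, mu, phi1, phi2)]
    for _ in range(max_iter):
        # E-step: w_i = P(x_i comes from component 1 | x_i, current estimates)
        w = []
        for xi in x:
            a, b = _log_terms(xi, p1, mu, phi1, phi2)
            m = max(a, b)
            w.append(math.exp(a - m) / (math.exp(a - m) + math.exp(b - m)))
        # CM-step 1: p1 and lambda_j with mu held fixed
        w1 = sum(w)
        p1_new = w1 / n
        d = [(xi - mu) ** 2 / (mu ** 2 * xi) for xi in x]
        lam1 = w1 / sum(wi * di for wi, di in zip(w, d))
        lam2 = (n - w1) / sum((1.0 - wi) * di for wi, di in zip(w, d))
        # CM-step 2: mu with lambda_j held fixed (closed form)
        a_i = [wi * lam1 + (1.0 - wi) * lam2 for wi in w]
        mu_new = sum(ai * xi for ai, xi in zip(a_i, x)) / sum(a_i)
        new = (p1_new, mu_new, lam1 / mu_new, lam2 / mu_new)
        change = max(abs(u - v) for u, v in zip(new, (p1, mu, phi1, phi2)))
        p1, mu, phi1, phi2 = new
        history.append(loglik(x, p1, mu, phi1, phi2))
        if change <= tol:
            break
    return (p1, mu, phi1, phi2), history
```

The returned `history` makes it easy to confirm the monotone increase of the log-likelihood, and \(\hat{\lambda }_j\) follows from \(\hat{\mu }\hat{\phi }_j\).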

5 Numerical results

5.1 Interval estimation of conditional ERC

In this section, we perform a detailed simulation study to compute CIs of the conditional ERC for classifying into one of two independent IG distributions having an equal mean but different \(\lambda _i\)s. We generate random samples of sizes \(n_1\) and \(n_2\) from the populations \(\varPi _1\) and \(\varPi _2\), respectively. Using the methods of Sect. 4.1, we calculate the CIs for sample sizes \((n_1,n_2)=(5,5),(10,10),(20,20)\). The average upper limits (AUL) and average lower limits (ALL) of the CIs are computed using Monte Carlo simulations with 20,000 replications, and the expected length of a CI is the difference between its AUL and ALL. Since the ERC and the corresponding CI depend only on the ratio \(\lambda _1/\lambda _2\) and not on the mean parameter, we calculate the CIs for \(\lambda _1/\lambda _2\) ranging from 0.1 to 0.9. The CIs obtained by the different methods are compared in terms of average lengths and CPs.

Figures 1, 2 and 3 show that the generalized variable-based CI attains better CPs than the other CIs. For \((n_1,n_2)=(5,5),\) the CI based on the symmetric method marginally dominates the generalized interval in terms of CP when \(\lambda _1/\lambda _2>0.3\). As the sample size increases, the generalized variable approach outperforms the other methods in terms of CP. However, its average length is larger than those of the other methods. For all methods, the length of the CI decreases as the sample size increases. The bias-corrected percentile and Abcp methods perform poorly in terms of CP, although their intervals become shorter than the other CIs as the ratio \(\lambda _1/\lambda _2\) approaches one.

Tables 1 and 2 report the estimated ERC for three populations; they suggest that the symmetric and percentile methods perform better than the other methods. We have also analyzed the computational time required to derive CIs for error rates. Table 3 presents the execution time, in seconds, required by the bootstrap method, the jackknife method, and the generalized variable approach to compute CIs for error rates across various sample sizes; each entry reflects the time for a single iteration. The bootstrap method requires more computational time than the other two methods, whereas the jackknife method requires the least computational time across all parameter combinations and sample sizes. Although the computational time of all three methods is reasonable, the generalized variable approach balances efficiency and performance: it takes slightly more time than the jackknife method but delivers comparable or even better CPs than the bootstrap method.

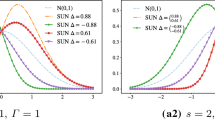

Next, Algorithm 2 is used to compute 90% and 95% credible intervals for the error rate, with N\((\theta _1^{(j)}, 1)\) as the proposal density and sample sizes \((n_1, n_2) = (20, 20), (25, 25)\). Credible intervals are calculated for \(\lambda _1/\lambda _2\) ranging from 0.1 to 0.9. Since the error rate does not depend on \(\mu\), we set \(\mu =1\) in the simulation. Monte Carlo estimates are based on 20,000 replications with \(M=1000\).

Figures 4 and 5 show the error rates and the corresponding credible interval bands. The average length of the credible intervals is the smallest among all intervals considered in this study. As the sample sizes increase, the average interval length decreases while the CP increases. As the ratio \(\lambda _1/\lambda _2\) approaches one, both the CPs and the average lengths increase. The CPs of the credible intervals are nearly equal to those obtained from the other methods; their distinctive advantage is their shorter length. However, this method may perform poorly for small samples. When the sample size from each population exceeds twenty, we recommend the HPD credible interval for estimating the ERC.

5.2 Estimates of the parameters using EM algorithm

As discussed in Sect. 4.2, we use the EM algorithm to find the MLEs of \(\varTheta =({p}_1,{\mu },{\phi }_1,{\phi }_2)\) for different values of the parameters \(p_1 (=0.1,0.3), \lambda _1 (=0.3,0.7), \lambda _2 (=1,3)\). Since the exact value of \(\mu\) does not affect the estimates of the other parameters, we set \(\mu =5\) throughout the simulation study. For each parameter combination, we consider samples of sizes \(n=40,50,60,70,80,90,100\) to find the estimates of \(\varTheta\). The algorithm is iterated until the difference between two successive iterates is \(\le 10^{-3}\). For every parameter combination, 5000 replications are used, and the average of the estimates is taken as the estimated value. Next, 100 new samples are generated from the MTIG distribution. Using the estimates as plug-in values in the classification function, we obtain the probability of correct classification. Repeating this procedure 100 times and averaging gives the EPC values. The following observations are made from Table 4.
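The sketch below shows one way to run the EM iterations for a two-component IG mixture with a common mean. It assumes the parametrization \((p_1, \mu , \lambda _1, \lambda _2)\) rather than the paper's \((\phi _1, \phi _2)\), and it is not the implementation from Sect. 4.2. Each iteration has one E-step and three closed-form conditional maximizations (an ECM variant), so the observed log-likelihood should never decrease:

* \(p_1\) is the average membership weight.
* \(\mu\) given the \(\lambda _k\) is a \(\lambda\)-weighted mean.
* \(\lambda _k\) given \(\mu\) is \(n_k/\sum _i w_{ik}(x_i-\mu )^2/(\mu ^2x_i)\).

The starting values and the simulated data, with parameter values \((0.3, 5, 3, 60)\), are illustrative only.

```python
import numpy as np


def ig_logpdf(x, mu, lam):
    """Log density of IG(mu, lam): sqrt(lam / (2 pi x^3)) exp(-lam (x - mu)^2 / (2 mu^2 x))."""
    return 0.5 * np.log(lam / (2.0 * np.pi * x**3)) - lam * (x - mu) ** 2 / (2.0 * mu**2 * x)


def em_common_mean_ig(x, p=0.5, tol=1e-3, max_iter=1000):
    """ECM sketch for p*IG(mu, lam1) + (1 - p)*IG(mu, lam2) with a common mean mu."""
    x = np.asarray(x, dtype=float)
    mu = x.mean()
    lam_single = 1.0 / np.mean(1.0 / x - 1.0 / mu)  # single-IG MLE of lambda
    lam1, lam2 = 0.5 * lam_single, 2.0 * lam_single  # crude, asymmetric start
    theta = np.array([p, mu, lam1, lam2])
    loglik = []
    for _ in range(max_iter):
        # E-step: posterior membership probabilities on the log scale.
        l1 = np.log(p) + ig_logpdf(x, mu, lam1)
        l2 = np.log1p(-p) + ig_logpdf(x, mu, lam2)
        log_mix = np.logaddexp(l1, l2)
        loglik.append(float(log_mix.sum()))
        w1 = np.exp(l1 - log_mix)
        w2 = 1.0 - w1
        # CM-step 1: mixing proportion.
        p = w1.mean()
        # CM-step 2: common mean given the current lambdas (closed form).
        a = lam1 * w1 + lam2 * w2
        mu = np.sum(a * x) / np.sum(a)
        # CM-step 3: lambdas given the updated mean (closed form).
        t = (x - mu) ** 2 / (mu**2 * x)
        lam1 = w1.sum() / np.sum(w1 * t)
        lam2 = w2.sum() / np.sum(w2 * t)
        new_theta = np.array([p, mu, lam1, lam2])
        converged = np.max(np.abs(new_theta - theta)) <= tol  # stopping rule 10^-3
        theta = new_theta
        if converged:
            break
    return theta, loglik


rng = np.random.default_rng(0)
n = 2000
is_first = rng.uniform(size=n) < 0.3
# numpy's wald(mean, scale) draws from IG(mean, scale).
x = np.where(is_first, rng.wald(5.0, 3.0, n), rng.wald(5.0, 60.0, n))
theta_hat, ll = em_common_mean_ig(x)
print(theta_hat)
```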

As the sample size increases, the EPC increases in every case. For every parameter combination, the EPC values for \(p_1=0.1\) are higher than those for \(p_1=0.3\). The MSEs of the estimators of the parameters decrease as the sample size increases. The EPC is higher when the difference between \(\lambda _1\) and \(\lambda _2\) is larger.
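The EPC computation described above can be sketched as follows. The plug-in rule assumed here assigns \(x\) to component 1 when \(\hat{p}_1 f(x;\hat{\mu },\hat{\lambda }_1)\ge (1-\hat{p}_1) f(x;\hat{\mu },\hat{\lambda }_2)\). The EPC is then the average proportion of correctly classified new observations over repeated samples. The function names, the new-sample size and the parameter tuples are hypothetical. In practice, `est` would come from the EM fit.

```python
import numpy as np


def ig_logpdf(x, mu, lam):
    """Log density of IG(mu, lam)."""
    return 0.5 * np.log(lam / (2.0 * np.pi * x**3)) - lam * (x - mu) ** 2 / (2.0 * mu**2 * x)


def classify(x, p, mu, lam1, lam2):
    """Plug-in rule: label 1 if p f(x; mu, lam1) >= (1 - p) f(x; mu, lam2), else 2."""
    x = np.asarray(x, dtype=float)
    score1 = np.log(p) + ig_logpdf(x, mu, lam1)
    score2 = np.log1p(-p) + ig_logpdf(x, mu, lam2)
    return np.where(score1 >= score2, 1, 2)


def simulate_mixture(n, p, mu, lam1, lam2, rng):
    """Labeled draws from p IG(mu, lam1) + (1 - p) IG(mu, lam2)."""
    labels = np.where(rng.uniform(size=n) < p, 1, 2)
    x = np.where(labels == 1, rng.wald(mu, lam1, n), rng.wald(mu, lam2, n))
    return x, labels


def expected_prob_correct(est, truth, n_new=100, n_rep=100, seed=None):
    """Average proportion of correctly classified new observations.

    est, truth: tuples (p, mu, lam1, lam2) of plug-in estimates and true values.
    """
    rng = np.random.default_rng(seed)
    rates = []
    for _ in range(n_rep):
        x, labels = simulate_mixture(n_new, *truth, rng)
        rates.append(np.mean(classify(x, *est) == labels))
    return float(np.mean(rates))


epc = expected_prob_correct((0.3, 5.0, 3.0, 60.0), (0.3, 5.0, 3.0, 60.0), seed=0)
print(epc)
```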

5.3 Illustrative examples

We consider the following examples as applications of the proposed model. We use the goft package (González-Estrada and Villaseñor 2017) in R to fit the IG distribution to the data. To check the equality of the means, we use the generalized p-value test proposed by Shi and Lv (2012). The usefulness of the IG distribution can be illustrated by its application to positively skewed real-world data. The gamma distribution is commonly used for such data, but the IG distribution is an alternative. For example, Watson and Smith (1985) studied the breaking strengths of single carbon fibers of various lengths. Consider the data set on the breaking strength of 10 mm long single carbon fibers. The \(R^2\) value is \(98.68\%\) when the data are fitted with the gamma distribution and \(98.75\%\) when the IG distribution is used. Hence, both the gamma and the IG distributions fit this data set well (see Fig. 6).

Example 1

Feigl and Zelen (1965) recorded the white blood cell (WBC) counts of 33 patients. The patients were divided into two groups, AG positive and AG negative, according to whether Auer rods and/or significant granulature of the leukemic cells were present in the bone marrow at the time of diagnosis. They found that the survival probability depends on the WBC count for the AG positive group but not for the AG negative group. The WBC counts for both groups, divided by 1000, are given below.

AG positive: 2.3, 0.75, 4.3, 2.6, 6, 10.5, 10, 17, 5.4, 7, 9.4, 32, 35, 100, 100, 52, 100; AG negative: 4.4, 3, 4, 1.5, 9, 5.3, 10, 19, 27, 28, 31, 26, 21, 79, 100, 100.

The goodness-of-fit tests for the IG distribution give p-values of 0.6058 and 0.5962 for the two groups, respectively. The null hypothesis is therefore not rejected, and the IG distribution is an adequate model for each patient group. The p-value of the test for equality of the two group means is 0.995. We therefore do not reject the null hypothesis and treat the group means as equal. Fig. 7 shows the density and CDF plots of the empirical and fitted IG distributions. Within the IG framework, the scale-like parameter \(\lambda\) reflects the variability of the WBC counts. Since \(\mathrm{Var}(X)=\mu ^3/\lambda\), a higher \(\lambda\) value indicates a narrower spread of WBC counts within a group. The ratio of the \(\lambda _i\)s of the two groups therefore indicates the relative variability of the WBC counts between the groups. Table 5 presents CIs of the conditional ERC for classification into either of the two groups, computed with the proposed methods.
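The sketch below shows how point estimates of \(\mu\), \(\lambda _1\), \(\lambda _2\) and \(\lambda _1/\lambda _2\) under the common-mean restriction could be obtained for the WBC data listed above. It alternates the closed-form updates: \(\lambda _k\) given \(\mu\), then \(\mu\) as a \(\lambda\)-weighted mean of the group means. This is a plain maximum likelihood sketch, not one of the interval methods behind Table 5. The function name is hypothetical. No numerical results are quoted here because the text does not report them.

```python
import numpy as np

# WBC counts (divided by 1000) for the two groups, as listed above.
ag_pos = np.array([2.3, 0.75, 4.3, 2.6, 6, 10.5, 10, 17, 5.4, 7, 9.4, 32, 35, 100, 100, 52, 100])
ag_neg = np.array([4.4, 3, 4, 1.5, 9, 5.3, 10, 19, 27, 28, 31, 26, 21, 79, 100, 100])


def common_mean_ig_mle(samples, tol=1e-10, max_iter=1000):
    """Coordinate-ascent MLE of (mu, lam_1, ..., lam_k) for IG samples sharing mean mu."""
    samples = [np.asarray(s, dtype=float) for s in samples]
    n = np.array([s.size for s in samples], dtype=float)
    xbar = np.array([s.mean() for s in samples])

    def lambdas(mu):
        # lambda_k maximizes the likelihood for fixed mu.
        return n / np.array([np.sum((s - mu) ** 2 / (mu**2 * s)) for s in samples])

    mu = np.sum(n * xbar) / n.sum()  # start at the pooled sample mean
    for _ in range(max_iter):
        lam = lambdas(mu)
        # mu maximizes the likelihood for fixed lambdas: a lambda-weighted mean.
        new_mu = np.sum(lam * n * xbar) / np.sum(lam * n)
        converged = abs(new_mu - mu) < tol
        mu = new_mu
        if converged:
            break
    return mu, lambdas(mu)


mu_hat, lam_hat = common_mean_ig_mle([ag_pos, ag_neg])
print(mu_hat, lam_hat, lam_hat[0] / lam_hat[1])  # common mean, lambdas, ratio
```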

Example 2

Shapiro et al. (1987) recorded the numbers of \(T_4\) cells (per \(\text {mm}^3\)) in the blood of 40 patients. Of these, 20 had Hodgkin’s disease and the remaining 20 did not. The \(T_4\) cell counts for both groups are available in Krishnamoorthy and Tian (2008). Chhikara and Folks (1989) showed that the data sets for both groups follow the IG distribution. We use these two data sets to study the two-class classification problem in which each class density is IG. First, the model assumptions are checked. The goodness-of-fit p-values for the IG distribution are 0.6494 and 0.4099 for the two groups, respectively, and the p-value of the test for equality of the means is 0.058. Thus, at the 5% level, both groups can be modeled by IG distributions with an equal mean. The sample means of the groups are 0.8232 and 0.5221, respectively, and \((s_1,s_2)=(0.7105, 0.8663)\). Figure 8 shows the density and CDF plots of the empirical and fitted IG distributions for the data sets. Table 6 gives the CIs of the conditional ERC computed using the proposed methods. The 90% and 95% credible intervals for the error rate are (0.3942, 0.5639) and (0.3804, 0.5775), respectively.

Example 3

Balakrishnan et al. (2009) discussed several aspects of the MIG distribution for fitting positively skewed data and analyzed data sets from actuarial science, engineering, and toxicology. As part of an environmental air pollution data set, hourly SO\(_2\) concentrations (in ppm) are recorded at a monitoring station in Santiago. The frequency of each value is given in the respective parentheses. The mean, median, and mode of the data set are 2.9261, 2, and 2, respectively. The figure and the relation mean \(>\) median \(=\) mode suggest that the data follow a positively skewed distribution. The \(R^2\) value is \(88.67\%\) for the fitted IG distribution and \(89.37\%\) for the fitted MIG distribution with a common mean. The ig_test function of the goft package in R indicates that a single IG distribution does not fit the data well, since the p-value is nearly zero. The estimates of the parameters of the IG distribution are \(\hat{\mu }=2.9261,\hat{\lambda }=9.0213\). For the MIG distribution with an equal mean, the EM estimates of the parameters are \(\hat{p}=0.5638,\hat{\mu }=2.8465,\hat{\lambda }_1=6.1731\) and \(\hat{\lambda }_2=22.0625\). Figure 9 shows that a mixture of IG distributions with an equal mean fits the data better than a single IG distribution.

6 Conclusions

We have studied the estimation of functions of the parameters of two IG populations having an equal mean. We have derived CIs of the conditional ERC using the bootstrap, jackknife, and generalized variable approaches. The CI based on the generalized variable estimator performs better than the other intervals in terms of CP. A noninformative probability matching prior is used to obtain HPD credible intervals for the conditional error rate. Credible intervals are recommended for estimating the error rate, since they tend to be shorter than the other CIs while attaining comparable CP. Using the EM algorithm, we have derived estimators of the parameters of a mixture of IG distributions, and these estimators are used to compute the EPCs. For illustration, two data sets are used to compute CIs of the conditional ERC. A third data set shows that a mixture of IG distributions with an equal mean can fit better than a single IG distribution. Model-based classification methods based on mixtures of Gaussian distributions are useful for various practical problems. As an extension, the multivariate normal inverse Gaussian distribution could be used for model-based classification. The R code is available from the authors upon request.

References

Ahmad M, Chaubey Y, Sinha B (1991) Estimation of a common mean of several univariate inverse Gaussian populations. Ann Inst Stat Math 43(2):357–367

Amoh R (1985) Estimation of a discriminant function from a mixture of two inverse Gaussian distributions when sample size is small. J Stat Comput Simul 20(4):275–286

Anderson T (2003) An introduction to multivariate statistical analysis, 3rd edn. Wiley, New York

Balakrishnan N, Leiva V, Sanhueza A, Cabrera E (2009) Mixture inverse Gaussian distributions and its transformations, moments and applications. Statistics 43(1):91–104

Batsidis A, Zografos K (2006) Discrimination of observations into one of two elliptic populations based on monotone training samples. Metrika 64(2):221–241

Berger JO, Bernardo JM (1989) Estimating a product of means: Bayesian analysis with reference priors. J Am Stat Assoc 84(405):200–207

Bernardo JM (1979) Reference posterior distributions for Bayesian inference. J R Stat Soc Ser B Stat Methodol 41(2):113–128

Chen M-H, Shao Q-M (1999) Monte Carlo estimation of Bayesian credible and HPD intervals. J Comput Graph Stat 8(1):69–92

Chhikara R, Folks J (1989) The inverse Gaussian distribution: theory, methodology and application. Marcel Dekker, New York

Chung H-C, Han C-P (2009) Conditional confidence intervals for classification error rate. Comput Stat Data Anal 53(12):4358–4369

Conde D, Fernández M, Salvador B (2005) A classification rule for ordered exponential populations. J Stat Plann Inference 135(2):339–356

Cox DR, Reid N (1987) Parameter orthogonality and approximate conditional inference. J R Stat Soc Ser B Stat Methodol 49(1):1–18

Datta GS, Ghosh M (1995) Some remarks on noninformative priors. J Am Stat Assoc 90(432):1357–1363

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B Stat Methodol 39(1):1–22

Feigl P, Zelen M (1965) Estimation of exponential survival probabilities with concomitant information. Biometrics 21(4):826

González-Estrada E, Villaseñor JA (2017) An R package for testing goodness of fit: goft. J Stat Comput Simul 88(4):726–751

Gupta RC, Akman HO (1995) Bayes estimation in a mixture inverse Gaussian model. Ann Inst Stat Math 47(3):493–503

Jana N, Chakraborty A (2023) Estimating error rate of classification into several normal populations under equal mean restriction. Commun Stat Simul Comput. https://doi.org/10.1080/03610918.2023.2240549

Jana N, Kumar S (2019) Ordered classification rules for inverse Gaussian populations with unknown parameters. J Stat Comput Simul 89(14):2597–2620

Karlis D (2002) An EM type algorithm for maximum likelihood estimation of the normal–inverse Gaussian distribution. Stat Probab Lett 57(1):43–52

Kim DH, Kang SG, Lee WD (2006) Noninformative priors for linear combinations of the normal means. Stat Pap 47(2):249–262

Krishnamoorthy K, Tian L (2008) Inferences on the difference and ratio of the means of two inverse Gaussian distributions. J Stat Plann Inference 138(7):2082–2089

Lehmann E, Casella G (2006) Theory of point estimation. Springer, Berlin

Mukerjee R, Reid N (1999) On a property of probability matching priors: matching the alternative coverage probabilities. Biometrika 86(2):333–340

Shapiro CM, Beckmann E, Christiansen N, Bitran JD, Kozloff M, Billings AA, Telfer MC (1987) Immunologic status of patients in remission from Hodgkin’s disease and disseminated malignancies. Am J Med Sci 293(6):366–370

Shi J-H, Lv J-L (2012) A new generalized p-value for testing equality of inverse Gaussian means under heterogeneity. Stat Probab Lett 82(1):96–102

Sindhu TN, Khan HM, Hussain Z, Al-Zahrani B (2018) Bayesian inference from the mixture of half-normal distributions under censoring. J Natl Sci Found 46(4):587–600

Tian L, Wilding GE (2005) Confidence intervals of the ratio of means of two independent inverse Gaussian distributions. J Stat Plann Inference 133(2):381–386

Tibshirani R (1989) Noninformative priors for one parameter of many. Biometrika 76(3):604–608

Watson AS, Smith RL (1985) An examination of statistical theories for fibrous materials in the light of experimental data. J Mater Sci 20(9):3260–3270

Ye R-D, Ma T-F, Wang S-G (2010) Inferences on the common mean of several inverse Gaussian populations. Comput Stat Data Anal 54(4):906–915

Acknowledgements

We thank the editor, associate editor, and reviewers for their constructive suggestions, which considerably improved the manuscript. We are particularly grateful to the editor for suggesting the inclusion of credible intervals. The second author gratefully acknowledges financial support from SERB, Department of Science and Technology, India, under the MATRICS project (No. MTR/2022/000535).

About this article

Cite this article

Chakraborty, A., Jana, N. Bayes estimation of ratio of scale-like parameters for inverse Gaussian distributions and applications to classification. Comput Stat (2024). https://doi.org/10.1007/s00180-024-01554-6

DOI: https://doi.org/10.1007/s00180-024-01554-6