Abstract

This paper is concerned with the estimation problem of a periodic autoregressive model with closed skew-normal innovations. The closed skew-normal (CSN) distribution has some useful properties similar to those of the Gaussian distribution. Maximum likelihood (ML), Maximum a posteriori (MAP) and Bayesian approaches are proposed and compared in order to estimate the model parameters. For the Bayesian approach, the Gibbs sampling algorithm and for computing the ML and MAP estimations, the expectation–maximization algorithms are performed. The simulation studies are then conducted to compare the frequentist average losses of competing estimators and to study the asymptotic properties of the given estimators. The proposed model and methods developed in this paper are also applied to a real time series. The accuracy of the CSN and Gaussian models is compared by cross validation criterion.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and motivation

The main aim of this article is to make inference on the parameters of the periodically correlated (PC) time series with a flexible class of skewed innovations. The PC time series has potential applications in describing many phenomena in different area of sciences and technology (e.g. climatology, hydrology, economics, electrical engineering and signal processing). To indicate a part of many relevant works done on the theory and application of PC time, we cite Gladyshev (1961), Noakes et al. (1985), Osborn and Smith (1989), McLeod (1993), Gardner (1994), Hipel and McLeod (1994), McLeod (1994), Franses and Paap (1994), Franses (1996), Novales and de Frutto (1997), Nematollahi and Soltani (2000), Serpedin et al. (2005), Lund et al. (2006), Hurd and Miamee (2007), Ursu and Turkman (2012), Chaari et al. (2014, 2015 and 2017) and Nematollahi et al. (2017).

Several estimation techniques are available for PAR models, namely the least squares method used by Franses and Paap (2004) for the univariate case and by Lutkepohl (2005) for multivariate case, the method of moments based on Yule–Walker equations and asymptotic properties provided by Pagano (1978), Troutman (1979) and Hipel and McLeod (1994) and the maximum likelihood estimation given by Vecchia (1985a, b). Ursu and Duchesne (2009) have studied the asymptotic distributions of the least squares estimators of the model parameters in periodic Vector-AR models. Lund and Basawa (2000) considered the recursive prediction and likelihood evaluation techniques for periodic autoregressive moving average (PARMA) time series models. The asymptotic properties of parameter estimates for causal and invertible PARMA models are studied by Basawa and Lund (2001).

In recent years, consideration has been given to the non-Gaussian time series model in analysis real data sets. Time series models with non-Gaussian innovations are well-studied in the literature. Li and McLeod (1988) considered the ARMA model with non-Gaussian innovations. Ni and Sun (2003) and Sun and Ni (2004, 2005) used the Bayesian inferences on the estimation parameters of vector autoregressive (VAR) model with multivariate normal and multivariate-t innovations and compared them with ML estimators via frequentist risk. Shao (2006, 2007) applied the ML estimation to mixture periodic autoregressive models with asymmetric or multimodal distributions. Bondon (2009) considered the estimation problem of the autoregressive (AR) model with epsilon-skew-normal innovations by using the method of moments and maximum likelihood estimations. Sharafi and Nematollahi (2016) studied the general AR model with skew normal (SN) innovation introduced by Azzalini (1985). The Maximum a posteriori (MAP) estimation of AR processes based on finite mixtures of scale-mixtures of skew-normal distributions is proposed by Maleki and Arellano-Valle (2017). Maleki et al. (2018) used a Bayesian analysis to AR models with scale mixtures of skew-normal (SMSN) innovations.

The main motivation of this paper is to provide a modification and improvement of the results derived by Manouchehri and Nematollahi (2019), where the estimation problems of the PAR(1) time series with symmetric and asymmetric innovations are discussed. In the asymmetric case, they proposed the multivariate skew-normal as the distribution of the T-dimensional innovations. In this paper, the multivariate closed skew normal (CSN) distribution is proposed, as an alternative innovation to the symmetric ones, to provide improved estimates in the PAR(p) time series.

The stationary ARMA models with multivariate skew-normal distributions introduced by Azzalini and Dalla Valle (1996) and Azzalini and Capitanio (1999) are well studied by Pourahmadi (2007), where the innovations are assumed to be correlated and the predictors are assumed to be nonlinear and heteroscedastic. In this case a limitation for modelling real time series will be occurred; the autocorrelations of the ARMA model do not converge to zero for large lags, unlike their Gaussian ARMA counterparts, as pointed out by Pourahmadi (2007). Interestingly, when the multivariate closed skew-normal distributions introduced by González-Farías et al. (2004) (and re-parametrized and re-generalized by Arellano-Valle and Azzalini (2006)) are used in the ARMA models, the autocorrelations of the ARMA model decay to zero exponentially and the predictors are linear and homoscedastic as in the Gaussian case, when the innovations are considered as a sequence of iid random variables with a univariate distribution in this family, see Pourahmadi (2007) and Bondon (2009) for more details.

The multivariate CSN distribution was first introduced by González-Farías et al. (2004). A \( {\text{p}} \)-dimensional vector \( \varvec{U} \) is said to have a CSN-distribution, in symbol \( \varvec{U} \sim CSN_{p,q} \left( {\varvec{\mu},\varvec{\varSigma},\varvec{\varGamma},\varvec{\nu},\varvec{\varDelta}} \right) \), if its density function is given by

where \( \varvec{\mu}\in {\mathbb{R}}^{p} \), \( \varvec{\upsilon}\in {\mathbb{R}}^{q} \), \( \varvec{\varSigma}\in {\mathbb{R}}^{p \times p} \) and \( \varvec{\varDelta}\in {\mathbb{R}}^{q \times q} \) are both covariance matrices, \( \varvec{\varGamma}\in {\mathbb{R}}^{q \times p} \), \( \varphi_{p} \left( {\cdot ;\varvec{\mu},\varvec{\varSigma}} \right) \) and \( \varPhi_{p} \left( {\cdot ;\varvec{\mu},\varvec{\varSigma}} \right) \) are the density and distribution functions of a p-dimensional normal with the indicated mean vector and covariance matrix. Comprehensive listing of the existing references is presented by Genton (2004) and Azzalini (2005). The multivariate CSN distribution has some useful properties similar to those of the Gaussian distribution. Linear combinations of components CSN random variables are also CSN random variables, thus, the CSN distribution is closed under linear transformations. The sum of two independent CSN variables will also be CSN. The CSN random variables conditional of the components are also CSN. The composition of two independent CSN variables will be also CSN. For more details, see the work of González-Farías et al. (2004). These favorable characteristics of the CSN distribution make them analytically tractable, relatively simple to fit observed data with lack of symmetry but with shape of the empirical distribution like normal distribution.

In this paper, it is shown that application of the multivariate CSN for the distribution of T-dimensional innovation associated with a second order PAR(p) time series of period T, is more appropriate and the obtained results are more accurate as compared to those reported by Manouchehri and Nematollahi (2019). The Maximum likelihood (ML), Maximum a posteriori (MAP) and Bayesian estimates of the model parameters were studied and the technical difficulties which are usually encountered in handling these methods were reported. The MAP estimate can be interpreted as a Bayes estimate when the loss function is not specified. It provides a way to incorporate prior information on the estimation process, and can be regarded as an extension of the ML estimation. The MAP estimation procedures are well proposed in the literature, see e.g. Gauvain and Lee (1994), Tolpin and Wood (2015), White et al. (2015) and Maleki and Arellano-Valle (2017) and references therein.

The outline of the paper is as follows: In Sect. 2, the PAR(p) model with the CSN innovations are introduced and the relation between the PC time series and the stationary vector series is recalled here for completeness. The ML and MAP estimation are computed by the EM algorithms in Sect. 3. In this section, the Bayesian estimates of the parameters are also obtained by the MCMC algorithms. In Sect. 4, the simulation studies are performed to check the validity of the estimation method. The consistency and asymptotic normality of estimators are also discussed. The model is then applied to a real data set in Sect. 5. Finally, a brief discussion is given in the last section.

2 Periodic autoregressive models with closed skew-normal innovations

The zero mean and real second order time series \( X = \left\{ {X_{t} } \right\}_{ - \infty }^{\infty } \) is called a periodic autoregressive of order p (PAR(p)) if

where the innovations \( \left\{ {\varepsilon_{t} } \right\}_{ - \infty }^{\infty } \) are to be assumed independent and closed skew-normally distributed, denoted by \( \varepsilon_{t} \sim CSN_{1,1} \left( {0,\sigma_{t}^{2} ,\alpha_{t} ,0,\Delta_{t} } \right) \), and \( T \) is the smallest integer for which \( \phi_{jt} = \phi_{{j\left( {t + T} \right)}} , \sigma_{t}^{2} = \sigma_{t + T}^{2} , \alpha_{t} = \alpha_{t + T} , j = 1, \ldots ,p \). In our applications, it is supposed that the parameter \( \Delta_{t} \) is a function of the unknown parameter, \( \sigma_{t}^{2}. \)

Gladyshev (1961) showed that \( X \) is periodically correlated time series with period \( T \) if and only if the \( T \)-dimensional vector \( \left( {X_{tT} ,X_{tT + 1} , \ldots ,X_{tT + T - 1} } \right)^{'} \) is stationary in the wide sense. It can be shown that each periodic autoregressive time series \( X \) with period \( T \) is related to a stationary \( T \) dimensional vector autoregressive time series \( \varvec{Y} = \left\{ {\varvec{Y}_{t} = \left( {X_{tT} ,X_{tT + 1} , \ldots ,X_{tT + T - 1} } \right)^{'} , t\text{ } \in {\mathbb{Z}}} \right\} \), (Pagano (1978)). For example, for \( p = 2 \) and \( T = 4 \), the model (2.1) can be written as a vector autoregressive model [VAR(1)] given by

where

In general, each PAR(p) process with period time \( T \) has a VAR(P) model representation, where, \( P = \left[ { \frac{p + T - 1}{T} } \right] \) and [] denotes the integer part (Pagano 1978).

Therefore, assuming the period \(T\) is known, one can consider the following general VAR(P) model

where \( \varvec{\varPhi}_{j} =\varvec{\varPhi}_{0}^{ - 1}\varvec{\varPhi}^{*}_{j} , \;j = 1, \ldots , P \) are unknown \( T \times T \) coefficients matrices, \( \left\{ {\varvec{U}_{t} } \right\}_{ - \infty }^{\infty } \) are independent and closed skew normally distributed from \( CSN_{T,T} \left( {\mathbf{0},\varvec{\varSigma}^{*} ,{\varvec{\Gamma}}^{*} ,\mathbf{0},\varvec{I}_{T} } \right) \). Here, \( \varvec{\varSigma}^{*} =\varvec{\varPhi}_{0}^{ - 1}\varvec{\varSigma}\left( {\varvec{\varPhi}_{0}^{ - 1} } \right)^{'} \), \( \varvec{\varGamma}^{*} =\varvec{\varDelta}^{ - 1/2} \varvec{\alpha \varPhi }_{0} \), \( \varvec{\varSigma}= {\text{diag}}\left( {\sigma_{1}^{2} , \ldots ,\sigma_{T}^{2} } \right) \) and \( \varvec{\alpha}= {\text{diag}}\left( {\alpha_{1} , \ldots ,\alpha_{T} } \right) \) are \( T \times T \) diagonal matrices. It can be shown that \( \varvec{Y}_{t} \left| {\varvec{Y}_{t - 1} , \ldots ,\varvec{Y}_{t - P} } \right. \sim CSN_{T,T} \left( {\mathop \sum \nolimits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} ,\varvec{\varSigma}^{*} ,\varvec{\varGamma}^{*} ,\mathbf{0},\varvec{I}_{T} } \right) \), (González-Farías et al. 2004). In Sect. 3, the ML, MAP and Bayesian approaches were examined to estimate the parameters of the VAR model (2.3) which in turn can be applied to estimate the parameters of PAR model (2.1).

3 Inference method for estimation of parameter

In this section, three technical methods were applied to estimate parameters in the proposed model (2.1).

3.1 ML estimation

The ML estimation of the parameters is the values for which the exact (full) likelihood \( f\left( {\varvec{Y}_{1} , \ldots ,\varvec{Y}_{n} } \right) = f\left( {\varvec{Y}_{1} } \right)f\left( {\varvec{Y}_{2} } \right) \cdots f\left( {\varvec{Y}_{P} } \right)f\left( {\varvec{Y}_{P + 1} , \ldots ,\varvec{Y}_{n} |\varvec{Y}_{1} ,\varvec{Y}_{2} , \ldots ,\varvec{Y}_{P} } \right) \), is maximized. In this section, we apply the conditional likelihood \( f\left( {\varvec{Y}_{P + 1} , \ldots ,\varvec{Y}_{n} |\varvec{Y}_{1} ,\varvec{Y}_{2} , \ldots ,\varvec{Y}_{P} } \right) \) due to the stationarity condition, see Manouchehri and Nematollahi (2019). When the non-normal innovations are found in a general framework and under the stationarity condition, Li and McLeod (1988) have shown that the conditional MLE and the MLE are consistent and have the same limiting normal distribution.

The conditional likelihood function of \( \varvec{\theta}= (\varvec{\varPhi}_{1} , \ldots ,\varvec{\varPhi}_{P} ,\varvec{\varSigma}^{*} ,\varvec{\varGamma}^{*} ) \) provided the observed data matrix \( \varvec{Y} = (Y_{1} , \ldots ,Y_{n} ) \) is

where \( n^{*} = n - P \).

The objective is to find the conditional MLE of θ which requires a high dimensional nonlinear procedure. Instead, the latent structure of the model proposed by Theorem 3.1 was applied for the beneficial EM-based methods.

Theorem 3.1

Let\( \varvec{V}_{t} \sim N_{T} \left( {\mathop \sum \nolimits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} ,\varvec{G}} \right) \)and\( \varvec{W}_{t} \sim N_{T}^{0} \left( {0,\varvec{\varLambda}} \right) \) (\( N_{T}^{0} \)denotes the truncated multivariate normal at0) and\( \varvec{V}_{t} \)is independent of\( \varvec{W}_{t} \)and

where \( \varvec{D} =\varvec{\varSigma}^{*}\varvec{\varGamma}^{*'} {\varvec{\Lambda}}^{ - 1} \) is a full rank matrix, \( \varvec{G} =\varvec{\varSigma}^{*} - \varvec{D\varLambda D^{\prime}} \) and \( {\varvec{\Lambda}} = \varvec{I}_{T} +\varvec{\varGamma}^{*}\varvec{\varSigma}^{*}\varvec{\varGamma}^{*'} \) , then

-

(a)

\( \varvec{ Y}_{t} \sim CSN_{T,T} \left( {\mathop \sum \nolimits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} ,{\varvec{\Sigma}}^{*} ,{\varvec{\Gamma}}^{*} ,\mathbf{0},\varvec{I}_{T} } \right), \)

-

(b)

\( \varvec{ Y}_{t} |\varvec{W}_{t} = \varvec{w}_{t} \sim N_{T} \left( {\mathop \sum \nolimits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} + \varvec{Dw}_{t} ,\varvec{G}} \right), \)

-

(c)

\( \varvec{ W}_{t} |\varvec{Y}_{t} = \varvec{y}_{t} \sim C\left( {\varvec{y}_{t} ,\varvec{\theta}_{1} } \right)N_{T}^{0} \left( {\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right), \)

where\( {\varvec{\Lambda}}^{*} = \left( {\varvec{\varLambda}^{ - 1} + \varvec{D^{\prime}G}^{ - 1} \varvec{D}} \right)^{ - 1} \), \( \varvec{\nu}^{*} = {\varvec{\Lambda}}^{*} \varvec{D^{\prime}G}^{ - 1} \left( {\varvec{y}_{t} - \mathop \sum \nolimits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} } \right) \)and\( C \)is a function of parameters\( \varvec{\theta}_{1} = \left( {\varvec{\varPhi}_{j} ,{\varvec{\Lambda}},\varvec{G},\varvec{D}} \right) \)and observed data\( \varvec{y}_{t} \). Also we have

where

and\( \frac{{\partial \varPhi_{T} \left( {\varvec{s}; -\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right)}}{{\partial \varvec{s}}} \), \( \frac{{\partial^{2} \varPhi_{T} \left( {\varvec{s}; -\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right)}}{{\partial \varvec{s}\partial \varvec{s^{\prime}}}} \)are the first and second derivatives of multivariate normal distribution function, respectively.

The proof is left to “Appendix A”.

It is not possible to compute the high dimensional Eqs. (3.2) and (3.3), analytically, since there is no any analytical solution for \( \varPhi_{T} \left( {.;.,.} \right) \). Thus the numerical approximations is needed to solve these equations. Alternatively, \( E\left( {W_{i} } \right) = \int_{{w_{i} }} {w_{i} p_{i} \left( {w_{i} } \right)dw_{i} } \) can be approximated by a numerical method. For example, a sample of size \( N \), say \( \varvec{x}_{1} ,\varvec{x}_{2} , \ldots ,\varvec{x}_{N} \) can be generated from \( N_{T}^{0} \left( {\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right) \) and so the traditional estimates are given by

Therefore, the conditional log-likelihood function of \( \varvec{\theta}= \left( {\varvec{\varPhi},{\varvec{\Sigma}},\varvec{\alpha}} \right) \) based on the complete data \( \varvec{Y} = \left( {\varvec{Y}_{1} ,..,\varvec{Y}_{n} } \right) \) (observed data) and \( \varvec{W} = \left( {\varvec{W}_{1} ,..,\varvec{W}_{n} } \right) \) (hidden variables or missing data) is given by

And by simplifying the parameters \( \varvec{G} =\varvec{\varPhi}_{0}^{ - 1} {\varvec{\varSigma \varLambda }}^{ - 1}\varvec{\varPhi}_{0}^{{ - 1\varvec{'}}} \), \( \varvec{D} =\varvec{\varPhi}_{0}^{ - 1} {\varvec{\varSigma \Delta }}^{ - 1/2}\varvec{\alpha}{\varvec{\Lambda}}^{ - 1} \) and \( {\varvec{\Lambda}} = \varvec{I}_{T} + {\varvec{\Delta}}^{ - 1} {\varvec{\Sigma}}\varvec{\alpha} \) in (3.5), we have

where

The EM algorithm is a helpful technique for ML estimation in models with hidden variables \( \varvec{W} \) and has several good features such as stability of monotone convergence and simplicity of implementation (Liu and Rubin (1994)). However, if the M-step of this algorithm is not in closed form, EM loses some of its attraction. The ECM algorithm proposed by Meng and Rubin (1993) is a simple modification of EM in which the M(maximization)-step is replaced by a sequence of computationally conditional maximization (CM)-steps.

Here, the ECM algorithm is used for finding ML estimates of parameters. Conditional expectation used in the ECM algorithm is \( Q\left( {\varvec{\theta},\varvec{\theta}^{\left( k \right)} } \right) = {\text{E}}_{{\varvec{\theta}^{\left( k \right)} }} \left[ {Cl\left( {\varvec{\theta}|\varvec{Y},\varvec{W}} \right) |\varvec{Y}} \right] \), where, \( \varvec{\theta}^{\left( k \right)} = \left( {\varvec{\varPhi}^{\left( k \right)} ,\varvec{\alpha}^{\left( k \right)} ,{\varvec{\Sigma}}^{\left( k \right)} ,\varvec{\varPhi}_{0}^{\left( k \right)} } \right) \) is the estimated value of \( \varvec{\theta } \) in the \( k \)-th step of algorithm as follows:

E-step Calculate \( \varvec{M}^{\left( k \right)} \) and \( \varvec{R}^{\left( k \right)} \) obtained from conditional expectations of \( N_{q}^{0} \left( {\varvec{\nu}^{ *} ,{\varvec{\Lambda}}^{ *} } \right) \), given by (3.4),

So in the E-step of the algorithm, we have

-

CM-steps

Updating of parameters in the CM-steps evidently, will be done in the following parts:

-

CM-step 1 Update \( \varvec{\varPhi}^{\left( k \right)} \) by maximizing (3.8) over \( \varvec{\varPhi} \) which gives

$$ \varvec{\varPhi}^{{\left( {k + 1} \right)}} = \left( {\varvec{Z}_{ - P}^{'} \varvec{Z}_{ - P} } \right)^{ - 1} \left[ {\varvec{Z}_{ - P}^{'} \varvec{Y}_{ - P} - \varvec{Z}_{ - P}^{'} \varvec{M}^{\left( k \right)} \left( {\varvec{\varPhi}_{0}^{{\left( k \right)^{ - 1} }}\varvec{\varDelta}^{{\left( k \right)^{{ - \frac{1}{2}}} }}\varvec{\varSigma}^{\left( k \right)}\varvec{\alpha}^{\left( k \right)}\varvec{\varLambda}^{{\left( k \right)^{ - 1} }} } \right)^{'} } \right]. $$(3.9) -

CM-step 2 Update \( \varvec{\varSigma}^{\left( k \right)} \) by maximizing (3.8) over \( \varvec{\varSigma} \) by solve the following equation

$$ \begin{aligned} &\varvec{\varSigma}^{{\left( {k + 1} \right)}} - \frac{{{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}} } \right]}}{{n^{*} }} \\ & \quad +\varvec{\varSigma}^{{2\left( {k + 1} \right)}} \left( {\frac{{\delta\varvec{\varDelta}^{ - 1} }}{{\delta\varvec{\varSigma}}}} \right)^{{\left( {k + 1} \right)}} \\ & \quad \times\frac{{{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}}\varvec{\alpha}^{\left( k \right)2} } \right]}}{{n^{*} }} \\ & \quad +\varvec{\varSigma}^{{2\left( {k + 1} \right)}} \left( {\frac{{\delta\varvec{\varDelta}^{{ - \frac{1}{2}}} }}{{\delta\varvec{\varSigma}}}} \right)^{{\left( {k + 1} \right)}} \frac{{{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \varvec{M}^{\left( k \right)}\varvec{\alpha}^{\left( k \right)} } \right]}}{{n^{*} }} = 0. \\ \end{aligned} $$(3.10) -

CM-step 3 Update \( \varvec{\alpha}^{\left( k \right)} \) by maximizing (3.8) over \( \varvec{\alpha} \) which gives

$$ \begin{aligned}\varvec{\alpha}^{{\left( {k + 1} \right)}} & = \left\{ {{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}}\varvec{\varDelta}^{{\left( {k + 1} \right)^{ - 1} }} } \right]} \right\}^{ - 1} \\ & \quad {\text{diag}}\left[ {\varvec{\varDelta}^{{\left( {k + 1} \right) ^{- \frac{1}{2}}}}\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \varvec{M}^{\left( k \right)} } \right]. \\ \end{aligned} $$(3.11)

The simple starting values of the parameters \( \varvec{\theta } \) can be the diagonal positive matrix (identity matrix) for \( \varvec{\varSigma} \) and \( \varvec{\alpha} \) and also \( \left( {\varvec{Z}_{ - P}^{'} \varvec{Z}_{ - P} } \right)^{ - 1} \varvec{Z}_{ - P}^{'} \varvec{Y}_{ - P } \) for \( \varvec{\varPhi} \). The E- and CM-steps are alternated repeatedly until a suitable convergence rule is satisfied, for example \( \left| {Cl\left( {\varvec{\theta}^{{\left( {k + 1} \right)}} |\varvec{Y}} \right)/Cl\left( {\varvec{\theta}^{\left( k \right)} |\varvec{Y}} \right) - 1} \right| \le {\text{tolerance}} \). The value considered here is \( 10^{ - 3} \), but the choice of tolerance may vary with different users.

3.2 Bayesian estimation

The Bayesian analysis is implemented here for the proposed model. So, we need to consider prior distribution for all the unknown parameters \( \varvec{\theta}= \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \). Since no any prior information is available from historical data or from previous experiment, we choose noninformative prior distributions for the parameters. We also suppose that the prior distributions of parameters are independent. The prior for \( \varvec{\varPhi} \) is assumed to be the constant prior \( \left( {\pi_{C} \left(\varvec{\varPhi}\right) \propto 1} \right) \) and the Jeffreys prior and RATS (Regression Analysis of Time Series) prior (a modified version of the Jeffreys prior, a software package popular among macroeconomists) are chosen for \( \varvec{\varSigma} \) given by \( \pi_{J} \left(\varvec{\varSigma}\right) \propto \left|\varvec{\varSigma}\right|^{{ - \frac{T + 1}{2}}} \) and \( \pi_{A} \left(\varvec{\varSigma}\right) \propto \left|\varvec{\varSigma}\right|^{{ - \left( {T + 1} \right)}} \), respectively, See Sun and Ni (2004, 2005). Similar to the approximate Jeffreys priors for \( \alpha \) in the univariate case, which is \( t\left( {0,\frac{{\pi^{2} }}{4},\frac{1}{2}} \right) \) (Bayes and Branco (2007)), we consider its multivariate version given by \( \pi_{J} \left(\varvec{\alpha}\right) = \mathop \prod \nolimits_{j = 1}^{T} \sqrt {\frac{\pi }{2}} \left( {1 + \frac{{2\alpha_{j}^{2} }}{{\frac{{\pi^{2} }}{4}}}} \right)^{{ - \frac{3}{2}}} \).

So, the joint prior distributions of \( \varvec{\theta } \) are

and

where CJJ and CAJ indicate the Constant–Jeffreys–Jeffreys and Constant–RATS–Jeffreys priors.

The full conditional posteriors of \( \left( {\varvec{W},\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \) are as follows

-

(a)

\( \varvec{W}_{t} |\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha},\varvec{Y}_{ - P} \sim C\left( {\varvec{y}_{t} ,\varvec{\theta}_{1} } \right)N_{q}^{0} \left( {\varvec{\nu}^{ *} ,{\varvec{\Lambda}}^{ *} } \right), t = P, \ldots ,n \), where, \( \varvec{\nu}^{ *} \) and \( {\varvec{\Lambda}}^{ *} \) are given by part (c) in Theorem 3.1.

-

(b)

The conditional distribution of \( \varvec{\varPhi} \) given \( \left( {\varvec{\varSigma},\varvec{\alpha},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} } \right) \) is given by

$$\begin{aligned} & \pi (\varvec{\varPhi}| \varvec{\varSigma},\varvec{\alpha},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} ) \propto {\text{etr}}\left\{ { - \frac{1}{2}\left[ {\varvec{\varLambda \varSigma }^{ - 1} \left( {\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} } \right) }\right.}\right.\\ & \quad \left.{\left.{- \left( {\varvec{\varDelta}^{{ - \frac{1}{2}}} (\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} \varvec{W}_{ - P}\varvec{\alpha}+ \varvec{\alpha^{\prime}W}_{ - P}^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} } \right)} \right]} \vphantom{\frac{1}{2}}\right\}. \end{aligned}$$(3.14) -

(c)

The conditional density of \( \varvec{\varSigma} \) given \( \left( {\varvec{\varPhi},\varvec{\alpha},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} } \right) \) for CJJ priors is given by

$$ \begin{aligned} &\pi \left( {\varvec{\varSigma}|\varvec{\varPhi},\varvec{\alpha},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} } \right) \propto \left|\varvec{\varSigma}\right|^{{ - \frac{{n^{*} + p + 1}}{2}}} {\text{etr}}\left\{ { - \frac{1}{2}\left[ {\varvec{\varSigma}^{ - 1} \left( {\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} }\right.}\right.}\right.\\ & \quad \times \left.{\left.{\left.{ \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} } \right) +\varvec{\varDelta}^{ - 1}\varvec{\alpha}^{2} \left( {\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} } \right) }\right.}\right.\\ & \quad\quad \left.{\left.{-\varvec{\varDelta}^{{ - \frac{1}{2}}} \left( {\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} \varvec{W}_{ - P}\varvec{\alpha}+ \varvec{\alpha W}_{ - P}^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} } \right)} \right]} \vphantom{\frac{1}{2}}\right\}. \end{aligned}$$(3.15) -

(d)

The conditional density of \( \varvec{\alpha} \) given \( \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} } \right) \) is given by

$$ \begin{aligned} & \pi \left( {\varvec{\alpha}|\varvec{\varPhi},\varvec{\varSigma},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} } \right) \propto {\text{etr}}\left\{ { - \frac{1}{2}\left[ {\varvec{\alpha}^{2}\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} {\varvec{\Delta}}^{ - 1} }\right.}\right.\\ & \quad \left.{\left.{- {\varvec{\Delta}}^{{ - \frac{1}{2}}} \left( {\varvec{\varPhi}_{0} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)^{'} \varvec{W}_{ - P} + \varvec{W}_{ - P}^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}} \right)\varvec{\varPhi}_{0}^{'} } \right)\varvec{\alpha}} \right]} \vphantom{\frac{1}{2}}\right\}\\ & \quad \times\mathop \prod \limits_{j = 1}^{T} \sqrt {\frac{\pi }{2}} \left( {1 + \frac{{2\alpha_{j}^{2} }}{{\frac{{\pi^{2} }}{4}}}} \right)^{{ - \frac{3}{2}}} . \end{aligned}$$(3.16)

In this study, the Gibbs sampling Markov chain Monte Carlo (MCMC) methods were applied for sample from the posteriors. The conditional posterior density of \( \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \) are not available in closed form. Thus, a MC algorithm was developed for sample \( \varvec{W}_{ - P} \) directly from the posterior distribution and sample from the conditional distribution of \( \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \), adopting a hit-and-run algorithm. For this, the one-to-one transformation \( \varvec{\varSigma}^{*} = { \log }\left(\varvec{\varSigma}\right) \), or \( \varvec{\varSigma}= { \exp }\left( {\varvec{\varSigma}^{*} } \right) \) was considered. It can be shown that the conditional posterior density of \( \varvec{\varSigma}^{*} \) for CJJ prior given \( \left( {\varvec{\alpha},\varvec{\varPhi},{\varvec{\Sigma}},\varvec{Y}_{ - P} ,\varvec{W}_{ - P} } \right) \) is then

where \( \varvec{\varSigma}^{*} = \varvec{OD}^{*} \varvec{O^{\prime}} \), \( \varvec{O} \) is \( T \times T \) orthogonal matrix, and \( \varvec{D}^{*} = {\text{diag}}\left( {d_{1}^{*} , \ldots ,d_{T}^{*} } \right) \), satisfies \( d_{1}^{*} \ge d_{2}^{*} \ge \cdots \ge d_{T}^{*} \). Assume we have a Gibbs sample \( \left( {\varvec{W}_{k} ,\varvec{\varPhi}_{k} ,\varvec{\varSigma}_{k} ,\varvec{\alpha}_{k} } \right) \) from the previous cycle. At cycle \( k + 1 \), we then use our proposed algorithm (CJJ) for sample \( \left( {\varvec{W}_{k + 1} ,\varvec{\varPhi}_{k + 1} ,\varvec{\varSigma}_{k + 1} ,\varvec{\alpha}_{k + 1} } \right) \). Details and steps of the CJJ algorithm can be found in “Appendix B”.

Finally, the Bayes estimators depend on the loss function that is characterized as follows:

-

1.

The following three loss functions for \( {\varvec{\Sigma}} \) were considered

$$ L_{\varvec{\varSigma}}^{\left( 1 \right)} \left( {\hat{\varvec{\varSigma }},\varvec{\varSigma}} \right) = {\text{tr}}\left( {\hat{\varvec{\varSigma }}^{ - 1}\varvec{\varSigma}} \right) - { \log }\left| {\hat{\varvec{\varSigma }}^{ - 1}\varvec{\varSigma}} \right| - T, $$(3.18)$$ L_{\varvec{\varSigma}}^{\left( 2 \right)} \left( {\hat{\varvec{\varSigma }},\varvec{\varSigma}} \right) = {\text{tr}}\left( {\hat{\varvec{\varSigma }}^{ - 1}\varvec{\varSigma}- \varvec{I}} \right)^{2} , $$(3.19)and

$$ L_{\varvec{\varSigma}}^{\left( 3 \right)} \left( {\hat{\varvec{\varSigma }},\varvec{\varSigma}} \right) = {\text{tr}}\left( {\varvec{\hat{\varSigma }\varSigma }^{ - 1} } \right) - { \log }\left| {\varvec{\hat{\varSigma }\varSigma }^{ - 1} } \right| - T. $$(3.20) -

2.

The following two well-known loss function for \( \varvec{\varPhi} \) were also considered

$$ L_{\varvec{\varPhi}}^{\left( 1 \right)} \left( {\hat{\varvec{\varPhi }},\varvec{\varPhi}} \right) = {\text{tr}}\left\{ {\left( {\hat{\varvec{\varPhi }} -\varvec{\varPhi}} \right)^{ '} \varvec{W}\left( {\hat{\varvec{\varPhi }} -\varvec{\varPhi}} \right)} \right\}, $$(3.21)where, \( \varvec{W} \) is a constant weighting matrix, and

$$ L_{\varvec{\varPhi}}^{\left( 2 \right)} \left( {\hat{\varvec{\varPhi }},\varvec{\varPhi}} \right) = \mathop \sum \limits_{i = 1}^{PT} \mathop \sum \limits_{j = 1}^{T} \left[ {{ \exp }\left\{ {a_{ij} \left( { \hat{\phi }_{ij} - \phi_{ij} } \right)} \right\} - a_{ij} \left( { \hat{\phi }_{ij} - \phi_{ij} } \right) - 1} \right], $$(3.22)where, \( a_{ij} \) is a given constant.

-

3.

The most common loss for \( \varvec{\alpha} \) are the quadratic loss

$$ L_{\varvec{\alpha}}^{\left( 1 \right)} \left( {\hat{\varvec{\alpha }},\varvec{\alpha}} \right) = {\text{trace}}\left\{ {\left( {\hat{\varvec{\alpha }} -\varvec{\alpha}} \right)^{ '} \varvec{A}\left( {\hat{\varvec{\alpha }} -\varvec{\alpha}} \right)} \right\}, $$(3.23)where, \( \varvec{A} \) is a constant weighting matrix. If the weighting matrix \( \varvec{A} \) is the identity matrix, then the loss of \( L_{\varvec{\alpha}}^{\left( 1 \right)} \) is simply the sum of squared errors of all elements of \( \varvec{\alpha} \), \( \mathop \sum \nolimits_{i = 1}^{T} \left( {\hat{\alpha }_{i} - \alpha_{i} } \right)^{2} . \)

The Bayesian estimates of \( {\varvec{\Sigma}} \), \( \varvec{ \alpha } \) and \( \varvec{\varPhi} \) under the above loss functions can be derived separately from minimizing expected posterior loss functions regarding \( \left( {\varvec{\varPhi},{\varvec{\Sigma}},\varvec{ \alpha }} \right) \) provided the minimum is finite. In the following well-known facts, the results are summarized.

-

(a)

The generalized Bayesian estimators of \( {\varvec{\Sigma}} \) under loss functions \( L_{\varvec{\varSigma}}^{\left( 1 \right)} \), \( L_{\varvec{\varSigma}}^{\left( 2 \right)} \) and \( L_{\varvec{\varSigma}}^{\left( 3 \right)} \) are given by

$$ \hat{\varvec{\varSigma }}_{1} = {\text{E}}\left( {\varvec{\varSigma}|\varvec{Y}_{ - P} } \right), $$(3.24)$$ {\text{vec}}\left( {\hat{\varvec{\varSigma }}_{2} } \right) = \left[ {{\text{E}}\left\{ {\left( {\varvec{\varSigma}^{ - 1} \otimes\varvec{\varSigma}^{ - 1} } \right) |\varvec{Y}_{ - P} } \right\}} \right]^{ - 1} {\text{vec }}\left\{ {{\text{E}}\left( {\varvec{\varSigma}^{ - 1} |\varvec{Y}_{ - P} } \right)} \right\}, $$(3.25)and

$$ \hat{\varvec{\varSigma }}_{3} = \{ {\text{E}}(\varvec{\varSigma}^{ - 1} |\varvec{Y}_{ - P} )\}^{ - 1} , $$(3.26)respectively.

-

(b)

The generalized Bayesian estimators of \( \varvec{\varPhi} \) under loss functions \( L_{\varvec{\varPhi}}^{\left( 1 \right)} \) and \( L_{\varvec{\varPhi}}^{\left( 2 \right)} \) are given by

$$ \hat{\varvec{\varPhi }}_{1} = {\text{E}}\left( {\varvec{\varPhi}|\varvec{Y}_{ - P} } \right), $$(3.27)and

$$ \hat{\phi }_{ij} = - \frac{1}{{a_{ij} }}\log \left[ {{\text{E}}\left\{ {{ \exp }\left( { - a_{ij} \phi_{ij} } \right) |\varvec{Y}_{ - P} } \right\}} \right], $$(3.28)For \( i = 1, \ldots ,PT \), \( j = 1, \ldots ,T \), where \( \hat{\phi }_{ij} \) is the \( \left( {i,j} \right) \)-th element of the Bayesian estimator \( \hat{\varvec{\varPhi }}_{2} \), respectively.

-

(c)

Under the loss \( L_{\varvec{\alpha}}^{\left( 1 \right)} \), the generalized Bayesian estimator of \( \varvec{\alpha} \) is given by

$$ \hat{\varvec{\alpha }}_{1} = {\text{E}}\left( {\varvec{\alpha}|\varvec{Y}_{ - P} } \right). $$(3.29)

3.3 MAP estimation

In this part, the ECM algorithm was applied to obtain the MAP estimates of the parameters \( \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \). Constant–Jeffreys–Jeffreys and Constant–RATS–Jeffreys priors were used for \( \varvec{\theta}= \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \) given by (3.12) and (3.13), respectively. According to the results obtained in the previous sections, the posterior function based on data to be maximized is \( \pi \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}|\varvec{Y}} \right) \propto CL\left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}|\varvec{Y}} \right)\pi \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \), where \( CL\left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}|\varvec{Y}} \right) \) and \( \pi \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \) are given by (3.6) and (3.12 and 3.13), respectively.

E-step Conditional expectation used in the ECM algorithm for finding MAP estimates of parameters \( \varvec{\theta} \) is

where \( {\text{E}}_{{\varvec{\theta}^{\left( k \right)} }} \left[ {l\left( {\varvec{\theta}|\varvec{Y},\varvec{W}} \right) |\varvec{Y}} \right] \) is given by (3.8).

CM-steps Updating of parameters in the CM-steps obviously, will be done in the following parts:

-

CM-step 1 Update \( \varvec{\varPhi} \) by (3.9)

-

CM-step 2 Update \( \varvec{\varSigma} \) by solving the following equation

$$ \begin{aligned} &\varvec{\varSigma}^{{\left( {k + 1} \right)}} - \frac{{{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}} } \right]}}{{n^{*} + T + 1}} \\ & \quad -\varvec{\varSigma}^{{2\left( {k + 1} \right)}} \left( {\frac{{\delta\varvec{\varDelta}^{ - 1} }}{{\delta\varvec{\varSigma}}}} \right)^{{\left( {k + 1} \right)}} \\ & \quad \quad \times\frac{{{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}}\varvec{\alpha}^{\left( k \right)2} } \right]}}{{n^{*} + T + 1}} \\ & \quad +\varvec{\varSigma}^{{2\left( {k + 1} \right)}} \left( {\frac{{\delta\varvec{\varDelta}^{{ - \frac{1}{2}}} }}{{\delta\varvec{\varSigma}}}} \right)^{{\left( {k + 1} \right)}} \frac{{{\text{diag}}\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \varvec{M}^{\left( k \right)}\varvec{\alpha}^{\left( k \right)} } \right]}}{{n^{*} + T + 1}} = 0. \\ \end{aligned} $$(3.31) -

CM-step 3 Update \( \varvec{\alpha} \) by solving the following equation

$$ \begin{aligned} & \alpha_{j}^{3} - \frac{{\left[ {\varvec{\varDelta}^{{\left( {k + 1} \right) ^{- \frac{1}{2}}}}\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \varvec{M}^{\left( k \right)} } \right]_{j,j} }}{{\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}}\varvec{\varDelta}^{{\left( {k + 1} \right)^{ - 1} }} } \right]_{j,j} }}\alpha_{j}^{2} \\ & \quad + \left\{ {\frac{{\pi^{2} }}{8} + \frac{3}{{\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}}\varvec{\varDelta}^{{\left( {k + 1} \right)^{ - 1} }} } \right]_{j,j} }}} \right\}\alpha_{j} \\ & \quad - \frac{{\pi^{2} \left[ {{\varvec{\Delta}}^{{\left( {k + 1} \right) ^{- \frac{1}{2}}}}\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \varvec{M}^{\left( k \right)} } \right]_{j,j} }}{{8\left[ {\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)^{'} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P}\varvec{\varPhi}^{{\left( {k + 1} \right)}} } \right)\varvec{\varPhi}_{0}^{{\left( {k + 1} \right)'}}\varvec{\varDelta}^{{\left( {k + 1} \right)^{ - 1} }} } \right]_{j,j} }} = 0,\cr& \quad \quad j = 1, \ldots ,T. \\ \end{aligned} $$(3.32)

4 Simulation studies

This section includes the numerical results of two simulation studies for different models. In the first part, performance of the ML, MAP and Bayes estimators in the PAR models with closed skew normal innovation are compared by using the frequentist risks under a loss function \( L \), that is, \( {\text{E}}_{Y|\theta } L\left( {\theta , \hat{\theta }} \right) \) which is estimated by \( \frac{1}{n}\mathop \sum \nolimits_{i = 1}^{n} L\left( {\theta , \hat{\theta }_{i} } \right) \), where \( \hat{\theta }_{i} \) is the estimate \( \theta \) in the \( i \)-th sample \( \left( {i = 1, \ldots ,n} \right) \). In the second part, some numerical results are provided to show the consistency and asymptotic distribution of the estimators.

4.1 Simulation 1

In this part, a PAR(1) model with \( T = 4 \) and closed skew normal innovations for a sample of \( N = 200 \) observations was considered. In the study, it is supposed that \( \Delta_{t} = \sigma_{t}^{2} \). 200 samples were generated from three PAR(1) models with true different parameters shown in Table 1. To compare the ML, MAP and Bayesian estimates under priors and loss functions proposed in Sect. 3, 10,000 MCMC iterations were run after 500 burn-in cycles. The weighting matrix in the first loss function for \( \varvec{\varPhi} \) (3.21) and \( \varvec{\alpha} \) (3.23) is the identity matrix. The parameter \( a \) in the second loss function for \( \varvec{\varPhi} \) (3.22) for all elements is also assumed to be − 3.

Tables 2, 3 and 4 show the frequentist average losses and standard deviations (in parentheses) of \( \varvec{\varSigma} \), \( \varvec{\varPhi} \) and \( \varvec{\alpha} \), respectively, under different loss functions and Constant–Jeffreys–Jeffreys (CJJ) and Constant–RATS–Jeffreys (CAJ) priors for three different considered models. For example, in these tables \( \hat{\varvec{\varSigma }}_{1CJJ} \) represents the estimator of \( \varvec{\varSigma} \) under loss \( L_{\varvec{\varSigma}}^{\left( 1 \right)} \) and Constant–Jeffreys–Jeffreys prior.

Tables 5, 6 and 7 provide the means and standard deviations (in parentheses) of the estimated coefficient, scale and skewness parameters of three different PAR models with skew-normal innovation, respectively, under different loss functions and CJJ or CAJ priors. In these tables, the best results are highlighted in bold and also, the MAP1 and MAP2 denote the maximum a posteriori estimates form CJJ and CAJ priors, respectively.

In general, it is observed that the Bayes estimates provide the best performance with the lowest frequentist average losses as compared to the ML and MAP estimates in all parameters. The estimates of \( \varvec{\varSigma} \), \( \varvec{\varPhi} \) and \( \varvec{\alpha} \) under Constant–Jeffreys–Jeffreys (CJJ) prior also performed better than Constant–RATE–Jeffreys (CAJ) prior in almost all the cases.

According to Table 2, the estimates of \( \varvec{\varSigma} \) under the loss functions \( L_{\varvec{\varSigma}}^{\left( 1 \right)} \) and \( L_{\varvec{\varSigma}}^{\left( 3 \right)} \) and CJJ prior provided the best result. Table 6 also shows that, in most cases, the estimates of the scale parameters under loss functions \( L_{\varvec{\varSigma}}^{\left( 1 \right)} \) and CJJ prior are very close to the true parameters and has the lowest biases.

The frequentist average losses of \( \varvec{\varPhi} \) under CJJ and CAJ priors with loss function \( L_{\varvec{\varPhi}}^{\left( 1 \right)} \) have almost similar performances; however, it seems that the CAJ prior performs better than CJJ prior with LINEX loss function (Table 3). In all the cases and methods, the estimate of the coefficient parameters showed the same bias but the results for the estimate of CAJ prior with \( L_{\varvec{\varPhi}}^{\left( 1 \right)} \) perform were better than that of CJJ prior with LINEX loss (Table 5).

The results shown in Tables 4 and 7, also suggest that the estimates of skewness parameter \( \alpha \) under CAJ prior has lower bias and lower frequentist average losses than CJJ prior.

In summary, the Bayes approach showed the best results for estimation of unknown parameters of the PAR models with closed skew normal innovation in almost all the cases.

4.2 Simulation 2

In this part, we conduct Monte Carlo simulation to evaluate the accuracy and asymptotic properties of the various estimation methods. We generate samples from the CSN PAR(1) model with \( T = 2 \) and true parameters shown in Table 8 for small \( \left( {n = 60} \right) \), moderate \( \left( {n = 100} \right) \) and large \( \left( {n = 140, 200} \right) \) sample sizes. In this study, in addition of ML estimate, we also consider the posterior mean for Bayes estimate and MAP estimate under CJJ and CAJ prior distributions.

We compute the mean square error (MSE) and deficiency (Def) criteria for different sample sizes. The Def criterion is an essential measure for comparing the joint efficiencies of the different methods used for estimating a set of parameters (here, \( \left( {\varvec{\varPhi},\varvec{\varSigma},\varvec{\alpha}} \right) \)). It is defined as the sum of the MSE values of the estimators of the unknown parameters (Gebizlioglu et al. (2011) and is given by

where \( MSE \left( {\hat{\theta }} \right) = Var \left( {\hat{\theta }} \right) + \left( {Bias \left( {\hat{\theta }} \right)} \right)^{2} \).

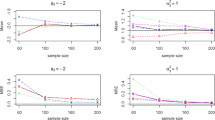

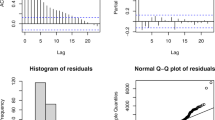

The result based on 200 Monte Carlo runs are provided in Figs. 1, 2, 3, 4 and 5. Figure 1 and 2 show the MSE and Def criteria of the proposed estimates. Figures 3, 4, 5 show the theoretical Gaussian density super-imposed over the histograms of the proposed estimates when \( N = 200 \).

Figure 1 clearly illustrates the consistency properties of the estimators. When N is small, all estimation methods suffer from the bias problem, especially for the scale and skewness parameters, however, the ML and Bayesian estimates has the lowest biased in all the parameters. By increasing the sample size, the MSE of parameters converge to zero, which indicate the consistency of the parameter estimators. For large N, the Bayesian estimator has the lowest bias in all the parameters.

Figure 2 indicates that as the sample size increases, the Def criteria decreases in all methods and the Bayesian estimators show the best performance among the estimators.

Figures 3, 4 and 5 are demonstrating that the histograms match pretty well to the asymptotic normal distribution, especially for coefficients parameters in all method estimations. The results of the Kolmogorov–Smirnov test (not given here for the reason of space limitation) also show that the asymptotic normality of the proposed estimates. The p value of Kolmogorov–Smirnov normality test for all methods are greater than 0.05.

5 Real data analysis

In this section, as an illustrative case study of the proposed estimation methods, we present an analysis of the quarterly United Kingdom macroeconomic variables. We consider the average quarterly Final Consumption Expenditure in the United Kingdom (FCEUK) from January 1, 1988, to December 31, 2017, from the National Statistical Institute of the UK (https://www.ons.gov.uk). This data set consists of 120 data points. The first 104 data points, that is, data from January, 1988 to December, 2013 were used for model building, and the remaining 16 data points, that is, data from January, 2014 to December, 2017, were used for model validation. After removing the linear trend and seasonality by applying two differencing of order 4 and 1 from the original quarterly data, respectively, some diagnostic methods are applied to determine the presence of periodic correlation. The coherent, incoherent and the measure of fitness (MoF) statistics are good tools for detecting the period of periodically correlated processes, see e.g. Broszkiewicz-Suwaj et al. (2004) and Nematollahi et al. (2017) for more details. These statistics take real values in the interval \( \left[ {0,1} \right] \) and due to the symmetry are plotted only in the interval \( \left( {0,N/2} \right) \). Peaks at points \( w_{d} \), \( w_{2d} \), \( w_{3d} \), etc. indicate periodic correlation with the period of length \( T = 1/w_{d} \). Figures 6 and 7 show the descriptive plot of the original time series, Coherent statistic, Incoherent statistic and MoF statistic of differenced data.

The coherent, incoherent and MoF statistics detect a 4-quarter period; peaks appear at frequencies being multiples of \( \frac{1}{4} \). Table 9 provide the result of fitting the PAR model with normal and skew normal innovations (Manouchehri and Nematollahi (2019)) and closed skew normal innovations for quarterly FCEUK data. The root mean square prediction error (RMSPE) value, mean absolute prediction error (MAPE) value, relative mean absolute prediction error (RMAPE) value and also, AIC and BIC for validation of the forecasts for fitted PAR model for the present data are summarized in these tables.

The results of modeling and prediction of the PAR model with the normal, skew-normal and closed skew normal innovations based on the ML, MAP and Bayes estimates which are computed in several proposed methods, are also listed.

Figure 7 shows the plots of the individual elements of one step predicted, that is, \( \hat{X}_{104 + j} , \) for \( j = 1, \ldots ,12 \) in terms of \( X_{1} , \ldots ,X_{104 + 1} , \ldots ,X_{104 + j - 1} \), assuming that the innovations are distributed according to the normal, skew-normal and closed skew normal laws. The figure also includes the 95% prediction intervals of the one step forecasts which are calculated by \( \hat{X}_{104 + 4t + k} \mp c_{1 - \alpha /2} \hat{\sigma }_{\text{k}} \), where, \( c_{1 - \alpha /2} \) is the \( (1 - \alpha /2 \))-th quantile of the normal and skew-normal distributions, and \( t = 0,1,2 \) and \( k = 1,2,3,4 \) and \( \hat{\sigma }_{\text{k}} \) denotes the square root of the variance of one step forecasts.

Results in Table 9 and Fig. 8 suggest that the PAR model with closed skew-normal innovation gives the best results.

6 Concluding remarks

In this paper, it is shown that application of the multivariate CSN for the distribution of T-dimensional innovation associated with a second order PAR(p) time series of period T, is more appropriate with respect to the multivariate SN. The multivariate CSN has some useful properties similar to those of the Gaussian distribution, which make them analytically tractable, relatively simple to fit observed data with lack of symmetry but with shape of the empirical distribution like to normal distribution. The Maximum likelihood, Maximum a posteriori and Bayesian estimates of the model parameters are examined and some technical recommendations are recommended to the users.

References

Arellano-Valle RB, Azzalini A (2006) On the unification of families of skew-normal distributions. J Stat Theory Appl 33(3):561–574

Azzalini A (1985) A class of distribution which includes the normal ones. Scand J Stat 12(2):171–178

Azzalini A (2005) The skew normal distribution and related multivariate families. Scand J Stat 32(2):159–200 (with discussion)

Azzalini A, Capitanio A (1999) Statistical applications of the multivariate skew-normal distribution. J R Stat Soc B 61:579–602

Azzalini A, Dalla Valle A (1996) The multivariate skew-normal distribution. Biometrika 83:715–726

Basawa IV, Lund RB (2001) Large sample properties of parameter estimates for periodic arma models. J Time Ser Anal 22:651–663

Bayes CL, Branco MD (2007) Bayesian inference for the skewness parameter of the scalar skew-normal distribution. Braz J Probab Stat 21:141–163

Bondon P (2009) Estimation of autoregressive models with epsilon-skew-normal innovations. J Multivar Anal 100(8):1761–1776

Broszkiewicz-Suwaj E, Makagon A, Weron R, Wylomanska A (2004) On detecting and modeling periodic correlation in financial data. Physica A 336(1–2):196–205

Chaari F, Leskow J, Napolitano A, Sanchez-Ramirez A (eds) (2014) Cyclostationarity: theory and methods. Lecture notes in mechanical engineering. Springer, Cham

Chaari F, Leskow J, Napolitano A, Zimroz R, Wylomanska A, Dudek A (eds) (2015) Cyclostatioarity: theory and methods II. Applied condition monitoring. Springer, Cham

Chaari F, Leskow J, Napolitano A, Zimroz R, Wylomanska A (eds) (2017) Cyclostationarity: theory and methods III. Applied condition monitoring. Springer, Cham

Franses PH (1996) Periodicity and stochastic trends in economic time series. Oxford University Press, Oxford

Franses PH, Paap R (1994) Model selection in periodic autoregressive. Oxf Bull Econ Stat 56(4):421–439

Franses PH, Paap R (2004) Periodic time series models. Oxford University Press, Oxford

Gardner WA (1994) Cyclostationarity in communications and signal processing. IEEE Press, New York

Gauvain J, Lee C (1994) Maximum a posteriori estimation for multivariate Gaussian mixture observations of markov Chains. IEEE Trans Speech Audio Process 2(2):291–298

Gebizlioglu OL, Senoglu B, Kantar YM (2011) Comparison of certain value-at-risk estimation methods for the two-parameter Weibull loss distribution. J Comput Appl Math 235(11):3304–3314

Genton ME (2004) Skew elliptical distributions and their applications: a journey beyond normality. CRC, London

Gladyshev EG (1961) Periodically correlated random sequences. Sov Math 2:385–388

González-Farías G, Domı́nguez-Molina J, Gupta A (2004) Additive properties of skew normal random vectors. J Stat Plan Inference 126:521–534

Hipel KW, Mcleod AI (1994) Time series modelling of water resources and environmental systems. Elsevier, Amsterdam

Hurd HL, Miamee A (2007) Periodically correlated random sequences: spectral theory and practice. Wiley, Hoboken

Li WK, McLeod AI (1988) ARMA modelling with non-Gaussian innovations. J Time Ser Anal 9(2):155–168

Liu C, Rubin DB (1994) The ECME algorithm: a simple extension of EM and ECM with faster monotone convergence. Biometrika 81:633–648

Lund RB, Basawa IV (2000) Recursive prediction and likelihood evaluation for periodic arma models. J Time Ser Anal 21:75–93

Lund R, Shao Q, Basawa I (2006) Parsimonious periodic time series models. Aust N Z J Stat 48(1):33–47

Lutkepohl H (2005) New introduction to multiple time series analysis. Springer, Berlin

Maleki M, Arellano-Valle RB (2017) Maximum a posteriori estimation of autoregressive processes based on finite mixtures of scale-mixtures of skew-normal distributions. J Stat Comput Simul 87(2):1061–1083

Maleki M, Arellano-Valle RB, Dey DK, Mahmoudi MR, Jalili SMJ (2018) A Bayesian approach to robust skewed autoregressive processes. Calcutta Stat Assoc Bull 69(2):165–182

Manouchehri T, Nematollahi AR (2019) On the estimation problem of periodic autoregressive time series: symmetric and asymmetric innovations. J Stat Comput Simul 89(1):71–97

McLeod AI (1993) Parsimony, model adequacy, and periodic autocorrelation in time series forecasting. Int Stat Rev 61:387–393

Mcleod AI (1994) Diagnostic checking of periodic autoregression models with application. J Time Ser Anal 15(2):221–233

Meng XL, Rubin DB (1993) Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80:267–278

Nematollahi AR, Soltani AR (2000) Discrete time periodically correlated Markov processes. Math Stat 20:127–140

Nematollahi AR, Soltani AR, Mahmoudi MR (2017) Periodically correlated modeling by means of the periodograms asymptotic distributions. Stat Pap 1(1):1–12

Ni S, Sun D (2003) Noninformative priors and frequentist risks of bayesian estimators of vector-autoregressive models. J Econom 115:159–197

Noakes DJ, McLeod AI, Hipel KW (1985) Forecasting monthly river-flow time series. Int J Forecast 1:179–190

Novales A, de Frutto RF (1997) Forecasting with periodic models: a comparison with time invariant coefficient models. Int J Forecast 13:393–405

Osborn D, Smith J (1989) The performance of periodic autoregressive models in forecasting seasonal U.K. consumption. J Bus Econ Stat 7:117–127

Pagano M (1978) On periodic and multiple autoregressions. Ann Stat 6:1310–1317

Pourahmadi M (2007) Skew-normal ARMA models with nonlinear heteroscedastic predictors. Commun Stat Theory Methods 36:1803–1819

Serpedin E, Panduru F, Sarı I, Giannakis GB (2005) Bibliography on cyclostationarity. Signal Process 85(12):2233–2303

Shao Q (2006) Mixture periodic autoregressive time series models. Stat Probab Lett 76(6):609–618

Shao Q (2007) Robust estimation for periodic autoregressive time series. J Time Ser Anal 29:251–263

Sharafi M, Nematollahi AR (2016) AR(1) model with skew-normal innovations. Metrika 79(8):1011–1029

Sun D, Ni S (2004) Bayesian analysis of vector-autoregressive models with noninformative priors. J Stat Plan Inference 121(2):291–309

Sun D, Ni S (2005) Bayesian estimates for vector autoregressive models. J Bus Econ Stat 23(1):105–117

Tolpin D, Wood F (2015) Maximum a posteriori estimation by search in probabilistic programs. In: The 8th annual symposium on combinatorial search will take place in EinGedi, the Dead Sea, Israel, from June 11–13, 2015. The proceedings and workshop technical reports will be published by AAAI Press

Troutman BM (1979) Some results in periodic autoregression. Biometrika 66:219–228

Ursu E, Duchesne P (2009) On modelling and diagnostic checking of vector periodic autoregressive time series models. J Time Ser Anal 30:70–96

Ursu E, Turkman KF (2012) Periodic autoregressive model identification using genetic algorithm. J Time Ser Anal 33:398–405

Vecchia AV (1985a) Periodic autoregressive-moving average (PARMA) modeling with applications to water resources. Water Resour Bull 21:721–730

Vecchia AV (1985b) Maximum likelihood estimation for periodic autoregressive moving average models. Technometrics 27:375–384

White M, Wen J, Bowling M, Schuurmans D (2015) Optimal estimation of multivariate ARMA models. In: Proceedings of the twenty-ninth AAAI conference on artificial intelligence

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Proof of Theorem 3.1

In order to prove of Theorem 3.1, we need some preliminary definitions and properties.

Definition 1

(Truncated multivariate normal). If \( \varvec{W} \sim N_{q} \left( {\varvec{\mu},{\varvec{\Sigma}}} \right) \) and \( \varvec{U} = \left\{ {\begin{array}{*{20}l} \varvec{W} & {if\quad \varvec{W} \ge \varvec{c}} \\ {\mathbf{0}} & {if\quad ~\varvec{W} < \varvec{c}} \\ \end{array} } \right. \) where \( \varvec{W} \ge \varvec{c} \) means \( W_{j} \ge c_{j} , j = 1, \ldots ,q \), then the density function of \( \varvec{U} \) is:

\( \varvec{U} \) is truncated multivariate normal denote by \( \varvec{U} \sim N_{q}^{\varvec{c}} \left( {\varvec{\mu},{\varvec{\Sigma}}} \right) \).

Property 1

If \( \varvec{U} \sim N_{q}^{\varvec{c}} \left( {\varvec{\mu},{\varvec{\Sigma}}} \right) \) then the moment generating function of \( \varvec{U} \) is given by

Property 2

If\( \varvec{Z} \sim CSN_{p,q} \left( {\varvec{\mu},\varvec{\varSigma},\varvec{\varGamma},\varvec{\nu},\varvec{\varDelta}} \right) \), then the moment generative function of\( \varvec{Z} \)is given in González-Farías et al. (2004) as

Proof of Theorem 3.1

The result of part (a) is proved by using the uniqueness property of the moment generating functions. Note that

where \( \varvec{Z} \sim CSN_{T,T} \left( {\mathop \sum \limits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} ,\varvec{\varSigma}^{*} ,\varvec{\varGamma}^{*} ,{\mathbf{0}},\varvec{I}_{\varvec{T}} } \right) \).

(b) It is proved by using the linearity property of the multivariate normal distributions.

(c) It can be proved by the following arguments:

where \( {\varvec{\Lambda}}^{*} = \left( {\varvec{\varLambda}^{ - 1} + \varvec{D^{\prime}G}^{ - 1} \varvec{D}} \right)^{ - 1} \), \( \varvec{\nu}^{*} = {\varvec{\Lambda}}^{*} \varvec{D^{\prime}G}^{ - 1} \left( {\varvec{y}_{t} - \mathop \sum \limits_{j = 1}^{P}\varvec{\varPhi}_{j} \varvec{y}_{t - j} } \right) \), and \( C \) is function of parameters \( \varvec{\theta}_{1} = \left( {\varvec{\varPhi}_{j} ,{\varvec{\Lambda}},\varvec{G},\varvec{D}} \right) \) and observed data \( \varvec{y}_{t} \).

The moment generating function of \( \varvec{W}_{t} |\varvec{Y}_{t} \) is given by

and so

where

Therefore

where \( \xi_{1} = \frac{{\frac{{\partial \varPhi_{T} \left( {\varvec{s}; -\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right)}}{{\partial \varvec{s}}}}}{{\varPhi_{T} \left( {0; -\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right)}}|_{{\varvec{s} = 0}} . \) Also,

Where

Therefore

where \( \xi_{2} = \frac{{\frac{{\partial^{2} \varPhi_{T} \left( {\varvec{s}; -\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right)}}{{\partial \varvec{s}\partial \varvec{s^{\prime}}}}}}{{\varPhi_{T} \left( {0; -\varvec{\nu}^{*} ,{\varvec{\Lambda}}^{*} } \right)}}|_{{\varvec{s} = \varvec{s^{\prime}} = 0}} . \)

Appendix B. Algorithm CJJ

Step 1 Compute \( \varvec{\nu}_{{\left( {k + 1} \right)}} = \varvec{I}_{T} \) and \( \varvec{m}_{{\left( {k + 1} \right)}} =\varvec{\alpha}_{k} {\varvec{\Delta}}_{k}^{ - 1/2}\varvec{\varPhi}_{0k}^{\varvec{'}} \left( {\varvec{Y}_{ - P} - \varvec{Z}_{ - P} {\varvec{\Phi}}_{k} } \right).\varvec{ } \) Simulate \( \varvec{W}_{k + 1} \) from a multivariate truncated normal with mean \( \varvec{m}_{{\left( {k + 1} \right)}} \) and \( T \times T \) variance–covariance matrix \( \varvec{\nu}_{k + 1} \).

Step 2 Select a \( T \)-dimention random vector \( \varvec{V}_{1} \) with elements \( v_{1i} = z_{1i} /( {\mathop \sum \limits_{j} z_{1j}^{2} } )^{1/2} \), where, \( z_{1i} \), \( 1 \le i \le T \) are \( iid \sim N\left( {0,1} \right) \). Generate \( \lambda_{1} \sim N\left( {0,1} \right) \) and set \( {\varvec{\Upsilon}}_{1} =\varvec{\varPhi}_{k} + \lambda_{1} \varvec{V}_{1} \). Compute

Simulate \( u_{1} \sim {\text{Unif}}\left( {0,1} \right) \). If \( u_{1} \le { \hbox{min} }\left( {1,{ \exp }\left( {\tau_{k + 1} } \right)} \right) \)., let \( \varvec{\varPhi}_{k + 1} = {\varvec{\Upsilon}}_{1} \). Otherwise, let \( \varvec{\varPhi}_{k + 1} =\varvec{\varPhi}_{k} \).

Step 3 Decompose \( {\varvec{\Sigma}}_{k} = \varvec{ODO^{\prime}} \), where, \( \varvec{D} = {\text{diag}}( {d_{1} , \ldots ,d_{T} }) \), \( d_{1} \ge d_{2} \ge \ldots \ge d_{T} \), and \( \varvec{OO^{\prime}} = \varvec{I} \). Let \( d_{i}^{*} = { \log }\left( {d_{i} } \right) \), \( \varvec{D}^{*} = {\text{diag}}\left( {d_{1}^{*} , \ldots ,d_{T}^{*} } \right) \) and \( {\varvec{\Sigma}}_{k}^{*} = \varvec{OD}^{*} \varvec{O^{\prime}}. \)

Select a random symmetric \( T \times T \) matrix \( \varvec{V}_{2} \) with elements \( v_{2ij} = z_{2ij} /( {\mathop \sum \nolimits_{l \le m} z_{2lm}^{2} } )^{1/2} \), where, \( z_{2ij} \), \( 1 \le i \le j \le T \times \left( {T + 1} \right)/2 \), are \( iid \sim N\left( {0,1} \right) \). (the other elements of \( \varvec{V}_{2} \) are defined by symmetry).

Generate \( \lambda_{2} \sim N\left( {0,1} \right) \) and set \( {\varvec{\Upsilon}}_{2} = {\varvec{\Sigma}}_{k}^{*} + \lambda_{2} \varvec{V}_{2} \). Decompose \( {\varvec{\Upsilon}}_{2} = \varvec{QC}^{*} \varvec{Q^{\prime}} \), where, \( \varvec{C}^{*} = diag( {c_{1}^{*} , \ldots ,c_{T}^{*} }) \), \( c_{1}^{*} \ge c_{2}^{*} \ge \ldots \ge c_{T}^{*} \), and \( \varvec{QQ^{\prime} } = \varvec{I} \). Compute

Simulate \( u_{2} \sim {\text{Unif}}\left( {0,1} \right) \). If \( u_{2} \le { \hbox{min} }\left( {1,exp\left( {\tau_{k + 1} } \right)} \right) \), let \( {\varvec{\Sigma}}_{k + 1}^{*} = {\varvec{\Upsilon}}_{2} \), \( \varvec{C} = {\text{diag}}\left( {e^{{c_{1}^{*} }} , \ldots ,e^{{c_{T}^{*} }} } \right) \) and \( {\varvec{\Sigma}}_{k + 1} = \varvec{QCQ^{\prime}} \). Otherwise, let \( {\varvec{\Sigma}}_{k + 1}^{*} = {\varvec{\Sigma}}_{k}^{*} \) and \( {\varvec{\Sigma}}_{k + 1} = {\varvec{\Sigma}}_{k} \).

Step 4 Select a \( T \)-dimention random vector \( \varvec{V}_{3} \) with elements \( v_{3i} = z_{3i} /( {\mathop \sum \limits_{j} z_{3j}^{2} } )^{1/2} \), where, \( z_{3i} \), \( 1 \le i \le T \) are \( iid \sim N\left( {0,1} \right) \). Generate \( \lambda_{3} \sim N\left( {0,1} \right) \) and set \( {\varvec{\Upsilon}}_{3} =\varvec{\alpha}_{k} + \lambda_{3} \varvec{V}_{3} \). Compute

Simulate \( u_{3} \sim {\text{Unif}}\left( {0,1} \right) \). If \( u_{3} \le { \hbox{min} }\left( {1,exp\left( {\tau_{k + 1} } \right)} \right) \), let \( \varvec{\alpha}_{k + 1} = {\varvec{\Upsilon}}_{3} \). Otherwise, let \( \varvec{\alpha}_{k + 1} =\varvec{\alpha}_{k} \).

Rights and permissions

About this article

Cite this article

Manouchehri, T., Nematollahi, A.R. Periodic autoregressive models with closed skew-normal innovations. Comput Stat 34, 1183–1213 (2019). https://doi.org/10.1007/s00180-019-00893-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-019-00893-z