Abstract

We propose a weld quality measurement system for T-joints based on a surface-structured light stereo scanner. A T-joint consists of a rib plate and a base plate, and its weld quality is assessed from the welding angle, height, and width. The system first uses the scanner to capture point cloud data of the rib plate and base plate. Pass-through filtering and the voxel grid method are then applied to simplify the data. Next, the T-joint point cloud is segmented using a region-growing algorithm, and the normal vectors of the rib plate and base plate are calculated using the random sample consensus (RANSAC) algorithm. The weld line is extracted from the T-joint point cloud using the fast point feature histogram (FPFH) algorithm. The welding angle is then determined from the normal vectors of the two plates, while the weld height and width are extracted from the weld line point cloud. To evaluate the system, it is applied to a T-joint in a Ti-6Al-4V alloy stiffened plate. The results show that the system can accurately measure the welding angle, height, and width, providing technical support for the timely monitoring of T-joint weld quality during the welding process.


1 Introduction

Welding is a critical technology for the processing and manufacturing of stiffened plates [1]. T-joints are the primary welding form used in stiffened plate applications, with dual-beam laser welding being the preferred technique due to its favorable welding results and reduced inherent defects [2,3,4]. The evaluation of weld quality primarily involves assessing parameters such as welding angle, height, and width. However, there are currently some challenges in measuring weld quality, including low efficiency, low precision, and high cost.

Machine visual inspection has emerged as a promising solution for automatic seam tracking and accurate measurement due to its high efficiency, stability, accuracy, and noncontact operation [5,6,7]. Li et al. [8] proposed a vision-based weld appearance inspection algorithm covering both the measurement of weld forming dimensions and the detection of weld appearance defects. They also proposed a sub-pixel stripe centerline extraction algorithm that combines the Hessian matrix method with the center of gravity method, which improves the efficiency and accuracy of weld appearance inspection and supports automation of the whole welding process. Zhang et al. [9] proposed a method that combines a deep-learning algorithm with a traditional computer vision (TCV) algorithm for quality inspection of laser weld joints in battery production. A TCV heuristic algorithm was also proposed to detect three types of error: weld pad slant placement, electrode tab height placement, and weld joint solder-through. Li et al. [10] proposed a fine-grained flexible graph convolutional network (FFGCN) for visual detection of resistance-spot welds, combining natural language processing with computer vision. The features in the point-by-point space are combined with visual features through dot products to classify weld appearance. Chang et al. [11] proposed a weld image enhancement method based on phase symmetry for radiographic testing (RT) images with low gray values and low contrast. The method was compared with commonly used methods, and the results showed improvements over state-of-the-art methods. Soares et al. [12] used a passive monocular camera as part of a vision system to quantify and analyze the weld bead texture, using a principal component analysis-based algorithm to identify the presence of weld discontinuities. A machine learning approach was used to classify new weld beads as healthy or defective, and the accuracy of the proposed texture identification method reached 96.4%.

Several studies have used laser vision sensors and structured light vision inspection systems to measure the geometric parameters and dimensions of welds, enabling automatic measurement, assembly, and quality inspection of welded components [13,14,15,16]. Ye et al. [17] proposed a weld evaluation method that combines line-structured light with weak magnetic detection. The method detects internal weld defects using weak magnetic detection and reconstructs the three-dimensional morphology of the weld pool surface using a line-structured light system to obtain a high-precision weld profile and detect surface defects. Through comprehensive analysis of the inspection results, surface and internal defects can be classified and the equivalent size of defects can be estimated, enabling a multidimensional evaluation of weld formation quality. Li et al. [18] proposed a 3D reconstruction technique for highly reflective weld surfaces based on binocular structured light stereo vision. The point cloud of the welded surface is generated using the edge projection parameter, the mapping and alignment of the 2D image to the 3D point cloud is established, the refined point cloud is processed using a bidirectional slicing method, the discrete points formed by the projection are fitted using a smooth spline model, and the blank areas are filled using an iterative algorithm. Experiments confirmed that the method can accurately reconstruct various weld surfaces with improved accuracy. Cai et al. [19] proposed a visual sensing system based on structured light vision sensors for the inspection of multi-layer and multi-track welds. The 3D model of the weld seam is obtained through camera calibration, structured light plane calibration, and hand-eye calibration. Finally, a virtual model of the weld seam is reconstructed using the least-squares method and greedy triangulation, and the welding process is evaluated based on the width and height of the welding leg.

In current aircraft manufacturing processes, automated assembly and laser welding using assembly robots and welding robots are commonly used. However, thin-walled components made of titanium alloys are prone to distortion and significant assembly errors, resulting in insufficient weld accuracy. This, in turn, can lead to substantial welding angle distortion and inconsistent weld quality. To address this problem, we propose a T-joint weld quality measurement system using a surface-structured light 3D scanner, which combines multiple point cloud processing techniques to measure the welding angle, height, and width of T-joints. The accuracy of the system is confirmed by comparing its measurement results with manual measurements. By providing accurate and reliable measurements, the system helps maintain welding standards and improve overall weld quality in aircraft manufacturing.

2 Design of weld quality measurement system

The T-joint weld quality measurement system consists of a welding robot, a scanner, and a fixture, as shown in Fig. 1. The welding robot is a KUKA KR360R2830 six-axis robot. Because Ti-6Al-4V alloy stiffened plates are widely used in the aerospace industry, Ti-6Al-4V plates measuring 300 × 100 × 2 mm were chosen as the welding material, and the welding method is dual-beam laser welding.

In addition, the fixture shown in Fig. 2 is mounted on the end of the welding robot to securely hold the welding torch, wire, and scanner. The scanner is internally composed of two CCD cameras, a DLP projector, and an image acquisition card, as shown in Fig. 3. It uses the principle of binocular vision to acquire point clouds from the rib plate and the base plate, and the parameters of the scanner are shown in Table 1.

3 Point cloud processing

The Ti-6Al-4V alloy stiffened plate after dual-beam laser welding is shown in Fig. 4. The point cloud data of the T-joint were then acquired with the scanner. The scanner’s image acquisition card used the parallax principle and triangulation to calculate the depth information of the rib plate and base plate. A total of 2,613,942 points were obtained, but these data included irrelevant field points and redundant information. Multiple point cloud processing algorithms are therefore needed to simplify and segment the point cloud.

3.1 Simplification of T-joints point cloud

To improve the efficiency and accuracy of weld quality inspection, irrelevant point cloud data must be eliminated. For this we use the pass-through filter provided by the Point Cloud Library (PCL), an open-source library for processing 3D images and point cloud data. Pass-through filtering defines coordinate thresholds based on the size of the T-joint. Here, the ranges along the X, Y, and Z axes are set to ±90 mm, −5 to 15 mm, and −5 to 33 mm, respectively. After filtering, 230,138 points remain in the point cloud, as illustrated in Fig. 5. The filter removes irrelevant data so that only the points relevant to weld quality assessment are kept for further analysis.
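The filtering step can be illustrated with a minimal pure-Python sketch. It is not the PCL implementation used in the study; the function name `pass_through` and the four sample points are hypothetical, and only the axis ranges come from the text.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]

# Axis ranges in mm from the text: X in [-90, 90], Y in [-5, 15], Z in [-5, 33].
LIMITS = ((-90.0, 90.0), (-5.0, 15.0), (-5.0, 33.0))


def pass_through(points: Iterable[Point], limits=LIMITS) -> List[Point]:
    """Keep only the points whose x, y and z all fall inside the given ranges."""
    return [
        p for p in points
        if all(lo <= c <= hi for c, (lo, hi) in zip(p, limits))
    ]


# Hypothetical sample cloud: two points inside the box, two outside.
cloud = [(0.0, 0.0, 0.0), (95.0, 0.0, 0.0), (10.0, 14.0, 32.0), (10.0, 16.0, 5.0)]
kept = pass_through(cloud)
print(kept)
```

In this toy cloud the second point fails the X range and the fourth fails the Y range, so two points are kept.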

To further reduce the point cloud density, the voxel grid method in PCL is applied to the filtered point cloud. First, a three-dimensional voxel grid is created over the filtered point cloud. Second, the edge length of the voxels is specified, and the points within each voxel are approximated by a single representative point at the center of that voxel. With the voxel edge length set to 1 mm, the simplified point cloud consists of 10,562 points, as shown in Fig. 6.
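A minimal sketch of voxel-based downsampling is given below. It assumes that the representative point of each voxel is the centroid of the points that fall inside it, which is how PCL's `VoxelGrid` filter is generally documented to behave; the function name `voxel_downsample` and the sample points are hypothetical.

```python
import math
from collections import defaultdict


def voxel_downsample(points, leaf=1.0):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis for an edge length of `leaf`.
        key = tuple(math.floor(c / leaf) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


# Hypothetical sample: the first two points share a voxel, the others do not.
cloud = [(0.1, 0.1, 0.1), (0.3, 0.5, 0.9), (1.2, 0.1, 0.1), (-0.2, 0.1, 0.1)]
reduced = voxel_downsample(cloud, leaf=1.0)
print(len(cloud), "->", len(reduced))
```

A larger edge length merges more points per voxel, trading geometric detail for a smaller cloud.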

3.2 Segmentation of T-joints point cloud

After simplifying the point cloud of the T-joint, the region-growing algorithm in PCL is utilized to segment the point cloud into the rib-plate and base-plate regions. The method is as follows:

First, the point with the smallest curvature in the point cloud is chosen as the initial seed point. Second, an empty seed point sequence Cseed and an empty cluster array Darray are created, and four parameters are set: the number of neighbor points of a seed point Nneighbor, the smoothing threshold Hsmooth, the curvature threshold Zcurvature, and the minimum number of points in a cluster Snumber. Finally, the angle between the normal vector of each neighboring point and the normal vector of the seed point is denoted as Vangle, the curvature of each neighboring point is denoted as Ci, and the number of segmented point clouds is denoted as Nsegment. The algorithm flowchart is illustrated in Fig. 7.

During the segmentation process, only the points that satisfy all judgments are classified into the current cluster array. Points that do not meet the judgments are excluded from the current cluster array. After comparing the results of point cloud segmentation with different parameters, the optimized values for Nneighbor, Hsmooth, Zcurvature, and Snumber are determined to be 30, 3.0, 1.0, and 50, respectively. The segmented point cloud for the rib plate and base plate is illustrated in Fig. 8. It is evident that the segmentation process has successfully separated the rib-plate and base-plate regions.
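The region-growing logic described above can be sketched in pure Python as follows. This is an illustrative simplification, not the PCL code used in the study: normals and curvatures are assumed to be precomputed, neighbors are found by brute force, the smoothing threshold is assumed to be in degrees, and the toy cloud (two perpendicular 15-point grids) uses scaled-down values for the neighbor count and the minimum cluster size.

```python
import math


def angle_deg(n1, n2):
    """Unsigned angle between two unit normals, ignoring their orientation."""
    dot = abs(sum(a * b for a, b in zip(n1, n2)))
    return math.degrees(math.acos(min(1.0, dot)))


def region_growing(points, normals, curvatures, k, smooth_deg, curv_max, min_size):
    n = len(points)
    # Brute-force k nearest neighbours; a k-d tree would replace this in practice.
    neighbours = [
        sorted((j for j in range(n) if j != i),
               key=lambda j: math.dist(points[i], points[j]))[:k]
        for i in range(n)
    ]
    unassigned = set(range(n))
    clusters = []
    # Start each region from the unassigned point with the smallest curvature.
    for start in sorted(range(n), key=lambda i: curvatures[i]):
        if start not in unassigned:
            continue
        unassigned.discard(start)
        cluster, seeds = [start], [start]
        while seeds:
            s = seeds.pop()
            for j in neighbours[s]:
                # Smoothness test: neighbour normal close to the seed normal.
                if j in unassigned and angle_deg(normals[s], normals[j]) < smooth_deg:
                    unassigned.discard(j)
                    cluster.append(j)
                    # Curvature test: flat neighbours become new seeds.
                    if curvatures[j] < curv_max:
                        seeds.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


# Toy T-joint: a "base plate" on z = 0 and a "rib plate" on y = 0.
base = [(float(x), float(y), 0.0) for x in range(5) for y in range(1, 4)]
rib = [(float(x), 0.0, float(z)) for x in range(5) for z in range(1, 4)]
points = base + rib
normals = [(0.0, 0.0, 1.0)] * len(base) + [(0.0, 1.0, 0.0)] * len(rib)
curvatures = [0.0] * len(points)
clusters = region_growing(points, normals, curvatures,
                          k=8, smooth_deg=3.0, curv_max=1.0, min_size=5)
print([len(c) for c in clusters])
```

Because the two plates differ by 90° in normal direction, no point crosses from one region to the other, and the sketch returns one cluster per plate.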

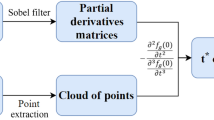

To calculate the height and width of the welding leg, the welding line point cloud needs to be extracted from the T-joint point cloud. However, because the welding line point cloud often has a narrow distribution, it cannot be directly simplified. Therefore, the FPFH algorithm in PCL is employed to extract the welding line point cloud. The steps for extracting the welding line point cloud using FPFH are as follows:

(1) The FPFH method divides the feature space into 33 intervals, referred to as bins. Each bin represents a one-dimensional attribute of the target point.

(2) For each point, the neighborhood is considered, and the percentage of points falling into each bin in relation to the total number of points in the neighborhood is calculated. This percentage serves as the attribute value for each dimension.

(3) By comparing the attribute values of the welding line point cloud and the non-welding line point cloud, it is determined that bin [16] is the most significant.

(4) Each point in the point cloud, after pass-through filtering, is iterated to determine whether the FPFH bin [16] value of each point is below a threshold β. If bin [16] < β, then the point is classified as belonging to the welding line point cloud.

By following this process, the FPFH method effectively extracts the welding line point cloud, enabling the subsequent calculation of the height and width of the welding leg.
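The thresholding in step (4) can be sketched as below, assuming the 33-bin FPFH histograms have already been computed (for example with PCL). Reading "bin [16]" as a zero-based index is an assumption, the function name `extract_weld_points` is hypothetical, and the two histograms are made-up values chosen only to show one point on each side of the threshold.

```python
BETA = 50.0      # threshold value reported in the text
BIN_INDEX = 16   # assumption: "bin [16]" read as a zero-based index into 33 bins


def extract_weld_points(points, histograms, bin_index=BIN_INDEX, beta=BETA):
    """Return the points whose FPFH value in the chosen bin is below beta."""
    return [p for p, h in zip(points, histograms) if h[bin_index] < beta]


# Made-up histograms: one with a high value in the chosen bin, one with a low value.
flat_hist = [0.0] * 33
flat_hist[BIN_INDEX] = 80.0
weld_hist = [0.0] * 33
weld_hist[BIN_INDEX] = 20.0

points = [(0.0, 5.0, 0.0), (0.0, 1.0, 1.0)]
weld = extract_weld_points(points, [flat_hist, weld_hist])
print(weld)
```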

Notably, FPFH requires two parameters to be set: the number of neighboring points used for normal estimation N1 and the normal search radius r, where the value of r affects the accuracy of the final extraction of the welding line point cloud. An appropriate value must therefore be chosen, and it is determined from the average distance density d1. In a point cloud with n points, dis(e, f) denotes the distance between point e and any other point f, and de denotes the minimum distance between point e and the other points. The calculation formula of de is as follows:

\[ d_e = \min_{f \ne e} \mathrm{dis}(e, f) \tag{1} \]

The average distance density d1 of the point cloud is:

\[ d_1 = \frac{1}{n} \sum_{e=1}^{n} d_e \tag{2} \]

The normal search radius r is:

In this study, the values of N1 and r are set to 100 and 1.70, respectively. The resulting feature histogram of the point cloud is depicted in Fig. 9, where “Weld” represents the welding line point cloud and “Non-weld” represents the non-welding line point cloud in the feature histogram.
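As an illustration of the average distance density, the following sketch computes the nearest-neighbor distance de for every point by brute force and averages the results to obtain d1. The function name and the three sample points are hypothetical, and the sketch does not reproduce how r is derived from d1.

```python
import math


def average_nearest_distance(points):
    """Mean over all points of the distance to the closest other point."""
    nearest = [
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    ]
    return sum(nearest) / len(nearest)


# Nearest-neighbour distances are 1, 1 and 2, so the average is 4/3.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
d1 = average_nearest_distance(cloud)
print(round(d1, 4))
```

The brute-force search is quadratic in the number of points; for a cloud of the size reported here a spatial index would normally be used instead.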

By analyzing these histograms, it was determined that setting the threshold β to 50 allows for the effective extraction of the weld line point cloud from the T-joint point cloud, as illustrated in Fig. 10. This analysis demonstrates the effectiveness of the proposed method in accurately identifying and extracting the weld line from the surrounding point cloud data. By setting the appropriate threshold value, the weld line point cloud can be precisely isolated, facilitating further analysis and evaluation of weld quality.

Up to this point, the point cloud of the T-joint has been simplified by pass-through filtering and the voxel grid method, the point clouds of the rib plate and the base plate have been segmented using the region-growing algorithm, and the point cloud at the welding line of the T-joint has been extracted using the FPFH algorithm.

4 Measurement method of T-joints

The system aims to measure the welding angle, height, and width of T-joints. After segmenting the point cloud of the T-joint, further processing is necessary to obtain the required parameters for measuring the welding angle, height, and width.

4.1 Measurement method of welding angle

In order to accurately measure the welding angle, the RANSAC algorithm is used to fit the rib plate and base plate so that their respective normal vectors can be derived, and these normal vectors are then used to calculate the welding angle. Taking the fitting process for the rib-plate as an example, the detailed steps of the RANSAC algorithm are as follows:

(1) Randomly select three points from the rib-plate point cloud and compute the parameters of the plane through them.

(2) Set the point-to-plane distance threshold Tdis, then calculate the distance from each unselected point to the plane from step (1). If the distance is less than Tdis, mark the point as belonging to the plane, and count the total number of points in the plane.

(3) Repeat steps (1) and (2), recording the total number of points for each candidate plane, and keep the plane with the most points.

(4) Continue repeating steps (1) to (3) until the number of iterations exceeds the maximum miter. The parameters of the plane with the largest total number of points then give the normal vector of the rib plate.

Repeated experiments and comparison of the fitting results showed that the rib-plate and base-plate point clouds are fitted best with Tdis = 0.01 and miter = 10,000.
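The four RANSAC steps can be sketched in pure Python as follows. This is a simplified illustration rather than the PCL routine used in the study: the function names are hypothetical, the sampled points are counted as inliers too, and the toy data set (a 5 × 5 grid on z = 0 plus two outliers) and the reduced iteration count are chosen only for the example.

```python
import math
import random


def plane_from_points(p, q, r):
    """Plane (A, B, C, D) with unit normal through three points, or None if collinear."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    a, b, c = (x / norm for x in n)
    return a, b, c, -(a * p[0] + b * p[1] + c * p[2])


def ransac_plane(points, t_dis=0.01, m_iter=10000, seed=0):
    """Return the candidate plane with the most inliers and its inlier count."""
    rng = random.Random(seed)
    best, best_count = None, -1
    for _ in range(m_iter):
        model = plane_from_points(*rng.sample(points, 3))   # step (1)
        if model is None:
            continue
        a, b, c, d = model
        # Step (2): count points closer to the plane than the threshold.
        count = sum(1 for x, y, z in points if abs(a * x + b * y + c * z + d) < t_dis)
        if count > best_count:                               # step (3)
            best, best_count = model, count
    return best, best_count                                  # step (4)


# Toy plate: 25 points on z = 0 plus two outliers.
plate = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cloud = plate + [(1.0, 1.0, 5.0), (2.0, 3.0, -4.0)]
model, count = ransac_plane(cloud, t_dis=0.01, m_iter=200)
print(count, [round(v, 3) for v in model])
```

The first three entries of the returned model are the unit normal of the fitted plate; its sign depends on the order of the sampled points.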

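The cross-sectional view of the T-joint is shown in Fig. 11. Since the angle between the normal vectors of the two plates is equal to the welding angle, the welding angle θ can be obtained according to Eq. 4:

\[ \theta = \arccos \frac{A_1 A_2 + B_1 B_2 + C_1 C_2}{\sqrt{A_1^2 + B_1^2 + C_1^2}\,\sqrt{A_2^2 + B_2^2 + C_2^2}} \tag{4} \]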

where (A1, B1, C1) and (A2, B2, C2) are the normal vectors of the rib plate and base plate, respectively.
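As a small worked example, the angle between two plane normals can be computed with the standard dot-product formula. The function name `welding_angle_deg` and the sample normals are hypothetical. Because the sign of a fitted normal is arbitrary, the result may come out as the supplement of the intended angle, and how the study handles this is not stated.

```python
import math


def welding_angle_deg(n_rib, n_base):
    """Angle in degrees between the rib-plate and base-plate normal vectors."""
    dot = sum(a * b for a, b in zip(n_rib, n_base))
    norm = math.sqrt(sum(a * a for a in n_rib)) * math.sqrt(sum(b * b for b in n_base))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


# A perfectly square joint, and a rib plate tilted by 2 degrees toward the base normal.
angle_square = welding_angle_deg((0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
tilt = math.radians(2.0)
angle_tilted = welding_angle_deg((0.0, math.cos(tilt), math.sin(tilt)), (0.0, 0.0, 1.0))
print(round(angle_square, 3), round(angle_tilted, 3))
```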

4.2 Measurement method of the height h and width b of welding leg

To calculate the height and width of the welding leg, it is essential to convert the three-dimensional information of the welding line point cloud into two-dimensional contour information. To achieve this, the extracted welding line point cloud needs to be sliced. By determining an appropriate thickness for the point cloud slice and selecting the direction of the cutting plane, accurate contour information of the welding line point cloud can be obtained. This contour information is crucial for subsequent calculations.

The slice thickness of the point cloud is:

The specific method to determine the direction of the cutting plane is as follows:

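The plane equation of the rib plate is:

\[ A_1 x + B_1 y + C_1 z + D_1 = 0 \tag{6} \]

The plane equation of the base plate is:

\[ A_2 x + B_2 y + C_2 z + D_2 = 0 \tag{7} \]

Therefore, the direction vector u of the intersection line lT of the two plates is:

\[ \mathbf{u} = (A_1, B_1, C_1) \times (A_2, B_2, C_2) = (B_1 C_2 - B_2 C_1,\; C_1 A_2 - C_2 A_1,\; A_1 B_2 - A_2 B_1) \tag{8} \]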

The coordinate of any point m on the intersection line lT is:

The intersection line lT can be determined by the point m on the line and the direction vector u of the line. Making the cutting plane perpendicular to the line lT, the extracted contour points are shown in Fig. 12.
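The construction of the intersection line can be sketched as follows, assuming each plane is given as coefficients (A, B, C, D) of Ax + By + Cz + D = 0. The direction is the cross product of the two normals. The point m is obtained here from a standard closed-form expression, which is one valid choice of a point on the line and not necessarily the one used in the study. The function names and sample planes are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersection_line(plane_rib, plane_base):
    """Return (m, u): a point on the intersection line and its direction vector."""
    n1, d1 = plane_rib[:3], plane_rib[3]
    n2, d2 = plane_base[:3], plane_base[3]
    u = cross(n1, n2)
    uu = sum(c * c for c in u)
    if uu < 1e-12:
        raise ValueError("planes are parallel; no unique intersection line")
    a = cross(n2, u)
    b = cross(u, n1)
    # This point satisfies both plane equations (n1.m = -d1 and n2.m = -d2).
    m = tuple((-d1 * a[i] - d2 * b[i]) / uu for i in range(3))
    return m, u


# Rib plate y = 2 and base plate z = 0: the line runs along x through (0, 2, 0).
m, u = intersection_line((0.0, 1.0, 0.0, -2.0), (0.0, 0.0, 1.0, 0.0))
print(m, u)
```

Any cutting plane with normal u is then perpendicular to the line, which is the orientation used for slicing.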

In order to extract the cross-sectional contour points of the welding line point cloud, the intersection line lT between the rib plate and base plate is obtained. By extracting two endpoints of the cross-sectional profile points and calculating the distances between these endpoints and the intersection line lT, the height h and width b of the welding leg can be determined. The extraction method for the two endpoints is as follows:

Firstly, an initial point is randomly selected within the cross-sectional profile points. Then, all contour points are traversed to find the point that is farthest from the initial point based on the Euclidean distance. This farthest point is defined as the endpoint pd. Finally, from the endpoint pd, the point that is farthest is found as the second endpoint qd, as illustrated in Fig. 13. These two endpoints are crucial for calculating the height and width of the welding leg.
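The two-pass farthest-point search can be sketched as below. The function name `contour_endpoints` and the five-point contour are hypothetical, and the random start is seeded only to make the example repeatable.

```python
import math
import random


def contour_endpoints(contour, rng=None):
    """Find the two endpoints of a contour with a two-pass farthest-point search."""
    rng = rng or random.Random(0)
    start = rng.choice(contour)                                # random initial point
    p_d = max(contour, key=lambda p: math.dist(p, start))      # farthest from start
    q_d = max(contour, key=lambda p: math.dist(p, p_d))        # farthest from p_d
    return p_d, q_d


# Hypothetical slice contour running from the rib plate down to the base plate.
contour = [(0.0, 0.0, 3.0), (0.0, 1.0, 2.25), (0.0, 2.0, 1.5),
           (0.0, 3.0, 0.75), (0.0, 4.0, 0.0)]
p_d, q_d = contour_endpoints(contour)
print(p_d, q_d)
```

For a roughly straight or convex contour like this one, the two ends are found regardless of the starting point, although which end is labeled pd depends on the start.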

The height h and width b of the welding leg are calculated as in Eqs. 10 and 11, respectively.

where (x1, y1, z1) and (x2, y2, z2) are the coordinates of pd and qd, and (x0, y0, z0) is the coordinate of point m on the intersection line lT.
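The distance from an endpoint to the intersection line can be computed with the standard point-to-line formula |(p − m) × u| / |u|, as sketched below. The assignment of the rib-plate endpoint to the height h and the base-plate endpoint to the width b is an assumption made for illustration, as are the function name and the sample coordinates.

```python
import math


def distance_to_line(p, m, u):
    """Distance from point p to the line through m with direction vector u."""
    w = (p[0] - m[0], p[1] - m[1], p[2] - m[2])
    c = (w[1] * u[2] - w[2] * u[1],
         w[2] * u[0] - w[0] * u[2],
         w[0] * u[1] - w[1] * u[0])
    return math.sqrt(sum(x * x for x in c)) / math.sqrt(sum(x * x for x in u))


# Intersection line along x through (0, 2, 0); the endpoints are made-up values.
m, u = (0.0, 2.0, 0.0), (1.0, 0.0, 0.0)
p_d = (0.5, 2.0, 3.0)   # assumed to be the endpoint on the rib plate
q_d = (0.5, 6.0, 0.0)   # assumed to be the endpoint on the base plate
h = distance_to_line(p_d, m, u)
b = distance_to_line(q_d, m, u)
print(h, b)
```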

5 Experimental verification

It is necessary to calibrate the 3D scanner before using it for the first time. The marble dot calibrating plate is used for calibrating the scanner. The calibration steps are as follows:

(1) The surface-structured light 3D scanner was installed on the end effector of the KUKA robot through the fixture, and the calibration board was placed on the work table, as shown in Fig. 14.

(2) The distance between the scanner and the calibration board is adjusted by moving the robot. The calibration board is then rotated counterclockwise in 90° steps, and one image is captured at each position, for a total of four images.

(3) The scanner on the robot is moved up 100 mm along the Z-axis of the global coordinate system, and four images are captured using the same method as in step (2). The scanner is then moved 100 mm down the Z-axis, and four more images are captured.

(4) The scanner is rotated 30° about the X-axis of the global coordinate system, and the calibration board is rotated counterclockwise in 180° steps, with one image captured at each position, for a total of two images. The scanner is then rotated −30° about the X-axis of the global coordinate system to obtain two more images. Finally, the scanner is rotated about the Y-axis, and four images are captured using the same procedure. A total of 20 images were obtained during the calibration process. The calibration result after processing is shown in Fig. 15.

The workpiece being tested is coated with developing powder and placed in a specially designed lighting environment to assess the uniformity of the spray coating, as shown in Fig. 16. Subsequently, the workpiece to be measured is positioned on the work table to evaluate the quality of the weld, as illustrated in Fig. 17.

To validate the accuracy of this measurement system, multiple measurements of the welding angle, height, and width of T-joints are conducted using both the measurement system and manual methods, utilizing a universal angle ruler and vernier caliper. The following verification steps are performed.

5.1 Verification of welding angle

The welding angle of the T-joints was measured with both the weld quality measurement system and a universal angle ruler. Each of six different T-joints was measured several times with both methods, and the average value was used as the final result. The results obtained by the measurement system and the manual method are denoted \(\theta_{c}\) and \(\theta_{m}\), respectively, as shown in Table 2 and plotted in Fig. 18 for comparison. The error is within ±0.3°, which satisfies the specified requirements. This indicates that the system measures the welding angle of T-joints accurately and can replace manual measurement with the angle ruler. The average time for the system to measure the welding angle of these six workpieces is 2.4 ms, which meets industrial requirements.

5.2 Verification of the height and width of welding leg

As with the welding angle, the height and width of the welding leg are calculated at multiple locations along the weld line of six different T-joints, and the average value obtained from the measurement system is used as the final measurement result. The same measurements were also made with a vernier caliper with an accuracy of 0.02 mm, and these results were used as the reference.

The height and width of the welding leg measured by the measurement system are denoted as hc and bc, respectively, while those measured by the vernier caliper are denoted as hm and bm. The errors in welding leg height and width are denoted \(\Delta h\) and \(\Delta b\), respectively, as shown in Table 3 and plotted in Fig. 19 for comparison. The errors are within ±0.2 mm, indicating that the system measures the height and width of the welding leg within the specified error range and can replace manual measurement. The average time for the system to measure the height and width of the welding leg of these six workpieces is 9.3 ms, which also meets industrial requirements.

The sources of error primarily include multi-view point cloud stitching, image noise, calibration error, and calculation method error. Analyzing these sources of error and mitigating controllable errors will help enhance the measurement accuracy of the system. This aspect will be the focus of our future research endeavors.

6 Conclusions

This paper presents a weld quality measurement system for T-joints using a surface-structured light 3D scanner. The system combines several algorithms to process the point cloud. First, pass-through filtering and the voxel grid method are used to simplify the scanned point cloud. Second, the point cloud is segmented by the region-growing algorithm, and the weld line point cloud is extracted by FPFH. Finally, the welding angle is calculated from the plate normal vectors fitted by the RANSAC algorithm, and the height and width of the welding leg are measured from sliced contours of the weld line point cloud.

Comparison with traditional manual measurements showed errors within ±0.3° for the welding angle and within ±0.2 mm for the height and width of the welding leg, confirming the accuracy of the system. The system provides technical support for timely monitoring of the weld quality of T-joints during the welding process.

References

Karayel E, Bozkurt Y (2020) Additive manufacturing method and different welding applications. J Mater Res Technol-JMRT 9(5):11424–11438. https://doi.org/10.1016/j.jmrt.2020.08.039

Cha JH, Choi HW (2022) Characterization of dissimilar aluminum-copper material joining by controlled dual laser beam. Int J Adv Manuf Technol 119(3–4):1909–1920. https://doi.org/10.1007/s00170-021-08324-4

Ai YW, Cheng J, Yu L, Lei C, Yuan PC (2022) Numerical investigation of weld bead porosity reduction in the oscillating laser T-joint welding of aluminum alloy. J Laser Appl 34(1):8. https://doi.org/10.2351/7.0000495

Li JJ, Shen JQ, Hu SS, Zhang H, Bu XZ (2019) Microstructure and mechanical properties of Ti-22Al-25Nb/TA15 dissimilar joint fabricated by dual-beam laser welding. Opt Laser Technol 109:123–130. https://doi.org/10.1016/j.optlastec.2018.07.077

Fang WH, Xu XL, Tian XC (2022) A vision-based method for narrow weld trajectory recognition of arc welding robots. Int J Adv Manuf Technol 121(11–12):8039–8050. https://doi.org/10.1007/s00170-022-09804-x

Faria ICS, Filleti RAP, Helleno AL (2022) Evolution of process automation in welding cells: a literature review. Soldagem Insp 27:16. https://doi.org/10.1590/0104-9224/si27.04

Rodriguez-Gonzalvez P, Rodriguez-Martin M (2019) Weld bead detection based on 3D geometric features and machine learning approaches. IEEE Access 7:14714–14727. https://doi.org/10.1109/access.2019.2891367

Li Y, Hu M, Wang T, Maseleno A, Yuan X, Balas VE (2020) Visual inspection of weld surface quality. J Intell Fuzzy Syst 39(4):5075–5084. https://doi.org/10.3233/jifs-179993

Zhang HX, Di XG, Zhang Y (2020) Real-time CU-net-based welding quality inspection algorithm in battery production. IEEE Trans Ind Electron 67(12):10942–10950. https://doi.org/10.1109/tie.2019.2962421

Li Q, Yang B, Wang SL, Zhang ZP, Tang XL, Zhao CY (2022) A fine-grained flexible graph convolution network for visual inspection of resistance spot welds using cross-domain features. J Manuf Process 78:319–329. https://doi.org/10.1016/j.jmapro.2022.04.025

Chang YS, Gao JM, Jiang HQ, Wang Z (2019) A novel method of radiographic image enhancement based on phase symmetry. Insight 61(10):577–583. https://doi.org/10.1784/insi.2019.61.10.577

Soares LB, Costa HL, Botelho SSC, Souza D, Rodrigues RN, Drews P (2023) A robotic passive vision system for texture analysis in weld beads. J Braz Soc Mech Sci Eng 45(1):7. https://doi.org/10.1007/s40430-022-03914-z

Dong ZX, Mai ZH, Yin SQ, Wang J, Yuan J, Fei YN (2020) A weld line detection robot based on structure light for automatic NDT. Int J Adv Manuf Technol 111(7–8):1831–1845. https://doi.org/10.1007/s00170-020-05964-w

Kim C-H, Choi T-Y, Jang LJ, Suh J, Kyoungtaikpark, Kang H- (2019) Study of intelligent vision sensor for the robotic laser welding. J Korean Soc Ind Converg 22(4):447–57. https://doi.org/10.21289/ksic.2019.22.4.447

Rout A, Deepak B, Biswal BB, Mahanta GB (2022) Weld seam detection, finding, and setting of process parameters for varying weld gap by the utilization of laser and vision sensor in robotic arc welding. IEEE Trans Ind Electron 69(1):622–632. https://doi.org/10.1109/tie.2021.3050368

Fan JF, Jing FS, Yang L, Long T, Tan M (2019) A precise initial weld point guiding method of micro-gap weld based on structured light vision sensor. IEEE Sens J 19(1):322–331. https://doi.org/10.1109/jsen.2018.2876144

Ye J, Xia GS, Liu F, Fu P, Cheng QQ (2021) Weld defect inspection based on machine vision and weak magnetic technology. Insight 63(9):547–553. https://doi.org/10.1784/insi.2021.63.9.547

Li BZ, Xu ZJ, Gao F, Cao YL, Dong QC (2022) 3D reconstruction of high reflective welding surface based on binocular structured light stereo vision. Machines 10(2):15. https://doi.org/10.3390/machines10020159

Cai SB, Bao GJ, Pang JQ (2021) A structured light-based visual sensing system for detecting multi-layer and multi-track welding. Int J Robot Autom 36(4):264–273. https://doi.org/10.2316/j.2021.206-0576

Funding

This work was supported by the basic research project of the Ministry of Industry and Information Technology (KZ191902 and KZ191909).

Contributions

All authors contributed equally to this work.

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

All the authors consent to participate in this work.

Consent for publication

All the authors in this work have consented to publish this manuscript.

Competing interests

The authors declare no competing interests.

About this article

Cite this article

He, J., Wang, H. & Zhang, Y. Weld quality measurement of T-joints based on three-dimensional scanner. Int J Adv Manuf Technol 133, 6059–6070 (2024). https://doi.org/10.1007/s00170-024-13847-7

DOI: https://doi.org/10.1007/s00170-024-13847-7