Abstract

Electrical discharge machining (EDM) is a widely used non-conventional machining technique in manufacturing industries, capable of accurately machining electrically conductive materials of any hardness and strength. However, to achieve low production costs and minimal machining time, a comprehensive understanding of the EDM system is necessary. Due to the stochastic nature of the process and the numerous variables involved, it can be challenging to develop an analytical model of EDM through theoretical and numerical simulations alone. This paper conducts an extensive review of the various experimental (or empirical) modeling techniques used by researchers over the past two decades, including a geographic and temporal analysis of these approaches. The major methods employed to describe the EDM process include regression, response surface methodology (RSM), fuzzy inference systems (FIS), artificial neural networks (ANN), and adaptive neuro-fuzzy inference systems (ANFIS). Additionally, the optimization methods used in conjunction with these methods are also discussed. Although RSM is the most commonly used empirical modeling technique, recent years have seen an increase in the use of ANN for providing the most accurate predictions of EDM process responses. The review of the literature shows that most of the investigations on experimental EDM modeling were conducted in Asia.

1 Introduction

Electrical discharge machining (EDM) is an electro-thermal subtractive manufacturing process that uses repetitive electrical discharges to remove material from a conductive workpiece. Since there is no direct contact between the workpiece and the tool, EDM eliminates the problems encountered in conventional machining, such as mechanical stresses, tool chatter, and vibration. Hence, it is an excellent alternative to traditional mechanical cutting methods such as drilling, turning, milling, grinding, and sawing. Due to its unique ability to erode any conductive material, regardless of hardness and strength, to produce complex 3D cavities, it is widely used in the aerospace, automotive, medical, and oil and gas industries [1]. The process can remove precise amounts of material from a surface to create cavities, grooves, and other geometries. During EDM, controlled and repeated sparks create small channels in the workpiece that are well suited for guiding fluids and gases.

In 1770, English scientist Joseph Priestley found that electrical discharges or sparks could erode metal, laying the groundwork for EDM [2]. However, it was not until 1943 that the destructive features of electrical sparks were first put to productive use by two Soviet scientists at Moscow University. B. R. Lazarenko and N. I. Lazarenko figured out how to machine difficult-to-cut metals by vaporizing particles off the metal’s surface in a controlled manner. The Lazarenko system pioneered the use of a resistor-capacitor (RC) power supply in EDM machines in the 1950s, and its design has since been adopted by other manufacturers [3]. Simultaneously, the efforts of three American engineers, Stark, Harding, and Beaver, served as the foundation for the vacuum tube EDM systems. Their patented servo mechanism maintained the necessary gap between the electrode and workpiece for sparking [4]. The evolution of EDM technology has brought significant advancements in manufacturing industries, from RC supplies and vacuum tubes to fast-switching transistors and computer numerical control (CNC) mechanisms. These developments have not only brought economic benefits but also sparked research interest in the field.

The basic premise of EDM is to place a conductive workpiece in a tank of dielectric fluid together with a sacrificial (tool) electrode, as shown in Fig. 1. The tool electrode is connected to an electrical power source that generates an extremely high voltage. Metal removal is carried out by applying a high-frequency pulsed electrical current to the workpiece through the electrode using either a transistor-based or an RC-based circuit, as shown in the inset of Fig. 1. When the power source is activated, an electrical discharge occurs between the electrode and the workpiece. The controlled, repeated discharges remove material from the workpiece through melting and vaporization, with the interfacial temperatures reaching as high as 20,000 °C [5]. The electrode is retracted when the process is completed, leaving the desired geometry on the workpiece. It should be noted that the electrode also wears during the process; however, this wear is far smaller than the material removal from the workpiece.

The working principle explained above is the mechanism of one particular variant of EDM known as die-sinking EDM, which is the focus of this paper’s discussion. Die-sinking EDM, also known as conventional or sinker EDM, is a process that utilizes an electrode that has been precisely machined to match the inverse shape of the desired end product. The electrode or die, usually made from graphite or copper, is lowered towards the workpiece submerged in a dielectric fluid, and the material is removed through spark erosion [6]. It is used in applications where the quality of machined products is the primary goal [7]. Micro-EDM, a variant of EDM, evolved from the need to generate intricate high aspect ratio micro features on very hard and brittle materials [8]. It includes micro die-sinking EDM, micro wire EDM, micro-milling, and drilling EDM with the ability to machine parts accurately to 5–10 µm [9]. Other modifications of the traditional EDM systems that improve machining performance also exist, such as ultrasonic vibration-assisted EDM, powder-mixed EDM, and magnetic field-assisted EDM [10-12].

The dynamic complexity of the EDM process and its dependence on various factors make it essential, both technologically and economically, to attain a high level of responsiveness in output. EDM has several controllable parameters, such as gap voltage, peak current, pulse on-time, pulse off-time, and duty cycle. Table 1 lists the abbreviations of the most commonly used output parameters in EDM studies. Modifying any of these variables will significantly affect the various EDM performance measures such as MRR, EWR, SR, surface integrity, OC, and taper. Incorrectly assigned input parameters may result in undesirable impacts on the workpiece, such as surface cracking, excessive electrode wear, poor MRR, and reduced yield [13]. Furthermore, parameters are often adjusted based on the operator’s prior expertise, which is prone to human errors. Thus, even for skilled engineers, it might be challenging to find the right balance between input parameter optimization and machine output consistency. Developing a proper relationship between the performance measures and machining factors is crucial for accurately predicting output in a stochastic manufacturing process like EDM. Implementation of modeling and optimization methods allows for a more substantial enhancement in decision-making with a new technical solution that can simultaneously meet and regulate the several diverse and conflicting goals of the EDM process.

Research on modeling and simulation techniques for studying the relationship between input and output variables of EDM processes has received considerable attention in recent years. These models can generally be grouped as theoretical, numerical, and empirical [14]. Theoretical modeling, also known as analytical modeling, is the earliest modeling approach and uses thermodynamic principles to describe the spark erosion process in EDM. This technique assumes that heat erodes metal, with conduction being the primary mode of heat transport. Popular methods include (1) the two-dimensional heat transfer model, where the plasma channel formed between the electrodes is assumed to be a disc heat source [15], (2) the cathode erosion model, where the photoelectric effect, rather than ion bombardment, is assumed to be the primary source of energy supplied to the cathode surface [16], and (3) the variable-mass cylindrical plasma model, where superheating is the dominant mechanism for erosion [17]. However, these thermal analysis-based models have a limited scope of application as they rely on simplifying assumptions such as a fixed discharge radius, a point heat source, a uniform shape, and stable thermal characteristics of workpiece and tool materials [18]. Moreover, such methods carry considerable uncertainties due to the poor correlation between experimental data and theoretical results of the heat transfer equations. Numerical modeling generally employs the finite element method (FEM) or the finite difference method (FDM) to numerically solve differential equations related to heat transfer in the EDM process [19]. With the advent of more powerful computer systems and simulation software, this technique gained much attention; however, it suffers from the same discrepancies as the analytical method since the input to the numerical (or simulation) models is not based on real-time data.
Most theoretical investigations have focused on small metal removal processes caused by a single spark, modeling the effects using heat conduction principles and thermodynamic factors. Even though theoretical and numerical models are based on EDM physics, these models cannot be extrapolated to explain the actual multispark process of EDM. Factors such as stochastic electrical discharge distribution between electrodes, the simultaneous effects of two successive sparks on the surface of the workpiece, and the inherent generalizations and simplification strategies make the analytical and simulation models different from reality [5]. The contemporary process of modeling the complex EDM system is based on the experimental method. This modeling technique is based on finding the mathematical function defining a system based on a given set of inputs and outputs. This function may be as simple as a linear equation to a complex neural network consisting of nodes and activation functions. The principal advantage of experimental modeling in EDM is that it enables the prediction and control of the machining process. In addition, this modeling approach can be employed to optimize the EDM process to improve the efficiency and accuracy of the machining operation. This can help minimize cost, time, and material consumption while obtaining the desired quality of the machined surface.

In this paper, an in-depth literature study has been conducted to explore the various empirical techniques employed to help comprehend the correlation between the input and output of the EDM process over the past twenty years. The research articles were searched in established databases such as SCOPUS, Google Scholar, Science Direct, Springer, MDPI, and Taylor and Francis. Based on the relevant terms related to the empirical modeling of EDM, the following keywords have been used to facilitate the search process: EDM, Experimental modeling, optimization, regression, RSM, fuzzy logic, ANN, and ANFIS. The following sections explore the experimental methods: statistical regression, RSM, FIS, ANN, and ANFIS, used to model the EDM operation. Metaheuristic search-based optimization algorithms such as genetic algorithm (GA), desirability-function approach (DFA), and particle swarm optimization (PSO), along with some less common techniques to determine the Pareto optimal solution set in an EDM process, have also been discussed. In addition, optimization techniques such as grey relational analysis (GRA) and the Taguchi method, which provide a unique optimal solution set, have been reviewed. Finally, the article is concluded with a geographical and temporal research trend of the experimental modeling strategy.

2 Experimental modeling techniques used for the EDM process

Various input parameters influence the different system responses in the EDM operation. The EDM process is considered to be stochastic due to the random nature of the electrical discharges, the interaction between the different factors and responses, and the thermo-physical distortions of the machined area [20, 21]. Hence, for the success of this machining operation, direct control over the setting of these parameters is critical. The ability to predict performance metrics from a particular set of input values is a valuable tool for balancing the conflicting objectives of the EDM process, such as high MRR and low TWR and SR. However, due to the inherent complexity and unpredictability of the EDM manufacturing process, conventional modeling approaches, such as theoretical and numerical modeling, can only provide rough estimates of expected outcomes. Accordingly, researchers have turned to soft computing approaches, which are widely used to forecast process output because of their ability to learn from experimental data, to describe the relationship between input parameters and process responses. Over the past few decades, numerous researchers have applied different empirical techniques to establish a correlation between machining variables and important EDM outputs like MRR, SR, EWR, and OC. This section presents an in-depth literature review, confined to studies of the traditional EDM process published between 2002 and 2022, to give a comprehensive account of empirical methodologies for modeling and optimization.

2.1 Regression-based experimental modeling method

Predictive modeling methods, such as statistical regression analysis, encompass all strategies for modeling and evaluating multiple variables where the emphasis is on the correlation between a dependent variable and one or more independent variables. The goal of a regression model is to produce a mathematical function

\[{Y}_{i}=f\left({X}_{i},\beta \right)+{e}_{r}\]

that characterizes the causal relationship between a set of explanatory variables \({X}_{i}\) and a response or target variable \({Y}_{i}\), with the unknown scalar coefficients and the residual or experimental error term denoted by \(\beta\) and \({e}_{r}\), respectively [22]. The function \(f\left({X}_{i},\beta \right)\) depends on the type of regression implemented. The most widely used method for the multivariable process is the multiple linear regression function

\[{Y}_{i}={\beta }_{0}+{\beta }_{1}{X}_{i1}+{\beta }_{2}{X}_{i2}+\dots +{\beta }_{p}{X}_{ip}+{e}_{r}\]

where \(i=1,\dots ,n\) indexes the \(n\) observations and \(p\) is the number of independent variables [22]. This function assumes a linear connection between the variables. In the case of non-linear regression, the function \(f\left({X}_{i},\beta \right)\) is replaced with a non-linear equation such as an exponential, power, or logarithmic function. The choice of regression function in both methods depends on the degree of non-linearity of the process being modeled. However, linear regression can still accommodate non-linear curves when polynomial terms are included in the regression equation, because the model remains linear in the coefficients \(\beta\). The procedure to obtain a regression model from experimental data is shown in Fig. 2. After a regression model has been developed, it is necessary to validate the model’s goodness-of-fit and the statistical significance of the estimated parameters. The coefficient of determination (R-squared) is the most common metric to evaluate goodness-of-fit. It takes values between 0 and 1 and describes the proportion of the variance in the dependent variable that is explained by the independent variables. A value of 0 implies that the independent variables explain none of the variation in the result, while 1 indicates that they explain it completely. An F-test of the overall fit followed by t-tests of each parameter can be used to verify statistical significance. Nowadays, the whole process is carried out with statistical software.
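
The fitting and goodness-of-fit steps described above can be sketched in a few lines. This is a minimal illustration, not the procedure of any reviewed study: the helper names `fit_linear_regression` and `r_squared` are hypothetical, the data are synthetic and exactly linear by construction, and ordinary least squares via NumPy is assumed as the estimation method.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Ordinary least-squares fit of y = b0 + b1*x1 + ... + bp*xp."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept b0.
    A = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta

def r_squared(X, y, beta):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    y_hat = beta[0] + X @ beta[1:]
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Hypothetical inputs: peak current (A) and pulse on-time (us).
# The response is synthetic (not measured data) and linear by construction.
X = np.array([[4, 50], [4, 100], [8, 50], [8, 100], [12, 50], [12, 100]])
y = 2.0 + 1.5 * X[:, 0] + 0.02 * X[:, 1]

beta = fit_linear_regression(X, y)
print("coefficients:", beta)
print("R-squared:", r_squared(X, y, beta))
```

With real EDM measurements the residuals would not vanish, and the F-test and t-tests mentioned above would be needed in addition to R-squared to judge the model.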

The regression method, one of the earliest techniques, is still popular among researchers for modeling the EDM process. Initial experimental works were primarily based on multiple input single output (MISO) systems. Çogun et al. [23] used multiple non-linear regression to model the inner and outer edge wear of a hollow tool electrode. Circular, exponential, and power functions were used to model the edge wear characteristics by altering input discharge current, pulse time, and dielectric flushing pressure. Observations indicate that the exponential function adequately represents the edge wear patterns of the electrodes, with 99 out of 104 profiles having significant correlation coefficients. Keskin et al. [24] investigated the effect of power, pulse on-time, and off-time on surface roughness using copper electrodes on a steel workpiece. The authors conducted 504 experiments and modeled the process using multiple linear regression analysis. The R-squared value obtained from the equation was calculated to be 0.96, although pulse off-time was omitted. The researchers asserted that only the interaction of input power and spark time was statistically significant. Azadi et al. [25] employed three different regression functions, linear, curvilinear, and logarithmic, to model the input–output relationship in an EDM of AISI-2312 steel. Among the models tested for validation, the curvilinear model produced the lowest prediction error with 6.34%, 3.54%, and 4.25% for MRR, SR, and EWR, respectively. Kuriachen et al. [26] established a second-order linear regression function to determine the relationship between voltage and capacitance with spark radius during micro-EDM of titanium grade 5 alloy. The model achieved an R-squared value of 0.9379 with a strong correlation between capacitance and spark diameter. However, only 13 experiments were conducted to develop the model, although the authors cited a prediction error of 4.258% from validation runs. 
Optimization algorithms are usually employed with systems modeled with multiple inputs and multiple outputs (MIMO). Dang et al. [27] developed an intelligent model based on the Kriging regression (KR) technique to predict MRR, EWR, and SR in the EDM of P20 steel. Subsequently, the PSO algorithm was used to optimize the input parameters voltage, current, pulse on-time, and pulse off-time to improve MRR and reduce EWR, with SR treated as a constraint. R-squared values of 0.97, 0.96, and 0.93 were achieved, along with relative percentage errors of 3.7%, 5.4%, and 2.0% between predicted and actual experimental values for MRR, EWR, and SR, respectively. Kumaresh et al. [28] carried out a comparative study on the performance of four modeling techniques, polynomial regression (PR), KR, radial basis function (RBF), and gene expression programming (GEP), on two different EDM processes. It was concluded that GEP outperforms all other methods in both processes, having the maximum R-squared value for both examples, followed by PR, Kriging, and RBF. However, only a limited amount of data was used for training and testing. Numerous researchers have conducted modeling studies of die-sinking EDM using linear regression functions with an average determination coefficient of 0.9 [29-40]. However, few works employed non-linear regression functions, since non-linear behavior can still be modeled with linear regression, which needs less computation [41-43].

A summary of the EDM process modeling using the regression technique is given in Table 2. Common input variables among the reviewed works include current, voltage, pulse on-time, and pulse off-time. Similarly, MRR, EWR, and SR have been the most widely modeled response parameters with regression. The average value of the determination coefficient for the regression equations is approximately 0.911, with a mean percentage prediction error of around 8.55%. Based on the results of previous works, it is evident that regression models have predictive strength comparable to that of competing models. However, even though regression produces a straightforward equation based on elementary statistical ideas like correlation and least-squares error, it has some shortcomings. For example, regression models do not automatically handle nonlinearity. Hence, a user must consider adding the terms required to enhance the fit of the regression model. The dependability of regression models also reduces as the number of factors increases. Moreover, regression models are subject to collinearity issues. If the independent variables are highly correlated, their predictive power will diminish, and the regression coefficients will become less robust. Additionally, if more training data is needed to enhance the model, the regression technique requires that the modeling process be restarted from the beginning, which is time-consuming.

2.2 Response surface methodology (RSM)

RSM is a collection of statistical techniques first introduced by Box et al. [44] in 1951 for analyzing the relationships between independent and dependent variables in the context of chemistry. The method is used to find the combination of independent variables that results in the maximum or minimum value of a function, which is typically a response function. RSM can be used to optimize processes by finding the ideal values of input parameters that result in the desired output. Moreover, response surface plots can be used to study how changes in input parameters affect output responses and to identify regions where process performance is optimal. The flow chart for the RSM process is displayed in Fig. 3. RSM involves utilizing data obtained from design of experiments (DOE) techniques such as fractional factorial design or central composite design (CCD) and constructing a mathematical model that describes the relationship between the explanatory factors and the outcome variables. The process parameters can be numerically expressed in RSM as

\[Y=f\left({X}_{1},{X}_{2},{X}_{3},\dots ,{X}_{N}\right)+{e}_{r}\]

where \(Y\) is the output response, \(f\) is the response function, \({X}_{1},{ X}_{2}, {X}_{3}, \dots , {X}_{N}\) are the input factors, and \({e}_{r}\) is the experimental error [45]. A response surface plot in two or three dimensions is obtained by graphing the expected response \(Y\) against one or two input variables, respectively. The response function \(f\) is not known and might be quite complex. Hence, RSM attempts to represent \(f\) by an appropriate lower-order polynomial function over some region of the independent process variables. However, if there is curvature in the surface plot, higher-order polynomial functions such as quadratic equations can be used. Nowadays, the whole process is usually carried out with the aid of computer programs.
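
As an illustration of approximating \(f\) with a second-order polynomial, the sketch below fits a two-factor quadratic model to a face-centered CCD in coded units and locates the stationary point of the fitted surface. Everything specific here is an assumption made for illustration: the helper name `quadratic_design_matrix`, the synthetic coefficients in `true_coef`, and the noise-free response; no values come from the reviewed studies.

```python
import numpy as np

def quadratic_design_matrix(x1, x2):
    """Columns: 1, x1, x2, x1^2, x2^2, x1*x2 (full second-order model)."""
    return np.column_stack([np.ones_like(x1), x1, x2, x1**2, x2**2, x1 * x2])

# Face-centered CCD in coded units: 4 factorial, 4 axial, 1 center point.
pts = np.array([[-1, -1], [1, -1], [-1, 1], [1, 1],
                [-1, 0], [1, 0], [0, -1], [0, 1], [0, 0]], dtype=float)
x1, x2 = pts[:, 0], pts[:, 1]

# Hypothetical "true" surface used to generate a synthetic response.
true_coef = np.array([10.0, 2.0, 1.0, -4.0, -2.0, 0.5])
y = quadratic_design_matrix(x1, x2) @ true_coef

# Least-squares estimate of the six polynomial coefficients.
coef, *_ = np.linalg.lstsq(quadratic_design_matrix(x1, x2), y, rcond=None)
b0, b1, b2, b11, b22, b12 = coef

# Stationary point: set the gradient of the fitted quadratic to zero.
H = np.array([[2 * b11, b12], [b12, 2 * b22]])  # Hessian of the fitted model
stationary = np.linalg.solve(H, -np.array([b1, b2]))

# All Hessian eigenvalues negative means the stationary point is a maximum.
is_maximum = bool(np.all(np.linalg.eigvalsh(H) < 0))
print("coefficients:", coef)
print("stationary point (coded units):", stationary, "maximum:", is_maximum)
```

In practice the stationary point is only meaningful if it lies inside the experimental region; outside it, the polynomial is an extrapolation.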

RSM has been used by various researchers to determine the relationships between EDM process parameters and machining criteria and to investigate the impact of these variables on the responses. The EDM system behavior is modeled with quadratic equations, and the R-squared value is used to check the appropriateness of the model. Kung et al. [46] investigated the influence of discharge current, spark time, particle size, and concentration of aluminum powder on MRR and TWR in a powder-mixed EDM process using RSM. The face-centered CCD technique was used to plan the experiment with 30 runs. The second-order polynomial equations obtained had an R-squared value of 0.9798 and 0.9562 for MRR and EWR, respectively, indicating the goodness-of-fit of the developed model. From the response surface, the authors concluded that MRR improved with a concentration of aluminum powder, then decreased after a specific threshold. Sohani et al. [47] explored the effects of electrode tool shape, size, and area on MRR and EWR, along with the process value of current, pulse on-time, and pause time. Based on 31 experimental observations designed with CCD DOE and the impact of each tool shape modeled with RSM, the authors concluded that the circular-shaped tool led to improved MRR and less EWR with R-squared values above 0.98 for all four electrode shapes. The percentage prediction error of the proposed models was calculated to be between ± 5%. Kalajahi et al. [48] used RSM to model the MRR in EDM of AISI H13 steel. By removing insignificant factors from ANOVA analysis, the authors surmised that a quadratic model with an R-squared value of 0.9955 is appropriate in predicting the MRR. Mohanty et al. [49] combined RSM and multiobjective PSO optimization techniques to improve the EDM performance of Inconel 718 alloy using different electrode materials. 
Process parameters such as voltage, current, pulse on-time, duty cycle, and dielectric flushing pressure were used as input to model the process using data from 54 experimental runs designed with the Box-Behnken experimental design strategy. R-squared values of 0.97, 0.97, 0.9337, and 0.992 were obtained for MRR, TWR, SR, and OC, respectively. It was concluded that graphite electrodes performed better than copper and brass in most machining aspects. Nishant et al. [50] conducted a comparative analysis between RSM and semi-empirical modeling through dimensional analysis in a gas-assisted die-sinking EDM of chromium steel. The authors concluded that both models had similar performance with a prediction error of less than 5% for SR. Phate et al. [51] compared three different modeling techniques, dimensional exponential model (DEM), RSM, and adaptive neuro-fuzzy inference-based system (ANFIS), for predicting SR based on input variables such as workpiece material constitution, spark time, pause time, and current. The authors found ANFIS and RSM performance to be similar but better than that of DEM, each having R-squared values of 0.999958, 0.995649, and 0.830626, respectively. However, data from only 18 observations were used to develop the model. Papazoglou et al. [52] combined heat transfer analysis and RSM to develop a semi-empirical model to predict MRR, TWR, and WLT in EDM of titanium grade 2 alloy. R-squared values of 0.9586, 0.7871, and 0.965 were obtained for the output responses. Research works based on CCD [53-61] and BBD [62-67] DOE technique of RSM have been explored widely with different factors and responses. Other less utilized RSM strategies include Taguchi OA [68-70] and full-factorial design [71].

A synopsis of the published works utilizing the RSM-based empirical modeling technique is provided in Table 3. Similar to the regression method, current, voltage, spark time, and pause time are the four input variables chiefly considered in RSM. In addition, MRR, EWR, and SR are again the most frequently modeled response parameters. However, compared to regression, a slightly better average percentage prediction error of 7% and a mean R-squared value of 0.933 can be achieved with RSM. Based on the articles reviewed, the number of experimental runs used to develop an RSM-based model ranges from 9 to 54. Hence, a considerable quantity of information may be gained from a minimal number of experiments, which is less expensive and time-consuming than traditional experimental techniques and ways of data assessment. However, although RSM can evaluate the interaction effects of input parameters on an observed response via response surface plots, it cannot explain why such interactions occur. Moreover, the second-order polynomials used in RSM typically fail to fit data adequately for systems with considerable curvature.

2.3 Fuzzy inference systems (FIS)

FIS allows the prediction of an output response from a set of input variables using fuzzy logic. First introduced in 1965 by Lotfi Zadeh [72], fuzzy logic relies on multivalued logic, where the logical value of a variable can be any number between 0 and 1. Such systems can deal with a spectrum of truth values, from entirely true to utterly false, mimicking how humans make decisions. Therefore, it is distinct from binary or classical logic, which has just two possible truth values (1 or 0, True or False, Yes or No). Figure 4 illustrates the complete fuzzy logic process. A FIS operates in four basic steps: fuzzification, formation of a knowledge base, decision-making, and defuzzification. Fuzzification is the process of mapping each point of an input variable to membership values between 0 and 1 in one or more fuzzy sets by means of membership functions. A knowledge base must then be formed using a list of if–then statements called fuzzy rules. The decision-making unit combines these intermediate fuzzy sets using logical operators to arrive at a final output set whose memberships represent degrees of satisfaction with respect to some objective criterion defined in the fuzzy rules. As a final step, defuzzification translates the fuzzy conclusions into crisp output values. Two popular types of FIS used in the literature are Mamdani inference systems [73] and Sugeno inference systems [74]. The methods used to determine the outputs in these two inference systems are quite different. From control theory to artificial intelligence applications, FIS is widely used to model and optimize various process responses.
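
The four steps above can be sketched as a toy Mamdani-type system. This is a minimal sketch under stated assumptions: both inputs are normalized to the range 0 to 1, the triangular membership functions, the three rules, the min/max operators, and the centroid defuzzifier are illustrative choices, and the names `trimf` and `mamdani_predict` are hypothetical rather than taken from any reviewed work or library.

```python
import numpy as np

def trimf(x, a, b, c):
    """Triangular membership function: feet at a and c, peak at b (a < b < c)."""
    x = np.asarray(x, dtype=float)
    left = (x - a) / (b - a)
    right = (c - x) / (c - b)
    return np.clip(np.minimum(left, right), 0.0, 1.0)

def mamdani_predict(current, on_time, n_points=201):
    """Toy Mamdani FIS; inputs and the output are assumed normalized to [0, 1]."""
    # 1) Fuzzification: degree to which each input is "low" or "high".
    cur_low, cur_high = float(trimf(current, -1, 0, 1)), float(trimf(current, 0, 1, 2))
    ton_low, ton_high = float(trimf(on_time, -1, 0, 1)), float(trimf(on_time, 0, 1, 2))

    # 2) Knowledge base: illustrative if-then rules (AND = min, OR = max).
    fire_low = min(cur_low, ton_low)      # both low  -> output low
    fire_high = min(cur_high, ton_high)   # both high -> output high
    fire_med = max(min(cur_low, ton_high), min(cur_high, ton_low))  # mixed -> medium

    # 3) Decision-making: clip each output set by its rule strength, then
    #    aggregate the clipped sets with a pointwise maximum.
    z = np.linspace(0.0, 1.0, n_points)
    aggregated = np.maximum.reduce([
        np.minimum(fire_low, trimf(z, -0.5, 0.0, 0.5)),
        np.minimum(fire_med, trimf(z, 0.0, 0.5, 1.0)),
        np.minimum(fire_high, trimf(z, 0.5, 1.0, 1.5)),
    ])

    # 4) Defuzzification: centroid of the aggregated output set.
    return float(np.sum(z * aggregated) / np.sum(aggregated))

low_out = mamdani_predict(0.0, 0.0)
mid_out = mamdani_predict(0.5, 0.5)
high_out = mamdani_predict(1.0, 1.0)
print(low_out, mid_out, high_out)
```

A Sugeno-type system would keep steps 1 and 2 but replace the output fuzzy sets with constants or linear functions of the inputs, so that step 4 reduces to a weighted average.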

Many researchers have applied FIS to model the numerous responses to EDM processes since the early 2010s. Shabgard et al. [75] used the fuzzy logic technique to model the conventional EDM process and ultrasonic vibration-assisted EDM (UA-EDM) using pulse width and spark current as input parameters. Observational variables, such as MRR, TWR, and SR, were chosen as the output of the modeling system, where the Mamdani fuzzy inference method was employed for defuzzification. The average prediction accuracy of EDM and UA-EDM was similar and was found to be 96.49% and 95.068% for MRR, 96.06% and 96.84% for TWR, and 95.49% and 95.85% for SR, respectively. The high accuracy can be attributed to the lower number of validation runs employed in the study. A fuzzy logic-based MIMO system has been presented by Belloufi et al. as a means of determining machining parameters concerning voltage, current, and spark time as input factors [76]. Low values of mean percentage error obtained for MRR, EWR, WR, SR, OC, CIR, and CYL were 1.51%, 3.386%, 2.924%, 5.285%, 4.004%, 4.381%, and 2.937% respectively. Rodic et al. [77] compared the performance of a Mamdani FIS and an ANN model to predict SR in EDM of manganese alloyed tool steel. The ANN model was slightly better in prediction accuracy (95.9%) than the fuzzy system (95.14%). Researchers have also used FIS as an optimization technique to find ideal process values for the best machining output. Majumder [78] utilized a fuzzy model to generate a fitness function that was used as an input to a PSO algorithm to optimize MRR and TWR. Input parameters such as current, spark time, and pause time were used to model the EDM process by regression technique. Finally, the outputs obtained were combined using FIS to an equivalent performance index. At optimum conditions, the percentage error was found to be 27.5% and 4.75% for MRR and TWR. The high percentage error can be attributed to the lower number of experiments to train the regression model. 
Payal et al. [79] used a Taguchi-Fuzzy-based approach to generate the optimum solution for MRR and SR. A Mamdani FIS was used to unify the output values into a single performance index, which was then utilized to rank the 36 experimental observations and find the optimum input values. The authors claimed an improvement of 103.25% and 32.144% in MRR and SR, respectively, at the optimum condition from confirmation experiments. Rodic et al. [80] developed a Sugeno FIS to predict optimal process values in the EDM of AISI tool steel. Relative percentage errors of 16.98% and 12.55% were obtained for DE and MRR, respectively. A hybrid optimization technique based on multiobjective optimization based on ratio analysis (MOORA)-Fuzzy-GA has been developed by Kumar et al. to find optimal input values for EDM of a titanium alloy [81]. The authors converted the multiple responses, MRR and EWR, into a single performance index using a Mamdani FIS, after which non-linear regression analysis was utilized to find the fitness function for GA optimization. Further hybrid optimization techniques like Fuzzy-TOPSIS [82, 83] and Fuzzy-GRA [84] with RSM modeling have been explored.

Table 4 presents an overview of the existing publications incorporating the FIS-based empirical modeling approach. The development of the FIS models relied on a relatively limited number of input and output variables, in contrast to regression and RSM approaches. Again, current, voltage, pulse duration, and pause time are the four primary input variables considered, while their effects on MRR, EWR, and SR were measured in most studies. Based on the results of the reviewed works, an average prediction error of 6% was obtained. The advantage of FIS is that it is a resilient system that does not require exact inputs. Hence, such systems can tolerate a variety of inputs, including inaccurate, distorted, or unclear information. Moreover, fuzzy systems resemble human decision-making, rendering them appropriate for uncertain or imprecise reasoning, particularly for systems whose mathematical models are difficult to derive. However, the accuracy of such systems relies on forming a rule base and selecting proper membership functions, which depends entirely on user knowledge and expertise.
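The dependence of a Mamdani FIS on its rule base and membership functions can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration: the inputs (normalized current and pulse duration), the single output (normalized MRR), the triangular membership functions in `SETS`, and the rule table `RULES` are hypothetical and are not taken from any of the reviewed studies. The sketch uses min for the AND operation, max for aggregation, and centroid defuzzification.

```python
# Minimal Mamdani-type FIS sketch (hypothetical sets and rules, inputs scaled to [0, 1]).

def tri(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x < b else (c - x) / (c - b)

# Assumed fuzzy sets, shared by both inputs and the output.
SETS = {
    "low": (-0.5, 0.0, 0.5),
    "medium": (0.0, 0.5, 1.0),
    "high": (0.5, 1.0, 1.5),
}

# Assumed rule base: (current set, pulse-duration set) -> MRR set.
RULES = {
    ("low", "low"): "low", ("low", "medium"): "low", ("low", "high"): "medium",
    ("medium", "low"): "low", ("medium", "medium"): "medium", ("medium", "high"): "high",
    ("high", "low"): "medium", ("high", "medium"): "high", ("high", "high"): "high",
}

def mamdani(current, pulse_on, steps=101):
    """Return the defuzzified (centroid) output for two normalized inputs."""
    # Rule firing strengths: AND is modeled with min.
    fired = [
        (min(tri(current, *SETS[c]), tri(pulse_on, *SETS[p])), out)
        for (c, p), out in RULES.items()
    ]
    num = den = 0.0
    for k in range(steps):
        y = k / (steps - 1)
        # Clip each consequent set at its firing strength, aggregate with max.
        mu = max(min(s, tri(y, *SETS[out])) for s, out in fired)
        num += y * mu
        den += mu
    return num / den

if __name__ == "__main__":
    for c, p in [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]:
        print(c, p, round(mamdani(c, p), 3))
```

Changing an entry in `RULES` or the breakpoints in `SETS` changes the predicted output directly, which is the sensitivity to user expertise noted above.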

2.4 Artificial neural network (ANN)

In the last decade, ANNs have developed into a highly versatile modeling technique, usable in a wide range of manufacturing processes [85]. Their incorporation as an empirical technique to model the EDM process has found significant success among researchers. ANNs are inspired by the structure and function of the human brain and are composed of multiple layers of interconnected nodes, or neurons, that can learn to recognize the connection between input and output. Hence, such networks can predict future results based on input information by applying previously learned patterns to the incoming data. In a neural network, the connections between nodes in adjacent layers are assigned different weights. Moreover, each neuron has an activation function that determines whether it will fire in response to an input signal. The input layer receives the input information, and the output layer includes all the desired response information. Between these two layers, there may be a significant number of hidden layers, each with weights and activation values. The number of hidden neurons affects a network’s ability to categorize data. Too few hidden neurons might lead to underfitting, whereas too many might cause the network to fit the training data too closely, resulting in overfitting and poor generalization. Hence, selecting the correct number of hidden neurons for a particular application is important. Generally, the number of hidden layers and neurons is determined through trial and error. The response of a neuron at any layer is defined by the equation below:

\[{Y}_{j}=f\left(\sum_{i=1}^{n}{w}_{ij}{x}_{i}+{b}_{j}\right)\]

where \({Y}_{j}=\) output of the jth neuron at the current layer, \(f=\) activation function, \(n=\) total number of neurons in the previous layer, \({w}_{ij}=\) weight of the synapse between the ith and jth neurons, \({x}_{i}=\) activation of the ith neuron in the previous layer, and \({b}_{j}=\) bias value at the jth neuron [86].
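As a minimal sketch of the neuron response defined by these symbols (the weighted sum of the previous layer's activations plus a bias, passed through the activation function), the following computes the output of one neuron and of a whole layer. The input values, weights, and the choice of `tanh` as the activation are hypothetical and chosen only for illustration.

```python
import math

def neuron_output(x, w, b, f=math.tanh):
    """Y_j = f(sum_i w_ij * x_i + b_j) for a single neuron j."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    return f(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)

def layer_output(x, weights, biases, f=math.tanh):
    """Apply neuron_output to every neuron j of a layer.

    weights[j][i] is the weight between neuron i of the previous layer
    and neuron j of the current layer.
    """
    return [neuron_output(x, w_j, b_j, f) for w_j, b_j in zip(weights, biases)]

# Hypothetical normalized inputs (e.g. current, voltage, pulse duration).
x = [0.5, -0.2, 0.1]
hidden = layer_output(x, [[0.4, 0.3, -0.5], [-0.6, 0.1, 0.2]], [0.0, 0.1])
print(hidden)
```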

Before the network can be used, it must be trained with labeled data in two phases: a forward phase and a backward phase, as shown in Fig. 5 [86]. The forward phase involves presenting and propagating input vectors to each neuron to calculate its output. Mean square error (MSE) is computed for each pattern in the training set while the synaptic weights, which were initially assigned randomly, remain fixed. During the backward phase, the errors are reduced iteratively in the reverse order, from the output nodes to the input nodes. This is done by modifying the weights and biases to minimize the cost function using a gradient descent algorithm, enhancing the network’s performance on training data. Popularized by Rumelhart et al. [87], one of the mainstream techniques to calculate the gradient of the cost function of a network is the backpropagation algorithm, which propagates the error from output nodes to input nodes while simultaneously adjusting the weights and biases from the previous training iteration [88]. This is the basis of supervised machine learning widely employed to model process parameters in EDM. Most manufacturing applications benefit from supervised machine learning because they generate labeled data [89].
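The forward and backward phases can be sketched for a network with one hidden layer. This is an illustrative sketch under stated assumptions, not the procedure of any cited study: the data set `SAMPLES` is synthetic, the hidden layer uses `tanh` with a linear output neuron, the weights are updated after every training pattern (online gradient descent), and the function name `train_mlp` and all hyperparameters are hypothetical.

```python
import math
import random

def train_mlp(samples, n_hidden=4, lr=0.1, epochs=500, seed=0):
    """Train a 1-hidden-layer network by backpropagation; return (predict, mse_history)."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Synaptic weights are initially assigned at random.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j])
             for j in range(n_hidden)]
        return h, sum(w2[j] * h[j] for j in range(n_hidden)) + b2

    history = []
    for _ in range(epochs):
        sq_err = 0.0
        for x, target in samples:
            # Forward phase: propagate the input and measure the error.
            h, y = forward(x)
            err = y - target
            sq_err += err * err
            # Backward phase: propagate the error from output to input
            # and take one gradient descent step on 0.5 * err**2.
            for j in range(n_hidden):
                delta_h = err * w2[j] * (1.0 - h[j] ** 2)  # uses w2[j] before its update
                w2[j] -= lr * err * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * delta_h * x[i]
                b1[j] -= lr * delta_h
            b2 -= lr * err
        history.append(sq_err / len(samples))

    def predict(x):
        return forward(x)[1]

    return predict, history

# Synthetic labeled data: two normalized inputs and one response (their product).
SAMPLES = [([a, b], a * b) for a in (0.0, 0.5, 1.0) for b in (0.0, 0.5, 1.0)]
predict, history = train_mlp(SAMPLES)
print("first-epoch MSE:", history[0], "last-epoch MSE:", history[-1])
```

Alternative training algorithms such as LM or SCG would replace only the weight-update step; the forward phase and the error definition stay the same.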

Neural networks (NN) are defined by the type of architecture employed, the activation function, and the learning algorithm [86]. Multilayer perceptrons (MLP), or multilayer feedforward networks with backpropagation, are the networks of choice in most EDM modeling literature, combined with learning algorithms such as gradient descent (GD), GD with momentum (GDA), Levenberg–Marquardt (LM), conjugate gradient (CG), and scaled conjugate gradient (SCG) [18]. Such learning algorithms are used to update the synaptic weights of the network during training. Radial basis function neural networks (RBFNN) and recurrent neural networks (RNN) have also become popular in the past decade. Joshi et al. [18] used process responses from numerical FEM simulation to train a backpropagation neural network (BPNN) and an RBFNN. The authors concluded that RBFNN was less accurate than BPNN in their studies due to the limited availability of training data and the inherent difficulty of extrapolating results. Somashekhar et al. [90] utilized a BPNN in a 4–6–6–1 configuration combined with the GA optimization technique to predict and improve MRR in a micro-EDM system. Low mean percentage prediction errors of 0.8312% and 3.94% were obtained for MRR during training and testing, respectively. Teimouri et al. [91] compared two different ANN models, BPNN and RBFNN, to predict MRR and SR in a dry EDM process. It was found that the 6–8–5–2 BPNN model outperformed the 6–25–2 RBFNN, with root mean square errors (RMSE) for MRR and SR of 0.2411 and 0.2132 with BPNN and 0.2998 and 0.2773 with RBFNN, respectively. The authors explained that RBF networks are good for low-data problems, whereas BPNN modeling is suitable for larger data sets. An experimental model of the micro-EDM process using BPNN with a teaching learning-based optimization (TLBO) algorithm has been developed by Maity et al. [92]. 
The NN model can predict MRR, overcut, and thickness of the recast layer from the input parameters with accuracies of up to 96.1%, 99.7%, and 93.5%, respectively. The authors found that a 4–12–3 network structure gave the least prediction error, with tan-sigmoid and linear activation functions in the hidden and output layers, respectively. Singh et al. [93] compared three models, BPNN, ANFIS, and ANFIS-GA, to predict MRR and SR in a gas-aided EDM of D3 steel. Mean prediction errors from confirmation experiments with ANN, ANFIS, and ANFIS-GA were found to be 1.87%, 1.33%, and 1.27% for MRR and 2.36%, 1.34%, and 0.14% for SR, respectively. The authors concluded that ANFIS-GA had improved performance compared to ANN and ANFIS. Singh et al. [94] conducted a comparative study between RSM and the machine learning-based models ANN and ANFIS in an EDM of D3 steel under helium. From ANOVA, it was concluded that a quadratic model is sufficient to fit the data. Moreover, both ANN and ANFIS models outperformed RSM with respect to prediction accuracy. Dey et al. [95] studied five NN models, namely feed-forward NN (FNN), RNN, convolutional neural network (CNN), general regression neural network (GRNN), and long short-term memory-based RNN (LSTM-RNN), to model MRR and overcut (OC) in an electro-discharge turning (EDT) process. The authors concluded that LSTM-RNN outperformed all the other NN models in predicting MRR and OC, with the best statistical error values for both training and testing data. Comparative research between GRNN and GPR has been carried out by Singh et al. in a micro-EDM process. It was surmised that GPR modeling performed better in terms of prediction accuracy [96]. Among the different training strategies utilized in the NNs, the GD [97-103] and LM [104-112] algorithms have frequently been studied. Compared to the traditional GD method, the LM method has a faster convergence speed and higher prediction accuracy. 
SCG is another training algorithm that requires fewer epochs than the LM method but at the cost of lower prediction accuracy [18, 113, 114]. To locate global minima in a multidimensional search space, evolutionary algorithms such as GA and PSO have been applied in NNs to optimize the weights and biases for minimum error in the predicted output [115-117]. Other learning algorithms that have been studied in ANN-based EDM modeling include the adaptive moment estimation (AdaM), Broyden-Fletcher-Goldfarb-Shanno optimization algorithm (BFGS), and pseudo-inverse algorithm [95, 118, 119].

An overview of published works that use the ANN-based empirical modeling technique is presented in Table 5. Compared to the previous techniques, a significant number of input and output parameters have been explored by researchers to develop an ANN-based EDM model. In most investigations, the effects of the input factors, current, voltage, spark time, and pause time on MRR, EWR, and SR were assessed. The BPNN architecture, combined with the GD training technique, has been investigated more regularly than any other NN designs used in EDM modeling. However, the slow convergence speed and small learning rates of GD have been overcome using LM and SCG algorithms. Moreover, the activation functions for the hidden and output layers included tan-sigmoid, log-sigmoid, rectified linear activation unit (ReLU), Gaussian, exponential, and linear functions. ReLU offers the advantage of sparsity and a lower probability of gradient vanishing in contrast to sigmoid functions. Based on the outcomes of referenced articles, ANN-based models have an average percentage prediction error of 2.74%, the lowest among all methods considered in this review. Hence, this demonstrates the ability of ANN to model a complex, non-linear system like EDM, allowing the prediction of output responses based on input values outside of the training data set. Despite this, ANNs can sometimes produce erroneous outputs without cause. The black-box nature of such networks makes it difficult to have complete control over the responses. Although no general guidelines for establishing the structure of ANN exist and trial and error investigations are the only means, evolutionary algorithms such as GA and PSO have recently been used to efficiently determine an appropriate network configuration and evaluate the synaptic weights and biases.

2.5 Adaptive-network-based fuzzy inference system (ANFIS)

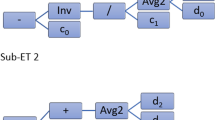

Recent trends include combining different strategies to maximize their benefits and minimize their drawbacks. This integrated approach is called "hybrid modeling methodology." Reasons for hybridization include the need to improve existing techniques, complete a wide variety of practical tasks, and achieve a high level of functionality in one package. One such computational intelligence technique developed by Jang, known as the adaptive network-based fuzzy inference system (ANFIS), combines the strengths of both ANNs and fuzzy logic systems [120]. An ANN is essentially a black box, so it is not simple to derive understandable rules from the structure of the network. On the other hand, a fuzzy system requires fine-tuning of the rules and membership values in the face of insufficient, incorrect, or conflicting information. Since there is no systematic method for this, adjustment is made through trial and error, which takes time and often results in mistakes. Traditional ANNs often struggle to find an accurate solution, while fuzzy logic systems can sometimes produce results that are too imprecise. To address both issues, ANFIS uses supervised learning to train the network weights and unsupervised learning to tune the membership function parameters [121]. According to Jang [120], ANFIS is an adaptive neural network that mimics the operation of a Takagi–Sugeno FIS with five layers: the premise layer, the multiplication or AND operation layer, the weight normalization layer, the consequent rule-base layer, and the output summation layer, as shown in Fig. 6. An in-depth explanation of the functioning of each layer can be found in the said literature. Each network layer is composed of nodes with directed connections linking them to the nodes of the following layer. The nodes in each layer contain a function with either adjustable or fixed parameters. 
Parameter selection is achieved by a hybrid learning approach that utilizes the least squares estimate (LSE) technique in the forward pass to adjust consequent parameters and the gradient approach in the backward pass to tune premise parameters [122].
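The five ANFIS layers can be sketched as a single forward pass of a first-order Takagi–Sugeno system with two inputs and two membership functions per input (four rules). The Gaussian membership functions, the parameter values in `PREMISE` and `CONSEQUENT`, and the function names are assumptions made for illustration; the hybrid learning step (LSE in the forward pass, gradient descent in the backward pass) is not implemented here.

```python
import math
from itertools import product

def gauss(x, center, sigma):
    """Gaussian membership function (an assumed choice of premise function)."""
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)

def anfis_forward(x1, x2, premise, consequent):
    """Forward pass through the five ANFIS layers; returns (output, normalized weights)."""
    # Layer 1 (premise): membership grades of each input.
    mu1 = [gauss(x1, c, s) for c, s in premise[0]]
    mu2 = [gauss(x2, c, s) for c, s in premise[1]]
    # Layer 2 (multiplication / AND): firing strength of each rule.
    w = [a * b for a, b in product(mu1, mu2)]
    # Layer 3 (normalization): relative firing strengths.
    total = sum(w)
    w_bar = [w_i / total for w_i in w]
    # Layer 4 (consequent): weighted first-order rule outputs p*x1 + q*x2 + r.
    rule_out = [wb * (p * x1 + q * x2 + r)
                for wb, (p, q, r) in zip(w_bar, consequent)]
    # Layer 5 (summation): overall output.
    return sum(rule_out), w_bar

# Hypothetical premise parameters (center, sigma) for each input's two sets.
PREMISE = [[(0.0, 0.5), (1.0, 0.5)], [(0.0, 0.5), (1.0, 0.5)]]
# Hypothetical consequent parameters (p, q, r), one tuple per rule.
CONSEQUENT = [(0.1, 0.2, 0.0), (0.3, 0.1, 0.1), (0.2, 0.4, 0.2), (0.5, 0.5, 0.3)]

y, w_bar = anfis_forward(0.3, 0.7, PREMISE, CONSEQUENT)
print(y, w_bar)
```

Because the output is linear in the consequent parameters once the normalized weights are fixed, those parameters can be estimated by least squares, which is what makes the LSE step of the hybrid learning approach possible.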

Various researchers have explored ANFIS as an experimental modeling approach for the EDM process. Pradhan et al. [123] conducted a comparative study on the modeling methodologies for predicting MRR, EWR, and OC in an EDM of D2 steel, employing an ANN and two neuro-fuzzy systems (of the Sugeno and Mamdani types). They concluded that all models performed similarly in prediction accuracy and learning speed; however, the error in forecasting EWR was larger (greater than 14%) in all models. Prabhu et al. [124] compared regression and Sugeno-type ANFIS techniques to model SR in an improved EDM process with carbon nanotube (CNT) mixed dielectric fluid. According to testing data, the ANFIS model predicted SR with an accuracy of 99.70%, outperforming regression. However, the high accuracy could be due to the small number of data points used to train the model. A similar study was conducted by Al-Ghamdi et al. with 81 experimental data, where a 21-rule ANFIS structure outperformed the traditional polynomial models with a prediction error of 1.55% [125]. Singh et al. [126] combined grey relational analysis (GRA) with Mamdani-FIS and Sugeno-ANFIS to optimize multiple responses like MH and SR in a powder-mixed EDM process. The developed models allowed grey relational grades (GRG) to be predicted, which in turn were used to find the best combination of input parameters. It was concluded that the ANFIS model was better able to predict the GRG value than its fuzzy counterpart, with an accuracy of 99.79%. Goyal et al. [127] utilized a Mamdani-FIS and ANFIS to model SR and CR in a nano-graphene particle-mixed EDM process. The authors surmised that ANFIS was a superior modeling technique with mean prediction errors of 3.48% and 1.85% for SR and CR, respectively, compared to the fuzzy method with mean prediction errors of 5.37% and 5.43% for SR and CR, respectively. 
Multiple input single output (MISO) systems involving MRR and SR have been frequently modeled with ANFIS [124, 125, 128-130]. However, the traditional ANFIS structure allows only one output to be modeled at a time. Hence, in studies involving multiple input and multiple output (MIMO) systems like EDM, each response has to be modeled individually [131-133]. In a stochastic process like EDM, the outputs are interdependent, so an isolated model does not accurately define the whole system. Therefore, a coactive neuro-fuzzy inference system (CANFIS) was employed by Wan et al. to predict the micro-EDM responses MRR, OC, taper (TA), and DPN based on the input parameters tool feed rate, gap voltage, capacitance, and workpiece type. CANFIS overcomes the single-output limitation of ANFIS architectures and allows modeling of MIMO systems with high accuracy [134]. Based on 27 data sets out of 81 experiments, the developed model had average prediction errors of 4.5%, 15.4%, 15.2%, and 6.8% for MRR, OC, TA, and DPN, respectively.

Table 6 provides a review of existing publications on the empirical modeling of EDM using the ANFIS approach. A modest number of input parameters were involved in the modeling process, and their effects on output responses, particularly MRR, SR, and OC, were explored. Additionally, the arithmetic average of the prediction error based on the results of the referenced articles was around 6%. The benefit of the ANFIS technique over ANN is that a heuristic approach is not required to find the optimal network structure for the model. Moreover, the training of an ANFIS model takes fewer epochs than ANN to converge to a minimum mean squared error (MSE) value, resulting in significantly less calculation time for ANFIS. Also, with ANFIS, the primary membership functions can be set up intuitively and the learning process can be started to build an array of fuzzy rules that predicts a particular data set, even without human expertise. However, ANFIS has significant constraints, such as the “curse of dimensionality” and the complexity of training, which restrict its application in situations involving large datasets. Furthermore, ANFIS’s hybrid learning algorithm uses the gradient descent technique in the backward pass, which is slow and tends to stop at a local minimum instead of the global minimum.

2.6 Overall summary of various modeling techniques

Even though statistical methods for developing empirical models, such as regression and RSM, offer benefits such as decreased trial numbers, optimal design selection, experimental uncertainty evaluation, determination of broad patterns between variables, and qualitative analysis of the effect of different parameters, such approaches have their assumptions and limitations. In such techniques, selecting the transforms for fitting curves to nonlinear datasets is inherently intuitive and becomes challenging when several inputs are involved. Moreover, misleading outcomes may be deduced from data with significant variations. These concerns led to the use of fuzzy and neural network methods, which address some of these challenges. Fuzzy-based systems provide human-like decisions regarding system processes. However, the success of fuzzy-based methods hinges on the ruleset used, which might differ from one programmer to the next. In contrast, ANNs are well-proven methodologies when enough training information is available, allowing for the rapid development of a model and the correct prediction of process dynamics through its output. There is also a reduced need for experimentation when updating an existing system because the existing ANN models may be used. Despite their widespread success in multiple applications, ANNs using gradient-based learning algorithms are susceptible to becoming stuck in local minima, lengthy training periods, and slow convergence rates. For this reason, different training methods like LM and SCG have been considered to overcome such issues. Evolutionary algorithms have also improved the efficiency of selecting appropriate network structures in ANN. ANFIS modeling techniques incorporate the advantages of both ANN and fuzzy systems. Nevertheless, a substantial amount of experimental data is required for the automated creation of rules. 
There is also a limitation to modeling only one output at a time, which becomes problematic if the outputs are interdependent. CANFIS is used to resolve this problem as it allows the creation of non-linear rules based on several output responses. In CANFIS, the premises are identical to ANFIS, but the consequents vary depending on how many outputs are needed. To represent associations between outputs, fuzzy rules are developed using common membership values. Based on the results of the reviewed articles, as given in Tables 2–6, a comparison of the mean percentage prediction error for the five modeling methods is given in Fig. 7. ANN-based models can provide better forecasting accuracy than other modeling techniques, followed by fuzzy, ANFIS, RSM, and regression. The higher precision of ANN systems can be attributed to the ability to incorporate different modifications such as network configurations, training strategies, transfer functions, learning rate, and epochs to improve the process output. Since general ANFIS structures are limited to MISO systems, they do not consider the interdependency between the different responses. This limitation creates discrepancies between the predicted and expected outputs, leading to higher errors than ANNs. Similarly, the performance of FIS depends on the expertise of the user in the creation of the ruleset and the selection of membership functions. The reliability of RSM and regression is lower than that of the other techniques due to the lack of adaptability of such models.
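For reference, a mean percentage prediction error of the kind compared across methods can be computed as sketched below. The definition used here (mean absolute relative deviation between measured and predicted responses, in percent) is an assumption; individual studies may define their error or accuracy measures differently, and the numbers in the example are hypothetical.

```python
def mean_percentage_error(measured, predicted):
    """Mean absolute percentage prediction error, in percent."""
    if len(measured) != len(predicted) or not measured:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(m - p) / abs(m) for m, p in zip(measured, predicted))
    return 100.0 * total / len(measured)

# Hypothetical measured and predicted MRR values from confirmation runs.
print(mean_percentage_error([100.0, 200.0], [90.0, 220.0]))
```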

2.7 Various optimization techniques used alongside the empirical modeling

Most of the modeling techniques discussed in the previous sections are used together with optimization methods to find the optimal set of input parameters. Modeling the system and determining which input factors have the most influence on output responses is a prerequisite to optimizing process variables. Due to the unpredictable nature of the EDM process, it is crucial to optimize the system parameters in such a machining technique. Some of these optimization techniques are discussed in the following paragraphs.

Developed in the 1960s and 1970s by Holland et al., GA belongs to the class of evolutionary algorithms that use the principle of natural selection in a self-replicating population to generate a highly effective and robust search approach [135]. This algorithm optimizes a given function by iteratively trying to improve it, employing operations influenced by evolutionary biology, such as selection, crossover, and mutation [136]. For problems with no clear algorithmic answer, GAs can generate candidates for the Pareto optimal solution set, which can then be tested and refined until the desired result is achieved [137]. A population of potential solutions to the objective function is first created. Next, the solutions are ranked by their fitness values, and the fittest solutions are crossed to create a new generation that may be closer to the optimal solution. In each generation of solutions, the less fit ones progressively die without reproducing, while the fitter ones mate, combining their best qualities to produce children that are likely to be better than their parents. To improve population diversity and find a better solution, mutations are introduced into solution strings. This cycle is repeated until a specific number of generations is reached or Pareto-optimal solutions are found [138]. As a result, GA is a powerful optimization approach that differs from the problem-solving strategies employed by more conventional algorithms, which are often more linear and likely to become trapped at local minima. Moreover, it depends entirely on the fitness value of the objective function (obtained from the modeling of the process) and requires no further information to operate. Some popular forms of GA include the multiobjective genetic algorithm (MOGA), the vector-evaluated genetic algorithm (VEGA), and the non-dominated sorted genetic algorithm (NSGA) [139]. Abidi et al. 
[140] employed RSM to determine objective functions, which were utilized in a MOGA-II optimization technique to find the Pareto optimal solution of response variables in a micro-EDM process of a nickel-titanium-based alloy using tungsten and brass tool electrodes. From the generated solution set, which took 2000 generations, the authors identified three optimal points that correspond to tungsten as the better tool material and also satisfy the requirements of low EWR and SR while obeying the prescribed constraints. Gostimirovic et al. [141] developed model equations using GA and NSGA-II for multiple objective optimization problems with 600 generations to find the range of acceptable values of MRR and SR that provide high energy efficiency. Model equations generated from the GA could predict MRR and SR with mean error percentages of 29.9% and 14.7%, respectively. Kumar et al. [142] used regression modeling and a modified NSGA-II strategy to determine the best combinations of process variables in a micro-EDM of a titanium alloy for improving the DR while reducing TWR. Validation experiments at the Pareto optimal set yielded DR and TWR with absolute mean percentage prediction errors of 4.54% and 5.77%, respectively. Singh et al. [143] conducted a comparative study of three different optimization techniques, DFA, GA, and TLBO, in EDM of a copper shape memory alloy. For both single and multiple objective optimizations of EWR and DA, optimum conditions determined through GA and TLBO were similar, while DFA conditions were quite different. Statistical-based modeling techniques like regression and RSM have been used to generate the optimization function for GA in references [32, 40, 41] and [56, 66, 68], respectively. Moreover, multiobjective GA approaches have been combined with artificial intelligence-based methods like ANN, FIS, and support vector machine (SVM) in references [81, 90, 94, 102, 106, 111] and [144].
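The selection, crossover, and mutation cycle can be sketched with a small real-coded GA. This is a single-objective illustration only, not MOGA-II or NSGA-II: the tournament selection, blend crossover, Gaussian mutation, and elitism used here are assumed design choices, and `toy_fitness` is a hypothetical stand-in for a fitness function obtained from a regression or RSM model.

```python
import random

def genetic_algorithm(fitness, bounds, pop_size=30, generations=60,
                      mutation_rate=0.1, seed=0):
    """Maximize `fitness` over box `bounds` with a simple real-coded GA."""
    rng = random.Random(seed)

    def tournament(pop):
        # Selection: the fitter of two randomly drawn individuals becomes a parent.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    def crossover(p1, p2):
        # Blend crossover: the child is a random convex combination of the parents.
        alpha = rng.random()
        return [alpha * a + (1.0 - alpha) * b for a, b in zip(p1, p2)]

    def mutate(ind):
        # Mutation: occasional Gaussian perturbation, clipped to the bounds.
        out = []
        for gene, (lo, hi) in zip(ind, bounds):
            if rng.random() < mutation_rate:
                gene += rng.gauss(0.0, 0.1 * (hi - lo))
            out.append(min(hi, max(lo, gene)))
        return out

    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        children = [max(pop, key=fitness)]  # elitism: keep the current best
        while len(children) < pop_size:
            children.append(mutate(crossover(tournament(pop), tournament(pop))))
        pop = children
    return max(pop, key=fitness)

def toy_fitness(x):
    """Hypothetical surrogate with its maximum (0) at x = (0.7, 0.3)."""
    return -((x[0] - 0.7) ** 2) - (x[1] - 0.3) ** 2

best = genetic_algorithm(toy_fitness, [(0.0, 1.0), (0.0, 1.0)])
print(best, toy_fitness(best))
```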

Optimizing complicated EDM processes with a large number of response variables has been found to benefit significantly from the DFA. First developed in 1965 by Harrington and then modified by Derringer and Suich, the desirability function is a technique for selecting input parameter settings that best balance the tradeoffs among different process metrics [145, 146]. The approach involves converting each output modeling equation into a mathematical function with values between 0 and 1 that represents how closely that response meets its desired outcome. This desirability response function is minimized or maximized to find the best possible solution. Finally, input factors are selected to maximize the geometric average of the individual desirability functions, which represents the total desirability [147]. Mehfuz et al. [148] combined regression modeling and DFA to optimize a micro-EDM milling process with prediction errors of 0%, 5.56%, 11.11%, and 16.98% for SR, roughness height, MRR, and TWR, respectively. The high percentage errors for MRR and TWR were attributed to the low measurement resolution of the balance used in the study. Sengottuvel et al. [149] aimed to optimize the tool electrode shape that generated the highest MRR with minimal EWR and SR using fuzzy logic modeling and DFA. Prediction errors of less than 5% were obtained, with rectangular copper electrodes producing the best set of results. Experimental modeling using RSM with a CCD DOE and multiresponse optimization with DFA was carried out by Dikshit et al. [150]. Prediction errors of 2.19% and 2.58% at optimal conditions were reported for MRR and SR, respectively. Sahu et al. [151] adopted a blended optimization approach known as desirability function-based GRA (DGRA) in conjunction with the firefly algorithm (FA) to concurrently enhance all performance indicators, along with the least square support vector machine (LSSVM) to model the EDM process. The authors concluded that AlSi10Mg is the best tool electrode to EDM a titanium workpiece. 
A combination of RSM-based modeling and DFA optimization can be found in works [53, 54, 57, 66] and [152].
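The individual and total desirability described above can be sketched as follows, using the one-sided Derringer and Suich forms for a response to be maximized and a response to be minimized. The limits, targets, shape exponent `r`, and response values in the example are hypothetical.

```python
import math

def d_larger_is_better(y, low, target, r=1.0):
    """Desirability of a response to be maximized (0 at or below low, 1 at or above target)."""
    if y <= low:
        return 0.0
    if y >= target:
        return 1.0
    return ((y - low) / (target - low)) ** r

def d_smaller_is_better(y, target, high, r=1.0):
    """Desirability of a response to be minimized (1 at or below target, 0 at or above high)."""
    if y <= target:
        return 1.0
    if y >= high:
        return 0.0
    return ((high - y) / (high - target)) ** r

def total_desirability(ds):
    """Geometric average of the individual desirabilities."""
    return math.prod(ds) ** (1.0 / len(ds))

# Hypothetical predicted responses at one parameter setting.
d_mrr = d_larger_is_better(5.0, low=0.0, target=10.0)   # MRR should be high
d_sr = d_smaller_is_better(2.0, target=1.0, high=5.0)   # SR should be low
print(d_mrr, d_sr, total_desirability([d_mrr, d_sr]))
```

Because the total is a geometric average, a single response with zero desirability drives the total to zero, so no setting that fails one requirement outright can be selected as optimal.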

PSO, a member of the evolutionary algorithms family, is a search heuristic that mimics the cooperative problem-solving strategies of animals like swarms of bees or flocks of birds to locate Pareto-optimal answers to a variety of optimization challenges. Developed by Kennedy and Eberhart, PSO uses the concept of a “swarm” of particles representing the potential solutions that move around in a search space according to a fitness function, looking for the best solution to a given problem [153]. Each particle keeps its own “personal best” solution and is also aware of the best global solution obtained by any particle in the swarm to date. These two solutions direct the travel of the particle through the search space. The search is iterative; when either the maximum number of cycles is reached or the least error criterion is met, the procedure is considered complete. PSO is advantageous since it uses real data as particles, is simple to implement, and has few adjusting factors [154]. PSO is very successful at addressing optimization issues that are challenging or impossible to solve using conventional approaches like gradient descent and quasi-Newton methods. For instance, PSO has been used to optimize the design of neural networks, antennas, and other complicated systems [155, 156]. Various forms of PSO exist, each with its own set of advantages and disadvantages [157]. In general, however, PSO is a robust optimization approach that can resolve a broad range of optimization issues. Aich et al. [158] utilized PSO to obtain the ideal working parameters of a support vector machine used for modeling a die-sinking EDM process. Subsequently, PSO was used to find optimal process values for maximum MRR and minimum SR, with mean absolute percent errors (MAPE) of the developed model of 8.09% and 7.08%, respectively. Majumder et al. 
[159] compared three different PSO techniques, PSO-original, PSO-inertia weight, and PSO-constriction factor, for multiobjective optimization of an EDM process. The last of these was found to be the most efficient, with prediction errors of 2.81% and 7.95% for MRR and EWR, respectively. A mathematical model was developed using RSM, from which an objective function for PSO was generated using DFA. Nano powder-mixed EDM (PMEDM) processes were optimized with PSO by Mohanty et al. [160] and Prakash et al. [161] with great success. In both studies, RSM was utilized to obtain the model functions for optimization. RSM and regression modeling-based PSO works can be found in the literature [49] and [27, 43], respectively. Moreover, machine-learning-based approaches with PSO can be found in references [78, 100, 101, 117]. Some non-traditional optimization techniques like the Jaya algorithm, Rao-1, biogeography-based optimization (BBO), and ant colony optimization (ACO) have also been reported in articles [39, 59], and [70], respectively.
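The personal-best and global-best update rules can be sketched with an inertia-weight PSO that minimizes a single objective. The coefficient values (`w`, `c1`, `c2`), the swarm size, and `toy_objective` are hypothetical choices for illustration; in an EDM study the objective would come from the fitted process model.

```python
import random

def pso(objective, bounds, n_particles=20, iterations=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over box `bounds`; returns (best position, best value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                  # personal best positions
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda k: pbest_val[k])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # global best so far

    for _ in range(iterations):
        for k in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull toward personal best + pull toward global best.
                vel[k][d] = (w * vel[k][d]
                             + c1 * r1 * (pbest[k][d] - pos[k][d])
                             + c2 * r2 * (gbest[d] - pos[k][d]))
                pos[k][d] = min(hi, max(lo, pos[k][d] + vel[k][d]))
            val = objective(pos[k])
            if val < pbest_val[k]:
                pbest[k], pbest_val[k] = pos[k][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[k][:], val
    return gbest, gbest_val

def toy_objective(x):
    """Hypothetical objective with its minimum (0) at x = (0.2, 0.8)."""
    return (x[0] - 0.2) ** 2 + (x[1] - 0.8) ** 2

best_pos, best_val = pso(toy_objective, [(0.0, 1.0), (0.0, 1.0)])
print(best_pos, best_val)
```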

Although single-response optimization from experimental data has been used in EDM processes [162, 163], statistical methods can provide a more efficient, accurate, and robust approach to optimization. Rank-based optimization techniques like the Taguchi method and GRA rely on statistical approaches to optimize a system by identifying the key factors that affect the performance of the system and controlling these factors to improve the overall performance. Such methods do not require a prior modeling process to generate the fitness functions used in optimization algorithms like GA, DFA, and PSO. Taguchi optimization is based on robust DOE, utilizing orthogonal arrays (OA) to select a subset of factors most likely to impact the output significantly. Using OA reduces the number of experiments, making the process more efficient and cost-effective than traditional DOE techniques. Pilot experiments utilizing a single-factor experimental method can be performed before setting up the OA to determine the range of the significant factors in an EDM process [164, 165]. The drawback of relying only on the traditional DOE technique of varying one factor at a time for optimization is that it does not account for potential interactions between the factors [166]. The Taguchi method optimizes a system by analyzing the performance variation due to noise or environmental variation through the signal-to-noise (S/N) ratio. The S/N ratio can be calculated using different formulas depending on the type of response being measured [167]. This technique has been utilized to optimize the different responses in EDM processes [168, 169]. However, the Taguchi method is a univariate optimization technique, which attempts to identify an ideal combination of input factors that maximizes or minimizes a single response. Hence, GRA is used instead to perform multiresponse optimization.
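The commonly used S/N ratio formulas for the three response types can be sketched as below. These are the standard textbook forms (larger-the-better, smaller-the-better, and nominal-the-best); the function names and the replicate values in the example are hypothetical, and a given study may use a variant.

```python
import math

def sn_larger_is_better(ys):
    """S/N = -10 log10( mean(1 / y^2) ), e.g. for MRR."""
    return -10.0 * math.log10(sum(1.0 / (y * y) for y in ys) / len(ys))

def sn_smaller_is_better(ys):
    """S/N = -10 log10( mean(y^2) ), e.g. for SR or TWR."""
    return -10.0 * math.log10(sum(y * y for y in ys) / len(ys))

def sn_nominal_is_best(ys):
    """S/N = 10 log10( mean^2 / sample variance ); needs at least two replicates."""
    n = len(ys)
    mean = sum(ys) / n
    var = sum((y - mean) ** 2 for y in ys) / (n - 1)
    return 10.0 * math.log10(mean * mean / var)

# Hypothetical replicate measurements for one run of an orthogonal array.
print(sn_larger_is_better([10.0, 10.0]))
print(sn_smaller_is_better([10.0, 10.0]))
print(sn_nominal_is_best([9.0, 10.0, 11.0]))
```

In every case the factor level with the highest S/N ratio is preferred, regardless of whether the underlying response is to be maximized or minimized.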

Based on grey system theory, GRA is a mathematical method used in the fields of mathematics and computer science to compare multiple datasets and find patterns of similarity between them [170]. Dr. Deng first introduced it in 1982, and since then, it has found application in many other areas, including optimization, modeling, and experimental design [171]. It is particularly effective for correlating input variables with response parameters to optimize a system or process. There are generally three stages in GRA: data preprocessing or normalization, determination of the grey relation matrix, and interpretation of results [172]. The result is a set of grey relational grade (GRG) values pertaining to the multiresponse feature, ranked from highest to lowest, with the maximum GRG value indicating the optimal experimental condition for that process. Thus, the optimization of multiple outputs may be transformed into the optimization of a single relational grade. EDM is a complex machining technique with numerous performance attributes in which responses like MRR need to be as high as possible, while SR, TWR, and OC need to be low. Conventional optimization techniques such as the Taguchi method help find an optimal solution for single responses only [173]. However, an enhancement in one response variable might have a detrimental effect on other performance metrics. Hence, a combination of Taguchi DOE and the GRA method can effectively address this challenge of optimizing interdependent multiperformance features in EDM by transforming the multiple outputs into a single parameter for optimization [174-183].
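The three GRA stages can be sketched as follows: normalization of each response, computation of grey relational coefficients, and averaging into one GRG per experimental run. The distinguishing coefficient `zeta = 0.5` and the equal weighting of responses are common choices that are assumed here, and the response values are hypothetical.

```python
def normalize(values, larger_is_better):
    """Scale one response to [0, 1] so that 1 is always the best value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("response has no variation across runs")
    if larger_is_better:
        return [(v - lo) / (hi - lo) for v in values]
    return [(hi - v) / (hi - lo) for v in values]

def grey_relational_grades(responses, larger_is_better, zeta=0.5):
    """Return one grey relational grade (GRG) per experimental run.

    responses: list of columns, one list of run values per response.
    larger_is_better: one boolean per response.
    """
    norm = [normalize(col, lb) for col, lb in zip(responses, larger_is_better)]
    # Deviation of each normalized value from the ideal value of 1.
    delta = [[1.0 - v for v in col] for col in norm]
    flat = [d for col in delta for d in col]
    d_min, d_max = min(flat), max(flat)
    # Grey relational coefficient of each response in each run.
    coeff = [[(d_min + zeta * d_max) / (d + zeta * d_max) for d in col]
             for col in delta]
    n_runs = len(responses[0])
    # GRG: equally weighted mean of the coefficients of a run.
    return [sum(col[i] for col in coeff) / len(coeff) for i in range(n_runs)]

# Hypothetical results of three runs: MRR (maximize) and SR (minimize).
mrr = [10.0, 20.0, 30.0]
sr = [3.0, 2.0, 1.0]
grades = grey_relational_grades([mrr, sr], [True, False])
best_run = max(range(len(grades)), key=lambda i: grades[i])
print(grades, "best run index:", best_run)
```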

Since different process factors have varied effects on the performance characteristics, it is challenging to determine a single ideal combination of process parameters for the EDM process. Therefore, a multiobjective optimization approach is required to solve this issue. Classical rank-based optimization techniques like GRA and TOPSIS reduce a multiobjective problem to a single target by assigning relative weights to each variable. However, such methods give unreliable results if the function becomes discontinuous or too many optimization goals are added [181]. Hence, search-based algorithms like GA, DFA, and PSO, which provide a Pareto optimal set of results, have been found to be more efficient in the optimization of the EDM process.
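
To make the notion of a Pareto optimal set concrete, the sketch below filters a list of candidate solutions down to the non-dominated ones. It is not an implementation of GA or PSO; it only shows the dominance test that such algorithms apply to their candidate solutions. All objectives are assumed to be minimized, so a response to be maximized (such as MRR) is negated first. The function names and the candidate values are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in one.

    All objectives are assumed to be minimized.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Return the points that are not dominated by any other point."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (MRR, SR) outcomes of four parameter settings.
candidates = [(10.0, 4.0), (20.0, 5.0), (15.0, 3.0), (8.0, 6.0)]

# Negate MRR so that both objectives are minimized.
objectives = [(-mrr, sr) for mrr, sr in candidates]
front = [(-neg_mrr, sr) for neg_mrr, sr in pareto_front(objectives)]
print("Non-dominated (MRR, SR) pairs:", front)
```

Unlike a weighted single-grade ranking, the result is a set of trade-off solutions from which the process engineer selects according to the priority given to each response.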

3 Geographical and time-based trends of the research

Experimental modeling of EDM is a popular trend in the machining industry. This modeling approach enables manufacturers to predict how their system will behave during machining operations and to optimize their processes accordingly. Such modeling can investigate a wide range of factors that affect machining performance, including MRR, tool wear, and SR. In recent years, considerable progress has been made in developing EDM modeling techniques, and it is now possible to create highly accurate models that predict machining behavior with great precision. Figure 8 depicts a geographical heat map of the research contributions of each country toward the empirical modeling of EDM. This chart was generated based on the affiliations of the first and second authors of each publication. As the chart shows, publications in this field mainly originated in Asia, with a modest number from parts of Europe and North America. Moreover, India contributed the most to this scope of EDM research, with 69 publications between 2002 and 2022 based on the origin of the first author, followed by Türkiye, Iran, Malaysia, and China. Meanwhile, Algeria and Egypt are the only African countries that conducted research on experimental modeling during this period.

Fig. 8 Experimental EDM modeling research publications by country, based on the country affiliations of the first and second authors of the reviewed articles between 2002 and 2022; the intensity of the colors represents the number of authors from each country; India has the highest contribution to experimental EDM research

A temporal trend can also be observed from the bar chart in Fig. 9, based on the number of reviewed articles on experimental modeling between 2002 and 2022. Although few research papers were published on this topic in the early 2000s, interest has steadily grown over the past few years, as shown by the upward trend, with the graph reaching its peak in 2022. The majority of the literature considered in this review was published between 2013 and 2022. Hence, it is evident that more research has been conducted in recent years on the empirical modeling of the EDM system.

The percentages of the five experimental modeling methods discussed in this paper (regression, RSM, FIS, ANN, and ANFIS), based on the number of reviewed articles between 2002 and 2022, are shown in Fig. 10. More than 50% of the papers in this review are based on statistical methods like regression and RSM. This can be attributed to the widespread use of statistical software packages that allow the convenient and quick generation of such models from experimental data. Among artificial intelligence techniques, ANN is by far the most prevalent experimental modeling approach utilized by researchers in the past few years. Its use is rising with the incorporation of meta-heuristic learning algorithms to improve the network structure, which overcomes the trial-and-error procedure of determining the hyperparameters of traditional ANN algorithms. Moreover, the ability to provide human-like decisions about the responses in a dynamic process like EDM and the adaptability to changing inputs make such machine learning-based models more prominent. In general, there is a rise in the popularity of all the modeling techniques considered in this review.

4 Conclusion

An in-depth literature evaluation on the experimental modeling strategies for the EDM system covering the years 2002–2022 is compiled and summarized in this article. Various process and response factors, state-of-the-art algorithms, and optimization techniques employed by the researchers for EDM model development are also presented. Each modeling approach has been critically evaluated to determine the benefits and drawbacks. In addition, country-specific trends have been identified regarding the intensity of research, frequency of articles published, and classification of the different empirical techniques employed over the period considered. According to the literature, the following conclusions can be made:

- Statistical techniques like regression and RSM suffer from inaccuracies due to non-linearities and high variations in training data.
- The usefulness of FL-based modeling methodologies is restricted by the need for human skill and process knowledge in establishing the rule base and membership functions.
- A neural network-based model can accurately forecast process dynamics, even for inputs outside the training data set.
- The performance of ANN depends on network topology, training method, size of training and testing datasets, training period, hidden and output layer transfer functions, synaptic weights, and biases, which adds to the complexity of setting up such methods.
- ANFIS combines the strengths of FIS and ANN in automatic rule-base generation and accurate prediction of process outputs.
- A modification to the ANFIS technique is required to allow the modeling of multiple responses simultaneously.
- Based on author affiliations of reviewed articles, India has the highest number of publications related to experimental modeling of EDM.
- RSM is the most widely used modeling approach among researchers and is closely followed by ANN.
- An increase in the use of ANN and ANFIS techniques has been observed in recent years.
- ANNs demonstrate higher prediction accuracy in EDM modeling compared to the other techniques.
- A general upward trend is evident from the reviewed literature, revealing the growing research interest in developing accurate models from experimental data.

References

Meshram DB, Puri YM (2017) Review of research work in die sinking EDM for machining curved hole. J Braz Soc Mech Sci Eng 39:2593–2605

Webzell S (2001) The first step into EDM in machinery. Findlay Publications Ltd, Kent, UK

Ho KH, Newman ST (2003) State of the art electrical discharge machining (EDM). Int J Mach Tools Manuf 43:1287–1300. https://doi.org/10.1016/S0890-6955(03)00162-7

Jameson EC (2001) Electrical discharge machining. Society of Manufacturing Engineers (SME), Michigan

McGeough JA (1988) Advanced methods of machining. Chapman and Hall, London, UK

Lonardo PM, Bruzzone AA (1999) Effect of flushing and electrode material on die sinking EDM. CIRP Ann Manuf Technol 48:123–126. https://doi.org/10.1016/S0007-8506(07)63146-1

Panda RC, Sharada A, Samanta LD (2021) A review on electrical discharge machining and its characterization. Mater Today Proc. https://doi.org/10.1016/j.matpr.2020.11.546

Jahan MP, Rahman M, Wong YS (2014) Micro-electrical discharge machining (Micro-EDM): processes, varieties, and applications. Comp Mater Process 11:333–371. https://doi.org/10.1016/B978-0-08-096532-1.01107-9

Pham DT, Dimov SS, Bigot S et al (2004) Micro-EDM - recent developments and research issues. J Mater Process Technol 149:50–57. https://doi.org/10.1016/j.jmatprotec.2004.02.008

Mollik MS, Saleh T, Bin Md Nor KA, Ali MSM (2022) A machine learning-based classification model to identify the effectiveness of vibration for μEDM. Alex Eng J 61:6979–6989. https://doi.org/10.1016/j.aej.2021.12.048

Jahan MP, Rahman M, Wong YS (2011) Study on the nano-powder-mixed sinking and milling micro-EDM of WC-Co. Int J Adv Manuf Technol 53:167–180. https://doi.org/10.1007/s00170-010-2826-9

Zhang Z, Zhang Y, Ming W et al (2021) A review on magnetic field assisted electrical discharge machining. J Manuf Process 64:694–722

Muthuramalingam T, Mohan B (2015) A review on influence of electrical process parameters in EDM process. Arch Civ Mech Eng 15:87–94

Raju L, Hiremath SS (2016) A state-of-the-art review on micro electro-discharge machining. Procedia Technol 25:1281–1288. https://doi.org/10.1016/j.protcy.2016.08.222

Tariq Jilani S, Pandey PC (1982) Analysis and modelling of EDM parameters. Precis Eng 4:215–221. https://doi.org/10.1016/0141-6359(82)90011-3

DiBitonto DD, Eubank PT, Patel MR, Barrufet MA (1989) Theoretical models of the electrical discharge machining process. I. A simple cathode erosion model. J Appl Phys 66:4095–4103. https://doi.org/10.1063/1.343994

Eubank PT, Patel MR, Barrufet MA, Bozkurt B (1993) Theoretical models of the electrical discharge machining process. III. The variable mass, cylindrical plasma model. J Appl Phys 73:7900–7909. https://doi.org/10.1063/1.353942

Joshi SN, Pande SS (2009) Development of an intelligent process model for EDM. Int J Adv Manuf Technol 45:300–317. https://doi.org/10.1007/s00170-009-1972-4

Izquierdo B, Sánchez JA, Plaza S et al (2009) A numerical model of the EDM process considering the effect of multiple discharges. Int J Mach Tools Manuf 49:220–229. https://doi.org/10.1016/j.ijmachtools.2008.11.003

Bańkowski D, Młynarczyk P (2022) Influence of EDM process parameters on the surface finish of alnico alloys. Materials 15. https://doi.org/10.3390/ma15207277

Zhang Y, Zhang G, Zhang Z et al (2022) Effect of assisted transverse magnetic field on distortion behavior of thin-walled components in WEDM process. Chin J Aeronaut 35:291–307. https://doi.org/10.1016/j.cja.2020.10.034

Ostertagová E (2012) Modelling using polynomial regression. Procedia Eng 48:500–506. https://doi.org/10.1016/j.proeng.2012.09.545

Çogun C, Akaslan S (2002) The effect of machining parameters on tool electrode edge wear and machining performance in electric discharge machining (EDM). KSME Int J 16:46–59. https://doi.org/10.1007/BF03185155

Keskin Y, Halkaci HS, Kizil M (2006) An experimental study for determination of the effects of machining parameters on surface roughness in electrical discharge machining (EDM). Int J Adv Manuf Technol 28:1118–1121. https://doi.org/10.1007/s00170-004-2478-8

Azadi Moghaddam M, Kolahan F (2015) Optimization of EDM process parameters using statistical analysis and simulated annealing algorithm. Int J Eng Trans A 28:157–166. https://doi.org/10.5829/idosi.ije.2015.28.01a.20

Kuriachen B, Mathew J (2016) Spark radius modeling of resistance-capacitance pulse discharge in micro-electric discharge machining of Ti-6Al-4V: an experimental study. Int J Adv Manuf Technol 85:1983–1993. https://doi.org/10.1007/s00170-015-7999-9

Dang XP (2018) Constrained multi-objective optimization of EDM process parameters using kriging model and particle swarm algorithm. Mater Manuf Processes 33:397–404. https://doi.org/10.1080/10426914.2017.1292037

Dey K, Kalita K, Chakraborty S (2022) A comparative analysis on metamodel-based predictive modeling of electrical discharge machining processes. Int J Interact Des Manuf. https://doi.org/10.1007/s12008-022-00939-5

Ay M, Çaydaş U, Hasçalik A (2013) Optimization of micro-EDM drilling of Inconel 718 superalloy. Int J Adv Manuf Technol 66:1015–1023. https://doi.org/10.1007/s00170-012-4385-8

Torres A, Puertas I, Luis CJ (2014) Modelling of surface finish, electrode wear and material removal rate in electrical discharge machining of hard-to-machine alloys. Precis Eng 40:33–45. https://doi.org/10.1016/j.precisioneng.2014.10.001

Laxman J, Raj KG (2015) Mathematical modeling and analysis of EDM process parameters based on Taguchi design of experiments. J Phys Conf Ser 662. https://doi.org/10.1088/1742-6596/662/1/012025

Mausam K, Kumar Singh P, Sharma K, Gupta RC (2016) Investigation of process parameter of EDM using genetic algorithm (GA) approach for carbon fiber based two phase epoxy composites. Mater Today Proc 3:4102–4108. https://doi.org/10.1016/j.matpr.2016.11.081

Selvarajan L, Manohar M, Udhayakumar A, Dhinakaran P (2017) Modelling and experimental investigation of process parameters in EDM of Si3N4-TiN composites using GRA-RSM. J Mech Sci Technol 31:111–122. https://doi.org/10.1007/s12206-016-1009-5

Salcedo AT, Arbizu IP, Luis Pérez CJ (2017) Analytical modelling of energy density and optimization of the EDM machining parameters of Inconel 600. Metals (Basel) 7. https://doi.org/10.3390/met7050166

Niamat M, Sarfraz S, Shehab E et al (2019) Experimental characterization of electrical discharge machining of aluminum 6061 T6 alloy using different dielectrics. Arab J Sci Eng 44:8043–8052. https://doi.org/10.1007/s13369-019-03987-4

Meslameni W, Kamoun T, Hbaieb M (2019) Experimental modeling of EDM process using the experimental design method. Int J Appl Res Technol 2:39–47

Kalyon A (2020) Optimization of machining parameters in sinking electrical discharge machine of caldie plastic mold tool steel. https://doi.org/10.1007/s12046-020-1305-8

Gaikwad MU, Krishnamoorthy A, Jatti VS (2021) Implementation of Jaya algorithm for process parameter optimization during EDM processing of NiTi60 alloy. Mater Today Proc 47:5701–5708. https://doi.org/10.1016/j.matpr.2021.04.157