Abstract

Impellers are widely used in industrial equipment, yet impeller blades are still welded mainly by hand. To address this situation, this paper introduces a novel point-cloud-based welding path planning method for robotic welding of impeller blades. First, to dispense with the traditional teach-and-playback mode and the offline programming of welding robots, the welding path is planned automatically from a point cloud captured by a three-dimensional (3D) structured light camera. To facilitate subsequent sampling and filtering of the point cloud, a novel 3D camera pose planning method is proposed that accurately and efficiently captures the point cloud and coordinates containing the welding seam information. After the impeller point cloud is filtered, a novel distance-based algorithm for the rough extraction of the scattered welding seam point cloud of the impeller blades is proposed. Based on MATLAB simulations, a least-squares polynomial fitting method is chosen to fit the scattered welding seam point cloud, which accommodates the spatial characteristics and diversity of welding seams. Finally, discrete interpolation is performed on the fitted welding seam point cloud to obtain the impeller blade welding path. Experimental results show that the proposed method plans the welding path for robotic welding of impeller blades accurately and efficiently, and completes the welding task without teaching or programming before welding.


1 Introduction

The impeller is an important component of many kinds of industrial equipment, such as centrifugal compressors, impeller aerators and agitators. However, because of the impeller structure, impeller blades are at present mainly welded by hand. Manual welding has several disadvantages: its low efficiency and poor consistency reduce equipment stability and productivity, and the welding environment is harmful to the human body. With the rapid development of automation and robot technologies, welding robots are widely used to replace human welders. Their wide application addresses the above problems and has greatly raised production levels [1, 2]. Research on robotic welding of impeller blades therefore has important practical significance.

Industrial field research shows that teach-and-playback and offline programming (OLP) are still the two main working modes of modern welding robots. In both modes, however, the operator must carry out complicated teaching and programming of the welding path before welding, which demands considerable operator skill and accuracy. To overcome these problems, many researchers have studied welding seam extraction and welding path planning with different methods for different types of workpieces.

The literature shows that eliminating the complicated pre-welding teaching and programming of welding robots requires the assistance of computer vision. Previous studies have conducted in-depth research on image processing and established viable methods for several visual sensors, including mono cameras, stereo cameras and CCD cameras [3]. With the development of structured light vision sensing, the available solutions have diversified. Structured light sensors are mainly divided into laser structured light sensors and surface structured light sensors. Using laser structured light vision sensors, Zeng et al. [4] proposed a three-dimensional (3D) offline welding path planning method for narrow butt joints, and Hou et al. [5] proposed a teaching-free welding method for robotic gas metal arc welding (GMAW) based on a laser visual sensing system (LVSS). Because a laser structured light sensor is a local sensor, it cannot perceive the global environment [6]. It is therefore mainly applied to real-time seam tracking and identification and is not suitable for offline 3D path planning of welding robots.

Compared with laser structured light sensors, surface structured light sensors perform better in 3D reconstruction and can perceive global information. Some researchers have therefore turned to surface structured light sensors to generate point clouds for accurate and efficient offline 3D path planning. For example, Xu et al. [7] combined point clouds with deep learning to plan welding paths from highly redundant and unevenly dense point clouds, enabling automatic welding. Yang et al. [8] proposed a novel 3D weld seam path extraction method based on a stereo structured light sensor that serves the 3D path teaching task before welding. Point cloud processing for welding robot path planning has thus become a new solution to the problem of complex teaching and programming before welding.

However, most workpiece point clouds are currently obtained by scanning the entire workpiece with a laser scanning sensor, which takes a long time and requires the point coordinates to be computed afterwards. To address this, this paper uses a 3D surface structured light camera, which quickly captures the point cloud of the workpiece together with its 3D coordinates at the same time. This shortens acquisition time and thereby improves overall welding efficiency.

The core contribution of this paper is a novel point-cloud-based welding path planning method for robotic welding of impeller blades. The method allows a robot to weld impeller blades automatically in place of manual welding, and it plans the blade welding path accurately and efficiently without teaching or programming before welding. The rest of the paper is organized as follows. Section 2 introduces the experimental system and framework. Section 3 proposes a novel 3D camera pose planning method for accurately and efficiently capturing the point cloud and coordinates containing the welding seam information, and then describes the preprocessing of the captured point cloud. Section 4 proposes a novel distance-based algorithm for the rough extraction of the scattered welding seam point cloud of the impeller blades; then, to accommodate the spatial characteristics and diversity of welding seams, it presents a welding path planning algorithm, selected through MATLAB simulation, based on least-squares polynomial fitting and discrete interpolation. Section 5 validates the efficiency and accuracy of the method through an efficiency analysis and welding platform experiments. Section 6 presents conclusions and future work.

2 Description of experiment system and framework

2.1 Experiment system

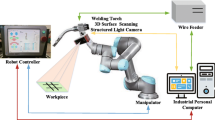

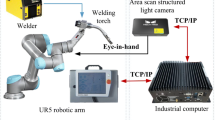

As shown in Fig. 1, the experimental system consists of two parts: the welding execution system and the 3D vision system. The welding execution system comprises the welding torch, the wire feeder, the manipulator, the robot controller, the impeller and the impeller turntable. The manipulator is a Universal Robots UR5.

The 3D vision system includes a 3D surface scanning structured light camera and an industrial personal computer (IPC) [9]. As shown in Fig. 2, the camera is a Chishine Surface 120. The camera must be mounted so that the welding torch does not enter its field of view. The characteristics of the camera are listed in Table 1.

2.2 Experiment model

As shown in Fig. 3, this paper uses the impeller of an impeller aerator as the experimental model. This kind of impeller is welded twice during industrial production [10]. The first pass is a rough weld that fixes the blades: the welder simply spot-welds each blade to the impeller at its approximate position, which is not the focus of this article. The second pass is a refined weld that strengthens the blades. It requires highly skilled welders, which keeps welding efficiency low. This paper therefore focuses on replacing manual welding with robotic welding in this second pass. Applying the welding robot to the secondary welding of impeller blades with the proposed method can improve welding efficiency and free up labor.

2.3 System framework

Before the impeller blades are welded with the proposed method, the impeller is fixed on the impeller turntable. Because the turntable rotates the impeller by a fixed angle from one blade to the next, the shooting position and posture of the 3D camera do not need to be readjusted for every blade: for a given impeller, they only need to be set once, when the first blade is welded. The camera pose planning method is introduced in the following section.

Once the 3D camera pose is planned, the camera captures an image of one blade's welding seam and generates a point cloud, which is transferred to the IPC through the Micro-B interface [11]. The IPC then computes the welding path with the proposed method and sends it to the robot controller, and the robot completes the welding of that blade according to the controller's instructions. Next, the IPC sends a completion instruction to the impeller turntable, which rotates the next blade into the camera's field of view by the fixed angle, and the welding of the next blade begins. The procedure is shown in Fig. 4.
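The per-blade cycle just described can be sketched as a simple loop. This is a minimal, hypothetical Python sketch: the callables `capture`, `plan_path`, `execute_path` and `rotate_turntable` stand in for the camera, the IPC planning pipeline, the robot controller and the turntable, and the assumption that the fixed turntable step equals 360° divided by the number of equally spaced blades is ours, not the paper's.

```python
from typing import Callable, List, Sequence

Point = Sequence[float]  # (x, y, z) waypoint; hypothetical representation


def weld_all_blades(
    num_blades: int,
    capture: Callable[[], object],
    plan_path: Callable[[object], List[Point]],
    execute_path: Callable[[List[Point]], None],
    rotate_turntable: Callable[[float], None],
) -> List[float]:
    """Run the capture -> plan -> weld -> rotate cycle once per blade.

    All four callables are hypothetical placeholders for the camera, the IPC
    planning pipeline, the robot controller and the turntable. Returns the
    cumulative turntable angle (degrees) at which each blade was welded.
    """
    step = 360.0 / num_blades  # assumption: equally spaced blades
    angle = 0.0
    welded_at = []
    for blade in range(num_blades):
        cloud = capture()             # same camera pose for every blade
        path = plan_path(cloud)       # point cloud -> welding path
        execute_path(path)            # robot welds the current blade
        welded_at.append(angle)
        if blade < num_blades - 1:    # bring the next blade into view
            rotate_turntable(step)
            angle += step
    return welded_at
```

Keeping the camera pose out of the loop reflects the point made above: because the turntable step is fixed, the pose is planned once per impeller rather than once per blade.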

3 Camera pose planning and point cloud preprocessing

3.1 Camera pose planning

In the experiment, the 3D camera projects coded surface structured light composed of multiple striped patterns arranged in sequence, so the impeller point cloud is organized layer by layer along the direction in which the stripes extend [12]. If the camera shoots in the posture shown in Fig. 5a, the distance from the camera to the points on a single stripe first increases and then decreases (near, far, near), and the farthest point lies on the welding seam. If the camera shoots in the posture shown in Fig. 5b, the points on a single stripe are all at approximately the same distance from the camera, so the distance information cannot be used to extract the welding seam point cloud. We therefore plan the camera pose according to the posture shown in Fig. 5a.
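The contrast between the two postures can be illustrated with a toy model. The sketch below is an assumption-laden illustration, not the camera's actual geometry: the blade and the impeller body are modelled as two planes meeting at the seam, depth along the camera z-axis is used as the distance, and the seam depth, slope, stripe length and offset are arbitrary. A stripe that crosses the seam (as in Fig. 5a) has a single depth maximum at the seam, whereas a stripe running parallel to the seam (as in Fig. 5b) has no usable extremum.

```python
import numpy as np


def stripe_depth_profile(crosses_seam, seam_depth=350.0, slope=1.0,
                         half_length=60.0, n=121, offset=20.0):
    """Toy depth profile (camera z, mm) along one structured-light stripe.

    Assumed geometry: blade and impeller body are two planes meeting at the
    seam, which sits at `seam_depth`; both faces rise towards the camera with
    `slope`. `offset` is how far a seam-parallel stripe lies from the seam.
    """
    s = np.linspace(-half_length, half_length, n)  # position along the stripe
    if crosses_seam:
        # Fig. 5a-like posture: near -> far -> near, deepest point on the seam
        z = seam_depth - slope * np.abs(s)
    else:
        # Fig. 5b-like posture: stripe parallel to the seam, constant depth
        z = np.full_like(s, seam_depth - slope * offset)
    return s, z


s, z = stripe_depth_profile(crosses_seam=True)
print(s[np.argmax(z)])      # 0.0: the depth maximum is on the seam
s, z = stripe_depth_profile(crosses_seam=False)
print(z.max() - z.min())    # 0.0: no extremum to locate the seam
```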

As shown in Fig. 6, the 3D camera is mounted on the front end of the welding robot. It has three rotational degrees of freedom in the camera coordinate system: pitch about the x-axis (Fig. 6b), yaw about the y-axis (Fig. 6c) and roll about the z-axis (Fig. 6d). At a fixed position, the camera pose is determined by these three orientation angles, which in turn determine the structured light projection surface.

As shown in Fig. 7, an impeller blade and the impeller meet at an approximate angle, with the welding seam along its vertex. The 3D camera pose must therefore be planned so that the resulting structured light projection surface places the point of each stripe that is farthest from the camera on the welding seam.

During the experiments, we found that many camera poses place the farthest point of each stripe on the welding seam. As shown in Fig. 8, when the camera rotates only about its y-axis, two conditions suffice: (1) the structured light projection surface covers the welding seam; and (2) the angle between the camera's horizontal plane and the structured light projection plane is acute.

To avoid welding path planning failures caused by an uncertain camera pose, we determine the final camera pose through analysis so that it satisfies three conditions: (1) as shown in Fig. 9a, whether the seam is straight or curved, the camera y-axis is approximately parallel to the seam's direction of extension; (2) as shown in Fig. 9b, the camera z-axis approximately coincides with the bisector of the angle at which the welding seam lies; and (3) the structured light projection surface completely covers the welding seam. Satisfying these three conditions ensures that the farthest point of each stripe from the camera lies on the welding seam.

Once the final pose is determined, the camera is moved into it by appropriate control of the manipulator [13]. However, the camera's structured light and coordinate system cannot be observed directly, so we use the manipulator's joint axes to represent the camera coordinate system qualitatively. As shown in Fig. 10, the axis of joint 6 coincides with the camera z-axis, so adjusting joint angle 6 rotates the camera about its z-axis. Similarly, adjusting joint angles 4 and 5 rotates the camera about its x-axis and y-axis, respectively.

To meet condition 1, we adjust robot joint angles 4 and 6. As shown in Fig. 11a, joint angle 4 is adjusted so that joint 5 is approximately parallel to the tangent plane at the welding seam, while the camera is moved to the middle of the seam. Then, as shown in Fig. 11b, joint angle 6 is adjusted so that the camera y-axis is parallel to the welding seam. Because this step does not require high precision, it can be done by visual inspection.

To meet condition 2, as shown in Fig. 11c, we adjust joint angle 5 so that the camera z-axis coincides with the bisector of the angle at which the welding seam lies. This step requires high precision, so we use the laser rangefinder shown in Fig. 12 as an auxiliary tool. Its measurement accuracy of 0.5 mm is sufficient for the welding process, so it can assist with the posture adjustment for condition 2 and also with the position adjustment for condition 3. The laser emitter of the rangefinder is aligned with the structured light transmitter of the camera, and because the laser beam is visible, it qualitatively indicates the camera z-axis. We rotate the camera about its y-axis by adjusting joint angle 5 and stop when the laser spot falls on the welding seam. The laser track is shown as the red dashed line in Fig. 11c.

To meet condition 3, we determine a suitable shooting distance for the camera and move it to that position. The optimal shooting range of the camera used in this paper is 500 ± 250 mm. Repeated experiments showed that if the shooting distance is too large, the point cloud contains more irrelevant information, which hinders subsequent processing; if it is too small, the structured light projection surface cannot completely cover the welding seam. The best shooting distance for the impeller model in this paper was therefore set to 350 ± 50 mm. Because the laser rangefinder reads the distance in real time, we use it to adjust the shooting position, moving the camera along its z-axis to the best shooting distance without changing its current posture. The final camera shooting pose is shown in Fig. 13.

3.2 Point cloud preprocessing

As shown in Fig. 14, the initial impeller point cloud contains every feature in the camera's field of view. To prevent irrelevant features from interfering with blade welding path planning, and to reduce the number of points and thus speed up computation, the irrelevant features are removed with a pass-through filter. A pass-through filter performs simple filtering along a specified dimension, discarding the points whose values in that dimension lie inside, or alternatively outside, a user-specified range.

We determine the filtering range graphically. To this end, we designed the point cloud filtering software shown in Fig. 15 based on the pass-through filter principle. The software displays the filtered point cloud as the ranges of the x, y and z axes are adjusted; once the point cloud reaches the desired state, the axis values are read off as the filter range. Because the camera shooting posture, the turntable rotation angle and the content of the camera's field of view remain unchanged between blades, the filter range determined at the first shot can be reused for all subsequent shots.

Based on the software results, the accepted intervals of the x, y and z axes are set to (-24, 88), (-91, 72) and (224, 295), respectively. The filtered impeller point cloud is shown in Fig. 16; the number of points is reduced from 132,951 to 68,995.
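A pass-through filter of this kind takes only a few lines of NumPy. The sketch below is illustrative rather than the authors' software: it assumes the point cloud is an (N, 3) array in the camera frame, treats the reported intervals as inclusive, and applies the x, y and z filters one after another.

```python
import numpy as np

# Accepted intervals reported in the text (same units as the point cloud).
X_RANGE = (-24.0, 88.0)
Y_RANGE = (-91.0, 72.0)
Z_RANGE = (224.0, 295.0)


def pass_through(points, axis, lo, hi, keep_inside=True):
    """Keep (or, with keep_inside=False, drop) points whose coordinate on `axis` lies in [lo, hi]."""
    coord = points[:, axis]
    inside = (coord >= lo) & (coord <= hi)
    return points[inside if keep_inside else ~inside]


def crop_impeller(points):
    """Apply the three pass-through filters in sequence (x, then y, then z)."""
    for axis, (lo, hi) in enumerate((X_RANGE, Y_RANGE, Z_RANGE)):
        points = pass_through(points, axis, lo, hi)
    return points
```

Because the ranges are fixed once for a given impeller, `crop_impeller` can be applied unchanged to every subsequent capture.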

4 Steps of welding path planning

After preprocessing, the impeller point cloud retains only the blade and welding seam information. We first roughly extract the scattered welding seam point cloud, then fit it and apply discrete interpolation, which yields the welding path of the impeller blade.

4.1 Rough extraction of the welding seam scattered point cloud

The camera pose planning described above ensures that, within each stripe, the point farthest from the camera lies on the welding seam. Building on this property, this paper proposes a novel distance-based algorithm for the rough extraction of the scattered welding seam point cloud of impeller blades, which automatically extracts the scattered seam points for impeller blade welding seams of various shapes [14].

We divide the remaining impeller point cloud into multiple segments along the y-axis. Each segment contains several groups of points distributed along the stripe direction, and each group contains one point farthest from the camera. Comparing these farthest points within a segment, we take the farthest of them as the segment's seam point; collecting one such point per segment completes the rough extraction of the welding seam point cloud. The procedure is illustrated in Fig. 17.

In this experiment, the impeller blade point cloud is divided into 100 segments along the y-axis. The rough extraction result of the scattered welding seam point cloud is shown in Fig. 18a. Because the blade has a finite thickness, the roughly extracted seam points lie on the lower surface of the blade, as shown in Fig. 18b.
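The segment-wise search can be sketched as follows. This is a minimal illustration under stated assumptions: the input is the filtered (N, 3) camera-frame cloud, the y range is split into equal-width segments, and depth (the z coordinate) is used as the distance to the camera. Since the farthest of the per-stripe maxima in a segment is simply the farthest point in the whole segment, the sketch does not need to identify individual stripes.

```python
import numpy as np


def rough_seam_extraction(points, n_segments=100):
    """Return one seam candidate per y-segment: the point farthest from the camera.

    Assumes `points` is an (N, 3) array in the camera frame and uses the depth z
    as the distance to the camera (np.linalg.norm(points, axis=1) would be the
    drop-in alternative if Euclidean range were preferred).
    """
    y = points[:, 1]
    edges = np.linspace(y.min(), y.max(), n_segments + 1)
    # Segment index of every point; clip so that y.max() falls in the last segment.
    seg = np.clip(np.digitize(y, edges) - 1, 0, n_segments - 1)
    depth = points[:, 2]
    seam = []
    for k in range(n_segments):
        idx = np.flatnonzero(seg == k)
        if idx.size:  # empty segments (e.g. holes in the cloud) are skipped
            seam.append(points[idx[np.argmax(depth[idx])]])
    return np.array(seam)
```

On a synthetic V-shaped corner whose deepest line is at x = 0, every extracted point falls on that line, mirroring the behavior shown in Fig. 18a.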

4.2 Welding seam fitting

The roughly extracted scattered seam points lie essentially at the welding seam. However, because of holes in the point cloud and the weld nodules left by the first pass that fixed the blade, a small number of points deviate from the seam. To keep these deviations from affecting the subsequent path planning, we fit a curve to the scattered seam points.

An impeller blade welding seam is generally a 3D space curve, with a straight line in space as a special case. To accommodate the spatial characteristics and diversity of welding seams, we chose a least-squares polynomial fitting method [15].

The least-squares method (LSM) finds the function that best matches the data by minimizing the sum of squared errors (residuals). Let \(P\left( {x,y} \right)\) denote a set of sample data, where each datum \(P\left( {{x_i},{y_i}} \right)\) in P is a sample of the polynomial in Eq. 1.

Here n is the order of the polynomial and \({\theta _j}\left( {j = 1,2,3, \ldots ,n} \right)\) are its coefficients. The sum of squared errors over the data points in the sample set P is:

where m is the number of samples. The least-squares method takes as optimal the coefficients \({\theta _j}\left( {j = 1,2,3, \ldots ,n} \right)\) that minimize the sum of squared errors S.

We solve for the polynomial coefficients \({\theta _j}\left( {j = 1,2,3, \ldots ,n} \right)\) with the matrix method. Rewriting the sum of squared errors S in Eq. 2 in matrix form gives

\({X_v}\) is a Vandermonde matrix. The coefficient vector \(\theta\) formed by the polynomial coefficients is shown in Eq. 4.

The output vector \({Y_r}\) of the sample data has the form of

The sum of squared errors S can be written as

For the optimal function, Eq. 7 should be satisfied.

According to Eq. 7, we can get the polynomial coefficient vector of the optimal function as

Finally, substituting the obtained coefficient vector \(\theta\) into Eq. 1 gives the least-squares fitting polynomial for the sample data \(P\left( {x,y} \right)\).
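A standard statement of the least-squares steps described above is given below for reference. It reuses the text's symbols (\(X_v\), \(\theta\), \(Y_r\), S, m, n) and assumes, as is usual, that the polynomial also contains a constant term \(\theta_0\); it is not necessarily identical in notation to the paper's Eqs. 1 to 8.

```latex
\begin{aligned}
y &= \theta_0 + \theta_1 x + \theta_2 x^2 + \cdots + \theta_n x^n \\
S &= \sum_{i=1}^{m} \Big( y_i - \sum_{j=0}^{n} \theta_j x_i^{\,j} \Big)^2 \\
X_v &= \begin{bmatrix} 1 & x_1 & \cdots & x_1^{n} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & x_m & \cdots & x_m^{n} \end{bmatrix},\quad
\theta = \begin{bmatrix} \theta_0 \\ \vdots \\ \theta_n \end{bmatrix},\quad
Y_r = \begin{bmatrix} y_1 \\ \vdots \\ y_m \end{bmatrix} \\
S &= \left( X_v \theta - Y_r \right)^{\mathsf T} \left( X_v \theta - Y_r \right) \\
\frac{\partial S}{\partial \theta} &= 2 X_v^{\mathsf T} \left( X_v \theta - Y_r \right) = 0 \\
\theta &= \left( X_v^{\mathsf T} X_v \right)^{-1} X_v^{\mathsf T} Y_r
\end{aligned}
```

The matrix \(X_v^{\mathsf T} X_v\) is invertible whenever the samples contain at least n + 1 distinct x values, so the solution is unique for any reasonably dense seam point cloud.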

For 3D sample data \(P\left( {x,y,z} \right)\), the space curve is fitted by solving the least-squares fitting polynomials of \(P\left( {x,y} \right)\) and \(P\left( {x,z} \right)\) separately. The method also applies to a straight line in space: since a linear function contains the independent variable only to the first power, all coefficients of higher powers in Eq. 1 are 0. The result is shown in Eq. 9:
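The two-polynomial scheme can be sketched directly from the normal equations. This NumPy sketch is illustrative, not the authors' MATLAB code; `lsq_poly`, `fit_space_curve` and `eval_poly` are hypothetical names, coefficients are stored in increasing powers, and the independent axis is a parameter so that the same code covers both x (as here) and y (as used later for the seam).

```python
import numpy as np


def lsq_poly(t, v, order):
    """Least-squares fit v = θ0 + θ1 t + ... + θn t^n via the normal equations."""
    Xv = np.vander(t, order + 1, increasing=True)   # Vandermonde matrix: 1, t, ..., t^n
    # θ = (XvᵀXv)⁻¹ XvᵀYr; np.linalg.lstsq(Xv, v) is the numerically safer choice for high orders
    return np.linalg.solve(Xv.T @ Xv, Xv.T @ v)     # θ[j] multiplies t**j


def fit_space_curve(points, order, indep_axis=0):
    """Fit the two remaining coordinates of (N, 3) points as polynomials of one axis."""
    t = points[:, indep_axis]
    others = [a for a in range(3) if a != indep_axis]
    return {a: lsq_poly(t, points[:, a], order) for a in others}


def eval_poly(theta, t):
    """Evaluate a polynomial with increasing-power coefficients at t."""
    return np.vander(np.atleast_1d(t), len(theta), increasing=True) @ theta
```

Fitting a straight space line with order 2 returns a quadratic coefficient of (numerically) zero for both y(x) and z(x), which is the special case noted above.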

The derivation above shows that fitting the scattered welding seam point cloud with least-squares polynomials is feasible in principle. We then verify its accuracy through simulation experiments [16].

We assume a space curve whose x, y and z coordinates satisfy Eq. 10:

The space curve is shown in Fig. 19a; the relationship between y and x is shown in Fig. 19b, and that between z and x in Fig. 19c.

We fit the space curve with the least-squares polynomial fitting method. Because the curve is complex, after many trials we set the polynomial order n to 5, which gives the fitting expression

The fitting result is shown in Fig. 19d, e, f.

We compare the y and z values given by Eq. 10 and Eq. 11, using the root-mean-square error (RMSE) to evaluate the fit. The RMSE values of the y and z fits are 0.0043 and 0.0184, respectively. As the fitting errors of y and z in Fig. 20 show, all errors are within 0.1, which fully meets the fitting requirements. In practice, impeller blade welding seam curves are much less complex than the assumed curve, so the least-squares polynomial fitting method can be used to fit the scattered welding seam point cloud.
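The evaluation procedure can be reproduced in outline. Because the exact form of Eq. 10 is not needed to show the procedure, the sketch below substitutes a hypothetical curve (y = sin x, z = cos x on [0, π]); it fits order-5 polynomials with NumPy's `polyfit` and reports the RMSE and maximum absolute error of each coordinate. Its numbers say nothing about the paper's own simulation; only the procedure carries over.

```python
import numpy as np


def rmse(a, b):
    """Root-mean-square error between two equally sized arrays."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.sqrt(np.mean((a - b) ** 2)))


def simulate_fit(order=5, n=200):
    """Fit y(x) and z(x) of a hypothetical space curve (not the paper's Eq. 10)."""
    x = np.linspace(0.0, np.pi, n)
    y, z = np.sin(x), np.cos(x)              # hypothetical test curve
    y_fit = np.polyval(np.polyfit(x, y, order), x)
    z_fit = np.polyval(np.polyfit(x, z, order), x)
    return {
        "rmse_y": rmse(y, y_fit),
        "rmse_z": rmse(z, z_fit),
        "max_err_y": float(np.max(np.abs(y - y_fit))),
        "max_err_z": float(np.max(np.abs(z - z_fit))),
    }


print(simulate_fit())
```

Lowering `order` (for example to 1) makes the errors grow sharply, which is the kind of comparison behind choosing n = 5 for a complex test curve.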

We use a polynomial fitting method based on least squares to fit the welding seam scattered point cloud and set the order n of the polynomial to 2. Since the welding seam extends along the y-axis, in order to facilitate the determination of the welding seam length, we take the y-axis coordinate as the independent variable. The final fitting expression is shown in Eq. 12.

The y-axis coordinate of the point cloud ranges from -91 to 72. The fitted welding seam scattered point cloud is shown in Fig. 21.

The fitted seam lies on the lower surface of the blade, whereas the welding torch must weld along the upper surface, so the fitted seam must be corrected. Following the camera pose planning principle, it is sufficient to move the fitted seam point cloud along the z-axis by the blade thickness, that is, to subtract the blade thickness from its z coordinates [17]. Measured with a vernier caliper, the blade thickness of the impeller used in this paper is 4 mm, which gives the corrected fitting relation in Eq. 13.

The fitted welding seam point cloud after the correction is shown in Fig. 22.

4.3 Welding path planning

The welding robot places requirements on the accuracy of the coordinates it receives, and the coordinates of the fitted welding seam point cloud cannot be sent to it directly. We therefore perform discrete interpolation on the fitted seam point cloud; that is, we sample points from it at a fixed step [18].

Since the welding path is a curve, the robot welds in a “straight instead of curved” mode, approximating the curve by straight segments between successive sampled points. After repeated experiments and tuning, the step length is set to 5 for the impeller model used in this paper, which does not compromise welding accuracy. The fitted welding seam point cloud after discrete interpolation is shown in Fig. 23.

After discrete interpolation, the fitted seam point cloud is the welding path of the robot expressed in the 3D camera coordinate system. An accurate hand-eye calibration is needed to convert the path from the camera coordinate system to the robot coordinate system before all the point coordinates are sent to the welding robot to perform the welding task [19].

Along the welding path, the y coordinate decreases from the start point at the top of the seam to the end point. The welding robot therefore visits the target points in order of decreasing y to complete the welding of the impeller blade.
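Sections 4.2 and 4.3 together turn the order-2 fits x(y) and z(y) into an ordered list of waypoints. The sketch below is a minimal illustration under stated assumptions: the coefficients are stored in increasing powers of y, the step of 5 is measured along y in the point cloud's units, and the 4 mm thickness correction is applied to z. Waypoints are generated from the largest y downwards, matching the welding order, and consecutive waypoints are meant to be joined by straight moves.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def discretize_seam(theta_x, theta_z, y_min, y_max, step=5.0, thickness=4.0):
    """Sample waypoints along a fitted seam x(y), z(y) at a fixed y step.

    theta_x, theta_z: polynomial coefficients in increasing powers of y.
    z is shifted by `thickness` towards the camera (upper blade surface).
    Rows are returned in welding order: y from large to small.
    """
    ys = np.arange(y_max, y_min - 1e-9, -step)  # descending y, start at the top
    x = P.polyval(ys, theta_x)
    z = P.polyval(ys, theta_z) - thickness
    return np.column_stack([x, ys, z])
```

With the y range from -91 to 72 reported earlier, this sampling gives 33 waypoints, from y = 72 down to y = -88; if the exact end of the seam matters, its end point can be appended separately.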

5 Experiments and results

With the impeller blade welding path obtained, we verify the efficiency and accuracy of the proposed method through a method efficiency analysis and welding platform experiments [20].

5.1 Method efficiency analysis

Running time is a key indicator of the method's performance. Because industrial production demands high welding efficiency, the time spent on point cloud generation and welding path planning must be limited. Over many experiments, we recorded the average time the proposed method needs to generate the welding path of a single impeller blade. The results are shown in Table 2.

The method takes about 4 s to plan the welding path of a single blade, which meets the efficiency requirements of industrial production.

5.2 Welding platform experiment results analysis

The steps above give the welding path in the camera coordinate system. An accurate hand-eye calibration is needed to convert the path from the camera coordinate system to the robot coordinate system and to position the tip of the welding torch precisely at each welding point. The hand-eye calibration principle used in this experiment is shown in Fig. 24 [21].

In eye-in-hand calibration, Eq. 14 holds for any two robot poses during the calibration motion.
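For reference, the standard eye-in-hand constraint, which we assume is the relation expressed by Eq. 14, follows from the calibration target being fixed relative to the robot base. Write \(X = {}^{End}{T_{Camera}}\) for the unknown camera-to-flange transform, and take two poses i and j with flange poses \({}^{Base}{T_{End,i}}\), \({}^{Base}{T_{End,j}}\) and target poses \({}^{Camera}{T_{Target,i}}\), \({}^{Camera}{T_{Target,j}}\) measured by the camera:

```latex
\begin{aligned}
{}^{Base}T_{End,i}\, X\, {}^{Camera}T_{Target,i} &= {}^{Base}T_{End,j}\, X\, {}^{Camera}T_{Target,j} \\
\underbrace{\left( {}^{Base}T_{End,j} \right)^{-1} {}^{Base}T_{End,i}}_{A}\, X &= X\, \underbrace{{}^{Camera}T_{Target,j} \left( {}^{Camera}T_{Target,i} \right)^{-1}}_{B}
\end{aligned}
```

Each pair of poses yields one equation of the form AX = XB; at least two pose pairs with non-parallel rotation axes are needed to determine X uniquely, which is why several calibrations are collected.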

From Eq. 14, after multiple calibrations, the extrinsic matrix \({}^{End}{T_{Camera}}\) with the smallest error is selected:

The shooting pose of the 3D camera is recorded as (-474.05, 447.86, 368.16, -144.43, -20.54, 33.53). From this shooting pose and the extrinsic matrix, we obtain the positions of the welding torch tip at the welding points in the robot base coordinate system. The resulting path is shown in Fig. 25a.
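The chain of transforms used here can be sketched as follows. This is an illustrative NumPy sketch, not the authors' code: it assumes the flange pose \({}^{Base}{T_{End}}\) has already been converted into a 4x4 homogeneous matrix from the recorded shooting pose using the robot controller's own orientation convention (that conversion is deliberately left out), and that path points are given as an (N, 3) array in the camera frame.

```python
import numpy as np


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def camera_to_base(points_cam, T_base_end, T_end_cam):
    """Map (N, 3) camera-frame points into the robot base frame.

    p_base = T_base_end @ T_end_cam @ p_cam, with T_end_cam the hand-eye
    (extrinsic) matrix and T_base_end the flange pose at the shooting posture.
    """
    pts = np.asarray(points_cam, dtype=float)
    T = T_base_end @ T_end_cam
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # (N, 4) homogeneous points
    return (homog @ T.T)[:, :3]
```

Because the shooting pose is the same for every blade, `T_base_end @ T_end_cam` can be computed once per impeller and reused for all paths.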

To verify the accuracy of the welding path obtained by the proposed method, we manually taught 13 points along the welding position and fitted them to obtain a manually taught welding path in the robot base coordinate system, shown in Fig. 25b.

Because the welding seam extends along the y-axis, we take the y coordinates of the welding path obtained by the proposed method as the reference and evaluate the manually taught path at the same y values. As shown in Fig. 26a, we selected 33 reference points on the two paths and calculated the distance error between corresponding points. The errors are shown in Fig. 26b.

As Fig. 26b shows, the distance error between the welding points obtained by the proposed method and by manual teaching is less than 2 mm, which fully meets the error requirements of the welding process. The welding path obtained by the proposed method can therefore replace the path obtained by manual teaching.
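The comparison can be sketched as follows. The polynomial order used to fit the 13 taught points is not stated, so the order-2 least-squares fit of x(y) and z(y) below is an assumption, as are the array layouts; the function returns the Euclidean distance between each planned point and the taught path evaluated at the same y.

```python
import numpy as np


def path_distance_errors(auto_path, taught_points, order=2):
    """Distance error between the planned path and a path fitted to taught points.

    auto_path: (N, 3) planned waypoints in the robot base frame.
    taught_points: (K, 3) manually taught positions; x(y) and z(y) are fitted
    by least squares (order is an assumption) and evaluated at the planned y values.
    """
    ty = taught_points[:, 1]
    px = np.polyfit(ty, taught_points[:, 0], order)
    pz = np.polyfit(ty, taught_points[:, 2], order)
    y = auto_path[:, 1]
    reference = np.column_stack([np.polyval(px, y), y, np.polyval(pz, y)])
    return np.linalg.norm(auto_path - reference, axis=1)
```

The acceptance check in the text then amounts to `path_distance_errors(...).max() < 2.0` with coordinates in millimetres.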

After planning the robot pose and position, we send the welding path obtained by the proposed method to the welding robot, which drives the tip of the welding torch accurately to each welding point and executes the welding task along the planned path. The actual operation of the experimental platform verified the accuracy of the welding path planning method.

6 Conclusion

From the perspective of industrial application, this paper introduces a novel point-cloud-based welding path planning method for robotic welding of impeller blades. It lays the foundation for accurate planning of impeller blade welding paths and for autonomous welding, eliminating the complicated teaching and programming otherwise required for welding path planning. The main contributions of this paper are summarized as follows:

1) A 3D surface-scanning structured light camera is applied to the industrial welding scene, and a novel 3D camera pose planning method is proposed to accurately and efficiently obtain the point cloud and coordinates containing the impeller blade welding seam information.

2) A novel distance-based algorithm for the rough extraction of the scattered welding seam point cloud of impeller blades is proposed, which automatically extracts the scattered welding seam point cloud for impeller blades of various shapes.

3) A welding path planning algorithm for the scattered welding seam point cloud, based on least-squares polynomial fitting and discrete interpolation, is proposed to realize the welding path planning of impeller blades.

The proposed method also has some limitations. For example, it places high requirements on the camera's shooting position and pose. In future work, we will reduce these limitations and improve the universality of the method.
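Contribution 3 can be illustrated with a short sketch. This is not the authors' MATLAB implementation; it assumes, as in the experiment above, that the seam runs along the y-axis so that x and z can each be fitted as a polynomial in y, and it discretizes the fitted curve at a uniform y step. The function names, the default degree, and the step size are hypothetical. Solving the normal equations directly is ill-conditioned for high degrees or large coordinates; for real data, centering and scaling y or using a library routine such as `numpy.polynomial.Polynomial.fit` would be more robust.

```python
def polyfit_ls(t: list[float], v: list[float], degree: int) -> list[float]:
    """Least-squares coefficients c[0..degree] of v ~ sum(c[k] * t**k)."""
    n = degree + 1
    if len(t) < n:
        raise ValueError("need at least degree + 1 points")
    # Normal equations (A^T A) c = A^T v for the Vandermonde matrix A
    ata = [[sum(ti ** (i + j) for ti in t) for j in range(n)] for i in range(n)]
    atv = [sum(vi * ti ** i for ti, vi in zip(t, v)) for i in range(n)]
    m = [row + [b] for row, b in zip(ata, atv)]  # augmented matrix
    # Gaussian elimination with partial pivoting
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    # Back substitution
    c = [0.0] * n
    for i in reversed(range(n)):
        c[i] = (m[i][n] - sum(m[i][k] * c[k] for k in range(i + 1, n))) / m[i][i]
    return c


def polyval(c: list[float], t: float) -> float:
    """Evaluate sum(c[k] * t**k) with Horner's scheme."""
    acc = 0.0
    for ck in reversed(c):
        acc = acc * t + ck
    return acc


def plan_path(cloud: list[tuple[float, float, float]], degree: int = 3,
              step: float = 1.0) -> list[tuple[float, float, float]]:
    """Fit x(y) and z(y) to scattered seam points, then sample every `step` in y."""
    ys = [p[1] for p in cloud]
    cx = polyfit_ls(ys, [p[0] for p in cloud], degree)
    cz = polyfit_ls(ys, [p[2] for p in cloud], degree)
    y0, y1 = min(ys), max(ys)
    count = int((y1 - y0) / step + 1e-9) + 1  # small epsilon keeps the end point
    path = []
    for i in range(count):
        y = y0 + i * step
        path.append((polyval(cx, y), y, polyval(cz, y)))
    return path
```

The polynomial degree plays the role of the model choice made in the MATLAB comparison; a seam that is not single-valued in y would need a different parametrization, for example by arc length.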

Availability of data and materials

Not applicable.

References

Liu Y, Liu J, Tian XC (2019) An approach to the path planning of intersecting pipes weld seam with the welding robot based on non-ideal models. Robotics and Computer-Integrated Manufacturing 55:96–108

Manuel RM, Pablo RG, Diego GA, Jesus FH (2017) Feasibility Study of a Structured Light System Applied to Welding Inspection Based on Articulated Coordinate Measure Machine Data. IEEE Sens J 48:4217–4224

Muhammad J, Altun H, Essam AS (2017) Welding seam profiling techniques based on active vision sensing for intelligent robotic welding. Int J Adv Manuf Technol 88:127–145

Zeng JL, Chang BH, Du D, Peng GD, Chang SH, Hong YX, Wang L, Shan JG (2017) A Vision-Aided 3D Path Teaching Method before Narrow Butt Joint Welding. Sensors 17

Hou Z, Xu YL, Xiao RQ, Chen SB (2020) A teaching-free welding method based on laser visual sensing system in robotic GMAW. IEEE/ASME Trans Mechatron 109:1755–1774

Rout A, Deepak BBVL, Biswal BB (2019) Advances in weld seam tracking techniques for robotic welding: A review. Robotics and Computer-Integrated Manufacturing 56:12–37

Xu CQ, Wang JL, Zhang J, Lu C (2020) A new welding path planning method based on point cloud and deep learning. In: IEEE International Conference on Automation Science and Engineering, pp 786–791

Yang L, Li E, Long T, Fan JF, Liang ZZ (2019) A Novel 3-D Path Extraction Method for Arc Welding Robot Based on Stereo Structured Light Sensor. IEEE Sens J 19:763–773

Zhou P, Peng R, Xu M, Wu V (2021) Path Planning With Automatic Seam Extraction Over Point Cloud Models for Robotic Arc Welding. IEEE Robot Autom Lett 6:5002–5009

Shi L, Tian XC (2014) Automation of main pipe-rotating welding scheme for intersecting pipes. Int J Adv Manuf Technol 77:955–964

Kucuk S (2017) Optimal trajectory generation algorithm for serial and parallel manipulators. Robotics and Computer-Integrated Manufacturing 48:219–232

Pan ZX, Polden J, Larkin N, Van Duin S, Norrish J (2012) Recent progress on programming methods for industrial robots. Robotics and Computer-Integrated Manufacturing 28:87–94

Yang L, Liu YH, Long T, Peng JZ, Liang ZZ (2020) A novel system for off-line 3D seam extraction and path planning based on point cloud segmentation for arc welding robot. Robotics and Computer-Integrated Manufacturing 64

Yu JP, Chen B, Yu HS, Lin C, Zhao L (2018) Neural networks-based command filtering control of nonlinear systems with uncertain disturbance. Inf Sci 426:50–60

Fan CL, Liu C (2015) A novel algorithm for circle curve fitting based on the least square method by the points of the Newton’s rings. In: International Conference on Computers, Communications and Systems, pp 256–260

Zhao J, Hu SS, Shen JQ, Chen CL, Ding W (2011) Mathematical model based on MATLAB for intersection seam of sphere and tube. Trans China Weld Inst 32:89–92

Fu C, Wang QG, Yu JP, Lin C (2020) Neural Network-Based Finite-Time Command Filtering Control for Switched Nonlinear Systems With Backlash-Like Hysteresis. IEEE Transactions on Neural Networks and Learning Systems 99:1–6

Liu Y, Tang Q, Tian XC (2019) A discrete method of sphere-pipe intersecting curve for robot welding by offline programming. Robotics and Computer-Integrated Manufacturing 57:404–411

Yu JP, Shi P, Liu JP, Lin C (2020) Neuroadaptive Finite-Time Control for Nonlinear MIMO Systems With Input Constraint. IEEE Transactions on Cybernetics 99:1–8

Yu JP, Shi P, Chen XK, Cui GZ (2021) Finite-time command filtered adaptive control for nonlinear systems via immersion and invariance. Sci China Inf Sci 64:1–14

Li MY, Du ZJ, Ma XX, Dong W, Gao YZ (2021) A robot hand-eye calibration method of line laser sensor based on 3D reconstruction. Robotics and Computer-Integrated Manufacturing 71

Funding

The authors gratefully acknowledge research funding from the Shandong Provincial Key Research and Development Program (Major Scientific and Technological Innovation Project) (No. 2019JZZY010441) and the National Natural Science Foundation of China (No. U20A20201).

Author information

Contributions

Yusen Geng was a major contributor in writing the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent to publish

Not applicable.

Competing interests

The authors declare that they have no conflict of interest.

About this article

Cite this article

Geng, Y., Zhang, Y., Tian, X. et al. A novel welding path planning method based on point cloud for robotic welding of impeller blades. Int J Adv Manuf Technol 119, 8025–8038 (2022). https://doi.org/10.1007/s00170-021-08573-3
