Abstract

Plane welds are a common weld type on industrial sites. When a welding robot must handle several types of plane welds, traditional teaching and programming become complicated. To enable automatic robot welding of multiple plane weld types, this paper proposes a plane weld extraction method based on 3D vision, taking plane V-type butt, plane I-type butt, and plane lap welds as examples. First, we establish the concept of plane point cloud density to segment the lines and planes of the workpiece point cloud. Second, we propose an iterative segmentation algorithm based on RANSAC to segment the workpiece edge lines. Then, based on the geometric features of the workpiece, we propose a method for extracting weld feature points based on centroid positioning. Finally, the least squares method is used to fit the weld feature points and complete the welding trajectory planning. The experimental results show that the method can automatically plan welding trajectories for plane V-type butt, plane I-type butt, and plane lap welds, allowing the welding robot to weld these plane weld types without teaching and programming.

1 Introduction

Traditional manual welding has shortcomings such as high skill requirements for personnel, a poor working environment, inconsistent welding quality, and low welding efficiency. To overcome these shortcomings, welding robots have become widely used in industrial scenarios [1]. At present, welding robots rely on robot programming to realize automatic welding. Traditional robot programming methods include manual teaching programming and offline programming (OLP) [2]. However, manual teaching involves a large workload and requires high workpiece clamping accuracy. Offline programming depends heavily on the CAD model of the workpiece, and the programming is complicated. In recent years, researchers have increasingly used structured light vision sensors to guide robotic welding, aiming at intelligent welding with little or no programming.

A structured light vision sensor generally consists of a laser transmitter and a camera, whose measurement principle is optical triangulation [3,4,5]. According to the different types of emitted lasers, structured light vision sensors can be roughly divided into line structured light vision sensors and area array structured light vision sensors.

Welding seam feature extraction methods based on the line structured light vision sensor process RGB-D information. Xu et al. extracted the weld feature points with an improved Canny algorithm, matched the depth information to obtain the three-dimensional coordinates of the feature points, and realized real-time seam tracking for gas tungsten arc welding (GTAW) [6]. The welding seam information obtained using a single line laser is limited, which in turn limits the feature extraction algorithm. Therefore, some researchers achieve better weld extraction by changing the number and type of structured light sources [7, 8]. For example, Shao et al. changed the number of laser stripes and the laser wavelength, combined the measurements obtained at different wavelengths, and designed an extraction method for I-type narrow welds [9]. Gao et al. studied interference such as arc light during welding. They optimized the dark channel prior anti-jamming algorithm based on contour and Otsu thresholding, which can effectively suppress the effects of metal vapor and plasma sputtering during welding [10]. Research on weld feature extraction based on the line structured light vision sensor is relatively mature. However, because of the limited information they acquire, line structured light vision sensors can only be used for welding seam tracking, and rough teaching of the welding path is still required before welding. Therefore, these methods do little to simplify the programming of the robotic welding process.

Area array structured light vision sensors are also known as area array structured light cameras or 3D cameras. Compared with the line structured light vision sensor, the area array structured light vision sensor obtains richer spatial information. Therefore, using area array structured light to guide robotic welding can further reduce the programming effort.

There are mainly two types of welding seam feature extraction methods based on the area array structured light camera. One processes the obtained RGB-D information [11,12,13]. Such methods essentially process two-dimensional images and are sensitive to lighting, so they are generally not used on industrial sites. The other processes point cloud information. Point cloud-based weld feature extraction methods can in turn be divided into two categories. The first class of methods is based on the local features of point clouds [14, 15]. For example, Gao et al. studied spatial curve welds and extracted them using the normal feature of the point cloud [16]. The second class of methods is based on the global geometric features of point clouds [17, 18]. For example, Yang et al. proposed a weld extraction method based on the point-to-plane distance and used it to extract plane V-type butt welds [19].

Plane welds generally refer to plane butt welds and plane lap welds, which join sheets and are very common in industry. However, the existing point cloud-based methods face the following difficulties when extracting plane welds:

1. When using the method based on the local features of point clouds to extract the weld seam, the difficulty is that the local features of neighboring points tend to be consistent, which makes it difficult to distinguish feature points from non-feature points.

2. When using the method based on the global geometric features of point clouds to extract the weld, the difficulty lies in the poor adaptability of the method: different judgment rules need to be set for each specification of the weld joint.

In order to overcome the above difficulties, this paper proposes a feature extraction method for plane welds based on plane point cloud density. The main contributions of this paper are as follows:

1. This paper realizes the efficient and accurate segmentation of the plane and line features of the point cloud by combining the local features of the point cloud with the imaging principle of the area array structured light camera.

2. This paper fully uses the common geometric features of plane welds and is suitable for weld extraction of plane V-type butt welds, plane I-type butt welds, and plane lap welds.

3. A comparative experiment is designed, and the accuracy and feasibility of the proposed method are verified by error analysis.

This article is organized as follows: Section 2 introduces the composition and framework of the experimental system in detail. Section 3 proposes a line and plane classification method of the plane weld workpiece model based on plane point cloud density. Section 4 presents a method for extracting weld seam features based on centroid positioning. Section 5 is the analysis of experiments and results to verify the feasibility, accuracy, and efficiency of the method in this paper. Section 6 concludes the work of this paper.

2 System configuration and framework

2.1 System platform

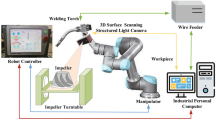

As shown in Fig. 1, the composition framework of the robot welding experimental platform built in this paper mainly includes the welding execution system and the 3D vision system. The welding execution system is mainly composed of a robotic arm and welding equipment. It realizes the photographing action and welding action. The 3D vision system is mainly composed of an area array structured light camera and an industrial computer. Its main functions include the acquisition of 3D point cloud information, the extraction of weld features, and the planning of welding trajectories. The two systems are connected by TCP/IP communication.

In order to shoot the weld area of the workpiece more flexibly, the area array structured light camera and the robot are combined in the Eye-in-Hand manner. The parameters of the area array structured light camera selected in this experiment are shown in Table 1.

2.2 Workpiece model

In this paper, the plane V-type butt joint, the plane I-type butt joint, and the plane lap joint among the plane weld types are selected for research. As shown in Fig. 2, this study built three workpiece models of welded joints in the laboratory environment. Figure 2a is the workpiece model of plane V-type butt joint, Fig. 2b is the workpiece model of plane I-type butt joint, and Fig. 2c is the workpiece model of plane lap joint.

2.3 System framework

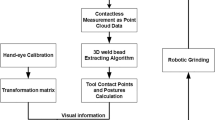

In this experiment, the 3D camera shoots the workpiece to form a point cloud and transmits the point cloud data to the industrial computer through TCP/IP. The point cloud is processed on the industrial computer. First, in order to simplify the extraction of weld features, the initial point cloud is preprocessed to obtain the three-dimensional information of the workpiece. Then, according to the workpiece’s three-dimensional information, the weld’s feature is extracted, and the welding trajectory is planned. Finally, the industrial computer transmits the welding trajectory data to the robot controller through TCP/IP, and the robot executes the welding trajectory motion. The system framework flowchart is shown in Fig. 3.

3 Point cloud preprocessing

The geometric features of the plane weld workpiece are composed of simple lines and planes. To extract the weld feature points, it is necessary to analyze the line and plane information of the plane weld workpiece. To extract the plane points and line points from the point cloud of the plane weld workpiece, this paper proposes the concept of plane point cloud density. On this basis, the plane point criterion and the plane edge point criterion are set.

3.1 Plane point cloud density

Figure 4 shows the measurement principle of the area array structured light camera. The area array structured light projected by the laser projector is modulated by the object surface, collected by the camera, and transmitted to the computer. Then, by analyzing and calculating the original data using the principle of pinhole imaging and triangulation [20], we obtain the point cloud data of the measured surface in the camera coordinate system with the camera optical center as the origin.

As shown in Fig. 5, the field of view of the area array structured light camera refers to the plane parallel to the xoy plane of the camera coordinate system that is covered by the structured light within the working distance of the camera. Due to the property of light traveling in a straight line, the width and height of the field of view are proportional to the distance from the field of view to the camera coordinate xoy plane.

Therefore, we set the width and height of the field of view as W and H, respectively. The distance from the field of view to the xoy plane of the camera coordinate system is denoted by L. We can get Eq. 2. Since the field of view is parallel to the xoy plane of the camera coordinate system, L can be measured using the z coordinate in the camera coordinate system.

The area of the camera’s field of view S can be obtained by:

Let F be the resolution of the area array structured light camera. Then we can calculate the plane point cloud density D as:

For plane weld workpieces, when the 3D camera shoots with the z-axis of the camera coordinate system perpendicular to the workpiece plane, the workpiece plane coincides with the field of view. The obtained plane point density on the workpiece point cloud satisfies Eq. 4. Therefore, the plane point cloud density can be used as a theoretical basis for segmenting lines and planes of the workpiece.

According to Table 1, the resolution of the area array structured light camera used in this paper is 1920 × 1200. We set l1 = 1000mm, w1 = 700mm, h1 = 440mm, and substitute them into Eq. 4:
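With a reference field of view of width \(w_1\) and height \(h_1\) at distance \(l_1\), the proportionality described above suggests the following form for the relations of this subsection. This is a sketch reconstructed from the stated definitions, so the authors' exact notation and equation numbering may differ; L is in mm.

```latex
\begin{aligned}
W &= \frac{w_1}{l_1}\,L, \qquad H = \frac{h_1}{l_1}\,L,\\
S &= W H = \frac{w_1 h_1}{l_1^{2}}\,L^{2},\\
D &= \frac{F}{S} = \frac{F\,l_1^{2}}{w_1 h_1 L^{2}}
   = \frac{1920 \times 1200 \times 1000^{2}}{700 \times 440 \times L^{2}}
   \approx \frac{7.48 \times 10^{6}}{L^{2}}\ \text{points/mm}^{2}.
\end{aligned}
```

Under this form, the density at the near end of the working range (L = 500mm) is about 29.9 points/mm², roughly four times the density at L = 1000mm.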

3.2 Plane point cloud extraction

3.2.1 Theoretical number of neighborhood points

In this experiment, we define a standard 3D camera pose for shooting in which the z-axis of the camera coordinate system is perpendicular to the plane weld workpiece. For the workpiece point cloud captured in this posture, the point cloud density of the plane point can be calculated by Eq. 5. At this point, we delineate a nearby area of radius R for any plane point. The product of its density and the area of the nearby area is the theoretical number of neighborhood points N:
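As a minimal sketch of this step, the following Python snippet evaluates the density and the theoretical number of neighborhood points. It assumes that the density is the resolution divided by the field-of-view area and that N equals the density times πR²; the function and constant names are illustrative and not taken from the paper.

```python
import math

# Values quoted in the text: camera resolution and the reference field of view.
RESOLUTION = 1920 * 1200      # F, points per frame
REF_DISTANCE_MM = 1000.0      # l1
REF_WIDTH_MM = 700.0          # w1
REF_HEIGHT_MM = 440.0         # h1


def plane_density(distance_mm):
    """Assumed plane point cloud density D in points per mm^2 at distance L."""
    width = REF_WIDTH_MM / REF_DISTANCE_MM * distance_mm
    height = REF_HEIGHT_MM / REF_DISTANCE_MM * distance_mm
    return RESOLUTION / (width * height)


def theoretical_neighbors(distance_mm, radius_mm):
    """Assumed theoretical neighbor count N = D * pi * R^2."""
    return plane_density(distance_mm) * math.pi * radius_mm ** 2
```

For example, `theoretical_neighbors(500.0, 1.0)` evaluates to roughly 94 points under these assumptions.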

However, in actual operation, it is difficult to control the robot's end to reach the standard posture for shooting. As shown in Fig. 6, under a non-standard shooting posture, the obtained plane point cloud is inclined at a certain angle to the camera's field of view plane, which makes the plane point cloud density uneven. In this case, using Eq. 6 to obtain the theoretical number of neighborhood points for plane points introduces an error. Therefore, we need to analyze this error.

As shown in Fig. 7, in the plane point cloud with an included angle of 𝜃 to the field of view plane, we select a point to find a neighborhood with a radius R and select two edge points in the direction of the plane’s inclination. In the neighborhood area, the difference between the two edge points and the center point is the largest on the z-axis:

By analyzing the function characteristics of Eq. 4, we know that among all the neighboring points, the difference between the plane point cloud density of the Boundary point1 and the center point is the largest, so there are:

From Eq. 8, we can estimate the maximum error in finding the theoretical number of neighborhood points in the neighborhood as:

The recommended working distance range of the camera in this experiment is 500–1000mm. At this time, Eq. 9 is a decreasing function. When L = 500mm, E1 takes the maximum value:

The size of 𝜃 is limited in actual operation. As shown in Fig. 8, when the angle between the laser and the plane is large, the laser covers most of the surface, and the quality of the obtained point cloud is good. Conversely, when the angle between the laser and the plane is small, part of the laser can be blocked, the laser covers less of the surface, and the quality of the obtained point cloud is poor. The red ellipses in Fig. 8 show the difference in laser coverage under the two projection angles. Based on extensive experiments, the camera posture during shooting can generally keep the angle between the z-axis and the normal of the workpiece plane below 30 degrees, namely 𝜃 < 30°. Moreover, the neighbor radius R in this experiment is 1mm. Substituting R = 1mm and 𝜃 = 30° into Eq. 10, the maximum error of the theoretical number of neighborhood points caused by the shooting posture is 0.06. Therefore, this error can be ignored.
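To give a feel for the magnitude involved, the sketch below evaluates how much the plane point cloud density changes across a tilted neighborhood. It assumes that the boundary point closest to the camera is offset by R sin 𝜃 along the z-axis and reuses the assumed density relation D = F / S. It is an illustration of the order of magnitude only, not a reconstruction of Eqs. 7 to 10.

```python
import math


def plane_density(distance_mm):
    """Assumed density in points per mm^2 (1920 x 1200 points over 700 mm x 440 mm at 1000 mm)."""
    return 1920 * 1200 * 1000.0 ** 2 / (700.0 * 440.0 * distance_mm ** 2)


def density_spread(distance_mm, radius_mm, tilt_deg):
    """Density difference between the nearest boundary point and the neighborhood center."""
    dz = radius_mm * math.sin(math.radians(tilt_deg))  # assumed z offset of the boundary point
    return plane_density(distance_mm - dz) - plane_density(distance_mm)
```

With L = 500mm, R = 1mm, and 𝜃 = 30°, `density_spread` returns about 0.06 points/mm², and the value shrinks as L grows toward 1000mm, which matches the decreasing trend described for the working range.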

3.2.2 The plane point criterion

The point cloud obtained by the 3D camera contains a certain measurement error, which leads to an error in the calculated area of the neighborhood. This is another source of error in the theoretical number of neighborhood points. The 3D camera used here has a nominal error of 0.1mm within a working range of 1000mm. Let e be the measurement error of R; then −0.1mm ≤ e ≤ 0.1mm.

As shown in Fig. 9, the red circle is the measured neighbor area, and the black circle is the theoretical neighbor area. Therefore, the calculation formula of the maximum error of the theoretical number of neighborhood points brought by the area of the neighbors is:

Since R = 1mm, substituting L = 500mm and \(\lvert e \rvert =0.1\text {mm}\) into Eq. 11, the maximum value of E2 can be obtained as 19.74. Therefore, this error cannot be ignored. At this point, Eq. 6 is corrected to obtain:

According to the monotonicity of the function, when e = − 0.1mm, we can get the minimum value of \(N^{\prime }\):

By accounting for the measurement error, Eq. 13 gives the minimum number of neighbor points in the neighborhood of a plane point. For any point in the point cloud, a nearest neighbor search with radius R yields the actual number of neighbor points Nr. When the point is a plane point, Nr is greater than or equal to the minimum value of \(N^{\prime }\). When the point is a non-plane point, Nr is less than the minimum value of \(N^{\prime }\) because the laser light is blocked. This gives the plane point criterion: a point P in the point cloud is a plane point if it satisfies Eq. 14.
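The criterion can be sketched in Python as follows. The sketch assumes the corrected count has the form N′ = D·π·(R + e)², so that its minimum is reached at e = −0.1mm, and that the density D is evaluated from the z coordinate of the point; all names are illustrative.

```python
import math

RADIUS_MM = 1.0              # neighbor radius R used in the experiment
MEASUREMENT_ERROR_MM = 0.1   # nominal camera error quoted in the text


def plane_density(z_mm):
    """Assumed density in points per mm^2 at camera distance z."""
    return 1920 * 1200 * 1000.0 ** 2 / (700.0 * 440.0 * z_mm ** 2)


def area_error(z_mm, radius_mm=RADIUS_MM, error_mm=MEASUREMENT_ERROR_MM):
    """Change in the neighbor count when the radius is over-measured by error_mm."""
    return plane_density(z_mm) * math.pi * ((radius_mm + error_mm) ** 2 - radius_mm ** 2)


def min_expected_neighbors(z_mm, radius_mm=RADIUS_MM, error_mm=MEASUREMENT_ERROR_MM):
    """Assumed minimum of N': the count for the smallest plausible radius R - |e|."""
    return plane_density(z_mm) * math.pi * (radius_mm - error_mm) ** 2


def is_plane_point(actual_neighbors, z_mm):
    """Plane point criterion: the actual count reaches the minimum expected count."""
    return actual_neighbors >= min_expected_neighbors(z_mm)
```

At z = 500mm, `area_error` evaluates to about 19.74, in line with the value quoted for E2, and the assumed threshold is about 76 neighbors.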

In this experiment, we use the plane point criterion to extract the plane point cloud of the plane weld workpiece point cloud, and the result is shown in Fig. 10.

3.3 Remove the background from the workpiece

As seen from Fig. 10, the extracted plane point cloud contains the workpiece and background planes. We need to remove the background plane to facilitate further workpiece processing. We can see from the plane point cloud that the three planes are not connected. Therefore, in this experiment, the Euclidean clustering method is used to separate the three planes, then the three planes are identified, and the background plane is eliminated.

We set the threshold of Euclidean clustering to 2mm and divide the plane point cloud into three independent plane point clouds. As shown in Fig. 11, among the three planes, the xoy projection area of the background plane is the largest. This paper uses this feature to identify and remove the background plane. However, the segmented point cloud is not a regular rectangle, so we construct a bounding box on the xoy plane from the maximum and minimum values of the x and y coordinates of each point cloud. The point cloud with the largest projected area on the xoy plane has the largest bounding box on the xoy plane. Therefore, the point clouds of the workpiece planes are obtained by removing the point cloud whose xoy bounding box is the largest.
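The clustering and background removal can be illustrated with the following brute-force Python sketch. It is a simplified stand-in for a library implementation of Euclidean clustering (a production version would normally use a k-d tree), and the function names are hypothetical.

```python
from collections import deque


def euclidean_clusters(points, tolerance):
    """Group points that are chained together by gaps no larger than tolerance."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            current = points[queue.popleft()]
            near = [i for i in unvisited
                    if sum((a - b) ** 2 for a, b in zip(points[i], current)) <= tolerance ** 2]
            for i in near:
                unvisited.remove(i)
                queue.append(i)
                members.append(i)
        clusters.append([points[i] for i in members])
    return clusters


def xoy_bbox_area(cluster):
    """Area of the axis-aligned bounding box of the cluster projected onto the xoy plane."""
    xs = [p[0] for p in cluster]
    ys = [p[1] for p in cluster]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def remove_background(clusters):
    """Drop the cluster with the largest xoy bounding box, assumed to be the background."""
    background = max(clusters, key=xoy_bbox_area)
    return [c for c in clusters if c is not background]
```

A typical call would be `remove_background(euclidean_clusters(plane_points, 2.0))`, where `plane_points` is a list of (x, y, z) tuples in mm.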

3.4 Extract workpiece edge lines

3.4.1 Extract the edge points of the workpiece

After the above point cloud processing, we have obtained the plane information of the plane weld workpiece. In this section, we extract the edge of the workpiece plane to get the line information of the workpiece.

A neighborhood of radius R is delineated around each point of the plane point cloud. We can obtain each point's theoretical number of neighborhood points according to Eq. 6. However, as shown in Fig. 12, the actual number of neighbor points of an edge point is much smaller than its theoretical number of neighborhood points because the neighborhood is cut off by the point cloud boundary. Based on this characteristic, we perform a radius search for every point on the plane point cloud. When the actual number of neighborhood points Nr and the theoretical number of neighborhood points N satisfy Nr < N × μ (μ < 1), the point is regarded as an edge point. To extract the edge accurately, we determined the threshold μ = 0.5 experimentally. This gives the plane edge point criterion of this experiment: a point P on the plane point cloud is an edge point if it satisfies Eq. 15.

According to the above criteria, the plane edge points are obtained from the workpiece plane point clouds. The results are shown in Fig. 13, where the green and red represent the edge points of the two workpiece planes, respectively.
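A minimal Python sketch of the edge point criterion is given below. The brute-force radius search stands in for a k-d tree query, and whether Nr counts the query point itself is not stated in the text; this sketch excludes it, which is an assumption.

```python
import math


def count_neighbors(points, index, radius):
    """Number of other points within radius of points[index] (brute-force search)."""
    center = points[index]
    return sum(1 for i, p in enumerate(points)
               if i != index and math.dist(p, center) <= radius)


def is_edge_point(actual_neighbors, theoretical_neighbors, mu=0.5):
    """Plane edge point criterion: Nr < mu * N with mu < 1."""
    return actual_neighbors < mu * theoretical_neighbors
```

For instance, with a theoretical count of about 94 neighbors, a point that has only 30 actual neighbors is classified as an edge point, while a point with 60 neighbors is not.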

3.4.2 Segment plane edge point cloud

Because the point cloud is unordered, the individual edges in the obtained workpiece plane edge point cloud cannot yet be distinguished from one another. In this paper, the extraction of weld feature points requires a linear fit of the point cloud of each edge. Therefore, we need to further segment the plane edge point cloud.

This paper proposes an iterative segmentation algorithm based on the Random Sample Consensus algorithm (RANSAC) [21] to segment, in a single run, all point cloud subsets that share the same geometric model. The algorithm proceeds as follows. After the geometric model is selected, RANSAC segmentation is applied repeatedly, and after each pass the segmented points are removed so that the remaining points form the input of the next pass. The number of remaining points is compared with a preset threshold, and once it falls below the threshold, the remaining point cloud is no longer segmented. The specific steps are shown in Fig. 14. Finally, we set a threshold of 1mm and use this algorithm to segment the edge point cloud of the workpieces. The results are shown in Fig. 15, where different colors represent different plane workpiece edge lines.
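The iteration described above can be sketched in pure Python as follows. This is an illustrative version that uses a line model and a basic RANSAC loop, not the authors' C++ implementation; the parameter names, the default iteration count, and the fixed random seed are assumptions.

```python
import math
import random


def point_line_distance(point, origin, direction):
    """Distance from a 3D point to the line through origin along direction."""
    v = [p - o for p, o in zip(point, origin)]
    cross = (v[1] * direction[2] - v[2] * direction[1],
             v[2] * direction[0] - v[0] * direction[2],
             v[0] * direction[1] - v[1] * direction[0])
    return math.hypot(*cross) / math.hypot(*direction)


def ransac_line(points, threshold, iterations, rng):
    """Return the largest inlier set found for a line through two sampled points."""
    best = []
    for _ in range(iterations):
        a, b = rng.sample(points, 2)
        direction = [q - p for p, q in zip(a, b)]
        if not any(direction):
            continue  # duplicate points cannot define a line
        inliers = [p for p in points if point_line_distance(p, a, direction) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    return best


def iterative_ransac_lines(points, threshold=1.0, min_remaining=5, iterations=200, seed=0):
    """Repeatedly extract a line and remove its inliers until few points remain."""
    rng = random.Random(seed)
    remaining = list(points)
    lines = []
    while len(remaining) > max(min_remaining, 1):
        inliers = ransac_line(remaining, threshold, iterations, rng)
        if len(inliers) < 2:
            break
        lines.append(inliers)
        taken = set(inliers)
        remaining = [p for p in remaining if p not in taken]
    return lines
```

Points are expected as (x, y, z) tuples. Each returned list holds the inliers of one edge line, which can then be refitted with a more accurate method.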

4 Weld extraction

4.1 Weld edge line extraction

By preprocessing the initial point cloud, we obtain the line and plane geometry information of the workpiece. On this basis, we extract the weld feature information. For plane weld workpieces, this paper proposes a method for extracting weld feature points based on centroid positioning. The principle of this method is introduced by taking the plane I-type butt workpiece model as an example. As shown in Fig. 16, we first denote the extracted workpiece planes as Plane1 and Plane2. The weld feature of the plane weld workpiece refers to the edges of the two planes on the side of the weld, denoted as Edge1 and Edge2, respectively. We find the centroids of Plane1 and Plane2, denoted as Centroid1 and Centroid2. Among all the edges, Edge1 and Edge2 are the only ones that have Centroid1 and Centroid2 on opposite sides. We refer to Edge1 and Edge2 collectively as the weld edge line. Due to the characteristics of affine transformation, when the workpiece plane point cloud is projected onto the xoy plane, the above geometric features remain unchanged. Therefore, on the xoy plane, the edge line of the weld can be extracted according to the position of the centroids of the two planes.

We assume that the projection equation of any one of the edge lines on the xoy plane is fedge(x,y). The xoy plane projection coordinates of Centroid1 and Centroid2 are denoted as C1(xc1,yc1) and C2(xc2,yc2), respectively. When Centroid1 and Centroid2 are distributed on both sides of an edge line, the following relationship exists.

In Section 3.4.2, we obtained the equations of these lines when using the RANSAC-based method to segment the plane edge point cloud. Therefore, we can extract the edge lines Edge1 and Edge2 of the weld using the above geometric principle. Finally, the extraction effect of the weld edge lines is shown in Fig. 17.
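The centroid test can be sketched in Python as follows. It assumes each projected edge line is written as f(x, y) = a·x + b·y + c and that two centroids lie on opposite sides of a line when the product of the two function values is negative; the tuple representation of a line is illustrative.

```python
def centroid_xy(points):
    """Centroid of a point set projected onto the xoy plane."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def line_value(line, point):
    """Evaluate f(x, y) = a*x + b*y + c for line = (a, b, c)."""
    a, b, c = line
    return a * point[0] + b * point[1] + c


def select_weld_edges(edge_lines, plane1_points, plane2_points):
    """Keep the edge lines that separate the two plane centroids."""
    c1 = centroid_xy(plane1_points)
    c2 = centroid_xy(plane2_points)
    return [line for line in edge_lines
            if line_value(line, c1) * line_value(line, c2) < 0]
```

For two rectangular plates placed side by side with a 1mm gap, only the two edges facing the gap pass this test.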

4.2 Weld edge line fitting

Due to the limitations of the RANSAC algorithm, the equations of the extracted weld edge lines are not accurate enough and need to be re-fitted. Here, we use the least squares method for fitting.

The main idea of the least squares method is to solve the unknown parameters so that the sum of the squares of the difference between the theoretical value and the observed value is minimized. The observed value is multiple sets of samples, and the theoretical value is the value obtained by a hypothetical fitting function. For example, we have m samples (xi,yi)(i = 1,2,3,…,m) with only one feature. The sample is fitted with a polynomial ht(x) of degree n.

In Eq. 17, t(t0,t1,t2,⋯ ,tn) denotes the parameter to be solved. The residual sum of squares is:

The least squares method considers that when C takes the minimum value, t(t0,t1,t2,⋯ ,tn) is the optimal solution. The polynomial function at this time is the optimal fit of the sample. In this experiment, the matrix solution method of the least squares method is used to solve the parameters, which will not be elaborated on here.

The point cloud data is a three-dimensional data sample. We can complete the least squares polynomial fitting of the point cloud by selecting one coordinate as the feature and the other two coordinates as observations, which forms two sets of samples. The two resulting polynomials together describe the space curve. This experiment targets plane welds. For simplicity, we choose whichever of x and y has the larger distribution range as the feature.

When x is selected as the feature, then y and z are respectively used as observations, and the samples are (xi,yi),(xi,zi)(i = 1,2,3,⋯ ,m). When y is selected as the feature, then x and z are respectively used as observations, and the samples are (yi,xi),(yi,zi)(i = 1,2,3,⋯ ,m).

For straight welds, we set the order n of the polynomial as 1. Finally, the fitting equations of the weld edge of the plane V-type butt workpiece model are obtained as Eqs. 19 and 20. Equations 21 and 22 show the fitting equations of the weld edge of the plane I-type butt workpiece model.
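For the straight-weld case (n = 1), the fit can be sketched with the closed-form least squares solution below. This is a minimal illustration rather than the matrix solver used in the experiment, and the dictionary layout of the result is an assumption made for readability.

```python
def fit_line_1d(features, observations):
    """Closed-form least squares for observation = t0 + t1 * feature."""
    m = len(features)
    mean_f = sum(features) / m
    mean_o = sum(observations) / m
    sxx = sum((f - mean_f) ** 2 for f in features)
    sxy = sum((f - mean_f) * (o - mean_o) for f, o in zip(features, observations))
    t1 = sxy / sxx
    return mean_o - t1 * mean_f, t1


def fit_edge(points):
    """Fit a 3D edge using the wider-ranging of x and y as the feature."""
    xs, ys, zs = zip(*points)
    if max(xs) - min(xs) >= max(ys) - min(ys):
        return {"feature": "x", "y": fit_line_1d(xs, ys), "z": fit_line_1d(xs, zs)}
    return {"feature": "y", "x": fit_line_1d(ys, xs), "z": fit_line_1d(ys, zs)}
```

Each fitted pair is (t0, t1), so an edge with feature x is described by y = t0 + t1·x together with z = t0′ + t1′·x.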

For the plane lap workpiece model, Edge1 and Edge2 need to be sorted for subsequent weld extraction due to the drop between the workpiece planes. When shooting with a 3D camera, the z-axis is basically perpendicular to the workpiece plane. Therefore, when the features are the same, the z value of the weld edge of the lower layer is larger. Equations 23 and 24 show the fitting equations of the lower weld edge Edge1 and upper weld edge Edge2, respectively.

Finally, the fitting effect of the weld edge lines is shown in Fig. 18.

4.3 Solving weld equation

We denote the fitting equations of Edge1 and Edge2 as fE1 and fE2, and the fitting equations of the three welds as fV, fI, and fD. For each workpiece, we analyze the geometric relationship between Edge1, Edge2, and the weld, and then solve the weld equation.

As shown in Fig. 19a, due to the symmetry of the weld, the weld of the plane V-type butt workpiece model is obtained by shifting the midline of Edge1 and Edge2 downward by the groove depth d. Equation 25 can be obtained, where \(\overrightarrow {n_{V}}\) is the normal vector of the upper plane of the workpiece pointing to the lower plane.

The solution to \(\overrightarrow {n_{V}}\) is shown in Fig. 20. First, select three non-collinear points A, B, and C on the plane, use these three points to form any two vectors, and normalize the vector product of the two vectors to be the normal vector \(\overrightarrow {n_{1}}\) of the plane. Here, in order to reduce the amount of computation, we choose the endpoints of Edge1 and Edge2. Equation 26 shows the solution of \(\overrightarrow {n_{1}}\).

Then, according to the shooting posture of the camera, the angle β between the positive direction of the z axis of the camera coordinate system and \( \overrightarrow {n_{V}}\) is an acute angle. Therefore, take the inner product of \(\overrightarrow {n_{1}}\) and the unit vector \(\overrightarrow {Z_{\text {cam}}}(0,0,1)\) in the positive direction of the z-axis of the camera coordinate system, and adjust the direction of \(\overrightarrow {n_{1}}\) according to the inner product to obtain \(\overrightarrow {n_{V}}\). \(\overrightarrow {n_{V}}\) can be solved by:
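The normal vector computation and its orientation can be sketched as follows. The sketch assumes that the normal is flipped whenever its inner product with the camera z-axis is negative, so that the angle between them is acute; the function name is illustrative.

```python
import math


def oriented_plane_normal(a, b, c, camera_z=(0.0, 0.0, 1.0)):
    """Unit normal of the plane through a, b, c, oriented toward camera_z."""
    ab = [q - p for p, q in zip(a, b)]
    ac = [q - p for p, q in zip(a, c)]
    n = [ab[1] * ac[2] - ab[2] * ac[1],
         ab[2] * ac[0] - ab[0] * ac[2],
         ab[0] * ac[1] - ab[1] * ac[0]]
    length = math.sqrt(sum(v * v for v in n))
    if length == 0.0:
        raise ValueError("the three points are collinear")
    n = [v / length for v in n]
    # Flip the normal when it makes an obtuse angle with the camera z-axis.
    if sum(u * v for u, v in zip(n, camera_z)) < 0.0:
        n = [-v for v in n]
    return tuple(n)
```

Swapping the order of two input points reverses the raw cross product, but the orientation step returns the same result in both cases.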

After measurement, the groove depth d is 5mm, yielding \( \overrightarrow {n_{V}}=(0.127008, -0.0211309, 0.991677)\). It is known that fE1 and fE2 of the plane V-type butt workpiece weld have the form of Eqs. 19 and 20. Substitute them into Eq. 25, and the equation of the weld can be obtained, as shown in Eq. 28.

Finally, the translated line is sampled with a fixed step size over its domain of definition. The resulting weld line is shown in Fig. 21a, where the red points represent the weld.

As shown in Fig. 19b, due to the symmetry of the weld, the weld of the plane I-type butt workpiece model is the midline of Edge1 and Edge2. This gives Eq. 29.

For the plane I-type butt workpiece, fE1 and fE2 are given by Eqs. 21 and 22. Substituting them into Eq. 29 gives the equation of the weld, Eq. 30. Finally, the weld is obtained as shown in Fig. 21b.

As shown in Fig. 19c, the weld of the plane lap workpiece model is Edge1. It is known that the fE1 of the weld of the plane lap workpiece is Eq. 23. That is, the equation of the weld is Eq. 23. Finally, the weld is obtained as shown in Fig. 21c.
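The three cases can be illustrated on sampled edge points with the Python sketch below. It assumes that Edge1 and Edge2 are sampled at the same feature values so that points can be paired, and that the V-type weld is the midline shifted by the groove depth d along the oriented normal; the helper names are hypothetical.

```python
def midline(edge1_points, edge2_points):
    """Pointwise midpoint of two edges sampled at matching feature values."""
    return [tuple((a + b) / 2.0 for a, b in zip(p, q))
            for p, q in zip(edge1_points, edge2_points)]


def offset_along(points, direction, distance):
    """Shift every point by distance along a unit direction vector."""
    return [tuple(c + distance * d for c, d in zip(p, direction)) for p in points]


def v_groove_weld(edge1_points, edge2_points, normal, depth_mm):
    """Assumed V-type weld: the midline shifted by the groove depth along the normal."""
    return offset_along(midline(edge1_points, edge2_points), normal, depth_mm)
```

For the I-type butt joint, `midline` alone gives the weld, and for the lap joint the weld is simply the sampled lower edge Edge1.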

5 Experiments and results

The extracted welds are located in the camera coordinate system. To realize the welding trajectory planning, we first convert the welding seam feature points to the robot base coordinate system and then sample the feature points according to the robot's path control rules to form the robot's welding trajectory. Finally, a comparative experiment is designed, and the experimental results are analyzed to verify the speed, accuracy, and feasibility of the proposed method.

5.1 Welding trajectory planning

Converting the weld feature points to the robot base coordinate system requires a high-precision hand-eye calibration. As shown in Fig. 22, during hand-eye calibration, the positions of the robot's base and the calibration board are fixed. When the robot shoots the calibration board in different poses, the camera position relative to the robot's end also remains unchanged. Therefore, the relationship shown in Eq. 32 holds during calibration, where T represents the homogeneous transformation matrix [22].

After rearranging the terms in Eq. 32, we can get:

Fig. 22 Schematic diagram of hand-eye calibration [17]

Because \({}^{\mathrm{End2}}T_{\mathrm{Camera2}} = {}^{\mathrm{End1}}T_{\mathrm{Camera1}}\), the problem reduces to solving an equation of the form AX = XB. By taking multiple shots in different robot poses, \({}^{\mathrm{End}}T_{\mathrm{Camera}}\) can be obtained.

We need to transform the points in the camera coordinate system into the base coordinate system through the transformation matrix \({}^{\mathrm{Base}}T_{\mathrm{Camera}}\). The formula is shown in Eq. 35, where p denotes any point in space.

In this experiment, the robot poses when shooting the plane V-type butt joint workpiece model, the plane I-type butt joint workpiece model, and the plane lap joint workpiece model are (−613.50, −284.81, 296.28, 178.28, 1.04, 107.12), (−516.72, −231.54, 186.02, 176.89, 6.33, 105.70), and (−516.72, −231.54, 186.02, −176.89, 6.33, 105.70), respectively. The format of the pose data is (x, y, z, rx, ry, rz): the first three values are coordinates in mm, and the last three are RPY angles in degrees. Converting the robot pose data into a homogeneous transformation matrix gives \({}^{\mathrm{Base}}T_{\mathrm{End}}\).

Finally, we substitute the fitted weld points into Eq. 35 to obtain the weld in the robot base coordinate system, as shown in Fig. 23.
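The coordinate conversion can be sketched in Python as below. The RPY convention is not specified in the text, so this sketch assumes R = Rz(rz)·Ry(ry)·Rx(rx), which is a common choice but may differ from the controller used in the experiment. The hand-eye matrix is passed in as an argument because its calibrated value is not given.

```python
import math


def pose_to_matrix(x, y, z, rx_deg, ry_deg, rz_deg):
    """Homogeneous matrix from (x, y, z, rx, ry, rz), assuming R = Rz * Ry * Rx."""
    rx, ry, rz = (math.radians(v) for v in (rx_deg, ry_deg, rz_deg))
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx, x],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx, y],
        [-sy, cy * sx, cy * cx, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(matrix, point):
    """Apply a homogeneous transformation to a 3D point."""
    x, y, z = point
    return tuple(matrix[i][0] * x + matrix[i][1] * y + matrix[i][2] * z + matrix[i][3]
                 for i in range(3))


def camera_to_base(base_T_end, end_T_camera, point_camera):
    """Map a camera-frame point to the base frame through the end-effector frame."""
    return transform_point(matmul(base_T_end, end_T_camera), point_camera)
```

In use, `base_T_end` would come from `pose_to_matrix` applied to the recorded robot pose, and `end_T_camera` from the hand-eye calibration result.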

5.2 Experimental result analysis

In order to verify the accuracy of the welding track, we take the manual teaching welding method as the benchmark and compare the taught track with the welding track obtained in this experiment for error analysis.

First, we manually teach points for the three kinds of welds and take 12 points for each weld. As shown in Fig. 24, the black points are the teaching points, and the blue lines are the welding trajectories obtained by the experiment. Then, we calculate the minimum distance from each manual teaching point to the straight line of the obtained welding trajectory, which represents the error between the manual teaching method and the method in this paper. Finally, the error analysis is shown in Fig. 25.
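The error measure can be sketched as the perpendicular distance from each teaching point to the trajectory line. In the sketch below the line is represented by a point and a direction vector, which is an assumed representation.

```python
import math


def point_to_line_distance(point, line_point, line_direction):
    """Perpendicular distance from a 3D point to a straight line."""
    v = [p - q for p, q in zip(point, line_point)]
    cross = (v[1] * line_direction[2] - v[2] * line_direction[1],
             v[2] * line_direction[0] - v[0] * line_direction[2],
             v[0] * line_direction[1] - v[1] * line_direction[0])
    return math.hypot(*cross) / math.hypot(*line_direction)


def trajectory_errors(teach_points, line_point, line_direction):
    """Distance of every teaching point to the planned straight trajectory."""
    return [point_to_line_distance(p, line_point, line_direction) for p in teach_points]
```

The maximum of the returned list can then be compared against the tolerance required by the welding process.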

As shown in Fig. 25, the errors of the proposed method stay within 1mm for the welding trajectories of all three plane welds. This accuracy meets the error requirements of industrial welding scenarios. Therefore, for these plane weld types, the proposed welding trajectory planning method can replace manual teaching.

At the end of the experiment, according to the programming rules of the robot controller, we reasonably sample the fitting point cloud of the welding seam to obtain the robot welding trajectory points. After reasonably planning the robot’s end pose, the obtained welding trajectory points are sent to the robot controller. The robot can drive the welding torch to perform the welding task accurately, which further verifies the feasibility and accuracy of the method.
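One simple way to obtain trajectory points from a fitted straight weld is to sample it at a fixed spacing. The sketch below assumes the weld is a line segment between two known endpoints and that the controller accepts evenly spaced waypoints; the function name, endpoints, and 25 mm step are illustrative assumptions, since the actual sampling rule depends on the controller's programming rules.

```python
import math

def sample_segment(start, end, step_mm):
    """Return waypoints from start to end spaced at most step_mm apart."""
    length = math.dist(start, end)
    # Use enough equal intervals that no gap exceeds step_mm.
    n = max(1, math.ceil(length / step_mm))
    return [tuple(s + (e - s) * k / n for s, e in zip(start, end))
            for k in range(n + 1)]

# Placeholder weld endpoints in the base frame (mm).
waypoints = sample_segment((0.0, 0.0, 0.0), (100.0, 0.0, 0.0), 25.0)
```

For this 100 mm placeholder segment the result is five waypoints, including both endpoints. The torch orientation at each waypoint still has to be planned separately.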

5.3 Algorithm efficiency analysis

In industrial sites, improving efficiency is one of the main motivations for replacing manual labor with automated equipment. The algorithm in this experiment was initially prototyped in Matlab and finally implemented in C++. Because the point cloud data are large, the implementation uses multi-threaded programming to improve the algorithm's efficiency. The average running time of the algorithm over repeated experiments is shown in Table 2.

As Table 2 shows, the algorithm takes 4.308 s to process the point cloud of a plane weld. The algorithm is therefore fast enough to meet the efficiency requirements of industrial welding scenarios.

6 Conclusion

Facing industrial requirements, this paper proposes a novel 3D vision-based plane weld trajectory planning method. This method finally realizes the automatic robot welding of three types of plane welds without teaching and programming. The advantages of this method are as follows:

1. This method liberates labor, improves welding efficiency, and solves the problems of poor manual welding precision and consistency.

2. The method realizes the automatic trajectory planning of the plane welding workpiece, avoids the complicated programming work of the welding robot, and improves the automation degree of the robot welding.

References

Wang X, Zhou X, Xia Z, Gu X (2021) A survey of welding robot intelligent path optimization. J Manuf Process 63:14–23

Maiolino P, Woolley R, Branson D, Benardos P, Popov A, Ratchev S (2017) Flexible robot sealant dispensing cell using RGB-D sensor and off-line programming. Robot Comput Integr Manuf 48:188–195

Wu Z, Sun C (2010) Modeling and parameters analysis of vision measuring sensor

Geng J (2011) DLP-based structured light 3D imaging technologies and applications. In: Emerging Digital Micromirror Device Based Systems and Applications III, SPIE, pp 95–109

Li Z, Cui J, Wu J, Tan J (2016) Structural parameters analysis and optimization of line-structured light measuring system. In: 2016 23rd International conference on mechatronics and machine vision in practice (M2VIP), IEEE, pp 1–6

Xu Y, Yu H, Zhong J, Lin T, Chen S (2012) Real-time seam tracking control technology during welding robot GTAW process based on passive vision sensor. J Mater Process Technol 212(8):1654–1662

Kiddee P, Fang Z, Tan M (2016) An automated weld seam tracking system for thick plate using cross mark structured light. Int J Adv Manuf Technol 87(9-12):3589–3603

Liang B, Xu X, Gong S, Wang Q, Wang X (2016) Dual-beam laser welding and seam tracking control technology for 3D T-beam. Trans China Welding Inst 37(2):47–50

Shao WJ, Huang Y, Zhang Y (2018) A novel weld seam detection method for space weld seam of narrow butt joint in laser welding. Optics & Laser Technol 99:39–51

Gao Y, Zhong P, Tang X, Hu H, Xu P (2021) Feature extraction of laser welding pool image and application in welding quality identification. IEEE Access 9:120193–120202

Jing L, Fengshui J, En L (2016) RGB-D sensor-based auto path generation method for arc welding robot. In: Proceedings of the 28th Chinese control and decision conference (2016 CCDC). IEEE, New York, pp 4390–4395

Ahmed SM, Tan YZ, Chew CM, Mamun AA, Wong FS (2018) Edge and corner detection for unorganized 3D point clouds with application to robotic welding. In: 2018 IEEE/RSJ International conference on intelligent robots and systems (IROS). IEEE, Madrid, pp 7350–7355

Peng R, Navarro-Alarcon D, Wu V, Yang W (2020) A point cloud-based method for automatic groove detection and trajectory generation of robotic arc welding tasks

Gao Y, Ping C, Wang L, Wang B (2021) A simplification method for point cloud of T-profile steel plate for shipbuilding. Algorithms 14(7):202

Patil V, Patil I, Kalaichelvi V, Karthikeyan R (2019) Extraction of weld seam in 3D point clouds for real time welding using 5 DOF robotic arm. In: 2019 5th International conference on control, automation and robotics (ICCAR). IEEE, Beijing, China, pp 727–733

Gao J, Li F, Zhang C, He W, He J, Chen X (2021) A method of D-type weld seam extraction based on point clouds. IEEE Access 9:65401–65410

Geng Y, Zhang Y, Tian X, Shi X, Wang X, Cui Y (2021) A method of welding path planning of steel mesh based on point cloud for welding robot. Int J Adv Manuf Technol 116(9):2943–2957

Geng Y, Zhang Y, Tian X, Shi X, Wang X, Cui Y (2022) A novel welding path planning method based on point cloud for robotic welding of impeller blades. Int J Adv Manuf Technol, 1–14

Yang L, Liu Y, Peng J, Liang Z (2020) A novel system for off-line 3D seam extraction and path planning based on point cloud segmentation for arc welding robot. Robot Comput Integr Manuf 64:101929

Wang Z (2020) Review of real-time three-dimensional shape measurement techniques. Measurement 156:107624

Fischler MA, Bolles RC (1981) Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM 24(6):381–395

Li M, Du Z, Ma X, Dong W, Gao Y (2021) A robot hand-eye calibration method of line laser sensor based on 3D reconstruction. Robot Comput Integr Manuf 71:102136

Funding

This work was supported by the National Natural Science Foundation of China (No. U20A20201), Shandong Provincial Key Research and Development Program (2022CXGC010101), Taishan Industry Leading Talent Project, Shandong Provincial Natural Science Foundation (No. ZR2021QF024), and Shandong Provincial Postdoctoral Innovation Program (No. 202102009).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Author contribution

Yuankai Zhang was a major contributor in writing the manuscript. All authors read and approved the final manuscript.

Availability of data and materials

Not applicable.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent to publish

Not applicable.

About this article

Cite this article

Yuankai, Z., Yong, J., Xincheng, T. et al. A point cloud-based welding trajectory planning method for plane welds. Int J Adv Manuf Technol 125, 1645–1659 (2023). https://doi.org/10.1007/s00170-022-10699-x
