Abstract

Laser cladding is a complex manufacturing process. As the laser beam melts the feedstock powder, small changes in laser power or traverse speed reflect on deviations of the deposition’s geometry. Thus, fine-tuning these process parameters is crucial to achieve desirable results. In order to monitor and further understand the laser cladding process, an automated method for clad bead final geometry estimation is proposed. To do so, six different convolutional neural network architectures were developed to analyze the process’ molten pool image acquired by a 50-fps coaxial camera. Those networks receive both the camera image and the process parameters as inputs, yielding width and height of the clad beads as outputs. The results of the network’s performances show testing error mean values as little as 8 μm for clad beads around a millimeter in height. For the width dimension, in 95% of the cases, the error remained under 15% of the bead’s width. Plots of the target versus the estimated values show coefficients of determination over 0.95 on the testing set. The architectures are then compared, and their performances are discussed. Deeper convolutional layers far exceeded the performance of shallower ones; nonetheless, deeper densely connected layers decreased the performances of the networks when compared with shallower ones. Those results represent yet another alternative on intelligent process monitoring with potential for real-time usage, taking the researches one step further into developing a closed-loop control for this process.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Laser cladding (LC) is an additive manufacturing process in which a laser beam melts feedstock material into a substrate, producing a clad bead. Disturbances on the resulting clad bead geometry usually lead to deviances on the part’s final shape, loss of surface quality, and even structural defects, leading to the disposal of the final part. It is, thus, crucial to closely monitor this geometry. An alternative for its monitoring is through the acquisition of the molten pool image.

Through optical monitoring of the molten pool, one can acquire important information about the process.

The molten pool size reveals information of the final geometry of that clad bead, while its brightness is closely related to molten pool temperature.

The first approaches aiming at molten pool image acquisition for the laser cladding process consisted of the use of multiple cameras with different specifications directed to the molten pool, successfully measuring the clad bead height and width. The very first approaches, by Meriaudeau et al., consisted of two different cameras directed at the molten pool, on different angles [1,2,3,4]. It was then possible to acquire the molten pool image for the first time, even measuring clad bead height, width, and molten pool temperature. A later approach, by Hu et al., developed a first closed-loop controller where the laser power was regulated accordingly to the area of the molten pool [5, 6].

Following, Toyserkani et al. developed a closed-loop PID-based control system based on clad bead height measurement [7]. Xing [8] developed an optical monitoring system based on colorimetry, achieving both molten pool temperature and clad bead height measurement through laser triangulation. Later, Hofman et al. gathered molten pool infrared (IR) wavelengths with a laser coaxial IR camera [9]. After some image processing, the molten pool boundary could be segmented, and its area, width, length, and rotation angle were measured. Molten pool width was then used for a PID-based closed-loop control, which regulated molten pool temperature by adjusting laser power. Lei et al. achieved a molten pool image acquisition on a CO2 laser-based cladding process, measuring molten pool temperature through the image’s brightness [10].

Arias et al. [11] developed an FPGA-based system which gathered mostly IR wavelengths detecting molten pool based on blob detection. With the molten pool width as the biggest detected blob’s width, a closed-loop control was implemented by adjusting laser power. From the molten pool image also came the work of Ocylok et al. [12], where image thresholding successfully segmented the molten pool and relations between its geometry, and process parameters were found.

Moralejo et al. [13] developed a PI-based control-loop for molten pool geometry in real time. Molten pool border was previously detected by laser cladding experiments without the addition of powder. Its width was chosen as the control variable due to its overall stability.

Iravani-Tabrizipour et al. [14, 15] continued the work from Toyserkani et al. [7] implementing a trinocular system for measuring clad bead height from three radially spaced cameras. After fitting the molten pools into ellipses, their main features were fed into a recurrent neural network (RNN) which calculated clad bead height. This became the first works that made use of neural networks for geometry estimation on laser cladding.

Mondal et al. [16] aimed at finding a relationship between bead geometry and process input parameters. Through cross sectioning, bead geometry was acquired. This bead geometry, along with the process input parameters (laser power, travel speed, and mass flow) was fed into an artificial neural network (ANN), yielding a coefficient of determination (R2) of 0.981 for the best fit line.

Aggarwal et al. [17], targeting at bead geometry optimization, took three approaches—one experimental, one based on predictive models, and the last on ANN, all of them are based on cross section measurements. The ANN approach surpassed the remaining ones, with 96.3% of confidence level on its results.

Caiazzo et al. [18] acquired data from cross sections to develop an ANN capable of estimating process parameters. At first, the ANN was used to estimate the process parameters from bead geometries that were already deposited, based on their cross sections. Later, it was reversed, using the bead geometry to estimate process parameters, achieving errors below 6% for travel speed and powder feed rate, and of 2% for laser power.

Finally, Huaming et al. [19] predicted geometric characteristics from input process parameters by a genetic algorithm and backpropagation neural network-based approach (GA-BPNN). Again, based on cross section measurements, the ANN was trained, but their architectures were optimized by the use of genetic algorithms (GA). The resulting networks were trained much faster than the initial ones, achieving superior results. It was also observed the better performance of the networks which output only a single parameter at a time.

Other approaches involving either the molten pool or the clad bead measurement consist on low-cost alternatives [20], laser triangulation over newly formed clad bead [21], and even a methodology based on previous experiments calibration [22]. An approach to temperature acquisition through black body calibration could also be found [23].

An attentive reader may observe mainly two types of research. While most of them use the molten pool image in order to acquire molten pool-related dimensions, others use solely bead geometry measurements acquired from cross sections for bead geometry estimation with ANN techniques. An approach where both molten pool image and process parameters are combined and fed to an appropriate ANN has not been found on this review.

This work presents a novel approach where the molten pool image is directly processed by a convolutional neural network (CNN). As input, the network takes both molten pool image and input process parameters, estimating clad bead width and height in return. Six different CNN architectures were tested. To make the network training feasible, measurements from the clad beads were acquired by active photogrammetry means. Different height and width values were measured to match each corresponding image frame. After training, the CNN estimated width and height values in great agreement with the experimental values.

2 Experimental setup

2.1 Image acquisition and bead deposition

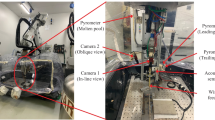

The experiments were performed on a laser cladding system with coaxial powder nozzle head COAX-50-S, provided by the Fraunhofer ILT. The laser source is a fiber laser from IPG Photonics® with a maximum laser power of 10 kW. Feedstock material consisted of AHC 100.29 Höganäs-manufactured iron (99.98%) powder, fed at 6.51 g/min. The substrate material is composed of ASTM A36 steel, in the shape of a plate with the 50 × 200 × 9.52 mm dimensions.

Along with this system, there is a beam splitter, which allows a camera to be coaxially set to the laser beam; its optical path and schematic can be seen in Fig. 1. The camera used is a grayscale CMOS device from PointGrey, model BFLY-PGE-20E4M-CS, with a resolution of 1600 × 1200 pixels, acquiring images at 50 frames per second (fps). Due to the laser’s high luminosity, neutral density filters from Newport were used, resulting in a combined neutral density of 2. An iris is also present on the system to further regulate the amount of light entering the system.

For acquiring the images, the Spinview software from PointGrey was used. On the software, the camera gain was set to 15. On the computer configurations, the maximum transmit unit between the computer and camera was set to 9000.

Regarding the process parameters, six values of laser power and four values of travel speed were used, resulting in 24 unique combinations, here named a set of beads. A total of 3 sets of 20 mm long beads were deposited with the same input configuration presented in Table 1. From those, one whole set of beads was destinated to the test phase of the network training, while the remaining ones were used for the training phase.

2.2 Bead measurement

The clad beads’ width and height measurements were acquired through active photogrammetry by an ATOS Gom system. The compact scan model was used with its minimum scanning volume configuration. After acquiring the data cloud, the three sets of beads resulted in 72 individual beads that were manually segmented. Each of those beads was then sectioned into a specific number of cross sections.

Because the camera recorded images at 50 fps, there is a theoretical number of recorded frames for each bead which depends on its travel speed, as seen in Table 2. Those numbers of sections were then extracted from each bead with the use of the GOM Inspect software.

From those sections, bead width and height were measured, according to their definition in Fig. 2. Bead height is here defined similarly to the literature, as the largest distance between substrate level and the bead’s surface, normal to the substrate. The bead width dimension, however, is slightly different. It is defined as the largest distance parallel to the substrate between points on the clad bead surface that has at least 5% of the total clad bead height. This definition was incorporated on the measurement algorithm so that the extraction of those dimensions could be performed automatically and accurately.

With the height and width dimensions for each frame, everything was then linked together through a comma-separated values (CSV) file by a Python script. This CSV file was later used as a Pandas library DataFrame for the CNN training.

The matching between image frames and sections was not perfect and did introduce errors on the measurement, as there would happen in any measurement procedure. The performances of the CNN, however, were not significantly affected by those errors.

2.3 CNN development

The Keras library was chosen as the main tool for the CNN development, on the Python 3.6 language, due to its easy to use interface and completeness. The Scipy library was also used for support calculations, along with the Pandas and the Glob libraries for file manipulation.

The OpenCV library was used for image preprocessing, resulting in images like the one in Fig. 3. The preprocessing consisted of eliminating completely black images (acquired on process start, end, and between beads), cropping the image region corresponding to the inside of the nozzle, masking out the ring-shaped region where there were nozzle inner reflections, and resizing the images to 128 × 128 pixels for processing time saving on the following CNN training. Resizing the images did reduce the resolution of them by a factor of 23.7% when considering the quantity of pixels per image. Although, they still retained enough information for the networks to successfully estimate the bead dimensions.

Regarding the architecture, the developed networks are hybrid CNN, meaning they have two different types of inputs—molten pool images and process parameters values. A CNN branch is dedicated to the molten pool image input, while a standard, densely connected layer is used as an input for the process parameter branch. Both branches are later concatenated together, followed by more densely connected layers, which are ultimately responsible for outputting the estimated bead geometry.

Six different architectures were tested, which can be seen in Table 3. A previous experimental approach leads to architecture A. Then, the effects of the number of convolutional layers was analyzed, leading to architectures B and C. As the A architecture performed the best, its structure was used to further analyze the quantity of dense layers, leading to architectures D and E. Finally, after resulting as the best performance once more, another optimizer was tested, resulting on architecture F.

Those architectures variated on the number of layers on the CNN branch, the number of neurons on the parameter branch, and on the number of densely connected layers after the branches’ concatenation. The parameter input layer always consisted of a single densely connected layer, although the number of neurons was set as the same as the number of neurons on the CNN branch after flattening. It was done so that both branches had the same number of neurons prior to concatenation. Each architecture was trained three times, each one with a different output type: clad bead height, clad bead width, and both simultaneously. Each architecture with each output type was trained for 200 epochs.

A total of 72 clad beads were deposited for this work, from which 6561 image frames were acquired. A total of 4372 of those frames correspond to two full sets of beads and were destinated to CNN training dataset, while the remaining 2189 frames, corresponding to the last set of beads, were destinated to CNN testing dataset.

3 Results and discussion

The beads were deposited with the parameters presented on Table 1. Their images were acquired, and their cross sections were obtained as explained on the “Bead measurement” section. The 10 most central cross sections from the clad bead that deposited with 2100 W and 800 mm/min can be seen on Fig. 4. This specific clad bead has a height of approximately half millimeter and almost 3 mm in width. A contrast can be seen on Fig. 5, where the only change on the input parameters is on the traverse speed, achieving a bead height of only 0.3 mm.

As for the influence of laser power in bead geometry, more laser power usually results on a flatter clad bead, as observed from Fig. 4 to Fig. 6. In this comparison, the clad bead, which was deposited with the smaller laser power, 700 W, results on a higher deposition, although a more irregular one than the previous. On this last cross section, it is also possible to see that the peak of the bead has a slight offset to the right. This is considered to have happened due to a physical misalignment of laser and powder foci at the equipment setup.

As shown, parameters do influence the final geometry of the clad bead. The remaining question is the existence of a relation between the process parameters, the process images, and the final geometry, such is the goal of this work.

3.1 Loss value

For 200 epochs, the different CNN architectures were trained. The mean squared error (mse) between target and estimation values was used as the loss function. The training performances can be seen in Fig. 7 for CNN with multiple outputs.

It can be observed that the architectures F and A had the best performances. They are the same architecture but trained with different optimizers. Those are the CNN which trained the fastest, while the D architecture is the one that trained the slowest. Here, training speed relates directly to network size—specifically, the number of neurons on each architecture. The largest architecture, D, took longer to train, while the architectures A and F trained the quickest, a reason why the loss value achieved by architecture F is ten times lower than the one achieved by architecture D. Although they have more convolutional layers, it does not increase the number of neurons right after the CNN branch, before concatenation, but reduce it instead. As the fastest CNN, architectures A and F presented the lowest loss values.

Those same CNN architectures were also trained to output each dimension separately. Their behavior during their training remained just like the multiple output case.

3.2 Coefficient of determination

Perhaps the most straightforward way to analyze a network’s performance is through its targets and prediction plot. Its coefficient of determination (R2) is a quantitative indicator of a neural network (NN) accuracy. Those values were calculated and are presented in Table 4 for all CNN architectures with multiple outputs.

According to what was observed with the loss value, architectures A and F presented the highest coefficient of determination (R2) values, thus, the highest accuracy. Here, the architecture A performed slightly better than the architecture F, although the difference is so small, it cannot be concluded which is effectively the best. One may notice that the training was not conducted until 100% of accuracy. There is no reason for training a network perfectly on an imperfect dataset. Those errors originated from the matching between frames and cross sections, as well as from irregularities on the clad beads, particularly on the ones that were deposited with the lowest laser power.

The test phase resulted in slightly worse performance, as expected. Even with the errors previously mentioned, the networks were able to achieve R2 values above 0.97. The best performance was again from the architecture A, with the value of 0.9859, closely followed by F, achieving 0.9851. Architecture A’s performance from both the training phase and the test phase is depicted, respectively, in Fig. 8 and Fig. 9, as target versus prediction plots.

When the architectures were trained to output a single geometry at a time, most of their performances increased. The performances for the CNN with the width dimension as output can be seen in Table 5, while the ones with the height dimension as output are in Table 6. On those tables, there is a comparison between the performance of the single output CNN and the multiple output CNN. For this comparison to be fair, the R2 values for the multiple outputs CNN were recalculated only with the target and prediction values of each dimension.

The R2 values are much smaller than on the multiple output case. One reason for such is the reduced number of samples for the R2 calculation, which emphasizes larger errors on the dataset. Even so, the A and F architectures are still the top performances on all cases.

Another factor that can be observed is the higher difference between training and testing R2 values. The best architectures achieved R2 values above 0.98 on their train phases, although, on their test phases, they decrease below 0.95. It shows the presence of overfitting. It happens when the NN decorates the training dataset, outstanding on it, although performing poorer on everything else. Even with such overfitting, those architectures did not lose on performance when compared with the remaining ones.

Although the coefficient of determination clearly indicates which performances outstand, it does not indicate any order of magnitude. To overcome this issue, the error can be analyzed, which is detailed in the next section.

3.3 Error analysis

Another way to evaluate the CNN performances is through the error between target and prediction values. By fitting those errors into a Gaussian distribution, one can once again observe the CNN performances. The error Gaussian distributions for the CNN with multiple outputs are depicted in Fig. 10 for the training phase and in Fig. 11 for the test phase.

There is a big difference in magnitude between both plots, a clear indication of the presence of overfitting. The architectures A, B, and F present the highest peaks—thus the lowest standard deviations—in the training case, in Fig. 10. Those peaks are drastically reduced on the testing case. Even with strong overfitting, those architectures still present the best performances, in agreement with the previous result.

Finally, a comparison between all test phase performances with single and multiple outputs can be seen in Table 7.

Again, architectures A and F presented the lowest standard deviation values in all cases, except for single width output. Even if the D architecture presented outstanding performances on the multiple outputs mean value and on the single width output standard deviation value, these are taken as coincidences, which can happen because of the random initialization of the CNN weights. Another indication of this being a coincidence is the remaining values for this architecture, which stay way behind in performance when compared with the other architectures.

Taking in consideration the results already presented, it was considered that the best way to deepen this analysis would be through study of the means and standard deviations calculated as percentages from the true height and width measurements. In this way, one could have a better notion of what the values in Table 7 represent proportionally. Table 8 presents those values calculated as percentages of the target value.

From this table, it is noticeable that the networks with multiple outputs have the greatest variability, as the neural network could not specialize itself as well as on the single output ones. Also, in the later networks (E and F), the width dimension presents itself with the lowest variability among all. This is explainable since bead width is more directly measurable from the input images, which were perpendicularly taken from its plane, while the height is not.

Another remarkable result is a comparison between architectures A and F on the single width output. Architecture A presented the worst mean value, while architecture F, the best. This is the first result with a significant difference between these architectures, which are only different on the used optimizer, apart from the weight values initialization. Because of this difference, architecture F presents itself with the best results, thus, as the best architecture.

4 Conclusions

This work consisted of a CNN approach for clad bead geometry estimation. Systematic research was conducted on intelligent solutions for laser cladding optical monitoring, but none that took this same approach was found. Later, an adequation of the optical monitoring system started. After that, the clad beads were deposited, and their molten pool images were acquired. Clad bead height and width dimensions corresponding to each acquired frame were then measured from the deposited clad beads by using active photogrammetry. Finally, six different CNN architectures were developed. The training revealed the best architecture to be the one with most convolutional layers and less densely connected layers, trained by the Adadelta optimizer. This architecture achieved a coefficient of determination value above 0.98 for multiple output test phase, which means an error mean as low as 2.4 μm with a standard deviation of 134.8 μm. For single outputs, this value remained on 0.950 for the width output and on 0.959 for the height output, meaning error means of 5.1 μm and 10.9 μm and standard deviations of 153.7 μm and 86.3 μm, respectively.

As a direction independent method, this approach is yet another alternative for laser cladding bead geometry estimation, with the potential to be used in real time. Many improvements are yet to be done, such as enlarging the number of deposit beads for more data—which would be useful for reducing overfitting—and to try different alterations on the architectures, particularly on the number of neurons. Different materials and processing conditions could also be explored.

References

Meriaudeau F, Renier E (1996) Truchetet F CCD technology applied to laser cladding. In: Anagnostopoulos Constantine N, Blouke Morley M, Lesser Michael P (eds) Solid state sensor arrays and CCD cameras. CA, USA, San Jose, pp 299–309

Meriaudeau F, Truchetet F (1996) Image processing applied to the laser cladding process. Proc SPIE Int Soc Opt Eng 2789:93–103

Meriaudeau F, Truchetet F (1996) Control and optimization of the laser cladding process using matrix cameras and image processing. Journal of Laser Applications 8(6):317–324. https://doi.org/10.2351/1.4745438

Meriaudeau F, Truchetet F, Dumont C, Renier E, Bolland P Acquisition and image processing system able to optimize laser cladding process. In: Proceedings of the 1996 3rd International Conference on Signal Processing, ICSP‘96. Part 1 (of 2), Piscataway, NJ, United States, Beijing, China, 1996. IEEE, pp 1628–1631

Hu D, Mei H, Kovacevic R (2002) Improving solid freeform fabrication by laser-based additive manufacturing. Proc Inst Mech Eng B J Eng Manuf 216(9):1253–1264. https://doi.org/10.1243/095440502760291808

Hu D, Kovacevic R (2003) Modelling and measuring the thermal behaviour of the molten pool in closed-loop controlled laser-based additive manufacturing. Proc Inst Mech Eng B J Eng Manuf 217(4):441–452. https://doi.org/10.1243/095440503321628125

Toyserkani E, Khajepour A (2006) A mechatronics approach to laser powder deposition process. Mechatronics 16(10):631–641. https://doi.org/10.1016/j.mechatronics.2006.05.002

Xing F, Liu W, Wang T Real-time sensing and control of metal powder laser forming. In: 6th World Congress on Intelligent Control and Automation, WCICA 2006, Dalian, 2006. pp 6661–6665. doi:https://doi.org/10.1109/WCICA.2006.1714372

Hofman JT, De Lange DF, Meijer J Camera based feedback control of the laser cladding process. In: ICALEO 2006 - 25th International Congress on Applications of Laser and Electro-Optics, Scottsdale, AZ, 2006

Lei J, Wang Z, Liu L (2010) Design of forming shape measurement system for laser molten pool in laser fabricating. International Conference on Engineering Design and Optimization, ICEDO 2010, vol 37–38. Ningbo. doi:https://doi.org/10.4028/www.scientific.net/AMM.37-38.327

Arias JL, Montealegre MA, Vidal F, Rodríguez J, Mann S, Abels P, Motmans F Real-time laser cladding control with variable spot size. In: Laser 3D Manufacturing, San Francisco, CA, 2014. SPIE. doi:https://doi.org/10.1117/12.2040058

Ocylok S, Alexeev E, Mann S, Weisheit A, Wissenbach K, Kelbassa I Correlations of melt pool geometry and process parameters during laser metal deposition by coaxial process monitoring. In: Schmidt M, Merklein M, Vollertsen F (eds) International Conference on Laser Assisted Net Shape Engineering, LANE 2014, 2014. Elsevier B.V., pp 228–238. doi:https://doi.org/10.1016/j.phpro.2014.08.167

Moralejo S, Penaranda X, Nieto S, Barrios A, Arrizubieta I, Tabernero I, Figueras J (2017) A feedforward controller for tuning laser cladding melt pool geometry in real time. Int J Adv Manuf Technol 89(1–4):821–831. https://doi.org/10.1007/s00170-016-9138-7

Iravani-Tabrizipour M, Toyserkani E (2007) An image-based feature tracking algorithm for real-time measurement of clad height. Mach Vis Appl 18(6):343–354. https://doi.org/10.1007/s00138-006-0066-7

Iravani-Tabrizipour M, Asselin M, Toyserkani E Development of an image-based feature tracking algorithm for real-time clad height detection. In: 4th IFAC Symposium on Mechatronic Systems, MX 2006, Heidelberg, 2006. pp 914–920

Mondal S, Bandyopadhyay A, Pal PK An experimental investigation into the optimal processing conditions for the co2 laser cladding of 20 MnCr5 steel using taguchi method and ANN. In: International Conference on Modeling, Optimization, and Computing, ICMOC 2010, Durgapur, West Bengal, 2010. pp 392–398. doi:https://doi.org/10.1063/1.3516337

Aggarwal K, Urbanic RJ, Saqib SM (2018) Development of predictive models for effective process parameter selection for single and overlapping laser clad bead geometry. Rapid Prototyping J 24(1):214–228. https://doi.org/10.1108/RPJ-04-2016-0059

Caiazzo F, Caggiano A (2018) Laser direct metal deposition of 2024 al alloy: trace geometry prediction via machine learning. Mater 11(3). https://doi.org/10.3390/ma11030444

Huaming LX, Qin; Song, Huang; Lei, Jin; Yongliang, Wang; Kaiyun, Lei (2018) Geometry characteristics prediction of single track cladding deposited by high power diode laser based on genetic algorithm and neural network. Int J Precis Eng Manuf 19 (7):1061–1070. doi:https://doi.org/10.1007/s12541-018-0126-8

Barua S, Sparks T, Liou F (2011) Development of low-cost imaging system for laser metal deposition processes. Rapid Prototyping J 17(3):203–210. https://doi.org/10.1108/13552541111124789

Davis TA, Shin YC (2011) Vision-based clad height measurement. Mach Vision Appl 22(1):129–136. https://doi.org/10.1007/s00138-009-0240-9

Liu J, Wu Y, Wang L In-situ measurement based on prior calibration with analogist samples for laser cladding. In: High-Power Lasers and Applications VI, November 5, 2012 - November 5, 2012, Beijing, China, 2012. Proceedings of SPIE - The International Society for Optical Engineering. SPIE, pp The Society of Photo-Optical Instrumentation Engineers (SPIE); Chinese Optical Society (COS). doi:https://doi.org/10.1117/12.2000253

Doubenskaia M, Pavlov M, Grigoriev S, Smurov I (2013) Definition of brightness temperature and restoration of true temperature in laser cladding using infrared camera. Surf Coat Technol 220:244–247. https://doi.org/10.1016/j.surfcoat.2012.10.044

Acknowledgments

The authors would like to thank the Laboratório de Mecânica de Precisão (Precision Mechanics Laboratory) staff for making this work possible. They would also like to thank the Labmetro for the hardware support.

Funding

The authors would like to thank the Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq) for funding this work.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Gonçalves, D.A., Stemmer, M.R. & Pereira, M. A convolutional neural network approach on bead geometry estimation for a laser cladding system. Int J Adv Manuf Technol 106, 1811–1821 (2020). https://doi.org/10.1007/s00170-019-04669-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-019-04669-z