Abstract

Bayesian optimization (BO) is a global optimization method that has the potential for design optimization. However, in classical BO algorithm, the variables are considered as continuous. In real-world engineering problems, both continuous and discrete variables are present. In this work, an efficient approach to incorporate discrete variables to BO is proposed. In the proposed constrained mixed-integer BO method, the sample set is decomposed into smaller clusters during sequential sampling, where each cluster corresponds to a unique ordered set of discrete variables, and a Gaussian process regression (GP) metamodel is constructed for each cluster. The model prediction is formed as the Gaussian mixture model, where the weights are computed based on the pair-wise Wasserstein distance between clusters and gradually converge to an independent GP as the optimization process advances. The definition of neighborhood can be flexibly and manually defined to account for independence between clusters, such as in the case of categorical variables. Theoretical results are provided in concert with two numerical and engineering examples, and two examples for metamaterial developments, including one fractal and one auxetic metamaterials, where the effective properties depend on both the geometry and the bulk material properties.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Designing materials is to identify structures at micro- and nanoscales to achieve the desirable properties. The major process of design is to establish structure-property relationships, based on which design optimization can be performed. Simulation tools at multiple scales (from atomistic to continuum) have been developed to accelerate this process. Nevertheless, the major technical challenges of efficiency and accuracy still exist. The first one is searching in high-dimensional design space to find the global optimum of material compositions and structural configurations. The second one is the uncertainty associated with the high-dimensional structure-property relationships, which are usually constructed as surrogate models or metamodels. Particularly, aleatory uncertainty can be linked to natural randomness of materials (e.g., grain sizes and grain shapes in polycrystalline materials). Epistemic uncertainty is mainly due to approximations and numerical treatments in surrogates and simulation models. Methods of searching globally for optimal and robust solutions are needed.

Bayesian optimization (BO) is a metamodel-based methodology to seek for the global optimal solution under uncertainty in the search space with sequential sampling. Compared to other bio-inspired global optimization algorithms, such as ant colony systems, particle swarm, and genetic algorithm (GA), it has the advantage of maintaining the global search history by constructing a metamodel to approximate the objective function. Typically the metamodel is based on the Gaussian process (GP) method, and actively updated as more samples are collected. However, in current formulation of GP, input variables are restricted to be continuous. In real-world engineering problems, input design variables and parameters can be categorical or discrete. For example, binary variables can be used to enable or disable a design feature. The number of features has integer values. Therefore, extending BO method to accommodate discrete variables is an important topic for solving real-world problems.

Another major issue that prohibits the BO and GP framework is its lack of scalability in searching the high-dimensional space when the number of input variables is large. The required number of sample points grows exponentially as \(\mathcal {O}(s^{d})\) with respect to the dimension of search space d, where s is the number of sampling point for each dimension. The phenomenon is referred to as the curse-of-dimensionality in the literature. As a result, the size of the covariance matrix in GP also grows exponentially with respect to the dimensionality, creating the computational bottleneck in computing the inverse of the covariance matrix.

In this paper, a new BO method is proposed for constrained mixed-integer optimization problems to incorporate discrete design variables into the BO algorithm. In the proposed method, the large dataset of samples is decomposed into smaller clusters, where each cluster corresponds to a unique combination of discrete variable values, which is referred to as a discrete tuple. A GP is then constructed within each cluster. During the search and metamodel update processes, the mean and variance predictions are formulated as a Gaussian mixture model, where the weighted average predictions are combined from those of neighboring clusters, based on the pair-wise distance between the main and the neighboring clusters. The neighborhood of each cluster is constructed only once during the initialization.

Because of the decomposition approach, the number of sampling points to construct each cluster is significantly reduced compared to the whole dataset, and the GP thus is faster to construct for each cluster. This approach, however, leads to an undesirable effect of sparsity within each GP cluster. As a result, the posterior variance might be slightly overestimated. To circumvent the sparsity effect of the decomposition approach, a weighted average scheme is adapted to “borrow” the sampling points from neighboring clusters, where the discrete tuples of the neighbors slightly differ from the discrete tuple of the original cluster. The definition of neighborhood is completely controlled by users, and neighbors can be added or removed accordingly.

The unique advantage of the proposed method is that the optimization problem of both continuous and discrete variables and the acceleration of GP for high-dimensional problems are solved simultaneously. Theoretical results are provided and discussed in concert with computational metamaterials design applications.

In the remainder of the paper, Section 2 provides a literature review for BO methodology, its extension, such as constrained and mix-integer optimization problems, and its applications. Section 3 describes the proposed constrained mixed-integer BO algorithm using Gaussian mixture model, including theoretical analysis of algorithmic complexity as well as lower and upper bounds of the predictions. The methodology is demonstrated with applications in computational design of metamaterials. Metamaterials are an emerging class of engineered materials that exhibit interesting and desirable macroscopic properties, which can be tailored, because of their engineered geometric structures rather than the material composition.

In Section 4, the proposed method is verified using an analytical function that is modified based on a discrete version of the Rastrigin function, an engineering example of welded beam design, where the discrete variables encode the material selection and design configuration of the beam. In the first engineering example of Section 5.1, we focus on designing high-strength and low-weight fractal metamaterials, where the effective material properties, such as effective Young’s modulus, are obtained using finite element method (FEM). In the second engineering example of Section 5.2, the method is demonstrated using an auxetic metamaterials for polymers, where the effective negative Poisson’s ratio is optimized. Section 6 includes the discussion of the limitations in the proposed approach, and Section 7 concludes the paper, respectively.

2 Related work

Here, we conduct a literature review on related BO work and its design applications. In Section 2.1, the widely used acquisition functions for BO are introduced. The constrained optimization problem in BO is reviewed in Section 2.2. In Section 2.3, the mixed-integer optimization problem in BO and its related work is discussed. In Section 2.4, the applications of GP in design optimization is provided.

2.1 Acquisition function

BO is a metamodel-based optimization framework that uses GP as the metamodel. The major difference between BO- and GP-based optimization is the sampling strategy to construct the metamodel.

The significant extension of BO is the implementation of the so-called acquisition function that dictates the location of the next sampling design site. This acquisition function reconciles the trade-off between exploration (navigating to the most uncertain region) and exploitation (driving the solution to the optimum) in the optimization process.

Given the objective function y = f(x), the acquisition function \(a(\boldsymbol {x};\{\boldsymbol {x}_{i},y_{i} \}_{i = 1}^{N},\theta )\) depends on previous N observations or samples \(\{\boldsymbol {x}_{i},y_{i} \}_{i = 1}^{N}\) and GP hyperparameters θ and must be defined to strike a balance between exploration and exploitation. In exploration, the acquisition function a would lead to the next sampling point in an unknown region where the posterior variance σ2(x) is large. In exploitation, the acquisition function a would result in the next sampling point where posterior mean μ(x) is large for a maximization problem (or small for minimization).

There are mainly three types of acquisition functions: probability of improvement (PI), expected improvement (EI), and upper confidence bound (UCB). They are defined as follows.

Let \(\boldsymbol {x}_{\text {best}} = \underset {\boldsymbol {x}_{i}}{\arg \max } f(\boldsymbol {x}_{i})\) be the best sample achieved so far during sequential sampling for a maximization problem, ϕ(⋅) and Φ(⋅) be the probability density function and cumulative distribution function of the standard normal distribution respectively. The PI acquisition function (Mockus and Mockus 1991) is defined as

where

indicates the deviation away from the best sample.

The EI acquisition function (Mockus 1975; Huang et al. 2006) is mathematically expressed as

Recently, Srinivas et al. (2009, 2012) proposed a new form of UCB acquisition function,

where κ is a hyperparameter describing the exploitation-exploration balance.

2.2 Constrained BO

Constrained BO is a natural and important extension of the classical BO method. Constrained optimization problems based on engineering model and simulation can be classified as two types: known and unknown constraints. The known constraints, or a priori constraints, are the ones known before the simulation, and thus can be evaluated independently without running simulations. On the other hand, the unknown constraints are the ones that are unpredictable without running the simulation, and thus can be only incorporated once the simulation is over, e.g., no solution because of numerical divergence. Generally speaking, the unknown constraints are more difficult to assess because it involves handling the classification problem, satisfied or violated, with respect to the optimization problem.

Digabel and Wild (2015) summarized and provided a systematic classification and taxonomy for constrained optimization problem. Gardner et al. (2014) proposed a penalized acquisition function approach to limit the searching space for the next sampling location. Gelbart et al. (2014) suggested an entropy search criterion to search for the next sampling point under the formulation of the EI acquisition function. Hernández-Lobato et al. (2015, 2016) introduced a predictive entropy search and predictive entropy search with constraints, respectively, which maximizes the expected information gained with respect to the global maximum. Rehman and Langelaar (2017) modeled constraints as a simple model and incorporated probability of feasibility measure to alternate the EI acquisition function. Li et al. (2018) proposed a sequential Monte Carlo approach with radial basis function as surrogate model to solve for the constrained optimization problem.

2.3 Mixed-integer Bayesian optimization

The BO extension to mixed-integer problems is rather limited, partly because mixed-integer problems carry difficulties from both discrete and continuous optimization problems. Another approach is that the discrete optimization can be converted to continuous optimization, using simple rounding operation. The approach is not mathematically rigorous, but is still widely accepted in practice. Here, we review several contributions in term of methodology to incorporate discrete variables.

Davis and Ierapetritou (2009) combined a branch-and-bound approach with BO method to solve the mixed-integer optimization problems.

Müller et al. (2013, 2014, 2016) introduced three algorithms, which are Surrogate Optimization-Mixed Integer (Müller et al. 2013), Surrogate Optimization-Integer (Müller et al. 2014), and Mixed-Integer Surrogate Optimization (Müller 2016), which differ in the perturbation sampling strategies and utilize GP as the surrogate model, to solve for the mixed-integer nonlinear problems. Hemker et al. (2008) compared the performance of a GA, the implicit filtering algorithm, and a branch-and-bound approach formulated on BO algorithm to solve for a set of constrained mix-integer problems in groundwater management.

For mixed-integer extension for GP, van Stein et al. (2015) proposed a distributed kriging approach, where the dataset is decomposed for continuous variables using k-mean algorithm, and the optimal weights are computed based on the inverse posterior variance of each cluster. Gramacy and Lee (2008a, b, 2010) developed a treed GP that is naturally extensible to handle discrete variables. In the case of discrete variables, the GP is one-hot encoded by the binary combination of the discrete variables. Storlie et al. (2011) developed the adaptive component selection shrinkage operator (ACOSSO) method extended from Lin and Zhang (2006a, b), which uses the smoothing spline ANOVA decomposition to decompose the total variance to multivariate functions. Qian et al. (2008) and Zhou et al. (2011) approached the mixed-integer problem from the covariance kernel of GP, proposing the exchange correlation, the multiplicative correlation, and the unrestricted correlation functions to handle discrete variable that is reminiscent of categorical regression. Swiler et al. (2014) compared three above methods and concluded that GP with special correlation kernel (Qian et al. 2008; Zhou et al. 2011) performs most consistently among the test functions.

2.4 GP-based design optimization

GP, also known as kriging, has been widely applied in constructing surrogates or metamodels for design optimization. Simpson et al. (2001), Queipo et al. (2005), Martins and Lambe (2013), Sóbester et al. (2014), and Viana et al. (2014) provided comprehensive reviews on the use of kriging and other surrogate models for multi-disciplinary design optimization. More recently, Li et al. (2008) proposed a kriging metamodel assisted multi-objective GA to solve multi-objective optimization problems. Jang et al. (2014) used dynamic kriging to solve a design optimization in fluid-solid interaction. Zhang et al. (2014) also used kriging to approximate the pump performance and optimize two objective functions with respect to four design variables. Kim et al. (2017a) optimized and verified a fluid dynamic bearings simulation using kriging approach. Kim et al. (2017b) applied multi-fidelity kriging and optimized film-cooling hole arrangement. Liu et al. (2017) employed surrogate-based parallel optimization method to reduce the computational time for a computational fluid dynamics problem with six design variables. Song et al. (2017) used a gradient-enhanced hierarchical kriging to optimize drag on airfoils at a specified angle of attack. Zhou et al. (2017, 2018) developed a multi-fidelity kriging scheme to approximate the lift coefficient as a function of Mach number and angle of attack in airfoils with computational fluid dynamics analysis.

In the above work, design variables are all continuous. Compared to these GP-based optimization, BO formulation provides a more generic and robust searching procedure.

3 Proposed mixed-integer Bayesian optimization

The proposed mixed-integer BO based on distributed GP provides an efficient searching method for large scale design problems, where design variables can be either continuous or discrete. The discrete variables include both categorical and integer variables, regardless of the existence of order relations. Let x = (x(d), x(c)) be the design variables, where \(\boldsymbol {x}^{(d)} \in \mathbb {D}\) are discrete variables in n-dimensional space \(\mathbb {D}\) and \(\boldsymbol {x}^{(c)} \in \mathbb {R}^{m-n}\) are continuous variables in (m − n)-dimensional space \(\mathbb {R}^{m-n}\). Together, they form a vector of design variables in the m-dimensional space \(\mathcal {X}\). Let f(x) be the objective function. The design optimization problem solves the maximization problem

subject to some inequality constraints

where ic is the number of inequality constraints.

Here, the notation for the rest of the paper is as follows. μl(x) is used to denote the posterior mean of the l th cluster at the query point x. \(\hat {\mu }\) is the prediction formed by Gaussian mixture model of all the clusters. \(\bar {\mu }_{l}\) is the mean of the l th cluster.

In the proposed mixed-integer BO, the large dataset of observations is decomposed into smaller local clusters, where each cluster is used to construct a local GP. Because the large dataset has been decomposed and the number of data points has reduced, the prediction within each cluster is not as accurate, and can be improved by “borrowing” from neighboring dataset under a weighted average scheme. The large dataset with continuous and discrete variables can be decomposed to finitely many clusters, according to the tuple of discrete variables. In each cluster, the data points share the same discrete variable values. The classical GP approach is then applied to the dataset in each cluster to construct a GP model.

Because of the decomposition scheme, the number of data points within each cluster is reduced, compared to the number of data points of the whole dataset. This leads to a sparser dataset within a cluster, and the posterior variance is enlarged. To improve the prediction, the datasets from neighboring clusters are initially “borrowed” to improve the prediction on the tuple of continuous variables \(\boldsymbol {x}^{(c)} \in \mathbb {R}^{m-n}\), where the “borrowed” data points are gradually eliminated as the optimization process converges via the weight computation algorithm. On the other hand, the sparsity induced by the decomposition scheme reduces the cost of computing the inverse of the covariance matrix. In this weighted average scheme, the weights are computed and penalized based on the pair-wise Wasserstein distance between clusters, as well as the posterior variance of the cluster to obtain a more accurate predictions to aid in the convergence of the optimization process.

Figure 1 presents an overview of the workflow for the proposed mixed-integer BO method in this paper. First, initial samples, typically obtained from Monte Carlo or Latin hypercube sampling, are used to construct the metamodel, where a local GP is associated with each individual cluster. Next, a next sampling point is located within each cluster according to its acquisition functional value. Then, a global sampling point for all clusters is determined among the collection of all the next sampling points from each cluster. The objective function is then called to evaluate at the global sampling location. A local GP is updated at the cluster corresponding to the global sampling point. A new local sampling point is located within the same cluster, and the process repeats until some optimization criteria are met.

The following subsections are organized as follows. Section 3.1 briefly reviews the GP formulation. Section 3.2 discusses the enumeration algorithm for clusters and the discrete tuple. Section 3.3 describes the definition of cluster neighborhood that is used to form a Gaussian mixture model. Section 3.4 details the weight computations for each individual cluster in the Gaussian mixture model. Section 3.5 presents the computation of posterior mean and posterior variance of the Gaussian mixture model. Section 3.6 describes the penalized scheme to incorporate constraints into the acquisition function. Section 3.7 analyzes the theoretical bounds and computational cost of the proposed mixed-integer BO method.

3.1 Gaussian process

We follow the notation introduced by Shahriari et al. (2016) to briefly introduce GP formulation for continuous variables. GP(μ0, k) is a nonparametric model that is characterized by its prior mean \(\mu _{0}: \mathcal {X} \mapsto \mathbb {R}\) and its covariance kernel \(k:\mathcal {X} \times \mathcal {X} \mapsto \mathbb {R}\). Define fi = f(xi) and y1:N as the unknown function values and noisy observations, respectively. In the GP formulation, it is assumed that the f = f1:N are jointly Gaussian and y = y1:N are normally distributed given f; then, the prior distribution induced by the GP can be described as

where the elements of mean vector and covariance matrix are described by mi := μ0(xi) and Ki, j := k(xi, xj).

Equation 7 describes the prior distribution induced by the GP, where X is the sampling location, and f is the objective function. In the GP formulation, y is the noise-corrupted stochastic output of f(x) with the variance of σ2, at the sampling location X. The objective function f is assumed to be a multivariate normal distribution function with mean m(x) and covariance K(x).

Let N be the number of sampling locations, and \(\mathcal {D}_{N} = \{ \boldsymbol {x}_{i},y_{i} \}_{i = 1}^{N}\) be the set of observations. The covariance kernel k is a choice of modeling the correlation between input locations xi. Covariance functions where length-scale parameters can be inferred through maximum likelihood function is known as automatic relevance determination kernels. One of the most widely used kernels in this kernel family is the squared-exponential kernel,

where r2 = (x −x′)Γ(x −x′), Γ is a diagonal matrix of (m − n) × (m − n), and θi is the length scale parameter.

The posterior Gaussian for the sequential BO is characterized by the mean

and the variance

where k(x) is the vector of covariance terms between x and x1:N.

3.2 Clustering and enumeration algorithm

Assuming that the discrete variables are independent of each other, a clustering and enumeration algorithm is devised to automatically decompose the large dataset to smaller clusters based on the discrete tuple and tag a cluster with a unique index from the enumeration scheme. For the case when some discrete variables are dependent on others, the neighborhood can be manually changed to reflect the knowledge. The set of discrete variables for each cluster is represented as a discrete tuple where each element is a positive integer.

For an integer variable where order relation exists, the discrete variable can simply be represented as a positive integer, e.g., 1 ≤ 2. For a categorical variable where order relation does not exist, such as the type of cross section (square or circular), colors (red or blue), type of materials (aluminum or copper), and configuration settings, positive integers can still be used. The choice of using tuple of positive integers as a general representation does not affect the clustering and enumeration scheme, but would affect the construction of neighborhood for each cluster, depending on the nature of discrete variables.

Suppose that the input x = (x(d), x(c)) = (x1, ⋯ , xn, xn+ 1, ⋯ , xm) includes n discrete and m − n continuous variables. If pi is denoted as the total number of possible values for discrete variable xi,1 ≤ i ≤ n, then the number of clusters is \(L = \prod \limits _{i = 1}^{n} p_{i}\). Due to the complexity of possible combinations, each cluster is assigned a unique index in such a way that the map between their discrete variables and cluster index is one-to-one. The index is calculated based on the total ordering of tuples. Without loss of generality, assume that each discrete variable xi is bounded by 1 ≤ xi ≤ pi, i.e., xi ∈ {1, ⋯ , pi} for 1 ≤ i ≤ n. Then, the relation of lexicographical order, denoted as ≺, can be defined for a pair of tuples on the set of all tuples as

if and only if ∃k : 1 ≤ k ≤ n : (∀j : 1 ≤ j < k : ai = bi) andak < bk, and 1 ≤ ai, bi ≤ pi for all i. With the definition of lexicographical order ≺, the cluster index l for the tuple (a1, ⋯ , an) can now be calculated as

Because the index of cluster is uniquely defined based on the tuple of discrete variables, the tuple describing the set of discrete variables can be reconstructed using the index of the cluster, with the quotient and remainder algorithm recursively shown in Algorithm 1. It describes how to construct the set of discrete variables from the cluster index l.

The implementation of Algorithm 1 can be based on existing functions such as MATLAB function ind2sub(). Equation 12, which is a reverse operation of Algorithm 1, can also be implemented using MATLAB function sub2ind().

3.3 Construction of neighborhood

Consider a cluster with index l, with the tuple of discrete variables (a1, ⋯ , an), the neighbors of the l th cluster \(\mathcal {B}(l)\) is the collection of clusters that share most of similarity with the original cluster. Intuitively, the neighborhood is constructed based on the belief of whether there exists a relationship between two clusters.

For example, for integer variables, the discrete tuples of the neighboring clusters may differ in one or a few different integer variables compared to that of the original cluster. In the same manner, for categorical variables, the discrete tuples of the neighboring clusters may differ in one or a few categorical variables compared to that of the original cluster. Based on this description, a possible choice to define the neighborhood \(\mathcal {B}(l)\) of the l th cluster can be mathematically expressed as

where \(d\left ((a)_{i = 1}^{n}, (a^{*})_{i = 1}^{n}\right )\) is some metric on a discrete topological space \(\mathbb {D}\), and dth is a user-defined threshold. The metric d(⋅,⋅) can be any lp-norm, for example, Manhattan distance (l1-norm), or a counting metric of how many discrete (integer and categorical) variables are different between two tuples. It is noted that the metric d(⋅,⋅) does not have to strictly obey the definition of mathematical norm. In the special case when this metric is set to zero, i.e., \(d\left ((a)_{i = 1}^{n}, (a^{*})_{i = 1}^{n} \right ) = 0\), it means that all the clusters are considered to be completely independent of each other. The construction of neighborhood only occurs once during the initialization.

Furthermore, it should be emphasized that the neighboring list can be manually changed to reflect the physics-based knowledge from the users, or manually constructed to reflect the dependency of the discrete variables. In the case of categorical variables where independence is usually observed, one can simply remove the neighboring cluster from the corresponding categorical variable, as the neighborhood can be manually changed during the initialization phase of the optimization process.

It is recommended to define the neighborhood carefully, as the neighborhood definition has an impact on both convergence rate, and whether the optimization would be trapped at local optimum. The safest setting is to assign dth = 0, where clusters are assumed to be completely independent of each other. Small values of dth, e.g., dth = 1 or dth = 2, might be beneficial, depending on the specific applications. Large value is not recommended.

Figure 2 shows an example of constructing clusters for two discrete variables (x1, x2), where 1 ≤ x1 ≤ 4 and 1 ≤ x2 ≤ 3. According to Algorithm 1, the tuple p is (4, 3), cluster 1 is associated with (1, 1), cluster 2 is associated with (1, 2), cluster 4 is associated with (2, 1), etc. The cluster index is denoted as an italic number on the top right corner of the square. Consider cluster 8, which is associated with the discrete tuple (3,2). If the Manhattan distance is chosen to define the neighborhood, then the choice of dth = 0 in (13) would make every cluster the only neighbor of itself, e.g., the neighbor of cluster 8 is cluster 8. The choice of dth = 1 would include clusters 5, 7, 8, 9, and 11 in cluster 8’s neighborhood. Similarly, the choice of dth = 2 would include clusters 2, 4, 5, 6, 7, 8, 9, 10, 11, and 12 in cluster 8’s neighborhood.

3.4 Weight computation

The weight of each cluster’s prediction is determined by the Wasserstein distance between the Gaussian posterior of the main cluster with that of the neighboring clusters. Combined together, they form a Gaussian mixture model to predict a response at a query point x.

Consider a query point x in the l th cluster, which has the continuous tuple x(c) = (xn+ 1, ⋯ , xm). Denote the neighborhood of the l th cluster as B(l) = {l∗}, where the cardinality of |B(l)| = k, i.e., there are k neighbors in the l th cluster neighborhood.

Each of the neighboring cluster l∗ can form its own prediction \(\mathcal {N}(\mu _{l^{*}}, \sigma ^{2}_{l^{*}})\) from the continuous tuple, including \(\mathcal {N}(\mu _{l}, {\sigma ^{2}_{l}})\) for l th cluster. However, the prediction must be adjusted by accounting for the bias, i.e., \(\text {Bias}_{l^{*}} [\mu _{l^{*}}] = \mathbb {E}[\mu _{l^{*}} - \mu _{l}] = \bar {\mu }_{l^{*}} - \bar {\mu }_{l}\) as the difference between the posterior means of two clusters, and the variance \(\sigma ^{2}_{l^{*}}\).

The weight \(w_{l^{*}}\) associated with the prediction from the l∗ cluster should be larger with smaller bias \((\bar {\mu }_{l^{*}} - \bar {\mu }_{l})\) and smaller posterior variance \(\sigma _{l^{*}}^{2}\). The necessity of bias correction is explained later in Theorem 4. The Wasserstein distance between two univariate Gaussian \(\mathcal {N}(\mu _{l^{*}}, \sigma ^{2}_{l^{*}})\) and \(\mathcal {N}(\mu _{l}, {\sigma ^{2}_{l}})\) is provided by Givens et al. (1984) as

Here, we propose a deterministic way to compute the numerical weights based on the pair-wise Wasserstein distance, which eventually converges to an independent GP as the optimization process advances. It is easy to see that the W2-distance of the l th cluster’s prediction to itself is zero, as W2 is a distance. The weights are computed according to an inverse W2-distance with a term \({\sigma ^{2}_{l}}\) from the l th cluster, as

In (15), \(w_{l^{*}}\) are computed based on two factors, the W2-distance, and the \({\sigma _{l}^{2}}\) prediction of the l th cluster. As the optimization process advances, the posterior variance approaches zero, i.e., \({\sigma _{l}^{2}} \to 0\). As a result, the weight scheme converges to a single GP prediction of the corresponding l th cluster.

3.5 Prediction using weighted average of k-nearest neighboring clusters

We model the prediction of a query point using a Gaussian mixture distribution, where the weights are computed on the statistical Wasserstein distance. To predict an unknown query point x = (xd, xc) = (x1, ⋯ , xn, xn+ 1, ⋯ , xm), we first find the cluster in which x belongs to, and its neighboring clusters. Assume that x belongs to the l th cluster, and there are k-neighboring clusters.

The principle for weight computation is as follows. As the bias increases, the contributed weight of the prediction \(w_{l^{*}}\) from the l∗th cluster to l th cluster is reduced to a smaller value. As the bias or the pair-wise distance between clusters increases, the contributed weights also decrease. The weight vector is normalized at every step, and eventually converges to a single GP prediction with the weight vector of [0, ⋯ , 1, ⋯ , 0], where 1 is located as the l th cluster.

Since x is located within the l th cluster, the weight from the l th cluster is the highest, i.e., if l∗ = l, then \(\mu _{l^{*}} + \bar {\mu }_{l} - \bar {\mu }_{l^{*}} = \mu _{l}\), which is the GP prediction for the l th cluster. The posterior mean of the proposed method is written as

where the sum is taken over the list of neighboring cluster from the main cluster l th. \(\bar {\mu }_{l}\) and \(\bar {\mu }_{l^{*}}\) denote the means of the l-th and l∗-th clusters, respectively. w∗ denotes the weight corresponding to the l∗th cluster, which is computed once the discrete tuple x(d) of the query point x = (x(d), x(c)) is determined. The posterior variance of the proposed method is calculated as

where \(\sigma _{l^{*}}^{2}\) denotes the posterior variance associated with the continuous tuple x(c) of the query point x = (x(d), x(c)).

The prediction scheme for mean \(\hat {\mu }(\boldsymbol {x})\) and variance \(\hat {\sigma }^{2}(\boldsymbol {x})\) for an arbitrary location x using Gaussian mixture model can be summarized in Algorithm 2.

3.6 Constrained acquisition function in mixed-integer Bayesian optimization

The acquisition function is adopted from Gardner et al. (2014) for inequality constraints, and further extended to accommodate discrete and continuous variables to solve for the constrained mixed-integer optimization problems.

First, the constraint is checked using an indicator function \(\mathcal {I}(\boldsymbol {x})\) for all ic constrained inequalities, as

The constrained acquisition function can be considered as the product of the classical acquisition function. As a result, the acquisition function is assigned to have zero value for infeasible region. The penalized approach can be implemented directly into the auxiliary optimizer, which is used to maximize the acquisition function in BO.

In distributed GP, an input xnext = (x1, ⋯ , xn, xn+ 1, ⋯ , xm) is comprised of both discrete and continuous variables. For each cluster corresponding to a unique set of discrete tuple (x1, ⋯ , xn), a distinct next sampling point associated with each cluster is located by maximizing the acquisition function on the tuple of continuous variables (xn+ 1, ⋯ , xm) for each iteration, in the same manner as classical BO. These next sampling points are retained within the respective clusters. However, only the sampling point corresponding to the maximal value of acquisition function among all clusters is chosen, and a new sampling point within that cluster is located and updated for the corresponding cluster. The sampling procedure repeats until the optimization criterion is met. In other words, the next sampling point is chosen as

where the l∗th cluster corresponds to the tuple of discrete variables (x1, ⋯ , xn), and \(\mathcal {I}(\boldsymbol {x})\) is the constraint indicator function.

Equation 19, which describes the searching procedure for the next sampling point by maximizing the penalized acquisition function, is explained as follows. Two loops are constructed to search for the global sampling point. In the inner loop which searches for the local sampling point within each cluster, the penalized acquisition function is the objective function. Maximizing this penalized acquisition function using an auxiliary optimizer yields the local sampling point for each cluster. In the outer loop, the cluster with the maximized acquisition function value is determined. The discrete tuple corresponding to the cluster index, which contains the sampling point with the maximum value for the acquisition function, is reconstructed using Algorithm 1. In other words, the sampling location x is decomposed to two parts: the inner loop searches for the continuous tuple, whereas the outer loop yields the discrete tuple. Theoretically, once the functional evaluation is over, only the cluster that contains the last sampling location needs to be updated. Practically, all the clusters need to update their corresponding sampling locations xnext after certain number of iterations, in order to avoid trapping in local optimum.

The tuple of continuous variables is found by maximizing the acquisition function, whereas the tuple of discrete variables is assigned according to the cluster index. For the EI and PI acquisition functions, xbest is modified to be the best point achieved so far among all clusters. For the UCB acquisition function, no modification is needed, assuming the hyperparameter κ is uniform for all clusters. It is noted that the balance between exploration and exploitation is preserved locally within each cluster and thus is also preserved globally for all the clusters.

3.7 Theoretical bounds and computational cost

Here, we provide the theoretical lower and upper bounds for predictions and algorithm complexity under the formulation of Gaussian mixture model in Theorem 1 and Theorem 2. Theorem 3 proves that under the formulation of the proposed method, the largest weight is associated with the main cluster. Theorem 4 explains the necessity of translation in mean prediction so that the expected value of the mean is the same with the expected mean in the main cluster.

Theorem 1

The Gaussian mixture posterior mean \(\hat {\mu } = \sum \limits _{l^{*} \in \mathcal {B}(l)} w_{l^{*}} \left (\mu _{l^{*}} + \bar {\mu }_{l} - \bar {\mu }_{l^{*}} \right )\) is bounded by

Proof

The proof for the posterior mean is straightforward, noting that \(w_{l^{*}} \geq 0, \forall l^{*}\) and \(\sum w_{l^{*}} = 1\). □

Theorem 2

The Gaussian mixture posterior variance \(\hat {\sigma }^{2} = \sum \limits _{l^{*} \in \mathcal {B}(l)} w^{2}_{l^{*}} \sigma _{l^{*}}^{2}\) is bounded by

Proof

For the right-hand side of the variance inequality, observe that

For the left-hand side of the variance inequality, recall the Jensen’s inequality: \(\rho \left (\frac {{\sum }_{i} a_{i} x_{i}}{{\sum }_{i} {a_{i}}} \right ) \leq \frac {{\sum }_{i} a_{i} \rho (x_{i})}{{\sum }_{i} a_{i}} \), where ρ(⋅) is a convex function. Substituting \(w^{2}_{l^{*}} \to a_{i}\), \(\sigma _{l^{*}} \to x_{i}\) and ρ(x) = x2 into the Jensen’s inequality, we have

Now, note that \(\sum \limits _{l^{*}} w_{l^{*}}^{2} \leq \sum \limits _{l^{*}} w_{l^{*}} = 1\). We obtain the left-hand side of the inequality. □

Theorem 3

The largest weight is associated with the lth cluster.

Proof

Based on the weight formula,

it is easy to see that the Wasserstein distance between a cluster with itself is zero.

Thus, the right-hand side is always less than \({\sigma _{l}^{2}}\), i.e.,

Inversing the last inequality completes the proof. The equality occurs when l∗ = l. □

Theorem 4

The expectation of the posterior mean \(\hat {\mu } = \sum \limits _{l^{*} \in \mathcal {B}(l)} w_{l^{*}} \left (\mu _{l^{*}} + \bar {\mu }_{l} - \bar {\mu }_{l^{*}} \right )\) is \(\bar {\mu }_{l}\) , i.e., \(\mathbb {E}[\hat {\mu }] = \bar {\mu }_{l}\) .

Proof

Take the expectation of (9) for any lth cluster over the continuous domain, and note that \(\mathbb {E}[\boldsymbol {y} - \boldsymbol {m}] = 0\), the mean of the posterior is recovered to the mean of the cluster, i.e.,

Equation 26 holds for any l th under the GP formulation. In the similar manner, taking the expectation of the posterior mean \(\hat {\mu }\) from the proposed method over the continuous domain, we arrive at

where the second equality is formed by distributing the expectation operator under linear combination rule. The third equality follows (26) as described above. The fourth equality is formed by canceling two identical terms \(\bar {\mu }_{l^{*}}\). □

A major problem of GP is its scalability, which originates from the computation of the inverse of correlation matrices. The dataset decomposition has a favorable computational aspect in which the scalability is alleviated. Here, we analyze the computational cost based on the assumption that the size of each cluster is roughly equal. Denote the number of data points for the whole dataset as N, and the number of clusters as k. The computational cost to compute all covariance matrices is reduced by a factor of k2, as k covariance matrices are involved, and each covariance matrix has the computational complexity \(\mathcal {O}\left (\frac {N}{k}\right )^{3}\), thus resulting in the total cost of \(k \mathcal {O}\left (\frac {N}{k}\right )^{3} = \frac {1}{k^{2}} \mathcal {O}\left ({N}^{3}\right )\). Similarly, the cost of storing covariance matrices is also reduced by a factor of k, since \(k \mathcal {O}\left (\frac {N}{k}\right )^{2} = \frac {1}{k} \mathcal {O}(N^{2})\). However, the computational cost of predicting the posterior mean μ and posterior variance σ2 stays the same, since \(k \mathcal {O}\left (\frac {N}{k}\right ) = \mathcal {O}(N)\).

The decomposition approach in the proposed mixed-integer BO has a computational advantage to mitigate the scalability problem in GP, even though it is not completely eliminated.

4 Analytical examples

In this section, the proposed mixed-integer BO is compared with the genetic algorithm (GA) with various settings.

The settings for the GA are described as follows. To verify the robustness of the proposed method, three GA settings are chosen. In the first setting, the population size and the elite count parameters are set to be 50 and 3, respectively. In the second setting, the population size and the elite count parameters are set to be 150 and 10, respectively. In the third setting, they are 1500 and 10, respectively. Other parameters are left to be the default values in MATLAB function ga().

In Section 4.1, a discrete modification of the multi-modal Rastrigin function is used as a benchmark function, where two variables are discrete and the other two are continuous. In Section 4.2, a welded beam design optimization with two discrete and four continuous variables is used to evaluate the performance of the proposed mixed-integer BO method where discrete variables come from the configuration and material of the beam. In Section 4.3, a pressure vessel design optimization with four continuous variables is benchmarked. In Section 4.4, a speed reducer design optimization function with one discrete and six continuous variables is utilized. In Section 4.5, a modification of discrete sphere function is devised to demonstrate the proposed mixed-integer BO method on high-dimensional optimization problems, with 5 discrete and 50 and 100 continuous variables.

4.1 Discrete Rastrigin function

In this example, the proposed method is applied on the discrete version of the Rastrigin function, which is an analytical function for testing different optimization methods. To evaluate the effectiveness of the proposed mixed-integer BO method, the optimization performance is compared against GA optimization performance.

4.1.1 Problem statement

The DACE toolbox (Nielsen et al. 2002) for classical GP is extended to include the proposed distributed GP and Bayesian optimization. In this section, the hybrid Bayesian optimization is to find the global minimum on a tiled version of the Rastrigin function on 25 clusters, where each cluster corresponds to two discrete variables. The input x = (i, j, x, y) is comprised of four variables, in which the first two are discrete and the last two are continuous, as illustrated in Fig. 3. The original two-dimensional Rastrigin function is f(x, y) = 20 + [x2 − 10cos(2πx) + y2 − 10cos(2πy)], where − 5.12 ≤ x, y ≤ 5.12. The tiled Rastrigin function is constructed based on a tiled domain of the Rastrigin function, where each domain is characterized by a discrete tuple (i, j), and the continuous domain is translated to − 0.75 ≤ xtiled, ytiled ≤ 0.75 for all clusters. Figure 3 illustrates the construction of the tiled Rastrigin function, and its relationship with the original Rastrigin function. The relationship between the tiled and original Rastrigin can simply be described by an affine function,

where − 0.75 ≤ xtiled, ytiled ≤ 0.75.

4.1.2 Numerical results

In this example, to find the minimum of the Rastrigin function, we flip the sign of tiled Rastrigin and use the UCB acquisition function to locate the maximum of the negative tiled Rastrigin function. The covariance matrix adaptation evolution strategy (CMA-ES) (Hansen et al. 2003) method is employed to find the next sampling point within each cluster by locating the point with the maximum acquisition function. The parameters are set as follows: κ = 5, dpenalty = 10− 4, Nshuffle = 15, where Nshuffle is the number of steps which CMA-ES is reactivated with different initial positions to search for the next sampling point on each local GP in order to avoid trapping in the local minima. To construct the initial GP response surface, 5 random data points are sampled from each cluster.

Because the global minimum of the original Rastrigin function is at (x = 0, y = 0) with the functional evaluation f(0, 0) = 0, the hybrid Bayesian optimizer on the tiled Rastrigin function is expected to converge to cluster 13, as illustrated in Fig. 3. The neighbor list of cluster 13 includes clusters 8, 12, 13, 14, and 18. Figure 4 compares the numerical performance between the proposed mixed integer BO and the GA with three different settings.

Figure 4 presents the performance of the proposed method (solid line) with five different settings, and the GA method (dash line) with three different settings. For the proposed mixed-integer BO, the threshold distance dth is changed. The proposed mixed-integer BO performs best with small dth parameter, which measures the dissimilarity between discrete tuples.

4.2 Welded beam design problem

To verify the result of the proposed method, an analytical engineering model for welded beam design is adapted from Deb and Goyal (1996), Gandomi and Yang (2011), Rao (2009), and Datta and Figueira (2011), as shown in Fig. 5, with some slight modifications.

Welded beam design problem (Datta and Figueira 2011)

4.2.1 Problem statement

The low-carbon steel (C-1010) beam is welded to a rigid base to support a designated load F. The thickness of the weld h, the length of the welded joint l, the width of the beam t, and the thickness of the beam b are the design continuous variables. Two different welding configurations can be used, four-sided welding and two-side welding (Deb and Goyal 1996). The bulk material of the beam can be steel, cast iron, aluminum, or brass, which is associated with different material properties. The stress, deflection, and buckling conditions are derived from Ravindran et al. (2006), where the constant parameters are as follows: L = 14inch, δmax = 0.25 inch, and F = 6,000lb. The input x is comprised of (w, m, h, l, t, b), where w and m are discrete variables and h, l, t, and b are continuous variables. We note that h, t, and b are commonly considered as discrete variables in multiples of 0.0625 in, as well as continuous variables, bounded between lower and upper bounds.

Under this formulation, the objective is to minimize

subject to the five inequality constraints:

where

where w is the binary variable to model the type of weld, w = 0 is used for two-sided welding and w = 1 is used for four-sided welding. C1(m), C2(m), σd(m), and E(m), G(m) are material-dependent parameters (Deb and Goyal 1996; Gandomi and Yang 2011) listed in Table 1. The lower and upper bounds of the problem are 0.0625 ≤ h ≤ 2, 0.1 ≤ l ≤ 10, 2.0 ≤ t ≤ 20.0, and 0.0625 ≤ b ≤ 2.0 (Datta and Figueira 2011).

4.2.2 Numerical results

Here, the input vector is encoded as x = (w, m, h, l, t, b), where w ∈ {0, 1}, where w = 0 and w = 1 correspond to the two-sided and four-sided welding, respectively; m ∈ {1, 2, 3, 4} corresponds to steel, cast iron, aluminum, and brass, respectively.

In this example, there are 8 clusters, because there are two choices for w and four choices for m. The neighborhood \(\mathcal {B}(\cdot )\) is considered as universal, i.e., the neighborhood for each cluster includes all clusters, such that they are all aware of others.

The bounds for hyperparameters θ for the GP in each cluster are set as follows: \(\underline {\theta } = (0.1, 0.1, 0.1, 0.1)\), \(\overline {\theta } = (20.0, 20.0, 20.0, 20.0)\). Every four iterations, the sampling point location in each cluster is computed again to avoid trapping in local minima. CMA-ES (Hansen et al. 2003) is used as an auxiliary optimizer for maximizing the acquisition function. There are two random sampling points in each cluster to initialize the GP construction. The EI acquisition function is used.

Figure 6 shows the convergence plot of the cost function in the welded beam design, where the circle, cross, triangle, and square corresponds to steel, cast iron, aluminum, brass, respectively. The optimal cost value f(x) evolves at iterations 0, 1, 2, 3, 5, and 132, with the values of 20.1995, 5.0605, 3.7949, 3.2436, 1.7420, and 1.6297, respectively, with the last one being four-sided welded. Compared to Datta and Figueira (2011), where the optimal value is f(x) = 1.9553, our obtained result f(x) = 1.6297 is smaller, because in our formulation h, t, and b are continuous variables, in contrast to Datta and Figueira (2011) with h, t, and b as discrete variables. Furthermore, the convergence occurs relatively fast, as the optimization algorithm exploits the most promising cluster by maximizing the acquisition function. This behavior can be explained by the fact that in this welded beam design example, different materials have significantly different cost objective functional value, which aids the optimization convergence.

To further demonstrate the effectiveness of the proposed method, we compare with GA. Two versions of the proposed method are used. In the first version, every cluster are considered as independent, leaving no neighbor in the neighborhood, whereas in the second version, all the clusters are considered as neighbors.

The performance comparison is presented in Fig. 7, showing that both variants of the mixed-integer BO clearly outperforms the GA in the welded beam design problem. The solution obtained from the GA is [0, 1, 0.24920115, 5.30060037, 7.12520087, 0.25345267], where the objective function is evaluated at 2.04016262. On the other hand, from the first variant (none is neighbor) of the proposed method, the solution obtained is [1, 1, 0.16934934, 5.61720010, 4.90884889, 0.27985016], where the objective function is evaluated at 1.68206763. From the second variant (all are neighbors) of the proposed method, the solution obtained is [1, 1, 0.16934934, 5.61720010, 4.90884889, 0.27985016], where the objective function is evaluated at 1.66457625. The convergence plots of these two variants are very similar. The asymptotic value using the second variant is slightly better than that using the first variant. However, we note that as the optimization process advances, the prediction converges to a single GP prediction, and thus both variants are similar at the later stage of search. The proposed mixed-integer method clearly outperforms the GA in all settings.

4.3 Pressure vessel design problem

Here, the proposed mixed-integer BO method is applied to solve the pressure vessel design optimization problem. The objective of this problem is to minimize the cost of a storage tank with 3⋅ 103 psi internal pressure shown in Fig. 8, where the minimum volume is 750 ft3. The shell is made by joining two hemispheres and forming the longitudinal cylinder with another weld. The design variables are listed as follows: x1 is the thickness of the hemisphere, x2 is the shell thickness, x3 is the inner radius of the hemisphere, x4 is the length of the cylinder.

Pressure vessel design optimization problem (Cagnina et al. 2008)

The objective function that accounts for the cost is

where the imposed constraints are

and 0.00625 ≤ x1, x2 ≤ 0.61875, 10.0 ≤ x3, x4 ≤ 200.0. All variables are considered as continuous in this example.

Figure 9 shows the performance comparison between the proposed mixed-integer BO and the GA with various settings in terms of number of functional evaluations. Again, the BO clearly shows its advantage in term of convergence speed for continuous variables. The optimal input is [0.193114320, 0.0954997100, 10, 76.2478356], where the corresponding objective functional value is 125.02822748.

4.4 Speed reducer design problem

Figure 10 shows the design optimization problem of a speed reducer (Cagnina et al. 2008). Seven design variables are described as follows: x1 is the face width, x2 is the module of teeth, x3 is the number of teeth on pinion, x4 is the length of the first shaft between bearings, x5 is the length of the second shaft between bearings, x6 is the diameter of the first shaft, x7 is the diameter of the second shaft. x3 is the discrete variable, whereas the rest of the variables are continuous. The problem is 7-dimensional, one discrete and six continuous. With the formulation of the problem, there are 12 local GPs corresponding to 12 discrete values of x3.

Speed reducer design optimization problem (Cagnina et al. 2008) from NASA

In iteration 148, the mixed-integer BO converges to the global minimum of f(x∗) = 2996.29614837, where x∗ = [3.50000447, 0.7, 17, 7.30566156, 7.8, 3.35022572, 5.28668406]. The result is comparable with Cagnina et al. (2008), where particle swarm optimization is employed, yielding the optimal f(x∗) = 2996.348165, where x∗ = [3.5, 0.7, 17, 7.3, 7.8, 3.350214, 5.286683].

To evaluate the effect of initial sample size, the mixed-integer BO is performed with different number of initial samples. Figure 11 shows the convergence plot of the GA and the mixed-integer BO, each with various settings. In terms of the number of functional evaluations, the mixed-integer BO clearly shows the advantages with faster convergence, compared to the GA. The effect of initial samples is also shown in Fig. 11. It is observed that the proposed mixed-integer BO converges relatively fast after the initial sampling stage. Thus, for low-dimensional problems, it may not be necessary to sample extensively at the initial sampling stage. The balance between exploration and exploitation is well-tuned by the acquisition function, which is GP-UCB (Srinivas et al. 2012) in this case.

4.5 High-dimensional discrete sphere function

To evaluate the performance of the proposed mixed-integer BO in high-dimensional problems, two discrete sphere functions with 5-dimensional discrete variables and 50-dimensional and 100-dimensional continuous variables, respectively, are used to benchmark. The discrete sphere function is

where 1 ≤ xi ≤ 2(1 ≤ i ≤ n) are n integer variables and − 5.12 ≤ xj ≤ 5.12(n + 1 ≤ j ≤ m) are m − n continuous variables. Again, GA is used to compare against the proposed mixed-integer BO method. The global optimal of this function is f(x∗) = 0, where x∗ = [1, 1, 1, 1, 1, 0, … , 0]. The number of clusters in this example is 2 × 2 × 2 × 2 × 2 = 32, where each cluster corresponds to a local GP. Figure 12 shows the convergence plot of the proposed mixed-integer BO with different number of initial samples and GA with different settings for the (50 + 5)D discrete spherical function, where 5 variables are discrete and 50 variables are continuous.

As seen in Fig. 12, the proposed mixed-integer BO quickly identifies the discrete tuple (1, 1, 1, 1, 1) that corresponds to the minimal response, with respect to the discrete tuple. The rest of the convergence plot focuses on the optimization of the continuous variables. The GA with population size of 50 and elite count of 3 performs on par with the proposed mixed-integer BO, whereas other GA settings converge much slower. The mixed-integer BO with 2 initial samples converges relatively fast at the beginning. However, the convergence at the later stage stagnates over a long period. On the contrary, the mixed-integer with 20 initial samples converge very fast right after the initial sampling stage. One of the reasons is that the local GP is able to approximate the objective function more accurately with more initial samples, compared to the one with less initial samples.

Similarly, Fig. 13 shows the convergence plot of the proposed mixed-integer BO with a different number of initial samples and GA with different settings for (100 + 5)D discrete spherical function, where 5 variables are discrete. The mixed-integer with 2 initial samples converges poorly, whereas other variants perform better. One of the reasons is that with the low initial sample size, the discrete tuple is incorrectly identified as (1, 1, 1, 1, 2), as opposed to (1, 1, 1, 1, 1). The other variants of the proposed mixed-integer BO are able to identify the correct tuple immediately after the initial sampling stage. Thus, it may be beneficial to have sufficient number of initial samples.

5 Metamaterials design examples

In this section, we demonstrate the applicability of the proposed method to the design of metamaterials, in which properties can be tailored depending on the geometric design of the structures. In Section 5.1, a mechanical metamaterial is considered, where the objective is to design a low-weight and high-strength unit cell. In Section 5.2, an auxetic metamaterial unit cell is considered. The proposed BO method is applied to minimize the negative Poisson’s ratio.

5.1 An example of designing high-strength low-weight fractal metamaterials

Motivated by the recent experimental work of Meza et al. (2014) in designing high-strength and low-weight metamaterials at nano-scale for ceramic systems where the effective mechanical strength can be enhanced by hierarchical structure. We demonstrate the proposed methodology in searching for high-strength and low-weight metamaterials for multiple classes of materials.

Particularly, our metamaterials are constructed with fractal geometry. Fractal geometry has the special property of self-similarity at different length scales. A parametric design and optimization approach for fractal metamaterials is demonstrated here. In this example, the goal is to maximize the effective strength of the structure.

The effective strength is defined as the ratio between the effective Young modulus and the volume of material with the assumption of homogenized material for the bulk properties. The material selection, including Ashby chart, is formulated as an inequality constraint to limit the searching space of materials.

5.1.1 Parametric design of fractal truss structures

Mathematically, fractals can be constructed iteratively using the so-called iterated function systems (IFSs). An IFS is a finite set of contraction mappings \(\{f_{i}\}_{i = 1}^{N}\) on a complete metric space X (Barnsley 2014). Starting from an initial set \(\mathcal {P}_{0}\), the fractal can be constructed iteratively as \(\mathcal {P}_{k + 1}=\cup _{i = 1}^{N} f_{i}(\mathcal {P}_{k})\). Geometrically, the IFSs fi can be expressed in terms of rotation, translation, scaling, and other set topological operations, such as complement, union, or intersect.

In this example, the fractal truss structures are constructed from the 2D profiles shown in Fig. 14c. They are based on the square shape, even though in principle they can be constructed from any arbitrary polygon such as triangle and hexagon. Figure 14c presents the first three levels of IFS construction. The IFSs are inspired by the projection of Keplerian 3D fractals onto its corresponding 2D plane. Here, the IFS operators include the translation matrix T = diag {±d/2, ±d/2, 1} and the scaling matrix S = diag {1/2, 1/2, 1}. The rotation is not considered. Physically, the first four IFSs simply scale the design of previous fractal level by 1/2, and translate them to the northwest, northeast, southwest, and southeast, respectively. The fifth IFS scales the design of previous fractal level by one half and deletes other features that overlap within the region.

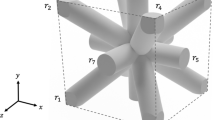

Figure 14 illustrates the square basis with three design options: (1) diagonal truss, (2) inner square truss, and (3) perpendicular truss. The diagonal truss option enables edges connecting nodes 4, 8, 12, 16, and 20 and nodes 0, 6, 12, 18, and 24. The inner square truss option enables edges connecting nodes 2, 6, 10, 15, 22, 18, 14, and 8. The perpendicular truss option enables edges connecting nodes 2, 7, 12, 17, and 22 and nodes 10, 11, 12, 13, and 14. In the example of Fig. 14c, only the inner square truss option is enabled. In the construction process, the options are enabled by setting the truss control parameters to 0 or 1, respectively. The fundamental adjacency matrix of fractal level 0 is built to indicate whether a pair of nodes are connected. With the design of level 0 unit cell, the IFSs are applied recursively to create the more complicated geometry at the desired level. Once the profile is constructed, additional offset operations are applied to generate thickness of the 2D truss elements for a full 3D structure. Figure 15a shows a complete 2D fractal face. With the square face defined, a complete 3D fractal unit cell is built with six of the faces, as shown in Fig. 15b.

5.1.2 Constitutive material model and the finite element analysis

A general anisotropic material has 21 independent elastic constants to describe the stress-strain (σ-ε) relationship. To simplify the materials constitutive model, we assume isotropic and linear elastic materials behavior at small strain regime, where σ-ε relationship for bulk material properties can be obtained via Young’s modulus E and Poisson’s ratio ν, i.e.,

where i and j can be either x, y, or z, and δij is the Kronecker delta of i and j. The material properties E and ν, as well as material ρ, are taken as inputs to describe the linear elastic regime in the FEM simulation to obtain stress.

In simulations, we are concerned with an uniaxial compression. Therefore, to simplify the terminology, we refer to the component of effective stiffness tensor in the loading direction as effective Young’s modulus. It is noteworthy that the effective stiffness tensor of the designed fractal truss structure is not the same as the bulk material stiffness tensor. Two displacement boundary conditions are imposed on the unit cube. One is the fixed boundary condition for both translation and rotation, and the other is the constant displacement on the opposite side of the cube. The stress is obtained by taking the maximum nodal stress in the active direction. The effective Young’s modulus is calculated as the ratio of the maximal nodal stress σ33 at the designated engineering strain ε = 0.01. The quadratic tetrahedral element (C3D10 in ABAQUS) is utilized for the FEM simulation. The total number of elements is between 5000 and 10,000. The exact number varies with respect to the finite element simulation. The size of the cube is around 1 mm (10− 3 m).

The dimension of the design space is 9, in which 4 discrete and 5 continuous variables are combined to create an input x = (x1, x2, x3, x4, x5, x6, x7, x8, x9). The discrete variables include fractal level, the diagonal, inner square, and perpendicular truss options. The fractal level x1 is an integer of either 0, 1, or 2, whereas each of the truss options x2, x3, and x4 is a binary variable from design space, taking a value of 0 or 1. The continuous variables include thickness x5 = t of the truss, the extrusion depth x6 = et of the unit face, the material bulk density x7 = ρ, bulk elastic Young’s modulus x8 = E, and bulk Poisson’s ratio x9 = ν.

Three constraints are imposed as follows. Thickness and extrusion depth are limited to a constant that is related to the fractal level to preserve the fractal geometry of the structure. The higher the fractal level is, the smaller is the constant. Similarly, the material bulk density, Young’s modulus, and Poisson’s ratio are bounded within a physical limit, where values are taken from Table 3.1 of Bower (2011) for woods, copper, tungsten carbide, silica glass, and alloys. As a result, the imposed constraints are

where \(\underline {T} = 10^{-6}\) is the threshold for manufacturability and \(\overline {T}\) is the threshold for the truss thickness as

We expect the simulations to converge on the high-strength and low-density type of materials. However, Ashby chart indicates a high correlation between compressive strength and density among all types of materials. To circumvent this problem, another constraint is introduced to limit the search region, based on the upper bound of longitudinal wave speed as \(\sqrt { E/\rho } = \sqrt { {x_{8}}/{x_{7}}} \leq 10^{4.25}\) m/s.

5.1.3 Simulation and results

Figure 16 shows an example of von Mises stress during the uniaxial compression of the architected metamaterial cell, as described in Section 5.1.2. In the simulation settings and its post-process, only σzz is concerned.

The lower bounds of continuous variables (x5, x6, x7, x8, x9) are (2 ⋅ 10− 6,2 ⋅ 10− 6,0.4 ⋅ 10+ 3,9 ⋅ 10+ 9,0.16). The lower bounds of x7, x8, and x9 correspond to the density of wood, bulk Young’s modulus of wood, and Poisson’s ratio of silica glass, respectively. The upper bounds of continuous variables (x5, x6, x7, x8, x9) are (0.5 ⋅ 10− 3, 0.5 ⋅ 10− 3, 8.9 ⋅ 10+ 3, 650 ⋅ 10+ 9, 0.35). The upper bounds of x7, x8, and x9 correspond to the density of copper, bulk Young’s modulus of tungsten carbide, and Poisson’s ratio of a general alloy, respectively.

To initialize the optimization process, two random inputs are sampled to construct the GP model for each cluster. The number of clusters in this example is 2 × 2 × 2 × 3 = 24. The EI acquisition is used to locate the next sampling location x. The CMA-ES (Hansen et al. 2003) is used as an auxiliary optimizer to maximize the penalized acquisition function. The optimization process is carried out for 170 iterations, as shown in Fig. 17. At iterations 0, 1, 2, 11, 14, 26, and 148, better objective function values of 1.9723, 2.7827, 10.4725, 12.1207, 22.1071, 23.3766, and 36.8316 ⋅ 106 GPa/kg are identified, respectively. The relatively fast convergence plot demonstrates the effectiveness of the proposed BO method for the mix-integer optimization problems. Due to the expensive computational cost of the FEM simulation, the number of iterations is limited to 200.

5.2 Design optimization of fractal auxetic metamaterials

In the second example, we study the auxetic metamaterial with application in flexible and stretchable devices. Inspired by the experimental work of Cho et al. (2014) in designing auxetic metamaterials using fractal cut, and its subsequent numerical and experimental work by Tang and Yin (2017) in developing shape-programmable materials, we use auxetic metamaterials to demonstrate the proposed BO methodology. The goal of this example is to minimize the effective Poisson’s ratio, which is negative and evaluated through a FEM simulation.

5.2.1 Parametric design of auxetic metamaterials

Here, a parametric design of the unit cell, where the fractal level is fixed at 2, is devised. The cut motif α and β for one level of the auxetic cell is shown in Fig. 18. Basically, this cut motif controls the free rotational hinges of the architected structure, such that the deformation energy dissipates through rotational motion, rather than translational motion. The principle of cut design is based on the connectivity of the rotating units, where the connectivity depends on the cut patterns, which in turn determines the maximum stretchability of the designed specimen. For further details about the fractal cut and its rotating mechanisms, readers are referred to the work of Cho et al. (2014) and Tang and Yin (2017). To create a fractal cut, a simple IFS is imposed on the cut to create subsequent level, with the scaling ratio of 1/2, and is then translated to four corners.

To tailor the negative Poisson’s ratio, the shape of the cut is modeled as splines, where the coordinates of the control points are considered as inputs. The choice of α and β cut is formulated using discrete variables. The dimension of this problem is 18, in which 2 discrete and 16 continuous variables are used. The parametric input x includes x1 and x2 as discrete variables, which takes the value of either 1 (α-motif) or 2 (β-motif) for level 1 and level 2 cuts, respectively. The first 4 continuous variables x3, x4, x5, and x6 are used to describe the shape of the large center cut of level 1. The next 4 continuous variables x7, x8, x9, and x10 describe the shape of two small side cuts of level 1. In the same manner, the last 8 continuous variables are used to model the large center cut and two small side cuts of level 2. Figure 19 shows an example of the parametric design implementation of the designed auxetic metamaterials in the ABAQUS environment. The solid dots represent the control points of the cut. (Color is available on the electronic version. The blue solid dots denote the level 1 control points, whereas the red solid dots denote the level 2 control points.)

5.2.2 Constitutive material model and the finite element analysis

The study of Tang and Yin (2017) has demonstrated that the effective Poisson’s ratio νeff is indeed a function of strain ε. In this work, we assume that the base material is natural rubber reinforced by carbon black. The Mooney-Rivlin constitutive model is used to describe the hyperelastic material behavior, where the suitable energy function W is expressed as

where J is the elastic volume ratio and I1, I2, and I3 are the three invariants of Green deformation tensor defined in term of principal stretch ratios λ1, λ2, λ3, i.e.,

and \(\overline {I}_{1} = I_{1} J^{-2/3}\), \(\overline {I}_{2} = I_{2} J^{-4/3}\). The materials parameter is adopted from Shahzad et al. (2015), where C10 = 0.3339 MPa, C01 = − 3.37 ⋅ 10−4, and D1 = 1.5828 ⋅ 10− 3.

The initial size of the square is 20 cm × 20 cm, and the thickness of the specimen is 1 mm. The specimen is then deformed in a uniaxial tension configuration in y-direction, where the displacement is fixed at 10 cm in one direction. The configuration for the simulation is plane-strain configuration, where displacement in the extrusion direction (z-direction) is fixed as zero.

In the deformed configuration, we extract the displacement in x-direction to infer the engineering transverse strain, and compute the effective Poisson’s ratio as the ratio between transverse and longitudinal engineering strains.

The element used in this FEM simulation is the eight-node brick element (C3D8R, C3D6, and C3D4). The FEM is developed in the ABAQUS environment. The number of elements for each simulation is approximately 5000.

In this example, several constraints are imposed on the design variables, which are

where \(\underline {t} = 0.0015\)m is the smallest thickness of the specimen. Two other constraints include the implementation of convexity for the large center cut of level 1 and level 2. Figure 20 presents an example of deformed configuration after the simulation converges.

5.2.3 Simulation and results

The lower bounds of the continuous variables are (0.25; 3.5; 0.50; 1.75; 8.0; 0.25; 4.0; 0.50; 0.25; 3.5; 0.50; 1.75; 4.0; 0.25; 3.0; 0.50)⋅ 10− 3. The upper bounds of the continuous variables are (2.00; 6.5; 1.75; 3.00; 9.5; 1.50; 8.0; 1.75; 2.00; 6.5; 1.75; 3.00; 5.5; 1.50; 4.0; 1.75)⋅ 10− 3.

Two random initial sampling points are created within each cluster. Because the fractal level is fixed at 2, where each fractal level corresponds to one cut motif α or β, 4 clusters are created during the initialization. The initial hyperparameters θi for all i are set at 0.2. The lower and upper bounds for the hyperparameters θi for all i are (0.01, 20).

The optimization process is carried out for 790 iterations. Figure 21 shows the convergence plot of the optimization process, where the best objective function value νeff is updated in iterations 0, 4, 24, 26, 30, 45, 63, 66, 69, 78, 81, 84, 513, 582, and 647, with the value of − 0.6603,− 0.6605, −0.6628, −0.6628, −0.6902, −0.6941, −0.7143, −0.7410, −0.7517, −0.7576, −0.7627, −0.7784, −0.7785, −0.7802, and − 0.7804, respectively. The proposed BO shows relatively fast convergence for mid-level dimensionality d = 16, thus demonstrating the effectiveness in tackling mix-integer nonlinear optimization problems.

6 Discussion

One of the advantages of the proposed BO algorithm is its extension to incorporate discrete variables for nonlinear mixed-integer optimization problems. The discrete variables include both categorical and integer variables, thus can be applied with or without the notion of order. The neighborhood of each cluster is built once during the initialization of the process and can be customized to adapt to specific user-defined requirements. Additionally, because the neighborhood can be modified and/or defined manually, the independence between clusters can be achieved by removing the corresponding clusters. Such independence is quite common in the case of categorical variables. However, the optimization performance of the proposed method does not depend on the enumeration of the clusters. We emphasize that if the cluster is ceased to exist, then it can be manually removed, and the cluster indices can be reenumerated manually by a slight modification of (12) and Algorithm 1.

The weight computation scheme is devised in such a way that asymptotically, the weight prediction converges to a single GP prediction, by imposing a weight vector which has 0 everywhere, except for a single 1 that corresponds to the corresponding cluster.

It is recommended to choose the neighbors carefully. One way to do so is to set a small threshold discrete distance dth, which measures the dissimilarity between clusters based on the discrete tuples, e.g., dth ≤ 1, and manually remove clusters that are known to be independent beforehand at the end of initialization. The safest setting is dth = 0, which assumes clusters are completely independent of each other. This setting has some negative effect on the convergence rate, but would eventually reach the global optimal solution, and would not be trapped at local optima.

The initial sample size plays a role in the performance of the proposed mixed-integer BO method. It has been shown that for some low-dimensional problems, the initial sample size does not affect the optimization performance. However, for high-dimensional problems, the initial sample size does impact the optimization performance. Too many initial samples at the beginning would prevent the optimization from quick convergence. However, with moderate amount of initial samples, and thus a more accurate local GP, the mixed-integer BO converges faster, compared with fewer initial samples. As a general rule of thumb, the total initial sample size is recommended at between 5d and 10d, where d is the dimension of the problem, including both discrete and continuous variables.

Here, the scalability of GP for high-dimensional problems is alleviated, but not completely eliminated. It is noted that the decomposition approach and weighted average approach have been adopted (Nguyen-Tuong and Peters 2008; Nguyen-Tuong et al. 2009a, b, 2010; van Stein et al. 2015; Tran et al. 2018) for continuous variables. The decomposition method for continuous variables is typically referred to as local GP. This approach is promising in tackling the scalability problem. Particularly, in one of our previous studies (Tran et al. 2018), we have shown that the local GP is computationally one-order cheaper, compared to the classical GP, while maintaining a reasonable approximation error.

Nevertheless, further research is required to develop an efficient and robust decomposition scheme for both discrete and continuous variables.

One of the limitations in the proposed approach is the scalability with respect to discrete variables. Because of the decomposition scheme, the number of the clusters is the number of the combinatorial possibilities, i.e., the product of the number of choices for each discrete variable, and thus resulting in the sparsity problem in each cluster. To mitigate the undesirable sparsity effect, a Gaussian mixture model that combines all the predictions from neighboring clusters is used to exploit some useful information from the neighborhood. As mentioned previously, the mixed-integer optimization problem, in general, is difficult, because it combines the difficulties for both discrete and continuous optimization. Particularly, some discrete and combinatorial optimization problems are NP-complete, such as the traveling salesman problem, knapsack problem, and graph coloring problem, to name a few. Another extension is to model the weights as stochastic variables, so that the metaheuristic methodologies can be applied (Bianchi et al. 2009).

The clustering and enumeration algorithm described in Algorithm 1 is based on the assumption of the independence of discrete variables. Algorithm 1 does not work if the discrete variables are dependent. However, in the case that discrete variables are dependent on each other, manual neighborhood definition of clusters can be introduced manually, and the proposed BO algorithm is functional with the demonstrated efficiency. However, the users must declare the neighborhood of each cluster manually. Strictly speaking, the computational efficiency of the proposed algorithm only depends on the number of clusters, not on the number of discrete variables. If all discrete variables are completely independent of each other, as demonstrated in the above examples, then the number of clusters is equal to the product of the number of choices for each discrete variable, i.e., \(L=\prod p_{i}\).

Another practical limitation for the proposed BO algorithm for engineering models and simulations is its sequential nature of sampling and search. Each run of simulations usually demands a considerable amount of computational time. In practice, for high-fidelity and dedicated simulations, one should resort to multi-fidelity or batch-parallel BO for further improvement.

7 Conclusion and future work

In this paper, we propose a new BO algorithm to solve the nonlinear constrained mixed-integer design optimization problems. In this algorithm, the large dataset is decomposed according to the discrete tuples, in which each discrete tuple corresponds to a unique GP model. The prediction for mean and variance is formulated as a Gaussian mixture model, in which the weights are computed based on the pair-wise Wasserstein distance between clusters. Constraints, which are formulated as a set of inequalities, are included during the optimization process. Theoretical bounds and algorithmic complexity are provided to demonstrate the computational efficiency compared to the classical GP.