Abstract

Point cloud analysis has a wide range of applications in many areas such as computer vision, robotic manipulation, and autonomous driving. While deep learning has achieved remarkable success on image-based tasks, there are many unique challenges faced by deep neural networks in processing massive, unordered, irregular and noisy 3D points. To stimulate future research, this paper analyzes recent progress in deep learning methods employed for point cloud processing and presents challenges and potential directions to advance this field. It serves as a comprehensive review on two major tasks in 3D point cloud processing—namely, 3D shape classification and semantic segmentation.

1 Introduction

The advancement of 3D point cloud acquisition techniques, combined with the accessibility of acquisition devices, has enabled the use of real-world 3D models in a variety of applications, including autonomous driving, augmented reality, and robotics. 3D scanners, Light Detection and Ranging (LiDAR), RGB-D cameras (such as Kinect, RealSense, and Apple depth cameras) [1], and photogrammetry technologies allow the creation of very large and precise point clouds. Point clouds are the raw output of most 3D data gathering devices and serve as a versatile geometric representation of 3D data [2].

Effective point cloud analysis techniques are therefore essential to understanding 3D targets. While hand-crafted features on point clouds have long been discussed in graphics and vision, the recent overwhelming success of convolutional neural networks (CNNs) for image analysis suggests that extending CNN insights to the point cloud domain could be beneficial. However, 3D point clouds are unordered collections of points embedded in continuous space, unlike images, which are structured on regular pixel grids. This makes effective feature aggregation and message passing among points difficult to design, and it prevents the direct use of the traditional deep networks employed in computer vision. To form latent space mappings between input point coordinates and ground truth labels, pioneering research [3, 4] and subsequent work [5,6,7,8,9,10,11] have developed specialized modules for feature aggregation and message passing and yielded suitable neural networks for point cloud data.

The focus of this review is on the analysis of deep learning approaches for processing 3D point clouds for shape classification and semantic segmentation. We also discuss some of the most prominent publicly available datasets used to handle diverse point cloud processing challenges. These datasets include ModelNet40 [12], ScanObjectNN [13], ShapeNet [14], S3DIS [15], Intra [16], Semantic3D [17], SemanticPOSS [18], and SydneyUrbanObjects [19]. Although previous surveys of deep learning for 3D data exist, such as [20,21,22,23,24,25], this review aims to bridge the gap by addressing techniques that previous surveys have not comprehensively covered. It also explores the latest developments in point cloud analysis. The primary objective of this paper is to give readers an extensive understanding of the diverse representations of point clouds, with particular emphasis on recent advances in raw point-based methods, which have moved to the forefront of the field. The key contributions of our paper are as follows:

1. We review deep learning models for shape classification and semantic segmentation of 3D point clouds, covering the most up-to-date (2015–2023) advancements in this domain.

2. Our review goes beyond existing papers by encompassing all existing methods for point cloud classification and segmentation that have not been extensively discussed before.

3. We present a comprehensive taxonomy that encompasses both supervised and unsupervised approaches, including previously overlooked mesh-based methods. Our paper addresses the notable gaps in existing review papers by incorporating these previously unexplored methods.

4. Our analysis classifies and briefly discusses the myriad models available, each leveraging distinct representations and methodologies. This enables readers to grasp the diverse range of approaches within the context of their specific strengths and applications.

5. We conduct comprehensive comparisons of existing methods using multiple publicly available datasets and thoroughly expound upon the inherent strengths and limitations embedded within these diverse approaches.

6. Our paper includes a thorough discussion of the current challenges in the field and offers insightful directions for future research.

Our paper’s novelty is evident not only in its coverage of recent advancements but also in its meticulous attention to previously overlooked areas in the literature. Additionally, the unique structure of our paper serves as a remarkable resource, catering to readers of all backgrounds—from newcomers seeking an approachable entry point to experts seeking a comprehensive taxonomy and insights into the latest deep learning methods for point cloud processing.

The structure of this paper is as follows: Sect. 2 introduces the datasets and evaluation metrics for the respective tasks. Sects. 3 and 4 review the state-of-the-art methods for 3D shape classification, while Sects. 5 and 6 provide comprehensive insights into the cutting-edge methods for semantic segmentation. Section 7 contains a quantitative assessment of several indicators as well as future research directions in this field, and Sect. 8 concludes the paper.

2 Datasets and evaluation metrics

2.1 Datasets

A high-quality dataset is crucial for both training and evaluating the effectiveness of machine learning algorithms. With the rise of deep neural networks, a reliable, well-annotated and large dataset is even more crucial. In contrast to the feature engineering used in traditional machine learning, deep network models rely on data and its annotations to extract appropriate feature embeddings. The purpose of 3D shape classification is to identify objects in a 3D point cloud [33,34,35,36] and assign a class label to each object. Thus, a large amount of well-annotated training data is necessary for the model to train effectively.

In this paper, we collected a significant number of datasets to examine the performance of state-of-the-art deep learning methods for various point cloud applications. Tables 1 and 2 list some of the most common large-scale datasets currently used for 3D point cloud shape classification and segmentation.

For each dataset in Table 1, we present the establishment year, number of samples, number of classes and a brief description. We also categorize these datasets into two types: real-world datasets [13, 30] and synthetic datasets [12, 14]. Objects in the synthetic datasets exhibit no occlusion and are complete. Objects in real-world datasets may be partially occluded while background noise, outliers and point perturbations may be present in the data. ModelNet10 and ModelNet40 [12] are the most popular datasets employed for point cloud shape classification.

Table 2 provides an overview of commonly used large-scale datasets for 3D point cloud segmentation. These datasets are carefully curated and labeled to ensure representation of real-world scenarios and a wide range of object classes and scene types.

The datasets can be broadly classified into two groups: indoor datasets and outdoor datasets. In Table 2, we provide details such as the establishment year, number of points, number of classes, sensors used, and a brief description for each dataset. The data collection process involves various sensors, including RGB-D cameras [37], Mobile Laser Scanners (MLS) [27, 31, 38], Aerial Laser Scanners (ALS) [39, 40], and other 3D scanners [15]. Photogrammetry is often employed to map the three-dimensional distance between objects.

2.2 Evaluation metrics

Many evaluation metrics have been proposed to evaluate different point cloud applications. Classification models are usually evaluated with the accuracy metric. In general, accuracy refers to the proportion of predictions that the model gets right:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Here, TP, TN, FP, and FN represent true positives, true negatives, false positives and false negatives, respectively.

For 3D point cloud classification, overall accuracy (OA) and mean class accuracy (mAcc) are the most commonly used performance metrics. OA is the accuracy computed over all test instances, while mAcc is the mean of the per-class accuracies across all shape classes. The Dice coefficient (F1 score) is also used as a criterion for performance evaluation in the classification of 3D point clouds.
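The difference between OA and mAcc is easiest to see on an imbalanced test set. The following is a minimal sketch in plain Python; the function names and the toy labels are illustrative assumptions, not taken from any of the reviewed papers.

```python
from collections import defaultdict

def overall_accuracy(y_true, y_pred):
    """Fraction of test instances whose predicted label is correct."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def mean_class_accuracy(y_true, y_pred):
    """Average of the per-class accuracies, each class weighted equally."""
    total = defaultdict(int)
    correct = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    return sum(correct[c] / total[c] for c in total) / len(total)

# Made-up labels: "chair" is frequent, "lamp" is rare.
y_true = ["chair", "chair", "chair", "chair", "lamp", "lamp"]
y_pred = ["chair", "chair", "chair", "chair", "lamp", "chair"]

oa = overall_accuracy(y_true, y_pred)       # 5 of 6 instances correct
macc = mean_class_accuracy(y_true, y_pred)  # (4/4 + 1/2) / 2
```

In this toy case OA is 5/6 while mAcc is 0.75, because the single error falls on the rare class and mAcc weights every class equally.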

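The F1 score is defined as the harmonic mean of precision and recall:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$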

In 3D point cloud segmentation, several performance metrics are commonly used for evaluation, including mean intersection over union (mIoU), overall accuracy (OA), and mean class accuracy [15, 17, 31, 38]. These metrics provide insights into the quality of segmentation results. The IoU metric calculates the intersection over union between two sets, the prediction (A) and the ground truth (B); in segmentation these are the sets of points assigned to a given class. The mIoU is the average of the IoU values computed for each category, providing an overall measure of segmentation accuracy. The IoU can be computed using the following equation:

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$$
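For per-point labels, the IoU of a class can be written as TP / (TP + FP + FN), which is the intersection over union of the predicted and ground-truth point sets of that class. A minimal sketch, assuming integer class labels and hypothetical helper names:

```python
def per_class_iou(y_true, y_pred, num_classes):
    """IoU_c = TP_c / (TP_c + FP_c + FN_c), computed over per-point labels."""
    ious = []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        union = tp + fp + fn
        # A class absent from both prediction and ground truth has no defined IoU.
        ious.append(tp / union if union else None)
    return ious

def mean_iou(ious):
    """Average the IoU over the classes for which it is defined."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid)

# Made-up labels for six points and three classes.
gt = [0, 0, 0, 1, 1, 2]
pred = [0, 0, 1, 1, 1, 2]

ious = per_class_iou(gt, pred, num_classes=3)  # 2/3, 2/3 and 1
miou = mean_iou(ious)                          # 7/9
```

Skipping classes that appear in neither the prediction nor the ground truth is one common convention; benchmarks may handle this case differently.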

These metrics enable a comprehensive assessment of the accuracy and effectiveness of 3D point cloud application algorithms.

3 3D point cloud classification

The goal of 3D point cloud shape classification is to produce a label for the entire point cloud that identifies the shape of the object it contains. Analogous to 2D image classification, methods for 3D shape classification usually follow two main stages. First, the embedding of each point is learned and an aggregation encoder combines these embeddings into a global embedding. Next, the global embedding is passed through several fully connected layers to obtain the final shape label.

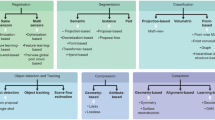

Based on the input data type, point cloud classification models can be generally divided into five major classes, i.e., mesh-based methods, projection-based methods, volumetric-based methods, hybrid methods, and raw point-based methods. Mesh data is a common way of representing 3D shapes in computer graphics, consisting of interconnected vertices, edges, and faces. While mesh data provides an efficient way to store and render 3D models, it also presents challenges due to its inherent complexity and irregularity. Projection-based methods project the unstructured point cloud into 2D images (rasterization) to extract features. These features are then fed into 2D or 3D convolutional networks. In volumetric-based methods, the point cloud is discretized into a regular grid, creating a volumetric representation of the data. In contrast, point-based methods work directly on the raw points in the cloud. Point-based methods have become increasingly popular since they reduce the computational complexity of the network without any explicit information loss. Papers that combine the benefits of both point and projection methods are referred to in this review as “hybrid” techniques.

In Sect. 3, we discuss the various models for 3D shape classification with a focus on input data representation. Sect. 4 presents an in-depth analysis of methodologies that rely exclusively on raw point clouds as their input. Table 3 provides a comprehensive comparison of the aforementioned methods for 3D point cloud classification across various datasets. The methods have been categorized based on the representation of the input point cloud utilized in each approach. Furthermore, the methods are arranged in chronological order within their respective categories. The evaluation of each method’s performance is based on metrics such as overall accuracy (OA), mean accuracy (mAcc), mean average precision (mAP), and F1 score.

3.1 Mesh-based methods

A mesh is the most widely used structure for representing surfaces in computer graphics and is comprised of a set of faces and vertices that define surfaces on a three-dimensional shape. As a result, this representation carries structural information about the object’s surface. Furthermore, by pruning vertices in a mesh and removing extraneous data, mesh-based representations provide a memory-efficient way to store complete geometry details. However, this representation is often overlooked as a suitable input modality for deep learning algorithms. This could be attributed to the fact that a 3D mesh does not provide a grid-like pattern for representing the data to be used in CNNs. In addition, weight sharing in mesh-based approaches presents a difficult challenge because of changes in the number of vertices, the permutation of adjacent vertices, and their pairwise distances.

To learn 3D shape representation from mesh data, Feng et al. introduced a mesh neural network called MeshNet [54]. This approach introduces face-unit and feature splitting and proposes a general architecture with usable and efficient building blocks. The face unit takes as input the features of the vertices and edges that make up a single face and applies convolutional operations to learn representations of that face. These representations are then combined to form higher-level representations of the mesh. MeshNet can effectively manage mesh irregularity and complexity concerns for 3D shape representation. Alternatively, MeshWalker [55] directly learns the shape from a given mesh without the need to change the mesh data representation. This is done by examining the geometry and topology of the mesh by randomly traversing its surface via a number of walks. Each walk is arranged as a list of vertices and imposes some degree of regularity on the mesh.
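The idea of turning a mesh into ordered vertex lists by walking along its edges can be sketched as follows. This is a simplified illustration and not the authors' procedure: the preference for unvisited vertices and all function names are assumptions made for the example.

```python
import random

def vertex_adjacency(faces):
    """Map each vertex index to the set of vertices it shares an edge with."""
    adj = {}
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            adj.setdefault(u, set()).add(v)
            adj.setdefault(v, set()).add(u)
    return adj

def random_walk(adj, start, length, rng):
    """Walk along mesh edges, preferring vertices that were not visited yet."""
    walk = [start]
    visited = {start}
    while len(walk) < length:
        neighbors = sorted(adj[walk[-1]])
        fresh = [v for v in neighbors if v not in visited]
        nxt = rng.choice(fresh if fresh else neighbors)
        walk.append(nxt)
        visited.add(nxt)
    return walk

# A tetrahedron: 4 vertices and 4 triangular faces.
faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
adj = vertex_adjacency(faces)
walk = random_walk(adj, start=0, length=6, rng=random.Random(0))
```

Each walk is an ordered sequence, so a sequence model can consume it even though the underlying mesh has no regular grid structure.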

Instead of randomly processing vertices, PolyNet [56] can efficiently learn and extract features from a polygon mesh representation of 3D objects using continuous polynomial convolution (PolyConv). A PolyConv is a polynomial function with learnable coefficients that develops continuous distributions about the features of vertices sharing the same polygonal face. These convolutional filters distribute appropriate weights among the vertices in the local patches formed by each vertex and its neighboring vertices on the surface of the polygon. This process is invariant with respect to the number of neighboring vertices, their permutations, the pairwise distances between them and the choice of central vertex in a local patch. Although mesh-based learning has gained significant popularity in the field of computer vision, it still poses some challenges. This can be attributed to the fact that 3D meshes do not conform to the grid-like structure that is typically used for representing data in convolutional neural networks (CNNs).

In order to address the challenges with meshes, recent advancements in this area have drawn inspiration from triangle meshes and curvature maps used in computer graphics. Muzahid et al. [57] recently introduced a new approach, called CurveNet, using curvature directions to capture geometric features from polygon meshes as inputs to a 3D CNN. The data structure of CurveNet enables object class label prediction by learning perceptually significant and salient features. Curvature directions provide detailed surface information from 3D objects, allowing the model to generate more precise and discriminative features for accurate object recognition. Similarly, Ran et al. [58] proposed a novel approach, Triangular RepSurf, that draws inspiration from triangle meshes in computer graphics. It can be computed by predefined geometric priors after surface reconstruction. RepSurf has two variants: Triangular RepSurf and Umbrella RepSurf. Triangular RepSurf represents each local region as a triangle mesh, while Umbrella RepSurf represents each local region as an umbrella-shaped structure [59].

Table 3 shows that RepSurf-U [58] outperforms all other mesh-based methods on the ScanObjectNN [13] dataset, achieving an OA of 84.6% and an mAcc of 81.9%. PolyNet (unsqueezed) [56] achieves the highest OA and mAcc scores on the ModelNet40 [12] dataset, with 92.4% and 82.86% respectively. CurveNet [57], in turn, achieves the highest F1 score of all methods on the SydneyUrbanObjects dataset, at 79.3%.

3.2 Projection-based methods

Projection-based methods are popular approaches for analyzing unstructured 3D point clouds. By projecting the point cloud onto multiple two-dimensional (2D) planes (or views), the data can be more effectively analyzed using standard image processing techniques. The resulting view-wise features can be collected and concatenated to produce a more precise classification of the point cloud’s shape. However, one significant challenge faced by projection-based methods is in combining multiple view-wise features into a distinct global representation that accurately captures the overall structure of the point cloud.
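As a rough illustration of a single projection, the sketch below renders a point cloud into a depth image by orthographic projection along the z axis. The function name, the fixed viewing direction and the coordinate bounds are assumptions for the example; multi-view methods render several such images from different viewpoints.

```python
def project_to_depth_image(points, resolution, bounds=(-1.0, 1.0)):
    """Orthographic projection along z: keep the largest z value per pixel."""
    lo, hi = bounds
    image = [[None] * resolution for _ in range(resolution)]
    for x, y, z in points:
        if not (lo <= x <= hi and lo <= y <= hi):
            continue  # point falls outside the image plane
        col = min(int((x - lo) / (hi - lo) * resolution), resolution - 1)
        row = min(int((y - lo) / (hi - lo) * resolution), resolution - 1)
        if image[row][col] is None or z > image[row][col]:
            image[row][col] = z
    return image

# Made-up points: the first two land in the same pixel.
points = [(-0.9, -0.9, 0.1), (-0.8, -0.9, 0.5), (0.9, 0.9, 0.3)]
image = project_to_depth_image(points, resolution=2)
```

Only one depth value survives per pixel, so points hidden behind others in a given view are lost, which is one reason several views are usually combined.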

Su et al. introduced a novel approach for processing point clouds called Multi-View Convolutional Neural Network (MVCNN) [60]. This approach involves representing point clouds as a collection of 2D images captured from multiple views obtained at different angles. Features are extracted from these different views and then combined into a global descriptor through max-pooling. However, one potential drawback of max-pooling is that it only retains the most important parts of each view, resulting in a loss of information. While MVCNN does not explicitly differentiate between various views, it is beneficial to have some understanding of the relationships between them.
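The view-pooling step can be illustrated with an element-wise maximum over per-view feature vectors. This is a minimal sketch with made-up feature values; in a real network these vectors would come from a CNN applied to each rendered view.

```python
def view_max_pool(view_features):
    """Element-wise maximum across per-view feature vectors."""
    return [max(channel) for channel in zip(*view_features)]

# Three hypothetical views, each described by a 4-dimensional feature vector.
views = [
    [0.2, 0.9, 0.1, 0.4],
    [0.7, 0.3, 0.1, 0.5],
    [0.1, 0.2, 0.8, 0.6],
]
global_descriptor = view_max_pool(views)
```

Each channel of the global descriptor keeps the value of a single view and discards the others, which is the information loss noted above; the result also does not depend on the order of the views.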

One method that specifically attempts to establish relationships between views of a point cloud is the Group-view Convolutional Neural Network (GVCNN) [61]. GVCNN splits the set of views into groups based on their discrimination scores, thereby leveraging the relationship between them for better results. Other techniques such as Multi-View Harmonized Bilinear Pooling Network (MHBN) [62] use harmonized bilinear pooling to combine local convolutional features into a more compact and discriminative global descriptor. Meanwhile, Yang et al. proposed a method that exploits the inter-relationships between views and regions using a relation network to generate a more accurate 3D object representation [63]. Unlike earlier techniques, Wei et al. presented the View Graph Convolutional Network (View-GCN), which employs a directed graph to consider many views simultaneously [64]. Other strategies such as Dominant Set Clustering Network (DSCN) [65] and Learning Multi-View 3D Object Recognition (LMV3D) [66] have also been proposed to improve recognition accuracy.

Despite the popularity of methods that utilize raw point data such as PointNet [3], some projection-based methods have yielded promising classification results. For instance, Abdullah et al. recently introduced the Multi-View Transformation Network (MVTN), a differentiable module that predicts the optimal viewpoints for a multi-view network [67]. MVTN overcomes the static nature of existing projection-based techniques by utilizing adaptive viewpoints, which it learns to regress. These viewpoints are rendered with a differentiable renderer so that the task-specific network can be trained end-to-end. This yields the views that are most appropriate for the task at hand.

In another study, Wang et al. [68] presented a multi-view attention-convolution pooling network (MVACPN) framework using Res2Net [69] to extract features from several 2D views. MVACPN effectively resolves the issues of feature information loss caused by feature representation and detail information loss during dimensionality reduction by employing the attention-convolution pooling method.

The results in Table 3 demonstrate that the MHBN [62] method outperformed other projection-based methods with the highest mean accuracy on the ModelNet40 [12] and ModelNet10 datasets, while [66] obtained the highest overall accuracy on ModelNet10 among all approaches.

The Stanford Bunny [70] model in different three-dimensional representations

3.3 Volumetric-based methods

Another approach to produce structured data for processing in traditional CNN architectures is to convert the point cloud into a regular 3D grid of cubic voxels using a process called voxelization. Each point in the point cloud is assigned to the closest voxel center in the 3D grid, resulting in a volumetric representation of the point cloud.
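A minimal voxelization sketch is given below. It assumes an axis-aligned grid with a user-chosen voxel size and origin, and it returns a sparse set of occupied cells rather than a dense array; the function name is hypothetical. Flooring the scaled coordinates places each point in the cell that contains it, whose center is the closest voxel center.

```python
import math

def voxelize(points, voxel_size, origin=(0.0, 0.0, 0.0)):
    """Return the set of occupied voxel indices (i, j, k)."""
    occupied = set()
    for x, y, z in points:
        idx = (
            math.floor((x - origin[0]) / voxel_size),
            math.floor((y - origin[1]) / voxel_size),
            math.floor((z - origin[2]) / voxel_size),
        )
        occupied.add(idx)
    return occupied

# Made-up points: the first two fall into the same voxel.
points = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (0.9, 0.1, 0.1), (1.2, 0.1, 0.1)]
grid = voxelize(points, voxel_size=0.5)
```

Points that share a voxel collapse into a single occupied cell, so detail finer than the voxel size is lost.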

Wu et al. [12] introduced 3D ShapeNets, a convolutional deep belief network that learns the distribution of points from diverse 3D shapes. The network represents the points as a probability distribution of binary variables on voxel grids. Despite promising results, these methods struggle to scale to dense 3D data, as memory consumption increases cubically with resolution.
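As a rough illustration of the cubic growth, assume a dense grid with R voxels per axis and one stored value per voxel (both simplifying assumptions). The number of cells N(R) is then:

$$N(R) = R^3, \qquad N(32) = 32{,}768, \quad N(64) = 262{,}144, \quad N(128) = 2{,}097{,}152, \qquad \frac{N(2R)}{N(R)} = 8$$

Doubling the resolution therefore multiplies the number of cells, and with it the memory of a dense grid, by eight.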

To enable hierarchical learning of features, Ghadai et al. in [71] presented a flexible multi-level unstructured voxel representation of spatial data in their MRCNN framework. This method uses a multi-level voxelization framework, described as a binary occupancy grid at two levels. This is done to represent a 3D object with two distinct user-specified resolutions of voxel grids. Despite the lack of structure in the multi-level data representation, MRCNN can successfully learn features, allowing for more effective and efficient 3D shape classification.

To exclusively take voxelized data as input in an end-to-end encoder-decoder CNN architecture, Cheng et al. suggested a similar technique called (AF)2-S3Net [72]. This method uses a multi-branch attentive feature fusion module to learn both global contexts and local features in the encoder. To promote generalizability, an adaptive feature selection module with feature map re-weighting is utilized on the decoder side to actively emphasize contextual information from a feature fusion module.

Table 3 illustrates that AF2M [72] achieved the highest overall accuracy on the ModelNet40 dataset. However, they did not report their mAcc result. On the other hand, VRN Ensemble [73] only provided their mAcc results for both ModelNet40 and ModelNet10 [12], which are highlighted in bold and underlined in the table as they represent the highest results for those datasets among all other approaches. Nevertheless, it is worth noting that none of the papers recorded their findings for the ScanObjectNN dataset.

3.4 Raw point-based methods

To address information loss and maintain point cloud details, raw point-based methods offer a promising alternative in point cloud processing. These methods operate directly on the raw point cloud data, avoiding the need for transformation into other representations. PointNet [3] pioneered this approach by consuming unordered point sets and achieving permutation invariance through symmetric functions. Raw point processing has been a major focus of recent models. Since a substantial body of work exists on raw point processing, we explore the different learning strategies employed in these methods in Sect. 4.

3.5 Hybrid methods

As discussed earlier, point cloud classification techniques that handle 3D input fall under three broad categories: projection-based, voxel-based, and point-based neural network (NN) models. All these approaches, however, are computationally inefficient. The memory usage and computation cost of voxel-based models grow cubically with input resolution. In point-based networks, the majority of the computational cost goes into structuring the sparse input points into a form suitable for the remainder of the network. This cost is spent on poorly localized memory accesses rather than on effective feature extraction. Approaches that combine a variety of input data modalities are known as hybrid methods. These methods are relatively new, and an increasing number of researchers are investigating various challenging questions in this domain.

Integrating voxel-based learning with point-based learning into a unified framework has been the subject of recent developments in point cloud classification. For example, PointGrid, presented in [74], is a hybrid network that combines the point and grid representations. To retrieve the specifics of local geometry, it uses a 3D CNN to learn the grid cells with fixed points. The PointGrid model employs the same transformation mechanism as VoxNet [75], but it can better describe scale changes, minimizes data loss, and takes up less memory.

The development of real-time algorithms for 3D point cloud classification is highly challenging due to the large size and complexity of point clouds. To overcome this challenge, Ben-Shabat et al. [76] utilized the 3D modified Fisher Vector (3DmFV) approach to convert an input point cloud into 3D grids, followed by CNNs to extract features and fully connected layers to classify them in real time. The 3DmFV method is an extension of the widely used 2D modified Fisher Vector (mFV) method for image classification.

Specifically, it extends the mFV method to 3D point clouds by encoding the gradient information of the 3D grids using a Gaussian mixture model (GMM) and computing the Fisher vector (FV) representation of the point cloud. In contrast to the 3DmFV approach, PVNet [77] uses an embedding network to project high-level global features collected from multi-view images into the subspace of point clouds, which are then blended with point cloud features using a soft attention mask. Finally, for fused features and multi-view features, a residual connection is used to achieve shape recognition. To further improve accuracy, You et al. proposed to leverage the relationship between a 3D point cloud and its multiple views through a relation score module in PVRNet [78].

In DSPoint [79], Zhang et al. introduced a dual-scale point cloud recognition approach that combines local features and global geometric architecture. Unlike conventional designs, DSPoint operates concurrently on voxels and points, extracting local and global features. The network disentangles point features through channel dimensions, enabling dual-scale processing. It utilizes pointwise convolution for fine-grained geometry parsing and voxel-wise global attention for long-range structural exploration. To align and blend the local–global modalities, a co-attention fusion module is designed for feature alignment, facilitating inter-scale cross-modality interaction by incorporating high-frequency coordinate information. In contrast, PointView-GCN [80] uses multi-level Graph Convolutional Networks (GCNs) to hierarchically aggregate shape features from single-view point clouds. It captures geometrical cues and multiview relations for 3D shape classification by leveraging partial point cloud data from multiple views.

Voxel-based models have regular data locality and can efficiently encode coarse-grained features. On the other hand, point-based networks preserve accurate location information with flexible fields. Inspired by this, Zhang et al. [81] proposed a hybrid point cloud learning architecture called PointVoxel Transformer. The authors used the Sparse Window Attention (SWA) module to gather coarse-grained local features from non-empty voxels. The module not only bypasses the expensive irregular data structuring and invalid empty voxel computation, but also obtains linear computational complexity with respect to voxel resolution. In another recent work by Yan et al., called PointCMT [82], both image and point cloud data are used to train the model for shape analysis tasks. This approach combines the advantages of both modalities, leveraging the rich texture information from images and the geometric structure from point clouds.

In [83], the authors introduced the Embedding-Querying (EQ-Paradigm), a unified approach for 3D point cloud understanding. EQ-Paradigm combines different task heads with existing 3D backbone architectures, offering advantages such as a unified framework for tasks like object detection, semantic segmentation, and shape classification. It seamlessly integrates with diverse 3D backbone architectures and efficiently handles large point clouds.

Table 3 reports the classification results of hybrid methods on various publicly available datasets. The best-performing method on the ModelNet40 dataset was PointView-GCN, which achieved an OA of 95.40%, while PointCMT achieved the highest mAcc and F1 scores among the hybrid methods on the ScanObjectNN dataset.

4 Learning strategies for point based methods in classification

Since the development of PointNet, numerous models have emerged that can process raw point data directly without information loss [3, 95,96,97]. These models employ diverse techniques and network architectures to handle unstructured data. In this section, we discuss the learning strategies that these models have adopted for processing raw points. We provide a detailed discussion of each category and highlight their key differences and commonalities. Generally, methods in this category can be divided into two groups depending on the type of supervision used during training. Figure 3 provides a comprehensive categorization of raw point-based approaches for point cloud shape classification.

Supervision in training. Supervision in point cloud processing refers to how labels are used when training a neural network. Training can be divided into two categories: supervised and unsupervised. Supervised methods use labeled point clouds to teach the model how to predict outputs for new point clouds. Unsupervised methods, on the other hand, identify patterns and structures in the input data without prior knowledge of the output. Both supervised and unsupervised methods are crucial in point cloud processing, depending on the availability and quality of labeled data. More details about supervised and unsupervised methods of raw point cloud processing are discussed in Sects. 4.1 and 4.2 respectively.

Table 4 presents a comprehensive comparison of raw point-based methods for 3D point cloud classification across various datasets. The methods are organized chronologically within their respective categories. The table includes the number of parameters reported by each paper and specifies whether the model used only the point cloud or also incorporated point features such as normals as input. The evaluation of each method’s performance is based on metrics such as overall accuracy (OA), mean accuracy (mAcc), and F1 score. Notably, the results for the Intra dataset are taken from [98].

4.1 Supervised training

Supervised learning for point clouds is a powerful approach for processing and analyzing 3D data. It involves training the system on labeled point clouds for tasks such as object classification, semantic segmentation, and registration [3, 99]. During training, the model's predictions are compared with the desired outputs and its parameters are updated accordingly, so that results become more accurate with each iteration. However, supervised learning requires large amounts of labeled data, which can be costly to obtain.

Supervised learning approaches can be classified into seven categories: pointwise MLP, hierarchical-based, convolution-based, RNN-based, graph-based, transformer-based, and other methods. These categories can be further grouped into feedforward and sequential training based on the model architecture and data processing method.

Feed-forward training It is an extensively used technique for processing point clouds, where the individual points of the point cloud are passed through multiple layers in a neural network to generate activation maps for successive layers. This allows the model to capture complex relationships by transforming the data through non-linear transformations. Based on the operations performed on points in each layer, this group encompasses the following methods: multilayer perceptron (MLP)-based, convolution-based, hierarchical-based, and graph-based architectures.

Sequential training In this type of training, the model is trained on a sequence of input data. In point cloud processing, the input is treated as ordered points or patches that are processed in sequence. Unlike feed-forward training, where data flows in a single pass from input to output, sequential training uses the output from one time step as the input for the next. This approach is beneficial in point cloud processing because it allows the model to process local patches in turn and predict features for the next point. Sequential training is commonly used in recurrent neural networks (RNNs) and transformer-based architectures designed to process sequential data.

Supervised learning is a crucial element of point cloud processing pipelines, particularly in cases where high accuracy is essential. In the following sections, we will provide an in-depth discussion of the various network architectures that are utilized for feature learning of individual points, with supervised learning being the primary technique.

4.1.1 Multi-layer perceptron (MLP) methods

This method is based on fully connected layers that process each point independently. The network takes a point cloud and applies a set of transforms and shared MLPs to generate features. These features are then aggregated to yield a global representation using max-pooling that describes the original input cloud. Another MLP classifies that global representation to produce output scores for each class.
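The pipeline described above (shared per-point layers followed by max-pooling) can be sketched in a few lines. This is an illustrative sketch only: it uses a single random linear layer with ReLU in place of trained MLPs, omits the input transforms and the final classifier, and all names and sizes are hypothetical. It is written in plain Python to stay dependency-free.

```python
import random

def shared_mlp(point, weights, biases):
    """One linear layer + ReLU applied to a single point; the same weights serve every point."""
    return [max(0.0, sum(w * x for w, x in zip(row, point)) + b)
            for row, b in zip(weights, biases)]

def global_feature(points, weights, biases):
    """Channel-wise max over all per-point features (a symmetric, order-independent function)."""
    per_point = [shared_mlp(p, weights, biases) for p in points]
    return [max(channel) for channel in zip(*per_point)]

rng = random.Random(0)
n, m = 16, 8  # n points, m feature channels (illustrative sizes)
points = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(n)]
weights = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(m)]
biases = [rng.gauss(0, 1) for _ in range(m)]

# Shuffling the input order must not change the global descriptor.
shuffled = points[:]
rng.shuffle(shuffled)
feat_a = global_feature(points, weights, biases)
feat_b = global_feature(shuffled, weights, biases)
```

Because max-pooling is symmetric, `feat_a` and `feat_b` are identical, which is the property that lets this family of networks consume unordered point sets.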

PointNet [3], in particular, uses multiple MLP layers to learn pointwise features independently and a max-pooling layer to extract global features. The local structural information between points cannot be captured since features are learned independently for each point in PointNet. As a result, Qi et al. presented PointNet++ [4], a hierarchical network that captures complex geometric patterns in the neighborhood of each point. PointNet++ is inspired by standard CNNs, which use a stack of convolutional layers to capture features at different scales. The points within a sphere centered at x are defined as the local region of the point x. In particular, one set abstraction level contains a sampling layer, a grouping layer to identify local regions, and a PointNet layer. PointNet [3] and PointNet++ [4] prompted a lot of follow-up work due to their easy implementation and promising performance. Mo-Net [100] has a similar design to PointNet, but it takes a fixed collection of moments as input. SRINet [101] uses a PointNet-based backbone to extract a global feature and graph-based aggregation to extract local features after projecting a point cloud to generate rotation invariant representations.
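The sampling and grouping layers of a set abstraction level can be illustrated as follows. The sketch assumes farthest point sampling for choosing region centers and a fixed-radius ball query for grouping; the radius, the number of centers, and the function names are illustrative choices, and the PointNet layer that would process each region is left out.

```python
import math
import random

def farthest_point_sampling(points, k, start=0):
    """Greedily pick k indices, each maximising the distance to the already chosen set."""
    chosen = [start]
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < k:
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        chosen.append(nxt)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[nxt]))
    return chosen

def ball_query(points, center, radius):
    """Indices of all points inside the sphere of the given radius around center."""
    return [i for i, p in enumerate(points) if math.dist(p, center) <= radius]

rng = random.Random(0)
cloud = [[rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
centroids = farthest_point_sampling(cloud, k=8)
regions = [ball_query(cloud, cloud[c], radius=0.3) for c in centroids]
```

Each entry of `regions` is the local region of one sampled center; stacking several such levels yields progressively coarser point sets with larger receptive fields.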

Yan et al. [7] used an Adaptive Sampling (AS) module in their work, PointASNL, to adaptively adjust the coordinates and attributes of points. They sampled these points using the Farthest Point Sampling (FPS) technique and presented a local-non-local (L-NL) module to capture the local and long-range relationships of the sampled points. Duan et al. [97] proposed a Structural Relational Network (SRN) that uses an MLP to learn structural relational properties between distinct local structures. Lin et al. [102] used a lookup table to speed up the inference process for both the input and function spaces learned by PointNet. On a consumer-grade computer, the reported inference time on the ModelNet and ShapeNet datasets is 1.5 ms, which is 32 times faster than PointNet. In RPNet, Ran et al. [103] studied the capabilities of local relation operators and developed the group relation aggregator (GRA), a scalable and efficient module for learning from both low-level and high-level relations. The module calculates a group feature by aggregating the features of inner-group points, weighted by geometric and semantic relations. RPNet has approximately a third of the parameters of PointNet++ and runs about twice as fast.

A simplified architecture of PointNet [3] where parameters n and m denote point number and feature dimension, respectively

Previous works have mainly focused on advanced local geometric extractors such as convolution, graphs, and other mechanisms to capture 3D geometries. However, these methods can lead to increased computational cost and memory usage. To address this challenge, Ma et al. [104] developed PointMLP, a pure residual MLP network that does not rely on “complex” local geometrical extractors. Despite this simplicity, PointMLP performs well due to highly optimized MLPs. The authors also developed a lightweight local geometric affine module that adaptively transforms the point features in a local region to boost efficiency and generalization ability. PointMLP is reported to train two times faster and run inference seven times faster than contemporary models. PointNeXt [105] addresses the limitations of PointNet++ [4] through a systematic study of model training and scaling strategies. The authors add separable MLPs and an inverted residual bottleneck design to PointNet++ to facilitate effective and efficient model scaling. In PointStack [106], the authors proposed a method that utilizes multi-resolution features and learnable pooling to extract meaningful features from point cloud data. The multi-resolution features capture the underlying structure of the point cloud at different scales, while the learnable pooling enables the system to dynamically adjust the pooling operation based on the features.

Table 4 shows that PointStack achieves the best results for both the ModelNet 40 and ScanObjectNN datasets, while PointASNL achieves the best result for the ModelNet 10 dataset, and PointNet++ for the Intra dataset among all the MLP-based methods.

4.1.2 Convolutional methods

The architecture of convolutional networks emulates biological processes and is closely related to the organization of the visual cortex in animals. In this architecture, each cortical neuron primarily responds to inputs within its receptive field. Multiple neurons with overlapping receptive fields respond to the entire field at a particular location. To extract features from low level to high level, convolutional networks stack convolution layers, rectified linear units, and pooling layers. Their strengths include shared weights, translation invariance, and feature extraction, as demonstrated in several works on datasets such as ApolloCar3D [107] and Semantic3D [17]. VoxNet [75] illustrated the use of 2D grid kernels for processing 3D point cloud data. However, due to the irregularity of point clouds, constructing convolution kernels for 3D point clouds is more challenging than for 2D images. Modern 3D convolution methods can be categorized as discrete or continuous based on the nature of the convolution kernel used.

Discrete convolution Discrete convolution for point cloud processing involves defining a convolutional kernel on a regular grid based on a set of surrounding points that are located within a certain radius from the center point. This technique leverages the structural properties of point clouds, which can be seen as sets of irregularly spaced points in a high-dimensional space. The weights of the kernel are associated with the offsets of these surrounding points with respect to the center point, and the convolution operation is performed by sliding the kernel over the input point cloud, multiplying the weights of the kernel with the corresponding features of the surrounding points, and summing the products. This process is repeated at each location of the point cloud, resulting in a new set of features that represent the convolved output.
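A minimal sketch of this idea is given below, assuming a 3×3×3 grid kernel laid over the ball around the center point, scalar point features, and mean pooling inside each occupied cell. These choices, and all names, are illustrative assumptions rather than the definition used by any specific method.

```python
import math

def discrete_point_conv(points, feats, center, radius, kernel):
    """One output value at `center` using a 3x3x3 grid kernel over the ball of given radius.

    `kernel` maps a cell index (i, j, k), each entry in {0, 1, 2}, to a scalar weight.
    """
    cells = {}
    for p, f in zip(points, feats):
        offset = [a - b for a, b in zip(p, center)]
        if math.sqrt(sum(o * o for o in offset)) > radius:
            continue  # point lies outside the kernel support
        # Map each offset component from [-radius, radius] to a cell index 0..2.
        idx = tuple(min(2, int((o + radius) / (2 * radius) * 3)) for o in offset)
        cells.setdefault(idx, []).append(f)
    # Weighted sum of the mean feature in every occupied cell.
    return sum(kernel[idx] * sum(fs) / len(fs) for idx, fs in cells.items())

kernel = {(i, j, k): 1.0 for i in range(3) for j in range(3) for k in range(3)}
kernel[(1, 1, 1)] = 2.0  # weight of the central cell
kernel[(2, 1, 1)] = 0.5  # weight of the cell in the +x direction
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.9, 0.0, 0.0), (5.0, 5.0, 5.0)]
feats = [1.0, 3.0, 5.0, 100.0]
value = discrete_point_conv(points, feats, (0.0, 0.0, 0.0), 1.0, kernel)
```

In this toy input the first two points share the central cell, the third falls into the +x cell, and the fourth lies outside the radius and is ignored. Repeating the operation at every point gives the convolved feature set.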

Pointwise-CNN [108] employs a unique approach to define convolutional kernels on each grid by transforming non-uniform 3D point clouds into uniform grids, with weights assigned to all points that fall within the same grid. The output of the current layer is determined by computing the mean features of all the nearby points on the same grid, which are weighted and aggregated from all the grids. Meanwhile, Mao et al. [109] introduced the interpolated convolution operator InterpConv to assess the geometric relations between input point clouds and kernel-weight coordinates by superimposing point features onto neighboring discrete convolutional kernel-weight coordinates.

To achieve rotation invariance, Zhang et al. [110] introduced the RIConv operator, which transforms convolution into 1D using a clustering approach on low-level rotation invariant geometric features. Another approach proposed by Zhang et al. [111] is ShellConv, an efficient permutation invariant convolution for point cloud deep learning. It partitions the local point neighborhood into concentric spherical shells and extracts representative features based on the statistics of the points inside each shell. ShellNet [111] utilizes ShellConv as its core convolution, enabling it to handle larger receptive fields with fewer layers. However, it may not capture long-range point relations and overlooks certain patterns present in point cloud structures. To overcome this limitation, Point-PlaneNet [112] introduces a new neural network that leverages spatial local correlations by considering the distance between points and planes. The proposed PlaneConv operation learns a set of planes in \(\mathbb{R}^n\) space, allowing it to extract local geometric features from point clouds. Additionally, DeltaConv [113] introduces anisotropic filters on point clouds by combining geometric operators from vector calculus, which allows the network to be split into scalar and vector streams that can expressively represent directional information.

Continuous convolution Current 3D convolution methods differ from traditional discrete convolution by defining convolutional kernels in a continuous space. Instead of fixed-size kernels sliding over a grid structure as in 2D convolution, these methods assign weights to neighboring points based on their spatial distribution relative to the center point. This allows for a more flexible and detailed feature extraction process, as 3D convolution can be seen as a weighted sum over a subset of points in continuous space.
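The weighted-sum view can be sketched as follows. In learned methods the kernel is typically a trainable function (for example an MLP) of the relative offset; here a fixed Gaussian stands in for it, which is purely an assumption for illustration, as are the names and the scalar features.

```python
import math

def gaussian_kernel(offset, sigma=0.5):
    """Hypothetical continuous kernel: the weight depends only on the offset to the center."""
    return math.exp(-sum(o * o for o in offset) / (2 * sigma ** 2))

def continuous_point_conv(points, feats, center, radius, kernel=gaussian_kernel):
    """Weighted sum of neighbor features, with weights evaluated at continuous offsets."""
    out = 0.0
    for p, f in zip(points, feats):
        offset = [a - b for a, b in zip(p, center)]
        if math.sqrt(sum(o * o for o in offset)) <= radius:
            out += kernel(offset) * f
    return out

points = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
feats = [2.0, 4.0, 100.0]
value = continuous_point_conv(points, feats, (0.0, 0.0, 0.0), radius=1.0)
```

Unlike the grid-based variant, no quantization of neighbor positions is involved: any offset inside the radius receives its own weight.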

In RS-CNN [115], the convolutional network is based on relation-shape convolution. The input to an RS-Conv kernel is a local subset of points around a given point. An MLP learns the mapping from low-level relations, such as Euclidean distance and relative location, to high-level relations between points in the local subset. Using a collection of learnable kernel points, Thomas et al. [116] proposed both rigid and flexible Kernel Point Convolution (KPConv) operators for 3D point clouds. Liu et al., in their work DensePoint [117], described convolution as a Single-layer Perceptron (SLP) with a nonlinear activator. To fully exploit the contextual information, features are learned by concatenating the features of all previous layers. ConvPoint [118] divides the convolution kernel into spatial and feature components. The positions of the spatial part are chosen at random from a unit sphere, and the weighting function is trained using a basic MLP. In PointConv [119], convolution is defined as a Monte Carlo estimate of a continuous 3D convolution with respect to importance sampling. The procedure uses a weighting function and a density function, which are implemented with MLP layers and kernelized density estimation. The 3D convolution is further reduced to two operations, matrix multiplication and 2D convolution, in order to improve memory and computational efficiency. With the same parameter settings, its memory consumption can be reduced by a factor of 64.

Different types of point convolution [114]

Several methods have been proposed to handle large-scale point cloud scenes using feature fusion, such as SpiderCNN [120]. SpiderCNN uses a unit called SpiderConv that extends convolution operations on regular grids by combining a step function with a Taylor expansion defined on the k nearest neighbors. The Taylor expansion captures the inherent local geometric fluctuations by interpolating arbitrary values at the vertices of a cube, whereas the step function catches the coarse geometry by storing the local geometric distance. PCNN [121] is another 3D convolution network that utilizes the radial basis function for processing point clouds. Its point convolution operator is derived from extension operators that enable the transformation of point data into a continuous function space. SPHNet [122], which is based on PCNN [121], achieves rotation invariance by integrating spherical harmonic kernels during volumetric function convolution.

Designing efficient CNNs for point cloud analysis is a challenging task, requiring a delicate trade-off between accuracy and speed. Although CNNs have achieved remarkable success in image and pattern recognition, increasing the network complexity often results in decreased speed. This challenge is further amplified when dealing with point clouds, as they can contain a large number of points with varying densities.

Table 4 includes models from both discrete and continuous convolution methods. The results indicate that DeltaNet attained the highest overall accuracy (OA) on both the ModelNet40 and ScanObjectNN datasets. DensePoint, on the other hand, achieved the best OA on the ModelNet10 dataset. Moreover, PointConv exhibited the highest F1 score on the Intra dataset compared to other convolution-based methods.

4.1.3 Hierarchical methods

Hierarchical data structures like kd-trees and octrees are commonly employed in point cloud processing to construct networks. These networks represent the point cloud in a structured manner and learn features hierarchically, from leaf nodes to root nodes. By partitioning the point cloud into subsets of points at different levels of detail, these methods allow the model to capture local details at lower levels and global context at higher levels. As a result, these methods are effective in reducing the computational complexity of point cloud processing tasks.
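The leaf-to-root feature flow can be sketched with a small k-d tree. The sketch assumes median splits along a cycling axis and uses a channel-wise max as a stand-in for the learned per-node functions of actual networks; the dictionary layout and the names are hypothetical.

```python
def build_kdtree(points, depth=0, leaf_size=1):
    """Recursively split points at the median along a cycling axis."""
    if len(points) <= leaf_size:
        return {"points": points}
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return {
        "axis": axis,
        "left": build_kdtree(pts[:mid], depth + 1, leaf_size),
        "right": build_kdtree(pts[mid:], depth + 1, leaf_size),
    }

def aggregate(node):
    """Bottom-up feature: leaves pool their points, parents combine their two children."""
    if "points" in node:
        return [max(c) for c in zip(*node["points"])]
    left, right = aggregate(node["left"]), aggregate(node["right"])
    return [max(a, b) for a, b in zip(left, right)]

cloud = [(0, 1, 2), (3, -1, 0), (1, 5, 1), (2, 2, 9)]
tree = build_kdtree(cloud)
root_feature = aggregate(tree)
```

Intermediate nodes summarize progressively larger subsets of the cloud, so features near the leaves describe local detail while the root feature describes the whole shape.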

In their paper, Lei et al. [123] introduced an octree-guided CNN with spherical convolutional kernels applied at each layer corresponding to the octree layers. In contrast to OctNet [124], which relies on octree data structures, Kd-Net [125] utilizes multiple K-d trees with different splitting directions, with non-leaf node representations computed using an MLP. Parameter sharing based on the splitting type of each node enables Kd-Net to efficiently learn hierarchical features while managing memory consumption.

3DContextNet [126] utilizes a balanced K-d tree to learn and aggregate features, leveraging both local and global contextual cues. MLPs are employed to model the relationships between positions, allowing feature learning at each level. The non-leaf nodes compute features from their child nodes using an MLP and max pooling, and this process continues up to the root node, whose feature is used for classification. SO-Net [127] establishes its structure through point-to-node k-nearest neighbor search and a Self-Organizing Map (SOM), ensuring permutation invariance. The SOM models the spatial distribution of the point cloud through the positions of its nodes, while individual point features are learned through fully connected layers. Pre-training with a point cloud auto-encoder is proposed to enhance network performance in various applications. However, this network may encounter limitations when processing large and complex scenes due to the massive amount of point cloud data involved.

DRNet [128] is another hierarchical network that learns local point features from the point cloud in different resolutions. The DRNet architecture consists of two branches: a Full-Resolution (FR) branch and a Multi-Resolution (MR) branch. The FR branch learns local point features from the full-resolution point cloud. The MR branch learns local point features from downsampled versions of the point cloud. The two branches are then fused to produce a final feature representation.

Table 4 shows that, among the hierarchical methods, SO-Net consistently outperformed all others across the assessed datasets.

4.1.4 Graph-based methods

Graph-based networks provide an alternative approach to analyzing point clouds by representing points as vertices in a graph connected by directed edges. These networks operate in either the spatial or spectral domain for feature learning. In the spatial domain, MLP-based convolutions are applied to spatial neighbors, and pooling generates coarsened graphs by aggregating neighboring features. In the spectral domain, convolutions are achieved through spectral filtering using the eigenvectors of the graph Laplacian matrix [129, 130]. Each vertex is assigned features like coordinates, intensities, or colors, while geometric properties between connected points are assigned to edges. Numerous graph-based approaches have been proposed for point cloud analysis, each with its unique method of generating and manipulating graphs in the feature space.
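For the spectral case, one widely used formulation avoids an explicit eigendecomposition by approximating the filter with Chebyshev polynomials of the graph Laplacian. The expressions below are a sketch of that standard formulation; the Gaussian edge weights, the symbols \(\sigma\), \(K\), and \(\theta_k\), and the choice of the normalized Laplacian are assumptions made for illustration and may differ from the exact definitions used by individual methods.

\[
w_{ij} = \exp\!\left(-\frac{\lVert p_i - p_j \rVert^2}{\sigma^2}\right) \ \text{for connected vertices } i, j,
\qquad
L = I - D^{-1/2} W D^{-1/2},
\qquad
\tilde{L} = \frac{2}{\lambda_{\max}} L - I
\]

\[
y = \sum_{k=0}^{K-1} \theta_k \, T_k(\tilde{L}) \, x,
\qquad
T_0(\tilde{L}) = I, \quad T_1(\tilde{L}) = \tilde{L}, \quad T_k(\tilde{L}) = 2 \tilde{L} \, T_{k-1}(\tilde{L}) - T_{k-2}(\tilde{L})
\]

Here \(W = (w_{ij})\) is the weighted adjacency matrix, \(D\) its diagonal degree matrix, \(\lambda_{\max}\) the largest eigenvalue of \(L\), \(x\) a feature signal on the vertices, and \(\theta_k\) the learnable filter coefficients. A filter of order \(K\) only mixes information between vertices that are at most \(K-1\) hops apart.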

PointWeb [131], based on PointNet++ [4], uses Adaptive Feature Adjustment (AFA) to improve point features in the local neighborhood context, generating a graph in the feature space that is dynamically modified after each layer. DGCNN [132] also generates a graph in the feature space, and an MLP is used for feature learning for each edge in EdgeConv’s core layer. Channel-wise symmetric aggregation is used for edge features associated with each point’s neighbors. In addition, LDGCNN [133] improves the performance of DGCNN [132] by removing the transformation network and linking the hierarchical features from different layers.
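An edge-feature layer in the spirit of EdgeConv can be sketched as follows. The sketch assumes a k-nearest-neighbor graph, an edge function that applies one linear layer with ReLU to the concatenation of the center feature and the neighbor offset, and channel-wise max as the symmetric aggregation; the random weights and all names are hypothetical.

```python
import math
import random

def knn(points, i, k):
    """Indices of the k nearest neighbors of point i (excluding i itself)."""
    order = sorted((j for j in range(len(points)) if j != i),
                   key=lambda j: math.dist(points[i], points[j]))
    return order[:k]

def edge_conv(points, k, weights):
    """For each point, max over its neighbors of ReLU(W * [x_i, x_j - x_i])."""
    out = []
    for i, xi in enumerate(points):
        edge_feats = []
        for j in knn(points, i, k):
            edge = list(xi) + [b - a for a, b in zip(xi, points[j])]
            edge_feats.append([max(0.0, sum(w * e for w, e in zip(row, edge)))
                               for row in weights])
        # Channel-wise symmetric aggregation over all edges of point i.
        out.append([max(ch) for ch in zip(*edge_feats)])
    return out

rng = random.Random(0)
cloud = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(10)]
weights = [[rng.gauss(0, 1) for _ in range(6)] for _ in range(4)]  # 4 output channels
features = edge_conv(cloud, k=3, weights=weights)
```

Feeding `features` into a second call (with a weight matrix of matching width) would rebuild the neighbor graph in feature space rather than in 3D space, which is the sense in which such graphs are dynamic.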

Liu et al. [134] presented a Dynamic Points Agglomeration Module (DPAM) based on graph convolution to reduce the process of point agglomeration that includes sampling, grouping, and pooling to a single step. This is accomplished by multiplying the agglomeration matrix and the points feature matrix. A hierarchical learning architecture is built by stacking multiple DPAMs based on the PointNet architecture. DPAM dynamically exploits the relationship between points and agglomerates points in a semantic space, as opposed to PointNet++’s hierarchical methodology [4]. On the other hand, KCNet [135] takes a different approach by learning features based on kernel correlation to exploit local geometric structures. By defining kernels as a collection of learnable points, KCNet characterizes the geometric types of local structures, and subsequently determines the affiliation between the kernel and a specific point’s neighborhood.

RGCNN [136] builds a graph by linking each point in the point cloud to all other points and updates the graph Laplacian matrix in each layer. The loss function includes a graph-signal smoothness prior to encourage similar features among nearby vertices. Alternatively, in PointGCN [137], a graph is constructed from a point cloud using k nearest neighbors, and each edge is weighted using a Gaussian kernel. Convolutional filters are designed in the graph spectral domain using Chebyshev polynomials. To capture both global and local properties of the point cloud, global pooling and multi-resolution pooling techniques are employed. Graph convolutional networks (GCNs) can surpass other point-based models by preserving data granularity and utilizing point interconnectivity. However, data structuring operations such as Farthest Point Sampling (FPS) and neighbor point querying consume a significant amount of time in point-based networks, limiting their speed and scalability.

To address this issue, Xu et al. [9] introduced Grid-GCN, a fast and scalable method for point cloud learning. Grid-GCN utilizes Coverage-Aware Grid Query (CAGQ), a data structuring technique that enhances spatial coverage and reduces theoretical temporal complexity by leveraging grid space efficiency. CAGQ achieves a 50% speedup compared to common sampling methods like FPS and Ball Query.

Additionally, Yang et al. [153] proposed PointManifold, a point cloud classification method based on graph neural networks and manifold learning. PointManifold employs various learning algorithms to embed point cloud features, enhancing the assessment of geometric continuity on the surface. By acquiring the point cloud nature in a low-dimensional space and combining it with features in the original 3D space, the representation capabilities and classification network performance are improved.

In [154], a novel method called Convolution in the Cloud (CIC) is proposed for learning deformable kernels in 3D graph convolution networks. CIC involves dynamically deforming a cloud of kernels to match the local structure of the point cloud. It consists of two stages: randomly sampling initial kernels and iteratively updating them based on a loss function that measures the discrepancy with the ground truth label. Meanwhile, Xu et al.’s Position Adaptive Convolution (PAConv) [155] presents a generic convolution procedure for 3D point cloud analysis. PAConv dynamically builds convolution kernels using self-adaptively learned weight matrices from point positions via the ScoreNet module. This data-driven approach allows PAConv to handle irregular and unordered point cloud data more effectively than traditional 2D convolutions. CurveNet, a proposition by Xiang et al. [156], enhances point cloud geometry learning through a novel aggregation strategy. CurveNet utilizes a curve grouping operator and a curve aggregation operator to generate continuous sequences of point segments and effectively learn features.

Table 4 presents a comparative analysis of multiple graph-based techniques for 3D point cloud classification. Notably, CurveNet [156] demonstrated remarkable performance with the highest OA of 94.20% on the ModelNet 40 dataset, outshining other graph-based methods. Meanwhile, Grid-GCN [9] demonstrated exceptional performance by securing the top OA and mAcc on the ModelNet 10 dataset among all methodologies evaluated.

4.1.5 Recurrent neural network-based methods

RNNs are popular for processing temporal data and have been applied in point cloud analysis to capture local context. These neural networks utilize their internal state to handle variable length sequences of inputs, making them well-suited for point cloud data. Various RNN-based techniques have been developed, highlighting the significance of local context in point cloud analysis.

RCNet [157] constructs a permutation-invariant network for 3D point cloud processing using regular RNN and 2D CNN. After partitioning the point cloud into parallel beams and sorting them along a specified dimension, each beam is input into a shared RNN. For hierarchical feature aggregation, the learnt features are used as an input to an efficient 2D CNN. RCNet-E is proposed to ensemble multiple RCNets with varied partitions and sorting directions to improve its description ability. Another RNN-based model, Point2Sequence [158], identifies correlations between distinct locations in local point cloud regions. To aggregate local region features, it treats features learnt from a local region at many scales as sequences and feeds these sequences from all local regions into an RNN-based encoder-decoder structure. Several other methods also learn from both 3D point clouds and 2D images.

According to Table 4, Point2Sequence achieves the highest overall accuracy on the ModelNet 40 dataset, while RCNet-E performs best on the ModelNet 10 dataset among the RNN-based methods.

4.1.6 Transformer-based methods

One of the most significant recent breakthroughs in natural language processing and 2D vision is the Transformer [209], which has demonstrated superior performance in capturing long-range relationships. The success of the Transformer has also led to notable improvements in point-based models through the use of self-attention. With the attention mechanism, the Transformer can weigh the relevance of each point to the others, enabling better feature extraction and discrimination. The development of Transformer-based architectures [160, 162] has greatly enhanced performance. Nevertheless, the bottleneck of these models remains the time-consuming operation of sampling and aggregating features from irregularly distributed points.
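How self-attention weighs the relevance of each point to the others can be sketched as follows. The sketch is a single-head scaled dot-product attention in which the learned query, key, and value projections are replaced by the identity, and positional encoding is omitted; both simplifications are assumptions made for brevity.

```python
import math
import random

def self_attention(feats):
    """Single-head scaled dot-product self-attention with identity projections."""
    d = len(feats[0])
    out = []
    for q in feats:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in feats]
        top = max(scores)
        exp = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(exp)
        attn = [e / total for e in exp]
        # Each output feature is an attention-weighted average of all input features.
        out.append([sum(w * v[c] for w, v in zip(attn, feats)) for c in range(d)])
    return out

rng = random.Random(0)
feats = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(6)]
out = self_attention(feats)
out_rev = self_attention(feats[::-1])  # same points in reversed order
```

Reordering the input only reorders the output in the same way (up to floating-point rounding), so the operator is permutation equivariant. The loop over all pairs of points also makes the quadratic cost of global attention visible.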

Point Attention Transformers (PATs) [159] learn high-dimensional features by encoding each point’s absolute and relative positions with respect to its neighbors. To extract hierarchical features, PATs utilize a trainable, permutation-invariant, and non-linear end-to-end Gumbel Subset Sampling (GSS) layer, and capture relationships between points using Group Shuffle Attention (GSA). This approach enables the model to capture local structures within each group while also considering the global context of the entire point cloud. Zhao et al. [160] proposed a similar model, the Point Transformer, which employs a self-attention module to extract spatial features from local neighborhoods around each point and encodes positional information. The network has a highly expressive Point Transformer layer, which is invariant to permutation and cardinality, making it well suited to point cloud processing.

Point Transformer V2 [166] is an enhanced version of the Point Transformer architecture for 3D point cloud processing. It introduces two innovations: grouped vector attention and partition-based pooling. Grouped vector attention reduces computational cost by performing attention only within groups of points, maintaining accuracy while learning long-range dependencies. Partition-based pooling improves accuracy on large point clouds by dividing them into smaller partitions and pooling features within each partition, enabling global feature learning with reduced computational load. Engel et al. [162] introduced another model called Point Transformer, which operates directly on unordered and unstructured point sets. The Point Transformer uses a local–global attention mechanism to capture spatial point relations and shape information, allowing it to extract both local and global aspects of the point cloud. SortNet, a component of Point Transformer, produces input permutation invariance by selecting points based on a learned score. The Point Transformer produces a sorted and permutation invariant feature list that can be utilized directly in standard computer vision applications.

Illustration of the transformer based encoder architecture [210]

Perceiver [163] is a scalable attention-based architecture for high-dimensional inputs, such as images, videos, and audio, that makes no domain-specific assumptions. It uses cross-attention and latent self-attention blocks to process inputs through a fixed-dimensional latent bottleneck. The 3D medical point Transformer (3DMedPT) [98] is an attention-based model designed specifically for medical point clouds, used to examine the complex biological structures that are vital for disease detection and treatment. Because insufficient training samples of medical data can lead to poor feature learning, 3DMedPT enhances feature representations with an attention module that captures local and global feature interactions, position embeddings for precise local geometry, and Multi-Graph Reasoning (MGR) for global knowledge transmission.

Similarly, Berg et al. [164] propose the two-stage Point Transformer-in-Transformer (Point-TnT) technique, which combines both local and global attention mechanisms by producing patches of local features via a sparse collection of anchor points. Self-attention can then be used on both the points within the patches and the patches themselves, resulting in a highly effective method for processing unstructured point cloud data. LCPFormer [167] is a recent transformer-based architecture for 3D point cloud analysis. LCPFormer introduces a novel local context propagation (LCP) module that enables the model to learn long-range dependencies between points in a point cloud. The LCP module works by first dividing the input point cloud into local regions. Then, it propagates the features of each local region to its neighboring local regions. This allows the model to learn long-range dependencies between points that are not directly connected.

To learn local and global shape contexts with reduced complexity, Park et al. introduced SPoTr [168], a self-positioning mechanism that works by first randomly selecting a subset of points from the input point cloud. These points are used to create a local coordinate system, into which the remaining points are projected. This allows the model to learn local shape contexts without the need for global attention. Wu et al. [170] proposed Attention-Based Point Cloud Edge Sampling (APES), which samples points from a point cloud based on their importance to the outline of the object. APES builds on the self-attention mechanism used in transformer models: it computes the attention weights between each point and all other points in the point cloud, and the points with the highest weights are then selected to form a new, downsampled point cloud.

Table 4 presents a comparison of various pointwise transformer-based methods on different datasets. Here, PTv2 [166] achieved the highest OA and mAcc on the ModelNet 40 dataset, while SPoTr [168] showed best performance on ScanObjectNN dataset.

4.1.7 Other methods

Apart from the methods discussed earlier, there are several techniques that cannot be neatly assigned to a specific class. These methods combine multiple mechanisms to learn rich representations of point clouds, enabling them to capture intricate patterns and relationships. In this section, we review these methods, which cut across the categories above, and give an overview of each.

With prior knowledge of kernel positions and sizes, RBFNet [171] aggregates features from sparsely distributed Radial Basis Function (RBF) kernels to explicitly characterize the spatial distribution of points. PointAugment, an auto-augmentation framework introduced by Li et al. [211], optimizes and augments point cloud data by automatically learning each input sample’s shape-wise transformation and pointwise displacement. Prokudin et al. [212] transform the point cloud into a vector with a short fixed length by encoding the point cloud as minimal distances to a uniformly distributed basis point set sampled from a unit ball. Finally, common machine learning techniques are applied to produce the encoder representation. Cheng et al. [175] present the Point Relation-Aware Network (PRA-Net), comprising two modules: intra-region structure learning (ISL) and inter-region relationship learning (IRL). The ISL module can adaptively incorporate local structural information into point features, while the IRL module dynamically and effectively preserves inter-region relations using a differentiable region partition method and a representative point-based strategy.
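The fixed-length encoding based on minimal distances to a basis point set can be sketched as follows. The sketch assumes the input cloud has already been normalized to the unit ball and uses rejection sampling to draw the basis; the sizes and names are illustrative.

```python
import math
import random

def sample_unit_ball(n, rng):
    """Rejection-sample n points uniformly from the unit ball."""
    pts = []
    while len(pts) < n:
        p = [rng.uniform(-1, 1) for _ in range(3)]
        if sum(c * c for c in p) <= 1.0:
            pts.append(p)
    return pts

def bps_encode(cloud, basis):
    """Fixed-length vector: for each basis point, the distance to its nearest cloud point."""
    return [min(math.dist(b, p) for p in cloud) for b in basis]

rng = random.Random(0)
basis = sample_unit_ball(32, rng)   # shared by all clouds that are to be compared
cloud = sample_unit_ball(500, rng)  # stand-in for a normalized input cloud
code = bps_encode(cloud, basis)
```

The length of `code` depends only on the number of basis points, not on the size or ordering of the input cloud, so the vector can be fed to standard learning models that expect fixed-size input.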

FG-Net [173] proposes a comprehensive deep learning framework for large-scale point cloud understanding that achieves accurate and real-time performance with a single GPU. The network incorporates a noise and outlier filtering mechanism, utilizes a deep CNN to exploit local feature correlations and geometric patterns, and employs efficient techniques such as inverse density sampling and feature pyramid-based residual learning to address efficiency concerns. Another recent work in this area is proposed by Xu et al. in GDANet [176]. It introduces the Geometry-Disentangled Attention Network, which dynamically disentangles point clouds into contour and flat parts of 3D objects. It utilizes the disentangled components to generate holistic representations and applies different attention mechanisms to fuse them with the original features. The network also captures and refines 3D geometric semantics from the disentangled components to supplement local information.

PointSCNet [177] captures the geometrical structure and local region correlation of a point cloud using three key components: a space-filling curve-guided sampling module, an information fusion module, and a channel-spatial attention module. The sampling module selects points with geometrical correlation using Z-order curve coding. The information fusion module combines structure and correlation information through a correlation tensor and skip connections. The channel-spatial attention module emphasizes key points and feature channels to improve the network's representation. Lu et al. [178] proposed APP-Net, a network that uses auxiliary points and push and pull operations to classify point cloud data efficiently. The auxiliary points guide the network’s attention to important regions, while the push and pull operations allow for efficient computation and improved feature representation.
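To make the idea of Z-order curve coding concrete, the sketch below quantizes points to an integer grid, interleaves the coordinate bits into a Morton code, and picks points at even intervals along the resulting curve. The quantization resolution and the evenly spaced selection rule are assumptions chosen for illustration; they are not PointSCNet's exact sampling procedure.

```python
import numpy as np


def quantize(cloud, bits=10):
    """Map coordinates to integers in [0, 2**bits - 1] (hypothetical helper)."""
    lo = cloud.min(axis=0)
    span = (cloud.max(axis=0) - lo).max()
    span = span if span > 0 else 1.0  # guard against a degenerate cloud
    scaled = (cloud - lo) / span  # values in [0, 1]
    grid = (scaled * (2 ** bits)).astype(np.int64)
    return np.minimum(grid, 2 ** bits - 1)


def morton_codes(grid, bits=10):
    """Interleave the bits of x, y, z so that x occupies the lowest bit."""
    codes = np.zeros(len(grid), dtype=np.int64)
    for b in range(bits):
        for axis in range(3):
            codes |= ((grid[:, axis] >> b) & 1) << (3 * b + axis)
    return codes


def z_order_sample(cloud, n_samples, bits=10):
    """Return indices of points picked at even intervals along the Z-order curve."""
    codes = morton_codes(quantize(cloud, bits), bits)
    order = np.argsort(codes, kind="stable")
    picks = np.linspace(0, len(cloud) - 1, n_samples).astype(np.int64)
    return order[picks]
```

Points that are adjacent in the sorted order tend to be close in space, which is why a space-filling curve can guide sampling toward spatially coherent selections.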

PointMeta [179] by Lin et al. is a unified meta-architecture for point cloud analysis. It abstracts the computation pipeline into four meta-functions: neighbor update, neighbor aggregation, point update, and position embedding. These functions enable learning of local and global features, point refinement, and encoding of spatial relationships. PointMeta offers flexibility and efficiency in designing point cloud analysis models. However, a detailed computational complexity analysis is not provided in the paper.

Table 4 shows that, among the models grouped under other methods, APP-Net achieved the highest overall accuracy (OA) of 94.00% on the ModelNet40 dataset. Across all models and methodologies, however, FG-Net achieved the highest mean accuracy (mAcc) at 93.10%. On the ScanObjectNN dataset, PRA-Net achieved the highest mAcc score, and GDANet achieved the highest OA score.

4.2 Unsupervised training

Unsupervised representation learning aims to learn useful and informative features from unlabeled data. For point cloud understanding, this means training deep neural networks to extract latent features from raw, unannotated point clouds. The approach has gained significant attention in recent years because it reduces the need for labeled data, and it has proved effective in areas including natural language understanding [213], object detection [214], graph learning [215], and visual localization [216]. Networks pre-trained on unlabeled data uncover latent features without human-defined annotations. Methods can be categorized into generative modeling, where synthetic point clouds are generated, and self-supervised learning, which involves predicting missing information from partially observed point clouds. This active research field holds promise for improving the accuracy and efficiency of point cloud processing tasks.

4.2.1 Generative model-based methods

Unsupervised approaches such as generative adversarial networks (GANs) [217] and autoencoders (AEs) [184] learn representations of the provided data [121]. AEs consist of an encoder, an internal representation, and a decoder, and are widely used for data representation and generation. They can capture point cloud irregularities and address sparsity during upsampling. GANs, on the other hand, consist of a generator and a discriminator, and learn to produce new, realistic data samples with statistics similar to those of the training set.

FoldingNet [180] is an end-to-end unsupervised deep autoencoder network that uses the concatenation of a vectorized local covariance matrix and point coordinates as its input. Hassani and Haley [184] proposed an unsupervised multi-task autoencoder to learn point and shape features, inspired by the Inception module [218] and DGCNN [132]. The encoder is built from multi-scale graphs. The decoder is built using three unsupervised tasks: clustering, self-supervised classification, and reconstruction, which are trained jointly with a multi-task loss.
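The FoldingNet-style input mentioned above, point coordinates concatenated with a vectorized local covariance matrix, can be sketched as follows. The neighborhood definition (k nearest neighbors including the point itself), the value of k, and the function name are assumptions for illustration only.

```python
import numpy as np


def local_covariance_features(cloud, k=16):
    """Build an (n, 12) array: xyz plus the flattened 3x3 local covariance."""
    n = cloud.shape[0]
    # Squared pairwise distances; brute force is fine for small clouds.
    d2 = ((cloud[:, None, :] - cloud[None, :, :]) ** 2).sum(axis=-1)
    # Indices of the k nearest neighbors of every point (the point itself included).
    knn = np.argsort(d2, axis=1)[:, :k]
    neighbors = cloud[knn]  # shape (n, k, 3)
    centered = neighbors - neighbors.mean(axis=1, keepdims=True)
    # Per-point covariance of the neighborhood, shape (n, 3, 3).
    cov = np.einsum("nki,nkj->nij", centered, centered) / k
    return np.concatenate([cloud, cov.reshape(n, 9)], axis=1)
```

The covariance entries summarize the local shape around each point (for example, whether the neighborhood is roughly planar or linear), giving the encoder more than raw positions to work with.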

Latent-GAN [182] is one of the first networks to use a GAN for raw point clouds. The authors discuss various methods, such as autoencoders, variational autoencoders (VAEs) [219], GANs, and flow-based models, that have been proposed for learning effective representations of 3D point clouds and generating new ones. 3DAAE [220] learns representations of 3D point clouds in an end-to-end manner. Its authors designed a 3D autoencoder that takes 3D data as input and produces a 3D output; the model first learns a latent space for 3D shapes and then applies adversarial training.

Figure: The general pipeline of unsupervised representation learning on point clouds. Neural networks are trained on unannotated point clouds using unsupervised learning, followed by transfer of the learned representations to downstream tasks for network initialization. Pre-trained networks can then be fine-tuned with a small amount of annotated task-specific point cloud data [221].

3D point-capsule networks [122] have been developed to address the sparsity issue in point clouds while preserving their spatial arrangements. This network extends 2D capsule networks to the 3D domain and uses an autoencoder to handle the sparsity of point clouds. In contrast, 3DPointCapsNet [185] incorporates pointwise MLP and convolutional layers to extract point-independent features and employs several max-pooling layers to derive a global latent representation. Unsupervised dynamic routing is then used to learn representative latent capsules. In addition, Pang et al. [195] introduced an approach that uses masked autoencoders for self-supervised learning on point clouds. They addressed challenges related to point cloud properties, such as location information leakage and uneven density, by dividing the input into irregular patches, applying random masking, and using an asymmetric design with a shifting mask token operation. This enables a transformer-based autoencoder to learn latent features from unmasked patches and reconstruct the masked ones.
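The patch division and random masking steps of a masked autoencoder for point clouds can be sketched as below. The text only states that the input is divided into irregular patches and randomly masked; the use of farthest point sampling for patch centers, k-nearest-neighbor grouping, and the particular patch count, patch size, and mask ratio are assumptions made for this illustration.

```python
import numpy as np


def farthest_point_sampling(cloud, n_centers, seed=0):
    """Greedily pick indices of points that are far apart (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    chosen = [int(rng.integers(len(cloud)))]
    dist = np.linalg.norm(cloud - cloud[chosen[0]], axis=1)
    for _ in range(n_centers - 1):
        nxt = int(dist.argmax())
        chosen.append(nxt)
        # Track each point's distance to its nearest chosen center.
        dist = np.minimum(dist, np.linalg.norm(cloud - cloud[nxt], axis=1))
    return np.array(chosen)


def make_patches(cloud, n_patches=32, patch_size=16):
    """Group points into patches around sampled centers."""
    centers = cloud[farthest_point_sampling(cloud, n_patches)]
    d = np.linalg.norm(centers[:, None, :] - cloud[None, :, :], axis=-1)
    idx = np.argsort(d, axis=1)[:, :patch_size]
    # Express each patch relative to its center so it carries only local shape.
    patches = cloud[idx] - centers[:, None, :]
    return centers, patches


def random_mask(n_patches, mask_ratio=0.6, seed=0):
    """Return a boolean array in which True marks a masked patch."""
    rng = np.random.default_rng(seed)
    n_masked = int(round(mask_ratio * n_patches))
    mask = np.zeros(n_patches, dtype=bool)
    mask[rng.permutation(n_patches)[:n_masked]] = True
    return mask
```

In such a setup the encoder would see only `patches[~mask]`, while the decoder would be asked to reconstruct `patches[mask]`, which is the asymmetric arrangement the paragraph describes.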

Point clouds are discrete samples of a continuous three-dimensional surface. As a result, sampling variations of the same underlying 3D shape are inevitable. The conventional autoencoding paradigm forces the encoder to capture these sampling variations, because the decoder must reconstruct the original point cloud. Yan et al. [193] introduced the Implicit Autoencoder (IAE) to overcome this problem. By using an implicit decoder instead of a point cloud decoder, IAE generates a continuous representation that can be shared across multiple samplings of the same model. This allows the encoder to ignore sampling variations during reconstruction and focus on learning valuable features.

Point-BERT [196] extends the BERT pre-training concept to 3D point clouds using transformers. The network first divides a point cloud into many local point patches; a point cloud tokenizer based on a discrete Variational AutoEncoder (dVAE) then generates discrete point tokens containing significant local information. Some patches of the input point cloud are randomly masked, and the result is fed into the backbone transformers. The pre-training objective is to recover the original point tokens at the masked locations, under the supervision of the point tokens obtained by the tokenizer. In [200], Zhang et al. introduced Point-M2AE, a pre-training framework for learning 3D representations of point clouds. It uses a multi-scale masking strategy, pyramid architectures, local spatial self-attention, and complementary skip connections to capture detailed information and high-level semantics of shapes. The paper also highlights the significance of a lightweight decoder in Point-M2AE, which contributes to the reconstruction of point tokens and improves the quality of the shape representation. The work in [199] presents another method for learning representations of 3D point clouds using masked autoencoders: a portion of the points in the point cloud is masked out, and the masked autoencoder is trained to reconstruct the masked points.

To address the challenge of limited 3D datasets for learning high-quality 3D features, the authors of [204] proposed Image-to-Point Masked Autoencoders (I2P-MAE). By leveraging 2D pre-trained models, I2P-MAE reconstructs masked point tokens using an encoder-decoder architecture. It employs a 2D-guided masking strategy to focus on semantically important point tokens and capture key spatial cues for significant 3D structures. Through self-supervised pre-training and multi-view 2D feature reconstruction, I2P-MAE learns superior 3D representations from 2D pre-trained models. Dong et al. [203] proposed ACT (Autoencoders as Cross-Modal Teachers), a method for training 3D point cloud models using pretrained 2D image transformers. ACT involves two steps: pretraining a 2D image transformer on a large image dataset and fine-tuning it on a 3D point cloud dataset. The fine-tuning process uses the 2D image transformer to generate a latent representation of the 3D point cloud, which is then used to train the 3D point cloud model.

4.2.2 Self-supervised methods

Self-supervised learning in point cloud processing leverages unannotated data to improve performance across various applications. Models can learn feature representations by incorporating geometric and topological priors, for example by training a model to predict local geometric properties, such as normals or curvatures, from the point positions.

Due to the complex nature of 3D scene understanding tasks and the large variations introduced by camera perspectives, illumination, occlusions, and other factors, effective and generalizable pre-trained models are not yet available. Huang et al. [192] address this problem by proposing a self-supervised Spatio-temporal Representation Learning (STRL) framework that learns from unlabeled 3D point clouds. STRL takes two temporally correlated frames, applies spatial data augmentation, and learns invariant representations in a self-supervised manner.

Occlusion Completion (OcCo) is an unsupervised pre-training method proposed by Wang et al. [190] that comprises three steps. The first step uses view-point occlusions to create masked point clouds. The second step reconstructs the occluded points, and the final step uses the encoder weights as the initialization for the downstream point cloud task. Sun et al. [191] developed a self-supervised learning technique called Mixing and Disentangling (MD) for learning 3D point cloud representations. The authors mix two input shapes and require the model to separate the original inputs from the mixed shape. This reconstruction task serves as the pretext optimization objective for self-supervised learning, and the disentangling process drives the model to mine geometric prior knowledge.
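The mixing step of a Mixing and Disentangling style pretext task can be sketched as follows. Taking half of the points from each shape and shuffling them is an assumption made for illustration, as are the function name and the returned origin labels; the disentangling network itself is not shown.

```python
import numpy as np


def mix_shapes(shape_a, shape_b, seed=0):
    """Mix two point clouds into one cloud with as many points as shape_a.

    Returns the mixed cloud and an origin label per point (0 for A, 1 for B).
    The labels are kept only to build the reconstruction targets; the model
    being trained would not see them.
    """
    rng = np.random.default_rng(seed)
    n = shape_a.shape[0]
    half = n // 2
    # Subsample each shape without replacement.
    idx_a = rng.choice(shape_a.shape[0], size=half, replace=False)
    idx_b = rng.choice(shape_b.shape[0], size=n - half, replace=False)
    mixed = np.concatenate([shape_a[idx_a], shape_b[idx_b]], axis=0)
    labels = np.concatenate([np.zeros(half, dtype=np.int64),
                             np.ones(n - half, dtype=np.int64)])
    # Shuffle so that point order gives no hint about the origin.
    perm = rng.permutation(n)
    return mixed[perm], labels[perm]
```

A model trained on this pretext task would receive only the mixed cloud and be asked to reconstruct the two original shapes, which requires it to rely on geometric priors rather than on point order.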

Xue et al. [208] introduced ULIP (Unified Language-Image-Point Cloud), a pre-training method for learning a unified representation of language, images, and point clouds for 3D understanding. ULIP learns a common embedding space for these modalities, enabling various 3D tasks. By leveraging the shared information about 3D objects, ULIP creates informative and discriminative representations. It uses a large-scale dataset of triplets, each containing language, image, and point cloud data describing the same object, and trains the model to predict the missing modality in each triplet. PointCaps [198] introduces a capsule network, a structured representation learning approach for point clouds. The method consists of two operations: learning local and global features of the point cloud using the capsule network, and subsequently classifying the point cloud into predefined classes. Qi et al. [207] propose ReCon (Contrast with Reconstruct), a self-supervised method for 3D representation learning. ReCon combines contrastive learning and generative pretraining in two stages. In the contrastive learning step, ReCon learns local and global features of 3D point clouds through pairwise comparisons. In the generative pretraining step, ReCon learns high-level features by generating data similar to the training set.

Point2vec [206] extends the data2vec [222] framework to self-supervised representation learning on point clouds and overcomes the limitation of leaking positional information during training, which allows data2vec-like pre-training to be applied effectively to point clouds. In response to the growing popularity of Large Language Models, Chen et al. introduced PointGPT [205], which extends the GPT concept to point clouds and addresses challenges such as their disorder properties and low information density. PointGPT pre-trains transformer models using a point cloud auto-regressive generation task. The method employs a dual masking strategy in the extractor-generator-based transformer decoder, capturing dependencies between points and generating coherent and realistic point clouds.

Table 4 presents results for both generative model-based and self-supervised methods. Among all models across these methodologies, PointGPT-L achieved the highest OA on both the ModelNet40 and ScanObjectNN datasets.

5 3D point cloud semantic segmentation

The task of 3D point cloud segmentation requires a comprehensive understanding of both the overall geometric structure and the specific properties of each individual point. Depending on the level of detail required, 3D point cloud segmentation techniques can be broadly classified into three categories: semantic segmentation at the scene level, instance segmentation at the object level, and part segmentation at the part level. In this paper, we focus exclusively on semantic segmentation.