Abstract

Foreground segmentation of moving regions in image sequences is a fundamental step in many vision systems including automated video surveillance, human-machine interface, and optical motion capture. Many models have been introduced to deal with the problems of modeling the background and detecting the moving objects in the scene. One of the successful solutions to these problems is the use of the well-known adaptive Gaussian mixture model. However, this method suffers from some drawbacks. Modeling the background using the Gaussian mixture implies the assumption that the background and foreground distributions are Gaussians which is not always the case for most environments. In addition, it is unable to distinguish between moving shadows and moving objects. In this paper, we try to overcome these problem using a mixture of asymmetric Gaussians to enhance the robustness and flexibility of mixture modeling, and a shadow detection scheme to remove unwanted shadows from the scene. Furthermore, we apply this method to real image sequences of both indoor and outdoor scenes. The results of comparing our method to different state of the art background subtraction methods show the efficiency of our model for real-time segmentation.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Over the last decade, automatic segmentation of foreground from background in video sequences has attracted lots of attention in computer vision [1–3]. Foreground segmentation is often used as the primary step in video surveillance [4–6], optical motion capture [7, 8], and multimedia processing [9, 10] to model the background and to detect the moving objects in the scene. Background subtraction involves the extraction of a background image which does not include any moving object, reference image, then subtracting each new frame from this image and thresholding the result to highlight regions of non-stationary objects. Normally, video surveillance systems can be employed in two kinds of environments: controlled and uncontrolled. Monitoring systems in controlled or indoor environments (i.e., airports, warehouses, and production plants) are easier to implement as they do not depend on weather changes. Uncontrolled environment is used to refer to outdoor scenes where illumination and temperature changes occur frequently, and where various atmospheric conditions can be observed. Normally, when developing background subtraction algorithms, there are two major problems that must be taken into consideration, namely, robustness and adaptation. These methods should be robust to illumination and weather changes, as well as able to detect addition, occlusion, and removal of objects in the scene. To take into account these problems of robustness and adaptation, many background modeling methods have been developed (a complete detailed survey can be found in [11]).

In the past, computational barriers have limited the complexity of real-time video processing applications. As a consequence, most systems were either too slow to be practical, or succeeded by restricting themselves to very controlled situations. Recently, faster computers have enabled researchers to consider more complex, robust models for real-time analysis of streaming data. These new methods allow researchers to begin modeling real-world processes under varying conditions. Most recent methods assume that the images of the scene without the intruding objects exhibit some regular behavior that can be well described by a statistical model. If we have a statistical model of the scene, an intruding object can be detected by spotting the parts of the image that do not fit the model. In the majority of these methods, a common bottom-up approach has been applied to construct a probability density function for each pixel separately. Its idea is to segment the foreground moving objects by constructing over time a mixture model for each pixel and deciding, in a new input frame, whether the pixel belongs to the foreground or the background [12, 13]. Among the vast amount of approaches that have been proposed to accomplish this task, adaptive Gaussian mixture models (GMMs) [12, 14] have proven their outstanding suitability in several computer vision application [15] and particularly in the surveillance domain because of their ability to achieve many of the requirements of a surveillance system, e.g., adaptability and multimodality, in real time with low memory requirements. GMMs model the history of each pixel by a mixture of K Gaussian distributions. In [16], the authors implemented a pixel-wise EM framework for detection of vehicles by attempting to explicitly classify the pixel values into three separate predetermined distributions corresponding to the road color, the shadow color, and colors corresponding to vehicles. Stauffer et al. [12] generalized this idea by implementing online K-means approximation algorithm for modeling each pixel using a mixture of K Gaussians, where K was chosen in the range (3–5) depending on the computational power of the machine. In [14] the use of a negative prior evidence was introduced to discard the components that are not supported by the data, therefore being able to automatically select the number of components of the mixture used for each pixel. In [17] the use of an adaptive learning rate calculated for each Gaussian at every frame was proposed which led to an improved segmentation performance compared to the standard method. However, these methods have some drawbacks. Modeling the background using the GMM implies the assumption that the background and foreground distributions are Gaussians which is not always the case as argued by [18]. Figure 1 shows the probability density function of a pixel throughout a video. From this figure we can notice that the distribution is not symmetrical. Applying the GMM, we can observe its inefficiency in modeling the data. To overcome these problems, some researchers have shown that the generalized Gaussian mixture (GGM) can be a good choice to model non-Gaussian data [19–21]. Compared to the Gaussian distribution (GD), the generalized Gaussian distribution (GGD) has one more parameter \(\lambda \) that controls the tail of the distribution: the larger the value of \(\lambda \) is, the flatter is the distribution; the smaller \(\lambda \) is, the more peaked is the distribution. Despite the higher flexibility that GGD offers, it is still a symmetric distribution inappropriate to model non-symmetrical data. From Fig. 1, we can recognize that the GGM is not suitable in modeling our data. In this article, we suggest the use of the asymmetric Gaussian distribution (AGD) capable of modeling asymmetrical data. The AGD uses two variance parameters for left and right parts of the distribution, which allow it to change its shape to conclude the asymmetry. As shown in Fig. 1, we can notice that the asymmetric Gaussian mixture (AGM) was able to accurately model the data.

An important part of the mixture modeling problem concerns learning the model parameters and determining the number of consistent components (\(M\)) which best describes the data. For this purpose, many approaches have been suggested. The vast majority of these approaches can be classified, from a computational point of view, into two classes: deterministic and stochastic methods. Deterministic methods estimate the model parameters for different range of \(M\) then choose the best value that maximize a model selection criteria such as the Akaike’s information criterion (AIC) [22], the minimum description length (MDL) [23] and the Laplace empirical criterion (LEC) [24]. Stochastic methods such as Markov chain Monte Carlo (MCMC) can be used to sample from the full a posteriori distribution with \(M\) considered unknown [25]. Despite their formal appeal, MCMC methods are too computationally demanding, therefore cannot be applied for online applications such as foreground segmentation. For this reason, we are interested in deterministic approaches. In our proposed method, we use K-means algorithm to initialize the AGM parameters and successfully solve the initialization problem. The number of mixture components is automatically determined by implementing the minimum message length (MML) criterion [26] into the expectation–maximization (EM) algorithm. Therefore, the method can integrate simultaneously parameter estimation and model selection in a single algorithm, thus it is totally unsupervised.

Shadows, areas where direct light from a light source cannot reach due to obstruction by different objects, are an ever-present aspect of color images. As a result of the difference between the light intensity reaching a shaded region and a directly lit region, shadows are often characterized by conspicuous strong brightness gradients. In outdoor scenes, the change between shadow and non-shadow regions is not entirely a brightness difference, but a color one as well. This property makes shadow detection task a highly problematic one in a number of different fields. Recently, there have been few studies concerning shadow removal; however, the best performing methods still require user interaction with image sequences to perform optimally. In our moving shadow detection algorithm, we implement a method compatible with the RGB color model and able to use our mixture model. It is noteworthy that the proposed learning approach is completely different from recent efforts published for instance in [21, 27]. In fact, [27] proposed the use of the asymmetric Gaussian with different parametrization for pattern recognition applications. Furthermore, this method used the two-dimensional AGM for data classification when the number of components is known in advance. In [21] a Bayesian non-parametric approach based on infinite GGM was developed for pedestrian detection and foreground segmentation.

The rest of this paper is organized as follows. Section 2 describes the AGM model and its learning algorithm. In Sect. 3, we assess the performance of the new model with shadow removal scheme for foreground segmentation; while comparing it to other models. Our last section is devoted to the conclusion and some perspectives.

2 Finite AGM model

Formally we say that a \(d\)-dimensional random variable \(\mathbf{X} = [X_1,\ldots ,X_d]^T\) follows a \(M\) components mixture distribution if its probability function can be written in the following form:

where

-

\(\xi _j\) is the set of parameters of the component \(j\),

-

\(p_j\) are the mixing proportions which must be positive and sum to one,

-

\(\varTheta = \{p_1,\ldots ,p_M, \xi _1, \ldots , \xi _M\}\) is the complete set of parameters fully characterizing the mixture,

-

\(M \ge \) 1 is number of components in the mixture.

For the AGM, each component density \(p(\mathbf{X}\vert {\xi }_j)\) is an AGD given by:

where \(\xi _j = ({{\varvec{\mu }}}_{j}, {{\varvec{\sigma }}}_{l_{j}}, {\varvec{\sigma }}_{r_{j}})\) is the set of parameters of component \(j\) where \({\varvec{\mu }}_{j} = (\mu _{j1}\ldots ,\mu _{jd}), {\varvec{\sigma }}_{\mathbf{l}_{j}} = (\sigma _{l_{j1}},\ldots ,\sigma _{l_{jd}})\), and \({\varvec{\sigma }}_{\mathbf{r}_{j}} = (\sigma _{r_{j1}},\ldots ,\sigma _{r_{jd}}\)) are the mean, the left standard deviation, and the right standard deviation of the \(d\)-dimensional AGD, respectively. The AGD is chosen to be able to fit, in analytically simple and realistic way, symmetric or non-symmetric data by the combination of the left and right variances.

Let \({\mathcal {X}}= (\mathbf{X}_{1},\ldots , \mathbf{X}_{N}\)) be a set of \(N\) independent and identically distributed vectors, assumed to arise from a finite AGM model with \(M\) components. Thus, it can be expressed as follows:

where the set of parameters of the mixture with \(M\) classes is defined by \(\varTheta = ({\varvec{\mu }}_{1},\ldots , {\varvec{\mu }}_{M}, {\varvec{\sigma }}_{l_{1}},\ldots , {\varvec{\sigma }}_{l_{M}}, {\varvec{\sigma }}_{r_{1}},\ldots , {\varvec{\sigma }}_{r_{M}}, p_1,\ldots , p_M)\).

We introduce membership vectors, \(Z_{i}=(Z_{i1},\ldots ,Z_{iM})\), one for each observation, whose role is to encode to which component the observation belongs. In other words, \(Z_{ij}\), the unobserved or missing variable in each membership vector, equals \(1\) if \(\mathbf{X}_{i}\) belongs to class \(j\) and \(0\), otherwise. The complete-data likelihood for this case is then:

2.1 Maximum likelihood estimation of the mixture parameters

For the moment, we suppose that the number of mixture \(M\) is known. The maximum likelihood method consists of getting the mixture parameters that maximize the log-likelihood function given by:

by replacing each \(Z_{ij}\) by its expectation, defined as the posterior probability that the \(i\)th observation arises from the \(j\)th component of the mixture as follows:

Using Eq. 6, we can affect each observation to one of the \(M\) clusters. Now, using these expectations, we want to maximize the complete-data log-likelihood with respect to our model parameters. This can be done by taking the gradient of the log-likelihood with respect to \(p_j, {\varvec{\mu }}_j, {\varvec{\sigma }}_{l_{j}}\), and \({\varvec{\sigma }}_{r_{j}}\). When estimating \(p_j\), we actually need to introduce Lagrange multiplier to ensure that the constraints \(p_j > 0\) and \(\sum _{j=1}^{M} p_j = 1\) are satisfied. Thus, the augmented log-likelihood function can be expressed by:

where \(\varLambda \) is the Lagrange multiplier. Differentiating the augmented function with respect to \(p_j\) we get:

Taking the gradient of the complete log-likelihood with respect to \({\varvec{\mu }}_{j}, {\varvec{\sigma }}_{l_{j}}\), and \({\varvec{\sigma }}_{r_{j}}\), we obtain the following for \(k=1,\ldots ,d\):

We can notice that Eqs. 10 and 11 are non-linear, therefore we have decided to use the Newton–Raphson method for estimation:

where \(\frac{\partial ^2 L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{l_{jk}}^{2}}, \frac{\partial L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{l_{jk}}}, \frac{\partial ^2 L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{r_{jk}}^{2}}\), and \(\frac{\partial L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{r_{jk}}}\) are given in Appendix A.

2.2 Model selection using the minimum message length criterion

Different model selection methods have been introduced to estimate the number of components of a mixture. In this article, we are interested with deterministic approaches, especially MML. The MML approach is based on evaluating statistical models according to their ability to compress a message containing the data (minimum coding length criteria). High compression is obtained by forming good models of the data to be coded. For each model in the model space, the message includes two parts. The first part encodes the model, using only prior information about the model and no information about the data. The second part encodes only the data in a way that makes use of the model encoded in the first part. When applying the MML, the optimal number of classes of the mixture is obtained by minimizing the following function [26, 28]:

where \(p(\varTheta )\) is the prior probability, \(\vert F(\varTheta )\vert \) is the determinant of the Fisher information matrix minus the log-likelihood of the mixture, and \(N_p\) is the number of parameters to be estimated and is equal to \(M(3d+1)\) in our case. In the following subsections, we give the derivation of both the prior probability \(p(\varTheta )\) and the determinant of the Fisher information matrix of minus the log-likelihood of the mixture \(\vert F(\varTheta )\vert \).

2.2.1 Derivation of the prior \(p(\varTheta )\)

We specify a prior \(p(\varTheta )\) that expresses the lack of knowledge about the mixture parameters. It is reasonable to assume that the parameters of different components in the mixture are independent, since having knowledge about a parameter in one class does not provide any knowledge about the parameters of another class. Thus, we can assume that the mixture parameters are mutually independent, then:

where \(P=(p_1,\ldots ,p_M)\). In what follows, we will specify each of these priors separately. Starting with \( p(P)\), we know that \(P\) = (\(p_1,\ldots ,p_M\)) is defined on the simplex \(\{(p_1,\ldots ,p_M):\sum _{j=1}^{M}p_j=1\}\). Then, a natural choice as a prior for this vector is the Dirichlet distribution

where \((\eta _1,\ldots ,\eta _M)\) is the parameter vector of the Dirichlet distribution. When \(\eta _1,\ldots ,\eta _M=\eta =1\) we get a uniform prior over the space \(p_1+\cdots +p_M = 1\). This prior is represented by

For \({\varvec{\mu }}_j\), we take a uniform prior for each \(\mu _{jk}\). Each \(\mu _{jk}\) is chosen to be uniform in the region \((\mu _{jk}-\sigma _{l_{k}}\le \mu _{jk} \le \mu _{jk}+\sigma _{r_{k}})\), then the prior for \({\varvec{\mu }}_j\) is given by

For both \({\varvec{\sigma }}_{l_{j}}\) and \({\varvec{\sigma }}_{r_{j}}\), knowing that (\(0\le \sigma _{l_{jk}} \le \sigma _{l_{k}}\)) and (\(0\le \sigma _{r_{jk}} \le \sigma _{r_{k}}\)), then a good choice of prior for \(\sigma _{l_{jk}}\) and \(\sigma _{r_{jk}}\) is the uniform distribution:

Finally, by replacing the priors of the parameter in Eq. 15 by each prior value in Eqs. 17, 18, 19, and 20 we get

2.2.2 Derivation of the determinant of the Fisher information matrix \(\vert F(\varTheta )\vert \)

The Fisher information matrix is the expected value of the Hessian of minus the logarithm of the likelihood. It is difficult, in general, to obtain the expected Fisher information matrix of a mixture analytically. Therefore, we use the complete Fisher information matrix where its determinant is equal to the product of the determinant of the information matrix for each component times the determinant of the information matrix of \(P\)

in which \(\vert F({\varvec{\mu }}_j)\vert , \vert F({\varvec{\sigma }}_{l_{j}})\vert \), and \(\vert F({\varvec{\sigma }}_{r_{j}})\vert \) are the Fisher information with regards to \({\varvec{\mu }}_j, {\varvec{\sigma }}_{l_{j}}\), and \({\varvec{\sigma }}_{r_{j}}\), respectively, for the AGD that corresponds to component \(j\) in the mixture model. \(\vert F(P)\vert \) is the Fisher information with regards to the mixing parameters vector that satisfy the requirement \(\{\sum _{j=1}^{M}p_j=1\}\). Consequently, it is possible to consider the generalized Bernoulli process with a series of trials, each of which has \(M\) possible outcomes labeled first cluster, second cluster,..., \(M\mathrm{th}\) cluster. Therefore, the number of trials of the \(j\mathrm{th}\) cluster is a multinomial distribution of parameters \(p_1, p_2, \ldots , p_M\). Then, the determinant of the Fisher information matrix is

For \(\vert F({\varvec{\mu }}_j)\vert , \vert F({\varvec{\sigma }}_{l_{j}})\vert \), and \(\vert F({\varvec{\sigma }}_{r_{j}})\vert \) let us consider the \(j\mathrm{th}\) class \({\mathcal {X}}_j= (\mathbf{X}_l,\ldots ,\mathbf{X}_{l+n_j-1}\)) of the mixture as the data in class \(j\) after classifying all the data \({\mathcal {X}}\) using the maximum a posteriori probability defined by Eq. 6. Note that \(n_j\) is the number of data vectors belonging to the \(j\mathrm{th}\) distribution. This given choice of the \(j\mathrm{th}\) class allows us to simplify the notation without loss of generality. Then, the Hessian matrices when we consider the vectors \({\varvec{\mu }}_j, {\varvec{\sigma }}_{l_{j}}\), and \({\varvec{\sigma }}_{r_{j}}\) are given by:

where (\(k_1 ,k_2) \in (1, \ldots ,d\)). Using Appendix B to compute the derivatives in Eqs. 24, 25, 26, we obtain

2.3 The AGM model learning algorithm

In the following steps, we summarize the algorithm used for the AGM parameters estimation and model selection. Given a number of components, the mixture parameters are estimated iteratively using the EM algorithm:

-

Input: Data set \({\mathcal {X}}\) and \(M_{\max }\)

-

Output: \(\varTheta _{M^*}\) (the values of \(\varTheta \) when \(M^*\) components are chosen) and \(M^*\)

-

Step 1: For \(M = 1:M_{max}\) do{

-

}END FOR

-

Step 2: Select the model \(M^*\) with the smallest message length.

To initialize the parameters, we used the K-means algorithm. Note that, we initialized both the left and right standard deviations with the standard deviation values obtained from the K-means. To detect the convergence of the EM, we stop the iterations when the difference of the log-likelihood from two successive iterations, \(\ell \) and \(\ell + 1\), is smaller than a predefined threshold \(\epsilon \).

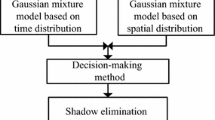

3 Background subtraction

In this section, we investigate the efficiency of the AGM algorithm for background subtraction. Our method can be divided into two main components: background modeling and shadows detection.

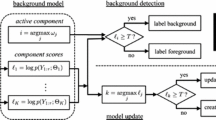

3.1 Adaptive AGM algorithm

Adaptive Gaussian mixture algorithms are widely applied for background subtraction. In [12], the authors presented an online learning of a GMM for each pixel in the video frames. Their idea was to model each pixel in the scene by a mixture of \(K\) Gaussian distributions, where \(K\) is taken in the range (3–5). Then, they ordered the \(K\) distributions based on the fitness value \(p_j/\sigma _j\) and used the first \(B\) distributions to model the background of the scene, where \(B\) is estimated as

\(T\) is a measure of the minimum portion of the data that represents the background in the scene. Then, the foreground pixels were detected as any pixel that is more than \(2.5\) standard deviations away from any of the \(B\) distributions. For the (\(\ell +1\)) frame, the first Gaussian component that matches the test value will be updated by the following equations:

where \(1/\alpha \) defines the time constant which determines change. \(\hat{p}_j^{\ell +1}, \hat{\mu }_j^{\ell +1}\), and \(\hat{\varSigma }_j^{\ell +1}\) are the estimated value of the weight, mean, and covariance of the component \(j\) of the mixture at the (\(\ell +1\)) frame, respectively. Note that \(\hat{p}(j\vert {X^{\ell +1}})\) is formulated as:

Finally, \(\rho \) is defined as:

where \({\mathcal {N}} (X^{\ell +1}; \hat{\mu }_j^{\ell }, \hat{\varSigma }_j^{\ell })\) represents the Gaussian probability density function with mean \(\hat{\mu }_j^{\ell }\) and covariance \(\hat{\varSigma }_j^{\ell })\) at \(X^{\ell +1}\).

In the case when none of the \(K\) distributions matches that pixel value, the least probable component is replaced by a distribution with the current value as its mean, an initially high variance, and a low weight parameter. According to their papers [12, 13, 29], only two parameters, \(\alpha \) and \(T\), have to be set in the method. However, there are four major problems when using this method. First, modeling both foreground and background pixels by a mixture of Gaussians implies the assumption that they are symmetrical which is not always the case. Second, using a prefixed number of components to represent all pixel mixtures is not practical in real life. The method is not robust when dealing with busy environments because a clean background is rare. Last but not least, slow adaptations in the means and the covariance matrices, therefore the tracker can fail within a few seconds after initialization. In this paper, we are trying to overcome these drawbacks by:

-

1.

Using the AGM to model the non-symmetricity of the pixels distributions.

-

2.

Using the MML to choose the right number of components for each pixel distribution.

-

3.

Using another method to update the mixture parameters.

-

4.

Removing the connection between the likelihood term and \(\rho \).

In our method, we start by representing every pixel at a given time frame \(\ell \) by a vector of three values: \(\mathbf{X}^{(\ell )}= [R,G,B]\), where \(R,G,B\) are the red, green, and blue values taken from the color camera. Then, as argued above, we model each pixel by an AGM to enhance the robustness of our algorithm in modeling the non-symmetricity of pixels distributions. To update the parameters of the AGM mixture at an input frame (\(\ell +1\)), we check whether its new value matches one of the \(M\) components of its AGM mixture. A match to a component occurs when the value of the pixel \(\mathbf{X}^{(\ell +1)}\) falls within \(K\) standard deviations of the mean of the component (depending on the position of the pixel value from the mean, we use the left or right standard deviation). If a match occurs then we update the component parameters by:

where \(B_\ell \) represents any sequence of positive numbers that decreases to zero. The derivatives in Eq. 37 are given in Appendix A. If no match occurs, we create a new component for the mixture with the mean equal to the new value of the pixel. Then, we evaluate the new model \({\mathcal {M}}^{\ell +1}\) with MML (note that \({\mathcal {M}}^{\ell +1}\) denotes the mixture model associated with the pixel \(\mathbf{X}\) at time \(\ell +1\)). In other words, we calculate the message length for the new mixture model with \(M+1\) components: if \(MessLen(\ell )>MessLen(\ell +1)\), then we use \({\mathcal {M}}^{\ell +1}\) else we use \({\mathcal {M}}^{\ell }\) and update the parameters using Eqs. (36) and (37). In the case when there is an empty component \(j, p_j=0\), we discard the component \(j\) of the mixture and set \(M \leftarrow M-1\). Finally, using the same idea of [12], we order the mixture components by the value of \(\frac{p_j}{|\vert {{\varvec{\sigma }}}_{l_j}|\vert +|\vert {{\varvec{\sigma }}}_{r_j}|\vert }\), where \(|\vert {{\varvec{\sigma }}}_{l_j}|\vert \) and \(|\vert {{\varvec{\sigma }}}_{r_j}|\vert \) are the norm of the left and right standard deviations of the component \(j\), respectively. At that point, we use Eq. 30 to model the background by the first \(B\) components.

The complete algorithm for adaptive background subtraction can be summarized in the following steps:

-

Step 1- Initialization for each pixel \(X\):

-

1.

Set \(M\)= 1, \(p_1\)= 1

-

2.

\(\forall k=1, \ldots \), d: set \(\sigma _{l_{1k}} = \sigma _{r_{1k}} = 0.2\), and \(\mu _{1k} = X_{k}^{(0)}\).

-

1.

-

Step 2- For each pixel \(\mathbf{X}^{(\ell +1)}\) in a new frame do {

-

1.

Verify if there is a match that exists for the new pixel value.

-

(a)

If True

-

(b)

If False

-

i

Add a new component to the mixture with mean equal the new pixel value.

-

ii

Evaluate the new model \(\mathcal {M}^{\ell +1}\) with MML.

-

i

-

(a)

-

2.

Check if there is any \(p_j\)= 0 then { \(M \leftarrow M-1\) }.

-

3.

Order the pixel mixture components by the values of \(\frac{p_j}{|\vert {{\varvec{\sigma }}}_{l_j}|\vert +|\vert {{\varvec{\sigma }}}_{r_j}|\vert }\).

-

4.

Use Eq. 30 to extract foreground objects.

-

1.

3.2 Shadow detection algorithm

3.2.1 Related works

Moving shadows are a major problem for foreground detection algorithms. Shadows pixels in any image differentiate themselves from the background and generally fall within the group of pixels associated with foreground objects. Labeling cast shadows as foreground objects lead to silhouette distortions and object fusions, thus reducing vision algorithm efficiency for scene monitoring and target recognition and tracking. Therefore, an effective shadow detection method is indispensable for accurate foreground segmentation.

Moving shadows in the scene are caused by the occlusion of light sources due to the moving objects. Therefore, shadow points have lower luminance values but similar chromaticity values. However, the texture characteristic around the shadow points remains unchanged since shadows do not alter the background surfaces. Shadow detection is known to be a challenging task because: (1) shadow points are mostly classified as foreground, since they differ significantly from the background; (2) shadow has the same motion as the moving object causing it, which make the task of differentiating between them very difficult; (3) shadow is always adjacent to moving object, which make it hard to remove using common segmentation techniques.

Generally, shadow detection algorithms can be classified into three categories: color-based, texture-based, and statistic-based. The color-based approaches attempt to describe the change in the color features of shadow pixels. In [30], the authors presented a robust shadow detection approach based on brightness, saturation, and hue properties in the HSV color space. Their idea is built on the hypothesis that shadows reduce surface brightness and saturation while maintaining hue properties in the HSV color space. The work in [31] addressed the shadow detection problem in YUV color space to avoid the time consuming HSV color space transformation. They distinguished the shadow regions from the foreground regions according to the observation that the YUV pixel value of shadows is lower than the linear pixels. According to the shadow model, Salvador et al. [32] identified an initial set of shadow pixels in RGB color space basing on the fact that shadow region darkens the surface. Then, combining color invariance with geometric properties of shadow they were able to detect the shadows in different scenes. In Horprasert et al. [33], built a model in the RGB color space to express normalized luminance variation and chromaticity distortions. Though color-based methods have shown their efficiency in shadow detection, they may not be reliable in the case when moving objects have similar color as moving shadows.

On the other hand, texture-based approaches are based on the fact that texture of shadow regions and the background are similar, while the texture of moving objects are different from the background. In [34], the authors explored ratio edges for shadow detection. They proved that ratio edge is illumination invariant and that the distribution of normalized ratio edge difference is a chi-square distribution. Then, a significance test was used to detect shadows. In addition to using scene brightness distortion and chromaticity distortion, Choi et al. [35] proposed three estimators which use the properties of chromaticity, brightness, and local intensity ratio. Hence, creating a chromaticity difference that obey a standard normalize distribution between the shadow region and the background. Finally, Finlayson et al. [36] used shadow edges along with illuminant invariant images to recover full color shadow-free images. Hence, texture-based methods may be the most promising technique for shadow detection because they are capable of capturing textual information of different scenes. However, they suffer from three major problems: (1) they require to set parameters for different scenes, (2) they can not handle complex and time-varying lighting conditions, and (3) they are too computationally demanding which limit their applications.

Recently, the statistical prevalence of cast shadows had been employed to learn shadows in the scenes. The principle of statistic-based methods is to build pixel-based statistical models to detect cast shadows. In [37], the authors used an adaptive Gaussian mixture model to detect moving cast shadows. The method consists of building a GMM to segment moving objects. Then, they identified the distribution of moving objects from shadows using an effective computational color model similar to the one proposed in [33]. The work in [38] proposed the use of a Gaussian mixture shadow model (GMSM). The algorithm models moving cast shadows of non-uniform and varying intensity, and builds statistical models to segment moving cast shadows using the GMM learning ability. However, the shadow models need a long time to converge while the lighting conditions should remain stable which is a major drawback. Liu et al. [39] were able to remove shadow using multilevel information in HSV color space. They attempted to improve the convergence speed of pixel-based shadow model using multilevel information. They used region-level information to increase the number of samples and global level information to update a pre-classifier. However, the method still suffers from the slow learning of the conventional GMM [12], and the low discriminativity of the pre-classifier in scenes having different types of shadows. Statistical-based methods are widely applied due to their robustness in different scenes; however, they are less effective in a real-world environment.

3.2.2 Proposed approach

In this section, we present a novel pixel-based statistical approach to model moving cast shadows. Normally, when using adaptive mixture algorithms, values that are frequently seen by a pixel are captured into stable background distributions while values that are infrequently seen are classified into foreground objects. Shadow values lie between both situations: they are not as frequent as background values, but their rate of appearance is higher than random foreground values. Therefore, in most cases, they are classified as foreground objects. Hence, the purpose of our shadow detection algorithm is to remove cast shadows classified by the adaptive AGM as foreground objects. Our idea is very simple and makes use of the property that shadows darken the surface upon which they are cast [32]. Let us consider \(\mathbf{X}^{\ell +1} = (X_R^{\ell +1}, X_G^{\ell +1}, X_B^{\ell +1})\) belonging to the foreground distribution. We classify the pixel \(\mathbf{X}^{\ell +1}\) as shadow if its distribution mean is smaller than that of the pixel background models for all three channels. The steps of this approach can be summarized as:

-

Input: The output of the asymmetric Gaussian mixture.

-

Output: Shadow candidates

-

For for each foreground distribution \(F\) do {

-

1.

Compare its mean \(\mu _R^{F}\) to the mean \(\mu _R^B\) values of the \(B\) background distributions.

-

2.

Compare its mean \(\mu _G^{F}\) to the mean \(\mu _G^B\) values of the \(B\) background distributions.

-

3.

Compare its mean \(\mu _B^{F}\) to the mean \(\mu _B^B\) values of the \(B\) background distributions.

-

4.

If (\(\mu _R^{F}-\mu _R^B < 0\) & \(\mu _G^{F}-\mu _G^B < 0\) & \(\mu _B^{F}-\mu _B^B < 0\)) then Consider this distribution to be a candidate for the shadow model.

-

1.

-

} END

where \(\mu _R^B, \mu _G^B, \mu _B^B\) are the \(B\) background distributions red, green, and blue means, respectively. Hence, after applying this algorithm we will end up with three different models for the background, foreground, and shadow.

3.3 Results

Our approach performance has been evaluated using the change detection dataset described in [40]. This dataset consists of 31 videos depicting indoor and outdoor scenes with boats, cars, trucks, and pedestrians that have been captured in different scenarios. The videos were taken with different cameras ranging from low-resolution IP cameras to thermal cameras. Therefore, spatial resolutions of the videos vary from 320 \(\times \) 240 to 720 \(\times \) 576 and the level of noise and compression artifacts varies from one video to another due to diverse lighting conditions present.

The videos are grouped into six categories according to the type of challenge each represents. The baseline category contains four videos, two indoor and two outdoor. There are six videos in the dynamic background category depicting outdoor scenes with strong background motion. The third category, Camera Jitter, contains one indoor and three outdoor videos captured by unstable cameras. Shadows: this category consists of two indoor and four outdoor videos exhibiting strong as well as faint shadows. Intermittent object motion is the fifth category which includes six videos with scenarios known for causing “ghosting” artifacts in the detected motion. The last category is composed of five (three outdoor and two indoor) sequences taken by far-infrared cameras. Figures (2,3,4,5,6,7) show some sample frames taken from this dataset.

In order to validate our method, we have compared it with six state-of-the-art methods. These methods can be divided into two main groups: pixel based and non-parametric Kernel Density Estimation (KDE) methods. For pixel based methods, we have used Stauffer et al. [12], Zivkovic [14], KaewTraKulPong et al. [37], and Evangelio et al. [41]; as for KDE methods, we have chosen the methods introduced by Elgammal et al. [42] and Nonaka et al. [43]. Figures (2–7) show the segmentations of our method with and without shadow detection as well as the six other methods. Note that in this application, we set the maximum number of components for the AGM to 9, the standard deviation factor \(K=2\), and the threshold \(T=0.6\). From Fig. 6, we can distinguish that the AGM+SD was not able to remove the shadow completely from the image because the difference in value between the shadow and foreground was not large enough to construct a model that represents this shadow distribution. However, from qualitative evaluation, we can notice the higher efficiency of our method.

In order to have a quantitative evaluation of the performance, we have used two well-known metrics, precision and recall, to quantify how well each algorithm works in classifying the data [44]. Precision (Eq. 38) represents the percentage of detected true positives to the total number of items detected by the algorithm. Recall (Eq. 39) is the percentage of number of detected true positives by the algorithm to the total number of true positives in the dataset:

where TP is the total number of true positives correctly classified by the algorithm, FP is the total number of false positives, and FN is the number of true positives that were wrongly classified as background (false negatives). Tables (1–7) show the average recall and precision for all methods. From Table 7, we can deduce that our model is capable of detecting changes under different scenarios efficiently.

According to quantitative and analytical analysis, we can conclude that the use of AGM in background detection with shadow detection increased the performance greatly.

In order to evaluate the effect of changing the parameters on the performance of our models, we have used precision-recall curves. For simplicity, we have generated precision-recall curves by systematically changing the threshold parameter \(T\) and the standard deviation factor \(K\). Figure 8 shows the effect of changing \(T\) and \(K\) on our method with and without Shadow detection.

Based on the measurements shown in Fig. 8, we can notice that both methods perform consistently very well. In addition, we can remark that varying \(T\) and \(K\) have little effect on the AGM+SD performance, as for the AGM it is affected by \(T\) alteration. Furthermore, the high overall precision of both algorithms allows our methods to operate with a low false positive rate at their sensitive operating point.

4 Conclusion

Adaptive mixture models are popular methods for background modeling. The proposed method has provided three main improvements to the well-known adaptive Gaussian mixture model [12]. First, we have adopted the asymmetric Gaussian distribution capable of modeling non-symmetrical data. Second, we have eliminated the problem of determining the number of clusters of the AGM by the consideration of the MML criterion. Finally, we have presented a novel pixel-based statistical approach for shadow detection and removal. Our shadow scheme identifies distributions of pixel values that could represent shadowed surfaces then uses them to build a second asymmetric Gaussian mixture model for shadows; hence, building shadow models capable of evolving over time. Our background subtraction approach shows good performance in terms of adaptability, accuracy and robustness, in different indoor and outdoor scenes with complex illumination variations, background movements, shadows, and ghosting artifacts.

References

Tavakkoli, A., Nicolescu, M., Bebis, G., Nicolescu, M.: Non-parametric statistical background modeling for efficient foreground region detection. Mach. Vis. Appl. 7(2), 1–15 (2009)

Farcas, D., Marghes, C., Bouwmans, T.: Background subtraction via incremental maximum margin criterion: a discriminative subspace approach. Mach. Vis. Appl. 23(6), 1083–1101 (2012)

Cheng, J., Yang, J., Zhou, Y., Cui, Y.: Flexible background mixture models for foreground segmentation. Image Vis. Comput. 24(5), 473–482 (2006)

Abbott, R.G., Williams, L.R.: Multiple target tracking with lazy background subtraction and connected components analysis. Mach. Vis. Appl. 20(2), 93–101 (2009)

Fuentes, L.M., Velastin, S.A.: People tracking in surveillance applications. Image Vis. Comput. 24(11), 1165–1171 (2006)

Tian, Y.L., Senior, A., Lu, M.: Robust and efficient foreground analysis in complex surveillance videos. Mach. Vis. Appl. 23(5), 967–983 (2012)

Mittal, A., Paragios, N.: Motion-based background subtraction using adaptive kernel density estimation. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 302–309 (2004)

Ren, Y., Chua, C.-S., Ho, Y.-K.: Motion detection with nonstationary background. Mach. Vis. Appl. 13(332–343), 93–101 (2003)

Or, S-H., Wong, Kin-H., Lee, K-S., Lao, T-K.: Panoramic video segmentation using color mixture models. In: The15th International Conference on Pattern Recognition (ICPR), Vol. 3, pp. 387–390 (2000)

El Baf, F., Bouwmans, T., Vachon, B.: Comparison of background subtraction methods for a multimedia learning space. In: The International Conference on Signal Processing and Multimedia Applications (SIGMAP), pp. 153–158 (2007)

Bouwmans, T., El Baf, F., Vachon, B.: Background modeling using mixture of gaussians for foreground detection: a survey. Recent Pat. Comput. Sci. 1(3), 219–237 (2008)

Stauffer, C., Grimson, W.E.L.: Adaptive background mixture models for real-time tracking. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 252–258 (1999)

Stauffer, C., Grimson, W.E.L.: Learning patterns of activity using real-time tracking. IEEE Trans. Pattern Anal. Mach. Intell. 22(8), 747–757 (2000)

Zivkovic, Z.: Improved adaptive Gaussian mixture model for background subtraction. In: The 17th International Conference on Pattern Recognition (ICPR), pp. 28–31 (2004)

Peng, A., Pieczynski, W.: Adaptive mixture estimation and unsupervised local Bayesian image segmentation. Graph. Models Image Process. 57(5), 389–399 (1995)

Friedman, N., Russell, S.: Image segmentation in video sequences: a probabilistic approach. In: The Thirteenth Conference on Uncertainty in Artificial Intelligence (UAI), pp. 1–3 (1997)

Lee, D.-S.: Effective Gaussian mixture learning for video background subtraction. IEEE Trans. Pattern Anal. Mach. Intell. 27(5), 827–832 (2005)

Wang, D., Xie, W., Pei, J., Lu, Z.: Moving area detection based on estimation of static background. J. Inf. Comput. Sci. 2(1), 129–134 (2005)

Allili, M.S., Bouguila, N., Ziou, D.: Finite general gaussian mixture modeling and application to image and video foreground segmentation. J. Electron. Imaging 17(1), 1–13 (2008)

Elguebaly, T., Bouguila, N.: Bayesian learning of finite generalized gaussian mixture models on images. Signal Process. 91(4), 801–820 (2011)

Elguebaly, T., Bouguila, N.: A nonparametric Bayesian approach for enhanced pedestrian detection and foreground segmentation. In: IEEE computer society conference on computer vision and pattern recognition workshops (CVPRW), pp. 21–26 (2011)

Akaike, H.: A new look at the statistical model identification. IEEE Trans. Autom. Control 19(6), 716–723 (1974)

Rissanen, J.: Modeling by shortest data description. Automatica 14, 465–471 (1987)

McLachlan, G.J., Peel, D.: Finite Mixture Models. Wiley, New York (2000)

Bouguila, N., Ziou, D.: A Dirichlet process mixture of generalized Dirichlet distributions for proportional data modeling. IEEE Trans. Neural Netw. 21(1), 107–122 (2010)

Wallace, C.S., Boulton, D.M.: An information measure for classification. Comput. J. 11(2), 195–209 (1968)

Kato, T., Omachi, S., Aso, H.: Asymmetric gaussian and its application to pattern recognition. In: The Joint IAPR International Workshop on Structural, Syntactic, and Statistical Pattern Recognition (SSPR), pp. 405–413 (2002)

Baxter, R.A., Olivier, J.J.: Finding overlapping components with MML. Stat. Comput. 10(1), 5–16 (2000)

Grimson, W.E.L., Stauffer, C., Romano, R., Lee, L.: Using adaptive tracking to classify and monitor activities in a site. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 22–29 (1998)

Cucchiara, R., Grana, C., Piccardi, M., Prati, A., Sirotti, S.: Improving shadow suppression in moving object detection with HSV color information. In: IEEE Intelligent Transportation Systems, pp. 334–339 (2001)

Schreer, O., Feldmann, I., Goelz, U., Kauff, P.: Fast and robust shadow detection in videoconference applications. In: Fourth IEEE International Symposium on Video/Image Processing and Multimedia Communications, pp. 371–375 (2002)

Salvador, E., Cavallaro, A., Ebrahimi, T.: Cast shadow segmentation using invariant color features. Comput. Vis. Image Underst. 95(2), 238–259 (2004)

Horprasert, T., Hardwood, D., Davis, L.S.: A statistical approach for real-time robust background subtraction and shadow detection. In: IEEE International Conference on Computer Vision, pp. 1–19 (1999)

Zhang, SW., Fang, X. Z., Yang, X.K.: Moving cast shadows detection using ratio edge. IEEE Trans. Multimed. 9(6):1202–1214 (2007)

Choi, J.M., Yoo, Y.J., Choi, J.Y.: Adaptive shadow estimator for removing shadow of moving object. Comput. Vis. Image Underst. 114(9), 1017–1029 (2010)

Finlayson, G.D., Hordley, S.D., Lu, C., Drew, M.S.: On the removal of shadows from images. IEEE Trans. Pattern Anal. Mach. Intell. 28(1), 5968 (2006)

Kaewtrakulpong, P., Bowden, R.: An improved adaptive background mixture model for realtime tracking with shadow detection. In: Workshop on Advanced Video Based Surveillance Systems, pp. 1–5 (2001)

Martel-Brisson, N., Zaccarin, A.: Learning and removing cast shadows through a multidistribution approach. IEEE Trans. Pattern Anal. Mach. Intell. 29(7), 1133–1146 (2007)

Liu, Z., Huang, K., Tan, T., Wang, L.: Cast shadow removal combining local and global features. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), p. 18 (2007)

Goyette, N., Jodoin, P.-M., Porikli, F., Konrad, J., Ishwar, P.: changedetection.net: a new change detection benchmark dataset. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 1–8 (2012)

Evangelio, R.H., Patzold, M., Sikora, T.: Splitting gaussians in mixture models. In: IEEE Ninth International Conference on Advanced Video and Signal-Based Surveillance, pp. 300–305 (2012)

Elgammal, A., Harwood, D., Davis, L.: Non-parametric model for background subtraction. In: The 6th European Conference on Computer Vision (ECCV), pp. 751–767 (2000)

Nonaka, Y., Shimada, A., Nagahara, H., Taniguchi, R.: Evaluation report of integrated background modeling based on spatio-temporal features. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 9–14 (2012)

Maddalena, L., Petrosino, A.: A self-organizing approach to background subtraction for visual surveillance application. IEEE Trans. Image Process. 17(7), 1168–1177 (2008)

Acknowledgments

The completion of this research was made possible, thanks to the Natural Sciences and Engineering Research Council of Canada (NSERC). The author would like to thank the anonymous referees and the associate editor for their helpful comments.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix A

In this Appendix, we calculate the derivative of \(\frac{\partial L(\varTheta ,Z,{\mathcal {X}})}{\partial \mu _{jk}}, \frac{\partial L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{l_{jk}}}, \frac{\partial L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{r_{jk}}}, \frac{\partial ^2 L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{l_{jk}}^{2}} \), and \(\frac{\partial ^2 L(\varTheta ,Z,{\mathcal {X}})}{\partial \sigma _{r_{jk}}^{2}}\) used in the EM algorithm and background subtraction.

Appendix B

In this Appendix, we develop the solutions for Eqs. (24, 25, 26) used in the MML algorithm

Rights and permissions

About this article

Cite this article

Elguebaly, T., Bouguila, N. Background subtraction using finite mixtures of asymmetric Gaussian distributions and shadow detection. Machine Vision and Applications 25, 1145–1162 (2014). https://doi.org/10.1007/s00138-013-0568-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00138-013-0568-z