Abstract

The purpose of this paper is to prove an optimal restriction estimate for a class of flat curves in \({\mathbb {R}} ^d\), \(d\ge 3\). Namely, we consider the problem of determining all the pairs (p, q) for which the \(L^p-L^q\) estimate holds (or a suitable Lorentz norm substitute at the endpoint, where the \(L^p-L^q\) estimate fails) for the extension operator associated to \(\gamma (t) = (t, {\frac{t^2}{2!}}, \ldots , {\frac{t^{d-1}}{(d-1)!}}, \phi (t))\), \(0\le t\le 1\), with respect to the affine arclength measure. In particular, we are interested in the flat case, i.e. when \(\phi (t)\) satisfies \(\phi ^{(k)}(0) = 0\) for all integers \(k\ge 1\). A prototypical example is given by \(\phi (t) = e^{-1/t}\). The paper (Bak et al., J. Aust. Math. Soc. 85:1–28, 2008) addressed precisely this problem. The examples in Bak et al. (2008) are defined recursively in terms of an integral, and they represent progressively flatter curves. Although these include arbitrarily flat curves, it is not clear whether they cover, for instance, the prototypical case \(\phi (t) = e^{-1/t}\). We show that the desired estimate holds for that example and, more generally, for a class of examples satisfying hypotheses that involve a log-concavity condition.



1 Introduction

Let \(d \ge 2\). Let \(\gamma : I \rightarrow {\mathbb {R}}^d\) be a \(C^d\) curve defined on an interval I. The restriction of the Fourier transform of f to \(\gamma \) is given by

for Schwartz functions \(f \in \mathcal {S}({\mathbb {R}}^d)\). We are interested in the \(L^p-L^q\) estimate of the restriction of the Fourier transform:

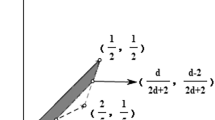

and for what \(p-q\) range the estimate holds. The trivial estimate is the \(L^1-L^\infty \) estimate. The critical line for the \(p-q\) range is \(\frac{1}{q} = \frac{d(d+1)}{2} \frac{1}{p'}\), \(q > \frac{d^2+d+2}{d^2+d}\), where \(p'\) is the Hölder conjugate exponent of p. (See [1].)
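As a quick check of the exponents, the point where the critical line meets the diagonal p = q can be computed exactly. The Python sketch below is our own illustration (the function names are hypothetical, not from the paper): it solves 1/p = (d(d+1)/2)(1 - 1/p) in rational arithmetic and recovers p = (d^2+d+2)/(d^2+d), whose Hölder conjugate is (d^2+d+2)/2. These are the exponents denoted \(p_d\) and \(q_d = p_d'\) later in the paper.

```python
from fractions import Fraction


def endpoint_exponent(d: int) -> Fraction:
    """Return p with p = q on the critical line 1/q = (d(d+1)/2) * (1/p')."""
    k = Fraction(d * (d + 1), 2)
    # With q = p and 1/p' = 1 - 1/p:  (1/p)(1 + k) = k,  so  1/p = k / (1 + k).
    return (1 + k) / k


def conjugate(p: Fraction) -> Fraction:
    """Hölder conjugate exponent p', defined by 1/p + 1/p' = 1."""
    return 1 / (1 - 1 / p)


for d in range(2, 7):
    p_d = endpoint_exponent(d)
    q_d = conjugate(p_d)
    # Closed forms quoted in the text.
    assert p_d == Fraction(d * d + d + 2, d * d + d)
    assert q_d == Fraction(d * d + d + 2, 2)
    print(f"d={d}: p_d={p_d}, q_d={q_d}")
```

For d = 3, for instance, this gives p = 7/6 and p' = 7. Exact fractions are used so that the comparison with the closed forms is an equality test rather than a floating-point tolerance check.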

We are also interested in the conditions on \(\gamma \) that allow the \(L^p-L^q\) estimate to hold on the critical line. The simplest case is \(\gamma (t) = (t, \frac{t^2}{2!}, \ldots , \frac{t^d}{d!})\). Zygmund [18] and Hörmander [13] showed that (1) holds on the critical line for \(d=2\), and Drury [11] showed the corresponding result for \(d\ge 3\). Christ [8] proved partial results for more general curves, and Bak et al. [4] showed that the estimate (1) holds if \(\gamma \) is nondegenerate. Now consider a curve of simple type of the form \(\gamma (t) = (t, {\frac{t^2}{2!}}, \ldots , {\frac{t^{d-1}}{(d-1)!}}, \phi (t))\), where \(\phi \) is a \(C^d\) function. In this case, (1) may fail if \(\gamma \) is degenerate, unless we replace the Euclidean arclength measure by the affine arclength measure. Let w(t) be the weight function defined by

\[w(t) = \vert \tau _{\gamma }(t)\vert ^{\frac{2}{d^2+d}},\]
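where \(\tau _{\gamma } = \det (\gamma ' ~ \gamma '' ~ \ldots ~ \gamma ^{(d)})\) is the torsion of \(\gamma \). The affine arclength measure is given by w(t)dt. Thus, we replace the estimate (1) by

\[\Big (\int _I \big |\widehat{f}(\gamma (t))\big |^q\,w(t)\,dt\Big )^{1/q} \le C\,\Vert f\Vert _{L^p({\mathbb {R}}^d)}. \qquad (2)\]

Furthermore, even though (2) fails at the endpoint \(p=q=\frac{d^2+d+2}{d^2+d}\), the restricted strong type (p, q) estimate may hold:

\[\Big (\int _I \big |\widehat{f}(\gamma (t))\big |^{p}\,w(t)\,dt\Big )^{1/p} \le C\,\Vert f\Vert _{L^{p,1}({\mathbb {R}}^d)}, \qquad p = \frac{d^2+d+2}{d^2+d}. \qquad (3)\]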
Bak et al. [3] showed that (2) holds on the critical line for curves satisfying certain conditions, and in [5] they showed that the endpoint estimate (3) holds when \(\phi \) is any polynomial, where \(C=C_N\) depends only on an upper bound N for the degree of the polynomial. Also, Bak and Ham [2] showed the corresponding endpoint estimate for certain complex curves \(\gamma (z) \in {\mathbb {C}}^d\) of simple type. For more cases, see also [10, 16, 17].

In this paper, we extend the result in [3] to the endpoint: we show that (3) holds for curves satisfying hypotheses that involve a log-concavity condition.
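For curves of simple type, the torsion entering the definition of w can be computed explicitly, which is why the hypotheses below are phrased in terms of \(\phi ^{(d)}\). The following short computation is our own standard check, not quoted from the paper: for \(k \le d-1\), the column \(\gamma ^{(k)}(t)\) has zeros in its first \(k-1\) entries and a 1 in its k-th entry, so the matrix \((\gamma ' ~ \gamma '' ~ \ldots ~ \gamma ^{(d)})\) is lower triangular:

\[\tau _\gamma (t) = \det \begin{pmatrix} 1 & 0 & \cdots & 0 & 0\\ t & 1 & \cdots & 0 & 0\\ \vdots & \vdots & \ddots & \vdots & \vdots \\ \frac{t^{d-2}}{(d-2)!} & \frac{t^{d-3}}{(d-3)!} & \cdots & 1 & 0\\ \phi '(t) & \phi ''(t) & \cdots & \phi ^{(d-1)}(t) & \phi ^{(d)}(t) \end{pmatrix} = \phi ^{(d)}(t).\]

Consequently, whenever \(\phi ^{(d)} > 0\), the affine arclength weight is \(w(t) = \phi ^{(d)}(t)^{\frac{2}{d^2+d}}\).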

Theorem 1.1

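Suppose \(d \ge 2\). Let \(\gamma \in C^d(I)\) be of the form

\[\gamma (t) = \Big (t, \frac{t^2}{2!}, \ldots , \frac{t^{d-1}}{(d-1)!}, \phi (t)\Big ),\]

defined on \(I=(0,1)\). Suppose that \(\phi ^{(d)}\) is positive and increasing on I, and that there exists \(\delta >0\) such that \(\phi ^{(d)}\) is log-concave on \((0,\delta )\), i.e.,

\[\phi ^{(d)}\big ((1-\lambda )x_1 + \lambda x_2\big ) \ge \phi ^{(d)}(x_1)^{1-\lambda }\,\phi ^{(d)}(x_2)^{\lambda } \qquad (4)\]

for all \(\lambda \in [0,1]\) and \(x_1,x_2 \in (0,\delta )\). Then, for \(p_d = (d^2 + d + 2)/(d^2 + d)\), there is a constant \(C < \infty \), depending only on d, such that for all \(f \in L^{p_d,1} (\mathbb {R}^d)\),

\[\Big (\int _I \big |\widehat{f}(\gamma (t))\big |^{p_d}\,w(t)\,dt\Big )^{1/p_d} \le C\,\Vert f\Vert _{L^{p_d,1}({\mathbb {R}}^d)}. \qquad (5)\]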
The paper is organized as follows. In Sect. 2, we establish a lower bound for a Jacobian related to an offspring curve. In Sect. 3, we collect some useful results on interpolation spaces. Section 4 is devoted to the proof of Theorem 1.1. In Sect. 5, we provide some relevant examples.

We will use the notation \(A \lesssim B\) to mean that \(A \le CB\) for some constant C depending only on d. And \(A \approx B\) means \(A \lesssim B\) and \(B \lesssim A\).

2 A Lower Bound for a Certain Jacobian

In this section, we establish a lower bound for a certain Jacobian, which plays an important role in the proof of Theorem 1.1. We first introduce some notation.

For \(d \ge 2\) and \(x =(x_1,\ldots ,x_d) \in {\mathbb {R}}^d\), let V(x) denote the determinant of the Vandermonde matrix:

\[V(x) = \det \big (x_i^{\,j-1}\big )_{1\le i,j\le d} = \prod _{1\le i<j\le d}(x_j - x_i).\]
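The product formula for the Vandermonde determinant can be sanity-checked with exact arithmetic. The Python sketch below is an illustration with hypothetical helper names, not part of the paper's argument: it computes the determinant of the matrix with rows \((1, x_i, \ldots , x_i^{d-1})\) by Gaussian elimination over the rationals and compares it with \(\prod _{i<j}(x_j - x_i)\).

```python
from fractions import Fraction
from itertools import combinations


def det(matrix):
    """Exact determinant by Gaussian elimination with row swaps over the rationals."""
    a = [[Fraction(v) for v in row] for row in matrix]
    n = len(a)
    sign, result = 1, Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:          # no nonzero pivot: the matrix is singular
            return Fraction(0)
        if pivot != col:           # a row swap flips the sign of the determinant
            a[col], a[pivot] = a[pivot], a[col]
            sign = -sign
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return sign * result


def vandermonde_matrix(x):
    """Rows (1, x_i, x_i^2, ..., x_i^(d-1))."""
    d = len(x)
    return [[xi ** j for j in range(d)] for xi in x]


def vandermonde_product(x):
    """prod_{i<j} (x_j - x_i)."""
    prod = Fraction(1)
    for i, j in combinations(range(len(x)), 2):
        prod *= Fraction(x[j]) - Fraction(x[i])
    return prod


x = [Fraction(0), Fraction(1, 3), Fraction(1, 2), Fraction(2)]
assert det(vandermonde_matrix(x)) == vandermonde_product(x)
```

For example, the points 1, 2, 3 give the value (2-1)(3-1)(3-2) = 2, while any input with a repeated entry gives 0, reflecting the fact that V vanishes exactly when two of the \(x_i\) coincide.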
For \(0 \le t=t_1 \le \cdots \le t_d\), let \(h_i = t_{i} - t_1\). Then, \(0 = h_1 \le \cdots \le h_d\) and \(t_i = t + h_i\). Also, define

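If \(\gamma : [0,1] \rightarrow {\mathbb {R}}^d\) and if \(0< t < 1-h_d\), define

\[\Gamma (t,h) = \sum _{i=1}^d \gamma (t+h_i),\]

which, for each fixed h, is called an offspring curve of \(\gamma \). Let \(J_{\phi }(t,h)\) be the Jacobian determinant of \(\Gamma \) with respect to \((t,h_2,\ldots ,h_d)\):

\[J_\phi (t,h) = \det \Big (\frac{\partial \Gamma }{\partial t}~~\frac{\partial \Gamma }{\partial h_2}~~\cdots ~~\frac{\partial \Gamma }{\partial h_d}\Big ).\]

The following proposition provides a lower bound for the Jacobian of the offspring curve. (See also Proposition 2.1 in [3] and Proposition 3.5 in [9].)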

Proposition 2.1

Let \(J_\phi (t,h)\) be defined as above, where \(\gamma (t) = (t,\frac{t^2}{2!}, \ldots , \frac{t^{d-1}}{(d-1)!}, \phi (t))\) satisfies the conditions of Theorem 1.1. Then, for \(t \in [0, \delta )\), \(h \in (0,\delta )^{d-1}\), and \(t+h_d<\delta \),

for some constant \(C_d\) which depends only on d.

Before embarking on the proof of Proposition 2.1, we need some definitions and lemmas from [3].

Lemma 2.2

(Lemma 2.2 in [3]) Fix \(\lambda \in (0,1)\). Define some intervals \((a_i , b_i)\) by

Suppose also that for \(m=1, \ldots , M\), and for \(s \in {\mathbb {R}}^N\), \(v_m(s)\) is a function having one of the following three forms:

Suppose that \(\lambda _n \in (0,1)\) and \(\lambda _n \le \lambda \) for \(n=1,\ldots ,N\). Let \(\mathcal {R}_N(a,b,\lambda )\) be the region of all \(s=(s_1,\ldots ,s_N) \in {\mathbb {R}}^N\) satisfying \((1-\lambda _n)a_n + \lambda _n b_n \le s_n \le b_n\) for \(n=1,\ldots ,N\). Then

Now, we define a function \(\zeta _d(t;h)\) recursively:

For \(d \ge 3\) and \(t \le h_d\), define

and define

if \(t \le h_{d}\), and \(\zeta _d(t;h) = 0\) if \(t > h_{d}\).

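Consider the function \(\widetilde{J}_{\phi }^d : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) defined by

\[\widetilde{J}_{\phi }^d(s) = \det \big (\gamma '(s_1)~~\gamma '(s_2)~~\cdots ~~\gamma '(s_d)\big ), \qquad s=(s_1,\ldots ,s_d).\]

Notice that \(\gamma '(s_i) = (1, s_i, \ldots , (s_i)^{d-2}/(d-2)!, \phi '(s_i))\).

Since \(\partial \Gamma /\partial t = \sum _{i=1}^d \gamma '(t+h_i)\) and \(\partial \Gamma /\partial h_i = \gamma '(t+h_i)\), subtracting the last \(d-1\) columns from the first gives

\[J_\phi (t,h) = \widetilde{J}_{\phi }^d(t+h_1, t+h_2, \ldots , t+h_d).\]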
Lemma 2.3

(Lemma 2.3 in [3]) Let \(\zeta _d\) and \(\widetilde{J}_{\phi }^d(t)\) be defined by (8), (10), and (11) with \(s_1 \le \cdots \le s_d\). Then

Lemma 2.4

Suppose that \(\phi ^{(d)}\) is log-concave on \((0,\delta )\) and \(0=h_1 \le h_2 \le \cdots \le h_d\). Then,

where \(t+h_i \in (0,\delta )\) for \(i=1,\ldots ,d\) and \(H_d(t,h) = \frac{1}{d} \sum _{i=1}^{d}(t+h_i) \in [t,t+h_d]\).

Proof

Let \(\beta (t) = -\log [\phi ^{(d)}(t)]\). Then, \(\beta \) is convex on \((0,\delta )\). Therefore, by Jensen’s inequality,

where \(t_i \in (0,\delta )\) for \(i=1,\ldots ,d\). It follows that

which implies

Namely,

which implies

If we put \(t_1=t\) and \(t_i=t+h_i\), we get

\(\square \)
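The chain of inequalities in the proof above can be written out in one line. The display below is our own restatement in the notation of Lemma 2.4, with \(t_i = t+h_i \in (0,\delta )\) and \(\beta = -\log \phi ^{(d)}\), stating the conclusion in the equivalent product form:

\[-\log \phi ^{(d)}\big (H_d(t,h)\big ) = \beta \Big (\frac{1}{d}\sum _{i=1}^d t_i\Big ) \le \frac{1}{d}\sum _{i=1}^d \beta (t_i) = -\frac{1}{d}\sum _{i=1}^d \log \phi ^{(d)}(t_i),\]

and multiplying by \(-d\) and exponentiating gives

\[\prod _{i=1}^d \phi ^{(d)}(t+h_i) \le \big [\phi ^{(d)}\big (H_d(t,h)\big )\big ]^d.\]

Since \(H_d(t,h) \in [t, t+h_d] \subset (0,\delta )\), the right-hand side is well defined.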

Proof of Proposition 2.1

We adapt the proof of Proposition 2.1 in [3].

We will use both notations \(t_i\) and \(t+h_i\), where \(t_i = t+h_i\) for \(0=h_1 \le h_2 \le \cdots \le h_d\).

The equality follows from Lemma 2.3 and the inequality follows from nonnegativity. Since \(\phi ^{(d)}\) is increasing,

We will show that

We prove (14) by induction on \(d\ge 2\).

It is easy to verify that (14) holds for \(d=2\) with \(c_2=1/2\). Suppose that (14) holds for \(d-1\ge 2\). Consider a function \(\pi \) such that

where \(\bar{t}=\frac{1}{d}(t_1+\cdots +t_d)\). Observe that

Since \(\widetilde{J}_\phi ^d(t) = 0\) if \(t_i = t_{i+1}\), we get

By applying (15) and Lemma 2.3, we get

Let \(\lambda _i = \frac{d-i}{d}\). Note that if \(s_i \ge \lambda _it_i + (1-\lambda _i)t_{i+1}\), then \(\bar{s}=\frac{1}{d-1}(s_1 + \cdots + s_{d-1}) \ge \frac{1}{d}(t_1+\cdots +t_d)=\bar{t},\) so \(\chi _{\{u \ge \bar{t} \} }(u) \ge \chi _{\{u \ge \bar{s} \} }(u)\). Therefore,

By the induction hypothesis, we get the inequality

Using the fact that \(V_{d-1}\) is of the form \(\prod v_m(t)\) in Lemma 2.2, and

we get the inequality (14) (see [3, p. 9]). If we apply (12) and (14) to (13), we obtain (6). \(\square \)
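The averaging claim used in the proof above, namely that \(s_i \ge \lambda _i t_i + (1-\lambda _i)t_{i+1}\) with \(\lambda _i = \frac{d-i}{d}\) for \(i=1,\ldots ,d-1\) forces \(\bar{s} \ge \bar{t}\), can be checked by collecting coefficients. Since \(1-\lambda _i = \frac{i}{d}\),

\[\sum _{i=1}^{d-1} s_i \ge \sum _{i=1}^{d-1}\frac{d-i}{d}\,t_i + \sum _{j=2}^{d}\frac{j-1}{d}\,t_j = \frac{d-1}{d}\sum _{j=1}^{d} t_j,\]

because \(t_1\) and \(t_d\) each receive the coefficient \(\frac{d-1}{d}\), and every \(t_j\) with \(2\le j\le d-1\) receives \(\frac{d-j}{d}+\frac{j-1}{d} = \frac{d-1}{d}\). Dividing by \(d-1\) gives \(\bar{s} \ge \bar{t}\).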

3 Preliminaries on Interpolation Space

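In this section, we provide some definitions and lemmas established in [5], which are needed to prove Theorem 1.1. Let \(\bar{X} = (X_0, X_1)\) be a compatible couple of quasi-normed spaces \(X_0\) and \(X_1\), i.e., both \(X_0\) and \(X_1\) are continuously embedded in the same topological vector space. We define the K-functional on \(X_0 + X_1\) by

\[K(t,f;\bar{X}) = \inf _{f=f_0+f_1}\big (\Vert f_0\Vert _{X_0} + t\,\Vert f_1\Vert _{X_1}\big ), \qquad t>0,\]

and the J-functional on \(X_0 \cap X_1\) by

\[J(t,f;\bar{X}) = \max \big (\Vert f\Vert _{X_0},\, t\,\Vert f\Vert _{X_1}\big ), \qquad t>0.\]

For \(0<\theta <1\), let the interpolation space \(\bar{X}_{\theta , q}\) be the subspace of \(X_0 + X_1\) consisting of those f for which

\[\Vert f\Vert _{\bar{X}_{\theta ,q}} = \Big (\int _0^\infty \big (t^{-\theta }K(t,f;\bar{X})\big )^q\,\frac{dt}{t}\Big )^{1/q}\]

is finite (with the usual modification when \(q=\infty \)). Then, \(X_0 \cap X_1\) is dense in \(\bar{X} _{\theta ,q}\) when \(1 \le q < \infty \), so we can give an equivalent norm \(\Vert \cdot \Vert _{\bar{X}_{\theta , q; J}}\) on \(\bar{X} _{\theta ,q}\) by

\[\Vert f\Vert _{\bar{X}_{\theta ,q;J}} = \inf \Big (\sum _{n\in {\mathbb {Z}}}\big (2^{-n\theta }J(2^n,f_n;\bar{X})\big )^q\Big )^{1/q},\]

where the infimum is taken over \(f=\sum f_n\) and \(f_n \in X_0 \cap X_1\), with convergence in \(X_0+X_1\). Note that \(\Vert \cdot \Vert _{\bar{X}_{\theta , q}}\) and \(\Vert \cdot \Vert _{\bar{X}_{\theta , q; J}}\) are equivalent when \(0< \theta < 1\). (For details, see Theorem 3.11.3 in [6].)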

To present some lemmas, we introduce some definitions. Let \(0<r\le 1\). For a quasi-normed space X, its quasi-norm is called \(r\)-convex if there exists a constant \(C>0\) such that

\[\Big \Vert \sum _{i=1}^n x_i\Big \Vert _X \le C\Big (\sum _{i=1}^n \Vert x_i\Vert _X^r\Big )^{1/r}\]

for every finite collection \(x_1,\ldots ,x_n \in X\). Kalton [14] and Stein et al. [15] showed that the Lorentz space \(L^{r,\infty }\) is \(r\)-convex for \(0<r<1\).

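For a quasi-normed space X, let \(\ell _s ^p (X)\) be the space of X-valued sequences \(\{f_n\}_{n\in {\mathbb {Z}}}\) satisfying

\[\Vert \{f_n\}\Vert _{\ell _s^p(X)} = \Big (\sum _{n\in {\mathbb {Z}}}\big (2^{ns}\Vert f_n\Vert _X\big )^p\Big )^{1/p} < \infty .\]

We can also define a function space \(b_s^p(X;dw)\), where w is a weight function and X is a Lorentz space on an interval I, consisting of those f for which \(\{ \chi _{\mathcal {W}_{w,n}} f \}_{n \in {\mathbb {Z}}} \in \ell _s ^p(X)\), i.e.,

\[\Vert f\Vert _{b_s^p(X;dw)} = \big \Vert \{\chi _{\mathcal {W}_{w,n}} f\}_{n\in {\mathbb {Z}}}\big \Vert _{\ell _s^p(X)} < \infty ,\]

where \(\mathcal {W}_{w,n} = \{ t \in I:2^n \le w(t) < 2^{n+1} \}\).

Then, by definition, \(b_{1/p}^p(L^p;dw)=L^p(I;dw)\), with equivalent norms.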
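The identification \(b_{1/p}^p(L^p;dw)=L^p(I;dw)\) amounts to the following comparison. This is a sketch assuming that the \(\ell _s^p\) quasi-norm is \(\big (\sum _n (2^{ns}\Vert f_n\Vert _X)^p\big )^{1/p}\) and that \(w>0\) almost everywhere on I, so that the sets \(\mathcal {W}_{w,n}\) partition I up to a null set. With \(s=1/p\), the n-th term equals \(2^n\int _{\mathcal {W}_{w,n}}|f|^p\,dt\), and \(2^n \le w < 2^{n+1}\) on \(\mathcal {W}_{w,n}\), so

\[\Vert f\Vert ^p_{b^p_{1/p}(L^p;dw)} = \sum _{n\in {\mathbb {Z}}} 2^n\int _{\mathcal {W}_{w,n}}|f(t)|^p\,dt \le \int _I |f(t)|^p\,w(t)\,dt \le 2\,\Vert f\Vert ^p_{b^p_{1/p}(L^p;dw)}.\]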

Now, we state some lemmas that will be helpful in proving Theorem 1.1.

Lemma 3.1

(Lemma A.3 in [5]) Let \(0<r \le 1\) and V be an \(r\)-convex space. For \(i=1,\ldots ,n\), let

\[\bar{X}^i = (X_0^i, X_1^i)\]

be couples of compatible quasi-normed spaces and let \(\mathcal {M}\) be an n-linear operator defined on \(\prod _{i=1}^n (X_0^i \cap X_1^i)\) with values in V. Suppose that

for \(0<\theta _i <1\) for all i. Then there is \(C>0\) such that for all \((f_1,\ldots ,f_n) \in \prod _{i=1}^n (X_0^i \cap X_1^i)\),

and \(\mathcal {M}\) extends to a bounded operator on \(\prod _{i=1}^n \bar{X}_{\theta _i,r}^i\).

Lemma 3.2

(Theorem 1.3 in [5]) Let \(c_1,\ldots ,c_n \in {\mathbb {R}}\) with \(c_1 \ne c_i\) for \(i = 2, \ldots , n\). Let \(0 < r \le 1\), and let \(\bar{X} = (X_0,X_1)\) be a couple of compatible complete quasi-normed spaces. Let V be an \(r\)-convex space, let \(\mathcal {M}\) be an n-linear operator defined on \(X_0+X_1\), and let w be a weight function. Suppose

Then,

where \(c = \frac{1}{n} \sum _{i=1}^n c_i\).

Lemma 3.3

(Lemma A.4 in [5]) Let \(0<p \le \infty \), \(s_0,s_1 \in {\mathbb {R}}\), and \(0< \theta < 1\). Let \((X_0,X_1)\) be a compatible couple of quasi-normed spaces. If \(p \le q \le \infty \), then there is a continuous embedding

for \(s=(1-\theta )s_0 + \theta s_1\).

Moreover, \(b_s^p(X)\) is a retract of \(\ell _s^p(X)\). Define \(r : \ell _s^p(X) \rightarrow b_s^p(X)\) by \(r(\{f_n\})= \sum _{n \in {\mathbb {Z}}} \chi _{\mathcal {W}_{w,n}} f_n\) and \(i : b_s^p(X) \rightarrow \ell _s^p(X)\) by \([i(f)]_n = \chi _{\mathcal {W}_{w,n}} f \). Then, \(r \circ i\) is the identity operator on \(b_s^p(X)\). Therefore, Lemma 3.3 implies that there is a continuous embedding

under the hypotheses of Lemma 3.3.
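The retraction can be made concrete on sampled data. The Python sketch below is a discretized illustration under our own assumptions: the functions are represented by finitely many samples, the weight values are hypothetical, and `level`, `inject`, and `retract` are illustrative names for the dyadic level of w, the map i, and the map r. It checks that \(r \circ i\) is the identity, while \(i \circ r\) is not.

```python
import math
from collections import defaultdict


def level(w_value: float) -> int:
    """Dyadic level n with 2**n <= w_value < 2**(n + 1); requires w_value > 0.

    math.frexp returns (m, e) with w_value = m * 2**e and 0.5 <= m < 1,
    so 2**(e - 1) <= w_value < 2**e holds exactly."""
    _, e = math.frexp(w_value)
    return e - 1


def inject(f, w):
    """i: f -> {chi_{W_{w,n}} f}_n, stored sparsely as {n: samples}."""
    pieces = defaultdict(lambda: [0.0] * len(f))
    for k, (fk, wk) in enumerate(zip(f, w)):
        pieces[level(wk)][k] = fk
    return dict(pieces)


def retract(pieces, w):
    """r: {f_n} -> sum_n chi_{W_{w,n}} f_n (keeps f_n only where level(w) == n)."""
    out = [0.0] * len(w)
    for n, fn in pieces.items():
        for k, wk in enumerate(w):
            if level(wk) == n:
                out[k] += fn[k]
    return out


w = [0.1, 0.3, 1.0, 5.0]      # sampled weight values (hypothetical)
f = [1.0, -2.0, 3.5, 0.25]    # sampled function values (hypothetical)
assert retract(inject(f, w), w) == f   # r o i is the identity

# i o r is not the identity: r discards the part of f_n lying off W_{w,n}.
g = {0: [7.0, 7.0, 7.0, 7.0]}
print(inject(retract(g, w), w))
```

Using `math.frexp` instead of `math.log2` avoids floating-point rounding at exact powers of two, so every sample is assigned to exactly one level.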

4 Proof of Theorem 1.1

The interval \(I = (0,1)\) can be decomposed as \((0,\delta ) \cup [\delta , 1)\). Since \(\phi ^{(d)}\) is positive and increasing on I, the torsion \(\tau _\gamma = \phi ^{(d)}\) satisfies \(\tau _\gamma (t) \ge \phi ^{(d)}(\delta ) > 0\) for \(t \in [\delta ,1)\), so \(\gamma \) is nondegenerate on \([\delta ,1)\) for any \(0<\delta <1\). Then, by Theorem 1.4 in [4], the estimate of Theorem 1.1 holds on \([\delta ,1)\). Therefore, it is enough to show that Theorem 1.1 holds on \((0,\delta )\), where \(\gamma \) satisfies the log-concavity property (4) for some \(\delta > 0\) and \(\phi ^{(d)}\) is positive and increasing. Let \(q_d=p_d'= \frac{d^2+d+2}{2}\), and from now on let \(I=(0,\delta )\).

Definition 4.1

Let \(\mathfrak {C}\) be the class of curves \(\gamma \) defined on I of the form \(\gamma (t)=(t, {\frac{t^2}{2!}}, \ldots , {\frac{t^{d-1}}{(d-1)!}}, \phi (t))\), where \(\phi \in C^d(I)\) and \(\phi ^{(d)}\) is positive, increasing, and log-concave on I.

Consider the adjoint operator \(T_w\) given by

and define \(\mathcal {C}\) by

where \(\Vert f \Vert _{L^{q_d, \infty }} ^{**} = \sup _{t>0} t^{1/q_d} f^{**}(t)\), with \(f^{**}(t) = \frac{1}{t}\int _0^t f^*(s)\,ds\) the maximal function of the nonincreasing rearrangement \(f^*\) of f.

The proof is an adaptation of the proof of Theorem 4.2 in [5]. We will prove an \(L^2\)-estimate and an \((L^{q_d},~L^{q_d,\infty })\)-estimate for a d-linear operator \(\mathcal {M}\) constructed from \(T_w\). Combining these two estimates by a technique introduced in [7], we obtain a suitable estimate for the \(L^{q_d/d,\infty }\) norm of \(\mathcal {M}\). Then, using Lemmas 3.1–3.3, we derive an estimate for a multi-linear operator \(\widetilde{\mathcal {M}}\), from which we show that \(\mathcal {C}\) is bounded by a constant depending only on d.

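Define a d-linear operator \(\mathcal {M}\) by

\[\mathcal {M}[g_1,\ldots ,g_d](x) = \prod _{i=1}^d T_w g_i(x).\]

Let \(I^d = \bigcup _{\pi } E_{\pi }\), where

\[E_{\pi } = \{ (t_1,\ldots ,t_d) \in I^d : t_{\pi (1)} \le \cdots \le t_{\pi (d)} \}\]

and \(\pi \) ranges over the permutations of \(\{1,\ldots ,d\}\). Then, without loss of generality, we can assume \(t_1 \le \cdots \le t_d\), so that the operator \(\mathcal {M}\) is defined on \(E = E_1 := \{ (t_1, \ldots , t_d) \in I^d : t_1 \le \cdots \le t_d \}\). Therefore, we redefine the operator \(\mathcal {M}\) by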

where \(G(t,h) = \prod _{i=1}^d g_i(t+h_i)\), \(W(t,h) = \prod _{i=1}^d w(t+h_i)\), \(h \in I^{d-1}\), and \(t+h_d < \delta \). Divide E into \(F_k, k \in {\mathbb {Z}}\), where

and define

We will obtain an upper bound for \(\mathcal {M}_k\).

\(\mathbf {L^2-estimate}\) By the change of variables \(\Gamma (t,h) \rightarrow y\), Plancherel’s theorem, and the change of variables \(y \rightarrow \Gamma (t,h)\), we get

Observe that \(J_\phi (t,h)\) is nonzero on \(F_k\). By [9], the map \((t,h) \mapsto \Gamma (t,h)\) is at most d!-to-one, so the change of variables is justified.

Since \(\Gamma \in \mathfrak {C}\), Proposition 2.1 holds, so we get the inequality

for some \(C_d>0\), which depends only on d. By (20) and the definition of w, we get

By Lemma 1 of [12], v(h) satisfies the sublevel set estimate

Taking \(c=2^{-k}\), we get

We also have the following inequality,

for any \(j=1,\ldots ,d\). Complex interpolation and (21) lead to

with \(\sum _{i=1}^d r_i^{-1} = \frac{1}{2}\). Finally, putting \(r_i = 2d\), we obtain

\(\mathbf {(L^{q_d}, L^{q_d,\infty })-estimate}\) Fix h and let \(I_h = (0,\delta - h_d)\). Observe that \(\Gamma (\cdot ,h) \in \mathfrak {C}\). Then,

with \(w_{\Gamma }(t) = \vert \tau _{\Gamma }(t)\vert ^{\frac{2}{d^2+d}}\). Furthermore, observe that if \(w_\epsilon (t) \le w(t)\), then we can write \(w_\epsilon (t) = \epsilon (t) w(t)\) with \(0 \le \epsilon \le 1\) and

Also, for \(\epsilon _i \ge 0\) with \(\sum _{i=1}^d \epsilon _i = 1\), let \(w_{\epsilon ,h}(t) = \prod _{i=1}^d w(t+h_i)^{\epsilon _i}\). Then, by the positivity of \(\phi ^{(d)}\) and Jensen’s inequality for the convex function \(-\log \),

so we get \(w_{\epsilon ,h}\le w_{\Gamma }\).
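The inequality \(w_{\epsilon ,h}\le w_{\Gamma }\) can be checked directly. The computation below is a sketch assuming that \(\Gamma (t,h) = \sum _{i=1}^d \gamma (t+h_i)\) is the offspring curve, that \(\epsilon _i \ge 0\), and that \(w = (\phi ^{(d)})^{\frac{2}{d^2+d}}\), which holds because \(\tau _\gamma = \phi ^{(d)} > 0\) for curves of simple type. Since \(\Gamma ^{(k)}(t) = \sum _i \gamma ^{(k)}(t+h_i)\), the matrix \((\Gamma ' ~ \Gamma '' ~ \ldots ~ \Gamma ^{(d)})\) is lower triangular with diagonal entries \(d, \ldots , d, \sum _i \phi ^{(d)}(t+h_i)\), so

\[\tau _\Gamma (t) = d^{\,d-1}\sum _{i=1}^d \phi ^{(d)}(t+h_i).\]

By the weighted arithmetic-geometric mean inequality (Jensen's inequality for \(-\log \)) and \(\epsilon _i \le 1\),

\[\prod _{i=1}^d \phi ^{(d)}(t+h_i)^{\epsilon _i} \le \sum _{i=1}^d \epsilon _i\,\phi ^{(d)}(t+h_i) \le \sum _{i=1}^d \phi ^{(d)}(t+h_i) \le \tau _\Gamma (t),\]

and raising both ends to the power \(\frac{2}{d^2+d}\) gives

\[w_{\epsilon ,h}(t) = \prod _{i=1}^d w(t+h_i)^{\epsilon _i} \le \vert \tau _\Gamma (t)\vert ^{\frac{2}{d^2+d}} = w_\Gamma (t).\]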

If we replace g(t) by \(G(t,h) \frac{W(t,h)}{w_{\epsilon ,h}(t)}\), then

So we have

where \(H=\{(h_1,\ldots ,h_d) \in I^d: 0=h_1 \le h_2 \le \cdots \le h_d,~2^{-(k+1)} < v(h) \le 2^{-k} \}\), and the last expression is bounded by

where \(p_d'=q_d\). Since H is a subset of \(F_k\), the sublevel set estimate of v(h) gives \(\vert H \vert \lesssim 2^{-2k/d}\). Since \(q_d' = p_d\), we get

By symmetry,

where \(\sum _{i=1}^d \epsilon _i = 1\) and \(\sum _{i=1}^d \frac{1}{s_i} = \frac{1}{q_d}\).

\(\mathbf {Estimate~on~the~L^{q_d/d,\infty }~norm~of~\mathcal {M}}\) Fix \(y>0\) and define \(G_y = \{ x : \vert \mathcal {M}[g_1,\ldots ,g_d](x)\vert > 2y \}\). Then, for any constant K,

If we choose K appropriately so that

which means

then we obtain

Since \(\frac{d-2+2q_d}{(d+2)q_d} = \frac{d}{q_d}\), we get
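The identity used in the last step is elementary: with \(q_d = \frac{d^2+d+2}{2}\), we have \(2q_d = d^2+d+2\), hence

\[\frac{d-2+2q_d}{(d+2)q_d} = \frac{d^2+2d}{(d+2)q_d} = \frac{d(d+2)}{(d+2)q_d} = \frac{d}{q_d}.\]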

Observe that \(\Vert g_i w^{\frac{3-d}{4}} \Vert _{2d} \lesssim \sum _{k \in {\mathbb {Z}}}2^{k\frac{3-d}{4}} \Vert \chi _{\mathcal {W}_{w,k}} g_i \Vert _{2d}\) and \(\Vert g_i w^{1-\frac{\epsilon _i}{p_d}} \Vert _{s_i} \lesssim \sum _{k \in {\mathbb {Z}}} 2^{k(1-\frac{\epsilon _i}{p_d})} \Vert \chi _{\mathcal {W}_{w,k}} g_i \Vert _{s_i}\), so we can write

where \(\bar{X}^i_{\frac{d-2}{d+2},1} = \big (b_{\frac{3-d}{4}}^1(L^{2d};dw), b_{1-\frac{\epsilon _i}{p_d}}^1(L^{s_i};dw) \big )_{\frac{d-2}{d+2},1}\).

Also, we can find the continuous embedding

by Lemma 3.3, with \(b_s^p\) in place of \(\ell _s^p\). Therefore, if we define

and

we get \((L^{2d},L^{s_i})_{\frac{d-2}{d+2}, 1} = L^{b_i, 1}\) and

where \(\sum _{i=1}^d a_i = \sum _{i=1}^d \frac{1}{b_i} = \frac{d}{q_d}\).
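The normalization \(\sum _i a_i = \sum _i 1/b_i = d/q_d\) can be verified with exact arithmetic. The formulas for \(a_i\) and \(1/b_i\) used below are our reconstruction and should be read as an assumption: they are the interpolated exponents obtained from Lemma 3.3 (\(s = (1-\theta )s_0 + \theta s_1\)) and from real interpolation of Lebesgue spaces (\(1/b = (1-\theta )/p_0 + \theta /p_1\)) with \(\theta = \frac{d-2}{d+2}\), applied to the couples displayed above. The function name is hypothetical.

```python
from fractions import Fraction


def interpolated_exponents(d, eps, s):
    """Hypothetical reconstruction of a_i and 1/b_i, with theta = (d-2)/(d+2):
        a_i   = (1 - theta) * (3 - d)/4  + theta * (1 - eps_i / p_d)
        1/b_i = (1 - theta) * 1/(2d)     + theta * 1/s_i
    Assumes sum(eps) == 1 and sum(1/s_i) == 1/q_d."""
    theta = Fraction(d - 2, d + 2)
    q_d = Fraction(d * d + d + 2, 2)
    p_d = 1 / (1 - 1 / q_d)                      # Hölder conjugate of q_d
    a = [(1 - theta) * Fraction(3 - d, 4) + theta * (1 - Fraction(e) / p_d) for e in eps]
    inv_b = [(1 - theta) * Fraction(1, 2 * d) + theta / Fraction(si) for si in s]
    return a, inv_b, q_d


# d = 3: q_3 = 7, weights eps_i summing to 1, and 1/s_i summing to 1/q_3 = 1/7.
a, inv_b, q3 = interpolated_exponents(
    3, [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)], [14, 21, 42]
)
assert sum(a) == sum(inv_b) == 3 / q3
```

Algebraically, \(\sum _i a_i\) reduces to \(\frac{d-(d-2)/p_d}{d+2} = \frac{2d}{d^2+d+2}\) and \(\sum _i 1/b_i\) reduces to \(\frac{2q_d+d-2}{(d+2)q_d}\); both equal \(d/q_d\) by the identity \(2q_d + d - 2 = d(d+2)\), independently of the particular choice of \(\epsilon _i\) and \(s_i\).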

Now, define a multi-linear operator \(\widetilde{\mathcal {M}}\) by

for \(n > q_d\). Let \(r=\frac{q_d}{n} < 1\). Then, as stated in Sect. 3, \(L^{r,\infty }\) is an \(r\)-convex space. We may write

and by Hölder’s inequality, it follows

Observe that if we put \(g_i = g\) and \(a_i=\frac{1}{b_i}=\frac{1}{q_d}\) for all \(i=1,\ldots ,d\) in (29), we get

By applying (29) and (31) to (30), and by using the generalized geometric means inequality, we get

where \(\sum _{i=1}^d a_i = \sum _{i=1}^d \frac{1}{b_i} = \frac{d}{q_d}\).

We will choose \(a_i\) and \(b_i\) appropriately to get an upper bound for \(\widetilde{\mathcal {M}}\). Recall that \(a_i\) depends on \(\epsilon _i\) and \(b_i\) depends on \(s_i\). Let \(\eta >0\) be small enough and let

Then,

and it is easy to check that \(\sum _{i=1}^d \frac{1}{b_i} = \frac{d}{q_d}\). Moreover, we get

Therefore, applying Lemma 3.1 to (32), we get

where \(\bar{Y}_{\frac{n-d}{n-2}, 1} = \big ( {b_{a_3}^1(L^{b_3,1};dw)}, b_{1/q_d}^1(L^{q_d,1};dw) \big )_{\frac{n-d}{n-2}, 1}\). By Lemma 3.3, there is a continuous embedding

where \(c_3 = \frac{d-2}{n-2} a_3 + \frac{n-d}{n-2} \frac{1}{q_d}\). We set \(c_1=a_1\) and \(c_2=a_2\), and choose \(\epsilon _1\), \(\epsilon _2\), and \(\epsilon _3\) so that \(c_1\), \(c_2\), and \(c_3\) are pairwise distinct. Then,

The last inequality follows from the trivial embedding. Applying Lemma 3.2 to the last expression, we get

where \(c=\frac{1}{n}\sum _{i=1}^n c_i\) and \(\bar{Z}_{\frac{1}{n},nr} = (L^{b_2,r},L^{b_1,r})_{\frac{1}{n},nr}\).

A simple calculation gives \(c = \frac{1}{q_d}\) and

since \(\frac{1}{n}\frac{1}{b_1} + \frac{n-1}{n}\frac{1}{b_2} = \frac{1}{q_d}\). Therefore, \({b_c ^{nr}\big (\bar{Z}_{\frac{1}{n},nr};dw\big )} = b_{1/q_d}^{q_d}(L^{q_d};dw) = L^{q_d}(dw)\) and we obtain

If we put \(g = g_i\) for all \(i=1,\ldots ,n\), we get

By the definition (18) of \(\mathcal {C}\), this leads to \(\mathcal {C} \lesssim \mathcal {C}^{\frac{d-2}{d^2+2d}}\). Since the exponent \(\frac{d-2}{d^2+2d}\) is less than 1, this implies that \(\mathcal {C}\) is bounded by a constant depending only on d.

\(\square \)

5 Some Examples

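Now we provide some examples that satisfy the hypotheses of Theorem 1.1. For a given function \(\phi ^{(d)} : (0,\delta ) \rightarrow {\mathbb {R}}^+\), define \(\psi : (\delta ^{-1},\infty ) \rightarrow {\mathbb {R}}\) by \(\psi (x) = \frac{1}{\phi ^{(d)}(1/x)}\). If \(\psi \) is increasing and log-convex, then \(\phi ^{(d)}\) is log-concave. The proof is as follows. Assume that \(\psi \) is increasing and log-convex, and let \(x_1,x_2 \in (\delta ^{-1},\infty )\) and \(\lambda \in [0,1]\). Then

\[\log \psi \big ((1-\lambda )x_1 + \lambda x_2\big ) \le (1-\lambda )\log \psi (x_1) + \lambda \log \psi (x_2).\]

It follows that

\[\frac{1}{\psi \big ((1-\lambda )x_1+\lambda x_2\big )} \ge \phi ^{(d)}(t_1)^{1-\lambda }\,\phi ^{(d)}(t_2)^{\lambda },\]

where \(t_1 = 1/x_1\) and \(t_2=1/x_2\). Since the function \(x \mapsto 1/x\) is convex on \((0,\infty )\), we have \(\frac{1}{(1-\lambda )t_1+\lambda t_2} \le (1-\lambda )x_1+\lambda x_2\), and since \(1/\psi \) is decreasing on \((\delta ^{-1},\infty )\), we have

\[\phi ^{(d)}\big ((1-\lambda )t_1+\lambda t_2\big ) = \frac{1}{\psi \Big (\frac{1}{(1-\lambda )t_1+\lambda t_2}\Big )} \ge \frac{1}{\psi \big ((1-\lambda )x_1+\lambda x_2\big )} \ge \phi ^{(d)}(t_1)^{1-\lambda }\,\phi ^{(d)}(t_2)^{\lambda },\]

so \(\phi ^{(d)}\) is log-concave. Therefore, if \(\psi (x) = \psi _{\phi ^{(d)}}(x) = \frac{1}{\phi ^{(d)}(1/x)}\) is positive, increasing, and log-convex on \((\delta ^{-1},\infty )\), then \(\phi ^{(d)}\) satisfies the hypotheses of Theorem 1.1 on \((0,\delta )\). For the following examples, proving that \(\psi \) is log-convex is easier than proving directly that \(\phi ^{(d)}\) is log-concave, so we will show that \(\psi \) is positive, increasing, and log-convex.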

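1. Let \(\phi (t) = e^{-1/t}\) and \(t \in (0,\delta )\), where \(\delta \) will be chosen later. Then,

\[\phi ^{(d)}(t) = e^{-1/t}\sum _{i=1}^d a_{i,d}\,t^{-(d+i)},\]

where the coefficients \(a_{i,d}\) are integers depending only on i and d, with \(a_{d,d} = 1\).

Then, \(\psi _{\phi ^{(d)}}(x) = e^x \big (\sum _{i=1}^d a_{i,d} x^{d+i}\big )^{-1} \). Let \(P(x) = \sum _{i=1}^d a_{i,d} x^{d+i}\). The leading coefficients of P, \(P'\), and \(P''\) are 1, 2d, and \(2d(2d-1)\), respectively, so the leading terms of \(PP''\) and \((P')^2\) are \(2d(2d-1)x^{4d-2}\) and \(4d^2x^{4d-2}\). Since \(2d(2d-1) < 4d^2\), if we take \(\delta \) small enough (that is, x large enough), then \(P>0\) and \(PP'' \le (P')^2\), which implies that P is log-concave and \(P^{-1}\) is log-convex. Since \(\log \psi _{\phi ^{(d)}}(x) = x - \log P(x)\), the function \(\psi _{\phi ^{(d)}}\) is log-convex; it is positive, and it is increasing because \((\log \psi _{\phi ^{(d)}})'(x) = 1 - P'(x)/P(x) > 0\) for x large. Hence \(\psi _{\phi ^{(d)}}\) is positive, increasing, and log-convex on \((\delta ^ {-1}, \infty )\).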

Likewise, for \(\phi (t) = e^{-{1/t^m}}\) with \(m \in {\mathbb {N}}\),

where the leading coefficient \(a_{(d-1)m} = 1\) and \(a_i\) for \(i=1, \ldots ,(d-1)m-1\) is determined by d and m. Therefore \(\psi _{\phi ^{(d)}}(x)\) is log-convex, positive, and increasing for \(x \in (\delta ^ {-1}, \infty )\).

2. Let \(\phi _2(t) = \exp (-e^{1/t})\). Then,

where the \(P_i(t)\) are certain polynomials with degree \(\le i\). Therefore,

where each \(\tilde{P}_i\) is a polynomial of degree at most 2d. Let \(P(x) = e^x \tilde{P}_{d-1}(x) + \cdots + e^{(d-1)x} \tilde{P}_1(x) + e^{dx} x^{2d}\). If x is large enough, then \(P>0\) and \(PP'' \le (P')^2\), since for large x the dominant term of P is \(e^{dx}x^{2d}\). Therefore, \(\psi _{\phi _2^{(d)}}(x)\) is log-convex, positive, and increasing if x is large enough.

Similarly, for the iterated exponential \(\phi _n (t) = \exp (-\exp ( \cdots \exp (1/t) \cdots ))\), the function \(\psi _{\phi _n^{(d)}}(x)\) is log-convex for x large enough.
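Example 1 can be checked with exact integer arithmetic. The Python sketch below is our own illustration (function names hypothetical). Writing \(\phi ^{(d)}(t) = e^{-x}P_d(x)\) with \(x = 1/t\) and using \(d/dt = -x^2\,d/dx\), one gets the recursion \(P_{k+1}(x) = x^2(P_k(x) - P_k'(x))\) with \(P_0 = 1\), so \(P_d\) is the polynomial P of Example 1. As an independent cross-check, the coefficients are compared with the closed form \(a_{i,d} = (-1)^{d-i}\binom{d-1}{i-1}\frac{d!}{i!}\) (signed Lah numbers), which is a known identity and not taken from the paper. The test point \(x = 10^4\) is an arbitrary choice of "x large enough"; the code does not compute an optimal \(\delta \).

```python
from math import comb, factorial


def derivative_poly(d):
    """Integer coefficients c[k] of P_d, where phi(t) = exp(-1/t) and
    phi^{(d)}(t) = exp(-x) * P_d(x) with x = 1/t.
    Recursion: P_{k+1}(x) = x^2 (P_k(x) - P_k'(x)), P_0 = 1."""
    p = [1]
    for _ in range(d):
        dp = [k * p[k] for k in range(1, len(p))] + [0]  # P', padded to len(p)
        diff = [a - b for a, b in zip(p, dp)]              # P - P'
        p = [0, 0] + diff                                  # multiply by x^2
    return p


def lah_poly(d):
    """Closed form used as a cross-check: the coefficient of x^(d+i),
    i = 1..d, is (-1)^(d-i) * C(d-1, i-1) * d!/i!."""
    c = [0] * (2 * d + 1)
    for i in range(1, d + 1):
        c[d + i] = (-1) ** (d - i) * (comb(d - 1, i - 1) * (factorial(d) // factorial(i)))
    return c


def evaluate(c, x):
    """Return (P(x), P'(x), P''(x)) exactly, for integer coefficients and integer x."""
    p = sum(ck * x**k for k, ck in enumerate(c))
    dp = sum(k * ck * x**(k - 1) for k, ck in enumerate(c) if k >= 1)
    ddp = sum(k * (k - 1) * ck * x**(k - 2) for k, ck in enumerate(c) if k >= 2)
    return p, dp, ddp


def psi_conditions(d, x):
    """For psi(x) = e^x / P_d(x): P(x) > 0; psi increasing, i.e. P - P' > 0;
    psi log-convex, i.e. (P')^2 - P P'' > 0."""
    p, dp, ddp = evaluate(derivative_poly(d), x)
    return p > 0, p - dp > 0, dp * dp - p * ddp > 0


assert derivative_poly(2) == [0, 0, 0, -2, 1]          # x^4 - 2x^3
for d in range(1, 9):
    assert derivative_poly(d) == lah_poly(d)
    if d >= 2:
        assert all(psi_conditions(d, 10**4))
```

Python integers are unbounded, so the comparison of \((P')^2\) with \(PP''\) at large x involves no rounding.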

References

[1] Arkhipov, G.I., Chubarikov, V.N., Karatsuba, A.A.: Trigonometric Sums in Number Theory and Analysis. Translated from the 1987 Russian original. de Gruyter Exp. Math. 39. Walter de Gruyter, Berlin (2004)

[2] Bak, J.-G., Ham, S.: Restriction of the Fourier transform to some complex curves. J. Math. Anal. Appl. 409, 1107–1127 (2014)

[3] Bak, J.-G., Oberlin, D., Seeger, A.: Restriction of Fourier transforms to curves, II: some classes with vanishing torsion. J. Aust. Math. Soc. 85, 1–28 (2008)

[4] Bak, J.-G., Oberlin, D., Seeger, A.: Restriction of Fourier transforms to curves and related oscillatory integrals. Am. J. Math. 131(2), 277–311 (2009)

[5] Bak, J.-G., Oberlin, D., Seeger, A.: Restriction of Fourier transforms to curves: an endpoint estimate with affine arclength measure. J. Reine Angew. Math. 682, 167–205 (2013)

[6] Bergh, J., Löfström, J.: Interpolation Spaces. An Introduction. Grundlehren Math. Wiss. 223. Springer, Berlin (1976)

[7] Bourgain, J.: Estimations de certaines fonctions maximales. C. R. Acad. Sci. Paris Sér. I Math. 301(10), 499–502 (1985)

[8] Christ, M.: On the restriction of the Fourier transform to curves: endpoint results and the degenerate case. Trans. Am. Math. Soc. 287, 223–238 (1985)

[9] Dendrinos, S., Stovall, B.: Uniform bounds for convolution and restricted X-ray transforms along degenerate curves. J. Funct. Anal. 268, 585–633 (2015)

[10] Dendrinos, S., Wright, J.: Fourier restriction to polynomial curves I: a geometric inequality. Am. J. Math. 132(4), 1032–1076 (2010)

[11] Drury, S.W.: Restriction of Fourier transforms to curves. Ann. Inst. Fourier (Grenoble) 35, 117–123 (1985)

[12] Drury, S.W., Marshall, B.: Fourier restriction theorems for curves with affine and Euclidean arclength. Math. Proc. Camb. Philos. Soc. 97, 111–125 (1985)

[13] Hörmander, L.: Oscillatory integrals and multipliers on \(FL^p\). Ark. Mat. 11, 1–11 (1973)

[14] Kalton, N.J.: Linear operators on \(L^p\) for \(0<p<1\). Trans. Am. Math. Soc. 259, 319–355 (1980)

[15] Stein, E.M., Taibleson, M., Weiss, G.: Weak type estimates for maximal operators on certain \(H^p\) classes. Rend. Circ. Mat. Palermo 2(Suppl. 1), 81–97 (1981)

[16] Stovall, B.: Endpoint \(L^p \rightarrow L^q\) bounds for integration along certain polynomial curves. J. Funct. Anal. 259(12), 3205–3229 (2010)

[17] Stovall, B.: Uniform estimates for Fourier restriction to polynomial curves in \({\mathbb{R}}^d\). Am. J. Math. 138(2), 449–471 (2016)

[18] Zygmund, A.: On Fourier coefficients and transforms of functions of two variables. Studia Math. 50, 189–201 (1974)

Acknowledgements

We would like to thank Jong-Guk Bak for suggesting the problem to us and also for giving us many helpful suggestions. We also thank Andreas Seeger for first mentioning the problem to him.

Funding

The author has been supported by the NRF of Korea (NRF-2020R1A2C1A01005446).


Additional information

Communicated by Hans G. Feichtinger.


About this article

Cite this article

Moon, K. A Restriction Estimate with a Log-Concavity Assumption. J Fourier Anal Appl 30, 16 (2024). https://doi.org/10.1007/s00041-024-10073-3
